Abstract

In the present study, 258 doctoral students working in the health, biological, and social sciences were asked to solve a series of field-relevant problems calling for creative thought. Proposed solutions to these problems were scored with respect to critical creative thinking skills such as problem definition, conceptual combination, and idea generation. Results indicated that health, biological, and social scientists differed with respect to their skill in executing various operations, or processes, involved in creative thought. Interestingly, no differences were observed as a function of the students’ level of experience. The implications of these findings for understanding cross-field, and cross-experience level, differences in creative thought are discussed.

Keywords: creativity, creative processes, field, experience

Students of creativity have long been interested in potential cross-field differences with regard to creative achievement and the characteristics held to influence creative thought (Csikszentmihalyi, 1999; MacKinnon, 1962). For example, Lehman (1953; 1966) examined cross-field differences in the timing of eminent achievement, finding that peak periods for productivity occurred later in applied than in theoretical fields (Mumford & Gustafson, 1988). Other work, by Feist (1999), compared the personality characteristics of creative scientists to creative artists. His findings indicated that characteristics such as emotional viability, nonconformity, and rebelliousness distinguished creative artists from creative scientists. However, other characteristics such as openness, drive, and autonomy were found to be shared by creative people working in the arts and sciences. Findings of this sort led Csikszentmihalyi (1999) to call for research examining cross-field differences in the nature of creative work.

Clearly, many variables including personality (e.g., Feist, 1999), motivation (e.g., Eisenberger & Rhoades, 2001; Mumford, Decker, Connelly, Osburn, & Scott, 2002), climate (e.g., Amabile, Conti, Coon, Lazenby, & Herron, 1996; West, 2002), and work conditions (e.g., Amabile & Mueller, 2008; Tierney & Farmer, 2004) might contribute to cross-field differences in creativity and creative achievement. Creativity, although influenced by multiple attributes, ultimately requires the generation of new ideas and the translation of these ideas into viable products (Ghiselin, 1963). Recognition of the importance of creative thought has led to a steady stream of studies examining the influence of knowledge (e.g., Weisberg, 2006), divergent thinking (e.g., Runco & Okuda, 1988), creative thinking processes (e.g., Ward, Smith, & Finke, 1999), and strategies for effective execution of these processes (e.g., Scott, Lonergan, & Mumford, 2005).

In the present study, we assessed whether cross-field differences were observed in critical creative thinking skills, processing skills such as problem definition (Rostan, 1994), conceptual combination (Ward, Patterson, & Sifonis, 2004), and idea generation (Merrifield, Guilford, Christensen, & Frick, 1962), known to be involved in creative thought. The basis for this comparison was three general fields in the sciences – health sciences, biological sciences, and social sciences. These sciences were selected as the basis for this investigation, in part, because it has already been established that work in these fields calls for creative thought (Feist & Gorman, 1998; Mumford, Connelly, Scott, Espejo, Sohl, Hunter, & Bedell, 2005; Simonton, 2008), and, in part, because the work demands made in these scientific fields are reasonably well understood (Dunbar, 1995; Zuckerman, 1977). Moreover, in the course of this effort, we examined how early experience working in a field contributed to development of creative thinking skills.

Creative Thinking

The development of viable, high quality, original ideas (Besemer & O’Quin, 1998) to the complex, novel, ill-defined problems that call for creative thought (Mumford & Gustafson, 2007), is commonly held to depend on knowledge or expertise (Ericsson & Charness, 1994; Weisberg & Hass, 2007). Knowledge, or expertise, while necessary for the generation of viable solutions to creative problems, is not fully sufficient. Instead, people must work with, and reshape, this knowledge to generate viable solutions to creative problems (Finke, Ward, & Smith, 1992). Recognition of the need for the restructuring of available knowledge in incidents of creative thought has led a number of scholars to propose models of the processes involved as people work with knowledge to generate creative problem solutions (e.g., Amabile, 1988; Dewey, 1910; Merrifield et al., 1962; Parnes & Noller, 1972; Sternberg, 1985; Wallas, 1926). Mumford, Mobley, Uhlman, Reiter-Palmon, and Doares (1991) conducted a review of prior studies examining the processes contributing to creative thought. Based on this review, eight core processes were identified contributing to creative thought in most domains: 1) problem definition, 2) information gathering, 3) information organization, 4) conceptual combination, 5) idea generation, 6) idea evaluation, 7) implementation planning, and 8) solution appraisal. In recent years, two streams of research have led this process model to be accepted as the best available description of the processing operations underlying creative thought (Brophy, 1998; Lubart, 2001).

One stream of evidence has been based on studies examining differential effectiveness in process execution and their relationships to performance on creative problem-solving tasks. In one set of studies along these lines, Mumford and his colleagues (Mumford, Baughman, Maher, Costanza, & Supinski, 1997; Mumford, Baughman, & Sager, 2003; Mumford, Baughman, Supinski, & Maher, 1996; Mumford, Baughman, Threlfall, Supinski, & Costanza, 1996; Mumford, Supinski, Baughman, Costanza, & Threlfall, 1997; Mumford, Supinski, Threlfall, & Baughman, 1996) developed measures intended to assess differential effectiveness in process execution. For example, information gathering was assessed through time spent reading pertinent information while conceptual combination was assessed through appraisals of the quality and originality of the new concepts produced through combinations of extant concepts (e.g., birds and sporting equipment). It was found that more effective execution of these processes was positively related to the quality and originality of solutions produced to creative problems (r = .40) – even when basic abilities, such as intelligence and divergent thinking, were taken into account. Moreover, effective execution of each process was found to make a unique contribution to the prediction of the quality and originality of creative problem solutions – producing multiple correlations in the low .60’s. Although these studies focused on problem definition, information gathering, information organization, and conceptual combination, other studies by Basadur, Runco, and Vega (2000), Dailey and Mumford (2006), Lonergan, Scott, and Mumford (2004), and Osburn and Mumford (2006) have provided evidence for the importance of idea generation, idea evaluation, and implementation planning in creative thought.

The other stream of research that has provided support for this model may be found in studies examining the strategies leading to more effective execution of these processes. For example, studies by Baughman and Mumford (1995) and Ward et al. (2003) have shown that feature search, feature mapping, and elaboration of emergent features contribute to the success of conceptual combination efforts. Other work by Scott et al. (2005) has shown that application of these strategies in conceptual combination varies as a function of the knowledge structures being applied. In contrast, Lonergan et al. (2004) showed that an attempt to compensate for deficiencies in ideas contributed to idea evaluation with better idea evaluation resulting in the production of higher quality and more original advertisements. Still other work, by Byrne, Shipman, and Mumford (in press), has shown that the extensiveness of people’s forecasts during idea evaluation and implementation planning contributes to the production of higher quality, more original, and more elegant solutions to problems calling for creative thought.

Field Differences

Taken as a whole, the studies discussed above suggest there is reason to suspect the model of creative processing activities proposed by Mumford et al. (1991) provides a plausible description of the key processes involved in creative thinking (Lubart, 2001). Recent work by Csikszentmihalyi (1999), however, brings to the fore a new question. Is there reason to suspect that cross-field differences will be observed in the effectiveness of process execution? At a general level, Mumford et al. (1991) provided one potential answer to this question. More specifically, they argued that the type of creative problems presented in different fields would emphasize, or require, greater skill in executing some processes as opposed to others. Although this proposition is plausible, evidence directly bearing on this point is not available. Moreover, work in any given field is inherently complex and may influence people’s thinking in many ways aside from execution of relevant creative thinking processes. Thus, the question arises as to whether cross-field differences in effective execution of creative processing skills will be observed.

Csikszentmihalyi (1999) has defined a field as an area of creative work occurring within a society. As a result, social institutions are established to support, maintain, and evaluate work occurring in a field (Baer & Frese, 2003). As part of these efforts, fields impose educational requirements on people intending to pursue work in this area (Sternberg, 2005). These educational experiences extend beyond course work to include mentoring and key developmental experiences such as post-doctoral work (Zuckerman, 1977). Moreover, fields impose, through institutional requirements and educational experiences, a set of normative expectations on people pursuing work in an area, including explicit definition of the major domains of creative work recognized and valued within the field (Csikszentmihalyi, 1999).

The domains of creative work that are legitimized by a field are noteworthy because they imply shifts in the key cognitive characteristics of the problems on which individuals, as members of the field, are working. For example, Csikszentmihalyi (1999) argued that fields may differ with regard to the procedures used to record information and how well this information is integrated. In fields where information is recorded in a clear fashion, information gathering demands will be reduced. In contrast, when fields are not tightly integrated with regard to key concepts, conceptual combination may become more difficult – albeit of greater importance to creative problem-solving. Similarly, in fields where creative ideas are subject to costly development and deployment efforts, for example ideas for a new car, idea evaluation and implementation planning may become particularly important to creative thought (Mumford, Schultz, & Osburn, 2002).

In fact, there is reason to suspect that domain differences of the sort described above might characterize the work conducted in various scientific fields. For example, the social sciences are characterized by a large number of only loosely linked theoretical models (Kuhn, 1996; Rule, 1997). As a result, one might expect that creative thinking in the social sciences would emphasize conceptual combination and the generation of new ideas based on the novel concepts emerging from these conceptual combination efforts (Ward et al., 1999). In contrast, within the biological sciences, a well-defined set of integrated concepts (e.g., evolution, DNA, energy cycles, ecology) is available – integrative concepts that make conceptual combination and idea generation of less importance. Rather, in the biological sciences, creative thought often emphasizes gathering information about a given entity of interest and critical evaluation of observations, or ideas, about this entity (Dunbar, 1995). Accordingly, one would expect that concept selection would be of great importance to creative thought in the biological sciences with information gathering, idea generation, and idea evaluation being organized around these concepts. The health sciences represent an applied field where issues are developed through more basic work occurring in the biological and social sciences. As a result, in the health sciences, the concern is not with developing new concepts and new ideas – concerns that call for conceptual combination and idea evaluation skills. Rather, in this kind of applied field, definition of the problem and implementation planning are likely to prove especially important to creative problem-solving (Mumford, 2001).

These differences in the demands made by creative problems in the social, biological, and health sciences are noteworthy because they might lead one, for two reasons, to expect differences among people working in these fields with respect to application of creative processes. First, people who are skilled in the application of requisite processing operations will likely be attracted to fields where these processing operations are emphasized (Schneider, 1987). Second, selection into the field will tend to focus on those processing skills critical to creative performance within the relevant domain (Csikszentmihalyi, 1999). Accordingly, the following hypotheses are proposed:

Hypothesis One: Differences will be observed among people working in the health, biological, and social sciences with regard to their skill in applying certain creative thinking processes.

Hypothesis Two: The differences observed between health, biological, and social scientists will be consistent with the demands made for effective process execution within the field.

Level of Experience

Of course, there is also reason to expect that experience in a field might lead to improvement in people’s application of field-relevant creative thinking processes as a result of experience, and thus, presumably, acquisition of some expertise (Weisberg, 2006). By the same token, however, in the early phases of a professional career, specifically when field members are still in graduate school, there is reason to suspect that growth in creative thinking skills may not be especially pronounced. In fact, the patterns of growth and change observed in creative thinking processes may be quite complex early in people’s careers, with at least three different patterns of change potentially being observed with experience: 1) no change, 2) decrements in field-irrelevant processing skills, and 3) gains in field-relevant processing skills.

At an intuitive level, it would seem that more experience working in a field would result in the acquisition of stronger declarative and procedural knowledge (Chi, Bassok, Lewis, Reimann, & Glaser, 1989). Acquisition of procedural and declarative knowledge relevant to a field should, in turn, result in gains in field-relevant processing skills. These gains, moreover, should be particularly marked if instructors, or mentors, expressly seek to provide people with feedback concerning more, or less, effective strategies for application of critical creative thinking processes applying in the field. Presumably such intensive educational experiences would result in the growth of field-relevant skills in the application of creative problem-solving processes, especially when these educational interventions are accompanied by real-world or bench experience.

Although it seems reasonable to expect growth in field-relevant creative processing skills as people enter a profession, it is also plausible that little, or no, change in requisite processing skills would be observed. Typically, initial entry into a field involves the acquisition of declarative knowledge, basic concepts, not the procedural knowledge, or strategies, held to underlie more effective application of creative thinking processes (Ericsson & Charness, 1994). As a result, growth in field-relevant creative thinking processes may not be observed during the period of people’s entry into the field. Moreover, if these creative processing skills are acquired rather slowly, over long periods of time (Nickerson, 1999), then it may not prove feasible for people to display much growth in these skills during the early phases of their career. When these potential phenomena are considered in light of the emphasis placed on socialization during people’s initial entry into a field (Zuckerman, 1977), it also seems plausible to argue that little change in the application of creative processing skills will be observed during the initial period of entry into the field.

In this regard, however, it should be borne in mind that a third possibility exists. In the early phases of people’s career in a field, they are often provided with negative feedback concerning the application of inappropriate problem-solving strategies (Zuckerman, 1977). This negative feedback may lead to declines in the application of creative thinking processes that are inconsistent with demands made by work in the domains of interest to the field. Moreover, because people are invested in pursuing work within field-relevant domains, growth of non-domain relevant processing skills may be slowed. Thus, there is at least some reason to expect that declines in some creative processing skills may be observed as people enter a field.

Clearly, multiple patterns of change in creative processing skills exist as people enter a field. By the same token, it is also true that initial experience in most fields stresses acquisition of declarative knowledge, as opposed to the procedural knowledge, or strategies, underlying effective application of creative thinking processes (e.g., Baughman & Mumford, 1995; Lonergan et al., 2004; Scott et al., 2005). Thus, it seems reasonable to expect that little change will be observed in creative thinking processes during people’s period of initial entry into a field. When this observation is considered in light of the socialization demands made during this period (Zuckerman, 1977), and the possibility that both growth and decline of creative processing skills might occur, a third hypothesis seemed indicated:

Hypothesis Three: No change in the effectiveness of application of creative thinking processes will be observed as a function of experience during the period of people’s initial entry into a field.

Method

Sample

The sample used to test these hypotheses consisted of 258 doctoral students attending a large southwestern research university. The 98 men and 151 women (9 did not report) recruited to participate in this study had been in their respective doctoral programs for a minimum of 4 months and a maximum of 60 months. Doctoral students were recruited from health (e.g., medicine, dentistry, nursing, epidemiology), biological (e.g., micro-biology, zoology, botany, biochemistry), and social (e.g., psychology, sociology, anthropology, communication) science degree programs. Overall, 33% of the participants were drawn from the social sciences, 40% of the participants were drawn from the biological sciences, and 27% of the participants were drawn from the health sciences. All programs require independent research for award of a doctoral degree. On average, sample members were 28 years old and had completed 17 years of education prior to admission to their doctoral program. Roughly 45% of sample members were employed in non-research (primarily teaching) positions, while 55% were employed as research assistants. Nonetheless, all sample members were actively involved in one or more research projects.

General Procedures

The present investigation was conducted as part of a larger, federally funded, initiative concerned with research integrity among doctoral students (e.g., Antes, Brown, Murphy, Waples, Mumford, Connelly, & Devenport, 2007; Kligyte, Marcy, Waples, Sevier, Godfrey, Mumford, & Hougen, 2008; Mumford, Devenport, Brown, Connelly, Murphy, Hill, & Antes, 2006). The Office of the Vice President for Research provided names, department assignments, e-mail addresses, and telephone numbers of all doctoral students in health, biological, and social science degree programs in 2005 and 2006. A three-stage process was used to recruit doctoral students in these three program areas. First, flyers announcing the study, noting that a payment of $100.00 would be provided as compensation for participation, were placed in student mailboxes. Second, a phone call was made to each student in the relevant fields to encourage participation. Third, and finally, each student was sent up to four e-mail requests to solicit participation.

In all of these stages of the recruitment process, it was noted that the study was concerned with research integrity. Specifically, the study was described as an investigation of the effects of educational experiences on integrity in research settings. Students who agreed to participate in this investigation were asked to schedule a time during which they could complete a four-hour battery of paper-and-pencil measures. After they had completed these measures, participants were debriefed.

Participants were asked to complete five sets of measures as part of the larger investigation. First, they were asked to complete a background information form – responses to this form provided the basis for the manipulations of concern in the present study. More specifically, students were assigned to a field, health, biological, or social sciences, based on the indicated academic department. Additionally, level of experience, first-year versus third- or fourth-year, was assessed based on responses to a question regarding how long they had been in their current doctoral program. It is of note that first-year students were recruited in 2005 and third- and fourth-year students were recruited in 2006. Comparison of these groups with respect to university data indicated no cross-year shifts in cohort characteristics.

Second, after completing this background information form, participants were asked to complete a set of inventories where they were asked to describe the work they were doing, events that had happened to them in the course of doing this work, and their impressions of the work environment. Third, participants were asked to assume the role of an institutional review board member and assign penalties for ethical breaches. Fourth, participants were asked to complete a battery of individual differences measures (described more fully below) to be used as covariate controls. Fifth, and finally, individuals were asked to complete a field-specific, low fidelity simulation task where they were asked to provide solutions to a series of professional problems that might be encountered in the course of their day-to-day work. On this problem-solving measure, half of the problems presented involved potential ethical events. The remaining problems presented were purely technical in nature. Answers to these technical questions provided the basis for assessing application of the creative problem-solving processes.

Control Measures

The first two control measures participants were asked to complete examined cognitive abilities known to influence performance on creative problem-solving tasks (Vincent, Decker, & Mumford, 2002). The first measure, examining intelligence, was the Employee Aptitude Survey (Ruch & Ruch, 1980). The Employee Aptitude Survey is a 30-item verbal reasoning measure which yields split-half reliabilities of about .80. Evidence for the construct validity of this measure has been provided by Ruch and Ruch (1980).

To measure divergent thinking skills, participants were asked to complete Guilford’s (Merrifield et al., 1962) Consequences Test. On this ten minute test, people are asked to list as many consequences of unusual events (e.g., What would happen if gravity was cut in half?) as they can generate. The responses to the five consequences questions presented on this test were scored for fluency, or the number of consequences generated, due to the measure’s use as a control. When scored for fluency, this measure yielded an internal consistency coefficient in the .70s. Evidence for the construct validity of this test, as a measure of divergent thinking, has been provided by Kettner, Guilford, and Christensen (1959) and Merrifield et al. (1962).

In addition to these cognitive measures, participants were asked to complete two sets of personality measures. The first set of measures examined general dispositional constructs. One measure participants were asked to complete was John, Donahue, and Kentle’s (1991) inventory providing scales measuring agreeableness, extraversion, conscientiousness, neuroticism, and openness. These personality characteristics are measured using 44 self-report behavioral descriptions – for example, “I see myself as someone who can be moody”. Additionally, participants were asked to complete Paulhus’s (1984) measure of socially desirable responding. This inventory includes 40 behavioral self-report questions, for example “I always know why I like things”, focused on the propensity for socially desirable responding. All scales included in these two measures produced internal consistency coefficients above .70. Evidence bearing on the validity of these measures has been provided by John et al. (1991) and Paulhus (1984).

The second set of personality measures examined personality characteristics that have been linked to decision-making – specifically ethical decision-making. Accordingly, participants were asked to complete Emmons’ (1987) measure of narcissism. This 37-item questionnaire presents behavioral self-report questions, such as “I am an extraordinary person”, examining key aspects of narcissism. Additionally, participants completed Wrightsman’s (1974) philosophies of human nature measure – a self-report inventory consisting of scales measuring cynicism, trust, variability, and complexity. A typical question from the 10 items measuring cynicism is “Most people would tell a lie if they could gain by it”. An example question from the 8 items measuring variability is “Different people react to the same situations in different ways.” Finally, participants were asked to complete a 20-item self-report behavioral inventory examining manifest anxiety through questions such as “I work under a great deal of tension” (Taylor, 1953). All these scales yielded internal consistency coefficients above .70. Emmons (1987), Taylor (1953), and Wrightsman (1974) have provided evidence for the construct validity of these measures.

Measure of Creative Problem-Solving Process

The measure of creative thinking skills applied in the present study was based on a series of low-fidelity simulations which presented work-relevant problems (Motowidlo, Dunnette, & Carter, 1990). Development of these measures began with a review by three psychologists, as in the case of all panels, blind to hypotheses but not the intent of the study, of work-based case studies available for each field – health, biological, and social sciences (e.g., American Psychological Association). This review led to identification of 45 cases that might be used as a basis for measure development in each of the three fields under consideration. A panel of three psychologists who had experience working in all three fields then reviewed these cases with respect to four criteria: 1) relevance to day-to-day work in the field, 2) importance of the technical issues involved in the case, 3) relevance to multiple disciplines working within a field, and 4) potentially challenging issues relevant across a range of expertise. Application of these criteria led to the selection of 10 to 15 cases applying in a given field that might provide a basis for developing measures of creative problem-solving processes.

To develop measures of creative problem-solving processes using these cases, a two-stage process was employed: 1) content preparation and 2) item development. In content preparation, 12 cases per field were rewritten into short one or two paragraph scenarios. These case abstracts were written by a psychologist under the following three constraints: 1) the essential nature of the work being conducted must be described, 2) the description of the work must allow for a number of problems, or issues, to arise, and 3) a range, or variety, of solutions must be possible to pursue with regard to these problems. Case abstracts, or scenarios, were then reviewed by a panel of three senior psychologists who had familiarity, and experience, with regard to the work conducted in each field. The scenarios were reviewed with respect to clarity, realism, and the range of issues that might be broached.

Following development of a scenario, item development occurred. In item development, the panel was asked to generate a list of 6 events that might occur within the scenario under consideration. Half of these events were to have ethical implications while half of these events were to have only technical implications. Thus, there were 36 technical event questions that provided the basis for development of the measures of creative problem-solving processes.

The creative problem-solving processes targeted for assessment through these events were the eight creative thinking processes identified by Mumford et al. (1991): 1) problem-definition, 2) information gathering, 3) information organization, 4) conceptual combination, 5) idea generation, 6) idea evaluation, 7) implementation planning, and 8) solution appraisal. A panel of four psychologists was asked to write events that would a) elicit a particular problem-solving process as the critical component of performance in resolving the technical problem broached by an event and b) call for novel, potentially useful, solutions in resolving the issue broached by the event. Prior to writing these technical event questions, three psychologists, all doctoral students in Industrial and Organizational Psychology, were asked to take part in a 20 hour training program where the nature of each processing operation was described along with how this process was reflected in the technical work occurring in each field. These judges were asked to generate two events targeted on different processes for each scenario with four to eight events being developed for each field that would reflect application of a given process. These events were written so as to emerge logically within each scenario under consideration.

Measurement of effective process execution in working through these events occurred using a variation on the procedures suggested by Runco, Dow, and Smith (2006). More specifically, once events calling for application of a given process had been generated, three judges, two psychologists and a subject-matter expert, were asked to generate potentially viable response options that might be used in resolving the technical issue broached by the event. In all, 8 to 12 response options were to be generated for each event. These responses were to be developed under the constraint that: 1) one quarter of the responses were to reflect responses of high quality and high originality, 2) one quarter of the responses were to reflect responses of high originality but low quality, 3) one quarter of the responses were to reflect responses of low originality but high quality, and 4) one quarter of the responses were to reflect responses of low quality and low originality. Response options developed under these criteria were reviewed by a second panel consisting of three senior psychologists, and a subject-matter expert, for process relevance, response relevance, response quality, response originality, and clarity.

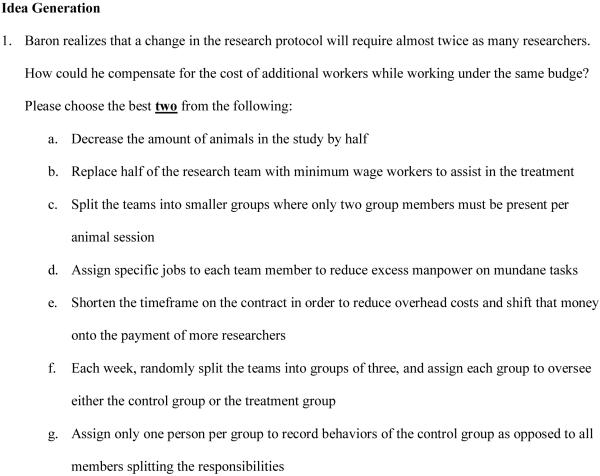

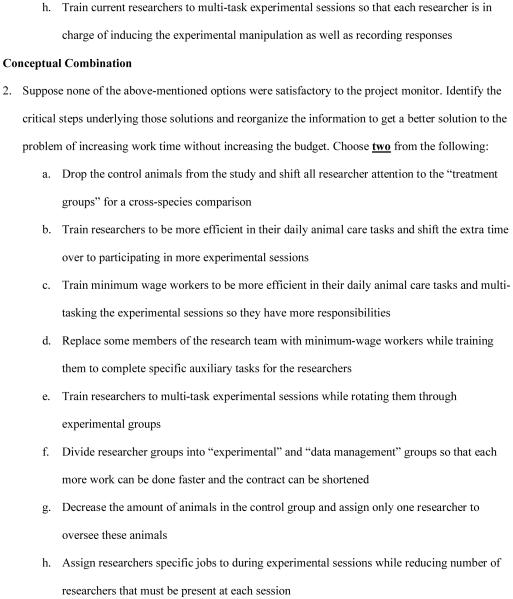

Participants in this study were asked to read through the scenario and then assume the role of the principal actor in responding to the events following the scenario. After they read through an event, they were asked to select the two, from 8 response options, that they believed would provide the best solution to the problem broached by the event. Each selected option was given a score of 3 if it reflected a high quality, highly original response, a score of 2 if it reflected either a response of high quality but low originality or a response of high originality but low quality, and a score of 1 if it reflected a response of low quality and low originality. These scores were then averaged for a given event. Subsequently, the average score across all events intended to measure application of a given process within a field was obtained to provide field-specific process application scores. Figure 1 provides an illustration of two creative problem-solving items, for idea generation and conceptual combination, developed for participants working in the social sciences.

Figure 1. Example questions for idea generation and conceptual combination for social scientists.

Baron works in a non-profit research center set up as part of a 500 square-mile wildlife reserve. Researchers in this lab study how wild animals respond to regular contact with humans. The National Parks and Recreations Service has funded three of the center’s research projects examining the impact of human-animal contact on reproductive behavior in different small mammal species. These projects were developed jointly and were funded together because similarities in ecosystem variables and observation methodologies will enable some level of comparison of results across species. Baron is the principal investigator for one of these projects.
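The scoring rule described before Figure 1 can be illustrated with a minimal Python sketch. The category labels, function names, and example selections below are hypothetical and introduced only for illustration; only the 3/2/1 scoring values and the two-step averaging come from the description above.

```python
from statistics import mean

# Score assigned to each response-option category, following the rule in the text.
# The category labels are hypothetical names introduced for this sketch.
OPTION_SCORES = {
    "high_quality_high_originality": 3,
    "high_quality_low_originality": 2,
    "low_quality_high_originality": 2,
    "low_quality_low_originality": 1,
}


def event_score(selected_categories):
    """Average the scores of the two options a participant selected for one event."""
    if len(selected_categories) != 2:
        raise ValueError("each event requires exactly two selected options")
    return mean(OPTION_SCORES[c] for c in selected_categories)


def process_score(events):
    """Average the event scores across all events targeting one process."""
    return mean(event_score(e) for e in events)


# Hypothetical participant: three events targeting the same process.
example_events = [
    ("high_quality_high_originality", "high_quality_low_originality"),   # (3 + 2) / 2
    ("high_quality_high_originality", "high_quality_high_originality"),  # (3 + 3) / 2
    ("low_quality_low_originality", "low_quality_high_originality"),     # (1 + 2) / 2
]
print(process_score(example_events))
```

Under this rule every process score falls between 1 and 3, which matches the range of the cell means reported in the Results.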

The average split-half reliability coefficient obtained across the three fields for scores on these 8 process dimensions was .71. Some initial evidence bearing on the construct validity of these measures was obtained by examining their convergent and divergent validity. For example, conceptual combination was found to be positively related to idea generation (r = .29) and idea evaluation (r = .15) but not problem definition (r = .07). Problem definition, however, was found to be positively related to implementation planning (r = .19). Taken as a whole, these relationships provide some initial evidence for the construct validity of the measures as indices of the eight creative processing skills.
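For readers unfamiliar with split-half reliability, the following Python sketch shows one common way such a coefficient can be computed. The text does not state how the halves were formed or whether a Spearman-Brown correction was applied, so the odd-even split, the correction step, and the example data are all assumptions made for illustration.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def spearman_brown(r_half):
    """Step a half-test correlation up to an estimate for the full-length measure."""
    return 2 * r_half / (1 + r_half)


def split_half_reliability(event_scores):
    """event_scores: one list of event scores per participant (assumed odd-even split)."""
    odd_half = [mean(row[0::2]) for row in event_scores]
    even_half = [mean(row[1::2]) for row in event_scores]
    return spearman_brown(pearson_r(odd_half, even_half))


# Hypothetical data: four participants, four event scores each.
scores = [
    [3.0, 2.5, 2.5, 3.0],
    [2.0, 2.0, 1.5, 2.5],
    [2.5, 3.0, 3.0, 2.5],
    [1.5, 1.0, 2.0, 1.5],
]
print(round(split_half_reliability(scores), 2))
```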

Analyses

In the present study, scores on these eight measures of creative problem-solving skills were treated as the dependent variables of interest in a series of analysis of covariance tests. The independent variables examined in each analysis were field (health, biological, and social sciences) and level of experience (first-year doctoral students versus third- and fourth-year doctoral students). A covariate, including potential subscales in an inventory, was retained in all analyses only if it showed a significant relationship with a given skill at the .05 level.

Results

Table 1 presents the results obtained when field and experience levels were used to account for scores on the measure of problem definition. As may be seen, both intelligence (F (1, 249) = 7.18, p ≤ .05) and openness (F (1, 249) = 3.72, p ≤ .05) explained a significant portion of the variance in problem definition. Intelligence and openness were positively related to skill in problem definition. More centrally, field type produced a significant main effect (F (2, 249) = 36.23, p ≤ .001) when used to account for scores on the problem definition scale. Inspection of the cell means indicated that the health scientists (M = 2.84, SE = .050) obtained higher scores than either biological (M = 2.34, SE = .035) or social (M = 2.40, SE = .041) scientists. A marginally significant (F (2, 249) = 2.60, p ≤ .10) interaction was also obtained between field and experience. The cell means indicated that more experienced students working in the health sciences (M = 2.91, SE = .089) obtained higher scores than all other groups (M = 2.45, SE = .052). Thus, at least in the case of problem definition, some growth was observed among health scientists as a function of experience.

Table 1. Effects of field and experience on problem definition, information gathering, information organization, and conceptual combination.

| | Problem Definition | | | | Information Gathering | | | | Information Organization | | | | Conceptual Combination | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | F | df | p | η2 | F | df | p | η2 | F | df | p | η2 | F | df | p | η2 |
| Covariates | | | | | | | | | | | | | | | | |
| Intelligence | 7.19 | 1, 249 | .008 | .028 | | | | | | | | | | | | |
| Openness | 3.73 | 1, 249 | .055 | .015 | | | | | | | | | | | | |
| Effects | | | | | | | | | | | | | | | | |
| Field | 36.23 | 2, 249 | .001 | .225 | 26.07 | 2, 252 | .001 | .171 | 13.98 | 2, 252 | .001 | .100 | 3.83 | 2, 252 | .023 | .030 |
| Level of Experience | .16 | 1, 249 | .683 | .001 | .04 | 1, 252 | .838 | .001 | .04 | 1, 252 | .850 | .001 | 3.37 | 1, 252 | .068 | .013 |
| Field by Level of Exp. | 2.60 | 2, 249 | .076 | .020 | .45 | 2, 252 | .635 | .004 | .99 | 2, 252 | .373 | .008 | .12 | 2, 252 | .890 | .001 |

Note. F = F-ratio, df = degrees of freedom, p = significance level, η2 = eta-squared effect size
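As a reading aid, the effect sizes in Table 1 can be related to the reported F-ratios and degrees of freedom. The table note labels η2 only as eta-squared and gives no formula; the sketch below assumes the standard identity for partial eta-squared, which several of the tabled values happen to be consistent with. The function name is hypothetical.

```python
def partial_eta_squared(f_ratio, df_effect, df_error):
    """Partial eta-squared recovered from an F-ratio and its degrees of freedom.

    Assumes the standard identity: eta_p^2 = (F * df1) / (F * df1 + df2).
    """
    return (f_ratio * df_effect) / (f_ratio * df_effect + df_error)


# Field effect on problem definition in Table 1: F(2, 249) = 36.23, tabled as .225
print(round(partial_eta_squared(36.23, 2, 249), 3))

# Intelligence covariate in Table 1: F(1, 249) = 7.19, tabled as .028
print(round(partial_eta_squared(7.19, 1, 249), 3))
```

Because the identity uses only F and the degrees of freedom, it also offers a quick consistency check between statistics quoted in the text and those in the tables.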

With regard to information gathering, as indicated in Table 1, no significant covariate effects were obtained. Again, however, a significant main effect was obtained for field (F (2, 252) = 26.07, p ≤ .001). It was found that information gathering skills were higher in the biological sciences (M = 2.83, SE = .031) than either the health (M = 2.59, SE = .045) or social (M = 2.83, SE = .031) sciences. A different pattern of findings emerged in examining information organization skills. No significant covariates emerged in this analysis. However, field produced a significant (F (2, 252) = 13.98, p ≤ .001) main effect. It was found that the biological (M = 2.77, SE = .037) and health (M = 2.65, SE = .054) sciences obtained higher scores on information organization than the social (M = 2.47, SE = .043) sciences.

With regard to conceptual combination, a significant main effect was also obtained with respect to field (F (2, 252) = 3.83, p ≤ .05). In this analysis it was found that social (M = 2.74, SE = .039) scientists obtained higher scores than either health (M = 2.67, SE = .049) or biological (M = 2.61, SE = .034) scientists. Thus, fields appeared to differ with respect to the particular creative thinking skills where they displayed good, as opposed to poor, performance.

Table 2 presents the results obtained in the analysis of covariance when the effects of field and experience on idea generation were examined. For idea generation, cynicism (F (1, 247) = 10.72, p ≤ .001) proved to be a significant covariate with cynicism being negatively related to skill in idea generation. More centrally, a significant main effect was obtained for field (F (2,247) = 6.83, p ≤ .001) with social (M = 2.86, SE = .036) and biological (M = 2.86, SE = .031) scientists obtaining higher scores than health (M = 2.67, SE = .045) scientists. With regard to idea generation, a marginally significant main effect was also obtained for level of experience (F (1, 247) = 3.53, p ≤ .10). Interestingly, it was found that idea generation was somewhat better among first-year (M = 2.84, SE = .036), as opposed to third- and fourth-year (M = 2.76, SE = .036), doctoral students – suggesting operation of a field socialization effect which acts to constrain idea generation.

Table 2. Effects of field and experience on idea generation, idea evaluation, implementation planning, and solution appraisal.

| | Idea Generation | | | | Idea Evaluation | | | | Implementation Planning | | | | Solution Appraisal | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | F | df | p | η2 | F | df | p | η2 | F | df | p | η2 | F | df | p | η2 |
| Covariates | | | | | | | | | | | | | | | | |
| Cynicism | 10.73 | 1, 247 | .001 | .042 | | | | | | | | | | | | |
| Variability | | | | | 4.85 | 1, 245 | .029 | .019 | | | | | | | | |
| Extraversion | | | | | 6.79 | 1, 245 | .010 | .027 | | | | | | | | |
| Narcissism | | | | | | | | | | | | | 4.65 | 1, 244 | .032 | .019 |
| Effects | | | | | | | | | | | | | | | | |
| Field | 6.83 | 2, 247 | .001 | .052 | 16.61 | 2, 245 | .001 | .119 | 14.88 | 2, 251 | .001 | .106 | 5.39 | 2, 244 | .005 | .042 |
| Level of Experience | 3.53 | 1, 247 | .061 | .014 | .58 | 1, 245 | .445 | .002 | 1.09 | 1, 251 | .297 | .004 | .78 | 1, 244 | .378 | .003 |
| Field by Level of Exp. | 2.26 | 2, 247 | .106 | .018 | 1.38 | 2, 245 | .252 | .011 | 1.91 | 2, 251 | .151 | .015 | .14 | 2, 244 | .863 | .001 |

Note. F = F-ratio, df = degrees of freedom, p = significance level, η2 = Eta-squared effect size

Table 2 also presents the results obtained in the analysis of covariance when idea evaluation was treated as the dependent variable of interest. This analysis yielded two significant covariates, variability in human nature (F (1, 245) = 4.89, p ≤ .05) and extraversion (F (1, 245) = 6.78, p ≤ .01), with beliefs with regard to variability and an external focus both being found to be positively related to idea evaluation – a pattern of findings suggesting that forecasting a range of others’ responses to ideas contributes to idea evaluation (Lonergan, Scott, & Mumford, 2004). A significant main effect was also obtained for field (F (2, 245) = 16.61, p ≤ .001). It was found that the biological (M = 2.71, SE = .048) sciences obtained higher scores on idea evaluation than the social (M = 2.53, SE = .038) sciences, with the social scientists obtaining higher scores on idea evaluation than health (M = 2.38, SE = .048) scientists.

In examining implementation planning, no significant covariates emerged. However, a significant main effect was obtained for field (F (2, 251) = 14.88, p ≤ .001). Inspection of the cell means indicated that health (M = 2.94, SE = .047) scientists obtained higher scores on implementation planning than social (M = 2.74, SE = .038) or biological (M = 2.63, SE = .033) scientists. With regard to solution appraisal, the self-absorption scale of the narcissism inventory (F (1, 244) = 4.60, p ≤ .05) proved the only significant covariate. As might be expected, self-absorption was negatively related to effective solution appraisal. A significant main effect was also obtained for field (F (2, 244) = 5.39, p ≤ .05) with respect to solution appraisal. Inspection of the cell means indicated that social (M = 3.00, SE = .040) scientists obtained higher scores on this dimension than health (M = 2.90, SE = .053) scientists who, in turn, obtained higher scores than biological (M = 2.83, SE = .035) scientists.

Discussion

Before turning to the broader conclusions arising from the present effort, certain limitations should be noted. To begin, in the present study we contrasted a number of disciplines within the health, biological, and social sciences. While health, biological, and social sciences do represent distinct fields, within these broad groupings are a number of distinct fields and sub-fields (e.g., psychology versus sociology or industrial versus clinical psychology). Clearly, the results obtained in the present effort cannot speak to similarities and differences observed among these more narrowly defined fields. Although a number of disciplines were examined within each of these fields, it should also be recognized that all data examined in the present study was drawn from a single university. Thus, some caution is called for in generalization of our findings to other institutions.

Along related lines, it should be recognized that in the present study we examined doctoral students beginning their careers in these fields. One implication of this observation is that caution is called for in generalizing our findings to people working in these fields who have substantially greater expertise (Ericsson & Charness, 1994; Weisberg, 2006). It is, in fact, possible that different patterns of skill application might emerge as a function of further experience given the long period of career development that characterizes the sciences (Mumford et al., 2005). Moreover, it should also be recognized that level of experience comparisons were necessarily made within a relatively narrow range of experience – comparison of first-year to third- and fourth-year doctoral students. Clearly, other effects might be observed outside the particular range of field-relevant experience examined in the present study.

It should also be borne in mind that in the present study, our focus was on cross-field differences among doctoral students. Doctoral students, of course, represent a population of interest because it is at this time that people are first entering a field where they are expected to pursue creative work. By the same token, it should be recognized that during this period, a number of pressures are placed on people, for example socialization pressure (Zuckerman, 1977), that may not be directly relevant to creative thinking but might nonetheless influence how people attempt to work through problems calling for creative thought.

In addition to these limitations arising from study sample and setting, three other limitations should be noted. First, the data collected in the present study was obtained as part of a study of “integrity and problem-solving” in the sciences where doctoral students were compensated for study participation. Although comparison of sample to non-sample members did not reveal any noteworthy differences, it is possible that study recruitment procedures and the provision of compensation induced some unique characteristics in the sample – characteristics not accounted for by the covariates applied. Moreover, despite the sequence of measure administration (the review board task was administered before the problem-solving measure of concern herein), and the findings obtained in prior studies indicating that responses to the problem-solving measure are unbiased (Mumford et al., 2006), the possibility remains that certain effects might have been induced by the study description.

Second, it should be recognized that the design of the measure administration was such that a measure of open-ended creative problem-solving could not be obtained in each of the fields under consideration. Although the measure of creative processing skills evidenced some validity, it should also be recognized that our findings pertain only to processing skills held to underlie creative problem-solving and do not speak to creative problem-solving as a more general phenomenon. Moreover, it should be borne in mind that the present study was based on one model of critical processing operations held to underlie creative thought – the model of creative processing operations proposed by Mumford et al. (1991). Although this model has acquired some support (e.g., Lubart, 2001; Mumford et al., 2003), other processing operations might exist that have not been examined in the present study.

Third, it should be recognized that the present study was conducted within a unique, and highly select, population. More specifically, we examined field and experience influences among those admitted to doctoral programs. As a result, substantial range restriction has occurred in sample members’ status on many of the variables under consideration. The operation of range restriction effects might, in part, account for the weak effects evidenced by many of the control variables. Moreover, the operation of range restriction suggests that the findings obtained in the present effort will be conservative (Mendoza, Bard, Mumford, & Ang, 2004).

Even bearing these limitations in mind, we believe that the present study does lead to two noteworthy conclusions – one pertaining to field differences and one pertaining to the effects of experience. Perhaps the most clear-cut conclusion emerging from the present study is that different fields of creative work (health, biological, and social sciences) do display differences with regard to the effectiveness with which individuals working in that field execute relevant processing operations. Health scientists evidenced stronger problem definition, information gathering, information organization, implementation planning, and solution appraisal skills. The biological scientists evidenced stronger information gathering, information organization, idea generation, and idea evaluation skills. The social scientists evidenced stronger conceptual combination, idea generation, and solution appraisal skills.

Taken as a whole, the pattern of cross-field differences observed with regard to these creative problem-solving skills is consistent with the demands made by creative work being pursued in these fields (Csikszentmihalyi, 1999). More specifically, in the social sciences where a plethora of models exists, an emphasis is placed on conceptual combination and idea generation vis-à-vis these new combinations accompanied by an emphasis on appraisal of the solutions arising from these ideas. In the biological sciences where a strong set of conceptual models is available, the emphasis is on concept selection, information gathering, and idea generation vis-à-vis these models. In the health sciences, which have a distinctly practical focus, problem definition and information gathering are critical, accompanied by implementation planning and solution appraisal.

The failure of experience level to exert strong effects, or interact with these field-specific effects, is noteworthy because it eliminates one potential explanation for the origin of these field effects. These field effects do not, apparently, arise from time spent working in a field. Instead, given the findings obtained in the present study, it seems likely that they arise from the selective attraction of individuals who possess these skills to fields which demand these skills in creative problem-solving. This observation is noteworthy because it suggests that attraction, selection, and attrition models (Schneider, 1987) might prove of value in accounting for the emergence of field differences in people’s application of creative thinking skills.

The existence of these field differences, however, is also noteworthy with respect to the broader debate concerning the domain-specificity of creative thought (e.g., Baer, 2003; Plucker, 2004). In the present study, the findings obtained suggest that some fields emphasize certain creative thinking skills while other fields emphasize another set of creative thinking skills. Thus, a general model of creative thought may be used to identify relevant creative thinking skills. However, the weight placed on these skills may be greater in one field, as opposed to another, based on the demands made by creative problems within this domain. Thus, in discussions of cross-domain generality versus domain-specificity, it may be useful to take into account differential weighting of creative thinking skills (Peterson, Mumford, Borman, Jeanneret, & Fleishman, 1999).

These observations about field-specific differences in the weighting, or importance, of creative thinking skills have two important practical implications. To begin, if a field emphasizes one set of creative thinking skills as opposed to another set, then assessments of the individual’s potential for creative work in a field will be stronger if these assessments are made with reference to those skills, knowledges, and abilities critical to creative work within this field. In fact, the results obtained in the present study suggest there might be some value in field-specific assessments of creative potential, at least within the sciences.

Along somewhat different lines, if fields differ with respect to the creative thinking skills emphasized in the work, then effective educational interventions should focus on those creative thinking skills critical for work in this field. Thus, in seeking to improve the creative work of health scientists it may prove particularly useful to focus on problem definition and implementation planning. For biological scientists, it may prove more useful to focus on information organization and idea generation. For social scientists, however, conceptual combination should be stressed along with idea generation and solution appraisal. Although these field-specific educational interventions seem reasonable given the results obtained in the present study, an important caveat should be noted.

More specifically, experience in doctoral-level educational programs did not exert consistent, significant effects on the growth of these creative thinking skills. Apparently, doctoral-level education has little effect on gains in scientists’ creative thinking skills. Given the importance of creative thought to performance in the sciences (Mumford et al., 2005), the failure of these skills, especially field-relevant skills, to evidence growth during doctoral education is troublesome – although it might be attributed to a focus on doctoral students’ acquisition of declarative, factual knowledge rather than acquisition of the procedural, strategic knowledge underlying more effective execution of creative thinking processes. Nonetheless, this pattern of findings suggests that more emphasis should be given to the development of creative thinking skills in doctoral education.

Hopefully, the present investigation will provide an impetus for future work along these lines. Although a variety of approaches might be applied to develop creative thinking skills among doctoral students (Nickerson, 1999; Scott, Leritz, & Mumford, 2004), we hope the present study reminds investigators of an important consideration to be taken into account in such ventures. More specifically, effective educational interventions for doctoral students in the sciences, or other fields, should take into account the demands made on select creative processing skills within the particular fields under consideration.

Acknowledgements

We would like to thank Stephen Murphy, Whitney Helton-Fauth, Ginamarie Scott, Lynn Devenport, and Ryan Brown for their contributions to the present effort. The data collection was supported, in part, by the National Institutes of Health, National Center for Research Resources, General Clinical Research Center Grant M01 RR-14467. Parts of this work were also sponsored by a grant, 5R01-NS049535-02, from the National Institutes of Health and the Office of Research Integrity, Michael D. Mumford, Principal Investigator.

References

- Amabile TM. A model of creativity and innovation in organizations. Research in Organizational Behavior. 1988;10:123–167. [Google Scholar]

- Amabile TM, Conti R, Coon H, Lazenby J, Herron M. Assessing the work environment for creativity. Academy of Management Journal. 1996;39:1154–1184. [Google Scholar]

- Amabile TM, Mueller JS. Studying creativity, its processes, and its antecedents: An exploration of the componential theory of creativity. In: Zhou J, Shalley CE, editors. Handbook of organizational creativity. Lawrence Erlbaum Associates; New York: 2008. pp. 33–64. [Google Scholar]

- Antes AL, Brown RP, Murphy ST, Waples EP, Mumford MD, Connelly S, Devenport LD. Personality and ethical decision-making in research: The role of perceptions of self and others. Journal of Empirical Research on Human Research Ethics. 2007;2:15–34. doi: 10.1525/jer.2007.2.4.15. [DOI] [PubMed] [Google Scholar]

- Baer J. Evaluative thinking, creativity, and task specificity: Separating wheat from chaff is not the same as finding needles in haystacks. In: Runco M, editor. Critical creative processes. Hampton Press; Cresskill, N.J.: 2003. pp. 129–151. [Google Scholar]

- Baer M, Frese M. Innovation is not enough: Climates for initiative and psychological safety, process innovations, and firm performance. Journal of Organizational Behavior. 2003;24:45–68. [Google Scholar]

- Basadur M, Runco MA, Vega LA. Understanding how creative thinking skills, attitudes and behaviors work together: A causal process model. Journal of Creative Behavior. 2000;34:77–100. [Google Scholar]

- Baughman WA, Mumford MD. Process analytic models of creative capacities: Operations involves in the combination and reorganization process. Creativity Research Journal. 1995;8:37–62. [Google Scholar]

- Besemer SP, O’Quin K. Confirming the three-factor creative product analysis matrix model in an American sample. Creativity Research Journal. 1999;12:287–296. [Google Scholar]

- Brophy DR. Understanding, measuring, and enhancing individual creative problem-solving efforts. Creativity Research Journal. 1998;11:123–150. [Google Scholar]

- Byrne CL, Shipman AS, Mumford MD. The effects of forecasting on creative problem-solving: An experimental study. Creativity Research Journal. in press. [Google Scholar]

- Chi MT, Bassock M, Lewis MW, Reitman P, Glaser R. Self-explanations: How students study and use examples in learning to solve problems. Cognitive Science. 1989;13:145–182. [Google Scholar]

- Csikszentmihalyi M. Implications of a system’s perspective for the study of creativity. In: Stenberg RJ, editor. Handbook of creativity. Cambridge University Press; Cambridge, England: 1999. pp. 313–338. [Google Scholar]

- Dailey L, Mumford MD. Evaluative aspects of creative thought: Errors in appraising the implications of new ideas. Creativity Research Journal. 2006;18:367–384. [Google Scholar]

- Dewey J. How we think. Houghton; Boston, MA: 1910. [Google Scholar]

- Dunbar K. How do scientists really reason: Scientific reasoning in real-world laboratories. In: Sternberg RJ, Davidson JE, editors. The nature of insight. MIT Press; Cambridge, MA: 1995. pp. 365–396. [Google Scholar]

- Eisenberger R, Rhoades L. Incremental effects of reward on creativity. Journal of Personality and Social Psychology. 2001;81:728–741. [PubMed] [Google Scholar]

- Emmons RA. Narcissism: Theory and measurement. Journal of Personality and Social Psychology. 1987;52:11–17. doi: 10.1037//0022-3514.52.1.11. [DOI] [PubMed] [Google Scholar]

- Ericsson KA, Charness N. Expert performance: Its structure and acquisition. American Psychologist. 1994;49:725–747. [Google Scholar]

- Feist GJ. The influence of personality on artistic and scientific creativity. In: Sternberg RJ, editor. Handbook of creativity. Cambridge University Press; Cambridge, England: 1999. pp. 273–296. [Google Scholar]

- Feist GJ, Gorman ME. The psychology of science: Review and integration of a nascent discipline. Review of General Psychology. 1998;2:3–47. [Google Scholar]

- Finke RA, Ward TB, Smith SM. Creative cognition: Theory, research, and applications. MIT Press; Cambridge, MA: 1992. [Google Scholar]

- Ghiselin B. Ultimate criteria for two levels of creativity. In: Taylor CW, Barron F, editors. Scientific creativity: Its recognition and development. Wiley; New York: 1963. pp. 30–43. [Google Scholar]

- John OP, Donahue EM, Kentle RL. The Big Five Inventory: Versions 4a and 54. University of California, Institute of Personality and Social Research; Berkeley, CA: 1991. Technical Report. [Google Scholar]

- Kettner NW, Guilford JP, Christensen PR. A factor-analytic study across the domains of reasoning, creativity, and evaluation. Psychological Monographs. 1959;9:1–31. [Google Scholar]

- Kligyte V, Marcy RT, Waples EP, Sevier ST, Godfrey ES, Mumford MD, Hougen DF. Application of a sensemaking approach to ethics training for physical sciences and engineering. Science and Engineering Ethics. 2008;14:251–278. doi: 10.1007/s11948-007-9048-z. [DOI] [PubMed] [Google Scholar]

- Kuhn TS. The structure of scientific revolutions. 3rd. ed. University of Chicago Press; Chicago, IL: 1996. [Google Scholar]

- Lehman HC. Age and achievement. Princeton University Press; Princeton, NJ: 1953. [Google Scholar]

- Lehman HC. The psychologist’s most creative years. American Psychologist. 1966;21:363–369. doi: 10.1037/h0023537. [DOI] [PubMed] [Google Scholar]

- Lonergan DC, Scott GM, Mumford MD. Evaluative aspects of creative thought: Effects of idea appraisal and revision standards. Creativity Research Journal. 2004;16:231–246. [Google Scholar]

- Lubart TI. Models of the creative process: Past, present, and future. Creativity Research Journal. 2001;13:295–308. [Google Scholar]

- MacKinnon DW. The nature and nurture of creative talent. American Psychologist. 1962;17:484–495. [Google Scholar]

- Mendoza JL, Bard DE, Mumford MD, Ang SC. Criterion-related validity in multiple-hurdle designs: Estimation and bias. Organizational Research Methods. 2004;7:418–441. [Google Scholar]

- Merrifield PR, Guilford JP, Christensen PR, Frick JW. The role of intellectual factors in problem solving. Psychological Monographs. 1962;76:1–21. [Google Scholar]

- Motowidlo SJ, Dunnette MD, Carter GW. An alternative selection measure: The low-fidelity simulation. Journal of Applied Psychology. 1990;75:640–647. [Google Scholar]

- Mumford MD. Something old, something new: Revisiting Guilford’s conception of creative problem solving. Creativity Research Journal. 2001;13:267–276. [Google Scholar]

- Mumford MD, Baughman W, Maher M, Costanza D, Supinski E. Process-based measures of creative problem-solving skills: IV. Category combination. Creativity Research Journal. 1997;10:59–71. [Google Scholar]

- Mumford MD, Baughman WA, Sager CE. Picking the right material: Cognition processing skills and their role in creative thought. In: Runco MA, editor. Critical and creative thinking. Hampton; Cresskill, NJ: 2003. pp. 19–68. [Google Scholar]

- Mumford MD, Baughman WA, Supinski EP, Maher MA. Process-based measures of creative problem solving skills: II. Information encoding. Creativity Research Journal. 1996;9:77–88. [Google Scholar]

- Mumford MD, Baughman WA, Threlfall KV, Supinski EP, Costanza DP. Process-based measures of creative problem-solving skills: I. Problem construction. Creativity Research Journal. 1996;9:63–76. [Google Scholar]

- Mumford MD, Connelly S, Scott G, Espejo J, Sohl LM, Hunter ST, Bedell KE. Career experiences and scientific performance: A study of social, physical, life, and health sciences. Creativity Research Journal. 2005;17:105–129. [Google Scholar]

- Mumford MD, Decker BP, Connelly MS, Osburn HK, Scott GM. Beliefs and creative performance: Relationships across three tasks. Journal of Creative Behavior. 2002;36:153–181. [Google Scholar]

- Mumford MD, Devenport LD, Brown RP, Connelly MS, Murphy ST, Hill JH, Antes AL. Validation of ethical decision-making measures: Evidence for a new set of measures. Ethics and Behavior. 2006;16:319–345. [Google Scholar]

- Mumford MD, Gustafson SB. Creativity syndrome: Integration, application, and innovation. Psychological Bulletin. 1988;103:27–43. [Google Scholar]

- Mumford MD, Gustafson SB. Creative thought: Cognition and problem solving in a dynamic system. In: Runco MA, editor. Creativity research handbook. Vol. 2. Hampton; Cresskill, NJ: 2007. pp. 33–77. [Google Scholar]

- Mumford MD, Mobley MI, Uhlman CE, Reiter-Palmon R, Doares L. Process analytic models of creative capacities. Creativity Research Journal. 1991;4:91–122. [Google Scholar]

- Mumford MD, Schultz RA, Osburn HK. Planning in organizations: Performance as a multi-level phenomenon. In: Yammarino FJ, Dansereau F, editors. Research in multi-level issues (Vol. 1): The many faces of multi-level issues. Elsevier; Oxford, England: 2002. pp. 3–36. [Google Scholar]

- Mumford MD, Supinski EP, Baughman WA, Costanza DP, Threlfall KV. Process-based measures of creative problem-solving skills: I. Overall prediction. Creativity Research Journal. 1997;10:77–85. [Google Scholar]

- Mumford MD, Supinski EP, Threlfall KV, Baughman WA. Process-based measures of creative problem-solving skills: III. Category selection. Creativity Research Journal. 1996;9:395–406. [Google Scholar]

- Nickerson RS. Enhancing creativity. In: Sternberg RJ, editor. Handbook of creativity. Cambridge University Press; Cambridge, England: 1999. pp. 392–430.

- Osburn H, Mumford MD. Creativity and planning: Training interventions to develop creative problem-solving skills. Creativity Research Journal. 2006;18:173–190.

- Parnes SJ, Noller RB. Applied creativity: The creative studies project: Part II results of the two-year program. Journal of Creative Behavior. 1972;6:164–186.

- Paulhus DL. Two-component models of socially desirable responding. Journal of Personality and Social Psychology. 1984;46:598–609.

- Peterson NG, Mumford MD, Borman WC, Jeanneret PR, Fleishman EA. An Occupational Information System for the 21st Century: The Development of O*NET. American Psychological Association; Washington, DC: 1999.

- Plucker JA. Generalization of creativity across domains: Examination of the method effect hypothesis. Journal of Creative Behavior. 2004;38:1–12.

- Rostan SM. Problem finding, problem solving, and cognitive controls: An empirical investigation of critically acclaimed productivity. Creativity Research Journal. 1994;7:97–110.

- Ruch FL, Ruch WW. Employee Aptitude Survey. Psychological Services; Los Angeles, CA: 1980.

- Runco MA, Dow G, Smith WR. Information, experience, and divergent thinking: An empirical test. Creativity Research Journal. 2006;18:269–277.

- Runco MA, Okuda SM. Problem discovery, divergent thinking, and the creative process. Journal of Youth and Adolescence. 1988;17:211–220. doi: 10.1007/BF01538162.

- Rule JB. Theory and progress in social science. Cambridge University Press; New York: 1997.

- Schneider B. The people make the place. Personnel Psychology. 1987;40:437–453.

- Scott GM, Leritz LE, Mumford MD. Types of creativity training: Approaches and their effectiveness. Journal of Creative Behavior. 2004;38:149–179.

- Scott GM, Lonergan DC, Mumford MD. Conceptual combination: Alternative knowledge structures, alternative heuristics. Creativity Research Journal. 2005;17:79–98.

- Simonton DK. Scientific talent, training, and performance: Intellect, personality, and genetic endowment. Review of General Psychology. 2008;12:28–46.

- Sternberg RJ. A three-facet model of creativity. In: Sternberg RJ, editor. The nature of creativity: Contemporary psychological perspectives. Cambridge University Press; Cambridge, MA: 1985. pp. 124–147.

- Sternberg RJ. Intelligence, competence, and expertise. In: Elliot AJ, Dweck CS, editors. Handbook of competence and motivation. Guilford Publications; New York: 2005. pp. 15–30.

- Taylor JA. A personality scale of manifest anxiety. Journal of Abnormal & Social Psychology. 1953;48:285–290. doi: 10.1037/h0056264.

- Tierney P, Farmer SM. The Pygmalion process and employee creativity. Journal of Management. 2004;30:413–432.

- Wallas G. The art of thought. Harcourt Brace; New York: 1926.

- Ward TB, Patterson MJ, Sifonis CM. The role of specificity and abstraction in creative idea generation. Creativity Research Journal. 2004;16:1–9.

- Ward TB, Smith SM, Finke RA. Creative cognition. In: Sternberg RJ, editor. Handbook of creativity. Cambridge University Press; Cambridge, England: 1999. pp. 189–212.

- Vincent AS, Decker BP, Mumford MD. Divergent thinking, intelligence, and expertise: A test of alternative models. Creativity Research Journal. 2002;14:163–178.

- Weisberg RW. Expertise and reason in creative thinking: Evidence from case studies and the laboratory. In: Kaufman JC, Baer J, editors. Creativity and reason in cognitive development. Cambridge University Press; New York: 2006. pp. 7–42.

- Weisberg RW, Hass R. We are all partly right: Comment on Simonton. Creativity Research Journal. 2007;19:345–360.

- West MA. Sparkling fountains or stagnant ponds: An integrative model of creativity and innovation implementation in work groups. Applied Psychology: An International Review. 2002;51:355–387.

- Wrightsman LS. Assumptions about human nature: A social-psychological approach. 1st ed. Brooks/Cole; Monterey, CA: 1974.

- Zuckerman H. Scientific elite. Free Press; New York: 1977.