Abstract

Background

Central venous catheter placement is a common procedure with a high incidence of error. Other fields requiring high reliability have used Failure Mode and Effects Analysis (FMEA) to prioritize quality and safety improvement efforts.

Objective

To use FMEA in the development of a formal, standardized curriculum for central venous catheter training.

Methods

We surveyed interns regarding their prior experience with central venous catheter placement. A multidisciplinary team used FMEA to identify high-priority failure modes and to develop online and hands-on training modules to decrease the frequency, diminish the severity, and improve the early detection of these failure modes. We required new interns to complete the modules and tracked their progress using multiple assessments.

Results

Survey results showed new interns had little prior experience with central venous catheter placement. Using FMEA, we created a curriculum that focused on planning and execution skills and identified 3 priority topics: (1) retained guidewires, which led to training on handling catheters and guidewires; (2) improved needle access, which prompted the development of an ultrasound training module; and (3) catheter-associated bloodstream infections, which were addressed through training on maximum sterile barriers. Each module included assessments that measured progress toward recognition and avoidance of common failure modes. Since introducing this curriculum, the number of retained guidewires has fallen more than 4-fold. Rates of catheter-associated infections have not yet declined, and it will take time before ultrasound training will have a measurable effect.

Conclusion

The FMEA provided a process for curriculum development. Precise definitions of failure modes for retained guidewires facilitated development of a curriculum that contributed to a dramatic decrease in the frequency of this complication. Although infections and access complications have not yet declined, failure mode identification, curriculum development, and monitored implementation show substantial promise for improving patient safety during placement of central venous catheters.

Introduction

Central venous catheter (CVC) placement is a common procedure with a high incidence of error, resulting in failure to place a functional catheter, arterial puncture, pneumothorax, catheter-associated bloodstream infection, or retained guidewires.1–4 Improving the performance of this procedure requires a systematic strategy.5 Because residents perform most CVC placements in teaching settings, we developed a simulation-based training program for this intervention. Several studies have reported the benefits of simulation-based training for CVC placement. A prospective, randomized, controlled trial of standard training followed by practice on patients versus simulator-based training found that the simulator training group had a significantly higher level of comfort and ability in placing CVCs and significantly fewer complications than the group who trained using real patients.6 Another study showed that the knowledge and confidence gained from simulator-based CVC training were retained after 18 months.7

Systematic efforts to detect and address sources of error can result in further improvements. High-reliability industries, such as nuclear power and aerospace, use Failure Mode and Effects Analysis (FMEA)8 to identify high-priority failure modes. The FMEA process recognizes that it is impossible to eliminate all failures and focuses on reducing the frequency, decreasing the severity, and improving the detection of failures before they lead to harm. These systems also continually monitor performance and assess whether interventions have had the desired effect on frequency, severity, and detection of failure modes.5,8

Cardiopulmonary resuscitation (CPR) training already uses elements of FMEA, providing a standardized education format that includes opportunities for practice and for multiple assessments.9–14 In contrast, CVC placement is typically learned through “on-the-job” training sessions,15 which follow the tradition of “see one, do one, teach one.” Training in CVC placement rarely includes strategies to avoid or detect known failure modes and usually lacks an assessment component. As a result, it is difficult to ascertain whether a trainee has mastered the desired learning objectives before performing the procedure on a patient without direct supervision. The CVC curricula lag far behind CPR curricula, even though the average new intern can expect to encounter many more instances of CVC insertion.

This prompted us to develop a formal, standardized curriculum for CVC training based on data from our concurrent failure mode analysis. The curriculum builds upon simulation-based training that focuses instruction on important failure modes, an approach that is well established in other fields16–19 and has increasingly been adopted in health care programs.20,21 The process creates feedback loops similar to those found in the process-improvement literature.16,22–25

Methods

Environment and Participants

In 2006, our 1200-bed, tertiary hospital launched an initiative to improve patient safety during CVC placement. A multidisciplinary committee was formed with representation from internal medicine, surgery, radiology, emergency medicine, anesthesiology, neurology, and obstetrics and gynecology to develop and implement recommendations for improving performance during CVC placement in adult patients. The committee also sought the expertise of vascular access nurses, critical care nurses, process improvement consultants, and simulation experts. The effort was complemented by a formal value stream analysis conducted in March 2007. After consultation with our Institutional Review Board, it was determined that informed consent was not needed to participate in this curriculum because this training was a required part of new intern orientation.

In 2007, 112 physician trainees from 6 departments participated in the training curriculum. Over the course of 3 years, the number and diversity of trainees have expanded, and in 2009, the course included 124 physicians and 6 advanced-practice nurses from 9 departments.

The resulting modular curriculum included segments that were delivered online or through hands-on training sessions. Each hands-on training session was hosted in the medical center's simulation facilities and used 16 to 19 instructors. The online content was delivered through 2 different learning management systems.

Data for FMEA

A series of internal and external data sources were used to identify high-priority failure modes.5 Because reliable and objective data on specific failure modes were lacking when the project was initiated, our primary data sources were morbidity and mortality conferences, the Joint Commission's sentinel events, national patient safety goals, the Centers for Medicare and Medicaid Services “never events” list, and the personal observations of the multidisciplinary committee members. This analysis is described in detail in supplement 1 of the online version of this article. The committee then used simulation to investigate the mechanisms for these failures. Once failure modes were defined, learning objectives were developed to address them.

Developing Training to Address Failure Modes

To develop the online modules, the committee created a storyboard that included content and short assessments, using a commercial software package (Adobe Captivate 3.0, San Jose, CA) for early testing because it generated content in a file format (HTML) that could be viewed with Internet browsing software. Prototype training modules were then loaded onto a commercial hosting service that included a learning management system (Adobe Connect Pro, San Jose, CA) to facilitate more widespread testing and to track trainee responses to the online assessments. After revision, the online training modules were hosted on 1 of 2 commercial systems (Pathlore, BJC Learning Center, Town and Country, MO, or Etrinsic, a division of Simbionix, Denver, CO).

To develop the hands-on training sessions, the committee defined learning objectives for each simulation station. These instructor-led sessions were designed to last 20 to 30 minutes and covered 6 to 8 learning objectives. The instructors worked with the simulators and other materials to develop an approach that suited their individual style.

Deploying Training Modules

The online courses were available by the first of June during each year of the study, and the hands-on sessions were held during the second and third weeks of June. These sessions were a required part of new intern orientation. For the online courses, trainees were notified through an e-mail that contained the link to the course's secure website. All online modules included an assessment, and some modules, such as catheter-associated bloodstream infection, required trainees to obtain a passing score before starting their internship.

The hands-on courses were held in the medical school's simulation center over 3 to 4 days. Initially, training followed a serial pattern, but given the limited time for these sessions and the knowledge that repetition is a crucial aspect of learning any psychomotor skill,26 we moved toward a parallel training structure where trainees are able to repeatedly practice the skill during the 20- to 40-minute training session. In 2009, groups of 25 to 45 trainees rotated each day through a series of 10 different stations, and each station was hosted by 1 or more instructors who led the trainees through a series of exercises.

Assessing the Effectiveness of Training

We assessed the effectiveness of training through several different methods. For the online modules, in addition to trainee acceptance of the material, we used item analysis to identify problematic content or other factors that affected achievement of learning objectives. The learning management systems were capable of recording the number of incorrect responses to each question. Although most questions had a single best answer, several matching questions were used, and other questions required trainees to create an ordered list. Starting in 2009, a standardized assessment was added. This assessment required each trainee to return to the simulation center and complete an entire simulated procedure. Trainees were graded using standardized criteria, and a passing grade was required before completing postgraduate year 1 (PGY-1).

Results

Need for a Systematic CVC Training Program

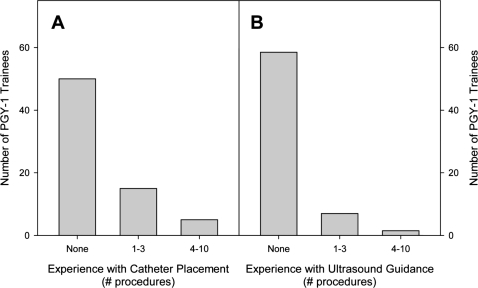

Survey data indicated that new interns (PGY-1 trainees) had little practical experience with CVC placement (Figure 1) or with crucial diagnostic tools, such as ultrasound. Of the 72 interns completing the survey as part of the training course, only 25% (18 of 72) indicated they had participated in a formal training program for central venous access during medical school. The data also indicated that most had little or no experience with placing CVCs, and even fewer had experience with ultrasound guidance.

Figure 1.

Survey Data From the 2009 PGY-1 Trainees

We estimated that these trainees would participate in more than 5000 central line placement procedures each year. Even in cases where they might not place the catheter, the trainees would still be expected to identify appropriate candidates for CVC placement, to care for patients having many different types of CVCs, and to make decisions about removing those catheters.27

Evolution of Central Line Training

The resulting training program evolved considerably during the past 3 years (online supplement 2). The current curriculum contains 4 parts: (1) a series of online training modules review the indications, contraindications, complications, and basic technique for CVC; (2) trainees participate in hands-on, simulation-based, instructor-led training sessions; (3) trainees are required to pass a standardized assessment during the latter months of their PGY-1; and (4) training in ultrasound image interpretation and scanning was added in 2009.

Identifying High-Priority Topics for Training

The FMEA process was used to identify high-priority topics for training.5,8 Priority numbers were determined by multiplying frequency, severity, and ability to escape detection (online supplement 1) and were initially based on rough estimates. In the coming years, our ability to track specific failure modes and refine those estimates will improve with the implementation of electronic health records.
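The priority calculation described above can be sketched in a few lines of Python. This is a minimal illustration rather than the committee's actual worksheet: the 1-to-10 rating scale is a common FMEA convention assumed here, the class and function names are hypothetical, and the ratings are placeholders, not estimates from this study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailureMode:
    """One failure mode with its three FMEA ratings (assumed 1-10 scale)."""
    name: str
    frequency: int  # how often the failure occurs (1 = rare, 10 = frequent)
    severity: int   # how harmful the failure is (1 = minor, 10 = catastrophic)
    escape: int     # ability to escape detection (1 = always caught, 10 = never)

    def priority(self) -> int:
        """Priority number = frequency x severity x ability to escape detection."""
        return self.frequency * self.severity * self.escape


def rank_failure_modes(modes):
    """Return failure modes sorted from highest to lowest priority number."""
    return sorted(modes, key=lambda m: m.priority(), reverse=True)


# Hypothetical ratings for illustration only; NOT taken from the study.
example_modes = [
    FailureMode("retained guidewire", frequency=2, severity=9, escape=7),
    FailureMode("arterial puncture", frequency=5, severity=7, escape=3),
    FailureMode("break in sterile technique", frequency=6, severity=8, escape=8),
]

for mode in rank_failure_modes(example_modes):
    print(f"{mode.name}: {mode.priority()}")
```

Because the three ratings are multiplied, a rough estimate in any one of them shifts the ranking proportionally, which is one reason refined operational data would be expected to change the priorities over time.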

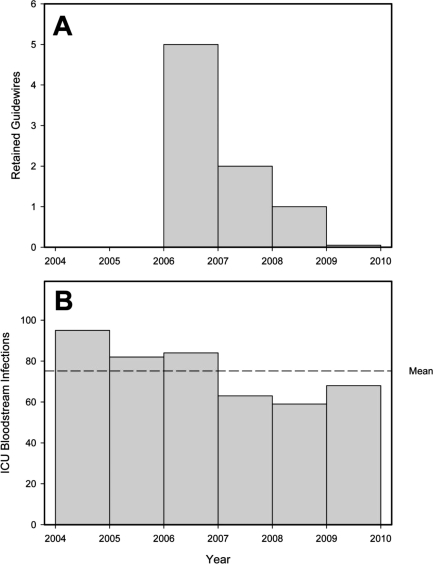

Retained guidewires became a high-priority topic when the Joint Commission designated them sentinel events in 2006. Although the number of retained guidewires observed at our institution fell in 2007 (Figure 2), the 2007 rate was still considered unacceptable. The failure modes that caused retained guidewires were examined in detail (online supplement 1), and the analysis resulted in changing the emphasis of training from keeping a firm grip on the guidewire to inserting the guidewire to its 20-cm mark and maintaining that position while the tract was dilated and the catheter inserted (Table 1). Since introducing the catheter and guidewire skills module, the number of retained guidewires has decreased further (Figure 2).

Figure 2.

Operational Data Regarding High-Priority Failure Modes

Bar height for 2009 was based on data from the first 6 months of 2009—the total number of catheter placements throughout the institution is not known but is estimated to be approximately 10 000 per year throughout this period. Note: 2006 is the baseline—the number of retained guidewires has been tracked since 2006 and the number of catheter-associated bloodstream infections in patients in intensive care has been tracked since 2004; the first yearly training course was conducted in the summer of 2007.

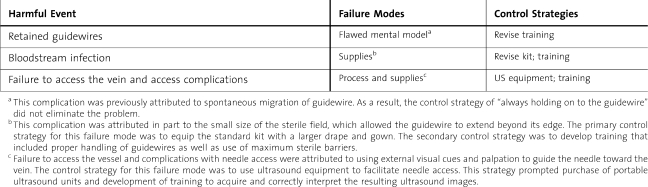

Table 1.

Control Strategies for Selected Harmful Events

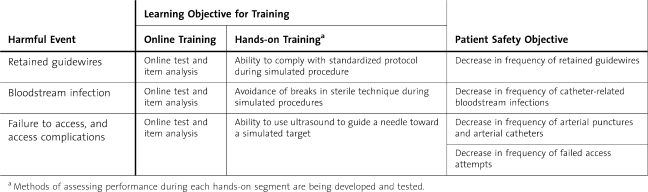

Table 2.

Assessing the Effectiveness of the Training Program

Failure to access the vein and unintended arterial puncture were considered a linked pair of high-priority failure modes that could be addressed by ultrasound guidance.28,29 To help address this need, the hospital purchased a number of ultrasound units in 2008. That purchase prompted the multidisciplinary group to begin developing and testing training materials for ultrasound guidance. Module development required a detailed evaluation of the tasks performed during ultrasound-guided access and the possible failure modes. That analysis suggested dividing ultrasound guidance into 2 parts30,31: (1) interpreting the ultrasound image and using it to determine a suitable needle path from the skin to the internal jugular vein (Figure 3C), and (2) using real-time ultrasound to confirm that the needle was being advanced along the chosen path. The failure modes for image interpretation were catalogued and used to develop training objectives. The resulting online training modules were introduced in 2009. At the same time, the team began creating content needed for a hands-on training module that included objective assessment tools.

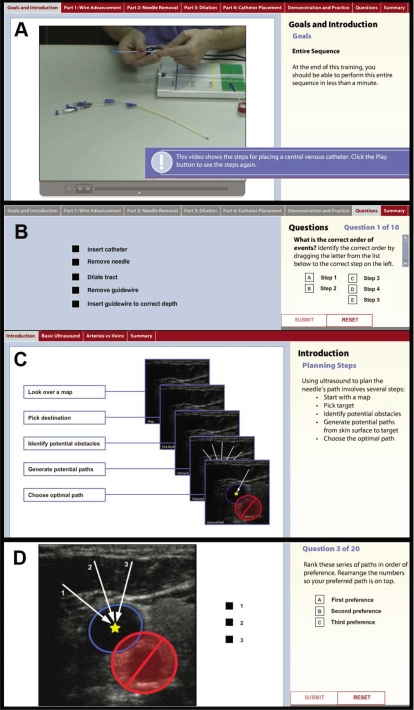

Figure 3.

Content From The Online Training Modules

Panel A shows how catheter and guidewire skills were introduced using video with audio narration. The entire sequence was presented and later divided into segments that were practiced in detail. Panel B illustrates 1 of the 10 questions used in this module to assess whether the trainee knew the correct sequence of events. The learning management system used for this training was capable of automatically scoring such items. Panel C shows the introduction to the ultrasound interpretation module. The desired sequence was presented and a mapping analogy was used to help convey the steps needed to examine an ultrasound image and determine the optimal needle path. Panel D is 1 of the questions from this module's final exam and again uses the ordered list task model. In this example, path 3 is preferred because it minimizes the probability that the needle might be advanced too far and enter the red “safety zone” surrounding the carotid artery. Path 1 is the least desirable because the needle would be directed toward the carotid artery, and it also requires advancing the needle a greater distance than path 2.

Breaks in sterile technique have always been a high-priority failure mode because catheter-related bloodstream infections cause substantial, excess morbidity and mortality.32–34 Studies have illustrated how the rate of such infections can be decreased through maximum sterile barriers and hand hygiene.35 Before the 2008 course, the multidisciplinary committee revised the contents of the standard central line tray so that it included larger drapes. Since 2008, the course has emphasized the rationale for these changes and has included a demonstration of how guidewires could easily extend beyond the edge of the smaller drapes. Assessment of competence in sterile techniques was also added in 2009. The analysis of operational data (Figure 2) has yet to show significantly fewer catheter-associated bloodstream infections.

Using Assessments to Measure the Effectiveness of the Standardized Curriculum

The learning objectives provided a means of measuring the curriculum's effectiveness (Table 2). The online training modules combined explanations and examples of the desired mental models with assessments designed to elicit evidence that the trainee had mastered these objectives (Figure 3). This paradigm was based on motor control theory, which contends that all planned actions consist of planning and execution stages. The online assessments focused on planning skills because cognitive psychologists have emphasized the importance of errors in planning, which unlike errors in execution, are often missed.19,36,37 They also stress how robust planning skills allow the operator to anticipate execution errors and to develop appropriate contingency plans, which further improve system reliability.38 As shown in Figure 3, the online modules were well suited to the assessment of planning skills. In contrast, execution skills were best evaluated by direct observation during the hands-on sessions.

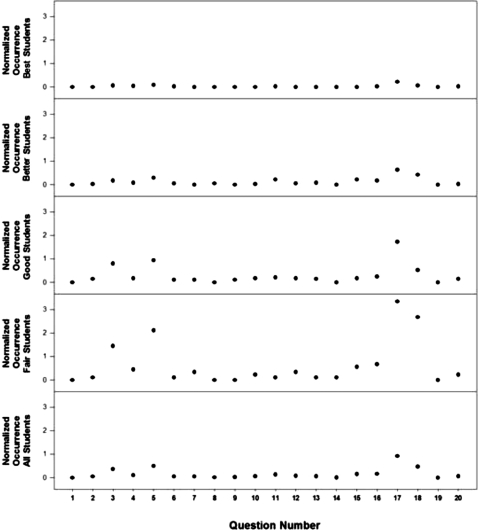

The assessment process also revealed which segments of the current curriculum were effective and which required improvements (Figure 4). Although the best students demonstrated few errors, a pattern emerged when analyzing the performance of good and fair students. A few questions accounted for most of the errors. Applying FMEA to this data set led to 2 potential remedies: (1) errors that resulted from poorly worded questions could be addressed by revising the test, and (2) errors that resulted from inadequate time, context, or exercises for a topic could be addressed by revising elements of the curriculum. Monitoring the results after changing the content or the question provides feedback on whether the changes have the intended results. This overall process of developing our curriculum is summarized in online supplement 3, a systematic approach to venous access.

Figure 4.

Item Analysis for Final Exam in the Ultrasound Image Interpretation Module

The average number of incorrect choices for each item was determined by examining the performance records captured using the online assessment's learning management system. For this analysis, trainees were divided into 5 categories based on their overall score during this assessment (best, >95%; better, 90% to 95%; good, 85% to 90%; fair, 80% to 85%; poor, <80%). These results illustrate how 4 test items (no. 3, 5, 17, and 18) account for most of the errors and how the probability of an error increases as student performance falls. Please note that item 3 from this assessment is presented in panel D of Figure 3.
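The item analysis summarized above can be approximated with a short Python sketch. It is an illustration under stated assumptions, not the learning management system's own export or scoring logic: the record layout (an overall score paired with per-item counts of incorrect choices) is hypothetical, scores that fall on a shared band boundary (85% or 90%) are assigned to the higher band, and the sample records are invented placeholders.

```python
from collections import defaultdict


def score_band(score: float) -> str:
    """Map an overall percentage score to one of the five performance bands.

    Assumption: a score on a shared boundary (85 or 90) goes to the higher band.
    """
    if score > 95:
        return "best"
    if score >= 90:
        return "better"
    if score >= 85:
        return "good"
    if score >= 80:
        return "fair"
    return "poor"


def mean_incorrect_by_band(records):
    """Average number of incorrect choices per item within each score band.

    records: iterable of (overall_score, {item_number: incorrect_choices}).
    An item missing from a trainee's dict counts as zero incorrect choices.
    Returns {band: {item_number: mean_incorrect_choices}}.
    """
    totals = defaultdict(lambda: defaultdict(int))
    counts = defaultdict(int)
    for score, incorrect in records:
        band = score_band(score)
        counts[band] += 1
        for item, n_wrong in incorrect.items():
            totals[band][item] += n_wrong
    return {
        band: {item: total / counts[band] for item, total in items.items()}
        for band, items in totals.items()
    }


# Hypothetical records for illustration only; NOT data from the study.
example_records = [
    (97.0, {3: 0, 5: 0}),
    (88.0, {3: 1, 5: 0}),
    (86.0, {3: 2, 5: 1}),
    (78.0, {3: 3, 5: 2}),
]
result = mean_incorrect_by_band(example_records)
```

Sorting each band's items by their mean number of incorrect choices would then surface the small set of questions responsible for most errors, which is the pattern the figure is meant to reveal.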

Discussion

Our attempt to develop a standardized curriculum for CVC placement began with a global needs assessment and used task and failure mode analysis to identify specific training needs. Failure modes were then separated into planning and execution failures. This division prompted us to develop a combination of online training modules, which focused on planning skills, and hands-on exercises, which targeted execution skills. Planning skills were assessed by incorporating questions into the online modules. Execution skills were assessed by having instructors observe performance during simulated procedures. Assessment results were used to complete a data-driven, curriculum-development cycle.

Aspects of developing a standardized curriculum for CVC placement proved challenging. First, trainees from at least 8 different departments were organized into more than 15 different teams that place CVCs. The resulting variation in processes confounded efforts to systematically collect data on failure modes and to develop standardized training. Second, this effort required substantial resources. Experts from multiple disciplines spent considerable time in meetings to determine objectives and coordinate training approaches. Creating the online modules required multiple development and testing cycles before they were ready for use. The hands-on training and assessment sessions required coordinating the schedules of multiple instructors with the availability of the simulation center.

The effectiveness of any curriculum should be judged according to its ability to change the learner's behavior. Too often training is judged according to the ease with which it is produced, packaged, and delivered. Instead, curricula should be structured as environments that facilitate the creation and refinement of each trainee's mental models.39–41 As such, we intend to continually focus, not on the amount of content delivered or performance observed during simulated procedures, but on evidence of how trainees used the desired mental models when caring for patients. Achieving this goal requires verifying that trainees not only learn the overall process needed to reliably choose, place, maintain, and remove CVCs (online supplement 4) but also reliably apply the resulting knowledge, skills, and abilities to their daily work.

One of the major advantages of the FMEA approach is that it creates a feedback cycle where data from clinical operations can be integrated into curriculum development. By requiring objective definitions of individual failure modes, FMEA improved our understanding of the underlying system. This improved our ability to measure performance during both simulated and actual patient procedures. Said another way, one does not fully understand how any complex system works until one has to correctly diagnose its failures, prescribe appropriate remedies, and assess the effectiveness of the actions taken. Indeed, medical care itself benefits from repeated cycles of diagnosis, treatment, and monitoring.

Limitations of FMEA

Learning Objectives Will Vary From Site to Site

We caution other programs and institutions against adopting our training strategies without first conducting their own FMEA. Although failure modes are universal, the frequency, severity, and detection of each individual failure mode will depend on the capabilities of each system's control strategies. Thus, FMEA results and the priority for individual failure modes will vary between programs.5,8 As a result, our curriculum will not be universally effective. However, given that agencies such as the Joint Commission and Centers for Medicare and Medicaid Services have set national priorities that can be mapped back to certain failure modes, a few failure modes will be high-priority targets for many systems. This suggests that effective control strategies will likely prove useful in a wide variety of care settings.

Training Versus Other Risk Reduction Strategies

This manuscript focuses on training because, for the foreseeable future, system performance will remain heavily dependent on operator skill. The benefits from ultrasound, maximum sterile barriers, and improved catheter and guidewire skills will only be derived if the human operators effectively employ ultrasound guidance, rigorously maintain sterility, and adroitly manipulate the catheters and guidewires. We contend that the traditional, informal training method of “see one, do one, teach one” is highly variable and leads to variation in skill that degrades system reliability far more than the decrements attributable to variation in tools, environment, or patients.

Still, we recognize that overzealous efforts to standardize training can have undesirable consequences,31 such as a loss of system flexibility. Rigid application of inflexible mental models will lead to complications when unforeseen or “latent” failure modes are encountered.36,37 Heterogeneous training populations are another shortcoming of standardized training programs because standardized training follows a “one size fits all” approach. Individuals with preexisting skills and who are fast learners will become bored when reviewing introductory material, whereas true novices will suffer from cognitive overload if the training pace is hastened.40 We expect that adaptive training programs might overcome these limitations because they use assessments to diagnose preexisting skills and prescribe coursework accordingly. However, such adaptive programs require substantially more resources to develop, and they introduce their own set of potential failure modes.

Importance of Continually Revising the Curriculum

Curricula must be continually revised to maximize the efficacy and efficiency of training.39,42–48 The marked decrease in retained guidewires suggests the training program has effectively addressed the underlying failure modes. The lack of marked improvement in catheter-associated bloodstream infections suggests that either the training program was ineffective at changing physician behavior or that factors other than breaks in sterile technique during catheter insertion are the primary cause for these infections. Our data on curriculum effectiveness suggest the current curriculum is marginally effective, and the observed improvements in patient outcomes could easily be attributed to other factors. However, the current effort has convinced members of the multidisciplinary committee that there is a clear need for the wide variety of teams involved in central venous access to continue working together. As described by Senge and Argote, organizational learning is driven by feedback loops.16,17 These loops require data, and they, in turn, require the creation of operational definitions of success and failure at numerous steps in the process. Learning organizations rely on personal mastery and explicit communication of the resulting mental models. Although there will always be reasons why a particular mental model might fail for a specific patient, without an explicit description and communication of the mental model and the nature of the failure, the organization will miss an opportunity to learn from that event. As a result, curriculum development never ends. Performance can always be improved.

Footnotes

James R. Duncan, MD, PhD, is an Associate Professor of Radiology at Washington University School of Medicine and St. Louis Children's Hospital; Katherine Henderson, MD, is an Assistant Professor of Clinical Medicine at Washington University School of Medicine and Barnes-Jewish Hospital; Mandie Street, RT, is a Quality and Safety Coordinator at Washington University School of Medicine; Amy Richmond, RN, is an Infection Control Nurse at Barnes-Jewish Hospital; Mary Klingensmith, MD, is a Professor of Surgery at Washington University School of Medicine; Elio Beta, BS, is a Medical Student at the University of Illinois School of Medicine; Andrea Vannucci, MD, is an Assistant Professor of Anesthesiology at Washington University School of Medicine and Barnes-Jewish Hospital; and David Murray, MD, is a Professor of Anesthesiology at Washington University School of Medicine and St. Louis Children's Hospital.

The authors report no conflicts of interest.

This work was supported by grants from the Barnes-Jewish Hospital Foundation and the Anesthesia Patient Safety Foundation.

The authors wish to acknowledge Dr Jonathan Gottlieb for his enthusiastic support of this project. Drs Gottlieb and Vannucci served on the multidisciplinary Central Venous Access Committee along with Drs Geoffrey Cislo, Perren Cobb, Daniel Theodoro, Brent Ruoff, Michael Diringer, Katherine Henderson, David Murray, and James Duncan. Terra Mouser, MBA; Theresa Sieber; Amy Richmond, RN, MHS, CIC; and Cathy Robinson, BA, RN, CRNI, also served on the committee. Julie Woodhouse, BSN, MEd, and Rebecca Snider, BSN, RN, MHS, were instrumental in setting up and conducting the hands-on simulation training sessions and assessments.

Editor's Note: The online version of this article contains supplemental material on determining and investigating high-priority failure modes, the evolution of the curriculum, the process map and feedback loops for curriculum development, and a systematic approach to venous access.

References

- 1.Merrer J., De Jonghe B., Golliot F., et al. Complications of femoral and subclavian venous catheterization in critically ill patients: a randomized controlled trial. JAMA. 2001;286(6):700–707. doi: 10.1001/jama.286.6.700. [DOI] [PubMed] [Google Scholar]

- 2.Sznajder J. I., Zveibil F. R., Bitterman H., Weiner P., Bursztein S. Central vein catheterization. Failure and complication rates by three percutaneous approaches. Arch Intern Med. 1986;146(2):259–261. doi: 10.1001/archinte.146.2.259. [DOI] [PubMed] [Google Scholar]

- 3.Mansfield P. F., Hohn D. C., Fornage B. D., Gregurich M. A., Ota D. M. Complications and failures of subclavian-vein catheterization. N Engl J Med. 1994;331(26):1735–1738. doi: 10.1056/NEJM199412293312602. [DOI] [PubMed] [Google Scholar]

- 4.Martin C., Eon B., Auffray J. P., Saux P., Gouin F. Axillary or internal jugular central venous catheterization. Crit Care Med. 1990;18(4):400–402. doi: 10.1097/00003246-199004000-00010. [DOI] [PubMed] [Google Scholar]

- 5.Sridhar S., Duncan J. R. Strategies for choosing process improvement projects. J Vasc Interv Radiol. 2008;19(4):471–477. doi: 10.1016/j.jvir.2008.01.010. [DOI] [PubMed] [Google Scholar]

- 6.Britt R. C., Novosel T. J., Britt L. D., Sullivan M. The impact of central line simulation before the ICU experience. Am J Surg. 2009;197(4):533–536. doi: 10.1016/j.amjsurg.2008.11.016. [DOI] [PubMed] [Google Scholar]

- 7.Millington S. J., Wong R. Y., Kassen B. O., Roberts J. M., Ma I. W. Improving internal medicine residents' performance, knowledge, and confidence in central venous catheterization using simulators. J Hosp Med. 2009;4(7):410–416. doi: 10.1002/jhm.570. [DOI] [PubMed] [Google Scholar]

- 8.Stamatis D. H. Failure Mode and Effect Analysis: FMEA from Theory to Execution. 2nd ed. Milwaukee, WI: American Society for Quality, Quality Press; 2003.

- 9.American Heart Association. 2005 American Heart Association (AHA) guidelines for cardiopulmonary resuscitation (CPR) and emergency cardiovascular care (ECC) of pediatric and neonatal patients: pediatric basic life support. Pediatrics. 2006;117(5):e989–e1004. doi: 10.1542/peds.2006-0219.

- 10.Chamberlain D. A., Hazinski M. F. Education in resuscitation: an ILCOR symposium: Utstein Abbey: Stavanger, Norway: June 22–24, 2001. Circulation. 2003;108(20):2575–2594. doi: 10.1161/01.CIR.0000099898.11954.3B.

- 11.Paraskos J. A. History of CPR and the role of the national conference. Ann Emerg Med. 1993;22(2, pt 2):275–280. doi: 10.1016/s0196-0644(05)80456-1.

- 12.Brennan R. T., Braslow A. Skill mastery in cardiopulmonary resuscitation training classes. Am J Emerg Med. 1995;13(5):505–508. doi: 10.1016/0735-6757(95)90157-4.

- 13.Brennan R. T., Braslow A., Batcheller A. M., Kaye W. A reliable and valid method for evaluating cardiopulmonary resuscitation training outcomes. Resuscitation. 1996;32(2):85–93. doi: 10.1016/0300-9572(96)00967-7.

- 14.Braslow A., Brennan R. T., Newman M. M., Bircher N. G., Batcheller A. M., Kaye W. CPR training without an instructor: development and evaluation of a video self-instructional system for effective performance of cardiopulmonary resuscitation. Resuscitation. 1997;34(3):207–220. doi: 10.1016/s0300-9572(97)01096-4.

- 15.Girard T. D., Schectman J. M. Ultrasound guidance during central venous catheterization: a survey of use by house staff physicians. J Crit Care. 2005;20(3):224–229. doi: 10.1016/j.jcrc.2005.06.005.

- 16.Senge P. M. The Fifth Discipline: The Art and Practice of the Learning Organization. New York, NY: Doubleday Publishing; 2006.

- 17.Argote L. Organizational Learning: Creating, Retaining and Transferring Knowledge. New York, NY: Springer; 2005.

- 18.Hudson P. Applying the lessons of high risk industries to health care. Qual Saf Health Care. 2003;12(suppl 1):i7–i12. doi: 10.1136/qhc.12.suppl_1.i7.

- 19.Klein G. A. Sources of Power: How People Make Decisions. Cambridge, MA: MIT Press; 1998.

- 20.Rodriguez-Paz J. M., Kennedy M., Salas E., et al. Beyond “see one, do one, teach one”: toward a different training paradigm. Qual Saf Health Care. 2009;18(1):63–68. doi: 10.1136/qshc.2007.023903.

- 21.Pronovost P. J., Holzmueller C. G., Martinez E., et al. A practical tool to learn from defects in patient care. Jt Comm J Qual Patient Saf. 2006;32(2):102–108. doi: 10.1016/s1553-7250(06)32014-4.

- 22.Deming W. The New Economics for Industry, Government, Education. 2nd ed. Cambridge, MA: MIT Press; 2000.

- 23.Deming W. Out of the Crisis. Cambridge, MA: MIT Press; 2000.

- 24.Box G. Improving Almost Anything: Ideas and Essays. Hoboken, NJ: Wiley-Interscience; 2006.

- 25.Duncan J. R. Strategies for improving safety and quality in interventional radiology. J Vasc Interv Radiol. 2008;19(1):3–7. doi: 10.1016/j.jvir.2007.09.026.

- 26.Ericsson K. Deliberate practice and the acquisition and maintenance of expert performance in medicine and related domains. Acad Med. 2004;79(suppl):S70–S81. doi: 10.1097/00001888-200410001-00022.

- 27.Climo M., Diekema D., Warren D. K., et al. Prevalence of the use of central venous access devices within and outside of the intensive care unit: results of a survey among hospitals in the prevention epicenter program of the Centers for Disease Control and Prevention. Infect Control Hosp Epidemiol. 2003;24(12):942–945. doi: 10.1086/502163.

- 28.Hind D., Calvert N., McWilliams R., et al. Ultrasonic locating devices for central venous cannulation: meta-analysis. BMJ. 2003;327(7411):361. doi: 10.1136/bmj.327.7411.361.

- 29.National Institute for Clinical Excellence. Guidance on the use of ultrasound locating devices for placing central venous catheters. Available at: http://www.nice.org.uk/nicemedia/pdf/Ultrasound_49_guidance.pdf. Accessed July 19, 2010.

- 30.Beta E., Parikh A. S., Street M., Duncan J. R. Capture and analysis of data from image-guided procedures. J Vasc Interv Radiol. 2009;20(6):769–781. doi: 10.1016/j.jvir.2009.03.012.

- 31.Bucholz E. I., Duncan J. R. Assessing system performance. J Vasc Interv Radiol. 2008;19(7):987–994. doi: 10.1016/j.jvir.2008.03.018.

- 32.O'Grady N. P., Alexander M., Dellinger E. P., et al. Guidelines for the prevention of intravascular catheter-related infections. Infect Control Hosp Epidemiol. 2002;23(12):759–769. doi: 10.1086/502007.

- 33.Raad I. Intravascular-catheter-related infections. Lancet. 1998;351(9106):893–898. doi: 10.1016/S0140-6736(97)10006-X.

- 34.McGee D. C., Gould M. K. Preventing complications of central venous catheterization. N Engl J Med. 2003;348(12):1123–1133. doi: 10.1056/NEJMra011883.

- 35.Pronovost P., Needham D., Berenholtz S., et al. An intervention to decrease catheter-related bloodstream infections in the ICU. N Engl J Med. 2006;355(26):2725–2732. doi: 10.1056/NEJMoa061115.

- 36.Reason J. T. Human Error. New York, NY: Cambridge University Press; 1990.

- 37.Reason J. T. Managing the Risks of Organizational Accidents. Farnham, Surrey, UK: Ashgate; 1997.

- 38.Ebeling C. E. An Introduction to Reliability and Maintainability Engineering. New York, NY: McGraw Hill; 1997.

- 39.Gagne R., Wager W. W., Golas K. C., Keller J. M. Principles of Instructional Design. 5th ed. Belmont, CA: Wadsworth/Thomson Learning; 2005.

- 40.Clark R., Nguyen F., Sweller J. Efficiency in Learning: Evidence-Based Guidelines to Manage Cognitive Load. San Francisco, CA: Pfeiffer; 2006.

- 41.Clark R. Building Expertise: Cognitive Methods for Training and Performance Improvement. 2nd ed. Silver Spring, MD: International Society for Performance Improvement; 2003.

- 42.Mager R. Making Instruction Work. 2nd ed. Atlanta, GA: Center for Effective Performance; 1997.

- 43.Mager R. Preparing Instructional Objectives. 3rd ed. Atlanta, GA: Center for Effective Performance; 1997.

- 44.Mager R. Analyzing Performance Problems. 3rd ed. Atlanta, GA: Center for Effective Performance; 1997.

- 45.Mager R. Measuring Instructional Results. 3rd ed. Atlanta, GA: Center for Effective Performance; 1997.

- 46.Mager R. How to Turn Learners On… Without Turning Them Off. 3rd ed. Atlanta, GA: Center for Effective Performance; 1997.

- 47.Mager R. Goal Analysis. 3rd ed. Atlanta, GA: Center for Effective Performance; 1997.

- 48.Oliva P. Developing the Curriculum. 6th ed. Boston, MA: Pearson Education, Inc; 2005.