Abstract

Objective

The purpose of this article was to develop and determine the utility of a compliance form in evaluating and teaching the Accreditation Council for Graduate Medical Education competencies of professionalism, practice-based learning and improvement, and systems-based practice.

Methods

In 2006, we introduced a 17-item compliance form in an obstetrics and gynecology residency program. The form prospectively monitored residents on attendance at required activities (5 items), accountability for required obligations (9 items), and completion of assigned projects (3 items). Scores were compared with faculty evaluations of residents, with resident status as a contributor or a concerning resident, and with the residents' conflict styles as measured by the Thomas-Kilmann Conflict MODE Instrument.

Results

Our analysis of 18 residents for academic year 2007–2008 showed mean (standard error of the mean) compliance scores of 577 (65.3) for postgraduate year (PGY)-1, 692 (42.4) for PGY-2, 535 (23.3) for PGY-3, and 651.6 (37.4) for PGY-4. Non-Hispanic white residents had significantly higher scores on compliance, on faculty evaluations of interpersonal and communication skills, and on competence in systems-based practice. Contributing residents had significantly higher compliance scores than concerning residents. Senior residents had significantly higher accountability scores than junior residents, and junior residents had higher project completion scores. Attendance scores increased and accountability scores decreased significantly between the first and second 6 months of the academic year. Compliance scores correlated positively with the competing and collaborating conflict styles and correlated negatively, and significantly, with the avoiding and accommodating conflict styles.

Conclusions

Maintaining a compliance form allows residents and residency programs to focus on issues that affect performance, and it facilitates assessment of the ACGME competencies. Postgraduate year, behavior, and conflict styles appear to be associated with compliance. The lack of association with faculty evaluations suggests that the form and the faculty evaluations capture different perceptions of residents' behavior.

Introduction

The Accreditation Council for Graduate Medical Education (ACGME) emphasizes the need to teach and assess resident performance on 6 general competencies.1 A systematic search of the medical and medical education literature between 1996 and 2003 identified the following expectations for the assessment of the competencies: (1) there should be multiple assessments by multiple observers using multiple tools at multiple time points; (2) tools should be reliable, reproducible, and valid; (3) tools should be practical (ie, feasible, convenient, easy to use, inexpensive to implement) and have a low time commitment; (4) tools must produce qualitative and/or quantitative data, with direct linkage to improvement in educational outcomes in the future; (5) the assessment process must be linked to explicit and public learning objectives; (6) the grading scale should be open and clearly defined; and (7) the process should be judged as fair and accurate by both faculty and residents.2,3

A quantitative compliance form that facilitates the assessment of residents prospectively and serially would thus be an acceptable assessment tool. This tool would allow the residency program to evaluate compliance issues that affect residents' functioning and performance, and could prompt the development of appropriate remediation plans. Our literature search did not find any studies describing quantitative tools for measuring compliance; rather, we found only studies that evaluated the competencies in a general fashion.4 A national review of 272 internal medicine residency programs showed that they were using an average of 4.2 to 6.0 tools to evaluate residents, with a heavy reliance on rating forms, and did not find any compliance forms useful in the assessment of the ACGME competencies.5 The literature reviews showed that compliance evaluations in residency education appeared to be limited primarily to assessment of program compliance with ACGME standards, particularly those related to resident duty hours.2,3,6–8

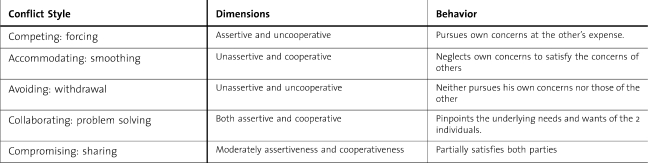

Physicians' work entails safe patient care in a multidisciplinary team setting. Conflict is universal, and the ability to resolve conflict effectively and appropriately would determine the effectiveness of residents' learning and their ability to function while being compliant with residency requirements. The Thomas-Kilmann Conflict MODE Instrument (TKI) (table 1) has been used in studies on conflict management in health care. The TKI is an ipsative (forced choice) measure consisting of 30 statement pairs, used to assess the 5 conflict modes of competing (assertive and uncooperative), collaborating (assertive and cooperative), compromising (intermediately assertive and cooperative), accommodating (unassertive and cooperative), and avoiding (unassertive and uncooperative).9,10

Table 1.

Thomas-Kilmann Conflict Mode Instrument
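The forced-choice scoring described above can be illustrated with a minimal Python sketch. It assumes that each statement pair maps its two options to two of the 5 conflict modes and that a resident's profile is the count of choices per mode. The function name `score_tki` and the 3-pair `toy_key` are hypothetical and exist only for illustration; they are not the instrument's real answer key, which has 30 pairs.

```python
from collections import Counter

MODES = ("competing", "collaborating", "compromising", "accommodating", "avoiding")


def score_tki(answer_key, responses):
    """Tally how often each conflict mode was chosen.

    answer_key: list of (mode_for_A, mode_for_B) tuples, one per statement pair.
    responses:  list of "A" or "B", one per statement pair.
    """
    if len(answer_key) != len(responses):
        raise ValueError("one response is required per statement pair")
    tally = Counter({mode: 0 for mode in MODES})
    for (mode_a, mode_b), choice in zip(answer_key, responses):
        if choice not in ("A", "B"):
            raise ValueError(f"invalid response: {choice!r}")
        tally[mode_a if choice == "A" else mode_b] += 1
    return dict(tally)


# Hypothetical 3-pair key, for illustration only (not the real instrument).
toy_key = [
    ("competing", "avoiding"),
    ("collaborating", "accommodating"),
    ("compromising", "competing"),
]
toy_scores = score_tki(toy_key, ["A", "B", "B"])
print(toy_scores)
```

Because every response adds exactly one point to exactly one mode, the mode scores always sum to the number of statement pairs. This is what makes the measure ipsative: a higher score on one mode necessarily comes at the expense of the others.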

The objective of this study was to describe the development and the utility of a compliance form in evaluating and teaching the ACGME competencies of professionalism, practice-based learning and improvement, and systems-based practice, and to compare compliance scores with residents' conflict style preferences using the TKI. We chose these 3 ACGME competencies because (1) they are all involved in residents' compliance regarding their professional, educational, and clinical obligations, and (2) in our experience and that of others, they are more difficult to assess with other evaluation tools. We assessed a 17-item compliance form that prospectively monitors residents on attendance at required activities, accountability for required obligations, and completion of assigned projects. Our hypothesis was that developing and maintaining a compliance form to assess ACGME competencies is feasible and useful in the assessment of residents' performance.

Methods

Setting

The setting for the study was the obstetrics and gynecology residency at Cedars-Sinai Medical Center in Los Angeles, which trains 6 residents per year in a 4-year ACGME-accredited program. The clinical department is composed of 20 full-time faculty members and private clinicians who admit their patients to the medical center. The institutional review board granted this study exempt status because only deidentified information of educational assessments was used. In addition, residents consented to the use of their evaluations and scores in the study.

Compliance Form

The compliance form was introduced in 2006 as an innovative approach to assess the ACGME competencies and to allow ongoing structured monitoring of residents' ability to complete required tasks that were thought to be important to accreditation and practice. This form was adapted from an evaluation tool developed in 2005 by the University of Iowa Pediatric Residency Program.11 Points are assigned for completing required elements and professional responsibilities. We developed an initial list of all tasks and responsibilities expected of the residents and assigned points to each activity based on the perceived value of an assessment of the ACGME competencies of professionalism, practice-based learning and improvement, and systems-based practice. All residents were included in the compliance form evaluation process, and residents in all postgraduate years had equal opportunity to attend committees, perform procedures, do research, take quizzes, and participate in noncompensated duties.

The 17 items in the compliance form comprised attendance (5 items), responsibilities or accountability (9 items), and project completion (3 items). This information was collected and scored by the residency program coordinators, with the overall score being the sum of the items attained. The compliance scores were reviewed for accuracy by the program director, sent monthly to residents for review, and discussed at the residents' biannual meeting with the program director. For deficiencies, action plans were developed, which included reassigning residents' rotations based on case log deficiencies, working with residents to meet required research project timelines, and getting residents to complete delinquent medical records or evaluations. At the end of the academic year, the residents with the highest compliance score for each postgraduate year and the overall highest score were given awards at the graduation dinner. A minimum score per year (472) was selected for overall compliance, and residents who fell below the benchmark were identified for remediation.
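The scoring rule described above (sum the points of the items attained, then compare the total with the annual benchmark of 472) can be sketched in Python as follows. The item names and point values below are invented for illustration and are not taken from the actual form; only the three categories and the benchmark come from the text.

```python
from dataclasses import dataclass

MIN_ANNUAL_SCORE = 472  # annual benchmark reported in the text


@dataclass(frozen=True)
class Item:
    name: str      # hypothetical item label
    category: str  # "attendance", "accountability", or "project"
    points: int    # hypothetical point value


def score_resident(items_attained):
    """Return per-category subtotals and the overall score (sum of attained items)."""
    subtotals = {"attendance": 0, "accountability": 0, "project": 0}
    for item in items_attained:
        subtotals[item.category] += item.points
    return subtotals, sum(subtotals.values())


def needs_remediation(total, benchmark=MIN_ANNUAL_SCORE):
    """Flag a resident whose annual total falls below the benchmark."""
    return total < benchmark


# Made-up items and points, for illustration only.
attained = [
    Item("grand rounds attendance", "attendance", 120),
    Item("case logs up to date", "accountability", 250),
    Item("research project milestone", "project", 80),
]
subtotals, total = score_resident(attained)
print(subtotals, total, needs_remediation(total))
```

Keeping per-category subtotals alongside the total mirrors how the form is analyzed later in the study, where attendance, accountability, and project completion are compared separately.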

Assessment

We analyzed residents' compliance scores for academic year 2007–2008 and compared them with twice-yearly faculty evaluations that used a standardized evaluation form for the 6 ACGME competencies. Mean compliance scores were also compared with the mean standardized postgraduate year adjusted Council on Residency Education in Obstetrics and Gynecology (CREOG) in-service examination scores for each resident for the 2007–2008 academic year. The residents completed the TKI during the 2007–2008 academic year as part of an educational assessment of self-awareness and team building, and mean compliance scores were then compared with residents' conflict styles preferences.

We also compared compliance scores with residents' categorization as contributors or concerning residents. Residents were classified as contributors (n = 6) if they provided major administrative service to the program. The contributors were residents who were elected as administrative chief residents and residents who volunteered to organize the residency journal club and the medical student clerkship. Residents were classified as concerning (n = 6) if placed on remediation because of academic and professionalism matters.

Analysis

We used SPSS software 17.0 GP (SPSS Inc, Chicago, IL) for the analysis. Student t test was used to assess differences between continuous variables such as CREOG scores or compliance scores, and chi-square tests assessed discrete variables such as gender and ethnicity. Pearson correlations analyzed correlations between compliance scores and outcome variables.
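For readers without SPSS, the two main statistics named above can be sketched with the Python standard library. This is an illustration under stated assumptions rather than the analysis actually run: it uses the pooled-variance form of the Student t statistic, the helper names `student_t` and `pearson_r` are our own, the input numbers are toy values, and P values are omitted because they require a t-distribution function from a statistics package. The chi-square test is not shown.

```python
import math
from statistics import mean, variance


def student_t(sample_a, sample_b):
    """Pooled-variance Student t statistic and degrees of freedom
    for two independent samples."""
    n_a, n_b = len(sample_a), len(sample_b)
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * variance(sample_a)
                  + (n_b - 1) * variance(sample_b)) / df
    std_err = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(sample_a) - mean(sample_b)) / std_err, df


def pearson_r(x, y):
    """Pearson product-moment correlation coefficient of two paired lists."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    mean_x, mean_y = mean(x), mean(y)
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cross / math.sqrt(ss_x * ss_y)


# Toy numbers, for illustration only (not study data).
t_stat, df = student_t([1, 2, 3], [2, 3, 4])
r = pearson_r([1, 2, 3], [2, 4, 6])
print(t_stat, df, r)
```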

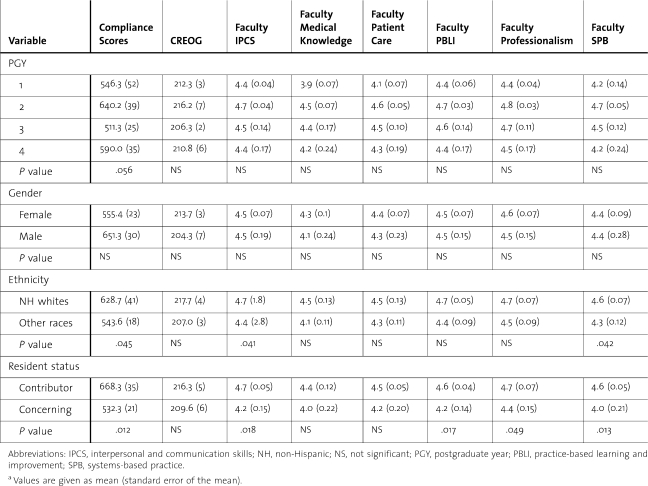

Results

A total of 18 residents (4 in PGY-1, 5 in PGY-2, 4 in PGY-3, and 5 in PGY-4) were included in the study. There were 4 male and 14 female residents. Racial-ethnic distribution was 8 non-Hispanic whites, 8 Asian Americans, 1 Hispanic, and 1 African American. The mean (standard error) compliance scores by PGY-1 to PGY-4 were 577 (65.3), 692 (42.4), 535 (23.3), and 651.6 (37.4), respectively (table 2). Comparative analysis between compliance scores, gender, ethnicity, postgraduate year of residency training, CREOG in-service examination scores, and faculty evaluation using the 6 ACGME competencies showed no significant differences for postgraduate year or gender. Non-Hispanic white residents had significantly higher scores on compliance, faculty evaluations on interpersonal and communication skills, and systems-based practice. Contributing residents also had significantly higher scores than concerning residents on compliance, faculty evaluations on interpersonal and communication skills, practice-based learning and improvement, professionalism, and systems-based practice (table 2).

Table 2.

The Relationship Between Residents' Factors With Compliance Scores, CREOG Scores, and Faculty Evaluations of the 6 ACGME Competencies

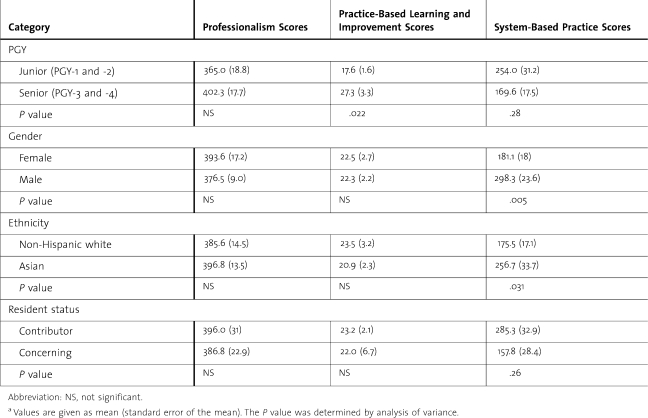

We found significant differences by postgraduate year in compliance scores for attendance, accountability, and project completion (table 3). We categorized residents into junior residents (PGY-1 and -2) and senior residents (PGY-3 and -4). Senior residents had significantly higher accountability scores than junior residents [306.7 (12.2) vs 247.9 (20.7), P = .026], while junior residents had higher project completion scores [senior vs junior: 65.1 (9.2) vs 146.4 (22.3), P = .004] and tended to have higher attendance in the first half of the academic year [senior vs junior: 89.9 (10.2) vs 113.6 (5.3), P = .056]. Attendance scores were significantly higher (P = .003) and accountability or responsibility scores were significantly lower (P < .001) for the last 6 months compared with the first 6 months of the academic year. The drop in accountability scores is expected because most of the accountability points are awarded in the first 6 months of the year if the resident was compliant with the credentialing process. There were no significant differences between the 2 parts of the academic year in the other scores awarded on the compliance form.
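The summary format used in this section, mean (standard error of the mean) by junior or senior status, can be reproduced with a short Python sketch. It assumes the standard error is the sample standard deviation divided by the square root of the group size. The function names and the scores below are hypothetical and are not study data.

```python
import math
from statistics import mean, stdev


def mean_sem(values):
    """Return (mean, standard error of the mean) for a list of scores."""
    return mean(values), stdev(values) / math.sqrt(len(values))


def group_by_seniority(records):
    """Split (pgy, score) records into junior (PGY-1, -2) and senior (PGY-3, -4)."""
    groups = {"junior": [], "senior": []}
    for pgy, score in records:
        groups["junior" if pgy <= 2 else "senior"].append(score)
    return groups


# Made-up accountability scores, for illustration only.
records = [(1, 240), (2, 260), (3, 300), (4, 320)]
groups = group_by_seniority(records)
for label, scores in groups.items():
    m, sem = mean_sem(scores)
    print(f"{label}: {m:.1f} ({sem:.1f})")
```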

Table 3.

Compliance Scores by Category by Postgraduate Year (PGY) of Residency

Male non-Hispanic white residents were more likely to be contributors and scored higher on systems-based practice than women or other male ethnic groups. Overall, contributor residents scored significantly higher on the systems-based practice competency (table 4).

Table 4.

Compliance Form Scores by Accreditation Council for Graduate Medical Education Competencies Compared With Postgraduate Year (PGY), Gender, Ethnicity, and Resident Status

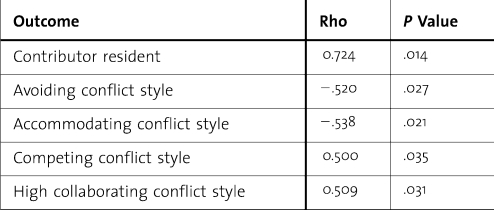

Pearson correlation analysis was used to assess correlations between compliance scores and outcome variables. It showed no correlations between compliance scores and race, gender, or the CREOG scores during the same period. Compliance scores also did not correlate with the faculty evaluations in any of the 6 ACGME competencies obtained during the same period, nor were there any significant differences in the faculty evaluations based on year of training. There was a significant positive correlation between the compliance scores and residents classified as contributors. There was a significant positive correlation between the compliance score and the TKI conflict styles of competing and collaborating, and there were significant negative correlations with the conflict styles of avoiding and accommodating (table 5).

Table 5.

Statistically Significant Correlations With Compliance Scores Using Pearson Analysis

Implementation of the compliance form made residents more aware of the significance of accomplishing educational objectives, as exemplified by residents completing more evaluations (978 completed in AY 2007–2008, compared with 226 in AY 2005–2006 and 582 in AY 2006–2007).

Discussion

Our study showed that it is feasible to implement a prospective compliance form in a residency training program. The form may aid not only in assessment but also in teaching the ACGME competencies of professionalism, practice-based learning and improvement, and systems-based practice.

The differences between senior and junior resident accountability may reflect the program's educational culture. Senior residents were significantly more accountable when conducting the monthly quiz examination, entering procedures in the ACGME database, completing medical records, and observing duty hour regulations. In contrast, junior residents completed more projects such as giving educational conference presentations, and were more likely to attend required educational events. This may be partly because senior residents are assigning juniors to give more presentations to improve their cognitive knowledge. Senior residents may not attend as many educational events because they tend to cover clinical services to release the junior residents.

Residents classified as contributors had significantly higher compliance scores. Subjectively, we noted that the residents fell into 3 groups. Group 1 residents were always compliant and needed minimal intervention. Group 2 residents were initially noncompliant but became more compliant with intervention. Group 3 residents remained noncompliant after intervention. One of the major benefits of a compliance form is that it allowed the program director to identify those residents who needed closer monitoring and remediation plans. Intervention and remediation of noncompliant residents in our program included extensive mentoring and counseling.

Using the TKI conflict style categorization, residents with high compliance scores were more likely to use “competing” and “collaborating” behaviors to manage conflict. Competing defines behavior that involves taking quick and decisive actions; collaborating involves identifying and solving problems. Residents with lower compliance scores were more likely to be accommodating and avoiding, which are unassertive behavior styles. The avoiding style is more likely to sidestep issues and leave matters unresolved. Although the relationships between these behavior styles and compliance appear intuitive, ours is the first study that has shown an objective correlation between measures of compliance and conflict behavior styles. This is in support of our previous observation that residents who successfully execute administrative duties are likely to have a TKI profile that is high in collaborating and competing but low in avoiding and accommodating, and residents who have problems adjusting are likely to have the opposite profile.12

Faculty evaluation using ACGME competencies and CREOG in-service scores did not correlate with compliance scores, suggesting that these tools measure different parameters. Our findings support the ACGME mandate that assessments by multiple observers using multiple tools at multiple time points are needed to provide a holistic assessment of residents in the 6 competencies.

Our study has several limitations. The sample size is small, limiting statistical analysis and increasing the probability of a type II error. The study used a single site and 1 year of data, limiting generalizability. Also, it is likely that studies of this type will be influenced by regional and institutional culture and other local factors that may produce different results. There may be bias in this study because the program directors collected the information and supervised the residents, and much of the data comprised residents' evaluations and could be blinded only for the analysis.

The relevance of our study is that it assesses compliance behavior in residents and links it to performance during residency. Implementing a compliance form allowed the residents in our program to understand and prepare to fulfill the many obligations of physicians that relate to and also extend beyond direct patient care. Use of the form in other programs could support assessment by multiple observers using multiple tools at multiple time points and could provide quantitative, reliable data that are linked to learning objectives. Use of the form may improve future educational outcomes. At the same time, it involves a significant time commitment and may not be feasible for all programs. A simpler compliance form that monitors a small number of key items may achieve the same goal. Future research should collect longitudinal data to validate our findings. In addition, studies should assess how residents' ability to be internally compliant affects the work environment and patient safety.

Footnotes

All authors are from the Obstetrics and Gynecology Department at the David Geffen School of Medicine at UCLA and the Cedars Sinai Medical Center. Dotun Ogunyemi, MD, is Vice Chair of Education, and Program Director; Michelle Eno, MD, is a second-year resident; Steve Rad, MD, is a third-year resident; Alex Fong, MD, is a fourth-year resident; Carolyn Alexander, MD, is Associate Program Director; and Ricardo Azziz, MD, is Department Chair.

This study was presented at the Annual Conference of the Association of Professors of Obstetrics and Gynecology in Lake Buena Vista, Florida, March 2008.

Editor's Note: The online version of this article contains the residency compliance form.

References

- 1. ACGME Outcome Project. Available at: http://www.acgme.org/outcome/project/proHome.asp. Accessed December 19, 2009.

- 2. Lee A. G., Oetting T., Beaver H. A., Carter K. Task Force on the ACGME competencies at the University of Iowa Department of Ophthalmology. Surv Ophthalmol. 2009;54(4):507–517. doi: 10.1016/j.survophthal.2009.04.004.

- 3. Lee A. G., Carter K. D. Managing the new mandate in resident education: a blueprint for translating a national mandate into local compliance. Ophthalmology. 2004;111(10):1807–1812. doi: 10.1016/j.ophtha.2004.04.021.

- 4. Lurie S. J., Mooney C. J., Lyness J. M. Measurement of the general competencies of the accreditation council for graduate medical education: a systematic review. Acad Med. 2009;84(3):301–309. doi: 10.1097/ACM.0b013e3181971f08.

- 5. Chaudhry S. I., Holmboe E., Beasley B. W. The state of evaluation in internal medicine residency. J Gen Intern Med. 2008;23(7):1010–1015. doi: 10.1007/s11606-008-0578-0.

- 6. Ogden P. E., Sibbitt S., Howell M., et al. Complying with ACGME resident duty hours restrictions: restructuring the 80-hour workweek to enhance education and patient safety at Texas A&M/Scott & White Memorial Hospital. Acad Med. 2006;81(12):1026–1031. doi: 10.1097/01.ACM.0000246688.93566.28.

- 7. Lundberg S., Wali S., Thomas P., Cope D. Attaining resident duty hours compliance: the acute care nurse practitioners program at Olive View-UCLA Medical Center. Acad Med. 2006;81(12):1021–1025. doi: 10.1097/01.ACM.0000246677.36103.ad.

- 8. Byrne J. M., Loo L. K., Giang D. Monitoring and improving resident work environment across affiliated hospitals: a call for a national resident survey. Acad Med. 2009;84(2):199–205. doi: 10.1097/ACM.0b013e318193833b.

- 9. Kilmann R. H., Thomas K. W. Developing a forced-choice measure of conflict management behavior: the MODE instrument. Educ Psychol Meas. 1977;37:309–323.

- 10. Sportsman S., Hamilton P. Conflict management styles in the health professions. J Prof Nurs. 2007;23(3):157–166. doi: 10.1016/j.profnurs.2007.01.010.

- 11. Palermo J. Evaluating professionalism and practice-based learning and improvement: an example from the field. Available at: http://www.acgme.org/acWebsite/bulletin-e/e-bulletin12_06.pdf. Accessed July 26, 2010.

- 12. Ogunyemi D., Fong S., Elmore R., Korwin D., Azziz R. The associations between residents' behavior and the Thomas-Kilmann Conflict MODE Instrument. J Grad Med Educ. 2010;2(1):111–125. doi: 10.4300/JGME-D-09-00048.1.

- 13. Haberman S., Rotas M., Perlman K., Feldman J. G. Variations in compliance with documentation using computerized obstetric records. Obstet Gynecol. 2007;110(1):141–145. doi: 10.1097/01.AOG.0000269049.36759.fb.