Abstract

The tongue drive system (TDS) is an unobtrusive, minimally invasive, wearable and wireless tongue–computer interface (TCI), which can infer its users' intentions, represented in their volitional tongue movements, by detecting the position of a small permanent magnetic tracer attached to the users' tongues. Any specific tongue movement can be translated into a user-defined command and used to access and control various devices in the users' environments. The latest external TDS (eTDS) prototype is built on a wireless headphone and interfaced to a laptop PC and a powered wheelchair. Using customized sensor signal processing algorithms and a graphical user interface, the eTDS performance was evaluated by 13 naive subjects with high-level spinal cord injuries (C2–C5) at the Shepherd Center in Atlanta, GA. Results of the human trial show that an average information transfer rate of 95 bits/min was achieved for computer access with 82% accuracy. This information transfer rate is about twice that of the EEG-based BCIs that have been tested on human subjects. It was also demonstrated that the subjects had immediate and full control over the powered wheelchair to the extent that they were able to perform complex wheelchair navigation tasks, such as driving through an obstacle course.

1. Introduction

A recent study initiated by the Christopher and Dana Reeve Foundation found that 5 596 000 people in the US, almost 1 in 50, are living with paralysis. 16% of these individuals (about one million) have said that they are completely unable to move and cannot live without continuous help [1]. More than 50 million people each year provide care for those who are living with paralysis, which is valued at an annual cost of $306 billion. According to the same report, spinal cord injury (SCI) is the cause of 23% of all paralysis cases (1 275 000). The National Institutes of Health reports that 11 000 cases of severe SCI from automotive accidents, acts of violence and falls add to this population every year. 55% of these SCI victims are between 16 and 30 years old and will need special care services for the rest of their lives [2]. Therefore, research toward improving the quality of life for this underserved population can potentially have a large societal impact.

Many researchers are working toward helping the abovementioned population by leveraging recent advancements in the neurosciences, microelectronics, wireless communications and computing to develop advanced assistive technologies. These technologies enable individuals with severe disabilities to communicate their intentions to other devices, particularly computers, as a means to control their environments. The goal is to take advantage of the remaining abilities of the user and augment them with technology to bypass regions of the nervous system that are damaged as a result of injury or disease, so that the intended tasks can still be performed.

A group of assistive devices, known as brain–computer interfaces (BCIs), directly tap into the source of volitional control, the central nervous system. As such, BCIs can potentially provide a broad coverage among users. However, depending on how close the electrodes are placed with respect to the brain, there is always a compromise between invasiveness and bandwidth. Noninvasive BCIs, which utilize electroencephalographic (EEG) brain activity, have not become popular among users despite being under research and development since the early 1970s [3–10]. Limited bandwidth and susceptibility to noise and interference have prevented EEG-based BCIs from being used for important tasks such as navigating powered wheelchairs in outdoor environments, which need short reaction times and high reliability. There are also other issues such as the need for learning and concentration, considerable time for setup and removal and poor aesthetics [11].

Invasive BCIs utilize signals recorded from skull screws, miniature glass cones, subdural electrode arrays and intracortical electrodes [11–14]. They have been studied mainly in non-human primates and more recently on humans [15]. Invasive BCIs can achieve higher spatial and temporal resolution compared to their EEG-based counterparts. They can also benefit from higher characteristic amplitudes, leading to less vulnerability to artifacts and ambient noise [12]. However, they are highly invasive and costly. There are also quite a few technical issues that need to be resolved such as electrode lifetime, implant size, robust transcutaneous wireless link, efficient neural signal processing, finding optimal target neural populations and highly portable processing hardware.

Another group of assistive technologies relies on the users' natural pathways to their brains, which are either completely unaffected or only partially impaired by the neurological conditions, to establish an indirect communication channel between the users' brains and target devices (e.g. computers). For example, cranial nerves are rarely affected even in the most severe cases of SCI, because they are well protected in the skull [16]. Therefore, the majority of SCI patients have normal vision, speech and facial muscle control. A key advantage of such technologies is the reasonable bandwidth that is available through those natural pathways, while remaining noninvasive. As a compromise, they may not cover a small percentage of the target population who have absolutely no motor abilities, such as those suffering from locked-in syndrome [17]. Eye trackers, head pointers, electromyography (EMG) switches, sip-and-puff switches and speech recognition software are examples of devices in this category. Eye trackers, however, may interfere with visual tasks, which are always needed when the user is awake. Head pointers and head arrays may cause fatigue in the neck and shoulder muscles, which might be weakened if not completely paralyzed due to the neurological conditions. EMG and sip-and-puff switches are low in cost and easy to use, but they are slow and offer very few options. Finally, voice controllers provide a wide bandwidth, but are unusable in noisy and outdoor environments. Therefore, there is a need for technologies that are non- or minimally invasive, provide wide communication bandwidth, offer broad coverage among potential users, are easy to use and remain usable in various daily life environments.

The tongue and mouth occupy an amount of sensory and motor cortex in the human brain that rivals that of the fingers and the hand. Hence, they are inherently capable of sophisticated motor control and manipulation tasks with many degrees of freedom [16]. The tongue can move rapidly and accurately within the oral cavity, which indicates its capacity for wideband indirect communication with the brain. Its motion is intuitive and, unlike EEG-based BCIs, does not require thinking or concentration. The tongue muscle has a low rate of perceived exertion and does not fatigue easily [18]. Therefore, a tongue-based device can be used continuously for several hours as long as it allows the tongue to move freely within the oral space. The tongue is noninvasively accessible without penetrating the skin, and its motion is not influenced by the position of the rest of the body, which can be adjusted for maximum user comfort.

Tongue capabilities have resulted in the development of a few tongue-based devices such as Tongue-Touch-Keypad® (TTK), Tongue Mouse and Tongue Point [19–22]. These devices, however, require bulky objects inside the mouth which may interfere with the users' speech, ingestion and even breathing. Think-A-Move® is another device that responds to the changes in the ear canal pressure due to tongue movements, which is only a one-dimensional parameter with limited information content [23]. There are also a number of tongue- or mouth-operated joysticks such as Jouse2 and Integra Mouse [24, 25]. These devices can only be used when the user is in the sitting position and require a certain level of head movement to grab the mouth joystick. They also require tongue and lip contact and pressure, which may cause fatigue and irritation over long-term use.

The tongue drive system (TDS) is a minimally invasive, wireless and wearable tongue–computer interface (TCI) that can offer multiple control functions over a wide variety of devices in the users' environments by taking advantage of the free tongue motion within the 3D oral space. The TDS can wirelessly detect several user-defined tongue positions inside the oral space, and translate them into a set of user-defined commands in real time without requiring the tongue to touch or press against anything. These commands can then be used to access a computer, operate a powered wheelchair or control other devices. We have reported the TDS architecture, TDS-wheelchair interface and its performance in computer access and wheelchair navigation by able-bodied subjects in our earlier publications [26–30]. Here, we are presenting the results of the first TDS clinical trial by 13 subjects with high-level SCI, as well as the improvements made in the latest TDS prototype hardware and user interface.

2. Systems and methods

2.1. External tongue drive system (eTDS) prototype

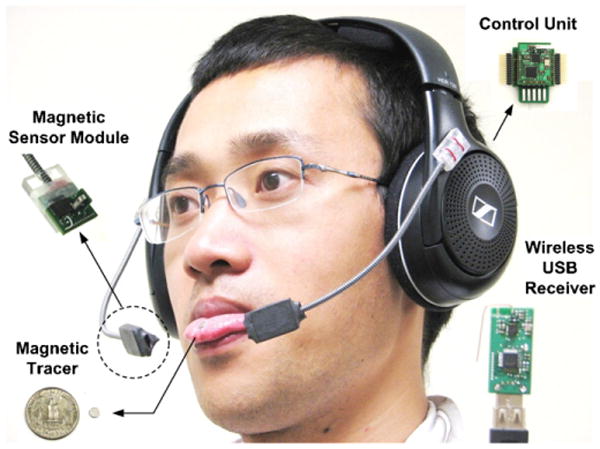

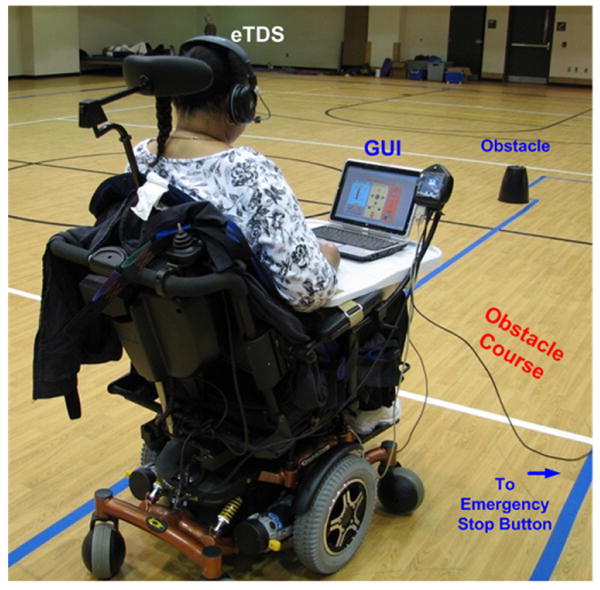

The TDS infers users' intentions, represented through their volitional tongue motion, by detecting the position of a small permanent magnetic tracer, the size of a lentil, which is attached to their tongues by tissue adhesives, piercing or implantation. The changes in the magnetic field around the mouth due to tongue motion are measured by an array of magnetic sensors and wirelessly sent to an ultra mobile personal computer or smartphone for further processing [26]. In the latest eTDS prototype, shown in figure 1, a small disk-shaped (Ø4.8 mm × 1.5 mm) rare earth permanent magnet (K&J Magnetics, Jamison, PA) was used as the tracer. A pair of 3-axial magnetic sensor modules (PNI, Santa Rosa, CA) was extended toward the users' cheeks by a pair of goosenecks, mounted bilaterally on a wireless headphone (Sennheiser, Old Lyme, CT) to facilitate sensor positioning, while maintaining an acceptable appearance with no indication of the users' disabilities. A miniaturized control unit was embedded inside the left earpiece, including an ultra low-power MSP430 microcontroller (Texas Instruments, Dallas, TX), which activates only one sensor at a time to save power. In the active mode, all six sensors (three per module) were sampled at 13 Hz. The samples were packed in a data frame and wirelessly transmitted to a laptop across a 2.4 GHz link established between two nRF24L01 transceivers (Nordic Semiconductor, Norway). In the standby mode, only the right module is sampled at 1 Hz locally, and the transceiver stays off to save power.

Figure 1.

The latest external tongue drive system (eTDS) prototype and its main components, built using only commercial off-the-shelf components, including a wireless headphone.

A sensor signal processing (SSP) algorithm, running on the laptop, uses the K-nearest-neighbors (KNN) classifier to identify the incoming samples based on their key features, which are extracted by principal components analysis (PCA). The algorithm then associates the samples with particular commands that are defined in a pre-training session, where users associate their desired tongue positions with the TDS commands. Later on during training, they repeat those commands a few times in a sequence to create a cluster of samples for each command, which is needed for the KNN classification [29]. Finally, when the users move their tongues to those particular positions during the trial, the TDS recognizes the issued commands in real time and executes them.
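The classification step described above can be illustrated with a minimal sketch, assuming NumPy is available. It projects six-channel sensor samples onto three principal components and labels a new sample by a majority vote among its nearest training points. The function names, the value of k, the synthetic training data and the number of components are all illustrative assumptions; the actual SSP algorithm, including its noise cancellation and feature extraction details, is described in [29].

```python
import numpy as np

def fit_pca(samples, n_components=3):
    """Return the mean and the top principal axes of the training samples."""
    mean = samples.mean(axis=0)
    # Rows of vt are the principal axes, ordered by explained variance.
    _, _, vt = np.linalg.svd(samples - mean, full_matrices=False)
    return mean, vt[:n_components]

def project(samples, mean, axes):
    """Project raw sensor samples onto the principal axes."""
    return (samples - mean) @ axes.T

def knn_classify(point, train_points, train_labels, k=3):
    """Label a projected sample by majority vote among its k nearest neighbors."""
    distances = np.linalg.norm(train_points - point, axis=1)
    nearest = np.argsort(distances)[:k]
    labels, counts = np.unique(train_labels[nearest], return_counts=True)
    return labels[np.argmax(counts)]

# Hypothetical training data: 10 repetitions per command, 6 sensor channels.
# The cluster centers are made-up numbers, not measured field values.
rng = np.random.default_rng(0)
centers = {"left": [-40, 5, 0, 10, 0, 0], "right": [10, 0, 0, -40, 5, 0]}
train_x = np.vstack(
    [np.array(c) + rng.normal(0, 1.0, (10, 6)) for c in centers.values()]
)
train_y = np.repeat(list(centers.keys()), 10)

mean, axes = fit_pca(train_x)
train_p = project(train_x, mean, axes)

# A new sample close to the hypothetical "left" cluster.
new_sample = np.array([[-39.0, 4.0, 1.0, 9.0, 0.5, -0.5]])
predicted = knn_classify(project(new_sample, mean, axes)[0], train_p, train_y)
```

In this sketch the training clusters play the role of the repeated tongue positions recorded during the training step, and well-separated clusters are what make the nearest-neighbor vote reliable.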

2.2. Tongue drive powered wheelchair interface

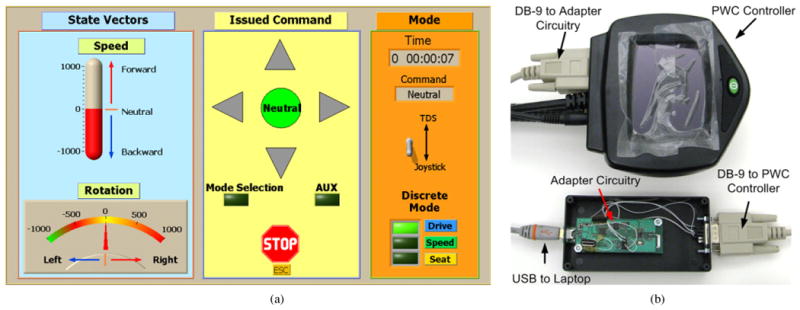

We have also developed a dedicated graphical user interface (GUI) and an adapter circuitry, shown in figure 2, to operate commercial powered wheelchairs with the eTDS [27]. In the GUI, a universal wheelchair control protocol has been implemented based on two state vectors, shown on the left column of figure 2(a): one for linear movements and one for rotations [30]. The speed and direction of the wheelchair movements or rotations are proportional to the absolute values and polarities of these two state vectors, respectively. Five commands are defined in the eTDS GUI to modify the analog state vectors, resulting in the wheelchair moving forward (FD) or backward (BD), turning right (TR) or left (TL) and stopping (N), which are indicated in the central column of figure 2(a). Each command increases/decreases its associated state vector by a certain amount until a predefined maximum/minimum level is reached. The neutral command (N), which is issued automatically when the tongue returns back to its resting position, always returns the state vectors back to zero. Therefore, by simply returning their tongues to their resting positions, the users can bring the wheelchair to a standstill [27].

Figure 2.

TDS-wheelchair interface: (a) the GUI provides users with visual feedback on the commands that have been selected. (b) Adapter circuitry connecting a laptop to the powered wheelchair controller via USB and standard DB-9 connectors, respectively. This circuit uses the TDS commands to change the values of the wheelchair analog state vectors.

Based on the above rules, we implemented two wheelchair control strategies: discrete and continuous. In the discrete control strategy, the state vectors are mutually exclusive, i.e. only one state vector can be nonzero at any time. If a new command changes the current state, e.g. from FD to TR, the old state vector (linear) has to be gradually reduced/increased to zero before the new vector (rotation) can be changed. Hence, the user is not allowed to change the moving direction of the wheelchair before stopping. This was a safety feature particularly for novice users at the cost of reducing the wheelchair agility. In the continuous control strategy, on the other hand, the state vectors are no longer mutually exclusive and the users are allowed to steer the wheelchair to the left or right as it is moving forward or backward. Thus, the wheelchair movements are continuous and much smoother, making it possible to follow a curve, for example.
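The two strategies can be sketched as a small state machine over the linear and rotation state vectors. This is only an illustration under stated assumptions: the step size, the maximum level, the sign convention (forward and right as positive) and the immediate reset on the neutral command are hypothetical choices, not values taken from the eTDS GUI.

```python
STEP = 1   # hypothetical change per command update
LIMIT = 5  # hypothetical maximum magnitude of each state vector

def clamp(value):
    """Keep a state vector within [-LIMIT, LIMIT]."""
    return max(-LIMIT, min(LIMIT, value))

def toward_zero(value):
    """Move a state vector one step closer to zero."""
    if value > 0:
        return max(0, value - STEP)
    return min(0, value + STEP)

def update(linear, rotation, command, continuous):
    """Apply one command update and return the new (linear, rotation) pair."""
    if command == "N":  # neutral: tongue at rest, bring the chair to a stop
        return 0, 0
    if command in ("FD", "BD"):
        if not continuous and rotation != 0:
            # Discrete strategy: ramp the old vector down before changing the new one.
            return linear, toward_zero(rotation)
        delta = STEP if command == "FD" else -STEP
        return clamp(linear + delta), rotation
    if command in ("TR", "TL"):
        if not continuous and linear != 0:
            return toward_zero(linear), rotation
        delta = STEP if command == "TR" else -STEP
        return linear, clamp(rotation + delta)
    raise ValueError(f"unknown command: {command}")

def run(commands, continuous):
    """Apply a sequence of commands starting from a standstill."""
    state = (0, 0)
    for command in commands:
        state = update(*state, command, continuous)
    return state

sequence = ["FD", "FD", "TR", "TR", "TR"]
discrete_state = run(sequence, continuous=False)    # (0, 1)
continuous_state = run(sequence, continuous=True)   # (2, 3)
```

With the same command sequence, the discrete strategy first spends two updates bringing the linear vector back to zero before any rotation begins, whereas the continuous strategy keeps the forward motion and adds the turn on top of it, which is what allows the wheelchair to follow a curve.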

As long as the users can remember the tongue positions and correctly issue the TDS commands, seeing the GUI screen during wheelchair navigation is not necessary. When the wheelchair state vectors are modified in the GUI, they are sent from the laptop to the adapter circuitry through a USB port. The adapter converts the vector values to analog voltage levels that are compatible with the wheelchair electronic controller (4.8–7.2 V) and applies them through a DB-9 connector (see figure 2(b)), which is available on most commercial wheelchairs, such as C500 (Permobil, Lebanon, TN) and Q6000 (Pride Mobility, Exeter, PA), for add-on devices [31, 32].
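As an illustration of the conversion performed by the adapter, the sketch below maps a state vector value onto the 4.8–7.2 V range quoted above. The linear mapping, the choice of the midpoint (6.0 V) as the zero-motion level and the state range of ±5 are assumptions made for this example; the text does not specify the actual transfer function of the adapter circuitry.

```python
V_MIN, V_MAX = 4.8, 7.2  # controller input range quoted in the text
LIMIT = 5                # hypothetical maximum state-vector magnitude

def state_to_voltage(state):
    """Linearly map a state value in [-LIMIT, LIMIT] onto [V_MIN, V_MAX]."""
    state = max(-LIMIT, min(LIMIT, state))  # saturate out-of-range values
    midpoint = (V_MIN + V_MAX) / 2          # assumed zero-motion level
    half_span = (V_MAX - V_MIN) / 2
    return midpoint + half_span * state / LIMIT

rest_voltage = state_to_voltage(0)
full_forward_voltage = state_to_voltage(LIMIT)
full_reverse_voltage = state_to_voltage(-LIMIT)
```

Under these assumptions a zero state vector sits at the center of the range, and the two extremes of each vector reach the ends of the range; one such voltage would be generated per state vector.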

2.3. Subjects

Thirteen human subjects (four females and nine males) aged 18–64 years old with high-level SCI (C2–C5) were recruited from the Shepherd Center (Atlanta, GA) inpatient (eleven) and outpatient (two) populations. Among these subjects, eight were injured within 6 months before the trial and five had been injured for at least 1 year. Ten subjects were regular computer users prior to their injuries. Among the other three subjects, two had very limited knowledge of computers and one was completely new to PCs. Prior to this study, none of the subjects had been exposed to the TDS or participated in a similar research trial. Informed consent was obtained from all subjects. All trials were carried out in the SCI unit of the Shepherd Center with approvals from the Georgia Institute of Technology and the Shepherd Center institutional review boards (IRB).

2.4. Experiment protocol

Each subject participated in two sessions: first, computer access (CA) and then, powered wheelchair navigation (PWCN). The interval between the two sessions, depending on the subjects' schedules, was about 1 week. Each session ran for about 3 hours and included TDS calibration, magnetic tracer attachment, pre-training, training, practicing and testing steps. A regular weight-shift schedule was strictly followed throughout every trial.

In the CA session, the subjects were either sitting in their own wheelchairs or lying in bed with a 22″ LCD monitor placed ∼1.5 m in front of them. The eTDS headset was placed on the subjects' heads and the magnetic sensor positions were adjusted near their cheeks by bending the goosenecks (see figure 1). After the sensor positions were fixed, the environmental noise, such as the Earth's magnetic field, was sampled by moving the subjects around in their wheelchairs or gently moving their heads when they were lying in bed. The collected data were fed into the SSP algorithm to generate noise cancellation coefficients [29]. A magnetic tracer was sterilized and attached to a 20 cm string of dental floss using superglue. The other end of the string was tied to the eTDS headset during the trials. This was a safety measure to prevent the tracer from being accidentally swallowed or aspirated if it became detached from the subjects' tongues. The top surface of the tracer was softened with a layer of medical grade silicone rubber (Nusil Technology, Carpinteria, CA) to prevent possible harm to the subjects' teeth or gums. The subjects' tongue surface was then dried for better adherence, and the magnet was attached to the subjects' tongues using a cyanoacrylate oral adhesive, called Cyanodent (Ellman International, Oceanside, NY) [33].

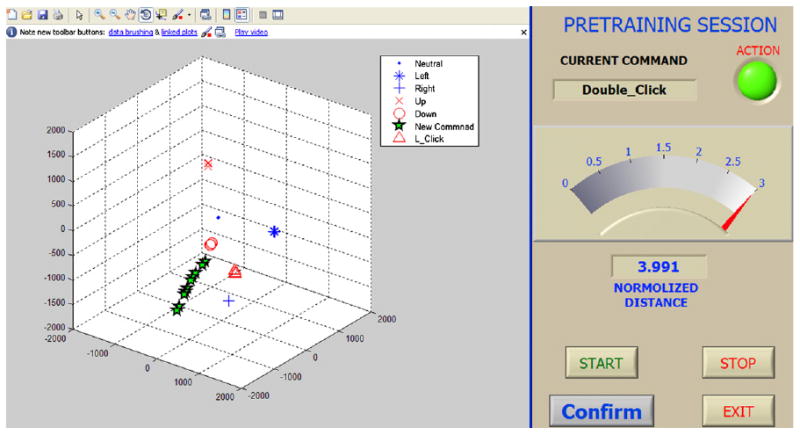

Tasks in the CA session were arranged from easy to difficult to facilitate learning as the trial went on. The number of eTDS commands was increased from 2 to 4 and then to 6 in three stages. At the beginning of each stage, the subjects went through a pre-training step to identify their desired tongue positions for each command used in that stage using a 3D tongue position representation GUI, shown in figure 3. This GUI shows the current (trace of green stars) and previous (other markers) tongue positions associated with different commands by a 3D vector that is derived from the two 3-axial sensor outputs after cancellation of the Earth's magnetic field. The subjects were instructed to define their tongue positions for the TDS commands in such a way that markers of different commands were well separated from each other. They were also asked to refrain from defining the TDS commands in the midline of the mouth (sagittal plane) because those positions are often shared with the tongue's natural movements during speech, breathing and coughing. Our recommended tongue positions were as follows: touching the roots of the lower-left teeth with the tip of the tongue for ‘left’, lower-right teeth for ‘right’, upper-left teeth for ‘up’, upper-right teeth for ‘down’, left cheek for ‘left click’, and right cheek for ‘double click’.

Figure 3.

Pre-training session GUI showing the 3D representation of the current (trace of green stars) and previous (other markers) tongue positions for different TDS commands. The normalized minimum distance between the current tongue position and all previous positions is shown by a dial on the right. The subjects were instructed to define their tongue positions for TDS commands in a way that markers of different commands are separated from each other and the dial stays in the bright zone, where the normalized distance is greater than 1.5.

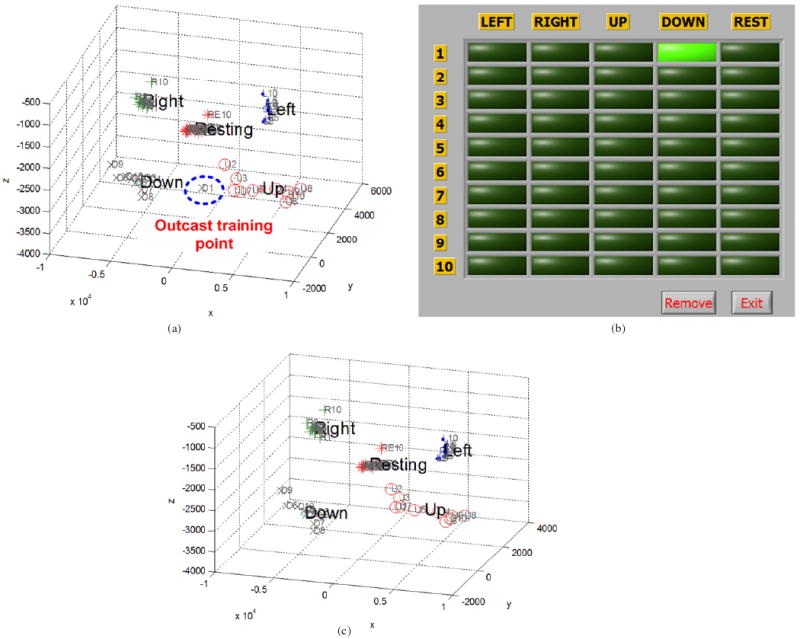

After proper tongue positions were indicated, the subjects were asked to train the TDS by placing their tongues in those positions ten times in a sequence [26]. At the end of this process, the training results were presented in the 3D PCA space as individually marked TDS command clusters, shown in figure 4(a). At this point, the operator was provided with a training table, shown in figure 4(b), right beside the PCA space. In this table, each button was associated with one of the training points in the PCA space, with a command name (columns) and an index number (rows). The training table allowed the operator to identify the outcast training points and manually remove them by selecting their corresponding buttons and clicking on the ‘remove’ button.

Figure 4.

(a) The TDS training data for four commands, individually marked and projected onto the 3D PCA space. (b) The table in the training GUI, in which each button is associated with one training point in the PCA space with a command name (columns) and an index number (rows). This table allows the operator to identify and remove the outcast training points. (c) TDS command clusters in the 3D PCA space after the outcast training point in (a) for the ‘down’ command is removed.

2.4.1. CA-1: playing a computer game with two commands

The purpose of this test was to familiarize the subjects with TDS commands and train them on moving an object on a PC screen in one axis (either horizontal or vertical) using their tongues. In this test, the subjects were first asked to define two commands, left and right, and use them to play a ‘breakout’ game by moving a paddle horizontally with their tongues to prevent a bouncing ball from hitting the bottom of the screen. The subjects were then instructed to define another two commands, up and down, and use them to play a ‘scuba diving’ game by moving a scuba diver vertically to catch treasures while avoiding incoming fish and rocks. The subjects repeated each game three times and their scores were registered.

2.4.2. CA-2: maze navigation with four commands

The subjects were asked to define and train the TDS with four commands: left, right, up and down. Instead of going through the same training process again, the two sets of 2-command training results from CA-1 could be combined and used for this level if their clusters appeared far apart in the 3D PCA space. The subjects were asked to complete a navigation task by using these four commands to move the mouse cursor through an on-screen maze as quickly and accurately as possible from the start point to the stop point, while the cursor path and elapsed time were being recorded [26]. The maze was wider at the beginning so that the subjects could start easily, and then gradually became narrower toward the end of the track. The subjects were required to complete four trials, one for practice followed by three for testing. This experiment was an emulation of the wheelchair navigation task (PWCN) on a PC screen to train the subjects in a low-risk environment.

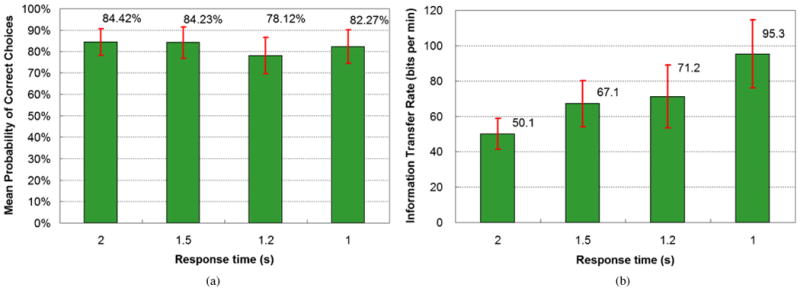

2.4.3. CA-3: response-time measurements with six commands

The subjects were asked to add two more commands, for left and double mouse clicks, to the directional commands in CA-2. CA-2 training data could be reused if they were well separated in the 3D PCA space, in which case the subjects only needed to train the TDS for the two new commands. Then they were instructed to issue a randomly selected command within a specified time period, T, following an audio–visual cue [28]. T was reduced from 2 s to 1.5, 1.2 and 1 s, and 40 commands were issued each time. The mean probability of correct choices (PCC) for each T was recorded. These data were then used to calculate the information transfer rate (ITR) for the TDS, which is a widely accepted measure for evaluating and comparing the performance of different BCIs. It indicates the amount of information that is communicated between a user and a computer within a certain time period. The ITR was originally derived from Shannon's information theory, and further summarized by Pierce [34, 35]. There are various definitions for the ITR [36]. However, we have used the definition by Wolpaw in [6, 37]:

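$$\mathrm{ITR} = \frac{1}{T}\left[\log_2 N + PCC\,\log_2 PCC + (1 - PCC)\,\log_2\!\left(\frac{1 - PCC}{N - 1}\right)\right] \qquad (1)$$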

where N is the number of individual commands that the system can issue, T is the system response time in minutes and PCC is the mean probability that a correct command is issued within a specific time period, T.
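As a worked example of Wolpaw's ITR definition referred to as (1), the sketch below evaluates the bits per command, log2 N + PCC·log2 PCC + (1 − PCC)·log2[(1 − PCC)/(N − 1)], and divides by T in minutes. The function name is hypothetical, and the inputs (N = 6, PCC = 0.82, T = 1 s) are the rounded group-level figures reported for CA-3 rather than per-subject data.

```python
import math

def itr_bits_per_min(n_commands, pcc, t_minutes):
    """Wolpaw information transfer rate in bits/min."""
    bits = math.log2(n_commands)
    if pcc > 0:  # the PCC*log2(PCC) term vanishes in the limit PCC -> 0
        bits += pcc * math.log2(pcc)
    if pcc < 1:  # the error term vanishes when every choice is correct
        bits += (1 - pcc) * math.log2((1 - pcc) / (n_commands - 1))
    return bits / t_minutes

# Six commands, 82% correct choices, one command per second (1/60 min).
rate = itr_bits_per_min(6, 0.82, 1 / 60)
```

Evaluated at the mean PCC, this gives roughly 89 bits/min, somewhat below the reported average of about 95 bits/min. One possible explanation, offered here only as an assumption, is that the ITR was computed per subject and then averaged: because the expression is convex in PCC, the average of per-subject ITRs cannot be smaller than the ITR evaluated at the average PCC.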

2.4.4. PWCN

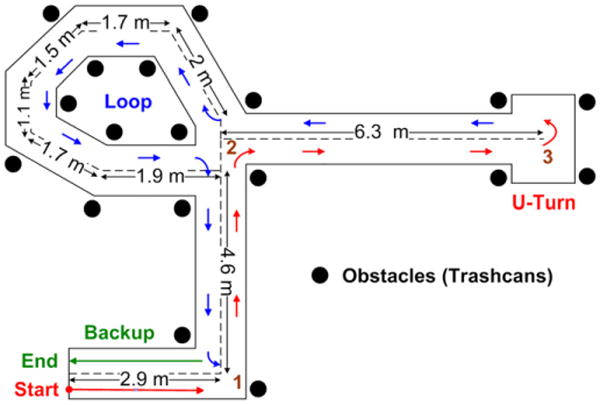

The subjects were transferred to a Q6000 powered wheelchair and went through the same preparation steps as in the CA session with a 12″ laptop that was placed on a wheelchair tray in front of them, as shown in figure 5. They were asked to define four commands (FD, BD, TR, TL) to control the wheelchair state vectors in addition to the tongue resting position (N) for stopping, and practiced them on the maze navigation GUI, similar to CA-2, for about 5 min. Then they drove the wheelchair, using eTDS, through an obstacle course, which required using all TDS commands to perform various navigation tasks such as making a U-turn, backing up and fine tuning movement direction through a loop [38]. The subjects were asked to navigate the wheelchair as fast as possible, while avoiding obstacles or running off the track. Since the trials were conducted in three different places, slightly different courses were utilized. However, they were all close to the layout shown in figure 6. The average track length was 38.9 ± 3.9 m with 10.9 ± 1.0 turns.

Figure 5.

A subject with SCI at level C4 wearing the eTDS prototype and navigating a powered wheelchair through an obstacle course. As a safety measure, the operator walked with the subject while holding an emergency stop button [38].

Figure 6.

Plan of the powered wheelchair navigation track in the obstacle course showing dimensions, location of obstacles and approximate powered wheelchair trajectory.

During the experiment, the laptop lid was initially opened to provide the subjects with visual feedback. However, later it was closed to help them see the track more easily. The subjects were required to repeat each experiment at least twice for discrete and continuous control strategies, with and without visual feedback. The navigation time, number of collisions and number of issued commands were recorded for each trial.

3. Results

3.1. Computer access session

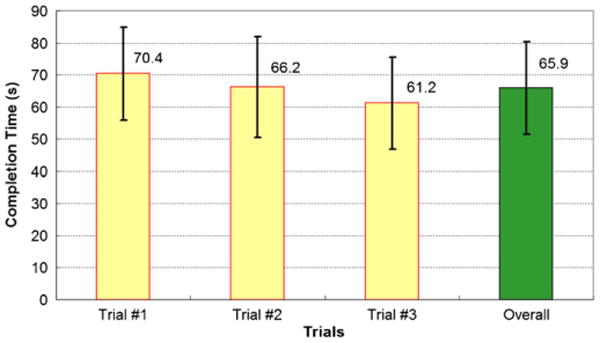

Nine of the 13 subjects said that they had not played any computer games prior to the trials. However, all of them were able to learn the TDS quickly and use it to play games in CA-1. They managed to define and train the system with two sets of commands (left–right and up–down) and use them to control the targets in the horizontal and vertical directions. In CA-2, all subjects successfully completed at least three maze navigation trials. The performance of each subject was calculated as the average completion time of the last three trials. Figure 7 shows the completion time for each trial along with the overall performance, averaged across all 13 subjects. The average completion time was 65.9 s with a standard deviation of 26.6 s. A gradual decrease in the completion time was observed across the three consecutive trials, which can be an indication of the learning effect.

Figure 7.

Average completion time along with 95% confidence interval across 13 subjects for three trials in the CA-2 session.

For each time limit, T, in CA-3, at least 40 commands were issued to calculate PCC. Data points for one of the subjects at 1.2 s and two subjects at 1 s were not recorded because of the early termination of their CA sessions due to their poor health. PCC and its 95% confidence intervals were calculated from all the available data for each T, and the results can be seen in figure 8(a). The ITRs, calculated for each T from (1), are shown in figure 8(b). On average, a reasonable PCC of 82% was achieved with T = 1 s, yielding an ITR ≈ 95 bits/min, which is about twice the rate of the EEG-based BCI systems that have been tested on humans [6].

Figure 8.

Response time measurement results. (a) Mean probability of correct choices versus response time. (b) Information transfer rate, calculated using the definition in [6], versus response time.

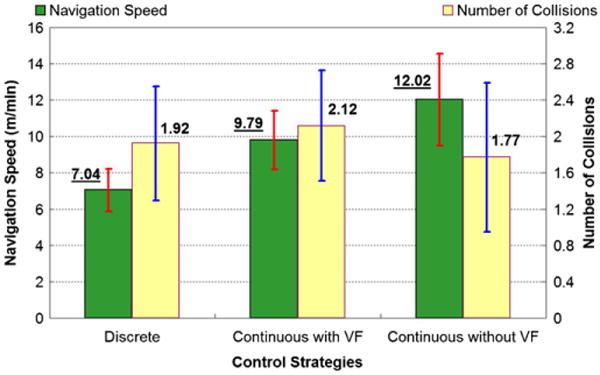

3.2. Powered wheelchair navigation session

All subjects successfully completed the powered wheelchair navigation tasks at least once. Each subject repeated the discrete control strategy for at least two trials. Continuous control strategy was also repeated at least twice with and without visual feedback for the majority of subjects. Four subjects tested the continuous control without visual feedback only once, and two subjects completed the trial for continuous control with visual feedback only once due to the early termination of their experiments for reasons unrelated to the TDS. Subjects' performance was measured by averaging the navigation speed and number of collisions across the last two trials in each category. If only one trial was completed, the result of that trial was directly used as the performance measure in completing that task. Figure 9 shows the average navigation speed and number of collisions along with their 95% confidence intervals during each experiment. In general, the continuous control strategy was much more efficient than the discrete control. The subjects consistently performed better without visual feedback by navigating faster with fewer collisions. These results demonstrate that the subjects could easily remember and correctly issue the TDS tongue commands without requiring a computer screen in front of them, which may distract their attention or block their sight. Improved performance without visual feedback can also be attributed to the learning effect because it always followed the continuous control navigation with visual feedback.

Figure 9.

Average navigation speed and number of collisions for discrete and continuous control strategies, with and without visual feedback (VF).

4. Discussions

This study demonstrated that the TDS can be easily used for both computer access and powered wheelchair control by naive subjects with high-level SCI, who were quite diverse in terms of age, post injury period, prior experience with assistive technologies and familiarity with computers. We have not yet conducted a direct side-by-side comparison between the TDS and other technologies such as BCIs, sip-and-puff, head pointers, eye trackers and voice controllers. However, the TDS is unique in terms of providing multiple control functions over both computers and wheelchairs with a single device, thereby reducing the burden of learning how to use several assistive devices and switching between them, which often requires receiving assistance.

A one-way ANOVA, run in the Statistical Package for the Social Sciences (SPSS) on the CA-2 maze navigation data in figure 7, revealed that there was a significant difference among users' performance in trials 1, 2 and 3 (all P = 1.0). This is a result of the learning effect, and indicates that subjects can rapidly gain experience and become better at using the TDS even within a small number of trials (three).

Table 1 compares the response time, number of commands and calculated ITR of a few TCIs and BCIs that are reported in the literature. It can be seen that the TDS offers a better ITR compared to other switch-based BCIs and TCIs due to its rapid response time. Recently, head-controlled devices have been reported with higher bit rates [39]. However, it should be noted that these are considered pointing devices, similar to the computer mouse, whose bit rates are derived based on a different model, known as Fitts' law [40], as opposed to the TDS and other devices in table 1, which are discrete devices, and modeled by Wolpaw's ITR definition in (1) [36]. A limitation of the head controllers, however, is that they do not benefit users with no head motion and rely on additional switches or dwelling time for mouse clicks, which may affect their usability.

Table 1.

Comparison between the tongue drive system and other BCIs/TCIs.

In the PWCN session, we observed that the subjects had real-time and full control over wheelchair movements through five simultaneously accessible tongue commands for cardinal directions and stopping. There was no noticeable delay in the wheelchair response to the subjects' tongue commands. In EEG-based BCIs, on the other hand, it takes about 2 s on average to issue a control command, which is not practical for stopping a wheelchair in an emergency situation [6]. Based on the results in figure 9 and the subjects' feedback, the continuous control strategy was preferred over the discrete strategy. The subjects also issued fewer commands when using the continuous method. Several subjects, who already had this option in their sip-and-puff controllers, also suggested adding a latched mode, in which the users do not need to hold their tongues at a certain position to continue issuing a commonly used command, such as forward.

In order to assess the effects of different factors, such as age, gender and duration of injury on the computer access and wheelchair navigation performance, we categorized subjects based on each of these factors and compared their performance accordingly (see table 2). Since the number of subjects in each category was too small to conduct a statistical analysis, the discussion below is mainly based on the comparison of magnitudes of each performance parameter. For comparing computer access and wheelchair navigation performances, we have used ITR from CA-3 and navigation speed and number of collisions from PWCN sessions.

Table 2.

Categorization of subjects for performance comparisons.

| Factor | Gender | | Age (years) | | Duration of injury (months) | |
|---|---|---|---|---|---|---|
| | Female | Male | >50 | ≤50 | ≤6 | >6 |
| Number of subjects | 4 | 9 | 5 | 8 | 8 | 5 |

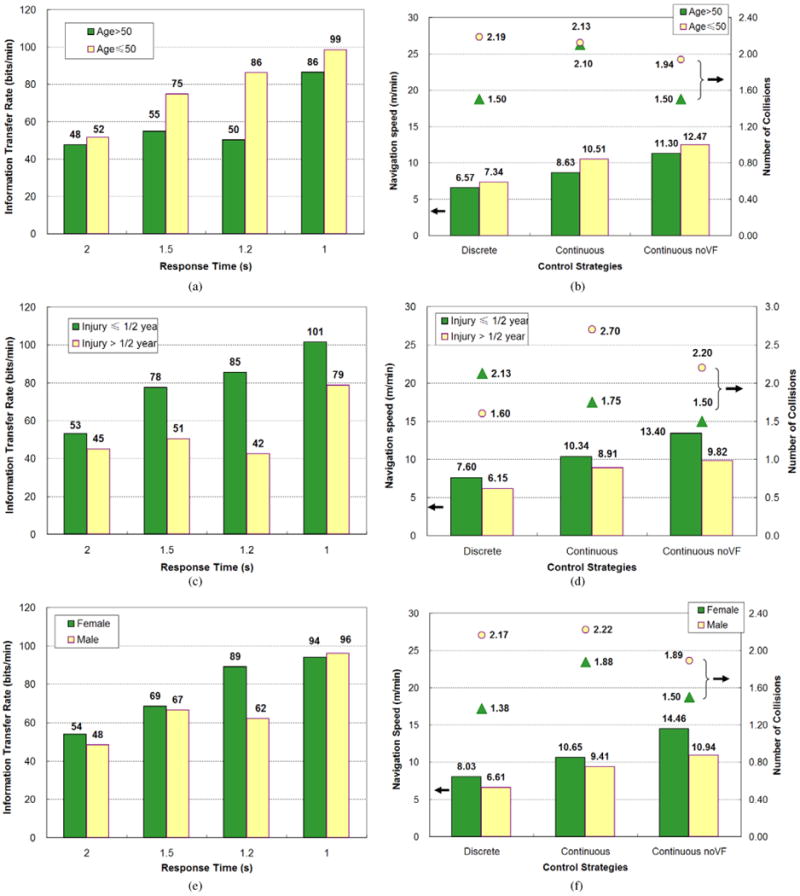

4.1. Effect of age

It can be seen in figures 10(a) and (b) that there is a substantial difference between the performance of younger (≤50) and older (>50) subjects. Younger subjects achieved a higher ITR at all T values in the CA-3 session. The maximum difference, about 50%, occurred at T = 1.2 s. The younger subjects also tended to navigate the wheelchair faster, albeit with more collisions. The difference in navigation speed was in the range of 10–22%. This was probably because the younger subjects had shorter reaction times and learned faster than the older subjects. However, it should also be noted that the performance gap decreased as the subjects progressed through the experiments. For example, in CA-3, the ITR difference at T = 1 s was smaller than at T = 1.5 s and 1.2 s, and in PWCN, the minimum difference was observed at the end of the session while testing the continuous control strategy without visual feedback. This suggests that experience gained over a longer period of time can equalize users' performance regardless of whether they are fast or slow learners.

Figure 10.

Comparing the effects of different factors on the subjects' performance in computer access and powered wheelchair control tasks through the ITR from the CA-3 session and the wheelchair navigation speed and number of collisions from the PWCN session by (a, b) younger (age ≤ 50) versus older (age > 50) subjects, (c, d) subjects with shorter (≤6 months) versus longer (>6 months) duration of injury and (e, f) female versus male subjects.

4.2. Effect of duration of injury

Differences were found between subjects with relatively short (≤6 months) and long (>6 months) periods since their injury (figures 10(c) and (d)). The former group outperformed the latter in both CA and PWCN, by 17–100% in ITR and 17–36% in wheelchair navigation speed. Our hypothesis is that more recently injured subjects, who were beginning to learn and use assistive technologies, were probably more motivated to try new devices. Hence, they could more easily accept and learn how to use the TDS with less preference or bias. On the other hand, the group with older injuries had already become quite used to their assistive devices (mostly sip-and-puff), and were probably not as motivated or open to learning and using a new device.

4.3. Effect of gender

Female subjects consistently achieved comparable or higher ITR and wheelchair navigation speed, with fewer collisions, than their male counterparts. We could not find any particular reason for this difference other than the fact that the female group had fewer than half as many subjects as the male group. This particular group might therefore simply have been a better fit for using the TDS. Additional tests in larger populations are needed to draw a more accurate conclusion.

To minimize potential interference with the subjects' daily routines, almost all sessions were scheduled in the late afternoon or in the evening, when subjects were generally tired after a full day of therapeutic exercises and rehabilitative activities at the Shepherd Center. As a result, some of the subjects, particularly the older ones, seemed to be tired at the beginning of the 3 h sessions and exhausted at the end, to the extent that we had to cut the number of repeated trials to complete some of the sessions earlier. This situation may have degraded the subjects' overall performance and will be avoided in our future evaluations. The most realistic condition would probably be a randomized testing schedule that is evenly distributed throughout the day.

The present eTDS prototype has significant room for further improvement in its hardware, SSP algorithm and GUI software. Several subjects reported reduced sensitivity and responsiveness of the TDS to their tongue commands after the headset position was inadvertently changed during a regular weight shift. In such cases the sensor calibration and TDS training steps had to be repeated. A similar issue can arise when someone accidentally changes the sensor positions by moving the goosenecks. This concern results from the use of off-the-shelf components for building the current eTDS prototype as opposed to a customized headset design for this particular application. We are now working with industrial design experts to build a custom-designed headset that is significantly more stable on the users' heads and more robust in supporting the sensors' optimal positions near the users' cheeks, while maintaining its ergonomic features, aesthetics and adjustability to the users' head anatomy. We are also working on an internal version of the TDS (iTDS) in the form of a dental retainer, which can be customized based on every user's oral anatomy. In the iTDS, the sensors will remain fixed around the users' teeth until the users remove the dental retainer entirely. Another advantage of the iTDS is that it is completely hidden from sight, thus providing its users with a higher degree of privacy.

5. Conclusion

The tongue drive system is a new wireless, wearable and unobtrusive tongue–computer interface, which can detect its users' intentions by tracking their tongue motion with a magnetic tracer attached to their tongues and an array of magnetic sensors placed near their cheeks. The TDS is designed to interface with multiple devices in the users' environments, such as personal computers, powered wheelchairs, robotic arms and home appliances. The latest eTDS prototype is built on a commercial wireless headphone and linked to PCs and powered wheelchairs via custom-designed hardware. The first clinical trial on 13 subjects with high-level SCI demonstrated that the current eTDS prototype can potentially provide its end users with effective control over both computers and wheelchairs. The eTDS in its current form, tested by naive SCI subjects, is twice as fast as EEG-based BCIs that are tested on trained humans [6]. The subjects' ability to perform all the tasks indicated that the eTDS can be learned easily and quickly with little training, thinking or concentration. The learning effect, on the other hand, showed that the users' performance is very likely to improve over time if they use the eTDS on a daily basis. According to the results, the eTDS performance can also be affected by the users' age, gender and post-injury duration. Additional experiments are needed to observe the end users' performance over long periods of time. We also intend to directly compare the eTDS performance with other assistive neurotechnologies in three major areas, namely computer access, wheelchair navigation and environmental control, in different environments such as quiet indoors versus outdoors in the presence of other sources of noise and interference.

Acknowledgments

The authors would like to thank Joy Bruce and Michael Jones from the Shepherd Center and Chihwen Cheng from GT-Bionics Lab for their help with the human trials. They also thank Pride Mobility Inc. and Permobil Inc. for donating their wheelchair products.

References

- 1. Christopher and Dana Reeve Foundation. One degree of separation: paralysis and spinal cord injury in the United States. 2008 available online www.christopherreeve.org/site/c.ddJFKRNoFiG/b.5091685/k.58BD/One_Degree_of_Separation.htm.

- 2. National Institute of Neurological Disorders and Stroke (NINDS), NIH. Spinal cord injury: hope through research. 2003 available online www.ninds.nih.gov/disorders/sci/detail_sci.htm.

- 3. Vidal J. Toward direct brain–computer communication. In: Mullins LJ, editor. Annual Review of Biophysics and Bioengineering. Vol. 2. Palo Alto, CA: Annual Reviews, Inc.; 1973. pp. 157–80.

- 4. Gevins AS, Yeager CL, Diamond SL, Spire J, Zeitlin GM, Gevins AH. Automated analysis of the electrical activity of the human brain (EEG): a progress report. Proc IEEE. 1975;63:1382–99.

- 5. Vidal J. Real-time detection of brain events in EEG. Proc IEEE. 1977;65:633–41.

- 6. Wolpaw JR, Birbaumer N, McFarland DJ, Pfurtscheller G, Vaughan TM. Brain–computer interfaces for communication and control. Clin Neurophysiol. 2002;113:767–91. doi: 10.1016/s1388-2457(02)00057-3.

- 7. Birbaumer N, Ghanayim N, Hinterberger T, Iversen I, Kotchoubey B, Kuebler A, Perelmouter J, Taub E, Flor H. A spelling device for the paralyzed. Nature. 1999;398:297–8. doi: 10.1038/18581.

- 8. Rebsamen B, Burdet E, Teo CL, Zeng Q, Guan C, Ang M, Laugier C. A brain control wheelchair with a P300 based BCI and a path following controller. The 1st IEEE/RAS-EMBS Int. Conf. on Biomedical Robotics and Biomechatronics (BioRob 2006); Pisa, Italy. 20–22 February 2006; 2006. pp. 1101–6.

- 9. Moore MM. Real-world applications for brain-computer interface technology. IEEE Trans Rehabil Eng. 2003;11:162–5. doi: 10.1109/TNSRE.2003.814433.

- 10. McFarland DJ, Krusienski DJ, Sarnacki WA, Wolpaw JR. Emulation of computer mouse control with a noninvasive brain–computer interface. J Neural Eng. 2008;5:101–10. doi: 10.1088/1741-2560/5/2/001.

- 11. Hochberg LR, Donoghue JP. Sensors for brain computer interfaces. IEEE Eng Med Biol Mag. 2006;25:32–8. doi: 10.1109/memb.2006.1705745.

- 12. Schalk G, Miller KJ, Anderson NR, Wilson JA, Smyth MD, Ojemann JG, Moran DW, Wolpaw JR, Leuthardt EC. Two-dimensional movement control using electrocorticographic signals in humans. J Neural Eng. 2008;5:74–83. doi: 10.1088/1741-2560/5/1/008.

- 13. Velliste M, Perel S, Spalding MC, Whitford AS, Schwartz AB. Cortical control of a prosthetic arm for self-feeding. Nature. 2008;453:1098–101. doi: 10.1038/nature06996.

- 14. Kennedy PR, Andreasen D, Ehirim P, King B, Kirby T, Mao H, Moore M. Using human extra-cortical local field potentials to control a switch. J Neural Eng. 2004;1:72–7. doi: 10.1088/1741-2560/1/2/002.

- 15. Hochberg LR, Serruya MD, Friehs GM, Mukand JA, Saleh M, Caplan AH, Branner A, Chen D, Penn RD, Donoghue JP. Neuronal ensemble control of prosthetic devices by a human with tetraplegia. Nature. 2006;442:164–71. doi: 10.1038/nature04970.

- 16. Kandel ER, Schwartz JH, Jessell TM. Principles of Neural Science. 4th ed. New York: McGraw-Hill; 2000.

- 17. Bauby JD. The Diving Bell and the Butterfly: A Memoir of Life in Death. New York: Vintage; 1998.

- 18. Lau C, O'Leary S. Comparison of computer interface devices for persons with severe physical disabilities. Am J Occup Ther. 1993;47:1022–30. doi: 10.5014/ajot.47.11.1022.

- 19. Tongue Touch Keypad. newAbilities Systems Inc.; Santa Clara, CA: available online www.newabilities.com.

- 20. Nutt W, Arlanch C, Nigg S, Staufert C. Tongue-mouse for quadriplegics. J Micromech Microeng. 1998;8:155–7.

- 21. Salem C, Zhai S. An isometric tongue pointing device. Proc CHI. 1997;97:22–7.

- 22. Struijk LNSA. An inductive tongue–computer interface for control of computers and assistive devices. IEEE Trans Biomed Eng. 2006;53:2594–7. doi: 10.1109/TBME.2006.880871.

- 23. Vaidyanathan R, Chung B, Gupta L, Kook H, Kota S, West JD. A tongue-movement communication and control concept for Hands-Free Human-Machine Interfaces. IEEE Trans Syst Man Cybern A. 2007;37:533–46.

- 24. Jouse2. Compusult Limited; Compusult, Mount Pearl, NL, Canada: available online www.jouse.com.

- 25. USB Integra Mouse. Tash Inc.; Roseville, MN: available online www.tashinc.com/catalog/ca_usb_integra_mouse.html.

- 26. Huo X, Wang J, Ghovanloo M. A magneto-inductive sensor based wireless tongue–computer interface. IEEE Trans Neural Syst Rehabil Eng. 2008;16:497–504. doi: 10.1109/TNSRE.2008.2003375.

- 27. Huo X, Ghovanloo M. Using unconstrained tongue motion as an alternative control mechanism for wheeled mobility. IEEE Trans Biomed Eng. 2009;56:1719–26. doi: 10.1109/TBME.2009.2018632.

- 28. Huo X, Wang J, Ghovanloo M. Introduction and preliminary evaluation of tongue drive system: a wireless tongue–operated assistive technology for people with little or no upper extremity function. J Rehabil Res Dev. 2008;45:921–38. doi: 10.1682/jrrd.2007.06.0096.

- 29. Huo X, Wang J, Ghovanloo M. A wireless tongue–computer interface using stereo differential magnetic field measurement. Proc 29th IEEE Eng in Med and Biol Conf. 2007:5723–6. doi: 10.1109/IEMBS.2007.4353646.

- 30. Huo X, Wang J, Ghovanloo M. Wireless control of powered wheelchairs with tongue motion using tongue drive assistive technology. Proc 30th IEEE Eng in Med and Biol Conf. 2008:4199–202. doi: 10.1109/IEMBS.2008.4650135.

- 31. Pride Mobility Products Corp. Q-logic Drive Control System Technique Manual. 2007.

- 32. PG Drives Technology. Operation and Installation Manual for OMNI+ Specialty Controls Module. 2002.

- 33. Cyanodent dental adhesive. Ellman International Inc. available online www.ellman.com/products/dental/dental_products.htm.

- 34. Shannon CE, Weaver W. The Mathematical Theory of Communication. Urbana, IL: University of Illinois Press; 1964.

- 35. Pierce JR. An Introduction to Information Theory. New York: Dover; 1980.

- 36. Kronegg J, Voloshynovskiy S, Pun T. Analysis of bit-rate definitions for Brain-Computer Interfaces. Proc of the 11th Int Conf on Human Computer Interaction HCI'05. 2005:40–6.

- 37. McFarland DJ, Sarnacki WA, Wolpaw JR. Brain-computer interface (BCI) operation: optimizing information transfer rates. Biol Psychol. 2003;63:237–51. doi: 10.1016/s0301-0511(03)00073-5.

- 38. Voggle A. Tongue power: clinical trial shows quadriplegic individuals can operate powered Wheelchairs and Computers with tongue drive system. Ga Tech Res News. 2009 available online: http://gtresearchnews.gatech.edu/newsrelease/tonguedrive2.htm.

- 39. Simpson T, Gauthier M, Prochazka A. Evaluation of Tooth-Click Triggering and speech recognition in assistive technology for computer access. Neurorehabil Neural Repair. 2010;24:188–94. doi: 10.1177/1545968309341647.

- 40. Fitts PM. The information capacity of the human motor system in controlling the amplitude of movement. J Exp Psychol. 1954;47:381–91.