Abstract

Two primary areas of damage have been implicated in apraxia of speech (AOS) based on the time post-stroke: (1) the left inferior frontal gyrus (IFG) in acute patients, and (2) the left anterior insula (aIns) in chronic patients. While AOS is widely characterized as a disorder in motor speech planning, little is known about the specific contributions of each of these regions in speech. The purpose of this study was to investigate cortical activation during speech production with a specific focus on the aIns and the IFG in normal adults. While undergoing sparse fMRI, 30 normal adults completed a 30-minute speech-repetition task consisting of three-syllable nonwords that contained either (a) English (native) syllables or (b) non-English (novel) syllables. When the novel syllable productions were compared to the native syllable productions, greater neural activation was observed in the aIns and IFG, particularly during the first 10 minutes of the task when novelty was the greatest. Although activation in the aIns remained high throughout the task for novel productions, greater activation was clearly demonstrated when the initial 10 minutes were compared to the final 10 minutes of the task. These results suggest increased activity within an extensive neural network, including the aIns and IFG, when the motor speech system is taxed, such as during the production of novel speech. We speculate that the amount of left aIns recruitment during speech production may be related to the internal construction of the motor speech unit, such that the degree of novelty or automaticity would result in greater or lesser demands, respectively. The role of the IFG as a storehouse and integrative processor for previously acquired routines is also discussed.

Introduction

Although speech production is supported by an extensive neural network, the specific contributions of the cortical areas within this network are not fully understood. For example, lesion studies can provide important information about how the brain supports speech, but certain limitations are inherent in patient data. Acquired apraxia of speech (AOS) is a motor speech disorder that most commonly results from stroke (Ogar et al., 2005). It has been conceptualized as a deficit in speech motor programming indicative of impairment in transforming the linguistic code into a coordinated motor code with specific goals for articulation of the phonological representation (Ballard, Granier, & Robin, 2000; Ballard & Robin, 2007; Deger & Ziegler, 2002; McNeil et al., 1997; Spencer & Rogers, 2005). The primary clinical characteristics of AOS include: 1) slow rate of speech, 2) sound distortions, 3) distorted, perceived sound substitutions, 4) consistent errors in terms of type and location, and 5) prosodic abnormalities (Wambaugh et al., 2006). Collectively, lesion studies including patients with AOS have revealed conflicting evidence regarding the brain regions crucial for motor speech planning. A number of regions have been associated with AOS, but the two primary areas of damage that have been implicated are: (1) the left inferior frontal gyrus (IFG) (Broca, 1865; Hillis et al., 2004; Mohr, 1978), and (2) the left anterior insula (aIns) (Dronkers, 1996; Nagao et al., 1999; Ogar et al., 2006). We propose a simple way to reconcile these apparently contradictory findings. Specifically, we note that AOS has been associated with damage to the aIns and IFG in chronic patients, but primarily to the IFG in acute patients. Based on this observation, we suggest that the IFG is primarily involved with well-rehearsed speech, so injury leads to immediate deficits.
In contrast, we hypothesize that the aIns becomes more involved with generating novel speech, and therefore offers a redundancy for rehabilitation. If so, patients with damage restricted to the IFG may have a better long-term prognosis for recovery of function through utilization of the aIns, while patients with damage to both areas may be less likely to recover.

Damage in the left aIns has been linked to AOS in chronic stroke (Dronkers, 1996; Nagao et al., 1999; Ogar et al., 2006), primary progressive AOS (Nestor et al., 2003), and reduced fluency in aphasia (Bates et al., 2003; Borovsky et al., 2007). However, several functional magnetic resonance imaging (fMRI) studies have revealed increased insular activity associated with overt speech (compared to covert speech) that was not modulated by length or complexity (Ackermann et al., 2004; Shuster & Lemieux, 2004). Such findings might suggest that the insula is not central to motor planning, but may be involved in motor coordination or sensory integration/feedback (Dogil et al., 2001). In contrast, Bohland and Guenther (2006) found that the complexity of speech production was related to increased activity in the aIns, in addition to the IFG.

In contrast to lesion studies in chronic patients, Hillis et al. (2004) found that AOS was related to a compromised (either frank lesion or related hypoperfusion) left IFG in acute stroke regardless of Ins involvement. No relationship was found between involvement of the insular cortex and AOS. They postulated that the central location of the insular cortex within the middle cerebral artery (MCA) distribution makes it susceptible to damage in large MCA infarcts (Finley et al., 2003) when there is also structural damage and/or functional compromise within the inferior frontal lobe. Small lesions tend to result in transient AOS (Mohr, 1978; Marien et al., 2001); however, individuals with larger lesions are more likely to have persistent, chronic AOS. Therefore, large MCA lesions, and thus possible Ins damage, may be overrepresented in chronic AOS. Unfortunately, findings from neurodegenerative cases of AOS are comparably inconclusive, as they provide support for either the left aIns (Nestor et al., 2003), the IFG (Broussolle et al., 1996), or both (Gorno-Tempini et al., 2004). However, the crucial importance of the IFG in speech production has been supported by a number of studies using repetitive transcranial magnetic stimulation (rTMS) in normal participants, which allows transient inhibition of a selected region. Several studies have reported speech disruptions and speech arrest when rTMS is applied not only to the facial motor cortex but also to the IFG (Pascual-Leone, Gates, & Dhuna, 1991; Epstein, 1998; Epstein et al., 1999; Stewart, Walsh, Frith, & Rothwell, 2001; Aziz-Zadeh, Cattaneo, Rochat, & Rizzolatti, 2005).

On the other hand, the role of the aIns in normal speech production remains unclear. While a number of neuroimaging studies have shown aIns activation during speech (Bohland & Guenther, 2006; Kuriki et al., 1999; Tourville et al., 2008; Wise et al., 1999), others have revealed a lack of aIns activation (Bonilha et al., 2006; Frenck-Mestre et al., 2005; Murphy et al., 1997; Riecker et al., 2000; Soros et al., 2006). For example, Bonilha et al. (2006) did not find neural activity in the aIns associated with the production of bisyllabic nonwords. Instead, significant Ins activation was found during the production of non-speech oral movements, while significant IFG activation was found during sublexical speech. The fact that the IFG was uniquely activated during the speech condition suggests that the IFG may be responsible for motor sequencing in speech, as has been suggested in previous studies (Borovsky et al., 2007; Nishitani et al., 2005). However, the potential role of the Ins in the non-speech productions is unclear.

The IFG and aIns possibly play complementary roles in speech production, which may explain the seemingly contradictory evidence in the patient literature based on time post-onset. One possible explanation for the Bonilha et al. (2006) findings may be related to the intrinsic nature of the two tasks. While the production of native speech syllables is highly over-learned, the execution of contrasting non-speech oral motor movements is comparatively unpracticed. Therefore, Ins recruitment during the non-speech condition may have been related to the novelty (or lack of automaticity) of the task. Consistent with this hypothesis, Soros et al. (2006) suggested that involvement of the aIns may be reflective of the repetitiveness of the speech task. That is, the aIns may show greater involvement when speech is varying as opposed to repetitive. This is interesting in light of the conflicting evidence regarding lesion location in chronic and acute cases of AOS. According to the Directions Into Velocities of Articulators (DIVA) model, the inferior frontal cortex stores phonetic codes for specific syllable productions (Guenther, 2006; Guenther, Ghosh, & Tourville, 2006). Such phonetic codes could be viewed as simple motor plans that can be linked together to produce more complex plans for the articulation of words and sentences. If so, then damage to this area would hinder access to these motor plans and integration of these plans into larger plans, thus compromising the translation of the phonological representation/auditory maps into an articulatory plan. Although the specific role of the aIns remains elusive, we speculate that both the pars opercularis of the IFG (OpIFG) and aIns contribute to translation of auditory maps into motor commands for speech execution, albeit in different ways.
In accord with the dual-route model (Levelt & Wheeldon, 1994; Varley, Whiteside, & Luff, 1999; Varley & Whiteside, 2001), it is plausible that the IFG may be implicated in routine speech planning; however, when this area is damaged, other cortical regions, such as the insular cortex, then assume an important role in compensating for this damage. That is, the aIns may be crucial in learning/relearning motor productions and sequences when the IFG is compromised. If so, the integrity of the insular cortex may be an important prognostic indicator in the recovery of AOS.

A study utilizing the production of native and non-native speech syllables in normal participants provides a unique and straightforward means for investigating the role of novelty/automaticity related to left aIns recruitment in speech. Accordingly, the purpose of this study was to investigate cortical activation during speech production with a specific focus on the aIns and the IFG in normal adults. Based on our theoretical framework, neural activity in the left IFG was expected during speech production regardless of the novelty of the utterance. However, when normal individuals attempt to produce novel speech sounds that are not a part of their native repertoire, additional brain regions may be recruited to support the task beyond those areas that would be active when producing native speech. Thus, it was hypothesized that the production of novel speech syllables would initially recruit areas beyond what was observed for the native syllables, including increased activity in the aIns. If the aIns is important for acquisition of new/unfamiliar motor productions and sequences, greater insular activation should be revealed during novel syllable productions compared to native productions. In addition, after repeated exposure during the task, the neural activity associated with the novel syllables should theoretically converge with the activation seen during the production of native syllables. Thus, we predicted a decrease in aIns activation for novel productions over time.

Method

Participants

Participants included 30 (13 male/17 female) right-handed, native-English speakers between 18 and 35 years of age (mean=22.8 years, S.D.=4.7). Individuals were excluded from participation if a screening revealed a history of neurological disease/injury, psychiatric disorder, or contraindications for MRI scanning (e.g., metal implants, pacemakers, pregnancy, and claustrophobia). Additional exclusion criteria included a history of speech-language difficulties (e.g., disorders and/or delays in articulation, fluency, resonance, or language), gross structural abnormalities of the articulators (e.g., cleft palate or severe malocclusions), or hearing impairment. All participants demonstrated visual acuity sufficient for viewing the visual stimuli.

Behavioral Task

During a 30-minute speech-repetition task, participants observed audiovisual movies of 3-syllable non-words generated by a single speaker (showing only the lower portion of the face below the nose) and were asked to repeat each production immediately following its audio-visual presentation. The non-words were equally divided between two conditions based on syllable type: (1) English (native) or (2) Non-English (novel). Non-words (sublexical syllable strings) were created for this task instead of using real words in an attempt to isolate motor speech from lexical processing and to make the conditions equal, as the inclusion of novel sounds would inherently render a non-word. A total of twelve CV syllables (6 English phonemes/6 non-English phonemes combined with the vowel /ɑ/) were used to create the stimuli (see Table 1). In order to balance across the two conditions, the targeted native and novel syllables were selected from the International Phonetic Alphabet based on shared phonetic features so that each of the 6 pairs only differed by one distinctive feature (i.e., manner, place, or voice). For the novel condition, only syllables that were distinct from any common dialectal variant of English were used. The 3-syllable non-words were constructed for each condition by either repeating one of the target syllables three times or positioning it between two other syllables to provide two articulatory environments for each target syllable. For the purpose of fMRI data analysis, a baseline condition was also included, which consisted of a still frame presentation of a neutral mouth paired with pink noise. Participants were instructed to do nothing during these trials.

Table 1.

Native-English and novel syllables used to create multisyllabic non-words for the speech-repetition task (including manner, place, and voice).

| Native | Novel | Manner | Place | Voice |
|---|---|---|---|---|
| pɑ | Ḅɑ | plosive/trill | bilabial | voiceless |
| nɑ | ṇɑ | nasal | alveolar | voiced/voiceless |
| kɑ | хɑ | plosive/fricative | velar | voiceless |
| ʃɑ | ɬɑ | fricative/lateral fricative | post alveolar/alveolar | voiceless |
| θɑ | ɼ̪ɑ | fricative/trill | dental | voiceless |
| vɑ | ɱɑ | fricative/nasal | labio-dental | voiced |

Within the entire fMRI run, participants received a total of 180 trials (72 English, 72 novel, and 36 baseline trials). The order of presentation was pseudo-randomized and counterbalanced into 3 segments to provide an event-related paradigm in which each stimulus was presented the same number of times within the first, middle, and last third of the 30-minute task. Therefore, participants heard 24 English, 24 novel, and 12 baseline trials during each third of the task. Each stimulus presentation lasted 2.5 s, followed by a jittered inter-stimulus interval (ISI) with a mean of 7 s (± 2 s). Immediately before each stimulus presentation, a fixation point appeared for 0.5 s to alert the participant of the upcoming trial. The paradigm was created using E-Prime 2.0 BETA software (2006) and was presented via DLP projection to a mirror mounted on the MRI scanner head coil, along with SereneSound headphones (www.mrivideo.com). Immediately preceding the initiation of the experimental task, each participant completed a 1.6-minute practice run with untargeted exemplars from each condition to allow familiarization to the task without exposure to the experimental trials.
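The trial counts and timing described above can be checked with a short sketch. It assumes that the 0.5 s fixation is counted separately from the jittered ISI, and it uses plain shuffling as a stand-in for the pseudo-randomization, whose exact ordering constraints are not specified here. The names `PER_SEGMENT` and `build_schedule` are illustrative only.

```python
import random
from collections import Counter

# Trials per 10-minute segment, taken from the text.
PER_SEGMENT = {"english": 24, "novel": 24, "baseline": 12}
N_SEGMENTS = 3


def build_schedule(seed=0):
    """Return one shuffled list of condition labels per segment.

    Plain shuffling is an assumption; the original ordering rules
    are not described in enough detail to reproduce.
    """
    rng = random.Random(seed)
    schedule = []
    for _ in range(N_SEGMENTS):
        segment = [cond for cond, n in PER_SEGMENT.items() for _ in range(n)]
        rng.shuffle(segment)
        schedule.append(segment)
    return schedule


schedule = build_schedule()
totals = Counter(cond for segment in schedule for cond in segment)

# Mean trial length: 0.5 s fixation + 2.5 s stimulus + 7 s mean ISI.
mean_trial_s = 0.5 + 2.5 + 7.0
total_minutes = sum(totals.values()) * mean_trial_s / 60

print(dict(totals))
print(mean_trial_s, total_minutes)
```

Under these assumptions the mean trial length equals the 10 s repetition time reported for the acquisition, so one trial corresponds to one acquired volume on average.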

To ensure task compliance and allow for the systematic analysis of behavioral data, responses were recorded via a non-ferrous microphone and audio editing software with a 16 kHz sampling rate and 16-bit resolution. The audio-recordings from all 30 participants were combined to create a rating paradigm for each of the six targeted novel syllables using experiment-control freeware (Alvin, v. 1.19, http://homepages.wmich.edu/~hillenbr/). Repetitions of novel syllables from all participants were randomly presented to four independent raters who judged the accuracy of each production compared to the model on a 5-point scale. The audio stimuli from the fMRI task were used as the model productions for each target. Prior to beginning the rating process, each rater listened to all productions of a given target. The raters were instructed to make judgments based on how closely each production matched the model exemplar. During the rating process, they had continued access to the models and were allowed to repeat each exemplar as many times as needed before proceeding to the next item. To determine whether accuracy improved with repeated exposure over the 30-minute task, the scores from each participant were compared between the first and last time segment using a paired t-test.
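The paired comparison of first-segment and last-segment ratings can be illustrated with a minimal sketch. The ratings below are hypothetical values for five participants, not study data, and `paired_t` is an illustrative helper rather than the software used in the study. It returns only the t statistic and degrees of freedom; obtaining a p-value would require a t distribution from a statistics package.

```python
import math
from statistics import mean, stdev


def paired_t(first, last):
    """Return (t, df) for a paired t-test on two equal-length score lists."""
    if len(first) != len(last):
        raise ValueError("paired samples must have the same length")
    diffs = [a - b for a, b in zip(first, last)]
    n = len(diffs)
    # Standard error of the mean within-participant difference.
    se = stdev(diffs) / math.sqrt(n)
    return mean(diffs) / se, n - 1


# Hypothetical mean accuracy ratings (5-point scale); NOT study data.
first_third = [2.1, 2.6, 2.4, 3.0, 2.2]
last_third = [2.3, 2.5, 2.7, 3.1, 2.4]

t_stat, df = paired_t(first_third, last_third)
print(t_stat, df)
```

A negative t here simply means that the ratings in the last segment were higher on average than in the first.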

fMRI Data Acquisition

All MRI scanning was conducted on a Siemens 3.0 Tesla Trio system using a 12-element head coil, and event-related fMRI data were collected using a sparse echo planar imaging (EPI) sequence with a repetition time (TR) of 10 s and an acquisition time (TA) of 2 s. Sparse-sampled fMRI acquisition is well suited to imaging speech tasks (Birn et al., 1999; Bonilha et al., 2006; Fridriksson et al., 2006; Ghosh et al., 2008; Gracco et al., 2005; Soros et al., 2006) because the TR is 8 s longer than the TA, which allows stimulus presentation and overt responses to occur within a window of ‘silence’ in which no volumes are collected. Because normal speech production is associated with some degree of head movement, sparse imaging can greatly reduce the motion-related artifacts typically associated with continuous EPI data collection during overt speech (Barch et al., 1999; Birn et al., 1999; Yetkin et al., 1996), as well as allow for clearer stimulus presentation and monitoring of responses. Furthermore, because the slow rise of the hemodynamic response typically peaks 3–6 s after the associated event, the blood-oxygen-level dependent (BOLD) signal changes can be measured during the brief intervals following each stimulus-response pair. Other fMRI parameters included: 30 ms echo time, 90° flip angle, 64×64 matrix, a 3.3 × 3.3 × 3.2 mm voxel size, number of slices=33, no slice gap or parallel imaging acceleration, number of volumes=180. A high-resolution T1-weighted sequence (matrix size 256×256×160, voxel size 1×1×1 mm) was also acquired for each participant to aid normalization. Total MRI scanning lasted approximately 45 minutes.
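The timing logic of the sparse sequence can be sketched as follows. The TR, TA, and number of volumes come from the text; the placement of the acquisition in the last 2 s of each TR and the example event onsets are assumptions made only for illustration, as are the function names.

```python
TR_S = 10.0      # repetition time (from the text)
TA_S = 2.0       # acquisition time (from the text)
N_VOLUMES = 180  # number of volumes (from the text)

silent_gap_s = TR_S - TA_S           # scanner-quiet window within each TR
run_minutes = N_VOLUMES * TR_S / 60  # total length of the functional run


def hrf_peak_window(event_onset_s, peak_lag_s=(3.0, 6.0)):
    """Return (earliest, latest) expected BOLD peak time for an event."""
    return event_onset_s + peak_lag_s[0], event_onset_s + peak_lag_s[1]


def overlaps_acquisition(event_onset_s, acq_start_s=silent_gap_s, acq_end_s=TR_S):
    """True if the expected peak window overlaps the acquisition window.

    Assumes the volume is acquired in the last TA seconds of the TR;
    the actual placement is not stated in the text.
    """
    lo, hi = hrf_peak_window(event_onset_s)
    return lo < acq_end_s and hi > acq_start_s


print(silent_gap_s, run_minutes)
print(overlaps_acquisition(3.0), overlaps_acquisition(0.0))
```

Under this assumed layout, an event beginning a few seconds into the silent window would have its expected peak coincide with the acquisition, whereas an event at the very start of the TR would peak before the volume is collected.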

fMRI Analyses

Individual and group analyses of the fMRI data were carried out using FSL (FMRIB’s Software Library, www.fmrib.ox.ac.uk/fsl; Smith et al., 2004). Pre-statistics processing included: motion correction through linear image registration (MCFLIRT: Motion Correction using FMRIB’s Linear Image Registration Tool; Jenkinson, Bannister, Brady, & Smith, 2002); non-brain removal (BET: Brain Extraction Tool; Smith, 2002); spatial smoothing using a Gaussian kernel of 6 mm full-width half maximum; and high pass temporal filtering (Gaussian-weighted least-squares straight line fitting, with sigma=100 s). Time-series statistical analysis was performed using a general linear model with local autocorrelation correction (FILM: FMRIB’s Improved Linear Model; Woolrich, Ripley, Brady, & Smith, 2001). The fMRI data for each participant were analyzed in native space and co-registered to standard MNI (Montreal Neurological Institute) space, an average template created from 152 brains (Evans et al., 1993), using linear registration (FLIRT: FMRIB’s Linear Image Registration Tool; Jenkinson & Smith, 2001; Jenkinson, Bannister, Brady, & Smith, 2002).

Higher-level (group) analyses were carried out using a Bayesian mixed effects analysis (FLAME 1 and 2: FMRIB’s Local Analysis of Mixed Effects; Beckmann, Jenkinson, & Smith, 2003; Woolrich, Behrens, Beckmann, Jenkinson, & Smith, 2004). This included a pair of group analyses to determine regions of activation that were associated with the production of: (1) English-syllable trials and (2) novel-syllable trials compared to baseline. In addition, data from the beginning and final third were analyzed separately to reveal activation associated with syllable trials during the first and last 10 minutes of the task. Neural activation was indirectly measured as localized BOLD signal intensity during each speech condition compared to the baseline neutral-mouth condition. Z (Gaussianised T) statistic images were produced by applying a cluster threshold of Z>2.3 and a (corrected) cluster significance threshold of P=0.01 (Worsley, Evans, Marrett, & Neelin, 1992). In order to answer our proposed research questions, additional comparisons were performed to reveal which areas showed significantly more activation in each condition above what was seen in the other conditions. These contrasts included: (1) English vs. novel productions for (a) the entire run, (b) the beginning third, and (c) the final third, as well as (2) beginning vs. final third for (a) English productions and (b) novel productions. The Talairach Daemon Client was accessed to provide standard coordinates of local maxima for cortical areas where greater cortical activity was revealed in each contrast (http://ric.uthscsa.edu/projects/talairachdaemon.html). For illustrative purposes, the mean statistical maps were overlaid onto a standard brain template using MRIcron (Rorden et al., 2007) with a threshold of Z=2.3 for between condition comparisons. 
However, because baseline comparisons yielded high levels of activity and extensive activation maps, a higher threshold of Z=3.6 was selected for baseline comparisons for clarity of display.

In addition to whole brain comparisons, a region of interest (ROI) comparison was employed for the left aIns and the left OpIFG. These ROIs were selected a priori and were defined according to the AAL (Anatomical Automatic Labeling) freeware database (http://www.cyceron.fr/freeware/; Tzourio-Mazoyer et al., 2002). While the OpIFG was predefined in the AAL database, the anterior Ins was not. Therefore, a redefined ROI was created by limiting the insular volume of interest from the AAL to include only the anterior portion by preserving those voxels that were also included in the “anterior inferior frontal gyrus insula” volume of interest from the Jerne database (http://hendrix.ei.dtu.dk/services/jerne/ninf/voi.html). That is, we limited the AAL insular volume by applying an intensity filter to this region so as to encompass only the anterior portion of the insula while excluding the IFG and posterior regions. The size of each ROI was as follows: aIns, 7.31 cc; OpIFG, 8.47 cc. The mean intensity of task-related signal change was extracted from the left aIns and the left OpIFG during the first and last third of the entire session for both task conditions (native vs. novel speech). Differences in the mean percent signal change within each ROI were analyzed using a 2×2×2 repeated measures ANOVA (within-subject factors for novelty, location, and time) with an overall significance level set at p<0.05. Pair-wise comparisons were also calculated for the eight relevant comparisons with a Bonferroni-corrected significance level of p<0.05 (i.e., 0.05/8 ≈ 0.006 per comparison). The mean and standard error for each variable were used to generate a graphical display of signal intensity in both ROIs for each condition relative to time.
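The idea of restricting one atlas volume to the voxels it shares with a second volume can be sketched with toy data. The masks below are small made-up voxel sets, not the AAL or Jerne volumes, and the 2 mm voxel size is an assumption; the helper names are illustrative and this is not the procedure's actual implementation.

```python
def intersect_masks(mask_a, mask_b):
    """Keep only voxels present in both masks (sets of (x, y, z) indices)."""
    return mask_a & mask_b


def volume_cc(mask, voxel_mm=(2.0, 2.0, 2.0)):
    """Volume of a mask in cubic centimetres for a given voxel size in mm."""
    vx, vy, vz = voxel_mm
    return len(mask) * vx * vy * vz / 1000.0


# Toy masks on a single slice; NOT atlas data.
insula = {(x, y, 0) for x in range(10) for y in range(10)}
anterior_region = {(x, y, 0) for x in range(10) for y in range(6, 14)}

# Voxels of the insula mask that also fall inside the anterior region.
anterior_insula = intersect_masks(insula, anterior_region)

print(len(anterior_insula), volume_cc(anterior_insula))
```

The same counting step is what yields an ROI size in cc once the voxel dimensions of the atlas are known.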

Results

To determine if the accuracy of novel productions improved over the length of the entire task, performance ratings from the beginning and final third of the task for each participant were compared. The group mean for the initial segment was 2.49 out of 5 (S.D.=0.36) and the final segment was 2.56 (S.D.=0.56). The results of the paired t-test did not reveal a significant difference between the ratings for the two time segments (t=−1.43, p=0.16). Instead, there was notable variability in performance accuracy across participants, and ratings remained rather constant across time for individual participants.

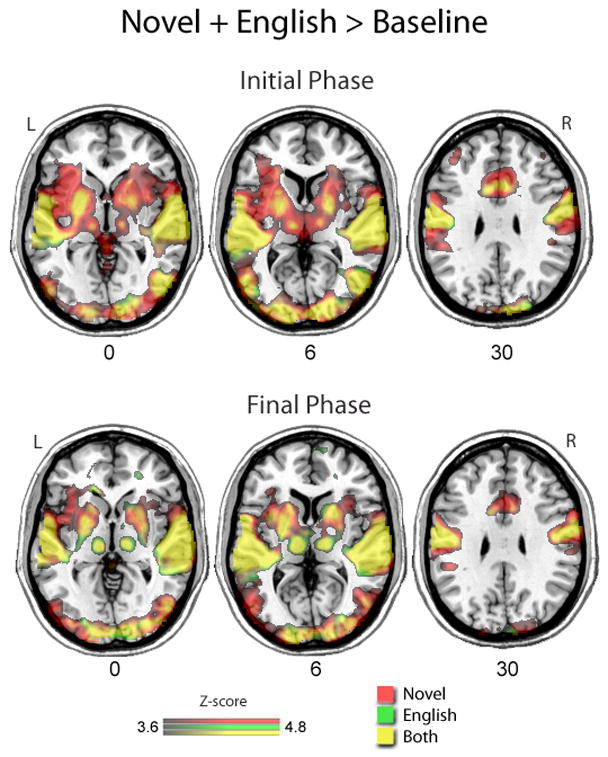

The results from the fMRI analyses revealed widespread bilateral cortical activation during speech production compared to baseline, regardless of the specific syllable composition that was produced within each condition. As expected, both speech conditions were associated with bilateral neural recruitment of the visual, auditory, motor, and somatosensory cortices, as well as subcortical activity in the basal ganglia and thalamus (anatomical abbreviations are defined in Table 2). More specifically, common areas of activity during both speech conditions included bilateral recruitment of the following areas: (1) the occipital lobe, including the IOG, MOG, SOG, Cu, FuG, and LiG; (2) the temporal lobe, including the ITG, MTG, STG, TP, HG, Wernicke’s area, and Hp; (3) the frontal lobe and cingulate cortex, including the M1, PMC, SMA, OpIFG, TriIFG, and ACgG; (4) the parietal lobe, including the S1; (5) the insula; (6) the cerebellum; (7) the BG, including the Pu and GB; and (8) the thalamus. Figure 1 shows the statistical maps of neural activity associated with the production of native (English) syllables and novel (non-English) syllables compared to baseline during the first (top panel) and final (bottom panel) third of the task. Brain regions associated with the production of English syllables are represented in green, while brain regions associated with the production of novel syllables are represented in red. Brain areas that showed significant activation during both tasks are revealed in the overlap between the two maps, which is depicted in yellow.

Table 2.

List of anatomical abbreviations.

| Abbreviation | Anatomical Region |
|---|---|
| ACgG | Anterior cingulate gyrus |
| aIns | Anterior insula |
| Amg | Amygdala |
| BG | Basal ganglia |
| CB | Cerebellum |
| Cu | Cuneus |
| FuG | Fusiform gyrus |
| GB | Globus pallidus |
| HG | Heschl’s gyrus |
| Hp | Hippocampus |
| IFG | Inferior frontal gyrus |
| Ins | Insula |
| IOG | Inferior occipital gyrus |
| IPL | Inferior parietal lobe |
| ITG | Inferior temporal gyrus |
| LiG | Lingual gyrus |
| M1 | Primary motor cortex |
| MFG | Middle frontal gyrus |
| MOG | Middle occipital gyrus |
| MTG | Middle temporal gyrus |
| OpIFG | Pars opercularis of the inferior frontal gyrus |
| OrIFG | Pars orbitalis of the inferior frontal gyrus |
| PMC | Premotor cortex |
| PTG | Posterior middle/inferior temporal gyrus |
| Pu | Putamen |
| S1 | Primary somatosensory cortex |
| SMA | Supplementary motor area |
| SMG | Supramarginal gyrus |
| SOG | Superior occipital gyrus |
| STG | Superior temporal gyrus |
| Th | Thalamus |
| TP | Temporal pole |
| TriIFG | Pars triangularis of the inferior frontal gyrus |

Figure 1.

Neural activity associated with the production of English syllables (green) and novel syllables (red) compared to baseline during the first 10 minutes (top) and the last 10 minutes (bottom). The overlap in statistically significant activation for each condition is depicted in yellow. The number below each image indicates the location of the axial slices that are displayed.

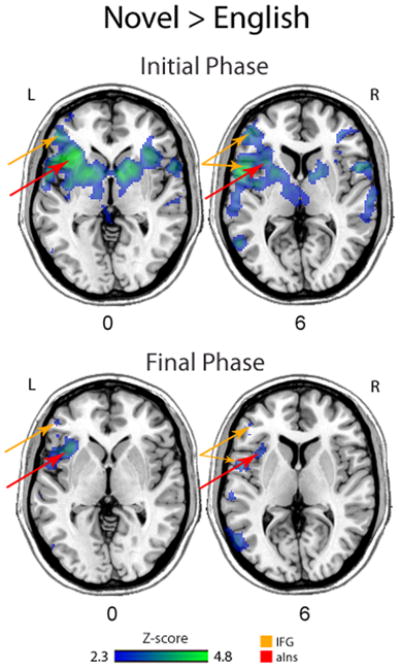

When activation associated with each condition was statistically compared against each other, no areas were significantly more active during the production of English syllables than during the production of novel syllables. In contrast, greater activity during the production of novel syllables compared to English syllables was revealed in several areas, including bilateral activity in the Amg, BG, TP (BA 38), OrIFG (BA 47), STG (BA 22), Th, M1 (BA 4), PMC/SMA (BA 6), S1 (BA 3/2), and PTG/FuG (BA 37), as well as greater activation in the MTG (BA 21), SMG (BA 40), IPL (BA 7), IFG (BA 44/45), and Ins bilaterally with greater involvement in the left hemisphere based on visual inspection of the statistical activation maps. Although the areas of activity associated with each speech condition were similar across the length of the task, some differences emerged when the beginning and ending thirds were independently examined. Table 3 provides the location and level of peak activation differences between the novel and native conditions. Brain regions that were significantly more active during the production of novel syllables compared to English syllables for both time periods are displayed in Figure 2. During the first 10 minutes, there was greater activity bilaterally in the Amg, BG, Th, TP, MTG, STG, MFG, OrIFG, OpIFG, TriIFG, Ins, ACgG, M1, PMC, SMA, S1, as well as the left PTG/FuG, SMG, and IPL. During the last 10 minutes, greater activity was found in the left Ins, BG, TriIFG, M1, PMC, S1, SMG, posterior STG, and IPL for novel compared to native productions. In both the beginning and ending time segment, the greatest difference in signal intensity for novel compared to native productions was observed in the insula, IFG, and IPL/S1. 
While the difference in insular activity remained extensive during the final third of the task, visual inspection suggests that the IFG activity showed less difference between the two conditions than was observed during the initial third (Figure 2).

Table 3.

Local maxima of brain regions with neural activity associated with the production of multisyllabic non-words composed of novel syllables compared to English syllables, including the anatomical locations of peak activation within each cluster, the z-scores (Z; threshold: Z > 2.3, corrected cluster threshold: p=0.01), MNI spatial coordinates (x, y, z), and corresponding Brodmann areas.

| Novel (initial) > English (initial) | | | | | | |
|---|---|---|---|---|---|---|
| Anatomical location | Hemisphere | Brodmann area | Z | x | y | z |
| Inferior Frontal Gyrus | L | 47 | 5.04 | −42 | 18 | −2 |
| Middle Frontal Gyrus | L | 6 | 3.01 | −26 | −6 | 60 |
| Precentral Gyrus | L | 6 | 4.97 | −62 | 2 | 26 |
| | | 44 | 4.83 | −54 | 8 | 10 |
| Superior Frontal Gyrus | L | 6 | 3.89 | −4 | 6 | 66 |
| | | | 2.93 | −22 | −4 | 68 |
| Cingulate Gyrus | L | 32 | 4.42 | 0 | 24 | 34 |
| Inferior Parietal Lobule | L | 40 | 5.61 | −60 | −26 | 32 |
| Postcentral Gyrus | L | 2 | 5.36 | −62 | −20 | 26 |
| Anterior Insula | L | 47 | 5.29 | −32 | 24 | −4 |
| Medial Frontal Gyrus | R | 6 | 4.64 | 2 | 14 | 44 |
| Cingulate Gyrus | R | 24 | 3.60 | 4 | 2 | 46 |
| Novel (final) > English (final) | | | | | | |
| Anatomical location | Hemisphere | Brodmann area | Z | x | y | z |
| Inferior Frontal Gyrus | L | 9 | 3.85 | −60 | 6 | 22 |
| | | 44 | 3.77 | −56 | 6 | 18 |
| | | | 3.34 | −50 | 2 | 16 |
| Parahippocampal Gyrus | L | * Amg | 3.30 | −20 | −2 | −12 |
| Inferior Parietal Lobule | L | 40 | 4.39 | −60 | −24 | 28 |
| | | | 3.08 | −46 | −38 | 38 |
| Postcentral Gyrus | L | 40 | 4.30 | −60 | −24 | 22 |
| Insula | L | 13 | 3.75 | −36 | 18 | 2 |
| Middle Temporal Gyrus | L | 37 | 3.11 | −56 | −66 | 6 |
| Superior Temporal Gyrus | L | 42 | 3.05 | −60 | −26 | 12 |
| | | 22 | 3.00 | −62 | −52 | 14 |
| | | | 3.47 | −52 | 4 | 4 |

\* Subcortical structure does not have a corresponding Brodmann area.

Figure 2.

Brain areas that were statistically more activated in the production of novel compared to native syllables during the first 10 minutes of the task (top) and the last 10 minutes of the task (bottom). Arrows point to significant activity in the left IFG (green) and the left aIns (red). The number between the two rows of images indicates the location of the axial slices that are displayed.

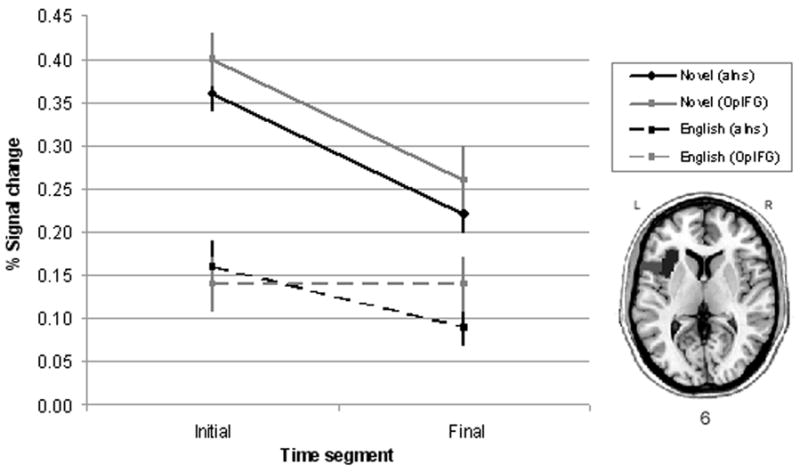

An ROI analysis was employed for the left aIns and the left OpIFG using a 2×2×2 repeated measures ANOVA to compare the mean percent signal change associated with the speech task for the three within-subject factors of cortical location, familiarity, and time. The results revealed a significant main effect for familiarity (p=0.0001) and time (p=0.04); however, there was also a significant interaction between these two factors (p=0.007). Thus, the effect associated with familiarity was modulated by time. No other main effects or interactions were significant. The results of the pair-wise comparisons are displayed in Table 4.

Table 4.

Pair-wise comparisons for ROI analysis in the aIns and OpIFG.

| Region | Comparison | t(59) | p |
|---|---|---|---|
| Anterior Insula (aIns) | Novel 1st vs. Novel 3rd | 3.89 | †0.0003 |
| | English 1st vs. English 3rd | 1.54 | 0.1281 |
| | Novel 1st vs. English 1st | 7.17 | †0.0001 |
| | Novel 3rd vs. English 3rd | 4.59 | †0.0001 |
| Inferior Frontal Gyrus Pars Opercularis (OpIFG) | Novel 1st vs. Novel 3rd | 2.14 | *0.0361 |
| | English 1st vs. English 3rd | 0.03 | 0.9754 |
| | Novel 1st vs. English 1st | 6.69 | †0.0001 |
| | Novel 3rd vs. English 3rd | 3.25 | †0.0019 |

\* statistically significant at the 0.05 level

† statistically significant at the 0.006 level

Figure 3 displays the group mean and standard error for the amount of change in signal intensity according to location, familiarity, and time. The signal intensity was relatively stable for English productions (Figure 3, dashed lines) across time in both ROIs. The results of the pairwise t-tests revealed no difference in the percent signal change for English-syllable production between the first and final time period in either ROI (p=0.13 and 0.98). For novel productions (Figure 3, solid lines), significantly greater activity was revealed in the aIns during the first time period compared to the final time period (p=0.0003), whereas the corresponding decrease in the OpIFG approached significance (p=0.04) but did not reach the Bonferroni-corrected alpha level for multiple comparisons. However, when novel productions were compared with English productions during the first time segment, significantly greater activity was revealed in both the aIns (p=0.0001) and the OpIFG (p=0.0001). Similarly, during the final time segment, greater activation was also found in both the aIns and OpIFG for novel productions compared to English productions (p=0.0001 and 0.002, respectively). Figure 3 shows greater signal change associated with novel productions regardless of time or location, as well as the interaction between familiarity and time.
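The arithmetic behind the corrected threshold can be made explicit with a short sketch. It assumes that the Bonferroni correction divides the 0.05 alpha by all eight pair-wise comparisons in Table 4, which gives 0.00625 and agrees with the 0.006 level reported in the table footnote; the exact correction procedure is not spelled out in the text, so this is an assumption. The p-values are copied from Table 4, and the names `PAIRWISE_P` and `classify` are hypothetical.

```python
# p-values copied from Table 4, keyed by (ROI, comparison).
PAIRWISE_P = {
    ("aIns", "Novel 1st vs. Novel 3rd"): 0.0003,
    ("aIns", "English 1st vs. English 3rd"): 0.1281,
    ("aIns", "Novel 1st vs. English 1st"): 0.0001,
    ("aIns", "Novel 3rd vs. English 3rd"): 0.0001,
    ("OpIFG", "Novel 1st vs. Novel 3rd"): 0.0361,
    ("OpIFG", "English 1st vs. English 3rd"): 0.9754,
    ("OpIFG", "Novel 1st vs. English 1st"): 0.0001,
    ("OpIFG", "Novel 3rd vs. English 3rd"): 0.0019,
}

ALPHA = 0.05
# Assumption: alpha is divided by the total number of pair-wise tests (eight).
CORRECTED_ALPHA = ALPHA / len(PAIRWISE_P)


def classify(p, alpha=ALPHA, corrected=CORRECTED_ALPHA):
    """Label a p-value relative to the uncorrected and corrected thresholds."""
    if p < corrected:
        return "significant after correction"
    if p < alpha:
        return "significant uncorrected only"
    return "not significant"


if __name__ == "__main__":
    print(f"corrected alpha = {CORRECTED_ALPHA:.5f}")
    for (roi, comparison), p in PAIRWISE_P.items():
        print(f"{roi:6s} {comparison:30s} p={p:.4f}  {classify(p)}")
```

Under this assumption, the OpIFG comparison of novel productions across time (p=0.0361) is the only test that falls between the two thresholds.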

Figure 3.

Percentage signal change in the anterior portion of the left insular cortex (black) and the pars opercularis of the left inferior frontal gyrus (gray) during the production of novel (solid line) and English (dashed line) syllables. The axial slices of the brain (bottom right) display the two ROIs: aIns (black) and OpIFG (gray).

In addition, Spearman’s correlation coefficients were calculated between the amount of signal change in each ROI during each phase of the task and the improvement in the participants’ accuracy scores for novel productions from the initial to the final phase of the task. Improvement in the accuracy scores for novel productions showed a significant positive correlation with the amount of signal change in the aIns during the final phase of the task (r=.39, p=.04).
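For readers unfamiliar with the statistic, Spearman's coefficient is the Pearson correlation computed on ranks, with tied values assigned the mean of their ranks. The following is a minimal sketch using only the standard library; the function names and the example values are hypothetical and are not the study data.

```python
import math


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value equals the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1  # positions are 0-based, ranks 1-based
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


if __name__ == "__main__":
    # Hypothetical values: change in accuracy rating and percent signal change.
    improvement = [0.4, -0.1, 0.2, 0.0, 0.6, -0.3, 0.1, 0.3]
    signal_change = [0.52, 0.31, 0.35, 0.40, 0.61, 0.28, 0.45, 0.38]
    print(f"Spearman correlation = {spearman(improvement, signal_change):.2f}")
```

A rank-based coefficient is a natural choice when one variable, such as a subjective accuracy rating, is ordinal rather than interval-scaled.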

Discussion

The purpose of this study was to investigate the neural substrates of motor speech production with a specific focus on the left aIns and IFG. Both of these regions have been frequently reported in neuroimaging studies of speech production and in neuroanatomical studies of AOS; however, the specific contribution of each area to speech production remains unclear. Based on the sometimes contradictory findings regarding the possible role of the aIns in motor speech production, it was hypothesized that the aIns may play an important and complementary role to the IFG. That is, we postulated that both regions are involved in motor speech planning, but that the recruitment within each of these regions is modulated by the content to be produced. For example, the two-stage model of motor programming proposes two types of programming: 1) the organization/integration of movement elements into chunks (i.e. the internal spatio-temporal structure of units) and 2) the sequencing of successive units into the correct serial order (Klapp, 2003). Although speech is normally produced with considerable ease, disruptions and/or increased demands to either of these processes could perturb the seemingly automatic nature of speech production. We suggest that the production of novel (non-English) syllables provides a unique opportunity to investigate speech production by exceeding the typical demands of motor speech planning in normal adult speakers. Therefore, this study was designed to test our a priori hypothesis regarding the neural demands for producing novel (non-English) syllable strings compared to native (English) syllable strings.

While an extensive neural network was activated during the production of both native and novel syllables, certain differences emerged. As expected, the production of novel syllables resulted in greater extent and intensity of the BOLD signal compared to English syllables. While no brain areas showed greater activity during English productions compared to novel productions, a number of brain regions with greater activity emerged bilaterally when novel productions were contrasted with native productions, including the left aIns and IFG (Figure 2). All of these regions have been previously implicated in speech production in a number of studies (Dogil et al., 2001; Ackermann & Riecker, 2004; Shuster & Lemieux, 2004; Bohland & Guenther, 2006). Thus, the overall findings suggest that the areas responsible for routine speech production are also recruited when attempting to produce new speech sounds that are not a part of the native repertoire. In particular, these data suggest that the production of unfamiliar speech sounds results in increased bilateral engagement of the entire motor speech system, including areas that are typically associated with language processing and motor execution.

It is possible that the increased neural activity associated with the novel-syllable productions merely reflected increased difficulty, as increased task demands have been associated with increased activation and right hemisphere recruitment in normal and impaired individuals (Just, Carpenter, Keller, Eddy, & Thulborn, 1996; Szameitat, Schubert, Müller, & Von Cramon, 2002; Fridriksson & Morrow, 2005). Alternatively, the overall increase in activity in the motor speech network may directly reflect the lack of familiarity with the motor commands necessary to produce the target. Along with evidence supporting localization of function, many neurocognitive functions are supported by the interaction of many components within a distributed network. This concept may be particularly relevant when new constructs have to be established. According to the DIVA model, multiple brain regions are highly interactive during the learning phase of syllable productions (Guenther, 2006; Guenther, Ghosh, & Tourville, 2006). In the DIVA model, the auditory, motor, and somatosensory areas interact to provide both feedforward and feedback controls in acquiring new motor maps for speech.

If the increased activation associated with novel productions was related to such motor learning, then more extensive activation would be expected for novel productions during the initial segment compared to the final segment. Indeed, the contrasts for the beginning and ending third of the task revealed more extensive activation for novel productions during the initial segment, followed by a decrease with prolonged practice. During the final segment, the statistical maps of activation became much more similar between the native- and novel-syllable productions (Figure 2). That is, the amount of additional recruitment associated with novelty was modulated by repeated exposure/practice. Although a decrease in the task-related BOLD signal over time could reflect a decrease in overall activity over the length of the 30-minute scanning session (e.g. participants become more comfortable with the task and/or begin to habituate to the task), this seems highly unlikely. If this were the case, a similar decrease in activation would be expected across conditions. On the contrary, the decrease in activation was unique to the novel condition. This suggests that the increased activity seen initially may represent areas that are important in supporting the acquisition of new motor plans for speech, and that the need for recruitment in these areas may subside as these motor representations become integrated into the speech motor system.

As the task progressed, the increased activity associated with novel compared to native productions became more isolated and left-hemisphere lateralized (Figure 2). Of particular interest, significant activation was revealed in the left aIns during the production of novel syllables over what was seen during the production of native syllables regardless of time, both in the whole-brain analysis (Figure 2) and the ROI analysis (Figure 3). While there was no difference in aIns recruitment between the beginning and ending segment for native productions, greater aIns activation was found during the initial segment of the task for novel productions compared to the final segment. That is, novel productions during the first segment compared to the last segment of the task clearly demonstrated greater activity initially in the aIns bilaterally, followed by a decrease in activity during the final segment. However, even in the final segment, recruitment of the left aIns remained greater for novel compared to native productions when most of the other areas of activation in the speech motor network no longer showed a difference in activity between the two conditions.

These results may suggest that the aIns plays an important role in establishing new motor constructs. However, the implications of these findings are somewhat unclear. Although the possible role of the aIns in learning new motor speech plans provided theoretical motivation for the implementation of this study, its increased response to novel productions does not appear to be unique when other cortical areas are considered. Instead, an extensive network was recruited during novel productions, including the right aIns and the IFG bilaterally, particularly during the initial phase, when the greatest novelty would be present. The two regions of interest in this study were the left aIns and the left IFG. While the left aIns showed increased activation associated with novel productions as predicted, this was also true of the left IFG. In fact, apart from the left IPL, these two regions demonstrated the highest level of peak activity (local maxima) during the novel versus native comparisons (Table 3). Based on these findings, as well as the results from previous fMRI and neuroanatomical studies in AOS, it is likely that these two regions are highly interactive, particularly when the motor speech system is being taxed. Continued research is required in order to tease apart these two heavily interconnected regions, as activation in one area may demand an obligatory response from the other. Brain disruption techniques might provide a complementary approach for understanding whether these two brain areas play different functional roles; however, disruption of the aIns is logistically problematic because it lies deep to the lateral surface of the brain.

In general, the results of this study support the hypotheses regarding the role of the aIns in sublexical speech production containing novel syllables. While native syllables should have internal motor representations, novel syllables would not, and therefore, would require the assembly of new motor commands. If motor plans for familiar productions are stored in the IFG (Guenther, 2006; Guenther, Ghosh, & Tourville, 2006), then they can be retrieved as units to facilitate speech. Online self-monitoring for content and accuracy of speech output can be provided by auditory and somatosensory feedback. During the production of native speech sounds, monitoring and integration would always be present to help guide and modify productions as needed. However, the importance of coordination and sensory feedback/integration increases greatly when the target to be produced is unfamiliar, and therefore, does not have a motor plan stored internally. Previously, the role of the aIns has been attributed to several speech-related functions, including sensory integration (Dogil et al., 2001) and motor coordination (Ackermann & Riecker, 2004). However, the current findings do not provide sufficient evidence to distinguish between these two accounts. Nevertheless, increased neural activation in the aIns associated with novel productions, as well as the subsequent decrease in activation associated with practice, supports the claim that the aIns may be important when attempting to produce new/unestablished speech plans.

On the other hand, sustained levels of significant activation in the aIns across the length of the task could be used to argue against this hypothesis. However, this position seems inconsistent with the overall nature of the data. It is more likely that the non-native syllables remained somewhat novel throughout the 30-minute paradigm. This is supported by the fact that accuracy ratings for novel productions did not improve over the length of the task for the group as a whole. While subjective evaluation of the behavioral data clearly confirmed that all participants were attempting the novel productions, the amount of exposure that was provided did not yield increased speech accuracy (at least not as perceived by our English-speaking raters). Instead, those with lower ratings at the beginning also tended to have lower ratings at the end, and the same was true for those with higher ratings. However, the individual data show variability between participants regarding their change in performance. Although the ROI analysis revealed a significant decrease in the amount of neural activation in the aIns during novel productions over time, the correlation analysis for behavioral improvements suggests that this difference may not be completely straightforward. While the mean percent signal change associated with novel production decreased during the final 10 minutes compared to the first 10 minutes, increased behavioral performance across the length of the task was associated with greater activity in the aIns during the final phase. That is, those individuals with the greatest activation in the aIns during the final third of the task demonstrated greater improvement in their novel productions. No relationship was found between behavioral improvement and activation in the OpIFG. In addition, the amount of activation in either ROI during the initial phase was not related to the amount of improvement across the task.
Taken together, these results suggest that the aIns showed the greatest amount of recruitment initially for the group, but as the task progressed, the degree of individual learning became associated with the amount of activity that remained in the aIns. We speculate that individuals with greater improvements remained engaged in a learning mode longer. If the motor plans for the novel productions were not yet solidified and these individuals were continuing to modify their productions, this should be reflected in their neural recruitment. That is, as the task progressed, better learners continued to recruit the aIns, while poorer learners recruited it less.

It is unclear whether the range in behavioral performance was related to differences in perception abilities or whether it independently reflected production abilities. However, in previous studies investigating novel speech-sound learning, participants who were better at producing a novel sound were not necessarily those who were better at auditory identification (Golestani, Paus, & Zatorre, 2002; Golestani, Molko, Dehaene, Le Bihan, & Pallier, 2007; Golestani & Pallier, 2007). In fact, Golestani and colleagues found structural differences within two distinct anatomical regions that were associated with performance in each of these tasks. Greater white matter density in the left HG and IPL was associated with auditory aptitude, while greater white matter density in the insula/prefrontal cortex (between BA 45, BA 47, and BA 13) was associated with production aptitude (Golestani & Pallier, 2007). The importance of connections between HG and the IPL in the auditory analysis of novel sounds may explain why the IPL showed increased activity during the novel productions in the current study. Furthermore, the importance of connections between the IFG and aIns in novel speech abilities is particularly interesting considering the anatomical differences that have been found in lesion studies of AOS, as well as the similarities in activation between the aIns and IFG in the current study during novel productions. Perhaps communication between these two regions is key. This may explain why both regions have been implicated in AOS. Although the underlying cause of struggle is different for a person with AOS who is trying to retrieve or execute a degraded/previously learned motor plan compared to a normal person trying to produce speech sounds that are not within their native repertoire, there are some parallels. 
If the aIns is important for facilitating new speech motor representations in normal people (either directly or via connections with the IFG), it may also play a crucial role in reestablishing representations that are impaired or inaccessible in AOS.

Thus, we suggest increased recruitment of the aIns during productions that are novel/lack automaticity; however, we do not assume that this region would not be recruited for familiar production. Instead, we propose that this area supports online processing during speech production with the extent of its involvement being modulated by particular aspects of the targeted production. For example, Maas et al. (2008) suggest differences in processing demands for speech production related to the internal complexity of units vs. the number of units within a sequence, although these two concepts are not mutually exclusive. Internal complexity not only involves the complexity of the spatio-temporal structure of the individual units (e.g. repeating vs. alternating syllables, such as dada vs. daba), but can also pertain to the number of syllables in a sequence that has been stored as a chunk. That is, the motor plans for frequently used strings of syllables (e.g. high frequency words) may be stored as a single unit where the number of syllables now determines the internal complexity of the unit. If this idea is applied to the current study, we posit that novel productions yield greater internal complexity demands than native productions due to familiarity. Because these syllables are novel, the spatio-temporal structures are not prepackaged, and therefore, the motor elements that comprise these novel syllables must be newly assembled. If the aIns and OpIFG are sensitive to internal complexity and sequence length respectively, then we would expect both of these areas to contribute to the production of multisyllabic nonwords since nonwords should not be stored as whole units in either case. Soros et al. (2006) suggested that aIns activity may be particularly involved during varying compared to repetitive speech production.
If this hypothesis is correct, then repeated practice of both native and novel syllable combinations should decrease demands in the aIns compared to initial exposure. Regarding OpIFG involvement, the demands of sequence length should remain relatively unchanged for native combinations, but may be altered for novel combinations. For example, during the initial phase, we would suggest greater segmentation of motor plans, which could pose greater sequence length demands. The idea of the IFG as a serial processor is not new, as this region has been implicated in syntactical processing in auditory comprehension and speech production (Davis et al., 2008).

In conclusion, the current findings suggest an extensive neural network supporting speech production and an overall increase in activity within the system during the production of unfamiliar speech, including the aIns and IFG. While the observed increase in neural activation in the aIns during novel productions, particularly in the initial phase, provides support for its possible role in motor speech, other areas within the speech/language network also showed increased activation. Furthermore, the involvement of these regions in speech production does not exclude their involvement in other cognitive processes. Nevertheless, these findings are consistent with the hypothesized importance of the aIns in facilitating the production of new motor plans for speech, perhaps related to assembly of smaller motor units, and the IFG in orchestrating the serial organization of motor chunks/established plans.

Supplementary Material

Figure S-1. The mean accuracy scores of each participant for novel productions during the initial and final segment of the task using a 5-point ascending scale for subjective ratings based on similarity to target exemplar.

Figure S-2. Neural activation associated with the production of multisyllabic non-words during the first 10 minutes (red) and last 10 minutes (green) of the 30-minute task compared to baseline (the overlap of common areas of activity during the two time segments is represented in yellow). The top panel displays the statistical activation map associated with the native/English syllable condition, and the bottom panel displays the statistical activation map associated with the novel/non-English syllable condition.

Figure S-3. Scatterplot of the relationship between the change in behavioral accuracy scores for novel productions and the percent signal change in the aIns during the final phase of the task (r=.39, p=.04).

Acknowledgments

This work was supported by the National Institute on Deafness and Other Communication Disorders, DC008355.

References

- Ackermann H, Riecker A. The contribution of the insula to motor aspects of speech production: a review and a hypothesis. Brain and Language. 2004;89(2):320–328. doi: 10.1016/S0093-934X(03)00347-X.

- Aziz-Zadeh L, Cattaneo L, Rochat M, Rizzolatti G. Covert Speech Arrest Induced by rTMS over Both Motor and Nonmotor Left Hemisphere Frontal Sites. Journal of Cognitive Neuroscience. 2005;17:928–938. doi: 10.1162/0898929054021157.

- Ballard KJ, Granier JP, Robin DA. Understanding the nature of apraxia of speech: Theory, analysis, and treatment. Aphasiology. 2000;14(10):969–995.

- Ballard KJ, Robin DA. Influence of continual biofeedback on jaw pursuit-tracking in healthy adults and in adults with apraxia plus aphasia. Journal of Motor Behavior. 2007;39(1):19–28. doi: 10.3200/JMBR.39.1.19-28.

- Barch DM, Sabb FW, Carter CS, Braver TS, Noll DC, Cohen JD. Overt verbal responding during fMRI scanning: Empirical investigations of problems and potential solutions. NeuroImage. 1999;10(6):642–657. doi: 10.1006/nimg.1999.0500.

- Bates E, Wilson SM, Saygin AP, Dick F, Sereno MI, Knight RT, et al. Voxel-based lesion-symptom mapping. Nature Neuroscience. 2003;6:448–450. doi: 10.1038/nn1050.

- Beckmann CF, Jenkinson M, Smith SM. General multi-level linear modelling for group analysis in FMRI. NeuroImage. 2003;20:1052–1063. doi: 10.1016/S1053-8119(03)00435-X.

- Birn RM, Bandettini PA, Cox RW, Shaker R. Event-related fMRI of tasks involving brief motion. Human Brain Mapping. 1999;7(2):106–114. doi: 10.1002/(SICI)1097-0193(1999)7:2<106::AID-HBM4>3.0.CO;2-O.

- Bohland JW, Guenther FH. An fMRI investigation of syllable sequence production. NeuroImage. 2006;32(2):821–841. doi: 10.1016/j.neuroimage.2006.04.173.

- Bonilha L, Moser D, Rorden C, Baylis G, Fridriksson J. Speech apraxia without oral apraxia: Can normal brain function explain the physiopathology? NeuroReport. 2006;17(10):1027–1031. doi: 10.1097/01.wnr.0000223388.28834.50.

- Borovsky A, Saygin AP, Bates E, Dronkers N. Lesion correlates of conversational speech production deficits. Neuropsychologia. 2007;45(11):2525–2533. doi: 10.1016/j.neuropsychologia.2007.03.023.

- Broca P. Localisation of speech in the third left frontal convolution. Bulletin de la Société d’Anthropologie de Paris. 1865;6:377–393.

- Broussolle E, Bakchine S, Tommasi M, Laurent B, Bazin B, Cinotti L, et al. Slowly progressive anarthria with late anterior opercular syndrome: a variant form of frontal cortical atrophy syndromes. Journal of the Neurological Sciences. 1996;144(1–2):44–58. doi: 10.1016/s0022-510x(96)00096-2.

- Davis C, Kleinman JT, Newhart M, Gingis L, Pawlak M, Hillis AE, et al. Speech and language functions that require a functioning Broca's area. Brain and Language. 2008;105:50–58. doi: 10.1016/j.bandl.2008.01.012.

- Deger K, Ziegler W. Speech motor programming in apraxia of speech. Journal of Phonetics. 2002;30(3):321–335.

- Dogil G, Ackermann H, Grodd W, Haider H, Kamp H, Mayer J, et al. The speaking brain: A tutorial introduction to fMRI experiments in the production of speech, prosody and syntax. Journal of Neurolinguistics. 2001;15:59–90.

- Dronkers N. A new brain region for coordinating speech articulation. Nature. 1996;384:159–161. doi: 10.1038/384159a0.

- Epstein CM. Transcranial magnetic stimulation: Language function. Journal of Clinical Neurophysiology. 1998;15:325–332. doi: 10.1097/00004691-199807000-00004.

- Epstein CM, Meador KJ, Loring DW, Wright RJ, Weissman JD, Sheppard S, et al. Localization and characterization of speech arrest during transcranial magnetic stimulation. Clinical Neurophysiology. 1999;110:1073–1079. doi: 10.1016/s1388-2457(99)00047-4.

- Evans AC, Collins DL, Mills SR, Brown ED, Kelly RL, Peters TM. 3D statistical neuroanatomical models from 305 MRI volumes. In: Proceedings IEEE-Nuclear Science Symposium and Medical Imaging Conference. Piscataway, NJ: IEEE Inc; 1993. pp. 1813–1817.

- Finley A, Saver J, Alger J, Pregenzer M, Leary M, Ovbiagele B, et al. Diffusion weighted imaging assessment of insular vulnerability in acute middle cerebral artery infarctions [abstract]. Stroke. 2003;34:259.

- Frenck-Mestre C, Anton JL, Roth M, Vaid J, Viallet F. Articulation in early and late bilinguals’ two languages: evidence from functional magnetic resonance imaging. NeuroReport. 2005;16(7):761–765. doi: 10.1097/00001756-200505120-00021.

- Fridriksson J, Morrow L. Cortical activation associated with language task difficulty in aphasia. Aphasiology. 2005;19(3–5):239–250. doi: 10.1080/02687030444000714.

- Fridriksson J, Morrow KL, Moser D, Baylis GC. Age-related variability in cortical activity during language processing. Journal of Speech, Language, and Hearing Research. 2006;49(4):690–697. doi: 10.1044/1092-4388(2006/050).

- Ghosh SS, Tourville JA, Guenther FH. A Neuroimaging Study of Premotor Lateralization and Cerebellar Involvement in the Production of Phonemes and Syllables. Journal of Speech, Language, and Hearing Research. 2008;51:1183–1202. doi: 10.1044/1092-4388(2008/07-0119).

- Golestani N, Pallier C. Anatomical correlates of foreign speech sound production. Cerebral Cortex. 2007;17:929–934. doi: 10.1093/cercor/bhl003.

- Golestani N, Molko N, Dehaene S, Le Bihan D, Pallier C. Brain structure predicts the learning of foreign speech sounds. Cerebral Cortex. 2007;17(3):575–582. doi: 10.1093/cercor/bhk001. [DOI] [PubMed] [Google Scholar]

- Golestani N, Paus T, Zatorre RJ. Anatomical correlates of learning novel speech sounds. Neuron. 2002;35:997–1010. doi: 10.1016/s0896-6273(02)00862-0. [DOI] [PubMed] [Google Scholar]

- Gorni-Tempini ML, Dronkers NF, Rankin KP, Ogar JM, Phengrasamy L, Rosen HJ, et al. Cognition and anatomy in three variants of primary progressive aphasia. Annuals of Neurology. 2004;55:335–346. doi: 10.1002/ana.10825. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gracco VL, Tremblay P, Pike B. Imaging speech production using fMRI. Neuroimage. 2005;26(1):294–301. doi: 10.1016/j.neuroimage.2005.01.033. [DOI] [PubMed] [Google Scholar]

- Guenther Cortical interaction underlying the production of speech sounds. Journal of Communication Disorders. 2006;39(5):350–65. doi: 10.1016/j.jcomdis.2006.06.013. [DOI] [PubMed] [Google Scholar]

- Guenter FH, Ghosh SS, Tourville JA. Neural modeling and imaging of the cortical interactions underlying syllable production. Brain and Language. 2006;96:280–301. doi: 10.1016/j.bandl.2005.06.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hillis AE, Work M, Barker PB, Jacobs MA, Breese EL, Maurer K. Re-examining the brain regions crucial for orchestrating speech articulation. Brain. 2004;127:1479–1487. doi: 10.1093/brain/awh172. [DOI] [PubMed] [Google Scholar]

- Jenkinson M, Smith SM. A Global Optimisation Method for Robust Affine Registration of Brain Images. Medical Image Analysis. 2001;5:143–156. doi: 10.1016/s1361-8415(01)00036-6. [DOI] [PubMed] [Google Scholar]

- Jenkinson M, Bannister P, Brady M, Smith S. Improved optimization for the robust and accurate linear registration and motion correction of brain images. Neuroimage. 2002;17:825–841. doi: 10.1016/s1053-8119(02)91132-8. [DOI] [PubMed] [Google Scholar]

- Just MA, Carpenter PA, Keller TA, Eddy WF, Thulborn KR. Brain activation modulated by sentence comprehension. Science. 1996;274:114–116. doi: 10.1126/science.274.5284.114. [DOI] [PubMed] [Google Scholar]

- Klapp ST. Reaction time analysis of two types of motor preparation for speech articulation: Action as a sequence of chunks. Journal of Motor Behavior. 2003;35(2):135–150. doi: 10.1080/00222890309602129. [DOI] [PubMed] [Google Scholar]

- Kuriki S, Mori T, Hirata Y. Motor planning center for speech articulation in the normal human brain. Neuroreport. 1999;10(4):765–769. doi: 10.1097/00001756-199903170-00019. [DOI] [PubMed] [Google Scholar]

- Levelt WJM, Wheeldon L. Do speaker have access to a mental syllabary? Cognition. 1994;50:239–269. doi: 10.1016/0010-0277(94)90030-2. [DOI] [PubMed] [Google Scholar]

- Maas E, Robin DA, Wright DL, Ballard KJ. Motor programming in apraxia of speech. Brain and Language. 2008;106(2):107–118. doi: 10.1016/j.bandl.2008.03.004. [DOI] [PubMed] [Google Scholar]

- Marien P, Pickut BA, Engelborghs S, Martin JJ, De Deyn PP. Phonological agraphia following a focal anterior insulo-opercular infarction. Neuropsychologia. 2001;39:845–855. doi: 10.1016/s0028-3932(01)00006-9. [DOI] [PubMed] [Google Scholar]

- McNeil M, Robin D, Schmidt R. Apraxia of speech: Definition, differentiation, and treatment. In: McNeil M, editor. Clinical Management of Sensorimotor Speech Disorders. New York, NY: Thieme; 1997. pp. 311–344. [Google Scholar]

- Mohr JP, Pessin MS, Finkelstein S, Funkenstein HH, Duncan GW, Davis KR. Broca aphasia: pathologic and clinical. Neurology. 1978;28:311–324. doi: 10.1212/wnl.28.4.311. [DOI] [PubMed] [Google Scholar]

- Murphy K, Corfield DR, Guz A, Fink GR, Wise RJS, Harrison J, et al. Cerebral areas associated with motor control of speech in humans. Journal of Applied Physiology. 1997;83(5):1438–1447. doi: 10.1152/jappl.1997.83.5.1438.

- Nagao M, Takeda K, Komori T, Isozaki E, Hirai S. Apraxia of speech associated with an infarct in the precentral gyrus of the insula. Neuroradiology. 1999;41:356–357. doi: 10.1007/s002340050764.

- Nestor PJ, Graham NL, Fryer TD, Williams GB, Patterson K, Hodges JR. Progressive non-fluent aphasia is associated with hypometabolism centred on the left anterior insula. Brain. 2003;126:2406–2418. doi: 10.1093/brain/awg240.

- Nishitani N, Schürmann M, Amunts K, Hari R. Broca’s region: from action to language. Physiology. 2005;20(1):60–69. doi: 10.1152/physiol.00043.2004.

- Ogar J, Slama H, Dronkers N, Amici S, Gorno-Tempini ML. Apraxia of speech: An overview. Neurocase. 2005;11:427–432. doi: 10.1080/13554790500263529.

- Ogar J, Willock S, Baldo J, Wilkins D, Ludy C, Dronkers N. Clinical and anatomical correlates of apraxia of speech. Brain and Language. 2006;97(3):343–350. doi: 10.1016/j.bandl.2006.01.008.

- Pascual-Leone A, Gates JR, Dhuna A. Induction of speech arrest and counting errors with rapid-rate transcranial magnetic stimulation. Neurology. 1991;41:697–702. doi: 10.1212/wnl.41.5.697.

- Riecker A, Ackermann H, Wildgruber D, Meyer J, Dogil G, Haider H, et al. Articulatory/phonetic sequencing at the level of the anterior perisylvian cortex: A functional magnetic resonance imaging (fMRI) study. Brain and Language. 2000;75(2):259–276. doi: 10.1006/brln.2000.2356.

- Rorden C, Karnath HO, Bonilha L. Improving lesion-symptom mapping. Journal of Cognitive Neuroscience. 2007;19:1081–1088. doi: 10.1162/jocn.2007.19.7.1081.

- Shuster LI, Lemieux SK. An fMRI investigation of covertly and overtly produced mono- and multisyllabic words. Brain and Language. 2004;93:20–31. doi: 10.1016/j.bandl.2004.07.007.

- Smith SM. Fast robust automated brain extraction. Human Brain Mapping. 2002;17:143–155. doi: 10.1002/hbm.10062.

- Smith SM, Jenkinson M, Woolrich MW, Beckmann CF, Behrens TE, Johansen-Berg H, et al. Advances in functional and structural MR image analysis and implementation as FSL. Neuroimage. 2004;23:S208–219. doi: 10.1016/j.neuroimage.2004.07.051.

- Soros P, Sokoloff LG, Bose A, McIntosh AR, Graham SJ, Stuss DT. Clustered functional MRI of overt speech production. Neuroimage. 2006;32(1):376–387. doi: 10.1016/j.neuroimage.2006.02.046.

- Spencer KA, Rogers MA. Speech motor programming in hypokinetic and ataxic dysarthria. Brain and Language. 2005;94(3):347–366. doi: 10.1016/j.bandl.2005.01.008.

- Stewart L, Walsh V, Frith U, Rothwell JC. TMS produces two dissociable types of speech disruption. Neuroimage. 2001;13:472–478. doi: 10.1006/nimg.2000.0701.

- Szameitat AJ, Schubert T, Müller K, Von Cramon DY. Localization of executive functions in dual-task performance with fMRI. Journal of Cognitive Neuroscience. 2002;14:1184–1199. doi: 10.1162/089892902760807195.

- Tourville JA, Reilly KJ, Guenther FH. Neural mechanisms underlying auditory feedback control of speech. Neuroimage. 2008;39(3):1429–1443. doi: 10.1016/j.neuroimage.2007.09.054.

- Tzourio-Mazoyer N, Landeau B, Papathanassiou D, Crivello F, Etard O, Delcroix N, et al. Automated anatomical labelling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single subject brain. Neuroimage. 2002;15:273–289. doi: 10.1006/nimg.2001.0978.

- Varley R, Whiteside SP. What is the underlying impairment in acquired apraxia of speech? Aphasiology. 2001;15(1):39–84.

- Varley RA, Whiteside SP, Luff H. Dual-route speech encoding in normal and apraxic speakers: Some durational evidence. Journal of Medical Speech-Language Pathology. 1999;7:127–132.

- Wambaugh JL, Duffy JR, McNeil MR, Robin DA, Rogers MA. Treatment guidelines for acquired apraxia of speech: A synthesis and evaluation of the evidence. Journal of Medical Speech-Language Pathology. 2006;14(2):XV–XXXIII.

- Wise RJS, Greene J, Buchel C, Scott SK. Brain regions involved in articulation. Lancet. 1999;353(9158):1057–1061. doi: 10.1016/s0140-6736(98)07491-1.

- Worsley KJ, Evans AC, Marrett S, Neelin P. A three-dimensional statistical analysis for CBF activation studies in human brain. Journal of Cerebral Blood Flow and Metabolism. 1992;12:900–918. doi: 10.1038/jcbfm.1992.127.

- Yetkin O, Yetkin FZ, Haughton VM, Cox RW. Use of functional MR to map language in multilingual volunteers. American Journal of Neuroradiology. 1996;17(3):473–477.

- Woolrich MW, Behrens TEJ, Beckmann CF, Jenkinson M, Smith SM. Multi-level linear modelling for FMRI group analysis using Bayesian inference. NeuroImage. 2004;21:1732–1747. doi: 10.1016/j.neuroimage.2003.12.023.

- Woolrich MW, Ripley BD, Brady M, Smith SM. Temporal autocorrelation in univariate linear modeling of FMRI data. Neuroimage. 2001;14:1370–1386. doi: 10.1006/nimg.2001.0931.

Supplementary Materials

Figure S-1. Mean accuracy scores of each participant for novel productions during the initial and final segments of the task, rated subjectively on a 5-point ascending scale based on similarity to the target exemplar.

Figure S-2. Neural activation associated with the production of multisyllabic non-words during the first 10 minutes (red) and last 10 minutes (green) of the 30-minute task compared to baseline; areas active during both time segments are shown in yellow. The top panel displays the statistical activation map for the native/English syllable condition, and the bottom panel displays the statistical activation map for the novel/non-English syllable condition.

Figure S-3. Scatterplot of the relationship between the change in behavioral accuracy scores for novel productions and the percent signal change in the aIns during the final phase of the task (r = .39, p = .04).