Abstract

Choosing an appropriate response in an uncertain and varying world is central to adaptive behaviour. The frequent activation of the anterior cingulate cortex (ACC) in a diverse range of tasks has led to intense interest in, and debate over, its role in the guidance and control of performance. Here, we consider how this issue can be informed by a series of studies examining the ACC's role in more naturalistic situations, where there is no single, certain correct response and the relationships between choices and their consequences vary. A neuroimaging study of response switching demonstrates that dorsal ACC is not simply concerned with self-generated responses or error monitoring in isolation, but is instead involved in evaluating the outcomes, positive or negative, of choices that have been made voluntarily. By contrast, an interconnected part of the orbitofrontal cortex is shown to be more active when attending to the consequences of actions instructed by the experimenter. This dissociation is explained with reference to the anatomy of these regions in humans, as demonstrated by diffusion weighted imaging. Lesions to a corresponding ACC region in monkeys have no effect on the animals' ability to detect or immediately correct errors when response contingencies reverse, but render them unable to sustain appropriate behaviour because of an impairment in the ability to integrate their recent history of choices and outcomes over time. Taken together, these findings imply a prominent role for the ACC within a distributed network of regions that determine the dynamic value of actions and guide decision making appropriately.

Introduction

Much of everyday life, as anyone who considers the issue will recognise, consists of choosing between alternative courses of action. Determining which response is appropriate in a particular context is complex, influenced by current motivation and past history as well as by individual assessments of the desirability of each alternative. Sometimes our actions are selected voluntarily, based on an internal assessment of which option is optimal; at other times they are constrained by instruction or prompted by external stimuli. In a static environment, where the relationships between what we choose to do and the consequences were fixed, such decisions would be relatively straightforward. Unfortunately, we exist in a dynamic and uncertain world, cooperating and competing with others, in which the outcome of our choices can be influenced by what we and others have previously decided to do.

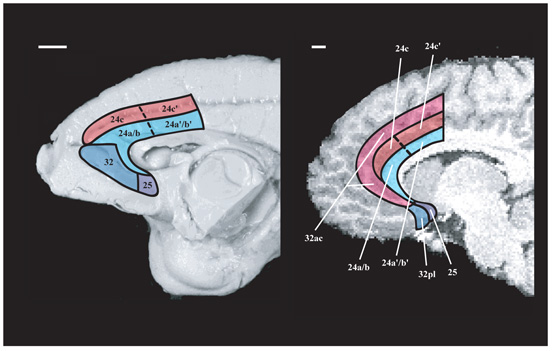

Many parts of the frontal lobe are integral to the selection and control of goal-directed actions. However, the exact contribution of discrete prefrontal and anterior cingulate cortical regions to this process remains an open question. This is exemplified by the debate over the function of the anterior cingulate cortex (ACC) (Figure 1). Activations have been found in this region in a diverse range of cognitive tasks as well as in response to autonomic arousal (Botvinick, Cohen and Carter, 2004; Bush, Luu and Posner, 2000; Critchley, 2005; Paus, Koski, Caramanos and Westbury, 1998; Rushworth, Walton, Kennerley and Bannerman, 2004), raising the question of what common factor might drive the ACC response in these situations. Moreover, the ACC is seldom activated in isolation from other interconnected regions such as the orbitofrontal cortex (OFC), lateral prefrontal cortex and parts of the striatum (Duncan and Owen, 2000; Paus, Koski, Caramanos and Westbury, 1998). This raises the related issue of how the function of the ACC differs from that of these other areas. Furthermore, data gathered using different techniques and in different species have frequently not corresponded perfectly.

Figure 1.

Comparative anatomy of primate ACC (macaque monkey, left-hand panel; human, right-hand panel). In the macaque, the ACC is divided into two broad regions: i) the ACC gyrus (blue), including areas 24a and 24b rostrally, 24a′ and 24b′ caudally, 32, and 25; ii) the ACC sulcus (red), area 24c, which occupies most of the sulcal ACC. Its caudal part, 24c′, contains the rostral cingulate motor area (CMAr). It is also possible to consider two broad subdivisions within the human ACC. Human ACC gyrus areas 32pl, 25, 24a and 24b resemble the macaque gyral areas 32, 25, 24a and 24b (Ongur, Ferry and Price, 2003; Vogt, Nimchinsky, Vogt and Hof, 1995). Human areas 24c, 24c′ and 32ac in the ACC sulcus and, when present, the second superior cingulate gyrus (CGs) bear similarities to the areas in the macaque ACC sulcus and include the cingulate motor areas.

The purpose of the present paper is not to give a detailed critique of the extant theories of ACC function, particularly with regard to the human electrophysiological literature, as this has been accomplished comprehensively by several recent reviews (Botvinick, Braver, Barch, Carter and Cohen, 2001; Holroyd and Coles, 2002; Paus, 2001; Ridderinkhof, Ullsperger, Crone and Nieuwenhuis, 2004; Rushworth, Walton, Kennerley and Bannerman, 2004). Instead, we wish to discuss how recent work investigating decision making, where the relationships between actions and their consequences do not remain fixed, has illuminated one aspect of the function of the ACC. By forcing subjects to use their recent experience to select an appropriate course of action, these studies uncover a crucial role for the ACC, particularly the dorsal sulcal region (see Figure 1), in forming action-outcome associations to guide response selection.

Interactions between action selection and performance monitoring in ACC

In a changeable environment, it is vital to be able to adapt one's behaviour rapidly depending on how successfully one's goals were achieved. Such adaptability has been seen as a cardinal aspect of cognitive control in the human literature (Allport, Styles and Hsieh, 1994; Bunge, 2004) and, in animal studies, as an indication that responses are not merely being selected out of habit (though see Balleine and Dickinson, 1998 for more comprehensive criteria). Neuroimaging studies of task switching frequently find activation in parts of dorsomedial prefrontal cortex, including dorsal ACC, when people have to switch between response rules (Crone, Wendelken, Donohue and Bunge, 2006; Dove, Pollmann, Schubert, Wiggins and von Cramon, 2000; Liston, Matalon, Hare, Davidson and Casey, 2006; Rushworth, Hadland, Paus and Sipila, 2002). Surprisingly, however, monkeys with large lesions to the ACC, including both the sulcal and gyral regions, seem no more impaired when switching between two response sets than when selecting an action under a well-established response rule (Rushworth, Hadland, Gaffan and Passingham, 2003).

These tasks, while appealingly simple conceptually, contain several different factors that could be driving the ACC activation. One possibility is that the response in this region is driven by detecting when an incorrect response has been made (Holroyd and Coles, 2002) or when the likelihood of making an error is high (Brown and Braver, 2005). A related idea is that the ACC might instead be concerned with monitoring for times of response conflict, which is likely to be greatest at the time of a switch, as people try to inhibit the previously correct response in order to select the now appropriate one (Botvinick, Braver, Barch, Carter and Cohen, 2001; Botvinick, Cohen and Carter, 2004). Both of these theories imply that the ACC does not itself directly regulate response selection but instead signals to interconnected regions, such as the lateral prefrontal cortex, a need to exert control or modify behaviour.

However, there is also evidence that dorsal ACC plays an active, volitional role in choosing what response to make. Electrical microstimulation of discrete parts of the cingulate sulcus in macaque monkeys known as the cingulate motor areas (CMAs), which project directly to both primary motor cortex and the ventral horn of the spinal cord, can elicit complex movements (He, Dum and Strick, 1995; Luppino, Matelli, Camarda and Rizzolatti, 1994; Matelli, Luppino and Rizzolatti, 1991; Wang, Shima, Sawamura and Tanji, 2001). Giant pyramidal Betz cells are found in posterior parts of both monkey and human cingulate sulcus, indicative of a motor role for this region, and subdivisions comparable to the macaque CMAs can be identified in the human brain on the basis of cytoarchitecture and functional comparisons (Braak, 1976; Picard and Strick, 1996; Zilles, Schlaug, Geyer, Luppino, Matelli, et al., 1996). Similarly, stimulation of an equivalent rostral CMA region in awake humans left them unable to resist the urge to move towards and grasp objects within their reach (Kremer, Chassagnon, Hoffmann, Benabid and Kahane, 2001). Beyond any general involvement in movement, dorsal ACC seems particularly concerned with situations in which a response has to be generated voluntarily rather than guided by an external stimulus or instruction (Frith, Friston, Liddle and Frackowiak, 1991; Jahanshahi, Jenkins, Brown, Marsden, Passingham, et al., 1995; Lau, Rogers, Ramnani and Passingham, 2004). Lesions of the superior frontal gyrus and the ACC render monkeys unable to make self-paced movements even though they are perfectly capable of responding when cued by a tone, and ACC ablation also impairs their ability to use reward, but not visual cues, to guide action selection (Hadland, Rushworth, Gaffan and Passingham, 2003; Thaler, Chen, Nixon, Stern and Passingham, 1995).

Neuroimaging studies of self-generated or “willed” action have tended to employ tasks in which no one response is any better than the alternatives. However, such a scenario is unlikely to occur in any natural environment, where choices will instead be guided by an assessment of the expected value of each option. When the connection between an action and its outcome is not fixed, as occurs in switching tasks, people have to pay attention to the consequences of their choices in order to determine whether the response they selected was appropriate. Moreover, in such uncertain situations, it is not merely negative feedback or errors that are a useful source of information; positive outcomes can be just as important if they are equally instructive for future actions. Indeed, reinforcement learning theories argue that both positive and negative outcomes are used to generate a prediction about the value of a choice (Montague, Hyman and Cohen, 2004).
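To make the last point concrete, the minimal sketch below shows a delta-rule (Rescorla-Wagner style) value update of the kind used in reinforcement learning models: the value estimate moves towards each outcome in proportion to the prediction error, so rewards and reward omissions both change it. This is an illustrative assumption rather than a model fitted in any of the studies discussed here; the learning rate, starting value, 0/1 outcome coding and function names are all hypothetical.

```python
"""Minimal delta-rule value update (illustrative sketch).

Assumptions: outcomes are coded 1 (reward) or 0 (no reward); the learning
rate and initial value are arbitrary, not taken from any cited study.
"""


def update_value(value, outcome, learning_rate=0.3):
    """Move the value estimate towards the observed outcome.

    The prediction error is positive after an unexpectedly good outcome and
    negative after an unexpectedly bad one, so both kinds of feedback
    change the estimate.
    """
    prediction_error = outcome - value
    return value + learning_rate * prediction_error


def learn_values(outcomes, initial_value=0.5, learning_rate=0.3):
    """Return the value estimate after each outcome in the sequence."""
    values = []
    value = initial_value
    for outcome in outcomes:
        value = update_value(value, outcome, learning_rate)
        values.append(value)
    return values


if __name__ == "__main__":
    # Hypothetical history: three rewards followed by three omissions.
    for trial, v in enumerate(learn_values([1, 1, 1, 0, 0, 0]), start=1):
        print(f"trial {trial}: value = {v:.3f}")
```

The estimate rises over the rewarded trials and falls over the unrewarded ones; in richer models the same signed error drives learning about the value of each available action.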

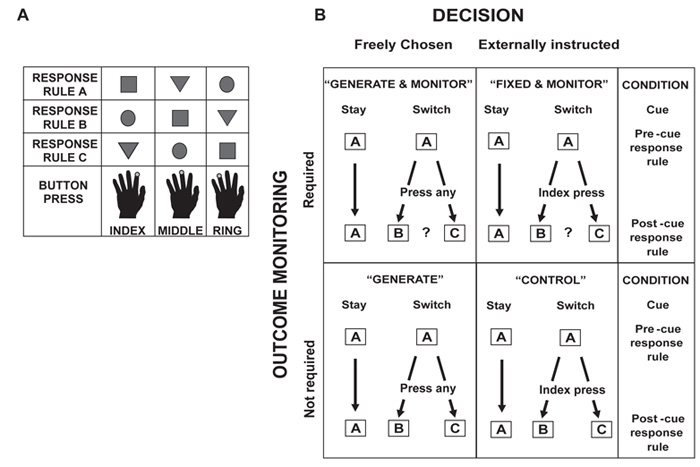

To investigate the degree to which the ACC is involved in voluntary response selection, in performance monitoring, or in assessing the outcome of people's own decisions, Walton and colleagues carried out an fMRI study that parametrically manipulated the need to choose a response and to monitor its consequences (Walton, Devlin and Rushworth, 2004). In this task, people were taught three sets of conditional response rules, each linking three stimuli with three possible finger movements (Figure 2a). On each trial, one of the stimuli was presented and the participants' task was to make the appropriate response according to whichever response rule was currently in place. Feedback was provided after each response indicating whether the correct action had been selected. After a number of trials on which participants responded according to one particular response rule, a cue appeared instructing them either to stay with the same rule or to switch to one of the other two sets of response rules. However, unlike in most previous comparable experiments, the switch cue only informed subjects that a change had occurred; it did not indicate which set of response rules was now in place.

Figure 2.

A. Representation of the three stimuli and response rules used in the task. B. A schematic of four of the conditions employed. In two of the versions of the task, participants were required after a switch cue to choose their response voluntarily (left-hand column), whereas in the other two they were instructed which response to make (for example, always to make an index finger response; right-hand column). Similarly, in two of the conditions, participants had to attend to the outcome to determine which set of response rules was in place (top row), whereas in the other two the first response was always correct, so participants did not need to monitor the feedback in order to switch response rules (bottom row). Adapted from Walton et al. (2004) with permission.

To manipulate the two experimental factors of interest, voluntary response selection and performance monitoring, four versions of the task were run which differed only in what people were required to do immediately after the presentation of a switch cue (Figure 2b). In two of the conditions (GENERATE+MONITOR and GENERATE), on the first trial after the switch cue, participants voluntarily chose which action to make, opting to respond using one of the two stimulus-response mappings that differed from the previous rule set. In the other two conditions (FIXED+MONITOR and CONTROL), participants never had to choose for themselves what to do after a switch cue; instead, they were told by the experimenter before the experiment began which response to make on that first trial. In either of the MONITOR conditions, participants were initially uncertain, immediately after the switch cue, which rule set was now in place and so had to attend to the feedback to guide subsequent behaviour. This independent manipulation of the need to monitor outcomes distinguishes the design from previous studies, which manipulated the choice demands (O'Doherty, Critchley, Deichmann and Dolan, 2003; Tricomi, Delgado and Fiez, 2004). In the remaining two conditions (GENERATE and CONTROL), whatever response was made was always followed by correct feedback, meaning that participants did not need to monitor the outcome in order to work out which rule set to use.

To perform the GENERATE+MONITOR condition optimally, therefore, participants had both to (a) make voluntary decisions about which action to select and (b) monitor the outcome of their choices to work out which set of response rules was in place and modify their behaviour accordingly. By contrast, in the GENERATE and FIXED+MONITOR versions, participants had to contend with only one of these factors when switching between response sets. By contrasting, in each condition, the signal time-locked to the switch cue with that time-locked to the stay cue, it was possible to assess the degree to which activity in dorsal ACC is driven by voluntary response selection, performance monitoring or a combination of the two.
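The logic of this 2 × 2 design can be written down explicitly. In the minimal sketch below, each condition is coded by two binary factors, and a helper reports which factors differ between two conditions; a pairwise comparison (with each condition already expressed as switch minus stay) isolates a single process only when exactly one factor differs. The factor coding follows the description above, while the dictionary and function names are hypothetical.

```python
"""Factor coding of the four switching conditions (illustrative sketch).

generate = 1 if the first response after a switch cue is chosen voluntarily;
monitor  = 1 if the feedback must be attended to in order to identify the
           new rule set. Names are hypothetical.
"""

CONDITIONS = {
    "GENERATE+MONITOR": {"generate": 1, "monitor": 1},
    "GENERATE": {"generate": 1, "monitor": 0},
    "FIXED+MONITOR": {"generate": 0, "monitor": 1},
    "CONTROL": {"generate": 0, "monitor": 0},
}


def differing_factors(cond_a, cond_b):
    """Return the sorted list of factors whose levels differ between conditions."""
    a, b = CONDITIONS[cond_a], CONDITIONS[cond_b]
    return sorted(factor for factor in a if a[factor] != b[factor])


if __name__ == "__main__":
    # Each comparison used in the text varies exactly one factor.
    print(differing_factors("GENERATE+MONITOR", "FIXED+MONITOR"))
    print(differing_factors("GENERATE+MONITOR", "GENERATE"))
```

Comparing GENERATE+MONITOR with CONTROL, by contrast, would confound both factors, which is why the single-factor comparisons are the informative ones.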

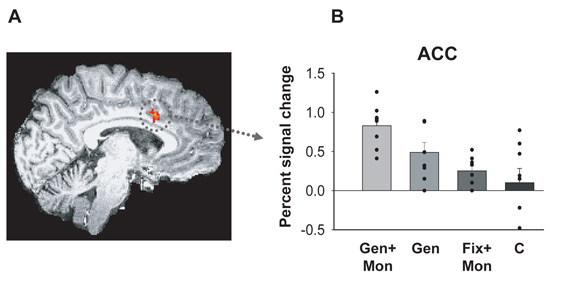

As observed in previous studies, the signal in dorsal ACC increased when switching between response rules whenever participants were either able to exercise their own volition in choosing a response or had to attend to the feedback to work out which response set to use (that is, in all conditions except CONTROL). Importantly, when the two conditions in which subjects were uncertain about which rule set was in place after a switch were compared, activity in the ACC was significantly greater when participants had to decide what response to make, and so had more freedom of choice (GENERATE+MONITOR), than when they were told what to do (FIXED+MONITOR) (Figure 3a, b). This implies that the opportunity to choose voluntarily what action to make is an important component of ACC function. However, it is not merely the act of deciding what to do that drives the ACC response here: the same region of dorsal ACC identified in this comparison (Figure 3a) was also significantly more active when participants had to attend to the outcome of a choice they had made (GENERATE+MONITOR) than when they only had to choose a response without monitoring its outcome (GENERATE) (Figure 3b).

Figure 3.

A. ACC activation in the contrast of the signal at the time of the switch cue versus the stay cue, in GENERATE+MONITOR relative to FIXED+MONITOR. B. Plots of the effect size in this ACC region across the four conditions. Signal in the ACC was significantly greater when participants had both to choose a response through their own volition and to monitor its outcome to decide what to do (GEN+MON) than when they simply had to select a response voluntarily (GEN) or attend to the feedback of an externally instructed action (FIX+MON). GEN+MON = GENERATE+MONITOR, GEN = GENERATE, FIX+MON = FIXED+MONITOR, CON = CONTROL. Adapted from Walton et al. (2004) with permission.

As there were only two possible new rule sets after a switch, correct and incorrect feedback were equally informative: a correct outcome indicated that participants should keep using the chosen response rule, whereas an incorrect outcome implied that they should switch to the other possible set. In contrast to work that has emphasised the importance of the ACC for processing errors (Brown and Braver, 2005; Holroyd and Coles, 2002; Ullsperger and Von Cramon, 2003), it is therefore notable that activity in the ACC in the GENERATE+MONITOR condition did not differ according to whether the feedback was positive or negative, suggesting that it is the salience of the information gained from the outcome that drives the change in signal. This concurs with electrophysiological studies in monkeys showing that both the receipt and the withholding of reward can drive ACC neurons (Amiez, Joseph and Procyk, 2006; Matsumoto, Suzuki and Tanaka, 2003; Niki and Watanabe, 1979; Procyk, Tanaka and Joseph, 2000). Moreover, although participants' reaction times on the first trial after a switch cue relative to those after a stay cue (the “switch cost”) differed somewhat between conditions, the increased activation in GENERATE+MONITOR does not appear to be directly attributable to overtly expressed response conflict, as there was no relationship between the switch cost and the magnitude of the signal in this ACC region. Taken together, the data indicate that dorsal ACC is particularly involved when people are uncertain about what response to make and so have to monitor the consequences of their own choices to work out what is desirable to do.
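Why both kinds of feedback were equally informative can be checked with Bayes' rule. The sketch below assumes, for illustration, that the two remaining rule sets are equally likely after a switch cue, that they prescribe different responses to the presented stimulus, and that feedback is deterministic; under these assumptions either outcome removes the full 1 bit of uncertainty. The function names are hypothetical.

```python
"""Information carried by feedback after a switch cue (illustrative sketch).

Assumptions: candidate rule sets prescribe different responses to the
current stimulus, and feedback is deterministic (correct only if the
participant acted on the rule set actually in force).
"""
import math


def posterior_after_feedback(prior, chosen_rule, feedback_correct):
    """Apply Bayes' rule over candidate rule sets.

    P(correct feedback | rule) is 1 if the rule in force is the one the
    participant acted on, and 0 otherwise.
    """
    likelihood = {
        rule: 1.0 if (rule == chosen_rule) == feedback_correct else 0.0
        for rule in prior
    }
    unnormalised = {rule: prior[rule] * likelihood[rule] for rule in prior}
    total = sum(unnormalised.values())
    return {rule: p / total for rule, p in unnormalised.items()}


def entropy_bits(dist):
    """Shannon entropy in bits; zero-probability entries contribute nothing."""
    return sum(-p * math.log2(p) for p in dist.values() if p > 0)


if __name__ == "__main__":
    prior = {"rule B": 0.5, "rule C": 0.5}  # the two possible new rule sets
    for correct in (True, False):
        post = posterior_after_feedback(prior, "rule B", correct)
        gain = entropy_bits(prior) - entropy_bits(post)
        print(f"feedback correct={correct}: posterior={post}, gain={gain} bit")
```

With three equally likely candidate rule sets, by contrast, correct feedback would identify the rule set outright while incorrect feedback would still leave 1 bit of uncertainty, so positive and negative outcomes would no longer be equally informative.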

Monitoring instructed actions and the orbitofrontal cortex (OFC)

Neuroimaging studies of choosing and evaluating responses during decision making contexts often show activations in OFC as well as in dorsal ACC (Berns, McClure, Pagnoni and Montague, 2001; Cohen, Heller and Ranganath, 2005; Coricelli, Critchley, Joffily, O'Doherty, Sirigu, et al., 2005; Elliott, Friston and Dolan, 2000; Ernst and Paulus, 2005; O'Doherty, Critchley, Deichmann and Dolan, 2003). Lesions which include OFC in humans, monkeys or rats cause impairments in using reward information to guide and alter choices (Bechara, Damasio and Damasio, 2000; Izquierdo, Suda and Murray, 2005; Jones and Mishkin, 1972; Rogers, Everitt, Baldacchino, Blackshaw, Swainson, et al., 1999; Schoenbaum, Setlow, Nugent, Saddoris and Gallagher, 2003), and, as in the ACC, there are cells in this region too that respond to the anticipation and delivery of reinforcement (Hikosaka and Watanabe, 2000; Padoa-Schioppa and Assad, 2006; Roesch and Olson, 2004; Schoenbaum, Chiba and Gallagher, 1998; Tremblay and Schultz, 1999; Wallis and Miller, 2003).

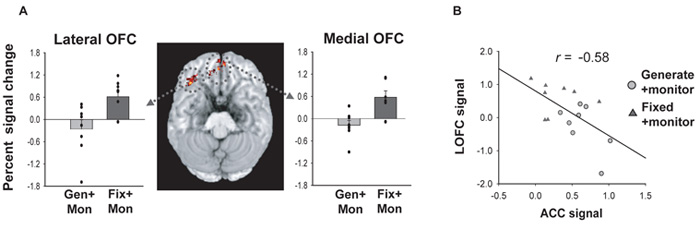

It is notable, therefore, that comparing GENERATE+MONITOR with either GENERATE or FIXED+MONITOR in Walton et al. (2004) did not reveal any increases in activity in the OFC. However, a separate contrast in this fMRI study identified where in the brain the signal at the time of the switch was greater in FIXED+MONITOR than in GENERATE+MONITOR, that is, the regions concerned with monitoring the outcome of an action that had been externally instructed rather than internally generated; this comparison did reveal OFC activations (Figure 4a). Such a finding concurs with studies reporting that activity in the OFC can occur in response to reward or a violation of expectation even when there is no requirement to make a decision (Berns, McClure, Pagnoni and Montague, 2001; Nobre, Coull, Frith and Mesulam, 1999; O'Doherty, Dayan, Friston, Critchley and Dolan, 2003; Petrides, Alivisatos and Frey, 2002). Again, the signal in FIXED+MONITOR did not differ between correct and incorrect feedback. Comparing activity in the ACC and OFC across these two monitoring conditions revealed a functional coupling between the two regions: their signal levels were negatively correlated (Figure 4b). This suggests that the degree to which the ACC or the OFC evaluates the consequences of choices depends on the nature of the decision, that is, whether it was internally guided and voluntarily selected or the result of external instruction.

Figure 4.

A. OFC activation and effect sizes in the contrast of the signal at the time of the switch cue versus the stay cue, in FIXED+MONITOR relative to GENERATE+MONITOR. B. Plot of the signal in the ACC against that in the OFC for the GENERATE+MONITOR and FIXED+MONITOR conditions. There is a significant negative correlation between the two measures, and the mean gradient of the lines joining each subject's paired points from the two conditions is significantly negative. Adapted from Walton et al. (2004) with permission.
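The two coupling measures in panel B can be computed as sketched below. The sketch assumes one effect size per subject, per region and per condition, treats the ACC as the x-axis, pools all points for the correlation, and computes for each subject the slope of the line joining their GENERATE+MONITOR and FIXED+MONITOR points. These are illustrative assumptions, the numbers in the usage example are made up, and significance testing (for example, a one-sample t-test on the gradients) is not shown.

```python
"""ACC-OFC coupling measures (illustrative sketch with made-up numbers)."""
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


def paired_gradients(acc_gen, ofc_gen, acc_fix, ofc_fix):
    """Per-subject slope (change in OFC / change in ACC) between conditions."""
    slopes = []
    for a1, o1, a2, o2 in zip(acc_gen, ofc_gen, acc_fix, ofc_fix):
        if a2 == a1:
            continue  # slope undefined if the ACC signal is unchanged; skip subject
        slopes.append((o2 - o1) / (a2 - a1))
    return slopes


if __name__ == "__main__":
    # Hypothetical effect sizes for three subjects (not real data).
    acc_gen, ofc_gen = [1.0, 1.2, 0.8], [0.1, 0.0, 0.2]
    acc_fix, ofc_fix = [0.4, 0.5, 0.3], [0.6, 0.7, 0.5]
    r = pearson_r(acc_gen + acc_fix, ofc_gen + ofc_fix)
    slopes = paired_gradients(acc_gen, ofc_gen, acc_fix, ofc_fix)
    print(f"pooled r = {r:.2f}; mean gradient = {sum(slopes) / len(slopes):.2f}")
```

The per-subject gradient is useful because it removes between-subject differences in overall signal level, which a pooled correlation alone would not.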

An anatomy of performance monitoring

Functional differences can often be understood through inspection of the pattern of connections of the respective regions (Passingham, Stephan and Kotter, 2002). While there is a detailed literature documenting connectivity in the monkey brain, it has until recently been difficult to obtain comparable information in humans. However, using diffusion weighted magnetic resonance imaging (DWI) and probabilistic tractography, it has recently become possible to examine the trajectories of white matter fibre tracts in vivo in both humans and monkeys and to generate estimates of the likelihood of a pathway existing between two brain areas (Behrens, Johansen-Berg, Woolrich, Smith, Wheeler-Kingshott, et al., 2003; Hagmann, Thiran, Jonasson, Vandergheynst, Clarke, et al., 2003; Tournier, Calamante, Gadian and Connelly, 2003). Therefore, we can consider whether there are differences in the anatomical connections of the ACC and the OFC that might help explain the performance monitoring dissociations observed in the fMRI study by Walton and colleagues (2004).

As discussed in an earlier section, anatomical and functional evidence indicates that dorsal ACC in humans contains the CMAs, which project directly to primary motor cortex and the spinal cord, providing a direct route by which the ACC could influence choices. The ACC activation in the GENERATE+MONITOR condition lay approximately in the region of the rostral CMA as defined by Picard and Strick (2001). By contrast, no such motor pathways exist from the OFC. However, outside motor systems, it is almost impossible to infer explicit structure-function relationships merely from cellular and dendritic organisation, meaning that any association between the activation patterns in ACC and OFC seen in Walton et al.'s (2004) fMRI study and the anatomical connections of these regions could previously only be speculated upon on the basis of studies in macaque monkeys.

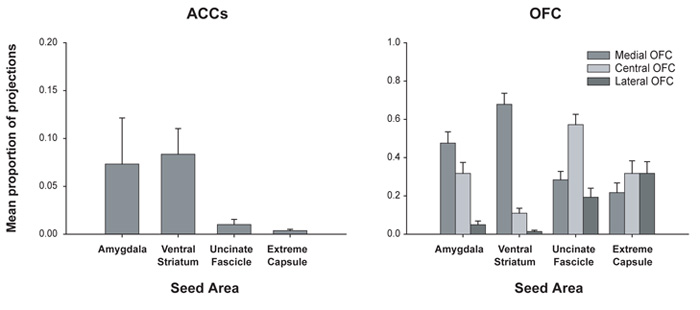

Using the method developed by Behrens (Behrens, Woolrich, Jenkinson, Johansen-Berg, Nunes, et al., 2003), Croxson and colleagues (Croxson, Johansen-Berg, Behrens, Robson, Pinsk, et al., 2005) investigated the connections of parts of the temporal lobe and striatum with the ACC and OFC in humans and monkeys. In accord with tracing studies in the macaque, both the human ACC sulcus (ACCs) and OFC (chiefly the central and medial regions) were shown to have connections with the amygdala and ventral striatum, both of which have been implicated in predicting the contingencies between environmental stimuli, actions and rewards (Baxter and Murray, 2002; O'Doherty, 2004) (Figure 5a, b). A small region of the ventral striatum was found to be commonly activated in the study by Walton and colleagues (2004) in the two conditions which required outcome monitoring, but not in a third control condition (not described above) in which it was not necessary to pay attention to the feedback. This concurs with the involvement of both the ACC and OFC in using outcome information to guide behaviour.

Figure 5.

Quantitative results of probabilistic tractography from seed masks in the human extreme capsule, uncinate fascicle, amygdala and ventral striatum to the ACC sulcus (ACCs; left panel) and to lateral, central and medial OFC (right panel). The probability of connection with each prefrontal region, as a proportion of the total connectivity with all prefrontal regions, is plotted on the y-axis. Owing to the distorting influence of the high fractional anisotropy of the corpus callosum, the probability of tracts reaching the ACCs may be much lower than that of tracts reaching the OFC. Although this renders quantitative comparison between different regions problematic, it is still possible to gain important information regarding the pattern of connections within an area. Adapted from Croxson et al. (2005) with permission.
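The normalisation described in this caption can be sketched in a few lines. The input for one seed mask is assumed to be the number of probabilistic tractography samples reaching each prefrontal target, and each count is divided by the total over all targets; the region labels and counts below are hypothetical. Because each seed is normalised separately, a bias affecting every tract that reaches one target (such as the callosal effect on the ACCs) should leave the relative pattern across seeds for that target broadly interpretable, in line with the caveat above.

```python
"""Connectivity as a proportion of total prefrontal connectivity (sketch).

Assumption: counts are numbers of tractography samples from one seed that
reach each target mask. Labels and numbers are hypothetical.
"""


def connection_proportions(counts):
    """Divide each target's count by the total over all targets for this seed."""
    total = sum(counts.values())
    if total <= 0:
        raise ValueError("no samples reached any prefrontal target")
    return {target: n / total for target, n in counts.items()}


def pattern_for_target(counts_by_seed, target):
    """Proportion of each seed's prefrontal connectivity that reaches `target`."""
    return {seed: connection_proportions(counts)[target]
            for seed, counts in counts_by_seed.items()}


if __name__ == "__main__":
    counts_by_seed = {  # hypothetical sample counts
        "amygdala": {"ACCs": 20, "lateral OFC": 30, "central OFC": 30, "medial OFC": 20},
        "extreme capsule": {"ACCs": 5, "lateral OFC": 60, "central OFC": 25, "medial OFC": 10},
    }
    print(pattern_for_target(counts_by_seed, "ACCs"))
```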

However, there is also evident divergence in the pattern of OFC and ACC connections when their estimated connectivity is compared with parts of the temporal lobe that connect to prefrontal cortex via the uncinate fascicle and extreme capsule in monkeys (Petrides and Pandya, 2002; Ungerleider, Gaffan and Pelak, 1989). The OFC, particularly the lateral region that was activated in the FIXED+MONITOR condition, showed a high degree of interconnection with these areas, whereas only sparse connections with the ACCs were recorded (Figure 5a, b). These results were observed in both humans and monkeys, suggesting that these areas correspond across species. This means that, of the two regions, only the OFC is in a position to receive highly processed sensory information, which may help account for its involvement in externally guided actions and in altering stimulus-reward associations.

Performance monitoring and reward history in macaque ACC

Activations in neuroimaging experiments demonstrate a correlation between a component of the experimental task and an indirect measure of neuronal activity, but cannot establish whether the region is essential for that function. In addition to the neuroimaging evidence, a large number of electrophysiological studies in monkeys have reported cells in the ACCs that respond to errors or to reductions in reward, responses that facilitate corrective behaviour (Amiez, Joseph and Procyk, 2005; Ito, Stuphorn, Brown and Schall, 2003; Shima and Tanji, 1998). However, while changes in the way errors are handled after ACC lesions have been reported (Fellows and Farah, 2005; Rushworth, Hadland, Gaffan and Passingham, 2003; Shima and Tanji, 1998; Swick and Turken, 2002), the degree of disruption has been inconsistent. One possible reason is that, for a foraging animal such as a macaque monkey gathering food in an uncertain environment, courses of action are rarely categorically correct or incorrect. Rather than simply considering the outcome of the immediately preceding response, the animal has to use its history of positive and negative reinforcement to decide between competing options.

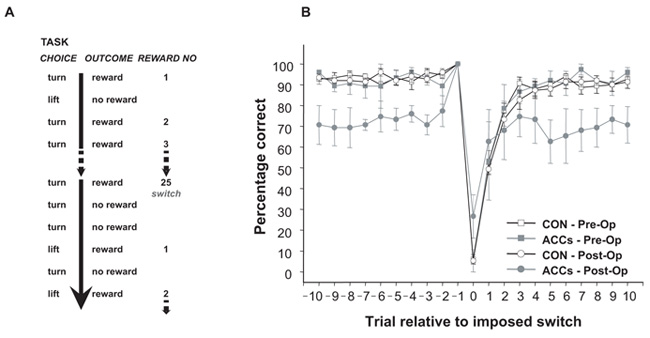

To investigate the role of the ACCs in error- and reward-guided action selection, Kennerley and colleagues (Kennerley, Walton, Behrens, Buckley and Rushworth, 2006) taught monkeys a response reversal task in which they could make one of two joystick movements (lift or turn) to receive food reinforcement (Figure 6a), and examined the effects of discrete ACCs lesions on performance of this task. Only one of the two responses was rewarded at any one time. The action-outcome relationship remained fixed until the animal had gathered twenty-five rewards, after which the contingency reversed and the other movement was rewarded. Therefore, to obtain food at an optimal rate, the monkey had to sustain one response for a number of trials while monitoring the outcome of each action, so that it could switch rapidly to the other response when the previous one no longer yielded reward. If the ACC is particularly important for error detection and/or correction, then lesions to this region would be expected to make monkeys poor at updating their actions at the time of a switch. Moreover, this is also the point in the task at which animals should experience most conflict between competing responses.
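The reward schedule can be expressed as a small simulation. The sketch below assumes deterministic reward for the currently correct action and a reversal once 25 rewards have been collected, as described above. It also includes a hypothetical, idealised lose-shift chooser as a reference point, which on this schedule makes exactly one error after each reversal. The class and function names are illustrative and are not taken from the original study.

```python
"""Reversal schedule for the two-action joystick task (illustrative sketch).

Assumptions: actions are "lift" and "turn"; the currently correct action is
always rewarded and the other never is; the contingency reverses once 25
rewards have been collected. Names are hypothetical.
"""


def other(action):
    """Return the alternative movement."""
    return "turn" if action == "lift" else "lift"


class ReversalTask:
    """Deterministic two-action reversal schedule."""

    ACTIONS = ("lift", "turn")

    def __init__(self, rewards_per_block=25, first_correct="lift"):
        self.rewards_per_block = rewards_per_block
        self.correct = first_correct
        self.rewards_in_block = 0

    def step(self, action):
        """Return True if `action` is rewarded, reversing the contingency when due."""
        if action not in self.ACTIONS:
            raise ValueError(f"unknown action: {action!r}")
        rewarded = action == self.correct
        if rewarded:
            self.rewards_in_block += 1
            if self.rewards_in_block == self.rewards_per_block:
                # Reversal is triggered by a reward, so a switch always
                # follows a correct response.
                self.correct = other(self.correct)
                self.rewards_in_block = 0
        return rewarded


def run_lose_shift(n_trials):
    """Total rewards for an idealised win-stay/lose-shift chooser (hypothetical)."""
    task = ReversalTask()
    action = "lift"
    total = 0
    for _ in range(n_trials):
        if task.step(action):
            total += 1
        else:
            action = other(action)  # switch immediately after any unrewarded trial
    return total


if __name__ == "__main__":
    print(run_lose_shift(100))  # 97: one error after each of the three reversals
```

Such an idealised chooser is only a benchmark; as the results below show, real animals do not treat a single unrewarded trial as a decisive cue.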

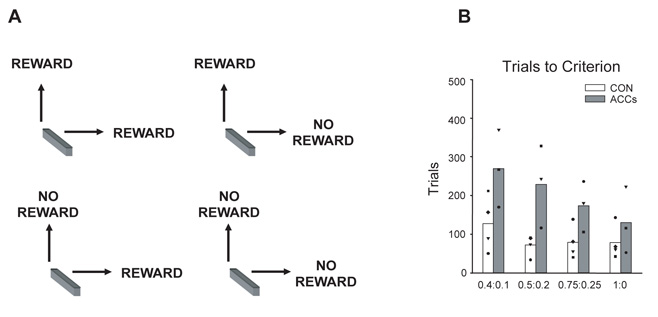

Figure 6.

A. Representation of the reward- and error-guided switching task that the monkeys performed. B. Plot of performance on the task over the 10 trials before and after an imposed switch (trial 0). The experimental design entailed that switches (point “0”) were only ever imposed after a correct response (point “-1”). The average performance of the control group is depicted by unfilled symbols and black lines, and that of the ACCs-lesioned animals by grey symbols and lines (squares = pre-operative, circles = post-operative). As is apparent, the performance of control animals on the trial immediately after a switch is poor (point “1”), and the likelihood of choosing the correct response increases over subsequent trials. By comparison, the ACCs lesion has little effect at the time of the switch, but the lesioned animals' performance diverges from that of the control animals 4-5 trials afterwards and never subsequently reaches their level. Adapted from Kennerley et al. (2006) with permission.
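A switch-aligned curve like the one in panel B can be computed by averaging correctness at each offset from the reversal. In the sketch below, the reversal index is assumed to be the first trial under the new contingency (trial 0 in the figure), trial outcomes are a list of booleans, and the function name is hypothetical; offsets falling outside the session are skipped for that switch.

```python
"""Peri-switch percentage correct (illustrative sketch).

Assumption: each entry of `switch_trials` is the index of the first trial
under the new contingency, i.e. trial 0 in the figure.
"""


def peri_switch_accuracy(correct, switch_trials, window=10):
    """Percentage correct at each offset from -window to +window around switches."""
    curve = {}
    for offset in range(-window, window + 1):
        hits = [correct[s + offset] for s in switch_trials
                if 0 <= s + offset < len(correct)]
        if hits:  # skip offsets with no data in the session
            curve[offset] = 100.0 * sum(hits) / len(hits)
    return curve


if __name__ == "__main__":
    # Hypothetical session: an error on the first trial after each reversal.
    session = [True, True, True, False, True, True, True, False, True, True]
    print(peri_switch_accuracy(session, switch_trials=[3, 7], window=2))
```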

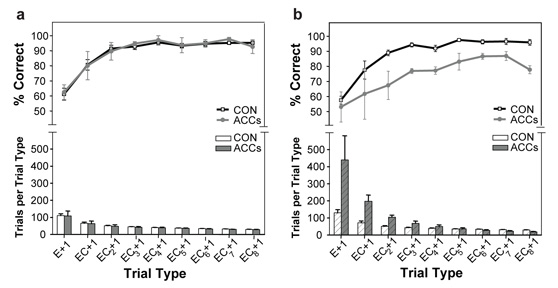

Although ACCs lesions did impair performance of the task, the lesioned animals were in fact no slower to switch between responses than control animals. Inspection of Figure 6b shows that the ACCs group were just as poor at choosing the rewarded action in the ten trials leading up to an imposed switch, by which time they had experienced more than 15 rewards for selecting the correct action, as in the ten trials afterwards. Interestingly, in spite of many months of training on this task, during which the failure to receive an expected reward always indicated that the other response was correct, control animals changed their response on the first trial after a switch on only approximately 60% of trials (E+1 trial, far left of Figure 7a). This suggests that errors and rewards do not naturally operate like the explicit sensory cues that instruct actions in a conditional learning paradigm; instead, to a foraging animal, a single negative outcome is a piece of evidence that the chosen response may no longer be the best course of action, which is weighed against the recent history of reinforcement. However, as evidence accumulates over subsequent trials that the alternative response does lead to reward, the likelihood of changing to the new movement increases (EC+1, EC+2, and so on, moving from left to right in Figure 7a). By contrast, while monkeys with ACCs lesions are just as likely as control animals to change their behaviour on the first trial after an imposed switch, they are subsequently unable to sustain the correct response, choosing the unrewarded action significantly more often than controls even after receiving as many as eight rewards in succession (Figure 7b). This suggests that, rather than reflecting an inability to detect that a response has been erroneous when reinforcement is withheld, the ACCs group's deficit could be better characterised as a failure to integrate reinforcement history over time to work out which response it is desirable to make.

Figure 7.

Pre- (A) and post-operative (B) performance of the control and ACCs-lesioned animals in sustaining rewarded behaviour following an error. Trial types are plotted along the x-axis, starting on the left with the trial immediately following an error (E+1). The next data point corresponds to the trial after one error and then one correct response (EC+1), the next to the trial after one error and then two correct responses (EC+2), and so on. Moving from left to right in each panel therefore corresponds to the animal acquiring more instances of positive reinforcement for the correct action after an earlier error. Each graph shows the percentage of trials of each type that were correct. Control and ACCs lesion data are shown by the black and grey lines, respectively. The histogram in the bottom part of each graph indicates the number of instances of each trial type. White and grey bars indicate the control and ACCs lesion data, respectively, while hatched bars indicate data from the post-operative session. Adapted from Kennerley et al. (2006) with permission.

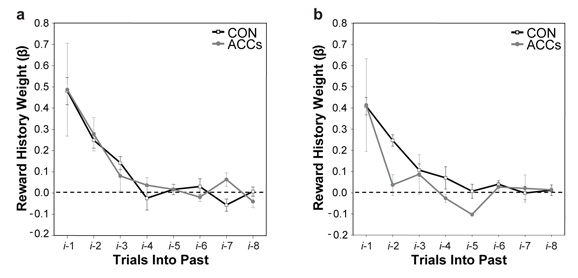
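The trial classification used in Figure 7 can be made concrete with a short sketch. Each trial is labelled by what preceded it: E+1 for the trial right after an error, and EC+n for a trial preceded by an error and then n consecutive correct responses; the percentage correct is then tallied per label. This assumes that, as in the joystick reversal task, "correct" and "rewarded" coincide; the input format and the function names (`label_trials`, `percent_correct_by_type`) are hypothetical and are not the analysis code of Kennerley et al. (2006).

```python
from collections import defaultdict


def label_trials(correct):
    """Label each trial by the run of outcomes that preceded it.

    correct: sequence of booleans (True = correct, i.e. rewarded).
    Returns "E+1" for the trial right after an error, "EC+n" for a trial
    preceded by an error and then n consecutive correct trials, and None
    for trials that occur before the first error.
    """
    labels = []
    streak = None  # correct trials since the most recent error; None = no error yet
    for outcome in correct:
        if streak is None:
            labels.append(None)
        elif streak == 0:
            labels.append("E+1")
        else:
            labels.append(f"EC+{streak}")
        # Update the running count using this trial's own outcome.
        if not outcome:
            streak = 0
        elif streak is not None:
            streak += 1
    return labels


def percent_correct_by_type(correct):
    """Map each trial type to (percentage correct, number of trials)."""
    totals, hits = defaultdict(int), defaultdict(int)
    for label, outcome in zip(label_trials(correct), correct):
        if label is not None:
            totals[label] += 1
            hits[label] += bool(outcome)
    return {lab: (100.0 * hits[lab] / totals[lab], totals[lab]) for lab in totals}


if __name__ == "__main__":
    session = [True, False, True, True, False, False, True]
    print(label_trials(session))  # [None, None, 'E+1', 'EC+1', 'EC+2', 'E+1', 'E+1']
    print(percent_correct_by_type(session))
```

The counts returned alongside each percentage correspond to the histograms at the bottom of each panel in Figure 7.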

Using logistic regression, it is possible to estimate how strongly the outcomes of previous trials influence the choice made on the current trial (Bayer and Glimcher, 2005; Lau and Glimcher, 2005). Figure 8 presents this type of analysis, plotting the regression coefficient for the outcome of each of the eight preceding trials (i-1, i-2, …, i-8) as a predictor of choice on the current trial (i). Although the influence of reward history waned as the separation between the current and previous trials increased, control animals still appeared to take into account what had happened up to four trials earlier. As predicted, however, post-operatively the ACCs-group's choices were guided only by the outcome of the immediately preceding trial and by none further in the past (Figure 8b).
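A multiple logistic regression of this kind can be sketched in a few lines. The sketch assumes a two-option task with choices coded 1 and 0, and one common way of coding reward history, similar in spirit to Lau and Glimcher (2005): for each lag k, the predictor is +1 if the trial k back was rewarded after choice 1, -1 if it was rewarded after choice 0, and 0 if it was unrewarded. The exact predictors used by Kennerley et al. (2006) may differ. The simulated learners (`simulate`), their weights, the reversal schedule and the Newton-Raphson fitting routine are all illustrative assumptions written with the Python standard library only; they are not the published analysis and say nothing about the monkeys' data.

```python
import math
import random

N_LAGS = 8  # number of past trials entered as predictors, as in Figure 8


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def simulate(n_trials, true_weights, p_good=0.75, p_bad=0.25, block=50, seed=1):
    """Hypothetical learner on a two-option reversal schedule.

    Choice (1 or 0) depends only on a weighted sum of past signed rewards:
    +1 = rewarded after choosing 1, -1 = rewarded after choosing 0, 0 = unrewarded.
    The richer option alternates every `block` trials.
    """
    rng = random.Random(seed)
    choices, signed = [], []
    for i in range(n_trials):
        richer = (i // block) % 2
        drive = sum(w * signed[i - k]
                    for k, w in enumerate(true_weights, start=1) if i >= k)
        c = 1 if rng.random() < sigmoid(drive) else 0
        rewarded = rng.random() < (p_good if c == richer else p_bad)
        choices.append(c)
        signed.append((1 if c == 1 else -1) if rewarded else 0)
    return choices, signed


def lagged_design(choices, signed, n_lags=N_LAGS):
    """Rows [1, s(i-1), ..., s(i-n_lags)] paired with the target choice(i)."""
    X = [[1.0] + [float(signed[i - k]) for k in range(1, n_lags + 1)]
         for i in range(n_lags, len(choices))]
    return X, choices[n_lags:]


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[r]] for r, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_logistic(X, y, max_iter=30, tol=1e-8):
    """Maximum-likelihood logistic regression by Newton-Raphson."""
    k = len(X[0])
    beta = [0.0] * k
    for _ in range(max_iter):
        grad = [0.0] * k                     # X^T (y - p)
        hess = [[0.0] * k for _ in range(k)]  # X^T W X, W = diag(p(1 - p))
        for xi, yi in zip(X, y):
            p = sigmoid(sum(b * v for b, v in zip(beta, xi)))
            w = p * (1.0 - p)
            for a in range(k):
                grad[a] += (yi - p) * xi[a]
                wa = w * xi[a]
                for c in range(k):
                    hess[a][c] += wa * xi[c]
        step = solve(hess, grad)
        beta = [b + s for b, s in zip(beta, step)]
        if max(abs(s) for s in step) < tol:
            break
    return beta  # beta[0] = intercept, beta[k] = weight of the outcome k trials back


if __name__ == "__main__":
    control_like = [1.5, 0.9, 0.5, 0.3, 0.0, 0.0, 0.0, 0.0]
    lesion_like = [1.5] + [0.0] * 7
    for name, w in (("control-like", control_like), ("lesion-like", lesion_like)):
        beta = fit_logistic(*lagged_design(*simulate(2000, w)))
        print(name, [round(b, 2) for b in beta[1:]])
```

With the control-like weights, the fitted lag coefficients should decline over the first four lags and sit near zero beyond them; with the lesion-like weights, only the lag-1 coefficient should stand clearly above zero. That pattern is built into the simulated learners, so the sketch only checks that the regression recovers it.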

Figure 8.

Estimates of the influence of previous reward history on current choice in the pre- (A) and post-operative (B) testing periods. Each point represents a regression coefficient derived from the multiple logistic regression of choice on the current trial (i) against the outcomes (rewarded or unrewarded) of the previous eight trials. The influence of the immediately preceding trial (i-1) is shown on the left-hand side of each panel, that of the trial before it (i-2) next, and so on up to the trial that occurred eight trials previously (i-8). Control and ACCs lesion data are shown by the black and grey lines, respectively. Adapted from Kennerley et al. (2006) with permission.

This description of ACCs function differs to some extent from accounts suggesting that this region signals alterations in outcome that in turn drive changes in behaviour (Bush, Vogt, Holmes, Dale, Greve, et al., 2002; Shima and Tanji, 1998), as the ACCs-lesioned animals appeared unimpaired at updating their choices. Such ideas are based in part on reports that muscimol injections into part of the ACCs caused difficulties on a reward-guided switching task in which animals learned to switch between two movements on the basis of a reduction in the amount of reward received (Shima and Tanji, 1998). However, although the authors stress that the monkeys were poor at using reward reductions to change their responses following inactivation of the ACCs, close inspection of the manuscript shows that these animals would also at times fail to persist with a rewarded response, prematurely switching away to the alternative movement, just like the monkeys with ACCs lesions in Kennerley and colleagues' study. This suggests that both deficits may be better explained as a failure to learn and/or maintain an ongoing long-term representation of the value of actions, rather than as one specifically concerned with behavioural alterations.

Adaptive decision making and learning the value of actions in the ACC

The vast majority of tasks used to study the function of the ACC have relied on situations with limited response options and a single, well-defined outcome. In the controlled, pre-programmed environment of the laboratory, it is straightforward for researchers to determine the correct response and to judge a participant's behaviour accordingly. All the studies described thus far in the present review have involved situations in which uncertainty is induced experimentally by switching the contingencies between actions and their consequences, which takes a step towards mimicking more realistically variable situations. However, in more naturalistic settings, desirable outcomes are often dynamically uncertain, altering as a function of the patterns of choices previously made both by you and by other organisms in the environment. For a foraging animal, it is important to decide when it is optimal to move on from a patch of food, as continued exploitation will over time deplete the resource, potentially making its payoff less valuable than other options (Charnov, 1976; Stephens and Krebs, 1986). Moreover, even having located a fruitful source of nourishment, it is still beneficial on occasion to continue to explore and accrue more information about other potential alternatives (e.g., Daw, O'Doherty, Dayan, Seymour and Dolan, 2006). If the role of the ACC is to integrate an animal's record of choices and outcomes into a representation of the value of available options, rather than just to switch between responses, detect errors or monitor response conflict, then animals with lesions to this region should be selectively impaired in situations that require them to use their reward history to guide action selection.

To investigate this hypothesis, Kennerley and colleagues (2006) used a discrete-trial version of the dynamic “matching” task, originally devised by Herrnstein (Herrnstein, Rachlin and Laibson, 1997) and used more recently by Sugrue and colleagues (Sugrue, Corrado and Newsome, 2004), which captures several of the key features faced by foraging animals described above. As in the previous joystick task, the monkeys chose between two movements on each trial, although now the contingencies never reversed within a testing session. There were, however, two further important differences from the joystick reversal task detailed in the previous section. First, rather than one of the two actions always being rewarded and the other never, each response was rewarded according to its own, unequally assigned probability. Importantly, rewards were allocated independently to each option, meaning that on any particular trial reward could be available whichever action the monkey chose, only for one of the two actions (a lift or a turn), or for neither (Figure 9a). If the animal chose a response that had been allocated a reward, it naturally obtained that reward. Second, as it was only possible to select one response on each trial, a reward once allocated to either a lift or a turn remained available until that action was selected. This means that an animal will not harvest the maximum amount of available food simply by working out which of the two options is richer and choosing it every time: the cumulative probability that the less profitable alternative holds a reward increases with every trial on which it is ignored, until its likelihood of offering reward actually exceeds that of the more profitable response.
Instead, to optimise foraging efficiency, the animals need to sample both options to develop a sense of the yield of each alternative, and to learn when and how often it is advantageous to switch away from the more profitable option to the one that normally yields a poorer rate of reward. In fact, in the particular version of the task used by Kennerley and colleagues (2006), rewards are obtained at the highest rate if the fraction of responses of a given type is equal to, or “matches”, the fraction of total rewards that are earned by making that response.
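Why always choosing the richer option is not optimal under this schedule can be illustrated with a small simulation. We assume that at the start of every trial each unbaited option independently becomes baited with its own probability, and that a baited option stays baited until it is chosen; the probability that an option has been baited by the n-th trial after it was last chosen is then 1 - (1 - p)^n. The 0.4:0.1 probabilities follow one of the conditions described below; the fixed-cycle policy `periodic` and its run lengths are hypothetical choices for illustration, not a model of the monkeys' strategy.

```python
import random


def run_session(policy, p=(0.4, 0.1), n_trials=10_000, seed=0):
    """Discrete-trial baited schedule with two options.

    At the start of each trial, each unbaited option becomes baited with
    probability p[k]; a baited option stays baited until it is chosen.
    policy(t) returns 0 (the richer option) or 1 (the leaner option).
    Returns (rewards per trial, fraction of choices of option 0,
    fraction of rewards earned from option 0).
    """
    rng = random.Random(seed)
    baited = [False, False]
    earned = [0, 0]
    n_choose_0 = 0
    for t in range(n_trials):
        for k in (0, 1):
            if not baited[k] and rng.random() < p[k]:
                baited[k] = True
        c = policy(t)
        n_choose_0 += (c == 0)
        if baited[c]:  # a waiting reward is collected only when its option is chosen
            earned[c] += 1
            baited[c] = False
    total = sum(earned)
    return (total / n_trials,
            n_choose_0 / n_trials,
            earned[0] / total if total else 0.0)


def always_richer(t):
    return 0


def periodic(run_length):
    """Hypothetical policy: choose option 0 run_length times, then option 1 once."""
    return lambda t: 1 if t % (run_length + 1) == run_length else 0


def p_baited_after(p, n):
    """Probability an option is baited by the n-th trial after it was last chosen."""
    return 1 - (1 - p) ** n


if __name__ == "__main__":
    print("P(leaner option baited), n = 1..7:",
          [round(p_baited_after(0.1, n), 3) for n in range(1, 8)])
    print("always richer:", run_session(always_richer))
    for r in (2, 5, 10):
        print(f"leaner option every {r + 1} trials:", run_session(periodic(r)))
```

Under these assumptions, by the fifth trial after it was last chosen the leaner option (p = 0.1) is more likely to hold a reward than the richer option is on any single trial (0.4). Always choosing the richer option earns 0.4 rewards per trial in expectation, whereas visiting the leaner option once every six trials earns roughly 0.45, and that policy's choice fraction lies close to the fraction of its rewards that come from the richer option, in line with the matching relation described above.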

Figure 9.

When reward on either option was delivered probabilistically (0.4:0.1; 0.5:0.2; 0.75:0.25), so that trial-by-trial reinforcement information had to be integrated, the ACCs-lesioned animals were significantly slower to approach the optimal response allocation rate (Figure 9b). However, when the outcome was deterministic (1:0), they performed comparably to the controls and were thus able to sustain a rewarded response when the contingencies did not switch. As in the previous experiment, impairments were not restricted to situations of non-reward or errors. It was possible to compare the likelihood of sustaining or switching responses as a function both of whether the previous choice had been the more or the less profitable option and of whether the animals had been rewarded for that choice. Following selection of the more profitable action, the monkeys in the ACCs-group were less likely than controls to continue choosing this response, whether it had been rewarded or not. The converse was true for the less profitable action: the ACCs-lesioned animals were more likely than controls to sustain this response, regardless of the outcome on the preceding trial. This adds further credence to the notion that the ACCs is crucial for allowing animals to integrate current reward information with the history of payoffs for that choice, so as to develop and maintain ongoing contextual representations of the value of actions.
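The comparison described above amounts to a 2 × 2 tabulation: for each combination of previous choice (more or less profitable option) and previous outcome (rewarded or not), how often the animal repeated that choice on the next trial. A minimal sketch, assuming each trial is recorded as a `(chose_richer, rewarded)` pair; the record format and the function name are hypothetical. Because the contingencies did not reverse within a session in this task, repeating the richer/leaner classification is the same as repeating the same action.

```python
from collections import Counter


def stay_probabilities(trials):
    """P(repeat previous choice | previous option type, previous outcome).

    trials: list of (chose_richer, rewarded) boolean pairs in trial order.
    Returns {(prev_chose_richer, prev_rewarded): proportion of stays}.
    """
    stays, counts = Counter(), Counter()
    for (prev_rich, prev_rew), (cur_rich, _) in zip(trials, trials[1:]):
        key = (prev_rich, prev_rew)
        counts[key] += 1
        stays[key] += (cur_rich == prev_rich)  # True counts as one stay
    return {key: stays[key] / counts[key] for key in counts}


if __name__ == "__main__":
    demo = [(True, True), (True, False), (False, True),
            (False, False), (True, True), (True, True)]
    print(stay_probabilities(demo))
```

On the description in the text, the lesioned animals would show lower stay proportions than controls in both "richer" cells and higher ones in both "leaner" cells.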

Such a finding concurs with a recent study showing that neurons in the ACCs appear to encode the average probabilistic value of available rewards (Amiez, Joseph and Procyk, 2006). Interestingly, in this study, in which monkeys had to learn which of two stimuli probabilistically associated with reward was the better choice, inactivation of part of the ACCs again impaired the use of reward information to guide behaviour. However, in contrast with the lack of persistence observed in the studies by Kennerley and colleagues (2006) and by Shima and Tanji (1998), here the monkeys tended to persist with the option selected on the first trial, regardless of whether it gave a large or small reward. One possible reason for this discrepancy is that, while the outcome on each trial was uncertain, the long-term values of the stimuli in the study by Amiez and colleagues remained stable. By contrast, both of the other studies involved long periods of training on a response switching task. While the ACCs may be vital for learning about the overall value of actions, other regions, such as parts of the ventromedial prefrontal cortex, appear also to encode higher-order strategies that help guide decisions (Hampton, Bossaerts and O'Doherty, 2006). Therefore, an inability to construct a history of recent reinforcement, common to all animals without a functioning ACCs, could manifest as different patterns of behavioural deficit depending on whether a representation of the requirement to change behaviour periodically is also present.

ACC and distributed circuits for the value of actions

One difficulty with interpretations of brain function derived from a number of neuroscientific techniques, including the lesion method, is that they tend to encourage a focus on single areas in isolation rather than on how a particular region works in concert with others to guide behaviour. As discussed earlier, the ACC and OFC have frequently been co-activated in neuroimaging studies of outcome monitoring, though the evidence from Walton and colleagues (2004) suggests that their contributions may be dissociated depending on whether choices are made voluntarily or through external guidance, a finding supported by the anatomical connections of these two regions (Croxson, Johansen-Berg, Behrens, Robson, Pinsk, et al., 2005).

The same issue arises with Kennerley and colleagues' (2006) conclusion that the ACCs is important for encoding the value of actions based on the recent history of responses and their outcomes. Cells in dorsolateral prefrontal cortex also seem to represent these factors (Barraclough, Conroy and Lee, 2004; Genovesio, Brasted, Mitz and Wise, 2005), and the activity of caudate neurons has been shown to vary as a function of the value of one of the available options (Samejima, Ueda, Doya and Kimura, 2005). Both of these regions share connections with parts of the ACCs (Bates and Goldman-Rakic, 1993; Hatanaka, Tokuno, Hamada, Inase, Ito, et al., 2003; Kunishio and Haber, 1994; Takada, Nambu, Hatanaka, Tachibana, Miyachi, et al., 2004; Takada, Tokuno, Hamada, Inase, Ito, et al., 2001). Detailed neurophysiological studies and computational modelling have shown that the firing of dopamine neurons is well predicted by theoretical descriptions of the reward prediction error signal used by reinforcement learning algorithms (Bayer and Glimcher, 2005; Doya, 2002; Montague, Dayan and Sejnowski, 1996; Schultz, 2002). These cells, which signal discrepancies between the current outcome (possibly only when it is better than expected; see Bayer and Glimcher, 2005) and a weighted average of previous rewards, project to both the ACC and OFC, and their signals could be used to guide reward seeking and choice (Berger, Trottier, Verney, Gaspar and Alvarez, 1988; Williams and Goldman-Rakic, 1998). The locus coeruleus, the source of cortical noradrenaline, receives direct input from both the ACC and OFC, and noradrenaline has also been implicated in helping animals either to engage with a particular behaviour or to search for a new mode of response (Aston-Jones and Cohen, 2005; Yu and Dayan, 2005). Other regions, such as posterior cingulate cortex and parts of parietal cortex, are also sensitive to reward probability and value (McCoy and Platt, 2005; Sugrue, Corrado and Newsome, 2004).
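A signal that compares the current outcome with a weighted average of previous rewards corresponds to the error term of a simple delta-rule learner. A minimal sketch, assuming a Rescorla-Wagner style update with a single learning rate `alpha` (the choice of this model, and of the value alpha = 0.5 used in the check, are illustrative assumptions rather than parameters from the cited studies): the prediction error is delta_t = r_t - V_t, and the estimate is updated as V_{t+1} = V_t + alpha * delta_t. Unrolling the update shows that V is an exponentially weighted average of past rewards, with weight alpha * (1 - alpha)^(k - 1) on the reward received k trials earlier, which the second function computes directly.

```python
def prediction_errors(rewards, alpha=0.3, v0=0.0):
    """Delta-rule value estimate and prediction error on each trial.

    delta_t = r_t - V_t;  V_{t+1} = V_t + alpha * delta_t
    Returns (prediction errors, value estimates V_t held before each outcome).
    """
    v = v0
    deltas, values = [], []
    for r in rewards:
        values.append(v)
        delta = r - v          # outcome minus the current weighted-average expectation
        deltas.append(delta)
        v += alpha * delta     # move the expectation a fraction alpha towards the outcome
    return deltas, values


def exp_weighted_average(rewards, alpha=0.3, v0=0.0):
    """The value after all rewards, written as an explicit exponentially weighted sum."""
    v = v0 * (1 - alpha) ** len(rewards)
    for k, r in enumerate(reversed(rewards), start=1):  # k = 1 is the most recent reward
        v += alpha * (1 - alpha) ** (k - 1) * r
    return v


if __name__ == "__main__":
    d, v = prediction_errors([1, 0, 1, 1], alpha=0.5)
    print(d, v)
```

A larger `alpha` weights recent outcomes more heavily and forgets older ones faster, which is one simple way of expressing how far back reward history influences the current estimate.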

It is also evident that actions seldom lead to outcomes without incurring some sort of response cost, whether in terms of the physical effort or time that must be invested or the level of risk to be tolerated. A number of studies have suggested that the ACC plays an important role in integrating anticipated costs with expected benefits to work out which of two alternatives is worth pursuing, and there is recent intriguing evidence for specialisation in this process depending on the nature of the cost to be overcome (Rudebeck, Walton, Smyth, Bannerman and Rushworth, in press; Walton, Bannerman, Alterescu and Rushworth, 2003; Walton, Bannerman and Rushworth, 2002).

An important task for future studies is to dissociate the role of the ACC in learning the value of actions from that of these other interconnected regions, and to discover how response costs are incorporated within such a representation. There is increasing evidence, for instance, that while the ACC appears to play a direct role in deciding which response to make, the prediction error signal encoded by dopamine neurons is not directly related to action selection (Bayer and Glimcher, 2005; Morris, Nevet, Arkadir, Vaadia and Bergman, 2006). It will be imperative to investigate, amongst other things, whether the ACCs encodes a combination of reward and previous choice history or just one of the two factors in isolation, and how the type of environment influences the calculation of value. While there is now a large body of research showing that the ACC uses all types of reinforcement information, rewards as well as errors, to build up a sense of action value, it is not clear how this region assigns importance to individual pieces of information. Intuitively, reward information is likely to be more valuable in a reward-sparse environment, and the ACC may play an important role in learning the average reward rate for the available options (see also Amiez, Joseph and Procyk, 2006). Similarly, the significance of information will also be affected by how changeable the local conditions are, which may also be encoded in the ACC.

Conclusion

Deciding which action is the most desirable or advantageous in a variable world of numerous competing agents is a challenging problem that has exercised economists and behavioural ecologists for many years. Recently, neuroscientists have also been approaching this topic, moving away from tasks in which a single option is always categorically correct to ones in which contingencies change, outcomes are uncertain and the likelihood of success depends on the results of choices made in the past. Approaching the study of the ACC from this perspective has resulted in novel ways of considering the function of this region. As suggested by its anatomical position, receiving information from limbic and prefrontal regions as well as having direct access to the motor system, the ACC does seem to play an important role both in the generation of voluntary choices and in monitoring their outcomes, positive or negative, particularly at times of uncertainty when the environment is changing and the connection between actions and their consequences can no longer be relied upon. Rather than responding to the outcome of a single choice in isolation, however, the ACC, and particularly the ACCs, is a crucial component of a distributed system that integrates current information with an extended history of reward. Such a dynamic and rich representation of action value would be vital for guiding adaptive decision making.

Acknowledgements

This work was supported by the Medical Research Council, the Royal Society (MFSR) and the Wellcome Trust (MEW, PLC).

References

- Allport DA, Styles EA, Hsieh S. Shifting intentional set: Exploring the dynamic control of tasks. In: Umilta C, editor. Attention and performance series. The MIT Press; Cambridge, MA, US: 1994.

- Amiez C, Joseph JP, Procyk E. Anterior cingulate error-related activity is modulated by predicted reward. Eur J Neurosci. 2005;21:3447–3452. doi: 10.1111/j.1460-9568.2005.04170.x.

- Amiez C, Joseph JP, Procyk E. Reward encoding in the monkey anterior cingulate cortex. Cereb Cortex. 2006;16:1040–1055. doi: 10.1093/cercor/bhj046.

- Aston-Jones G, Cohen JD. An integrative theory of locus coeruleus-norepinephrine function: Adaptive gain and optimal performance. Annu Rev Neurosci. 2005;28:403–450. doi: 10.1146/annurev.neuro.28.061604.135709.

- Balleine BW, Dickinson A. Goal-directed instrumental action: Contingency and incentive learning and their cortical substrates. Neuropharmacology. 1998;37:407–419. doi: 10.1016/s0028-3908(98)00033-1.

- Barraclough DJ, Conroy ML, Lee D. Prefrontal cortex and decision making in a mixed-strategy game. Nat Neurosci. 2004;7:404–410. doi: 10.1038/nn1209.

- Bates JF, Goldman-Rakic PS. Prefrontal connections of medial motor areas in the rhesus monkey. J Comp Neurol. 1993;336:211–228. doi: 10.1002/cne.903360205.

- Baxter MG, Murray EA. The amygdala and reward. Nat Rev Neurosci. 2002;3:563–573. doi: 10.1038/nrn875.

- Bayer HM, Glimcher PW. Midbrain dopamine neurons encode a quantitative reward prediction error signal. Neuron. 2005;47:129–141. doi: 10.1016/j.neuron.2005.05.020.

- Bechara A, Damasio H, Damasio AR. Emotion, decision making and the orbitofrontal cortex. Cereb Cortex. 2000;10:295–307. doi: 10.1093/cercor/10.3.295.

- Behrens TE, Johansen-Berg H, Woolrich MW, Smith SM, Wheeler-Kingshott CA, et al. Non-invasive mapping of connections between human thalamus and cortex using diffusion imaging. Nat Neurosci. 2003;6:750–757. doi: 10.1038/nn1075.

- Behrens TE, Woolrich MW, Jenkinson M, Johansen-Berg H, Nunes RG, et al. Characterization and propagation of uncertainty in diffusion-weighted MR imaging. Magn Reson Med. 2003;50:1077–1088. doi: 10.1002/mrm.10609.

- Berger B, Trottier S, Verney C, Gaspar P, Alvarez C. Regional and laminar distribution of the dopamine and serotonin innervation in the macaque cerebral cortex: A radioautographic study. J Comp Neurol. 1988;273:99–119. doi: 10.1002/cne.902730109.

- Berns GS, McClure SM, Pagnoni G, Montague PR. Predictability modulates human brain response to reward. J Neurosci. 2001;21:2793–2798. doi: 10.1523/JNEUROSCI.21-08-02793.2001.

- Botvinick MM, Braver TS, Barch DM, Carter CS, Cohen JD. Conflict monitoring and cognitive control. Psychol Rev. 2001;108:624–652. doi: 10.1037/0033-295x.108.3.624.

- Botvinick MM, Cohen JD, Carter CS. Conflict monitoring and anterior cingulate cortex: An update. Trends Cogn Sci. 2004;8:539–546. doi: 10.1016/j.tics.2004.10.003.

- Braak H. A primitive gigantopyramidal field buried in the depth of the cingulate sulcus of the human brain. Brain Res. 1976;109:219–223. doi: 10.1016/0006-8993(76)90526-6.

- Brown JW, Braver TS. Learned predictions of error likelihood in the anterior cingulate cortex. Science. 2005;307:1118–1121. doi: 10.1126/science.1105783.

- Bunge SA. How we use rules to select actions: A review of evidence from cognitive neuroscience. Cogn Affect Behav Neurosci. 2004;4:564–579. doi: 10.3758/cabn.4.4.564.

- Bush G, Luu P, Posner MI. Cognitive and emotional influences in anterior cingulate cortex. Trends Cogn Sci. 2000;4:215–222. doi: 10.1016/s1364-6613(00)01483-2.

- Bush G, Vogt BA, Holmes J, Dale AM, Greve D, et al. Dorsal anterior cingulate cortex: A role in reward-based decision making. Proc Natl Acad Sci U S A. 2002;99:523–528. doi: 10.1073/pnas.012470999.

- Charnov EL. Optimal foraging: The marginal value theorem. Theor Pop Biol. 1976;9:129–136. doi: 10.1016/0040-5809(76)90040-x.

- Cohen MX, Heller AS, Ranganath C. Functional connectivity with anterior cingulate and orbitofrontal cortices during decision-making. Brain Res Cogn Brain Res. 2005;23:61–70. doi: 10.1016/j.cogbrainres.2005.01.010.

- Coricelli G, Critchley HD, Joffily M, O'Doherty JP, Sirigu A, et al. Regret and its avoidance: A neuroimaging study of choice behavior. Nat Neurosci. 2005;8:1255–1262. doi: 10.1038/nn1514.

- Critchley HD. Neural mechanisms of autonomic, affective, and cognitive integration. J Comp Neurol. 2005;493:154–166. doi: 10.1002/cne.20749.

- Crone EA, Wendelken C, Donohue SE, Bunge SA. Neural evidence for dissociable components of task-switching. Cereb Cortex. 2006;16:475–486. doi: 10.1093/cercor/bhi127.

- Croxson PL, Johansen-Berg H, Behrens TE, Robson MD, Pinsk MA, et al. Quantitative investigation of connections of the prefrontal cortex in the human and macaque using probabilistic diffusion tractography. J Neurosci. 2005;25:8854–8866. doi: 10.1523/JNEUROSCI.1311-05.2005.

- Daw ND, O'Doherty JP, Dayan P, Seymour B, Dolan RJ. Cortical substrates for exploratory decisions in humans. Nature. 2006;441:876–879. doi: 10.1038/nature04766.

- Dove A, Pollmann S, Schubert T, Wiggins CJ, von Cramon DY. Prefrontal cortex activation in task switching: An event-related fMRI study. Brain Res Cogn Brain Res. 2000;9:103–109. doi: 10.1016/s0926-6410(99)00029-4.

- Doya K. Metalearning and neuromodulation. Neural Netw. 2002;15:495–506. doi: 10.1016/s0893-6080(02)00044-8.

- Duncan J, Owen AM. Common regions of the human frontal lobe recruited by diverse cognitive demands. Trends Neurosci. 2000;23:475–483. doi: 10.1016/s0166-2236(00)01633-7.

- Elliott R, Friston KJ, Dolan RJ. Dissociable neural responses in human reward systems. J Neurosci. 2000;20:6159–6165. doi: 10.1523/JNEUROSCI.20-16-06159.2000.

- Ernst M, Paulus MP. Neurobiology of decision making: A selective review from a neurocognitive and clinical perspective. Biol Psychiatry. 2005;58:597–604. doi: 10.1016/j.biopsych.2005.06.004.

- Fellows LK, Farah MJ. Is anterior cingulate cortex necessary for cognitive control? Brain. 2005;128:788–796. doi: 10.1093/brain/awh405.

- Frith CD, Friston K, Liddle PF, Frackowiak RS. Willed action and the prefrontal cortex in man: A study with PET. Proc R Soc Lond B Biol Sci. 1991;244:241–246. doi: 10.1098/rspb.1991.0077.

- Genovesio A, Brasted PJ, Mitz AR, Wise SP. Prefrontal cortex activity related to abstract response strategies. Neuron. 2005;47:307–320. doi: 10.1016/j.neuron.2005.06.006.

- Hadland KA, Rushworth MF, Gaffan D, Passingham RE. The anterior cingulate and reward-guided selection of actions. J Neurophysiol. 2003;89:1161–1164. doi: 10.1152/jn.00634.2002.

- Hagmann P, Thiran JP, Jonasson L, Vandergheynst P, Clarke S, et al. DTI mapping of human brain connectivity: Statistical fibre tracking and virtual dissection. Neuroimage. 2003;19:545–554. doi: 10.1016/s1053-8119(03)00142-3.

- Hampton AN, Bossaerts P, O'Doherty JP. The role of the ventromedial prefrontal cortex in abstract state-based inference during decision making in humans. J Neurosci. 2006;26:8360–8367. doi: 10.1523/JNEUROSCI.1010-06.2006.

- Hatanaka N, Tokuno H, Hamada I, Inase M, Ito Y, et al. Thalamocortical and intracortical connections of monkey cingulate motor areas. J Comp Neurol. 2003;462:121–138. doi: 10.1002/cne.10720.

- He SQ, Dum RP, Strick PL. Topographic organization of corticospinal projections from the frontal lobe: Motor areas on the medial surface of the hemisphere. J Neurosci. 1995;15:3284–3306. doi: 10.1523/JNEUROSCI.15-05-03284.1995.

- Herrnstein RJ, Rachlin H, Laibson DI. The matching law: Papers in psychology and economics. 1997.

- Hikosaka K, Watanabe M. Delay activity of orbital and lateral prefrontal neurons of the monkey varying with different rewards. Cereb Cortex. 2000;10:263–271. doi: 10.1093/cercor/10.3.263.

- Holroyd CB, Coles MG. The neural basis of human error processing: Reinforcement learning, dopamine, and the error-related negativity. Psychol Rev. 2002;109:679–709. doi: 10.1037/0033-295X.109.4.679.

- Ito S, Stuphorn V, Brown JW, Schall JD. Performance monitoring by the anterior cingulate cortex during saccade countermanding. Science. 2003;302:120–122. doi: 10.1126/science.1087847.

- Izquierdo A, Suda RK, Murray EA. Comparison of the effects of bilateral orbital prefrontal cortex lesions and amygdala lesions on emotional responses in rhesus monkeys. J Neurosci. 2005;25:8534–8542. doi: 10.1523/JNEUROSCI.1232-05.2005.

- Jahanshahi M, Jenkins IH, Brown RG, Marsden CD, Passingham RE, et al. Self-initiated versus externally triggered movements. I. An investigation using measurement of regional cerebral blood flow with PET and movement-related potentials in normal and Parkinson's disease subjects. Brain. 1995;118(Pt 4):913–933. doi: 10.1093/brain/118.4.913.

- Jones B, Mishkin M. Limbic lesions and the problem of stimulus-reinforcement associations. Exp Neurol. 1972;36:362–377. doi: 10.1016/0014-4886(72)90030-1.

- Kennerley SW, Walton ME, Behrens TE, Buckley MJ, Rushworth MF. Optimal decision making and the anterior cingulate cortex. Nat Neurosci. 2006;9:940–947. doi: 10.1038/nn1724.

- Kremer S, Chassagnon S, Hoffmann D, Benabid AL, Kahane P. The cingulate hidden hand. J Neurol Neurosurg Psychiatry. 2001;70:264–265. doi: 10.1136/jnnp.70.2.264.

- Kunishio K, Haber SN. Primate cingulostriatal projection: Limbic striatal versus sensorimotor striatal input. J Comp Neurol. 1994;350:337–356. doi: 10.1002/cne.903500302.

- Lau B, Glimcher PW. Dynamic response-by-response models of matching behavior in rhesus monkeys. J Exp Anal Behav. 2005;84:555–579. doi: 10.1901/jeab.2005.110-04.

- Lau HC, Rogers RD, Ramnani N, Passingham RE. Willed action and attention to the selection of action. Neuroimage. 2004;21:1407–1415. doi: 10.1016/j.neuroimage.2003.10.034.

- Liston C, Matalon S, Hare TA, Davidson MC, Casey BJ. Anterior cingulate and posterior parietal cortices are sensitive to dissociable forms of conflict in a task-switching paradigm. Neuron. 2006;50:643–653. doi: 10.1016/j.neuron.2006.04.015.

- Luppino G, Matelli M, Camarda R, Rizzolatti G. Corticospinal projections from mesial frontal and cingulate areas in the monkey. Neuroreport. 1994;5:2545–2548. doi: 10.1097/00001756-199412000-00035.

- Matelli M, Luppino G, Rizzolatti G. Architecture of superior and mesial area 6 and the adjacent cingulate cortex in the macaque monkey. J Comp Neurol. 1991;311:445–462. doi: 10.1002/cne.903110402.

- Matsumoto K, Suzuki W, Tanaka K. Neuronal correlates of goal-based motor selection in the prefrontal cortex. Science. 2003;301:229–232. doi: 10.1126/science.1084204.

- McCoy AN, Platt ML. Risk-sensitive neurons in macaque posterior cingulate cortex. Nat Neurosci. 2005;8:1220–1227. doi: 10.1038/nn1523.

- Montague PR, Dayan P, Sejnowski TJ. A framework for mesencephalic dopamine systems based on predictive Hebbian learning. J Neurosci. 1996;16:1936–1947. doi: 10.1523/JNEUROSCI.16-05-01936.1996.

- Montague PR, Hyman SE, Cohen JD. Computational roles for dopamine in behavioural control. Nature. 2004;431:760–767. doi: 10.1038/nature03015.

- Morris G, Nevet A, Arkadir D, Vaadia E, Bergman H. Midbrain dopamine neurons encode decisions for future action. Nat Neurosci. 2006;9:1057–1063. doi: 10.1038/nn1743.

- Niki H, Watanabe M. Prefrontal and cingulate unit activity during timing behavior in the monkey. Brain Res. 1979;171:213–224. doi: 10.1016/0006-8993(79)90328-7. [DOI] [PubMed] [Google Scholar]

- Nobre AC, Coull JT, Frith CD, Mesulam MM. Orbitofrontal cortex is activated during breaches of expectation in tasks of visual attention. Nat Neurosci. 1999;2:11–12. doi: 10.1038/4513. [DOI] [PubMed] [Google Scholar]

- O'Doherty J, Critchley H, Deichmann R, Dolan RJ. Dissociating valence of outcome from behavioral control in human orbital and ventral prefrontal cortices. J Neurosci. 2003;23:7931–7939. doi: 10.1523/JNEUROSCI.23-21-07931.2003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- O'Doherty JP. Reward representations and reward-related learning in the human brain: Insights from neuroimaging. Curr Opin Neurobiol. 2004;14:769–776. doi: 10.1016/j.conb.2004.10.016. [DOI] [PubMed] [Google Scholar]

- O'Doherty JP, Dayan P, Friston K, Critchley H, Dolan RJ. Temporal difference models and reward-related learning in the human brain. Neuron. 2003;38:329–337. doi: 10.1016/s0896-6273(03)00169-7.

- Ongur D, Ferry AT, Price JL. Architectonic subdivision of the human orbital and medial prefrontal cortex. J Comp Neurol. 2003;460:425–449. doi: 10.1002/cne.10609.

- Padoa-Schioppa C, Assad JA. Neurons in the orbitofrontal cortex encode economic value. Nature. 2006;441:223–226. doi: 10.1038/nature04676.

- Passingham RE, Stephan KE, Kotter R. The anatomical basis of functional localization in the cortex. Nat Rev Neurosci. 2002;3:606–616. doi: 10.1038/nrn893.

- Paus T. Primate anterior cingulate cortex: Where motor control, drive and cognition interface. Nat Rev Neurosci. 2001;2:417–424. doi: 10.1038/35077500.

- Paus T, Koski L, Caramanos Z, Westbury C. Regional differences in the effects of task difficulty and motor output on blood flow response in the human anterior cingulate cortex: A review of 107 PET activation studies. Neuroreport. 1998;9:R37–47. doi: 10.1097/00001756-199806220-00001.

- Petrides M, Alivisatos B, Frey S. Differential activation of the human orbital, mid-ventrolateral, and mid-dorsolateral prefrontal cortex during the processing of visual stimuli. Proc Natl Acad Sci U S A. 2002;99:5649–5654. doi: 10.1073/pnas.072092299.

- Petrides M, Pandya DN. Comparative cytoarchitectonic analysis of the human and the macaque ventrolateral prefrontal cortex and corticocortical connection patterns in the monkey. Eur J Neurosci. 2002;16:291–310. doi: 10.1046/j.1460-9568.2001.02090.x.

- Picard N, Strick PL. Motor areas of the medial wall: A review of their location and functional activation. Cereb Cortex. 1996;6:342–353. doi: 10.1093/cercor/6.3.342.

- Picard N, Strick PL. Imaging the premotor areas. Curr Opin Neurobiol. 2001;11:663–672. doi: 10.1016/s0959-4388(01)00266-5.

- Procyk E, Tanaka YL, Joseph JP. Anterior cingulate activity during routine and non-routine sequential behaviors in macaques. Nat Neurosci. 2000;3:502–508. doi: 10.1038/74880.

- Ridderinkhof KR, Ullsperger M, Crone EA, Nieuwenhuis S. The role of the medial frontal cortex in cognitive control. Science. 2004;306:443–447. doi: 10.1126/science.1100301.

- Roesch MR, Olson CR. Neuronal activity related to reward value and motivation in primate frontal cortex. Science. 2004;304:307–310. doi: 10.1126/science.1093223.

- Rogers RD, Everitt BJ, Baldacchino A, Blackshaw AJ, Swainson R, et al. Dissociable deficits in the decision-making cognition of chronic amphetamine abusers, opiate abusers, patients with focal damage to prefrontal cortex, and tryptophan-depleted normal volunteers: Evidence for monoaminergic mechanisms. Neuropsychopharmacology. 1999;20:322–339. doi: 10.1016/S0893-133X(98)00091-8.

- Rudebeck PH, Walton ME, Smyth AN, Bannerman DM, Rushworth MF. Separate neural pathways process different decision costs. Nat Neurosci. In press. doi: 10.1038/nn1756.

- Rushworth MF, Hadland KA, Gaffan D, Passingham RE. The effect of cingulate cortex lesions on task switching and working memory. J Cogn Neurosci. 2003;15:338–353. doi: 10.1162/089892903321593072.

- Rushworth MF, Hadland KA, Paus T, Sipila PK. Role of the human medial frontal cortex in task switching: A combined fMRI and TMS study. J Neurophysiol. 2002;87:2577–2592. doi: 10.1152/jn.2002.87.5.2577.

- Rushworth MF, Walton ME, Kennerley SW, Bannerman DM. Action sets and decisions in the medial frontal cortex. Trends Cogn Sci. 2004;8:410–417. doi: 10.1016/j.tics.2004.07.009.

- Samejima K, Ueda Y, Doya K, Kimura M. Representation of action-specific reward values in the striatum. Science. 2005;310:1337–1340. doi: 10.1126/science.1115270.

- Schoenbaum G, Chiba AA, Gallagher M. Orbitofrontal cortex and basolateral amygdala encode expected outcomes during learning. Nat Neurosci. 1998;1:155–159. doi: 10.1038/407.

- Schoenbaum G, Setlow B, Nugent SL, Saddoris MP, Gallagher M. Lesions of orbitofrontal cortex and basolateral amygdala complex disrupt acquisition of odor-guided discriminations and reversals. Learn Mem. 2003;10:129–140. doi: 10.1101/lm.55203.

- Schultz W. Getting formal with dopamine and reward. Neuron. 2002;36:241–263. doi: 10.1016/s0896-6273(02)00967-4.

- Shima K, Tanji J. Role for cingulate motor area cells in voluntary movement selection based on reward. Science. 1998;282:1335–1338. doi: 10.1126/science.282.5392.1335.

- Stephens DW, Krebs JR. Foraging theory. Princeton University Press; Princeton: 1986.

- Sugrue LP, Corrado GS, Newsome WT. Matching behavior and the representation of value in the parietal cortex. Science. 2004;304:1782–1787. doi: 10.1126/science.1094765.

- Swick D, Turken AU. Dissociation between conflict detection and error monitoring in the human anterior cingulate cortex. Proc Natl Acad Sci U S A. 2002;99:16354–16359. doi: 10.1073/pnas.252521499.

- Takada M, Nambu A, Hatanaka N, Tachibana Y, Miyachi S, et al. Organization of prefrontal outflow toward frontal motor-related areas in macaque monkeys. Eur J Neurosci. 2004;19:3328–3342. doi: 10.1111/j.0953-816X.2004.03425.x.

- Takada M, Tokuno H, Hamada I, Inase M, Ito Y, et al. Organization of inputs from cingulate motor areas to basal ganglia in macaque monkey. Eur J Neurosci. 2001;14:1633–1650. doi: 10.1046/j.0953-816x.2001.01789.x.

- Thaler D, Chen YC, Nixon PD, Stern CE, Passingham RE. The functions of the medial premotor cortex. I. Simple learned movements. Exp Brain Res. 1995;102:445–460. doi: 10.1007/BF00230649.

- Tournier JD, Calamante F, Gadian DG, Connelly A. Diffusion-weighted magnetic resonance imaging fibre tracking using a front evolution algorithm. Neuroimage. 2003;20:276–288. doi: 10.1016/s1053-8119(03)00236-2.

- Tremblay L, Schultz W. Relative reward preference in primate orbitofrontal cortex. Nature. 1999;398:704–708. doi: 10.1038/19525.

- Tricomi EM, Delgado MR, Fiez JA. Modulation of caudate activity by action contingency. Neuron. 2004;41:281–292. doi: 10.1016/s0896-6273(03)00848-1.

- Ullsperger M, von Cramon DY. Error monitoring using external feedback: Specific roles of the habenular complex, the reward system, and the cingulate motor area revealed by functional magnetic resonance imaging. J Neurosci. 2003;23:4308–4314. doi: 10.1523/JNEUROSCI.23-10-04308.2003.

- Ungerleider LG, Gaffan D, Pelak VS. Projections from inferior temporal cortex to prefrontal cortex via the uncinate fascicle in rhesus monkeys. Exp Brain Res. 1989;76:473–484. doi: 10.1007/BF00248903.

- Vogt BA, Nimchinsky EA, Vogt LJ, Hof PR. Human cingulate cortex: Surface features, flat maps, and cytoarchitecture. J Comp Neurol. 1995;359:490–506. doi: 10.1002/cne.903590310.

- Wallis JD, Miller EK. Neuronal activity in primate dorsolateral and orbital prefrontal cortex during performance of a reward preference task. Eur J Neurosci. 2003;18:2069–2081. doi: 10.1046/j.1460-9568.2003.02922.x.

- Walton ME, Bannerman DM, Alterescu K, Rushworth MF. Functional specialization within medial frontal cortex of the anterior cingulate for evaluating effort-related decisions. J Neurosci. 2003;23:6475–6479. doi: 10.1523/JNEUROSCI.23-16-06475.2003.

- Walton ME, Bannerman DM, Rushworth MF. The role of rat medial frontal cortex in effort-based decision making. J Neurosci. 2002;22:10996–11003. doi: 10.1523/JNEUROSCI.22-24-10996.2002.

- Walton ME, Devlin JT, Rushworth MF. Interactions between decision making and performance monitoring within prefrontal cortex. Nat Neurosci. 2004;7:1259–1265. doi: 10.1038/nn1339.

- Wang Y, Shima K, Sawamura H, Tanji J. Spatial distribution of cingulate cells projecting to the primary, supplementary, and pre-supplementary motor areas: A retrograde multiple labeling study in the macaque monkey. Neurosci Res. 2001;39:39–49. doi: 10.1016/s0168-0102(00)00198-x.

- Williams SM, Goldman-Rakic PS. Widespread origin of the primate mesofrontal dopamine system. Cereb Cortex. 1998;8:321–345. doi: 10.1093/cercor/8.4.321.

- Yu AJ, Dayan P. Uncertainty, neuromodulation, and attention. Neuron. 2005;46:681–692. doi: 10.1016/j.neuron.2005.04.026.

- Zilles K, Schlaug G, Geyer S, Luppino G, Matelli M, et al. Anatomy and transmitter receptors of the supplementary motor areas in the human and nonhuman primate brain. In: Luders HO, editor. Supplementary sensorimotor area. Lippincott-Raven; Philadelphia: 1996. pp. 29–43.