Abstract

Adult-like attentional biases toward fearful faces can be observed in 7-month-old infants. It is possible, however, that infants merely allocate attention to simple features such as enlarged fearful eyes. In the present study, 7-month-old infants (n = 15) were first shown individual emotional faces to determine their visual scanning patterns for each expression. Second, an overlap task was used to examine the latency of attention disengagement from centrally presented faces. In both tasks, the stimuli were fearful, happy, and neutral facial expressions, and a neutral face with fearful eyes. Eye tracking data from the first task showed that infants scanned the eyes more than other regions of the face; however, there were no differences in scanning patterns across expressions. In the overlap task, infants were slower to disengage attention from fearful faces than from happy faces, neutral faces, and neutral faces with fearful eyes. Together, these results provide evidence that threat-related stimuli tend to hold attention preferentially in 7-month-old infants and that the effect does not reflect a simple response to the differentially salient eyes of fearful faces.

Keywords: Facial expression, Attention, Emotion, Eye tracking, Infant

Salient emotional and social cues exert prominent influences on perception and attention. In particular, facial signals of threat, such as angry and fearful faces, engage brain systems (e.g., the amygdala) involved in generating increased vigilance and enhanced perceptual and attentional functioning (Phelps, 2006). When different stimuli compete for attentional resources, attention has been shown to be preferentially allocated to fearful over simultaneously presented neutral faces (Holmes, Green, & Vuilleumier, 2005; Pourtois, Grandjean, Sander, & Vuilleumier, 2004). Neuroimaging and event-related brain potential (ERP) measurements have shown enhanced neural responses to fearful faces in extrastriate visual processing areas (e.g., Leppänen, Hietanen, & Koskinen, 2008; Morris et al., 1998). It has been suggested that modulation of cortical sensory excitability by the amygdala may partly account for the enhanced processing of fearful faces (Vuilleumier, 2005). The amygdala may respond differentially to changes in some relatively simple facial features that are “diagnostic” of threat, which may account for the findings of enhanced amygdala activation to fearful eyes presented in isolation (Whalen et al., 2004) or embedded in a neutral face (Morris, deBonis, & Dolan, 2002). Moreover, fearful eyes alone have been shown to be sufficient for enhanced detection of fearful faces in rapidly changing visual displays (Yang, Zald, & Blake, 2007). Visual information around the eyes is processed first when identifying facial expressions (Schyns, Petro, & Smith, 2007), and the general tendency to direct attention to the eyes in a face depends on an intact amygdala (Adolphs et al., 2005). These findings point to a crucial role for the enlarged eyes of fearful faces as a simple diagnostic feature that activates emotion-related brain systems and enables rapid identification of faces as fearful.

Studies investigating the ontogeny of emotional face processing suggest that the attentional bias toward fearful facial expressions may develop by the second half of the first year. Seven-month-old infants spontaneously look longer at fearful faces when they are presented simultaneously with happy faces (Kotsoni, de Haan, & Johnson, 2001; Leppänen, Moulson, Vogel-Farley, & Nelson, 2007; Nelson & Dolgin, 1985; Peltola, Leppänen, Mäki, & Hietanen, in press). In ERP measurements, a negative deflection at frontocentral recording sites approximately 400 ms after stimulus onset has been shown to be larger for fearful than for happy faces in 7-month-olds (Leppänen et al., 2007; Nelson & de Haan, 1996; Peltola et al., in press). This ‘Negative central’ (Nc) component is considered to reflect the strength of the attentional orienting response to salient, meaningful, and, in visual memory paradigms, infrequent stimuli (see de Haan, 2007, for a review).

It has further been shown that infants’ looking-time bias toward fearful faces may reflect an influence of fearful expressions on the disengagement of attention. In spatial orienting, disengagement is considered the process by which the focus of attention is terminated at its current location in order to shift attention to a new location (Posner & Petersen, 1990). The ability to disengage attention from stimuli under foveal vision is well developed by 6 months of age (Johnson, 2005a). To examine whether fearful facial expressions affect attentional disengagement in infants, we (Peltola, Leppänen, Palokangas, & Hietanen, 2008) employed a gap/overlap task in which the infant was first presented with a face stimulus in the center of the screen, followed by a peripheral target stimulus (i.e., a checkerboard pattern) acting as an exogenous cue to orient attention to its location. On overlap trials, the face remained on the screen during target presentation. Thus, the infant had to disengage his/her attention from the face in order to shift attention to the target (cf. Colombo, 2001). We found that infants made fewer saccades toward the targets when the central stimulus was a fearful face than when it was a happy face or a matched visual noise control stimulus. Thus, infants showed an increased tendency to remain fixated on fearful faces, consistent with evidence from adult studies that attention tends to be held longer by fearful facial expressions (Georgiou et al., 2005).

In the present study, we aimed to extend the findings of Peltola et al. (2008) and to investigate the as yet unstudied role of enlarged eyes in infants’ processing of fearful faces. Because it has been suggested that adults’ enhanced processing of fearful faces may be due merely to the detection of fearful eyes (e.g., Yang et al., 2007), our main objective was to determine whether fearful eyes alone are already sufficient in infancy to bias attention preferentially toward fearful faces. Previous research has shown that infants are sensitive to visual information around the eyes from early in development, and it has been suggested that fearful faces may provide optimal stimuli for activating the amygdala in early infancy (Johnson, 2005b). To this end, 7-month-old infants were first presented with a visual scanning task to analyze their scanning patterns for emotional faces. Second, an overlap task was presented to examine the latency of attention disengagement from emotional faces. In addition to fearful, happy, and neutral facial expression stimuli, we included a neutral face with the eyes taken from a fearful face. We hypothesized that if the increased allocation of attention to fearful faces in infants is based on enlarged eyes, the infants should (a) direct their visual scanning more toward the eyes of both fearful faces and neutral faces with fearful eyes than toward the eyes of happy and neutral faces in the scanning task, and (b) exhibit delayed disengagement of attention from both fearful faces and neutral faces with fearful eyes in the overlap task.

Methods

Participants

The final sample consisted of fifteen 7-month-old infants (mean age = 212 days; SD = 5.2). All infants were born full term (37–42 weeks), had a birth weight above 2400 g, and had no history of visual or neurological abnormalities. The participants came predominantly from urban, middle-class Caucasian families and were recruited from an existing list of parents who had volunteered for research after being contacted by letter following the birth of their child. An additional thirteen 7-month-olds were tested but excluded from the analyses because of prematurity (n = 3), poor eye tracker calibration (n = 3), fussiness (n = 2), or excessive movement artifacts resulting in fewer than 5 good trials in one or more stimulus conditions of the overlap task (n = 5). Approval for the project was obtained from the Institutional Review Board of Children’s Hospital Boston.

Stimuli and Apparatus

The face stimuli were color images of four female models posing fearful, happy, and neutral facial expressions, together with a neutral face bearing the eyes of a fearful face. For this stimulus, only the eyes and eyelids of the fearful face (not the eyebrows) were pasted over the neutral eyes, and all minor signs of image editing were corrected to produce natural-looking images. The fearful, happy, and neutral facial expression stimuli were taken from the NimStim set (Tottenham et al., in press). Each infant saw one model posing the expressions in the scanning task and a different model in the overlap task. At a 60-cm viewing distance, the faces subtended 15.4° of vertical and 10.8° of horizontal visual angle. Eye-tracker calibration and stimulus presentation were controlled by ClearView software (Tobii Technology AB; www.tobii.com).

The eye tracking data were obtained while infants sat on their parent’s lap in front of a 17-inch Tobii 1750 eye tracking monitor (Tobii Technology AB; www.tobii.com), which was the only source of lighting in an otherwise darkened, sound-attenuated room. Cameras embedded in the monitor record the reflection of an infrared light source on the cornea relative to the pupil in both eyes at a frequency of 50 Hz. The average accuracy of this eye tracking system is in the range of 0.5 to 1°, which corresponds to a 0.5–1 cm area on the screen at a viewing distance of 60 cm. When one eye cannot be measured (e.g., because of movement or head position), data from the other eye are used to determine the gaze coordinates. The eye tracker compensates for large head movements, which typically result in a temporary accuracy error of approximately 1° and, in the case of fast head movements (i.e., more than 10 cm/s), in a 100-ms recovery time to full tracking ability after movement offset. The surroundings of the monitor and the video camera above the monitor were concealed with black curtains. The experimenter controlled the stimulus presentation from an adjacent room while monitoring the infants’ behavior through the video camera.
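The visual-angle figures above follow from simple trigonometry at the 60-cm viewing distance. The Python sketch below converts between visual angle and on-screen extent, assuming the extent is centered on the line of sight (size = 2 · d · tan(θ/2)); the function names are illustrative and are not part of ClearView or any Tobii software.

```python
import math

VIEWING_DISTANCE_CM = 60.0  # viewing distance reported in the Methods


def angle_to_size_cm(angle_deg: float, distance_cm: float = VIEWING_DISTANCE_CM) -> float:
    """On-screen extent (cm) subtending angle_deg, centered on the line of sight."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


def size_to_angle_deg(size_cm: float, distance_cm: float = VIEWING_DISTANCE_CM) -> float:
    """Visual angle (deg) subtended by an on-screen extent of size_cm."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


if __name__ == "__main__":
    # Face stimuli: 15.4 deg vertical x 10.8 deg horizontal
    print(f"face: {angle_to_size_cm(15.4):.1f} x {angle_to_size_cm(10.8):.1f} cm")
    # Tracker accuracy of 0.5-1 deg expressed as an on-screen distance
    for err_deg in (0.5, 1.0):
        print(f"{err_deg} deg -> {angle_to_size_cm(err_deg):.2f} cm")
```

At 60 cm, 0.5° and 1° correspond to roughly 0.52 cm and 1.05 cm, in line with the 0.5–1 cm stated above. The 15.4° × 10.8° faces come out at roughly 16.2 × 11.3 cm on the screen.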

Procedure

Upon arrival at the laboratory, the experimental procedure was described and informed consent was obtained from the parent. Before data collection started, the eye tracker cameras were calibrated with a 5-point calibration procedure in which a movie clip (i.e., a moving lobster coupled with a beeping sound) was presented sequentially at five locations on the screen (each corner and the center). The calibration procedure was repeated if good calibration was not obtained for both eyes at more than one location.

In the visual scanning task, fearful, happy, and neutral facial expressions, and a neutral face with fearful eyes, were each presented twice for 10 s in the center of the screen. All four expressions were first presented in a random order and then repeated in a different random order. The expression of the first face in the sequence was counterbalanced across infants. The experimenter initiated each trial when the infant was attending to the blank screen and the eye tracker was able to track both of the infant’s eyes.
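The scanning-task order described above can be sketched as follows: four expressions, each shown once in a random order and then again in a different random order, with the first expression counterbalanced across infants. The stimulus labels and the rotation used to counterbalance the first expression are hypothetical, because the text does not specify how counterbalancing was implemented.

```python
from __future__ import annotations

import random

# Hypothetical stimulus labels for the four conditions
EXPRESSIONS = ["fearful", "happy", "neutral", "fearful_eyes"]


def counterbalanced_first(infant_index: int) -> str:
    """Hypothetical counterbalancing: rotate the first expression across infants."""
    return EXPRESSIONS[infant_index % len(EXPRESSIONS)]


def scanning_order(first_expression: str, rng: random.Random) -> list[str]:
    """Eight 10-s trials: all four expressions in a random order that starts with
    first_expression, then all four again in a different random order."""
    rest = [e for e in EXPRESSIONS if e != first_expression]
    rng.shuffle(rest)
    block1 = [first_expression] + rest
    block2 = block1[:]
    while block2 == block1:  # reshuffle until the second order differs
        rng.shuffle(block2)
    return block1 + block2


if __name__ == "__main__":
    rng = random.Random(2009)
    for infant in range(4):
        print(infant, scanning_order(counterbalanced_first(infant), rng))
```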

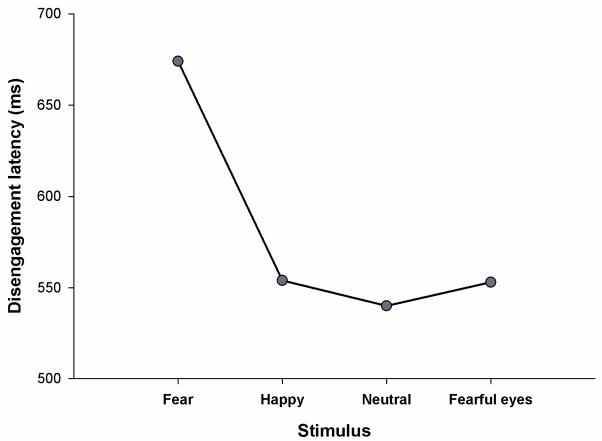

In the overlap task (Figure 1), one of the four expressions was first presented in the center of the screen on a white background for 1000 ms. Immediately afterward, the face remained on the screen while a peripheral target stimulus was presented 13.6° to the left or right, with equal probability, for 3000 ms. The target stimuli were black-and-white vertically arranged circles or a checkerboard pattern, measuring 15.4° vertically and 4.3° horizontally, and the two target types were presented in random order throughout the task. To enhance the attention-grabbing properties of the peripheral stimuli and to increase the number of target-directed saccades (cf. Peltola et al., 2008), the target flickered by alternating its contrast polarity at 10 Hz for the first 1000 ms after its appearance. The expressions were presented in random order with the constraint that the same expression was presented no more than twice in a row, and the target appeared on the same side of the screen no more than three times in a row. Between trials, a fixation image matching the size of the face stimuli (an animated underwater scene from Finding Nemo™ with colorful content covering the whole image) was presented on a gray background to attract infants’ attention to the center of the screen. In addition, different sounds were occasionally played from behind the monitor between trials to attract the infant’s attention toward the screen.

Figure 1.

The facial expression stimuli and the sequence of events in a single overlap task trial.
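The run-length constraints of the overlap task can be met by drawing each trial from the options that would not break a run. Under these constraints the same expression appears no more than twice in a row, and the target appears on the same side no more than three times in a row. This is one possible scheme, not necessarily the procedure used in the study. The total number of trials (`n_trials`) and the labels are hypothetical.

```python
from __future__ import annotations

import random
from dataclasses import dataclass

# Hypothetical labels; the run-length constraints below come from the text
EXPRESSIONS = ["fearful", "happy", "neutral", "fearful_eyes"]
SIDES = ["left", "right"]
TARGET_TYPES = ["circles", "checkerboard"]


@dataclass
class OverlapTrial:
    expression: str
    side: str
    target_type: str


def pick(options: list[str], history: list[str], max_run: int, rng: random.Random) -> str:
    """Choose uniformly among options that would not extend a run past max_run."""
    if len(history) >= max_run and len(set(history[-max_run:])) == 1:
        options = [o for o in options if o != history[-1]]
    return rng.choice(options)


def overlap_sequence(n_trials: int, rng: random.Random) -> list[OverlapTrial]:
    expressions: list[str] = []
    sides: list[str] = []
    trials: list[OverlapTrial] = []
    for _ in range(n_trials):
        expr = pick(EXPRESSIONS, expressions, 2, rng)  # same face at most twice in a row
        side = pick(SIDES, sides, 3, rng)  # same side at most three times in a row
        expressions.append(expr)
        sides.append(side)
        trials.append(OverlapTrial(expr, side, rng.choice(TARGET_TYPES)))
    return trials


if __name__ == "__main__":
    for trial in overlap_sequence(12, random.Random(1)):
        print(trial)
```

Excluding an option only when a run limit is reached slightly changes the probabilities. Left and right are therefore only approximately equiprobable under this scheme.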

Data Reduction and Analyses

The eye tracking data for each 20-ms time point were saved in text files recording where on the screen the infant was looking at each time point. To assess infants’ visual scanning patterns, multiple areas of interest (AOIs) within the face were manually defined: the eyes, nose, mouth, forehead, and the contour of the head. The size of each AOI was kept constant across expressions. The scanning analyses were conducted on the percentage of looking time spent on each AOI relative to the total time spent looking at the face.
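Samples arrive at a fixed 20-ms interval, so the percentage of looking time in each AOI reduces to a ratio of sample counts. The sketch below makes three assumptions: AOIs are rectangular, they do not overlap, and they are given in screen coordinates. It takes as the denominator all samples falling within an overall face region. The `Rect` type and the coordinates used in the test are hypothetical, since the actual AOIs were drawn manually.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Iterable, Optional, Tuple

Sample = Optional[Tuple[float, float]]  # (x, y) gaze point, or None if the sample was lost


@dataclass(frozen=True)
class Rect:
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def aoi_percentages(samples: Iterable[Sample], aois: dict[str, Rect], face: Rect) -> dict[str, float]:
    """Percentage of face-looking samples that fall in each AOI. Every sample lasts
    20 ms, so sample counts are proportional to looking time."""
    counts = {name: 0 for name in aois}
    on_face = 0
    for s in samples:
        if s is None or not face.contains(*s):
            continue  # lost sample or gaze outside the face
        on_face += 1
        for name, rect in aois.items():
            if rect.contains(*s):
                counts[name] += 1
                break  # AOIs assumed non-overlapping
    if on_face == 0:
        return {name: 0.0 for name in aois}
    return {name: 100.0 * c / on_face for name, c in counts.items()}
```

With a denominator that covers the whole face region, face samples outside every AOI count toward the total, so the AOI percentages need not sum to 100%.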

To analyze the overlap task data, AOIs covering the face, the target, and the area between the face and the target were manually defined. The latency to disengage attention from different facial expressions was analyzed from trials on which there was a well-defined shift of gaze from the face toward the target. Saccade-onset latencies were calculated as the time from target onset to the last time point at which a fixation was detected on the face before the eyes shifted toward the target. Trials with less than 400 ms of continuous looking at the face immediately before target onset were discarded, as were trials in which data were lost after target onset because of excessive movement. Fifteen infants provided at least 5 trials with target-directed saccades for each stimulus condition and were thus included in the disengagement latency analyses.
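The latency rule and exclusion criteria above can be expressed over a sequence of per-sample AOI labels. This minimal sketch rests on three assumptions, and these operationalizations are illustrative rather than the authors' code:

- Each 20-ms sample is labeled `'face'`, `'between'`, `'target'`, or `None` (lost).
- A "well-defined shift" means gaze leaves the face and reaches the target AOI, passing only through the in-between area.
- Latency is the time stamp of the last face sample relative to target onset.

```python
from __future__ import annotations

from typing import Optional, Sequence

SAMPLE_MS = 20  # 50-Hz eye tracker: one sample every 20 ms
MIN_PRE_FACE_MS = 400  # continuous face looking required before target onset


def disengagement_latency(labels: Sequence[Optional[str]], onset: int) -> Optional[int]:
    """labels[i]: AOI of sample i ('face', 'between', 'target'), or None if lost.
    onset: index of the first sample with the target on screen.
    Returns the latency in ms, or None if the trial is discarded."""
    n_pre = MIN_PRE_FACE_MS // SAMPLE_MS
    if onset < n_pre or any(lab != "face" for lab in labels[onset - n_pre:onset]):
        return None  # less than 400 ms of continuous face looking before onset
    last_face = onset - 1
    for i in range(onset, len(labels)):
        if labels[i] == "face":
            last_face = i
            continue
        if labels[i] is None:
            return None  # data lost after target onset
        # Gaze has left the face: require a path through 'between' into 'target'
        j = i
        while j < len(labels) and labels[j] == "between":
            j += 1
        if j == len(labels) or labels[j] != "target":
            return None  # no well-defined shift toward the target
        if last_face < onset:
            return None  # hypothetical choice: gaze already off the face at onset
        return (last_face - onset) * SAMPLE_MS
    return None  # gaze never left the face
```

The text does not say how trials were handled when gaze had already left the face at target onset. Discarding them here is an assumption.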

The latency analyses were confined to the first 5 saccade-onset latencies for each face, for the following reasons. First, the infants’ looking behavior in the earlier phase of the testing session was considered a better index of reflexive/exogenous attention orienting than behavior later in the task. It is reasonable to assume that infants habituate to the stimulus presentation procedure after a certain number of trials and that attention orienting comes increasingly under endogenous control. Indeed, the average disengagement latency over the first 5 trials for each stimulus (M = 580 ms) was significantly shorter than that over the remaining trials (M = 632 ms), p = .005. Second, previous studies using the overlap task with infants have likewise analyzed a rather small number of overlap trials to assess disengagement latencies: Frick, Colombo, and Saxon (1999) presented only 4 overlap trials, and Hunnius and Geuze (2004) presented a maximum of 8 trials per stimulus condition (32 trials in total).
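The inclusion rule and the restriction to the first 5 valid latencies per face can be sketched per infant as follows. The inclusion rule requires at least 5 valid trials per condition. The input format is an assumption: a list of (expression, latency) pairs in presentation order, with `None` marking discarded trials.

```python
from __future__ import annotations

from statistics import mean
from typing import Optional, Sequence, Tuple

MIN_TRIALS = 5  # inclusion: at least 5 valid trials per condition
N_ANALYZED = 5  # only the first 5 valid latencies per condition are averaged


def condition_means(
    trials: Sequence[Tuple[str, Optional[float]]], conditions: Sequence[str]
) -> Optional[dict[str, float]]:
    """trials: (expression, latency_ms or None) in presentation order for one infant.
    Returns the mean of the first 5 valid latencies per condition, or None if the
    infant has fewer than 5 valid trials in any condition."""
    valid: dict[str, list[float]] = {c: [] for c in conditions}
    for expr, latency in trials:
        if latency is not None and expr in valid:
            valid[expr].append(latency)
    if any(len(v) < MIN_TRIALS for v in valid.values()):
        return None
    return {c: mean(v[:N_ANALYZED]) for c, v in valid.items()}
```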

Disengagement latencies were subjected to a repeated measures analysis of variance (ANOVA) with Expression (fearful, happy, neutral, fearful eyes) as a within-subjects factor. To enable a more direct comparison of the present findings with adult studies (e.g., Georgiou et al., 2005), we analyzed the latency of disengagement rather than the frequency of attention disengagement (cf. Peltola et al., 2008).
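For a single within-subjects factor with k levels and n infants, the F ratio partitions the total variability into condition, subject, and residual (condition × subject) components. This gives df = (k − 1, (k − 1)(n − 1)), that is, (3, 42) for four expressions and 15 infants. The stdlib-only sketch below assumes a complete infants × conditions table of mean latencies. It applies no sphericity correction; the text does not say whether one was used.

```python
from __future__ import annotations

from statistics import mean


def rm_anova_oneway(data: list[list[float]]) -> tuple[float, int, int]:
    """data[s][c]: mean latency of subject s in condition c (no missing cells).
    Returns (F, df_effect, df_error) for the within-subjects factor."""
    n = len(data)
    k = len(data[0])
    grand = mean(x for row in data for x in row)
    subj_means = [mean(row) for row in data]
    cond_means = [mean(row[c] for row in data) for c in range(k)]
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_error = ss_total - ss_cond - ss_subj  # condition x subject residual
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    f_ratio = (ss_cond / df_cond) / (ss_error / df_error)
    return f_ratio, df_cond, df_error
```

If SciPy is available, a p value could then be obtained from the F distribution, for example with `scipy.stats.f.sf(F, df_effect, df_error)`.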

Results

Visual Scanning

A 5 (AOI: eyes, nose, mouth, forehead, contour) × 4 (Expression: fearful, happy, neutral, fearful eyes) ANOVA showed a significant main effect of AOI, F(4, 56) = 14.5, p < .001, but scanning patterns did not differ across expressions, as indicated by the nonsignificant AOI × Expression interaction, F(12, 168) = 0.8, p = .523. Thus, for all expressions, the infants spent the most time scanning the eye region (M = 53% of the total time spent looking at the face), followed by the forehead (M = 17%), nose (M = 16%), mouth (M = 4%), and face contour (M = 1%).
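The degrees of freedom reported for the 5 × 4 scanning ANOVA follow from testing each within-subjects effect against its own effect × subject error term. The small check below assumes a fully within-subjects design with 15 infants. Under that assumption, the AOI main effect gives (4, 56) and the AOI × Expression interaction gives (12, 168), matching the values reported above.

```python
from __future__ import annotations


def within_subject_dfs(levels_a: int, levels_b: int, n_subjects: int) -> dict[str, tuple[int, int]]:
    """(df_effect, df_error) for each effect of an A x B fully within-subjects ANOVA;
    each effect is tested against its own effect x subject interaction."""
    a, b, s = levels_a - 1, levels_b - 1, n_subjects - 1
    return {
        "A": (a, a * s),
        "B": (b, b * s),
        "A x B": (a * b, a * b * s),
    }


if __name__ == "__main__":
    # A = AOI (5 levels), B = Expression (4 levels), 15 infants
    print(within_subject_dfs(5, 4, 15))
```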

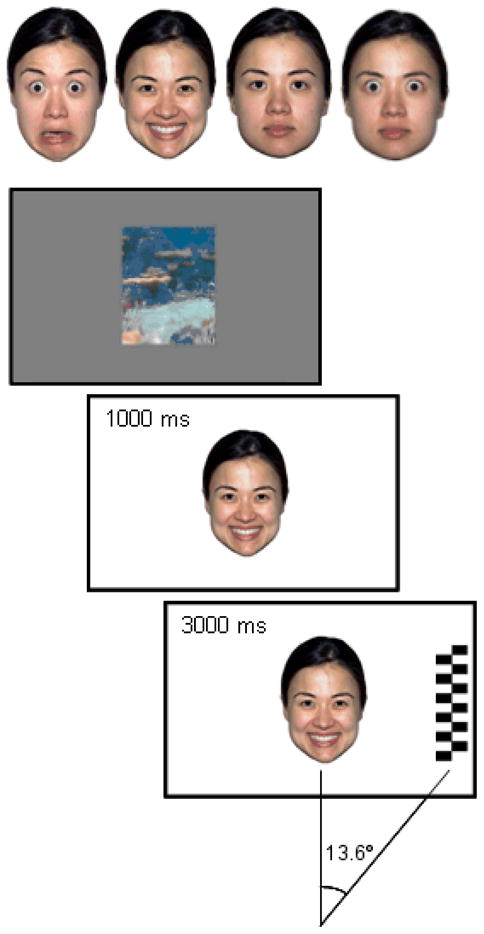

Attention Disengagement

Average disengagement latencies for the four expressions are presented in Figure 2. The facial expression of the central stimulus had a significant effect on disengagement latencies, F(3, 42) = 4.7, p = .006. Infants took significantly longer to disengage attention from fearful faces (M = 674 ms) than from happy faces, M = 554 ms, t(14) = 2.4, p = .031; neutral faces, M = 540 ms, t(14) = 3.5, p = .004; and neutral faces with fearful eyes, M = 553 ms, t(14) = 2.4, p = .032.

Figure 2.

Attention disengagement latencies in the overlap task.
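The pairwise comparisons reported with t(14) are consistent with paired-samples t tests on the 15 infants' condition means. The text does not name the test, so the following is a sketch under that assumption. For differences d_i between two conditions, t = mean(d) / (sd(d) / √n), with n − 1 degrees of freedom.

```python
from __future__ import annotations

import math
from statistics import mean, stdev


def paired_t(x: list[float], y: list[float]) -> tuple[float, int]:
    """Paired-samples t statistic and df for per-infant condition means x and y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    d = [a - b for a, b in zip(x, y)]  # within-infant differences
    t = mean(d) / (stdev(d) / math.sqrt(len(d)))  # stdev uses the n - 1 denominator
    return t, len(d) - 1
```

With 15 infants this yields 14 degrees of freedom, as in the reported comparisons.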

Discussion

In the present study, 7-month-old infants took considerably longer to disengage their attention from centrally presented fearful faces than from happy faces, neutral faces, and neutral faces with fearful eyes in order to shift their attention to a peripheral target. This result is similar to what has been observed in adults (Georgiou et al., 2005), and it extends our previous study (Peltola et al., 2008), which showed that 7-month-olds displayed a relatively stronger tendency to remain fixated on fearful than on happy faces and control stimuli. The findings are also in line with previous looking-time and ERP research showing that infants start to allocate attention differentially to fearful vs. happy faces by the age of 7 months (e.g., Leppänen et al., 2007; Peltola et al., in press).

Why should one expect relatively longer disengagement latencies for threat-related stimuli? Functionally, stronger holding of attention may be a way to give emotionally significant stimuli processing priority and to enable a more detailed analysis of the source of a potential threat. A substantial delay in the disengagement process, however, may result in dwelling on threat-related stimuli and feelings of anxiety (Fox, Russo, Bowles, & Dutton, 2001). Moreover, a fearful face in particular can be considered an ambiguous stimulus that calls for extended processing in order to derive its communicative intent (Whalen, 1998). The ambiguity of the fearful faces used in the present study was presumably heightened by the fact that they gazed directly at the observer and thus did not provide explicit information about the source of the potential threat, as a face with an averted gaze might do.

The neural mechanisms underlying the modulation of disengagement by threat-related stimuli are currently not known. However, the amygdala is considered the key neural structure mediating the influence of emotional stimuli on attentional processing, mainly by producing transient increases in perceptual responsivity to stimuli tagged as emotionally significant (Phelps, 2006). These enhanced sensory representations may bias attentional selection in favor of emotional stimuli when stimuli compete for attentional resources (Vuilleumier, 2005). In addition to amplifying extrastriate visual responses, threat-related stimuli may also transiently suppress neural activity in parietal areas associated with shifts of attention, thereby temporarily lowering responsiveness to competing stimuli (Pourtois, Schwartz, Seghier, Lazeyras, & Vuilleumier, 2006). Naturally, caution is warranted in relating the adult model of emotional attention (Vuilleumier, 2005) to early development, because several things remain unknown: the developmental time course of amygdala maturation, the amygdala's structural and functional connectivity with regions implicated in emotion processing (e.g., orbitofrontal and anterior cingulate cortices) and in visual processing of faces (inferotemporal cortex), and the role of the amygdala in emotional face perception in human infants.

The present findings indicate that infants’ stronger attentional responses to fearful faces cannot be interpreted simply as responses to enlarged fearful eyes. Consistent with previous data (e.g., Adolphs et al., 2005), results from our scanning task showed that infants focused primarily on the eye region of all faces; however, this bias was not strengthened for fearful faces or neutral faces with fearful eyes. In the overlap task, the latency to disengage attention from the neutral face with fearful eyes did not differ from that for happy and neutral faces, with only full fearful faces resulting in longer disengagement latencies. If the effects of threat-related stimuli on attention are contingent on enhanced amygdala activity (Vuilleumier, 2005), the results seem to be partially at odds with previous findings of comparable amygdala responses to fearful faces and fearful eyes alone in adults (Morris et al., 2002; Whalen et al., 2004). However, more recent evidence suggests that the perception of fearful eyes is not solely responsible for the enhanced amygdala reactivity to fearful faces. With fMRI, Asghar et al. (2008) found amygdala responses of similar magnitude to whole fearful faces, fearful eyes alone, and fearful faces with the eye region masked. With similar stimulus conditions, Leppänen et al. (2008) found enhanced visual ERP responses to fearful as compared to neutral faces both when the eyes were covered and when they were presented in isolation. However, in Leppänen et al. (2008), behavioral and ERP differentiation of fearful and neutral expressions occurred at a shorter latency when the whole face, rather than the eye region alone, was visible. This suggests that although adults may respond to the eyes per se, and the eye region may provide sufficient information for classifying faces as fearful vs. non-fearful, whole-face expressions provide additional diagnostic cues (e.g., cues in the mouth region) that permit faster and more accurate discrimination. Collectively, these findings seem to suggest that adults flexibly use information from multiple sources to recognize fearful expressions, whereas infants seem to require the whole face in order to detect a face as fearful. As a limitation, the design of the present study does not permit an answer to the question of whether a fearful mouth embedded in an otherwise neutral face would yield results in infants similar to those obtained with a neutral face with fearful eyes.

In conclusion, the present study provides evidence that by 7 months of age, infants show enhanced sensitivity to facial signals of threat as measured by the latency to disengage attention from centrally presented facial expression stimuli. It also seems that low-level visual information consisting of wide-open eyes is not sufficient to account for infants’ attentional biases for fearful faces. The results contribute to the growing research area of emotion-attention interactions in infancy which aims to elucidate the ontogenetic mechanisms of the processing of emotional significance in environmental stimuli (Leppänen & Nelson, 2009). Finally, it remains to be determined how the differential disengagement from different emotional stimuli contributes to other measures of infant attention such as looking times and attention-sensitive ERPs.

Acknowledgments

This research was supported by the Emil Aaltonen Foundation and the Finnish Graduate School of Psychology (M.J.P.), the Academy of Finland (projects #1111850 and #1115536; J.M.L., J.K.H.), and the National Institute of Mental Health (MH078829; C.A.N.).

Development of the MacBrain Face Stimulus Set was overseen by Nim Tottenham and supported by the John D. and Catherine T. MacArthur Foundation Research Network on Early Experience and Brain Development. Please contact Nim Tottenham at tott0006@tc.umn.edu for more information concerning the stimulus set.

References

- Adolphs R, Gosselin F, Buchanan TW, Tranel D, Schyns P, Damasio AR. A mechanism for impaired fear recognition after amygdala damage. Nature. 2005;433:68–72. doi: 10.1038/nature03086.

- Asghar AUR, Chiu Y, Hallam G, Liu S, Mole H, Wright H, et al. An amygdala response to fearful faces with covered eyes. Neuropsychologia. 2008;46:2364–2370. doi: 10.1016/j.neuropsychologia.2008.03.015.

- Colombo J. The development of visual attention in infancy. Annual Review of Psychology. 2001;52:337–367. doi: 10.1146/annurev.psych.52.1.337.

- de Haan M. Visual attention and recognition memory in infancy. In: de Haan M, editor. Infant EEG and event-related potentials. New York: Psychology Press; 2007. pp. 101–143.

- Fox E, Russo R, Bowles R, Dutton K. Do threatening stimuli draw or hold visual attention in subclinical anxiety? Journal of Experimental Psychology: General. 2001;130:681–700.

- Frick JE, Colombo J, Saxon TF. Individual and developmental differences in disengagement of fixation in early infancy. Child Development. 1999;70:537–548. doi: 10.1111/1467-8624.00039.

- Georgiou GA, Bleakley C, Hayward J, Russo R, Dutton K, Eltiti S, et al. Focusing on fear: Attentional disengagement from emotional faces. Visual Cognition. 2005;12:145–158. doi: 10.1080/13506280444000076.

- Holmes A, Green S, Vuilleumier P. The involvement of distinct visual channels in rapid attention towards fearful facial expressions. Cognition & Emotion. 2005;19:899–922.

- Hunnius S, Geuze RH. Gaze shifting in infancy: A longitudinal study using dynamic faces and abstract stimuli. Infant Behavior & Development. 2004;27:397–416. doi: 10.1207/s15327078in0602_5.

- Johnson MH. Developmental cognitive neuroscience: An introduction. 2nd ed. Oxford: Blackwell; 2005a.

- Johnson MH. Subcortical face processing. Nature Reviews Neuroscience. 2005b;6:766–774. doi: 10.1038/nrn1766.

- Kotsoni E, de Haan M, Johnson MH. Categorical perception of facial expressions by 7-month-old infants. Perception. 2001;30:1115–1125. doi: 10.1068/p3155.

- Leppänen JM, Hietanen JK, Koskinen K. Differential early ERPs to fearful versus neutral facial expressions: A response to the salience of the eyes? Biological Psychology. 2008;78:150–158. doi: 10.1016/j.biopsycho.2008.02.002.

- Leppänen JM, Moulson MC, Vogel-Farley VK, Nelson CA. An ERP study of emotional face processing in the adult and infant brain. Child Development. 2007;78:232–245. doi: 10.1111/j.1467-8624.2007.00994.x.

- Leppänen JM, Nelson CA. Tuning the developing brain to social signals of emotions. Nature Reviews Neuroscience. 2009;10:37–47. doi: 10.1038/nrn2554.

- Morris JS, deBonis M, Dolan RJ. Human amygdala responses to fearful eyes. Neuroimage. 2002;17:214–222. doi: 10.1006/nimg.2002.1220.

- Morris JS, Friston KJ, Buchel C, Frith CD, Young AW, Calder AJ, et al. A neuromodulatory role for the human amygdala in processing emotional facial expressions. Brain. 1998;121:47–57. doi: 10.1093/brain/121.1.47.

- Nelson CA, de Haan M. Neural correlates of infants’ visual responsiveness to facial expressions of emotion. Developmental Psychobiology. 1996;29:577–595. doi: 10.1002/(SICI)1098-2302(199611)29:7<577::AID-DEV3>3.0.CO;2-R.

- Nelson CA, Dolgin K. The generalized discrimination of facial expressions by 7-month-old infants. Child Development. 1985;56:58–61.

- Peltola MJ, Leppänen JM, Mäki S, Hietanen JK. Emergence of enhanced attention to fearful faces between 5 and 7 months of age. Social Cognitive and Affective Neuroscience. doi: 10.1093/scan/nsn046. (in press)

- Peltola MJ, Leppänen JM, Palokangas T, Hietanen JK. Fearful faces modulate looking duration and attention disengagement in 7-month-old infants. Developmental Science. 2008;11:60–68. doi: 10.1111/j.1467-7687.2007.00659.x.

- Phelps EA. Emotion and cognition: Insights from studies of the human amygdala. Annual Review of Psychology. 2006;57:27–53. doi: 10.1146/annurev.psych.56.091103.070234.

- Posner MI, Petersen SE. The attention system of the human brain. Annual Review of Neuroscience. 1990;13:25–42. doi: 10.1146/annurev.ne.13.030190.000325.

- Pourtois G, Grandjean D, Sander D, Vuilleumier P. Electrophysiological correlates of rapid spatial orienting towards fearful faces. Cerebral Cortex. 2004;14:619–633. doi: 10.1093/cercor/bhh023.

- Pourtois G, Schwartz S, Seghier ML, Lazeyras F, Vuilleumier P. Neural systems for orienting attention to the location of threat signals: An event-related fMRI study. Neuroimage. 2006;31:920–933. doi: 10.1016/j.neuroimage.2005.12.034.

- Schyns PG, Petro LS, Smith ML. Dynamics of visual information integration in the brain for categorizing facial expressions. Current Biology. 2007;17:1580–1585. doi: 10.1016/j.cub.2007.08.048.

- Tottenham N, Tanaka JW, Leon AC, McCarry T, Nurse M, Hare TA, et al. The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research. doi: 10.1016/j.psychres.2008.05.006. (in press)

- Vuilleumier P. How brains beware: Neural mechanisms of emotional attention. Trends in Cognitive Sciences. 2005;9:585–594. doi: 10.1016/j.tics.2005.10.011.

- Whalen PJ. Fear, vigilance, and ambiguity: Initial neuroimaging studies of the human amygdala. Current Directions in Psychological Science. 1998;7:177–188.

- Whalen PJ, Kagan J, Cook RG, Davis FC, Kim H, Polis S, et al. Human amygdala responsivity to masked fearful eye whites. Science. 2004;306:2061. doi: 10.1126/science.1103617.

- Yang E, Zald DH, Blake R. Fearful expressions gain preferential access to awareness during continuous flash suppression. Emotion. 2007;7:882–886. doi: 10.1037/1528-3542.7.4.882.