Abstract

We investigate analytically a firing rate model for a two-population network based on mutual inhibition and slow negative feedback in the form of spike frequency adaptation. Both neuronal populations receive external constant input whose strength determines the system’s dynamical state—a steady state of identical activity levels or periodic oscillations or a winner-take-all state of bistability. We prove that oscillations appear in the system through supercritical Hopf bifurcations and that they are antiphase. The period of oscillations depends on the input strength in a nonmonotonic fashion, and we show that the increasing branch of the period versus input curve corresponds to a release mechanism and the decreasing branch to an escape mechanism. In the limiting case of infinitely slow feedback we characterize the conditions for release, escape, and occurrence of the winner-take-all behavior. Some extensions of the model are also discussed.

Keywords: Hopf bifurcation, antiphase oscillations, slow negative feedback, winner-take-all, release and escape, binocular rivalry, central pattern generators

1. Introduction

Competition models have a long tradition in ecology and population biology (see, e.g., [22]). Typically, the competition involves negative interactions in the battle for a common resource. Eventually, one of the participant populations emerges as the winner eliminating the competitors. This framework has appeared in models of neuronal development where the competition is for synapse formation such as the development of neuromuscular connections for innervated muscle fibers and for the formation of ocular dominance columns and topographic maps (as reviewed in [35]). The notion of competition has also been applied in the modeling of various neuronal computational tasks. Winner-take-all behavior, when one neural population remains active and the others inactive indefinitely as a result of inhibitory interactions, has been proposed in models for short term memory and attention [13] or for the selection and switching in the striatum of the basal ganglia under both normal and pathological conditions [15, 21].

The winner-take-all steady state may persist for a long time but not indefinitely if some mechanism for slow fatigue or adaptation is at work. In this case one population may be dominant for a while, then another, and so on. Competition between, say, two neuronal populations, via reciprocal inhibition and slow adaptation underlies models for alternating rhythmic behavior in central pattern generators (CPGs) [11, 32, 6, 33] and in perceptual bistability [17, 37, 24]. CPGs consist of neural circuits that drive alternately contracting muscle groups. Perceptual bistability refers to a class of phenomena in which a deeply ambiguous stimulus gives rise to two different interpretations that alternate over time, only one being perceived at any given moment. Slow adaptation may be implemented via a cellular mechanism (fatigue in the spike generation mechanism) or a negative feedback in the coupling (depression of the synaptic transmission mechanism). In some neuronal competition models the alternations may be irregular and caused primarily by noise, with adaptation playing a secondary role [30, 10, 24].

Both for CPGs and perceptual bistability the issue of oscillations’ frequency or period detection (and eventually control) seems to be important. For example, a classical example of perceptual bistability is binocular rivalry whose properties were summarized in the so-called Levelt’s propositions [19]. In binocular rivalry, a subject views an ambiguous stimulus in which each eye is presented with a drastically different image. Instead of perceiving a mixture of the two images, the subject reports (over a large range of stimulus conditions) an alternation between the two competing percepts; one image is perceived for a while (a few seconds), then the other, etc. Levelt’s proposition IV (LP-IV) states that increasing the contrast of the rivaling images increases the frequency of percept switching, or, in other words, that dominance times of both perceived images decrease with equal increase of stimulus strength. Since 1968, binocular rivalry has been investigated intensively in other psychophysics experiments [2, 25, 20, 1, 28, 29, 3], in experiments using fMRI techniques [34, 26, 38, 18], and also in modeling studies [17, 37, 10, 24].

In a recent modeling paper, Shpiro et al. [31] show that for a class of competition models, the LP-IV type of dynamics occurs in fact only within a limited range of stimulus strength. Outside this range four other types of behavior were observed: (i) fusion at a very high level of activity, (ii) winner-take-all behavior, (iii) a region where dominance times increase with stimulus strength (as opposed to LP-IV), and then (iv) fusion again for very low levels of activity (see Figure 3F in section 2). These differences between experimental reports and theory have important implications, either predicting new possible dynamics in binocular rivalry or, if future experiments do not confirm them, pointing to the necessity for other types of models. Meanwhile, it is important to understand the sources or mechanisms that lead to the nonmonotonic dependence of oscillation period on the stimulus strength for this class of neuronal competition models. Our paper aims to investigate this issue.

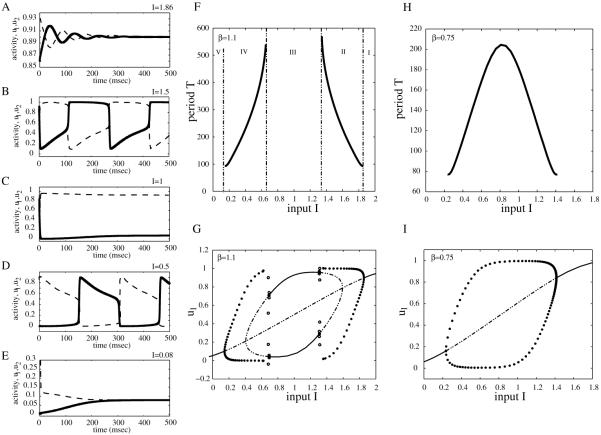

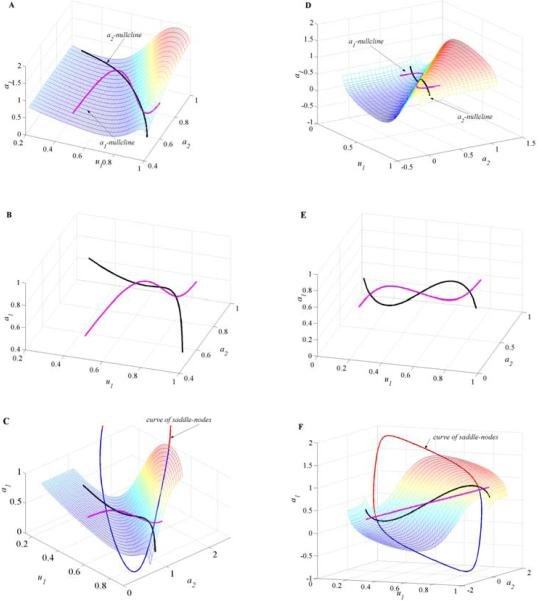

Figure 3.

Bifurcation diagrams and examples of activity timecourses for neuronal competition model (2.1) with parameter values g = 0.5, τ = 100, r = 10, θ = 0.2, and β = 1.1 (A–G), respectively, β = 0.75 (H–I). Timecourses of u1, u2 corresponding to panel F for different values of I: (A) I = 1.86, (B) I = 1.5, (C) I = 1, (D) I = 0.5, and (E) I = 0.08. Bifurcation diagrams of period T of the network oscillation versus input strength I (F and H). Bifurcation diagram of population activity u1 versus I (G and I).

We analyze a firing rate model in which competition between populations is a result of a combination of reciprocal inhibition and a slow negative feedback process. We prove that, as the input strength changes, oscillations appear in the system through a Hopf bifurcation and that they are antiphase. Due to the two time scales involved in the system there is a regime where periodic solutions take the form of relaxation-oscillators. Their period of oscillations depends nonmonotonically on the input strength, say, I: in a range of low values for I, the period increases with I, and we show that the dynamics is due to a release mechanism; on the other hand, in a range of higher values for I, the period decreases with I, and escape is the underlying mechanism (see section 4; we define release as the case when the switch in dominance during oscillation occurs due to a significant change in the response to an input to the dominant population. On the contrary, escape corresponds to the case of a significant change in the input-output function for the suppressed population). For intermediate values of I, winner-take-all is possible and we explain how it appears. Then in section 5 we present some model modifications that allow for reducing, or even excluding, one of the escape and release regimes, thus leading to a monotonic period versus input curve.

2. The mathematical model

The model we investigate in this paper assumes a network architecture of two populations of neurons (Figure 1) that respond to two competing stimuli of equal strength:

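$$
\begin{aligned}
\dot{u}_1 &= -u_1 + S(I - \beta u_2 - g a_1), \\
\dot{u}_2 &= -u_2 + S(I - \beta u_1 - g a_2), \\
\tau \dot{a}_1 &= -a_1 + u_1, \\
\tau \dot{a}_2 &= -a_2 + u_2.
\end{aligned} \tag{2.1}
$$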

Variables uj (j = 1, 2) measure short-time and spatially averaged firing rates of the two populations that inhibit each other. The system is nonlinear due to the gain function S; it is the steady input-output function for the population and it has a sigmoid shape as in Figure 2A. The strength of inhibition is modeled by the positive parameter β, while I is the control parameter directly associated with the external stimulus strength (e.g., it grows with growing stimulus strength such as contrast). Each population is subject to a slow negative feedback process aj such as spike frequency adaptation of positive strength g. Since variables aj evolve on a much slower time scale than uj, the parameter τ takes large values, τ ⪢ 1 (e.g., the time scale for uj is about 10 msec, while for aj it is about 1000 msec).

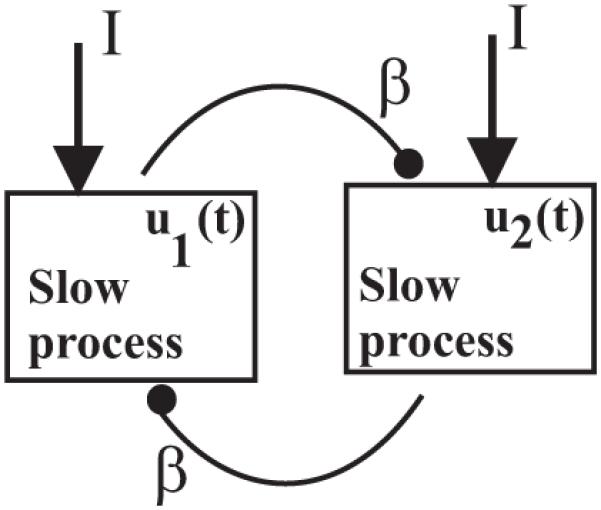

Figure 1.

Network architecture of neuronal competition model.

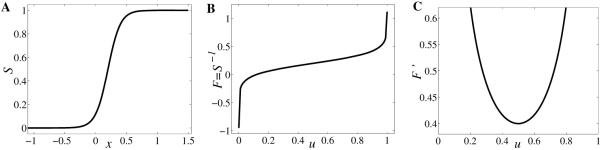

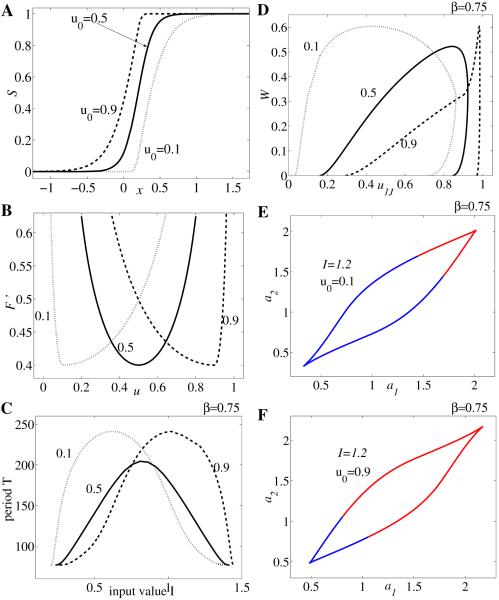

Figure 2.

Graphical representations of generic (A) gain function S; (B) its inverse F; and (C) first derivative F’.

Remark 2.1. In general, firing-rate models like (2.1) include in the equation of uj a nonlinear term of the form S(I + αuj − βuk − gaj). The product αuj is associated with the intrapopulation recurrent excitation. It is important to note that for neuronal competition model (2.1) we have disallowed recurrent excitation (taken α = 0) in order to preclude an isolated population (β = 0) from oscillating on its own. This is a restriction imposed by experimental observations on binocular rivalry and other perceptual bistable phenomena.

The nonlinear gain function S that appears in the differential equations for uj is usually defined in neuronal models by

$$
S(x) = \frac{1}{1 + e^{-r(x - \theta)}} \tag{2.2}
$$

with positive r and real θ.

Function S is invertible with F = S−1 a smooth ($C^\infty$) function and $F(u) = \theta + \frac{1}{r}\ln\frac{u}{1-u}$ (Figure 2B–C). Based on this example, we consider the following assumptions for the gain function S.

S : R → (0, 1) is a differentiable, monotonically increasing function with S(θ) = u0 ∈ (0, 1) and limx→−∞ S(x) = 0, limx→∞ S(x) = 1. Moreover, its first and second derivatives satisfy the conditions limx→±∞ S’(x) = 0, S”(x) > 0 for x < θ, S”(x) < 0 for x > θ, and S”(θ) = 0, so S’ has a maximum at θ.

As a consequence, S is invertible with F = S−1 : (0, 1) → R a monotonically increasing function such that limu→0 F(u) = −∞, limu→1 F(u) = ∞, F(u0) = θ, and limu→0 F’(u) = limu→1 F’(u) = ∞, F”(u) < 0 for u ∈ (0, u0), F”(u) > 0 for u ∈ (u0, 1), F”(u0) = 0. Obviously, F’ has a minimum value at u0 which is F’(u0) = 1/S’(θ).

Additionally we assume that F is a -function on (0, 1), or at least on (0, 1) and on (0, 1) \ {u0}.

The typical graphs of function S and its corresponding F and F’ are drawn in Figure 2A–C. We used the example (2.2) with parameter values r = 10 and θ = 0.2; obviously in this case S(θ) = u0 = 0.5.
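The properties listed above can be checked numerically for the logistic example. The following is a minimal sketch, assuming that (2.2) is the standard logistic function with slope r and threshold θ; the function names `S`, `F`, and `F_prime` are illustrative and not part of the original text.

```python
import math

R, THETA = 10.0, 0.2  # slope r and threshold theta used for Figure 2


def S(x, r=R, theta=THETA):
    """Logistic gain function (assumed form of (2.2))."""
    return 1.0 / (1.0 + math.exp(-r * (x - theta)))


def F(u, r=R, theta=THETA):
    """Inverse of S on (0, 1): F(S(x)) = x."""
    return theta + math.log(u / (1.0 - u)) / r


def F_prime(u, r=R):
    """Derivative of F; well-shaped, with minimum 4/r = 1/S'(theta) at u0 = 1/2."""
    return 1.0 / (r * u * (1.0 - u))


if __name__ == "__main__":
    print("u0 =", S(THETA))                  # gain at the threshold
    print("min F' =", F_prime(0.5))          # equals 1/S'(theta)
    print("F'(0.1), F'(0.9) =", F_prime(0.1), F_prime(0.9))
```

For r = 10 the minimum of F’ is 4/r = 0.4, which is the value 1/S’(θ) that enters the threshold conditions on β later in the paper.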

All the experiments that motivated our work report oscillatory phenomena with frequencies tightly connected to the stimulus strengths. Moreover, as Levelt [19] pointed out for binocular rivalry, those experiments show large ranges for stimulus strength where the corresponding oscillation periods/frequencies behave monotonically. What kind of possible mechanism is behind this type of dynamics is the question we will focus on in this paper.

Given the neuronal competition model (2.1), the goal is to examine the effect the parameter I has on the existence of oscillations and on their period. The system is a simplified version of an entire class of competition models that, as we found [31], share important dynamical features.

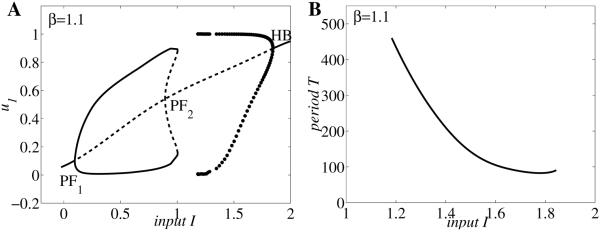

To illustrate those commonalities we draw in Figure 3A–E the timecourses of activity variables u1(t) and u2(t) for different values of control parameter I. Then we summarize the result in the bifurcation diagram of the period T versus I (Figure 3F). Other parameter values are fixed to β = 1.1, g = 0.5, τ = 100, and S as in (2.2) with r = 10 and θ = 0.2 (here u0 = 1/2). The system (2.1) exhibits five possible types of behavior: for large values of I (region I in Figure 3F) both populations are active at identically high levels (Figure 3A); the timecourses of u1(t) and u2(t) tend to a stable steady state larger than u0. As I decreases (region II, Figure 3F) the system starts oscillating with u1(t) and u2(t) alternately on and off (Figure 3B); in this region the period of oscillation decreases with increasing input strength. At intermediate values of I a winner-take-all kind of behavior is observed (region III, Figure 3F); depending on the choice of initial conditions, one of the two populations is active indefinitely, while the other one remains inactive (Figure 3C). Decreasing I even more (region IV, Figure 3F) the neuronal model becomes oscillatory again (Figure 3D) with u1 and u2 competing for the active state; however, for this range of the parameter the oscillation period T increases with the input value I, the opposite of the behavior observed in region II. Last, for small values of stimulus strength (region V, Figure 3F) both populations remain inactive at identically low firing rates (Figure 3E); the timecourses of u1(t) and u2(t) tend to a stable steady state less than u0.
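The regimes described above can be explored with a short simulation. This is a minimal sketch, not the authors' code: it assumes the form of (2.1) implied by the equilibrium conditions of section 3 (rate equations for uj with gain S and adaptation equations τ daj/dt = −aj + uj), the logistic gain for (2.2), and a hand-written fixed-step RK4 integrator. The helper names `rhs`, `simulate`, and `count_switches`, the initial condition, and the step size are illustrative choices.

```python
import math


def S(x, r=10.0, theta=0.2):
    """Logistic gain (assumed form of (2.2))."""
    return 1.0 / (1.0 + math.exp(-r * (x - theta)))


def rhs(y, I, beta, g, tau):
    """Right-hand side of the assumed model (2.1); y = (u1, u2, a1, a2)."""
    u1, u2, a1, a2 = y
    return (-u1 + S(I - beta * u2 - g * a1),
            -u2 + S(I - beta * u1 - g * a2),
            (u1 - a1) / tau,
            (u2 - a2) / tau)


def simulate(I, beta=1.1, g=0.5, tau=100.0,
             y0=(0.6, 0.4, 0.0, 0.0), t_end=3000.0, dt=0.2):
    """Classical RK4 integration; returns the list of states, one per step."""
    def shifted(y, k, h):
        return tuple(yi + h * ki for yi, ki in zip(y, k))

    y, traj = tuple(y0), [tuple(y0)]
    for _ in range(int(round(t_end / dt))):
        k1 = rhs(y, I, beta, g, tau)
        k2 = rhs(shifted(y, k1, dt / 2), I, beta, g, tau)
        k3 = rhs(shifted(y, k2, dt / 2), I, beta, g, tau)
        k4 = rhs(shifted(y, k3, dt), I, beta, g, tau)
        y = tuple(yi + dt * (a + 2 * b + 2 * c + d) / 6
                  for yi, a, b, c, d in zip(y, k1, k2, k3, k4))
        traj.append(y)
    return traj


def count_switches(traj, skip):
    """Number of sign changes of u1 - u2 after discarding `skip` samples."""
    d = [y[0] - y[1] for y in traj[skip:]]
    return sum(1 for p, q in zip(d, d[1:]) if p * q < 0)


if __name__ == "__main__":
    for I in (2.5, 1.5, 1.0, 0.5, 0.08):
        traj = simulate(I)
        u1, u2 = traj[-1][0], traj[-1][1]
        print(f"I={I}: final u1={u1:.3f}, u2={u2:.3f}, "
              f"switches after t=1000: {count_switches(traj, 5000)}")
```

A nonzero switch count indicates alternating dominance, while a large final difference u1 − u2 with no switches indicates winner-take-all. Which population wins in that case depends on the initial condition, as noted in the text.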

To further characterize the dynamics of system (2.1) as the input value I is varied, we also construct the local bifurcation diagram of amplitude response u1 to I (Figure 3G). For the parameter ranges I and V the trajectories are attracted to a stable fixed point satisfying the condition u1 = u2 (thick line in Figure 3G). This fixed point becomes unstable (dashed line) in regions II, III, and IV, where the attractor is replaced by either a stable limit cycle (regions II and IV: branched filled-circle curves corresponding to the maximum and minimum amplitudes during rivalry oscillations) or another stable fixed point with u1 ≠ u2 (region III). Due to the symmetry in the equations of (2.1), whenever (u1, u2, a1, a2) is an equilibrium point, (u2, u1, a2, a1) is as well. The local bifurcation diagram suggests the existence of some Hopf and pitchfork bifurcations in the model that we will further investigate in section 3.

There is another common feature of many neuronal competition models based on reciprocal inhibition architecture with slow negative feedback in the form of spike frequency adaptation and/or synaptic depression [31]: the absence of the winner-take-all behavior when inhibition strength β is sufficiently small. As we illustrate in Figure 3H–I for β = 0.75 (all other parameters are the same as above), the winner-take-all regime at intermediate I disappears. However, the dependency of period T on stimulus strength remains nonmonotonic.

An intriguing question is: What are the neuronal mechanisms underlying the two distinct dynamics—one of increasing period with increase of stimulus strength (for smaller values of input), and one of decreasing period (for larger values)? This issue is discussed in section 4 and then extended in section 5.

Our general goal is to understand and possibly to analytically characterize the numerical results obtained for this specific competition model (2.1). Consequently, as already pointed out, this step will help us understand the typical behavior of an entire class of neuronal competition models.

3. Oscillatory antiphase solutions and local analysis

In this section we use methods from local bifurcation theory [14, 16] to prove the existence of periodic solutions (u1(t), u2(t), a1(t), a2(t)) for the two-population network (2.1). We also show that the main variables u1 and u2 oscillate in antiphase, therefore competing for the ON/active state.

The bifurcation diagrams obtained numerically in section 2 suggest the existence of an equilibrium point satisfying u1 = u2 no matter the value of parameter I. Let us now investigate system (2.1) theoretically.

All equilibria satisfy the conditions u1 = S(I − βu2 − ga1), u2 = S(I − βu1 − ga2), a1 = u1, and a2 = u2 that are equivalent (due to the invertibility of S) to F(u1) = I − βu2 − ga1, F(u2) = I − βu1 − ga2, and a1 = u1, a2 = u2. Looking for a particular type of equilibrium point, that is, for points with u1 = u2, we obtain the equation I = H(u) with H defined by

$$
H(u) = F(u) + (\beta + g)\,u, \qquad u \in (0, 1). \tag{3.1}
$$

Since F is monotonically increasing on (0, 1) with vertical asymptotes limu→0 F(u) = −∞ and limu→1 F(u) = ∞, (3.1) has a unique solution uI ∈ (0, 1) for any real value of the parameter I. Moreover, from the identity I = F(uI) + (β + g)uI we compute

$$
\frac{du_I}{dI} = \frac{1}{F'(u_I) + \beta + g} > 0, \tag{3.2}
$$

so a decrease in I leads to a decrease in uI with limI→∞ uI = 1 and limI→−∞ uI = 0.
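The uniform equilibrium value uI can be computed numerically from the identity I = F(uI) + (β + g)uI. The sketch below is an illustration under the assumption of the logistic gain (2.2); it bisects in the variable x = F(u), where the equation reads x + (β + g)S(x) = I and the root is bracketed by I − (β + g) and I because S takes values in (0, 1). The names `H` and `uniform_equilibrium` are hypothetical helpers.

```python
import math

R, THETA, BETA, G = 10.0, 0.2, 1.1, 0.5  # parameter values of Figure 3A-G


def S(x):
    """Logistic gain (assumed form of (2.2))."""
    return 1.0 / (1.0 + math.exp(-R * (x - THETA)))


def H(u, beta=BETA, g=G):
    """H(u) = F(u) + (beta + g) u, with F the inverse of the logistic gain."""
    return THETA + math.log(u / (1.0 - u)) / R + (beta + g) * u


def uniform_equilibrium(I, beta=BETA, g=G, tol=1e-13):
    """Solve I = H(u) by bisection on x = F(u)."""
    lo, hi = I - (beta + g), I          # left side is below I at lo, above I at hi
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mid + (beta + g) * S(mid) < I:
            lo = mid
        else:
            hi = mid
    return S(0.5 * (lo + hi))


if __name__ == "__main__":
    for I in (0.08, 0.5, 1.0, 1.5, 1.86):
        print(I, uniform_equilibrium(I))
```

With these parameter values, I = θ + (β + g)/2 = 1.0 gives uI = u0 = 1/2, and the printed values increase with I, in agreement with the monotonicity statement above.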

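The neuronal competition model (2.1) always possesses an equilibrium of the type (uI, uI, uI, uI). Its stability properties are then defined by the linearized system $dY/dt = \mathcal{A}Y$, Y = (u1 − uI, u2 − uI, a1 − uI, a2 − uI)T, where ()T stands for the transpose, and the matrix

$$
\mathcal{A} =
\begin{pmatrix}
-1 & -\beta/F'(u_I) & -g/F'(u_I) & 0 \\
-\beta/F'(u_I) & -1 & 0 & -g/F'(u_I) \\
1/\tau & 0 & -1/\tau & 0 \\
0 & 1/\tau & 0 & -1/\tau
\end{pmatrix}.
$$

This form of the matrix relies on the equality S’(I − βu2 − ga1) = S’(F(u1)) = S’(S−1(u1)) = 1/(S−1)’(u1) = 1/F’(u1), which is true at the equilibrium point.

The characteristic equation of $\mathcal{A}$ takes the form

$$
\left[(\lambda + 1)\left(\lambda + \frac{1}{\tau}\right) + \frac{g}{\tau F'(u_I)}\right]^2 - \left[\frac{\beta}{F'(u_I)}\left(\lambda + \frac{1}{\tau}\right)\right]^2 = 0.
$$

As a difference of squares it can be decomposed into two quadratic equations: the first is $\lambda^2 + \left(1 + \frac{1}{\tau} + \frac{\beta}{F'(u_I)}\right)\lambda + \frac{1}{\tau}\left(1 + \frac{\beta + g}{F'(u_I)}\right) = 0$, so two eigenvalues of the matrix $\mathcal{A}$, say, λ1 and λ2, have negative real part no matter the value of parameter I. The other eigenvalues λ3 and λ4 satisfy the second quadratic equation $\lambda^2 + \left(1 + \frac{1}{\tau} - \frac{\beta}{F'(u_I)}\right)\lambda + \frac{1}{\tau}\left(1 - \frac{\beta - g}{F'(u_I)}\right) = 0$, and their real part can change sign when I is varied.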

For |I| sufficiently large, uI is close to either zero or one, keeping F’(uI) larger than both β/(1 + 1/τ) and β − g (see Figure 2C); the corresponding equilibrium point (uI, uI, uI, uI) is asymptotically stable.

There are two ways this equilibrium point can lose stability: either through a pair of purely imaginary eigenvalues λ3,4 = ±iω at F’(uI) = β/(1 + 1/τ) or through a zero eigenvalue λ3 = 0, λ4 < 0 at F’(uI) = β − g. Which of these two cases occurs first depends on the relationship between β/(1 + 1/τ) and β − g: if β/(1 + 1/τ) > β − g, i.e., β/g < τ + 1, then the eigenvalues λ3, λ4 change the sign of their real part from negative to positive by crossing the imaginary axis (λ3,4 = ±iω); if β/g > τ + 1, then the case λ3 = 0, λ4 < 0 is encountered first.
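The eigenvalue structure at the uniform equilibrium can be inspected numerically. The sketch below assumes that the characteristic polynomial of the linearization of (2.1) factors into a "symmetric" quadratic (perturbations with v1 = v2) and an "antisymmetric" one (v1 = −v2), with coefficients obtained by linearizing the rate and adaptation equations; the function names are illustrative, and the quadratics are written out in the comments so they can be checked against the text.

```python
import cmath
import math


def eigenvalues(Fp, beta=1.1, g=0.5, tau=100.0):
    """Eigenvalues at the uniform equilibrium, given Fp = F'(u_I).

    Assumed factorization of the characteristic polynomial:
      symmetric:      l^2 + (1 + 1/tau + beta/Fp) l + (1 + (beta + g)/Fp)/tau = 0
      antisymmetric:  l^2 + (1 + 1/tau - beta/Fp) l + (1 - (beta - g)/Fp)/tau = 0
    Returns (symmetric_roots, antisymmetric_roots).
    """
    def roots(b, c):
        d = cmath.sqrt(b * b - 4.0 * c)
        return ((-b + d) / 2.0, (-b - d) / 2.0)

    sym = roots(1 + 1 / tau + beta / Fp, (1 + (beta + g) / Fp) / tau)
    anti = roots(1 + 1 / tau - beta / Fp, (1 - (beta - g) / Fp) / tau)
    return sym, anti


def hopf_frequency(beta=1.1, g=0.5, tau=100.0):
    """Imaginary part of the critical pair when F'(u_I) = beta / (1 + 1/tau)."""
    return math.sqrt(g * (tau + 1) / beta - 1) / tau


if __name__ == "__main__":
    for Fp in (100.0, 1.1 / 1.01, 0.6, 0.4):
        sym, anti = eigenvalues(Fp)
        print(f"F'={Fp:.4f}  symmetric={sym}  antisymmetric={anti}")
```

For β = 1.1, g = 0.5, τ = 100 the ratio β/g = 2.2 is far below τ + 1, so the imaginary-axis crossing at F’(uI) = β/(1 + 1/τ) is met before the zero eigenvalue at F’(uI) = β − g as F’(uI) decreases from large values.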

At this point we remind the reader of our assumption of a large time constant value τ. (The competition between the populations in the network comes from the combination of two important ingredients: reciprocal inhibition and the addition of a slow negative feedback process.) Therefore, it makes sense to situate ourselves in the case of β/g ⪡ τ, which implies

$$
\frac{\beta}{1 + 1/\tau} > \beta - g. \tag{3.3}
$$

Inequality (3.3) can be interpreted as stating that the neuronal competition model is (adaptation) feedback-dominated rather than (inhibitory) coupling-dominated.

We will assume in the following that parameters g and τ are fixed and that β is chosen such that (3.3) is true.

Another observation is that the graph of F’ has a well-like shape with positive minimum 1/S’(θ) at u0, as in Figure 2C. Consequently the horizontal line y = β/(1 + 1/τ) intersects it twice (if β/(1 + 1/τ) > 1/S’(θ)), once (for the equality), or not at all (if β/(1 + 1/τ) < 1/S’(θ)). As observed in numerical simulations, in order for the system to oscillate, a sufficiently large inhibition strength has to be considered. Mathematically that reduces to

$$
\frac{\beta}{1 + 1/\tau} > \frac{1}{S'(\theta)}. \tag{3.4}
$$

Thus we are able to characterize the stability of the equilibrium (uI, uI, uI, uI).

Theorem 3.1. The dynamical system (2.1) has a unique equilibrium point with u1 = u2, say, eI = (uI, uI, uI, uI), for any real I. The value uI increases monotonically with I and belongs to the interval (0, 1).

Let us assume that the adaptation-dominance condition (3.3) is true.

(i) If β/(1 + 1/τ) < 1/S’(θ), then eI is asymptotically stable for all I ∈ R.

(ii) If β/(1 + 1/τ) > 1/S’(θ), then there exist exactly two values $u^*_{hb}, u^{**}_{hb} \in (0, 1)$ such that $u^*_{hb} < u_0 < u^{**}_{hb}$ and

$$
F'(u^*_{hb}) = F'(u^{**}_{hb}) = \frac{\beta}{1 + 1/\tau}. \tag{3.5}
$$

The equilibrium point eI is asymptotically stable for all $I < I^*_{hb}$ and $I > I^{**}_{hb}$ and unstable for $I^*_{hb} < I < I^{**}_{hb}$, where $I^*_{hb} = H(u^*_{hb})$ and $I^{**}_{hb} = H(u^{**}_{hb})$, with H defined by (3.1). At $I^*_{hb}$ and $I^{**}_{hb}$ the stability is lost through a pair of purely imaginary eigenvalues.

Proof.

(i) Since F’(uI) ≥ 1/S’(θ) > β/(1 + 1/τ) > β − g for all I, all eigenvalues of the linearized system about eI have negative real part.

(ii) The conclusion is based on the properties of F’, which decreases on interval (0, u0) and increases on (u0, 1) with F’(u0) = min(F’). The sum λ3 + λ4 changes sign from negative to positive when I increases through $I^*_{hb}$ and then back from positive to negative when passing through $I^{**}_{hb}$. For $I \in (I^*_{hb}, I^{**}_{hb})$ at least one real part of λ3 and λ4 is positive, so eI is unstable. At $I^*_{hb}$ and $I^{**}_{hb}$ we have λ3,4 = ±iω. For all other values of I we have λ3 + λ4 < 0, λ3λ4 > 0; so eI is asymptotically stable.

Since the equilibrium point eI becomes unstable through a pair of purely imaginary eigenvalues as I crosses the values $I^*_{hb}$ and $I^{**}_{hb}$, we expect to find there two Hopf bifurcation points. Indeed, in section 3.1 we prove the existence of a supercritical Hopf bifurcation at both $I^*_{hb}$ and $I^{**}_{hb}$ and, as a consequence, the existence of stable oscillatory solutions for system (2.1).
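For the logistic gain the two critical input values can be written in closed form, which gives a quick check of the numerical bifurcation diagram. This is a sketch under the assumption of the logistic gain (2.2), for which F’(u) = 1/(r u (1 − u)); solving F’(u) = c then reduces to the quadratic u(1 − u) = 1/(rc), which has two real roots exactly when c > 4/r = 1/S’(θ). The helper names `level_crossings` and `hopf_points` are hypothetical.

```python
import math


def level_crossings(c, r=10.0):
    """Solve F'(u) = c for the logistic gain; returns (u_low, u_high) or None."""
    disc = 1.0 - 4.0 / (r * c)
    if disc <= 0.0:
        return None                      # the level c lies below the minimum of F'
    s = math.sqrt(disc)
    return (1.0 - s) / 2.0, (1.0 + s) / 2.0


def hopf_points(beta=1.1, g=0.5, tau=100.0, r=10.0, theta=0.2):
    """Pairs (u, I = H(u)) at which F'(u) = beta / (1 + 1/tau)."""
    us = level_crossings(beta / (1.0 + 1.0 / tau), r)
    if us is None:
        return None

    def H(u):
        return theta + math.log(u / (1.0 - u)) / r + (beta + g) * u

    return [(u, H(u)) for u in us]


if __name__ == "__main__":
    print(hopf_points())            # parameters of Figure 3A-G
    print(hopf_points(beta=0.4))    # inhibition too weak: no crossing
```

With the parameter values of Figure 3A–G, the two input values fall between the steady-state examples (I = 0.08 and I = 1.86) and the oscillatory examples (I = 0.5 and I = 1.5) quoted in the figure caption.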

3.1. Normal form for the Hopf bifurcation

In the following we assume that both inequalities (3.3) and (3.4) are true; that is, we take the coupling in (2.1) to be sufficiently strong but still adaptation-dominated.

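Let us use the notation I* for any of the critical values $I^*_{hb}$ and $I^{**}_{hb}$ and similarly the notation u* for $u^*_{hb}$ and $u^{**}_{hb}$. Then, since F’(u*) = β/(1 + 1/τ), the linearization matrix at u* becomes

$$
\begin{pmatrix}
-1 & -(1 + 1/\tau) & -\frac{g}{\beta}(1 + 1/\tau) & 0 \\
-(1 + 1/\tau) & -1 & 0 & -\frac{g}{\beta}(1 + 1/\tau) \\
1/\tau & 0 & -1/\tau & 0 \\
0 & 1/\tau & 0 & -1/\tau
\end{pmatrix}
$$

and it has eigenvalues λ1,2 with Re(λ1,2) < 0 and λ3,4 = ±iω,

$$
\omega = \frac{1}{\tau}\sqrt{\frac{g(\tau + 1)}{\beta} - 1}. \tag{3.6}
$$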

The system (2.1) has an equilibrium eI for any I ∈ R; we translate eI to the origin with the change of variables vj = uj − uI, bj = aj − uI (j = 1, 2) and obtain a system topologically equivalent to (2.1),

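$$
\begin{aligned}
\dot{v}_j &= -v_j - u_I + S\bigl(F(u_I) - \beta v_k - g b_j\bigr), \\
\tau \dot{b}_j &= -b_j + v_j,
\end{aligned}
\qquad j, k \in \{1, 2\},\ k \neq j. \tag{3.7}
$$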

Near u*, u* ≠ u0, we expand the nonlinear terms in (3.7) with respect to uI and obtain for v1 (and similarly for v2) an equation of the form

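$$
\dot{v}_1 = -v_1 - S'(F(u_I))(\beta v_2 + g b_1) + \tfrac{1}{2} S''(F(u_I))(\beta v_2 + g b_1)^2 - \tfrac{1}{6} S'''(F(u_I))(\beta v_2 + g b_1)^3 + \text{h.o.t.} \tag{3.8}
$$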

Here h.o.t. means the higher order terms. The parameter value I* is a possible Hopf bifurcation, so we consider small perturbations about it and about the solution u*. That is, we take

| (3.9) |

where α is the bifurcation parameter and V = (v1, v2, b1, b2)T.

The expansions of the coefficients S(k)(F(uI)), k = 1, 2, 3, …, with respect to take the form , and , where A, B, and D are defined by

| (3.10) |

(see Appendix A for more details). Let us introduce the following notation: for two vectors U = (v1, v2, b1, b2)T and W = (w1, w2, c1, c2)T we define first the quantities , and then, using the scalars from (3.10), we define the operators

Then based on (3.8), (3.9), and (3.10), we write system (3.7) as

The construction of the normal form relies on an algorithm that we describe in Appendix A (see also [4, 5] for a similar approach) and that involves tedious calculations; we present here only the main result.

Theorem 3.2. Let us assume that conditions (3.3) and (3.4) are true (sufficiently strong coupling and adaptation-dominated system), and take I* equal to $I^*_{hb}$ or $I^{**}_{hb}$, as in Theorem 3.1. Then the system (2.1) has in the neighborhood of I* the normal form

| (3.11) |

where z is a complex variable and

with , and A, B, D as in (3.10).

In order to show that and are supercritical Hopf bifurcation points, we need to check that the coefficient of z2z‾ has negative real part, i.e., that .

As we prove in the appendix, for our range of parameters β, g, and τ, the first term in has indeed positive real part (see (A.3)); on the other hand, the real part of the second term (see (A.4)) is larger than

Remark 3.1. The inverse of function S defined by (2.2) satisfies the condition

| (3.12) |

Moreover, the inequality (3.12) is true not only for u* but for all u ∈ (0, 1).

We use this observation to state our next result.

Theorem 3.3. Let us assume the same hypotheses as in Theorem 3.2. Given the property (3.12) for the gain function S at u*, the input value I* is a supercritical Hopf bifurcation point for system (2.1). The stable limit cycle occurs on the left side of $I^{**}_{hb}$ (that is, for $I < I^{**}_{hb}$ sufficiently close to $I^{**}_{hb}$) and on the right side of $I^*_{hb}$.

Proof. The Hopf bifurcation is supercritical since in the normal form; the nondegeneracy condition is Re(Aφ) = Aβ/2 ≠ 0.

The sign of A is opposite to the sign of F”(u*), so A > 0 for $u^* = u^*_{hb}$ and A < 0 for $u^* = u^{**}_{hb}$. Consequently the sign of Re(Aφ(I − I*)) that shows the direction of limit cycle bifurcation is positive for $I > I^*_{hb}$ and $I < I^{**}_{hb}$.

Remark 3.2. It is possible to obtain supercritical Hopf bifurcation points for other types of gain-function than (3.12), as long as has positive value. When (3.12) is not valid, the sign of will be computed directly from the definition formula of .

3.2. Antiphase oscillations

The stable limit cycle that exists in the neighborhood of the bifurcation points $I^*_{hb}$ (for $I > I^*_{hb}$) and $I^{**}_{hb}$ (for $I < I^{**}_{hb}$) is a periodic solution L1(t) = (u1(t), u2(t), a1(t), a2(t)) of period, say, T. Due to the symmetry of the system (2.1) with respect to the group Γ = {14, γ}, where 14 is the 4-by-4 identity matrix and

$$
\gamma =
\begin{pmatrix}
0 & 1 & 0 & 0 \\
1 & 0 & 0 & 0 \\
0 & 0 & 0 & 1 \\
0 & 0 & 1 & 0
\end{pmatrix},
$$

L2(t) = γL1(t) = (u2(t), u1(t), a2(t), a1(t)) is also a periodic solution for (2.1). L2 results automatically from solution L1 by relabeling the two populations of the network. Moreover, it belongs to the same neighborhood of the equilibrium point as L1 does.

Since the limit cycle born through the Hopf bifurcation is unique, the corresponding phase space trajectories of L1 and L2 coincide. Therefore, there exists a phase shift α0 ∈ [0, T) such that L2(t) = L1(t + α0). This implies u2(t) = u1(t + α0) and u1(t) = u2(t + α0), i.e., u1(t) = u1(t + 2α0) for all real t [12]. The phase shift α0 needs to satisfy α0 = kT/2 with k an integer, so k is either k = 0 or k = 1. If k = 0, the two populations in the network will oscillate in synchrony. If k = 1, we have u2(t) = u1(t + T/2) and a2(t) = a1(t + T/2), which means an antiphase oscillation.

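Let us assume for the moment that k = 0. Then U(t) = (u1(t), u1(t), a1(t), a1(t)) is a periodic solution of (2.1), and, as a consequence, (u1(t), a1(t)) is a periodic solution of the two-dimensional system

$$
\dot{u} = -u + S(I - \beta u - g a), \qquad \tau \dot{a} = -a + u.
$$

This contradicts Bendixson’s criterion: the expression

$$
\frac{\partial}{\partial u}\bigl[-u + S(I - \beta u - g a)\bigr] + \frac{\partial}{\partial a}\left[\frac{u - a}{\tau}\right] = -1 - \beta S'(I - \beta u - g a) - \frac{1}{\tau}
$$

is always negative, so our initial assumption must be false.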

Excluding the first case, the limit cycle has to be an antiphase solution of (2.1): the two populations compete indeed for the active state (see, for example, Figure 3B or 3D).

We state our conclusion in the following theorem.

Theorem 3.4. Let us assume that conditions (3.3) and (3.4) are true and the coefficient in the normal form (3.11) has positive real part. Then the stable limit cycle obtained at the supercritical Hopf bifurcation (as I crosses either $I^*_{hb}$ or $I^{**}_{hb}$) corresponds to an antiphase oscillation: the limit cycle of period T satisfies u2(t) = u1(t + T/2) and a2(t) = a1(t + T/2) for any real t.
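The antiphase relation can be checked on a simulated trajectory. The sketch below is an illustration only: it assumes the form of (2.1) implied by the equilibrium conditions of section 3 and the logistic gain, integrates with a fixed-step RK4 scheme at I = 1.5 (the value of Figure 3B, which lies well inside the oscillatory range rather than near a Hopf point), estimates the period from upward zero crossings of u1 − u2, and then measures the mean of |u2(t) − u1(t + T/2)|. The function names, step size, and transient length are arbitrary choices.

```python
import math


def S(x, r=10.0, theta=0.2):
    """Logistic gain (assumed form of (2.2))."""
    return 1.0 / (1.0 + math.exp(-r * (x - theta)))


def step(y, I, dt, beta=1.1, g=0.5, tau=100.0):
    """One RK4 step of the assumed model (2.1); y = [u1, u2, a1, a2]."""
    def f(s):
        u1, u2, a1, a2 = s
        return [-u1 + S(I - beta * u2 - g * a1),
                -u2 + S(I - beta * u1 - g * a2),
                (u1 - a1) / tau,
                (u2 - a2) / tau]

    def axpy(s, k, h):
        return [si + h * ki for si, ki in zip(s, k)]

    k1 = f(y)
    k2 = f(axpy(y, k1, dt / 2))
    k3 = f(axpy(y, k2, dt / 2))
    k4 = f(axpy(y, k3, dt))
    return [yi + dt * (a + 2 * b + 2 * c + d) / 6
            for yi, a, b, c, d in zip(y, k1, k2, k3, k4)]


def antiphase_error(I=1.5, dt=0.1, t_end=3000.0, t_skip=1000.0):
    """Return (estimated period, mean |u2(t) - u1(t + T/2)|) after a transient."""
    y = [0.6, 0.4, 0.0, 0.0]
    u1s, u2s = [], []
    for n in range(int(round(t_end / dt))):
        y = step(y, I, dt)
        if n * dt >= t_skip:
            u1s.append(y[0])
            u2s.append(y[1])
    # sample indices where u1 - u2 crosses zero from below
    ups = [k for k in range(1, len(u1s))
           if u1s[k - 1] - u2s[k - 1] < 0 <= u1s[k] - u2s[k]]
    period = (ups[-1] - ups[0]) / (len(ups) - 1)      # measured in samples
    half = int(round(period / 2))
    m = len(u1s) - half
    err = sum(abs(u2s[k] - u1s[k + half]) for k in range(m)) / m
    return period * dt, err


if __name__ == "__main__":
    T, err = antiphase_error()
    print(f"estimated period T = {T:.1f}, mean antiphase mismatch = {err:.4f}")
```

A small mismatch is consistent with u2(t) = u1(t + T/2); a synchronous solution would instead give u1(t) = u2(t) and no zero crossings of u1 − u2 at all.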

3.3. Multiple equilibria for large enough inhibition

Our local analysis shows how stable oscillations occur in system (2.1)—through a Hopf bifurcation. The uniform equilibrium point eI has four eigenvalues, λ1 and λ2 with negative real part independent of I (Re(λ1,2) < 0), and λ3 and λ4 that can cross the imaginary axis. Besides Hopf, another type of local bifurcation appears in (2.1) when one of the eigenvalues λ3, λ4 takes zero value, that is, when F’(uI) = β − g. Because of the system’s symmetry we expect it to be a pitchfork bifurcation.

Numerical simulations of system (2.1) reveal indeed the existence of additional equilibrium points. However, they exist for stronger (Figure 3G, β = 1.1) but not for weaker inhibition (Figure 3I, β = 0.75). We explain analytically how that happens.

Theorem 3.5.

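(i) If β − g < 1/S’(θ), then the dynamical system (2.1) has a unique equilibrium point for all real I and this is eI = (uI, uI, uI, uI).

(ii) For strong inhibition,

$$
\beta - g > \frac{1}{S'(\theta)}, \tag{3.13}
$$

there are exactly two values, say, $u^*_{pf}, u^{**}_{pf} \in (0, 1)$, such that $u^*_{pf} < u_0 < u^{**}_{pf}$ and

$$
F'(u^*_{pf}) = F'(u^{**}_{pf}) = \beta - g. \tag{3.14}
$$

At $I^*_{pf} = H(u^*_{pf})$ and $I^{**}_{pf} = H(u^{**}_{pf})$, with H defined by (3.1), the equilibrium point eI has a zero eigenvalue.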

Proof. The condition that characterizes the equilibrium points of (2.1) is equivalent to G(u1) = G(u2) = I − β(u1 + u2), where we define G by G(u) = F(u) + (g − β)u, u ∈ (0, 1). We have limu→0 G(u) = −∞, limu→1 G(u) = ∞, and G’(u) = F’(u) + g − β ≥ F’(u0) + g − β = 1/S’(θ) + g − β. Obviously, based on hypothesis (i), we have G’(u) > 0. Therefore, G is a monotonically increasing function; so it is injective, and the conclusion follows immediately. Statement (ii) results from the shape of F’.
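The uniqueness statement can be illustrated by counting equilibria numerically. The sketch below assumes the logistic gain and uses the equilibrium conditions F(u1) = I − βu2 − gu1 and F(u2) = I − βu1 − gu2: the first is solved for u2 as a function of u1, and sign changes of the residual of the second are counted on a uniform grid. The function `count_equilibria` and the grid size are illustrative; a grid scan like this can miss roots that lie very close to the boundary of the unit square, so it is meant for moderate values of I only.

```python
import math


def F(u, r=10.0, theta=0.2):
    """Inverse of the logistic gain (assumed form of (2.2))."""
    return theta + math.log(u / (1.0 - u)) / r


def count_equilibria(I, beta, g=0.5, n=20000):
    """Count equilibria of (2.1) by scanning u1 over a grid in (0, 1)."""
    count, prev = 0, None
    for k in range(1, n):
        u1 = k / n
        u2 = (I - g * u1 - F(u1)) / beta        # from F(u1) = I - beta*u2 - g*u1
        if not 0.0 < u2 < 1.0:
            prev = None                         # outside the admissible square
            continue
        res = F(u2) + g * u2 + beta * u1 - I    # residual of F(u2) = I - beta*u1 - g*u2
        if res == 0.0:
            count += 1                          # grid point is exactly a root
            prev = None
            continue
        if prev is not None and prev * res < 0:
            count += 1                          # sign change brackets a root
        prev = res
    return count


if __name__ == "__main__":
    print("beta = 0.75:", count_equilibria(1.0, 0.75))
    print("beta = 1.10:", count_equilibria(1.0, 1.1))
```

With g = 0.5 and 1/S’(θ) = 0.4, the value β = 0.75 satisfies hypothesis (i), while β = 1.1 satisfies the strong-inhibition condition, which matches the contrast between Figures 3I and 3G.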

By constructing the normal form of the system around the bifurcation point $I^*_{pf}$ or $I^{**}_{pf}$, we prove the existence of a subcritical pitchfork bifurcation. Therefore, in the neighborhood of $I^*_{pf}$ or $I^{**}_{pf}$ the system (2.1) has multiple (three) equilibria. However, since the pitchfork is subcritical, the two newly born equilibrium points are unstable. In the four-dimensional eigenspace they actually possess two unstable modes (see Remark 3.4).

In some cases these nonuniform equilibria (having u1 ≠ u2) might change their stability for I between $I^*_{pf}$ and $I^{**}_{pf}$. Depending on the initial condition a trajectory will be attracted either to the fixed point with u1 > u2 or to that with u1 < u2, so one population is dominant and the other is suppressed forever. We call this type of dynamics in (2.1) winner-take-all behavior. The issue of the existence of the winner-take-all regime will be discussed separately in section 4.2. In this section we focus only on the mechanism that introduces additional equilibrium points to system (2.1).

Theorem 3.6. Let us assume β − g > 1/S’(θ) and take $u^\circ \in \{u^*_{pf}, u^{**}_{pf}\}$ as in (3.14), I° = F(u°) + (β + g)u°. Then the system (2.1) has in the neighborhood of I° the normal form

| (3.15) |

Moreover, if the gain function S satisfies (3.12) at u°, then I° is a subcritical pitchfork bifurcation point for the system (2.1). Two additional unstable equilibrium points occur on the left side of $I^{**}_{pf}$ and on the right side of $I^*_{pf}$.

Proof. The construction of the normal form is sketched in Appendix B. Since (3.12) is true, the coefficient of z3 in the normal form is positive and the pitchfork is subcritical. Additional equilibrium points appear for (I − I°)F”(u°) < 0 with F”(u°) negative at $u^*_{pf}$ and positive at $u^{**}_{pf}$.

Remark 3.3. In the case of adaptation-dominated systems (when condition (3.3) or, equivalently, β/g < τ + 1 is true) we conclude the following: (1) for weak inhibition, β < (1 + 1/τ)/S’(θ), system (2.1) has a unique equilibrium point eI which is asymptotically stable for all I; (2) for intermediate values of inhibition, (1 + 1/τ)/S’(θ) < β < g + 1/S’(θ), the system still has a unique equilibrium point for all I but this becomes unstable in the interval ($I^*_{hb}$, $I^{**}_{hb}$). However, in order to obtain this case we need to properly adjust the maximum gain to the adaptation parameters (i.e., we need S’(θ) > 1/(τg)); (3) for strong inhibition, β > g + 1/S’(θ), additional equilibrium points occur in system (2.1) for $I > I^*_{pf}$ and $I < I^{**}_{pf}$.

Remark 3.4. In the case of strong inhibition and an adaptation-dominated system we obtain $u^*_{hb} < u^*_{pf} < u_0 < u^{**}_{pf} < u^{**}_{hb}$ and

$$
I^*_{hb} < I^*_{pf} < I_0 < I^{**}_{pf} < I^{**}_{hb}.
$$

(Note that I0 = H(u0) is independent of β.) At each I between $I^*_{hb}$ and $I^{**}_{hb}$, the equilibrium point eI has at least one eigenvalue of positive real part. In fact for $I \in (I^*_{hb}, I^*_{pf}) \cup (I^{**}_{pf}, I^{**}_{hb})$ it has exactly two eigenvalues with positive real part and for $I \in (I^*_{pf}, I^{**}_{pf})$ it has only one eigenvalue with positive real part. Due to the multidimensionality of the eigenspace, at $I^*_{pf}$ and $I^{**}_{pf}$ the equilibrium eI does not actually change its stability (even if an eigenvalue takes zero value). Instead two new (nonuniform) equilibria are born (e.g., Figure 3G). The two nonuniform equilibria inherit the number of unstable modes from their “parent” fixed point, which means they have exactly two unstable modes.

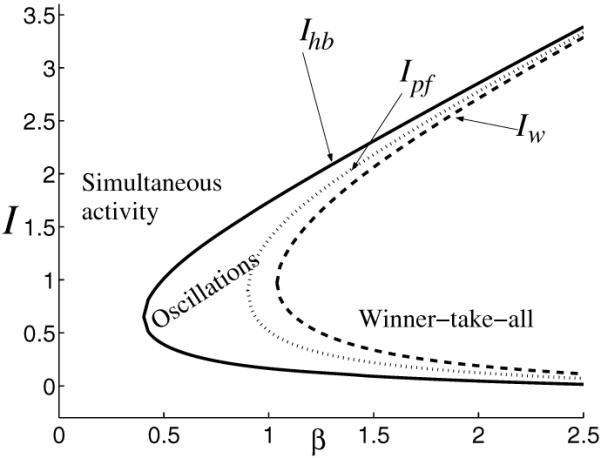

As we see, condition (3.13) is necessary but not sufficient to obtain a winner-take-all behavior in system (2.1). In section 4.2, equations (4.11) and (4.10), we will determine the minimum value of β for which winner-take-all exists (βwta) and the corresponding values , where transition from oscillation to winner-take-all dynamics takes place. We summarize all these results in Figure 4 by drawing the bifurcation diagram in the parameter plane (I, β).

Figure 4.

Dynamical regimes in system (2.1) as inhibition strength β and stimulus strength I vary (other parameters are fixed: g = 0.5, τ = 100, r = 10, θ = 0.2). To the left of curve Ihb (solid line) the system has a unique stable equilibrium, and this satisfies u1 = u2 (simultaneous activity); in the region between curves Ihb and Iw (dashed line) the system oscillates; then to the right of Iw the system has a winner-take-all behavior (two stable and one unstable equilibria). The curve Ipf (dotted line) indicates a transition from one equilibrium to multiple equilibria in (2.1). While the attractor’s type (limit cycle) does not change between Ihb and Iw, the number of equilibria does: we find one unstable equilibrium between Ihb, Ipf and three unstable equilibria between Ipf, Iw. The turning points of curves Ihb, Ipf, and Iw are obtained at β = (1 + 1/τ)/S’(θ) = 0.404, β = g + 1/S’(θ) = 0.9, and βwta = 1.0387, respectively.

4. Release, escape, and winner-take-all mechanisms in neuronal competition models

As we mentioned in section 1, some common features are observed for a large class of neuronal competition models based on mutual inhibition and slow negative feedback process. An important example is the nonmonotonic dependency of the rivalry-oscillation’s period T on the stimulus strength I: in a range of small values for I the period increases with input strength; however, there exists another range for I, at larger values, where the period decreases with stimulus strength. These two dynamical regimes are usually separated by another one that is nonoscillatory; it occurs for sufficiently strong inhibition and corresponds to winner-take-all behavior (see Figures 3F and 3H).

The goal of this section is to characterize the mechanisms underlying the above dynamical scheme. We aim to understand what causes the two opposite rivalry dynamics: as we will see, a release mechanism is associated with the increasing branch of the T versus I curve (region IV in Figure 3F); on the other hand, an escape mechanism is responsible for the decreasing branch of the T versus I curve (region II in Figure 3F).

The terms release and escape were previously introduced by [36] for inhibition-mediated rhythmic patterns in thalamic model neurons and then extended and refined by [32] for Morris–Lecar equations. Most cases include an autocatalytic process either intrinsic by voltage-gated persistent inward currents or synaptic by intrapopulation recurrent excitation. In neuronal competition model (2.1) mutual inhibition plays the role of autocatalysis: one population inhibits the network partner that inhibited it; thus the combination of these two negative factors has a positive effect on its own activity. Rhythmicity is obtained due to a fast positive feedback (disinhibition) and a slow negative feedback process. The slow negative feedback process can be either an intrinsic property of the neuronal populations (e.g., spike frequency adaptation as in (2.1)) or a property of the inhibitory connections between them (e.g., synaptic depression). A simplified model similar to (2.1) but with synaptic depression and a Heaviside step-gain function was analytically investigated in [33]. Numerical results for models with synaptic depression and smooth sigmoid gain functions were also reported in [33] and [31].

In the context of neuronal competition models we define release and escape mechanisms as follows: The two populations in the network oscillate in antiphase competing for the active state; for the dominant population of variable, say, u1, the net input I − βu2 − ga1 decreases as the slow negative feedback accumulates; on the contrary, for the suppressed population u2 the feedback recovers (decays) so the net input I − βu1 − ga2 increases. However, since function S is highly nonlinear, equal changes in the net input I − βuj − gak of both populations can lead to drastically different changes in the corresponding effective response S(I − βuj − gak). This transformation has a direct influence on the variation of u1 and u2. The switch in dominance is due either to a significant, more abrupt change (decrease) in the response to an input to the dominant population, or, on the contrary, to a significant change (increase) in the response to an input to the suppressed population. In the first case the dominant population loses control, its activity drops, and it no longer suppresses its competitor, which becomes active. We call this mechanism release. In the latter case, when the input-output function S of the suppressed population changes faster, this population regains control, its activity rises, and it forces its competitor into the inhibited state. We call this mechanism escape.

Intuitively, escape occurs for higher stimulus ranges than release. Therefore, we expect that an escape (release) mechanism underlies the dynamics in region II (region IV) in Figure 3F with decreasing (increasing) I-T curve. For large values of I the gain function for the dominant population is relatively constant and close to 1 while that for the suppressed population falls in the interval where it is steeper. For example, let us consider the fast plane (u1, u2) and assume that u1 is ON and u2 is OFF; then the dominance switching point is on the shallow part of the active population nullcline u1 = S(I − βu2 − ga1) and on the steeper part of the down population nullcline u2 = S(I − βu1 − ga2) (see the animation 70584_01.gif [3.7MB] in Appendix C). A larger variation in u2 than in u1 is expected, and that corresponds to the escape mechanism. For small values of I the gain function for the dominant population is steeper (the steeper part of the active population nullcline u1 = S(I − βu2 − ga1)), while the gain function for the suppressed population is relatively constant and close to 0 (the shallow part of the down population nullcline u2 = S(I − βu1 − ga2)); see the animation 70584_02.gif [3.8MB] in Appendix C. That is what we call release.

For sufficiently large inhibition β, at intermediate I the effective response to an input to both populations might end up being relatively constant: closer to 1 for the active population and closer to 0 for the down population. The switching does not take place anymore; instead a winner-take-all dynamics is obtained.

In the following we investigate analytically how the period of oscillations for system (2.1) depends on the input strength and show that the increasing (decreasing) branch of the I-T curve is associated with the release (escape) mechanism. Our analysis is done in two steps: first, in section 4.1, we consider the limiting case sigmoid function S(x) = Heav(x − θ) such that S(x) = 0 if x < θ and S(x) = 1 if x > θ; the function does not obey the hypotheses in section 2, but it provides a useful example where escape, release, and winner-take-all dynamics are easily characterized. Then in section 4.2 we return to the case of smooth sigmoid function and describe the notions defined above in this more general context. We find a precise mathematical characterization for the minimum value of β and then for the corresponding input values, say, and , where the winner-take-all regime appears. In the absence of a winner-take-all regime we provide a mathematical definition for the transition between escape and release.

4.1. A relevant example: The Heaviside step function

The choice of S(x) = Heav(x − θ) allows us to determine completely the intervals of the stimulus strength I where oscillations and winner-take-all dynamics exist. For system (2.1) with a Heaviside step function, there are only four possible equilibrium points: (1, 1, 1, 1), (1, 0, 1, 0), (0, 1, 0, 1), and (0, 0, 0, 0). Here (1, 1, 1, 1) and (0, 0, 0, 0) correspond respectively to the simultaneously high and low activity states observed in numerical simulations in regions I and V of Figure 3F. Oscillations can occur only between the states (u1, u2) = (1, 0) and (u1, u2) = (0, 1) in which one population is dominant and the other one is suppressed. Let us now determine the necessary and sufficient conditions for the oscillations to exist. The idea used in our analysis is similar to that in [17] and [33].

In the fast plane, the nullclines of u1 and u2 consist of two constant plateaus of zero and unit value discontinuously connected at a “threshold” point (I − (θ + ga1))/β and (I − (θ + ga2))/β, respectively (Figure 5A). During oscillation, due to the change in slow variables a1 and a2 these thresholds move along the vertical and horizontal axes. For example, assuming u1 = 1, u2 = 0, the slow equations become $\tau\,da_1/dt = 1 - a_1$ and $\tau\,da_2/dt = -a_2$; thus the u1-nullcline moves down while the u2-nullcline moves to the right. If these nullclines slide enough and the thresholds cross either 0 (for the u1-nullcline) or 1 (for the u2-nullcline), i.e., either a1J = (I − θ)/g or a2J = (I − (θ + β))/g is reached, then the equilibrium point (u1, u2) = (1, 0) disappears and the system will be attracted to (u1, u2) = (0, 1). The switch takes place and the slow equations change to $\tau\,da_1/dt = -a_1$, $\tau\,da_2/dt = 1 - a_2$, now pushing the nullclines in opposite directions. As we explain below, depending on which of the two jumping values a1J or a2J is reached first, a release or an escape mechanism will underlie the oscillation.

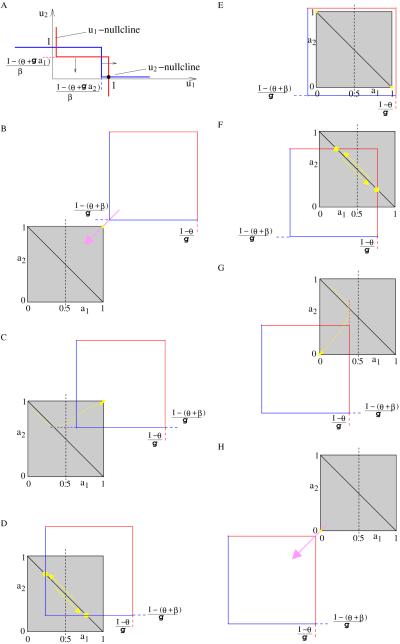

Figure 5.

System (2.1)’s dynamics for large inhibition strength (β/g > 1) and Heaviside step function. (A) Nullclines in the plane of fast variables (u1, u2); (B–H) System’s dynamics in the slow variables plane (a1, a2) for: (B) I ≥ θ+β+g; (C) θ+β+g/2 ≤ I < θ+β+g; (D) θ+β < I < θ+β+g/2; (E) θ+g ≤ I ≤ θ+β; (F) θ + g/2 < I < θ + g; (G) θ ≤ I ≤ θ + g/2; (H) I < θ.

We note that for an oscillatory solution, u1 + u2 = 1 always, and so $\tau\,d(a_1 + a_2)/dt = 1 - (a_1 + a_2)$. Asymptotically, the slow dynamics will occur along the diagonal a1 + a2 = 1 of the unit square (Figure 5D or 5F). The position of the horizontal line a2 = (I − (θ + β))/g and of the vertical line a1 = (I − θ)/g relative to the unit square is important when the trajectory points to the lower-right corner; on the other hand, when the trajectory points to the upper-left corner, the position of the vertical line a1 = (I − (θ + β))/g and of the horizontal line a2 = (I − θ)/g will matter. Therefore, in the slow plane (a1, a2) (see Figure 5B–5H) we are interested in the intersection of the unit square (grey) with the square defined by the possible jumping values (I − (θ + β))/g (blue) and (I − θ)/g (red). If the red line is reached first, then the active cell (uj = 1) becomes suddenly inactive (uj = 0), allowing its competitor to go up. That is why we call this case a release mechanism; otherwise, if the blue line is reached first, then the suppressed cell becomes suddenly active, forcing its competitor to go down. That is the escape mechanism.

As parameter I decreases, the blue-red square slides down along the first diagonal, starts to intersect the grey unit square, and then leaves it (Figure 5B–5H). The way those two squares intersect determines the system’s dynamics, so we need to take into account the relative size of their sides: 1 and β/g.

Theorem 4.1 (five modes of behavior for large enough inhibition strength). Let us assume that β/g > 1. The following five dynamical regimes exist for the neuronal competition model (2.1) with S(x) = Heav(x − θ).

(i) If I ≥ θ + β + g/2, then the system’s attractor is the simultaneously high activity state u1 = u2 = 1.

(ii) If θ + β < I < θ + β + g/2, then the system oscillates between the states (u1, u2) = (1, 0) and (u1, u2) = (0, 1) due to an escape mechanism. The period of oscillations decreases with I and satisfies

$$T = 2\tau \ln\frac{g - (I - \theta - \beta)}{I - \theta - \beta}. \tag{4.1}$$

(iii) If θ + g ≤ I ≤ θ + β, then the system is in a winner-take-all regime with fast variables either (1, 0) or (0, 1) depending on the choice of initial condition.

(iv) If θ + g/2 < I < θ + g, then the system oscillates between the states (u1, u2) = (1, 0) and (u1, u2) = (0, 1) due to a release mechanism. The period of oscillations increases with I and satisfies

$$T = 2\tau \ln\frac{I - \theta}{g - (I - \theta)}. \tag{4.2}$$

(v) If I ≤ θ + g/2, then the system’s attractor is the simultaneously low activity state u1 = u2 = 0.
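The five regimes of Theorem 4.1 can be sketched as a small classifier. This is only an illustration: the function names `heaviside_regime` and `heaviside_period` are hypothetical, and the period expressions are the ones obtained in the proof, T = 2τ ln(ai/af) for escape and T = 2τ ln((1 − ai)/(1 − af)) for release.

```python
import math


def heaviside_regime(I, beta, g, theta):
    """Return the regime of Theorem 4.1 for input I (assumes beta / g > 1)."""
    if beta <= g:
        raise ValueError("Theorem 4.1 assumes beta / g > 1")
    if I >= theta + beta + g / 2:
        return "high"             # (i)   u1 = u2 = 1
    if I > theta + beta:
        return "escape"           # (ii)  oscillation, T decreases with I
    if I >= theta + g:
        return "winner-take-all"  # (iii) fast variables (1, 0) or (0, 1)
    if I > theta + g / 2:
        return "release"          # (iv)  oscillation, T increases with I
    return "low"                  # (v)   u1 = u2 = 0


def heaviside_period(I, beta, g, theta, tau):
    """Oscillation period in the escape/release regimes, otherwise None."""
    regime = heaviside_regime(I, beta, g, theta)
    if regime == "escape":
        a_f = (I - theta - beta) / g   # jump value on the blue line; a_i = 1 - a_f
        return 2 * tau * math.log((1 - a_f) / a_f)
    if regime == "release":
        a_f = (I - theta) / g          # jump value on the red line; a_i = 1 - a_f
        return 2 * tau * math.log(a_f / (1 - a_f))
    return None
```

With the parameters of Figure 4 (β = 1.1, g = 0.5, θ = 0.2, τ = 100), I = 1.5 falls in the escape regime and I = 0.6 in the release regime.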

Proof. Since β/g > 1, there are exactly seven relative positions of the blue-red square to the unit square that lead to conclusions (i) to (v).


For 1 ≤ (I − (θ + β))/g (Figure 5B) both a1, a2 are smaller than (I − (θ + β))/g, so I − βui − gak ≥ I − β − g ≥ θ, and we always have u1 = u2 = 1.

If 1/2 ≤ (I − (θ + β))/g < 1 < (I − θ)/g (Figure 5C), let us assume u1 = 1, u2 = 0, and a1 + a2 = 1 as initial values. The slow variable a1 increases while a2 decreases; in this case only a2 can cross the horizontal blue line, producing the jump of u2 from 0 to 1. However, just after the jump, in the equation of u1 we have I − βu2 − ga1 = I − β − g(1 − a2J) = 2(I − (β + θ)) − g + θ ≥ θ, which keeps u1 at its value 1. Therefore, the point will be attracted to the corner (a1, a2) = (1, 1) and the oscillation dies.

For 0 < (I − (θ + β))/g < 1/2 < 1 < (I − θ)/g (Figure 5D), a2 will first cross the blue line and induce a sudden change in u2. Then in the u1-equation we obtain I − βu2 − ga1 = I − β − g(1 − a2J) = 2(I − (β + θ)) − g + θ < θ, which forces u1 to take zero value. The fast system switches from (1, 0) to (0, 1) and the slow dynamics changes its direction of movement along the upper-left–lower-right diagonal of the unit square. The change I − βu1 − ga2 = I − ga2 ≥ I − g ≥ θ does not affect the new value u2 = 1; oscillation exists indeed and its projection on the slow plane is the segment defined by the intersection of the unit square’s secondary diagonal with the two blue lines. When I decreases, the length of this segment increases, so it will take longer to go from one endpoint to the other. We expect the period T to increase as I decreases. Indeed, at the jumping point, the slow variable for the suppressed population takes the value af ≔ a2J = (I − (θ + β))/g; however, just after the previous jump it was ai ≔ 1 − a1J = 1 − (I − (θ + β))/g. Therefore, from $\tau\,da/dt = -a$ we compute the solution $a(t) = a_i e^{-t/\tau}$, t ∈ (0, T/2). The period T of oscillation is T = 2τ ln(ai/af), i.e., exactly (4.1). Moreover, dT/dI < 0, so T decreases with I.

By choosing I such that (I − (θ + β))/g < 0 < 1 ≤ (I − θ)/g, the unit square falls completely inside the blue-red square (Figure 5E). Starting at u1 = 1, u2 = 0 we have I − βu2 − ga1 = I − ga1 ≥ I − g ≥ θ and I − βu1 − ga2 = I − β − ga2 ≤ I − β < θ; the slow variables a1 and a2 continue to increase, respectively, decrease, approaching the lower-right corner of the unit square, and a winner-take-all state is achieved. For u1 = 0, u2 = 1, the point (a1, a2) will be attracted to the upper-left corner.

Decreasing I even more, we enter the region (I − (θ + β))/g < 0 < 1/2 < (I − θ)/g < 1 (Figure 5F). Here the threshold is crossed at the red line and (when u1 = 1, u2 = 0) u1 jumps from 1 to 0. In the u2-equation, the expression I − βu1 − ga2 becomes I − g(1 − a1J) = 2(I − θ) − g + θ ≥ θ, which leads to u2 = 1. That is the release mechanism. In the slow plane (a1, a2) the point changes its direction of movement; the oscillation occurs along the segment defined by the intersection of the unit square’s secondary diagonal with the two red lines. The length of this segment decreases as I decreases; it takes less time to go from one endpoint to the other, so we expect the period T to decrease. Indeed, for the release mechanism, af ≔ a1J = (I − θ)/g, with value just after the previous jump ai ≔ 1 − a2J = 1 − (I − θ)/g. The slow differential equation is now $\tau\,da/dt = 1 - a$, that is, $a(t) = 1 - (1 - a_i)e^{-t/\tau}$, t ∈ (0, T/2). The period T of oscillation satisfies T = 2τ ln((1 − ai)/(1 − af)), or (4.2). Obviously dT/dI > 0.

Oscillations cannot exist anymore for (I − (θ + β))/g < 0 ≤ (I − θ)/g ≤ 1/2 (Figure 5G). Say that, again, we have u1 = 1, u2 = 0: only a1 can reach the threshold (red) line for u1 to become inactive. However, just after the jump, in the u2-equation the net input I − βu1 − ga2 = I − g(1 − a1J) = 2(I − θ) − g + θ is still less than θ, and it keeps u2 at zero. Both slow variables start to decrease, approaching the corner (a1, a2) = (0, 0). The oscillation dies, and the fast system has (0, 0) as a single attractor. For even smaller values of input strength, (I − (θ + β))/g < (I − θ)/g < 0 (Figure 5H), it is true that I − βui − gak ≤ I < θ and u1 = u2 = 0.
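The claim that the escape oscillation settles on the diagonal a1 + a2 = 1 can be checked with a half-cycle map. The sketch below is an illustration under stated assumptions, not part of the proof: it works in the singular limit where the fast variables switch instantly, starts just after a switch (so the newly dominant population has adaptation equal to the jump value b = (I − (θ + β))/g), and uses hypothetical names `escape_half_cycle_map` and `iterate_escape`. If x is the adaptation of the suppressed population right after a switch, it decays to b in time τ ln(x/b), during which the dominant one rises from b to 1 − (1 − b)b/x.

```python
import math


def escape_half_cycle_map(x, b):
    """Adaptation of the next suppressed population right after the next switch."""
    return 1.0 - (1.0 - b) * b / x


def iterate_escape(I, beta, g, theta, tau, x0=0.9, n_switches=60):
    """Iterate the half-cycle map; return the limiting start value and the period."""
    b = (I - theta - beta) / g            # jump value on the blue line
    if not 0.0 < b < 0.5:
        raise ValueError("escape oscillations need 0 < (I - theta - beta)/g < 1/2")
    if not b < x0 <= 1.0:
        raise ValueError("x0 must lie in (b, 1]")
    x = x0
    for _ in range(n_switches):
        x = escape_half_cycle_map(x, b)
    # each half-cycle lasts tau * ln(x / b); the period is two half-cycles
    return x, 2.0 * tau * math.log(x / b)
```

The map has fixed points x = b and x = 1 − b; its slope at x = 1 − b is b/(1 − b) < 1, so the iteration converges to ai = 1 − b and the period to 2τ ln(ai/af), in agreement with the proof.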

Remark 4.1. We note that in the escape regime, T → 0 as I → θ + β + g/2 and T → ∞ as I → θ + β. Similarly, in the release regime, T → 0 as I → θ + g/2 and T → ∞ as I → θ + g.

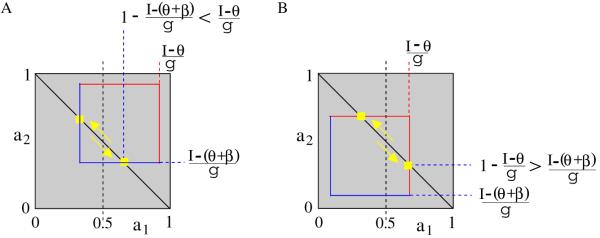

Case (iii) in Theorem 4.1 (case (E) in Figure 5) is not possible if the side β/g of the blue-red square is smaller than 1. Therefore, the winner-take-all dynamics is eliminated. On the other hand, the blue-red square can lie completely inside the unit square. Then we need to distinguish between two cases: the point moving along the secondary diagonal of the unit square may first reach the blue line (Figure 6A) or the red line (Figure 6B).

Figure 6.

(A) Escape and (B) release mechanisms for low inhibition strength (β/g < 1) in system (2.1) with Heaviside step function.

Theorem 4.2 (no winner-take-all regime for low inhibition strength). Let us assume that β/g < 1. Then the neuronal competition model (2.1) with S(x) = Heav(x − θ) exhibits only four dynamical regimes.

If I ≥ θ + β + g/2, then the system’s attractor is the high activity state u1 = u2 = 1. Similarly, if I ≤ θ + g/2, the system’s attractor is the low activity state u1 = u2 = 0.

For intermediate values of input strength, the system oscillates between the states (u1, u2) = (1, 0) and (u1, u2) = (0, 1). If θ + (β + g)/ 2 < I < θ + β + g/2, oscillations occur due to an escape mechanism; the period T decreases with I and satisfies (4.1). If θ + g/2 < I < θ + (β + g)/2, then a release mechanism underlies the oscillations and T increases with I according to (4.2).

Moreover, at ITmax = θ + (β + g)/2 the system has maximum oscillation period

$$T_{\max} = 2\tau \ln\frac{g + \beta}{g - \beta}.$$

Proof. Let us assume again initial conditions u1 = 1, u2 = 0, and a1 + a2 = 1; therefore, a1 increases and a2 decreases. In order to first reach the blue line (Figure 6A), the inequality 1 − (I − (θ + β))/g < (I − θ)/g, i.e., I > ITmax, must be true. When the blue line is reached, the down variable u2 switches from 0 to 1. If (I − (θ + β))/g < 1/2, the net input for u1 becomes Ne ≔ I − βu2 − ga1 = I − β − g(1 − a2J) = 2(I − β − θ) − g + θ < θ, so u1 changes to 0 and oscillation occurs due to an escape mechanism. If (I − (θ + β))/g ≥ 1/2, then Ne ≥ θ and u1 remains 1; the fast system has (1, 1) as an attractor. Similar arguments are used for the release mechanism; the condition for intersection with the red line (Figure 6B) is equivalent to the inequality 1 − (I − θ)/g > (I − (θ + β))/g, i.e., I < ITmax. For both (4.1) and (4.2), T → Tmax as I → θ + (β + g)/2. Also, Tescape → 0 as I → θ + β + g/2 and Trelease → 0 as I → θ + g/2.
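A short numerical check of Theorem 4.2 is sketched below. The function names are hypothetical; the two branches use T = 2τ ln(ai/af) (escape) and T = 2τ ln((1 − ai)/(1 − af)) (release) from the proof of Theorem 4.1, and the maximum is the common value of both branches at ITmax = θ + (β + g)/2.

```python
import math


def low_inhibition_period(I, beta, g, theta, tau):
    """Period from Theorem 4.2 (beta / g < 1); None outside the oscillatory range."""
    if not 0 < beta < g:
        raise ValueError("Theorem 4.2 assumes 0 < beta / g < 1")
    if I <= theta + g / 2 or I >= theta + beta + g / 2:
        return None                      # steady low or high activity
    I_tmax = theta + (beta + g) / 2
    if I > I_tmax:                       # escape: blue line reached first
        a_f = (I - theta - beta) / g
        return 2 * tau * math.log((1 - a_f) / a_f)
    a_f = (I - theta) / g                # release: red line reached first
    return 2 * tau * math.log(a_f / (1 - a_f))


def max_period(beta, g, tau):
    """Common limit of the escape and release branches at I = theta + (beta + g)/2."""
    return 2 * tau * math.log((g + beta) / (g - beta))
```

For example, with β = 0.3, g = 0.5, θ = 0.2 the period peaks at I = 0.6 and falls off on both sides, vanishing as I approaches 0.45 or 0.75.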

4.2. Global features of the competition model with smooth sigmoid gain function

Numerical simulations of system (2.1) with smooth gain function S indicate that the limit cycle born through the Hopf bifurcation as in section 3 takes a relaxation-oscillator form just beyond the bifurcation (Figure 3B and 3D). That is, because of the two time-scales involved in the system, variables u1 and u2 evolve much faster than a1 and a2 (τ ⪢ 1). We use this observation to describe for (2.1) the relaxation-oscillator solution in the singular limit 1/τ = 0. In the plane (a1, a2) of slow variables we construct the curve of “jumping” points from the dominant to the suppressed state of each population, and back. This curve is the equivalent of the blue-red square from the case of Heaviside step function, and similarly it consists of two arcs associated with an escape and release mechanism, respectively. Then we give analytical conditions for the winner-take-all regime to exist.
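A minimal forward-Euler simulation illustrates the two behaviors discussed here. It rests on assumptions that should be checked against section 2: system (2.1) is taken in the form du_j/dt = −u_j + S(I − βu_k − ga_j), τ da_j/dt = −a_j + u_j (the form implied by the slow manifold and slow equations used in this section), and S in (2.2) is taken to be the logistic function 1/(1 + exp(−r(x − θ))), which is consistent with 1/S’(θ) = 0.4 for r = 10. The names `simulate` and `count_switches` are hypothetical.

```python
import math


def S(x, r=10.0, theta=0.2):
    """Assumed logistic gain function."""
    return 1.0 / (1.0 + math.exp(-r * (x - theta)))


def simulate(I, beta=1.1, g=0.5, tau=100.0, t_end=2000.0, dt=0.05,
             state=(1.0, 0.0, 0.0, 0.0)):
    """Integrate the assumed form of (2.1); return the time series of u1 - u2."""
    u1, u2, a1, a2 = state
    diffs = []
    for _ in range(int(t_end / dt)):
        du1 = -u1 + S(I - beta * u2 - g * a1)
        du2 = -u2 + S(I - beta * u1 - g * a2)
        da1 = (-a1 + u1) / tau
        da2 = (-a2 + u2) / tau
        u1, u2 = u1 + dt * du1, u2 + dt * du2
        a1, a2 = a1 + dt * da1, a2 + dt * da2
        diffs.append(u1 - u2)
    return diffs


def count_switches(diffs):
    """Number of dominance switches (sign changes of u1 - u2)."""
    return sum(1 for p, q in zip(diffs, diffs[1:]) if p * q < 0)
```

Under these assumptions, `simulate(1.5)` is expected to show repeated dominance switches, while `simulate(1.0)` should settle with population 1 dominant; the step size and duration are ad hoc choices, not values from the paper.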

4.2.1. The singular relaxation-oscillator solution

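For large values of τ let us consider the slow time s = εt (ε = 1/τ; ’ = d/ds) and rewrite system (2.1) as $\varepsilon u_1' = -u_1 + S(I - \beta u_2 - g a_1)$, $\varepsilon u_2' = -u_2 + S(I - \beta u_1 - g a_2)$, $a_1' = -a_1 + u_1$, $a_2' = -a_2 + u_2$.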

In the singular perturbation limit ε = 0 any solution will belong to the slow manifold Σ defined by −u1 + S(I − βu2 − ga1) = 0, −u2 + S(I − βu1 − ga2) = 0 or, based on the inverse property of S (S⁻¹ = F),

$$F(u_1) = I - \beta u_2 - g a_1, \qquad F(u_2) = I - \beta u_1 - g a_2. \tag{4.3}$$

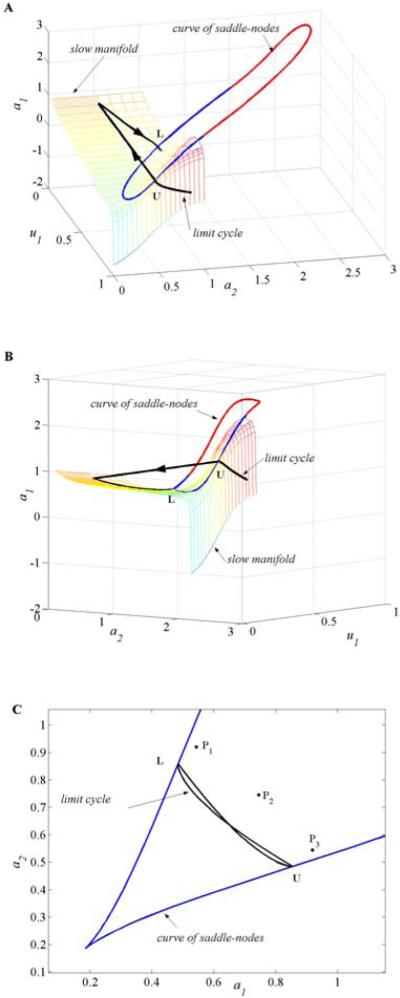

The surface Σ is multivalued and can be visualized by plotting a1 as a function of u1 and a2 (Figure 7).

Figure 7.

Projection of the slow manifold Σ on the space (u1, a1, a2). System (2.1)’s parameters are β = 1.1, g = 0.5, θ = 0.2, r = 10, and I = 1.5 (A–C), respectively, I = 1 (D–F). The curve of saddle-nodes (jumping points) is represented in blue-red (C, F). Nullclines (magenta) and (black curve) intersect in three points for (B) I = 1.5 and (E) I = 1. However, either the equilibrium points can all lie on the middle branch of Σ (A), that is, inside the curve of saddle-nodes (C), or two equilibrium points can move to the lateral branches, outside of (D, F).

Then the slow dynamics is according to equations , , where (, , a1, a2) ∈ Σ. The “slow” nullclines are characterized by additional conditions: a1 = u1 for a1-nullcline () and a2 = u2 for a2-nullcline (); geometrically these are two curves situated on the surface Σ and defined by

| (4.4) |

and

| (4.5) |

Here α1, α2 ∈ (0, 1) are the unique solutions of the equations F(α1) + gα1 = I − β and F(α2) + gα2 = I, respectively.

The equilibrium points are at the nullclines’ intersection, which means a1 = u1 and a2 = u2 simultaneously on Σ. They are characterized by the conditions

$$F(u_1) + g u_1 + \beta u_2 = I, \qquad F(u_2) + g u_2 + \beta u_1 = I. \tag{4.6}$$

We recall from section 3 that if (which in the limit case ε = 0 corresponds to β > 1/S’(θ)), there exist two values of input parameter such that the system has a unique stable equilibrium point for . This equilibrium point takes the form eI = (uI, uI, uI, uI) with uI ∈ (0, 1), F(uI) + (β + g)uI = I, and it becomes unstable at and ; a stable limit cycle appears for and . The critical parameter values are defined by and with , according to (3.5). Again, in the limit case ε = 0, condition (3.5) becomes

| (4.7) |

and obviously we have F’(uI) < β for each .

Moreover, if β − g > 1/S’(θ), two additional equilibrium points occur for and through a pitchfork bifurcation. However, at least in the neighborhood of or , these equilibrium points are unstable. Their coordinates (u1p, u2p) satisfy (4.6), so either u1p < uI < u2p or u1p > uI > u2p. In addition, they are close to uI for all I sufficiently close to or ; in conclusion, at least in a small region, F’(u1p) < β and F’(u2p) < β.

In Figure 7 we plotted the projection on the three-dimensional space (u1, a1, a2) of the slow manifold Σ and the nullclines and ( is colored in magenta and is colored in black; because Σ is defined over the full range −∞ to ∞ with respect to a1 and a2, we plot only part of it). All plots are done for S as in (2.2), β = 1.1, g = 0.5, r = 10, θ = 0.2, and two values of input strength, I = 1.5 (Figure 7A–C) and I = 1 (Figure 7D–F). For these values of parameters we have and , so at both I = 1.5 and I = 1 the system has three equilibrium points; nullclines intersect three times and the middle intersection point is exactly eI. We note that all equilibrium points are unstable at I = 1.5 while two of them become stable at I = 1 (see also Figure 3G).

On the surface Σ we have and , i.e.,

For an initial condition (u1, u2, a1, a2) with u1 sufficiently large (close to 1) we have and ; therefore, a simultaneous increase in a1 and decrease in a2 lead to a decrease in u1. Similarly, for a choice of u1 sufficiently low (close to 0), , , and a simultaneous decrease in a1 and increase in a2 lead to an increase in u1. On the other hand, the limit cycle exists for some . Since here eI ∈ Σ with F’(uI) < β, we have and in a neighborhood of this equilibrium point; the behavior of u1 with respect to a1 and a2 is opposite that previously described.

Therefore, relative to the plane (u1, a1) the surface Σ has a cubic-like shape: its left and right branches decrease with u1 while the middle branch increases. The curve of lower (u1 < u2) and upper (u1 > u2) knees on Σ is defined by F’(u1)F’(u2) = β² (blue-red curve in Figure 7C and 7F). As we show in the following, when the trajectory on surface Σ reaches the curve of knees on its upper side, the point will jump from the right branch of Σ to its left branch. Similarly, when it reaches the lower side of the curve of knees, the point will jump from the left to the right branch of Σ. In the plane of fast variables (u1, u2) the curve of knees corresponds to a saddle-node bifurcation (a node approaching a saddle then merging with it and disappearing; see the animations 70584_01.gif [3.7MB] and 70584_02.gif [3.8MB] in Appendix C). For this reason we also call it the curve of saddle-nodes () or the curve of jumping points. It is defined by the equations

| (4.8) |
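The knee condition F’(u1)F’(u2) = β² can be explored numerically. The sketch below assumes that S in (2.2) is the logistic function 1/(1 + exp(−r(x − θ))), so that F(u) = θ + ln(u/(1 − u))/r and F’(u) = 1/(ru(1 − u)); this form is an assumption, although it reproduces 1/S’(θ) = 0.4 for r = 10. The function names are hypothetical. For a given u2 it returns the upper-knee partner u1 ≥ 1/2, the slow coordinates of the jumping point on Σ, and the determinant 1 − β²S’(x1)S’(x2) of the fast-subsystem Jacobian, which vanishes at a saddle-node.

```python
import math

R, THETA = 10.0, 0.2   # r and theta used in the figures


def F(u):
    """Inverse of the assumed logistic gain."""
    return THETA + math.log(u / (1.0 - u)) / R


def dF(u):
    """F'(u) = 1 / S'(F(u)) for the assumed logistic gain."""
    return 1.0 / (R * u * (1.0 - u))


def upper_knee_u1(u2, beta):
    """Partner u1 >= 1/2 of u2 on the curve F'(u1) F'(u2) = beta^2."""
    p = 1.0 / (R * R * beta * beta * u2 * (1.0 - u2))   # required u1 (1 - u1)
    if p > 0.25:
        raise ValueError("no point of the knee curve has this u2")
    return 0.5 * (1.0 + math.sqrt(1.0 - 4.0 * p))


def jump_point(u2, I, beta, g):
    """Return (u1, a1, a2) for the knee with the given u2, with (a1, a2) on Sigma."""
    u1 = upper_knee_u1(u2, beta)
    a1 = (I - F(u1) - beta * u2) / g
    a2 = (I - F(u2) - beta * u1) / g
    return u1, a1, a2


def fast_determinant(u1, u2, beta):
    """Determinant of the fast Jacobian, 1 - beta^2 S'(x1) S'(x2), on Sigma."""
    return 1.0 - beta * beta / (dF(u1) * dF(u2))
```

Because I enters a1 and a2 only through the additive term I/g, changing I shifts every jumping point by the same amount in both slow coordinates.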

We do not prove here the existence of the relaxation-oscillator singular solution. We aim only to provide the reader with the intuition for how oscillations occur in the competition model (2.1) if a smooth sigmoid is taken as a gain function. Thus, if the oscillations exist and, for example, u1 is dominant and u2 is suppressed (u1 > u2), we have −a1 + u1 > 0, −a2 + u2 < 0, so a1 increases and a2 decreases. They push u1 down and the point moves on the trajectory until it reaches at an upper knee U of coordinates (u1J, u2J); here the derivatives and become infinite, so u1 jumps from the upper to the lower branch of Σ (Figure 8A–8B). On the lower branch (u1 < u2) we have −a1 + u1 < 0 and −a2 + u2 > 0, so u1 increases and the point will move due to the decrease of a1 and increase of a2 until it touches at L. At L the point jumps up to the right branch of Σ. The projection of the limit cycle on the slow plane (a1, a2) is a closed curve that touches the projection of at two points (a1J, a2J) and (a2J, a1J) symmetric to the line a1 = a2 (Figures 8C and 9B).

Figure 8.

(A–B) Limit cycle solution and the slow manifold Σ for system (2.1) with parameters I = 1.5, β = 1.1, g = 0.5, r = 10, θ = 0.2, and τ = 5000. (C) The projection of the limit cycle on the slow plane (a1, a2); P1, P2, and P3 are the projections of the equilibrium points.

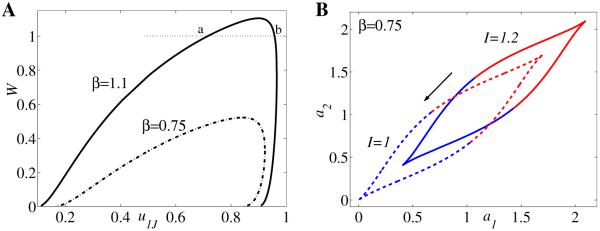

Figure 9.

(A) The graph of W = W(u1J) for upper knees on computed for g = 0.5, r = 10, θ = 0.2, and S as in (2.2). At β = 0.75 (dashed-dotted curve) the maximum value of W is less than unity: Winner-take-all regime does not exist; at β = 1.1 (thick curve) the maximum value of W is larger than unity: the values of I where winner-take-all occurs correspond to u1J at points a and b. (B) Projection Γ of the curve of saddle-nodes on the slow plane (a1, a2) for β = 0.75, g = 0.5, r = 10, θ = 0.2, S as in (2.2), and two values of input I = 1.2 and I = 1; Γ moves down on the first bisector direction as I decreases. The blue side is associated to the right branch of W in panel A, from W = 0 to Wmax (an escape mechanism), and the red side is associated to the left branch of W from Wmax to 0 (a release mechanism).

4.2.2. Release, escape, and winner-take-all in the competition model with smooth sigmoid gain function

Analogous to our analysis in section 4.1 we consider in the following the projection of the curve of jumping points on the slow plane (a1, a2). As mentioned above, if the up-to-down jump takes place at (a1J, a2J), then the down-to-up jump is at (a2J, a1J); so the projection is a closed curve symmetric to the diagonal a1 = a2.

We consider in (a1, a2) the curve Γ0 of equations

with F’(u1J)F’(u2J) = β². Then from (4.8) we have

For a given I, the projection of on the slow plane (say, Γ = ΓI) is exactly the translation of Γ0 with quantity (I/g, I/g) along the first bisector. Obviously as I decreases, the curve Γ moves down on the upper-right–lower-left direction (Figure 9B). That is similar to the movement of the blue-red square for the case of the Heaviside step function in section 4.1 (Figure 5).

Let us consider now an oscillatory solution that exists for some . If population 1 is dominant (u1 > u2), then −a1 + u1 > 0 and −a2 + u2 < 0 imply u1 − u2 > a1 − a2. On the other hand, since the trajectory is on Σ, we also have a1 = [I − F(u1) − βu2]/g, a2 = [I − F(u2) − βu1]/g, and thus a1 − a2 = [β(u1 − u2) − (F(u1) − F(u2))]/g. At the jumping point a1 reaches its maximum and a2 its minimum, so 0 < a1J − a2J < u1J − u2J can be written as

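$$0 < W(u_{1J}) := \frac{1}{g}\left[\beta - \frac{F(u_{1J}) - F(u_{2J})}{u_{1J} - u_{2J}}\right] < 1. \tag{4.9}$$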

The point (u1J, u2J), u1J > u2J, satisfies F’(u1J)F’(u2J) = β² and describes the part of that corresponds to the upper knees. Let umβ, uMβ ∈ (0, 1) be the values defined by , F’(umβ) = F’(uMβ) = β²/F’(u0) = β²S’(θ) (see the shape of F’ in Figure 2C). Then the upper branch of results by gluing together three arcs on which (1) u1J increases between and uMβ (so u2J decreases from to u0); (2) u1J decreases from uMβ to u0 (and u2J decreases from u0 to umβ); (3) u1J continues to decrease from u0 to (however, u2J increases now between umβ and ).

We can plot the expression W(u1J) from (4.9) for and with the corresponding u2J = u2(u1J) and check if the graph is below the horizontal line W = 1 (Figure 9A). If for all combinations (u1J, u2J) we have W < 1, then the winner-take-all regime does not exist in the interval (, ). Otherwise, for (u1J, u2J) where W = 1 we can compute the values of I where winner-take-all occurs (see below).
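The check described above can be sketched numerically. The code assumes, as a labeled assumption, that S in (2.2) is the logistic function 1/(1 + exp(−r(x − θ))) with r = 10 and θ = 0.2, and takes W = [β − (F(u1J) − F(u2J))/(u1J − u2J)]/g, which follows from 0 < a1J − a2J < u1J − u2J and the expression for a1 − a2 on Σ. It scans only the arc of upper knees with u2J ∈ (umβ, u0), u1J ≥ u0, where the maximum of W is reported to lie. The names `W` and `W_max` are hypothetical.

```python
import math

R, THETA = 10.0, 0.2   # r and theta used in the figures


def F(u):
    """Inverse of the assumed logistic gain."""
    return THETA + math.log(u / (1.0 - u)) / R


def upper_knee_u1(u2, beta):
    """Partner u1 >= 1/2 of u2 on the curve F'(u1) F'(u2) = beta^2."""
    p = 1.0 / (R * R * beta * beta * u2 * (1.0 - u2))
    return 0.5 * (1.0 + math.sqrt(max(0.0, 1.0 - 4.0 * p)))


def W(u2, beta, g):
    """Ratio (a1J - a2J) / (u1J - u2J) at the upper knee with the given u2."""
    u1 = upper_knee_u1(u2, beta)
    return (beta - (F(u1) - F(u2)) / (u1 - u2)) / g


def W_max(beta, g, n=4000):
    """Grid search for the maximum of W over u2 in (u_m, 1/2); returns (W, u2)."""
    u_m = 0.5 * (1.0 - math.sqrt(1.0 - 16.0 / (R * beta) ** 2))  # F'(u_m) = beta^2 S'(theta)
    best = (float("-inf"), 0.0)
    for k in range(1, n):
        u2 = u_m + (0.5 - u_m) * k / n
        best = max(best, (W(u2, beta, g), u2))
    return best
```

For g = 0.5, the scan gives a maximum above 1 at β = 1.1 and below 1 at β = 0.75, matching the two cases shown in Figure 9A.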

Remark 4.2. Let us observe that W(u1J) = [β − F’(ξ)]/g for some intermediate ξ between u2J and u1J. At or (that represents exactly the equilibrium point eI at and , respectively) we have F’(ξ) = β; therefore, we can extend by continuity the function at and the function at by taking W = 0 at these edges.

For gain function S as in (2.2) and different values of β we plotted the curve W; the curve W always has a maximum value Wmax for some with u2 = u2(u1) ∈ (umβ, u0). Here ∂u2/∂u1 = −[F’(u2)F”(u1)]/[F’(u1)F”(u2)] > 0. W measures the relative distance between the values of slow variables against that between fast variables. Based on this observation we color , and obviously Γ, in blue for (u1J, u2J) starting at (, ) and varying until it reaches (,) and in red for (u1J, u2J) between (,) and (,) (Figures 7C, 9B). The first corresponds to the right branch of W from W = 0 to Wmax, and the latter corresponds to the left branch of W from Wmax to 0.

Well inside the interval that defines the blue part of Γ (that is, not too close to the value that gives the maximum W) we have either or ; so F’(u2J) < β < F’(u1J). However, we recall that F’(u2J) = 1/S’(I − βu1J − ga2J) and F’(u1J) = 1/S’(I − βu2J − ga1J). That means that the gain function S has at the jump a bigger slope at I − βu1 − ga2, the net input to the suppressed population, than at I − βu2 − ga1, the net input to the dominant population; in other words, the gain to the suppressed population falls in the range of steeper S. According to the definitions introduced at the beginning of section 4, this case corresponds to an escape type of dynamics.

On the red part of Γ there is an opposite behavior: at least away from the edge we have either or . That is, F’(u2J) = 1/S’(I − βu1J − ga2J) > β > F’(u1J) = 1/S’(I − βu2J − ga1J). At the jump the gain S’(I − βu2 − ga1) to the dominant population falls in the range of steeper S than that to the suppressed population. We called this a release mechanism.

In fact, the values and with (and not and ) determine what we generically call the “steeper” part of S. For the particular case of gain function symmetric to its inflection point (θ, u0), i.e., for S such that S(θ + x) + S(θ − x) = 1 it can be shown that indeed and (for example, S as in (2.2); see Remark 4.3). However, in other cases that is not true anymore; then it is more difficult to interpret the terms escape and release for u1J close to , the passage point from one dynamical regime to another.

Occurrence of the winner-take-all regime

We can determine now the minimum value of β, say, βwta, where the winner-take-all regime occurs in system (2.1). Moreover, for a given β > βwta we find the corresponding values of I that delineate this regime.

We note that if , then system (2.1) has a unique equilibrium point and it belongs to the middle branch of Σ (F’(uI) < β).

For or sufficiently close to the pitchfork bifurcation point, system (2.1) has three equilibria and all are situated again on the middle branch of Σ, inside , the curve of lower and upper knees (Figure 7A and 7C). That is because F’(u1p) < β and F’(u2p) < β, so F’(u1p)F’(u2p) < β². A trajectory that starts on either the upper or the lower branch of Σ cannot approach an equilibrium point since it will first reach , and so the system oscillates. However, for intermediate values of I between and , two of the equilibrium points may move on the lateral branches of Σ and become stable (F’(u1p)F’(u2p) > β²; see Figure 7D and 7F). That is the case where the winner-take-all regime occurs. We should point out that the equilibrium eI = (uI, uI, uI, uI) always remains unstable for . The boundary between oscillatory and winner-take-all dynamics is obtained when the equilibrium points (u1p, u2p) belong to , that is, when on both a1 = u1 and a2 = u2 are true. We find in the following the values of I where winner-take-all appears.

The values of I, say, Iw, that delineate the winner-take-all regime are defined by

| (4.10) |

The left-hand side of the second equation in (4.10) is in fact W(u1J). Since the curve W always has a maximum value Wmax for some , the line W = 1 can be either above the maximum or below it. If Wmax < 1 (Figure 9A, β = 0.75), then there is no winner-take-all regime. If Wmax > 1 (Figure 9A, β = 1.1), then there exist two values for u1J where the curve W intersects the horizontal line W = 1.

The critical (minimum) value βwta where the winner-take-all regime appears in system (2.1) results from the case of Wmax = 1; i.e., it satisfies the conditions W(u1J) = 1 and W’(u1J) = 0. Thus the minimum value βwta that introduces winner-take-all dynamics in system (2.1) is defined by

| (4.11) |

Remark 4.3. We note that due to its particular form (2.2), S is symmetric about the point (θ, u0) with u0 = 0.5. The graph of F’ is symmetric about the vertical line u = 0.5, i.e., F’(1 − u) = F’(u) for all u ∈ (0, 1), and so F”(1 − u) = −F”(u). In this particular case we have , so and . According to (4.11) the maximum value Wmax is obtained exactly at , .
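The symmetry properties used in Remark 4.3 can be checked numerically. The sketch below assumes the logistic form S(x) = 1/(1 + e^(−r(x − θ))) quoted for (2.2), for which F’(u) = 1/(r u(1 − u)); the function names and the sampling grids are illustrative choices, not part of the original analysis.

```python
import math

R, THETA = 10.0, 0.2  # parameter values quoted in the text


def S(x):
    """Logistic gain, assumed form of (2.2)."""
    return 1.0 / (1.0 + math.exp(-R * (x - THETA)))


def F_prime(u):
    """Derivative of F = S^{-1}; for the logistic gain F'(u) = 1/(r u (1-u))."""
    return 1.0 / (R * u * (1.0 - u))


def F_second(u):
    """Second derivative of F: F''(u) = (2u - 1) / (r u^2 (1-u)^2)."""
    return (2.0 * u - 1.0) / (R * u**2 * (1.0 - u) ** 2)


xs = [-1.0 + 0.1 * k for k in range(21)]   # offsets from the threshold
us = [0.05 * k for k in range(1, 20)]      # activity levels in (0, 1)

# S(theta + x) + S(theta - x) = 1: symmetry about the point (theta, 0.5)
defect_S = max(abs(S(THETA + x) + S(THETA - x) - 1.0) for x in xs)
# F'(1 - u) = F'(u): F' is even about u = 0.5
defect_F1 = max(abs(F_prime(1.0 - u) - F_prime(u)) for u in us)
# Differentiating the previous identity gives F''(1 - u) = -F''(u)
defect_F2 = max(abs(F_second(1.0 - u) + F_second(u)) for u in us)

print(defect_S, defect_F1, defect_F2)
```

All three defects should vanish up to floating-point rounding, and the maximum gain S’(θ) = r/4 is attained at the threshold itself.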

For gain function S as in (2.2) and different values of β we plotted the curve W and determined the interval [, ] where winner-take-all occurs. For example, choosing r = 10, θ = 0.2, g = 0.5, and β = 1.1, we have Wmax = 1.1046 and (computed at u1J = 0.7158, u2J = 0.0424) and (computed at u1J = 0.9576, u2J = 0.2842). The minimum value of β for the winner-take-all regime is βwta = 1.0387. As expected from our analysis in section 3.3, the value of β that guarantees existence of multiple equilibria in system (2.1), i.e., βpf = g + 1/S’(θ) = 0.9, is smaller than βwta.
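The two boundary points quoted above can be sanity-checked with a few lines of arithmetic. The sketch assumes that system (2.1) has the form u1’ = −u1 + S(I − βu2 − ga1), u2’ = −u2 + S(I − βu1 − ga2), τa1’ = −a1 + u1, τa2’ = −a2 + u2, with S the logistic function of (2.2). Under that assumption a boundary point is an equilibrium (a1 = u1, a2 = u2) lying on the curve of knees, F’(u1)F’(u2) = β². The helper names are hypothetical.

```python
import math

r, theta, g, beta = 10.0, 0.2, 0.5, 1.1  # values quoted in the text


def F(u):
    """Inverse of the logistic gain: F(u) = theta + ln(u / (1 - u)) / r."""
    return theta + math.log(u / (1.0 - u)) / r


def F_prime(u):
    """F'(u) = 1 / (r u (1 - u))."""
    return 1.0 / (r * u * (1.0 - u))


def check_point(u1, u2):
    """Return (knee ratio, I from the u1 equation, I from the u2 equation).

    With a_j = u_j, the equilibrium conditions of the assumed system read
    F(u1) = I - beta*u2 - g*u1 and F(u2) = I - beta*u1 - g*u2.
    """
    knee_ratio = F_prime(u1) * F_prime(u2) / beta**2  # equals 1 on the knees
    I_from_1 = F(u1) + beta * u2 + g * u1
    I_from_2 = F(u2) + beta * u1 + g * u2
    return knee_ratio, I_from_1, I_from_2


low = check_point(0.7158, 0.0424)
high = check_point(0.9576, 0.2842)

# Pitchfork threshold beta_pf = g + 1/S'(theta), with S'(theta) = r/4
beta_pf = g + 1.0 / (r / 4.0)

print(low, high, beta_pf)
```

At each point the knee ratio should be close to 1 and the two equilibrium equations should return nearly the same input. The two inputs should also bracket I = 1 and sum to approximately 2θ + β + g, as the symmetry of S requires.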

For the same choice of parameters as above (β = 1.1, g = 0.5, and S as in (2.2) with r = 10, θ = 0.2), we plot the projection of the limit cycle and the curve of saddle-nodes on the slow plane (a1, a2) for different values of parameter I. Figure 10A gives the bifurcation diagram of activity u1 versus input strength I for τ = 100. In the rest of the panels we choose τ = 5000 to mimic the singular limit cycle solution with jumping points exactly on the curve of saddle-nodes (for smaller τ, e.g., τ = 100, the jumping points do not belong to the curve of saddle-nodes but fall close to it). In this case we have

At larger values of I (I = 1.7 and I = 1.5) oscillation is due to an escape mechanism (Figure 10B–C); at an intermediate value I = 1 the system is in the winner-take-all regime (Figure 10D); at smaller values of I (I = 0.5 and I = 0.3) oscillation is due to a release mechanism (Figure 10E–F). Besides the limit cycle other important trajectories are the equilibrium points. There are three unstable equilibria for I = 1.5 and I = 0.5 but only one equilibrium point at I = 1.7 and I = 0.3. At I = 1 two out of three equilibria are stable.
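The regimes listed above can be explored with a direct simulation. The following is a minimal sketch, not the authors' numerical procedure: it assumes the form of system (2.1) given by u1’ = −u1 + S(I − βu2 − ga1), u2’ = −u2 + S(I − βu1 − ga2), τa1’ = −a1 + u1, τa2’ = −a2 + u2, and uses a forward Euler scheme with a hypothetical step size, run length, and initial condition.

```python
import math


def S(x, r=10.0, theta=0.2):
    """Logistic gain, assumed form of (2.2)."""
    return 1.0 / (1.0 + math.exp(-r * (x - theta)))


def simulate(I, beta=1.1, g=0.5, tau=100.0, dt=0.05, t_end=3000.0,
             state=(1.0, 0.0, 0.0, 0.0)):
    """Forward Euler integration; returns sampled (t, u1, u2) tuples."""
    u1, u2, a1, a2 = state
    trace = []
    for k in range(int(t_end / dt)):
        du1 = -u1 + S(I - beta * u2 - g * a1)
        du2 = -u2 + S(I - beta * u1 - g * a2)
        da1 = (-a1 + u1) / tau
        da2 = (-a2 + u2) / tau
        u1, u2 = u1 + dt * du1, u2 + dt * du2
        a1, a2 = a1 + dt * da1, a2 + dt * da2
        if k % 20 == 0:  # store one sample per time unit
            trace.append((k * dt, u1, u2))
    return trace


def dominance_switches(trace):
    """Count changes of the dominant population over the second half of a run."""
    half = trace[len(trace) // 2:]
    signs = [1 if u1 > u2 else -1 for _, u1, u2 in half]
    return sum(1 for p, q in zip(signs, signs[1:]) if p != q)


wta = simulate(1.0)  # intermediate input from the text
print(wta[-1], dominance_switches(wta))
```

At I = 1 the run should settle with population 1 active and population 2 suppressed, with no dominance switches. Calling `dominance_switches(simulate(0.5))` or `dominance_switches(simulate(1.5))` probes the inputs that the text associates with release and escape oscillations.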

Figure 10.

(A) Bifurcation diagram of activity u1 versus input I for β = 1.1, g = 0.5, r = 10, θ = 0.2, τ = 100. (B–F) Projection of the limit cycle and the curve of saddle-nodes on the slow plane (a1, a2) for different values of parameter I chosen according to the bifurcation diagram in panel A; however, in order to mimic the singular limit cycle solution we choose here τ = 5000. Symbol * indicates the location of equilibria. At large values of I oscillation is due to an escape mechanism: (B) I = 1.7, (C) I = 1.5; (D) at intermediate value I = 1 winner-take-all dynamics is observed; then at low values of I oscillation is due to a release mechanism: (E) I = 0.5, (F) I = 0.3. Single (panels B, F) or multiple (panels C, E) unstable equilibria can coexist with the limit cycle.

Remark 4.4. Equations (4.11) and (4.10), used to determine the critical β where the winner-take-all regime exists in system (2.1) and then, for β > βwta, to estimate and , prove to be reliable. The estimates obtained by this method are in excellent agreement with numerical simulations of system (2.1) for both symmetric and asymmetric gain functions. The latter case is discussed in section 5.

5. Neuronal competition models that favor the escape (or release) dynamical regime

As seen in section 4.1, the dynamical scheme of T versus I is symmetric to for S, the Heaviside step function. When the winner-take-all regime exists, its corresponding I-input interval is equally split around the value , that is, θ + g ≤ I ≤ θ + β as in Theorem 4.1. Moreover, an equal input range is found for both release and escape mechanisms: and as in Theorem 4.1, or and as in Theorem 4.2. Therefore, it seems reasonable to ask to what extent the symmetry of the I-T dynamical scheme about a specific value I* relates to the geometry of S and, more generally, to the form of the equations in (2.1). We address this question in the following and find a heuristic method to reduce one of the two ranges of escape and release mechanisms while still maintaining the other one.

First let us note that we obtain a result similar to that in section 4.1 for any smooth sigmoid S as long as it is symmetric about its threshold. The threshold (say, th) is defined as the value where the gain function reaches its middle point: for S taking values between 0 and 1, it is S(th) = 0.5. The terminology comes from the fact that if a net input to one population is below the threshold (x < th), then it determines a weak effective response (S(x) < 1/2); at equilibrium that would correspond to an inactive state. On the other hand, a net input above the threshold (x > th) determines a strong effective response (S(x) > 1/2) which, at equilibrium, corresponds to an active state. The symmetry condition of S with respect to the threshold is described mathematically by the equality S(th + x) + S(th − x) = 1 for any real x or, equivalently, S(x) + S(2 th − x) = 1. The gain function defined by (2.2) is such an example: in this case the threshold is exactly the inflection point θ (S”(θ) = 0; S(θ) = 0.5).

Theorem 5.1. If the gain function S satisfies S(θ + x) + S(θ − x) = 1, then system (2.1) with input I* is diffeomorphically equivalent to system (2.1) with input I = 2θ + β + g − I*.

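Proof. Due to the symmetry of S, the equation du1/dt = −u1 + S(I* − βu2 − ga1) with the change v1 = 1 − u1, v2 = 1 − u2, b1 = 1 − a1, b2 = 1 − a2 becomes dv1/dt = −v1 + S((2θ + β + g − I*) − βv2 − gb1); on the other hand, the equation τ da1/dt = −a1 + u1 becomes τ db1/dt = −b1 + v1. The equations for u2 and a2 transform in the same way. Therefore, system (2.1) with input I* is diffeomorphically equivalent to system (2.1) with input (2θ + β + g − I*).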

Remark 5.1. Theorem 5.1 implies that system (2.1) has the same type of solutions for any two values of input strength I1 and such that . Moreover, if at I1 and an oscillatory solution of period T1 and exists, then, due to the diffeomorphism, . Obviously, if T1 < T2 for , then for the corresponding values . Therefore, for symmetric S to its inflection point (threshold in this case), the intervals of I for regions II and IV (see Figure 3F or H) have the same length and are symmetric to the line .
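The equivalence in Theorem 5.1 can be illustrated numerically. The sketch below assumes that system (2.1) has the form u1’ = −u1 + S(I − βu2 − ga1), u2’ = −u2 + S(I − βu1 − ga2), τa1’ = −a1 + u1, τa2’ = −a2 + u2 with the logistic S of (2.2). Under the reflection x → 1 − x of all four variables, the vector field at input I should map to minus the vector field at the mirrored input 2θ + β + g − I, which is what the function `reflection_defect` (a hypothetical name) measures at random states.

```python
import math
import random


def S(x, r=10.0, theta=0.2):
    """Logistic gain, symmetric about its threshold theta."""
    return 1.0 / (1.0 + math.exp(-r * (x - theta)))


def rhs(state, I, beta=1.1, g=0.5, tau=100.0, theta=0.2):
    """Right-hand side of the assumed form of system (2.1)."""
    u1, u2, a1, a2 = state
    return (
        -u1 + S(I - beta * u2 - g * a1, theta=theta),
        -u2 + S(I - beta * u1 - g * a2, theta=theta),
        (-a1 + u1) / tau,
        (-a2 + u2) / tau,
    )


def reflection_defect(I, trials=1000, beta=1.1, g=0.5, theta=0.2, seed=0):
    """Largest violation of f(1 - x; I_mirror) = -f(x; I) over random states."""
    rng = random.Random(seed)
    I_mirror = 2.0 * theta + beta + g - I
    worst = 0.0
    for _ in range(trials):
        x = [rng.random() for _ in range(4)]
        y = [1.0 - xi for xi in x]  # reflected state
        fx = rhs(x, I, beta, g, theta=theta)
        fy = rhs(y, I_mirror, beta, g, theta=theta)
        worst = max(worst, max(abs(p + q) for p, q in zip(fx, fy)))
    return worst


defect = reflection_defect(0.5)
print(defect)
```

A defect at the level of rounding error means that every trajectory at input I is the mirror image of a trajectory at the mirrored input, so periodic solutions at the two inputs have equal periods.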

In order to explore the effect the asymmetry of S has on the bifurcation diagram, we consider S to be

| (5.1) |

with u0 ∈ (0, 1).

The inflection point θ therefore satisfies S(θ) = u0, and the threshold th satisfies th > θ if u0 < 1/2 and th < θ if u0 > 1/2.

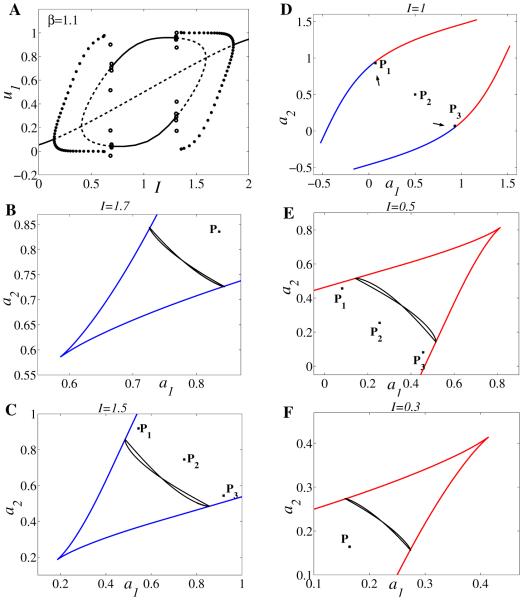

The graphs of S as in (5.1) and their corresponding F’ (where F = S⁻¹) are drawn in Figure 11A–B with parameter values r = 10, θ = 0.2, and u0 = 0.1, 0.5, 0.9. Then the I-T bifurcation diagram for the competition model (2.1) is constructed at β = 0.75 (Figure 11C). Numerical results show that the symmetry of this bifurcation diagram is indeed a direct consequence of the symmetry of S about its threshold, as in the case u0 = 0.5. For other choices of u0 one of the two regions that correspond to the increasing and decreasing I-T branches is favored (it is wider): the former when u0 > 1/2 and the latter when u0 < 1/2.

Figure 11.

Consider parameter values r = 10, θ = 0.2, g = 0.5, τ = 100 and gain functions S as in (5.1) with u0 = 0.1, 0.5, 0.9. (A) Graphs of S. (B) Their corresponding F’ where F = S⁻¹. (C) Bifurcation diagram for period T versus input I in system (2.1) for β = 0.75. (D) The graph of W = W(u1J) for upper knees on computed for β = 0.75. (E–F) Projection Γ of the curve of saddle-nodes on the slow plane (a1, a2) for β = 0.75, I = 1.2, and u0 = 0.1, 0.9. The blue side is associated with the escape mechanism and the red side with the release mechanism. The asymmetry of S about the threshold favors (E) escape if u0 < 1/2 and (F) release if u0 > 1/2.

Recall from section 4 the definition (4.8) for the curve of jumping points (with its projection Γ on the plane of slow variables (a1, a2)) and the definition (4.9) for W that characterizes escape, release, and winner-take-all regimes. The shape of W changes dramatically with u0 (Figure 11D, β = 0.75), but its qualitative shape does not change with β: for any fixed u0 the curve W always has a unique maximum point, and this maximum moves down as β decreases.

The asymmetry of S leads to an asymmetry of the curve Γ: for u0 < 1/2 we have , , and the curves and Γ have a longer blue part than red part; the escape mechanism is favored (Figure 11E, u0 = 0.1). On the contrary, for u0 > 1/2 the release mechanism is favored since the red part of Γ is longer: and (Figure 11F, u0 = 0.9). We can understand this specific behavior by looking at the network populations’ dynamics about the threshold: in case of u0 < 1/2 the maximum gain to each population is reached for some net input below the threshold (S’(θ) = max S’ with S(θ) = u0, so θ < th); then about the threshold th the gain S’ has a decreasing trend. Consequently, there is a wider range for inputs where the inactive population has a significant gain compared to the active population, and so the escape mechanism is favored (the suppressed population regains control on its own, thus becoming active). On the other hand, if u0 > 1/2, the maximum gain to the network’s populations is reached for some net input above the threshold (θ > th) and the gain has an increasing trend in the vicinity of th. Therefore, the release mechanism is indeed much more easily obtained.

Remark 5.2. The function S defined by (5.1) does not entirely satisfy the hypotheses introduced in section 2. In this case F”’(u0) no longer exists for u0 ≠ 1/2 even if we still have F as and . Nevertheless, this property does not affect our analytical results (e.g., the expansion we used in section 3 to prove the existence of stable oscillatory solutions still makes sense since it is done locally about some point u* different from u0). Consequently, all the results found for system (2.1) in previous sections remain valid.

5.1. A competition model that favors escape

In [31] we investigated four distinct neuronal competition models: a model by Wilson [37], one by Laing and Chow (the LC-model [17]), and two other variations of the LC-model that we called depression-LC and adaptation-LC. The latter is exactly the system (2.1) with a symmetric sigmoid function. Besides the models’ commonalities we also noticed some differences: in some cases the bifurcation diagrams show a preference of the system for the escape mechanism (the region of I that corresponds to the decreasing I-T branch is wider; see Figures 3 and 4 in [31]). Moreover, for sufficiently low inhibition in Wilson’s and the depression-LC models the increasing (release-related) branch can disappear completely. Based on the results obtained in the present paper, we can explain those numerical observations.

Let us take, for example, Wilson’s model for binocular rivalry [37, 31]. Since the time-scale for inhibition is much shorter than the time-scale for the (excitatory) firing rate, we can assume that the inhibitory population tracks the excitatory population almost instantaneously. Thus Wilson’s model becomes equivalent to a system of the form

| (5.2) |

where γ is a positive constant and [x]+ is defined as [x]+ = 0 if x < 0 and [x]+ = x if x ≥ 0. As in (2.1), parameters β and g represent here the strength of the inhibition and adaptation; I is the external input strength. We see that in the differential equations for uj the nonlinearity is introduced through a function

that is, and . We note that is asymptotic to γ as x → ∞ and satisfies for x ≤ 0 and , which means Θ is the threshold. To compare system (5.2)’s dynamics with that of (2.1), we will assume without loss of generality that γ = 1.

With the change of variables w1 = βu1 − I, w2 = βu2 − I, A1 = βa1/g − I, A2 = βa2/g − I, system (5.2) is diffeomorphically equivalent to

| (5.3) |

with and .

On the other hand, let us consider the system (2.1) with S as in (2.2). With a similar change of variables w1 = βu1 − I, w2 = βu2 − I, A1 = βa1 − I, A2 = βa2 − I, this system is diffeomorphically equivalent to

| (5.4) |

with θ1(t) and θ2(t) as above. The threshold of S (rewritten as S(x; θ) = 1/(1 + e−r(x−θ))) is θ.

As we can see, up to the specific expression of the gain function, Wilson’s and the adaptation-LC models are equivalent. The reason Wilson’s model shows a preference for the escape mechanism instead of release (while for the adaptation-LC model the interval ranges for escape and release dynamics have equal length) lies in the asymmetric shape of with respect to its threshold. The threshold is Θ but the maximum gain is obtained at ( at ). The resulting behavior is similar to that of S as in (5.1) with u0 < 1/2 (S”(θ) = 0, S(θ) = u0, and θ < th). The role of θ is played by and the corresponding value for u0 is .

Remark 5.3. In fact, the difference between and asymmetric S from (5.1) is more subtle. Take again γ = 1; the restriction of on (0, ∞) is invertible with inverse defined on (0, 1) by . In systems (5.3) and (5.4) the graph of , has a well-like shape similar to that of the graph of if u ∈ (0, u0] and if u ∈ (u0, 1). However, depends on θj (that implicitly means dependence on the slow variable) while does not. That explains why, for S as in (5.1), when they exist, the Hopf bifurcation points always come in pairs (and oscillations start at both points with the same frequency—see (3.5) and (3.6) in section 3); so release and escape oscillatory regimes (even if not equally balanced) always coexist for (2.1). On the contrary, in Wilson’s model (5.2) the dependence of on the slow variable allows us to find a (reduced) parameter regime where only the escape mechanism is possible [31].

5.2. Competition models with nonlinear slow negative feedback