Abstract

Purpose

Low health literacy has been associated with poor health-related outcomes. This paper reports the development of a website for low-literate parents in the Neonatal Intensive Care Unit (NICU) and the findings of a heuristic evaluation and a usability test of this website.

Methods

To address the low literacy of NICU parents, we developed a multimedia educational website that uses visual aids (e.g., pictographs, photographs) and voice-recorded text messages in addition to simplified text. The text was written at a 5th-grade readability level. Three usability experts conducted a heuristic evaluation using 10 heuristics. In a sample of 10 NICU parents, end-user performance was measured by recording the time spent completing tasks and the number of errors, and users’ perceived ease of use and usefulness (PEUU) was assessed.

Results

The three evaluators identified 82 violations across the 10 heuristics. All heuristics, however, received mean severity scores <2, indicating minor usability problems. Mean task completion times ranged from 2.2 seconds (SD=1.3) to 81.2 seconds (SD=30.9). Participants rated the website as easy to use and useful (PEUU mean=4.52, SD=0.53). Appropriate modifications were made based on participants’ comments.

Discussion and Conclusions

Different types of visuals on the website were well accepted by low-literate users, and visuals that agreed with the text improved understanding of the educational materials compared with text alone. The findings suggest that using concrete, realistic pictures and pictographs with clear captions would maximize the benefit of visuals. One emerging theme was “simplicity” in design (e.g., limited use of colors, one font type and size), content (e.g., avoiding lengthy text), and technical features (e.g., limited use of pop-ups). The heuristic evaluation by usability experts and the usability test with actual users provided complementary expertise, giving a richer assessment of the design of a low-literacy website. Categorizing and prioritizing the usability problems facilitated design modification and the implementation of solutions.

Keywords: low literacy, pictographs, visual aids, heuristic evaluation, usability, web-based education, Neonatal Intensive Care Unit

1. Introduction

Low health literacy is a prevalent public health problem. Nearly 90 million adult Americans (about 36%) have basic or below basic health literacy, indicating that they cannot perform the simplest healthcare activities without help [1]. For example, they need healthcare providers to point out and circle the date of a follow-up appointment on a hospital appointment slip [1]. Low health literacy has been associated with poor communication between patients and health care providers [2] and with poor health-related outcomes. These outcomes include increased hospitalization rates, poor adherence to prescribed treatment and self-care regimens, increased medication or treatment errors, failure to seek preventive care, lack of skills needed to navigate the health care system, disproportionately high rates of disease and mortality, and increased use of emergency rooms for primary care [2–6].

To accommodate low-literate adults, most healthcare educators currently simplify the language of educational materials. However, easy-to-read instructions alone only marginally improve low-literate patients’ understanding [7,8] because of these patients’ (1) different way of dealing with written text and (2) preference for visual or audio-based health information over written sources such as newspapers, magazines, books, or brochures [1]. Low-literate adults read word for word, focusing on each word and on accessory details rather than on key concepts; their eyes tend to wander about the page without finding the key points; and because they are unable to scan text, they skip many key information points [9,10]. To address these characteristics of low-literate adults, we developed multimedia educational materials using visuals such as pictographs (simple line drawings representing healthcare actions to be taken), images created from clip art or photographs, and voice-recorded text messages in addition to simplified text. Our rationale for using visuals is based on the cognitive theory of multimedia learning [11, 12], which holds that appropriate visuals facilitate the cognitive learning process of storing information, stimulating retrieval of that information, and reconstructing information through visual associations.

To effectively facilitate the presentation of online information, it is imperative to design and develop websites that are user-centered and take into account the users’ needs. These goals are achieved by evaluating the design at various stages of development and by amending it to suit users’ needs [13]. The design, therefore, progresses in iterative cycles of design, evaluation, and redesign. Developing websites in this way turns out to be less expensive than fixing problems that are discovered after a website has been implemented [14]. Different evaluation techniques are used at different stages of design to examine different aspects of the design.

One particularly useful method for assessing early designs and prototypes of user interface elements, such as dialogue boxes, menus, and navigation structure, is heuristic evaluation, conducted by the designer or a usability expert without the direct involvement of users [15–17]. However, useful as heuristic evaluation is for filtering and refining the design, it cannot assess functions that are not yet implemented, nor can it reveal how end users would actually interact with the website. Therefore, combining heuristic evaluation with usability testing by actual users can provide a richer assessment of website design. Usability testing measures end users’ performance of the typical tasks for which the website is designed [13].

To assess the prototype of our educational website for low-literate adults, we first conducted an expert evaluation (heuristic evaluation). At a later stage of design evaluation, we supplemented the heuristic evaluation with end-user testing in a laboratory-based setting. The purposes of this paper are to report (1) the development of a low-literacy educational website for parents of neonates in the Neonatal Intensive Care Unit (NICU), (2) the findings of a heuristic evaluation, and (3) the findings of a usability test of this website.

2. Review of the Literature

2-1. Web-based Education for Low-Literate Adults

According to the cognitive theory of multimedia learning [11,12], people understand instruction better when they receive words and corresponding pictures rather than words alone, a phenomenon called the “multimedia effect.” Appropriate visuals can facilitate connections between written text and mental images when learners reconstruct the information through visual association [11, 12]. When words are presented alone, learners may try to form their own mental images and connect those with the words, a feat that may be difficult for most learners, especially those with low literacy. For these adults, visuals such as pictographs (simple line drawings showing explicit actions to be taken), video clips, and clip art combined with simplified text are an effective way to deliver healthcare information written in unfamiliar terms or complex phrases. Visuals can also show a step-by-step procedure and make an entire action sequence seem easier to learn than purely textual explanations.

Adding visuals to simplified text has been shown to enhance adults’ comprehension of health information [18–23], but few such studies have focused on low-literate adults [24–26]. A study of non-literate women in rural Cameroon found that those whose health education included simple line drawings had better comprehension and compliance than a control group trained without visual aids [27]. Patients with inadequate or marginal literacy skills, or with cognitive impairment, were the most likely to refer regularly to a “pill card,” which depicts the patient’s daily medication regimen with pill images and icons, both initially and at 3 months [26]. Regardless of literacy level, most pill card users (92%) rated the tool as very easy to understand, and 94% found it helpful for remembering important medication information, such as the name, purpose, or time of administration [26]. Michielutte et al. (1992) showed that low-literacy adults benefit from pictograph-based education more than high-literacy adults [19]. They examined the effects of pictures on 217 women’s comprehension of information on cervical cancer prevention and found a large difference in comprehension scores among women with low reading scores (61% versus 35%) but only a small difference among women with higher reading scores (70% versus 72%). Although these studies reveal significant comprehension effects of visuals for low-literate adults [24, 27] and low-literate adults’ preference for visually aided materials [24, 26], most efforts have used simulated regimens or addressed only pharmaceutical pictographs in medication instruction [26]. Thus, additional research is needed on low-literate adults’ broader healthcare education needs.
In addition, these studies delivered educational material using a paper-based format, whereas low-literate adults are known to prefer receiving health information from non-print media such as a video- or audio-based medium [1]. Indeed, 67% of adults with below basic or basic health literacy receive some or most health information from non-print media [1]. Educational materials need to be delivered through media preferred by this group of people.

2-2. Heuristic Evaluation

A heuristic evaluation is an informal usability inspection technique in which experts evaluate whether user interface elements of a system adhere to a set of usability principles known as heuristics [13–15]. The purpose of the heuristic evaluation method is to identify any serious usability problems that users might encounter and to recommend improvements to the design before implementing the system. By evaluating the interface in the developmental phase, design problems may be identified and corrected earlier rather than later in the design cycle, thus reducing potential usability errors that may later be more costly and prohibitive to rectify [16,17].

Heuristic evaluation is inexpensive, easy to conduct, and provides feedback quickly. It can be performed on very early-stage prototypes, including paper mockups, as well as on later stage prototypes with or without all of the back-end functionality implemented [13, 15]. However, heuristic evaluation has some limitations. The major limitation is its inability to assess functions that are not implemented. Thus, evaluators would have difficulty understanding how the user would ultimately interact with the website and whether the user interface violates any of the heuristics. The other downside is that most usability problems found through heuristic evaluation are minor and would actually cause little trouble for the system’s users [28]. For these reasons, heuristic evaluation is usually supplemented by end-user testing in a laboratory-based or field setting.

2-3. Usability Test

Usability testing is a way of ensuring that interactive systems such as websites are adapted to users and their tasks and that their use has no negative outcomes [29]. Usability testing involves measuring the performance of end users doing typical tasks in controlled laboratory-like conditions [13, 14, 29, 30]. The goal of this fundamental step in the user-centered design process is to obtain objective performance data to assess a system or product in terms of usability goals such as efficiency, avoiding errors, satisfaction, and learnability. Most often, this is accomplished through video-analytic methods: as users perform typical tasks, they are observed and recorded on video, and their interactions with the software are logged. In addition, users’ opinions are generally elicited with usability questionnaires and interviews. Typically, 5–12 users are involved in user testing [13]. Usability testing tends to occur in the later stages of development, when at least a working prototype of the system is in place.

Heuristic evaluation and usability testing have been used to discover usability problems in healthcare websites (e.g., Medline Plus) [31–34], as well as in medical devices such as infusion pumps [16, 17], personal digital assistants [35, 36], and healthcare information systems (e.g., electronic health records) [37–39]. These studies all found that heuristic evaluation and usability testing are useful, efficient, and low-cost methods for evaluating patient safety features of medical devices, interfaces of electronic health records, and educational websites. However, few heuristic evaluations or usability tests have targeted interactive sites designed for low-literate users.

3. Methods

3-1. Development of Low-literacy Text

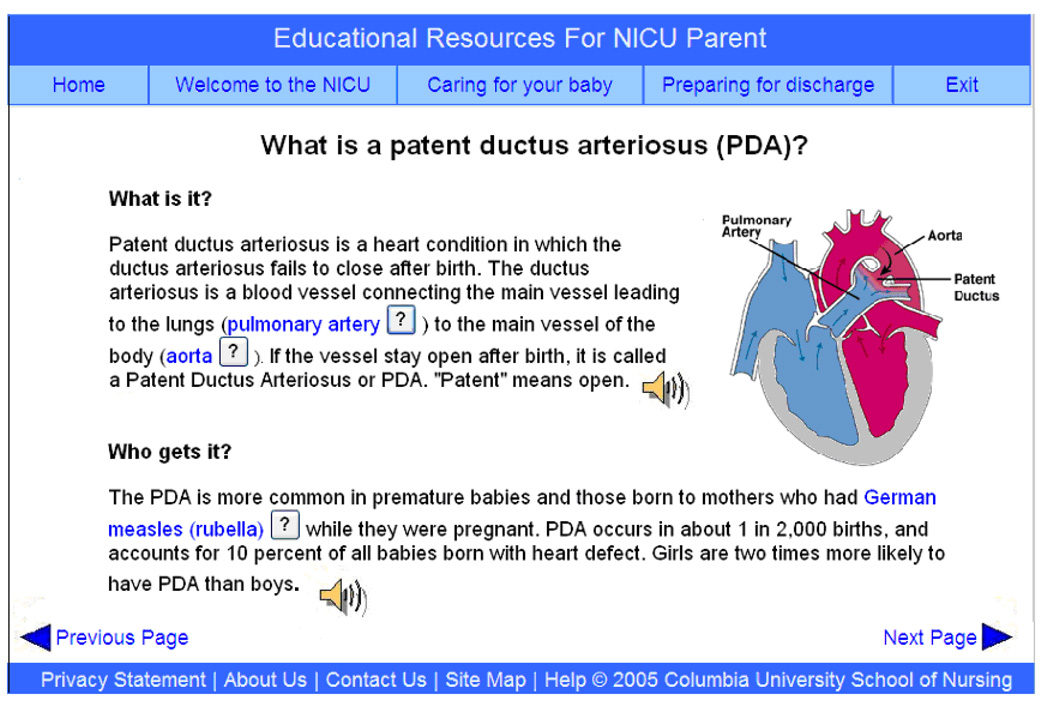

Patent ductus arteriosus (PDA) in the NICU was selected as the clinical focus of the website because it is a condition with multiple treatment options that the family must consider in discussion with the infant’s clinical team. The text of the educational website covers NICU information, information on PDA, commonly used medications, abbreviations, family support, and discharge preparation. The text was written at a 5th-grade readability level, as measured by the Flesch-Kincaid Grade Level in MS Word (Microsoft, Redmond, WA), and was reviewed by a low-literacy expert.
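
The Flesch-Kincaid Grade Level combines average sentence length and average syllables per word: grade = 0.39 × (words/sentences) + 11.8 × (syllables/words) - 15.59. The Python sketch below computes it under one stated assumption: syllables are estimated with a simple vowel-group heuristic, so scores will not exactly match the statistic MS Word reports. The helpers `count_syllables` and `flesch_kincaid_grade` are hypothetical names, not part of any tool used in the study.

```python
import re


def count_syllables(word: str) -> int:
    """Estimate syllables by counting vowel groups (a heuristic, not a dictionary lookup)."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # A trailing silent "e" ("care") usually adds no syllable; "-le" ("little") and "-ee" ("free") do.
    if word.endswith("e") and not word.endswith(("le", "ee")) and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Flesch-Kincaid Grade Level: 0.39*(words/sentence) + 11.8*(syllables/word) - 15.59."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59


if __name__ == "__main__":
    draft = "Your baby is in the NICU. The nurses will help you care for your baby."
    print(round(flesch_kincaid_grade(draft), 1))
```

A script like this is only a quick screen for draft passages; the reported grade level in this study comes from MS Word and expert review.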

Guidelines for low-literacy text were derived from suggested strategies [9, 40]: simplify the text, strictly limit the content, keep content short and focused, write the text the way we talk, use plain language (common words, action verbs, present tense, positive words, personal pronouns [we, you] to engage users, gender-neutral terminology, avoid long strings of nouns), use short sentences (15 to 18 words per sentence), and write in the active voice. Technical words were replaced with simpler words [41, 42]. The text was saved as Microsoft Word or Microsoft PowerPoint files for later conversion to HTML files.
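
Two of these guidelines, short sentences and plain words in place of technical terms, lend themselves to an automated pre-check before expert review. The sketch below flags sentences longer than 18 words and swaps terms using a small glossary. The glossary entries, the helper names, and the naive sentence splitter are all hypothetical; the study’s actual word substitutions [41, 42] are not reproduced here.

```python
import re

# Hypothetical substitution list for illustration only.
PLAIN_WORDS = {
    "administer": "give",
    "physician": "doctor",
    "medication": "medicine",
}

MAX_WORDS = 18  # upper bound of the 15-18 words-per-sentence guideline


def split_sentences(text):
    """Naive splitter: break after ., !, or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def long_sentences(text, max_words=MAX_WORDS):
    """Return the sentences that exceed the word limit."""
    return [s for s in split_sentences(text) if len(s.split()) > max_words]


def simplify_words(text, glossary=PLAIN_WORDS):
    """Replace glossary terms with plain words, keeping initial capitalization."""
    def swap(match):
        word = match.group(0)
        plain = glossary.get(word.lower())
        if plain is None:
            return word
        return plain.capitalize() if word[0].isupper() else plain
    return re.sub(r"[A-Za-z]+", swap, text)


if __name__ == "__main__":
    print(simplify_words("The physician will administer the medication."))
```

Word swaps still need human review: a plain substitute can change clinical meaning in context.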

3-2. Development of Visuals

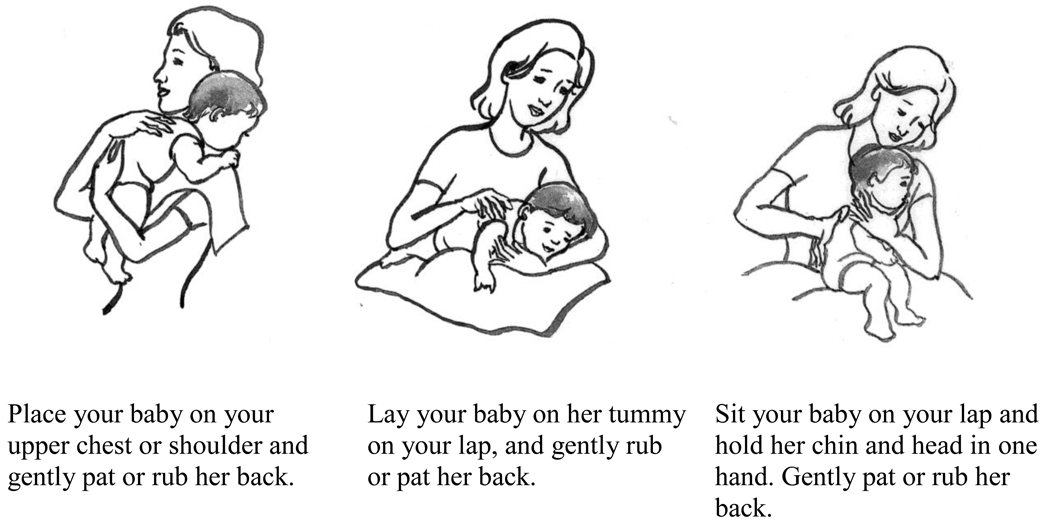

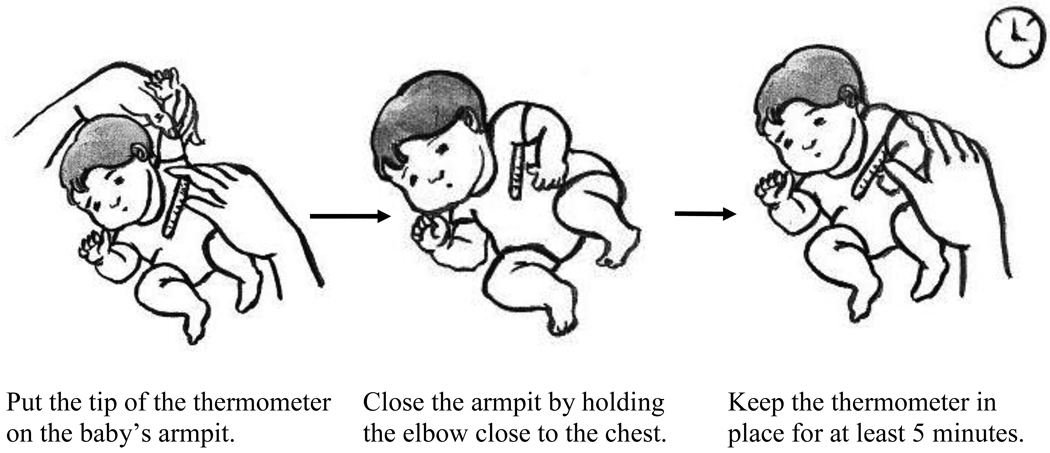

Information from the text was categorized for use with different visuals: pictographs were used to present recommended actions, tasks, or procedures (e.g., “how to burp a baby,” “how to take baby’s temperature” [Figures 1 & 2]), and images created from clip art or photographs were used to present static concepts (e.g., “PDA heart” [Figure 3], “the NICU bed [radiant warmer]”). Freely available healthcare clip art was collected from the Internet (e.g., MS Clipart collections), and commercially available images were purchased (e.g., LifeART images, Waukesha, WI). Photographs of NICU equipment (e.g., NICU cribs, monitors, or incubators) were taken at the NICU of the New York-Presbyterian Children's Hospital, NY.

Figure 1.

How to Burp a Baby

Figure 2.

How to Take Axillary Temperature

Figure 3.

Sample Screenshot of the Low Literacy Website: Information about PDA Heart

To create pictographs, key topics were identified, and for each topic, draft scripts were developed for a list of relevant actions. A professional illustrator then represented the actions for each topic as pictographs broken into bite-size steps. Each pictograph includes action captions and/or prompts such as labels or arrows to help convey the intended meaning of the pictures and to minimize inconsistent interpretations by parents [9, 43]. The authors were actively involved in creating the pictographs, explaining the intended messages and desired outcomes to the illustrator. Visuals were saved as PNG or JPEG files for easy transfer across the Internet. After the text and visuals were created, the text was voice-recorded and saved as sound files.

3-3. Implementation of a Prototype Educational Website for Low-literate Parents

The text files and visuals were integrated and converted to HTML mockup screens using Adobe Dreamweaver, a web-development application. Voice-recorded sound files were linked to relevant text elements, and a small speaker icon was created near each relevant text element to play the sound. The mockup screens were implemented for display on a desktop computer in a usability laboratory (Figure 3).
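
The text-plus-speaker pattern can be illustrated with a small generator that emits an HTML fragment: a paragraph, a speaker button, and an HTML5 `<audio>` element that the button starts with the DOM `play()` method. This is a hypothetical sketch, not the authors’ Dreamweaver output; the function name, element IDs, and file paths are assumptions.

```python
from html import escape


def text_with_audio(element_id: str, text: str, audio_src: str,
                    icon_src: str = "speaker.png") -> str:
    """Return HTML pairing a text element with a speaker icon that plays its recording.

    element_id is assumed to be a simple identifier (letters, digits, hyphens),
    so it is inserted without escaping.
    """
    audio_id = f"{element_id}-audio"
    return (
        f'<p id="{element_id}">{escape(text)} '
        f'<button type="button" aria-label="Listen to this text" '
        f"onclick=\"document.getElementById('{audio_id}').play()\">"
        f'<img src="{escape(icon_src)}" alt="Speaker icon"></button></p>\n'
        f'<audio id="{audio_id}" src="{escape(audio_src)}" preload="none"></audio>'
    )


if __name__ == "__main__":
    print(text_with_audio("pda-intro", "This page explains PDA.", "audio/pda_intro.mp3"))
```

Wrapping the icon in a `<button>` rather than using a bare image gives keyboard access and a clickable target that can be sized generously.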

The interface design for low-literate parents followed specific guidelines: limit key topics to three per screen; use subheadings to introduce the text that follows; use ordered formats, steps, bulleted lists, and comparison and contrast; keep pages short and concise; use large, adequately spaced buttons; and maintain design simplicity by avoiding mouse dragging, scroll bars, drop-down menus, and multiple windows.

3-4. Heuristic Evaluation

The most pertinent heuristics for evaluating a website were identified by reviewing the literature [15, 44]. For this study, we adapted and tailored 10 heuristics: visibility of system status; match between system and the real world; user control and freedom; consistency and standards; help users recognize, diagnose, and recover from errors; error prevention; recognition rather than recall; flexibility and efficiency of use; aesthetic and minimalist design; and help and documentation. After relevant heuristics were identified, we selected specific questions for each usability heuristic based on a commercial heuristic evaluation form [45], audience-centered heuristics for older adults [46], and our own design considerations for a low-literacy educational website. The heuristic evaluation form includes four sections: name of heuristic and general description, specific questions about the heuristics, checkbox for overall severity rating score (0= no usability problem to 4=usability catastrophe), and section for additional comments.
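
One way to represent the four-section evaluation form is a small record per heuristic with a bounded severity score. In the sketch below, the endpoint labels (0 and 4) come from the form described above, while the intermediate labels (1 = cosmetic, 2 = minor, 3 = major) follow Nielsen’s standard severity scale, which the Results section also uses; the class and function names are hypothetical.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import List, Optional


class Severity(IntEnum):
    NO_PROBLEM = 0
    COSMETIC = 1
    MINOR = 2
    MAJOR = 3
    CATASTROPHE = 4


# The 10 heuristics adapted for this study.
HEURISTICS = (
    "Visibility of system status",
    "Match between system and the real world",
    "User control and freedom",
    "Consistency and standards",
    "Help users recognize, diagnose, and recover from errors",
    "Error prevention",
    "Recognition rather than recall",
    "Flexibility and efficiency of use",
    "Aesthetic and minimalist design",
    "Help and documentation",
)


@dataclass
class HeuristicSection:
    """One section of a hypothetical evaluation form, mirroring its four parts."""
    heuristic: str                                       # name of heuristic
    description: str                                     # general description
    questions: List[str] = field(default_factory=list)  # specific questions
    severity: Optional[Severity] = None                  # overall severity checkbox
    comments: str = ""                                   # additional comments

    def rate(self, score: int) -> None:
        # Severity(score) raises ValueError for anything outside 0-4.
        self.severity = Severity(score)


def blank_form() -> List[HeuristicSection]:
    """One empty section per heuristic, in the order listed above."""
    return [HeuristicSection(name, description="") for name in HEURISTICS]
```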

The heuristic evaluation was conducted by three usability experts with backgrounds in nursing, cognitive science, or social science. Experts were not considered research subjects; consequently, IRB approval was not sought for the heuristic evaluation. All three experts had expertise in heuristic evaluation, and one also had experience developing a website for diabetic elders with low-literacy skills. To ensure that all evaluators received the same instructions, they were given a written instruction package with information about the website (the purpose of the site, target users, topics covered, etc.), instructions about the heuristic evaluation, case scenarios, and the heuristic evaluation form. After one author (JC) confirmed that the evaluators understood the instructions, they worked through the website using the case scenarios, which were based on typical user tasks (e.g., retrieving an information module, playing a voice-recorded text message).

Violations were defined as problems that could potentially interfere with end users’ ability to interact effectively with the website. During evaluation sessions, evaluators were encouraged to “think aloud” [47] and verbalizations were voice-recorded using Morae (TechSmith, Okemos, MI), a usability testing tool. After each session, evaluators completed the evaluation form. They were encouraged to be as specific as possible when recording violations and to rank the severity of each heuristic from 0 (no usability problem) to 4 (usability catastrophe). Evaluators’ comments were documented in field notes by one author (JC) along with her own observations.

3-5. Usability Test

Users’ performance was measured by logging the time spent completing each task and the number of errors, and by recording users’ perceived ease of use and usefulness. Perception was measured with the 6-item Perceived Ease of Use and Usefulness (PEUU) scale, rated on a 5-point Likert-type scale from 1 (strongly disagree) to 5 (strongly agree). Reported internal consistency reliabilities for the scale range from 0.91 to 0.94 [48].
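
Scoring the PEUU scale and checking its internal consistency are both short computations. The sketch below averages one participant’s six item responses and computes Cronbach’s alpha, alpha = k/(k - 1) × (1 - sum of item variances / variance of total scores), over a respondents-by-items matrix. It assumes the reported reliabilities are Cronbach’s alpha, and the function names are hypothetical.

```python
from statistics import mean, variance
from typing import Sequence

N_ITEMS = 6  # the PEUU scale has six items


def peuu_score(responses: Sequence[int]) -> float:
    """Mean of one participant's six Likert responses (1 = strongly disagree, 5 = strongly agree)."""
    if len(responses) != N_ITEMS or not all(1 <= r <= 5 for r in responses):
        raise ValueError("expected six responses on a 1-5 scale")
    return mean(responses)


def cronbach_alpha(matrix: Sequence[Sequence[int]]) -> float:
    """Cronbach's alpha for rows = respondents, columns = items (needs >= 2 of each).

    Sample variances are used throughout; because the same n - 1 correction
    appears in numerator and denominator, the ratio matches the population form.
    """
    k = len(matrix[0])
    item_variances = sum(variance(column) for column in zip(*matrix))
    total_variance = variance([sum(row) for row in matrix])
    return k / (k - 1) * (1 - item_variances / total_variance)
```

Averaging `peuu_score` across participants, with its standard deviation, yields the kind of summary reported in the Results.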

Ten NICU parents participated in the usability test. After the study was approved by the authors’ university Institutional Review Board, recruitment flyers were posted in a family waiting room of the NICU at New York-Presbyterian Children's Hospital. Participants were also recruited by referral from NICU staff (physicians, neonatal nurse practitioners, registered nurses, and medical social workers). Criteria for family participation in the study were: (1) family member of a neonate with PDA, (2) reading level below 6th grade (score <16 on the Short Test of Functional Health Literacy in Adults [S-TOFHLA] [49]), (3) able to complete study instruments in English, and (4) age 21 or older unless a parent of the neonate. Family members who met the inclusion criteria were invited to participate, all procedures were explained, and they were free to decide whether to participate. Once informed consent was obtained, 1½-hour sessions were arranged at the usability laboratory.
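
The four inclusion criteria combine into a single boolean screen. The sketch below encodes them as written, including the S-TOFHLA cutoff of <16; the `Candidate` fields and the `is_eligible` function are hypothetical names for illustration, and informed consent remains a separate step.

```python
from dataclasses import dataclass

STOFHLA_CUTOFF = 16  # criterion (2): S-TOFHLA score below 16


@dataclass
class Candidate:
    family_member_of_pda_neonate: bool  # criterion (1)
    stofhla_score: int                  # criterion (2)
    can_complete_english_forms: bool    # criterion (3)
    age: int                            # criterion (4)
    is_parent: bool                     # criterion (4) exception for parents


def is_eligible(c: Candidate) -> bool:
    """True only if all four inclusion criteria are met."""
    return (
        c.family_member_of_pda_neonate
        and c.stofhla_score < STOFHLA_CUTOFF
        and c.can_complete_english_forms
        and (c.age >= 21 or c.is_parent)  # parents under 21 remain eligible
    )
```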

Participants received a brief user training session from JC, including how to use the keyboard and mouse. They were then given a written instruction package with information about the website, instructions about the usability test, case scenarios, and the usability questionnaire. Case scenarios were constructed based on user tasks: logging into the website, completing the demographic questionnaire, retrieving information (e.g., on the echocardiogram, medication information about digoxin), and playing the voice-recorded text message. Participants walked through the case scenarios with JC and were encouraged to think aloud while being audio-taped. At the end of each session, participants were asked to complete the usability questionnaire and were encouraged to be as specific as possible in their comments. JC also took field notes during the session.

3-6. Data Analysis

3-6-1. Heuristic Evaluation

Each evaluator’s comments on the heuristic evaluation form and verbalizations captured during the session were used to generate a separate list of violations; these lists were compiled into a single master list. The frequencies and mean severity rating scores of heuristic violations across the three evaluators were calculated. Duplicate usability problems reported by multiple evaluators were grouped and treated as a single entity.
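
The compilation step can be sketched as merging the evaluators’ lists, collapsing duplicates into one entry, counting unique violations per heuristic, and averaging the evaluators’ severity ratings per heuristic. The sketch assumes each reported problem has already been assigned a shared `problem_key` by the analyst, which is the hard, judgment-based part of the real process; all names and the sample data are hypothetical.

```python
from collections import Counter
from statistics import mean


def compile_master_list(reports):
    """reports: iterable of (evaluator, heuristic, problem_key).

    Returns {(heuristic, problem_key): set of evaluators}; a problem reported by
    several evaluators becomes a single entry.
    """
    master = {}
    for evaluator, heuristic, problem_key in reports:
        master.setdefault((heuristic, problem_key), set()).add(evaluator)
    return master


def violation_frequencies(master):
    """Unique violations per heuristic, with each heuristic's share of the total (%)."""
    counts = Counter(heuristic for heuristic, _ in master)
    total = sum(counts.values())
    return {h: (n, round(100 * n / total, 1)) for h, n in counts.items()}


def mean_severity(ratings):
    """ratings: {heuristic: [one 0-4 score per evaluator]} -> mean rounded to 1 decimal."""
    return {h: round(mean(scores), 1) for h, scores in ratings.items()}


if __name__ == "__main__":
    reports = [  # hypothetical sample data
        ("E1", "Consistency and standards", "button fonts differ"),
        ("E2", "Consistency and standards", "button fonts differ"),
        ("E3", "Match between system and the real world", "hearing icon unclear"),
    ]
    print(violation_frequencies(compile_master_list(reports)))
```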

Qualitative data were retrieved from audio files and comments on the heuristic evaluation form. Evaluators’ verbalizations were transcribed and compared with the field notes for inconsistencies or missing information. These data were analyzed using content analysis [52]; that is, data were coded and categorized into three groups (design and layout, navigation, and function) based on the potential design solutions to be implemented.

3-6-2. Usability Testing

The time spent completing each task (in seconds) and the number of errors for each task were recorded. Qualitative data were retrieved from Morae files, analyzed in the same way as the heuristic evaluation data, and categorized into four groups (design and layout, navigation, content, and function) based on the potential design solutions to be implemented. Responses to the PEUU scale were analyzed using descriptive statistics.
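
The per-task summaries reported in Table I (mean, SD, and range) can be produced with Python’s standard `statistics` module. The sketch assumes the sample standard deviation, since the paper does not say which form was used, and its helper names are hypothetical.

```python
from statistics import mean, stdev


def describe(values):
    """Mean, sample SD, and range of one task's times (seconds) or error counts."""
    return {
        "mean": round(mean(values), 1),
        "sd": round(stdev(values), 1),  # sample SD (n - 1); needs >= 2 values
        "min": min(values),
        "max": max(values),
    }


def table_cell(values):
    """Format as 'mean (SD)', the layout used in Table I."""
    d = describe(values)
    return f"{d['mean']:.1f} ({d['sd']:.1f})"
```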

4. Results

4-1. Heuristic Evaluation

4-1-1. Frequencies and Mean Severity Ratings of Heuristic Violations

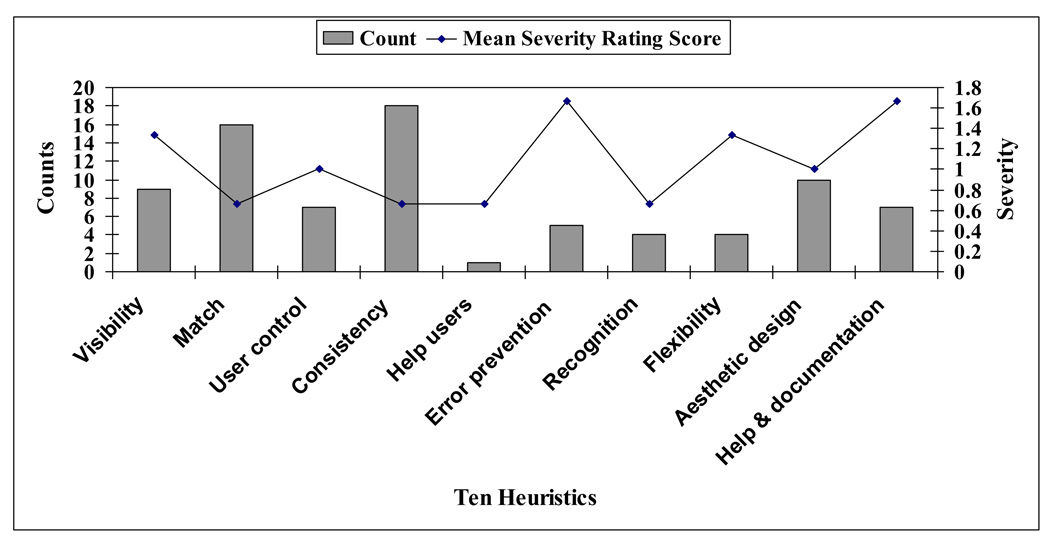

The three evaluators identified 82 violations across the 10 heuristics (Figure 4). Two heuristics, consistency and match, were the most frequently violated: 18 (22%) and 16 (20%), respectively. These heuristics, however, received mean severity rating scores of 0.3 (no usability problem) and 0.6 (cosmetic problem only). Aesthetic design and visibility were the next most frequently violated heuristics: 10 (12%) and 9 (11%), respectively. Together, these four heuristics accounted for 65% of the violations. The heuristic with the fewest violations was help: 1 (1.2%). In terms of mean severity rating scores, two heuristics, error prevention and help, scored highest (1.7, minor usability problem), followed by visibility (1.3, cosmetic problem only) and flexibility (1.3, cosmetic problem only). All heuristics with mean scores <2 were considered minor usability problems.

Figure 4.

Frequencies and Severity Rating Score by Heuristic

4-1-2. Characteristics of Violations and Corresponding Design Solutions

Of the 82 violations reviewed, 72 (88%) were addressed in the design modification. Most of these were related to design and layout (n=37, 51%), followed by function (n=18, 25%) and navigation (n=17, 24%). Ten violations were dismissed because the suggested design solutions were inconsistent with current database requirements (n=2) or because addressing them would have caused more serious violations of other heuristics (n=8). For example, one suggestion, “the use of yellow-colored hypertext against light blue background to make a better contrast,” would go against the web page convention of blue text representing hyperlinks (a violation of Consistency and standards and of Match between system and the real world). It would also result in too many colors on one page (a violation of Aesthetic and minimalist design), distracting the user’s attention. After weighing the benefits and adverse effects, the authors decided to dismiss these ten violations.

The following four examples include a description of each problem, heuristics violated, and the corresponding design solutions that were made before deployment.

Example 1: Design and Layout

Problem description: Buttons throughout the website varied not only in font and color but also between uppercase and lowercase.

Heuristics violated: Consistency and standards

Design solutions: Buttons were changed to be consistent in font (Arial, 18pt), color (black), and case (title case).

Example 2: Design and Layout

Problem description: On the Visitation and Hospital Stay Guidelines page, the headers, “Telephone” and “No Smoking” were underlined, which violates web page conventions of underlined text representing hyperlinks.

Heuristics violated: Match between system and the real world, Consistency and standards

Design solutions: Underlines were removed from the headers and replaced with a larger bold font.

Example 3: Function

Problem description: On the Demographics page, the numeric data entry fields for “age” and “years of formal education” accepted alphabetic characters as well as digits.

Heuristics violated: Error prevention

Design solutions: The numeric data entry fields were reconfigured to accept only digits. A number check function was also added to limit entries to two characters.
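
The two-character numeric check in Example 3 can be sketched as a server-side validator. This is a hypothetical Python function, not the site’s actual code; on the page itself, the standard HTML attributes `inputmode="numeric"`, `maxlength="2"`, and `pattern="[0-9]{1,2}"` would give a comparable client-side check. The error message shows an example value, in the spirit of the later design change that placed a sample format next to each field.

```python
import re

# ASCII digits only ([0-9] rather than \d, which also matches other Unicode digits), 1-2 characters.
TWO_DIGIT_FIELD = re.compile(r"[0-9]{1,2}")


def parse_two_digit_field(raw: str, field_label: str, example: str) -> int:
    """Accept only 1-2 digits (e.g., age or years of formal education)."""
    value = raw.strip()
    if not TWO_DIGIT_FIELD.fullmatch(value):
        raise ValueError(
            f"{field_label}: please type a number with 1 or 2 digits, for example {example}."
        )
    return int(value)
```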

Example 4: Navigation

Problem description: Some menu structures were deep (many menu levels) rather than broad (many items on a menu), so users could get lost while navigating.

Heuristics violated: User Control and Freedom

Design solutions: The menu hierarchy was adjusted so that reaching any information required following no more than three levels of links.
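
The three-level rule in Example 4 is easy to audit automatically if the site map is written down as a nested structure. In the sketch below, the menu labels and helper names are hypothetical; each path’s length is the number of links a user follows to reach a content page.

```python
from typing import Dict, Iterator, List, Optional, Tuple

Menu = Dict[str, Optional["Menu"]]  # label -> submenu, or None for a content page

MAX_LEVELS = 3


def content_paths(menu: Menu, prefix: Tuple[str, ...] = ()) -> Iterator[Tuple[str, ...]]:
    """Yield the sequence of link labels leading to each content page."""
    for label, submenu in menu.items():
        path = prefix + (label,)
        if submenu:  # a non-empty submenu means another level of links
            yield from content_paths(submenu, path)
        else:
            yield path


def too_deep(menu: Menu, max_levels: int = MAX_LEVELS) -> List[Tuple[str, ...]]:
    """Paths that need more than max_levels links to reach their content."""
    return [path for path in content_paths(menu) if len(path) > max_levels]
```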

4-2. Usability Test

4-2-1. NICU Parents’ Demographics

The parent participants (N=10) had a mean age of 44 years (SD=12.8, range 26–59). Five participants were mothers, one was a father, and four were grandmothers. Five participants were White Hispanic, two were White non-Hispanic, two were African American, and one was Asian. The majority of participants (n=7, 70%) used a computer several times per month or more, and the rest (n=3) used a computer several times per week or more. The computer programs they were most accustomed to using were e-mail (n=9, 90%) and the Internet (n=9, 90%).

4-2-2. Task Performance

Among the tasks, logging into the website took the longest time to complete (mean=81.2 seconds, SD=30.9), followed by completing the demographic questionnaire, finding information about digoxin, playing the voice-recorded text message, and finding information about the echocardiogram. Most errors occurred when users (1) answered questions or changed answers in free-text fields by entering more characters than allowed or the wrong type of character (e.g., letters instead of digits), and (2) navigated the lists of medications or abbreviations. Table I shows users’ mean times and numbers of errors in completing tasks.

Table I.

Users’ Mean Time and Number of Errors in Completing Tasks

| | Task 1 (logging in) | Task 2 (taking demographics survey) | Task 3 (finding echo information) | Task 4 (finding digoxin information) | Task 5 (playing voice-recorded text) |
|---|---|---|---|---|---|
| Mean time (SD), seconds | 81.2 (30.9) | 51.9 (27.4) | 2.2 (1.3) | 21.4 (16.8) | 3.6 (1.7) |
| Time range, seconds | 42–108 | 20–95 | 0–3 | 3.5–48.0 | 2–6 |
| Error range | 0–4 | 2–5 | 0–1 | 1–3 | 0–1 |

4-2-3. Participants’ Perceived Ease of Use and Usefulness and Comments

The mean PEUU score was 4.52 (SD=0.53), indicating that participants agreed that the website was easy to use and useful. Most comments concerned design and layout (n=11, 52%), followed by content (n=5, 24%), navigation (n=3, 14%), and function (n=2, 10%). Comments about design and layout were that (1) some clip art was not realistic, (2) colorful visuals were needed, (3) most babies in the pictures were White, (4) some pictographs did not clearly represent the actions, and (5) too many pop-ups were distracting and interfered with focus.

One navigation comment concerned the lack of a feature to go “back to previous page” and “back to Table of Contents.” The remaining comments related to NICU information content: some NICU education information was missing and needed to be incorporated into the website, and some information was not relevant to the current NICU environment. For example, a picture of a mother holding up her baby without supporting the baby’s neck portrayed improper parental behavior, because most NICU babies cannot control their necks. Some text passages needed to be linked or presented together; e.g., the breastfeeding section needed links to kangaroo care. Kangaroo care is the practice of holding a diapered baby on a parent’s bare chest, tummy to tummy and between the breasts, with a blanket draped over the baby's back [54]. The last and most frequently cited comment was “too much text with too much detail, so information often was overwhelming.” For example, on the NICU equipment page, the text was equivalent to five printed, double-spaced pages, and reading it required frequent scrolling and side-to-side eye movement.

5. Discussion

5-1. Heuristic Evaluation

The violation frequencies for each heuristic suggested that website improvements needed to focus on two heuristics: consistency and standards, and match between the system and the real world. However, the average severity ratings for these heuristics ranged between 0.5 and 2, suggesting that the violations were serious enough to require attention but did not need to be fixed immediately. These findings helped guide design modification by prioritizing the violations to be fixed.

The heuristic findings were incorporated into the design modification. For example, a suggested data entry format was added next to data entry fields (e.g., 02/03/1970 for date of birth). For the medication and abbreviation web pages, an alphabetical index menu was added at the top of the page to simplify the menu structure and facilitate navigation. Some clip art was replaced with photographs (e.g., an illustration of kangaroo care was replaced with a photo of a mother in the NICU holding her baby). Colors were also added to emphasize key points; e.g., a different color scheme highlighted each immunization on the Immunization web page. Pictographs were reviewed to determine whether they needed to be further chunked into bite-size steps or whether their captions needed rephrasing to explain each step clearly. The authors also consciously included an equal number of Latino, African American, and Caucasian figures in each information session. Pop-ups were limited to no more than three per screen.
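
The alphabetical index added to the medication and abbreviation pages can be sketched as grouping entries by first letter and emitting one anchor link per letter. The term list, anchor IDs, and function names below are hypothetical.

```python
from collections import defaultdict
from html import escape


def alphabetical_index(terms):
    """Group terms by first letter, sorted case-insensitively within each letter."""
    groups = defaultdict(list)
    for term in terms:
        groups[term[0].upper()].append(term)
    return {letter: sorted(groups[letter], key=str.lower) for letter in sorted(groups)}


def index_menu_html(index):
    """One link per letter that has entries, e.g. <a href="#letter-D">D</a>."""
    return " | ".join(f'<a href="#letter-{letter}">{escape(letter)}</a>' for letter in index)


if __name__ == "__main__":
    idx = alphabetical_index(["digoxin", "Caffeine", "dopamine", "Ampicillin"])
    print(index_menu_html(idx))
```

Listing only letters that actually have entries avoids links that lead nowhere, which keeps the menu short for low-literate readers.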

The heuristic evaluation revealed that our website received the highest number of violations for the heuristic "match between the system and the real world," i.e., icons and visuals that did not deliver a clear meaning or did not match real-world conventions. The heuristic evaluators often misinterpreted the intended meaning of certain images. For example, they did not associate a picture of a physician's hearing device with a "hearing screening test." Based on these results, the authors changed the icons to resemble their intended meaning more closely. The picture of a physician's hearing device was replaced with a picture of a baby wearing earphones, with three electrodes placed on the baby's head. These findings suggest that future designs of low-literacy interfaces should carefully select icons and visual images that are realistic rather than abstract and that closely resemble their intended meaning.

The findings of the heuristic evaluation suggest that it is a useful, efficient, and low-cost method for evaluating the low-literacy interface of an early-stage prototype website; the method detected many usability problems associated with design criteria for a low-literacy interface. The authors used many types of visual images and icons in the website to address the needs of low-literate users, and the heuristic evaluation detected the highest number of violations for the heuristic associated with these visuals and icons.

Although the heuristic evaluation form is comprehensive, several improvements could be made to create a more accurate tool. When the authors generated a separate list of violations from each evaluator's comments on the heuristic evaluation form and from the verbalizations captured during the session, they found that these lists could not be directly compared, because evaluators often identified the same problems with different terminology and/or at different levels of abstraction, and assigned different severity levels to each heuristic. To ensure that all evaluators share the same understanding of the principles behind each heuristic, future evaluations should include a structured training session (a review of the heuristics, how to apply them, real-time examples of severity rating, etc.) with a training manual containing a detailed description of the heuristic evaluation.

Heuristic evaluation has several limitations, one of which is its tendency to detect more minor usability problems than major ones. The authors found that 85% of violations received mean severity scores below 1.5, which corresponds to a cosmetic problem only. These findings are consistent with a report that "usability problems found by heuristic evaluation tend to be dominated by minor problems" [28]. A major limitation of heuristic evaluation is its inability to assess functions that are not yet implemented, which makes it difficult for evaluators to understand how users will ultimately interact with the website and whether the user interface violates any heuristics. When combined with other usability testing methods, heuristic evaluation can provide a richer assessment of a user interface design [28]. The authors therefore supplemented the heuristic evaluation with end-user testing in a laboratory-based setting before deploying the website.
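A summary statistic such as "85% of violations received mean severity scores below 1.5" can be computed by averaging each violation's ratings across evaluators and counting how many averages fall below the cutoff. The sketch below assumes that procedure; the 1.5 threshold comes from the text, while the ratings are hypothetical and do not reproduce the study's data.

```python
from statistics import mean

COSMETIC_THRESHOLD = 1.5  # per the text, a mean below 1.5 is treated as cosmetic

def share_cosmetic(ratings_per_violation, threshold=COSMETIC_THRESHOLD):
    """Fraction of violations whose mean severity (across evaluators) is below threshold."""
    if not ratings_per_violation:
        raise ValueError("no violations to summarize")
    means = [mean(ratings) for ratings in ratings_per_violation]
    return sum(m < threshold for m in means) / len(means)

# Hypothetical severity ratings from three evaluators for four violations.
example = [[1, 1, 2], [0, 1, 1], [2, 2, 3], [1, 0, 1]]
print(share_cosmetic(example))
```

Here the per-violation means are about 1.33, 0.67, 2.33, and 0.67, so three of four fall below 1.5 and the call prints 0.75.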

5-2. Usability Testing

Usability testing showed that participants generally perceived the website as easy to use and useful. Most participants agreed that the readability was appropriate; they were able to understand most of the information, but some had difficulty pronouncing certain medical terms such as patent ductus arteriosus. Accordingly, they identified the voice-recorded message as the most favored feature.

Based on the usability participants' comments, appropriate modifications were made. These included adding NICU information suggested by participants (e.g., a map of the NICU floor, specific NICU equipment [scale, heat lamp, bilirubin lights], parent support groups, and parent education courses/workshops [e.g., car seat, feeding]). The pictures were reviewed and redesigned to accurately reflect current NICU babies and care practices. Related text passages were reorganized so that related topics were presented together, or appropriate links were added. Lengthy text was grouped into smaller chunks and moved to separate pages.
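Splitting lengthy text into smaller chunks on separate pages can be approximated with a simple word budget per page. The following is only a sketch: the 150-word limit and the function name are hypothetical, and the paper does not describe how its pages were actually divided.

```python
def chunk_into_pages(paragraphs, max_words=150):
    """Group paragraphs into pages of at most max_words words each (hypothetical limit).

    Paragraphs are never split mid-sentence; a paragraph longer than
    max_words simply ends up on a page of its own.
    """
    pages, current, count = [], [], 0
    for para in paragraphs:
        words = len(para.split())
        # Start a new page if adding this paragraph would exceed the budget.
        if current and count + words > max_words:
            pages.append(current)
            current, count = [], 0
        current.append(para)
        count += words
    if current:
        pages.append(current)
    return pages
```

Keeping whole paragraphs together trades perfectly even page lengths for passages that still read as complete units, which fits the participants' request for shorter, less overwhelming pages.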

5-3. Specific Design Issues for Low-literate Users

To address the low literacy of our website's users, we used different kinds of visuals: clip art, photographs, and pictographs. Low-literate participants preferred photographs over clip art because photographs were more realistic. For example, they favored actual photographs of kangaroo care (a mother holding her baby at the NICU bassinet) over clip art of kangaroo care. However, the authors found that photographs also raise problems. First, they are loaded with irrelevant details that are likely to draw low-literate users' attention away from the key concepts [9, 10]. Second, photographs are not neutral in gender and culture; many participants perceived the people in photographs as "not like me." While photographs are very good at gaining attention and generating emotional responses, they are less effective at controlling how users interpret the message. As Houts et al. suggest, simple line drawings using stick figures are an alternative way to ensure that low-literate readers interpret visuals as intended [43, 53].

Usability findings revealed that pictographs clearly represented the recommended healthcare actions (e.g., how to take a baby's temperature) and were well accepted by low-literate participants. Participants found pictographs illustrating action steps more understandable than text alone. The study findings, however, suggest that captions should clearly explain each action step and that healthcare actions should be chunked into bite-size steps, both to maximize the effect of pictographs and to prevent misunderstanding of the intended messages.

Another issue emphasized by the usability findings is the importance of detailed instructions for specific features of the website, such as the voice-recorded message, the tabular menu structure, or the free-text fields on the log-in page. Low-literate users frequently entered more characters than allowed in the user name and password fields on the log-in page, and alphabetic rather than numeric characters in the birth-date field on the demographic page. Although the instructions gave the format as "month (MM)," "day (DD)," and "year (YYYY)," these format labels were still not clear enough for low-literate users. More detailed directions with an actual example (e.g., 02/03/2005) would prevent errors. Voice-recorded text was the favored feature among low-literate participants, but some were unable to follow the instructions and had difficulty controlling playback, e.g., starting, stopping, or increasing the volume. User-training sessions that demonstrate how to use each feature would be a good way to teach these skills.
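The recommendation to pair format rules with an actual example can also be carried into the form's error messages. The sketch below is hypothetical: the paper does not describe its validation logic, and the 12-character user name limit and the wording of the messages are assumptions for illustration only.

```python
import re
from datetime import date

MAX_USERNAME_LEN = 12  # hypothetical limit; the paper does not state the real one
DATE_PATTERN = re.compile(r"(\d{2})/(\d{2})/(\d{4})")  # MM/DD/YYYY, numbers only

DATE_HINT = "Please type the date with numbers only, like this: 02/03/2005"

def check_birth_date(text):
    """Return (ok, message); on error the message shows a concrete example."""
    match = DATE_PATTERN.fullmatch(text.strip())
    if not match:
        return False, DATE_HINT
    month, day, year = (int(g) for g in match.groups())
    try:
        date(year, month, day)  # rejects impossible dates such as 02/30/2005
    except ValueError:
        return False, DATE_HINT
    return True, ""

def check_username(text, max_len=MAX_USERNAME_LEN):
    """Reject over-long user names with a plain-language message."""
    if len(text) > max_len:
        return False, f"Your user name can be up to {max_len} characters long."
    return True, ""
```

For instance, check_birth_date("Feb 3 2005") returns False together with the example-based hint, rather than repeating the abstract "MM/DD/YYYY" label that participants found unclear.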

One emerging theme throughout the usability findings was "simplicity" in design (e.g., limited use of colors, one font type and size), content (e.g., avoiding lengthy text), and technical features (e.g., limited use of pop-ups). This website used several formats and technical features (e.g., pop-ups, hypertext links) to enhance interactive learning for low-literate users. Such features can improve user engagement and empowerment by attracting users' attention to the information of interest, sustaining their attention and interest for longer periods, and providing greater depth of content [13]. However, our findings suggest that too many pop-ups or hypertext links distracted users and reduced their focus. Balancing these two goals, providing enough stimulation without too much distraction, is crucial to maximizing the benefit to low-literate users.

6. Conclusion

Web-based educational materials for NICU parents with low literacy skills were developed based on the cognitive theory of multimedia learning [11, 12], and their usability was evaluated using heuristic evaluation and end-user usability testing. Different types of visuals (clip art, photographs, and pictographs) were well accepted by low-literate users and usability experts, and agreement of visuals with text improved understanding of the educational materials over text alone. The findings suggest that using concrete and realistic pictures (e.g., photographs) and pictographs with clear captions and bite-size steps would maximize the benefit of visuals.

The heuristic evaluation by usability experts and the usability test with actual users provide complementary expertise, which is essential in developing a user-centered website. These results facilitate design modification and the implementation of solutions by categorizing and prioritizing the usability problems. Although each method has several limitations, combining heuristic evaluation with end-user usability testing can provide a richer assessment of the user interface design of a website for people with low literacy skills. The usability evaluation would also be more accurate with the addition of a structured training session and a training manual containing a detailed description of the heuristic evaluation and usability test.

Summary Table

What was already known on the topic?

To address low literacy among adults, most healthcare educators currently simplify the language of educational materials. However, easy-to-read instructions alone only marginally improve low-literate patients' understanding, because low-literate learners process written text differently, e.g., reading word for word and focusing on each word and on accessory details.

Heuristic evaluation is inexpensive, easy to conduct, and provides feedback quickly.

Usability testing is a fundamental step in the user-centered design process to assess a system or product in terms of end users' goals, such as efficiency, error avoidance, satisfaction, and learnability.

What this study added to our knowledge

Appropriate visual aids (e.g., pictographs [simple line drawings showing explicit actions to be taken]) combined with simplified text are an effective way to deliver healthcare information written in unfamiliar terms or complex phrases, specifically for people with low literacy skills.

"Simplicity" in design (e.g., limited use of colors, one font type and size), content (e.g., avoiding lengthy text), and technical features (e.g., limited use of pop-ups) is an important theme in the design of low-literacy websites.

The heuristic evaluation by usability experts and the usability test with actual users provide complementary expertise, which can give a richer assessment of the design of a low-literacy website.

Acknowledgements

This project was supported by T32 NR 007969: Reducing Health Disparities through Informatics and P20 NR 007799: Center for Evidence-based Practice in the Underserved. The authors thank David R. Kaufman, Tiffani J. Bright, and Tsai-Ya Lai for participating in the heuristic evaluation.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Authors' contributions

Both authors (JC and SB) have participated in (1) the conception and design of the study, (2) analysis and interpretation of data, and (3) drafting the manuscript and approving the final version of the manuscript. JC also performed the data collection.

Conflict of Interest Statement

Neither author has a conflict of interest.

References

1. Kutner M, Greenberg E, Jin Y, Paulsen C. The Health Literacy of America's Adults: Results From the 2003 National Assessment of Adult Literacy (NCES 2006–483). Washington, DC: U.S. Department of Education, National Center for Education Statistics; 2006.

2. Williams MV, Davis T, Parker RM, Weiss BD. The role of health literacy in patient-physician communication. Fam Med. 2002 May;34(5):383–389.

3. Baker DW, Gazmararian JA, Williams MV, Scott T, Parker RM, Green D, Ren J, Peel J. Health literacy and use of outpatient physician services by Medicare managed care enrollees. J Gen Intern Med. 2004 Mar;19(3):215–220. doi: 10.1111/j.1525-1497.2004.21130.x.

4. Berkman ND, Dewalt DA, Pignone MP, Sheridan SL, Lohr KN, Lux L, Sutton SF, Swinson T, Bonito AJ. Literacy and health outcomes. Evid Rep Technol Assess (Summ). 2004 Jan;(87):1–8.

5. Gordon MM, Hampson R, Capell HA, Madhok R. Illiteracy in rheumatoid arthritis patients as determined by the rapid estimate of adult literacy in medicine (REALM) score. Rheumatology (Oxford). 2002 Jul;41(7):750–754. doi: 10.1093/rheumatology/41.7.750.

6. Lindau ST, Basu A, Leitsch SA. Health literacy as a predictor of follow-up after an abnormal Pap smear: A prospective study. J Gen Intern Med. 2006 Aug;21(8):829–834. doi: 10.1111/j.1525-1497.2006.00534.x.

7. Davis TC, Williams MV, Marin E, Parker RM, Glass J. Health literacy and cancer communication. CA Cancer J Clin. 2002 May–Jun;52(3):134–149. doi: 10.3322/canjclin.52.3.134.

8. Davis TC, Fredrickson DD, Arnold C, Murphy PW, Herbst M, Bocchini JA. A polio immunization pamphlet with increased appeal and simplified language does not improve comprehension to an acceptable level. Patient Educ Couns. 1998 Jan;33(1):25–37. doi: 10.1016/s0738-3991(97)00053-0.

9. Doak CC, Doak LG, Root JH. Teaching patients with low literacy skills. 2nd ed. Philadelphia: J.B. Lippincott; 1996. p. 212.

10. Doak CC, Doak LG, Friedell GH, Meade CD. Improving comprehension for cancer patients with low literacy skills: Strategies for clinicians. CA Cancer J Clin. 1998 May–Jun;48(3):151–162. doi: 10.3322/canjclin.48.3.151.

11. Mayer RE. Multimedia aids to problem-solving transfer. International Journal of Educational Research. 1999;31:611–623.

12. Mayer RE. Instructional technology. In: Durso F, editor. Handbook of applied cognition. Chichester, England: Wiley; 1999. pp. 551–570.

13. Sharp H, Rogers Y, Preece J, editors. Interaction Design: Beyond Human-Computer Interaction. 2nd ed. New York, NY: John Wiley & Sons, Inc.; 2007.

14. Dix A, Finlay J, Abowd GD, Beale R. Human-Computer Interaction. 3rd ed. England: Prentice Hall; 2004.

15. Nielsen J, Mack RL, editors. Usability Inspection Methods. New York: John Wiley & Sons; 1994.

16. Zhang J, Johnson TR, Patel VL, Paige DL, Kubose T. Using usability heuristics to evaluate patient safety of medical devices. J Biomed Inform. 2003 Feb–Apr;36(1–2):23–30. doi: 10.1016/s1532-0464(03)00060-1.

17. Graham MJ, Kubose TK, Jordan D, Zhang J, Johnson TR, Patel VL. Heuristic evaluation of infusion pumps: Implications for patient safety in intensive care units. Int J Med Inform. 2004 Nov;73(11–12):771–779. doi: 10.1016/j.ijmedinf.2004.08.002.

18. Gazmararian JA, Baker DW, Williams MV, Parker RM, Scott TL, Green DC, Fehrenbach SN, Ren J, Koplan JP. Health literacy among Medicare enrollees in a managed care organization. JAMA. 1999 Feb 10;281(6):545–551. doi: 10.1001/jama.281.6.545.

19. Michielutte R, Bahnson J, Dignan MB, Schroeder EM. The use of illustrations and narrative text style to improve readability of a health education brochure. J Cancer Educ. 1992;7(3):251–260. doi: 10.1080/08858199209528176.

20. Leiner M, Handal G, Williams D. Patient communication: A multidisciplinary approach using animated cartoons. Health Educ Res. 2004 Oct;19(5):591–595. doi: 10.1093/her/cyg079.

21. Morrow DG, Hier CM, Menard WE, Leirer VO. Icons improve older and younger adults' comprehension of medication information. J Gerontol B Psychol Sci Soc Sci. 1998 Jul;53(4):P240–P254. doi: 10.1093/geronb/53b.4.p240.

22. Dowse R, Ehlers M. Medicine labels incorporating pictograms: Do they influence understanding and adherence? Patient Educ Couns. 2005 Jul;58(1):63–70. doi: 10.1016/j.pec.2004.06.012.

23. Austin PE, Matlack R 2nd, Dunn KA, Kesler C, Brown CK. Discharge instructions: Do illustrations help our patients understand them? Ann Emerg Med. 1995 Mar;25(3):317–320. doi: 10.1016/s0196-0644(95)70286-5.

24. Mansoor LE, Dowse R. Effect of pictograms on readability of patient information materials. Ann Pharmacother. 2003 Jul–Aug;37(7–8):1003–1009. doi: 10.1345/aph.1C449.

25. Ngoh L. Design, development, and evaluation of visual aids for communicating prescription drug instructions to nonliterate patients in rural Cameroon. Patient Educ Couns. 1997;30(3):257. doi: 10.1016/s0738-3991(96)00976-7.

26. Kripalani S, Robertson R, Love-Ghaffari MH, Henderson LE, Praska J, Strawder A, Katz MG, Jacobson TA. Development of an illustrated medication schedule as a low-literacy patient education tool. Patient Educ Couns. 2007 Jun;66(3):368–377. doi: 10.1016/j.pec.2007.01.020.

27. Sweller J, Van Merrienboer J, Paas F. Cognitive architecture and instructional design. Educational Psychology Review. 1998;10(3):251–296.

28. Nielsen J. Characteristics of usability problems found by heuristic evaluation [Internet]. [cited 2009 Aug 25]. Available from: http://www.useit.com/papers/heuristic/usability_problems.html.

29. Bastien. Usability testing: A review of some methodological and technical aspects of the method. Int J Med Inf. 2009. doi: 10.1016/j.ijmedinf.2008.12.004. [Epub ahead of print]

30. Nielsen J. Usability Engineering. San Diego, CA: Morgan Kaufmann; 1993.

31. Cogdill K. MEDLINEplus Interface Evaluation: Final Report. College Park, MD: College of Information Studies, University of Maryland; 1999.

32. Nahm ES, Preece J, Resnick B, Mills ME. Usability of health web sites for older adults: A preliminary study. Comput Inform Nurs. 2004 Nov–Dec;22(6):326–334. doi: 10.1097/00024665-200411000-00007.

33. Britto, Jimison, Munafo, Wissman, Rogers, Hersh. Usability testing finds new problems for novice users of pediatric portals. Journal of the American Medical Informatics Association. 2009. doi: 10.1197/jamia.M3154. [Epub ahead of print]

34. Lai TY. Iterative refinement of a tailored system for self-care management of depressive symptoms in people living with HIV/AIDS through heuristic evaluation and end user testing. Int J Med Inform. 2007 Oct;76 Suppl 2:S317–S324. doi: 10.1016/j.ijmedinf.2007.05.007.

35. Scandurra, Hägglund, Koch, Lind. Usability laboratory test of a novel mobile homecare application with experienced home help service staff. The Open Medical Informatics Journal. 2008;2:117. doi: 10.2174/1874431100802010117.

36. Treadwell I. The usability of personal digital assistants (PDAs) for assessment of practical performance. Med Educ. 2006;40(9):855. doi: 10.1111/j.1365-2929.2006.02543.x.

37. Bright T, Bakken S, Johnson S. Heuristic evaluation of eNote: An electronic notes system. AMIA Annual Symposium Proceedings; 2006. p. 864.

38. Tang Z, Johnson T, Tindall, Zhang J. Applying heuristic evaluation to improve the usability of a telemedicine system. Telemedicine Journal and E-health. 2006;12(1):24. doi: 10.1089/tmj.2006.12.24.

39. Hyun, Johnson, Stetson, Bakken. Development and evaluation of nursing user interface screens using multiple methods. J Biomed Inform. 2009. doi: 10.1016/j.jbi.2009.05.005. [Epub ahead of print]

40. National Cancer Institute. Clear & Simple: Developing Effective Print Materials for Low-Literate Readers [Internet]. National Institutes of Health; [cited 2009 Aug 1]. Available from: http://www.nci.nih.gov/cancerinformation/clearandsimple.

41. PlainLanguage.gov. Simple Words and Phrases [Internet]. [cited 2009 Aug 25]. Available from: http://www.plainlanguage.gov/howto/wordsuggestions/simplewords.cfm.

42. Doak LG, Doak CC. Pfizer Principles for Clear Health Communication [Internet]. Pfizer Inc.; [cited 2009 Aug 1]. Available from: http://www.pfizerhealthliteracy.com/pdf/PfizerPrinciples.pdf.

43. Houts PS, Doak CC, Doak LG, Loscalzo MJ. The role of pictures in improving health communication: A review of research on attention, comprehension, recall, and adherence. Patient Educ Couns. 2006 May;61(2):173–190. doi: 10.1016/j.pec.2005.05.004.

44. Shneiderman B. Designing the user interface: strategies for effective human-computer interaction. 3rd ed. Reading, Mass: Addison-Wesley; 1998. p. 639.

45. Pierotti D. Heuristic Evaluation - A System Checklist [Internet]. [cited 2009 Aug 2]. Available from: http://www.stcsig.org/usability/topics/articles/he-checklist.html.

46. Chisnell D, Redish J. Designing Web Sites for Older Adults: Expert Review of Usability for Older Adults at 50 Web Sites [Internet]. [cited 2009 Aug 8]. Available from: http://assets.aarp.org/www.aarp.org_/articles/research/oww/AARP-50Sites.pdf.

47. Ericsson KA, Simon HA. Protocol analysis: verbal reports as data. Rev ed. Cambridge, Mass: MIT Press; 1993. p. 443.

48. Davis FD. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly. 1989;13(3):319.

49. Baker DW, Williams MV, Parker RM, Gazmararian JA, Nurss J. Development of a brief test to measure functional health literacy. Patient Educ Couns. 1999 Sep;38(1):33–42. doi: 10.1016/s0738-3991(98)00116-5.

50. Campbell MJ, Edwards MJ, Ward KS, Weatherby N. Developing a parsimonious model for predicting completion of advance directives. J Nurs Scholarsh. 2007;39(2):165–171. doi: 10.1111/j.1547-5069.2007.00162.x.

51. Donelle L, Hoffman-Goetz L, Arocha JF. Assessing health numeracy among community-dwelling older adults. J Health Commun. 2007 Oct–Nov;12(7):651–665. doi: 10.1080/10810730701619919.

52. Weber RP. Basic Content Analysis. Beverly Hills, CA: Sage; 1985.

53. Houts PS, Witmer JT, Egeth HE, Loscalzo MJ, Zabora JR. Using pictographs to enhance recall of spoken medical instructions II. Patient Educ Couns. 2001 Jun;43(3):231–242. doi: 10.1016/s0738-3991(00)00171-3.

54. Ludington-Hoe SM, Golant SK. Kangaroo care: The best you can do to help your preterm infant. New York, NY: Bantam Books; 1993.