Abstract

Background

In the era of pay for performance and outcome comparisons among institutions, it is imperative to have reliable and accurate surveillance methodology for monitoring infectious complications. The current monitoring standard often involves a combination of prospective and retrospective analysis by trained infection control (IC) teams. We have developed a medical informatics application, the Surgical Intensive Care-Infection Registry (SIC-IR), to assist with infection surveillance. The objectives of this study were to: (1) evaluate differences in the data gathered by current IC practices and by SIC-IR; and (2) determine which method has the best sensitivity and specificity for identifying ventilator-associated pneumonia (VAP).

Methods

A prospective analysis was conducted in two surgical and trauma intensive care units (STICU) at a level I trauma center (Unit 1: 8 months, Unit 2: 4 months). Data were collected simultaneously by the SIC-IR system at the point of patient care and by IC using multiple administrative and clinical sources. Data collected by both systems included patient days, ventilator days, central line days, number of VAPs, and number of catheter-related blood stream infections (CR-BSIs). Both VAPs and CR-BSIs were classified using the definitions of the U.S. Centers for Disease Control and Prevention. The VAPs were analyzed individually, and true infections were defined by a physician panel blinded to the surveillance methodology. Using these true infections as a reference standard, sensitivity and specificity for both SIC-IR and IC were determined.

Results

A total of 769 patients were evaluated by both surveillance systems. The median numbers of patient days per month and ventilator days per month differed significantly between IC and SIC-IR. There was no difference in the rates of CR-BSI/1,000 central line days per month. However, VAP rates were significantly different for the two surveillance methodologies (SIC-IR: 14.8/1,000 ventilator days, IC: 8.4/1,000 ventilator days; p = 0.008). The physician panel identified 40 patients (5%) who had 43 VAPs. SIC-IR identified 39 and IC documented 22 of the 40 patients with VAP. SIC-IR had a sensitivity of 97% and a specificity of 100% for identifying VAP; IC had a sensitivity of 56% and a specificity of 99%.

Conclusions

Utilizing SIC-IR at the point of patient care by a multidisciplinary STICU team offers more accurate infection surveillance with high sensitivity and specificity. This monitoring can be accomplished without additional resources and engages the physicians treating the patient.

Surveillance of nosocomial infections traditionally has been carried out by trained infection control (IC) professionals in order to supply timely and accurate data about infectious complications, as well as to provide feedback about the efficacy of infection-related patient care practices [1]. The rationale for tracking hospital-acquired infections (HAI) as a means to reduce overall infection rates can be traced to the U.S. Centers for Disease Control and Prevention (CDC) landmark trial, the Study on the Efficacy of Nosocomial Infection Control (SENIC). The SENIC study concluded that nearly one-third of nosocomial infections could be prevented by infection surveillance and control programs [2, 3]. In the current environment of patient outcome comparisons among institutions, pay for performance calculations, and national efforts to establish quality improvement practices, surveillance of HAI remains particularly relevant.

Currently, IC teams use various combinations of prospective and retrospective methods to monitor HAI. The methodology chosen determines efficacy, cost, and resource expenditure. The accuracy of IC reporting depends on both the timing and the frequency of monitoring [4]. Prospective daily review of all patient records, although considered to be the gold standard, is time-consuming and costly [2]. Other documented methods of surveillance include retrospective or prospective review of targeted patients' records, such as those with positive cultures or those with an intensive care unit stay greater than five days [2]. The success of all of these surveillance methods relies on thorough health care documentation [3, 5, 6].

We have designed and implemented the Surgical Intensive Care-Infection Registry (SIC-IR), which has been validated as an accurate and reliable medical informatics program [7, 8]. SIC-IR is a real-time data acquisition tool, used for both research and clinical care at the point of care, that assists in caring for critically ill patients. In addition to its research registry functions, SIC-IR creates an electronic medical record for all STICU patients.

The purpose of this study was to identify differences in infection-related data collected by SIC-IR and IC, as well as to determine which of these methods has the best sensitivity and specificity for identifying ventilator-associated pneumonia (VAP). We hypothesized that the SIC-IR system would be more accurate in data collection and infection identification than IC.

Patients and Methods

A 12-month prospective analysis was conducted in two surgical and trauma intensive care units (STICUs) at a level I trauma center (Unit 1: 8 months of data collection; Unit 2: 4 months of data collection). Data collected by both the SIC-IR system and IC included patient days, ventilator days, central line days, number of VAPs, and number of catheter-related blood stream infections (CR-BSI).

The SIC-IR is a research-specific prospective registry, designed with input from a multidisciplinary team of surgical intensivists, surgical residents, pharmacists, and computer scientists, that facilitates the evaluation of infectious complications in the STICU. The SIC-IR collects more than 100 clinical variables daily on each STICU patient, including components implicated in infectious complications, such as demographics (age, sex, race), vital statistics, laboratory values, current antibiotic treatment, prior and current infectious complications, co-morbidities, and impact of time and interventions (e.g., length of time in the STICU, time to tracheostomy, and surgical interventions). The SIC-IR is integrated with the hospital's laboratory information system as well as the medication administration record for automatic data loading to ensure registry consistency and accuracy. The program also accumulates information regarding indwelling urinary catheters, central venous access devices, ventilator requirements, use of steroids, blood product transfusions, and all Joint Commission intensive care unit core measures (e.g., venous thromboembolism prophylaxis, gastrointestinal bleeding prophylaxis, and head of bed elevation to 30°) [9]. The SIC-IR system provides functionality for creating patient admission histories and physical findings, daily progress notes, procedure notes, and transfer of patient care documentation.

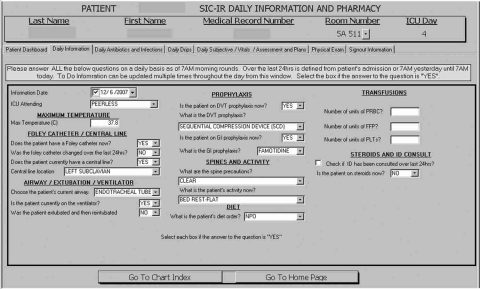

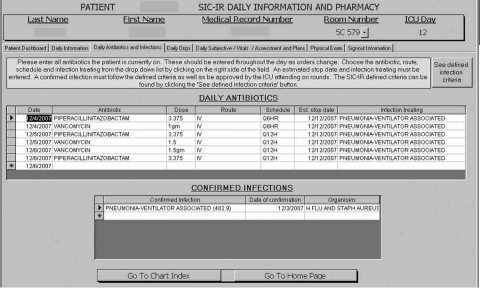

The SIC-IR system used a graphical user interface to obtain infection information daily at the point of patient care, with reporting completed by the physicians treating the patient (see Figs. 1 and 2 for examples of data entry forms). Infection-related data were reviewed daily by a single designated STICU pharmacist, who was present during daily rounds. On days when the designated pharmacist was absent, data were reviewed by the rounding pharmacist and then re-reviewed when the designated pharmacist returned. In this study, a SIC-IR patient day was recorded when either an admission history and physical examination or a daily progress note was completed for a particular patient. If the treating physician responded “yes” to the daily mandatory questions regarding the requirement for mechanical ventilation or the presence of a central venous catheter, a SIC-IR ventilator day or central line day was recorded, respectively. In addition, ventilator and central line data were updated throughout the day as physicians completed mandatory SIC-IR procedure notes when intubating patients or placing central venous catheters.
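The counting rules in the preceding paragraph can be expressed as a tally over daily documentation records. This is a minimal sketch for illustration, not the SIC-IR implementation: the `DailyEntry` record, its field names, and the assumption of at most one record per patient per calendar day are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class DailyEntry:
    """Hypothetical record: one patient's SIC-IR documentation for one calendar day."""
    patient_id: str
    day: date
    admission_hp: bool = False         # admission history and physical completed
    progress_note: bool = False        # daily progress note completed
    ventilator_yes: bool = False       # "yes" to the mandatory mechanical-ventilation prompt
    central_line_yes: bool = False     # "yes" to the mandatory central-line prompt
    intubation_note: bool = False      # intubation procedure note filed that day
    line_placement_note: bool = False  # central line placement procedure note filed that day


def count_device_days(entries):
    """Return (patient_days, ventilator_days, central_line_days).

    Assumes at most one entry per patient per calendar day.
    """
    patient_days = ventilator_days = central_line_days = 0
    for e in entries:
        # A patient day requires an admission H&P or a daily progress note.
        if e.admission_hp or e.progress_note:
            patient_days += 1
        # A ventilator day comes from the daily prompt or an intubation procedure note.
        if e.ventilator_yes or e.intubation_note:
            ventilator_days += 1
        # A central line day comes from the daily prompt or a placement procedure note.
        if e.central_line_yes or e.line_placement_note:
            central_line_days += 1
    return patient_days, ventilator_days, central_line_days


entries = [
    DailyEntry("A", date(2000, 1, 1), admission_hp=True, ventilator_yes=True, central_line_yes=True),
    DailyEntry("A", date(2000, 1, 2), progress_note=True, ventilator_yes=True),
    DailyEntry("B", date(2000, 1, 2), progress_note=True, line_placement_note=True),
    DailyEntry("B", date(2000, 1, 3)),  # no note filed: contributes nothing
]
print(count_device_days(entries))  # (3, 2, 2)
```

Because every count is driven by physician documentation, a day with no completed note contributes nothing, which is the property the study relies on to tie surveillance to the point of care.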

FIG. 1.

Data entry page for Surgical Intensive Care-Infection Registry daily information and pharmacy data. Note mandatory prompts regarding presence of central line and mechanical ventilation.

FIG. 2.

Data entry page for Surgical Intensive Care-Infection Registry antibiotics and confirmed infections. Note that infection, date of confirmation, organism cultured, and antibiotic treatment are recorded daily. The button labeled “See defined infection criteria” will take the user to the U.S. Centers for Disease Control and Prevention definitions of specific infections.

The IC team used multiple clinical and administrative sources to obtain data. Patient days were derived from the hospital's administrative midnight census. Ventilator days were derived from respiratory therapy billing records, in which a ventilator day was defined by the presence of a ventilator in the patient's room. Central line days were calculated from once-daily rounds by a designated STICU nurse, who recorded the presence or absence of a central line. IC surveillance involved one of two IC-trained nurses prospectively reviewing the charts of all patients with positive microbiology culture results, as well as reviewing data daily for all patients who stayed in the STICU for five or more days.
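Because IC patient days come from a midnight census rather than from clinical notes, the two systems can count the same stay differently. As an illustration only (one plausible way such counts can diverge, not a finding of this study), the sketch below contrasts a midnight-census count with a count of calendar days on which a note could be filed; both helper names and the one-note-per-date assumption are hypothetical.

```python
from datetime import datetime, time, timedelta


def midnight_census_days(admit: datetime, discharge: datetime) -> int:
    """Count midnights at which the patient is in the unit (admit <= midnight < discharge)."""
    midnight = datetime.combine(admit.date(), time.min)
    if midnight < admit:
        midnight += timedelta(days=1)  # first midnight on or after admission
    days = 0
    while midnight < discharge:
        days += 1
        midnight += timedelta(days=1)
    return days


def calendar_days_present(admit: datetime, discharge: datetime) -> int:
    """Count distinct calendar dates spanned by the stay (hypothetical: one note per date)."""
    return (discharge.date() - admit.date()).days + 1


# Same-day admission and discharge: not captured by the midnight census.
short_stay = (datetime(2000, 1, 1, 10, 0), datetime(2000, 1, 1, 15, 0))
# Admitted late in the evening, discharged two mornings later.
longer_stay = (datetime(2000, 1, 1, 22, 0), datetime(2000, 1, 3, 9, 0))

for admit, discharge in (short_stay, longer_stay):
    print(midnight_census_days(admit, discharge), calendar_days_present(admit, discharge))
# 0 1
# 2 3
```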

Both VAP and CR-BSI were defined using the CDC definitions [10]. Bronchoalveolar lavage (BAL) specimens were considered positive with growth of ≥10⁴ organisms/mL. In this study, the decision to perform BAL was based on review of chest radiographs, assessment of respiratory secretions, fever, and leukocytosis. A semi-quantitative rolling technique was used for central venous catheter tip cultures, which were considered positive with growth of ≥15 colony-forming units of a single type of bacteria. Central line and ventilator utilization, as well as VAP and CR-BSI rates, were calculated using the National Healthcare Safety Network (NHSN) surveillance method [1]. Rates were calculated by normalizing the number of VAPs and CR-BSIs to 1,000 ventilator days and 1,000 central line days, respectively. Our data were compared with NHSN data using surgical intensive care units as the reference group because fewer than 80% of the patients in our units were trauma patients.
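Only the laboratory component of the case definitions is sketched below; the CDC criteria for VAP and CR-BSI also require clinical and radiographic findings that this code does not model. The constant and function names are hypothetical. The cutoffs are the ones stated above, and the `cutoff` parameter makes it easy to reclassify a BAL result at the stricter 10⁵ organisms/mL threshold considered in the Discussion.

```python
from typing import Mapping

# Laboratory cutoffs stated in the Methods.
BAL_CUTOFF_ORGANISMS_PER_ML = 10**4  # BAL positive at >= 10^4 organisms/mL
CATHETER_TIP_CUTOFF_CFU = 15         # catheter tip positive at >= 15 CFU of one organism


def bal_positive(organisms_per_ml: float, cutoff: float = BAL_CUTOFF_ORGANISMS_PER_ML) -> bool:
    """True when a quantitative BAL culture meets or exceeds the cutoff."""
    return organisms_per_ml >= cutoff


def catheter_tip_positive(cfu_by_organism: Mapping[str, int]) -> bool:
    """True when any single organism reaches the CFU cutoff (counts are not pooled)."""
    return any(cfu >= CATHETER_TIP_CUTOFF_CFU for cfu in cfu_by_organism.values())


print(bal_positive(5 * 10**4))                                      # True at the 10^4 cutoff
print(bal_positive(5 * 10**4, cutoff=10**5))                        # False at the 10^5 cutoff
print(catheter_tip_positive({"organism X": 20}))                    # True
print(catheter_tip_positive({"organism X": 10, "organism Y": 10}))  # False: no single organism >= 15
```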

Data were analyzed using SPSS 15.0 (SPSS Inc., Chicago, IL). Monthly values are expressed as medians with 25th–75th percentiles and were compared between SIC-IR and IC using the Wilcoxon signed-rank test for two related samples. Discrepancies between IC and SIC-IR in reported cases of VAP were analyzed individually, and true infections were identified by a physician panel blinded to the surveillance methodology. The panel consisted of five practicing surgical intensivists at our institution. Using these true infections as the reference standard, the sensitivity and specificity of SIC-IR and IC were determined.
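For readers reproducing the paired comparison outside SPSS, the Wilcoxon signed-rank test can be sketched in plain Python using the large-sample normal approximation. This is a minimal sketch under stated assumptions (zero differences dropped, average ranks for tied absolute differences, tie-corrected variance, no continuity correction); it is not guaranteed to match SPSS output exactly, and the monthly values in the example are hypothetical, not study data.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Two-sided Wilcoxon signed-rank test for paired samples (normal approximation).

    Returns (W_plus, z, p). Zero differences are dropped; tied |differences|
    receive average ranks and the variance is corrected for ties.
    """
    d = [a - b for a, b in zip(x, y) if a != b]
    n = len(d)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda i: abs(d[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        # Extend j over the run of equal absolute differences.
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # 1-based average rank for the tied run
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t**3 - t
        i = j + 1
    w_plus = sum(r for r, di in zip(ranks, d) if di > 0)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p = 2 * (1 - Phi(|z|))
    return w_plus, z, p


# Hypothetical paired monthly counts; with this few pairs an exact test would be
# preferable, so the example only exercises the code.
sicir_monthly = [403, 410, 395, 420]
ic_monthly = [402, 408, 392, 416]
w, z, p = wilcoxon_signed_rank(sicir_monthly, ic_monthly)
print(f"W+ = {w}, z = {z:.3f}, p = {p:.3f}")
```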

Results

This analysis evaluated 769 consecutive patients admitted to the STICU over the 12-month period. Table 1 summarizes SIC-IR and IC NHSN data. The SIC-IR documented significantly more patient days per month than did the IC (p = 0.003). However, IC recorded significantly more ventilator days per month (p = 0.009). There was no significant difference in the number of central line days for the two systems.

Table 1.

Summary of Surgical Intensive Care-Infection Registry vs. Infection Control Reporting of National Healthcare Safety Network Data

| Measure | SIC-IR median | SIC-IR 25th–75th percentiles | IC median | IC 25th–75th percentiles | P value |
|---|---|---|---|---|---|
| Patient days | 403 | 361–425 | 372 | 319–381 | 0.003 |
| Central line days | 208 | 177–225 | 197 | 177–221 | 0.289 |
| Ventilator days | 236 | 189–250 | 239 | 199–262 | 0.009 |
| Central line utilization (%) (NHSN percentile) | 52 (25th–50th) | 45–53 | 57 (25th–50th) | 52–59 | 0.005 |
| Ventilator utilization (%) (NHSN percentile) | 56 (75th–90th) | 54–59 | 67 (>90th) | 61–71 | 0.002 |
| Number of VAPs | 3 | 2–5 | 2 | 1–3 | 0.009 |
| Number of CR-BSIs | 0 | 0–0 | 0 | 0–0.8 | 0.317 |
| VAP rate per 1,000 ventilator days (NHSN percentile) | 14.8 (>90th) | 9.1–22.3 | 8.4 (75th–90th) | 3.8–12.6 | 0.008 |
| CR-BSI rate per 1,000 central line days (NHSN percentile) | 0 (0–10th) | 0–0 | 0 (0–10th) | 0–3.7 | 0.144 |

All values are reported per month.

Utilization and rates are calculated utilizing the NHSN equations:

Utilization = (number of device days/total patient days) × 100

Rate = (number of infections/number of device days) × 1,000

SIC-IR = Surgical Intensive Care-Infection Registry; IC = infection control; NHSN = National Healthcare Safety Network; VAP = ventilator-associated pneumonia; CR-BSI = catheter-related blood stream infection.
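The two NHSN formulas reduce to simple ratios. This sketch assumes, as stated in Methods, that infection rates are expressed per 1,000 device days; the function names and the monthly counts in the example are hypothetical. Because Table 1 reports medians of monthly values, dividing one median by another (for example, 236/403) need not reproduce the tabulated median utilization.

```python
def device_utilization(device_days: int, patient_days: int) -> float:
    """NHSN device utilization: device days as a percentage of patient days."""
    return device_days * 100 / patient_days


def infection_rate(infections: int, device_days: int) -> float:
    """Device-associated infection rate per 1,000 device days."""
    return infections * 1000 / device_days


# Hypothetical single month: 400 patient days, 224 ventilator days, 3 VAPs.
print(device_utilization(224, 400))      # 56.0
print(round(infection_rate(3, 224), 1))  # 13.4
```

Multiplying before dividing keeps round numbers exact in floating point (for instance, 224 × 100 / 400 is exactly 56.0).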

Utilization calculated from SIC-IR data was significantly lower than that calculated from IC data for both ventilators and central lines. At 56%, SIC-IR ventilator utilization was 11 percentage points lower than that of IC and fell between the 75th and 90th percentiles on the NHSN scale for surgical ICUs. In contrast, the IC figure of 67% ventilator utilization per month would exceed the 90th percentile nationally. SIC-IR calculated 52% central line utilization and IC calculated 57%, placing both between the 25th and 50th percentiles for surgical ICUs [1].

The SIC-IR recorded significantly more episodes of VAP per month than did IC (p = 0.009), and when converted to a VAP rate per 1,000 ventilator days per month, SIC-IR had nearly double the VAP rate of IC (p = 0.008). Compared with surgical ICUs nationally, the SIC-IR reported a VAP rate greater than the 90th percentile on the NHSN scale, whereas IC reported a rate between the 75th and 90th percentiles [1]. There were no significant differences between SIC-IR and IC with respect to the number of CR-BSIs per month, nor the CR-BSI rate. The rate of CR-BSI calculated from both SIC-IR and IC data was between zero and the 10th percentile on the NHSN scale [1]. Further comparisons of CR-BSI were not made because of the low incidence.

A second analysis of central line utilization, ventilator utilization, and VAP rates was performed to eliminate differences arising from the way IC and SIC-IR determine ventilator days and patient days. Central line and ventilator utilization for both SIC-IR and IC were normalized to the same administrative denominator (midnight census), and VAP rates were normalized to administrative ventilator days (ventilator billed days). These results are shown in Table 2. Normalization eliminated the statistical difference in central venous catheter utilization; however, ventilator utilization and VAP rates remained significantly different between the two surveillance methodologies.

Table 2.

Summary of Surgical Intensive Care-Infection Registry vs. Infection Control Reporting of National Healthcare Safety Network Data Normalized to Administrative Data

| Measure | Normalized SIC-IR median | Normalized SIC-IR 25th–75th percentiles | Normalized IC median | Normalized IC 25th–75th percentiles | P value |
|---|---|---|---|---|---|
| Central line utilization (%) (NHSN percentile) | 58 (25th–50th) | 51–60 | 57 (25th–50th) | 52–59 | 0.308 |
| Ventilator utilization (%) (NHSN percentile) | 63 (>90th) | 61–65 | 67 (>90th) | 61–71 | 0.008 |
| VAP rate per 1,000 ventilator days (NHSN percentile) | 14.1 (>90th) | 9.1–20.4 | 8.4 (>90th) | 3.8–12.6 | 0.009 |

All values are reported per month.

Utilization and rates are calculated utilizing the NHSN equations normalized to administrative data:

Utilization = (number of device days/midnight census patient days) × 100

Rate = (number of VAPs/number of ventilator days from administrative billing data) × 1,000

SIC-IR = Surgical Intensive Care-Infection Registry; IC = infection control; NHSN = National Healthcare Safety Network; VAP = ventilator-associated pneumonia.
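The difference between Tables 1 and 2 is only in the denominators. In Table 2, each system keeps its own device-day and VAP numerators but divides by shared administrative counts: midnight-census patient days for utilization and billed ventilator days for the VAP rate. The sketch below makes that substitution explicit; `MonthCounts`, both function names, and the example numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MonthCounts:
    """Hypothetical monthly counts produced by one surveillance system."""
    patient_days: int
    ventilator_days: int
    central_line_days: int
    vaps: int


def own_denominators(m: MonthCounts) -> dict:
    """Table 1 style: each system divides by its own patient and ventilator days."""
    return {
        "central_line_util": m.central_line_days * 100 / m.patient_days,
        "ventilator_util": m.ventilator_days * 100 / m.patient_days,
        "vap_rate": m.vaps * 1000 / m.ventilator_days,
    }


def administrative_denominators(m: MonthCounts, census_patient_days: int,
                                billed_ventilator_days: int) -> dict:
    """Table 2 style: shared midnight-census and ventilator-billing denominators."""
    return {
        "central_line_util": m.central_line_days * 100 / census_patient_days,
        "ventilator_util": m.ventilator_days * 100 / census_patient_days,
        "vap_rate": m.vaps * 1000 / billed_ventilator_days,
    }


sicir_month = MonthCounts(patient_days=400, ventilator_days=220, central_line_days=210, vaps=3)
print(own_denominators(sicir_month))
print(administrative_denominators(sicir_month, census_patient_days=370, billed_ventilator_days=240))
```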

The two reporting systems agreed on the diagnosis of VAP in 21 patients. SIC-IR detected 18 patients with VAP whom IC failed to report, whereas IC detected only one patient with VAP whom SIC-IR missed. After investigating all episodes of VAP identified by IC and SIC-IR, the physician panel identified 40 patients who accounted for 43 episodes of VAP. SIC-IR had a sensitivity of 97% and a specificity of 100% for identifying patients with VAP (Table 3); IC had a sensitivity of 56% and a specificity of 99% (Table 4). Of the 18 patients with VAP reported only by SIC-IR, 14 had positive BAL cultures with ≥10⁴ organisms/mL. The remaining four had positive respiratory secretion cultures and had been treated with an antibiotic regimen for VAP with a documented response.
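The overlap counts above, together with the single unconfirmed IC report in Table 4, can be checked with set operations. The patient identifiers are hypothetical placeholders and only the group sizes come from the study. The sketch also makes the reference standard explicit: the panel reviewed only patients flagged by at least one system, so a patient flagged by neither is treated as VAP-free.

```python
def ids(start, stop):
    """Hypothetical patient IDs; only the group sizes are taken from the study."""
    return {f"P{i:03d}" for i in range(start, stop)}


both_confirmed = ids(0, 21)         # 21 confirmed VAPs reported by both systems
sicir_only_confirmed = ids(21, 39)  # 18 confirmed VAPs reported only by SIC-IR
ic_only_confirmed = ids(39, 40)     # 1 confirmed VAP reported only by IC
ic_unconfirmed = ids(40, 41)        # 1 IC report the panel did not confirm (Table 4)

sicir_reported = both_confirmed | sicir_only_confirmed
ic_reported = both_confirmed | ic_only_confirmed | ic_unconfirmed

# Reference standard: panel-confirmed cases among patients flagged by either system.
flagged = sicir_reported | ic_reported
panel_confirmed = flagged - ic_unconfirmed

print(len(panel_confirmed))                   # 40
print(len(sicir_reported & panel_confirmed))  # 39
print(len(ic_reported & panel_confirmed))     # 22
print(len(ic_reported - panel_confirmed))     # 1
```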

Table 3.

Two-by-Two Table for Surgical Intensive Care-Infection Registry-Reported Cases of Ventilator-Associated Pneumonia Used to Calculate Sensitivity and Specificity

| SIC-IR-reported VAP cases | Confirmed VAP | Confirmed no VAP | Total |
|---|---|---|---|
| VAP reported | 39 | 0 | 39 |
| No VAP reported | 1 | 729 | 730 |
| Total | 40 | 729 | 769 |

SIC-IR = Surgical Intensive Care-Infection Registry; VAP = ventilator-associated pneumonia.

Table 4.

Two-by-Two Table for Infection Control-Reported Cases of Ventilator-Associated Pneumonia Used to Calculate Sensitivity and Specificity

| IC-reported VAP cases | Confirmed VAP | Confirmed no VAP | Total |
|---|---|---|---|
| VAP reported | 22 | 1 | 23 |
| No VAP reported | 18 | 728 | 746 |
| Total | 40 | 729 | 769 |

IC = infection control; VAP = ventilator-associated pneumonia.
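Sensitivity and specificity follow directly from the cells of Tables 3 and 4: sensitivity is the fraction of confirmed VAPs that a system reported, and specificity is the fraction of confirmed non-VAP patients that it did not report. The function name below is hypothetical; the cell counts are taken from the two tables, and the code simply evaluates those ratios without rounding.

```python
def sensitivity_specificity(tp, fp, fn, tn):
    """Return (sensitivity, specificity) from two-by-two table cells."""
    sensitivity = tp / (tp + fn)  # reported cases among confirmed VAPs
    specificity = tn / (tn + fp)  # unreported patients among confirmed non-VAPs
    return sensitivity, specificity


# Cell counts from Tables 3 and 4 (rows: reported / not reported; columns: VAP / no VAP).
tables = {
    "SIC-IR": dict(tp=39, fp=0, fn=1, tn=729),
    "IC": dict(tp=22, fp=1, fn=18, tn=728),
}
for system, cells in tables.items():
    sens, spec = sensitivity_specificity(**cells)
    print(f"{system}: sensitivity {sens:.1%}, specificity {spec:.1%}")
```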

Discussion

The results of this study demonstrate that our current practice of monitoring HAI can be improved by utilizing a computerized registry in real time to track all patients in the STICU prospectively. The SIC-IR system documented significantly more patient days per month, but at the same time reported fewer ventilator days and less ventilator and central line utilization when matched against IC. Of 40 patients with confirmed VAP, SIC-IR detected 39, whereas IC recognized only 22, giving SIC-IR a higher sensitivity and specificity. When converted to a VAP rate to allow for both intra-facility and inter-facility comparisons, the VAP rate obtained from SIC-IR was nearly double that of IC (14.8/1000 ventilator days vs. 8.4/1000 ventilator days).

Previous investigations of infection surveillance have highlighted key issues underscored by the results of our analysis. Retrospective examination of all patient records tends to be of low yield, with prior studies reporting sensitivities of 74 and 88%, and requires significant time expenditure [5, 11, 12]. However, concentrating efforts by examining only discharge summaries or singling out records containing positive microbiology cultures does not improve the accuracy of retrospective review [5, 13]. Prospective examination of patients with positive cultures is generally accepted as a better case-identification method, with investigators reporting sensitivities ranging from 75 to 91% [4, 14, 15]. Emori et al. specifically reviewed the accuracy of National Nosocomial Infection Surveillance (NNIS) personnel in finding infections according to site, and found the sensitivities to differ widely, from 68% for pneumonia to 85% for blood stream infections [6]. Reviewing patient data more frequently was associated with greater sensitivities in prior reviews [4], but evaluating only those patients with positive cultures did not improve results [16].

Consistently, models with the greatest sensitivity and specificity for identifying HAI have incorporated computer assistance into the surveillance system. Evans et al. reported that a computer monitoring method identified 90% of HAI, whereas IC teams found only 76% [16]. Similarly, Bouam et al. reported that an automated system missed only 9% of infections, compared with the 41% missed by IC [13].

This study confirmed that monitoring practices by IC personnel reliably have high specificity, but lower sensitivity for detecting HAI. That is, it is rare for IC teams to identify a patient falsely as having disease; however, it is common for teams to fail to detect patients who are truly infected [6]. Nationally, only 90% of HAI actually yield an organism [16]. The diagnosis of the 10% not defined by positive cultures is based solely on clinical judgment, and may be one important group of patients ultimately missed by IC surveys [16].

Several factors may account for the discrepancies between SIC-IR and IC reporting of VAP. Regardless of whether a cutoff of 10⁴ or 10⁵ organisms/mL is used to define a positive BAL culture, IC missed VAPs with positive BAL results: 14 at the 10⁴/mL cutoff used in this study and, even at the more stringent cutoff of 10⁵/mL, eight that SIC-IR documented. Furthermore, IC personnel may have misinterpreted BAL results reported as “normal oral flora,” because oral flora are not normal organisms to culture from the lungs. Identifying patients with infectious complications requires more than looking for positive cultures; indeed, four patients in this analysis were treated for VAP solely on the basis of physician judgment and respiratory secretion cultures. Thus, a system that requires input from physicians, who are ultimately responsible for integrating information and formulating clinical judgments, appears to be a better surveillance model for identifying patients with infection. A study by Crabtree et al. [17] lends further support to surgeon involvement in monitoring infections: the rate of nosocomial infections detected was significantly higher when determined by surgeons dedicated to IC than by CDC-trained IC practitioners. Infection control reports rely on passive surveillance, for example by respiratory therapists and nursing staff, whose primary role is not infection surveillance [2, 18]. Heavily laboratory-based and record-based IC practices may not use ward rounds and routine discussion with caregivers to monitor HAI [2].

A limitation of this study is our assumption that all patients not identified by SIC-IR or IC as having an infection were actually disease-free. Five percent of patients in this analysis were found to have VAP by SIC-IR, IC, or both. We believe that the combined efforts of IC and SIC-IR captured virtually every case of VAP diagnosed. Another limitation is that resource utilization was not analyzed specifically. This could be evaluated by measuring the number of full-time employees involved and the time spent collecting and evaluating data. Although we have no quantitative data, we are confident that SIC-IR requires fewer additional resources: capturing IC data involves several people, retrospective review is time-consuming, and IC data were available only two to three months after the fact. In contrast, SIC-IR captures data in real time as part of the routine work flow to assist with the delivery of patient care. Finally, surveillance is complicated by the lack of a true gold standard for diagnosing pneumonia, although the surgical group and the IC group used similar definitions of VAP.

In summary, the results of this analysis highlight the potential for better infection surveillance in the STICU through the use of a prospective registry that tracks all patients at the point of care. SIC-IR proved to have high sensitivity for detecting VAP while being readily integrated into physician practice. As the CDC has stated, data collection should never be an end in itself; rather, it should be used to achieve the goal of decreasing HAI [18]. However, to decrease HAI and make comparisons among hospitals, the true infection rate must be documented. SIC-IR allows standardization of surveillance methodology using real-time data evaluation 24 hours a day, seven days a week. SIC-IR uses fewer resources and provides more accurate infection surveillance than standard IC practices.

Footnotes

Presented at the 28th Annual Meeting of the Surgical Infection Society, Hilton Head, South Carolina, May 6–9, 2008.

Acknowledgments

This work and J.A. Claridge were supported in part by Grant Number 1KL2RR024990 from the National Center for Research Resources (NCRR), a component of the National Institutes of Health (NIH), and NIH Roadmap for Medical Research. Its contents are solely the responsibility of the authors and do not necessarily represent the official view of NCRR or NIH.

This work was also supported by the Surgical Infection Society Foundation through the Junior Faculty Fellowship awarded to J.A. Claridge in 2007.

Author Disclosure Statement

No competing financial interests exist.

References

1. Edwards JR, Peterson KD, Andrus ML, et al. National Healthcare Safety Network (NHSN) Report, data summary for 2006, issued June 2007. Am J Infect Control. 2007;35:290–301. doi: 10.1016/j.ajic.2007.04.001.

2. Pottinger JM, Herwaldt LA, Perl TM. Basics of surveillance: An overview. Infect Control Hosp Epidemiol. 1997;18:513–527. doi: 10.1086/647659.

3. Scheckler WE, Brimhall D, Buck AS, et al. Requirements for infrastructure and essential activities of infection control and epidemiology in hospitals: A consensus panel report. Society for Healthcare Epidemiology of America. Infect Control Hosp Epidemiol. 1998;19:114–124. doi: 10.1086/647779.

4. Delgado-Rodriguez M, Gomez-Ortega A, Sierra A, et al. The effect of frequency of chart review on the sensitivity of nosocomial infection surveillance in general surgery. Infect Control Hosp Epidemiol. 1999;20:208–212. doi: 10.1086/501615.

5. Belio-Blasco C, Torres-Fernandez-Gil MA, Echeverria-Echarri JL, Gomez-Lopez LI. Evaluation of two retrospective active surveillance methods for the detection of nosocomial infection in surgical patients. Infect Control Hosp Epidemiol. 2000;21:24–27. doi: 10.1086/501692.

6. Emori TG, Edwards JR, Culver DH, et al. Accuracy of reporting nosocomial infections in intensive-care-unit patients to the National Nosocomial Infections Surveillance System: A pilot study. Infect Control Hosp Epidemiol. 1998;19:308–316. Erratum in: Infect Control Hosp Epidemiol. 1998;19:479. doi: 10.1086/647820.

7. Fadlalla AM, Golob JF, Claridge JA. The Surgical Intensive Care-Infection Registry (SIC-IR): A research registry with daily clinical support capabilities. Am J Med Qual. 2009;24:29–34. doi: 10.1177/1062860608326633.

8. Golob JF Jr, Fadlalla AMA, Kan JA, et al. Validation of SIC-IR: A medical informatics system for intensive care unit research, quality of care improvement, and daily patient care. J Am Coll Surg. 2008;207:164–173. doi: 10.1016/j.jamcollsurg.2008.04.025.

9. Joint Commission on Accreditation of Healthcare Organizations. Attributes of core measures performance measures and associated evaluation criteria. http://www.jointcommission.org/

10. U.S. Centers for Disease Control and Prevention. CDC definitions of nosocomial infections. http://health2k.state.nv.us/sentinel/Forms/UpdatedForms105/CDC_Defs_Nosocomial.pdf

11. Haley RW, Culver DH, White JW, et al. The efficacy of infection surveillance and control programs in preventing nosocomial infections in US hospitals. Am J Epidemiol. 1985;121:182–205. doi: 10.1093/oxfordjournals.aje.a113990.

12. Haley RW, Schaberg DR, McClish DK, et al. The accuracy of retrospective chart review in measuring nosocomial infection rates: Results of validation studies in pilot hospitals. Am J Epidemiol. 1980;111:516–533. doi: 10.1093/oxfordjournals.aje.a112931.

13. Bouam S, Girou E, Brun-Buisson C, et al. An intranet-based automated system for the surveillance of nosocomial infections: Prospective validation compared with physicians' self-reports. Infect Control Hosp Epidemiol. 2003;24:51–55. doi: 10.1086/502115.

14. Bouletreau A, Dettenkofer M, Forster DH, et al. Comparison of effectiveness and required time of two surveillance methods in intensive care patients. J Hosp Infect. 1999;41:281–289. doi: 10.1053/jhin.1998.0530.

15. Delgado-Rodriguez M, Gomez-Ortega A, Llorca J, et al. Nosocomial infection, indices of intrinsic infection risk, and in-hospital mortality in general surgery. J Hosp Infect. 1999;41:203–211. doi: 10.1016/s0195-6701(99)90017-8.

16. Evans RS, Larsen RA, Burke JP, et al. Computer surveillance of hospital-acquired infections and antibiotic use. JAMA. 1986;256:1007–1011.

17. Crabtree TD, Pelletier SJ, Raymond DP, et al. Effect of changes in surgical practice on the rate and detection of nosocomial infections: A prospective analysis. Shock. 2002;17:258–262. doi: 10.1097/00024382-200204000-00003.

18. U.S. Centers for Disease Control and Prevention. Outline for Healthcare-Associated Infections Surveillance. Atlanta: April 2006.