Abstract

The phonemic restoration effect refers to the tendency for people to hallucinate a phoneme replaced by a non-speech sound (e.g., a tone) in a word. This illusion can be influenced by preceding sentential context providing information about the likelihood of the missing phoneme. The saliency of the illusion suggests that supportive context can affect relatively low (phonemic or lower) levels of speech processing. Indeed, a previous event-related brain potential (ERP) investigation of the phonemic restoration effect found that the processing of coughs replacing high versus low probability phonemes in sentential words differed from each other as early as the auditory N1 (120-180 ms post-stimulus); this result, however, was confounded by physical differences between the high and low probability speech stimuli, thus it could have been caused by factors such as habituation and not by supportive context. We conducted a similar ERP experiment avoiding this confound by using the same auditory stimuli preceded by text that made critical phonemes more or less probable. We too found the robust N400 effect of phoneme/word probability, but did not observe the early N1 effect. We did however observe a left posterior effect of phoneme/word probability around 192-224 ms -- clear evidence of a relatively early effect of supportive sentence context in speech comprehension distinct from the N400.

Keywords: Phonemic restoration effect, speech comprehension, N400, ERP

1. INTRODUCTION

Like many of our perceptual abilities, speech perception is a difficult computational problem that we humans accomplish with misleading ease. Although we are not typically consciously aware of it, the sonic instantiation of the same utterance can vary dramatically from speaker to speaker or even across multiple utterances from the same speaker (Peterson & Barney, 1952). This superficial variation and other factors such as environmental noise make speech perception a remarkable challenge that is still generally beyond the abilities of artificial speech recognition (O’Shaughnessy, 2003).

So how do we accomplish such an impressive perceptual feat? A partial answer to this question is that we use preceding linguistic context to inform our comprehension of incoming speech. Indeed, natural languages are highly redundant communication systems. In other words, given even a modicum of linguistic context (e.g., a word or two of an utterance), we typically have some idea of how the utterance might continue¹. Studies have clearly demonstrated that preceding sentence context makes it easier for people to perceive likely continuations of that sentence. Specifically, listeners can identify words more rapidly (Grosjean, 1980) and can better identify words obscured by noise (e.g., Miller, Heise, & Lichten, 1951) when the words are (more) likely given previous sentence context. The great benefit of linguistic context is also evident in artificial speech comprehension systems, whose accuracy can increase by orders of magnitude when a word’s preceding context is used to help identify the word (Steinbiss et al., 1995).

While it is clear that preceding context aids speech comprehension, the mechanisms of this process remain largely unknown. In particular, there is no consensus on whether early stages of auditory processing (e.g., initial processing at phonemic and sub-phonemic levels) are affected by top-down constraints from more abstract lexical or discourse processes. “Interactive” models of speech processing (McClelland & Elman, 1986; D. Mirman, McClelland, & Holt, 2006b) generally posit that such top-down effects are possible while “feedforward” models (Norris, McQueen, & Cutler, 2000; Norris & McQueen, 2008) assume no such mechanisms exist. Both types of models are generally consistent with a large body of behavioral findings (McClelland, Mirman, & Holt, 2006), though disagreements as to the implications of some behavioral results do remain (McQueen, Norris, & Cutler, 2006; D. Mirman, McClelland, & Holt, 2006a).

Interactive models seem more neurally plausible given the general preponderance of feedback connections among cortical areas (McClelland et al., 2006), evidence of low level anticipatory activity to simple auditory stimuli (e.g., tone sequences--Baldeweg, 2006; Bendixen, Schroger, & Winkler, 2009), evidence of low level effects of auditory attention (Giard, Fort, Mouchetant-Rostaing, & Pernier, 2000), evidence of low level effects of word boundary knowledge (Sanders, Newport, & Neville, 2002), and general theories of predictive cortical processing (Friston, 2005; Summerfield & Egner, 2009). Nevertheless, it is not unreasonable to assume that top-down effects play little-to-no role in early speech processing for several reasons. First of all, it may be that the mapping from abstract levels of linguistic processing to phonemic and sub-phonemic levels is too ambiguous to be very useful. As already mentioned, the acoustic instantiation of a word can vary greatly between individuals, between repeated utterances by the same individual, and between different linguistic contexts (Peterson & Barney, 1952). Thus knowing the likelihood of the next phoneme may not provide that much information about incoming acoustic patterns. Secondly, the time constraints of any top-down mechanism also might limit its utility. It probably takes around 200 to 300 ms for a speech stimulus to influence semantic and syntactic processing (Kutas, Van Petten, & Kluender, 2006) and yet even more time for that activity to feed back to auditory cortex. If typical speech rates are around 5 syllables per second (Tsao, Weismer, & Iqbal, 2006) and syllables typically consist of two to three phonemes (i.e., 67-100 ms per phoneme), then any abstract linguistic information provided by the preceding 2-5 phonemes cannot aid the low-level processing of an incoming phoneme. Finally, even if they could be useful in principle, the brain may simply not have such feedback mechanisms.

1.1 Previous Research

The time course of abstract linguistic context effects on speech comprehension has been most clearly studied using event-related brain potentials (ERPs). Decades of ERP research have found that sentence context greatly influences the brain’s average response to a word. The most robust effect of sentence context on speech comprehension is on the N400 ERP component (Kutas & Hillyard, 1980; Kutas & Federmeier, 2000; Lau, Phillips, & Poeppel, 2008; Van Petten & Luka, 2006), which occurs from approximately 220 to 600 ms post-word onset and is broadly distributed across the scalp with a medial centro-parietal focus. Multiple studies have shown that N400 amplitude is negatively correlated with the probability of occurrence of the eliciting word given previous sentence context (Dambacher, Kliegl, Hofmann, & Jacobs, 2006; DeLong, Urbach, & Kutas, 2005; Kutas & Hillyard, 1984) or discourse context (van Berkum, Hagoort, & Brown, 1999). However, this correlation can be over-ridden by semantic factors such as the semantic similarity of a word to a highly probable word (Federmeier & Kutas, 1999; Kutas & Hillyard, 1984). Indeed, the N400’s sensitivity to such semantic manipulations, and relative insensitivity to other types of linguistic factors (e.g., syntactic and phonetic relationships) has led to a general consensus that the N400 primarily reflects some type of semantic processing (e.g., the retrieval of information from semantic memory and/or the integration of incoming semantic information with previous context -- Kutas & Federmeier, 2000; Friederici, 2002; Hagoort, Hald, Bastiaansen, & Petersson, 2004). Thus it is clear that supportive sentence context is generally closely related to the semantic processing of a word.

A few pre-N400 effects of sentence comprehension also have been reported, but the effects are not as reliable nor as functionally well understood as the N400 (Kutas et al., 2006). Of particular relevance to this report are effects that are believed to be related to phonemic or relatively low-level semantic processing. The two most studied such effects are the “phonological mismatch negativity” (PMN) and the “N200.”

The PMN (originally called the N200), first reported by Connolly, Stewart, & Phillips (1990), is typically defined as the most negative ERP peak between 150 and 350 ms after the onset of the first phoneme of a word, with a mean peak latency around 235-275 ms (Connolly & Phillips, 1994). The PMN is more negative to low probability phonemes than to higher probability phonemes and (when elicited by sentences) is generally distributed broadly across the scalp with either non-significant fronto-central tendencies (Connolly et al., 1990; Connolly, Phillips, Stewart, & Brake, 1992), a rather uniform distribution (Connolly & Phillips, 1994) or a medial centro-posterior focus (D’Arcy, Connolly, Service, Hawco, & Houlihan, 2004). In general, the distribution of the PMN is very similar to that of the following N400 (e.g., D’Arcy et al., 2004). Connolly and colleagues (Connolly & Phillips, 1994; D’Arcy et al., 2004) have interpreted the PMN as the product of phonological analysis because it can be elicited by improbable yet sensible continuations of sentences (Connolly & Phillips, 1994; D’Arcy et al., 2004), because it occurs before the N400 should occur (Connolly & Phillips, 1994), and because it and the N400 are consistent with distinct sets of neural generators (D’Arcy et al., 2004).

The N200 (originally called the N250) ERP component, first reported by Hagoort and Brown (2000), is very similar to the PMN. It is a negative-going deflection in the ERP to word onsets that typically occurs between 150 and 250 ms (van den Brink, Brown, & Hagoort, 2001; van den Brink & Hagoort, 2004). It is broadly distributed across the scalp, either rather uniformly or with a centro-parietal focus that is rather similar to that of the N400 (Hagoort & Brown, 2000; van den Brink et al., 2001; van den Brink & Hagoort, 2004). Like the PMN, the N200 is more negative to improbable words and is believed to reflect a lower level of linguistic processing than the N400 due to its earlier onset. However, van den Brink and colleagues (2001, 2004) argue that the N200 reflects lexical processing rather than phonological processing because the N200 also has been elicited by highly probable words.

Despite this evidence, it is currently not clear if the PMN or the N200 are indeed distinct from the N400. All three effects are functionally quite similar, in that they are elicited by spoken words and are more negative to improbable words. Although, as mentioned above, Connolly and colleagues have argued that the N400 effect should not be elicited by low probability, sensible words, there is ample evidence that the N400 is indeed elicited by such stimuli (Dambacher et al., 2006; DeLong et al., 2005; Kutas & Hillyard, 1984). Moreover, the topographies of the effects are quite similar and have not been shown to reliably differ. Although some studies have found subtle differences between PMN or N200 topographies and that of the N400 (D’Arcy et al., 2004; van den Brink et al., 2001), other studies have failed to find significant differences (Connolly & Phillips, 1994; Connolly et al., 1990; Connolly et al., 1992; Hagoort & Brown, 2000; Revonsuo, Portin, Juottonen, & Rinne, 1998; van den Brink & Hagoort, 2004). Finally, the fact that the PMN and N200 occur before the N400 could potentially be explained by a subset of stimuli for which participants are able to identify critical phonemes/words more rapidly than usual. This could result from co-articulation effects that precede critical phonemes and could facilitate participants’ ability to anticipate critical phonemes/words or from having particularly early isolation points² in critical words. Indeed, only one of the PMN and N200 studies referenced above (Revonsuo et al., 1998) controlled for co-articulation effects.

In light of these considerations and the results of multiple studies that have failed to find any pre-N400 effects of sentence context on word comprehension (Diaz & Swaab, 2007; Friederici, Gunter, Hahne, & Mauth, 2004; Van Petten, Coulson, Rubin, Plante, & Parks, 1999)³, the existence of pre-N400 effects of sentence context on phonemic or semantic processing remains uncertain.

1.2 Goal of the current study

The goal of this study was to investigate the existence of relatively early level (i.e., pre-N400) effects of sentence context on speech comprehension using a novel paradigm that may be more powerful than that used in conventional speech ERP studies. The experimental paradigm is based on the phonemic restoration effect (Warren, 1970), an auditory illusion in which listeners hallucinate a phoneme replaced by a non-speech sound (e.g., a tone) in a word.

The premise of our approach is that the ERPs to the noise stimulus in the phonemic restoration effect would better reveal context effects on initial speech processing than ERPs to words per se because the clear onset of the noise stimulus should provide clearer auditory evoked potentials (EPs) than are typically found in ERPs time-locked to word onset. Indeed, ERPs to spoken word onsets often produce no clear auditory EPs (e.g., Connolly et al., 1992; Friederici et al., 2004; Sivonen, Maess, Lattner, & Friederici, 2006) presumably due to variability across items, difficult to define word onsets, and auditory habituation from previous words. Moreover, there is some evidence that the phonemic restoration effect is influenced by preceding sentential context that provides information about the likelihood of the missing phoneme (Samuel, 1981). This, the saliency of the illusion (Elman & McClelland, 1988), and fMRI evidence that the superior temporal sulcus (an area involved in relatively low level auditory processing--Tierney, 2010) is involved in the illusion (Shahin, Bishop, & Miller, 2009) suggest that sentence context modifies early processing of phonemic restoration effect noise stimuli and ERPs to the noise stimuli might be able to detect this.

In fact, a study by Sivonen and colleagues (2006) suggests this is the case. Sivonen et al. measured the ERPs to coughs that replaced the initial phonemes of sentence final words that were highly probable or improbable given the preceding sentence context. During the N1 time window (120-180 ms), the ERPs to coughs that replaced highly probable initial phonemes were found to be more negative than those that replaced improbable phonemes. This result, however, was confounded by physical differences between the high and low probability speech stimuli. Thus, their early effect could have been caused by factors such as habituation (Naatanen & Winkler, 1999) and not by supportive sentence context.

We conducted an ERP experiment similar in many respects to that of Sivonen et al., but different in that we avoided their confounding auditory stimulus differences by using the exact same auditory stimuli preceded by text that made the critical phonemes more or less probable. In addition we conducted two behavioral experiments. One was a standard cloze probability norming study (Taylor, 1953) designed to estimate the probabilities of critical phonemes and words in our stimuli. The other was a pilot behavioral version of the ERP experiment reported here to help interpret the reliability of the behavioral results in the ERP experiment.

2. RESULTS

2.1 Experiment 1: Cloze Norming Experiment

Participant accuracy on the comprehension questions was near ceiling regardless of the type of sentence context. Mean accuracy following ambiguous and informative contexts was 97% (SD=3%) and 96% (SD=3%) respectively. Moreover, participants were all at least 85% accurate following either context. With the relatively large number of participants, the tendency for participants to be more accurate following ambiguous contexts reached significance (t(60)=2.14, p=0.04, d=0.27)⁴, but the difference is too small to be of interest.

The effect of preceding sentence context on critical phoneme probability was quantified in two ways: the cloze probability of the implied critical phoneme and the entropy of the distribution of all possible phonemes. Cloze probability is the proportion of participants who provided that phoneme as the next phoneme in the continuation of the sentence stem during the cloze norming task. Entropy is the estimated mean negative log probability (in bits) of all possible phoneme continuations given previous context (Shannon, 1948) and quantifies how unpredictable the next phoneme is⁵. A perfectly predictable phoneme would result in an entropy of 0 bits. As uncertainty increases so does entropy until it reaches a maximal value when all possible phonemes are equally likely (in this case 5.29 bits)⁶. Analogous measures were estimated at the word level of analysis as well.
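As a rough illustration of the two measures just defined, the following sketch computes a cloze probability and a plug-in entropy estimate from a list of norming responses. The function names and the response data are hypothetical, and the plug-in estimator is an assumption; the estimator actually used in the study may differ. The value of 39 equally likely options is likewise only an assumption chosen because it reproduces the stated 5.29-bit maximum.

```python
import math
from collections import Counter

def cloze_probability(responses, target):
    """Proportion of norming responses that match the target continuation."""
    return sum(r == target for r in responses) / len(responses)

def entropy_bits(responses):
    """Plug-in Shannon entropy (in bits) of the empirical response distribution."""
    counts = Counter(responses)
    n = len(responses)
    return sum(-(c / n) * math.log2(c / n) for c in counts.values())

# Hypothetical next-phoneme responses from eight norming participants.
responses = ["k", "k", "k", "k", "t", "t", "s", "m"]

print(cloze_probability(responses, "k"))  # 0.5
print(entropy_bits(responses))            # 1.75

# Maximum entropy for N equally likely options is log2(N).
# N = 39 is an assumption, not a figure taken from the text.
print(round(math.log2(39), 2))            # 5.29
```

With every participant giving the same response, `entropy_bits` returns 0, matching the perfectly predictable case described above.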

Preceding sentence context clearly affected both measures of critical phoneme probability (see Table 1). The cloze probability of implied phonemes was higher (t(147)=14.2, p=1e-29, d=1.17) and phoneme entropy was lower (t(147)=−9.42, p=6e-17, d=0.77) when participants had read the informative context. Similar effects were observed at the word level. The cloze probability of implied words was higher (t(147)=15.71, p=1e-33, d=1.29) and word entropy was lower (t(147)=11.44, p<6e-17, d=0.94) when participants had read the informative context. Implied phoneme and word cloze probability were highly correlated (r=0.94, p<1e-6) as were phoneme and word entropy (r=0.93, p<1e-6).

Table 1.

Mean (SD) estimates of phoneme and word probabilities given different preceding sentence contexts from Experiment 1

| | Cloze Probability of Implied Phoneme | Cloze Probability of Implied Word | Phoneme Entropy (bits) | Word Entropy (bits) |
|---|---|---|---|---|
| Informative Context | 0.50 (0.30) | 0.46 (0.30) | 1.79 (0.88) | 2.19 (1.04) |
| Ambiguous Context | 0.16 (0.22) | 0.10 (0.19) | 2.50 (0.78) | 3.19 (0.93) |

2.2 Experiments 2 & 3: Behavioral Results

Participant comprehension question accuracy in the phonemic restoration experiments was near ceiling. In Experiment 2, mean accuracy after reading ambiguous and informative contexts was 95% (SD=5%) and 95% (SD=3%), respectively, and did not significantly differ (t(33)=0.16, p=.87, d=0.03). In Experiment 3, mean accuracy after reading ambiguous and informative contexts was 94% (SD=4%) and 95% (SD=4%), respectively, and did not significantly differ (t(36)=1.71, p=.09, d=0.28). Minimum participant accuracy following either context was 74% and 80% in Experiments 2 and 3, respectively.

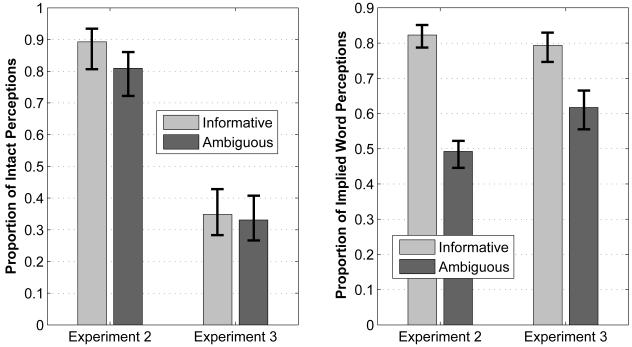

Figure 1 summarizes the analysis of participants’ perceptual reports. In Experiment 2, sentence contexts affected participant perceptions in the expected way. After reading the informative sentence contexts, participants were more likely to perceive the spoken sentences as intact (i.e., not missing any phonemes; t(33)=9.00, p=1e-6, d=1.54). Moreover, when participants reported that the spoken sentence was intact, they were more likely to report implied words (as opposed to the word that was actually spoken) after reading the informative context (t(33)=26.70, p=3e-24, d=4.58). However, in Experiment 3, only the latter finding replicated (t(34)=6.28, p=4e-5, d=1.06)⁷ and participants only tended to be more likely to report intact sentences after reading informative contexts (t(36)=1.40, p=0.08, d=0.23).

Figure 1.

Effects of written sentence context (informative or ambiguous) on perceptions of subsequently heard sentences. (Left) The proportion of trials in which participants reported hearing an intact sentence (i.e., not missing any phonemes). (Right) The proportion of perceived-intact sentences for which participants reported hearing the word that was implied by the informative context (as opposed to the word that was actually spoken). All error bars indicate 95% confidence intervals derived via the bias corrected and accelerated bootstrap (10,000 bootstrap resamples).

2.3 Experiment 3: ERP Results

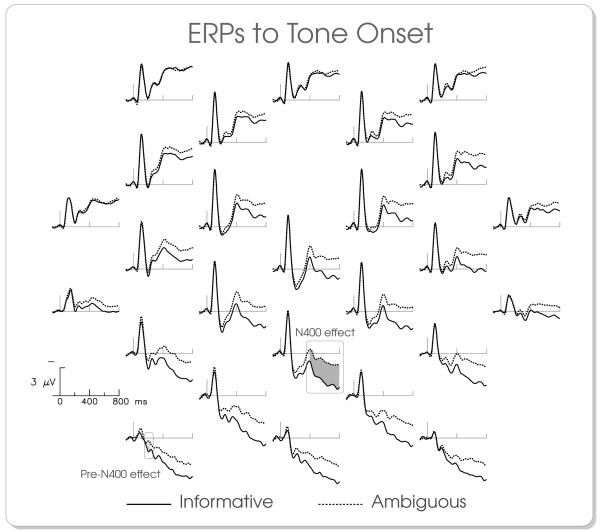

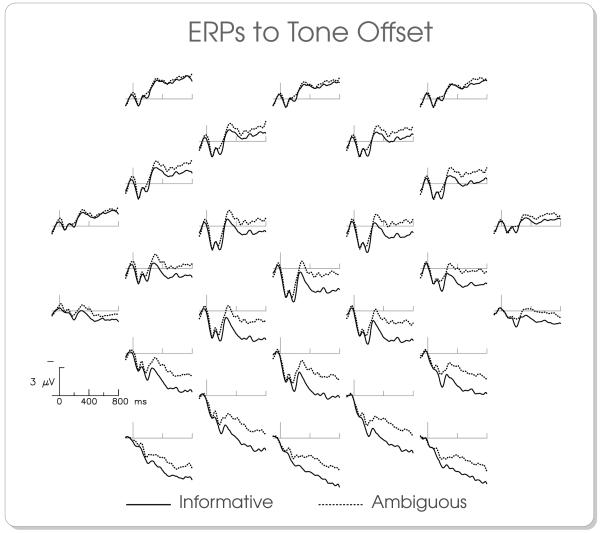

Figure 2 presents the ERPs to tones following informative or ambiguous sentence contexts, time locked to tone onset. A clear auditory N1 is visible from 80 to 140 ms, followed by a P2 from around 160 to 270 ms. Between 200 and 300 ms, the two sets of ERPs begin to diverge at central and posterior electrodes, with the ERPs to tones that replace less probable phonemes/words being more negative (an N400 effect).

Figure 2.

ERPs to the onset of tones that replaced phonemes in sentences that followed informative or ambiguous written sentence contexts. ERP figure locations represent corresponding electrode scalp locations. Up/down on the figure corresponds to anterior/posterior on the scalp and left/right on the figure corresponds to left/right on the scalp. See cartoon heads in Figure 3 for a more exact visualization of electrode scalp locations.

2.3.1 N1

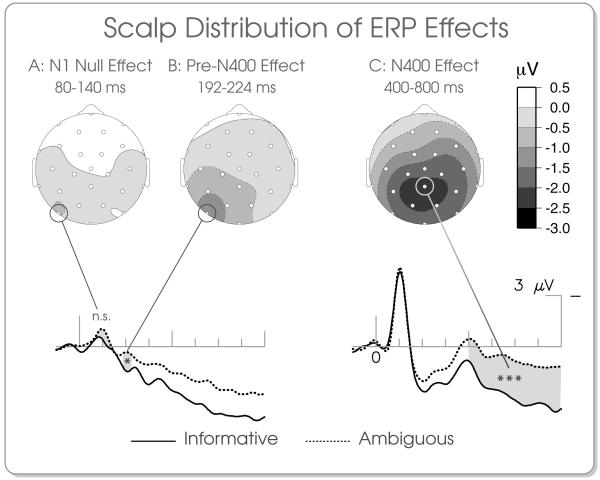

Based on Sivonen et al. (2006), we expected the N1 to tones following informative contexts to be ~1.71 μV more negative than that to tones following ambiguous contexts. To test for this effect, a repeated measures ANOVA was performed on mean ERP amplitudes in the N1 time window (80 to 140 ms) with factors of Sentence Context and Electrode. p-values for this and all other repeated measures ANOVAs in this report were epsilon corrected (Greenhouse-Geisser) for potential violations of the repeated measures ANOVA sphericity assumption. Both the main effect of Context (F(1,36)=0.06, p=0.81) and the Context x Electrode interaction (F(25,900)=1.66, p=0.18) failed to reach significance. Indeed, the difference between conditions tends to be in the opposite direction (Figure 3). To determine if this failure to replicate their N1 effect was due to a lack of statistical power, we performed a two-tailed, repeated measures t-test at all electrodes against a null hypothesis of a difference of 1.71 μV (i.e., that the ERPs to tones following informative contexts were 1.71 μV more negative). The “tmax” permutation procedure (Blair & Karniski, 1993; Hemmelmann et al., 2004) was used to correct for multiple comparisons. This permutation test and all other such tests in this report used 10,000 permutations to approximate the set of all possible (i.e., 2³⁷) permutations. This is 10 times the number recommended by Manly (1997) for an alpha level of 0.05. We were able to reject the possibility of such an effect at all electrodes (all p<1e-6)⁸. Thus, the effect found by Sivonen et al. is clearly not produced in the present experiment.
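The logic of a tmax permutation test for a within-participant contrast can be sketched as follows. This is a minimal illustration under stated assumptions, not the analysis code used in the study: it assumes one difference score per participant and electrode, builds the null distribution by flipping the sign of each participant's whole set of difference scores, and uses the hypothetical names `t_scores`, `tmax_test`, and `null_mean`. The simulated data are invented for the example.

```python
import math
import random

def t_scores(diffs):
    """One-sample t-score per channel; diffs holds one row per participant."""
    n = len(diffs)
    scores = []
    for ch in range(len(diffs[0])):
        col = [row[ch] for row in diffs]
        mean = sum(col) / n
        var = sum((x - mean) ** 2 for x in col) / (n - 1)
        scores.append(mean / math.sqrt(var / n))
    return scores

def tmax_test(diffs, null_mean=0.0, n_perm=10000, seed=0):
    """Two-tailed tmax test of mean difference == null_mean at every channel."""
    rng = random.Random(seed)
    # Centre on the null value so that sign flips are valid under the null.
    centered = [[x - null_mean for x in row] for row in diffs]
    observed = t_scores(centered)
    null_max = []
    for _ in range(n_perm):
        flipped = []
        for row in centered:
            sign = rng.choice((-1.0, 1.0))  # same flip for all channels
            flipped.append([sign * x for x in row])
        # Keep only the largest |t| across channels for this permutation.
        null_max.append(max(abs(t) for t in t_scores(flipped)))
    # Corrected p-value: share of permutations whose max |t| reaches the observed |t|.
    p_values = [sum(m >= abs(t) for m in null_max) / n_perm for t in observed]
    return observed, p_values

# Simulated difference scores: 12 participants x 3 channels (illustrative only).
rng = random.Random(1)
diffs = [[rng.gauss(3.0, 1.0), rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)]
         for _ in range(12)]
t_obs, p_vals = tmax_test(diffs, null_mean=0.0, n_perm=2000)
print(t_obs, p_vals)
```

Because each permutation contributes only its maximum |t| across channels, the resulting p-values control the family-wise error rate over all channels tested. Setting `null_mean` to a nonzero value corresponds to testing against a hypothesized effect size, as in the test against the 1.71 μV difference described above.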

Figure 3.

ERPs to the onset of tones that replaced phonemes in sentences that followed informative or ambiguous written sentence contexts at electrodes of interest. Scalp topographies visualize the effects of sentence context (ambiguous-informative) on ERPs averaged across three different time windows of interest. Asterisks indicate significant effects (p<0.05).

2.3.2 N400

In addition to an N1 effect, a somewhat delayed N400 effect of context was expected based on Sivonen et al.⁹. A clear tendency for a late N400 effect was found in our data between 400 and 800 ms (Figure 2). A repeated measures ANOVA on mean ERP amplitudes in this time window¹⁰ found that the ERPs to tones following ambiguous contexts were indeed more negative than those to tones following informative contexts (main effect of Context: F(1,36)=23.45, p<1e-4). Moreover, this effect had a canonical N400 distribution (Figure 3), being largest at central/posterior electrodes and slightly right lateralized (Electrode x Context interaction: F(25,900)=14.88, p<1e-4).

2.3.3 Pre-N400 effect

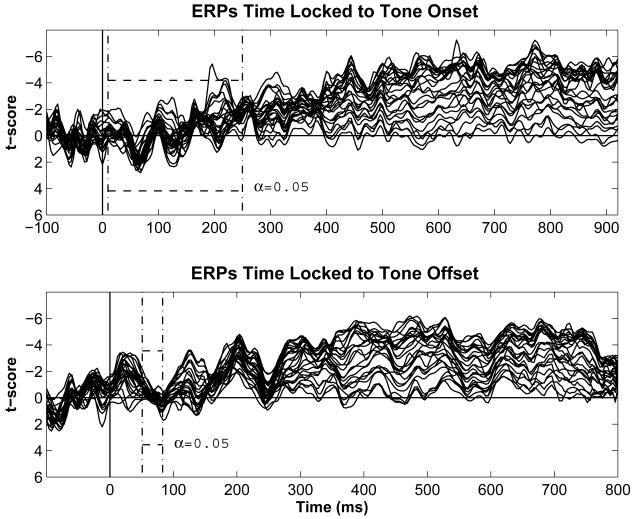

To determine if context produced any ERP effects prior to the N400 effect, two-tailed repeated measures t-tests were performed at every time point from 10 ms (the onset of the initial cortical response to an auditory stimulus -- Naatanen & Winkler, 1999) to 250 ms (an approximate lower-bound on the onset of the N400 effect to speech in standard N400 paradigms) and at all 26 scalp electrodes. Time points outside of this time window were ignored for this analysis in order to increase statistical power by minimizing the number of statistical tests. Again, the tmax permutation procedure was used to correct for multiple comparisons. This analysis (Figure 4: Top) found that ERPs to tones following informative contexts were more positive than those following ambiguous contexts from 192 to 204 ms and 212 to 224 ms at the left lateral occipital electrode (LLOc; all p<0.05). The mean ERP difference between conditions in this time window (192-224 ms) shows a left-posterior distribution (Figure 3) that is markedly distinct from that of the N400 effect.

Figure 4.

Butterfly plots of difference wave t-scores (i.e., difference wave amplitude divided by difference wave standard error) at all electrodes. Difference waves were obtained by subtracting ERPs to tones following informative contexts from those following ambiguous contexts. Each waveform corresponds to a single electrode. Time windows analyzed via tmax permutation tests are indicated with dot-dashed lines. Critical t-scores are indicated by dashed lines. If difference wave t-scores exceed critical t-scores then they significantly deviate from zero (α=0.05). The visualized time range is shorter (−100 to 800 ms) for ERPs time locked to tone offset because the EEG artifact correction procedure did not extend beyond 800 ms post-tone offset for many trials.

Given the mean duration of tones (141 ms), it is possible that this effect was produced by speech following the tone rather than the tone itself. To determine if this was the case, ERPs were formed time locked to tone offset (Figure 5) and effects of context were tested for with the tmax procedure in the time window where the LLOc effect should occur, 51 to 83 ms (i.e., 192-224 ms post-tone onset minus the 141 ms mean tone duration). This analysis found no significant effects (all p>0.68; Figure 4: Bottom).

Figure 5.

ERPs to the offset of tones that replaced phonemes in sentences that followed informative or ambiguous written sentence context. ERP figure locations represent corresponding electrode scalp locations. Up/down on the figure corresponds to anterior/posterior on the scalp and left/right on the figure corresponds to left/right on the scalp. See ERP cartoon head in Figure 3 for a more exact visualization of electrode scalp locations.

To assess the functional correlates of the LLOc effect, repeated measures ordinary least squares (OLS) multiple regression (Lorch & Myers, 1990) was performed on the mean single trial amplitude at electrode LLOc from 192 to 224 ms post-tone onset. Predictors in the analysis were: (1) the mean of the cloze probabilities of the implied phonemes and words, (2) the mean of phoneme and word entropies, (3) whether or not the sentence was perceived as intact, (4) whether or not the implied word was perceived, and (5) the number of words in the written sentence context. The averages of phoneme and word probabilities and entropies were used because they were so highly correlated that including each individual phoneme and word predictor would greatly diminish the power of the analysis to detect a relationship with cloze probability or entropy. One participant was excluded from the analysis because he perceived all sentences as missing phonemes.
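One common way to carry out a repeated measures regression of this kind is to fit a separate OLS model to each participant's single-trial data and then test the resulting coefficients against zero across participants. The sketch below illustrates that two-stage idea on simulated data. It assumes this two-stage form, uses only two of the predictors for brevity, and every name (`solve`, `ols`, `one_sample_t`) and simulated value is hypothetical rather than taken from the study.

```python
import math
import random

def solve(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def ols(rows, y):
    """Least-squares coefficients (intercept first) via the normal equations."""
    x = [[1.0] + list(r) for r in rows]
    k = len(x[0])
    xtx = [[sum(r[i] * r[j] for r in x) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(x, y)) for i in range(k)]
    return solve(xtx, xty)

def one_sample_t(values):
    """Mean and t-score (df = n - 1) of values against zero."""
    n = len(values)
    mean = sum(values) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / (n - 1))
    return mean, mean / (sd / math.sqrt(n))

# Stage 1: one regression per (simulated) participant.
rng = random.Random(0)
slopes = []
for _ in range(10):                       # 10 hypothetical participants
    true_slope = 1.5 + rng.gauss(0.0, 0.2)
    rows, y = [], []
    for _ in range(60):                   # 60 hypothetical trials each
        cloze = rng.random()
        intact = float(rng.random() < 0.5)
        rows.append([cloze, intact])
        y.append(0.5 + true_slope * cloze + rng.gauss(0.0, 0.5))
    slopes.append(ols(rows, y)[1])        # keep the cloze coefficient

# Stage 2: test the per-participant coefficients against zero.
mean_slope, t = one_sample_t(slopes)
print(mean_slope, t)
```

Treating each participant's coefficient as a single observation means the second-stage t-test has degrees of freedom equal to the number of participants minus one.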

The only significant predictor of EEG amplitude found by the analysis was the cloze probability of the implied phoneme/word (Table 2). To determine the degree to which collinearity between predictors may have hurt the power of the regression analysis, the co-predictor R² was calculated for each predictor (Berry & Feldman, 1985). The co-predictor R² for a predictor is obtained by using OLS multiple regression to determine how much of that predictor’s variance can be explained by the rest of the predictors. R² achieves a maximal value of one (i.e., perfect collinearity) if the other predictors can explain all of the variance. R² achieves a minimal value of zero if the other predictors cannot explain any of the variance. Four of the predictors show a relatively high degree of collinearity (0.6<=R²<=0.7). However, since the degree of collinearity was nearly equal for all four variables, none were disproportionately affected and collinearity alone cannot explain why three of these four predictors were not shown to be reliable.
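The co-predictor R² described above can be sketched by regressing each predictor in turn on all of the others. The code below is an illustrative sketch on simulated data, with hypothetical function names and predictors; it is not the study's analysis code.

```python
import random

def solve(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def r_squared(rows, y):
    """R^2 of an OLS fit (with intercept) of y on the columns of rows."""
    x = [[1.0] + list(r) for r in rows]
    k = len(x[0])
    xtx = [[sum(r[i] * r[j] for r in x) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(x, y)) for i in range(k)]
    coefs = solve(xtx, xty)
    fitted = [sum(c * v for c, v in zip(coefs, r)) for r in x]
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot

def co_predictor_r2(rows):
    """For each predictor, the R^2 from regressing it on the remaining ones."""
    out = []
    for j in range(len(rows[0])):
        target = [r[j] for r in rows]
        others = [[v for i, v in enumerate(r) if i != j] for r in rows]
        out.append(r_squared(others, target))
    return out

# Simulated predictors: a and b are strongly related, c is independent.
rng = random.Random(0)
rows = []
for _ in range(200):
    a = rng.gauss(0.0, 1.0)
    b = a + rng.gauss(0.0, 0.3)
    c = rng.gauss(0.0, 1.0)
    rows.append([a, b, c])

r2 = co_predictor_r2(rows)
print([round(v, 2) for v in r2])
```

In this simulation the first two values come out close to one and the third close to zero, which mirrors the interpretation given above: values near one signal that a predictor is largely redundant with the others.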

Table 2.

Results of a multiple regression analysis of the mean EEG amplitude from 192 to 224 ms post-tone onset at electrode LLOc. Degrees of freedom for all t-scores is 35. R² values for the full regression model, participant predictors, and non-participant predictors are 0.024, 0.004, and 0.020 (respectively). These R² values are comparable to other applications of regression analysis to single trial EEG data (Dambacher et al., 2006).

| Predictor | Mean Coefficient | 95% Coefficient Confidence Interval | t-Score | p-Value | Cohen’s d | Median (IQR) Collinearity R² |
|---|---|---|---|---|---|---|
| Intercept | 0.07 | −1.66/1.80 | 0.08 | 0.93 | 0.01 | NA |
| Implied Phoneme/Word Cloze Probability | 1.42 | 0.02/2.82 | 2.06 | 0.05* | 0.34 | 0.68(0.06) |
| Phoneme/Word Entropy | 0.002 | −0.36/0.36 | 0.01 | 0.99 | 0.00 | 0.67(0.06) |
| Context Length | 0.02 | −0.09/0.12 | 0.30 | 0.76 | 0.05 | 0.06(0.04) |
| Sentence Perceived as Intact | 0.24 | −0.72/1.20 | 0.51 | 0.61 | 0.09 | 0.64(0.23) |
| Implied Word Perceived | 0.58 | −0.76/1.91 | 0.88 | 0.39 | 0.15 | 0.65(0.24) |

Abbreviations: IQR=interquartile range, NA=not applicable.

\* indicates p-value less than 0.05.

Finally, in an attempt to determine if the LLOc effect reflects phoneme or word level processing, a second repeated measures OLS multiple regression analysis was performed. The response variable was the same as in the previous regression analysis and the predictors in the analysis were: (1) the mean of the cloze probabilities of the implied phonemes and words, (2) whether or not the tone replaced word initial phonemes, and (3) the product of the first two predictors. The logic of the analysis was that if the LLOc effect is a correlate of word level processing, the relationship between the effect and cloze probability could vary as a function of the missing phonemes’ word position. This interaction between cloze and word position would be detected by the third predictor, which acts as an interaction term in the regression model. Additional predictor variables were ignored to increase the power of the analysis and because only cloze probability was shown to reliably correlate with the LLOc effect in the original regression analysis. Results of the analysis are presented in Table 3 and show no evidence of an effect of word position.

Table 3.

Results of a multiple regression analysis of the mean EEG amplitude from 192 to 224 ms post-tone onset at electrode LLOc. Degrees of freedom for all t-scores is 36. Phoneme position was coded as a value of 1 for word initial missing phonemes and 0 for word post-initial phonemes.

| Predictor | Mean Coefficient | 95% Coefficient Confidence Interval | t-Score | p-Value | Cohen’s d | Median (IQR) Collinearity R² |
|---|---|---|---|---|---|---|
| Intercept | 0.19 | −0.55/0.93 | 0.51 | 0.61 | 0.08 | NA |
| Implied Phoneme/Word Cloze Probability | 1.62 | 0.3/2.95 | 2.48 | 0.02* | 0.41 | 0.53(0.05) |
| Phoneme Position (Word initial or post-initial) | 0.46 | −0.33/1.26 | 1.18 | 0.25 | 0.19 | 0.53(0.04) |
| Cloze Probability x Phoneme Position | 0.06 | −1.56/1.67 | 0.07 | 0.94 | 0.01 | 0.64(0.05) |

Abbreviations: IQR=interquartile range, NA=not applicable.

\* indicates p-value less than 0.05.

3. DISCUSSION

The main purpose of this study was to use the phonemic restoration effect to detect the modulation of early stages of speech processing due to supportive sentence context. More specifically, we analyzed the brain’s response to tones that replaced relatively high or low probability phonemes. Phoneme probability was manipulated by having participants read informative or ambiguous sentence contexts before hearing the spoken sentence. The informative contexts strongly implied a particular missing phoneme/word that differed from the word that was actually spoken. Ambiguous contexts provided little-to-no information about the missing phoneme. We expected the context manipulation to affect participants’ perception of the tones and the early neural processing of the tone.

Participant self-reports in Experiments 2 and 3 indicate that the written sentence contexts affected what participants thought they heard. Specifically, participants were more likely to report having heard words implied by informative sentence contexts than words that were actually spoken. Somewhat puzzlingly, in Experiment 3 (the ERP experiment), sentence contexts did not affect how likely participants were to hallucinate phonemes, even though informative contexts very reliably increased the likelihood of hallucination in Experiment 2 (i.e., the strictly behavioral version of Experiment 3). We do not know why this result failed to replicate, although it may be due to the differences in auditory presentation across the two experiments (e.g., headphones vs. speakers in Experiments 2 and 3, respectively) or due to differences in participant attentiveness and/or strategies.

The ERPs to tones that replaced missing phonemes also manifest clear effects of sentence context. The most pronounced difference was an N400 effect from approximately 400 to 800 ms post-tone onset. This effect was later and more temporally diffuse than is typically observed in N400 effects to spoken words (Friederici et al., 2004; Hagoort & Brown, 2000; Van Petten et al., 1999). The delayed onset of the effect is consistent with the delayed N400 effect to coughs that replaced high and low probability phonemes in Sivonen et al. (2006); it is probably indicative of delayed word recognition due to the missing phonemes and the deleted co-articulation cues. The temporal spread of this N400 effect is likely due to variability across items in the latency at which the critical words are recognizable (Grosjean, 1980).

The main purpose of this study was to detect pre-N400 effects of sentence context, if any, in the absence of auditory stimulus confounds. Based on the Sivonen et al. study, we expected the ERPs to tones that replaced contextually probable phonemes to be more negative than those to less probable phonemes in the N1 time range. Not only did we fail to replicate their effect, but we were able to reject the null hypothesis of such an effect. Thus, their reported effect is not replicated by these stimuli in this experimental paradigm. Our failure to replicate this early effect may be due to the fact that we used tones instead of coughs to replace phonemes, the fact that the difference in cloze probability between their high and low probability words was much greater than ours, and/or other factors. In particular, given the auditory confounds in their study, the sensitivity of the N1 to habituation (Naatanen & Winkler, 1999), and the magnitude of pre-stimulus noise in their ERPs (see Figure 4 in Sivonen et al., 2006), we maintain that their early N1 effect is likely not a correlate of phoneme/word probability nor even of speech perception.

While we found no evidence of an effect of sentential context on the N1 component, we did find a somewhat later context effect from 192 to 224 ms at a left lateral occipital electrode site. This effect is most likely present at other left-posterior electrodes as well, but it failed to reach significance at other sites due to the correction for multiple statistical comparisons. The topography of this effect (especially its left occipital focus) is distinct from that of the N400 and it reflects processing of the tone or pre-tone stimuli (i.e., it is not produced by the speech following the tone). The effect correlates with the probability of the phoneme/word implied by the informative sentence contexts. As it happens, phoneme and word probability are too highly correlated in these stimuli (r=0.94) for us to be able to determine if the effect better correlates with phoneme or with word probability. Moreover, the effect shows no evidence of being sensitive to the missing phonemes’ word position or participant perceptions.

To our knowledge this left lateral occipital effect is the clearest evidence to date of an ERP correlate of phoneme/word probability prior to the N400 effect. As reviewed in the introduction, some researchers have claimed to find pre-N400 ERP correlates of phoneme or lexical probability (the phonological mismatch negativity and N200, respectively). However, given the similarity of their topographies to the N400 and the absence of controls for potential auditory confounds like co-articulation effects in these experiments, their dissociation from N400 effects is questionable. Moreover, it has yet to be demonstrated that either the phonological mismatch negativity or the N200 correlates with phoneme or lexical probabilities in a graded fashion. Those effects have only been analyzed using discrete comparisons, which is a less compelling level of evidence than continuous correlations (Nature Neuroscience Editors, 2001).

That being said, it is important to note that this pre-N400 effect may be a part of the N400 effect to intact speech, given that natural intact speech has been shown to elicit an N400 effect as early as 200-300 ms post-word onset (Van Petten et al., 1999). If this is the case, then the effect would be a subcomponent of the N400 rather than a completely distinct ERP phenomenon. Unfortunately, given the small size and scope of the effect, it is difficult to tell if such an effect has been found to generally precede the N400 in existing ERP speech studies. In either case, our data demonstrate that phoneme/word probability can correlate with neural processing in advance of the canonical N400 effect.

The implications of this novel early ERP effect for theories of speech comprehension are currently unknown, since we do not yet know what level of processing produces it or if it reflects processing of the tone or pre-tone stimuli. If the effect does indeed reflect phonological processing, it would support interactive models of speech processing (McClelland & Elman, 1986; McClelland et al., 2006). Future studies with stimuli that can better dissociate phonemic and word level probabilities in sentences can address this question, and the methods used here (estimates of phoneme probabilities and the tmax multiple comparison corrections) can help in the design and analysis of such studies.

Finally, this study informs, to a very limited extent, our understanding of the mechanisms of the phonemic restoration effect. Although our context manipulation was not successful at manipulating the likelihood of phoneme restoration in the ERP experiment, it did affect phoneme/word perceptual reports and our analysis found no evidence of any early (i.e., 250 ms or before) correlates of phoneme/word perception. This suggests that the locus of influence of sentence context on this behavior might be rather late and affecting participant reports more than participant perceptions (see Samuel, 1981, for a discussion of the distinction). That being said, the null result may well be due to a lack of statistical power and, even if accurate, these results might not generalize to other phonemic restoration paradigms (Samuel, 1996; Shahin et al., 2009).

4. EXPERIMENTAL PROCEDURE

4.1 Materials

All experiments utilized a set of 148 spoken sentences as stimuli. The sentences were spoken by a female native English speaker and recorded using a Shure KSM 44 studio microphone (cardioid pickup pattern, low frequency cutoff filter at 115 Hz, 6 dB/octave) in a sound attenuated chamber to a PC, digitized at a 44.1 kHz sampling rate via a Tascam FireOne. Using the software Praat (Boersma & Weenink, 2010), the sentences were stored uncompressed in Microsoft Waveform Audio File Format (mono, 16-bit, linear pulse code modulation encoding). Each spoken sentence contained a critical phoneme or, rarely, a critical consecutive pair of phonemes that was replaced by a 1 kHz pure tone with the intention of making the sentence ambiguous. For example, the labiodental fricative /f/ of the word “fountain” was the critical phoneme of the sentence:

| (1) |

Replacing /f/ with a tone made the sentence ambiguous because the final word could be “fountain” or “mountain.”

The 1 kHz tone had 10 ms rise and fall times and the peak amplitude of the tone was set to six times the 95th percentile of the absolute magnitude of all sentences. A 1 kHz tone was chosen to replace the critical phonemes because it has been shown to be effective for producing the phonemic restoration effect (Warren, 1970; Warren & Obusek, 1971). The exact start and stop time of the tone was manually determined for each sentence to make the missing phoneme as ambiguous as possible. This involved extending the tone to replace co-articulation signatures of the critical phoneme as well.
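The tone construction described above can be sketched as follows. This is only an illustration under stated assumptions: the ramp shape (linear here) is not specified in the text, the 95th percentile is assumed to be taken over the pooled samples of all sentences, and the function names `make_tone`, `tone_peak_from_sentences`, and `replace_segment` are hypothetical.

```python
import numpy as np

def make_tone(duration_s, peak, fs=44100, freq=1000.0, ramp_s=0.010):
    """Return a pure tone with linear onset/offset ramps (ramp shape is an assumption)."""
    n = int(round(duration_s * fs))
    t = np.arange(n) / fs
    tone = np.sin(2 * np.pi * freq * t)
    n_ramp = int(round(ramp_s * fs))
    envelope = np.ones(n)
    envelope[:n_ramp] = np.linspace(0.0, 1.0, n_ramp)      # 10 ms rise
    envelope[n - n_ramp:] = np.linspace(1.0, 0.0, n_ramp)  # 10 ms fall
    return peak * tone * envelope

def tone_peak_from_sentences(sentences, factor=6.0):
    """Peak = factor x 95th percentile of absolute sample magnitude, pooled over sentences."""
    all_samples = np.concatenate([np.abs(s) for s in sentences])
    return factor * np.percentile(all_samples, 95)

def replace_segment(sentence, start_s, stop_s, peak, fs=44100):
    """Overwrite the samples between start_s and stop_s with the tone."""
    start, stop = int(round(start_s * fs)), int(round(stop_s * fs))
    out = sentence.astype(float).copy()
    out[start:stop] = make_tone((stop - start) / fs, peak, fs)
    return out
```

In the study the start and stop times were chosen by hand for each sentence, so `start_s` and `stop_s` would come from manual annotation rather than from any automatic procedure.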

The type and location of critical phonemes varied across sentences. 70% of the critical phonemes were a single consonant, 22% were a single vowel, and 8% were two consecutive phonemes. 56% of the critical phonemes were word initial. The mean duration of tones was 141 (SD=49) ms.

Each spoken sentence was paired with an “informative” and an “ambiguous” written sentence context designed to be read before hearing the spoken sentence. The informative context was intended to make one of the possible missing phonemes, the “implied phoneme,” very likely. The implied phoneme always differed from the phoneme that had actually been spoken and replaced by a tone. For example, the informative context for the spoken sentence above was:

| (2) |

which made the word “mountain” likely even though “fountain” was the word that had been spoken. This was done to ensure that participant perception of the implied phoneme would be due to sentence context and not residual coarticulatory cues. For 10 of the 148 sentences the implied word was grammatical but the spoken word was not. For the remaining sentences, both implied and spoken words were grammatical.

In contrast to the informative context, the ambiguous context was intended to provide little-to-no information about the missing phoneme. For example, the ambiguous context for the spoken sentence above was:

| (3) |

4.2 Participants & Procedures

The participants in all three experiments were native English speakers who claimed to have normal hearing and no history of reading/speaking difficulties or psychiatric/neurological disorders. 61 young adults participated in Experiment 1 (mean age: 21 [SD=1.6] years; 31 male). 34 young adults participated in Experiment 2 (mean age: 20 [SD=1.4] years; 12 male) and another 37 participated in Experiment 3 (mean age: 20 [SD=2.4] years; 17 male). The volunteers were all 18 years of age or older and participated in the experiments for class credit or pay after providing informed consent. Each volunteer participated in only one of the experiments. The University of California, San Diego Institutional Review Board approved the experimental protocol.

4.3 Procedure

4.3.1 Experiment 1: Cloze Norming

In order to estimate the probability of the critical phonemes and words, a standard cloze norming procedure (Taylor, 1953) was executed. Each participant heard the beginning of all 148 spoken sentences once. Specifically, they heard each sentence from the beginning up to the point where the tone would begin; they did not hear the tone. Prior to hearing a sentence, participants read either the informative or ambiguous written sentence context for that sentence. The type of context was randomly determined for each participant with the constraint that 50% of the contexts were informative.

Stimuli were presented to participants via headphones and a computer monitor. Written sentences were presented for 350 ms multiplied by the number of words in the sentence minus one. Subsequent to each spoken sentence, participants were asked to type the first completion of the sentence that came to mind. Participants were told that if the sentence ended mid-word, they should start their completion with that word. If the participants had no idea how the sentence should continue, they were instructed to skip the trial.

After typing in a completion, participants were presented with a binary multiple-choice comprehension question to ensure that they had read the written sentence context. After each comprehension question response, they were told whether or not their response was accurate. Participants were told to concentrate equally on both tasks, even though they were only getting feedback on the comprehension questions.

Before beginning the experiment, participants were given demonstrations and practice trials to ensure they understood the task. In addition, participants were allowed to manually adjust the headphone volume before beginning the experiment. The mean number of participants who normed each item-context pair was 29 (SD=3.9).

4.3.2 Experiment 2: Phonemic Restoration Behavioral Experiment

In order to determine if the written sentence context manipulation was capable of affecting the phonemic restoration effect, a behavioral experiment was conducted. This experiment was identical to Experiment 1 save for the following changes:

- Participants heard each spoken sentence in its entirety.

- Subsequent to hearing a spoken sentence, participants were not asked to continue the spoken sentence. Rather, they were presented with a written version of the sentence with a blank space in place of the word containing the critical phoneme. For example, if participants heard Example Sentence 1 (see above), they would be shown:

Participants were instructed to fill in the blank by typing what they thought they heard. If they thought the word was intact, they were instructed to type the word they heard. If they thought any part of the word had been replaced by a tone, they were instructed to use a single asterisk to represent the missing portion. If the participants had no idea what the critical word was, they were instructed to type a question mark. When participants were introduced to the experiment, they were told that some sentences would have part of a word replaced by a tone and that others would co-occur with a tone. Participants were told this under the assumption that they would experience the phonemic restoration effect for some stimuli and not others, even though all spoken sentences in the experiment were missing phonemes.

In addition, the participants were told that some spoken sentences might not make sense (e.g., “A few people each year are attacked by parks.”) and were asked to report what they heard as accurately as possible (regardless of how much sense it made).

4.3.3 Experiment 3: Phonemic Restoration EEG Experiment

The procedure for Experiment 3 was the same as that for Experiment 2, save for the following changes:

Spoken sentences were presented via wall-mounted speakers instead of headphones. Participants were not allowed to manually adjust the volume. Auditory stimuli were presented with tones at 93 dB peak SPLA as measured with a precision sound meter positioned to approximate the location of the participant’s right ear (Brüel and Kjær model 2235 fitted with a 4178 microphone).

Responses to comprehension questions were given verbally instead of typed and perceptual reports were typed into a spreadsheet. These changes were made to accommodate the stimulus presentation/EEG recording hardware in the EEG recording chamber.

One-quarter of the way into the experiment, participants were given a break and their auditory reports examined. If the participants had indicated that all of the sentences were missing phonemes or that all of the sentences were intact, we repeated the experimental instructions to make sure they understood the task. Again, although all the sentences were missing phonemes, participants were expected to experience the phonemic restoration effect for some stimuli and not others. The experimental instructions were repeated for five participants.

In addition to the sentence task, participants were given a simple tone counting task. Seventy-four 1 kHz tones of various durations were pseudorandomly divided into three blocks and participants were asked to silently count them. The three blocks were interleaved with two blocks of the sentence task. The purpose of the counting task was to obtain clean measures of each participant’s auditory response to such tones. The data collected during this task turned out not to be of much relevance to the study and will not be discussed further.

4.4 Phonetic Transcription

In order to quantify the cloze probability of critical phonemes, the 2,288 unique participant responses in Experiment 1 were phonetically transcribed using the Carnegie Mellon University Pronouncing Dictionary (CMUdict--Weide, 2009). CMUdict consists of North American English phonetic transcriptions of over 125,000 words based on a set of 39 phonemes. 21 of the 2,288 participant responses were not found in CMUdict and were transcribed using American English entries in the Longman Pronunciation Dictionary (Wells, 1990). Finally, 25 of the 2,288 participant responses were not found in either dictionary and were manually transcribed. Transcription was complicated by the fact that some words can be pronounced multiple ways. When pronunciation depended on word meaning (e.g., the noun “resume” vs. the verb “resume”), the appropriate pronunciation was selected. For the remaining 247 ambiguous items, each possible pronunciation was treated as equally likely. Incorrectly spelled participant responses were corrected before phonetic transcription.
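To make the phoneme cloze computation concrete, the sketch below estimates the probability of each phoneme at a given position in participants' completions, splitting a response's weight equally across its possible pronunciations. It is a simplified illustration, not the authors' code: the function name `phoneme_cloze`, the `position` parameter, and the toy dictionary entries (CMUdict-style symbols with stress digits omitted) are all assumptions.

```python
from collections import defaultdict

def phoneme_cloze(responses, pronunciations, position=0):
    """Estimate the cloze probability of each phoneme at `position` in the response word.

    responses: list of completion words, one per participant.
    pronunciations: dict mapping a word to a list of pronunciations, each a list
        of phoneme symbols. All pronunciations of a word are treated as equally likely.
    """
    counts = defaultdict(float)
    for word in responses:
        variants = pronunciations[word.lower()]
        for phonemes in variants:
            if position < len(phonemes):
                # Each variant receives an equal share of this response's weight.
                counts[phonemes[position]] += 1.0 / len(variants)
    total = len(responses)
    return {phoneme: c / total for phoneme, c in counts.items()}

# Toy dictionary for illustration only.
toy_dict = {
    "mountain": [["M", "AW", "N", "T", "AH", "N"]],
    "hill": [["HH", "IH", "L"]],
    "either": [["IY", "DH", "ER"], ["AY", "DH", "ER"]],
}
responses = ["mountain", "mountain", "either", "hill"]
cloze = phoneme_cloze(responses, toy_dict)
```

Here the two pronunciations of "either" each contribute half of one response, so its two initial vowels each receive one eighth of the total probability.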

4.5 EEG Recording Parameters & Preprocessing

The electroencephalogram (EEG) was recorded from 26 tin electrodes embedded in an Electro-cap arrayed in a laterally symmetric quasi-geodesic pattern of triangles approximately 4 cm on a side (see Figure 3), referenced to the left mastoid. Additional electrodes located below each eye and adjacent to the outer canthus of each eye were used to monitor and correct for blinks and eye movements. Electrode impedances were kept below 5 kΩ. EEG was amplified by Nicolet Model SM2000 bioamplifiers set to a bandpass of 0.016-100 Hz and a sensitivity of 200 or 500 (for non-periocular and periocular channels respectively). EEG was continuously digitized (12 bits, 250 samples/s) and stored on hard disk for later analysis.

EEG data was re-referenced off-line to the algebraic sum of the left and right mastoids and divided into 1020 ms, non-overlapping epochs extending from 100 ms before to 920 ms after tone onset (both sentence embedded and counting task tones). Each epoch was 50 Hz low-pass filtered and the mean of each epoch was removed. After filtering, individual artifact-polluted epochs were rejected via a combination of visual inspection and objective tests designed to detect blocking, drift, and outlier epochs (EEGLAB Toolbox, Delorme & Makeig, 2004). After epochs were rejected, the mean number of epochs per participant was 126 (SD=10). Extended InfoMax independent components analysis (ICA--Lee, Girolami, & Sejnowski, 1999) was then applied to remove EEG artifacts generated by blinks, eye movements, muscle activity, and heart beat artifact via sets of spatial filters (Jung et al., 2000). The mean number of independent components removed per participant was 12 (SD=3). Time-domain average ERPs to the tones embedded in sentences were subsequently computed after subtraction of the 100 ms prestimulus baseline.
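The epoching, baseline subtraction, and averaging steps can be sketched as below. This is a minimal illustration that omits filtering, artifact rejection, re-referencing, and ICA; the function names are hypothetical, and the only figures taken from the text are the 250 samples/s rate, the 100 ms prestimulus and 920 ms poststimulus intervals, and the 192 to 224 ms measurement window.

```python
import numpy as np

FS = 250                    # samples per second
PRE_MS, POST_MS = 100, 920  # epoch limits relative to tone onset

def extract_epochs(eeg, onsets, fs=FS, pre_ms=PRE_MS, post_ms=POST_MS):
    """Cut epochs from continuous EEG (channels x samples) around tone-onset sample indices."""
    pre = pre_ms * fs // 1000    # 25 samples
    post = post_ms * fs // 1000  # 230 samples
    # Result has shape trials x channels x 255 samples (1020 ms).
    return np.stack([eeg[:, o - pre:o + post] for o in onsets])

def average_erp(epochs, fs=FS, pre_ms=PRE_MS):
    """Subtract each epoch's mean prestimulus voltage per channel, then average over trials."""
    pre = pre_ms * fs // 1000
    baseline = epochs[:, :, :pre].mean(axis=2, keepdims=True)
    return (epochs - baseline).mean(axis=0)

def mean_amplitude(erp, start_ms, stop_ms, fs=FS, pre_ms=PRE_MS):
    """Mean voltage per channel between start_ms and stop_ms (inclusive) after tone onset."""
    first = (pre_ms + start_ms) * fs // 1000
    last = (pre_ms + stop_ms) * fs // 1000
    return erp[:, first:last + 1].mean(axis=1)
```

At 250 samples/s the samples fall every 4 ms, so a 192 to 224 ms window corresponds to nine consecutive samples per channel.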

ACKNOWLEDGMENTS

The authors would like to thank Jenny Collins for her help with recording the stimuli used in these experiments and Michael Dambacher and Reinhold Kliegl for their help with the multiple regression analysis. This research was supported by US National Institute of Child Health and Human Development grant HD22614 and National Institute of Aging grant AG08313 to Marta Kutas and a University of California, San Diego Faculty Fellows fellowship to David Groppe.

Footnotes

According to Genzel and Charniak (2002), the entropy of the distribution of written sentences between 3 and 25 words in length is approximately between 7 and 8 bits. Bates (1999) claims that fluent adults know between 20,000 and 40,000 words. If a speaker produced utterances from a set of 20,000 words where each word was equally likely and independent of previous words, the entropy of sentences between 3 and 25 words in length would be between 43 and 357 bits. Similarly, Gough (1983) has estimated that readers can predict the 9th open class word (e.g., nouns, verbs) of 30% of sentences with greater than 10% accuracy and they can predict the 9th closed class word (e.g., pronouns, articles) of 78% of sentences with greater than 10% accuracy. Clearly there is a massive degree of redundancy in natural language (see also Gough et al., 1981).

A word’s “isolation point” is the point at which a listener can identify the entire word with a high degree of accuracy (e.g., 70% of participants). Participants can often identify a word before they have heard the entire word (Van Petten et al., 1999).

The fact that Van Petten et al. failed to find a pre-N400 effect in their study is particularly notable as they contrasted ERPs to the same types of stimuli as Connolly and Phillips (1994) and van den Brink et al. (2001, 2004). They found no evidence of a pre-N400 effect in the grand average waveforms or in single participant averages. Indeed, their analysis suggests that the PMN in particular (which has often been identified in single participant averages--e.g., Connolly et al., 1992) may simply be residual alpha activity.

d in all t-test results is Cohen’s d (Cohen, 1988), a standardized measure of effect size.

Entropy is conventionally measured using log base 2 and the resulting value is said to be in units of “bits.”

Entropy is similar to the more commonly used measure of contextual “constraint” (e.g., Federmeier & Kutas, 1999), which is the highest cloze probability of all possible continuations. We chose to use entropy because it reflects the probability of all possible continuations (not just the most probable) and is thus a richer measure of uncertainty.

Two participants did not report any sentences as intact after reading either or both written sentence contexts and were excluded from this analysis.

Sivonen et al. (2006) used a later N1 time window in their analysis (120-180 ms) as the N1 in their data occurred later (presumably due to the fact that they used coughs instead of tones to replace phonemes). To ensure that our failure to replicate Sivonen and colleagues’ results was not due to the difference in time windows, we repeated our N1 analyses using their later time window. All test results were qualitatively identical.

Sivonen et al. found that the N400 effect to coughs that replaced phonemes was not significant until 380-520 ms post-cough onset.

This time window was subjectively defined primarily by the scalp topography of the context effect. However, as can be seen in the t-score representation of the context effect (top axis of Figure 4) the effect of context does not remarkably deviate from zero at a large number of electrodes until around 400 ms post-tone onset. The effect of context remains significant after 800 ms, but the topography of the effect is somewhat more right lateralized or posterior than is typical of the N400.

As reviewed by Naatanen and Winkler (1999), the auditory N1’s amplitude decreases when the eliciting stimulus is preceded by sounds of similar frequency even with a lag of 10 seconds or greater. This decrease can be similar in scale to the N1 effect reported by Sivonen and colleagues (i.e., 1.71 μV). Given the broad fricative-like spectral composition of coughs, the speech preceding the coughs in Sivonen et al.’s stimuli surely led to some habituation of the N1. It is possible that this habituation was greater in their sentences with low probability critical phonemes than in their sentences with high probability phonemes and that this difference is what produced their effect.
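The entropy figures quoted in the footnotes above can be checked with a few lines of arithmetic. The sketch below is illustrative only; the function names are hypothetical and the example distributions are made up to show how entropy differs from contextual constraint (the highest single cloze probability).

```python
import math

def uniform_sentence_entropy_bits(vocab_size, n_words):
    """Entropy of n_words independent, equiprobable draws from vocab_size words, in bits."""
    return n_words * math.log2(vocab_size)

def entropy_bits(probs):
    """Shannon entropy (log base 2) of a discrete distribution, ignoring zero entries."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

low = uniform_sentence_entropy_bits(20_000, 3)    # about 42.9 bits
high = uniform_sentence_entropy_bits(20_000, 25)  # about 357.2 bits

# Two made-up cloze distributions with the same constraint (max = 0.5)
# but different entropy.
two_way = entropy_bits([0.5, 0.5])            # 1.0 bit
three_way = entropy_bits([0.5, 0.25, 0.25])   # 1.5 bits
```

The last two values show why entropy is the richer measure: both distributions have the same most probable continuation, yet the second spreads the remaining probability over more alternatives.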


Section: Cognitive and Behavioral Neuroscience

References

- Baldeweg T. Repetition effects to sounds: Evidence for predictive coding in the auditory system. Trends in Cognitive Sciences. 2006;10(3):93–94. doi: 10.1016/j.tics.2006.01.010. [DOI] [PubMed] [Google Scholar]

- Bates EA. On the nature and nurture of language. In: Bizzi E, Calissano P, Volterra V, editors. Frontiere Della Biologia [Frontiers of Biology]. The Brain of Homo Sapiens. Giovanni Trecanni; Rome: 1999. [Google Scholar]

- Bendixen A, Schroger E, Winkler I. I heard that coming: Event-related potential evidence for stimulus-driven prediction in the auditory system. Journal of Neuroscience. 2009;29(26):8447–8451. doi: 10.1523/JNEUROSCI.1493-09.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Berry WD, Feldman S. Multiple Regression in Practice. Sage Publications; Beverly Hills: 1985. [Google Scholar]

- Blair RC, Karniski W. An alternative method for significance testing of waveform difference potentials. Psychophysiology. 1993;30(5):518–524. doi: 10.1111/j.1469-8986.1993.tb02075.x. [DOI] [PubMed] [Google Scholar]

- Boersma P, Weenink D. Praat: Doing phonetics by computer. 2010 http://www.praat.org/:

- Cohen J. Statistical Power Analysis for the Behavioral Sciences. 2nd ed. Lawrence Erlbaum Associates; Hillsdale, New Jersey: 1988. [Google Scholar]

- Connolly JF, Phillips NA. Event-related potential components reflect phonological and semantic processing of the terminal word of spoken sentences. Journal of Cognitive Neuroscience. 1994;6(3):256–266. doi: 10.1162/jocn.1994.6.3.256. [DOI] [PubMed] [Google Scholar]

- Connolly JF, Phillips NA, Stewart SH, Brake WG. Event-related potential sensitivity to acoustic and semantic properties of terminal words insentences. Brain and Language. 1992;43(1):1–18. doi: 10.1016/0093-934x(92)90018-a. [DOI] [PubMed] [Google Scholar]

- Connolly JF, Stewart SH, Phillips NA. The effects of processing requirements on neurophysiological responses to spoken sentences. Brainand Language. 1990;39(2):302–318. doi: 10.1016/0093-934x(90)90016-a. [DOI] [PubMed] [Google Scholar]

- Dambacher M, Kliegl R, Hofmann M, Jacobs AM. Frequency and predictability effects on event-related potentials during reading. Brain Research. 2006;1084(1):89–103. doi: 10.1016/j.brainres.2006.02.010. [DOI] [PubMed] [Google Scholar]

- D’Arcy RC, Connolly JF, Service E, Hawco CS, Houlihan ME. Separating phonological and semantic processing in auditory sentence processing: A high-resolution event-related brain potential study. Human Brain Mapping. 2004;22(1):40–51. doi: 10.1002/hbm.20008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- DeLong KA, Urbach TP, Kutas M. Probabilistic word pre-activation during language comprehension inferred from electrical brain activity. Nature Neuroscience. 2005;8(8):1117–1121. doi: 10.1038/nn1504. [DOI] [PubMed] [Google Scholar]

- Delorme A, Makeig S. EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. Journal of Neuroscience Methods. 2004;134(1):9–21. doi: 10.1016/j.jneumeth.2003.10.009. [DOI] [PubMed] [Google Scholar]

- Diaz MT, Swaab TY. Electrophysiological differentiation of phonological and semantic integration in word and sentence contexts. Brain Research. 2007;1146:85–100. doi: 10.1016/j.brainres.2006.07.034. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Elman JL, McClelland JL. Cognitive penetration of the mechanisms of perception: Compensation for coarticulation of lexically restored phonemes. Journal of Memory and Language. 1988;27(2):143–165. [Google Scholar]

- Federmeier KD, Kutas M. A rose by any other name: Long-term memory structure and sentence processing. Journal of Memory and Language. 1999;41:469–495. [Google Scholar]

- Friederici AD. Towards a neural basis of auditory sentence processing. Trends in Cognitive Sciences. 2002;6(2):78–84. doi: 10.1016/s1364-6613(00)01839-8. [DOI] [PubMed] [Google Scholar]

- Friederici AD, Gunter TC, Hahne A, Mauth K. The relative timing of syntactic and semantic processes in sentence comprehension. NeuroReport. 2004;15(1):165–169. doi: 10.1097/00001756-200401190-00032. [DOI] [PubMed] [Google Scholar]

- Friston K. A theory of cortical responses. Philosophical Transactions of the Royal Society of London.Series B, Biological Sciences. 2005;360(1456):815–836. doi: 10.1098/rstb.2005.1622. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Genzel D, Charniak E. Entropy rate constancy in text; Proceedings of the 40th Annual Meeting of the ACL; Philadelphia. 2002.pp. 199–206. [Google Scholar]

- Giard MH, Fort A, Mouchetant-Rostaing Y, Pernier J. Neurophysiological mechanisms of auditory selective attention in humans. Frontiers in Bioscience. 2000;5:D84–94. doi: 10.2741/giard. [DOI] [PubMed] [Google Scholar]

- Gough PB. Context, form, and interaction. In: Rayner K, editor. Eye Movements in Reading: Perceptual and Language Processes. Academic Press; New York: 1983. pp. 203–211. [Google Scholar]

- Gough PB, Alford JA, Holley-Wilcox P. Words and contexts. In: Tzeng OJL, Singer H, editors. Perception of Print: Reading Research in Experimental Psychology. Lawrence Erlbaum Associates; Hillsdale, New Jersey: 1981. pp. 85–102. [Google Scholar]

- Grosjean F. Spoken word recognition processes and the gating paradigm. Perception & Psychophysics. 1980;28(4):267–283. doi: 10.3758/bf03204386. [DOI] [PubMed] [Google Scholar]

- Hagoort P, Brown CM. ERP effects of listening to speech: Semantic ERP effects. Neuropsychologia. 2000;38(11):1518–1530. doi: 10.1016/s0028-3932(00)00052-x. [DOI] [PubMed] [Google Scholar]

- Hagoort P, Hald L, Bastiaansen MC, Petersson KM. Integration of word meaning and world knowledge in language comprehension. Science. 2004;304(5669):438–441. doi: 10.1126/science.1095455. [DOI] [PubMed] [Google Scholar]

- Hemmelmann C, Horn M, Reiterer S, Schack B, Susse T, Weiss S. Multivariate tests for the evaluation of high-dimensional EEG data. Journal of Neuroscience Methods. 2004;139(1):111–120. doi: 10.1016/j.jneumeth.2004.04.013. [DOI] [PubMed] [Google Scholar]

- Jung TP, Makeig S, Humphries C, Lee TW, McKeown MJ, Iragui VJ, et al. Removing electroencephalographic artifacts by blind source separation. Psychophysiology. 2000;37(2):163–178. [PubMed] [Google Scholar]

- Kutas M, Van Petten CK, Kluender R. Psycholinguistics electrified II (1994-2005) In: Gernsbacher MA, Traxler M, editors. Handbook of Psycholinguistics. 2nd ed. Elsevier; New York: 2006. pp. 659–724. [Google Scholar]

- Kutas M, Federmeier KD. Electrophysiology reveals semantic memory use in language comprehension. Trends in Cognitive Sciences. 2000;4(12):463–470. doi: 10.1016/s1364-6613(00)01560-6. [DOI] [PubMed] [Google Scholar]

- Kutas M, Hillyard SA. Brain potentials during reading reflect word expectancy and semantic association. Nature. 1984;307(5947):161–163. doi: 10.1038/307161a0. [DOI] [PubMed] [Google Scholar]

- Lau EF, Phillips C, Poeppel D. A cortical network for semantics: (de)constructing the N400. Nature Reviews.Neuroscience. 2008;9(12):920–933. doi: 10.1038/nrn2532. [DOI] [PubMed] [Google Scholar]

- Lee TW, Girolami M, Sejnowski TJ. Independent component analysis using an extended infomax algorithm for mixed subgaussian and supergaussian sources. Neural Computation. 1999;11(2):417–441. doi: 10.1162/089976699300016719.

- Lorch RF, Jr, Myers JL. Regression analyses of repeated measures data in cognitive research. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1990;16(1):149–157. doi: 10.1037//0278-7393.16.1.149.

- Miller GA, Heise GA, Lichten W. The intelligibility of speech as a function of the context of the test materials. Journal of Experimental Psychology. 1951;41(5):329–335. doi: 10.1037/h0062491.

- Manly BFJ. Randomization, Bootstrap, and Monte Carlo Methods in Biology. 2nd ed. Chapman & Hall; London: 1997.

- McClelland JL, Elman JL. The TRACE model of speech perception. Cognitive Psychology. 1986;18(1):1–86. doi: 10.1016/0010-0285(86)90015-0.

- McClelland JL, Mirman D, Holt LL. Are there interactive processes in speech perception? Trends in Cognitive Sciences. 2006;10(8):363–369. doi: 10.1016/j.tics.2006.06.007.

- McQueen JM, Norris D, Cutler A. Are there really interactive processes in speech perception? Trends in Cognitive Sciences. 2006;10(12):533. doi: 10.1016/j.tics.2006.10.004. author reply 534.

- Mirman D, McClelland JL, Holt LL. Response to McQueen et al.: Theoretical and empirical arguments support interactive processing. Trends in Cognitive Sciences. 2006a;10(12):534. doi: 10.1016/j.tics.2006.06.007.

- Mirman D, McClelland JL, Holt LL. An interactive Hebbian account of lexically guided tuning of speech perception. Psychonomic Bulletin & Review. 2006b;13(6):958–965. doi: 10.3758/bf03213909.

- Naatanen R, Winkler I. The concept of auditory stimulus representation in cognitive neuroscience. Psychological Bulletin. 1999;125(6):826–859. doi: 10.1037/0033-2909.125.6.826.

- Nature Neuroscience Editors. Analyzing functional imaging studies. Nature Neuroscience. 2001;4(4):333. doi: 10.1038/85950.

- Norris D, McQueen JM. Shortlist B: A Bayesian model of continuous speech recognition. Psychological Review. 2008;115(2):357–395. doi: 10.1037/0033-295X.115.2.357.

- Norris D, McQueen JM, Cutler A. Merging information in speech recognition: Feedback is never necessary. The Behavioral and Brain Sciences. 2000;23(3):299–325. doi: 10.1017/s0140525x00003241. discussion 325-70.

- O’Shaughnessy D. Interacting with computers by voice: Automatic speech recognition and synthesis. Proceedings of the IEEE. 2003;91(9):1272–1305.

- Peterson GE, Barney HL. Control methods used in a study of vowels. Journal of the Acoustical Society of America. 1952;24:175–184.

- Revonsuo A, Portin R, Juottonen K, Rinne JO. Semantic processing of spoken words in Alzheimer’s disease: An electrophysiological study. Journal of Cognitive Neuroscience. 1998;10(3):408–420. doi: 10.1162/089892998562726.

- Samuel AG. Phonemic restoration: Insights from a new methodology. Journal of Experimental Psychology: General. 1981;110(4):474–494. doi: 10.1037//0096-3445.110.4.474.

- Samuel AG. Phoneme restoration. Language and Cognitive Processes. 1996;11(6):647–653.

- Sanders LD, Newport EL, Neville HJ. Segmenting nonsense: An event-related potential index of perceived onsets in continuous speech. Nature Neuroscience. 2002;5(7):700–703. doi: 10.1038/nn873.

- Shahin AJ, Bishop CW, Miller LM. Neural mechanisms for illusory filling-in of degraded speech. NeuroImage. 2009;44(3):1133–1143. doi: 10.1016/j.neuroimage.2008.09.045.

- Shannon CE. A mathematical theory of communication. Bell System Technical Journal. 1948;27:379–423, 623–656.

- Sivonen P, Maess B, Lattner S, Friederici AD. Phonemic restoration in a sentence context: Evidence from early and late ERP effects. Brain Research. 2006;1121(1):177–189. doi: 10.1016/j.brainres.2006.08.123.

- Steinbiss V, Ney H, Aubert X, Besling S, Dugast C, Essen U, et al. The Philips Research system for continuous speech recognition. Philips Journal of Research. 1995;49(4):317–352.

- Summerfield C, Egner T. Expectation (and attention) in visual cognition. Trends in Cognitive Sciences. 2009;13(9). doi: 10.1016/j.tics.2009.06.003.

- Taylor WL. “Cloze procedure”: A new tool for measuring readability. Journalism Quarterly. 1953;30:415–433.

- Tierney AT. The Structure and Perception of Human Vocalizations. Ph.D. dissertation. University of California, San Diego; 2010.

- Tsao YC, Weismer G, Iqbal K. Interspeaker variation in habitual speaking rate: Additional evidence. Journal of Speech, Language, and Hearing Research. 2006;49(5):1156–1164. doi: 10.1044/1092-4388(2006/083).

- van Berkum JJ, Hagoort P, Brown CM. Semantic integration in sentences and discourse: Evidence from the N400. Journal of Cognitive Neuroscience. 1999;11(6):657–671. doi: 10.1162/089892999563724.

- van den Brink D, Brown CM, Hagoort P. Electrophysiological evidence for early contextual influences during spoken-word recognition: N200 versus N400 effects. Journal of Cognitive Neuroscience. 2001;13(7):967–985. doi: 10.1162/089892901753165872.

- van den Brink D, Hagoort P. The influence of semantic and syntactic context constraints on lexical selection and integration in spoken-word comprehension as revealed by ERPs. Journal of Cognitive Neuroscience. 2004;16(6):1068–1084. doi: 10.1162/0898929041502670.

- Van Petten C, Coulson S, Rubin S, Plante E, Parks M. Time course of word identification and semantic integration in spoken language. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1999;25(2):394–417. doi: 10.1037//0278-7393.25.2.394.

- Van Petten C, Luka BJ. Neural localization of semantic context effects in electromagnetic and hemodynamic studies. Brain and Language. 2006;97(3):279–293. doi: 10.1016/j.bandl.2005.11.003.

- Warren RM. Perceptual restoration of missing speech sounds. Science. 1970;167(3917):392–393. doi: 10.1126/science.167.3917.392.

- Warren RM, Obusek CJ. Speech perception and phonemic restorations. Perception and Psychophysics. 1971;9(3B):358–362.

- Weide RL. Carnegie Mellon University pronouncing dictionary. 2009. Accessed September 25th, 2009, from http://www.speech.cs.cmu.edu/cgi-bin/cmudict.

- Wells JC. Longman Pronunciation Dictionary. 1st ed. Longman; Harlow: 1990.