Summary

How does the brain construct a percept of the world from sensory signals? One approach to this fundamental question is to investigate perceptual learning as induced by exposure to different statistical regularities in sensory signals [1–7]. For example, recent studies showed that exposure to novel correlations between different sensory signals can cause a signal to have new perceptual effects [2,3]. In all those studies, however, the signals were always clearly visible. The automaticity of the learning was therefore difficult to determine. Here, we investigate whether learning of this sort, that causes a signal to have new effects on appearance, can be low-level and automatic, by employing a visual signal whose perceptual consequences were made invisible—a vertical disparity gradient masked by other depth cues. This approach excluded high-level influences such as attention or consciousness. Our stimulus for probing perceptual appearance was a rotating cylinder. During exposure we introduced a new contingency between the invisible signal and the rotation direction of the cylinder. When we subsequently presented an ambiguously rotating version of the cylinder, we found that the invisible signal influenced the perceived rotation direction. This demonstrates that perception can rapidly undergo “structure learning” by automatically picking up novel contingencies between sensory signals, thus automatically recruiting signals for novel uses during the construction of a percept.

Results

To convincingly show that new perceptual meanings for sensory signals can be learned automatically, one needs an “invisible visual signal,” that is, a signal that is sensed but that has no effect on visual appearance. The gradient of vertical binocular disparity, created by 2% vertical magnification of one eye's image (eye of vertical magnification—EVM), can be such a signal [8,9]. In several control experiments (see Supplemental Data and Fig. S1), we ensured that EVM could not be seen by the participants.

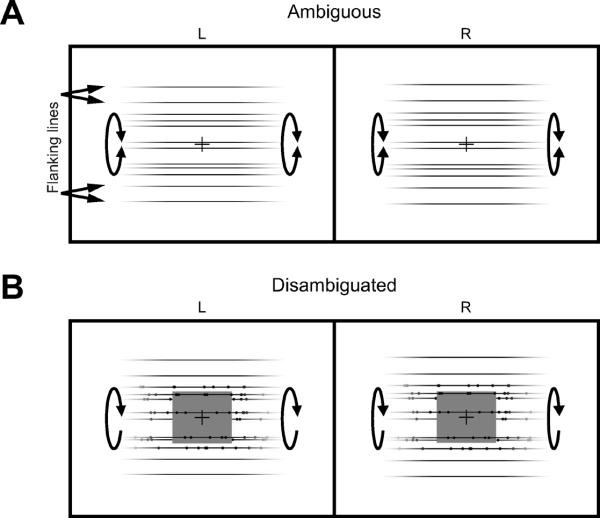

The stimulus we used was a horizontal cylinder rotating either front-side-up or front-side-down. In its basic form the cylinder was defined by horizontal lines with fading edges (Figure 1A). The lines moved up and down on the screen, thereby creating the impression of a rotating cylinder with ambiguous rotation direction (Movie M1A), so participants perceived it rotating sometimes front-side-up and sometimes front-side-down [11] (see also Supplemental Data).

Figure 1.

Stereoscopic pair for uncrossed fusion. The right images are vertically magnified by 2% to create an invisible signal, EVM (eye of vertical magnification). See also Movie M1A and M1B. (A) Cylinder with ambiguous direction of rotation (as indicated by the double arrow). (B) Cylinder with added depth cues (horizontal disparity of dots and rectangle that occludes part of the back surface of the cylinder) that disambiguated rotation direction (indicated by the arrow).

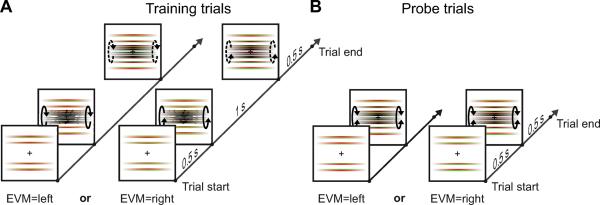

We tested whether the signal created by 2% vertical magnification could be recruited to control the perceived rotation direction of this ambiguously rotating cylinder. To do so we exposed participants to a new contingency. We used a disambiguated version of the cylinder which contained additional depth cues—dots providing horizontal disparity and a rectangle occluding part of the farther surface of the cylinder (Fig. 1B). These cues disambiguated the perceived rotation direction of the cylinder (see Fig. S2A). In “training trials” we exposed participants to cylinder stimuli in which EVM and the unambiguously perceived rotation direction were contingent upon one another (Fig. 2A and Movie M1B). To test whether EVM had an effect on the perceived rotation direction of the cylinder we interleaved these training trials (Fig. 2A) with “probe trials” having ambiguous rotation direction (Fig. 2B). If participants recruit EVM to the new use, then perceived rotation direction on probe trials would come to depend on EVM. If participants do not recruit EVM, then perceived rotation direction would be independent of EVM.

Figure 2.

Timeline of trials. Red and green colors show the images seen by the left and right eyes, respectively. All stimuli contained a rotating cylinder composed of lines and EVM. (A) During training trials, the cylinder was first presented with depth cues that disambiguated rotation direction. The depth cues were then removed, but perceived rotation direction was generally determined by the cues present at the beginning of the trial (see Fig. S2A). The specified rotation direction was contingent on EVM (see Movie M1B). (B) During probe trials, the cylinder was shown without disambiguating depth cues (see Movie M1A). If EVM is recruited, perceived rotation will be the same as the rotation associated with EVM during exposure.

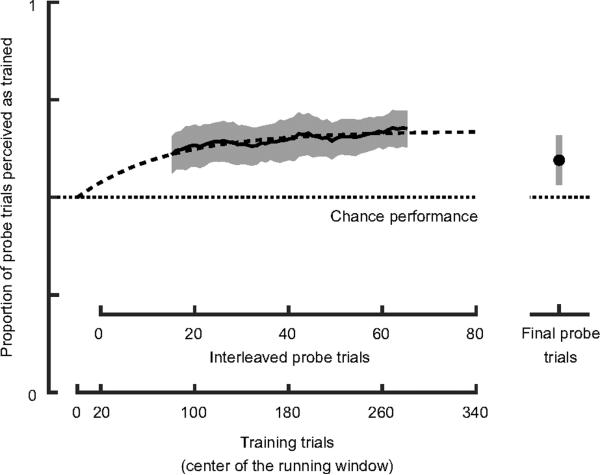

Importantly, after exposure to the new contingency, all participants saw a majority of probe trials consistent with the rotation direction contingent with EVM during exposure; that is, the learning effect was highly significant (see Supplementary Information). However, exposure did not produce complete disambiguation, as cylinders in probe trials were still seen sometimes rotating front-side-up and sometimes front-side-down. The proportion of responses consistent with the contingency gradually increased over the course of the experiment, as shown in Figure 3. The two-parameter exponential fit depicted in the figure has an asymptote of 0.67 and a time constant of 76 training trials (corresponding to 19 interleaved probe trials). These results show that EVM affected perceived rotation direction by disambiguating the probe trials when interleaved with training trials.
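The fitted curve can be illustrated with a short sketch. The text reports only the asymptote and the time constant, so the functional form used here (an exponential approach that starts at the chance level of 0.5) is an assumption, as are the names `predicted_proportion` and `CHANCE`.

```python
import math

CHANCE = 0.5             # assumed starting level: EVM has no effect on probe trials
ASYMPTOTE = 0.67         # fitted asymptote reported in the text
TAU_TRAINING = 76        # fitted time constant, in training trials
TRAINING_PER_PROBE = 4   # each interleaved block had 4 training trials per probe trial


def predicted_proportion(n_training):
    """Assumed curve: exponential approach from chance to the asymptote."""
    return CHANCE + (ASYMPTOTE - CHANCE) * (1.0 - math.exp(-n_training / TAU_TRAINING))


# The same time constant expressed in interleaved probe trials.
tau_probe = TAU_TRAINING / TRAINING_PER_PROBE

for n in (0, 76, 152, 320):
    print(n, round(predicted_proportion(n), 3))
print("time constant in probe trials:", tau_probe)
```

Under this assumed form, about 63% of the total change from chance to the asymptote has occurred after one time constant, that is, after 76 training trials.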

Figure 3.

Proportion of probe trials perceived consistent with the contingency during exposure, indicating recruitment of EVM. Data for probe trials interleaved with training trials are shown. We used a running window of 30 probe trials to filter the data. Error bars represent 95% confidence intervals across participants calculated separately for each datapoint of the running average (see Supplemental Experimental Procedures). See also Figure S2 for further data analysis and Figure S3 for an additional experiment that investigates the buildup of learning over multiple days and the decay of the effect in the final probe trials.
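The running-window filter mentioned in the caption can be sketched as follows. This is a minimal illustration that assumes an unweighted mean over 30 consecutive probe responses coded as 0 or 1. The function name and the example data are hypothetical, and the per-participant confidence intervals are not reproduced here.

```python
def running_proportion(responses, window=30):
    """Mean of each run of `window` consecutive 0/1 probe responses."""
    if window <= 0 or window > len(responses):
        raise ValueError("window must be between 1 and len(responses)")
    total = sum(responses[:window])
    means = [total / window]
    for i in range(window, len(responses)):
        # Slide the window by one trial: add the newest response, drop the oldest.
        total += responses[i] - responses[i - window]
        means.append(total / window)
    return means


# Hypothetical data: 80 interleaved probe responses,
# 1 = percept consistent with the trained contingency.
example = [1 if i % 3 else 0 for i in range(80)]
smoothed = running_proportion(example)
print(len(smoothed), smoothed[0])
```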

We also asked whether the recruitment was sufficiently long-lasting to affect perception after exposure was completed. To test this, participants were given a set of final probe trials. The rightmost data point in Figure 3 shows that the effect was retained beyond exposure. In Figure S3 we analyze the increase of the effect of recruitment across different days, and the time course of the decay of recruitment.

Discussion

It has long been debated how the visual system learns which signals are informative about any given property of the environment [1]. How does it know that certain signals extracted from the retinal images—for example binocular disparities, relative image sizes, and certain retinal image motions—can be trusted as signals to construct a depth percept? Recent work showed that signals can be recruited for new perceptual uses, but the recruited signal was always clearly visible to the subject during the experiments [2–4]. This posed the question whether high-level processes such as consciousness, awareness, attention, cognition, and any form of reward or incentives were necessary for learning new statistical regularities in general and for cue recruitment in particular.

We have demonstrated that exposure to a new contingency between an invisible signal (EVM) and an established percept (rotation direction of a cylinder) can cause the perceptual system to learn a new use for the EVM signal (cue to rotation direction, which disambiguates an otherwise ambiguous cylinder). That is, the vertical disparity signal affected perceptual appearance by disambiguating perceived rotation direction. This result indicates that associative learning in perception is an automatic process: it does not require reinforcement, and it can proceed without high-level processes such as cognition and attention. The process can detect contingencies between signals prior to the signal's use for constructing appearance.

Knowledge about human perceptual learning has increased greatly in recent years [13]. Yet most work on perceptual learning measured refinements in the visual system's use of signals that it already used to perform a perceptual task, not the learning of new contingencies. One type of refinement is improvement in the ability to make fine discriminations between similar stimuli [14–19]. It was even shown that such an improvement in discrimination can occur for signals that are unseen [14]. A second type of refinement is recalibration, such as occurs during reaching or throwing when a person wears prism goggles [e.g. 20–22]. Differentiation and recalibration are examples of “parameter” learning: learning that occurs by adjusting the use of signals that are already known to be useful [6,23,24].

Here we asked a different question, namely, how (automatically) the perceptual system learns to use a novel signal to construct perceptual appearance. This form of learning from contingency can be described as “structure” learning (i.e. learning about statistical structure) insofar as a signal goes from being treated as independent of a scene property to being treated as conditionally dependent (and therefore useful to estimate the scene property) [23,24]. In the Bayesian framework, structure learning is typically modeled by adding (or removing) edges, and sometimes nodes, in a Bayes net representation of dependence, while parameter learning requires no such changes to the graph structure of the Bayes net. Formally, however, structure learning can be implemented as an increase in the strength of a pre-existing parameter that previously specified no conditional dependence [24]. Thus, starting to use a signal in a new way has sometimes been treated as parameter learning (e.g. [6]). However, dependency is qualitatively different from the absence of dependency, so consistent with usage elsewhere, we describe the acquisition of new knowledge about dependency as structure learning [24]. Structure learning is generally considered more difficult than parameter learning [6].

Our results suggest that the goal of perceptual learning is to exploit signals that are informative about some aspect of the visual environment. The visual system cannot directly ascertain which signals are related to a property of the world. The only plausible way for it to assess whether a signal should be recruited is to observe how the signal covaries with already-trusted sources of information and their perceptual consequences. In principle then, signals can sometimes be recruited to affect the appearance of world properties with which they are not normally linked [2,25]. However, since two or more signals in ecological situations are usually correlated with each other only when they carry information about the same property of the environment [7], the accidental recruitment of signals that are not valid is unlikely.

Thus, these results suggest that humans possess a mechanism that automatically detects contingency between signals and exploits it to improve perceptual function, and that this mechanism has access to signals that need not have perceptual consequences (i.e., they can be unseen). Such a mechanism may in fact be necessary, if the meanings of some cues must be learned by experience [1,26,27]. The newly recruited cue can then either stand in for a long-trusted cue when the latter is missing from a scene [3], or be integrated with other cues to improve the accuracy and precision of perceptual estimates [28].

Experimental Procedures

Twenty naïve volunteers (19–26 years) took part after giving informed consent. They were recruited from the Subject Database of the Max Planck Institute, Tübingen. In return for their participation they received payment of 8 €/h. Participants had normal or corrected-to-normal vision (Snellen-equivalent of 20/25 or better), normal stereopsis of 60 arcsec or better (Stereotest circles; Stereo Optical, Chicago), and were tested for anomalous color vision. The experiments were approved by the Ethik-Kommission der Medizinischen Fakultät of the Universitätsklinikum Tübingen.

Stimuli were produced using Psychtoolbox [29,30] and were displayed on a CRT monitor at a distance of 60 cm. All displayed elements were rendered on a violet background in red and blue for the two eyes, respectively. Colors were matched so that with a pair of Berezin ProView anaglyph lenses (red on left eye) stimuli would appear black in one eye and near perfectly blended with the background in the other eye [31]. All displayed elements were fully visible in the central part of the screen and were blended into the background by reducing their contrast towards the screen edges.

Trials started with a zero disparity fixation cross (1.9 deg visual angle) and four flanking horizontal lines above and below the cylinder's location (5.5 deg and 7.5 deg from fixation). The purpose of these lines was to make EVM easier for the visual system to measure, as the window of integration for vertical scale disparity is typically about 20 deg in diameter [32]. After 0.5 s, a horizontal cylinder appeared (8 deg diameter). The cylinder rotated (14.4 deg/s angular speed) around its axis of symmetry, which passed through fixation. The cylinder could be displayed in two configurations, ambiguous or disambiguated (Fig. 1).

- Ambiguous configuration: The cylinder was drawn with 8 horizontal lines that contained no discontinuities and were blended into the background at the sides. The lines could therefore only weakly support horizontal disparity signals and could not specify the rotation direction of the cylinder; i.e., perceived direction was ambiguous.

- Disambiguated configuration: The cylinder was drawn with 80 dots (0.25 deg diameter) randomly positioned on the 8 visible horizontal lines, each of which was placed randomly within an equal sector around the cylinder's circumference. A gray rectangle (12 × 3.5 deg) occluded the central portion of the farthest side of the cylinder. The horizontal disparity of the dots and the rectangle specified the rotation direction; i.e., perceived direction was unambiguous (cf. Fig. S2A).
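Why the line-only cylinder is ambiguous can be illustrated with a small geometric sketch. It assumes orthographic projection, which the text does not state, and it treats the 8 deg diameter as a radius of 4 units on the screen. The function name and sign conventions are hypothetical. A line on the near surface rotating one way produces the same screen motion as a depth-mirrored line on the far surface rotating the other way, so the image alone cannot specify the rotation direction.

```python
import math

RADIUS_DEG = 4.0         # half of the 8 deg cylinder diameter
SPEED_DEG_PER_S = 14.4   # angular speed given in the text


def line_state(theta0_deg, direction, t):
    """Screen height and depth of one line at time t (orthographic projection, assumed).

    direction is +1 or -1 for the two rotation directions; which sign
    corresponds to front-side-up is an arbitrary convention here.
    """
    theta = math.radians(theta0_deg + direction * SPEED_DEG_PER_S * t)
    height = RADIUS_DEG * math.sin(theta)   # visible on the screen
    depth = RADIUS_DEG * math.cos(theta)    # positive = near side; not visible
    return height, depth


# A line starting at 30 deg rotating one way, and its depth-mirrored twin
# (starting at 180 - 30 = 150 deg) rotating the other way.
for t in (0.0, 0.5, 1.0):
    h1, d1 = line_state(30.0, +1, t)
    h2, d2 = line_state(150.0, -1, t)
    print(f"t={t:.1f} s  height {h1:.3f} vs {h2:.3f}  depth {d1:.3f} vs {d2:.3f}")
```

The two lines have identical heights at every time point but opposite depths, which is the information that the added horizontal disparity and occlusion cues supply in the disambiguated configuration.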

In probe trials the cylinder was presented in the ambiguous configuration for 0.5 s (Fig. 2B). In training trials the cylinder was presented in the disambiguated configuration for 1.0 s, after which the additional depth cues were removed, leaving the ambiguous cylinder for 0.5 s (Fig. 2A). Participants were instructed to fixate the central cross and report the perceived rotation direction of the cylinder in the ambiguous configuration at the end of each trial by pressing one of two buttons. In training trials the perceived rotation direction of the cylinder in the ambiguous configuration was primed by the cylinder in the disambiguated configuration (see Fig. S2A for data showing the induction of perceived rotation direction).

In all trials there was 2% vertical magnification of one eye's image (EVM). This caused a scaling of the image away from the center—i.e. displacement of image elements increased linearly with distance above and below the line of sight. In training trials EVM was contingent with the direction of rotation. The contingency was balanced across participants (for half of the participants EVM was the right eye when the cylinder rotated front-up). Both contingencies contributed to the overall effect so data were combined (Fig. S2B).
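The linear growth of the displacement can be made concrete with a short worked example. It treats the magnification as a pure vertical scaling about the line of sight (a small-angle simplification assumed here), and the function name is hypothetical. The elevations are taken from the stimulus description: the top and bottom of the 8 deg cylinder and the flanking lines.

```python
MAGNIFICATION = 0.02  # 2% vertical magnification of one eye's image


def vertical_offset_deg(elevation_deg, magnification=MAGNIFICATION):
    """Vertical displacement of an image element in the magnified eye.

    Assumes a pure vertical scaling about the line of sight, so the offset
    is proportional to the element's elevation above or below fixation.
    """
    return magnification * elevation_deg


# Cylinder edges (4 deg) and flanking lines (5.5 and 7.5 deg),
# above (+) and below (-) fixation.
for elevation in (-7.5, -5.5, -4.0, 0.0, 4.0, 5.5, 7.5):
    print(elevation, round(vertical_offset_deg(elevation), 3))
```

Under this simplification the outermost flanking lines are displaced by about 0.15 deg between the two eyes, while elements at fixation are not displaced at all.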

The experiment started with 20 training trials, each lasting twice as long as the later training trials. Subsequently, participants were presented with 80 blocks of 5 trials, each composed of 4 training trials and 1 probe trial in random order. Participants were required to take two 2 min breaks during exposure. At the end, participants were presented with 40 probe trials. For half of the participants, there was another 2 min break before the final probe trials. Data from these trials did not differ between the two groups, so they were combined for analysis.
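The trial sequence described above can be sketched as follows. This is an illustration only: it assumes that "random order" means shuffling within each block of 5 trials, it omits the breaks, and the function name and the use of a seeded generator are hypothetical.

```python
import random


def build_schedule(seed=0):
    """Return a list of 'training' / 'probe' labels for one session."""
    rng = random.Random(seed)
    schedule = ["training"] * 20              # initial training trials
    for _ in range(80):                       # 80 interleaved blocks of 5 trials
        block = ["training"] * 4 + ["probe"]
        rng.shuffle(block)                    # assumed: random order within each block
        schedule.extend(block)
    schedule.extend(["probe"] * 40)           # final probe trials
    return schedule


schedule = build_schedule()
print(len(schedule), schedule.count("training"), schedule.count("probe"))
```

With these numbers a session contains 340 training trials and 120 probe trials, 80 of which are interleaved with training.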

Acknowledgments

M.D.L. was funded by the EU grant 27141 “ImmerSence” and by EU grant 248587 “THE”. M.O.E. was funded by HFSP grant RPG 2006/3, by EU grant 248587 “THE”, and by the Max Planck Society. B.T.B. was funded by HFSP grant RPG 2006/3, NSF grant BCS-0617422, and NIH grant EY-013988.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- 1. Berkeley G. An essay towards a new theory of vision. In: Turbayne CM, editor. Works on Vision. Bobbs-Merrill; Indianapolis: 1709. 1963.

- 2. Haijiang Q, Saunders JA, Stone RW, Backus BT. Demonstration of cue recruitment: Change in visual appearance by means of Pavlovian conditioning. Proceedings of the National Academy of Sciences of the USA. 2006;103:483–486. doi: 10.1073/pnas.0506728103.

- 3. Backus BT, Haijiang Q. Competition between newly recruited and preexisting visual cues during the construction of visual appearance. Vision Research. 2007;47:919–924. doi: 10.1016/j.visres.2006.12.008.

- 4. Ernst MO, Banks MS, Bülthoff HH. Touch can change visual slant perception. Nature Neuroscience. 2000;3(1):69–73. doi: 10.1038/71140.

- 5. Adams WJ, Graf EW, Ernst MO. Experience can change the “light-from-above” prior. Nature Neuroscience. 2004;7(10):1057–1058. doi: 10.1038/nn1312.

- 6. Michel MM, Jacobs RA. Parameter learning but not structure learning: A Bayesian network model of constraints on early perceptual learning. Journal of Vision. 2007;7(1):4, 1–18. doi: 10.1167/7.1.4.

- 7. Ernst MO. Learning to integrate arbitrary signals from vision and touch. Journal of Vision. 2007;7:1–14. doi: 10.1167/7.5.7.

- 8. Ogle KN. Researches in binocular vision. W.B. Saunders Co; London: 1950.

- 9. Backus BT, Banks MS, van Ee R, Crowell JA. Horizontal and vertical disparity, eye position, and stereoscopic slant perception. Vision Research. 1999;39:1143–1170. doi: 10.1016/s0042-6989(98)00139-4.

- 10. Backus BT. Perceptual metamers in stereoscopic vision. In: Dietterich TG, Becker S, Ghahramani Z, editors. Advances in Neural Information Processing Systems 14. MIT Press; Cambridge, MA: 2001.

- 11. Wallach H, O'Connell DN. The kinetic depth effect. Journal of Experimental Psychology. 1953;45:205–217. doi: 10.1037/h0056880.

- 12. Backus BT. The Mixture of Bernoulli Experts: A theory to quantify reliance on cues in dichotomous perceptual decisions. Journal of Vision. 2009;9:1–19. doi: 10.1167/9.1.6.

- 13. Sasaki Y, Nanez JE, Watanabe T. Advances in visual perceptual learning and plasticity. Nature Reviews Neuroscience. 2010;11:53–60. doi: 10.1038/nrn2737.

- 14. Watanabe T, Náñez JE, Sasaki Y. Perceptual learning without perception. Nature. 2001;413:844–848. doi: 10.1038/35101601.

- 15. Seitz AR, Yamagishi N, Werner B, Goda N, Kawato M, Watanabe T. Task-specific disruption of perceptual learning. Proceedings of the National Academy of Sciences of the USA. 2005;102:14895–14900. doi: 10.1073/pnas.0505765102.

- 16. Fiorentini A, Berardi N. Perceptual learning specific for orientation and spatial frequency. Nature. 1980;287:43–44. doi: 10.1038/287043a0.

- 17. Karni A, Sagi D. Where practice makes perfect in texture discrimination: evidence for primary visual cortex plasticity. Proceedings of the National Academy of Sciences of the USA. 1991;88:4966–4970. doi: 10.1073/pnas.88.11.4966.

- 18. Poggio T, Fahle M, Edelman S. Fast perceptual learning in visual hyperacuity. Science. 1992;256:1018–1021. doi: 10.1126/science.1589770.

- 19. Gibson JJ, Gibson EJ. Perceptual learning: Differentiation or enrichment? Psychological Review. 1955;62:32–41. doi: 10.1037/h0048826.

- 20. Welch RB, Bridgeman B, Anand S, Browman K. Alternating prism exposure causes dual adaptation and generalization to a novel displacement. Perception & Psychophysics. 1993;54:195–204. doi: 10.3758/bf03211756.

- 21. Martin TA, Keating JG, Goodkin HP, Bastian AJ, Thach WT. Throwing while looking through prisms. II. Specificity and storage of multiple gaze-throw calibrations. Brain. 1996;119:1199–1211. doi: 10.1093/brain/119.4.1199.

- 22. Burge J, Ernst MO, Banks MS. The statistical determinants of adaptation rate in human reaching. Journal of Vision. 2008;8(4):20, 1–19. doi: 10.1167/8.4.20.

- 23. Larrañaga P, Poza M, Yurramendi Y, Murga RH, Kuijpers CMH. Structure Learning of Bayesian Networks by Genetic Algorithms: Performance Analysis of Control Parameters. IEEE Transactions on Pattern Analysis and Machine Intelligence. 1996;18(9):912–926.

- 24. Griffiths TL, Tenenbaum JB. Structure and strength in causal induction. Cognitive Psychology. 2005;51:334–384. doi: 10.1016/j.cogpsych.2005.05.004.

- 25. Brunswik E, Kamiya J. Ecological Cue-Validity of 'Proximity' and of Other Gestalt Factors. American Journal of Psychology. 1953;66:20–32.

- 26. Hebb DO. The Organization of Behavior: A neuropsychological theory. Wiley; New York: 1949.

- 27. Wallach H. Learned stimulation in space and motion perception. American Psychologist. 1985;40:399–404. doi: 10.1037//0003-066x.40.4.399.

- 28. Clark JJ, Yuille AL. Data fusion for sensory information processing systems. Kluwer; Boston: 1990.

- 29. Brainard DH. The Psychophysics Toolbox. Spatial Vision. 1997;10:433–436.

- 30. Pelli DG. The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spatial Vision. 1997;10:437–442.

- 31. Mulligan JB. Optimizing stereo separation in color television anaglyphs. Perception. 1986;15:27–36. doi: 10.1068/p150027.

- 32. Kaneko H, Howard I. Spatial limitation of vertical-size disparity processing. Vision Research. 1997;37:2871–2878. doi: 10.1016/s0042-6989(97)00099-0.
