Abstract

Background

Computer models played an important role in the health care reform debate, and they will continue to be used during implementation. However, current models are limited by inputs, including available data.

Aim

We review microsimulation and cell-based models. For each type of model, we discuss data requirements and other factors that may affect its scope. We also discuss how to improve models by changing data collection and data access procedures.

Materials and Methods

We review the modeling literature, documentation on existing models, and data resources available to modelers.

Results

Even with limitations, models can be a useful resource. However, limitations must be clearly communicated. Modeling approaches could be improved by enhancing existing longitudinal data, improving access to linked data, and developing data focused on health care providers.

Discussion

Longitudinal datasets could be improved by standardizing questions across surveys or by fielding supplemental panels. Funding could be provided to identify causal parameters and to clarify ranges of effects reported in the literature. Finally, a forum for routine communication between modelers and policy makers could be established.

Conclusion

Modeling can provide useful information for health care policy makers. Thus, investing in tools to improve modeling capabilities should be a high priority.

Keywords: Modeling, microsimulation, health care policy

Modeling tools played an important role in the debate over health care reform legislation—quantifying the likely effects of changes and specifying the extent to which effects will vary by the design of a policy option. Such tools can continue to be useful as the new law is implemented and as additional reforms (e.g., payment reforms) are considered. For models to be useful, however, there must be data to support them and the results must be effectively communicated to policy makers.

Previous researchers (Glied, Remler, and Graff Zivin 2002; Weinstein et al. 2003) have produced guidelines regarding best practices for developing health care policy models and reporting results to stakeholders. In this paper, we focus more narrowly on the data required to develop useful models and on the limitations of existing data sources. We begin by reviewing two types of models commonly used to evaluate health policy options: microsimulation and cell-based models. For each type of model, we discuss its basic mechanisms, its applications, its data requirements, and other factors that may affect its scope. We then discuss the limitations of the available data. We conclude with recommendations for enhancing existing databases and developing additional data inputs that could be useful in modeling health policy outcomes.

TWO TYPES OF MODELS

Microsimulation

Microsimulation models are powerful tools for analyzing policy options (Mitton, Sutherland, and Weeks 2000; Epstein 2006; Gilbert 2007; Miller and Page 2007). For example, the Congressional Budget Office uses a microsimulation model to analyze the effects of health care reform on cost and coverage (CBO 2007). A microsimulation can be thought of as a video game without fancy graphics and jarring sounds. Instead of having "characters," microsimulation models have "agents" that represent the entities affected by the policy reform. Common types of agents are people, groups of people (e.g., families), firms, insurers, health care providers, and federal/state governments. Each agent is endowed with "attributes," or defining characteristics (e.g., age, income). An attribute is typically included if it is a dimension along which one wishes to stratify the final results or if the agent's behavior depends on it. A behavior is a rule that determines which action the agent will undertake in response to a message received from another agent. The number of different types of agents included in the model, as well as the variety of behaviors they can act on, are strong determinants of the scope of the model. Table 1 lists some typical agents and behaviors.

Table 1.

Examples of Agents and Their Behaviors

| Agent 1 (Sends Message) | Behavior(s) Described in Message | Agent 2 (Receives Message) |
|---|---|---|
| Person/family/tax unit | Buying one of the ESI plans offered | Firm |
| | Complying with individual mandate; enrolling in public program | Government |
| | Buying an individual plan; buying into purchasing pool | Insurer |
| Firm | Offering several choices of ESI plans; setting employee premium contribution (single and family) | Person |
| | Complying or not with pay-or-play mandate | Government |
| | Buying group coverage for workers | Insurer |
| Insurer | Quoting nongroup premium price | Person |
| | Complying with limit on medical-loss ratios; quoting premiums for purchasing pools | Government |
| | Quoting group premium price | Firm |

Note. The table should be read in the following way: Agent 1 (person) sends a message that he/she will or will not buy a plan offered by the employer; the message is received by Agent 2 (the firm).
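To make the vocabulary of agents, attributes, behaviors, and messages concrete, the Python sketch below encodes one row of Table 1: a firm sends an ESI offer and a person decides whether to take it up. The class names, message kinds, and the rule that a person buys if the employee contribution is at most 5 percent of income are hypothetical illustrations, not taken from any of the models cited in this paper; real models estimate such rules from data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Message:
    sender: str    # name of the agent sending the message
    kind: str      # hypothetical message type, e.g. "offer_esi"
    payload: dict  # message details, e.g. the employee premium contribution


@dataclass
class Person:
    # Attributes: characteristics used to stratify results or to drive behavior
    name: str
    age: int
    income: float
    insured: bool = False

    def receive(self, msg: Message) -> Optional[Message]:
        """Behavior: respond to an ESI offer from a firm (hypothetical rule)."""
        if msg.kind != "offer_esi":
            return None  # this sketch models only one behavior
        contribution = msg.payload["employee_contribution"]
        # Assumed affordability rule: take up if contribution <= 5% of income
        if contribution <= 0.05 * self.income:
            self.insured = True
            return Message(self.name, "take_up_esi", {"plan": msg.payload["plan"]})
        return Message(self.name, "decline_esi", {})


# Usage: a firm sends the same offer to two workers with different incomes
offer = Message("firm_1", "offer_esi", {"plan": "PPO", "employee_contribution": 1200.0})
alice = Person("alice", age=40, income=50_000.0)
bob = Person("bob", age=25, income=20_000.0)
replies = [alice.receive(offer), bob.receive(offer)]
```

Each reply is itself a message that another agent (here, the firm) could react to, which is how chains of behaviors arise.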

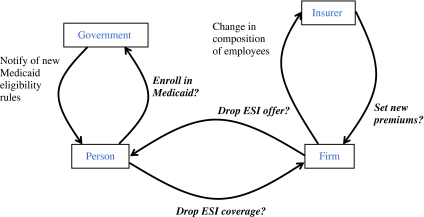

A microsimulation is run by perturbing the environment in which the agents operate (e.g., the government notifies selected individuals that they are now eligible for Medicaid). Perturbations cause “chain reactions” in which agents of different types react to the policy change and to each other's behaviors (see Figure 1). Because the behavior of one agent interacts with the behaviors of other agents, the perturbation generates a dynamic “back-and-forth” among the agents that usually converges after multiple iterations. The new equilibrium resulting from this dynamic process is the predicted outcome of the reform. Although microsimulation models are used to predict future outcomes, they can be validated by “projecting” outcomes for a historical year, where actual outcomes are known, and comparing results.

Figure 1.

An Example of the Type of Messages Exchanged by Four Agents in a Microsimulation Model

Note. In italics are the messages that notify that a decision needs to be taken applying some behavior. In this case, it is the government action (new Medicaid eligibility rules) that induces the agents to reevaluate their choices and possibly take some action.
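The back-and-forth that leads to a new equilibrium can be illustrated with a deliberately tiny Python sketch of a nongroup market with two agent types. People buy coverage if their expected cost plus a risk premium covers the net price; the insurer then reprices to the enrollees' average cost plus a loading; a government subsidy plays the role of the perturbation. All behavior rules and numbers here are hypothetical assumptions chosen for illustration, not parameters from any model cited in this paper.

```python
def simulate_nongroup(expected_costs, risk_premium, load, subsidy=0.0,
                      tol=1e-6, max_iter=1000):
    """Iterate person and insurer behaviors until the premium stops changing.

    Returns (premium, number enrolled, iterations used).
    """
    # Insurer's opening quote: pool everyone at average cost plus a loading
    premium = (1 + load) * sum(expected_costs) / len(expected_costs)
    for iteration in range(1, max_iter + 1):
        # Person behavior: buy if expected cost plus risk premium covers the net price
        enrolled = [c for c in expected_costs if c + risk_premium >= premium - subsidy]
        if not enrolled:
            return premium, 0, iteration  # the market unravels completely
        # Insurer behavior: reprice to the enrollees' average cost plus loading
        new_premium = (1 + load) * sum(enrolled) / len(enrolled)
        if abs(new_premium - premium) < tol:
            return new_premium, len(enrolled), iteration  # new equilibrium
        premium = new_premium
    raise RuntimeError("no equilibrium within max_iter iterations")


costs = [1000, 2000, 3000, 4000, 5000]  # hypothetical expected annual costs
baseline = simulate_nongroup(costs, risk_premium=1500, load=0.1)
reform = simulate_nongroup(costs, risk_premium=1500, load=0.1, subsidy=500)
```

In this toy example the baseline settles at a premium of about $4,400 with three enrollees after three rounds, while the subsidy keeps a lower-cost person in the pool and the market settles at about $3,850 with four enrollees. Dropping the insurer's repricing step would leave out exactly this adverse-selection feedback.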

Models used to simulate policy changes include the Health Benefits Simulation Model (Sheils and Haught 2003; The Lewin Group 2009), the Health Insurance Reform Simulation Model (now called Health Insurance Policy Simulation Model; Blumberg et al. 2003), RAND COMPARE (Girosi et al. 2009), the Health Insurance Simulation Model (CBO 2007), the AHRQ MEDSIM, KIDSIM, and PUBSIM models (Selden and Moeller 2000; AHRQ 2009), the Urban Institute's Transfer Income Model or TRIM (Giannarelli 1992), and models developed by academic researchers, including Gruber (2000a, b, 2008) and Meara et al. (2008).

There are several reasons why microsimulation models are used to analyze health insurance reforms. First, there is considerable heterogeneity both in agents' preferences and in the choices that individuals and families face. Second, in analyzing the effects of health care policy changes we are often interested in distributional effects, and microsimulation can produce such estimates. Third, in the case of health care reform, many policies are proposed in combination, such as an employer mandate, a tax credit, and a regulatory change in the nongroup market, and microsimulation allows for the analysis of multiple policy options simultaneously. Finally, because each economic transaction is recorded, microsimulation can produce a large number of outcome measures, such as the number of newly insured, the cost of a government program, and changes in insurance premiums. Additional examples of outcomes commonly reported are shown in Table 2.

Table 2.

Examples of Reforms and Outcome Measures Reported by Health Reform Microsimulation Models

| Examples of Reforms That Could Be Evaluated Using Microsimulation | Examples of Outcomes That Could Be Assessed Using Microsimulation |
|---|---|
| Medicaid expansion; tax credit; individual mandate; employer mandate | Number of newly insured (aggregate or by individual and family characteristics) |
| Medicaid expansion; tax incentive; reinsurance | Cost of government program (aggregate or per newly insured) |
| Penalty of individual mandate; penalty of pay-or-play employer mandate | Revenue received by government program |
| Reduction in uncompensated care due to insurance expansion | Savings to government |
| Pay-or-play employer mandate | Additional expenditures for firms |
| Pay-or-play employer mandate; firm tax credit | Firms offering ESI (aggregate and distribution) |
| Individual income tax credit; Medicaid expansion | Firms dropping ESI offer (aggregate and distribution) |
| Newly insured/uninsured in any insurance expansion; switching from ESI to Medicaid (crowd-out) in Medicaid expansion; joining a purchasing pool | People switching from one insurance status to another: aggregate number and distribution (by age/income/health status …) |
| Mandating guaranteed issue and community rating; tax credit | Change in nongroup premiums |
| Pay-or-play employer mandate; government-funded reinsurance | Change in group premiums |
| Any of the above | Change in consumer financial risk (median and distribution) |
| Any of the above | Change in national health expenditures (total/out of pocket) |
| Any of the above | Additional population life years |

Despite the breadth of outcomes that can be generated, some important outcomes, including labor market effects (e.g., effects on wages, unemployment, job creation, and economic growth) and health outcomes (e.g., quality-adjusted life years, disease prevalence), are rarely considered by microsimulation models. Many researchers feel that the complexity and data requirements of a simulation that simultaneously deals with health and labor market outcomes are beyond current capabilities. However, some labor market outcomes could be reported without necessarily simulating the entire labor market. For example, Meara et al. (2008) report changes in employed workers, hours worked per week, and annual wages by exploiting the aggregate relationship between changes in insurance premiums and labor outcomes (Baicker and Chandra 2006).

Finally, most existing microsimulation models for health reform have focused on demand-side factors (e.g., firm offer rates, employee insurance take-up) rather than supply-side factors (e.g., provider behavior). This is not a limitation of the approach itself but rather reflects the limitations of existing databases.

Data Requirements

In principle, microsimulation is the ideal modeling tool for policy analysis. It provides the finest resolution possible, it tracks each economic transaction, and it can be used to analyze the effects of a reform on subsets of the population. However, microsimulation models tend to be complex and require large amounts of data to accurately predict behaviors and outcomes. As a starting point, a microsimulation model must reproduce the status quo, which requires large amounts of data to ascertain the current distribution of individuals, firms, and other agents in the population. Uncertainty regarding the distribution of agents in the population, or the likely response of agents to policies, can introduce substantial error into the model. Data for microsimulation models are often culled from a variety of sources, and sophisticated statistical techniques are often required to standardize the data or to predict behavioral responses. Tracking data sources, methods, and assumptions can become a challenge, and detailed documentation is needed to record and explain modeling decisions. Too often, modelers do not effectively communicate information about data sources, assumptions, and uncertainty to policy makers, which can lead to overconfidence in or misinterpretation of model results. Below, we describe the type of data needed to inform microsimulation modeling for health policy, and the limitations of existing data.
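One common way to make a survey-based population reproduce known status-quo totals is to reweight it; the text does not prescribe a method, so the Python sketch below uses raking (iterative proportional fitting) as one illustrative choice. Each pass rescales the weights so that the weighted totals for one attribute match its control totals, cycling through attributes until all margins agree. The sample records and control totals are hypothetical.

```python
def rake(records, weights, targets, max_iter=100, tol=1e-9):
    """Raking (iterative proportional fitting) of survey weights.

    records: list of dicts of categorical attributes
    targets: {attribute: {category: control total}}
    Assumes every category in targets has positive weight in the sample.
    """
    w = list(weights)
    for _ in range(max_iter):
        max_change = 0.0
        for attr, margin in targets.items():
            # Current weighted total for each category of this attribute
            current = {cat: 0.0 for cat in margin}
            for rec, wi in zip(records, w):
                current[rec[attr]] += wi
            # Scale weights so this attribute's totals match the controls
            factors = {cat: margin[cat] / current[cat] for cat in margin}
            for i, rec in enumerate(records):
                w[i] *= factors[rec[attr]]
            max_change = max(max_change, max(abs(f - 1.0) for f in factors.values()))
        if max_change < tol:  # every margin already matched on this pass
            break
    return w


sample = [
    {"age": "under_45", "coverage": "insured"},
    {"age": "under_45", "coverage": "uninsured"},
    {"age": "45_plus", "coverage": "insured"},
    {"age": "45_plus", "coverage": "uninsured"},
]
controls = {  # hypothetical population totals, in millions
    "age": {"under_45": 60.0, "45_plus": 40.0},
    "coverage": {"insured": 85.0, "uninsured": 15.0},
}
calibrated = rake(sample, [1.0] * 4, controls)
```

With equal starting weights and one record per cell, the calibrated weights are 51, 9, 34, and 6 million, matching both sets of controls. Raking only matches the margins it is given; joint distributions that are not controlled for still come from the survey itself.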

Data Needed to Define the Agents

Information on agents' attributes and behaviors typically comes from survey data. Because there is rarely a single database that can be used to populate all the attributes of an agent, researchers use statistical matching techniques to merge information from different surveys (D'Orazio, Di Zio, and Scanu 2006). In addition, agents of different types—such as workers and firms—must often be linked to each other, but few datasets include such linkages. In these cases, researchers use statistical methods to synthetically link the agents together. There are significant methodological challenges to this process and no gold standard for synthetic linking exists. Improved data, such as information linking workers to firms or patients to providers, would alleviate some of these issues.
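Statistical matching covers a family of methods. As one simple example, the Python sketch below implements a nearest-neighbor (distance hot-deck) match that copies a variable observed only in survey B onto records from survey A. The surveys, variables, and scaling constants are hypothetical. Like other matching methods, it implicitly assumes that the donated variable is unrelated to survey A's own variables once the matching variables are accounted for, an assumption that usually cannot be tested with the data at hand.

```python
def distance_hot_deck(recipients, donors, match_vars, donated_var, scales):
    """Give each recipient the donated_var value of its nearest donor.

    Distance is Euclidean over match_vars, each divided by a scale so that
    variables measured in different units contribute comparably.
    """
    matched = []
    for rec in recipients:
        nearest = min(
            donors,
            key=lambda d: sum(((rec[v] - d[v]) / scales[v]) ** 2 for v in match_vars),
        )
        matched.append({**rec, donated_var: nearest[donated_var]})
    return matched


# Survey A has demographics but no spending; survey B has spending (hypothetical data)
survey_a = [{"id": 1, "age": 30, "income": 40_000}, {"id": 2, "age": 62, "income": 90_000}]
survey_b = [
    {"age": 28, "income": 42_000, "spending": 1_500},
    {"age": 60, "income": 85_000, "spending": 9_000},
    {"age": 45, "income": 60_000, "spending": 4_000},
]
fused = distance_hot_deck(survey_a, survey_b, ["age", "income"], "spending",
                          {"age": 10, "income": 10_000})
```

The choice of matching variables and scales drives the result, which is one reason different modelers can produce different synthetic files from the same inputs.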

Data Needed to Model Behaviors

The scope and accuracy of microsimulation are limited by the researchers' ability to design realistic behaviors for all the agents of interest. There are several approaches used to model behaviors, and they all require reliable data in order to produce accurate estimates.

In the econometric regression approach, one or more equations are constructed that describe how agents have made choices in the past. This requires, in principle, datasets that describe the full set of choices available to the agents and their response to these choices. In practice, the choice set is rarely fully observed, and imputation is needed to fill the gaps. For example, imputation and other methods must often be used to infer Medicaid eligibility, nongroup premiums, employer premiums, and health plans available to individuals who are not enrolled in insurance. Some of these problems could be alleviated if appropriate datasets were available. For example, more comprehensive data on enrollees in the nongroup market could help us better understand premium prices faced by this group. Similarly, while there are datasets where one can observe both premiums and health plan characteristics, the sample sizes are typically too small to precisely estimate the household-level choice of health plan.
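As a minimal sketch of regression-based imputation, the Python code below fits a log-linear regression of nongroup premiums on age among people who are enrolled and uses it to predict the premiums that uninsured people would face. Using age as the only predictor, and the synthetic premium data, are simplifying assumptions for illustration; real models condition on many more characteristics and must also deal with selection into enrollment.

```python
import math


def fit_log_linear(x, y):
    """OLS regression of log(y) on x; returns (intercept, slope)."""
    log_y = [math.log(v) for v in y]
    n = len(x)
    mean_x, mean_ly = sum(x) / n, sum(log_y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (li - mean_ly) for xi, li in zip(x, log_y))
    slope = sxy / sxx
    return mean_ly - slope * mean_x, slope


def impute(intercept, slope, x_new):
    """Predicted premiums for people whose premium is not observed."""
    return [math.exp(intercept + slope * xi) for xi in x_new]


# Hypothetical enrollee data: premiums rise about 3% per year of age
enrollee_ages = [25, 35, 45, 55, 64]
enrollee_premiums = [2000 * math.exp(0.03 * age) for age in enrollee_ages]
intercept, slope = fit_log_linear(enrollee_ages, enrollee_premiums)
uninsured_premiums = impute(intercept, slope, [30, 50])  # ages of uninsured people
```

Exponentiating a predicted log value ignores retransformation bias; with real, noisy data a correction such as Duan's smearing estimator is commonly applied.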

In a related approach, estimates from the literature are used to summarize an agent's behavior, typically with a single number—the elasticity—which represents the degree of response to a change in an external variable. Elasticities may depend on basic agents' attributes such as age and income (for individual) and size (for firms). Because published elasticity estimates may vary widely, sensitivity analyses varying the parameter are typically conducted.
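The Python sketch below shows how a single elasticity turns into a behavioral response and how a simple sensitivity analysis varies it. The constant-elasticity functional form, the baseline enrollment, the 20 percent price reduction, and the three elasticity values are all hypothetical assumptions, not estimates from the literature.

```python
def enrollment_after_price_change(baseline, price_ratio, elasticity):
    """Constant-elasticity response: new = baseline * (new price / old price) ** elasticity."""
    return baseline * price_ratio ** elasticity


baseline_enrollment = 1_000_000  # hypothetical nongroup enrollment
price_ratio = 0.8                # hypothetical 20% reduction in net premium
for elasticity in (-0.2, -0.5, -0.8):  # hypothetical low / central / high values
    new = enrollment_after_price_change(baseline_enrollment, price_ratio, elasticity)
    print(f"elasticity {elasticity:+.1f}: {new - baseline_enrollment:,.0f} additional enrollees")
```

Reporting the full range, rather than only the central value, is what allows policy makers to see how much the conclusion depends on this one parameter.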

One limitation of all regression-based estimates is that they are derived from marginal changes observed within the data. If health reform options propose large changes that are beyond what has been observed in the past, then it is not clear that the regression-based estimates will provide accurate behavioral responses. Another problem is that, in some cases, literature or data to estimate parameters are virtually nonexistent. For example, there are few studies estimating the effect of cost-saving approaches such as the use of comparative effectiveness information.

A third approach, grounded in economic theory, assigns a "utility" to each choice faced by an agent, and the agent selects the option that produces the greatest utility (Varian 1993; Cutler and Zeckhauser 2000). Utility maximization requires the researcher to quantify how much agents value certain goods, such as health care and protection against financial risk. This information, usually derived from the literature, tends to be incomplete. In particular, details on how the value varies with characteristics of the good in question or attributes of the agents are often not available. Moreover, the difficult and important task of capturing the status quo is more readily achieved with a regression or elasticity-based model than with a utility maximization approach. Nevertheless, the advantage of utility maximization is that it can be applied to health reform proposals requiring large changes that are beyond the scope of what has been observed in the past.
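A minimal Python sketch of utility maximization follows, assuming constant absolute risk aversion (CARA) utility, one common textbook choice. The agent compares going uninsured with buying a plan by computing expected utility over two hypothetical spending scenarios. The spending distribution, premium, deductible, and risk-aversion values are illustrative assumptions; the risk-aversion coefficient stands in for the "value of protection against financial risk" that the text notes is hard to pin down.

```python
import math


def expected_utility(income, premium, out_of_pocket, scenarios, risk_aversion):
    """CARA expected utility: E[-exp(-r * (income - premium - out-of-pocket))]."""
    return sum(
        prob * -math.exp(-risk_aversion * (income - premium - out_of_pocket(spending)))
        for prob, spending in scenarios
    )


def choose_option(income, options, scenarios, risk_aversion):
    """Return the name of the option with the highest expected utility."""
    return max(
        options,
        key=lambda name: expected_utility(
            income, options[name][0], options[name][1], scenarios, risk_aversion
        ),
    )


# Hypothetical medical spending: 90% chance of $1,000, 10% chance of $30,000
scenarios = [(0.9, 1_000.0), (0.1, 30_000.0)]
options = {
    "uninsured": (0.0, lambda s: s),                          # pays all spending
    "deductible_plan": (4_500.0, lambda s: min(s, 2_000.0)),  # $2,000 deductible
}
choice_averse = choose_option(50_000.0, options, scenarios, risk_aversion=1e-4)
choice_near_neutral = choose_option(50_000.0, options, scenarios, risk_aversion=1e-6)
```

The plan costs the agent $5,600 in expectation versus $3,900 when uninsured, so only a sufficiently risk-averse agent buys it: here the agent with `risk_aversion=1e-4` chooses the plan and the nearly risk-neutral one does not.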

Cell-Based Models

There are many situations in which researchers do not need (or the data cannot support) the complexity of a microsimulation model. In these cases, modelers may use cell-based models, for which the population of interest is stratified into cells according to several key attributes (e.g., age, income, and insurance status). Each cell is like an agent in a microsimulation and has one or more behaviors that allow it to respond to exogenous stimuli. The response typically consists of a number of people migrating to another cell. For example, the uninsured population of a given socioeconomic status would react to an exogenous decrease in the price of individual insurance by partially migrating to the cell of people of the same socioeconomic status who have individual insurance. Unlike microsimulation, however, cell-based models offer no feedback loops. For example, a cell-based model would not typically account for the effect of an influx of people in the nongroup market on the price of insurance to produce an additional response that propagates through the system.
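The cell-based logic can be sketched in a few lines of Python: each cell is an (income group, insurance status) pair, and the only behavior is that a fixed share of each uninsured cell migrates to the corresponding nongroup cell. The cell counts and take-up rates are hypothetical. Note that the function makes a single pass and never revisits premiums, which is the absence of feedback described above.

```python
def apply_take_up(cells, take_up):
    """One-pass cell-based model: move a share of each uninsured cell to nongroup coverage.

    cells:   {(income_group, status): number of people}
    take_up: {income_group: share of the uninsured who buy nongroup coverage}
    """
    result = dict(cells)
    for (group, status), people in cells.items():
        if status == "uninsured":
            movers = people * take_up.get(group, 0.0)
            result[(group, "uninsured")] -= movers
            result[(group, "nongroup")] = result.get((group, "nongroup"), 0.0) + movers
    return result  # no feedback: premiums are not recomputed after people move


population = {  # hypothetical population counts
    ("low_income", "uninsured"): 10_000_000,
    ("low_income", "nongroup"): 2_000_000,
    ("high_income", "uninsured"): 5_000_000,
    ("high_income", "nongroup"): 4_000_000,
}
take_up = {"low_income": 0.10, "high_income": 0.25}  # hypothetical responses to a price cut
after = apply_take_up(population, take_up)
```

Because people only move between cells, the total population is conserved, which is a useful consistency check in spreadsheet implementations as well.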

Cell-based modeling is coarser than microsimulation, but it is appropriate in a number of situations, such as when:

the outcomes of interest are aggregate measures, such as total cost or total number of people insured;

the behaviors either do not vary much with individual characteristics, or we do not know how they vary;

feedback loops are known to be of second-order importance and can be disregarded.

Cell-based models offer a number of advantages:

the parameters tend to be single numbers (like take-up rates) that can be found in the literature;

there are few model parameters, allowing for greater transparency;

the model is relatively compact and easy to explain to stakeholders;

implementation and maintenance of the model tends to be simple and inexpensive compared with other models;

the model can often be implemented in an interactive spreadsheet that allows the user to change the policy parameters and immediately see the result.

Overall, cell-based models often represent an attractive alternative to more complex models. For example, cell-based models were used to analyze five health insurance options for the state of New York (Glied, Tilipman, and Carrasquillo 2009) and to evaluate four Medicaid reform options (Holahan and Weil 2007). Examples of downloadable, interactive spreadsheets for the analysis of the effects of incentives to providers for the adoption of electronic medical records can be found in the work of Girosi, Meili, and Scoville (2005).

IMPROVING CURRENT DATA SYSTEMS

Many of the modeling challenges described above could be addressed by improving available data. In this section, we describe some specific improvements that would be beneficial.

Improving Longitudinal Data

Longitudinal, representative, and health-focused surveys can help modelers to identify causal relationships, model transitions, and understand long-term relationships between health policies and health and labor outcomes. While longitudinal data on individuals exist, most datasets have limitations. In particular, no data exist that capture health and economic outcomes over the entire life course. Instead, separate studies focus on older adults (the Health and Retirement Study; HRS), working-age adults (the Panel Study of Income Dynamics; PSID), and youth (the National Longitudinal Survey of Youth [NLSY], the National Longitudinal Study of Adolescent Health [ADD-HEALTH]). An additional challenge is that sample size constraints make it difficult to analyze low-probability events, like the development of chronic disease. While fielding a large, nationally representative longitudinal survey capturing all ages would be ideal, it may be prohibitively expensive. As an alternative, existing data could be improved by standardizing questions across surveys, or by fielding supplemental panels focused on individuals with specific needs (e.g., individuals with chronic conditions, unemployed individuals). Researchers at the University of Michigan have begun to facilitate linkages across existing longitudinal datasets by developing a crosswalk mapping comparable questions in the PSID and the HRS.1

Longitudinal data on employers and providers are less available than such data on individuals. The major surveys used to model employers' decisions to offer coverage, such as the MEPS Insurance Component (MEPS-IC), are primarily cross-sectional. The Kaiser Family Foundation and Health Research and Educational Trust (Kaiser-HRET) Employer Survey collects longitudinal information for some firms, but sample sizes are too small for detailed modeling by firm characteristics. Longitudinal data would be valuable for understanding how an employer mandate or a change in the tax treatment of employer-sponsored health insurance affects firms' decisions to offer coverage. Because longitudinal data focused on firms' health insurance decision making are virtually nonexistent, we think that creating such a database would be a valuable use of resources.

Physicians are rarely incorporated into simulation models because their behavior is not well understood. Expanding existing longitudinal datasets of physicians would allow researchers to gain a better understanding of how payment policy or health care delivery reforms alter their behaviors (e.g., hours worked, acceptance of Medicaid patients). The Community Tracking Study Physician Survey (CTS-PS), the sole longitudinal survey of physicians, only collects data every 2–3 years and physicians are typically only observed at two time points. More frequent data collection and a longer panel would facilitate identifying the physician-level effects of policy changes. In addition, it would be useful to be able to link the physicians in the survey to information about the patients they treat.

Increasing Data Timeliness

For many datasets, including MEPS-HC and MEPS-IC, there is a 2- to 4-year lag between when data are collected and when research files are available for public use. This affects all models and limits the ability to provide “real time” information on the effects of current events (e.g., recessions) on outcomes such as coverage and health. Smaller surveys may be more useful for generating timely information. For example, the Kaiser-HRET, which surveys approximately 3,000 firms annually, releases current year data at the end of each calendar year (KFF 2009).

One option for improving the timeliness of data is to make use of Internet panels, such as Knowledge Networks (KN) and the RAND American Life Panel (ALP). These are ongoing, Internet-based surveys of individuals (RAND 2005; KN 2008). For a fee, researchers can add questions to these panels, which can be fielded quickly, with analytic files often available within a few weeks. Sample sizes, population representation, timeliness, and transparency vary across surveys. Both KN and ALP use random digit dialing to recruit participants, and they can provide Internet access to respondents who do not have a computer. Unfortunately, some surveys rely on participants to opt in to the panel, which could lead to sample-selection biases.

Improving the Ability to Link Data Systems

Linking agents to each other (e.g., workers to firms, patients to providers) is an important component of a microsimulation model. For example, if we were able to link patients to providers, we could estimate how individual insurance status and sociodemographic characteristics influence the treatments provided and the quality of care received. Unfortunately, the available data do not support a robust linking of patients to providers. For example, the NHIS can be linked to Medicare claims data, which can be used to extrapolate information on providers. However, because the data are focused on patients and not physicians, they do not include comprehensive information on physician attributes like practice organization and staffing.

Data linking individuals and firms would substantially increase the accuracy of current models. By providing a better picture of the choice set faced by an employee, such data would help modelers understand employees' behaviors regarding health insurance, and how the behaviors vary depending on cost-sharing requirements and the quality of plans offered. Better understanding of these issues would greatly improve models of worker and firm choices. Currently, only the MEPS HC-IC data allow users to simultaneously observe employers and workers. Unfortunately, the dataset is small and cannot produce nationally representative estimates (AHRQ 2000).

Furthermore, the usefulness of linked data systems such as the MEPS HC-IC is often limited by confidentiality constraints, because they can be accessed only on-site at government research data centers (RDCs) and therefore cannot easily be used as the basis for a simulation. While these restrictions are necessary to protect confidentiality, they seriously limit the usefulness of these data to researchers, especially those who are not geographically close to an RDC.

In some cases, linked files can be made more accessible by creating public-use versions of the data that include statistical noise, that is, data that have been distorted so that the distribution of key variables is preserved but exact values that could be used to identify individual agents are obscured. This approach enables researchers to work with the data directly while minimizing the concern that confidentiality could be compromised. For example, the CDC released a public-use version of the NHIS linked to the National Death Index that includes noise but produces estimates similar to the unaltered data (Lochner et al. 2008). Introducing noise into linked files could be adopted by other surveys, such as the MEPS. Alternatively, systems could be improved to allow users to log in remotely to run statistical analyses with confidential data, without having direct access to the database. Currently, the National Center for Health Statistics (NCHS) allows remote access to confidential data, although procedures and options are limited to ensure confidentiality.
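The basic idea of noise injection can be illustrated with a short Python sketch: each value is multiplied by an independent random factor centered on 1, so that individual values are obscured while the mean is preserved in expectation. This is a generic illustration, not a description of the method used for the NHIS-National Death Index file; the noise level and the income values are hypothetical, and agencies use more elaborate, formally evaluated disclosure-limitation methods.

```python
import random
import statistics


def add_multiplicative_noise(values, sd=0.1, seed=None):
    """Multiply each value by an independent draw from Normal(1, sd**2)."""
    rng = random.Random(seed)
    return [v * rng.gauss(1.0, sd) for v in values]


incomes = [20_000 + 10 * i for i in range(10_000)]  # hypothetical confidential values
public = add_multiplicative_noise(incomes, sd=0.1, seed=42)
print(statistics.mean(incomes), statistics.mean(public))
```

The sample mean of the noisy file stays close to the original, but the variance is inflated by the noise, so analyses of spread or of relationships between variables may need to account for it.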

Improving Behavioral Parameter Estimates

Obtaining the necessary behavioral parameter estimates is one of the biggest challenges faced by modelers (Thorpe 1995). Often, modelers must rely on estimates from the literature to parameterize the model. Incorporating these estimates, however, can be difficult, particularly when findings in the literature vary widely. The literature on crowd-out of employer-sponsored coverage due to the expansion of public programs illustrates this point, with crowd-out estimates ranging from less than 10 to as much as 60 percent (Shone et al. 2007; Gruber and Simon 2008). Often, findings in the literature are difficult to compare because they focus on different populations and time periods, or reported results are noncomparable (e.g., regression coefficients versus elasticities). There are also areas where estimates identifying causal relationships are lacking; for example, studies have not definitively answered the question of the degree to which health insurance affects health outcomes. One approach to clarifying key parameters might be to commission a series of studies, all designed to answer the same question using different, investigator-initiated approaches. Such approaches could make use of natural experiments, quasiexperimental designs, or other novel methods aimed at addressing causality. Results could then be reported in a consistent manner, and study authors could be required to conduct sensitivity analyses to attempt to reconcile differences. Comparisons across studies could be facilitated by having study authors review each other's work, enabling authors to identify methodological differences or assumptions that might contribute to discrepant results. While it is likely that this approach would still produce a range of estimates, modelers would gain insight into the factors that contribute to differences across studies.
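A short Python calculation shows why the width of the crowd-out range matters for model outputs. It assumes, for simplicity, that crowd-out is the share of new public-program enrollees who would otherwise have had employer coverage (studies define it in several ways). The expansion size and cost per enrollee are hypothetical.

```python
def newly_insured(new_public_enrollees, crowd_out):
    """People gaining coverage when a crowd_out share of new enrollees dropped private coverage."""
    return new_public_enrollees * (1.0 - crowd_out)


def cost_per_newly_insured(cost_per_enrollee, crowd_out):
    """Program cost per person who was previously uninsured."""
    return cost_per_enrollee / (1.0 - crowd_out)


enrollees, cost = 1_000_000, 5_000.0  # hypothetical expansion size and cost per enrollee
for rate in (0.10, 0.60):  # roughly the range of crowd-out estimates cited above
    print(f"crowd-out {rate:.0%}: {newly_insured(enrollees, rate):,.0f} newly insured, "
          f"${cost_per_newly_insured(cost, rate):,.0f} per newly insured")
```

Under these assumptions, moving from the low to the high end of the range cuts the coverage gain from 900,000 to 400,000 people and more than doubles the cost per newly insured person, from about $5,600 to $12,500.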

Standardizing the Reporting of Behavioral Parameters in the Literature

A lack of reporting standards is another challenge associated with using parameters from the literature, because parameters are not always reported with enough detail to incorporate into models. For example, in many cases, the behavioral estimates are only presented for the whole sample and not by basic demographic characteristics (e.g., gender, age). In addition, articles will often report odds ratios, which cannot always be used to extrapolate underlying probabilities. In other cases, results are presented in graphical form and it may not be possible to infer specific point estimates. Because results are not always reported in a consistent and useful way, opportunities to use the information in models to improve our understanding of the effects of policy changes are lost.
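The point about odds ratios can be made concrete. An odds ratio fixes the relative change in odds, so recovering a probability requires the baseline probability for the comparison group, which articles do not always report. The minimal Python sketch below uses a hypothetical odds ratio of 2 at two hypothetical baseline probabilities.

```python
def probability_from_odds_ratio(baseline_prob, odds_ratio):
    """Convert an odds ratio to a probability, given the comparison group's probability."""
    odds = odds_ratio * baseline_prob / (1.0 - baseline_prob)
    return odds / (1.0 + odds)


# The same hypothetical odds ratio implies very different absolute effects
for p0 in (0.10, 0.50):
    p1 = probability_from_odds_ratio(p0, 2.0)
    print(f"baseline {p0:.2f} -> {p1:.3f} (change {p1 - p0:+.3f})")
```

An odds ratio of 2 raises a 10 percent probability to about 18 percent but a 50 percent probability to about 67 percent. When the odds ratio comes from a regression with covariates, applying it to a single average baseline is itself an approximation.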

One potential solution to this problem is to develop and disseminate a set of analysis and reporting standards that will facilitate the use of estimated behavioral parameters in analytic models. This approach has been used in the past by the Panel on Cost Effectiveness in Health and Medicine convened by the U.S. Public Health Service to develop standards for cost-effectiveness analysis (Siegel et al. 1996). One challenge in developing such standards is the wide range of methods that are used to estimate these parameters. Moreover, the standards would be in place to facilitate the use of behavioral estimates in a simulation model, which will likely not be the primary purpose of the study.

Developing Standardized Sources for Parameters

While many basic statistics (e.g., the share of the population on Medicaid) can be computed from existing data sources, it is often more efficient to have standardized sources that summarize this information. The Kaiser State Health Facts Database is a good source for state-specific information on the health care delivery system. AHRQ has several query tools, including MEPS-Net and HCUP-Net, that enable researchers to generate customized estimates online. Similarly, prevalence and trend data for specific illnesses can be accessed via the Behavioral Risk Factor Surveillance System (BRFSS) website. Building similar resources to address other questions, such as health information technology adoption rates, take-up of high deductible health plans, and state-specific regulations in the nongroup market, could be useful to modelers. It would also be helpful to have an online clearinghouse pointing researchers to the various databases containing health and health care statistics.

POLICY RECOMMENDATIONS

Several messages emerge from our review of models and data. First, models can be powerful tools for analyzing health care policy options. They are, however, complex and require large amounts of data. In many cases, the data needed to parameterize the model are not available. Fortunately, there are actions regarding the collection, reporting, and distribution of data that would ameliorate the problem. Policy makers could improve data for modeling through three key actions:

Provide funding to identify causal parameters and clarify ranges of effects that have been reported in the literature. Policy makers might consider funding several studies that use different approaches to answer the same question, and require standardized reporting so that results could be easily compared. The results could be synthesized to highlight the likely ranges of important parameters (e.g., crowd-out, take-up elasticities for nongroup coverage) and describe the underlying reasons for any differences across estimates.

Enhance nationally representative, longitudinal studies of individuals to clarify behavioral responses to policy changes. Standardizing questions across nationally representative surveys, increasing sample sizes, and adding specialized modules focused on key populations could lead to a better understanding of the relationship between health insurance and health outcomes. Longitudinal studies focused on employers would improve our understanding of firms' decision-making processes, and better data on physicians would allow modelers to consider provider behavior.

Improve access to linked data sources. While many linked data sources are available for use at RDCs, the application process to use these data can be onerous, and researchers who are not located near an RDC may be at a disadvantage. Introducing statistical noise into files so that they can be released for public use, or allowing remote log-in access, would enable a wider range of modelers to use the data.
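To illustrate the first recommendation, the synthesis of results from several studies with standardized reporting can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not a method used by any of the studies cited here: the study labels and crowd-out values are hypothetical, and the pooled figure uses a simple fixed-effect (inverse-variance) weighting, which assumes that every study estimates the same underlying effect.

```python
import math
from dataclasses import dataclass


@dataclass
class StudyEstimate:
    """One study's reported point estimate and standard error (assumed standardized reporting)."""
    label: str
    estimate: float
    std_error: float


def summarize_estimates(studies):
    """Return the reported range and a fixed-effect (inverse-variance) pooled estimate."""
    if not studies:
        raise ValueError("need at least one study")
    values = [s.estimate for s in studies]
    # More precise studies (smaller standard errors) receive more weight.
    weights = [1.0 / s.std_error ** 2 for s in studies]
    pooled = sum(w * v for w, v in zip(weights, values)) / sum(weights)
    pooled_se = math.sqrt(1.0 / sum(weights))
    return {
        "low": min(values),
        "high": max(values),
        "pooled": pooled,
        "ci95": (pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se),
    }


# Hypothetical crowd-out estimates (share of new public enrollees who would
# otherwise have held private coverage); illustrative only, not from the literature.
studies = [
    StudyEstimate("study A", 0.20, 0.05),
    StudyEstimate("study B", 0.40, 0.10),
    StudyEstimate("study C", 0.60, 0.20),
]
summary = summarize_estimates(studies)
print(summary)
```

Reporting the full range alongside the pooled value keeps disagreement across studies visible to policy makers. Where estimates differ for substantive reasons (e.g., different populations or identification strategies), a random-effects model or an explicit analysis of those differences would be more appropriate than a single fixed-effect average.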
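The third recommendation mentions introducing statistical noise so that linked files can be released for public use. One simple form of this, multiplicative noise applied to a continuous variable, is sketched below in Python. It is an illustrative assumption rather than the procedure any RDC or statistical agency actually uses; the function name, the 10 percent distortion bound, and the premium values are all hypothetical.

```python
import random


def add_multiplicative_noise(values, max_distortion=0.10, seed=None):
    """Hypothetical helper: perturb each value by a random factor in [1 - d, 1 + d].

    Because the factors average 1, totals are preserved in expectation, while
    individual records no longer match the confidential values exactly.
    """
    if not 0 < max_distortion < 1:
        raise ValueError("max_distortion must be between 0 and 1")
    rng = random.Random(seed)  # seeded generator so a release can be reproduced
    return [v * rng.uniform(1 - max_distortion, 1 + max_distortion) for v in values]


# Hypothetical confidential firm-level premiums (dollars per enrollee per year).
confidential = [4200.0, 5100.0, 3900.0, 6100.0]
public_use = add_multiplicative_noise(confidential, max_distortion=0.10, seed=42)
```

Multiplicative rather than additive noise scales the perturbation to each value's magnitude, so small and large values are distorted by a similar percentage. An actual disclosure-avoidance procedure would also need to evaluate reidentification risk and confirm that key analytic results are preserved in the perturbed file.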

Once a model is developed and estimates are generated, modelers need to clearly communicate the capabilities, limitations, and degree of uncertainty inherent in the model so that stakeholders have realistic expectations about what it can and cannot tell them. Moreover, model results must be communicated in a way that informs, rather than confuses, the policy process. To facilitate this improved communication, we recommend the following action:

Establish a forum for routine communication between modelers and policy makers. While there are clearly financial, organizational, and legislative barriers to improving our capacity to model health policy alternatives, progress could be accelerated through ongoing communication among modelers, stakeholders, and policy makers.

CONCLUSION

Models can be useful even when there are significant data limitations. Weinstein et al. (2003) argue that decisions made with a model parameterized with limited data are likely better than decisions made with limited data and no model. The passage of health care reform legislation underscores the importance of improving our ability to evaluate policy changes. Modeling can provide the information needed to guide implementation efforts and to identify additional reforms that would be effective in containing health care costs.

Thus, investing in the tools necessary to provide policy makers with useful information for decision making should be a high priority.

Acknowledgments

Joint Acknowledgment/Disclosure Statement: This paper was commissioned by AcademyHealth with support from The Commonwealth Fund and The Robert Wood Johnson Foundation.

Disclosures: None.

Disclaimers: None.

NOTE

See http://psidonline.isr.umich.edu/Guide/PSIDHRS/ (accessed April 21, 2010).

Supporting Information

Additional supporting information may be found in the online version of this article:

Appendix SA1: Author Matrix.


REFERENCES

- Agency for Healthcare Research and Quality (AHRQ) 2000. “MEPS LINK_HC/IC: Household Component-Insurance Component Linked Data for 1996” [accessed on April 21, 2010]. Available at http://www.meps.ahrq.gov/mepsweb/data_stats/download_data_files_detail.jsp?cboPufNumber=LINK_96HC/IC.

- Agency for Healthcare Research and Quality (AHRQ) 2009. “MEPS Data and Analytic Capabilities for Supporting Health Policy Research” [accessed on April 21, 2010]. Available at http://www.ncvhs.hhs.gov/090227p5.pdf.

- Baicker K, Chandra A. The Labor Market Effects of Rising Health Insurance Premiums. Journal of Labor Economics. 2006;24(3):609–34.

- Blumberg L, Shen Y, Nichols L, Buettgens M, Dubay L, McMorrow S. 2003. “The Health Insurance Reform Simulation Model (HIRSM) Methodological Detail and Prototypical Simulation Results,” The Urban Institute [accessed on April 21, 2010]. Available at http://www.urban.org/url.cfm?ID=410867.

- Congressional Budget Office (CBO) 2007. “CBO's Health Insurance Simulation Model: A Technical Description” [accessed on April 20, 2010]. Available at http://www.cbo.gov/ftpdocs/87xx/doc8712/10-31-HealthInsurModel.pdf.

- Cutler D, Zeckhauser R. The Anatomy of Health Insurance. Handbook of Health Economics. 2000;1:563–643.

- D'Orazio M, Di Zio M, Scanu M. Statistical Matching: Theory and Practice (Wiley Series in Survey Methodology). New York: Wiley; 2006.

- Epstein J. Generative Social Science: Studies in Agent-Based Computational Modeling. Princeton, NJ: Princeton University Press; 2006.

- Giannarelli L. 1992. “The Transfer Income Model, Version 2,” The Urban Institute [accessed on April 21, 2010]. Available at http://www.urban.org/publications/204603.html.

- Gilbert N. Agent-Based Models. London: Sage Publications; 2007.

- Girosi F, Cordova A, Eibner C, Gresenz CR, Keeler E, Ringel JS, Sullivan J, Bertko J, Buntin MB, Vardavas R. 2009. “Overview of the COMPARE Microsimulation Model,” RAND Working Paper WR 650 [accessed on April 21, 2010]. Available at http://www.randcompare.org/sites/default/files/docs/pdfs/COMPARE_Model_Overview_0.pdf.

- Girosi F, Meili R, Scoville R. 2005. “Extrapolating Evidence of Health Information Technology Savings and Costs,” RAND MG-410-HLTH [accessed on April 21, 2010]. Available at http://www.rand.org/pubs/monographs/MG410/.

- Glied S, Remler D, Graff Zivin J. Inside the Sausage Factory: Improving Estimates of the Effects of Health Insurance Expansion Proposals. Milbank Quarterly. 2002;80(4):603–35. doi: 10.1111/1468-0009.00026.

- Glied S, Tilipman N, Carrasquillo O. 2009. “Analysis of Five Health Insurance Options for New York State,” NYS Health Foundation [accessed on April 21, 2010]. Available at http://www.nyshealthfoundation.org/userfiles/file/Microsoft%20Word%20-%20Analysis%20of%20Five%20Health%20Insurance%20Expansion%20Options%20for%20NYS_FINAL.pdf.

- Gruber J. Microsimulation Estimates of the Effects of Tax Subsidies for Health Insurance. National Tax Journal. 2000a;53(3, part 1):329–42.

- Gruber J. 2000b. “Tax Subsidies for Health Insurance: Evaluating the Costs and Benefits,” NBER Working Paper w7553 [accessed on April 21, 2010]. Available at http://www.nber.org/papers/w7553.

- Gruber J. 2008. “Covering the Uninsured in the US,” NBER Working Paper w13758 [accessed on April 21, 2010]. Available at http://www.nber.org/papers/w13758.

- Gruber J, Simon KI. Crowd-Out 10 Years Later: Have Recent Public Insurance Expansions Crowded Out Private Health Insurance? Journal of Health Economics. 2008;27:201–17. doi: 10.1016/j.jhealeco.2007.11.004.

- Holahan J, Weil A. Toward Real Medicaid Reform. Health Affairs. 2007;26(2):w254–70. doi: 10.1377/hlthaff.26.2.w254 [accessed on April 21, 2010]. Available at http://content.healthaffairs.org/cgi/reprint/hlthaff.26.2.w254v1.

- Kaiser Family Foundation (KFF) 2009. “Employer Health Benefits 2009 Annual Survey, Survey Design and Methods” [accessed on April 21, 2010]. Available at http://ehbs.kff.org/pdf/2009/7936.pdf.

- Knowledge Networks (KN) 2008. “Knowledge Networks Methodology” [accessed on April 21, 2010]. Available at http://www.knowledgenetworks.com/ganp/docs/Knowledge%20Networks%20Methodology.pdf.

- The Lewin Group. 2009. “The Health Benefits Simulation Model (HBSM): Methodology and Assumptions” [accessed on April 21, 2010]. Available at http://www.lewin.com/content/publications/HBSMDocumentationMar09.pdf.

- Lochner K, Hummer RA, Bartee S, Wheatcroft G, Cox C. The Public Use National Health Interview Survey Linked Mortality Files: Methods of Reidentification Risk Avoidance and Comparative Analysis. American Journal of Epidemiology. 2008;168(3):336–44. doi: 10.1093/aje/kwn123.

- Meara E, Rosenthal M, Sinaiko A, Baicker K. 2008. “State and Federal Approaches to Health Reform: What Works for the Working Poor?” NBER Working Paper 14125 [accessed on April 21, 2010]. Available at http://www.nber.org/papers/w14125.

- Miller JS, Page S. Complex Adaptive Systems: An Introduction to Computational Models of Social Life. Princeton, NJ: Princeton University Press; 2007.

- Mitton L, Sutherland H, Weeks M. Microsimulation Modelling for Policy Analysis: Challenges and Innovations. Cambridge, UK: Cambridge University Press; 2000.

- RAND Corporation. 2005. “American Life Panel Brochure,” CP-508 (November 2005), RAND: Santa Monica [accessed on April 21, 2010]. Available at http://www.rand.org/pubs/corporate_pubs/CP508-2005-11/.

- Selden T, Moeller J. Estimates of the Tax Subsidy for Employment-Related Health Insurance. National Tax Journal. 2000;53(4, part 1):877–87.

- Sheils J, Haught R. 2003. “Cost and Coverage Analysis of Ten Proposals to Expand Health Insurance Coverage—Appendix A: The Health Benefits Simulation Model (HBSM): Uniform Methodology and Assumptions, in Covering America: Real Remedies for the Uninsured,” The Lewin Group [accessed on April 21, 2010]. Available at http://www.rwjf.org/files/research/costCoverageMethodology.pdf.

- Shone LP, Lantz PM, Dick AW, Chernew ME, Szilagyi PG. Crowd-Out in the State Children's Health Insurance Program: Incidence, Enrollee Characteristics, and Experiences, and Potential Impact on New York's SCHIP. Health Services Research. 2007;43(1, part II):419–34. doi: 10.1111/j.1475-6773.2007.00819.x.

- Siegel JE, Weinstein MC, Russell LB, Gold MR. Recommendations for Reporting Cost-Effectiveness Analyses. Journal of the American Medical Association. 1996;276(16):1339–41. doi: 10.1001/jama.276.16.1339.

- Thorpe KE. A Call for Health Services Researchers. Health Affairs. 1995;14(1):63–6. doi: 10.1377/hlthaff.14.1.63.

- Varian H. Microeconomic Analysis. New York: W. W. Norton and Company; 1993.

- Weinstein MC, O'Brien B, Hornberger J, Jackson J, Johannesson M, McCabe C, Luce BR. Principles of Good Practice for Decision Analytic Modeling in Health-Care Evaluation: Report of the ISPOR Task Force on Good Research Practices—Modeling Studies. Value in Health. 2003;6(1):9–17. doi: 10.1046/j.1524-4733.2003.00234.x.
