SYNOPSIS

Objective

In 2007, the Centers for Disease Control and Prevention (CDC) commissioned an Evidence-Based Gaps Collaboration Group to consider whether past experience could help guide future efforts to educate and train public health workers in responding to emergencies and disasters.

Methods

The Group searched the peer-reviewed literature for preparedness training articles meeting three criteria: publication during the period when CDC's Centers for Public Health Preparedness were fully operational, content relevant to emergency response operations, and content particular to the emergency response roles of public health professionals. Articles underwent both quantitative and qualitative analyses.

Results

The search identified 163 articles covering the topics of leadership and command structure (18.4%), information and communications (14.1%), organizational systems (78.5%), and others (23.9%); because many articles addressed more than one topic, these percentages sum to more than 100%. The number of reports was substantial, but their usefulness for trainers and educators was rated only “fair” to “good.” Thematic analysis of 137 articles found that organizational topics far outnumbered leadership, command structure, and communications topics. Disconnects among critical participants, including trainers, policy makers, and public health agencies, were noted. Generalizable evaluations were rare.

Conclusions

Reviews of progress in preparedness training for the public health workforce should be repeated in the future. Governmental investment in training for preparedness should continue. Future training programs should be grounded in policy and practice needs, and evaluations should be based on performance improvement.

Beginning in 2000, the Centers for Disease Control and Prevention (CDC) supported Centers for Public Health Preparedness (CPHPs) through annual investments in the training and education of public health professionals for responding to emergencies and disasters with public health consequences. The CPHP network reached full capacity in 2002 and 2003. Since then, policy makers and legislators have asked whether this funding was producing the intended outcome.

In response, CDC commissioned the Evidence-Based Gaps Collaboration Group (EBGC Group) in January 2007 to consider whether past experience could help guide future efforts to educate and train public health workers in responding to emergencies and disasters. The EBGC Group conducted a review of training reports published in the peer-reviewed literature during the period of time when CPHPs were fully organized and operational. All Group members were associated with a CPHP based at a school of public health. The membership included 10 faculty members and professionals with expertise in preparedness education and training. The Group members authored a detailed report to CDC1 as well as this article.

BACKGROUND

Previously published reports and a decade of public health training experience shared by many of the Group members served as background for this literature review. These resources defined the scope of preparedness training, characterized the public health workforce, helped to specify relevant training topics, and defined the components of training programs.

Scope of preparedness training

CDC has emphasized the central importance of emergency response operations as a strategic imperative for the nation's health.2 Response is one phase of the four-phase cycle of emergency management, distinct from the other three phases (pre-event mitigation, pre-event preparedness, and post-event recovery). According to the Federal Emergency Management Agency, “Response begins as soon as a disaster is detected or threatens. It involves mobilizing and positioning emergency equipment; getting people out of danger; providing needed food, water, shelter, and medical services; and bringing damaged services and systems back on line.”3 The EBGC Group decided that training for the operational response to emergencies and disasters should be the focus of its work. In addition, in a comprehensive synopsis of the national and global health protection needs to be addressed through preparedness research and education, CDC had emphasized the need “to prioritize preparedness and response activities at the community level.”4 For the EBGC Group's work, this suggested a primary, though not exclusive, focus on training relevant to local public health operations.

Public health workforce

CDC's research synopsis also emphasized the need “to promote and evaluate integrated systems of care and risk management, incident management, and communication among health and safety authorities and residents.”4 The EBGC Group embraced the concept of the public health workforce as multidisciplinary and multiprofessional, and it specifically recognized emergency response roles of administrators, field epidemiologists, health educators, laboratorians, lawyers, nurses, physicians, sanitarians, and others.

Training topics

CDC's concept of effective public health performance, published in 2002, was based on three elements: workforce competencies, communication systems, and organizational capacities.5 Emergency response operations require specific focus within each of these elements. Although workforce competencies are many, emergencies require specialized leadership skills and specialized individual roles within the command structure. Emergencies call for timely sharing of information among responders and for communication of risk to the populations served. Finally, intra-agency and inter-agency organizational structures take on additional, and potentially different, functions in emergencies. Therefore, the EBGC Group classified the content of training programs within these three broad categories.

Training programs

Training programs develop through a cycle of processes, which were adapted for the public health workforce during the preceding decade.6 The cycle begins with needs assessments based on trainees' performance of their assigned roles and responsibilities; these assessments are the foundation for curriculum development. Curricula are then adapted for delivery in various media and formats, including combinations of instructor-mediated, self-instructional, in-person, and/or distance-learning technologies. The final phase of the cycle is evaluation of training results to improve programs, which in turn provides the grounds for periodic reassessment of training needs and priorities. The EBGC Group used these phases as a framework for classifying and distinguishing the purposes and methods presented in published training reports.

METHODS

The EBGC Group used a purposive sampling strategy, as outlined by Patton,7 to identify a set of published articles and analyzed them using both quantitative and qualitative-thematic methods. This sampling frame is recommended for information-rich and critical-incident situations in which clarification, rather than empirical generalization, is needed. This type of sampling is particularly useful in studies of emerging phenomena, as preparedness training was at the time of this work. CDC imposed a nine-month time limit for completion of the work; that constraint, together with the agency's need to evaluate training effectiveness during the CPHPs' funding period, led the Group to focus on literature published between 2003 and 2007.

Journals

The professional journals included in the literature search were published in both print and electronic formats and were addressed both to the general public health audience and to specific public health professions (administrators, field epidemiologists, health educators, laboratorians, lawyers, nurses, physicians, and sanitarians). Given the strictly public health focus of this project and its time constraints, the Group excluded journals with a clinical or diagnostic focus. The Group agreed upon a list of journals before undertaking the search and added journals for the specified professions during the search. A list of the 79 journals reviewed (not all of which yielded articles for subsequent abstracting and analysis) can be found in the Group's report to CDC.1

Articles

Three criteria governed the selection of preparedness training articles for review: publication date between 2003 (when all CPHP grantees were funded) and the Group's formation in 2007, content relevance to response operations, and content relevance to the specific roles of public health professionals. Full citations of each of the 163 articles thus selected are included in the Group's report to CDC.1
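The three selection criteria can be expressed as a simple conjunctive filter. The following is a minimal sketch, assuming a hypothetical `Candidate` record whose `response_relevant` and `role_relevant` fields stand in for relevance judgments that Group members made by reading each article (in the review itself these were expert judgments, not computed values). Treating both 2003 and 2007 as inclusive end years is also an assumption.

```python
from dataclasses import dataclass


# Hypothetical record for a candidate article. The two boolean fields stand in
# for relevance judgments that reviewers made by reading each article.
@dataclass
class Candidate:
    title: str
    year: int
    response_relevant: bool  # relevant to emergency response operations
    role_relevant: bool      # relevant to public health professionals' response roles


# Publication window from the text; treating both end years as inclusive is an assumption.
FIRST_YEAR, LAST_YEAR = 2003, 2007


def meets_criteria(article: Candidate) -> bool:
    """Return True only if the article satisfies all three selection criteria."""
    in_window = FIRST_YEAR <= article.year <= LAST_YEAR
    return in_window and article.response_relevant and article.role_relevant


def select(candidates: list[Candidate]) -> list[Candidate]:
    """Keep the candidates that meet every criterion, preserving input order."""
    return [a for a in candidates if meets_criteria(a)]


if __name__ == "__main__":
    # Invented examples, not articles from the review.
    pool = [
        Candidate("Tabletop exercise for local health department staff", 2005, True, True),
        Candidate("Clinical diagnosis of inhalational anthrax", 2004, True, False),
        Candidate("Preparedness training needs survey", 2001, True, True),
    ]
    for article in select(pool):
        print(article.title)
```

Because the criteria are joined by "and," failing any one of them (for example, a clinical focus without a public health response role) excludes the article.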

Abstracts

EBGC Group members served as abstractors and as a panel of experts to review the selected articles. They developed a template that served as a qualitative code book to specify details and define terms for the abstracts. The abstracts included both selected-response and text-response fields to:

Identify each article by its publication citation.

Classify each article by (a) training topic (leadership-command, information management, organization-system, or other), (b) intended audience or those to whom findings are addressed, and (c) intended beneficiaries or those expected to gain from the training whether as trainee or trainer.

Summarize in the authors' own text each article's descriptions of methodology, findings and lessons learned, limitations, and conclusions and/or recommendations.

Assess the article's implications for the CPHPs in abstractors' comments.

Rate each article on a scale of 1 (lowest) to 5 (highest). The scale served as a composite indicator for assessment of the article's usefulness in workforce development, novelty, reproducibility, evaluative rigor, and systematic use of data. These defined criteria served as the reviewers' “utility tests”7 to assess each article's relevance.

Group members posted the abstract template on a website, entered relevant information for each article, and downloaded all entries to a Microsoft® Excel database and spreadsheets.

Descriptive statistics

Statistical analysis for the selected articles summarized their topical coverage and, within each topic, the audiences addressed, the professions intended for training, and the utility ratings.
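Because an article could carry several topic codes, per-topic counts and mean ratings are computed by counting each article once under every topic it addresses, so topic shares can exceed 100% in total. The following is a minimal sketch using invented toy records (not data from the review) and a hypothetical `summarize` helper; the Group itself worked with Excel spreadsheets, and the topic labels reuse the classification terms from the abstract template.

```python
from collections import defaultdict
from statistics import mean

# Invented abstracted records: each article may carry several topic codes
# (classifications were not exclusive) and one utility rating on a 1-5 scale.
articles = [
    {"topics": {"organization-system"}, "rating": 3},
    {"topics": {"organization-system", "information management"}, "rating": 4},
    {"topics": {"leadership-command", "organization-system"}, "rating": 2},
    {"topics": {"other"}, "rating": 2},
]


def summarize(records):
    """Return {topic: (count, percent_of_articles, mean_rating)}."""
    ratings_by_topic = defaultdict(list)
    for rec in records:
        if not 1 <= rec["rating"] <= 5:
            raise ValueError(f"rating out of range: {rec['rating']}")
        for topic in rec["topics"]:
            # An article counts once under every topic it addresses.
            ratings_by_topic[topic].append(rec["rating"])
    total = len(records)
    return {
        topic: (len(r), 100 * len(r) / total, mean(r))
        for topic, r in ratings_by_topic.items()
    }


for topic, (n, pct, avg) in sorted(summarize(articles).items()):
    print(f"{topic:22s} n={n}  {pct:5.1f}%  mean rating={avg:.1f}")
```

The same grouping applies unchanged to audiences and beneficiaries, which were also non-exclusive classifications.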

Thematic analysis

Glaser and Strauss set forth the use of qualitative methodology to review the literature in 1967, observing that such methods could be as rigorous and useful as fieldwork.8 For the qualitative analysis, the Group reordered narrative data from the abstracts into clusters, called themes, that would suggest new insights or problem-solving strategies. Themes are units of qualitative review that can include key terms, larger blocks of text, analytic procedures, and other forms of comparison, including citations or missing information.9 In the scientific literature, authors posit their ideas, interpretations, and recommendations, and they describe events in ways that are analogous to the data collected during fieldwork.

Using the established template as a code book, EBGC Group members working in pairs downloaded the completed abstracts. Each pair was assigned to review one of the five content areas of the articles (methodologies, findings/lessons, limitations, the authors' conclusions/recommendations, and implications for CPHP education) as summarized in the abstracts. Within each team, the two members worked first individually and then together to identify themes occurring commonly throughout the entire set of abstracts. During this process, Group members occasionally queried each other to clarify the meaning of text fields in the abstracts.

RESULTS

The quantitative and thematic analyses provided insights about the status of research on the effectiveness of preparedness training for public health professionals and suggested where future training programs would benefit from further attention and additional resources.

Quantity and ratings of the training literature

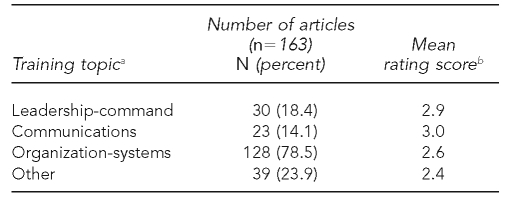

Table 1 shows the number of articles by topic and their mean rating scores. The mean rating scores varied little by topic: articles on communications had the highest mean utility score (3.0), and articles classified as “other” had the lowest (2.4).

Table 1.

Number of articles and mean rating score by topic, Evidence-Based Gaps Collaboration Group review of literature on training preparedness published from 2003 to 2007

(a) Topic classifications are not exclusive, as many articles addressed more than one topic.

(b) The score, a composite indicator of utility, was on a scale of 1 (lowest) to 5 (highest). For a complete description of criteria used to determine this score, see the full Evidence-Based Gaps Collaboration Group Report (Association of Schools of Public Health, Centers for Disease Control and Prevention [US]; Centers for Public Health Preparedness Network. 2006–2007 ASPH/CDC Evidence-Based Gaps Collaboration Group. October 2007 [cited 2009 Oct 9]. Available from: URL: http://preparedness.asph.org/cphp/documents/Evidence-Based%20Gaps%20CG-FINAL.pdf).
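The topic percentages reported in the Synopsis add up to 134.9%, which is expected when classifications are not exclusive. As an arithmetic check, and assuming each percentage was computed over all 163 selected articles and rounded to one decimal place, the implied whole-article counts can be back-calculated. This is an illustrative sketch: the counts it produces are inferred from the rounded percentages, not copied from Table 1, and `implied_count` is a hypothetical helper.

```python
# Topic shares reported in the Synopsis, as percentages of the 163 selected articles.
TOTAL_ARTICLES = 163
REPORTED_PCT = {
    "leadership and command structure": 18.4,
    "information and communications": 14.1,
    "organizational systems": 78.5,
    "other": 23.9,
}


def implied_count(pct: float, total: int = TOTAL_ARTICLES) -> int:
    """Whole-article count nearest to pct% of total, checked against the reported rounding."""
    n = round(pct / 100 * total)
    if round(100 * n / total, 1) != pct:
        raise ValueError(f"no whole count of {total} reproduces {pct}%")
    return n


counts = {topic: implied_count(p) for topic, p in REPORTED_PCT.items()}
for topic, n in counts.items():
    print(f"{topic}: {n} of {TOTAL_ARTICLES}")
print(f"shares sum to {sum(REPORTED_PCT.values()):.1f}%")
print(f"topic assignments: {sum(counts.values())} across {TOTAL_ARTICLES} articles")
```

Under those assumptions each percentage maps to exactly one count (30, 23, 128, and 39), giving 220 topic assignments across 163 articles, consistent with many articles addressing more than one topic.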

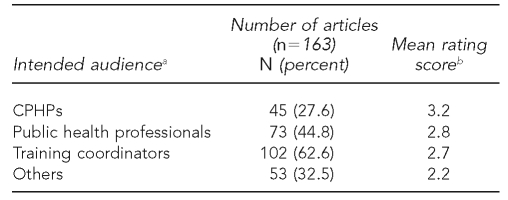

Table 2 shows the distribution of articles according to their intended audiences. The most prevalent audience was training coordinators (n=102). The least prevalent audience was the CPHP network (n=45); although relatively few, articles addressed to this audience had the highest mean rating score (3.2). Articles deemed relevant to “other” audiences had the lowest mean rating score (2.2). The mean rating score for articles relevant to public health professionals (2.8) was similar to that for articles relevant to training coordinators (2.7). On the five-point utility scale, overall ratings ranged only from fair to good.

Table 2.

Mean rating scores by intended audience, Evidence-Based Gaps Collaboration Group review of literature on training preparedness published from 2003 to 2007

(a) Intended audience classifications are not exclusive, as many articles addressed more than one audience.

(b) The score, a composite indicator of utility, was on a scale of 1 (lowest) to 5 (highest). For a complete description of criteria used to determine this score, see the full Evidence-Based Gaps Collaboration Group Report (Association of Schools of Public Health, Centers for Disease Control and Prevention [US]; Centers for Public Health Preparedness Network. 2006–2007 ASPH/CDC Evidence-Based Gaps Collaboration Group. October 2007 [cited 2009 Oct 9]. Available from: URL: http://preparedness.asph.org/cphp/documents/Evidence-Based%20Gaps%20CG-FINAL.pdf).

CPHP = Center for Public Health Preparedness

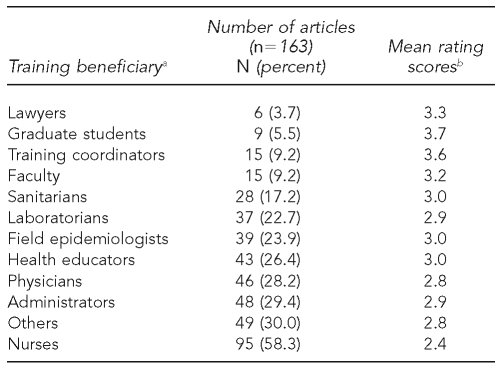

Table 3 shows that articles describing training for nurses were the most prevalent (n=95), while those describing training for lawyers were the least prevalent (n=6). The high number of nursing articles reflects both the large proportion of nursing journals captured in the literature review and the frequency with which these journals published articles on relevant topics. Although numerous, articles on training for nurses had the lowest mean rating score (2.4). Articles describing training activities for graduate students had the highest mean rating score (3.7). Articles for which the intended beneficiaries were training coordinators also scored high in utility. Most reported trainings were targeted to a single professional group or agency.

Table 3.

Mean rating scores by intended beneficiary, Evidence-Based Gaps Collaboration Group review of literature on training preparedness published from 2003 to 2007

(a) Training beneficiary classifications are not exclusive, as many articles addressed more than one beneficiary group.

(b) The score, a composite indicator of utility, was on a scale of 1 (lowest) to 5 (highest). For a complete description of criteria used to determine this score, see the full Evidence-Based Gaps Collaboration Group Report (Association of Schools of Public Health, Centers for Disease Control and Prevention [US]; Centers for Public Health Preparedness Network. 2006–2007 ASPH/CDC Evidence-Based Gaps Collaboration Group. October 2007 [cited 2009 Oct 9]. Available from: URL: http://preparedness.asph.org/cphp/documents/Evidence-Based%20Gaps%20CG-FINAL.pdf).

Recurring themes in the abstracts of training articles

Group members prepared an abstract for each of 137 articles and identified themes in the abstracts' language. Recurring themes were those found frequently throughout the abstracts classified by each phase of the training process (needs assessment, curriculum development, delivery modes and media, and evaluation) and throughout an additional set of articles concerning the government's role in preparedness training. The themes summarized Group members' comments and opinions about the content and quality of the articles reviewed within each set.

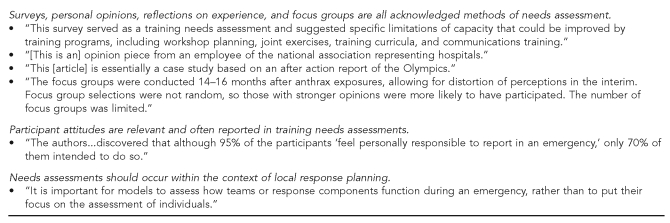

Figures 1 through 5 list the abstract themes in each of the four training phases (assessment, curriculum development, delivery, and evaluation) and the governmental role in training programs. Quotations illustrating the themes are taken from the Group members' abstracts.

Figure 1.

Needs assessment themes, Evidence-Based Gaps Collaboration Group review of literature on training preparedness published from 2003 to 2007

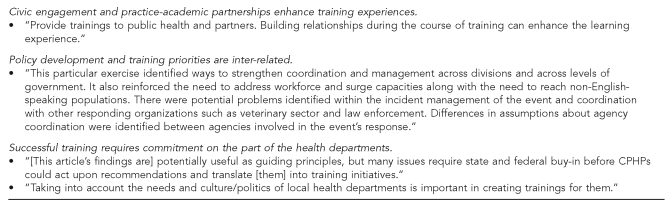

Figure 5.

Governmental roles, Evidence-Based Gaps Collaboration Group review of literature on training preparedness published from 2003 to 2007

CPHP = Center for Public Health Preparedness

Assessment.

Articles in this set described assessment techniques and topics, including audiences, subject areas, proficiency levels, and incentives for training. Illustrative quotations from these abstracts in Figure 1 express Group members' observations about sources and methods of needs assessment, recognition of participants' attitudes as well as their skills and expertise, and the tendency of assessments to focus on work teams rather than exclusively on individuals.

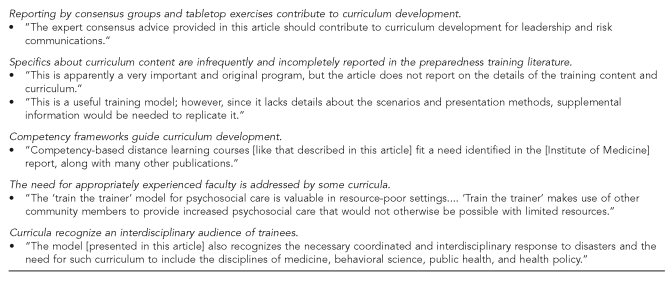

Curriculum development.

Articles in this set described curriculum design and development both as the essence of the exchange of information between instructor and student and as the content of the information shared. Figure 2 presents themes and illustrative quotations from Group members' abstracts. Reports of tabletop exercises often lacked any recommendations for training content. Also absent from most articles in this set were detailed descriptions of curricula, the use of competency frameworks, the importance of appropriately experienced faculty, and the interdisciplinary composition of trainee audiences.

Figure 2.

Curriculum development themes, Evidence-Based Gaps Collaboration Group review of literature on training preparedness published from 2003 to 2007

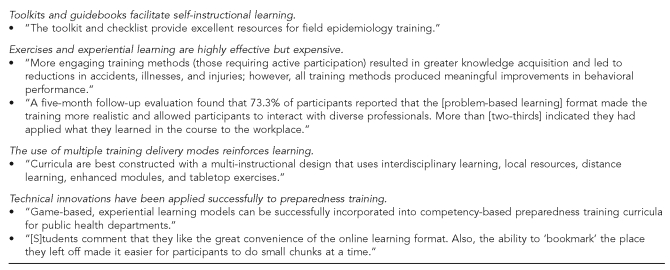

Delivery modes.

Effective delivery of training curricula depends on such factors as suitability of media to content material, learners' preferences, and access to and quality of technology. Figure 3 summarizes recurring themes in the abstracts about various media and methods, including self-instructional materials, experiential learning and its cost, the combination of multiple delivery modes, and technical innovations.

Figure 3.

Program delivery themes, Evidence-Based Gaps Collaboration Group review of literature on training preparedness published from 2003 to 2007

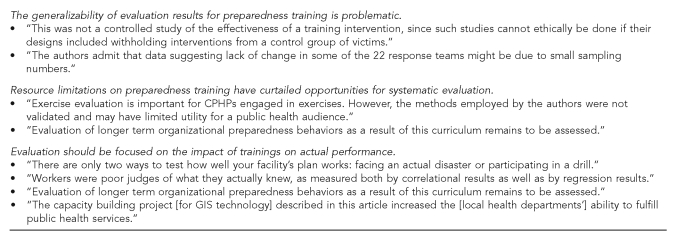

Evaluation.

Evaluation should be grounded in the outcome of a training event and should inform future needs assessments. Recurring themes for this set of abstracts, shown in Figure 4, included poor generalizability of evaluation results, the lack of funding for field exercises, and the need to measure performance outcomes in addition to training processes.

Figure 4.

Evaluation themes, Evidence-Based Gaps Collaboration Group review of literature on training preparedness published from 2003 to 2007

CPHP = Center for Public Health Preparedness

GIS = geographic information system

Governmental roles.

Governmental agencies exercise responsibility for training programs by defining their goals and supporting them financially; these roles differ from those of trainers and educators. Recurring themes in the abstracts of this set of articles, shown in Figure 5, included civic engagement as inherent in training experiences, the need to integrate policy-making and training priorities, and the need for commitment to training by public health agencies.

DISCUSSION

The method of this literature review combined both quantitative and qualitative analyses and relied heavily on the collective expertise of the EBGC Group members in assessing the existence of gaps in the effectiveness of preparedness training for the public health workforce. The Group found some strengths, but also some important gaps.

The number of articles on preparedness training published in the peer-reviewed literature during a relatively brief period of years was substantial. This is particularly notable because, unlike funding for researchers, funding for training providers does not typically include support for writing for publication. Reports addressed the needs of trainees in a wide range of professions involved in public health emergencies. Needs assessments used a broad range of methods. Competency frameworks guided curriculum development. Trainees' preferences and training content dictated a wide variety of delivery methods.

Nevertheless, this literature review exposed notable gaps. If the peer-reviewed literature is meant to serve as a repository for best practices and examples, then its usefulness for preparedness training is limited. Most articles addressed training coordinators as their audience, but their utility for this audience was only fair to good. Even though the public health response to emergencies is multidisciplinary, training reports tended to isolate single professional groups and agencies. Training reports for nurses were twice as numerous as those for any other profession; though this may be an artifact of the high number of nursing journals included, it nevertheless points to a possible deficit of attention to training in other professions' journals.

The topics of training may be poorly distributed among workforce competencies, communications procedures, and organizational capacities. For the most part, articles said little about the content of training. However, to the extent that this review could identify topics addressed, training reports about organizational systems far outnumbered those on leadership, command structure, and communications. Further study is necessary to determine if there is a systematic deficit of training content in those areas.

There are apparent disconnects among critical participants in the development of training programs. Those who study preparedness policy did not report the implications of their analyses for workforce development. The influence of public health agencies appeared weak with regard to training content. Trainers and educators tended to focus needs assessments on individual learners and to neglect the organizational context of emergency performance; it remains unclear how this assessment approach, which deemphasized the organization, aligns with the topics of training, which were often about organizational systems.

Generalizable evaluations of training effectiveness were rare. This may be partly because funding for training programs gives priority to curriculum designers and educators over evaluators and researchers. There were no case-control studies among the reports reviewed. The gold standard for evaluating the impact of training on actual performance is demonstration of competence in a field exercise or an actual event, but such evaluations were also absent from the reports reviewed.

Limitations

Limitations arise from the methods and constraints of this literature review. First, the inclusion period for publication was brief, the inclusion of journals was selective rather than comprehensive, gray literature was excluded, and some articles were identified too late for inclusion in the thematic analysis. Nevertheless, the inclusion criteria and analytic approaches probably yielded a reliably broad selection of articles from a total of 79 peer-reviewed journals. For the purpose of this review, which was to provide clarification and insight rather than rigorous generalization, the use of only peer-reviewed literature established a quality standard for the included articles.

Second, the study method did not include a test for inter-rater reliability among the Group members as abstractors. The method was a hybrid of standard qualitative analysis and expert opinion process among Group members. Rather than striving for uniformity in abstracting and rating articles, the Group's approach emphasized consensus-building: all members collaborated in selecting journals and defining the inclusion criteria; Group members compared the results of “practice” abstracting of a single article to resolve differences of interpretation and to align their rating standards.
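The Group did not measure inter-rater reliability. A future repetition of the review could do so; one common statistic for two raters coding the same items is Cohen's kappa, which corrects observed agreement for the agreement expected by chance from each rater's category frequencies. The sketch below illustrates that statistic with hypothetical ratings; it is not part of the Group's method, and `cohens_kappa` is a hypothetical helper rather than a library function.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters assigning categorical codes to the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two equal-length, non-empty rating lists")
    n = len(rater_a)
    # Proportion of items on which the two raters gave the same code.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected by chance, from each rater's marginal category frequencies.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / (n * n)
    if expected == 1:
        return 1.0  # degenerate case: both raters used one identical category throughout
    return (observed - expected) / (1 - expected)


# Hypothetical 1-5 utility ratings from two abstractors for the same six articles.
a = [3, 2, 4, 3, 1, 5]
b = [3, 2, 3, 3, 1, 4]
print(round(cohens_kappa(a, b), 2))
```

Because the 1-5 utility ratings are ordinal, a weighted kappa, which penalizes a 3-versus-4 disagreement less than a 1-versus-5 disagreement, may be more appropriate; this unweighted version treats every disagreement equally.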

CONCLUSIONS

This literature review offers insight about the progress of preparedness training for public health and highlights some priorities for the future. Such a review was needed at the time because public health preparedness training was a relatively new field. Currently, the results presented in this article can inform government policy makers as they reevaluate the costs, benefits, and methods of preparedness training. In the future, reiterations of this review can build and maintain an evidence base for training the public health workforce in preparedness. Future literature reviews should take note of training content and assess whether important preparedness topics are sufficiently covered.

The gaps identified in this review suggest ways to shape and fund future training programs. Public health preparedness clearly requires continued investment by government agencies in the training and education of the public health workforce. Governments should link their ongoing policy-making and planning processes to the evaluation of training programs, especially through field exercises, so that training outcomes are measured by performance improvement.

Footnotes

This article reported on a project conducted by the authors as the Evidence-Based Gaps Collaboration Group and funded by the Centers for Disease Control and Prevention (CDC) under cooperative agreements with the Centers for Public Health Preparedness. Contributing staff support to the Group was Leah Trahan of the Association of Schools of Public Health. Providing technical advice during the course of the project were Barbara Ellis and Gregg Leeman of CDC. Also contributing was Erin Rothney, then a graduate student at the University of Michigan School of Public Health and currently with CDC. Peer reviews by members of the Collaboration Group Steering Committee—representing a national network of Centers for Public Health Preparedness—provided valuable feedback and advice on earlier drafts. The authors acknowledge the contributions of each of these individuals.

This article is the sole responsibility of the authors and does not represent the official views of CDC.

REFERENCES

- 1. Association of Schools of Public Health, Centers for Disease Control and Prevention (US); Centers for Public Health Preparedness Network. 2006–2007 ASPH/CDC Evidence-Based Gaps Collaboration Group. 2007 Oct [cited 2009 Oct 9]. Available from: URL: http://preparedness.asph.org/cphp/documents/Evidence-Based%20Gaps%20CG-FINAL.pdf

- 2. Centers for Disease Control and Prevention (US). Budget request summary fiscal year 2007. 2006 Feb [cited 2009 Oct 18]. Available from: URL: http://www.cdc.gov/fmo/topic/Budget%20Information/appropriations_budget_form_pdf/FY07budgetreqsummary.pdf

- 3. Federal Emergency Management Agency (US). What we do [cited 2010 Apr 25]. Available from: URL: http://www.fema.gov/about/what.shtm

- 4. Centers for Disease Control and Prevention (US). Advancing the nation's health: a guide to public health research needs, 2006–2015. 2006 Dec [cited 2010 Jun 25]. Available from: URL: http://www.cdc.gov/od/science/PHResearch/cdcra/Guide_to_Public_Health_Research_Needs.pdf

- 5. Baker EL Jr, Koplan JP. Strengthening the nation's public health infrastructure: historic challenge, unprecedented opportunity. Health Aff (Millwood). 2002;21:15–27. doi: 10.1377/hlthaff.21.6.15.

- 6. Potter MA, Barron G, Cioffi JP. A model for public health workforce development using the National Public Health Performance Standards Program. J Public Health Manag Pract. 2003;9:199–207. doi: 10.1097/00124784-200305000-00004.

- 7. Patton MQ. Qualitative research and evaluation methods. 3rd ed. Thousand Oaks (CA): Sage Publications, Inc.; 2002.

- 8. Glaser BG, Strauss AL. The discovery of grounded theory. Chicago: Aldine Publishing Co.; 1967.

- 9. Ryan GW, Bernard HR. Techniques to identify themes in qualitative data [cited 2010 Apr 15]. Available from: URL: http://www.analytictech.com/mb870/Readings/ryan-bernard_techniques_to_identify_themes_in.htm