Abstract

Engaging busy healthcare providers in online continuing education interventions is challenging. In an Internet-delivered intervention for dental providers, we tested a series of email-delivered reminders designed as cues to action. The intervention included case-based education and downloadable practice tools designed to encourage providers to increase delivery of smoking cessation advice to patients. We compared the impact of email reminders focused on 1) general project announcements, 2) intervention-related content (smoking cessation), and 3) unrelated content (oral cancer prevention). We found that email reminders dramatically increased participation. The content of the message had little impact on participation, but day of the week was important: messages sent at the end of the week had less impact, likely due to absence from clinic on the weekend. Email contact, including attention to the day of the week an email is sent and notice of new content postings, is critical to longitudinal engagement. Further research is needed to understand which messages, and what frequency of messages, will maximize participation.

Keywords: Internet, Dentistry, Cues, Marketing

Introduction

Use of the Internet for continuing education interventions has risen, but little is known about what predicts the level of participation and engagement by healthcare providers. Healthcare providers often access the Internet to search for information, and the Internet is also used for continuing education and quality improvement interventions. However, there are various barriers to use of the Internet, and little research has been conducted on overcoming these barriers to sustained participation in Internet interventions. In this analysis we monitored continued participation over time and used various email methods to sustain participation in an Internet intervention.

Providers generally prefer web-based continuing education to local or national meetings [1]. Many fields have begun to offer online continuing education to meet this preference [2]; however, the format used for continuing education can also be an important factor in predicting online continuing education use. Prior literature has shown that providing content in small amounts, such as cases, spaced over time is more effective than one large posting of content [3,4] or traditional online continuing education lectures.

Once a website has been accessed, not much is known about maintaining website engagement by providers. One method often used to encourage repeat usage is email. Email has shown promise in encouraging participation in an educational intervention with minimal effort on the part of the provider [1]. When an email message is received, the provider can choose when, where, and how to engage in the website. This ease of use greatly enhances a website’s adoption by the provider community. Several studies have found high response rates from email, some as high as 50% [1, 5, 6, 7] and have shown that multiple reminders, even though thought to be intrusive by many, can actually increase participation rates [1]. Reaching an audience with multiple time pressures and scheduling difficulties in such an efficient manner bodes well for future Internet interventions.

Engaging healthcare providers in educational interventions is especially problematic considering the time constraints of the office setting. Internet-delivered programs offer greater flexibility for such schedules. Unfortunately, past studies have found that even when a website is accessed, content is viewed for only short amounts of time [8, 9], indicating the need for increased focus on user retention or “stickiness.” This study examines the use of targeted email reminders (designed as cues to action) to influence participation by dental providers in an Internet-delivered intervention for tobacco control.

Materials and Methods

Study Design

The Dental Tobacco Control.net project (DTC) assessed the use of an Internet-delivered intervention designed to encourage dentists, hygienists, and dental assistants to discuss tobacco use with their patients. Prior to randomization, all practices were required to have Internet access and to complete a run-in phase that included baseline data collection from patients. General and periodontal dental practices in both urban and rural settings in Alabama, Georgia, Florida, and North Carolina participating in the Dental Practice-based Research Network (www.dpbrn.org) were enrolled. One hundred and ninety practices were randomized to the two-year trial, conducted from 2005 to 2007. Of the 190 dental practices, 95 were randomized to the DTC.net intervention website and 95 to a wait-list control. Our previously published results demonstrate that the DTC.net intervention was successful in increasing smoking cessation advice at six months of follow-up. For this analysis, we followed the cohort of intervention practices. These 95 intervention practices included 118 dentists and 334 hygienists/dental assistants. The study was approved by the University of Alabama at Birmingham Institutional Review Board.

The intervention website consisted of four educational cases, patient education and practice tools, a forum for chatting, an area to ask questions, and updates in the dental tobacco literature. Users decided how much time they wished to spend on the website. All materials were periodically updated and released over time. The educational cases contained questions to allow interactive learning based on user response and were supported by references and literature. Patient Education Materials and Practice Tools, such as brochures and posters, were available for download or could be requested from the investigative team. The discussion forum allowed providers to directly post questions and receive responses from peers. The “Ask a Question” feature allowed providers to directly communicate with the investigative team.

To encourage more frequent website participation, the study used push technologies such as email reminders to drive participants to the website. Emails were sent to providers to announce cases. The interactive cases (tailored according to each provider’s responses) were spaced over six months. Dentists received online continuing education credits for completing a case. In addition to case announcements, three types of email reminders were sent to encourage participation: 1) general project announcements, 2) intervention-related content (smoking cessation), and 3) unrelated content (oral cancer prevention). The new content was summarized in the email reminder, which then linked to the updated website content or a full-text version of a new research article. Reminders were delivered on a variable schedule, with one or more sent per month for 30 months.

Data Collection

Upon log-in, user authentication was required for all providers, and each visit was linked to user tracing logs on the intervention server. The user tracing was used to calculate the various measures of participation, including the date, time, pages visited (type and volume), and total time of each website visit. We narrowed our user tracing to a group of key web pages (home, the main page of each educational case module, headlines, etc.). We defined accessing one of these pages as an “audited event.” For this analysis we used longitudinal user tracing follow-up data from the cohort of 95 intervention practices.
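As an illustration of how audited events might be tallied from tracing logs, the following is a minimal sketch and not the study's server code. The record layout (user ID, ISO timestamp, page name), the page names in `AUDITED_PAGES`, and the function `audited_events_per_day` are all hypothetical.

```python
from collections import Counter
from datetime import datetime

# Hypothetical set of key pages; the study's actual page list is not given.
AUDITED_PAGES = {"home", "case_main", "headlines"}

def audited_events_per_day(log_records):
    """Count visits to audited pages per calendar day.

    Each record is an assumed (user_id, ISO timestamp, page) tuple.
    """
    counts = Counter()
    for user_id, timestamp, page in log_records:
        if page in AUDITED_PAGES:
            day = datetime.fromisoformat(timestamp).date()
            counts[day] += 1
    return counts

# Invented sample records, for illustration only.
sample_log = [
    ("d01", "2006-03-01T09:15:00", "home"),
    ("d01", "2006-03-01T09:16:30", "case_main"),
    ("d01", "2006-03-01T09:20:00", "faq"),  # not an audited page, so ignored
    ("h07", "2006-03-02T12:05:00", "headlines"),
]
daily = audited_events_per_day(sample_log)
```

Days with no records are simply absent from the counter, so a full daily series would also need zero counts filled in for inactive days.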

Analyses

The main dependent variable was provider participation (number of audited events) collected by user tracing on the intervention server. We first assessed the overall impact of email reminders (the independent variable) on website participation by comparing days immediately after the email reminders to days when no reminders were sent. Because the messages were sent on different days, we assessed the impact of day of the week on participation. We then modeled the impact of reminders adjusted for day of the week. Subsequently, we compared the impact of the three categories of email reminders on participation: 1) general project announcements, 2) intervention-related content (smoking cessation), and 3) unrelated content (oral cancer prevention). The dependent variable is a count of tracked pages, which could be modeled with Poisson regression. Because of over-dispersion in the participation data, however, we used negative binomial regression to model the association of email reminders with participation.
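The over-dispersion argument can be made concrete with a simple variance-to-mean check. A Poisson model assumes the variance of the counts equals their mean, so a ratio well above 1 points toward a negative binomial model. The sketch below is illustrative only: the helper names and the ten daily counts are invented, and the summary-based call uses the rounded daily mean (5) and SD (12) reported in the Results.

```python
from statistics import mean, variance

def dispersion_ratio(counts):
    """Sample variance divided by mean; roughly 1 for Poisson-like counts."""
    return variance(counts) / mean(counts)

def dispersion_from_summary(mean_count, sd_count):
    """Same ratio computed from a reported mean and standard deviation."""
    return sd_count ** 2 / mean_count

# Invented daily counts with many zero days and a few bursts of activity.
illustrative_counts = [0, 0, 0, 0, 25, 12, 3, 0, 0, 0]
ratio_example = dispersion_ratio(illustrative_counts)

# Rounded summary statistics reported for audited events per day.
ratio_reported = dispersion_from_summary(5, 12)
```

With the reported summary values, the variance (144) is far larger than the mean (5), which is consistent with the choice of negative binomial over Poisson regression.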

Results

Practice Characteristics

Ninety-five intervention dental practices were invited to participate in the Internet intervention (Table 1 below).

Table 1.

Characteristics of Dental Practices (N=95)

| Practice Characteristics (Intervention) | n/N | % |
|---|---|---|
| Solo/Group * | | |
| Solo Dental | 73/93 | 78.5 |
| Group | 20/93 | 21.5 |
| Total Staff (hygienists/assistants) | | |
| 0 | 5/95 | 5.3 |
| 1–2 | 26/95 | 27.4 |
| 3–4 | 37/95 | 39.0 |
| >4 | 27/95 | 28.4 |
| Rural/Urban Status | | |
| Urban over 1 million | 28/95 | 29.5 |
| Other metro | 48/95 | 50.5 |
| Non-metro next to urban | 10/95 | 10.5 |
| Non-metro not next to urban | 9/95 | 9.5 |
| State | | |
| Alabama | 15/95 | 15.8 |
| Florida | 39/95 | 41.1 |
| Georgia | 27/95 | 28.4 |
| North Carolina | 14/95 | 14.7 |
| Patient volume | | |
| <=40 patients/week | 9/95 | 9.4 |
| 40–100 patients/week | 62/95 | 65.3 |
| >100 patients/week | 24/95 | 25.3 |

* Missing data on 2 practices.

Participation in the Intervention Website

Of the 95 intervention practices, at least one provider from 72 practices (76%) accessed the website at least once. Overall, there were 4,797 audited events (accesses to unique traced pages). These audited events occurred during 531 unique visits to the intervention website by 138 dental providers (73 dentists and 65 hygienists/dental assistants). There were 87 dentists and 249 hygienists/dental assistants in these 72 practices. Thus, 84% of the dentists and 26% of the hygienists/dental assistants in these 72 practices logged on to the intervention website.
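The participation percentages above follow directly from the reported counts. The short sketch below reproduces them and also derives two averages that are not reported in the text (audited events per visit and visits per participating provider); the helper `percent` is introduced here only for illustration.

```python
def percent(numerator, denominator):
    """Percentage rounded to the nearest whole number."""
    return round(100 * numerator / denominator)

practices_reached = percent(72, 95)   # practices with at least one log-on
dentists_reached = percent(73, 87)    # dentists in the 72 active practices
staff_reached = percent(65, 249)      # hygienists/assistants in those practices

# Derived averages, computed from the reported totals (not stated in the text).
events_per_visit = 4797 / 531
visits_per_provider = 531 / 138
```

These averages are only rough descriptors, because the medians reported for this cohort suggest a skewed distribution of activity across providers.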

The mean number of audited events per day was 5 (SD = 12). Of note, activity occurred on only 28% of days in the 30-month tracing period. On days when activity occurred, the mean number of audited events was 19 (SD = 18). Our user tracing demonstrated that dentists had higher participation (median audited events (AE) = 32 (95% CI 22–45)) than hygienists/dental assistants (median AE = 17 (95% CI 14–24)), median test p = 0.01. The number of visits to the website also differed (dentists’ median visits = 3, hygienists/dental assistants’ median visits = 2, p = 0.001). Participation did not vary by the practice characteristics listed in Table 1.

Impact of Email Reminders on Provider Participation

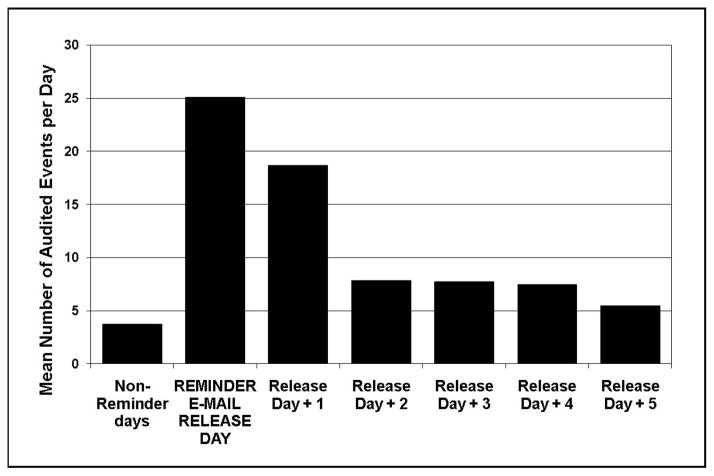

Thirty announcements about cases were emailed to the practices. In addition, 28 reminders were emailed to the providers over time. Figure 1 shows the resulting website usage after each email reminder. The email reminders resulted in the largest number of visits on the day the email was sent (e-mail release day). Website visits remained elevated the day after the email and then returned to baseline.

Figure 1.

Dentist and staff participation by day of email reminder release

Unadjusted model

In our initial unadjusted negative binomial model, the day of email reminder release and release day + 1 had higher rates of audited events than non-reminder days (incidence rate ratios 6.6 (95% CI 1.9–24.0) and 5.0 (95% CI 1.4–17.9), respectively), but release day + 2 and beyond were similar to non-reminder days. An incidence rate ratio (IRR) from negative binomial regression is the ratio of the expected count of audited events on a given type of day to the expected count on the reference (non-reminder) days.
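In a count model with a log link, an IRR is the exponentiated regression coefficient, and a Wald-type confidence interval is symmetric on the log scale. The sketch below uses that property to recover the approximate coefficient and standard error from a reported IRR and interval. It assumes the reported intervals are Wald-type 95% intervals, which the text does not state, and the function name is hypothetical.

```python
import math

Z_95 = 1.959964  # standard normal quantile for a two-sided 95% interval

def log_scale_summary(irr, ci_low, ci_high):
    """Return (coefficient, standard error, geometric midpoint of the CI)."""
    beta = math.log(irr)
    se = (math.log(ci_high) - math.log(ci_low)) / (2 * Z_95)
    midpoint_irr = math.sqrt(ci_low * ci_high)
    return beta, se, midpoint_irr

# Unadjusted estimate for the e-mail release day: IRR 6.6 (95% CI 1.9-24.0).
beta, se, midpoint = log_scale_summary(6.6, 1.9, 24.0)
```

If the interval is Wald-type, the geometric midpoint of the bounds should sit close to the reported IRR, allowing for rounding of the published values. The wide intervals reflect the small number of reminder days relative to non-reminder days.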

Providers engaged in the website more on weekdays than weekends. As noted in Figure 2, participation peaked on Wednesdays, and began to decline on Thursday, and was quite low on weekends.

Figure 2.

Participation by day of email reminder release

After adjustment for day of the week, the expected count of audited events, compared with the reference (non-reminder) days, was multiplied by a factor of 5.13 on the day the email was sent and 6.27 on the day after (Table 2). In this multivariable model, participation on the second day after the email was sent was also significantly greater than the reference.

Table 2.

Association between email reminders and rate of participation, after adjustment for day of the week and day of education case release

| | Adj. IRR* | 95% CI | P-value |
|---|---|---|---|
| Reminder Emails | | | |
| Non-reminder days | Ref. | | |
| e-Mail release day | 5.13 | 1.53–17.18 | 0.008 |
| Release day + 1 | 6.27 | 1.85–21.31 | 0.003 |
| Release day + 2 | 4.79 | 1.35–16.97 | 0.015 |
| Release day + 3 | 2.31 | 0.67–7.95 | 0.184 |
| Release day + 4 | 1.74 | 0.49–6.23 | 0.392 |
| Release day + 5 | 2.25 | 0.64–7.93 | 0.207 |
| Day reminder sent | | | |
| Monday | Ref. | | |
| Tuesday | 1.21 | 0.54–2.69 | 0.641 |
| Wednesday | 1.50 | 0.68–3.30 | 0.309 |
| Thursday | 0.82 | 0.37–1.83 | 0.630 |
| Friday | 0.71 | 0.32–1.56 | 0.392 |
| Saturday | 0.25 | 0.11–0.56 | 0.001 |
| Sunday | 0.15 | 0.06–0.34 | <0.001 |
| Case Release Email | | | |
| No case release | Ref. | | |
| Case Release Day | 3.38 | 1.09–10.50 | 0.035 |

* Adjusted incidence rate ratio (IRR) from a negative binomial model, including 4,797 audited events. IRRs are adjusted for day of the week and account for release of case emails over time.

Of note, six of our messages were sent out on a Thursday or Friday. The negative binomial model provided a better fit than the Poisson model due to over-dispersion of the outcome.
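Because the model is multiplicative, the reminder and day-of-week IRRs in Table 2 can be combined to show why late-week sends lose impact. The sketch below multiplies the two adjusted IRRs for each of the first three days after a send. It assumes no interaction between reminder timing and day of the week (the text does not report one), and every multiplier is relative to a non-reminder Monday with no case release. The function name is hypothetical.

```python
# Adjusted IRRs from Table 2, keyed by days since the reminder was sent.
REMINDER_IRR = {0: 5.13, 1: 6.27, 2: 4.79}

# Adjusted day-of-week IRRs from Table 2 (Monday is the reference).
WEEKDAY_IRR = {
    "Monday": 1.00, "Tuesday": 1.21, "Wednesday": 1.50, "Thursday": 0.82,
    "Friday": 0.71, "Saturday": 0.25, "Sunday": 0.15,
}
DAYS = list(WEEKDAY_IRR)

def combined_multipliers(send_day):
    """Return (weekday, combined IRR) for the send day and the next two days."""
    start = DAYS.index(send_day)
    result = []
    for offset, irr in REMINDER_IRR.items():
        day = DAYS[(start + offset) % 7]
        result.append((day, irr * WEEKDAY_IRR[day]))
    return result

monday_send = combined_multipliers("Monday")
friday_send = combined_multipliers("Friday")
```

Under these assumptions, a Friday send places release day + 1 on a Saturday, where the combined multiplier falls from 6.27 to about 1.57, whereas a Monday send keeps all three high-response days on weekdays.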

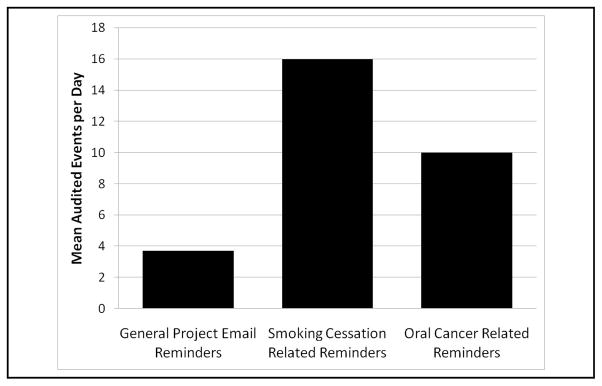

As discussed above, three types of email reminders were sent: five emails were general project announcements (e.g., describing the practices involved, goals, etc.); 13 email reminders were directly related to smoking cessation; and 10 email reminders were about oral cancer prevention, a topic that dental providers in our formative work noted to be important to them and an area in which they desired up-to-date information.

The reminders related to smoking cessation resulted in the most audited events (mean 16 per day), followed by oral cancer prevention (10) (Figure 3). Of note, general project announcements that did not include links to new content had less impact. In a subsequent negative binomial model, general project email release days were not significantly greater than non-reminder days.

Figure 3.

Participation per day for first three days after email release, comparing three types of email reminders

Discussion

In this randomized trial of an Internet-delivered continuing education intervention in dental practices, we were successful in engaging 138 dental providers. We found that email reminders were critical to longitudinal participation in the intervention. On days when no recent reminders had been sent, very little participation occurred. On the day after an email was sent, participation was about six-fold higher than on non-reminder days.

As previously published, our randomized trial was successful in increasing rates of smoking cessation advice in these dental practices, compared with control [10]. Intervention dental practices increased rates of smoking cessation advice by 11% from a baseline rate of 44% (OR = 1.55 [95% CI 1.28–1.87]). Control practices did not significantly improve.
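As a rough plausibility check on these figures, the sketch below converts the before and after rates into a crude odds ratio. It is not a reproduction of the published analysis, whose odds ratio presumably comes from the trial's own statistical model. The calculation compares two readings of "11%": an absolute increase of 11 percentage points (44% to 55%) and a relative increase (44% to about 48.8%). The function names are introduced here only for illustration.

```python
def odds(p):
    """Convert a proportion to odds."""
    return p / (1 - p)

def odds_ratio(p_after, p_before):
    """Crude odds ratio comparing two proportions."""
    return odds(p_after) / odds(p_before)

baseline = 0.44

# Reading 1: "11%" as an absolute increase of 11 percentage points.
or_absolute = odds_ratio(baseline + 0.11, baseline)

# Reading 2: "11%" as a relative increase over baseline.
or_relative = odds_ratio(baseline * 1.11, baseline)
```

The absolute reading gives a crude odds ratio of about 1.56, close to the reported 1.55, while the relative reading gives about 1.21. This suggests, without confirming, that the 11% refers to percentage points.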

The impact of the randomized trial was directly associated with level of participation. Thus, our success in changing provider behavior was dependent on our email reminder system. Without the reminders, level of participation would likely have been too low to have resulted in a change in rates of smoking cessation advice.

Nearly all providers report having access to the Internet [6, 11] but with the growth in new health care knowledge, staying up-to-date can be challenging. Internet use among healthcare providers is at an all-time high and online clinical information seeking and engagement in online education continues to grow. In a study of U.S. physicians of all specialties in active practice, 71.5% of medical providers use the Internet for literature searching and ranked journals as the most important source for clinical information [12]. Updating the content of the website to summarize current news and journal articles and provide training and education in these areas could prove beneficial to the providers and drive website usage.

We have demonstrated the potential improvement in provider website participation and engagement following the introduction of email reminders. Of note, each of our email reminders included an “opt-out” option to no longer receive messages. In all, we sent 28 reminders to 138 dental providers, and received zero opt-out requests despite the nearly 4,000 opt-out opportunities. Future interventions could consider sending email reminders even more frequently.

The email reminders provide a direct cue to engage providers and encourage user retention; however, care should be given to the timing of emails [13] and message content. Provider website visits were higher when the email content was a subject of interest. Our general project announcements had little effect. Only reminders linked to new content were effective.

We learned an important lesson: take care to send messages at the beginning of the week, not the end. Because it may take providers two to three days to respond, one must avoid having the period of potential activity overlap with the weekend. In our multivariable analysis, accounting for day of the week, the association of email reminders with participation persisted for more days, suggesting reverse confounding by day of the week for release day + 2.
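This scheduling rule can be expressed as a small check. The sketch below assumes a three-day response window (the release day plus the following two days, matching the days with significantly elevated participation in Table 2) and treats Saturday and Sunday as non-clinic days. The function names and the window length are illustrative choices, not part of the study protocol.

```python
WEEK = ["Monday", "Tuesday", "Wednesday", "Thursday",
        "Friday", "Saturday", "Sunday"]
WEEKEND = {"Saturday", "Sunday"}

def response_window(send_day, window_days=3):
    """Weekdays covered by the send day and the days that follow it."""
    start = WEEK.index(send_day)
    return [WEEK[(start + i) % 7] for i in range(window_days)]

def avoids_weekend(send_day, window_days=3):
    """True if no day in the response window falls on the weekend."""
    return not any(day in WEEKEND
                   for day in response_window(send_day, window_days))

good_send_days = [day for day in WEEK if avoids_weekend(day)]
```

With a three-day window, only Monday, Tuesday, and Wednesday pass the check. Lengthening the window to four days would leave only Monday and Tuesday.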

The results of this analysis are limited to only one intervention. We found that provider characteristics listed in Table 1 were not associated with participation, but other variables may be important predictors. Our analysis did not account for provider variations in interest, time constraints, and computer experience, all of which could influence provider participation in an Internet intervention.

Further research into reasons for participation can enhance website engagement and help researchers learn more about designing effective interventions. Researchers should experiment with varying frequency of email reminders [13], and varying content. In general, additional innovative approaches are needed to engage providers in longitudinal interventions.

Acknowledgments

This project was supported by grants R01-DA-17971, U01-DE-16747, and U01-DE-16746 from the National Institute on Drug Abuse (NIDA) and the National Institute of Dental and Craniofacial Research (NIDCR) at the National Institutes of Health.

References

1. Abdolrasulnia M, Collins BC, Casebeer L, Wall T, Spettell C, Ray MN, Weissman NW, Allison JJ. Using email reminders to engage physicians in an Internet-based CME intervention. BMC Medical Education. 2004;4:17. doi: 10.1186/1472-6920-4-17.

2. Kerfoot BP, Kearney MC, Connelly D, Ritchey ML. Interactive Spaced Education to Assess and Improve Knowledge of Clinical Practice Guidelines. A Randomized Controlled Trial. Annals of Surgery. 2009;249(5):744–749. doi: 10.1097/SLA.0b013e31819f6db8.

3. Pashler H, Rohrer D, Cepeda NJ, Carpenter SK. Enhancing learning and retarding forgetting: Choices and consequences. Psychonomic Bulletin and Review. 2007;14(2):187–193. doi: 10.3758/bf03194050.

4. Houston TK, Funkhouser E, Allison JJ, Levine DA, Williams OD, Kiefe CI. Multiple Measures of Provider Participation in Internet Delivered Interventions. Med Info. 2007.

5. Flanagan JR, Peterson M, Dayton C, Strommer Pace L, Plank A, Walker K, Carlson W. Email recruitment to use web decision support tools for pneumonia. Proceedings of the American Medical Informatics Association’s 2002 Annual Symposium; 9–13 November; San Antonio. 2002. pp. 255–9.

6. McMahon RS, Iwamoto M, Massoudi SM, Yusuf HR, Stevenson JM, David F, Chu SY, Pickering LK. Comparisons of e-mail, fax, and postal surveys of pediatricians. Pediatrics. 2003;111(4 Pt 1):e299–303. doi: 10.1542/peds.111.4.e299.

7. Wall TC, Mian MAH, Ray MN, Casebeer L, Collins BC, Kiefe CI, Weissman N, Allison JJ. Improving physician performance through Internet-based interventions: Who will participate? J Med Internet Res. 2005;7(4):e48. doi: 10.2196/jmir.7.4.e48.

8. Danaher BG, Boles SM, Akers L, Gordon JS, Severson HH. Defining participant exposure measures in web-based health behavior change programs. J Med Internet Res. 2006;8(3):e15. doi: 10.2196/jmir.8.3.e15.

9. Eysenbach G. The Law of Attrition. The Journal of Medical Internet Research. 2005;7(1):e11. doi: 10.2196/jmir.7.1.e11.

10. Houston TK, Richman JS, Ray MN, Allison JJ, Gilbert GH, Shewchuk RM, Kohler CL, Kiefe CI. Internet delivered support for tobacco control in dental practice: randomized controlled trial. J Med Internet Res. 2008;10:e38. doi: 10.2196/jmir.1095.

11. Casebeer L, Bennett N, Kristofco R, Carillo A, Centor R. Physician Internet Medical Information Seeking and Online Continuing Education Use Patterns. The Journal of Continuing Education in the Health Professions. 2002;22:33–42. doi: 10.1002/chp.1340220105.

12. Bennett NL, Casebeer LL, Kristofco RE, Strasser SM. Physicians’ Internet Information-Seeking Behaviors. The Journal of Continuing Education in the Health Professions. 2004;24:31–28. doi: 10.1002/chp.1340240106.

13. Fry JP, Neff RA. Periodic Prompts and Reminders in Health Promotion and Health Behavior Interventions: Systematic Review. J Med Internet Research. 2009;11(2):e16. doi: 10.2196/jmir.1138.