Abstract

Objective

To test the feasibility of a framework for prioritizing new comparative effectiveness research questions related to management of primary open-angle glaucoma (POAG) using practice guidelines and a survey of clinicians.

Design

Cross-sectional survey

Participants

Members of the American Glaucoma Society

Methods

We restated as an answerable clinical question each recommendation in the 2005 American Academy of Ophthalmology Preferred Practice Patterns (PPPs) regarding the management of POAG. We asked members of the American Glaucoma Society to rank the importance of each clinical question, on a scale of 0 (not important at all) to 10 (very important), using a two-round Delphi survey conducted online between April and September 2008. Respondents had the option of selecting “no judgment” or “research has already answered this question” to each question in lieu of the 0 to 10 rating. We used the ratings assigned by the Delphi respondents to determine the importance of each clinical question.

Main Outcome Measure

Ranking of importance of each clinical question

Results

We derived 45 clinical questions from the PPPs. Of the 620 American Glaucoma Society members invited to participate in the survey, 169 completed the Round One survey; 105 of 169 also completed Round Two. We observed four response patterns to the individual questions. Nine clinical questions were ranked as the most important: four on medical intervention, four on filtering surgery, and one on adjustment of therapy.

Conclusions

Our theoretical model for priority setting for comparative effectiveness research questions is a feasible and pragmatic approach that merits testing in other medical settings.

Comparative effectiveness research (CER), “the generation and synthesis of evidence that compares the benefits and harms of alternative methods to prevent, diagnose, treat and monitor a clinical condition, or to improve the delivery of care,”1 serves as the foundation for evidence-based practice and decision making, and is one of the top priorities of the nation’s health care agenda.2 Findings of CER inform patients, providers, payers, policy makers and others about the treatments that work best for a defined group of patients under specified conditions, and whether more extensive or expensive management leads to better health outcomes.3,4 Substantial investment of resources is required to conduct new primary studies or to synthesize existing evidence regarding the clinical effectiveness and appropriateness of interventions that are used to manage health conditions. For clinical questions about intervention effectiveness, randomized controlled trials (RCTs) and systematic reviews of RCTs are generally considered to be the highest level of evidence.5

To conserve resources and to obtain the answers that decision makers need, increased attention has focused on ways to prioritize CER. The Institute of Medicine (IOM), for example, was asked by the United States Congress to recommend research priorities that present the most compelling need for evidence of comparative effectiveness considering input from all stakeholders.2, 6

While the necessity to establish CER priorities is well recognized, there is little empirical evidence to guide the criteria and processes for priority setting. Many organizations report using a nomination-based approach and use similar broad criteria for setting priorities, including cost, burden of disease, public interest, topic controversy, new evidence, impact on quality of life, measurable patient outcomes, and the potential to reduce variations in clinical practice.7–9

The objective of this study was to test a framework for prioritizing clinical questions for new CER starting with clinical practice guidelines. We believe that clinical practice guidelines reflect the state of knowledge in the clinical community regarding prevention, screening and therapy, as well as the community’s interpretation of evidence,10, 11 and provide a reasonable starting point for priority-setting. We used the American Academy of Ophthalmology (AAO)’s practice guidelines, the Preferred Practice Patterns (PPPs),16 for primary open-angle glaucoma (POAG), last updated in 2005 (http://one.aao.org/CE/PracticeGuidelines/PPP.aspx; accessed March 21, 2010), as a topic area for testing the framework. We selected POAG because of its substantial burden on patients and health care resources.12–15 AAO PPPs for POAG have been used in the United States, and have been referenced in international glaucoma guidelines.16

Methods

In 2006, we developed a list of clinical questions about POAG that could be addressed by clinical trials and systematic reviews of trials (e.g., questions about intervention effectiveness) that we proposed to send to clinicians with a request to rank their relative research priorities. To do this, two individuals independently reviewed the 2005 AAO PPPs on the management of POAG and extracted every statement that could be considered as a recommendation. We restated each recommendation as an answerable clinical question based on characteristics of the patient population, test and comparison interventions, and outcomes.17 We did not translate into clinical questions guideline statements on disease definition, etiology, screening, and diagnosis; statements that we classified as ethical or legal statements; or statements that were not recommendations.

In the PPP, the AAO panel “rated each recommendation according to its importance to the care process” (http://one.aao.org/CE/PracticeGuidelines/PPP.aspx; accessed March 21, 2010). We extracted the assigned importance rating for each AAO recommendation, defined as “level A,” most important; “level B,” moderately important; and “level C,” relevant but not critical. Not all recommendations were rated.

We consulted with three glaucoma specialists who have expertise both in the management of glaucoma and in forming answerable clinical questions to confirm that our restatement was accurate. We also consulted the Cochrane Eyes and Vision Group US Satellite (CEVG@US) Advisory Board and methodologists on the accuracy of the restatements and on our proposal to ask ophthalmologists to rank the relative importance of each question. This revealed that many ophthalmologists are uncomfortable ranking clinical questions outside their sub-specialty. Furthermore, when we pilot tested the survey, some clinicians responded to our request as if we were asking about their knowledge rather than prioritization. The clinician advisors also suggested that we add a response option “research has already answered this question” to our survey. We modified the survey questionnaire and methods accordingly.

Between April and September 2008, we surveyed the membership of the American Glaucoma Society (AGS), asking them to rank the relative importance of the clinical questions we derived from the AAO PPPs for POAG using two rounds of an online Delphi survey. The format of the questionnaire and the data collection plan were adapted from the defining Standard Protocol Items for Randomized Trials (SPIRIT) Initiative Delphi Survey.18 The Johns Hopkins Bloomberg School of Public Health Institutional Review Board (IRB) approved the Delphi survey on March 13, 2008 (IRB number: 00001124). The AGS Research Committee approved the Delphi survey and their role on March 26, 2008.

Round One of the survey, which included the initial invitation, consent to participate, and access to the survey website, was sent by the AGS office via email to their membership list of 620 people. The invitation was signed by the Chair of the AGS Research Committee (Dr. Henry Jampel). The survey instructions said “Please rate the importance of having the answer to each of the following questions for providing effective patient care” on a scale of 0 (not important at all) to 10 (very important). Participants also had the option of assigning a rating of “no judgment” or “research has already answered this question” to each question in lieu of the 0 to 10 rating. We provided space for comments, questions, and nomination of items not included in the list of research questions derived from PPPs. Additionally, Round One requested demographic and other information such as occupation/field, sub-specialty, and place of employment (e.g., government, industry, academia, other), and experience in clinical trials and systematic reviews. AGS members were given 3 weeks to respond to Round One. After the initial request, an email reminder was sent at the end of week 1, week 2, and 1 day prior to the end of week 3, from the AGS office.

Round Two of the survey was sent electronically to Round One respondents approximately 4 weeks after the completion of Round One. We modified the wording of seven survey questions, for clarity only, using feedback from Round One. In Round Two, we provided each respondent with his/her previous individual rating for each question, as well as a summary, in the form of a histogram, of all responses for each question. Respondents were asked to re-rate each clinical question in light of ratings and comments from the previous round. In Round Two we also asked respondents “Please rate the amount you have relied on the AAO PPPs for POAG in deciding how best to provide effective patient care” on a scale of 0 (none) to 10 (heavy). Demographic questions asked in Round One were not asked again in Round Two. Round One respondents were given 3 weeks to respond to Round Two. After the initial request, and at the end of week 1, the study moderator (TL) sent an email reminder. To increase response, the Chair of the AGS Research Committee sent an email reminder at the end of week 2, and 1 day prior to the end of week 3, with a personalized message encouraging participation.

To analyze the survey data, we fitted a 3-level hierarchical model to allow for dependence among responses belonging to the same cluster (responses are clustered within question and within respondent). We assumed a normal likelihood for the ratings assigned by respondents and chose a non-informative prior distribution for each parameter. We calculated the mean rating and the 95% credible interval for each question using Markov chain Monte Carlo (MCMC) methods in WinBUGS 1.4.3.19 In Bayesian statistics, a credible interval is a posterior probability interval that is used similarly to a confidence interval in frequentist statistics. We categorized ratings into three levels resembling the system used by the AAO PPPs: “most important,” “moderately important,” and “relevant but not critical.” “Most important” questions were those with a lower credible limit (2.5th percentile) above the overall mean; “moderately important” questions were those with 95% credible intervals that included the overall mean; and “relevant but not critical” questions were those with an upper credible limit (97.5th percentile) below the overall mean. We compared the ratings assigned by the Delphi respondents with the associated importance rating assigned in the AAO PPPs.
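The three-level categorization rule described above can be sketched in a few lines of Python. This is only an illustration of the decision rule, not the WinBUGS analysis itself; the function name `classify_question`, the question labels, and all numeric values are hypothetical and are not study data.

```python
def classify_question(lower, upper, overall_mean):
    """Classify one question from its 95% credible limits.

    lower: 2.5th percentile of the posterior mean rating
    upper: 97.5th percentile of the posterior mean rating
    """
    if lower > overall_mean:
        return "most important"
    if upper < overall_mean:
        return "relevant but not critical"
    # The interval includes the overall mean.
    return "moderately important"


# Hypothetical posterior summaries: label -> (lower limit, upper limit).
intervals = {
    "Q_a": (7.1, 8.3),
    "Q_b": (5.2, 6.9),
    "Q_c": (3.0, 4.4),
}
overall_mean = 6.0  # hypothetical overall mean rating

labels = {
    q: classify_question(lo, hi, overall_mean)
    for q, (lo, hi) in intervals.items()
}
for q, label in labels.items():
    print(q, label)
```

Because the rule depends only on where the interval sits relative to the overall mean, a question with a wide interval tends to fall into the middle category regardless of its point estimate.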

We initially coded “research has already answered this question” and “no judgment” as “missing” in the analysis. We conducted sensitivity analyses, assigning a 10 for “research has already answered this question”, and compared ranks under this assumption. A “sensitivity analysis” repeats the primary analysis, substituting alternative ranges of values for decisions that are subjective, and is used to examine how robust the findings are.17 In assigning a 10 for “research has already answered this question” we assumed that sufficient research to answer a question indicates that the question is important and a systematic review is warranted.
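The two coding strategies can be illustrated with a simplified Python sketch. It uses a plain arithmetic mean in place of the hierarchical Bayesian model, so it shows only how the recoding changes the inputs; the helper names `recode` and `mean_rating` and the example responses are hypothetical.

```python
RHAA = "research has already answered this question"
NO_JUDGMENT = "no judgment"


def recode(response, rhaa_value=None):
    """Return a numeric rating, or None when the response is treated as missing.

    rhaa_value=None reproduces the primary coding (RHAA is missing);
    rhaa_value=10 reproduces the sensitivity coding.
    """
    if response == NO_JUDGMENT:
        return None  # always coded as missing
    if response == RHAA:
        return rhaa_value
    return float(response)


def mean_rating(responses, rhaa_value=None):
    """Simple mean over non-missing recoded ratings (a stand-in for the model)."""
    values = [v for v in (recode(r, rhaa_value) for r in responses) if v is not None]
    return sum(values) / len(values) if values else None


# Hypothetical responses to a single question (not study data).
responses = [8, 6, RHAA, NO_JUDGMENT, 7, RHAA]

primary = mean_rating(responses)                      # RHAA treated as missing
sensitivity = mean_rating(responses, rhaa_value=10)   # RHAA treated as 10
print(primary, sensitivity)
```

In this toy example the sensitivity coding raises the mean, which is the expected direction whenever a question attracts many “research has already answered this question” responses.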

Results

We derived 45 clinical questions from the AAO PPPs related to management of POAG (Table 1, available at http://aaojournal.org): 13 on medical interventions, eight on laser trabeculoplasty, 20 on filtering surgery, one on cyclodestructive surgery, and three on adjustment of therapy.

Of the 620 AGS members invited to participate in the survey, 169 completed the Round One survey; 105 of 169 also completed Round Two (Table 2). The number of responses per day increased when reminder emails were sent. In Round Two, the number of responses per day peaked when the reminder email was sent by the Chair of the AGS Research Committee. We compared the characteristics of those who completed Round One, those who completed Round Two, and those who completed Round One but not Round Two (Table 3). Respondents who classified themselves in Round One as “at least moderately experienced in clinical trials as an investigator,” as “ever (co)-authoring a systematic review,” or as “self-employed/private practice” were less likely to complete Round Two. In Round Two, the mean score for respondents’ level of reliance on AAO PPPs for providing effective patient care was 5.2 (standard deviation=2.7; range: 0 to 10).
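The response and completion figures reported in Table 2 can be checked with simple arithmetic. The short Python sketch below uses only the counts given in the text and table; the helper name `pct` and the variable names are illustrative.

```python
def pct(numerator, denominator):
    """Percentage rounded to one decimal place."""
    return round(100 * numerator / denominator, 1)


invited = 620
r1_responded, r1_completed = 174, 169
r2_responded, r2_completed = 111, 105

r1_response_rate = pct(r1_responded, invited)         # responded to Round One
r2_response_rate = pct(r2_responded, r1_completed)    # responded to Round Two
r1_completion_rate = pct(r1_completed, invited)       # completed Round One
r2_retention_rate = pct(r2_completed, r1_completed)   # completed both rounds
r2_non_completers = r1_completed - r2_completed       # Round One only

print(r1_response_rate, r2_response_rate)
print(r1_completion_rate, r2_retention_rate, r2_non_completers)
```

The difference of 64 matches the number of Round Two non-respondents shown in Table 3.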

Table 2.

Responses and completions by Delphi survey round

| Round | Total invited | Responded, n (%) | Completed, n |
|---|---|---|---|
| Round One | 620 | 174 (28.1) | 169* |
| Round Two | 169 | 111 (65.7) | 105† |

*Three refused to participate; two did not complete the entire Round One questionnaire.

†Six did not complete the entire Round Two questionnaire.

Table 3.

Characteristics of respondents to each round of Delphi survey

| Characteristics | Round One respondents (N=169), n (%) | Round Two respondents (N=105), n (%) | Round Two non-respondents (N=64), n (%) |
|---|---|---|---|
| Experience in clinical trials as an investigator | | | |
| 4 Very experienced | 32 (18.9) | 17 (16.2) | 15 (23.4) |
| 3 | 14 (8.3) | 8 (7.6) | 6 (9.4) |
| 2 Moderately experienced | 60 (35.5) | 35 (33.3) | 25 (39.1) |
| 1 | 42 (24.9) | 29 (27.6) | 13 (20.3) |
| 0 Not experienced at all | 21 (12.4) | 16 (15.2) | 5 (7.8) |
| Ever (co)-author on a systematic review | | | |
| Yes | 47 (27.8) | 27 (25.7) | 20 (31.3) |
| No | 119 (70.4) | 76 (72.4) | 43 (67.2) |
| Don’t know | 3 (1.8) | 2 (1.9) | 1 (1.6) |
| Place of employment | | | |
| Academic center/University | 92 (54.4) | 61 (58.1) | 31 (48.4) |
| Self-employed/Private practice | 66 (39.1) | 36 (34.3) | 30 (46.9) |
| Hospital | 4 (2.4) | 3 (2.9) | 1 (1.6) |
| Government | 3 (1.8) | 3 (2.9) | 0 (0.0) |
| Other | 4 (2.4) | 2 (1.9) | 2 (3.1) |
| Primary professional affiliation | | | |
| Ophthalmologist | 167 (98.8) | 104 (99.1) | 63 (98.4) |
| Other | 2 (1.2) | 1 (1.0) | 1 (1.6) |
| Specialty area | | | |
| Glaucoma | 165 (97.6) | 102 (97.1) | 63 (98.4) |
| Other | 4 (2.4) | 3 (2.9) | 1 (1.6) |

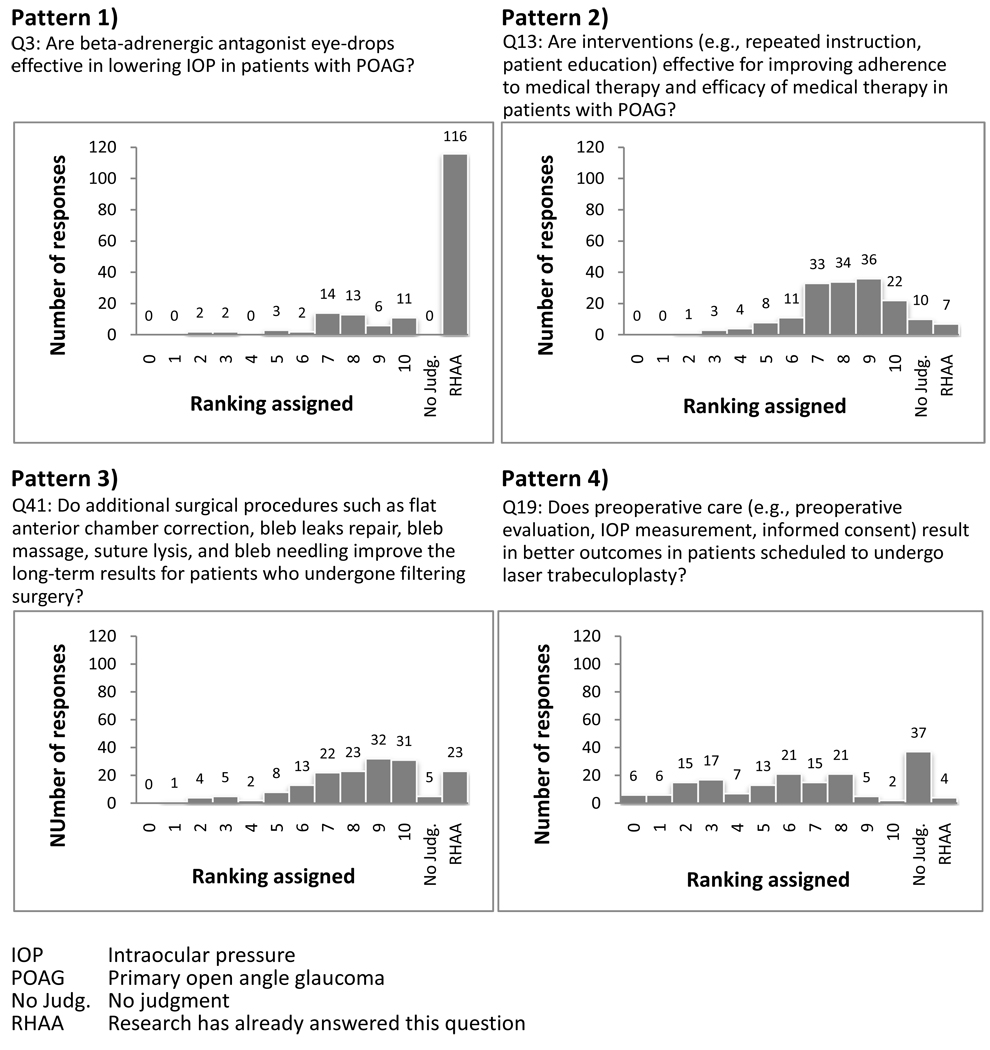

We observed four response patterns to the individual questions (Figure 1): 1) the majority (>50%) of respondents said that the clinical question had been answered by the research; 2) respondents varied in their opinions of the importance of the research question and a few (≤10%) said that the clinical question had been answered by research; 3) respondents varied in their opinions of the importance of the research question and a substantial proportion (>10% and ≤50%) said that the clinical question had been answered by research; 4) some respondents may not have understood the clinical question, indicated by high frequency of “no judgment” and the written comments. For example, 8/169 respondents in Round One commented that they did not understand question 13 “Are interventions (e.g., repeated instructions, patient education) effective for improving adherence to medical therapy and efficacy of medical therapy in patients with POAG?” In addition, respondents sometimes answered the clinical question or nominated new clinical questions and appeared less interested in or aware of our methodologic goal of setting research priorities. For example, for question 45 “What is the optimal interval for conducting follow-up visits to assess the response and side effects from washout of the old medication and onset of maximum effect of the new medication,” 12/169 respondents in Round One provided an optimal follow-up interval, ranging from 4 to 6 weeks. An example of a nominated clinical question is whether beta-blockers stop progression of POAG.
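The thresholds that separate the first three response patterns can be written as a small Python function. The 50% and 10% cut-offs come from the text; the cut-off used here to flag pattern 4 (`no_judgment_cutoff`) is purely an assumption for illustration, since the study identified that pattern from a high frequency of “no judgment” responses together with written comments rather than from a fixed threshold. The function name and example counts are hypothetical.

```python
def response_pattern(n_rhaa, n_no_judgment, n_total, no_judgment_cutoff=0.25):
    """Assign one of the four response patterns to a question.

    n_rhaa: respondents selecting "research has already answered this question"
    n_no_judgment: respondents selecting "no judgment"
    no_judgment_cutoff: ASSUMED share of "no judgment" that flags pattern 4
    """
    share_rhaa = n_rhaa / n_total
    share_no_judgment = n_no_judgment / n_total
    if share_no_judgment >= no_judgment_cutoff:
        return 4  # question may not have been understood
    if share_rhaa > 0.5:
        return 1  # majority say research has answered the question
    if share_rhaa <= 0.1:
        return 2  # varied importance ratings, few say answered
    return 3      # varied importance ratings, substantial share say answered


# Hypothetical counts out of 169 Round One respondents (not study data).
examples = {
    "mostly answered": response_pattern(120, 2, 169),
    "few answered": response_pattern(12, 3, 169),
    "some answered": response_pattern(50, 4, 169),
    "unclear question": response_pattern(10, 60, 169),
}
print(examples)
```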

Figure 1.

Examples of response patterns for selected questions, with ranking assigned by 169 participants in Round One of Delphi survey

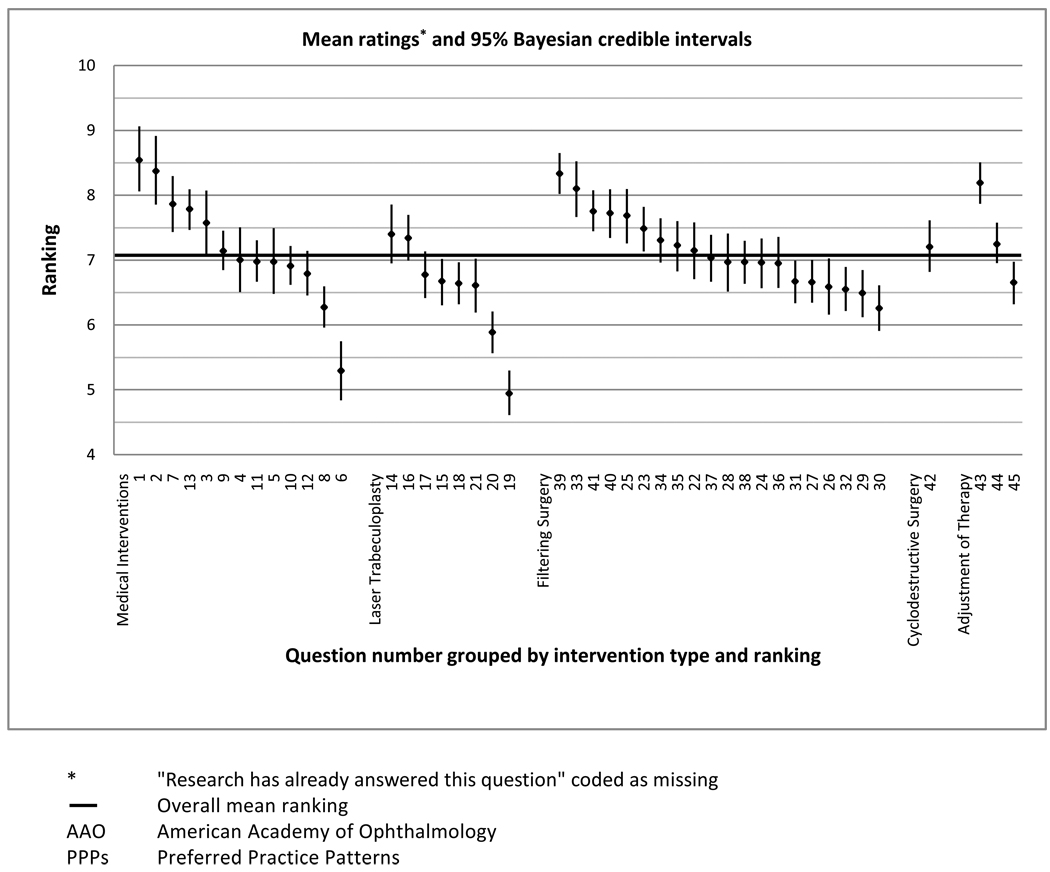

We calculated the mean and 95% credible intervals for the ratings of importance for 45 clinical questions (Figure 2). When “no judgment” and “research has already answered this question” were coded as missing, questions 1, 2, 3, 7, 13, 23, 25, 33, 39, 40, 41, 42, and 43 were ranked as the most important clinical questions to which answers are needed for providing effective patient care, with their lower 2.5% credible limits above the overall means.

Figure 2.

Mean ranking and 95% Bayesian credible intervals for ranked importance of 45 clinical questions derived from AAO PPPs guidelines

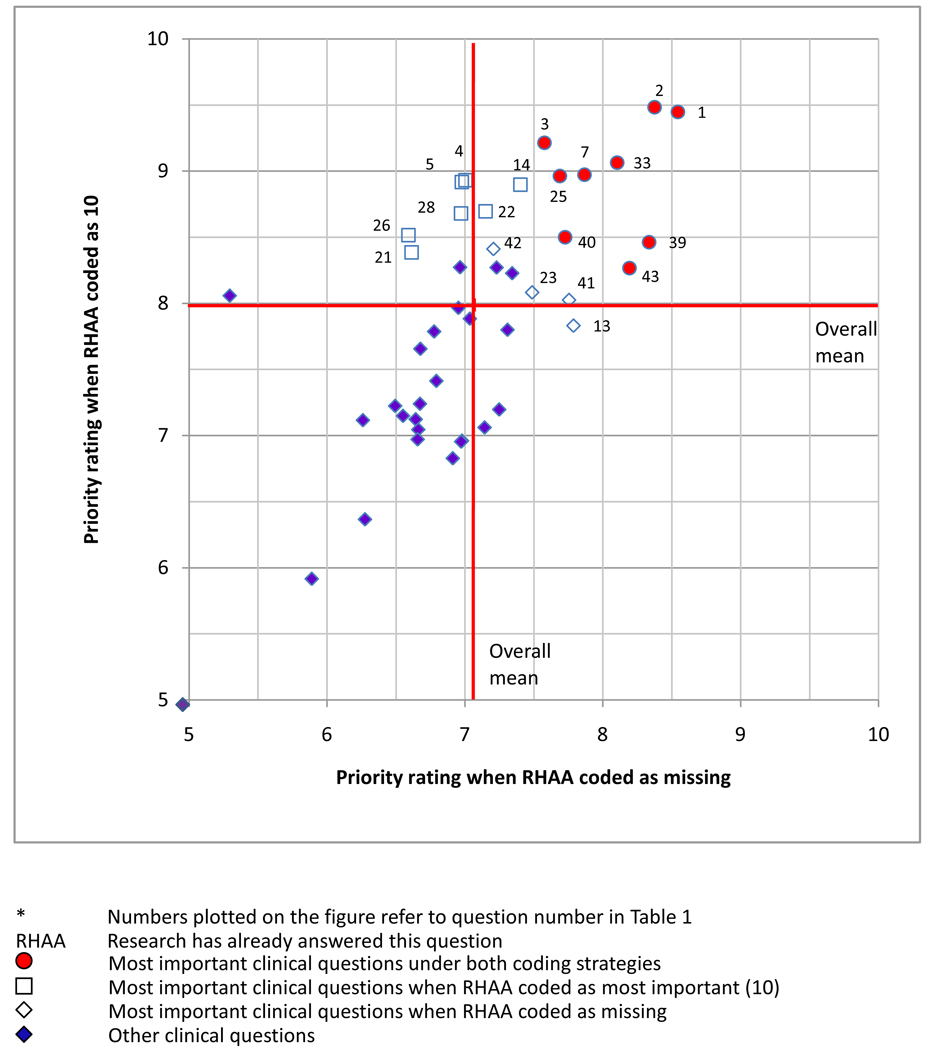

We performed a sensitivity analysis, assigning a rating of 10 to the response option “research has already answered this question” (“no judgment” was coded as missing), and again compared ranks of the 45 clinical questions. Nine questions (1, 2, 3, 7, 25, 33, 39, 40, and 43) were classified as the most important clinical questions under both coding strategies (Figure 3 and Table 4): four on medical intervention, four on filtering surgery, and one on the adjustment of therapy. The proportion of respondents who selected “research has already answered this question” ranged from 7% to 68% in Round One, and 6% to 89% in Round Two for these nine questions. Respondents who changed from assigning a score in Round One to selecting “research has already answered this question” in Round Two were less likely to report expertise in clinical trials and systematic reviews. Questions 13, 23, 41, and 42 were classified as the most important clinical questions only when “research has already answered this question” was coded as missing, and questions 4, 5, 14, 21, 22, 26, and 28 were classified as the most important clinical questions only when “research has already answered this question” was coded as 10 (Figure 3). When the highest ranking questions under both coding assumptions were compared to the AAO importance ratings, 4/9 questions that the AGS members ranked highly in our Delphi survey received a “level A” rating in the AAO PPPs, while 5/9 questions were unrated by AAO (Table 4).
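The overlap between the two coding strategies amounts to a set intersection. The Python sketch below uses the question numbers reported in the text and the “level A” ratings listed in Table 4; the variable names are illustrative.

```python
# "Most important" questions when RHAA was coded as missing (from the text).
top_when_missing = {1, 2, 3, 7, 13, 23, 25, 33, 39, 40, 41, 42, 43}

# "Most important" questions when RHAA was coded as 10: the nine shared
# questions plus the seven that appear only under this coding (from the text).
top_when_ten = {1, 2, 3, 7, 25, 33, 39, 40, 43} | {4, 5, 14, 21, 22, 26, 28}

both = top_when_missing & top_when_ten          # robust to the coding choice
only_missing = top_when_missing - top_when_ten  # sensitive to the coding choice
only_ten = top_when_ten - top_when_missing

# Questions among the nine with a "level A" AAO rating (Table 4).
aao_level_a = {1, 39, 40, 43}
rated_a = both & aao_level_a

print(sorted(both))
print(sorted(only_missing), sorted(only_ten))
print(f"{len(rated_a)}/{len(both)} rated level A")
```

Only the questions in `both` are insensitive to how “research has already answered this question” is handled, which is why they are the ones carried forward to Table 4.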

Figure 3.

Scatter plot of mean rankings of 45 clinical questions* using two coding strategies

Table 4.

Most important clinical questions identified using both coding strategies*

| Category | Question no. | Clinical question | AAO rating | % selected RHAA, Round One | % selected RHAA, Round Two |
|---|---|---|---|---|---|
| Medical interventions | 1 | Is medical therapy an effective initial treatment in lowering IOP in patients with POAG? | A | 63.9 | 85.7 |
| | 2 | Are prostaglandin analog eye-drops effective in lowering IOP in patients with POAG? | -† | 71.0 | 90.5 |
| | 3 | Are beta-adrenergic antagonist eye-drops effective in lowering IOP in patients with POAG? | -† | 68.6 | 88.6 |
| | 7 | Is combination medication effective in lowering IOP in patients with POAG? | -† | 51.5 | 71.4 |
| Filtering surgery | 25 | Is intraoperative mitomycin C effective and safe in improving the success rate of primary and repeat filtering surgery? | -† | 52.1 | 78.1 |
| | 33 | Is use of drainage devices effective and safe in management of POAG among patients with failed filtering surgery/scarred conjunctiva/poor prognosis of filtration surgery? | -† | 49.7 | 73.3 |
| | 39 | Does postoperative care (e.g., topical corticosteroids, bleb leak repair, bleb massage, suture lysis, etc.) result in better outcomes in patients who undergo filtering surgery? | A | 11.2 | 14.3 |
| | 40 | Does the use of topical corticosteroids in the postoperative period improve patient outcomes? | A | 29.0 | 53.3 |
| Adjustment of therapy | 43 | Do the recommended indications (i.e., target IOP not achieved, etc.) for adjusting therapy improve outcomes in patients with POAG? | A | 7.1 | 5.7 |

*First strategy coded “research has already answered this question” as “missing”; second strategy coded “research has already answered this question” as “most important (10).”

†No rating provided by AAO Preferred Practice Patterns guidelines.

AAO = American Academy of Ophthalmology; RHAA = research has already answered this question; IOP = intraocular pressure; POAG = primary open-angle glaucoma.

Discussion

Using questions derived from clinical practice guidelines, and a survey of glaucoma specialists, we generated a ranked list of clinical questions for comparative effectiveness research related to the management of POAG. The questions to which clinicians assigned the highest importance ranking related to the effectiveness of medical interventions, filtering surgery, and adjustment of therapy. Clinical questions on laser trabeculoplasty and cyclodestructive surgery were ranked as less important. The clinical questions that respondents ranked as the most important involved either common clinical scenarios that practitioners face several times daily, such as the decision to use eye drops to lower the intraocular pressure, or less common scenarios, such as interventions after filtration surgery for which practitioners may lack knowledge about their utility.

The top five questions receiving a high importance ranking under both coding schemas were also ranked as “research has already answered this question” by more than 50% of respondents. This may indicate the existence of evidence, for example, from one or two trials that has convinced many clinicians, but not others. It is particularly interesting that the proportion responding that “research has already answered the question” increased after respondents had the opportunity to view the responses by others. We do not know whether those changing their responses were prompted to check the evidence or whether they were simply influenced by their peers.

The clinician survey rankings agreed with the AAO importance rankings in cases where importance rankings had been assigned. We frequently derived more than one clinical question from each AAO PPP recommendation, however. For example, for the PPP statement that medical interventions are generally effective for POAG, we derived seven questions, specifying each type of medical intervention (e.g., beta-blockers) as a unique question. This may explain why over half of the survey questions did not have an AAO importance ranking.

Two main steps are involved in any priority setting effort: identifying important answerable clinical questions, and prioritizing the list of questions using a specific methodology. Our approach for identifying important questions used direct clinician input. Because practice guidelines typically are developed by professional societies aiming to assist healthcare practitioners with decision making,11 the clinical questions derived from them reflect key issues and dilemmas facing clinicians at the time of guideline development.

Our study also used Delphi survey methods, a formal consensus technique, which incorporates individual value judgment into group decision-making. This method is in contrast to nomination-based methods in which, first, topics may be suggested by curious investigators, by payers concerned about cost, or members of the public concerned about contradictory claims of a treatment’s efficacy, and then explicit pre-decided criteria are applied to develop rankings.7, 8, 20–22 In certain cases, these other methods have not demonstrated satisfactory validity.21

Our method has several unique strengths. First, by surveying AGS members, we have queried a large group of stakeholders with highly specialized knowledge. This group of experts allowed us to examine a broad range of interventions for the same condition, thus meeting the urgent needs of practitioners to answer myriad questions within the subspecialty. In contrast, most priority setting methods in use focus on just a few questions in a specialty area. This is especially true for vision research. Since their start in 1997, the Agency for Healthcare Research and Quality (AHRQ) Evidence-based Practice Centers (EPCs), the key U.S. producers of systematic reviews, have released only three eye and vision related evidence reports out of a total of 185 evidence reports completed.23 The recent Institute of Medicine report “Initial National Priorities for Comparative Effectiveness Research,”6 recommending research priorities for $400 million allocated by the American Recovery and Reinvestment Act fund to the Department of Health and Human Services, includes only two topics related to vision health. While dealing with important topics, these reviews and nominated priority topics do not begin to address the important clinical questions of vision subspecialists.

Second, our method promises to lessen the gap between evidence generation and the translation of evidence to care, when the method is used in partnership with guideline developers, research funders, evidence producers, and consumers. We propose the following approach to filling the evidence gaps in a subspecialty area: 1) choose a topic area and work with guidelines producers to derive answerable clinical questions from existing guidelines; 2) survey members of one or more professional associations to assess individual and consensus rankings of the clinical questions; 3) determine evidence needs and research priorities by matching the ranked questions with existing evidence; 4) partner with funders, evidence producers, and evidence synthesizers (e.g., groups within The Cochrane Collaboration such as the Cochrane Eyes and Vision Group) to fill the information gaps.

Our approach can also be used to re-assess research priorities when novel medicine and technology emerge, new evidence gaps develop, and healthcare resources need to be re-allocated to meet these immediate needs.

Given our goal of developing a framework for prioritizing comparative effectiveness research, we faced several challenges. First, when translating the AAO guidelines into answerable clinical questions, we relied on interventions and outcome measures that were stated explicitly in the guidelines. One consequence was that intraocular pressure was over-emphasized as an outcome in the restated clinical questions. In addition, by their nature, practice guidelines may not cover all important clinical questions; the fact that respondents nominated new questions provides evidence regarding this issue.

A second challenge was that some clinicians failed to grasp that our purpose was research prioritization. At both the pilot testing and survey stages of the study, clinicians sometimes responded to the questions as if we were asking about their knowledge of the subject. We found that questions related to the delivery of care (e.g., behavioral interventions, pre- and post- operative care) appeared to be the most difficult in this regard.

We faced a particular challenge in analyzing clinical questions where a meaningful proportion of respondents selected “research has already answered the question,” a response option suggested by a clinician co-investigator. Although we performed sensitivity analyses to test how priority ratings would change under two different assumptions, neither approach provides information about whether research indeed has answered the questions and how existing evidence influenced a respondent’s interpretation of the question. To address these issues would require matching existing systematic reviews and RCTs to the 45 clinical questions. In future applications of our method, it may be useful to separate response options into two parts: 1) “Please rate the importance of having the answer to each of the following questions for providing effective patient care,” and 2) “Do you believe research has already answered each question?” so that responses can be analyzed separately. One could also ask respondents to cite the evidence if they say “research has already answered this question.”

High response variability may be used as an alternative criterion to prioritize clinical questions for additional research. Questions with high response variability (which would result in a wide credible interval) might reflect greater clinical uncertainty, and may be more suitable for additional research. In our study, however, response variability was small for all questions. Regardless, it is critical to search for and synthesize existing evidence for clinical questions identified as priorities by preparing, maintaining and disseminating systematic reviews, a necessary step prior to investment of research funds.24

Although our Delphi survey response rate was comparable to other web-based surveys of medical specialists,25 the opinions and rankings of our respondents may not be representative of those of other American glaucoma specialists since fewer than one third of AGS members responded to the survey. In addition, those who responded to both Round One and Round Two were less likely to report expertise in clinical trials and systematic reviews, and were less likely to identify themselves as self-employed/private practice, than those responding to Round One only, which may have influenced the final rankings.

If providers and patients are to make well-informed decisions about health care, comparative effectiveness research must be prioritized and conducted with all due speed. Prioritization of the generation and synthesis of research evidence to prevent, diagnose, treat, and monitor clinical conditions should reflect the most urgent needs. Those setting priorities report experience but little empirical evidence as to effective methods of prioritization.8, 22 Our study provides evidence supporting the practicality of a systematic method to identify priority questions utilizing stakeholder input.

In conclusion, we tested the feasibility of a framework for prioritizing answerable clinical questions for new comparative effectiveness research by using practice guidelines and a survey of clinicians. Our approach is systematic, transparent, and participatory, and it produces a ranked list of questions in a subspecialty area. We have demonstrated that our theoretical model for priority setting for comparative effectiveness research questions is a pragmatic approach that merits testing in other medical settings.

Acknowledgement

We acknowledge valuable clinical and methodological perspective provided by Drs. Richard Wormald, Donald Minckler, and David S. Friedman for the Cochrane Eyes and Vision Group US Project (CEVG@US) Advisory Board, who helped to verify that our restatement from guidelines was accurate and our research plan was feasible. We thank Dave Shade who developed the survey webpage and database.

Financial Support:

This study was supported by the Cochrane Collaboration Opportunities Fund, and Contract N01-EY2-1003, National Eye Institute, National Institutes of Health, USA.

The sponsor or funding organization had no role in the design or conduct of this research.

Footnotes

Meeting Presentation:

Evidence-based Priority-setting for New Systematic Reviews and Randomized Controlled Trials: a Case Study for Primary Open-angle Glaucoma. Presented at the 30th Society for Clinical Trials Annual Meeting, Atlanta, GA, May 2009.

Evidence-based Priority-setting for New Systematic Reviews: a Case Study for Primary Open-angle Glaucoma. Presented at the XVI Cochrane Colloquium, Freiburg, Germany, October 2008.

Conflict of Interest:

Dr. Jampel is a consultant for Allergan, Glaukos, Ivantis, Endo Optics, and Sinexus, and owns equity in Allergan. The other authors have no financial or conflicting interests to disclose.

Online only material:

This article contains online-only material. The following should appear online-only: Table 1. Statements in the American Academy of Ophthalmology primary open angle glaucoma Preferred Practice Pattern translated into clinical questions.

References

- 1. Sox HC, Greenfield S. Comparative effectiveness research: a report from the Institute of Medicine. Ann Intern Med. 2009;151:203–205. doi: 10.7326/0003-4819-151-3-200908040-00125.

- 2. American Recovery and Reinvestment Act of 2009. [Accessed April 21, 2010];111 USC. Public Law 111-5. 2009 Feb 17;:177. Available at: http://www.gpo.gov/fdsys/pkg/PLAW-111publ5/pdf/PLAW-111publ5.pdf.

- 3. Fisher ES, Wennberg DE, Stukel TA, et al. The implications of regional variations in Medicare spending. Part 1: the content, quality, and accessibility of care. Ann Intern Med. 2003;138:273–287. doi: 10.7326/0003-4819-138-4-200302180-00006.

- 4. Fisher ES, Wennberg DE, Stukel TA, et al. The implications of regional variations in Medicare spending. Part 2: health outcomes and satisfaction with care. Ann Intern Med. 2003;138:288–298. doi: 10.7326/0003-4819-138-4-200302180-00007.

- 5. Guyatt GH, Haynes RB, Jaeschke RZ, et al. Evidence-Based Medicine Working Group. Users' guides to the medical literature: XXV. Evidence-based medicine: principles for applying the users' guides to patient care. JAMA. 2000;284:1290–1296. doi: 10.1001/jama.284.10.1290.

- 6. Iglehart JK. Prioritizing comparative-effectiveness research--IOM recommendations. N Engl J Med. 2009;361:325–328. doi: 10.1056/NEJMp0904133.

- 7. Agency for Healthcare Research and Quality. The Effective Health Care Program: How are research topics chosen? [Accessed October 29, 2009]; Available at: http://effectivehealthcare.ahrq.gov/index.cfm/submit-a-suggestion-for-research/how-are-research-topics-chosen/

- 8. Eden J, editor. Knowing what Works in Health Care: A Roadmap for the Nation. Washington, DC: National Academies Press; 2008. Committee on Reviewing Evidence to Identify Highly Effective Clinical Services, Board on Health Care Services; pp. 57–80.

- 9. Noorani HZ, Husereau DR, Boudreau R, Skidmore B. Priority setting for health technology assessments: a systematic review of current practical approaches. Int J Technol Assess Health Care. 2007;23:310–315. doi: 10.1017/s026646230707050x.

- 10. Cook DJ, Greengold NL, Ellrodt AG, Weingarten SR. The relation between systematic reviews and practice guidelines. Ann Intern Med. 1997;127:210–216. doi: 10.7326/0003-4819-127-3-199708010-00006.

- 11. Woolf SH, Grol R, Hutchinson A, et al. Clinical guidelines: Potential benefits, limitations, and harms of clinical guidelines. BMJ. 1999;318:527–530. doi: 10.1136/bmj.318.7182.527.

- 12. Ferris FL, III, Tielsch JM. Blindness and visual impairment: a public health issue for the future as well as today. Arch Ophthalmol. 2004;122:451–452. doi: 10.1001/archopht.122.4.451.

- 13. Eye Diseases Prevalence Research Group. Prevalence of open-angle glaucoma among adults in the United States. Arch Ophthalmol. 2004;122:532–538. doi: 10.1001/archopht.122.4.532.

- 14. Quigley HA. Number of people with glaucoma worldwide. Br J Ophthalmol. 1996;80:389–393. doi: 10.1136/bjo.80.5.389.

- 15. Quigley HA, Broman AT. The number of people with glaucoma worldwide in 2010 and 2020. Br J Ophthalmol. 2006;90:262–267. doi: 10.1136/bjo.2005.081224.

- 16. Sommer A, Abbott RL, Lum F, Hoskins HD., Jr PPPs--twenty years and counting. Ophthalmology. 2008;115:2125–2126. doi: 10.1016/j.ophtha.2008.09.032.

- 17. Higgins JP, Green S, editors. Cochrane Handbook for Systematic Reviews of Interventions Version 5.0.2. Part 2. General Methods for Cochrane Reviews. Chapter 5. Defining the review question and developing criteria for including studies. Chapter 9. Analysing Data and Undertaking Meta-analyses. [Accessed April 21, 2010];Cochrane Collaboration. 2009 Available at: http://www.cochrane-handbook.org/

- 18. Equator Network. The SPIRIT initiative: Defining Standard protocol items for randomized trials. [Accessed June 30, 2010];Executive Summary. 2010 April; Available at: http://www.equator-network.org/resource-centre/library-of-health-research-reporting/reporting-guidelines-under-development/

- 19. Lunn DJ, Thomas A, Best N, Spiegelhalter D. WinBUGS - a Bayesian modeling framework: concepts, structure, and extensibility. Stat Comput. 2000;10:325–337.

- 20. Agency for Healthcare Research and Quality. Solicitation for nominations for new primary and secondary health topics to be considered for review by the United States Preventive Services Task Force. [Accessed April 21, 2010];FR Doc 06–612. Fed Reg. 2006 January 24;71(15):3849–3850. Available at: http://edocket.access.gpo.gov/2006/06-612.htm.

- 21. Woolf SH, DiGuiseppi CG, Atkins D, Kamerow DB. Developing evidence-based clinical practice guidelines: lessons learned by the US Preventive Services Task Force. Annu Rev Public Health. 1996;17:511–538. doi: 10.1146/annurev.pu.17.050196.002455.

- 22. Oxman AD, Schunemann HJ, Fretheim A. Improving the use of research evidence in guideline development: 2. priority setting. [Accessed April 21, 2010];Health Res Policy Syst [serial online] 2006;4:14. doi: 10.1186/1478-4505-4-14. Available at: http://www.health-policy-systems.com/content/4/1/14.

- 23. Agency for Healthcare Research and Quality. Evidence-based Practice. [Accessed October 29, 2009];Evidence report series by number. Available at: http://www.ahrq.gov/clinic/epc/epcseries.htm.

- 24. Clarke M, Hopewell S, Chalmers I. Reports of clinical trials should begin and end with up-to-date systematic reviews of other relevant evidence: a status report. J R Soc Med. 2007;100:187–190. doi: 10.1258/jrsm.100.4.187.

- 25. Braithwaite D, Emery J, De Lusignan S, Sutton S. Using the Internet to conduct surveys of health professionals: a valid alternative? Fam Pract. 2003;20:545–551. doi: 10.1093/fampra/cmg509.
