Abstract

The physiological basis of human cerebral asymmetry for language remains mysterious. We have used simultaneous physiological and anatomical measurements to investigate the issue. Concentrating on neural oscillatory activity in speech-specific frequency bands and exploring interactions between gestural (motor) and auditory-evoked activity, we find, in the absence of language-related processing, that left auditory, somatosensory, articulatory motor, and inferior parietal cortices show specific, lateralized, speech-related physiological properties. With the addition of ecologically valid audiovisual stimulation, activity in auditory cortex synchronizes with left-dominant input from the motor cortex at frequencies corresponding to syllabic, but not phonemic, speech rhythms. Our results support theories of language lateralization that posit a major role for intrinsic, hardwired perceptuomotor processing in syllabic parsing and are compatible both with the evolutionary view that speech arose from a combination of syllable-sized vocalizations and meaningful hand gestures and with developmental observations suggesting phonemic analysis is a developmentally acquired process.

Keywords: EEG/functional MRI, natural stimulation, resting state, oscillation

Auditory asymmetry (1) and hand preference are traits humans share with other primate and nonprimate species (2–5). Both have been proposed as the functional origin of human cerebral dominance in speech and language (6, 7). The motor theory of language evolution argues that speech evolved from a preexisting manual language (8) involving lateralized hand/mouth gestures. Such asymmetric control of gesture or pharyngeal musculature could have led to left lateralization of speech and language (9). Conversely, if auditory preceded motor asymmetry in evolution, the alignment of vocalization to gestures (10) might have gradually led to left-lateralized motor and executive language functions (11). It remains unknown and controversial which of these scenarios accounts for asymmetry in speech and language processing, so we set out to find empirical evidence in favor of one or the other.

We obtained simultaneous functional magnetic resonance imaging (fMRI) and electroencephalography (EEG) recordings at rest and while subjects watched an ecologically valid stimulus (a movie) to identify where brain activity correlates with electrophysiological oscillations in frequency bands related to the syllabic and phonemic components of speech. We tested for lateralization of these component-associated frequencies. Our experimental approach rested on two assumptions that have received recent experimental support (12–15). The first is that there are two intrinsic, hardwired auditory speech sampling mechanisms working in parallel at rates that are optimal for syllabic and phonemic parsing of the input, namely delta–theta (∼4 Hz) and gamma (∼40 Hz) oscillations, respectively (6, 16). These mechanisms shape neuronal firing elicited by auditory stimulation (17), with fast phonemic gamma modulated by slower syllabic theta oscillations (18, 19), thus aligning neuronal excitability to the most informative parts of speech. The second assumption is that motor areas express natural oscillatory activity that characterizes intrinsic movements of the jaw (4 Hz) and of the tongue (e.g., trill at 35–40 Hz). These frequencies correspond to the two rhythms required to produce syllables and phonemes (see above) (12, 20). Activity in brain systems associated with both speech perception and production should therefore be correlated in a lateralized manner with neuronal oscillations in speech-related frequency bands. We first probed this notion of intrinsic tuning at rest and then tested, with a naturalistic speech stimulus (a spoken movie), whether it predicts lateralized activity in the language network.

Results

Intrinsic Hemispheric Asymmetries in Speech-Related Frequency Components.

Across the entire brain we measured (i) local fluctuations in brain activity, as changes in the blood oxygen level-dependent (BOLD) signal, and (ii) power fluctuations in cortical rhythms, by concurrent EEG, in 16 subjects who alternately “rested” for 10 min (intrinsic correlations) and watched a 10-min movie clip (speech-evoked correlations; refs. 21, 22). The movie, a documentary on an ecological topic, featured two main characters lecturing, interleaved with brief illustrative sequences without speech (Movie S1). We extracted fMRI signals bilaterally from cytoarchitectonically defined regions and correlated their time courses with EEG power fluctuations computed in 1-Hz steps from 1 to 72 Hz. We then quantified the degree of hemispheric asymmetry in EEG/fMRI coupling for 18 pairs of homotopic territories covering the whole language network and the articulatory motor cortex (Materials and Methods). We also included two nonspeech-related control regions, one in the motor cortex (the foot area) and one in the visual cortex (BA18).

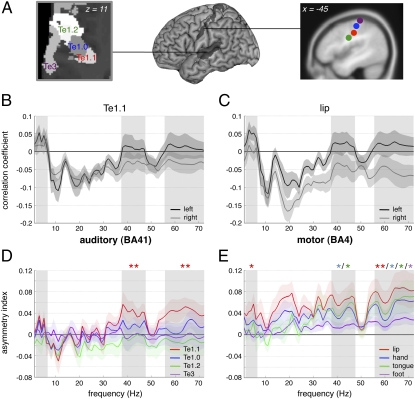

All sampled territories shared some intrinsic correlations (i.e., at rest) between EEG power and BOLD signal fluctuations (SI Discussion 1); these were characterized by positive correlations in the delta–theta band (2–6 Hz; SI Discussion 2) and negative correlations in the alpha and beta bands. Positive correlations indicate that regional synaptic activity increased whenever power in the delta–theta range increased. Conversely, negative correlations indicate regional drops in synaptic activity when alpha and beta power increased. In the gamma band, intrinsic correlation depended on territory, with positive correlations found in auditory and motor cortices (Fig. 1 B and C). Asymmetrical EEG/fMRI correlations were present in the medial part of the primary auditory cortex A1 (Te1.1; Fig. 1 A and D), in the tongue and hand motor cortices in the gamma band, and in the motor lip area in the gamma and delta–theta bands (Fig. 1E). Asymmetry was also detected in the ventral part of the parietal operculum/secondary somatosensory cortex S2, which contains somatosensory/proprioceptive representations of speech sounds (23) that are important for feedback control of speech production, and in BA40, in the vicinity of the Sylvian parietal temporal (SPT) area implicated in sensorimotor transformations of speech (24) (Fig. 2, colored bars). Motor cortical asymmetry was also found in the beta band around 20 Hz (Fig. 1E). Asymmetry was greatest in the articulatory motor cortex and in BA40 (Fig. 2), but within the speech-associated frequencies (delta–theta and gamma bands) it was most pronounced in the auditory cortex. There was no significant intrinsic left dominance in the control regions. Likewise, we found no significant left lateralization in the planum temporale or the ventral prefrontal cortex. This is a critical finding, because these regions have been recognized as archetypal left-lateralized, language-specific areas ever since Wernicke and Broca showed that lesions in them cause major perception and production impairments, respectively (25). The findings indicate that left auditory, somatosensory, inferior parietal, and articulatory motor cortices are intrinsically better tuned to speech-related frequencies than their right homotopic regions. Frequency-specific asymmetries in auditory cortices were replicated at rest with magnetoencephalography (MEG), by computing spectral power over left and right auditory sensors (Fig. S1 and SI Results).

Fig. 1.

Auditory and motor oscillatory profiles at rest. (A) Cytoarchitectonic parcellation of the auditory cortex (Left; axial plane) and functional parcellation of the motor cortex (sagittal plane). (B and C) Correlation coefficients (mean ± SEM) between each EEG frequency band (1–72 Hz) and the left (black) or right (gray) BOLD time course of (B) auditory Te1.1 and (C) the motor lip area. (D and E) Asymmetry indexes (mean ± SEM) for four (D) auditory and (E) motor regions. The index corresponds to the left minus right difference in the correlation between BOLD and EEG fluctuations over 1–72 Hz during rest. Three frequency bands of interest are highlighted in gray: delta–theta 2–6 Hz, gamma 38–47 Hz, and gamma 56–72 Hz (*P < 0.05, **P ≤ 0.01, uncorrected).

Fig. 2.

Asymmetry indexes (mean ± SEM) during rest (colored bars) and movie (white bars) averaged over the whole spectrum (1–72 Hz) for each region of interest. Positive values correspond to left dominance and significant interactions are highlighted with brackets [*P < 0.05, **P ≤ 0.01, ***P ≤ 0.001 at the post hoc (Fisher's LSD) comparison].

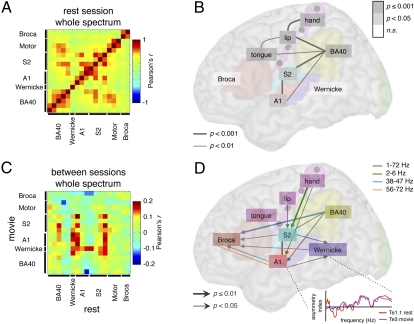

We evaluated whether the regions exhibiting asymmetries of oscillatory activity at rest constitute a functional network (26). We used a cross-correlogram approach to assess the similarity of asymmetry across the sampled frequency spectrum for all sampled territories (Fig. 3 A and B; Materials and Methods). Asymmetry profiles were shared as a function of anatomical proximity, resulting in correlated patterns across A1, S2, and BA40, between BA40 and the motor cortex, and across the tongue, lip, and hand motor regions (SI Discussion 3). These data suggest that left auditory, somatosensory, and motor cortices, together with SPT, constitute a core lateralized network at rest that does not include Wernicke's and Broca's areas. The intrinsic presence of speech-matching oscillations does not, however, by itself demonstrate any computational advantage for these regions, such as a greater ability than their right homologs to phase-lock with speech. Either this property is irrelevant for the neural computations in these regions (e.g., strictly time-independent computations) or, more likely, speech-matching oscillations are asymmetrically elaborated during language-associated stimulation.

Fig. 3.

Shared asymmetry profiles (A and B) at rest and (C and D) between rest and movie. (A and C) Pearson's cross-correlation (r) matrices of the normalized asymmetry indexes of 18 regions of interest computed over the whole spectrum (1–72 Hz) (A) during the rest condition and (C) between rest (horizontal axis) and movie (vertical axis) conditions (see the schematic in the Lower Right part of D). (B and D) Representation of the significant correlations (B) during the rest condition over the whole spectrum (P values corrected for multiple comparisons) and (D) driven by rest over movie conditions (uncorrected P values). Note some bidirectional influences (plain lines).

Language-Network Lateralization During Audiovisual Linguistic Stimulation.

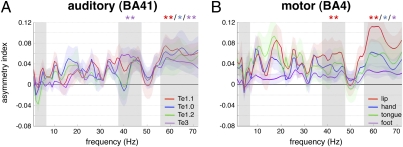

We therefore addressed the hypothesis that the left-dominant activity in Wernicke's and Broca's areas seen during language processing results from a propagation of lateralized output from the core network. To test this hypothesis, we carried out the same analyses as above on data acquired while subjects watched the spoken movie. As expected, given that watching a movie should engage the cortical language network ecologically, the BOLD signal increased from “rest” to “movie” in a set of regions including bilateral auditory and visual areas, the bilateral precentral sulci, and the left inferior frontal gyrus (Fig. S2 and SI Materials and Methods; ref. 27); likewise, the strength of EEG/fMRI coupling over the whole frequency spectrum increased in most sampled territories (Fig. S3). Augmented coupling was often, but not always, accompanied by an increase in asymmetry (Fig. S4). All core auditory territories were left dominant and tuned to the gamma frequency band (Fig. 4A). In Wernicke's area, left dominance became significant in the two gamma bands that were asymmetrically tuned in auditory region Te1.1 at rest (Fig. 4A, shaded columns; SI Discussion 4). Asymmetry also increased in Broca's region and BA40, but over a broader frequency range that included beta frequencies (Fig. 2, white bars; Fig. S4). No increase of asymmetry was found in the motor cortex (Figs. 2 and 4B). We conclude that asymmetry in the articulatory and hand motor cortex is intrinsically strong and not prone to modulation by audiovisual language processing. This asymmetry may be due to left-dominant control of the pharyngeal muscles (9). EEG/fMRI coupling remained symmetric in the visual cortex (Fig. 2 and Fig. S4D), indicating that the induction of asymmetry in classical language areas is not due to stimulus-driven cortical entrainment but to a specific augmentation of a constitutive asymmetry that, in other regions, is already manifest at rest.

Fig. 4.

Asymmetry indexes (1–72 Hz; mean ± SEM) during movie in (A) auditory and (B) motor regions. Positive values correspond to left dominance (*P < 0.05, **P ≤ 0.01, uncorrected). Frequency bands of interest are highlighted in gray (Fig. 1).

Impact of Intrinsic on Stimulus-Driven Asymmetry.

To test the hypothesis that intrinsic lateralization of the core system determines lateralization of the language network during language stimulation, we computed cross-correlograms between the spectral asymmetry profiles of all territories at rest and during movie watching, thereby probing which intrinsically lateralized, frequency-coupled regions predict the asymmetry profile of the language network during the processing of audiovisual speech. We found four regions in which resting asymmetry predicts language-driven asymmetry (Fig. 3C, vertical red stripes): A1, S2, BA40, and Wernicke's area. Whereas the first three were asymmetric at rest, Wernicke's area was not (Fig. 1D). This acquired asymmetry pattern reflects both the integration of input from auditory and somatosensory cortices during movie watching (horizontal red stripe in Fig. 3C) and the propagation of Wernicke's profile to other regions (vertical red stripe; nonsignificant trends over A1 and BA40, both P values <0.1). These data are in line with the notion that the planum temporale is an important hub (28) for relaying speech-induced neural activity to language areas.

Leftward dominance in the language network thus appears to be rooted in left-hemisphere regions tuned to speech rhythms. However, the motor cortex, although strongly asymmetric at rest, failed to influence other regions. In addition, the asymmetry induced in Broca's area by watching the movie was not predicted by the resting asymmetry of any other region. Because these results were obtained from analyses over the complete frequency range, we then asked whether the regions interacted with other components of the language system in speech-specific frequency bands, e.g., delta–theta (2–6 Hz) and gamma (Fig. 3D and Fig. S5; Materials and Methods). We found that the asymmetry profile of Broca's area was driven specifically by several lower gamma band (38–47 Hz) inputs from the auditory and somatosensory cortices, Wernicke's region, and left BA40 (Fig. 3D, blue arrows). This pattern emphasizes the integrative function of Broca's area (29) (see also the complex asymmetry profile of Broca's region in Fig. S4) and implies that its neural computations occur at specific timescales that include at least low gamma frequencies. It is not yet clear how to relate this timescale to the function of Broca's region in speech and language subroutines, but if Broca's region is involved in a number of them, as has been suggested empirically (30), local processing reflected in gamma band activity is neurophysiologically plausible and unsurprising.

These additional analyses also showed that the intrinsic asymmetry (i.e., at rest) in the delta–theta frequency band found in hand motor cortex predicted that found in auditory and somatosensory cortices during movie viewing (Fig. 3D, green arrows). This frequency band characterizes the dominant-hand movements that accompany periodic jaw and lip movements when syllables are articulated. Such gestures can be meaningful in their own right and are functionally linked to oral communication (31). Our data suggest that input from motor hand and lip areas, directly or via the somatosensory cortex, may contribute to speech parsing at syllabic rates by the auditory cortex. This interpretation is supported by recent findings showing a facilitation of speech perception when the motor lip area is stimulated by transcranial magnetic stimulation (TMS) (32), and by electrophysiological data in monkeys showing that input from the somatosensory cortex shapes the response of the auditory cortex to sounds by phase-resetting ongoing oscillations (33). That intrinsic left dominance in motor areas at syllabic rates predicts auditory and somatosensory left dominance during the movie illustrates the idea that sensing is an active process constrained by motor routines (34). This does not imply that the motor cortex is causally indispensable to speech perception, but rather that it may, much as visual input does, provide cross-modal synchronization cues, carried by delta–theta phase modulation, that are useful to speech processing (35, 36). Whether delta (∼1–3 Hz) and theta (∼5–7 Hz) rhythms reflect separate (19) or common (36) computational machinery is an important open issue. We did not detect propagation of asymmetry from the motor tongue area to auditory cortex in the range of frequencies corresponding to tongue movements and phonemic timescales (low gamma frequencies). This negative finding is consistent with the view that the motor cortex does not account for the tuning of left auditory cortex to phoneme-associated frequencies, and instead suggests mutual tuning of auditory and motor cortices, perhaps during adaptive motor and language development.

Discussion

Our simultaneous EEG and BOLD fMRI data suggest that leftward asymmetry in language processing originates in auditory, somatosensory (37), articulatory motor, and inferior parietal cortices. All four regions show a stronger intrinsic expression, in the left than in the right hemisphere, of specific oscillations that can serve speech parsing (38). Delta–theta and gamma cortical rhythms can be found in many brain regions and are involved in a broad range of cognitive operations (39, 40). However, our data show that, within the studied regions, asymmetric expression of delta–theta and gamma rhythms is confined to the language system, i.e., is absent in the (motor and visual) control regions, suggesting that these rhythms play a specific role in speech processing. It is possible that, throughout evolution, the human brain has developed a communication signal adapted to the cortical oscillatory machinery present in sensory and motor cortices and has exploited incidental cortical asymmetry (4) to implement parallel handling of linguistic and nonlinguistic material (1). That this oscillatory machinery has been locally reinforced by speech processing is also supported by recent data showing that the topography of gamma and delta–theta rhythms overlaps more with neural speech perception and production systems than with other cognitive systems (12).

Our data show that the asymmetric expression of speech-related rhythms found in the articulatory motor areas extends to the motor hand area and interacts with auditory left dominance at the syllabic, but not the phonemic, timescale. Inherent auditory–motor tuning at the syllabic rate and acquired tuning at the phonemic rate are also compatible with two recognized stages of language development in infants: an early stage of syllable production that does not depend on hearing (it is also observed in deaf babies) (41), followed by a later stage in which infants match their phonemic production to what they hear in caregiver speech (42). This finding is hence compatible with the observation that syllabic “packaging” is universal and perhaps innately specified, whereas a phonemic repertoire is acquired over the course of development in specific linguistic contexts (43). From phylo- and ontogenetic perspectives, our findings support an evolutionary scheme in which spoken language arose from the combination of syllable-like vocalizations with hand gestures (10). Whereas the auditory–motor interactions underlying this scenario appear hardwired in the human brain, those underlying the evolution of phonemic complexity in speech do not. Phonemic complexity probably results from acquired tuning of articulatory performance to the fast integration properties of left auditory cortex during maturation. An important further step will be to assess how this later stage relates to the recent evolution of FOXP2 (44), which influences fine articulatory processes and thus may have orchestrated the capacity to fine-tune motor–auditory integration.

Finally, we show that the oscillatory machinery for speech parsing is not intrinsically asymmetric in the posterior superior temporal cortex, although Wernicke's area typically responds more specifically to speech than its right homolog (28). Our data suggest that Wernicke's area inherits speech-specific integration properties from nearby left auditory and orofacial somatosensory cortices when speech is heard, which is a key argument for a scenario in which asymmetry originates in mutual tuning across primary cortices. Likewise, Broca's area, which is critical for speech production, expresses stronger gamma oscillations than its right homolog during automatic speech processing, under the influence of “posterior” regions, i.e., Wernicke's area, A1, S2, and BA40. These data support previous studies showing that Broca's area is not asymmetrically involved in nonlinguistic tasks and hence not specific to language (45), even though recent empirical work suggests that it carries out essential computations in hierarchical language processing (30). As functional selection of different subroutines (within one structurally connected system) is one of the possible functions of neuronal gamma-band synchronization (39), our results could reflect partial control, by posterior language regions, of the hierarchical selection of language-specific subroutines in Broca's area.

Materials and Methods

Subjects, Methods, and Data Acquisition.

Sixteen right-handed, healthy male volunteers (age range, 19–29 y; all gave written informed consent) with normal or corrected-to-normal vision, normal audition, and no history of neurological or psychiatric illness underwent simultaneous EEG and BOLD fMRI to explore, in specific regions, temporal correlations between the amount of synaptic activity driving the hemodynamic BOLD signal and power in different EEG frequency bands (46, 47) (see also SI Discussion 1). Only male subjects were included, to minimize between-subject variance of no interest (48), because possible sex differences were not the focus of this study. Subjects wore ear defenders and earplugs to attenuate scanner noise and were asked to stay awake and to avoid moving.

Subjects were asked either to rest with eyes closed or to watch a movie (Movie S1), for 10 min each. Data were acquired in three sessions: session 1, rest; sessions 2 and 3, movie followed by rest.

The three sessions yielded 1,560 echoplanar fMRI image volumes (Tim-Trio; Siemens; 40 transverse slices; voxel size = 3 × 3 × 3 mm³; repetition time = 2,000 ms; echo time = 50 ms; field of view = 192 mm) and continuous EEG data recorded at 5 kHz from 62 scalp sites (Easycap electrode cap) using MR-compatible amplifiers (BrainAmp MR and Brain Vision Recorder software; Brainproducts). Two additional electrodes, for the electrooculogram (EOG) and the electrocardiogram (ECG), were placed under the right eye and on the collarbone, respectively. Impedances were kept under 10 kΩ, and the EEG was time-locked to the scanner clock, which further reduced artifacts and resulted in higher EEG quality in the gamma band (49). Additionally, an eye-tracking system allowed online monitoring of eye movements during the movie, ensuring that all subjects watched attentively. A 7-min anatomical T1-weighted magnetization-prepared rapid acquisition gradient echo sequence (176 slices; field of view = 256 mm; voxel size = 1 × 1 × 1 mm) was acquired at the end of scanning.

fMRI and EEG Preprocessing.

We used statistical parametric mapping (SPM5; Wellcome Department of Imaging Neuroscience, UK; www.fil.ion.ucl.ac.uk) for standard fMRI preprocessing, which first involved realigning each subject's functional images and coregistering them with the structural images. Structural images were segmented and spatially normalized using the unified segmentation/normalization approach implemented in SPM5; segmentation also made territory delineation easier. Scans from different subjects were spatially normalized on the basis of their corresponding normalized structural images and finally smoothed with an isotropic Gaussian kernel of 10-mm full width at half maximum (FWHM) to compensate for residual variability after spatial normalization.
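For readers reimplementing this step outside SPM, a kernel specified by its full width at half maximum (FWHM) corresponds to a Gaussian standard deviation σ = FWHM / (2√(2 ln 2)) ≈ FWHM / 2.355, so the 10-mm kernel mentioned above has σ ≈ 4.25 mm. The Python sketch below illustrates this conversion with `scipy.ndimage.gaussian_filter`; it is not SPM's own smoothing routine, and the 3-mm voxel size of the normalized images is an assumption made only for the example.

```python
import math

import numpy as np
from scipy.ndimage import gaussian_filter


def fwhm_to_sigma(fwhm_mm: float) -> float:
    """Standard deviation of a Gaussian with the given full width at half maximum."""
    return fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def smooth_volume(volume: np.ndarray, fwhm_mm: float,
                  voxel_mm=(3.0, 3.0, 3.0)) -> np.ndarray:
    """Isotropic Gaussian smoothing of a 3-D volume.

    The kernel width is given in mm and converted to voxels per axis; the
    voxel size is an assumption for this example, not a value from the text.
    """
    sigma_vox = [fwhm_to_sigma(fwhm_mm) / v for v in voxel_mm]
    return gaussian_filter(volume, sigma=sigma_vox, mode="constant")


if __name__ == "__main__":
    print(f"sigma for a 10-mm FWHM kernel: {fwhm_to_sigma(10.0):.3f} mm")
    # A single bright voxel spreads out but keeps its total intensity
    point = np.zeros((21, 21, 21))
    point[10, 10, 10] = 1.0
    smoothed = smooth_volume(point, fwhm_mm=10.0)
    print(f"sum after smoothing: {smoothed.sum():.3f}")
```

Because the kernel is normalized, smoothing redistributes signal among neighboring voxels without changing its total, which is why it reduces residual anatomical mismatch across subjects at the cost of spatial resolution.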

EEGlab v.7 (sccn.ucsd.edu/eeglab) and the FMRIB plug-in (users.fmrib.ox.ac.uk/∼rami/fmribplugin) were used to subtract gradient and pulse artifacts from the EEG data. In two subjects, one of the three rest sessions was excluded because of poor EEG quality, and another subject was excluded completely. The EEG data were downsampled to 250 Hz and low-pass filtered at 75 Hz.
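The downsampling from 5 kHz to 250 Hz followed by low-pass filtering at 75 Hz can be sketched in Python with SciPy. This is an illustration under stated assumptions, not the EEGlab pipeline: artifact subtraction is assumed to be done already, the anti-aliasing filter built into `resample_poly` and a zero-phase Butterworth low-pass stand in for whatever filters were actually applied, and the fourth-order filter is our choice rather than the authors'.

```python
import numpy as np
from scipy.signal import butter, resample_poly, sosfiltfilt

FS_RAW = 5000     # Hz, EEG acquisition rate given in the text
FS_OUT = 250      # Hz, target rate given in the text
F_CUTOFF = 75.0   # Hz, low-pass cutoff given in the text


def downsample_and_lowpass(eeg: np.ndarray, order: int = 4) -> np.ndarray:
    """Downsample artifact-corrected EEG, then low-pass filter it.

    eeg: array of shape (n_channels, n_samples) sampled at FS_RAW.
    order: Butterworth order (an assumption; the text gives none).
    """
    factor = FS_RAW // FS_OUT  # 20
    # resample_poly applies an anti-aliasing FIR filter before decimating
    eeg_ds = resample_poly(eeg, up=1, down=factor, axis=-1)
    # zero-phase (forward-backward) Butterworth low-pass at 75 Hz
    sos = butter(order, F_CUTOFF, btype="low", fs=FS_OUT, output="sos")
    return sosfiltfilt(sos, eeg_ds, axis=-1)


if __name__ == "__main__":
    t = np.arange(20000) / FS_RAW   # 4 s of samples at 5 kHz
    # a 10-Hz component that should survive and a 100-Hz one that should not
    x = np.sin(2 * np.pi * 10 * t) + np.sin(2 * np.pi * 100 * t)
    y = downsample_and_lowpass(x[np.newaxis, :])
    print(y.shape)  # (1, 1000): 4 s at 250 Hz
```

Forward-backward filtering is used here so that the filter introduces no phase shift, which matters when EEG power is later aligned in time with the BOLD signal.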

Cortical Territory Delineation.

We used the SPM Anatomy Toolbox v.1.5 to delineate, in each hemisphere, 16 cortical territories on the basis of probabilistic cytoarchitectonic maps. We sampled them within the language network, including the primary auditory cortex A1 (BA41: territories Te1.0, Te1.1, and Te1.2; Fig. 1A), the planum temporale (Wernicke’s region: Te3), the ventral prefrontal cortex (Broca’s region: BA44 and BA45), the parietal operculum/secondary somatosensory cortex S2 (OP1 to OP4), and the rostral inferior parietal cortex BA40 (PFop, PFt, PF, PFm, and PFcm). We included the secondary visual cortex (BA18) as a control region.

As functional areas corresponding to articulatory and nonarticulatory primary motor regions are all located within a single cytoarchitectonic territory (BA4p), we used spatial coordinates from functional MRI studies of tongue, hand, foot (50), and lip (51) movements. Using the MarsBaR v.0.41 toolbox, we created, for each left and right area, a sphere with a 5-mm radius centered on the literature-defined coordinates.

These territories and spheres were used as masks to extract, for each subject, their associated BOLD time courses (averaged across all respective voxels) over the entire scanning period, using MarsBaR.
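MarsBaR is a MATLAB toolbox; for readers working in Python, the sphere construction and voxel averaging described in the last two paragraphs can be sketched with NumPy alone. The sketch assumes a NIfTI-style 4 × 4 voxel-to-millimeter affine and counts a voxel as inside the sphere when its center lies within 5 mm of the target coordinate, which may differ from MarsBaR's exact inclusion rule. The function names and the center used in the example are hypothetical, not the literature coordinates.

```python
import numpy as np


def sphere_mask(shape, affine, center_mm, radius_mm=5.0):
    """Boolean mask of voxels whose centers lie within radius_mm of center_mm.

    shape: 3-D grid shape; affine: 4 x 4 voxel-to-world (mm) matrix.
    """
    ijk = np.indices(shape).reshape(3, -1)                 # voxel indices, (3, n_vox)
    ijk1 = np.vstack([ijk, np.ones((1, ijk.shape[1]))])    # homogeneous coordinates
    xyz = (affine @ ijk1)[:3]                              # world coordinates in mm
    center = np.asarray(center_mm, dtype=float)[:, None]
    dist = np.linalg.norm(xyz - center, axis=0)
    return (dist <= radius_mm).reshape(shape)


def roi_timecourse(bold, mask):
    """Mean BOLD signal over masked voxels; bold has shape (x, y, z, n_scans)."""
    return bold[mask].mean(axis=0)


if __name__ == "__main__":
    # hypothetical 3-mm grid whose voxel (10, 10, 10) sits at world (0, 0, 0)
    affine = np.diag([3.0, 3.0, 3.0, 1.0])
    affine[:3, 3] = -30.0
    mask = sphere_mask((21, 21, 21), affine, center_mm=(0.0, 0.0, 0.0))
    print(int(mask.sum()))   # 19 voxel centers fall within 5 mm
```

On a 3-mm grid a 5-mm sphere contains only 19 voxels, which illustrates why such small motor regions of interest yield the "rather small but reproducible" correlation values noted below.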

Time–Frequency Analysis.

A time–frequency wavelet transform (in-house Fast_tf v.4.5 software) was applied to the frontocentral electrode (equidistant from both hemispheres), referenced to the mean of the two occipital electrodes (i.e., the most distant positions), using a family of complex Morlet wavelets; this yielded an estimate of oscillatory power at each time sample from 1 to 72 Hz in steps of 1 Hz. Because the time–frequency resolution of the wavelets is frequency dependent, we applied different wavelet factors for low and high frequencies: m = 10 for 1–20 Hz and m = 30 for 21–72 Hz. The power time courses were then downsampled to 8 Hz (sliding average of 33%), mean-centered for each frequency band, convolved with the hemodynamic response function, and further downsampled to match the fMRI repetition time.
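The chain of operations in this paragraph (Morlet power from 1 to 72 Hz, resampling to 8 Hz, mean-centering, convolution with a hemodynamic response function, and resampling to the 2-s repetition time) can be sketched as follows. Fast_tf itself is not reproduced. The sketch assumes the common convention in which the wavelet factor is m = f/σf with σt = 1/(2πσf), an SPM-like double-gamma HRF, SciPy's polyphase resampling in place of the 33% sliding average, and one sample kept per TR; the input `x` is assumed to be the frontocentral channel already re-referenced to the mean of the two occipital electrodes. All function names are hypothetical.

```python
from math import gcd

import numpy as np
from scipy.signal import fftconvolve, resample_poly
from scipy.stats import gamma


def morlet_power(x, fs, freqs, m):
    """Power of x convolved with complex Morlet wavelets, shape (len(freqs), len(x)).

    m is the wavelet factor f / sigma_f, and sigma_t = 1 / (2 * pi * sigma_f).
    """
    x = np.asarray(x, dtype=float)
    power = np.empty((len(freqs), x.size))
    for row, f in enumerate(freqs):
        sigma_t = m / (2.0 * np.pi * f)
        t = np.arange(-3.5 * sigma_t, 3.5 * sigma_t, 1.0 / fs)
        # Gaussian envelope times complex carrier; the amplitude factor follows the
        # continuous unit-energy convention and does not affect correlations
        w = ((sigma_t * np.sqrt(np.pi)) ** -0.5
             * np.exp(-t ** 2 / (2.0 * sigma_t ** 2))
             * np.exp(2j * np.pi * f * t))
        power[row] = np.abs(fftconvolve(x, w, mode="same")) ** 2
    return power


def canonical_hrf(dt, duration=32.0):
    """SPM-like double-gamma HRF (peak near 5 s, undershoot near 15 s), unit sum."""
    t = np.arange(0.0, duration, dt)
    h = gamma.pdf(t, 6) - gamma.pdf(t, 16) / 6.0
    return h / h.sum()


def eeg_power_regressors(x, fs=250, step_hz=8, tr=2.0):
    """1-72 Hz power time courses turned into BOLD-like regressors, one row per Hz."""
    freqs = np.arange(1, 73)
    power = np.vstack([
        morlet_power(x, fs, freqs[freqs <= 20], m=10),   # 1-20 Hz
        morlet_power(x, fs, freqs[freqs > 20], m=30),    # 21-72 Hz
    ])
    # fs -> step_hz (250 -> 8 Hz is the ratio 4/125); replaces the sliding average
    g = gcd(step_hz, fs)
    power = resample_poly(power, up=step_hz // g, down=fs // g, axis=1)
    power -= power.mean(axis=1, keepdims=True)           # center each frequency
    h = canonical_hrf(1.0 / step_hz)
    n = power.shape[1]
    conv = np.array([np.convolve(row, h)[:n] for row in power])  # causal convolution
    return conv[:, ::int(round(tr * step_hz))]           # one sample per TR


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal(60 * 250)                    # 60 s of noise at 250 Hz
    print(eeg_power_regressors(x).shape)                 # (72, 30)
```

The larger wavelet factor above 20 Hz narrows each wavelet's frequency band (σf = f/m), keeping neighboring 1-Hz gamma bins distinguishable at the cost of temporal precision, which the subsequent HRF convolution smooths out anyway.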

Territory-Based Correlation Analysis with the EEG Power Spectrum.

For each sampled area (20 territories × 2 hemispheres), the BOLD time course was correlated with each EEG power time course across the 1–72 Hz spectrum, after concatenation of the three rest sessions or the two movie sessions. Head-motion parameters were included in each model as covariates of no interest (using partial correlations). The resulting correlation values were Fisher Z-transformed, allowing standard statistics on a near-Gaussian population to be performed. Of note, correlations were computed between the global EEG time course and the BOLD time course of small anatomofunctional areas, resulting in rather small but reproducible values. We interpret a stronger correlation as a stronger coupling between oscillatory activity and BOLD response, i.e., a stronger contribution of the oscillatory activity to regional synaptic activity.
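A minimal NumPy sketch of this territory-wise coupling measure: head-motion regressors are removed from both signals by least squares (one standard way to compute a partial correlation), the residuals are correlated, and the result is Fisher z-transformed with `arctanh`. The function names and the use of six motion parameters are assumptions of the sketch; inputs are taken to be already concatenated across sessions and sampled once per TR.

```python
import numpy as np


def partial_corr(x, y, covariates):
    """Pearson correlation of x and y after regressing both on covariates.

    covariates: (n_samples, n_covariates); an intercept column is added here.
    """
    design = np.column_stack([np.ones(len(x)), covariates])
    beta_x, *_ = np.linalg.lstsq(design, x, rcond=None)
    beta_y, *_ = np.linalg.lstsq(design, y, rcond=None)
    res_x = x - design @ beta_x
    res_y = y - design @ beta_y
    return float(np.corrcoef(res_x, res_y)[0, 1])


def bold_eeg_coupling(bold, eeg_regressors, motion):
    """Fisher z of the partial correlation between one BOLD time course and each
    EEG power regressor (rows of eeg_regressors), controlling for head motion."""
    r = np.array([partial_corr(bold, reg, motion) for reg in eeg_regressors])
    return np.arctanh(r)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_scans = 300
    motion = rng.standard_normal((n_scans, 6))   # six motion parameters (assumed)
    eeg = rng.standard_normal((72, n_scans))     # one regressor per 1-Hz bin
    # hypothetical BOLD signal: driven by the 6-Hz regressor plus a motion confound
    bold = eeg[5] + 3.0 * motion[:, 0] + 0.5 * rng.standard_normal(n_scans)
    z = bold_eeg_coupling(bold, eeg, motion)
    print(int(np.argmax(z)) + 1, "Hz")
```

Without the motion covariates, the large motion confound in this toy example would dilute the correlation with the 6-Hz regressor; residualizing both signals first removes that shared variance before the coupling is measured.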

Statistical Analyses.

We assessed hemispheric differences by computing the difference between the correlation coefficients of left and right homotopic areas for each of the 72 frequency bands, 20 territories, 2 conditions (rest or movie), and 15 subjects. This difference is referred to as the “asymmetry index.” Preliminary observations showed that the different territories of S2 (four) and of BA40 (five) had similar asymmetry profiles. We therefore studied in detail only one territory in each of these two regions, chosen because it expressed the most representative asymmetry profile of its group and belonged to the functionally relevant language network. Accordingly, we selected OP4, which contains somatosensory/proprioceptive representations of speech sounds (23), and PFt, which is involved in linguistic sensorimotor transformation (24).
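With the coupling values stored in one array per hemisphere, the asymmetry index is an elementwise left-minus-right difference, and averaging it over all 72 frequencies gives the whole-spectrum summary used in the ANOVAs below. The array layout (subjects × territories × conditions × frequencies), the use of the Fisher z values described above, and the function names are assumptions of this sketch.

```python
import numpy as np

FREQS = np.arange(1, 73)  # 1-72 Hz in 1-Hz steps


def asymmetry_index(z_left, z_right):
    """Left-minus-right coupling; positive values mean left dominance.

    Both inputs share a shape such as (subjects, territories, conditions, frequencies).
    """
    return np.asarray(z_left, dtype=float) - np.asarray(z_right, dtype=float)


def whole_spectrum_mean(ai):
    """Average asymmetry index over 1-72 Hz (the last axis)."""
    return ai.mean(axis=-1)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    shape = (15, 20, 2, FREQS.size)      # subjects, territories, conditions, freqs
    z_right = rng.normal(0.0, 0.05, shape)
    z_left = z_right.copy()
    gamma_bins = (FREQS >= 38) & (FREQS <= 47)
    z_left[:, 0, 0, gamma_bins] += 0.04  # hypothetical left excess, territory 0 at rest
    ai = asymmetry_index(z_left, z_right)
    print(whole_spectrum_mean(ai)[:, 0, 0].mean())
```

Note how a band-limited asymmetry is diluted in the whole-spectrum mean (here by a factor of 10/72), which is why band-specific tests are also needed.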

One-sample t tests were performed for each of the 13 remaining territories within the delta–theta (2–6 Hz), low gamma (38–47 Hz), and high gamma (56–72 Hz) bands. These bands were chosen because they correspond to the typical pattern of asymmetry (with positive left-hemispheric correlation coefficients) observed in Te1.1 (Fig. 1B). Our statistical approach was therefore a mass univariate one, as the different frequency bands of interest corresponded to different Gaussian filters and the 13 territories corresponded to nonoverlapping voxels. Uncorrected P values <0.05 were considered significant and are reported in Figs. 1 and 4 and Fig. S4.
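The band-wise test can be sketched with SciPy's `ttest_1samp`. One assumption deserves flagging: the text does not say whether the asymmetry index was averaged within each band before testing, so this sketch averages first and then tests the band mean against zero across subjects, using the uncorrected 0.05 threshold stated above. The function name is hypothetical.

```python
import numpy as np
from scipy.stats import ttest_1samp

FREQS = np.arange(1, 73)  # 1-72 Hz in 1-Hz steps
BANDS = {
    "delta-theta": (2, 6),
    "low gamma": (38, 47),
    "high gamma": (56, 72),
}


def band_tests(ai, alpha=0.05):
    """One-sample t tests of the band-averaged asymmetry index against 0.

    ai: (n_subjects, n_freqs) asymmetry indexes for one territory and condition.
    Returns {band: (t, p, p < alpha)}; p values are two-sided and uncorrected.
    """
    results = {}
    for name, (lo, hi) in BANDS.items():
        band_mean = ai[:, (FREQS >= lo) & (FREQS <= hi)].mean(axis=1)
        t, p = ttest_1samp(band_mean, 0.0)
        results[name] = (float(t), float(p), bool(p < alpha))
    return results


if __name__ == "__main__":
    rng = np.random.default_rng(2)
    ai = rng.normal(0.0, 0.02, (15, FREQS.size))
    ai[:, (FREQS >= 38) & (FREQS <= 47)] += 0.03   # hypothetical low-gamma left excess
    for band, (t, p, sig) in band_tests(ai).items():
        print(f"{band:12s} t = {t:6.2f}  p = {p:.4f}  significant: {sig}")
```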

To obtain a single signature of the asymmetry profile of each of the 13 territories in each of the two conditions, we computed the mean asymmetry index over the whole spectrum (1–72 Hz). Significant hemispheric asymmetries were tested on the mean correlation coefficients by repeated-measures ANOVA (factors: territory, 13 levels; condition, 2 levels; hemisphere, 2 levels), which showed significant effects of interest [hemisphere: F(1,15) = 6.41, P = 0.02; territory × condition × hemisphere: F(12,180) = 2.22, P = 0.01], allowing post hoc t tests to be performed. Significant differences between conditions were tested on the mean asymmetry indexes by repeated-measures ANOVA (factors: territory, 13 levels; condition, 2 levels), which showed significant effects of interest [condition: F(1,15) = 5.46, P = 0.03; territory × condition: F(12,180) = 2.22, P = 0.01], allowing post hoc t tests to be computed. Post hoc comparisons (Fisher's least significant difference, LSD) with P < 0.05 were considered significant. These values are reported in Figs. 2 and 3 B and D. In Fig. 3, we pooled the territories according to their functional relevance (e.g., A1, S2, Broca) and report the value of the most strongly asymmetric territory.

Finally, to confirm that the different territories of S2 or BA40 had similar asymmetry profiles, we performed two region-specific repeated-measures ANOVAs on the global asymmetry indexes (factors: territory, 4 or 5 levels; condition, 2 levels) and found no significant main effects in either case (all F values <2.31; all P values >0.15).

Correlation Analysis Between the Asymmetry Indexes of Different Territories.

We examined the degree of similarity in asymmetry profiles across all sampled territories (SI Discussion 3). As post hoc comparisons (Fig. 2) showed no significant global asymmetry during rest or movie in the two control territories (motor foot and visual BA18), we excluded them from subsequent analyses. For each of the 15 subjects, 18 territories, and 2 conditions, we normalized the asymmetry profile values, using either the whole spectrum or the 2- to 6-Hz, 38- to 47-Hz, or 56- to 72-Hz bands. Pearson's cross-correlations were computed for each subject across the 36 vectors (18 territories × 2 conditions), and the subjects' cross-correlation matrices were then averaged. Fig. 3A and Fig. S5A show the Pearson's cross-correlation matrices computed between the whole-spectrum asymmetry profiles of the 18 territories during rest and movie, respectively. Fig. 3C shows the Pearson's cross-correlation matrix of the 18 territories (whole-spectrum asymmetry) computed between rest and movie conditions (see the schematic in Fig. 3D). This matrix can be interpreted in a directional way, as we hypothesize that the resting state profile determines lateralization of the language network during the movie. Fig. S5 B–D shows similar matrices computed over the 2- to 6-Hz, 38- to 47-Hz, and 56- to 72-Hz bands, respectively.
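The cross-correlogram procedure can be sketched with `np.corrcoef`, which returns Pearson's r between every pair of rows. Because Pearson's r is unchanged by shifting or rescaling a vector, a per-profile normalization such as z-scoring (the text does not specify its form) would not alter these matrices. The array layout and function names are assumptions; the rest × movie block is returned with rest territories as rows and movie territories as columns.

```python
import numpy as np


def subject_matrix(ai_rest, ai_movie):
    """Pearson matrix across one subject's 2T asymmetry profiles, shape (2T, 2T).

    ai_rest, ai_movie: (T, n_freqs) profiles (T = 18 territories in the text).
    """
    return np.corrcoef(np.vstack([ai_rest, ai_movie]))


def group_matrices(ai):
    """ai: (n_subjects, 2, T, n_freqs), condition 0 = rest, 1 = movie.

    Returns per-subject matrices, their average, and the averaged rest x movie block.
    """
    mats = np.array([subject_matrix(s[0], s[1]) for s in ai])
    mean_mat = mats.mean(axis=0)
    T = ai.shape[2]
    return mats, mean_mat, mean_mat[:T, T:]


if __name__ == "__main__":
    rng = np.random.default_rng(3)
    ai = rng.standard_normal((15, 2, 18, 72))
    ai[:, 1, 3] = ai[:, 0, 0]   # movie profile of territory 3 copies rest profile of 0
    mats, mean_mat, rest_to_movie = group_matrices(ai)
    print(mats.shape, mean_mat.shape, rest_to_movie.shape)
    print(round(rest_to_movie[0, 3], 6))
```

Reading the rest × movie block column by column asks which resting profiles predict a given movie profile, which is the directional interpretation adopted in the text.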

In a second-level analysis, one-sample t tests were performed across subjects to test for significant correlations. Because the within-condition asymmetry profiles of the different territories were highly correlated (they belonged to the same dataset), we applied conservative statistics, considering P values < 0.01 (corrected). A summary of these statistics is reported in Fig. 3B. Pearson's cross-correlations were also computed between rest and movie to assess the influence of the resting state on the movie condition. Because these correlations were performed across two distinct datasets, we used more lenient statistical criteria. Note that we do not compare the two cross-correlation analyses. For the cross-correlation matrices between rest and movie (whole spectrum, 2- to 6-Hz, 38- to 47-Hz, and 56- to 72-Hz bands), results were thresholded at P < 0.05 and reported in Fig. 3D. We then lowered this statistical threshold further to verify the absence of any trend across motor and auditory cortices. In Fig. 3 B and D, territories corresponding to one functional area (e.g., A1, S2, Broca) were pooled to facilitate visualization.
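The second-level test can be sketched as a one-sample t test, per matrix cell, of the subjects' correlation coefficients against zero. The section does not state whether coefficients were Fisher z-transformed beforehand, nor which correction underlies "P < 0.01 (corrected)", so this sketch tests raw r and leaves the critical value to the caller (with SciPy, `scipy.stats.t.ppf(1 - alpha / 2, n - 1)` gives a two-sided critical value for n subjects). Names are hypothetical.

```python
from math import sqrt
from statistics import fmean, stdev


def one_sample_t(values, mu0=0.0):
    """t statistic for H0: population mean equals mu0 (df = len(values) - 1)."""
    return (fmean(values) - mu0) / (stdev(values) / sqrt(len(values)))


def significant_cells(subject_mats, t_crit):
    """Return (row, col, t) for cells whose |t| across subjects exceeds t_crit.

    subject_mats[s][i][j] is subject s's correlation for cell (i, j).
    Cells with zero variance across subjects (e.g., the all-ones diagonal
    of a within-condition matrix) have an undefined t and are skipped.
    """
    rows, cols = len(subject_mats[0]), len(subject_mats[0][0])
    hits = []
    for i in range(rows):
        for j in range(cols):
            values = [m[i][j] for m in subject_mats]
            if stdev(values) == 0:
                continue
            t = one_sample_t(values)
            if abs(t) > t_crit:
                hits.append((i, j, t))
    return hits
```

Passing a larger `t_crit` for the within-condition matrices and a smaller one for the rest-by-movie matrices mirrors the conservative versus lenient thresholds described above.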


Acknowledgments

We thank C. A. Kell, E. Koechlin, F. Ramus, and L. H. Arnal for critical discussions and reading of the manuscript and L. Hugueville, E. Bertasi, J. Martinerie, and L. Garnero for technical help with simultaneous EEG/fMRI recording. This work was funded by the Centre National de la Recherche Scientifique (CNRS), the Agence Nationale pour la Recherche (ANR), the Fondation Bettencourt-Schueller, the European Commission (ERC), and the Fondation pour la Recherche Médicale (FRM).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1007189107/-/DCSupplemental.

References

1. Zatorre RJ, Gandour JT. Neural specializations for speech and pitch: Moving beyond the dichotomies. Philos Trans R Soc Lond B Biol Sci. 2008;363:1087–1104. doi: 10.1098/rstb.2007.2161.

2. Bailey WJ, Yang S. Hearing asymmetry and auditory acuity in the Australian bushcricket Requena verticalis (Listroscelidinae; Tettigoniidae; Orthoptera). J Exp Biol. 2002;205:2935–2942. doi: 10.1242/jeb.205.18.2935.

3. Voss HU, et al. Functional MRI of the zebra finch brain during song stimulation suggests a lateralized response topography. Proc Natl Acad Sci USA. 2007;104:10667–10672. doi: 10.1073/pnas.0611515104.

4. Corballis MC. The evolution and genetics of cerebral asymmetry. Philos Trans R Soc Lond B Biol Sci. 2009;364:867–879. doi: 10.1098/rstb.2008.0232.

5. Meguerditchian A, Vauclair J. Contrast of hand preferences between communicative gestures and non-communicative actions in baboons: Implications for the origins of hemispheric specialization for language. Brain Lang. 2009;108:167–174. doi: 10.1016/j.bandl.2008.10.004.

6. Poeppel D. The analysis of speech in different temporal integration windows: Cerebral lateralization as ‘asymmetric sampling in time’. Speech Commun. 2003;41:245–255.

7. Corballis MC. From mouth to hand: Gesture, speech, and the evolution of right-handedness. Behav Brain Sci. 2003;26:199–208; discussion 208–260. doi: 10.1017/s0140525x03000062.

8. Corballis MC. Language as gesture. Hum Mov Sci. 2009;28:556–565. doi: 10.1016/j.humov.2009.07.003.

9. Hamdy S, et al. The cortical topography of human swallowing musculature in health and disease. Nat Med. 1996;2:1217–1224. doi: 10.1038/nm1196-1217.

10. Gentilucci M, Corballis MC. From manual gesture to speech: A gradual transition. Neurosci Biobehav Rev. 2006;30:949–960. doi: 10.1016/j.neubiorev.2006.02.004.

11. Schenker NM, et al. Broca's area homologue in chimpanzees (Pan troglodytes): Probabilistic mapping, asymmetry, and comparison to humans. Cereb Cortex. 2010;20:730–742. doi: 10.1093/cercor/bhp138.

12. Giraud AL, et al. Endogenous cortical rhythms determine cerebral specialization for speech perception and production. Neuron. 2007;56:1127–1134. doi: 10.1016/j.neuron.2007.09.038.

13. Boemio A, Fromm S, Braun A, Poeppel D. Hierarchical and asymmetric temporal sensitivity in human auditory cortices. Nat Neurosci. 2005;8:389–395. doi: 10.1038/nn1409.

14. Abrams DA, Nicol T, Zecker S, Kraus N. Right-hemisphere auditory cortex is dominant for coding syllable patterns in speech. J Neurosci. 2008;28:3958–3965. doi: 10.1523/JNEUROSCI.0187-08.2008.

15. Telkemeyer S, et al. Sensitivity of newborn auditory cortex to the temporal structure of sounds. J Neurosci. 2009;29:14726–14733. doi: 10.1523/JNEUROSCI.1246-09.2009.

16. Shamir M, Ghitza O, Epstein S, Kopell N. Representation of time-varying stimuli by a network exhibiting oscillations on a faster time scale. PLOS Comput Biol. 2009;5:e1000370. doi: 10.1371/journal.pcbi.1000370.

17. Schroeder CE, Lakatos P, Kajikawa Y, Partan S, Puce A. Neuronal oscillations and visual amplification of speech. Trends Cogn Sci. 2008;12:106–113. doi: 10.1016/j.tics.2008.01.002.

18. Canolty RT, et al. High gamma power is phase-locked to theta oscillations in human neocortex. Science. 2006;313:1626–1628. doi: 10.1126/science.1128115.

19. Lakatos P, et al. An oscillatory hierarchy controlling neuronal excitability and stimulus processing in the auditory cortex. J Neurophysiol. 2005;94:1904–1911. doi: 10.1152/jn.00263.2005.

20. MacNeilage PF, Davis BL. Motor mechanisms in speech ontogeny: Phylogenetic, neurobiological and linguistic implications. Curr Opin Neurobiol. 2001;11:696–700. doi: 10.1016/s0959-4388(01)00271-9.

21. Nir Y, et al. Coupling between neuronal firing rate, gamma LFP, and BOLD fMRI is related to interneuronal correlations. Curr Biol. 2007;17:1275–1285. doi: 10.1016/j.cub.2007.06.066.

22. Hasson U, Malach R, Heeger DJ. Reliability of cortical activity during natural stimulation. Trends Cogn Sci. 2010;14:40–48. doi: 10.1016/j.tics.2009.10.011.

23. Guenther FH, Ghosh SS, Tourville JA. Neural modeling and imaging of the cortical interactions underlying syllable production. Brain Lang. 2006;96:280–301. doi: 10.1016/j.bandl.2005.06.001.

24. Hickok G, Poeppel D. The cortical organization of speech processing. Nat Rev Neurosci. 2007;8:393–402. doi: 10.1038/nrn2113.

25. Duffau H. The anatomo-functional connectivity of language revisited. New insights provided by electrostimulation and tractography. Neuropsychologia. 2008;46:927–934. doi: 10.1016/j.neuropsychologia.2007.10.025.

26. Honey CJ, Thivierge JP, Sporns O. Can structure predict function in the human brain? Neuroimage. 2010;52:766–776. doi: 10.1016/j.neuroimage.2010.01.071.

27. Skipper JI, Nusbaum HC, Small SL. Listening to talking faces: Motor cortical activation during speech perception. Neuroimage. 2005;25:76–89. doi: 10.1016/j.neuroimage.2004.11.006.

28. Griffiths TD, Warren JD. The planum temporale as a computational hub. Trends Neurosci. 2002;25:348–353. doi: 10.1016/s0166-2236(02)02191-4.

29. Hagoort P. On Broca, brain, and binding: A new framework. Trends Cogn Sci. 2005;9:416–423. doi: 10.1016/j.tics.2005.07.004.

30. Sahin NT, Pinker S, Cash SS, Schomer D, Halgren E. Sequential processing of lexical, grammatical, and phonological information within Broca's area. Science. 2009;326:445–449. doi: 10.1126/science.1174481.

31. Skipper JI, Goldin-Meadow S, Nusbaum HC, Small SL. Gestures orchestrate brain networks for language understanding. Curr Biol. 2009;19:661–667. doi: 10.1016/j.cub.2009.02.051.

32. D'Ausilio A, et al. The motor somatotopy of speech perception. Curr Biol. 2009;19:381–385. doi: 10.1016/j.cub.2009.01.017.

33. Lakatos P, Chen CM, O'Connell MN, Mills A, Schroeder CE. Neuronal oscillations and multisensory interaction in primary auditory cortex. Neuron. 2007;53:279–292. doi: 10.1016/j.neuron.2006.12.011.

34. Schroeder CE, Wilson DA, Radman T, Scharfman H, Lakatos P. Dynamics of Active Sensing and perceptual selection. Curr Opin Neurobiol. 2010;20:172–176. doi: 10.1016/j.conb.2010.02.010.

35. Arnal LH, Morillon B, Kell CA, Giraud AL. Dual neural routing of visual facilitation in speech processing. J Neurosci. 2009;29:13445–13453. doi: 10.1523/JNEUROSCI.3194-09.2009.

36. Luo H, Liu Z, Poeppel D. Auditory cortex tracks both auditory and visual stimulus dynamics using low-frequency neuronal phase modulation. PLoS Biol. 2010;8:e1000445. doi: 10.1371/journal.pbio.1000445.

37. Kell CA, Morillon B, Kouneiher F, Giraud AL. Lateralization of speech production starts in sensory cortices—A possible sensory origin of cerebral left dominance for speech. Cereb Cortex. 2010. doi: 10.1093/cercor/bhq167.

38. Ghitza O, Greenberg S. On the possible role of brain rhythms in speech perception: Intelligibility of time-compressed speech with periodic and aperiodic insertions of silence. Phonetica. 2009;66:113–126. doi: 10.1159/000208934.

39. Fries P. Neuronal gamma-band synchronization as a fundamental process in cortical computation. Annu Rev Neurosci. 2009;32:209–224. doi: 10.1146/annurev.neuro.051508.135603.

40. Kahana MJ, Seelig D, Madsen JR. Theta returns. Curr Opin Neurobiol. 2001;11:739–744. doi: 10.1016/s0959-4388(01)00278-1.

41. Petitto LA, Marentette PF. Babbling in the manual mode: Evidence for the ontogeny of language. Science. 1991;251:1493–1496. doi: 10.1126/science.2006424.

42. Kuhl PK. Early language acquisition: Cracking the speech code. Nat Rev Neurosci. 2004;5:831–843. doi: 10.1038/nrn1533.

43. Cutler A, Demuth K, McQueen JM. Universality versus language-specificity in listening to running speech. Psychol Sci. 2002;13:258–262. doi: 10.1111/1467-9280.00447.

44. Vargha-Khadem F, Gadian DG, Copp A, Mishkin M. FOXP2 and the neuroanatomy of speech and language. Nat Rev Neurosci. 2005;6:131–138. doi: 10.1038/nrn1605.

45. Koechlin E, Jubault T. Broca's area and the hierarchical organization of human behavior. Neuron. 2006;50:963–974. doi: 10.1016/j.neuron.2006.05.017.

46. Laufs H, Daunizeau J, Carmichael DW, Kleinschmidt A. Recent advances in recording electrophysiological data simultaneously with magnetic resonance imaging. Neuroimage. 2008;40:515–528. doi: 10.1016/j.neuroimage.2007.11.039.

47. Sadaghiani S, et al. Intrinsic connectivity networks, alpha oscillations, and tonic alertness: A simultaneous electroencephalography/functional magnetic resonance imaging study. J Neurosci. 2010;30:10243–10250. doi: 10.1523/JNEUROSCI.1004-10.2010.

48. Sommer IE, Aleman A, Bouma A, Kahn RS. Do women really have more bilateral language representation than men? A meta-analysis of functional imaging studies. Brain. 2004;127:1845–1852. doi: 10.1093/brain/awh207.

49. Mandelkow H, Halder P, Boesiger P, Brandeis D. Synchronization facilitates removal of MRI artefacts from concurrent EEG recordings and increases usable bandwidth. Neuroimage. 2006;32:1120–1126. doi: 10.1016/j.neuroimage.2006.04.231.

50. Stippich C, Ochmann H, Sartor K. Somatotopic mapping of the human primary sensorimotor cortex during motor imagery and motor execution by functional magnetic resonance imaging. Neurosci Lett. 2002;331:50–54. doi: 10.1016/s0304-3940(02)00826-1.

51. Fukunaga A, et al. The possibility of left dominant activation of the sensorimotor cortex during lip protrusion in men. Brain Topogr. 2009;22:109–118. doi: 10.1007/s10548-009-0101-x.
