Abstract

Magnetic resonance imaging (MRI) can provide high-quality 3-D visualization of the prostate and surrounding tissue, and therefore has the potential to be a superior imaging modality for guiding and monitoring prostatic interventions. However, these benefits cannot be readily harnessed for interventional procedures because of the difficulties associated with high-field (1.5T or greater) MRI: conventional mechatronics cannot be used, and the confined physical space makes it extremely challenging to access the patient. We have designed a robotic assistant system that overcomes these difficulties and promises safe and reliable intraprostatic needle placement inside closed high-field MRI scanners. MRI compatibility of the robot has been evaluated under 3T MRI using standard prostate imaging sequences, and the average SNR loss is limited to 5%. Needle alignment accuracy of the robot under servo pneumatic control is better than 0.94 mm rms per axis. The complete system workflow has been evaluated in phantom studies, with accurate visualization and targeting of five out of five 1 cm targets. The paper explains the robot mechanism, the controller design, and the system integration, and presents results of a preliminary evaluation of the system.

Index Terms: Magnetic resonance imaging (MRI) compatible robotics, pneumatic control, prostate brachytherapy and biopsy

I. INTRODUCTION

Each year approximately 1.5 M core needle biopsies are performed, yielding about 220 000 new prostate cancer cases [1]. If the cancer is confined to the prostate, low-dose-rate (LDR) permanent brachytherapy is a common treatment option: a large number (50–150) of radioactive pellets/seeds are implanted into the prostate using 15–20 cm long 18 G needles [2]. A complex seed-distribution pattern must be achieved with great accuracy to eradicate the cancer while minimizing radiation toxicity to adjacent healthy tissues. Over 40 000 brachytherapies are performed in the United States each year, and the number is steadily growing [3]. Transrectal ultrasound (TRUS) is the current “gold standard” for guiding both biopsy and brachytherapy because of its real-time nature, low cost, and apparent ease of use [4]. However, TRUS-guided biopsy has a detection rate of only 20%–30% [5]. Furthermore, TRUS cannot effectively monitor the implant procedure because the implanted seeds cannot be seen in the image. Magnetic resonance imaging (MRI) appears to offer many of the capabilities that TRUS lacks: high sensitivity for detecting prostate tumors, high spatial resolution, excellent soft-tissue contrast, and volumetric imaging. However, closed-bore high-field MRI has not been widely adopted for prostate interventions because strong magnetic fields and confined physical space present formidable challenges.

The clinical efficacy of MRI-guided prostate brachytherapy and biopsy was demonstrated by D’Amico et al. at the Brigham and Women’s Hospital using a 0.5T open-MRI scanner [6], [7]. Magnetic resonance (MR) images were used to plan and monitor transperineal needle placement. The needles were inserted manually using a guide comprising a grid of holes, with the patient in the lithotomy position, similar to the TRUS-guided approach. Zangos et al. used a transgluteal approach with 0.2T MRI but did not specifically target the tumor foci [8]. Susil et al. described four cases of transperineal prostate biopsy in a closed-bore scanner, in which the patient was moved out of the bore for needle insertion and then placed back into the bore to confirm satisfactory placement [9]. Beyersdorff et al. performed targeted transrectal biopsy in a 1.5T MRI unit with a passive articulated needle guide and have reported 12 cases of biopsy to date [10].

Tsekos et al. provide a thorough review of MRI-compatible systems for image-guided interventions in [11]. Robotic assistance for guiding instrument placement in MRI has been investigated, beginning with neurosurgery [12] and later extending to percutaneous interventions [13], [14]. Chinzei et al. developed a general-purpose robotic assistant for open MRI [15] that was subsequently adapted for transperineal intraprostatic needle placement [16]. Krieger et al. presented a 2-DOF passive, unencoded, manually manipulated mechanical linkage to aim a needle guide for transrectal prostate biopsy under MRI guidance [17]. Using three active tracking coils, the device is visually servoed into position, and then the patient is moved out of the scanner for needle insertion.

Developments in MR-compatible motor technologies include a fully MRI-compatible pneumatic stepper motor called PneuStep, described by Stoianovici et al. [18]; an air motor for limb localization, described by Elhawary et al. [19]; and a stepper motor that uses the scanner’s magnetic field as a driving force, described by Suzuki et al. [20]. Other recent developments in MRI-compatible mechanisms include pneumatic stepping motors on a light needle puncture robot [21], the Innomotion commercial pneumatic robot for percutaneous interventions (Innomedic, Herxheim, Germany), and haptic interfaces for functional MRI (fMRI) [22]. Ultrasonic motor drive techniques that enhance MR compatibility are described in [20]. The feasibility of using piezoceramic motors for robotic prostate biopsy is presented in [23]. Stoianovici et al. have applied their MR-compatible pneumatic stepper motors to robotic brachytherapy seed placement [24]. Their system is a fully MR-compatible, fully automatic prostate brachytherapy seed placement system in which the patient is in the decubitus position and seeds are placed in the prostate transperineally. The relatively high cost and complexity of that system, together with the requirement to perform the procedure in a different pose from the one used for preoperative imaging, are issues that we intend to overcome with the work presented here.

This paper introduces the design of a novel computer-integrated robotic mechanism for transperineal prostate needle placement in 3T closed-bore MRI. Under remote control of the physician, the mechanism positions the needle inside the magnet bore, either for treatment (ejecting radioactive seeds) or for diagnosis (harvesting tissue samples), without moving the patient out of the imaging space. This enables real-time imaging to be used for precise needle placement in soft tissue. In addition to structural images, protocols for diffusion imaging and MR spectroscopy will be available intraoperatively, promising enhanced visualization and targeting of pathologies. Accurate and robust needle-placement devices navigated by such image guidance are becoming valuable clinical tools and have clear applications in several other organ systems.

The full system architecture, including details of the planning software and the integration of real-time MR imaging, is described in [25]. This paper focuses on the design and evaluation of the robotic needle placement manipulator and is organized as follows. Section II describes the workspace analysis and design requirements for the proposed device, and Section III describes the detailed design of the system prototype. Results of the MR compatibility, workflow validation, and accuracy evaluations are presented in Section IV, and the system is discussed in Section V.

II. DESIGN REQUIREMENTS

A. Workspace Analysis

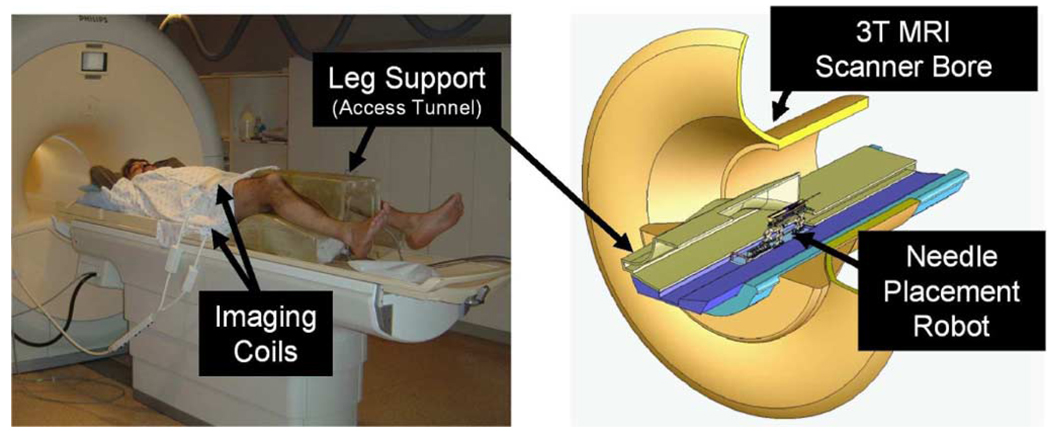

The system’s principal function is accurate needle placement in the prostate for diagnosis and treatment, primarily in the form of biopsy and brachytherapy seed placement, respectively. The patient is placed supine with the legs spread and raised, as shown in Fig. 1 (left). This configuration is similar to that of TRUS-guided brachytherapy, but the constraint of the MRI bore (60 cm diameter) necessitates reducing the spread of the legs and lowering the knees into a semilithotomy position. The robot operates in the confined space between the patient’s legs without interfering with the patient, the MRI scanner components, the anesthesia equipment, or other auxiliary equipment, as shown in the cross section in Fig. 1 (right).

Fig. 1.

Positioning of the patient in the semilithotomy position on the leg support (left). The robot accesses the prostate through the perineal wall, which rests against the superior surface of the tunnel within the leg rest (right).

The average prostate measures 50 mm in the lateral direction, 35 mm in the anterior–posterior direction, and 40 mm in length. The average prostate volume is about 35 cm³; by the end of a procedure, this volume may enlarge by as much as 25% due to swelling [26]. For our system, the standard 60 mm × 60 mm perineal window of TRUS-guided brachytherapy was increased to 100 mm × 100 mm to accommodate patient variability and lateral asymmetries in patient setup. In depth, the workspace extends 150 mm superior to the perineal surface. Because of pubic arch interference (PAI), direct access to all clinically relevant locations in the prostate is not always possible with a needle inserted purely along the apex–base direction. If more than 25% of the prostate diameter is blocked (typically in prostates larger than 55 cm³), the patient is usually declined for implantation [26]. Needle angulation in the sagittal and coronal planes will enable the procedure to be performed on many of these patients, who are typically contraindicated for brachytherapy because of PAI.
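The quoted average dimensions and volume are mutually consistent under the common ellipsoid approximation V ≈ (π/6)·L·W·H. The minimal Python sketch below checks this and also tests whether a target lies inside the enlarged workspace. Centering the 100 mm × 100 mm window on the nominal needle axis, and the function names, are assumptions made here for illustration, not details given in the text.

```python
import math

# Average prostate dimensions quoted in the text (mm).
LATERAL_MM, AP_MM, LENGTH_MM = 50.0, 35.0, 40.0
SWELLING = 0.25  # up to 25% enlargement by the end of a procedure


def ellipsoid_volume_cm3(a_mm: float, b_mm: float, c_mm: float) -> float:
    """Volume of an ellipsoid with full axis lengths a, b, c (mm), in cm^3."""
    return math.pi / 6.0 * a_mm * b_mm * c_mm / 1000.0


def in_workspace(x_mm: float, y_mm: float, depth_mm: float,
                 window_mm: float = 100.0, max_depth_mm: float = 150.0) -> bool:
    """True if a target lies inside a square perineal window centred on (0, 0)
    (assumed) and no deeper than max_depth_mm superior to the perineum."""
    half = window_mm / 2.0
    return abs(x_mm) <= half and abs(y_mm) <= half and 0.0 <= depth_mm <= max_depth_mm


nominal = ellipsoid_volume_cm3(LATERAL_MM, AP_MM, LENGTH_MM)
swollen = nominal * (1.0 + SWELLING)
print(f"nominal {nominal:.1f} cm^3, swollen {swollen:.1f} cm^3")
```

With the quoted dimensions this gives roughly 36.7 cm³ nominally and about 45.8 cm³ after 25% swelling, in line with the 35 cm³ average cited above.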

B. System Requirements

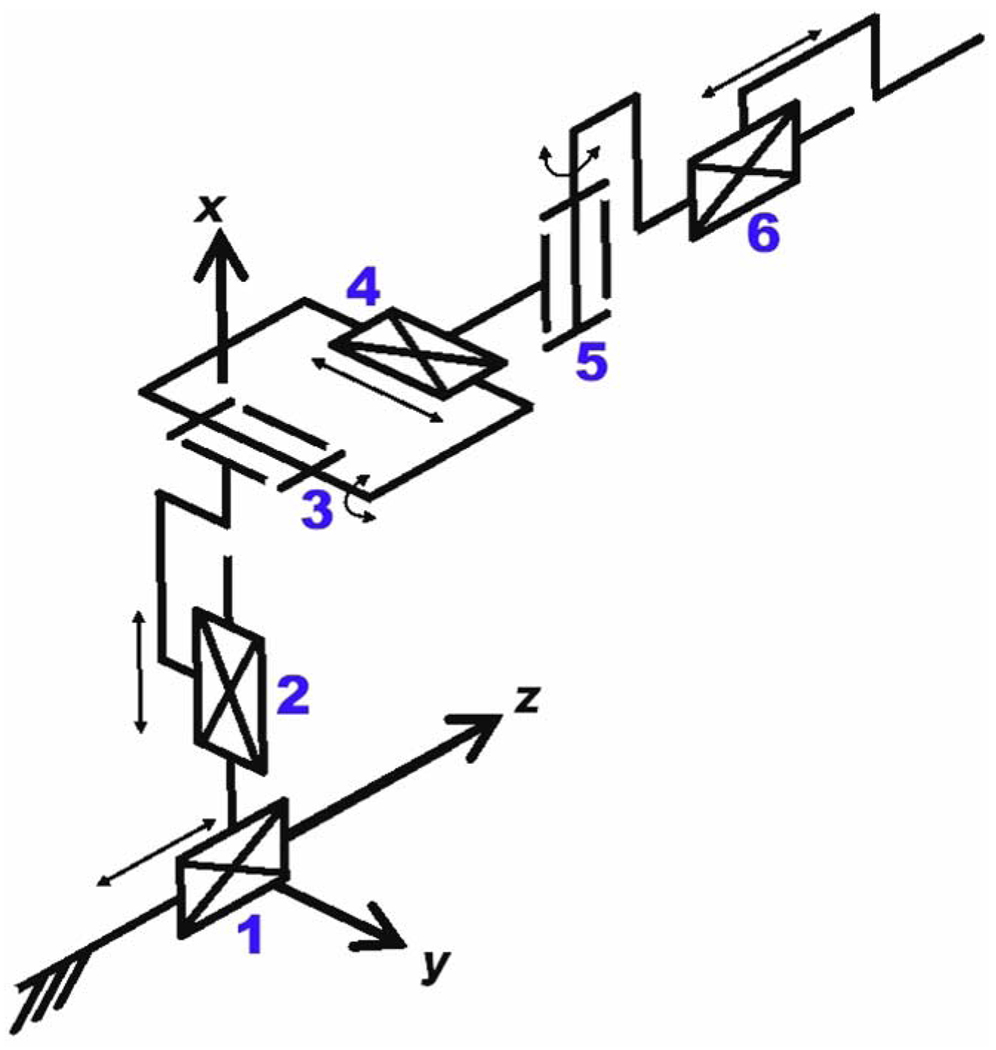

The kinematic requirements for the robot are derived from the workspace analysis. A kinematic diagram of the proposed system is shown in Fig. 2. The robot sits on a manual linear slide that repeatably positions it in the access tunnel and allows fast removal for reloading brachytherapy needles or collecting harvested biopsy tissue. The primary actuated motions of the robot are two prismatic motions and two rotational motions for aligning the needle axis. In addition to these base motions, application-specific motions are required: needle insertion, cannula retraction or biopsy gun actuation, and needle rotation.

Fig. 2.

Equivalent kinematic diagram of the robot; six DOFs are required for needle insertion procedures with this manipulator. Additional application-specific end effectors may be added to provide additional DOF.

The accuracy of the individual servo-controlled joints is targeted to be 0.1 mm, and the needle placement accuracy of the robot is targeted to be 0.5 mm. This target approximates the voxel size of the MR images, which represents the finest possible targeting precision. The overall system accuracy, however, is expected to be somewhat lower once effects such as imaging resolution, needle deflection, and tissue deformation are taken into account. The MR image resolution used is typically 1 mm, and the clinically significant target is typically 5 mm in size. The requirements for each motion are specified in Table I; the numbered motions in the table correspond to the labeled joints in the equivalent kinematic diagram in Fig. 2. DOF 1 through 6 are the primary robot motions, and DOF 7 and 8 are application-specific motions. These specifications describe a flexible system that can accommodate a large variety of patients. The proof-of-concept system presented here is designed to replicate the DOF of traditional TRUS-guided procedures, with needle insertion only along the apex–base direction, and thus does not include angulation (DOF 3 and 5).
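One common way to reason about how the individual error sources combine is a root-sum-square (RSS) budget, which assumes the errors are independent and zero-mean. The sketch below is illustrative only: apart from the 0.5 mm robot target and the 0.94 mm per-axis figure from the abstract, the magnitudes in the hypothetical `budget` dictionary are placeholders, not measured values.

```python
import math


def rss(errors_mm):
    """Root-sum-square combination of independent, zero-mean error sources."""
    return math.sqrt(sum(e * e for e in errors_mm))


def radial_from_per_axis(rms_per_axis_mm: float, n_axes: int) -> float:
    """RMS radial error when each of n_axes axes has the same independent RMS error."""
    return rms_per_axis_mm * math.sqrt(n_axes)


# Hypothetical budget (mm): only the 0.5 mm robot target comes from the text.
budget = {"robot": 0.5, "imaging": 0.5, "needle_deflection": 1.0, "tissue_motion": 1.0}
print(f"combined RMS error: {rss(budget.values()):.2f} mm")
```

Under the same independence assumption, a 0.94 mm rms error on each of two axes corresponds to roughly 1.33 mm rms radial error in the plane.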

TABLE I.

Kinematic Specifications

| Degree of Freedom | Motion | Range | Control |
|---|---|---|---|
| 1 | Gross axial position | 1 m | Manual with repeatable stop |
| 2 | Vertical motion | 0–100 mm | Precise servo control |
| 3 | Elevation angle | +15°, −0° | Precise servo control |
| 4 | Horizontal motion | ±50 mm | Precise servo control |
| 5 | Azimuth angle | ±15° | Precise servo control |
| 6 | Needle insertion | 150 mm | Manual or automated |
| 7 | Cannula retraction or biopsy gun firing | 60 mm | Manual or automated |
| 8 | Needle rotation | 360° | Manual or automated |

C. MRI Compatibility Requirements

Designing a system that operates inside the bore of a high-field (1.5–3T) MRI scanner introduces significant complexity, because traditional mechatronic materials, sensors, and actuators cannot be used. The requirements for MR compatibility include: 1) MR safety; 2) maintained image quality; and 3) the ability to operate unaffected by the scanner’s electric and magnetic fields [15]. Ferromagnetic materials must be avoided entirely because they cause image artifacts and distortion due to field inhomogeneities and can pose a dangerous projectile risk. Nonferromagnetic metals, such as aluminum, brass, and titanium, as well as high-strength plastics and composite materials, are therefore permissible. However, the use of any conductive material near the scanner’s isocenter must be limited, because induced eddy currents can disrupt the magnetic field homogeneity. To prevent or limit local heating near the patient’s body, the materials and structures used must be either nonconductive or free of loops and of carefully chosen lengths, to avoid eddy currents and resonance. In this robot, all electrical and metallic components are isolated from the patient’s body. The following section details material and component selection with these MRI compatibility issues in mind.
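The “carefully chosen lengths” rule is commonly motivated by RF resonance: a conductor whose length approaches half a wavelength at the scanner’s RF (Larmor) frequency can behave as a resonant antenna and heat. The Python sketch below estimates that length scale at 1.5T and 3T from the proton gyromagnetic ratio. It is a rough order-of-magnitude check under a simple straight-wire, plane-wave assumption, not a safety criterion from this paper, and the `rel_permittivity` parameter only crudely models the shorter wavelength inside a dielectric such as tissue.

```python
import math

# Proton gyromagnetic ratio / (2*pi) in Hz per tesla (CODATA 2018, rounded).
GAMMA_BAR_HZ_PER_T = 42.577478e6
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def larmor_hz(b0_tesla: float) -> float:
    """Proton resonance (RF transmit) frequency at field strength b0_tesla."""
    return GAMMA_BAR_HZ_PER_T * b0_tesla


def half_wavelength_m(freq_hz: float, rel_permittivity: float = 1.0) -> float:
    """Half-wavelength of a plane wave; a dielectric shortens it by sqrt(eps_r)."""
    wavelength = SPEED_OF_LIGHT_M_PER_S / (freq_hz * math.sqrt(rel_permittivity))
    return wavelength / 2.0


for b0 in (1.5, 3.0):
    f = larmor_hz(b0)
    print(f"{b0} T: {f / 1e6:.1f} MHz, free-space lambda/2 = {half_wavelength_m(f):.2f} m")
```

At 3T this gives about 127.7 MHz and a free-space half-wavelength of roughly 1.17 m; in a high-permittivity medium the resonant length is considerably shorter, which is one reason conductors near the patient need particular care.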

III. SYSTEM AND COMPONENT DESIGN

A. Overview

The first embodiment of the system, intended for initial proof-of-concept and phase-1 clinical trials, provides the two prismatic motions in the axial (transverse) plane over the perineum (DOF 2 and DOF 4) and an encoded manual needle guide (DOF 6). This amounts to an automated high-resolution needle guide, functionally similar to the template used in conventional brachytherapy. The next design iteration will produce a 4-DOF robot base that includes the two angulation DOFs. The base of the manipulator has a modular platform on which different end effectors can be mounted. The two initial end effectors will accommodate biopsy guns and brachytherapy needles. Both require an insertion phase. The biopsy gun additionally requires activating a single-acting button to engage the device and a safety lock, whereas the brachytherapy end effector requires an additional controlled linear motion for the cannula retraction that releases the seeds (DOF 7). Rotation of the needle about its axis may be implemented either to “drill” the needle in to limit deflection or to steer the needle using bevel-steering techniques such as those described in [27]. Sterility has been taken into consideration in the design of the end effectors. In particular, the portions of the manipulator and leg rest that come in direct contact with the patient or needle will be removable and made of materials suitable for sterilization, and the remainder of the robot will be draped. An alternative solution is to enclose the entire leg rest and robot in a sterile drape, completely isolating the robot from the patient except for the needle.

B. Mechanism Design

The design of the mechanism is particularly important because the available space is very confined and the robot is constructed without metallic links. The design was developed so that the kinematics are simplified, control is less complex, motions are decoupled, actuators are aligned appropriately, and system rigidity is increased. Based on analysis of the workspace and the application, the following additional design requirements were adopted: 1) prismatic base motions should be decoupled from angulation, since the majority of procedures will not require the two rotational DOFs; 2) actuator motion should be in the axial direction (aligned with the scanner’s axis) to maintain a compact profile; and 3) extension in both the vertical and horizontal planes should be telescopic to minimize the working envelope.

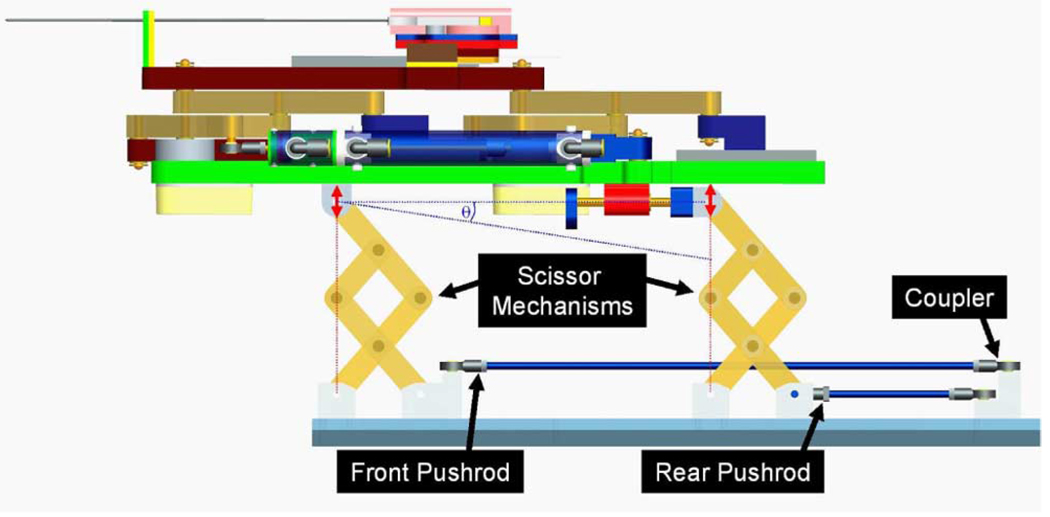

The primary base DOFs (DOF 2–5 in Table I) are divided into two decoupled planar motions. Motion in the vertical plane includes 100 mm of vertical travel and, optionally, up to 15° of elevation angle. This is achieved using a modified version of a scissor lift mechanism, which is traditionally used for plane-parallel motion. By coupling two such mechanisms, as shown in Fig. 3, 2-DOF motion can be achieved. Stability is increased by using a pair of such mechanisms in the rear. For purely prismatic motion, both slides move in unison; angulation (θ) is generated by relative motion between them. To aid decoupling, the actuator for the rear slide can be fixed to the carriage of the primary linear drive, allowing one actuator to be locked when angulation is unnecessary. As shown in Fig. 3, in the current prototype the push rods for the front and rear motions are coupled together so that only translational motion is possible.
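To make the relationship between slide motion and platform pose concrete, the sketch below models each stage as an idealized single-stage scissor in which one link of length `link_mm` spans the horizontal offset between a fixed pivot and an actuated slider. This is a simplification assumed here, not the modified mechanism of Fig. 3; the link length, front-to-rear spacing, function names, and the sign convention for θ are all hypothetical. Equal slider offsets give pure vertical travel, while unequal offsets tilt the platform.

```python
import math


def scissor_height(slide_offset_mm: float, link_mm: float) -> float:
    """Height of an idealized scissor stage whose link of length link_mm spans a
    horizontal offset slide_offset_mm between its fixed pivot and its slider."""
    if not 0.0 <= slide_offset_mm < link_mm:
        raise ValueError("slide offset must lie in [0, link length)")
    return math.sqrt(link_mm ** 2 - slide_offset_mm ** 2)


def platform_pose(front_offset_mm, rear_offset_mm, link_mm, spacing_mm):
    """Return (mean support height in mm, elevation angle in degrees) for a platform
    carried by front and rear stages spacing_mm apart.
    Positive angle means the front is higher than the rear (assumed convention)."""
    h_front = scissor_height(front_offset_mm, link_mm)
    h_rear = scissor_height(rear_offset_mm, link_mm)
    theta_deg = math.degrees(math.atan2(h_front - h_rear, spacing_mm))
    return (h_front + h_rear) / 2.0, theta_deg


print(platform_pose(60.0, 60.0, 100.0, 100.0))  # slides in unison: pure translation
print(platform_pose(60.0, 80.0, 100.0, 100.0))  # relative motion: elevation angle
```

For example, with 100 mm links spaced 100 mm apart, offsets of (60, 60) mm give a height of 80 mm and θ = 0, while (60, 80) mm tilt the platform by about 11.3°.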

Fig. 3.

This mechanism provides motion in the vertical plane. Moving the front and rear together provides vertical travel; moving the rear independently adjusts the elevation angle (θ).

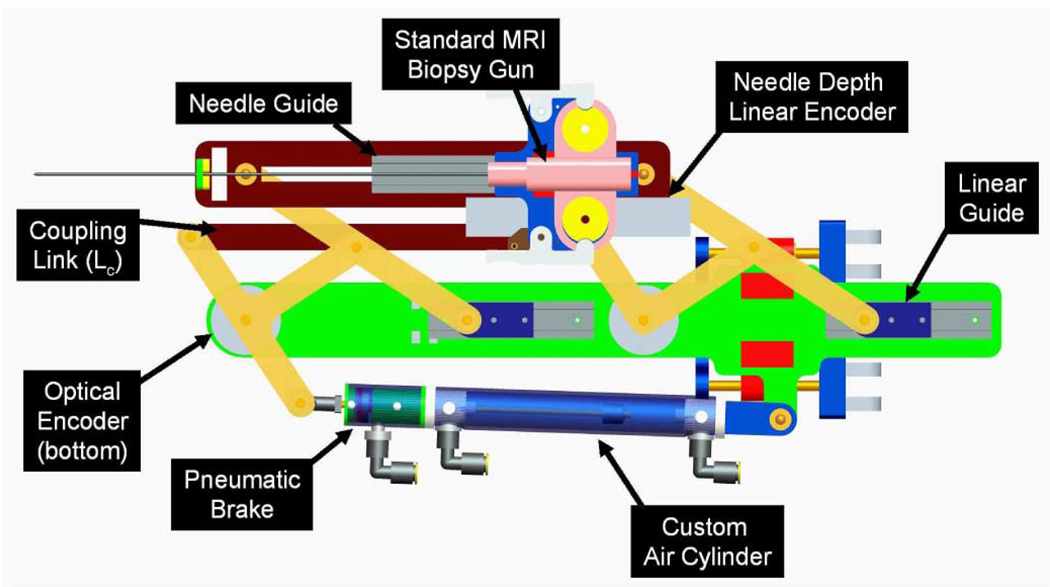

Motion in the horizontal plane is accomplished with a second planar bar mechanism, formed by coupling two straight-line motion mechanisms, generally referred to as Scott–Russell mechanisms [28], as shown in Fig. 4. By combining two such straight-line motions, both linear and rotational motions can be realized in the horizontal plane. This design was chosen over the scissor-type mechanism described above because it allows bilateral motion about the nominal center position. Fig. 4 shows the mechanism with translation only; this is accomplished by linking the front and rear mechanisms with a connecting bar. A benefit of this design is that rotational motion can be added in future designs simply by replacing the rigid connecting bar (LC) with another actuator. For ease of manufacturing, the current iteration of the system is made primarily of acrylic. In future iterations, the links will be made of a high-strength, dimensionally stable, highly electrically insulating, and sterilizable plastic [e.g., Ultem or polyetheretherketone (PEEK)].

Fig. 4.

This mechanism provides motion in the horizontal plane. The design shown provides prismatic motion only; rotation can be enabled by replacing the coupling link (LC) with a second actuator so that the rear motion is actuated independently. The modular, encoded needle guide senses insertion depth during manual needle insertion and can be replaced with different end effectors for other procedures.

C. Actuator Design

The MRI environment places severe restrictions on the choice of sensors and actuators. Many mechatronic systems use electrodynamic actuation; however, the very nature of an electric motor precludes its use in high-field magnetic environments. It is therefore necessary either to use actuators that are compatible with the MR environment or to use a transmission that mechanically couples the manipulator, located close to the scanner, to standard actuators situated outside the high field. MR-compatible actuators such as piezoceramic motors have been evaluated in [15], [20], and [23]; however, they are prone to introducing noise into MR imaging and therefore degrade image quality. Mechanical coupling can take the form of flexible driveshafts [17], push–pull cables, or hydraulic (or pneumatic) couplings [22].

Pneumatic cylinders are the actuators of choice for this robot. Accurate servo control of pneumatic actuators using sliding mode control (SMC), with submillimeter tracking accuracy and 0.01 mm steady-state error (SSE), has been demonstrated in [29]. Although pneumatic actuation seems ideal for MRI, most standard pneumatic cylinders are not suitable for use in MRI, so custom MR-compatible pneumatic cylinders were developed for this robot. They are based on Airpel 9.3 mm bore cylinders.¹ These cylinders were chosen because the cylinder bore is made of glass and the piston and seals are made of graphite. This design has two main benefits: the primary components are suitable for MRI, and the cylinders inherently have very low friction (as low as 0.01 N). In collaboration with the manufacturer, we developed the cylinders shown in Fig. 4 (bottom), which are entirely nonmetallic except for the brass shaft. The cylinders can handle up to 100 lbf/in² (6.9 bar) and can therefore apply forces of up to 46.8 N.
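The 46.8 N figure follows from pressure times piston area. The short check below assumes the force acts on the full 9.3 mm bore (cap-end) face and ignores friction and the rod area, so it is an idealized upper bound on static force rather than a measured value; the function name is illustrative.

```python
import math

PSI_TO_PA = 6894.757  # 1 lbf/in^2 in pascals


def piston_force_n(bore_mm: float, gauge_pressure_psi: float) -> float:
    """Ideal static force on the full-bore (cap-end) piston face,
    ignoring friction and the area taken up by the rod."""
    area_m2 = math.pi * (bore_mm / 1000.0 / 2.0) ** 2
    return gauge_pressure_psi * PSI_TO_PA * area_m2


print(f"{piston_force_n(9.3, 100.0):.1f} N")
```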

In addition to moving the robot, it is important to be able to lock it in position to provide a stable needle insertion platform. Pneumatically operated, MR-compatible brakes have been developed for this purpose. The brakes are compact units that attach to the ends of the cylinders described above, as shown in Fig. 4 (top), and clamp down on the rod. They are designed so that the fail-safe state is locked: applied air pressure releases a spring-loaded collet to enable motion. The brakes are released while the axis is being aligned and engaged when the needle is to be inserted or when an emergency arises.

Proportional pressure regulators were chosen as the valves for this robot because they allow direct control of air pressure, and thus of the force applied by the pneumatic cylinder. This aids controller design and provides inherent safety, since the applied pressure can be limited to a prescribed amount. Most pneumatic valves are operated by a solenoid coil; unfortunately, as with electric motors, the very nature of a solenoid coil contraindicates its use in an MR environment. With pneumatic control, it is essential to limit the distance from the valve to the cylinder on the robot, so the valves themselves must be safe and effective in the MR environment. Placing the controller in the scanner room near the foot of the bed reduces the air tubing length to 5 m. The robot controller uses piezoelectrically actuated proportional pressure valves,² which, unlike solenoid valves, can be used near the MRI scanner. A pair of these valves provides a differential pressure of ±100 lbf/in² across the cylinder piston of each actuated axis. A further benefit of piezoelectrically actuated valves is their rapid response time (4 ms). Thus, by using piezoelectric valves, limiting tubing lengths, and increasing the controller update rate, the robot’s bandwidth can be increased significantly.
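Because the regulators set pressure, and hence force, directly, the plant seen by the position controller is roughly a force-driven mass. The sketch below simulates a textbook boundary-layer sliding mode controller on such an idealized model to show the structure of the control law; it is not the controller of [29]. It assumes the regulators produce the commanded differential pressure instantly and ignores air compressibility, tubing delay, and valve flow limits. The mass, damping, gains, and function names are hypothetical; only the 9.3 mm bore and the ±100 lbf/in² limit come from the text.

```python
import math

# Hypothetical plant: moving mass driven by the cylinder, with viscous friction.
MASS_KG = 0.5
DAMPING_NS_PER_M = 5.0
BORE_AREA_M2 = math.pi * (9.3e-3 / 2.0) ** 2   # 9.3 mm bore from the text
MAX_DP_PA = 100.0 * 6894.757                   # +/-100 lbf/in^2 differential


def sat(z: float) -> float:
    """Unit saturation, used instead of sign() inside the boundary layer."""
    return max(-1.0, min(1.0, z))


def smc_pressure(x, v, x_ref, lam=20.0, gain_n=40.0, phi=0.05):
    """Differential-pressure command (Pa) for a constant set point x_ref (m)."""
    e, e_dot = x - x_ref, v
    s = e_dot + lam * e                                 # sliding surface
    # Equivalent control: cancels the modeled damping and the lam*e_dot term,
    # so that ds/dt = -(gain_n / MASS_KG) * sat(s / phi).
    f_eq = DAMPING_NS_PER_M * v - MASS_KG * lam * e_dot
    force = f_eq - gain_n * sat(s / phi)
    dp = force / BORE_AREA_M2                           # force -> pressure via area
    return max(-MAX_DP_PA, min(MAX_DP_PA, dp))          # regulator pressure limit


def simulate(x_ref=0.010, t_end=2.0, dt=1e-4):
    """Semi-implicit Euler simulation of a step to x_ref; returns final (x, v)."""
    x = v = 0.0
    for _ in range(int(round(t_end / dt))):
        force = smc_pressure(x, v, x_ref) * BORE_AREA_M2
        a = (force - DAMPING_NS_PER_M * v) / MASS_KG
        v += a * dt   # update velocity first, then position
        x += v * dt
    return x, v


print(simulate())
```

In this ideal model the equivalent control cancels the plant dynamics exactly, so the simulated 10 mm step settles onto the set point well within the 2 s horizon. With real model mismatch, the boundary layer of width `phi` reduces chattering at the cost of a small residual tracking band.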

D. Position Sensing

Standard methods of position sensing that are generally suitable for pneumatic cylinders include: linear potentiometers, linear variable differential transformers (LVDTs), capacitive sensors, ultrasonic sensors, magnetic sensors, laser sensors, optical encoders, and cameras (machine vision). Most of these sensing modalities are not practical for use in an MR environment. However, there are two methods that do appear to have potential: 1) linear optical encoders and 2) direct MRI image guidance.

Standard optical encoders³ have been thoroughly tested in a 3T MRI scanner for functionality and for induced imaging artifacts, as described in [25] and later in Section IV-A. The encoders have been incorporated into the robot and have operated without any stray or missed counts; the imaging artifact is confined to within 2–5 cm of the encoder. This is sufficient because the robot is designed to keep the sensors away from the prostate imaging volume.
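The text does not describe how the FPGA decodes the encoder signals. A common approach for incremental optical encoders is x4 quadrature decoding, sketched below in Python as an illustration of the general technique rather than the authors' implementation; the function name is hypothetical. A transition in which both channels change at once indicates a skipped state, which is one way a decoder can flag missed counts.

```python
# Position of each (A<<1 | B) channel state in the 2-bit Gray-code cycle.
_GRAY_INDEX = {0b00: 0, 0b01: 1, 0b11: 2, 0b10: 3}


def decode_quadrature(states):
    """x4 decoding of successive (A<<1 | B) samples.
    Returns (net count, number of illegal two-step transitions)."""
    count, errors = 0, 0
    for prev, curr in zip(states, states[1:]):
        step = (_GRAY_INDEX[curr] - _GRAY_INDEX[prev]) % 4
        if step == 1:
            count += 1        # one state forward
        elif step == 3:
            count -= 1        # one state backward
        elif step == 2:
            errors += 1       # both channels changed: a count was missed
    return count, errors


print(decode_quadrature([0b00, 0b01, 0b11, 0b10, 0b00]))
```

The net count is converted to position by multiplying by the encoder's resolution per count, which is not stated here; which rotation direction counts as positive is an arbitrary convention.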

E. Registration to MR Imaging

Direct MRI-based image guidance shows great promise for high-level control, safety, and verification. However, its refresh rate and resolution are not sufficient for low-level servo control of a robot joint. Practical methods of robot tracking are discussed in [17].

Inherently, the robot system has two coordinate systems: 1) the image coordinate system used for imaging, planning, and verification, and 2) the robot coordinate system, based on the encoders used for servo control of the robot joints described in Section III-D. Accurately relating positions between these two coordinate systems is crucial for targeting accuracy. To achieve dynamic global registration between the robot and image coordinates, a Z-shaped passive tracking fiducial [30] is attached to the robot base. The rigid fiducial frame is made up of seven glass tubes with 3 mm inner diameters, filled with contrast agent extracted from commercially available MRI fiducials.⁴ The rods are placed on three faces of a 60 mm cube, as shown in Fig. 9, and any arbitrary MR image slicing through the rods provides the full 6-DOF pose of the frame, and hence of the robot, with respect to the scanner. Thus, by locating the fiducial attached to the robot, the transformation between the image coordinates (where planning is performed) and the robot coordinates becomes known. Once this transformation is known, the end-effector location with respect to the fiducial frame is calculated from the kinematics and encoder positions and transformed into the image coordinate system.
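The registration chain can be written as a composition of rigid transforms: the fiducial pose measured in the image, a fixed fiducial-to-robot offset, and the kinematic end-effector position. The dependency-free Python sketch below shows the composition and its inverse, which is what converts a planned target from image coordinates into robot coordinates. The convention that `T_a_b` maps points expressed in frame b into frame a, the function names, and all numeric poses are hypothetical.

```python
import math


def rot_z(theta_rad):
    """Rotation matrix about the z axis."""
    c, s = math.cos(theta_rad), math.sin(theta_rad)
    return ((c, -s, 0.0), (s, c, 0.0), (0.0, 0.0, 1.0))


def apply(T, p):
    """Map point p through rigid transform T = (R, t): R @ p + t."""
    R, t = T
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def compose(T_ab, T_bc):
    """Return T_ac such that apply(T_ac, p) == apply(T_ab, apply(T_bc, p))."""
    R1, _ = T_ab
    R2, t2 = T_bc
    R = tuple(tuple(sum(R1[i][k] * R2[k][j] for k in range(3)) for j in range(3))
              for i in range(3))
    return R, apply(T_ab, t2)


def invert(T):
    """Inverse of a rigid transform: (R^T, -R^T t)."""
    R, t = T
    Rt = tuple(tuple(R[j][i] for j in range(3)) for i in range(3))
    return Rt, tuple(-sum(Rt[i][j] * t[j] for j in range(3)) for i in range(3))


# Hypothetical poses (mm): fiducial in the image frame (from registration) and a
# fixed calibration offset mapping robot-base coordinates into the fiducial frame.
T_image_fiducial = (rot_z(math.radians(10.0)), (5.0, -12.0, 30.0))
T_fiducial_robot = (rot_z(0.0), (0.0, 40.0, -20.0))
T_image_robot = compose(T_image_fiducial, T_fiducial_robot)

target_image = (12.0, 3.0, 95.0)                 # planned target, image coordinates
target_robot = apply(invert(T_image_robot), target_image)
print(target_robot)
```

In the real system the rotation would be the full 3-D orientation recovered from the Z-frame rather than a single rotation about z; `compose` and `invert` apply unchanged to any rotation matrix.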

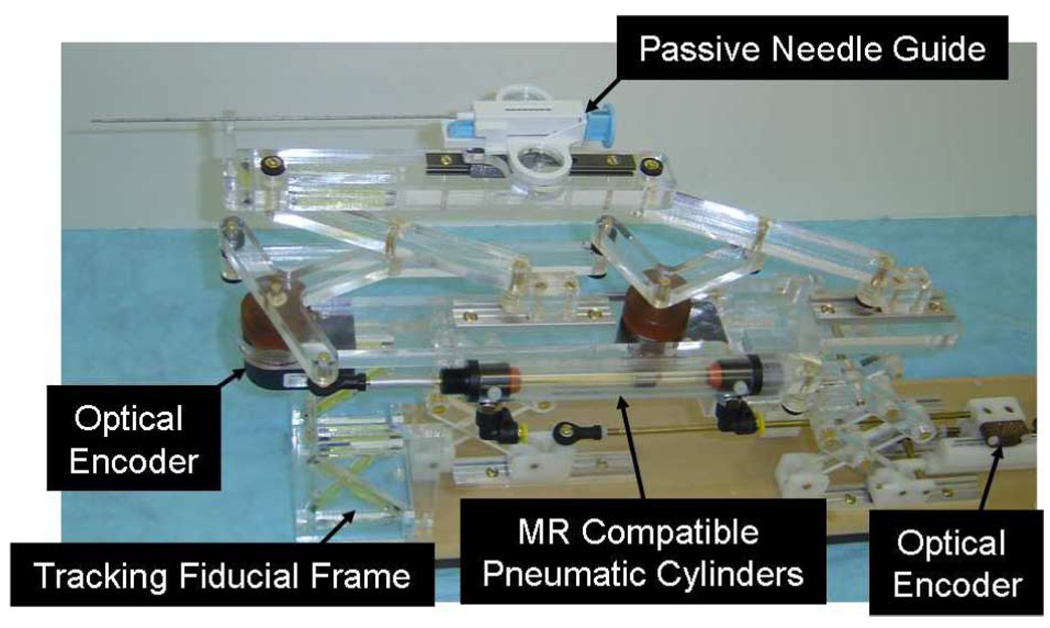

Fig. 9.

Robotic needle placement mechanism with two active DOF and one passive, encoded needle insertion. Dynamic global registration is achieved with the attached tracking fiducial.

F. Robot Controller Hardware

MRI is very sensitive to electrical signals passing into and out of the scanner room. Cables carrying electrical signals through the patch panel or waveguide can act as antennas, bringing stray RF noise into the scanner room. For that reason, and to minimize the distance between the valves and the robot, the robot controller is placed inside the scanner room with no external electrical connections. The controller consists of an electromagnetic interference (EMI) shielded enclosure that sits at the foot of the scanner bed, as shown in Fig. 6; it has been shown to operate 3 m from the edge of the scanner bore. Inside the enclosure is an embedded computer with analog I/O for interfacing with valves and pressure sensors and a field-programmable gate array (FPGA) module for interfacing with joint encoders (see Fig. 5). The enclosure also houses the piezoelectric servo valves, piezoelectric brake valves, and pressure sensors. The distance between the servo valves and the robot is kept below 5 m, maximizing the bandwidth of the pneumatic actuators. Control software on the embedded PC provides low-level joint control and an interface for interactive scripting and higher level trajectory planning. The low-level control PC communicates with the planning and control workstation in the MR console room over a 100BASE-FX fiber-optic Ethernet connection. In the prototype system, power is supplied to the controller from a filtered dc power supply that passes through the patch panel; a commercially available MR-compatible power supply will be used in future design iterations. No other electrical connections pass out of the scanner room, which significantly limits MR imaging interference.

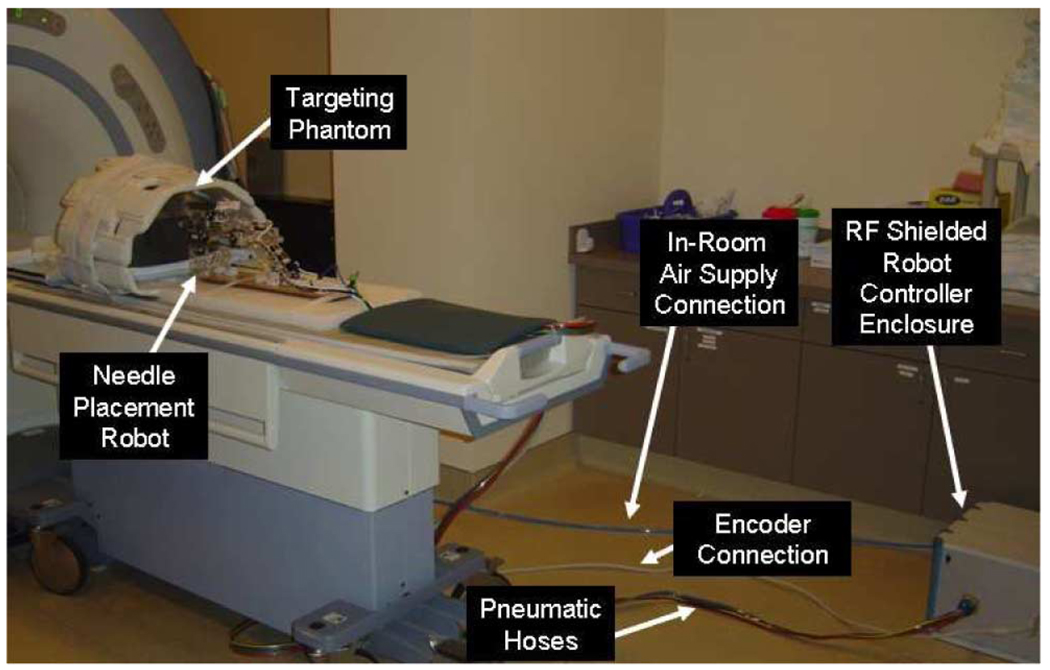

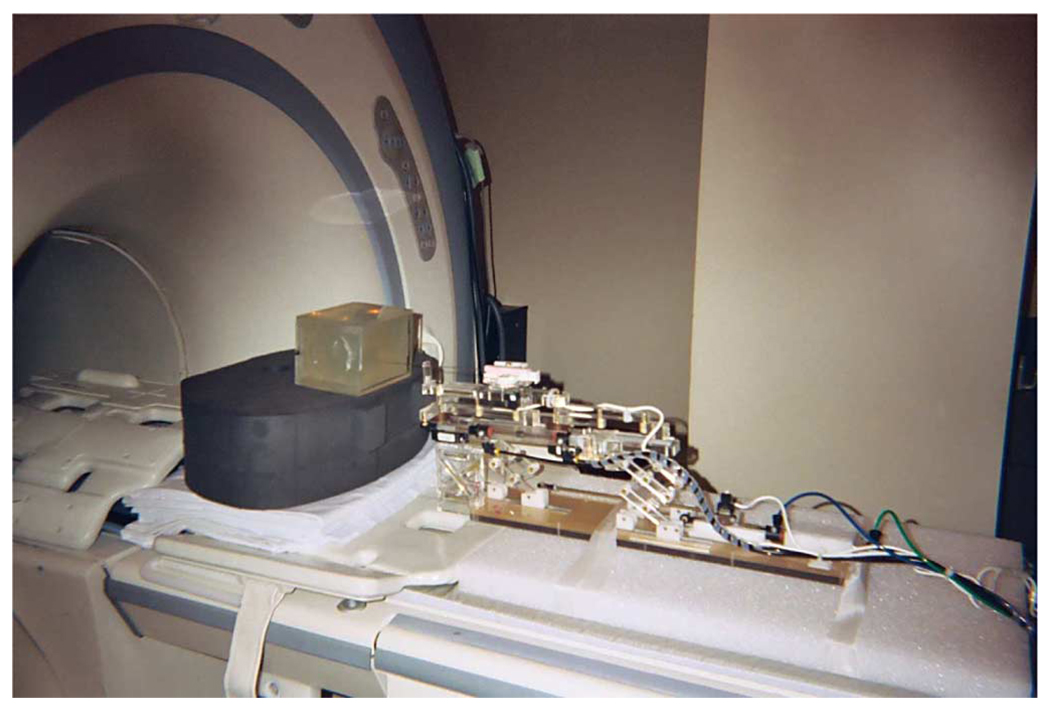

Fig. 6.

Configuration of robot for system evaluation trials. The robot resides on the table at a realistic relative position to the phantom. The controller operates in the room at a distance of 3 m from the 3-T MRI scanner without functional difficulties or significant image quality degradation.

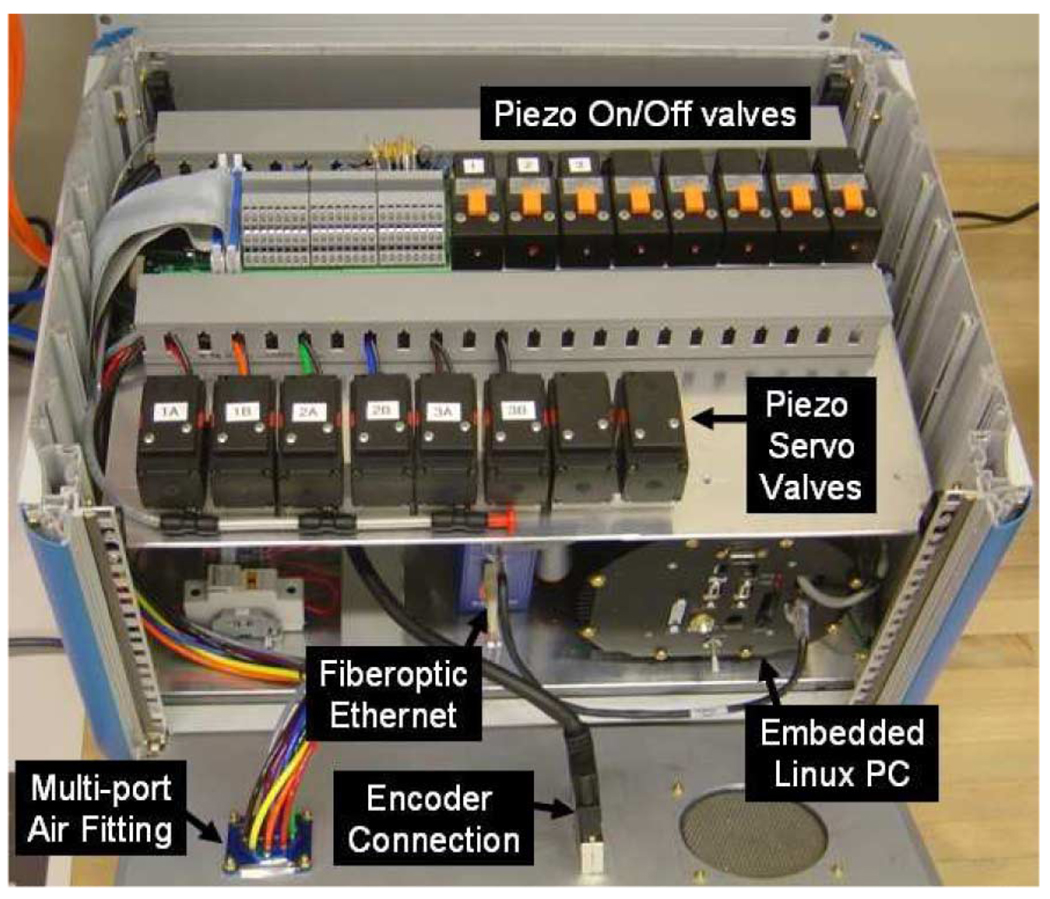

Fig. 5.

Controller contains the embedded Linux PC providing low-level servo control, the piezoelectric valves, and the fiber-optic Ethernet converter. The EMI shielded enclosure is placed inside the scanner room near the foot of the bed. Connections to the robot include the multitube air hose and the encoder cable; connection to the planning workstation is via fiber-optic Ethernet.

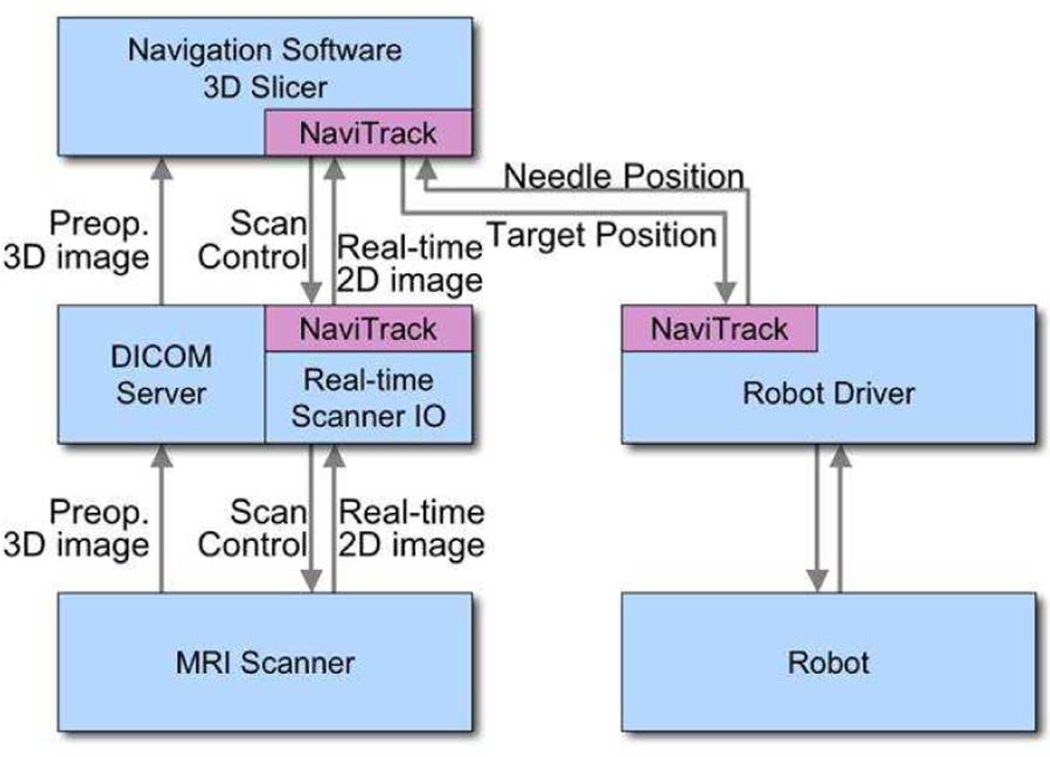

G. Interface Software

The 3D Slicer surgical navigation software⁵ serves as the user interface to the robot. The navigation software runs on a Linux-based workstation in the scanner’s console room. A customized graphical user interface (GUI) designed specifically for prostate intervention with the robot is described in [31]. The interface supports smooth operation of the system throughout the clinical workflow, including registration, planning, targeting, monitoring, and verification (see Fig. 8). The workstation is connected to the robot and the scanner’s console via Ethernet. An open-source device connection and communication tool originally developed for virtual reality research [32] is used to exchange data such as control commands, positional data, and images among the components. Fig. 7 shows the configuration used in the system.

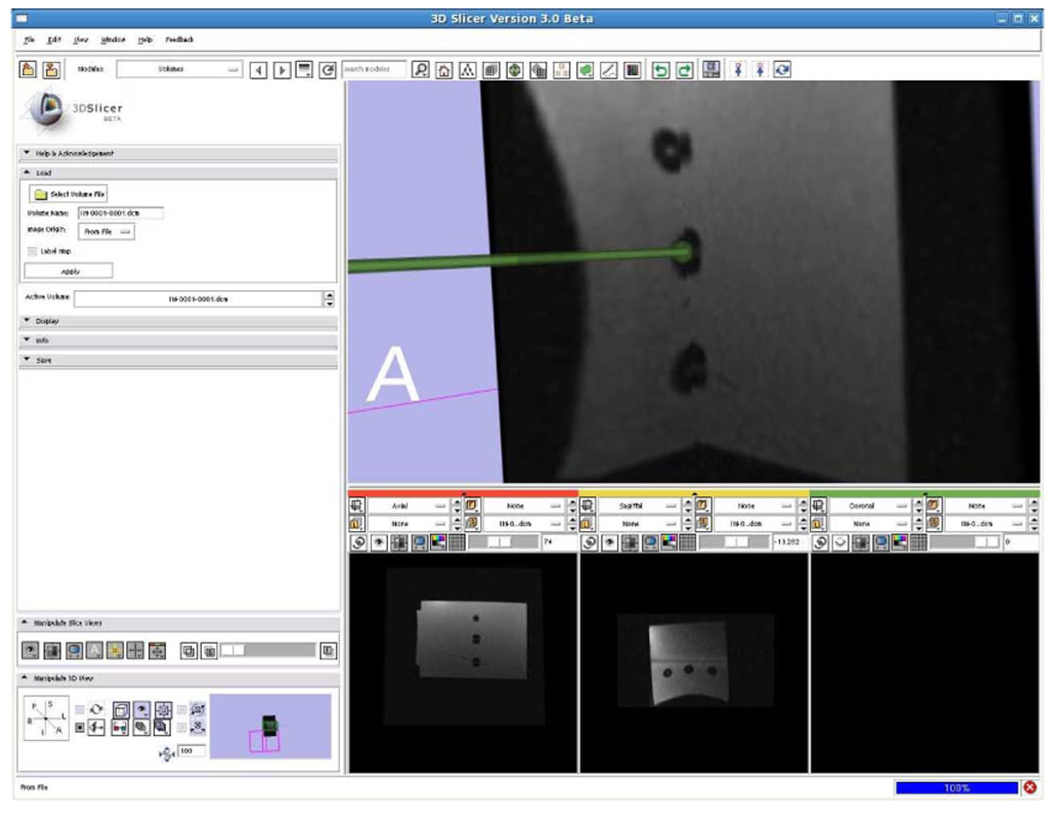

Fig. 8.

3D Slicer planning workstation showing a selected target and the real-time readout of the robot’s needle position. The line represents a projection along the needle axis, and the sphere represents the location of the needle tip.

Fig. 7.

Diagram shows the connection and the data flow among the components. NaviTrack, an open-source device communication tool, is used to exchange control, position, and image data.

In the planning phase, preoperative images are retrieved from a DICOM server and loaded into the navigation software. Registration between the preoperative planning images and the intraoperative images is performed using techniques such as those described in [33]. Target points for needle insertion are chosen on the preoperative images, and their coordinates are specified in the planning GUI. Once the patient and the robot are placed in the MRI scanner, a 2-D image of the fiducial frame is acquired and passed to the navigation software, which calculates the 6-DOF pose of the robot base for robot-to-image registration, as described in Section III-E. The position and orientation of the robot base are sent over the network from the navigation software to the robot controller. After registration, the robot can accept target coordinates expressed in the image (patient) coordinate system.

During the procedure, a target and an entry point are chosen in the navigation software; the robot is sent their coordinates and aligns the needle guide accordingly. In the current system, the needle is inserted manually while its position is measured by the encoded needle guide and displayed in real time. Needle advancement in the tissue is visualized in the navigation software in two complementary ways: 1) a 3-D view of a needle model combined with the preoperative 3-D image resliced in planes intersecting the needle axis, and 2) 2-D real-time MR images acquired in planes along or perpendicular to the needle path and continuously transferred from the scanner over the network. The former has a high refresh rate, typically 10 Hz, allowing the clinician to manipulate the needle interactively, while the latter shows changes in the shape or position of the target lesion at a slower rate that depends on the imaging speed, typically 0.5 frames per second.
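The 3-D needle overlay amounts to projecting the encoded insertion depth along the aligned needle axis. The sketch below, with hypothetical function names and coordinates, computes the tip position and splits the remaining tip-to-target vector into the distance still to insert and a lateral miss distance. It assumes the guide's entry point and axis have already been mapped into image coordinates through the registration.

```python
import math


def needle_tip(entry, direction, depth_mm):
    """Tip = guide entry point + insertion depth along the unit needle axis.
    Returns (tip, unit_axis)."""
    norm = math.sqrt(sum(d * d for d in direction))
    u = tuple(d / norm for d in direction)
    return tuple(e + depth_mm * ui for e, ui in zip(entry, u)), u


def target_offsets(tip, u, target):
    """Split the tip-to-target vector into distance remaining along the axis
    and perpendicular (lateral) miss distance, both in mm."""
    w = tuple(t - p for t, p in zip(target, tip))
    along = sum(wi * ui for wi, ui in zip(w, u))
    # max() guards against tiny negative values from floating-point rounding.
    lateral = math.sqrt(max(0.0, sum(wi * wi for wi in w) - along ** 2))
    return along, lateral


tip, u = needle_tip((0.0, 0.0, 0.0), (0.0, 0.0, 2.0), 80.0)
print(tip, target_offsets(tip, u, (1.0, 0.0, 100.0)))
```

Here an 80 mm insertion leaves 20 mm to go along the axis with a 1 mm lateral offset from the target.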

The interface software enables “closed-loop” needle guidance, in which each action of the robot is captured by MR imaging and immediately fed back to the physician to aid the decision on the next action. A human is kept in the loop to increase the safety of needle insertion and so that live MR images can be used to monitor progress. Typically, the robot fully aligns the needle as planned before it contacts the patient; the placement is then adjusted, if necessary, in response to the MR images, and the physician performs the insertion under real-time imaging. Fig. 8 shows the planning software with an MR image of the phantom loaded; real-time feedback of the robot position is used to generate the overlaid needle axis model.

IV. RESULTS

The first iteration of the needle placement robot has been constructed and is operational. All mechanical, electrical, communications, and software issues have been resolved. The manipulator currently has two actuated DOFs (vertical and horizontal) and an encoded passive needle insertion stage, as shown in Fig. 9. The robot is evaluated in three distinct phases: 1) evaluation of MR compatibility; 2) evaluation of the workspace and workflow; and 3) evaluation of localization and placement accuracy.

A. MR Compatibility

MR compatibility comprises three elements: 1) safety; 2) preservation of image quality; and 3) maintained functionality. Safety hazards such as RF heating are minimized by isolating the robot from the patient, avoiding wire coils, and avoiding resonances in the robot's components. Ferrous materials are avoided entirely to eliminate the risk of projectiles. Image quality is preserved by, again, avoiding ferromagnetic materials, limiting conductive materials near the imaging site, and avoiding RF sources that can interfere with the field homogeneity and the sensed RF signals. Pneumatic actuation and optical sensing, as described in Section III, preserve the full functionality of the robot in the scanner during imaging. The MR compatibility of the system is evaluated thoroughly in [25].

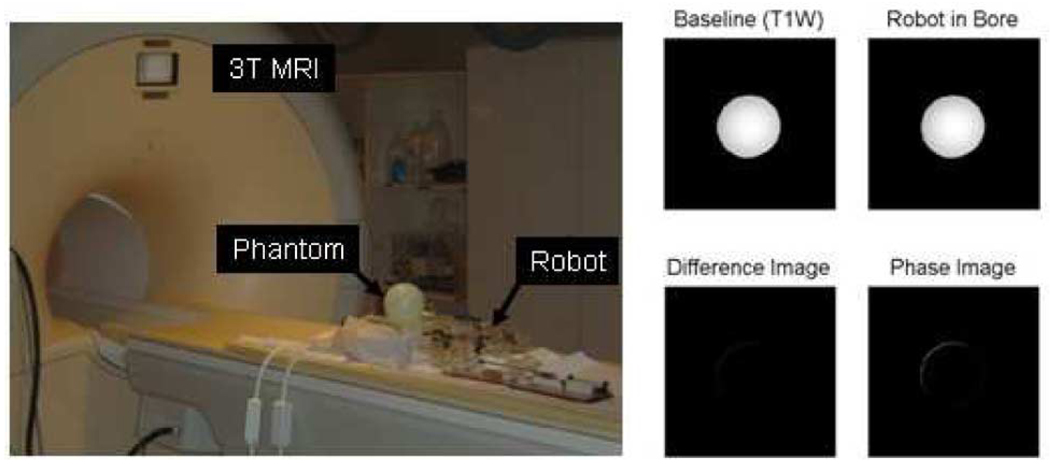

Compatibility was evaluated on a 3T Philips Achieva scanner. A 110 mm, fluid-filled spherical MR phantom was placed in the isocenter and the robot placed such that the tip was at a distance of 120 mm from the center of the phantom (a representative depth from perineum to prostate), as shown in Fig. 10 (left). The phantom was imaged using three standard prostate imaging protocols:

T2W TSE: T2-weighted turbo spin echo (28 cm FOV, 3 mm slice, TE = 90 ms, TR = 5600 ms);

T1W FFE: T1-weighted fast field gradient echo (28 cm FOV, 3 mm slice, TE = 2.3 ms, TR = 264 ms); and

TFE (FGRE): “real-time” turbo field gradient echo (28 cm FOV, 3 mm slice, TE = 10 ms, TR = 26 ms).

Fig. 10.

Configuration of the system for MRI compatibility trials. The robot is placed on the bed with the center of a spherical phantom (left) 120 mm from the tip of the robot and the controller is placed in the scanner room near the foot of the bed. Images of the phantom taken with the T1W sequence are shown with and without the robot present (right).

A baseline scan of the phantom with no robot components present was acquired with each sequence, using round flex coils similar to those often used in prostate imaging. Imaging series were then acquired in each of the following configurations: 1) phantom only; 2) controller in the room and powered; 3) robot placed in the scanner bore; 4) robot electrically connected to the controller; and 5) robot moving during imaging (T1W imaging only). For each configuration, all three imaging sequences were performed (except as noted for configuration 5), and both magnitude and phase images were collected.

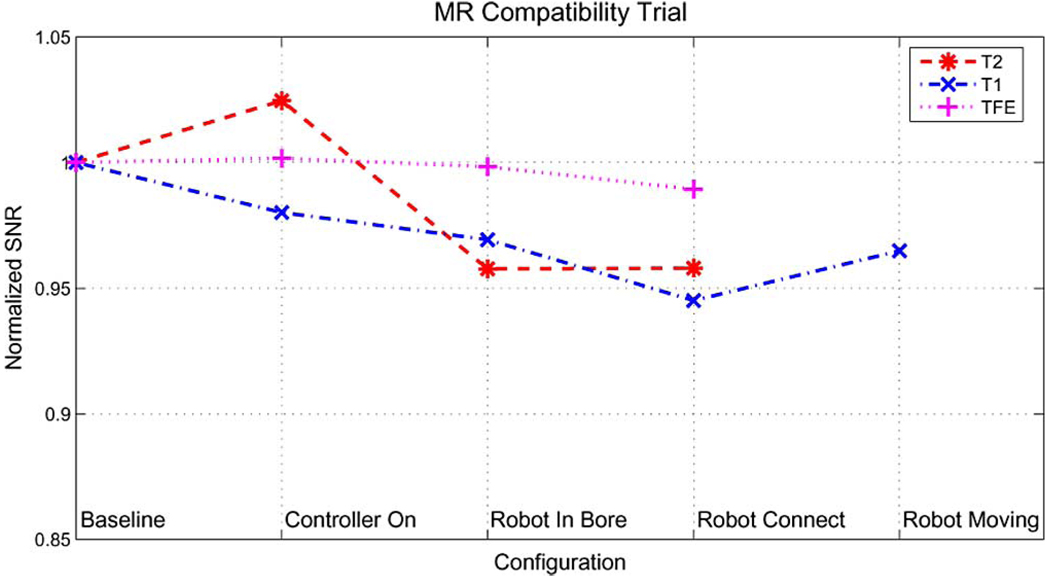

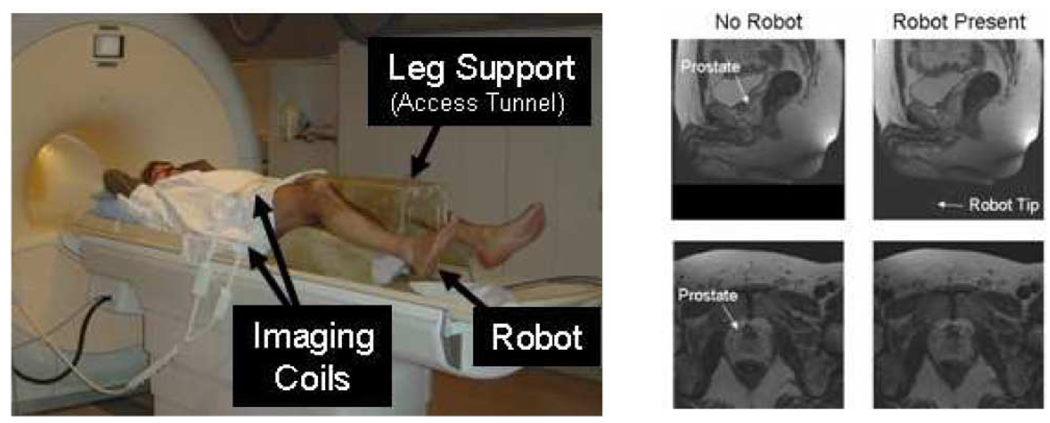

The degradation of SNR was used as the measure of negative effects on image quality. The SNR of the MR images was defined as the mean signal in a 25 mm square at the center of the homogeneous sphere divided by the standard deviation of the signal in the same region. The SNR was normalized by the value for the baseline image, which limits any bias due to the choice of calculation technique or location. SNR was evaluated in seven 3 mm thick slices (representing a 25 mm cube) at the center of the sphere for each of the three imaging sequences. The points in Fig. 11 show the SNR in the phantom for each of the seven slices, for each sequence in each configuration. The lines represent the average SNR in the 25 mm cube at the center of the spherical phantom for each sequence in each configuration. With the robot operational, the reduction in SNR of the cube at the phantom’s center was 5.5% for T1W FFE, 4.2% for T2W TSE, and 1.1% for TFE (FGRE). The effect of the robot on image quality was further evaluated qualitatively by examining prostate images taken with and without the robot present. Fig. 12 (right) shows images of the prostate of a volunteer placed in the scanner bore on the leg rest. With the robot operational, there is no visually identifiable loss in the image quality of the prostate.
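The SNR metric described above can be sketched as follows. The sketch computes SNR as the mean divided by the standard deviation within each slice's region, averages the per-slice values, and normalizes by the baseline average. Two points are assumptions and are not stated in the text: that the cube value is the mean of the per-slice SNRs, and that the sample standard deviation is used. The pixel data are hypothetical stand-ins for the 25 mm ROIs.

```python
import statistics


def roi_snr(pixels):
    """SNR of one region: mean signal divided by its standard deviation."""
    return statistics.mean(pixels) / statistics.stdev(pixels)


def normalized_snr(slices, baseline_slices):
    """Mean per-slice SNR for a configuration divided by that of the baseline."""
    test = statistics.mean(roi_snr(s) for s in slices)
    base = statistics.mean(roi_snr(s) for s in baseline_slices)
    return test / base


def percent_reduction(norm_snr):
    """Percentage SNR loss relative to the baseline (0 means no degradation)."""
    return 100.0 * (1.0 - norm_snr)


# Hypothetical data: 7 slices, each a tiny stand-in ROI with the same mean
# signal; the "robot operational" case has a 5% larger noise spread.
PATTERN = [-2.0, -1.0, 0.0, 1.0, 2.0]
baseline = [[100.0 + d for d in PATTERN] for _ in range(7)]
with_robot = [[100.0 + 1.05 * d for d in PATTERN] for _ in range(7)]

ratio = normalized_snr(with_robot, baseline)
print(f"normalized SNR = {ratio:.4f}, reduction = {percent_reduction(ratio):.2f}%")
```

Because both configurations use the same estimator and ROI size, a change such as switching between sample and population standard deviation scales both SNRs by the same factor and cancels in the normalized ratio. This is consistent with the text's point that normalization limits bias from the calculation technique.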

Fig. 11.

Quantitative measure of image quality for three standard prostate imaging protocols with the system in different configurations. Lines represent the mean SNR within a 25-mm cube at the center of the homogeneous phantom, normalized by the baseline.

Fig. 12.

Qualitative analysis of prostate image quality. The patient is placed on the leg support, and the robot sits inside the support tunnel in the scanner bore (left). T2-weighted sagittal and transverse images of the prostate taken with no robot components present and with the robot active in the scanner (right).

B. Accuracy

Accuracy assessment is divided into two parts: localization and placement. The distinction between the two is important in many medical applications. In prostate biopsy, it is essential to know exactly where a biopsy sample comes from in order to form a treatment plan if cancer is found. In brachytherapy, seed placement plans must avoid cold spots where irradiation is insufficient. Knowing where seeds have actually been placed allows the dosimetry and treatment plan to be updated interactively. Based on encoder resolution, the localization accuracy of the robot is better than 0.1 mm in all directions.

Positioning accuracy depends on the servo pneumatic control system. The current pneumatic servo control algorithm is based on sliding mode control (SMC) techniques. A positioning accuracy of 0.26 mm rms error has been achieved with the cylinder alone over 120 point-to-point moves. With the robot fully connected, the positioning accuracy was measured to be 0.94 mm rms error over 120 point-to-point moves. Development and refinement of a novel pneumatic control architecture are underway, with the goal of bringing the per-axis positioning accuracy close to the 0.1 mm resolution of the encoders.
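As an illustration of the class of controller named above, the sketch applies a textbook sliding mode controller with a boundary layer to a simplified, hypothetical cylinder model. The model is a mass with viscous friction, plus Coulomb friction that the controller does not model. It is not the authors' controller. Real pneumatic cylinders have chamber pressure dynamics and valve nonlinearities that this sketch ignores, and every parameter value is an assumption. The sketch also computes the rms error over a few point-to-point moves, which is the metric quoted in the text.

```python
import math

# Hypothetical plant and controller parameters (SI units); not the authors' values.
MASS = 1.0       # moving mass [kg]
DAMPING = 5.0    # viscous friction coefficient [N*s/m], assumed known
COULOMB = 5.0    # Coulomb friction [N], NOT modeled by the controller
LAMBDA = 20.0    # sliding-surface slope [1/s]
GAIN = 50.0      # switching gain [N], chosen larger than COULOMB
PHI = 0.05       # boundary-layer width [m/s], limits chattering
DT = 1e-4        # explicit Euler integration step [s]


def sat(z):
    """Saturation: replaces sign() so the control is continuous inside the layer."""
    return max(-1.0, min(1.0, z))


def smc_force(x, v, x_des):
    """Sliding mode control force for a constant set point x_des."""
    e = x - x_des
    s = v + LAMBDA * e                      # sliding surface s = de/dt + LAMBDA*e
    # Equivalent control cancels the modeled dynamics, leaving
    # ds/dt = -(GAIN*sat(s/PHI) + friction) / MASS.
    u_eq = DAMPING * v - MASS * LAMBDA * v
    return u_eq - GAIN * sat(s / PHI)


def move(x0, x_des, duration=1.5):
    """Simulate one point-to-point move from rest; return the final error [m]."""
    x, v = x0, 0.0
    for _ in range(int(duration / DT)):
        u = smc_force(x, v, x_des)
        friction = math.copysign(COULOMB, v) if v != 0.0 else 0.0
        a = (u - DAMPING * v - friction) / MASS
        x, v = x + DT * v, v + DT * a
    return x - x_des


def rms(values):
    """Root-mean-square of a list of errors."""
    return math.sqrt(sum(e * e for e in values) / len(values))


targets = [0.02, -0.01, 0.035, 0.0, 0.015]  # hypothetical set points [m]
errors, pos = [], 0.0
for x_des in targets:
    err = move(pos, x_des)
    errors.append(err)
    pos = x_des + err                        # next move starts where this one ended
print(f"rms error over {len(errors)} moves: {rms(errors) * 1e3:.4f} mm")
```

The boundary-layer width PHI trades chattering against steady-state error. Inside the layer, the switching term behaves like high-gain linear feedback, so the unmodeled friction leaves a small residual error, of order PHI * COULOMB / (GAIN * LAMBDA), instead of a chattering limit cycle.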

C. System Integration and Workflow

To evaluate the overall clinical layout and workflow, the robot was placed in the bore of a 3T Philips scanner inside the leg rest with a volunteer, as shown in Fig. 12 (left). Round flex receiver coils were used for this trial; endorectal coils can be used in clinical cases to obtain optimal image quality. There was adequate room for the patient, and the robot was able to maintain its necessary workspace. Further studies are underway in which volunteers are imaged on the leg rest in the appropriate semilithotomy position and the prostate and anatomical constraints are analyzed.

Co-registration of the robot to the scanner was performed in a 3T GE scanner using the tracking fiducial described in Section III-E and shown in Fig. 9. Images of the robot’s tracking fiducial provide the location of the robot base in the scanner’s coordinate system with an rms accuracy of 0.14 mm and 0.37°. The joint encoders on the robot allow the end-effector position and orientation to be determined with respect to the robot. Because of the mechanism design, the end-effector localization resolution varies nonlinearly across the workspace. Based on encoder resolution, the worst-case link localization resolution for the horizontal and vertical motions is 0.01 and 0.1 mm, respectively. The overall accuracy of needle tip localization with respect to the MR images is better than 0.25 mm and 0.5°.

To validate the workflow, five needle insertions were performed in a gel phantom in a 3T GE scanner, using the configuration shown in Fig. 13. The robot was registered to the scanner, planning images of the phantom were acquired, and targets were selected and transmitted to the robot. The robot then aligned the needle, with real-time feedback of the needle position overlaid on the planning software. Five out of five 10-mm targets were successfully targeted in the phantom study. A detailed analysis of full-system accuracy, incorporating errors induced by registration, needle deflection, and tissue deformation, is underway.

Fig. 13.

Robotic system performing a needle insertion into a phantom in preliminary workflow evaluation experiments. Five out of five 1-cm targets were successfully targeted.

V. DISCUSSION

We have developed a prototype of an MRI-compatible manipulator and the supporting system architecture for needle placement in the prostate for biopsy and brachytherapy procedures. The robot is designed to operate in the confined space between the patient’s legs, inside the leg rest, in a high-field, closed-bore MRI scanner. Unlike other approaches to transperineal robotic prostate intervention, this system places the patient in the semilithotomy position. This affords several benefits: 1) preoperative imaging corresponds directly to the intraprocedural images; 2) patient and organ motions are limited; and 3) the workflow of the conventional TRUS-guided procedure can be preserved. The configuration allows diagnostic MRI scanners to be used in interventional procedures. Open or large-bore scanners, which are often difficult to come by and sacrifice image quality, are not needed.

Attaining an acceptable level of MR compatibility required significant experimental evaluation. Several types of actuators, including piezoelectric motors and pneumatic cylinder/valve pairs, were evaluated. The authors’ experience with piezoelectric motors was not as positive as reported elsewhere in the literature. Although high-quality images could be obtained with the system in the room, noticeable noise was present when the motors were running during imaging. This prompted the investigation of pneumatic actuators, which have great potential for MRI-compatible mechatronic systems. Because no electronics are required, they are fundamentally compatible with the MR environment. However, several obstacles must be overcome. These include: 1) material compatibility, which was addressed with custom air cylinders made of glass with graphite pistons; 2) lack of stiffness, or instability, which was addressed with a pneumatic brake that locks the cylinder rod during needle insertion; and 3) difficult control, which was ameliorated by using high-speed valves and by shortening the pneumatic hoses through the design of an MRI-compatible controller. Pneumatic actuation appears to be an ideal solution for this robotic system, and it allows the robot to meet all of the design requirements set forth in Section II. Further, MR compatibility of the system, including the robot and controller, is excellent, with no more than a 5% loss in average SNR with the robot operational.

The system has been evaluated in a variety of tests. The physical configuration of the robot and controller appears ideal for this procedure. MR compatibility has been shown to be sufficient for anatomical imaging with traditional prostate imaging sequences. Communication between all of the elements, including the robot, the low-level controller, the planning workstation, and the MR scanner real-time imaging interface, is in place. Initial phantom studies validated the workflow and the ability to accurately localize the robot and target a lesion.

The primary elements of the system are now in place. Current research focuses on refining the control system and the interface software. The next phase of this research will focus on developing a clinical-grade system and preparing for phase-1 clinical trials. The initial application will be prostate biopsy, followed later by brachytherapy seed placement. With the addition of the two rotational DOFs, the manipulator design will allow treatment of patients who might otherwise have been denied such treatment because of contraindications such as significant pubic arch interference (PAI). The robot, the controller, and the system architecture, individually or together, are generally applicable to other MR-guided robotic applications.

Acknowledgments

This work was supported in part by the National Science Foundation under Grant EEC-97-31478, in part by the National Institutes of Health (NIH) Bioengineering Research Partnership under Grant RO1-CA111288-01, in part by the NIH under Grant U41-RR019703, and in part by the Congressionally Directed Medical Research Programs Prostate Cancer Research Program (CDMRP PCRP) Fellowship W81XWH-07-1-0171.

Biographies

Gregory S. Fischer (M’02) received the B.S. degree in electrical engineering and the B.S. degree in mechanical engineering from Rensselaer Polytechnic Institute, Troy, NY, both in 2002, and the M.S.E. degree in electrical engineering and the M.S.E. degree in mechanical engineering in 2004 and 2005, respectively, from The Johns Hopkins University, Baltimore, MD, where he is currently working toward the Ph.D. degree in mechanical engineering.

Since 2002, he has been a Graduate Research Assistant at The Johns Hopkins University Center for Computer Integrated Surgery. He will be taking on the role of Assistant Professor at Worcester Polytechnic Institute, Worcester, MA, in Fall 2008. His current research interests include development of interventional robotic systems, robot mechanism design, pneumatic control systems, surgical device instrumentation, and MRI-compatible robotic systems.

Iulian Iordachita received the B.Eng. degree in mechanical engineering, the M.Eng. degree in industrial robots, and the Ph.D. degree in mechanical engineering in 1984, 1989, and 1996, respectively, all from the University of Craiova, Craiova, Romania.

He is currently an Associate Research Scientist at the Center for Computer Integrated Surgery, The Johns Hopkins University, Baltimore, MD, where he is engaged in research on robotics, in particular, robotic hardware. His current research interests include design and manufacturing of surgical instrumentation and devices, medical robots, mechanisms and mechanical transmissions for robots, and biologically inspired robots.

Csaba Csoma received the B.Sc. degree in computer science from Dennis Gabor University, Budapest, Hungary, in 2002.

He is currently with the Engineering Research Center for Computer Integrated Surgical Systems and Technology, The Johns Hopkins University, Baltimore, MD, as a Software Engineer. His current research interests include development of communication software and graphical user interface for surgical navigation and medical robotics applications.

Junichi Tokuda received the B.S. degree in engineering, the M.S. degree in information science and technology, and the Ph.D. degree in information science and technology in 2002, 2004, and 2007, respectively, all from the University of Tokyo, Tokyo, Japan.

He is currently a Research Fellow at Brigham and Women’s Hospital and Harvard Medical School, Boston, MA. His current research interests include magnetic resonance imaging (MRI)-guided therapy, including magnetic resonance pulse sequences, navigation software, MRI-compatible robots, and the integration of these technologies into the operating environment.

Simon P. DiMaio (M’98) received the B.Sc. degree in electrical engineering from the University of Cape Town, Cape Town, South Africa, in 1995, and the M.A.Sc. and Ph.D. degrees from the University of British Columbia, Vancouver, BC, Canada, in 1998 and 2003, respectively.

He was a Research Fellow at the Surgical Planning Laboratory, Brigham and Women’s Hospital, Harvard Medical School, Boston, MA, where he was engaged in research on robotic mechanisms and tracking devices for image-guided surgery. Since 2007, he has been with the Applied Research Group at Intuitive Surgical, Inc., Sunnyvale, CA. His current research interests include mechanisms and control systems for surgical robotics, medical simulation, image-guided therapies, and haptics.

Clare M. Tempany received the M.D. degree from the Royal College of Surgeons, Dublin, Ireland, in 1981.

She is currently the Ferenc Jolesz Chair and the Vice-Chair of Radiology Research, Department of Radiology, Brigham and Women’s Hospital (BWH), Boston, MA, and a Professor of Radiology at Harvard Medical School, Boston. She is also the Co-Principal Investigator and the Clinical Director of the National Center for Image Guided Therapy (NCIGT), BWH, and leads an active research group at the MR Guided Prostate Interventions Laboratory, BWH. Her current research interests include magnetic resonance imaging of the pelvis and image-guided therapy.

Nobuhiko Hata (M’01) was born in Kobe, Japan. He received the B.E. degree in precision machinery engineering in 1993, and the M.E. and D.Eng. degrees in precision machinery engineering in 1995 and 1998, respectively, all from the University of Tokyo, Tokyo, Japan.

He is currently an Assistant Professor of Radiology at Harvard Medical School, Boston, MA, and the Technical Director of the Image Guided Therapy Program, Brigham and Women’s Hospital, Boston. His current research interests include medical image processing and robotics in image-guided surgery.

Gabor Fichtinger (M’04) received the B.S. and M.S. degrees in electrical engineering, and the Ph.D. degree in computer science from the Technical University of Budapest, Budapest, Hungary, in 1986, 1988, and 1990, respectively.

During 1998, he was a Charter Faculty Member at the National Science Foundation Engineering Research Center for Computer Integrated Surgery Systems and Technologies, The Johns Hopkins University, Baltimore, MD. Since 2007, he has been with Queen’s University, Kingston, ON, Canada, where he was an Interdisciplinary Faculty of Computer Assisted Surgery and is currently an Associate Professor of Computer Science, Mechanical Engineering, and Surgery. His current research interests include computer-assisted surgery and medical robotics, with a strong emphasis on image-guided oncological interventions.

Footnotes

Color versions of one or more of the figures in this paper are available online at http://ieeexplore.ieee.org.

Airpel E9 Anti-stiction Air Cylinder—http://www.airpel.com

Hoerbiger–Origa PRE-U piezo valve—http://www.hoerbigeroriga.com

EM1-1250 and E5D-1250 encoder modules with PC5 differential line driver—U.S. Digital, Vancouver, WA.

MR Spots—Beekley, Bristol, CT.

3-D slicer, http://www.slicer.org

Contributor Information

Gregory S. Fischer, Email: gfischer@jhu.edu, Engineering Research Center for Computer Integrated Surgery, Johns Hopkins University, Baltimore, MD 21218 USA.

Iulian Iordachita, Email: iordachita@jhu.edu, Engineering Research Center for Computer Integrated Surgery, Johns Hopkins University, Baltimore, MD 21218 USA.

Csaba Csoma, Email: csoma@jhu.edu, Engineering Research Center for Computer Integrated Surgery, Johns Hopkins University, Baltimore, MD 21218 USA.

Junichi Tokuda, Email: tokuda@bwh.harvard.edu, National Center for Image Guided Therapy (NCIGT), Brigham and Women’s Hospital, Department of Radiology, Harvard Medical School, Boston, MA 02115 USA.

Simon P. DiMaio, Email: Simon.DiMaio@intusurg.com, Intuitive Surgical, Inc., Sunnyvale, CA 94086 USA.

Clare M. Tempany, Email: ctempany@bwh.harvard.edu, National Center for Image Guided Therapy (NCIGT), Brigham and Women’s Hospital, Department of Radiology, Harvard Medical School, Boston, MA 02115 USA.

Nobuhiko Hata, Email: hata@bwh.harvard.edu, National Center for Image Guided Therapy (NCIGT), Brigham and Women’s Hospital, Department of Radiology, Harvard Medical School, Boston, MA 02115 USA.

Gabor Fichtinger, Email: gabor@cs.queensu.ca, School of Computing, Queen’s University, Kingston, ON K7L 3N6, Canada.

REFERENCES

- 1. Jemal A, Siegel R, Ward E, Murray T, Xu J, Thun M. Cancer statistics, 2007. CA Cancer J. Clin. 2007;vol. 57(no. 1):43–66. doi: 10.3322/canjclin.57.1.43.

- 2. Blasko JC, Mate T, Sylvester J, Grimm P, Cavanagh W. Brachytherapy for carcinoma of the prostate. Semin. Radiat. Oncol. 2002;vol. 12(no. 1):81–94. doi: 10.1053/srao.2002.28667.

- 3. Cooperberg MR, Lubeck DP, Meng MV, Mehta SS, Carroll PR. The changing face of low-risk prostate cancer: Trends in clinical presentation and primary management. J. Clin. Oncol. 2004 Jun.vol. 22(no. 11):2141–2149. doi: 10.1200/JCO.2004.10.062.

- 4. Presti JC., Jr. Prostate cancer: Assessment of risk using digital rectal examination, tumor grade, prostate-specific antigen, and systematic biopsy. Radiol. Clin. North Amer. 2000;vol. 38(no. 1):49–58. doi: 10.1016/s0033-8389(05)70149-4.

- 5. Terris MK, Wallen EM, Stamey TA. Comparison of mid-lobe versus lateral systematic sextant biopsies in detection of prostate cancer. Urol. Int. 1997;vol. 59:239–242. doi: 10.1159/000283071.

- 6. D’Amico AV, Cormack R, Tempany CM, Kumar S, Topulos G, Kooy HM, Coleman CN. Real-time magnetic resonance image-guided interstitial brachytherapy in the treatment of select patients with clinically localized prostate cancer. Int. J. Radiation Oncol. 1998 Oct.vol. 42:507–515. doi: 10.1016/s0360-3016(98)00271-5.

- 7. D’Amico AV, Tempany CM, Cormack R, Hata N, Jinzaki M, Tuncali K, Weinstein M, Richie J. Transperineal magnetic resonance image guided prostate biopsy. J. Urol. 2000;vol. 164(no. 2):385–387.

- 8. Zangos S, Eichler K, Engelmann K, Ahmed M, Dettmer S, Herzog C, Pegios W, Wetter A, Lehnert T, Mack MG, Vogl TJ. MR-guided transgluteal biopsies with an open low-field system in patients with clinically suspected prostate cancer: Technique and preliminary results. Eur. Radiol. 2005;vol. 15(no. 1):174–182. doi: 10.1007/s00330-004-2458-2.

- 9. Susil RC, Camphausen K, Choyke P, McVeigh ER, Gustafson GS, Ning H, Miller RW, Atalar E, Coleman CN, Ménard C. System for prostate brachytherapy and biopsy in a standard 1.5 T MRI scanner. Magn. Resonance Med. 2004;vol. 52:683–687. doi: 10.1002/mrm.20138.

- 10. Beyersdorff D, Winkel A, Hamm B, Lenk S, Loening SA, Taupitz M. MR imaging-guided prostate biopsy with a closed MR unit at 1.5 T. Radiology. 2005;vol. 234:576–581. doi: 10.1148/radiol.2342031887.

- 11. Tsekos NV, Khanicheh A, Christoforou E, Mavroidis C. Magnetic resonance-compatible robotic and mechatronics systems for image-guided interventions and rehabilitation: A review study. Annu. Rev. Biomed. Eng. 2007 Aug.vol. 9:351–387. doi: 10.1146/annurev.bioeng.9.121806.160642.

- 12. Masamune K, Kobayashi E, Masutani Y, Suzuki M, Dohi T, Iseki H, Takakura K. Development of an MRI-compatible needle insertion manipulator for stereotactic neurosurgery. J. Image Guid. Surg. 1995;vol. 1(no. 4):242–248. doi: 10.1002/(SICI)1522-712X(1995)1:4<242::AID-IGS7>3.0.CO;2-A.

- 13. Felden A, Vagner J, Hinz A, Fischer H, Pfleiderer SO, Reichenbach JR, Kaiser WA. ROBITOM-robot for biopsy and therapy of the mamma. Biomed. Tech. (Berl.) 2002;vol. 47(Pt. 1) Suppl. 1:2–5. doi: 10.1515/bmte.2002.47.s1a.2.

- 14. Hempel E, Fischer H, Gumb L, Höhn T, Krause H, Voges U, Breitwieser H, Gutmann B, Durke J, Bock M, Melzer A. An MRI-compatible surgical robot for precise radiological interventions. CAS. 2003 Apr.vol. 8(no. 4):180–191. doi: 10.3109/10929080309146052.

- 15. Chinzei K, Hata N, Jolesz FA, Kikinis R. MR compatible surgical assist robot: System integration and preliminary feasibility study. MICCAI. 2000 Oct.vol. 1935:921–933.

- 16. DiMaio SP, Pieper S, Chinzei K, Fichtinger G, Tempany C, Kikinis R. Proc. MRI Symp. 2004. Robot assisted percutaneous intervention in open-MRI; p. 155.

- 17. Krieger A, Susil RC, Menard C, Coleman JA, Fichtinger G, Atalar E, Whitcomb LL. Design of a novel MRI compatible manipulator for image guided prostate interventions. IEEE Trans. Biomed. Eng. 2005 Feb.vol. 52(no. 2):306–313. doi: 10.1109/TBME.2004.840497.

- 18. Stoianovici D, Patriciu A, Petrisor D, Mazilu D, Kavoussi L. A new type of motor: Pneumatic step motor. IEEE/ASME Trans. Mechatron. 2007 Feb.vol. 12(no. 1):98–106. doi: 10.1109/TMECH.2006.886258.

- 19. Elhawary H, Zivanovic A, Rea M, Tse ZTH, McRobbie D, Young I, Paley BDM, Lamperth M. A MR compatible mechatronic system to facilitate magic angle experiments in vivo; Proc. Med. Image Comput. Comput.-Assisted Interv. Conf. (MICCAI); 2007. Nov., pp. 604–611.

- 20. Suzuki T, Liao H, Kobayashi E, Sakuma I. Ultrasonic motor driving method for EMI-free image in MR image-guided surgical robotic system; Proc. IEEE Int. Conf. Intell. Robots Syst. (IROS); 2007. Oct., pp. 522–527.

- 21. Taillant E, Avila-Vilchis J, Allegrini C, Bricault I, Cinquin P. CT and MR compatible light puncture robot: Architectural design and first experiments; Proc. Med. Image Comput. Comput.-Assisted Interv. Conf. (MICCAI); 2004. pp. 145–152.

- 22. Gassert R, Moser R, Burdet E, Bleuler H. MRI/fMRI-compatible robotic system with force feedback for interaction with human motion. IEEE/ASME Trans. Mechatron. 2006 Apr.vol. 11(no. 2):216–224.

- 23. Elhawary H, Zivanovic A, Rea M, Davies B, Besant C, McRobbie D, de Souza N, Young I, Lamperth M. The feasibility of MR-Image guided prostate biopsy using piezoceramic motors inside or near to the magnet isocentre; Proc. Med. Image Comput. Comput.-Assisted Interv. Conf. (MICCAI); 2006. Nov., pp. 519–526.

- 24. Stoianovici D, Song D, Petrisor D, Ursu D, Mazilu D, Mutener M, Schar M, Patriciu A. ‘MRI Stealth’ robot for prostate interventions. Minim. Invasive Ther. Allied Technol. 2007 Jul.vol. 16(no. 4):241–248. doi: 10.1080/13645700701520735.

- 25. Fischer GS, DiMaio SP, Iordachita I, Fichtinger G. Development of a robotic assistant for needle-based transperineal prostate interventions in MRI; Proc. Med. Image Comput. Comput.-Assisted Interv. Conf. (MICCAI); Brisbane, Australia: 2007. Nov., pp. 425–433.

- 26. Wallner K, Blasko J, Dattoli M. Prostate Brachytherapy Made Complicated. 2nd ed. Seattle, WA: SmartMedicine Press; 2001.

- 27. Webster RJ, III, Memisevic J, Okamura AM. Design considerations for robotic needle steering; Proc. IEEE Int. Conf. Robot. Autom; 2005. pp. 3599–3605.

- 28. Schwamb P, Merrill AL. Elements of Mechanism. New York: Wiley; 1905.

- 29. Bone GM, Ning S. Experimental comparison of position tracking control algorithms for pneumatic cylinder actuators. IEEE/ASME Trans. Mechatron. 2007 Oct.vol. 12(no. 5):557–561.

- 30. DiMaio SP, Samset E, Fischer GS, Iordachita I, Fichtinger G, Jolesz F, Tempany C. Dynamic MRI scan plane control for passive tracking of instruments and devices; Proc. Med. Image Comput. Comput.-Assisted Interv. Conf. (MICCAI); 2007. Nov., pp. 50–58.

- 31. Mewes P, Tokuda J, DiMaio SP, Fischer GS, Csoma C, Gobbi DG, Tempany C, Fichtinger G, Hata N. An integrated MRI and robot control software system for an MR-compatible robot in prostate intervention; Proc. IEEE Int. Conf. Robot. Autom. (ICRA); 2008. May.

- 32. Spiczak JV, Samset E, Dimaio SP, Reitmayr G, Schmalstieg D, Burghart C, Kikinis R. Device connectivity for image-guided medical applications. Stud. Health Technol. Inform. 2007;vol. 125:482–484.

- 33. Haker S, Warfield S, Tempany C. Lecture Notes in Computer Science. vol. 3216. New York: Springer-Verlag; 2004. Jan., Landmark-guided surface matching and volumetric warping for improved prostate biopsy targeting and guidance; pp. 853–861.