Abstract

The relationships between hospital Magnet® status, nursing unit staffing, and patient falls were examined in a cross-sectional study using 2004 National Database of Nursing Quality Indicators (NDNQI®) data from 5,388 units in 108 Magnet and 528 non-Magnet hospitals. In multivariate models, the fall rate was 5% lower in Magnet than non-Magnet hospitals. An additional registered nurse (RN) hour per patient day was associated with a 3% lower fall rate in ICUs. An additional licensed practical nurse (LPN) or nursing assistant (NA) hour was associated with a 2–4% higher fall rate in non-ICUs. Patient safety may be improved by creating environments consistent with Magnet hospital standards.

Keywords: patient safety, staffing, hospitals, magnet hospitals, nursing units, patient falls

Despite staff efforts to keep patients safe, some patients fall during their hospital stay. From one to eight patients fall per 1,000 inpatient days depending upon the type of nursing unit (Enloe et al., 2005). Patient falls are one of the eight patient outcomes included in the nursing care performance measures adopted by the National Quality Forum (NQF, 2004, 2009). We theorized that adequate evaluation, support, and supervision of patients by hospital staff can minimize the fall rate. The capacity for staff to evaluate, support, and supervise patients may depend on how a nursing unit is staffed with registered nurses (RNs), licensed practical nurses (LPNs), and nursing assistants (NAs), as well as the proportion of RNs with bachelor’s degrees in nursing, specialty certification, or who are hospital employees. We therefore expected that patient fall rates on similar units would differ based on their nurse staffing and their RN composition (i.e., education, certification, and employment status).

The association between staffing and falls has been examined in several studies with scant evidence of a significant relationship. Few researchers evaluating falls have examined all types of nursing staff or the RN composition, or considered the hospital’s Magnet® status. Better understanding of the multiple factors that influence patient safety may assist hospital managers in making evidence-based recruitment and staffing decisions and encourage consideration of the potential benefits of Magnet recognition.

The purpose of this study was to examine the relationships among nurse staffing, RN composition, hospitals’ Magnet status, and patient falls. We studied general acute-care hospitals, hereafter referred to as “general hospitals.” Our results may advance the understanding of how to staff nursing units better and support nurses to promote patient safety.

BACKGROUND

This study builds on a theoretical foundation, a decade of empirical literature, and a unique national database—the National Database of Nursing Quality Indicators (NDNQI®)—designed to measure nursing quality and patient safety. We outline these components before describing our methods.

Theoretical Framework

Our research was guided by a theoretical framework first presented by Aiken, Sochalski, and Lake (1997) that linked organizational forms such as Magnet hospitals and dedicated AIDS units through operant mechanisms including nurse autonomy, control, and nurse-physician relationships, to nurse and patient outcomes. Lake (1999) modified the framework to specify two dimensions of nursing organization: nurse staffing (i.e., the human resources available) and the nursing practice environment (i.e., the social organization of nursing work). In terms of nurse staffing, Lake hypothesized that more registered nurses (RNs), both per patient and as a proportion of all nursing staff, would result in better outcomes for both nurses and patients. The nurse staffing dimension has evolved to detail the composition of the RN staff such as level of education and specialty certification.

The two organizational factors examined in this study are nurse staffing and Magnet status. The American Nurses Credentialing Center developed the Magnet Recognition Program® in 1994 to recognize health care organizations that provide nursing excellence (American Nurses Credentialing Center, 2009). Currently, of roughly 5,000 general hospitals in the U.S., over 350 or 7% have Magnet recognition.

We theorized that adequate evaluation, support, and supervision of patients to prevent falls depend on having a sufficient number of well-educated and prepared RNs as well as sufficient numbers of LPNs and NAs (we use NA to refer to all nursing assistants and unlicensed assistive personnel). The relationships between staffing and Magnet status with patient falls are presumed to operate through evaluation, support, and supervision, which were not measured in this study. We considered the evaluation component to pertain principally to the RN role. Adequate patient evaluation would be influenced by nurse knowledge, judgment, and assessment skills, which may vary according to nurse education, experience, certification, and expertise. We attributed the supervision role predominantly to RNs and LPNs, and the support role to NAs. Patient supervision and support would be directly influenced by staff availability, measured here as hours per patient day (Hppd).

To explore multiple aspects of staffing for this study we considered all nurse staffing measures available in the NDNQI. The database did not contain measures of nurse experience or expertise. Because the relative importance of nursing evaluation, support, and supervision in the prevention of falls is unknown, and because different types of staff may play different roles in fall prevention, we examined Hppd for RNs, LPNs, and NAs separately.

Literature Review

Patient falls in hospitals have been a focus of outcomes research to assess the variation in patient safety across hospitals and explore whether nurse staffing may be associated with safety. Lake and Cheung (2006) reviewed published literature through mid-2005 and concluded that evidence of an effect of nursing hours or skill mix on patient falls was equivocal. Subsequently, six studies of nursing factors and patient falls were published using data from California (Burnes Bolton et al., 2007; Donaldson et al., 2005), the US (Dunton, Gajewski, Klaus, & Pierson, 2007; Mark et al., 2008), Switzerland (Schubert et al., 2008), and England (Shuldham, Parkin, Firouzi, Roughton, & Lau-Walker, 2009).

In the US, Donaldson et al. (2005) and Burnes Bolton et al. (2007) investigated whether staffing improvements following the California staffing mandate were associated with improved patient outcomes in 252 medical-surgical and stepdown nursing units in 102 hospitals. The nursing factors studied were total nurse staffing, RN and licensed staffing levels, and skill mix. No significant changes in falls were found for the period 2002–2006. In cross-sectional data they detected non-significant trends linking staffing level to falls with injury on medical-surgical units and falls on stepdown units. Dunton et al. (2007) studied a subset of units in hospitals that reported data to NDNQI (n = 1,610) from July 2005 to June 2006. Calculating annualized measures from quarterly data and controlling for hospital size, teaching status, and six nursing unit types, Dunton et al. found a statistically significantly lower patient fall rate (10.3% lower) in Magnet hospitals. They also found negative associations between the fall rate and three nursing factors: total nursing hours, RN skill mix, and RN experience. Negative associations are consistent with the theoretical assumption that more nursing hours, a greater fraction of RN hours of total hours, and more RN experience could minimize the fall rate. Mark et al. (2008) studied unit organizational structure, safety climate, and falls in 2003 and 2004 data from 278 medical-surgical units from a nationally representative sample of 143 hospitals. They controlled for the nursing unit’s average patient age, sex, and health status and found that units with a high capacity (i.e., a high proportion of RNs among total nursing staff and a high proportion of RNs with nursing baccalaureate degrees) and higher levels of safety climate had higher fall rates. They did not find significant direct effects of unit capacity or safety climate on the fall rate. They speculated that higher unit capacity may mean fewer support personnel are available to assist patients with toileting or other daily activities.

In Europe, findings from Schubert et al.’s (2008) study of 118 Swiss nursing units in 2003–2004 showed that rationing of care, the principal independent variable, was positively associated with falls. Staffing and the practice environment were not significant predictors, perhaps because they operate through rationing. Shuldham et al. (2009) studied staffing, the proportion of staff who were permanent employees, and patient falls in two English hospitals in 2006–2007. They reported null findings and noted that the study may not have been sufficiently robust to detect significant associations.

In summary, recent findings reveal a lack of association between staffing and falls in data from California, Switzerland, and England with the exception of Dunton et al. (2007) who identified significant negative relationships in a U.S. sample. In each of these studies, RN-only hours or total nursing hours combining RN, LPN, and NA were used. The influence of nursing hours from LPN or NA staff on patient falls has not been studied separately.

NDNQI Database Overview

The NDNQI, a unique database that was well-suited to our study aims, is part of the American Nurses Association’s (ANA) Safety and Quality Initiative. This initiative started in 1994 with information gathering from an expert panel and focus groups to specify a set of 10 nurse-sensitive indicators to be used in the database (ANA, 1995, 1996, 1999). The database was pilot tested in 1996 and 1997 and was established in 1998 with 35 hospitals. Use of the NDNQI has grown rapidly (Montalvo, 2007). In 2009, 1,450 hospitals (one out of every four general hospitals in the U.S.) participated in it.

The NDNQI has served as a unit-level benchmarking resource, but research from this data repository has been limited. NDNQI researchers have published two studies on the association between characteristics of the nursing workforce and fall rates (Dunton et al., 2007; Dunton, Gajewski, Taunton, & Moore, 2004). Their more recent study was described earlier. Their earlier study of step-down, medical, surgical, and medical-surgical units in 2002 showed that higher fall rates were associated with fewer total nursing hours per patient day and a lower percentage of RN hours for most unit types. The scope of work on this topic was extended in the current study by: (a) specifying nurse staffing separately for RNs, LPNs, and NAs, (b) using the entire NDNQI database, (c) selecting the most detailed level of observation (month), and (d) applying more extensive patient risk adjustment than had been evaluated previously.

METHODS

Design, Sample, and Data Sources

This was a retrospective cross-sectional observational study using 2004 NDNQI data. These data were obtained in 2006. NDNQI data pertain to selected nursing units in participating hospitals. In conjunction with NDNQI staff, participating hospitals identify units by type of patient population and primary service: intensive care, stepdown, medical, surgical, medical-surgical, and rehabilitation. Our sample contained 5,388 nursing units in 636 hospitals.

Data are submitted to the NDNQI from multiple hospital departments (e.g., human resources, utilization management) either monthly or quarterly. We assembled an analytic file of monthly observations for all nursing units that submitted data for any calendar quarters for the year 2004. Each observation had RN, LPN, and NA nursing care hours, patient days, RN education and certification, a count of the number of reported falls, average patient age, and proportion of male patients. The RN education and certification data were submitted quarterly and assigned to each month in that quarter. Missing quarters of RN education and certification data or months of nursing care hours and patient days data were filled with data from a quarter or month just before or after the missing data. In compliance with the contractual agreement between the NDNQI and participating hospitals, no hospital identifiers (i.e., hospital ID, name, address, or zip code) were included with the data.
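The fill rule for missing months or quarters can be sketched as follows. This is a minimal illustration only: it assumes a plain list of period values with `None` marking a gap, and it assumes the preceding period is preferred over the following one, because the text does not state which neighbor took priority. The helper name `fill_from_neighbor` is hypothetical.

```python
from typing import List, Optional, Sequence


def fill_from_neighbor(values: Sequence[Optional[float]]) -> List[Optional[float]]:
    """Fill each gap with the reported value from the adjacent period.

    Assumption (not stated in the source): the period just before the gap
    is tried first, then the period just after it. Only originally reported
    values are used, so a gap is never filled from another filled gap.
    """
    filled = list(values)
    for i, value in enumerate(values):
        if value is not None:
            continue
        before = values[i - 1] if i > 0 else None
        after = values[i + 1] if i + 1 < len(values) else None
        filled[i] = before if before is not None else after
    return filled
```

A gap with no reported neighbor on either side stays `None` under this sketch.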

Data external to the NDNQI included hospital characteristics from the American Hospital Association (AHA) 2004 Annual Hospital Survey, the Medicare Case-Mix Index (CMI), and the hospital’s Magnet status. The AHA has surveyed hospitals annually since 1946. The Annual Hospital Survey is the only survey that details the structural, utilization, and staffing characteristics of hospitals nationwide. Presently the AHA survey database contains 800 data fields on 6,500 hospitals of all types. Missing data are noted as missing, and estimation fields are filled in with estimates based on the previous year or information from hospitals of similar size and orientation (AHA, 2010). The CMI database, a public use file, is released by Medicare annually as part of the rules governing the inpatient prospective payment system (Centers for Medicare and Medicaid Services, 2010). NDNQI staff obtained information from the Magnet website (http://www.nursecredentialing.org/Magnet/facilities.html) on hospital Magnet status. Hospital characteristics, CMI, and Magnet status were merged by NDNQI staff and provided with the de-identified dataset.

Variables

The dependent variable, a patient fall, is defined by the NDNQI as an unplanned descent to the floor, with or without an injury to the patient. The NDNQI data contain the number of falls in a unit during the month, including multiple falls by the same patient in the same month. Only falls that occurred while the patient was present on the unit were counted. Nursing unit fall rates were calculated as falls per 1,000 patient days. A patient day is defined as 24 hours beginning the day of admission and excluding the day of discharge.

The independent variables studied were nurse staffing, RN staff composition, and hospital Magnet status. Nurse staffing was measured as nursing care Hppd. Nursing care hours were defined as the number of productive hours worked by RNs, LPNs, or NAs assigned to the unit who had direct patient care responsibilities for greater than 50% of their shift. Nursing Hppd was calculated as nursing care hours divided by patient days. The nursing Hppd measure is the accepted standard in the nurse staffing and patient outcomes literature, receiving the highest consensus score from a panel of international experts when asked to rate the importance and usefulness of staffing variables (Van den Heede, Clarke, Sermeus, Vleugels, & Aiken, 2007). Hppd by RNs, LPNs, and NAs and fall rates are NQF-endorsed standards.
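The two unit-level measures defined above, the fall rate per 1,000 patient days and nursing Hppd, reduce to simple ratios. The sketch below is illustrative; the function names and the example unit-month are hypothetical and are not NDNQI values.

```python
def fall_rate_per_1000_pd(falls: int, patient_days: float) -> float:
    """Falls per 1,000 patient days for one unit-month."""
    if patient_days <= 0:
        raise ValueError("patient_days must be positive")
    return 1000.0 * falls / patient_days


def hppd(nursing_care_hours: float, patient_days: float) -> float:
    """Nursing care hours per patient day (Hppd) for one staff type."""
    if patient_days <= 0:
        raise ValueError("patient_days must be positive")
    return nursing_care_hours / patient_days


# Hypothetical unit-month: 2 falls, 600 patient days, 3,000 RN care hours.
example_rate = fall_rate_per_1000_pd(2, 600)
example_rn_hppd = hppd(3000, 600)
```

The same `hppd` function would be applied separately to RN, LPN, and NA hours, since the three staff types were analyzed as separate variables.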

Measures of RN composition included nurse educational level, national specialty certification, and proportion of hours supplied by agency employee nurses. Nursing educational level was measured as the proportion of unit nurses who have a Bachelor of Science degree in Nursing (BSN) or higher degree. Certification was measured as the proportion of unit nurses who have obtained certification granted by a national nursing organization. Agency staff was measured as the proportion of nursing hours on a unit that were supplied by contract or agency nurses.

Magnet recognition was used to measure a hospital’s adherence to standards of nursing excellence, which may translate into greater safety and quality. In the study a hospital was defined as a Magnet if it had been recognized as such for the year 2004.

The control variables were selected to address the differential risk of falling across patients, a major consideration in analysis of falls. Our principal approach was to control for nursing unit type, which clusters patients by case mix and acuity. Additional control variables were the nursing unit’s patient age and gender mix, the hospital’s Medicare CMI, and hospital structural characteristics. The risk of falling varies by both age and gender—older people and women have a higher likelihood of falling (Chelly et al., 2009; Hendrich, Bender, & Nyhuis, 2003). To better account for differences in patient characteristics across units, we computed the nursing unit’s average patient age and proportion of male patients. These demographic data were obtained from NDNQI quarterly prevalence studies of pressure ulcers. The 2004 CMI was used to measure a hospital’s patient illness severity. Measuring the relative illness severity of a hospital’s patients is only possible with patient-level data on many hospitals. The only national patient-level hospital data are from hospitals that participate in Medicare. The CMI is the average Diagnosis-Related Group (DRG) weight for a hospital’s Medicare discharges. Each DRG’s weight is based on the resources consumed by patients grouped into it. Thus, the CMI measures the resources used and implies severity of a hospital’s Medicare patients relative to the national average. The nationwide average CMI across 4,111 hospitals in 2006, the earliest year downloadable online, was 1.32 and ranged from 0.36 to 3.14.

Prior researchers have found that both nurse staffing and patient outcomes vary by structural characteristics of hospitals such as ownership, size, teaching status, and urban versus rural location (Blegen, Vaughn, & Vojir, 2008; Jiang, Stocks, & Wong, 2006; Mark & Harless, 2007). This variation in staffing and outcomes may be due to variation in patient acuity. If so, models linking staffing to outcomes should control for hospital characteristics as an additional measure of patient acuity. If the staffing variation is unrelated to patient acuity and is instead due to other factors, such as nurse supply in the market area, including these characteristics in multivariate models will not add to variance explained or improve estimation of the independent variable. We included hospital size, teaching intensity, and ownership as control variables. We specified hospital size as less or greater than 300 beds, as this size divided our sample in half. Teaching intensity was specified as non-teaching, minor teaching (less than 1 medical resident per 4 beds), and major teaching (more than 1 medical resident per 4 beds). We classified hospitals as non-profit, for profit, and public. We classified the three Veterans Administration hospitals in the sample as public hospitals because they are government owned.
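The size and teaching-intensity categories can be expressed as a small classification sketch. Several details here are assumptions, because the text leaves the boundary cases open: a hospital with exactly 300 beds is placed in the larger group, a ratio of exactly 1 resident per 4 beds is treated as minor teaching, and non-teaching is taken to mean no medical residents. The function names are hypothetical.

```python
def bed_size_group(beds: int) -> str:
    """Two-level size control used in the models."""
    # Assumption: exactly 300 beds falls in the larger group.
    return "300 or more beds" if beds >= 300 else "fewer than 300 beds"


def teaching_intensity(residents: float, beds: int) -> str:
    """Three-level teaching control based on residents per bed."""
    if beds <= 0:
        raise ValueError("beds must be positive")
    # Assumption: non-teaching means the hospital has no medical residents.
    if residents <= 0:
        return "non-teaching"
    # Assumption: a ratio of exactly 1:4 is classified as minor teaching.
    return "major teaching" if residents / beds > 0.25 else "minor teaching"
```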

Analysis

Descriptive statistics were used to summarize the data. To explore staffing patterns in greater depth, we examined the distribution of hours for each type of nursing staff. We evaluated bivariate associations between all nursing factors (RN, LPN, and NA Hppd, RN education, certification, and employment status) and the patient fall rate. Nursing factors found to be statistically significant were analyzed as independent variables in multivariate models. The independent variables were specified at two different levels consistent with their multilevel effects. The Magnet/non-Magnet comparison was at the hospital level. The staffing and RN composition variables’ effects were at the nursing unit level.

The dependent variable was fall count, and patient days was the exposure on the right side of the equation. This approach is equivalent to a model with the fall rate as the dependent variable. The advantage of analyzing the actual fall count and patient days is that all available information in the data is used for estimation. Because the fall count follows a negative binomial distribution (i.e., its variance exceeds its mean) a negative binomial model was used. Coefficients were estimated using Generalized Estimating Equations (GEE), which take into account repeated measures and clustering (Hanley, Negassa, Edwardes, & Forrester, 2003). GEE corrects the standard errors for the within-hospital clustering in the NDNQI.
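The equivalence between a count model with patient days as exposure and a rate model can be illustrated with a log link: the expected fall count is patient days multiplied by the exponentiated linear predictor, so dividing by patient days recovers the rate. The sketch below is illustrative and is not the estimation code used in the study. The variance function shown is the common quadratic (NB2) form, which is an assumption because the text does not name the parameterization; all coefficient values in any call are hypothetical.

```python
import math
from typing import Sequence


def expected_falls(patient_days: float, intercept: float,
                   coefs: Sequence[float], x: Sequence[float]) -> float:
    """Expected fall count under a log link with patient days as exposure.

    log(E[falls]) = log(patient_days) + intercept + sum(coef * x)
    """
    log_rate = intercept + sum(b * xi for b, xi in zip(coefs, x))
    return patient_days * math.exp(log_rate)


def nb2_variance(mean: float, alpha: float) -> float:
    """Negative binomial variance in the assumed NB2 form: mean + alpha * mean^2.

    With alpha > 0 the variance exceeds the mean (overdispersion);
    alpha = 0 reduces to the Poisson case.
    """
    return mean + alpha * mean ** 2
```

Because patient days enter only as a multiplier, doubling a unit's patient days doubles its expected falls while leaving the modeled fall rate unchanged.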

We ran four multivariate models. Model 1 used only independent variables. Model 2 added all control variables. Model 1 revealed the initial effect sizes of the independent variables alone. Model 2 showed the final effect sizes accounting for control variables. Four percent of the observations were missing AHA hospital characteristics or Medicare CMI. These observations were included in all models by adding flag variables that excluded them from the estimation of variables they were missing but used their non-missing data otherwise.
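One common way to implement such flag variables is the missing-indicator approach, sketched below. This is an assumption about the mechanics, since the text does not give the exact coding: the missing value is replaced with a constant (zero here) and a companion 0/1 flag enters the model, so the variable's coefficient is informed only by observations that reported it. The helper name `add_missing_flag` is hypothetical.

```python
from typing import Optional, Tuple


def add_missing_flag(value: Optional[float]) -> Tuple[float, int]:
    """Return (model_value, missing_flag) for one control variable.

    Assumed coding: a missing value becomes 0.0 with flag 1, so the
    observation contributes nothing to that variable's coefficient while
    its other, non-missing variables are still used.
    """
    if value is None:
        return 0.0, 1
    return float(value), 0
```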

Models 3 and 4 were for ICUs and non-ICUs separately. Fundamental differences between ICUs and non-ICUs may result in different patterns of relationships among nursing factors and falls. ICUs have a high level of RN hours and a nearly all-RN staff. ICU patients may be at lower risk for falling because they are critically ill and frequently sedated. In contrast, non-ICU units (stepdown, medical, surgical, medical-surgical, and rehabilitation) are staffed with RNs, LPNs, and NAs, and they care for less critically ill patients who are physically able to move enough to fall. Based on Dunton et al. (2004), who found a shift in the relationship direction linking staffing to falls, we tested for a shift in direction at a certain level of nursing hours; we found a consistent slope across nursing hours.

Because the NDNQI is a benchmarking database, we speculated that the overall nurse staffing may differ from typical general hospitals. Different staffing levels might influence the relationships we detect within the NDNQI vs. those that may be observed in a more typical sample. To explore this sampling implication, we analyzed AHA staffing data to compare US general hospitals to NDNQI hospitals by using t-tests.

We followed the recommendations of experts based on recent empirical work to evaluate nurse staffing measures calculated from AHA data (Harless & Mark, 2006; Jiang et al., 2006; Spetz, Donaldson, Aydin, & Brown, 2008). We calculated RN staffing as RN hours per adjusted patient day (Hpapd; note the difference in this abbreviation, which indicates that these are adjusted patient days). For the numerator we calculated RN hours for the year from the AHA full time equivalent RNs (RN FTE) multiplied by 2,080, which is the number of work hours in 1 year (40 hours per week × 52 weeks). The RN FTE variable includes RNs in acute, ambulatory, and long-term care. For the denominator we chose adjusted patient days to match the service areas of the numerator. To incorporate outpatient services, the AHA adjusts patient days by the ratio of outpatient to inpatient revenue. There are limitations in these AHA data, and results should be interpreted with caution. Harless and Mark (2006) found that the adjusted patient days method was less biased than alternatives but still led to deflated coefficients in multivariate models. Our use was to compare overall staffing across hospital groups. Jiang et al. (2006) compared this staffing measure in a California hospital sample using AHA data and state data, which are considered more accurate. They found greater than 20% difference in nurse staffing values for small, rural, nonteaching, public, and for-profit hospitals. These discrepancies imply that the AHA staffing estimates for NDNQI hospitals would be more accurate than the estimates for hospitals throughout the US because the NDNQI database contains more large, urban, nonprofit, and teaching hospitals.
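The hospital-level staffing measure described above amounts to the following calculation. This is a minimal sketch; the function name and the example hospital are hypothetical, and adjusted patient days are taken as given rather than derived from revenue data.

```python
# Work hours in one year for a full-time equivalent, as stated in the text.
HOURS_PER_FTE_YEAR = 40 * 52  # 2,080


def rn_hpapd(rn_fte: float, adjusted_patient_days: float) -> float:
    """RN hours per adjusted patient day (Hpapd) from AHA-style inputs."""
    if adjusted_patient_days <= 0:
        raise ValueError("adjusted_patient_days must be positive")
    return rn_fte * HOURS_PER_FTE_YEAR / adjusted_patient_days


# Hypothetical hospital: 100 RN FTEs and 26,000 adjusted patient days.
example_hpapd = rn_hpapd(100, 26_000)
```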

We speculated further that NDNQI Magnet hospitals may staff at higher levels than NDNQI non-Magnet hospitals. We compared staffing levels at the hospital level using AHA Hpapd data and at the nursing unit level using NDNQI Hppd data.

RESULTS

Descriptive Results

As shown in Table 1, the NDNQI and US general hospitals had similar geographic and teaching status distributions. Compared with general hospitals NDNQI hospitals were more often not-for-profit and had more than 300 beds. Seventeen percent of NDNQI hospitals had achieved Magnet recognition. The average CMI for NDNQI hospitals was 1.65, indicating that NDNQI hospitals cared for more complex Medicare patients than the average hospital. Fifty-seven percent of nursing units were either medical, surgical or medical-surgical units, 24% were intensive care, 15% were stepdown, and 4% were rehabilitation. The average age of patients in these nursing units was 50, and 41% of patients were male.

Table 1.

Characteristics of NDNQI Hospitals and General Acute Care Hospitals in the US

| | NDNQI Hospitals<sup>a</sup> (n = 636), % | General Acute Care Hospitals<sup>b</sup> (n = 4,919), % |
|---|---|---|
| Ownership | | |
| Non-profit | 82 | 60 |
| For-profit | 6 | 17 |
| Public | 12 | 23 |
| Bed size | | |
| <100 | 8 | 48 |
| 100–299 | 41 | 36 |
| 300–499 | 30 | 11 |
| 500+ | 21 | 5 |
| Teaching status | | |
| Academic medical center | 19 | 7 |
| Region | | |
| Northeast | 21 | 13 |
| Midwest | 31 | 29 |
| West | 14 | 18 |
| South | 34 | 40 |

NDNQI, National Database of Nursing Quality Indicators; AHA, American Hospital Association.

Of the 636 NDNQI hospitals, 32 could not be matched to AHA for ownership and bed size. These hospitals are omitted from the percent distribution.

<sup>a</sup> 2004 NDNQI Database.

<sup>b</sup> 2004 AHA Annual Hospital Survey Database.

In 2004, the sample nursing units reported 113,067 patient falls. The observed fall rate across all nursing units was 3.32 per 1,000 patient days (1,000PD). Table 2 shows that falls were most common in rehabilitation units and least common in intensive care units. Most patients (72%) had no injury from their falls; most of the others (23%) suffered a minor injury from the fall. Five percent had a moderate or major fall-related injury.

Table 2.

Nursing Staff Hours Per Patient Day (Hppd) and Fall Rate by Nursing Unit Type

| Nursing Unit Type | % (n = 5,388) | RN Hppd, Mean (SD) | LPN Hppd, Mean (SD) | NA Hppd, Mean (SD) | Falls per 1,000PD<sup>a</sup>, Mean (SD) |
|---|---|---|---|---|---|
| ICU | 24 | 14.84 (3.06) | 0.13 (0.51) | 1.67 (1.47) | 1.38 (2.79) |
| Stepdown | 15 | 7.03 (2.29) | 0.39 (0.68) | 2.51 (1.24) | 3.35 (3.32) |
| Medical | 19 | 5.11 (1.65) | 0.55 (0.76) | 2.39 (0.98) | 4.51 (3.45) |
| Surgical | 14 | 5.22 (1.50) | 0.58 (0.74) | 2.38 (1.01) | 2.79 (2.71) |
| Med-surg | 24 | 5.04 (1.68) | 0.65 (0.87) | 2.39 (1.06) | 3.93 (3.42) |
| Rehab | 4 | 4.02 (1.47) | 0.75 (0.85) | 2.87 (1.29) | 7.33 (6.62) |

Data Source: 2004 NDNQI Database.

ICU, intensive care unit; Med-Surg, medical-surgical; Rehab, rehabilitation; Hppd, hours per patient day; RN, registered nurse; LPN, licensed practical nurse; NA, nursing assistant.

<sup>a</sup> Per 1,000 patient days.

Overall, most nursing staff hours were provided by RNs: 88% of hours in intensive care (15 out of 17 hours) and 63% of hours in non-intensive care (5 out of 8 hours). NAs provided 2–3 hours of care per patient day; LPNs provided less than an hour of care per patient day. Forty-four percent of RNs had a BSN or higher degree, and 11% of RNs had national specialty certification. Of the six types of units, intensive care units had the highest proportions of nurses with a BSN or higher degree (52%) and certification (15%). Four percent of RN hours were provided by agency staff.

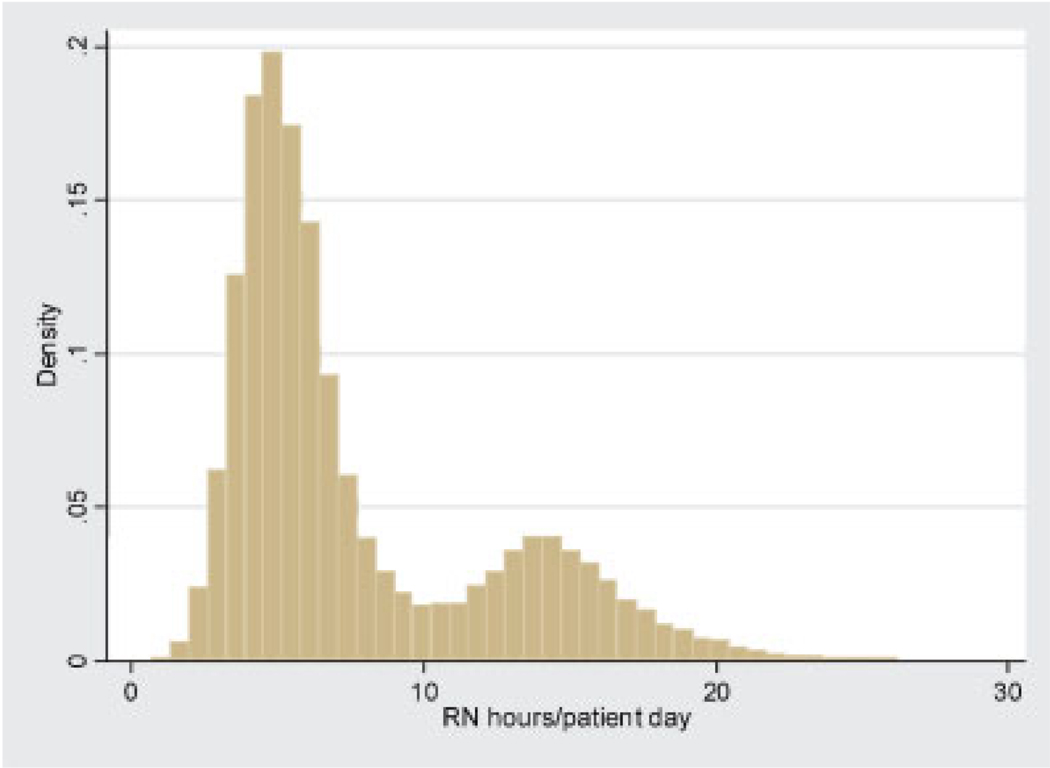

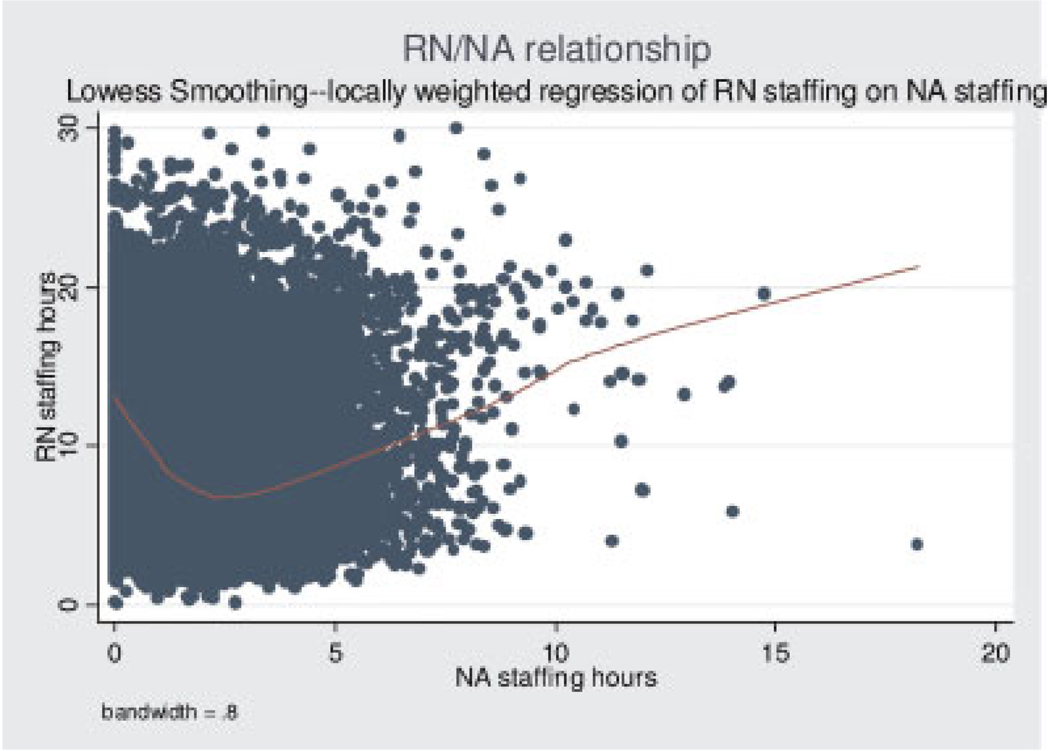

Table 2 also displays the nursing hours for different unit types. RN Hppd ranged from 14.8 for intensive care to 4.0 for rehabilitation. Conversely, average LPN and NA Hppd were lowest for intensive care and highest for rehabilitation. Both LPN and NA Hppd were normally distributed. RN Hppd exhibited a bimodal distribution.

Figure 1 shows that most units were staffed so that RN Hppd were either about 5 hours or about 15 hours. The units with over 10 RN Hppd were primarily ICUs (84%). As shown in Table 2, units with more RN hours had fewer LPN and NA hours. This relationship changes direction at the point of 2 NA Hppd (see Fig. 2), which reflects the ICU and non-ICU patterns observed in Table 2. The line superimposed on the scatter plot of Figure 2 is a locally weighted regression line of NA Hppd on RN Hppd.

FIGURE 1.

Distribution of RN hours per patient day.

FIGURE 2.

Scatter plot of the relationship between RN and NA hours per patient day. RN, registered nurse; NA, nursing assistant; Note: Line on plot is the locally weighted regression line.

Bivariate Results

Nursing staff hours and hospital Magnet status were significantly associated with the fall rate. RN Hppd were negatively associated with the fall rate; conversely, LPN and NA Hppd were positively associated with the fall rate: r = −.29 for RN Hppd, .12 for LPN Hppd, and .10 for NA Hppd (p < .001). The average fall rate was 8.3% lower in Magnet hospitals than in non-Magnet hospitals: 3.11 and 3.39 per 1,000PD, respectively (t = 7.99; p < .001). These rates were aggregated from the participating nursing units and may reflect differing subsets of unit types in the Magnet and non-Magnet hospital subgroups. Elements of RN staff composition (proportions of BSNs, specialty-certified nurses, and agency nurse hours) were not significantly associated with the fall rate. These RN staff composition elements were excluded from multivariate analyses.

Multivariate Results

Table 3 displays incident rate ratios (IRRs) estimated from the negative binomial model using GEE. The IRR is the expected change in the incidence of the dependent variable with one unit change in the independent variable holding all other model variables constant. Hospital Magnet recognition was negatively associated with patient falls. The likelihood of falls was 5% lower in Magnet hospitals (IRR = 0.95), which is equivalent to a 5% lower fall rate. At the nursing unit level, all types of nursing staff hours were significantly associated with patient falls, but in different directions; the directions were consistent with their bivariate patterns. RN hours were negatively associated with falls; an additional hour of RN care per patient day reduced the fall rate by 2%. LPN and NA hours had positive relationships with falls; an additional hour of LPN care increased the fall rate by 2.9% and an additional hour of NA care increased the fall rate by 1.5%. Note that the increment of 1 hour of care per patient day has different implications across types of nursing staff and nursing units due to differing standard deviations. One RN hour is only a third of a standard deviation in ICUs (SD for RN Hppd = 3.06). At the other extreme, one LPN hour is two standard deviations in ICUs (SD for LPN Hppd = 0.51).
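Because an IRR acts as a multiplier on the expected fall rate, converting it to a percent change and rescaling it to a different increment (such as one standard deviation rather than one hour) are both short calculations. The sketch below is illustrative; the function names are hypothetical, and the example inputs are the Model 2 Magnet IRR and the Model 3 RN Hppd IRR reported in Table 3.

```python
def irr_to_percent_change(irr: float) -> float:
    """Percent change in the fall rate implied by an incident rate ratio."""
    return (irr - 1.0) * 100.0


def irr_for_increment(irr_per_unit: float, increment: float) -> float:
    """IRR for a change of `increment` units, given the IRR for one unit.

    Effects are multiplicative on the rate scale, so the one-unit IRR is
    raised to the power of the increment.
    """
    return irr_per_unit ** increment


# Magnet status, Model 2 (IRR = 0.947): roughly a 5% lower fall rate.
magnet_pct = irr_to_percent_change(0.947)

# RN Hppd in ICUs, Model 3 (IRR = 0.967), rescaled to one ICU standard
# deviation of RN Hppd (3.06 hours, from Table 2).
rn_icu_per_sd = irr_for_increment(0.967, 3.06)
```

Rescaling by the standard deviation gives a way to compare staff types whose one-hour increments represent very different shares of their observed variation.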

Table 3.

Incident Rate Ratios of Patient Falls Based on Negative Binomial Regressions

| | Model 1, IRR (n = 50,810) | Model 2, IRR (n = 50,810) | Model 3 (ICU), IRR (n = 11,520) | Model 4 (non-ICU), IRR (n = 39,290) |
|---|---|---|---|---|
| Nurse staffing | | | | |
| RN Hppd | 0.910*** | 0.984*** | 0.967*** | 0.994 |
| LPN Hppd | 1.015 | 1.030** | 1.098** | 1.035** |
| NA Hppd | 1.043*** | 1.011* | 0.989 | 1.015* |
| Magnet hospital | 0.948*** | 0.947*** | 0.860*** | 0.960** |
| Nursing unit type | N/A | | | |
| ICU | | 0.211*** | | |
| Stepdown | | 0.484*** | | 0.471*** |
| Medical | | 0.632*** | | 0.627*** |
| Surgical | | 0.397*** | | 0.396*** |
| Med-surg | | 0.545*** | | 0.544*** |
| Rehab | | Reference | | Reference |
| R² | 0.030 | 0.049 | 0.008 | 0.019 |

Notes: *** p < .001, ** p < .01, * p < .05.

Observations are nursing unit months.

Incident rate ratios are from generalized estimating equations models that clustered observations within nursing units.

Models 2, 3, and 4 controlled for the hospital’s 2004 Medicare Case Mix Index, teaching status, bedsize, and ownership, and the nursing unit’s average patient age and sex.

RN, registered nurse; LPN, licensed practical nurse; NA, nursing assistant; Hppd, hours per patient day; ICU, intensive care unit; Med-Surg, medical-surgical; Rehab, rehabilitation.

Because ICUs were at the extreme ends of the nursing hours and falls distributions, we duplicated our analyses in ICUs and non-ICUs (Models 3 and 4 in Table 3). We found that the effect of RN hours was slightly larger in ICUs than in all units combined (Model 2; IRRs of 0.967 and 0.984, respectively) and became nonsignificant in non-ICUs. Conversely, the LPN hours effect was larger in non-ICUs than ICUs, while the NA hours effect became nonsignificant in ICUs. The standard deviation of NA Hppd is about 1 hour in non-ICUs. Therefore, the association between NA Hppd and falls in non-ICUs can readily be interpreted as follows: a one standard deviation increase (i.e., 1 hour) is associated with a 1.5% higher fall rate. Although the coefficient for LPN Hppd in ICUs was the highest among the different models (IRR = 1.098), its clinical significance is trivial due to the minimal Hppd of LPNs in ICUs, which was on average 0.13 hours (i.e., 8 minutes).
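The point about differing increments can be made concrete with a small sketch. It assumes the standard property of a log-link count model that a change of h hours multiplies the rate by IRR raised to the power h. The helper names `rescale_irr` and `percent_change` are hypothetical, and the rescaled values are illustrative arithmetic on the reported IRRs, not additional estimates from the study.

```python
import math


def rescale_irr(irr_per_hour: float, hours: float) -> float:
    """IRR implied by a change of `hours` Hppd, given the IRR for 1 hour.

    Assumes a log-link model, where the coefficient is log(IRR) and
    effects multiply: IRR(hours) = IRR(1 hour) ** hours.
    """
    return math.exp(math.log(irr_per_hour) * hours)


def percent_change(irr: float) -> float:
    """Convert an IRR to a percent change in the fall rate."""
    return 100.0 * (irr - 1.0)


# Inputs taken from Table 3 and the surrounding text.
rn_icu_per_sd = rescale_irr(0.967, 3.06)      # one SD of RN Hppd in ICUs
lpn_icu_at_mean = rescale_irr(1.098, 0.13)    # mean LPN Hppd in ICUs
na_non_icu_per_sd = rescale_irr(1.015, 1.0)   # one SD of NA Hppd in non-ICUs

for label, irr in [
    ("RN, ICU, per SD (3.06 h)", rn_icu_per_sd),
    ("LPN, ICU, at mean (0.13 h)", lpn_icu_at_mean),
    ("NA, non-ICU, per SD (1 h)", na_non_icu_per_sd),
]:
    print(f"{label}: IRR = {irr:.3f}, change = {percent_change(irr):+.1f}%")
```

On this scale, the large per-hour LPN coefficient in ICUs shrinks to roughly a 1% difference at the average LPN staffing level, which is consistent with the text's description of its clinical significance as trivial.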

To translate our findings into scenarios that may be useful from policy and management perspectives, predicted fall rates for each nursing unit type by Magnet status are presented in Table 4. The predicted fall rate was calculated from Models 3 and 4 by entering the nursing unit type and Magnet status into the relevant model depending on the scenario. The sample mean was used for all other variables. Table 5 displays the annual number of falls expected by unit type in Magnet and non-Magnet hospitals. Here we multiplied the respective predicted fall rate from Table 4 by the number of patient days on average for that unit type. For example, in an average medical-surgical unit, which had 8,282 patient days in 2004, we would have expected 1.4 fewer falls per year in Magnet (3.75/1,000 × 8,282 = 31.1 falls per year) as compared to non-Magnet hospitals (3.92/1,000 × 8,282 = 32.5 falls per year; 32.5 − 31.1 = 1.4).
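The medical-surgical example can be reproduced with a few lines of arithmetic. This is a minimal sketch: the function name `expected_annual_falls` is hypothetical, and the only inputs are the predicted rates and the patient-day count quoted in the paragraph above.

```python
def expected_annual_falls(rate_per_1000_pd: float, patient_days: float) -> float:
    """Expected falls per year from a rate per 1,000 patient days."""
    return rate_per_1000_pd / 1000.0 * patient_days


# Average medical-surgical unit in 2004 (figure quoted in the text).
MED_SURG_PATIENT_DAYS = 8282

magnet = expected_annual_falls(3.75, MED_SURG_PATIENT_DAYS)
non_magnet = expected_annual_falls(3.92, MED_SURG_PATIENT_DAYS)

print(f"Magnet:     {magnet:.1f} falls per year")
print(f"Non-Magnet: {non_magnet:.1f} falls per year")
print(f"Difference: {non_magnet - magnet:.1f} falls per year")
```

The same function applies to any other unit type once its average annual patient days are known.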

Table 4.

Predicted Patient Fall Rate per 1,000 Patient Days on Different Types of Nursing Units by Hospital Magnet Status

| Hospital Magnet status | ICU | Stepdown | Medical | Surgical | Med-Surg | Rehab |
|---|---|---|---|---|---|---|
| Magnet | 1.12 | 3.29 | 4.35 | 2.67 | 3.75 | 6.84 |
| Non-Magnet | 1.30 | 3.44 | 4.54 | 2.79 | 3.92 | 7.15 |

ICU, intensive care unit; Med-Surg, medical-surgical; Rehab, rehabilitation.

Table 5.

Estimated Number of Patient Falls Per Year in Magnet and Non-Magnet Hospitals by Nursing Unit Type

| Hospital Magnet status | ICU | Stepdown | Medical | Surgical | Med-Surg | Rehab |
|---|---|---|---|---|---|---|
| Magnet | 4.5 | 24.0 | 23.1 | 34.8 | 31.1 | 43.3 |
| Non-Magnet | 5.2 | 25.1 | 24.2 | 36.3 | 32.5 | 45.3 |

ICU, intensive care unit; Med-Surg, medical-surgical; Rehab, rehabilitation.

Nurse Staffing Comparisons Across Hospital Groups

Using AHA data, we found that NDNQI hospitals had nearly 2 hours higher RN Hpapd than U.S. general hospitals (means = 7.86 and 6.06, respectively; t = 11.52, p < .001). Among NDNQI hospitals, at the hospital level, the RN Hpapd in Magnet hospitals was nearly 1 hour higher than in non-Magnet hospitals (means = 8.50 and 7.70, respectively; t = 2.92, p < .01). At the nursing unit level, NDNQI data showed the RN Hppd in Magnet hospitals was significantly higher for every unit type. This difference ranged from 0.20 to 0.80 Hppd (12–48 minutes). The LPN Hppd in Magnet hospitals was 0.07 to 0.30 (4–18 minutes) lower for five unit types; the exception was rehabilitation units, where the difference was not statistically significant. The NA Hppd did not exhibit consistent patterns across unit types between Magnet and non-Magnet hospitals.

DISCUSSION

Key Findings

Using a sample of 5,388 units in 636 hospitals, we investigated the relationships among nurse staffing (i.e., RNs, LPNs, NAs), RN staff composition, hospital Magnet status, and patient falls to develop evidence about how the distribution of nursing resources and achievement of nursing excellence contribute to patient safety. Our principal findings suggest that staffing levels have small effects on patient falls, that RN hours are negatively associated with falls in ICUs, that LPN and NA hours are positively associated with falls principally in non-ICUs, and that fall rates are lower in Magnet hospitals. This evidence suggests there are potentially two mechanisms for enhancing patient safety: becoming or emulating a Magnet hospital, or adjusting staffing patterns at the unit level.

Our reported fall rate of 3.3 falls per 1,000 patient days is similar to the rate of 3.73 from the analysis of the 2002 NDNQI database (Dunton et al., 2004). We found higher fall rates on medical units compared to surgical units. Typical medical, surgical, and medical-surgical units in this sample had about 693 patient days per month, meaning about 2–3 patients fell each month on the most common acute care units.

We separated nursing staff hours into RN, LPN, and NA hours, a new approach in the staffing literature. We identified statistically significant opposite effects of RN hours as compared to LPN and NA hours. RN education level and certification did not appear to be associated with falls in a meaningful way. Our nonsignificant finding regarding agency RN hours and falls may be due to the small percentage of RN hours by agency nurses, which would not be expected to have a substantial influence. We did not analyze skill mix (i.e., the RN proportion of total nursing staff) due to its high correlation with all types of nursing hours per patient day.

The negative association between RN hours and falls in the ICU may reflect the causal explanation that providing more RN hours will lead to fewer falls. The alternative explanation is that ICUs with higher RN hours have patients who are too ill to move and accordingly have a lower fall risk. In this case, the lower risk, rather than the better staffing, accounts for the fewer falls. We cannot rule out this explanation with the data at hand. We note that given the extremely low risk of falls in ICUs, they may not be a productive focus for future research.

The positive association between NA hours and falls in non-ICUs was not expected. Because NAs provide toileting assistance and would seem to have a greater opportunity to prevent falls, we expected this relationship to be negative. Because cross-sectional regression models cannot determine causality, one possibility for this unexpected positive relationship between NA Hppd and falls is that nursing units attempted to address high fall rates by increasing their least expensive staffing component, NAs, rather than higher NA staff causing a higher fall rate.

The fall rate was substantially higher on rehabilitation units than on medical units, the nursing unit type with the next highest fall rate (7.33 vs. 4.51 per 1,000 patient days). The high rate of falls in rehabilitation settings is likely due to people learning to walk again post-surgery. How to reduce falls on rehabilitation units is a compelling topic for future study. Research questions could include the role of physical therapy or the effectiveness of alternative fall prevention protocols.

Our multivariate results show that patients in Magnet hospitals had a 5% lower fall rate. This difference is important to identify as it controls for multiple factors influencing fall risk, principally nursing unit type, which may differ across the Magnet and non-Magnet hospitals in this sample. This is the second study to analyze Magnet status and patient falls. The first study, using NDNQI data from July 2005 to June 2006 (Dunton et al., 2007), identified a 10.3% lower fall rate in Magnet hospitals. The difference between the Dunton et al. (2007) report and our findings may be due to sampling differences: Dunton et al. evaluated only the 1,610 NDNQI nursing units that participated in the NDNQI RN survey. By contrast, our findings reflect the entire 2004 NDNQI database of 5,388 nursing units.

The beneficial finding of Magnet status is consistent with the limited literature showing better patient outcomes such as lower mortality and higher patient satisfaction in Magnet hospitals (Aiken, 2002), although the earlier empirical evidence is from the cohort of Magnet hospitals identified by reputation and predates the Magnet Recognition Program era. We confirmed in two different data sources that Magnet hospitals in this sample had higher RN staffing levels than non-Magnet hospitals. In multivariate regression analyses we identified a Magnet hospital effect independent of the RN staffing level. Therefore, higher RN staffing was not the reason for the lower fall rates identified in Magnet hospitals. The basis for lower fall rates in Magnet hospitals remains an open question for future research.

Using the NDNQI for Research

The NDNQI database granted us the benefits of its unprecedented national scope. However, the NDNQI database is a benchmarking database that may not represent all general hospitals. In particular, the NDNQI has more not-for-profit and large hospitals than the national profile. Therefore, our results will generalize best to not-for-profit and larger hospitals. The disproportionate share of Magnet hospitals in the NDNQI database (17% in this sample vs. 7% nationally in 2004) likely reflects the Magnet recognition requirement that a hospital participate in a quality benchmarking system as well as the interest in quality improvement that is common to the Magnet hospital ethos.

Two aspects of the NDNQI sample may yield effect sizes that differ from those that might be estimated in a representative sample of general hospitals. First, the benchmarking purpose of the NDNQI attracts hospitals oriented towards quality improvement through nursing systems decisions. The feedback provided through benchmarking reports may lead these hospitals to implement similar staffing patterns. The result could be less variability in nursing hours than would be observed typically in general hospitals. This possibility was reflected in hospital-wide AHA staffing statistics, which showed a lower standard deviation for RN Hpapd in the NDNQI cohort than in all U.S. general hospitals (SD = 0.50 vs. 0.75, respectively).

In addition, we detected significantly higher RN staffing in NDNQI hospitals as compared to U.S. general hospitals, suggesting that our multivariate model results apply to hospitals at the high end of the staffing range. Moreover, the Magnet hospital effect identified here may underestimate the “true” Magnet effect were we to compare Magnets with all general hospitals. That is, the “comparison” hospitals in this sample already participate in a quality benchmarking initiative and may therefore differ from hospitals not involved in nursing benchmarking. Lastly, the “non-Magnet” group includes some “Magnet applicants” in various stages of implementing Magnet standards.

The NDNQI remains useful for research questions that incorporate new measures including other nursing workforce characteristics (e.g., expertise, experience), a survey measure of the nursing practice environment, nursing unit types (psychiatric), and outcomes (restraint use). The NDNQI also can be useful to test fall-prevention interventions by comparing the pre- and post-intervention fall rates.

Limitations

Our study is limited by a cross-sectional design, the limited data to adjust for patient characteristics, and the age of the data. Another limitation discussed previously is the convenience sample.

The classic weakness of the cross-sectional study design is the inability to establish causality. One hypothesized causal sequence is that providing more nursing hours will lead to fewer falls. Our results showing the opposite, that more LPN and NA hours are associated with more falls, may reflect this design weakness. Another hypothesized causal sequence is that the nursing excellence acknowledged by Magnet Recognition translates into safer practice and fewer patient falls. However, the converse may be plausible: hospitals with fewer falls happen to become Magnet hospitals. Future research on patient falls before and after hospital Magnet Recognition may illuminate this question.

Outcomes studies must control for differences in patients to discern the effects of nursing variables. In this study we controlled for nursing unit type and each nursing unit’s average patient age and gender; thus, the control variables were limited. At the hospital level we controlled for patient differences that may be reflected in the Medicare CMI and hospital structural characteristics. This set of control variables exceeds those of most earlier studies of falls by including average patient demographics and hospital CMI. Mark et al. (2008) included average health status but not CMI in their analysis of falls. In fact, our additions of the nursing unit’s average patient demographics and hospital CMI contributed minimally to explained variance (not shown). The diminished effect sizes of the independent variables and the increased variance explained in Model 2 were due predominantly to nursing unit type; the other control variables had minimal influence. The NDNQI data do not contain patient diagnosis, cognitive impairment, time or shift of the fall, or acuity mix within nursing unit types. Better risk adjustment may yield other findings.

The age of the data (2004) limits the results in two ways. Several national initiatives since 2004 have heightened attention to the prevention of patient falls. In 2005, the Joint Commission implemented a new National Patient Safety Goal to reduce the risk of patient harm resulting from falls with a requirement of fall risk assessment and action (Joint Commission, 2010). By 2009, the requirement had evolved to implement and evaluate a falls reduction program. In October 2008, Medicare stopped reimbursing hospitals for care due to preventable falls (Centers for Medicare and Medicaid Services, 2008). These changes may have altered the roles of nursing staff, the incidence of patient falls, and the associations between them. The age of the data also limits how well the results generalize to NDNQI hospitals presently. The database has more than doubled in the past 5 years and hospitals under 100 beds are now a larger share of the participants. The study variables have been stable during the years 2004–2010, except for a few clarifications in the data collection guidelines. The changes were minor and would be unlikely to influence the findings reported herein.

CONCLUSION

This study stands apart from previous staffing/fall literature due to the measurement of three different categories of nursing staff hours, the national scope of the hospital sample, the range of nursing unit types, as well as analysis of count data at the unit-months level, the most detailed level of observation. An additional noteworthy feature was risk adjustment for the nursing unit’s average patient characteristics (age, gender) and the hospital’s Medicare CMI. This study provided a thorough presentation of staffing patterns across unit types. We used a national data source, the AHA’s Annual Hospital Survey, to provide a national context for the RN staffing levels in NDNQI hospitals and to compare RN staffing levels in hospitals with and without Magnet recognition within the NDNQI.

Our study findings have implications for management, research, and policy. At the highest management level, hospital executives can improve patient safety by creating environments consistent with Magnet hospital standards. Fewer falls can yield cost savings and prevent patients’ pain and suffering. Nursing unit managers can use these nursing hours and falls statistics for their nursing unit type as reference values to support their staffing decisions. The current study strengthens the evidence base on how nurse staffing patterns and practice environments support patient safety.

Acknowledgments

The National Database of Nursing Quality Indicators data were supplied by the American Nurses Association. The ANA specifically disclaims responsibility for any analyses, interpretations or conclusions. This study was supported by the National Institute of Nursing Research (R01-NR09068; T32-NR007104; P30-NR005043).

REFERENCES

- Aiken LH. Superior outcomes for magnet hospitals: The evidence base. In: McClure ML, Hinshaw AS, editors. Magnet hospitals revisited: Attraction and retention of professional nurses. Washington, DC: American Nurses Publishing; 2002. pp. 61–81.

- Aiken LH, Sochalski J, Lake ET. Studying outcomes of organizational change in health services. Medical Care. 1997;35(11 Suppl):NS6–NS18. doi: 10.1097/00005650-199711001-00002.

- American Hospital Association. AHA Data: Survey History & Methodology. 2010 from http://www.ahadata.com/ahadata/html/historymethodology.html.

- American Nurses Association. Nursing care report card for acute care. Washington, DC: Author; 1995.

- American Nurses Association. Nursing quality indicators: Definitions and implications. Washington, DC: Author; 1996.

- American Nurses Association. Nursing-sensitive quality indicators for acute care settings and ANA’s Safety and Quality Initiative (No. PR-28). Washington, DC: Author; 1999.

- American Nurses Credentialing Center. Magnet recognition program overview. 2009 from http://www.nursecredentialing.org/Magnet/ProgramOverview.aspx.

- Blegen MA, Vaughn T, Vojir CP. Nurse staffing levels: Impact of organizational characteristics and registered nurse supply. Health Services Research. 2008;43:154–173. doi: 10.1111/j.1475-6773.2007.00749.x.

- Burnes Bolton L, Aydin CE, Donaldson N, Brown DS, Sandhu M, Fridman M, et al. Mandated nurse staffing ratios in California: A comparison of staffing and nursing-sensitive outcomes pre- and post-regulation. Policy, Politics, & Nursing Practice. 2007;8:238–250. doi: 10.1177/1527154407312737.

- Centers for Medicare & Medicaid Services. Medicare program: Changes to the hospital inpatient prospective payment systems and fiscal year 2009 rates. Final Rules. Federal Register. 2008;73(16):48433–49084.

- Centers for Medicare & Medicaid Services. Acute Inpatient PPS. 2010 from http://www.cms.hhs.gov/AcuteInpatientPPS/

- Chelly JE, Conroy L, Miller G, Elliott MN, Horne JL, Hudson ME. Risk factors and injury associated with falls in elderly hospitalized patient in a community hospital. Journal of Patient Safety. 2009;4:178–183.

- Donaldson N, Burnes Bolton L, Aydin C, Brown D, Elashoff JD, Sandhu M. Impact of California’s licensed nurse-patient ratios on unit-level nurse staffing and patient outcomes. Policy, Politics, & Nursing Practice. 2005;6:198–210. doi: 10.1177/1527154405280107.

- Dunton N, Gajewski B, Klaus S, Pierson B. The relationship of nursing workforce characteristics to patient outcomes. OJIN: The Online Journal of Issues in Nursing. 2007;12(3) Manuscript 3.

- Dunton N, Gajewski B, Taunton RL, Moore J. Nurse staffing and patient falls on acute care hospital units. Nursing Outlook. 2004;52:53–59. doi: 10.1016/j.outlook.2003.11.006.

- Enloe M, Wells TJ, Mahoney J, Pak M, Gangnon RE, Pellino TA, Hughes S, et al. Falls in acute care: An academic medical center six-year review. Journal of Patient Safety. 2005;1:208–214.

- Hanley JA, Negassa A, Edwardes MD, Forrester JE. Statistical analysis of correlated data using generalized estimating equations: An orientation. American Journal of Epidemiology. 2003;157:364–375. doi: 10.1093/aje/kwf215.

- Harless DW, Mark BA. Addressing measurement error bias in nurse staffing research. Health Services Research. 2006;41:2006–2024. doi: 10.1111/j.1475-6773.2006.00578.x.

- Hendrich AL, Bender PS, Nyhuis A. Validation of the Hendrich II fall risk model: A large concurrent case/control study of hospitalized patients. Applied Nursing Research. 2003;16:9–21. doi: 10.1053/apnr.2003.YAPNR2.

- Jiang HJ, Stocks C, Wong C. Disparities between two common data sources on hospital nurse staffing. Journal of Nursing Scholarship. 2006;38:187–193. doi: 10.1111/j.1547-5069.2006.00099.x.

- Joint Commission. National Patient Safety Goals. 2010. Retrieved Jan 28, 2010, from http://www.jointcommission.org/PatientSafety/NationalPatientSafetyGoals/

- Lake ET. The organization of hospital nursing. Philadelphia: University of Pennsylvania; 1999. (unpublished dissertation)

- Lake ET, Cheung R. Are patient falls and pressure ulcers sensitive to nurse staffing? Western Journal of Nursing Research. 2006;28:654–677. doi: 10.1177/0193945906290323.

- Mark BA, Harless DW. Nurse staffing, mortality, and length of stay in for-profit and not-for-profit hospitals. Inquiry. 2007;44:167–186. doi: 10.5034/inquiryjrnl_44.2.167.

- Mark BA, Hughes LC, Belyea M, Bacon CT, Chang Y, Jones CA. Exploring organizational context and structure as predictors of medication errors and patient falls. Journal of Patient Safety. 2008;4:66–77.

- Montalvo I. The National Database of Nursing Quality Indicators™ (NDNQI®). OJIN: The Online Journal of Issues in Nursing. 2007;12(3) Manuscript 3.

- National Quality Forum. National voluntary consensus standards for nursing-sensitive care: An initial performance measure set—A consensus report. 2004 Available from http://www.qualityforum.org/Projects/n-r/Nursing-Sensitive_Care_Initial_Measures/Nursing_Sensitive_Care__Initial_Measures.aspx.

- National Quality Forum. Nursing-sensitive care: Measure maintenance. 2009 from http://www.qualityforum.org/Projects/n-r/Nursing-Sensitive_Care_Measure_Maintenance/Nursing_Sensitive_Care_Measure_Maintenance.aspx.

- Schubert M, Glass TR, Clarke SP, Aiken LH, Schaffert-Witvliet B, Sloane DM, et al. Rationing of nursing care and its relationship to patient outcomes: The Swiss extension of the International Hospital Outcomes Study. International Journal for Quality in Health Care. 2008;20:227–237. doi: 10.1093/intqhc/mzn017.

- Shuldham CM, Parkin C, Firouzi A, Roughton M, Lau-Walker M. The relationship between nurse staffing and patient outcomes: A case study. International Journal of Nursing Studies. 2009;46:986–992. doi: 10.1016/j.ijnurstu.2008.06.004.

- Spetz J, Donaldson N, Aydin C, Brown D. How many nurses per patient? Measurements of nurse staffing in health services research. Health Services Research. 2008;43:1674–1692. doi: 10.1111/j.1475-6773.2008.00850.x.

- Van den Heede K, Clarke SP, Sermeus W, Vleugels A, Aiken LH. International experts’ perspectives on the state of the nurse staffing and patient outcome literature. Journal of Nursing Scholarship. 2007;39:290–297. doi: 10.1111/j.1547-5069.2007.00183.x.