PREFACE

Humans in diverse cultures develop a similar capacity to recognize the emotional signals of different facial expressions. This capacity is mediated by a brain network that involves emotion-related brain circuits and higher-level visual representation areas. Recent studies suggest that the key components of this network begin to emerge early in life. The studies also suggest that initial biases in emotion-related brain circuits and the early coupling of these circuits and cortical perceptual areas provide a foundation for a rapid acquisition of representations of those facial features that denote specific emotions.

INTRODUCTION

Most humans are particularly skilled at recognizing affectively relevant information displayed in faces. Scientific interest in the recognition of emotional expressions was reawakened nearly 40 years ago by the discovery that facial expressions are universal1, 2. With the advent of neuroimaging, the brain systems that underlie the ubiquitous human capacity to recognize emotions from facial expressions and other types of social cues have become a burgeoning area of research in cognitive and affective neuroscience. A closely related field of research has examined these processes and their developmental foundations in human children and experimental animals. In this article, we review and synthesize recent findings from these interrelated areas of research. These findings reveal that emotion-related brain circuits (which include the amygdala and orbitofrontal cortex) and the influence of these circuits on higher-level visual areas underlie rapid and prioritized processing of emotional signals from faces. The findings also suggest that the key components of the emotion-processing network and emotion-attention interactions begin to emerge early in postnatal life at the time that infants’ visual discrimination abilities undergo substantial experience-driven refinement. Collectively, these findings suggest that the ability to mentally represent facial expressions of emotion might be a paradigmatic case of how emotional brain systems (which are biased to respond to certain biologically salient cues) and interconnected perceptual representation areas attune to species-typical and salient signals of emotions in the social environment. We will also discuss how genetic and environmental factors may bias this developmental process and give rise to individual differences in sensitivity to signals of certain (negative) emotions.

NEURAL BASES OF FACIAL EMOTION PROCESSING

An important function of the emotional brain systems is to scan incoming sensory information for the presence of biologically relevant features (e.g., stimuli that represent a threat to well-being) and grant them priority in access to attention and awareness3, 4. For humans, the most salient signals of emotion are often social in nature, such as facial expressions of fear (which are indicative of a threatening stimulus in the environment) or facial expressions of anger (which are indicative of potential aggressive behavior). Consistent with the view that such signals are rapidly detected and subjected to enhanced processing, behavioral studies in adults have shown preferential attention to fearful facial expressions relative to simultaneously presented neutral or happy facial expressions5, better detection of fearful than neutral facial expressions in studies in which the likelihood of stimulus detection is reduced by using rapidly changing visual displays6, 7, and delayed disengagement of attention from fearful as compared to neutral or happy facial expressions8.

Electrophysiological5, 9, 10 and functional magnetic resonance imaging (fMRI)11–13 studies have further shown that activity in face-sensitive cortical areas such as the fusiform gyrus and the superior temporal sulcus is enhanced in response to fearful as compared to neutral facial expressions. Although similar enhanced activation in these areas is observed to attended relative to unattended facial stimuli, there is evidence that attentional and emotional modulation of perceptual processing are mediated by distinct neural networks, the former reflecting a distal influence of frontoparietal attention networks and the latter reflecting the influence of emotion-related brain structures such as the amygdala and orbitofrontal cortex on perceptual processing4 (FIG. 1).

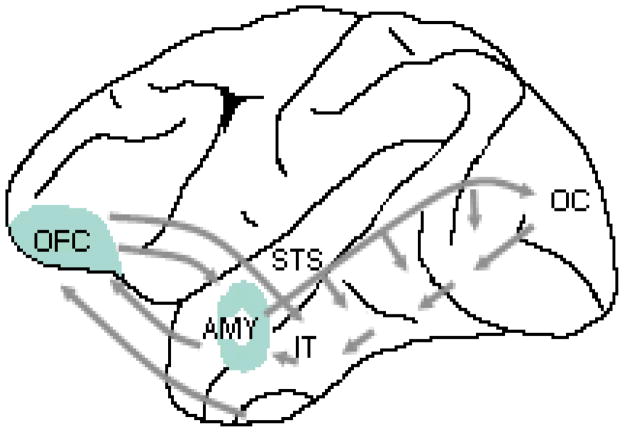

Figure 1. Emotion processing network in the brain.

Neural systems involved in processing emotional signals from faces (based on models presented in REFS. 4, 14, 127). Emotion-related neural systems (amygdala and orbitofrontal cortex) receive visual information from cortical regions that are involved in the visual analysis of invariant and changeable aspects of faces (fusiform gyrus and superior temporal sulcus) and possibly also via a faster magnocellular pathway directly from the early visual cortex33 or via a subcortical collicular-pulvinar pathway to the amygdala4, 41. The amygdala and orbitofrontal cortex send feedback projections to widespread visual areas, including the fusiform gyrus and superior temporal sulcus. AMY, amygdala; OFC, orbitofrontal cortex; FG, fusiform gyrus; STS, superior temporal sulcus.

The importance of the amygdala for emotion recognition is well established14 but only recently have studies begun to shed light on the mechanisms by which the amygdala enhances the processing of emotional stimuli4. Findings from these studies are consistent with a model in which the amygdala responds to coarse, low-spatial frequency information about facial expressions (i.e., the global shape and configuration of facial expressions) in the very early stages of information processing (possibly as rapidly as 30 ms after stimulus onset)15 and subsequently enhances more detailed perceptual processing in cortical face-sensitive areas such as the fusiform gyrus and the superior temporal sulcus16–18. The amygdala may enhance cortical activity via direct feedback projections to visual representation areas19–21 or via connections to basal forebrain cholinergic neurons that transiently increase cortical excitability22–24.

The exact stimulus features to which the amygdala is responsive are unknown. The amygdala was initially associated with the processing of fearful facial expressions but more recent findings point to a broader role in processing biological relevance (either reward- or threat-related)25, and in evaluating and acquiring information about associations between stimuli and emotional significance13, 26. Such processes may be more reliably engaged in response to fearful than, for example, happy expressions, explaining why enhanced amygdala activity is more consistently observed to fearful as compared to other facial expressions.

The orbitofrontal cortex has also been implicated in the recognition of emotions from facial expressions and in top-down modulation of perceptual processing. Patients with localized brain damage to the orbitofrontal cortex exhibit impaired recognition of a range of facial expressions27, and this region is activated in fMRI and PET studies when neurologically normal adults view positive or negative facial expressions28, 29. Activity in the orbitofrontal cortex is increased when observers learn object-emotion associations from stimuli that show facial expressions paired with novel objects, which is consistent with the putative role of this region in representing positive and negative reinforcement value of stimuli30, 31.

The orbitofrontal cortex has reciprocal connections with the amygdala and widespread cortical areas, including face-sensitive regions in the inferotemporal cortex and the superior temporal sulcus (FIG. 1)32. As is the case with the amygdala, the orbitofrontal cortex may receive low-spatial frequency information via a rapid magnocellular pathway and exert a top-down facilitation effect on more detailed perceptual processing in perceptual representation areas33. Consistent with such a neuromodulatory role, recent studies have provided evidence for an early response in orbitofrontal cortex (130 ms after stimulus onset) that precedes activity in occipitotemporal perceptual representation areas (165 ms post-stimulus)34.

Individual differences in facial emotion processing

Although a common neural network is generally engaged in response to salient facial expressions, the strength of activity in this network and sensitivity to signals of certain emotions can vary substantially across individuals35. For example, stable individual differences in anxiety-related traits predict sensitivity to facial expressions of threat, so that individuals with high trait anxiety show relatively enhanced orienting of attention to threat-related facial cues and are relatively less efficient in disengaging their attention from fearful facial expressions8, 36. Consistent with these behavioral findings, fMRI studies have shown that high trait anxiety is correlated with elevated activity in the amygdala in response to fearful and angry facial expressions37, 38, and that individuals with higher trait anxiety show less habituation in the amygdala over repeated presentation of facial expressions39. The elevated activity in the amygdala may partly reflect less efficient emotion regulation processes arising from reduced functional connectivity between the amygdala and regions in the prefrontal cortex (anterior cingulate cortex)39.

THE ONTOGENY OF FACIAL EMOTION PROCESSING

Prior to the onset of language, the primary means infants have of communicating with others in their environment, including caregivers, is “reading” faces. Thus, it is important for an infant not only to discriminate familiar from unfamiliar individuals but, just as importantly, to derive information about the individual’s feelings and intentions; for example, whether the caregiver is pleased or displeased, afraid or angry. After the onset of locomotion, infants also use others’ facial expressions to acquire knowledge about objects in the physical environment - that is, about objects that are safe and can be approached and objects that are potentially harmful and should be avoided40. Thus, the accurate decoding of facial signals, particularly facial expressions, is fundamental in early interpersonal communication. Further, even after language develops, the accurate decoding of facial emotion continues to play a prominent role in face-to-face interactions.

The human infant’s ability to discriminate and recognize facial emotion has received extensive study over the past 20 years. Such work has recently been complemented by electrophysiological and optical imaging studies as well as developmental work in other species. Evidence from these studies converges to suggest that the key components of the adult emotion-processing network emerge early in postnatal life. It has been suggested that some of these brain systems (the amygdala) are functional at birth and have a role in orienting newborn infants’ attention towards faces and in enhancing activity in certain cortical areas to faces41. Consistent with their role in adult facial emotion processing, the evidence reviewed below suggests that the amygdala and associated brain regions also participate in facial emotion processing and emotion-attention interactions in infants, although these functions likely do not emerge until the second half of the first year of life.

Behavioral studies

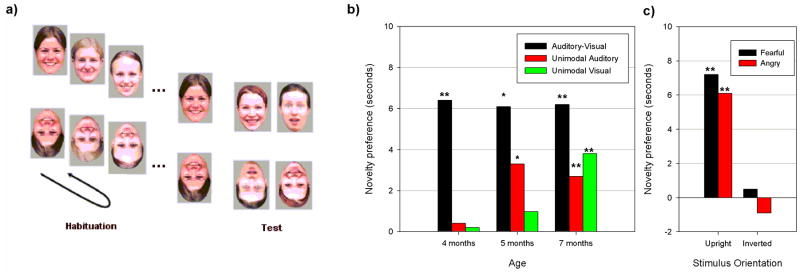

Because of infants’ limited visual acuity, contrast sensitivity and ability to resolve high spatial frequency information at birth42, and limited attention to internal features of faces during the first two months of life43, 44, it is unlikely that infants are able to visually discriminate facial expressions at birth or during the first months of life, except when highly salient facial features change (such as open vs. closed mouth; see REF. 45). Consistent with this view, several studies have shown that the reliable perception of facial expressions, such as attention to configural rather than featural information in faces46, and the ability to recognize facial expressions across variations in identity or intensity47–49, are not present until the age of 5 to 7 months. It also seems that instead of visual information from facial expressions, infants may initially use more salient multimodal cues (e.g., synchronous facial and vocal stimuli) to detect and discriminate emotional expressions, and only later acquire representations of the relevant unimodal cues50–52. Supporting this view, a recent study51 demonstrated that the ability to discriminate emotional expressions in audiovisual stimuli emerged between 3 and 4 months of age, earlier than discrimination of emotions in unimodal auditory (at 5 months of age) or visual stimuli (at 7 months of age, see FIG. 2).

Figure 2. Development of facial emotion discrimination in infancy.

a) An illustration of the habituation visual-paired test paradigm in infants. Presentation of facial expressions (either right side up or upside down) from a specific category (“happy”) is continued until the infant habituates (e.g., until their looking time declines to half of what it was when the stimulus was first presented). After habituation, the stimulus from the familiar category is paired with a stimulus from a novel category (“fearful”). Discrimination is inferred from a preference (that is, an increased looking time) for the novel stimulus. b) Results showing that discrimination of emotional expressions in bimodal (audiovisual) stimuli emerges earlier than discrimination of emotional expressions in unimodal auditory or visual stimuli (adapted from REF. 51). c) After habituation to happy expressions on different faces, 7-month-old infants were able to discriminate this expression from fearful and angry expressions when the stimuli were presented upright but not when they were inverted. These findings show that, similar to adults, infants attend to orientation-specific configural cues to categorize facial expressions (adapted from REF. 46). * p < .05, ** p < .01
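The habituation criterion and the novelty preference described in panel a can be written out as a minimal sketch. The function names, the 0.5 default, and the use of a proportion score are assumptions made here for illustration; the caption specifies only the half-of-initial-looking-time example and an increased looking time for the novel stimulus, and individual studies may define both measures differently.

```python
def has_habituated(looking_times_s, criterion=0.5):
    """Return True when the most recent looking time (seconds) has declined
    to `criterion` (assumed default: one half) of the first looking time."""
    if len(looking_times_s) < 2:
        return False  # at least one look after the first is needed to compare
    return looking_times_s[-1] <= criterion * looking_times_s[0]


def novelty_preference(novel_s, familiar_s):
    """Proportion of total test looking time spent on the novel stimulus.
    Values above 0.5 indicate longer looking at the novel stimulus
    (a hypothetical scoring choice, not taken from the source)."""
    total = novel_s + familiar_s
    if total <= 0:
        raise ValueError("total looking time must be positive")
    return novel_s / total


# Hypothetical looking times across habituation trials, in seconds.
trials = [20.0, 14.0, 9.0]
print(has_habituated(trials))         # last look is below half of the first
print(novelty_preference(6.0, 4.0))   # share of looking time on novel face
```

In this sketch, discrimination would be inferred at the group level when novelty preference scores are reliably above 0.5; the statistical test used for that comparison is outside the scope of the example.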

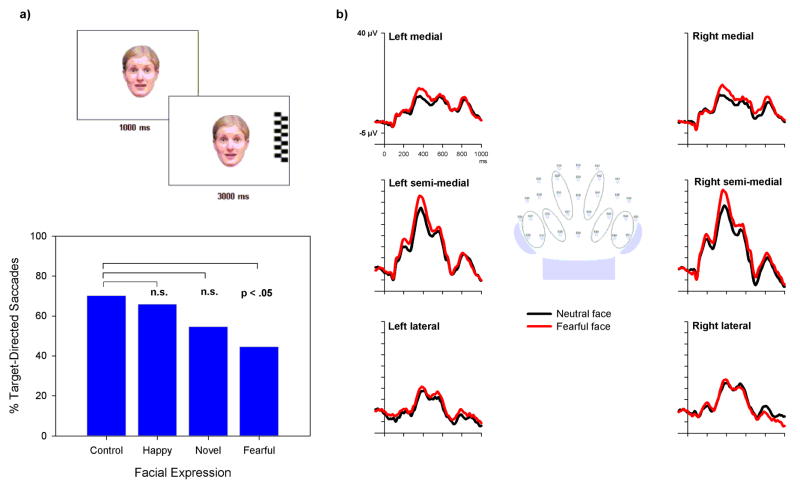

At around the same age as infants start to exhibit stable visual discrimination of facial expressions, they begin to exhibit an adult-like attentional preference for fearful over neutral or happy facial expressions: for example, when exposed to face pairs, 7-month-old infants look longer at a fearful than at a happy facial expression53, 54. More detailed investigation into this looking-time bias has shown that fearful facial expressions affect the ability to disengage attention55. Specifically, infants are less likely to disengage their attention from a centrally presented face towards a suddenly appearing peripheral target when the face displays a fearful expression as compared to neutral or happy expressions (FIG. 3). The finding that novel non-emotional grimaces55 or neutral faces with large eyes (M. J. Peltola, J. M. Leppänen, V. K. Vogel-Farley, J. K. Hietanen, & C. A. Nelson, unpublished manuscript) fail to exert similar effects on attention disengagement indicates that the effect of fearful faces is not simply attributable to their novelty in infants’ environment or to their distinctively large eyes (a feature that is also known to affect infants’ attention). It is also of note that the effect of fear on attention in infants is very similar to that observed in adults8, suggesting a similar underlying mechanism.

Figure 3. Emotional influences on attention and perception in infants.

Adults show enhanced perceptual processing and attention to stimuli that are associated with emotional significance138, which probably reflects a modulatory effect of emotion-related brain structures on cognitive processing. Recent data suggest that similar effects of emotion on attention and perception occur in infants. a) In a behavioral attention task, 7-month-old infants were less likely to move their gaze from a centrally presented fearful face to a peripheral target than from a non-face control stimulus, suggesting enhanced attention to fearful faces. This effect is not explained by the low-level features of fearful facial expressions (e.g., salient eyes) or the novelty of these expressions in infants’ rearing environment because control stimuli with these characteristics failed to produce similar effects (adapted from REF. 55). b) Recordings of event-related potentials from posterior scalp regions reveal augmented ERP activity over the semi-medial occipito-temporal scalp in response to fearful as compared to neutral facial expressions, suggesting a modulatory effect of fear on early cortical face processing (adapted from REF. 66).

Electrophysiological and optical imaging studies

There is a substantial body of literature concerning the neural correlates of face perception in infants. An extensive review of this literature has been published elsewhere56. In brief, studies that have recorded event-related potentials (ERPs) to measure brain activity have shown that neural activity over the occipitotemporal part of the scalp differs for faces as compared to various non-face objects in 3- to 12-month-old infants57–60. Evidence from other sources such as a rare PET study in 2-month-old infants61 and results of recent optical imaging studies62 further suggests that the fusiform gyrus and the superior temporal sulcus are functional in infants at this age and exhibit some degree of tuning to faces. It seems, however, that infants’ face-processing mechanisms are activated by a broader range of stimuli than those of adults58, 60, suggesting that the underlying neuronal populations become more tuned to human faces over the course of development.

Reciprocal connections between visual representation areas and the amygdala63 and the orbitofrontal cortex64 are observed soon after birth in anatomical tracing studies in monkeys (BOX 1). This suggests that emotion-related brain structures are possibly functional at the time when infants start to exhibit behavioral discrimination of facial expressions. To date, the evidence for this hypothesis has accrued from investigations of the neural correlates of infants’ processing of happy and fearful facial expressions. A recent study examined the neural bases of perceiving neutral and smiling faces in 9–13-month-old infants and their mothers65. An extensive adult literature has shown that regions in the orbitofrontal cortex are activated in response to positive affective cues, and may have a role in representing the reward value of such cues28, 31. To study whether the same regions are active in infants, Minagawa-Kawai et al.65 used near-infrared spectroscopy (NIRS) to measure changes in activity in frontal brain regions in response to neutral and smiling faces. The results revealed an increase in brain activity in response to smiling as compared to neutral faces, with the peak of the activity observed in anterior parts of the orbitofrontal cortex. This increase in activity was particularly pronounced when infants were viewing their mother’s face as compared to an unfamiliar adult’s smiling face, although it was present in both conditions. A similar increase in activity in the same brain regions was observed in mothers while they viewed happy expressions of their own infant. Mothers showed no response to an unfamiliar infant’s happy expressions, suggesting that the same regions are activated in infants and adults but that, in adults, the activation of the orbitofrontal cortex is more selective to happy expressions of a specific individual.

Box 1. Anatomical development of emotion-related brain structures.

Most of the information regarding the anatomical maturation of the amygdala and the orbitofrontal cortex comes from neuroanatomical studies in macaque monkeys (reviewed in REF. 64). Both structures seem to reach anatomical maturity relatively early in development. Neurogenesis of the amygdala is completed by birth109–111, reciprocal connections to various cortical regions are established in 2-week old monkeys63, and the distribution of opiate receptors as well as the density and distribution of serotonergic fibers seem adult-like at birth or soon after64, 112–114. There is no information regarding the neurogenesis of the orbitofrontal cortex64 but connections between orbitofrontal cortex and temporal cortical areas115 and adult-like dopamine innervation64, 113 are established by one week of age in the monkey. Although these pieces of information point to very early anatomical maturation of structures implicated in emotion recognition, there is also evidence that notable anatomical changes in these structures and their connectivity with other brain regions occur during a relatively protracted period of postnatal life. For example, there are connections from inferotemporal cortex area TEO to the amygdala in infant monkeys that do not exist in adults116, and, although feedback projections from the orbitofrontal cortex to temporal cortical areas emerge early, they continue to mature until the end of the first year64. Also, myelination of axons in the amygdala, the orbitofrontal cortex and their connections with other brain regions, begins in the first months of life but continues for several years 64, 117. Together, these latter findings suggest that, although the key components of the emotion-processing networks and their interconnectivity are established soon after birth, the wiring pattern becomes more refined over the course of postnatal development.

Because ERP and optical imaging tools are generally insensitive to activity in subcortical brain structures, it has not been possible to demonstrate directly the role of the amygdala in infants’ emotion processing. However, some ERP findings are consistent with the existence of adult-like neural circuitry that is specifically engaged by fearful facial expressions and modulates activity in cortical perceptual and attention networks. In 7-month-old infants, a positive component that occurs ~400 ms after stimulus onset over medial occipito-temporal scalp and relates to visual processing of faces57–60 is enhanced when infants are viewing fearful as compared to happy or neutral facial expressions (FIG. 3)66. Similar effects are well documented in the adult literature and are thought to reflect an effect of affective significance on cortical processing9, 10. Besides visual processing, fearful facial expressions also enhance activity in cortical attention networks, which is consistent with behavioral indications of enhanced attention towards fearful facial expressions. In 7-month-old infants, the negative central (Nc) component over the frontocentral scalp is larger to fearful as compared to happy facial expressions66, 67. The Nc is known to relate to orienting of attentional resources in response to salient, meaningful, or infrequently occurring stimuli68, 69. The cortical sources of the Nc have been localized to the anterior cingulate region70, which is consistent with the role of this region in the regulation of attention71.

Recent studies have further shown that the augmented Nc in response to fearful expressions is more pronounced when infants view a person who expresses fear and gazes at a novel object (implying that the object possesses an attribute of which the infant should be fearful) as compared to a situation in which a fearful-looking person directs eye gaze at the infant (suggesting that the person looking at the infant feels afraid)72. It also seems that infants attend more to a novel object after they have seen an adult expressing fear towards the object73. These findings are remarkable as they suggest not only that the neural circuitry that underlies the modulatory effect of affective significance on perceptual and attention networks is functional in infants but also that the stimulus conditions that engage this circuitry resemble those that are optimal for engaging emotion-related brain circuitry in adults (i.e., situations that involve stimulus-emotion learning)13.

Evidence from other species

Studies in monkeys provide further evidence for an important role of the amygdala in mediating early-emerging affective behaviors. The strongest evidence for this comes from experimental lesion studies in monkeys, showing that amygdala lesions in neonate monkeys result in abnormal affiliation and fear-related behaviors, possibly due to underlying impairments in the evaluation and discrimination of safe and potentially threatening physical and social stimuli74. Another important finding that has emerged from recent work in rats is that the neural circuitries for learning stimulus-reward associations (preferences) and stimulus-shock associations (aversion) have distinct developmental time-courses in early infancy. In rats, the ability to form preferences to cues associated with positive reinforcement is present from birth whereas the ability to avoid cues associated with negative stimuli (footshock) is not observed until postnatal day 10 when the pup is ready to leave the nest75. Other experiments have shown that the delayed onset of learning to avoid aversive stimuli reflects immature GABAergic function and amygdala plasticity during the first postnatal days76. These findings are of interest as they may shed light on the observation that the differential responsiveness to happy and fearful emotional expressions (a preference for fear) is not observed in human infants until several months after birth66, 77.

MECHANISMS OF DEVELOPMENT

The early emergence of some components of the emotion-processing network raises a more fundamental question concerning the mechanisms that govern the development of this brain network. In the sections that follow, we discuss the possibility that these early foundations reflect a functional emergence of an experience-expectant mechanism78 that is sensitive to and shaped by exposure to species-typical aspects of emotional expressions. We will also discuss how these early foundations are further modified by individual-specific experiences, reflecting an additional experience-dependent78 component of the development of emotion-processing networks.

Experience-expectant mechanisms

The universal nature of some facial expressions and the presence of these expressions throughout the evolutionary history of humans raise the possibility that the species has come to “expect” the occurrence of these expressions in different environments at a particular time in development (cf. REF. 78). The species might have evolved brain mechanisms that are to some extent biased from the beginning for processing biologically salient signals displayed in the face. The evidence for the early maturation of emotion-related brain circuits, functional coupling of these structures with visual-representation areas, and the behavioral indices of a bias to attend more to emotionally salient than to neutral facial expressions is consistent with the existence of such an experience-expectant foundation for the development of emotion recognition. What is known so far about the attentional biases in infants is more consistent with a prewired readiness to attend to and incorporate information about some salient cues than with a bias towards a stimulus that infants have learned signals a specific meaning. That is, infants “prefer” to attend to fearful faces54, show enhanced visual and attention-related ERPs to fearful faces66, 67 and have difficulty in disengaging from fearful faces55, but there is no evidence that they feel afraid when they are exposed to fearful faces. Thus, infants exhibit a seemingly obligatory attentional bias towards fearful facial expressions and find them perceptually salient even though they do not seem to derive meaning from fearful facial expressions or understand why they do so. It also seems that the bias to attend to fearful expressions emerges at the developmental time point at which such expressions are most likely to occur in the infant’s environment; that is, around 6 to 7 months, when infants start to crawl and actively explore the environment (and hence place themselves at risk for harm unless there is an attentive caregiver in proximity).
Although the evidence is consistent with the existence of limited preparation to attend to biologically salient cues that are displayed in the face, the exact stimulus features to which the infant is sensitive are currently not known. The bias to attend to fearful facial expressions might reflect a bias towards some visual features of fearful facial expressions, a bias towards expressions of fear more generally, or a more abstract and broadly tuned bias towards certain feature configurations of which fearful facial expressions are only a good example.

As is the case with other experience-expectant mechanisms78, the preparedness to process facial expressions is likely to involve a coarsely specified but slightly biased neural circuitry that requires exposure to species-typical emotional expressions in order to be refined and develop towards a more mature form. This developmental process may involve preservation and stabilization of some initially existing synaptic connections and pruning of others, resulting in a more refined pattern of connectivity in the network (see BOX 2), and a narrowing of the range of stimuli to which the network is responsive. Recent studies in infants illustrate how the perceptual mechanisms that underlie face processing are initially broadly tuned, and become more specialized for specific types of perceptual discriminations with experience79–82. In a study that first demonstrated this phenomenon, 6-month-old infants were shown to be able to discriminate two monkey faces from one another as easily as two human faces, whereas 9-month-old infants and adults were able to discriminate only human faces79. It was subsequently reported that 6-month-old infants who were given 3 months of experience viewing monkey faces retained the ability to discriminate novel monkey faces (at 9 months) whereas infants who lacked such experience could not80. Similar phenomena have now been demonstrated to occur in the perception of intersensory emotion-related cues (i.e., in the ability to match a heard vocalization with the facial expression producing that vocalization)81 and in the perception of lip movements that accompany speech82. Four- to 6-month-old infants can, for example, discriminate silent lip movements that accompany their native speech as well as lip movements that accompany non-native speech, but only the native-language discrimination is maintained at the age of 8 months82.
Collectively, these findings suggest that experience-driven fine-tuning of perceptual mechanisms reflects a general principle of the development of different aspects of face processing83.

Box 2. Multiple functions of the superior temporal sulcus.

Evidence from single-cell studies in the macaque monkey118–121 and functional MRI studies in humans122–125 shows that regions in occipito-temporal cortex, which in humans include the inferior occipital gyri, the fusiform gyrus, and the superior temporal sulcus, are critical for perceptual processing of information from faces. Of these face-responsive regions, the superior temporal sulcus (STS) seems to have a key role in the perception of “changeable” aspects of faces such as facial expression, eye gaze, and lip movements125–127. The importance of the STS in the perception of facial expressions may also reflect its role in the integration of separate sources of information128. People typically use and integrate information from several sources to recognize emotional expressions, including the spatial relations of key facial features129, dynamic cues related to temporal changes in expression130, gaze direction131, and concurrent expressive cues in other sensory modalities such as vocal emotional expressions132, 133.

The STS has been implicated not only in the perception of changeable aspects of faces and audiovisual integration133 but also in several other domains such as the perception of biological motion and social stimuli134 and speech perception135 (see REF. 136 for a review). Although it is possible that these functions are mediated by distinct subregions of the STS, a recent review of fMRI studies in humans identified only two distinct clusters of activation: one in the anterior STS that was systematically associated with speech perception, and another in the posterior STS that was associated with several functions, including face perception, biological motion processing, and audiovisual integration136. The similar activation of the STS in different contexts may be explained by a common cognitive process across different domains137. Alternatively, differential patterns of co-activation and interactions with other brain regions may explain how the same region can participate in different functions127, 136.

A recent study in monkeys84 has further demonstrated that face-processing mechanisms remain in an immature state if the expected experiences do not occur and also that experiences occurring in a sensitive period (i.e., a period when the animal is first exposed to faces) may have irreversible influences on the developing face-processing system. In this study, infant monkeys were reared with no exposure to faces for 6–24 months and were then selectively exposed to either monkey or human faces for one month. Upon termination of the deprivation period (and prior to exposure to any faces), the monkeys exhibited a capacity to discriminate between individual monkey face identities as well as human face identities, suggesting that such discrimination abilities require little if any visual experience in order to develop. This initial capacity changed, however, after the short exposure period: the monkeys maintained the ability to discriminate face identities of the exposed species but had considerable difficulties in discriminating face identities of the non-exposed species.

Experience-dependent development

Although experience-expectant mechanisms and exposure to species-typical facial expressions may provide a foundation for a rapid acquisition of perceptual representations of the universal features of facial expressions, these representations are likely to be further shaped by individual-specific experiences and the frequency and intensity of certain facial expressions in the rearing environment. The strongest evidence that emotion-recognition mechanisms are shaped by individual experience comes from studies in maltreated children. Children of abusive parents are exposed to heightened levels of parental expressions of negative emotions and high rates of direct verbal and physical aggression. Studies85–89 have shown that emotion-recognition mechanisms are significantly shaped by such experiences. School-aged children with a history of being physically abused by their parents exhibit perceptual representations of basic facial expressions (such as fearful, sad, and happy expressions) that are, for the most part, normally organized, but they exhibit heightened sensitivity and a broader perceptual category for signals of anger as compared to children who are reared in typical environments. Compared to non-maltreated children, abused children show a response bias for anger, which means that they are more likely to respond as if a person is angry (displays an angry expression) when the nature of the emotional situation (the emotional state of a protagonist in a story) is ambiguous85. They also allocate a disproportionate amount of processing resources (as inferred from the amplitude of attention-sensitive ERPs) to angry facial expressions87. Finally, abused children show a perceptual bias in the processing of angry faces, which causes them to classify a broader range of facial expressions as perceptually similar to angry faces and also to recognize anger on the basis of partial sensory cues86, 88. Together, these different indices of increased perceptual sensitivity to visual cues of anger may reflect an adaptive process in which the perceptual mechanisms that underlie emotion recognition become attuned to those social signals that serve as important predictive cues in abusive environments88.

Given the generally normal recognition of facial expressions in abused children (except for the broadened perceptual category for signals of anger), the effects of such species-atypical experience seem to reflect a tuning shift rather than a gross alteration of representations of facial expressions. It seems, therefore, that the basic organization of the emotion-recognition networks is specified by an experience-expectant neural circuitry that emerges during a sensitive period of development (perhaps the first few years of life) and that rapid refinement of this circuitry occurs by exposure to universal features of expressions. Individual-specific experiences may, however, alter the category boundaries of facial expressions. In theory, because experience-dependent processes are not tied to a particular point in development, this speaks to the brain’s continual plasticity in both adaptive and maladaptive responses; for example, a tendency to view ambiguous faces in a positive light vs. a negative light. In addition, the perceptual biases that arise through experience-dependent changes should, again in theory, be modifiable. Thus, a maltreated child who acquires a bias to see anger more readily than other emotions should be able to unlearn this same bias. This is quite different, however, from the early perceptual biases that come about through experience-expectant development; thus, for example, it is unlikely that one can “unlearn” the bias to respond quickly to fear, since this bias a) was acquired during a sensitive period of development, leading to a crystallization of neural circuits, and b) may confer a survival advantage.

Recent studies are consistent with the view that components of the emotion-processing network retain some plasticity throughout the life span and can quickly alter their response properties to stimuli that are associated with rewarding or aversive experiences13, 26, 90. Cells in the monkey amygdala, for example, come to represent such associations very rapidly, often on the basis of a single exposure to a stimulus and subsequent reward or aversive stimulation90. Although representations of salient stimuli may first be stored in the amygdala and the orbitofrontal cortex, it is likely that plasticity also occurs in connected visual regions that are relevant for the processing of visual and intersensory information from emotional expressions. In rats, for example, neurons in the primary auditory cortex can tune their receptive fields to the frequency of stimuli that are associated with appetitive or aversive reinforcements91. Such tuning shifts are acquired rapidly and are retained for up to eight weeks. Tuning shifts may be mediated by projections from the amygdala to the cholinergic nucleus basalis, resulting in increased release of acetylcholine from the nucleus basalis to the cerebral cortex91. Although such long-term plasticity has not been demonstrated in the context of human facial-expression processing, recent findings have shown similar changes, including heightened perceptual sensitivity and strengthened cortical representation of pictures of facial expressions that are paired with affectively significant events92–94.

INDIVIDUAL DIFFERENCES IN DEVELOPMENT

Initial biases in emotion-related brain circuits and their experience-driven refinement are likely to contribute not only to the general development of facial-expression processing but also to individual differences in this developmental process. Explicating these mechanisms is important given that heightened sensitivity to signals of some emotions (such as threat) may predispose an individual to learn fears in social settings95 and is known to have a causal role in vulnerability to emotion-related disorders96.

One possibility is that genetic factors, such as common variants in gene sequence (polymorphisms) that affect major neurotransmitter systems, contribute to the reactivity of emotion-related brain circuits. A promising line of research has shown, for example, that a polymorphism in a gene that regulates the serotonin transporter (5-HTT) and affects brain serotonin transmission is associated with the reactivity of the amygdala and associated perceptual representation areas in response to fearful and angry facial expressions97. Individuals with one or two copies of the “short” (S) allele of the 5-HTT polymorphism (i.e., the allele that is associated with reduced 5-HTT availability and vulnerability to depression)98 exhibit relatively greater amygdala responses to threatening facial expressions compared to individuals who are homozygous for the 5-HTT “long” (L) allele97. Given that such genetically driven differences in serotonin function are likely to be present from birth99, 100, they may, in combination with environmental factors (such as exposure to negative emotions), set the stage for the development of increased perceptual sensitivity to negative emotions. It is important to note, however, that the heightened attention to potent signs of danger seen in adults with anxiety disorders96 is also likely to depend on other factors, such as the integrity of later-developing cortico-amygdalar control mechanisms that act to regulate stimulus selection and the allocation of attentional resources to negative emotional cues101.

CONCLUSIONS AND FUTURE DIRECTIONS

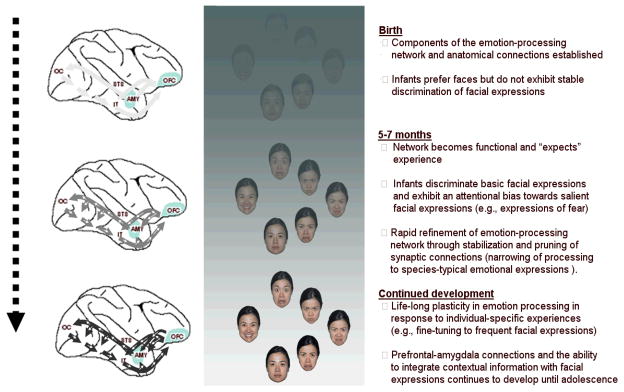

A network of emotion-related brain systems (amygdala and orbitofrontal cortex) and higher-level visual representation areas in the occipito-temporal cortex mediates the capacity to efficiently detect and attend to facial expressions of emotions. The evidence reviewed here suggests that the amygdala and orbitofrontal cortex emerge in early infancy and are to a limited extent biased towards processing and storing information about certain biologically salient cues. Based on these findings, we propose that the early emergence of emotion-related brain systems, the initial biases in these systems, and the functional coupling of these systems with cortical perceptual areas that support more fine-grained perceptual processing and integration of different emotion-relevant cues (such as the STS, see BOX 2) provide a foundation for rapid acquisition of representations of species-typical facial expressions. Thus, the acquisition of representations of facial expressions may be based on a combination of initial biases in emotion-related neural systems and their experience-driven refinement, rather than on experience-independent maturation of a highly specialized system.

There are some indications that the emotion-relevant brain network may be particularly sensitive to the expected experience around the time of its functional emergence between 5 and 7 months of age. The amount of experiential input that is required for these systems to develop normally is not known, but the evidence for similar development of emotion recognition in different cultures and even in severely deprived environments102, 103 suggests that rudimentary perceptual representations of the universal features of facial expressions are acquired on the basis of limited environmental input. The rapid alterations of the response properties of neurons in the amygdala in response to stimulus-emotion associations26 further suggest that emotion-processing networks can also quickly adapt to individual-specific experiences in the environment.

The proposal that rudimentary representations of some universal features of facial expressions are acquired early in life (possibly during a sensitive period) does not preclude the possibility that functional changes in emotion-processing networks occur later in childhood. Indeed, behavioral studies have shown age-related improvement throughout childhood in tasks that measure the ability to label facial expressions104. Although such changes may be partly due to general cognitive improvement, they may also reflect functional changes in the brain network that underlies facial emotion processing. For example, fMRI studies have shown age-related changes during childhood and adolescence in amygdala responses to facial expressions and in connections between the amygdala and ventral prefrontal cortex (e.g., anterior cingulate cortex)39, 105. Amygdalo-prefrontal connections may be of critical importance for the ability to label facial expressions106 and for the utilization of contextual information to modulate responses to facial expressions107. Recent studies have also shown that cortical face-sensitive regions (fusiform gyrus) are relatively immature in 5- to 8-year-old children and continue to specialize for face processing until adolescence108. Thus, although the basic connectivity pattern in the emotion-processing network and some of its response properties (e.g., differential response to neutral and fearful facial expressions)39 may emerge early in life, other aspects of emotion processing, such as those that involve prefrontal-amygdala connections and fine-tuning of responses to specific facial expressions, may continue to develop until adolescence (FIG. 4).

Figure 4. The proposed model of the development of emotion-recognition mechanisms.

It is proposed that the basic organization of the emotion-recognition networks is specified by an experience-expectant neural circuitry that emerges at 5–7 months of age and is rapidly refined by exposure to universal features of expressions during a sensitive period of development (perhaps the first few years of life). The network retains some plasticity throughout the life span and can be fine-tuned by individual-specific experiences (i.e., experience-dependent development). Also, functional connectivity between emotion-processing networks and prefrontal regulatory systems continues to develop until adolescence. The development is affected by genetic factors (e.g., functional polymorphisms that affect reactivity of relevant neural systems), environmental factors (frequency of certain emotional expressions), and their interaction. The depicted time points might become more specific as more data become available.

Several interesting directions for future research emerge from this review. First, further investigation into the normative changes in perceptual-discrimination abilities over the course of the first year and the neural correlates of these changes will shed further light on the existence of sensitive periods in development during which the underlying neural mechanisms “expect” exposure to emotional expressions. Second, to understand better the early foundations of emotion recognition, an important goal for future studies is to elucidate the neural bases of emotion processing in infants and the exact stimulus features to which early developing emotion-processing networks are responsive. With the new developments in techniques that allow investigation of the brain basis of different cognitive functions in developing populations (e.g., high-density recordings of event-related potentials and brain oscillations, near-infrared spectroscopy), these questions are now more approachable than they were even a decade ago. Third, the merging of molecular genetics, brain imaging methods, and psychological characterizations of critical environmental variables will shed new light on the developmental pathways through which the brain is shaped towards normal and vulnerable patterns of responding to social cues of emotions. Such studies will be important for elucidating the early precursors of vulnerability to emotional and social disorders later in life.

Acknowledgments

Charles A. Nelson gratefully acknowledges support from the NIH (MH078829) and the Richard David Scott endowment; Jukka Leppänen acknowledges financial support from the Academy of Finland (grant #1115536). The authors thank Jerome Kagan for his comments on an earlier draft of this article.

References

- 1.Ekman P, Sorenson ER, Friesen WV. Pan-cultural elements in facial displays of emotion. Science. 1969;164:86–88. doi: 10.1126/science.164.3875.86.

- 2.Ekman P, Friesen WV. A new pan-cultural facial expression of emotion. Motiv Emot. 1986;10:159–168.

- 3.Levenson RW. In: Handbook of Affective Sciences. Davidson RJ, Scherer KR, Goldsmith HH, editors. Oxford University Press; New York: 2003. pp. 212–224.

- 4.Vuilleumier P. How brains beware: neural mechanisms of emotional attention. Trends Cogn Sci. 2005;9:585–594. doi: 10.1016/j.tics.2005.10.011. Presents an overview of studies showing that the influence of voluntary attention (task relevance) and affective significance on sensory processing are dissociable and mediated by distinct neural mechanisms.

- 5.Pourtois G, Grandjean D, Sander D, Vuilleumier P. Electrophysiological correlates of rapid spatial orienting towards fearful faces. Cereb Cortex. 2004;14:619–633. doi: 10.1093/cercor/bhh023.

- 6.Milders M, Sahraie A, Logan S, Donnellon N. Awareness of faces is modulated by their emotional meaning. Emotion. 2006;6:10–17. doi: 10.1037/1528-3542.6.1.10.

- 7.Yang E, Zald DH, Blake R. Fearful expressions gain preferential access to awareness during continuous flash suppression. Emotion. 2007;7:882–886. doi: 10.1037/1528-3542.7.4.882.

- 8.Georgiou GA, et al. Focusing on fear: Attentional disengagement from emotional faces. Vis Cogn. 2005;12:145–158. doi: 10.1080/13506280444000076.

- 9.Leppänen JM, Hietanen JK, Koskinen K. Differential early ERPs to fearful versus neutral facial expressions: a response to the salience of the eyes? Biol Psychol. 2008;78:150–158. doi: 10.1016/j.biopsycho.2008.02.002.

- 10.Batty M, Taylor MJ. Early processing of the six basic facial emotional expressions. Cognitive Brain Res. 2003;17:613–620. doi: 10.1016/s0926-6410(03)00174-5.

- 11.Morris JS, et al. A neuromodulatory role for the human amygdala in processing emotional facial expressions. Brain. 1998;121:47–57. doi: 10.1093/brain/121.1.47.

- 12.Hadj-Bouziane F, Bell AH, Knusten TA, Ungerleider LG, Tootell RBH. Perception of emotional expressions is independent of face selectivity in monkey inferior temporal cortex. Proc Natl Acad Sci U S A. 2008;105:5591–5596. doi: 10.1073/pnas.0800489105.

- 13.Hooker CI, Germine LT, Knight RT, D’Esposito M. Amygdala response to facial expressions reflects emotional learning. J Neurosci. 2006;26:8915–8922. doi: 10.1523/JNEUROSCI.3048-05.2006.

- 14.Adolphs R. Neural systems for recognizing emotion. Curr Opin Neurobiol. 2002;12:169–177. doi: 10.1016/s0959-4388(02)00301-x.

- 15.Luo Q, Holroyd T, Jones M, Hendler T, Blair J. Neural dynamics for facial threat processing as revealed by gamma band synchronization using MEG. Neuroimage. 2007;34:839–847. doi: 10.1016/j.neuroimage.2006.09.023.

- 16.Vuilleumier P, Armony JL, Driver J, Dolan RJ. Distinct spatial frequency sensitivities for processing faces and emotional expressions. Nat Neurosci. 2003;6:624–631. doi: 10.1038/nn1057.

- 17.Vuilleumier P, Richardson MP, Armony JL, Driver J, Dolan RJ. Distant influences of amygdala lesion on visual cortical activation during emotional face processing. Nat Neurosci. 2004;7:1271–1278. doi: 10.1038/nn1341. Shows that the amygdala is critically involved in enhanced perceptual processing of emotionally expressive faces.

- 18.Anderson AK, Phelps EA. Lesions of the human amygdala impair enhanced perception of emotionally salient events. Nature. 2001;411:305–309. doi: 10.1038/35077083.

- 19.Freese JL, Amaral DG. The organization of projections from the amygdala to visual cortical areas TE and V1 in the macaque monkey. J Comp Neurol. 2005;486:295–317. doi: 10.1002/cne.20520.

- 20.Freese JL, Amaral DG. Synaptic organization of projections from the amygdala to visual cortical areas TE and V1 in the macaque monkey. J Comp Neurol. 2006;496:655–667. doi: 10.1002/cne.20945.

- 21.Amaral DG, Behniea H, Kelly JL. Topographic organization of projections from the amygdala to the visual cortex in the macaque monkey. Neuroscience. 2003;118:1099–1120. doi: 10.1016/s0306-4522(02)01001-1.

- 22.Kapp BS, Supple WF, Whalen PJ. Effects of electrical stimulation of the amygdaloid central nucleus on neocortical arousal in the rabbit. Behav Neurosci. 1994;108:81–93. doi: 10.1037//0735-7044.108.1.81.

- 23.Bentley P, Vuilleumier P, Thiel CM, Driver J, Dolan RJ. Cholinergic enhancement modulates neural correlates of selective attention and emotional processing. Neuroimage. 2003;20:58–70. doi: 10.1016/s1053-8119(03)00302-1.

- 24.Whalen PJ. Fear, vigilance, and ambiguity: initial neuroimaging studies of the human amygdala. Curr Dir Psychol Sci. 1998;7:177–188.

- 25.Adolphs R. Fear, faces, and the human amygdala. Curr Opin Neurobiol. 2008;18:166–172. doi: 10.1016/j.conb.2008.06.006.

- 26.Paton JJ, Belova MA, Morrison SE, Salzman CD. The primate amygdala represents the positive and negative value of visual stimuli during learning. Nature. 2006;439:865–870. doi: 10.1038/nature04490.

- 27.Hornak J, et al. Changes in emotion after circumscribed surgical lesions of the orbitofrontal and cingulate cortices. Brain. 2003;126:1691–1712. doi: 10.1093/brain/awg168.

- 28.O’Doherty J, et al. Beauty in a smile: the role of medial orbitofrontal cortex in facial attractiveness. Neuropsychologia. 2003;41:147–155. doi: 10.1016/s0028-3932(02)00145-8.

- 29.Blair RJR, Morris JS, Frith CD, Perrett DI, Dolan RJ. Dissociable neural responses to facial expressions of sadness and anger. Brain. 1999;122:883–893. doi: 10.1093/brain/122.5.883.

- 30.Rolls ET. The representation of information about faces in the temporal and frontal lobes. Neuropsychologia. 2007;45:124–143. doi: 10.1016/j.neuropsychologia.2006.04.019.

- 31.Hooker CI, Verosky SC, Miyakawa A, Knight RT, D’Esposito M. The influence of personality on neural mechanisms of observational fear and reward learning. Neuropsychologia. 2008;46:2709–2724. doi: 10.1016/j.neuropsychologia.2008.05.005.

- 32.Cavada C, Company T, Tejedor J, Cruz-Rizzolo RJ, Reinoso-Suarez F. The anatomical connections of the macaque monkey orbitofrontal cortex: a review. Cereb Cortex. 2000;10:220–242. doi: 10.1093/cercor/10.3.220.

- 33.Bar M, et al. Top-down facilitation of visual recognition. Proc Natl Acad Sci U S A. 2006;103:449–454. doi: 10.1073/pnas.0507062103.

- 34.Kringelbach ML, et al. A specific and rapid neural signature for parental instinct. PLoS ONE. 2008;3:e1664. doi: 10.1371/journal.pone.0001664.

- 35.Canli T. Toward a neurogenetic theory of neuroticism. Ann N Y Acad Sci. 2008;1129:153–174. doi: 10.1196/annals.1417.022.

- 36.Fox E, Mathews A, Calder AJ, Yiend J. Anxiety and sensitivity to gaze direction in emotionally expressive faces. Emotion. 2007;7:478–486. doi: 10.1037/1528-3542.7.3.478.

- 37.Etkin A, et al. Individual Differences in Trait Anxiety Predict the Response of the Basolateral Amygdala to Unconsciously Processed Fearful Faces. Neuron. 2004;44:1043–1055. doi: 10.1016/j.neuron.2004.12.006.

- 38.Stein MB, Simmons AN, Feinstein JS, Paulus MP. Increased Amygdala and Insula Activation During Emotion Processing in Anxiety-Prone Subjects. Am J Psychiatry. 2007;164:318–327. doi: 10.1176/ajp.2007.164.2.318.

- 39.Hare TA, et al. Biological Substrates of Emotional Reactivity and Regulation in Adolescence During an Emotional Go-Nogo Task. Biol Psychiatry. 2008;63:927–934. doi: 10.1016/j.biopsych.2008.03.015.

- 40.Sorce FF, Emde RN, Campos JJ, Klinnert MD. Maternal emotional signaling: Its effect on the visual cliff behavior of 1-year-olds. Dev Psychol. 1985;21:195–200.

- 41.Johnson MH. Subcortical face processing. Nat Rev Neurosci. 2005;6:766–774. doi: 10.1038/nrn1766.

- 42.Banks MS, Salapatek P. In: Handbook of Child Psychology. Haith MM, Campos JJ, editors. Wiley; New York: 1983. pp. 435–571.

- 43.Bushnell IWR. Modification of the externality effect in young infants. J Exp Child Psychol. 1979;28:211–229. doi: 10.1016/0022-0965(79)90085-7.

- 44.Maurer D, Salapatek P. Developmental changes in the scanning of faces by young infants. Child Dev. 1976;47:523–527.

- 45.Field TM, Woodson RW, Greenberg R, Cohen C. Discrimination and imitation of facial expressions by neonates. Science. 1982;218:179–181. doi: 10.1126/science.7123230.

- 46.Kestenbaum R, Nelson CA. The recognition and categorization of upright and inverted emotional expressions by 7-month-old infants. Infant Behav Dev. 1990;13:497–511.

- 47.Bornstein MH, Arterberry ME. Recognition, discrimination and categorization of smiling by 5-month-old infants. Dev Sci. 2003;6:585–599.

- 48.Ludemann PM, Nelson CA. Categorical representation of facial expressions by 7-month-old infants. Dev Psychol. 1988;24:492–501.

- 49.Nelson CA, Morse PA, Leavitt LA. Recognition of facial expressions by 7-month-old infants. Child Dev. 1979;50:1239–1242. One of the first papers to report that infants can perceptually discriminate certain facial expressions from one another (specifically, happy vs. fear) and prefer to look longer at fearful faces than happy faces.

- 50.Bahrick LE, Lickliter R, Flom R. Intersensory redundancy guides the development of selective attention, perception, and cognition. Curr Dir Psychol Sci. 2004;13:99–102.

- 51.Flom R, Bahrick LE. The development of infant discrimination of affect in multimodal and unimodal stimulation: the role of intersensory redundancy. Dev Psychol. 2007;43:238–252. doi: 10.1037/0012-1649.43.1.238.

- 52.Walker-Andrews AS. Infants’ perception of expressive behaviors: differentiation of multimodal information. Psychol Bull. 1997;121:437–456. doi: 10.1037/0033-2909.121.3.437.

- 53.Kotsoni E, de Haan M, Johnson MH. Categorical perception of facial expressions by 7-month-old infants. Perception. 2001;30:1115–1125. doi: 10.1068/p3155.

- 54.Nelson CA, Dolgin K. The generalized discrimination of facial expressions by 7-month-old infants. Child Dev. 1985;56:58–61.

- 55.Peltola MJ, Leppänen JM, Palokangas T, Hietanen JK. Fearful faces modulate looking duration and attention disengagement in 7-month-old infants. Dev Sci. 2008;11:60–68. doi: 10.1111/j.1467-7687.2007.00659.x. Provides evidence for adult-like emotion–attention interactions in infants and shows that the influence of fearful faces on attention in infants is not simply attributable to the novelty of these faces.

- 56.de Haan M, Johnson MH, Halit H. Development of face-sensitive event-related potentials during infancy: a review. Int J Psychophysiol. 2003;51:45–58. doi: 10.1016/s0167-8760(03)00152-1.

- 57.de Haan M, Nelson CA. Brain activity differentiates face and object processing in 6-month-old infants. Dev Psychol. 1999;35:1113–1121. doi: 10.1037//0012-1649.35.4.1113. Electrophysiological evidence that the brain of the typically developing 6 month old distinguishes between faces and non-face objects.

- 58.de Haan M, Pascalis O, Johnson MH. Specialization of neural mechanisms underlying face recognition in human infants. J Cogn Neurosci. 2002;14:199–209. doi: 10.1162/089892902317236849.

- 59.Halit H, Csibra G, Volein Á, Johnson MH. Face-sensitive cortical processing in early infancy. J Child Psychol Psychiatry. 2004;45:1228–1234. doi: 10.1111/j.1469-7610.2004.00321.x.

- 60.Halit H, de Haan M, Johnson MH. Cortical specialization for face processing: face-sensitive event-related potential components in 3- and 12-month-old infants. Neuroimage. 2003;19:1180–1193. doi: 10.1016/s1053-8119(03)00076-4.

- 61.Tzourio-Mazoyer N, et al. Neural correlates of woman face processing by 2-month-old infants. Neuroimage. 2002;15:454–461. doi: 10.1006/nimg.2001.0979.

- 62.Otsuka Y, et al. Neural activation to upright and inverted faces in infants measured by near infrared spectroscopy. Neuroimage. 2007;34:399–406. doi: 10.1016/j.neuroimage.2006.08.013.

- 63.Amaral DG, Bennett J. Development of amygdalo-cortical connection in the macaque monkey. Soc Neurosci Abs. 2000;26:17–26.

- 64.Machado CJ, Bachevalier J. Non-human primate models of childhood psychopathology: the promise and the limitations. J Child Psychol Psychiatry. 2003;44:64–87. doi: 10.1111/1469-7610.00103.

- 65.Minagawa-Kawai Y, et al. Prefrontal activation associated with social attachment: facial emotion recognition in mothers and infants. Cereb Cortex. doi: 10.1093/cercor/bhn081. (in press). Reports an optical imaging study showing that orbitofrontal cortex is involved in the processing of happy facial expressions in infants.

- 66.Leppänen JM, Moulson MC, Vogel-Farley VK, Nelson CA. An ERP study of emotional face processing in the adult and infant brain. Child Dev. 2007;78:232–245. doi: 10.1111/j.1467-8624.2007.00994.x. Reports evidence that affective significance modulates the early stages of cortical face processing in adult and 7-month-old infants.

- 67.Nelson CA, de Haan M. Neural correlates of infants’ visual responsiveness to facial expressions of emotions. Dev Psychobiol. 1996;29:577–595. doi: 10.1002/(SICI)1098-2302(199611)29:7<577::AID-DEV3>3.0.CO;2-R. Examines the neural correlates of the infant’s ability to discriminate facial expressions of emotion.

- 68.de Haan M. In: Infant EEG and event-related potentials. de Haan M, editor. Psychology Press; New York: 2007. pp. 101–143.

- 69.Nelson CA. In: Human Behavior and the Developing Brain. Dawson G, Fischer K, editors. Guilford Press; New York: 1994. pp. 269–313.

- 70.Reynolds GD, Richards JE. Familiarization, attention, and recognition memory in infancy: an event-related-potential and cortical source localization study. Dev Psychol. 2005;41:598–615. doi: 10.1037/0012-1649.41.4.598.

- 71. Bush G, Luu P, Posner MI. Cognitive and emotional influences in anterior cingulate cortex. Trends Cogn Sci. 2000;4:215–222. doi: 10.1016/s1364-6613(00)01483-2.

- 72. Hoehl S, Palumbo L, Heinisch C, Striano T. Infants’ attention is biased by emotional expressions and eye gaze direction. Neuroreport. 2008;19:579–582. doi: 10.1097/WNR.0b013e3282f97897.

- 73. Hoehl S, Wiese L, Striano T. Young infants’ neural processing of objects is affected by eye gaze direction and emotional expression. PLoS ONE. 2008;3:e2389. doi: 10.1371/journal.pone.0002389. Provides evidence that infants utilize information from adults’ gaze direction and emotional expression to enhance attention towards certain objects.

- 74. Bauman MD, Lavenex P, Mason WA, Capitanio JP, Amaral DG. The development of social behavior following neonatal amygdala lesions in rhesus monkeys. J Cogn Neurosci. 2004;16:1388–1411. doi: 10.1162/0898929042304741.

- 75. Moriceau S, Sullivan RM. Maternal presence serves as a switch between learning fear and attraction in infancy. Nat Neurosci. 2006;9:1004–1006. doi: 10.1038/nn1733.

- 76. Thompson JV, Sullivan RM, Wilson DA. Developmental emergence of fear learning corresponds with changes in amygdala synaptic plasticity. Brain Res. 2008;1200:58–65. doi: 10.1016/j.brainres.2008.01.057.

- 77. Farroni T, Menon E, Rigato S, Johnson MH. The perception of facial expressions in newborns. Eur J Dev Psychol. 2007;4:2–13. doi: 10.1080/17405620601046832.

- 78. Greenough WT, Black JE, Wallace CS. Experience and brain development. Child Dev. 1987;58:539–559. A theoretical review that illustrates the differences between experience-expectant and experience-dependent plasticity and learning.

- 79. Pascalis O, de Haan M, Nelson CA. Is face processing species-specific during the first year of life? Science. 2002;296:1321–1323. doi: 10.1126/science.1070223. Reports the first study showing that face-processing mechanisms are initially broadly tuned but narrow with experience.

- 80. Pascalis O, et al. Plasticity of face processing in infancy. Proc Natl Acad Sci U S A. 2005;102:5297–5300. doi: 10.1073/pnas.0406627102. Demonstrates that one can rescue the sensitive period for discriminating monkey faces by providing infants with distributed exposure to monkey faces.

- 81. Lewkowicz DJ, Ghazanfar AA. The decline of cross-species intersensory perception in human infants. Proc Natl Acad Sci U S A. 2006;103:6771–6774. doi: 10.1073/pnas.0602027103.

- 82. Weikum WM, et al. Visual language discrimination in infancy. Science. 2007;316:1159. doi: 10.1126/science.1137686.

- 83. Scott LS, Pascalis O, Nelson CA. A domain general theory of the development of perceptual discrimination. Curr Dir Psychol Sci. 2007;16:197–201. doi: 10.1111/j.1467-8721.2007.00503.x.

- 84. Sugita Y. Face perception in monkeys reared with no exposure to faces. Proc Natl Acad Sci U S A. 2008;105:394–398. doi: 10.1073/pnas.0706079105.

- 85. Pollak SD, Cicchetti D, Hornung K, Reed A. Recognizing emotion in faces: developmental effects of child abuse and neglect. Dev Psychol. 2000;36:679–688. doi: 10.1037/0012-1649.36.5.679.

- 86. Pollak SD, Kistler DJ. Early experience is associated with the development of categorical representations for facial expression of emotions. Proc Natl Acad Sci U S A. 2002;99:9072–9076. doi: 10.1073/pnas.142165999. Reports evidence for experience-dependent plasticity in brain mechanisms underlying perception of universal facial expressions.

- 87. Pollak SD, Klorman R, Thatcher JE, Cicchetti D. P3b reflects maltreated children’s reactions to facial displays of emotion. Psychophysiology. 2001;38:264–274.

- 88. Pollak SD, Sinha P. Effects of early experience on children’s recognition of facial displays of emotion. Dev Psychol. 2002;38:784–791. doi: 10.1037//0012-1649.38.5.784.

- 89. Pollak SD, Tolley-Schell SA. Selective attention to facial emotion in physically abused children. J Abnorm Psychol. 2003;112:323–338. doi: 10.1037/0021-843x.112.3.323.

- 90. Salzman CD, Paton JJ, Belova MA, Morrison SE. Flexible neural representations of value in the primate brain. Ann N Y Acad Sci. 2007;1121:336–354. doi: 10.1196/annals.1401.034.

- 91. Weinberger NM. Specific long-term memory traces in primary auditory cortex. Nat Rev Neurosci. 2004;5:279–290. doi: 10.1038/nrn1366.

- 92. Keil A, Stolarova M, Moratti S, Ray WJ. Adaptation in human visual cortex as a mechanism for rapid discrimination of aversive stimuli. Neuroimage. 2007;36:472. doi: 10.1016/j.neuroimage.2007.02.048.

- 93. Lim SL, Pessoa L. Affective learning increases sensitivity to graded emotional faces. Emotion. 2008;8:96–103. doi: 10.1037/1528-3542.8.1.96.

- 94. Pizzagalli DA, Greischar LL, Davidson RJ. Spatio-temporal dynamics of brain mechanisms in aversive classical conditioning: high-density event-related potential and brain electrical tomography analyses. Neuropsychologia. 2003;41:184–194. doi: 10.1016/s0028-3932(02)00148-3.

- 95. Olsson A, Phelps EA. Social learning of fear. Nat Neurosci. 2007;10:1095–1102. doi: 10.1038/nn1968.

- 96. Mathews A, MacLeod C. Cognitive vulnerability to emotional disorders. Annu Rev Clin Psychol. 2005;1:167–195. doi: 10.1146/annurev.clinpsy.1.102803.143916.

- 97. Hariri AR, et al. Serotonin transporter genetic variation and the response of the human amygdala. Science. 2002;297:400–403. doi: 10.1126/science.1071829.

- 98. Caspi A, et al. Influence of life stress on depression: moderation by a polymorphism in the 5-HTT gene. Science. 2003;301:386–389. doi: 10.1126/science.1083968.

- 99. Hariri AR, Holmes A. Genetics of emotional regulation: the role of the serotonin transporter in neural function. Trends Cogn Sci. 2006;10:182–191. doi: 10.1016/j.tics.2006.02.011.

- 100. Lakatos K, et al. Association of D4 dopamine receptor gene and serotonin transporter promoter polymorphisms with infants’ response to novelty. Mol Psychiatry. 2003;8:90–97. doi: 10.1038/sj.mp.4001212.

- 101. Bishop SJ. Neurocognitive mechanisms of anxiety: an integrative account. Trends Cogn Sci. 2007;11:307–317. doi: 10.1016/j.tics.2007.05.008.

- 102. Moulson MC, Nelson CA. Early adverse experiences and the neurobiology of facial emotion processing. Dev Psychol. doi: 10.1037/a0014035. (in press).

- 103. Nelson CA, Parker SW, Guthrie D. The discrimination of facial expressions by typically developing infants and toddlers and those experiencing early institutional care. Infant Behav Dev. 2006;29:210–219. doi: 10.1016/j.infbeh.2005.10.004.

- 104. Widen SC, Russell JA. A closer look at preschoolers’ freely produced labels for facial expressions. Dev Psychol. 2003;39:114–128. doi: 10.1037//0012-1649.39.1.114.

- 105. Cunningham MG, Bhattacharyya S, Benes FM. Amygdalo-cortical sprouting continues into early adulthood: implications for the development of normal and abnormal function during adolescence. J Comp Neurol. 2002;453:116–130. doi: 10.1002/cne.10376.

- 106. Hariri AR, Bookheimer SY, Mazziotta JC. Modulating emotional responses: effects of a neocortical network on the limbic system. Neuroreport. 2000;11:43–48. doi: 10.1097/00001756-200001170-00009.

- 107. Kim H, et al. Contextual modulation of amygdala responsivity to surprised faces. J Cogn Neurosci. 2004;16:1730–1743. doi: 10.1162/0898929042947865.

- 108. Cohen Kadosh K, Johnson MH. Developing a cortex specialized for face perception. Trends Cogn Sci. 2007;11:367–369. doi: 10.1016/j.tics.2007.06.007.

- 109. Humphrey T. The development of the human amygdala during early embryonic life. J Comp Neurol. 1968;132:135–165. doi: 10.1002/cne.901320108.

- 110. Kordower JH, Piecinski P, Rakic P. Neurogenesis of the amygdaloid nuclear complex in the rhesus monkey. Brain Res Dev Brain Res. 1992;68:9–15. doi: 10.1016/0165-3806(92)90242-o.

- 111. Nikolic I, Kostovic I. Development of the lateral amygdaloid nucleus in the human fetus: transient presence of discrete cytoarchitectonic units. Anat Embryol (Berl). 1986;174:355–360. doi: 10.1007/BF00698785.

- 112. Bachevalier J, Ungerleider LG, O’Neill JB, Friedman DP. Regional distribution of [3H] naloxone binding in the brain of a newborn rhesus monkey. Brain Res. 1986;390:302–308. doi: 10.1016/s0006-8993(86)80240-2.

- 113. Berger B, Febvret A, Greengard P, Goldman-Rakic PS. DARPP-32, a phosphoprotein enriched in dopaminoceptive neurons bearing dopamine D1 receptors: distribution in the cerebral cortex of the newborn and adult rhesus monkey. J Comp Neurol. 1990;299:327–348. doi: 10.1002/cne.902990306.

- 114. Prather MD, Amaral DG. The development and distribution of serotonergic fibers in the macaque monkey amygdala. Soc Neurosci Abs. 2000;26:1727.

- 115. Webster MJ, Bachevalier J, Ungerleider LG. Connections of inferior temporal area TEO and TE with parietal and frontal cortex in macaque monkeys. Cereb Cortex. 1994;4:470–483. doi: 10.1093/cercor/4.5.470.

- 116. Webster MJ, Ungerleider LG, Bachevalier J. Connections of inferior temporal areas TE and TEO with medial temporal-lobe structures in infant and adult monkeys. J Neurosci. 1991;11:1095–1116. doi: 10.1523/JNEUROSCI.11-04-01095.1991.

- 117. Rodman HR, Consuelos MJ. Cortical projections to anterior inferior temporal cortex in infant macaque monkeys. Vis Neurosci. 1994;11:119–133. doi: 10.1017/s0952523800011160.

- 118. Afraz SR, Kiani R, Esteky H. Microstimulation of inferotemporal cortex influences face categorization. Nature. 2006;442:692–695. doi: 10.1038/nature04982.

- 119. Hasselmo ME, Rolls ET, Baylis GC. The role of expression and identity in the face-selective responses of neurons in the temporal visual cortex of the monkey. Behav Brain Res. 1989;32:203–218. doi: 10.1016/s0166-4328(89)80054-3.

- 120. Sugase Y, Yamane S, Ueno S, Kawano K. Global and fine information coded by single neurons in the temporal visual cortex. Nature. 1999;400:869–873. doi: 10.1038/23703.

- 121. Tsao DY, Freiwald WA, Tootell RBH, Livingstone MS. A cortical region consisting entirely of face-selective cells. Science. 2006;311:670–674. doi: 10.1126/science.1119983.

- 122. Bentin S, Allison T, Puce A, Perez E, McCarthy G. Electrophysiological studies of face perception in humans. J Cogn Neurosci. 1996;8:551–565. doi: 10.1162/jocn.1996.8.6.551.

- 123. Hoffman EA, Haxby JV. Distinct representations of eye gaze and identity in the distributed human neural system for face perception. Nat Neurosci. 2000;3:80–84. doi: 10.1038/71152.

- 124. Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- 125. LaBar KS, Crupain MJ, Voyvodic JT, McCarthy G. Dynamic perception of facial affect and identity in the human brain. Cereb Cortex. 2003;13:1023–1033. doi: 10.1093/cercor/13.10.1023.

- 126. Engell AD, Haxby JV. Facial expression and gaze-direction in human superior temporal sulcus. Neuropsychologia. 2007;45:3234–3241. doi: 10.1016/j.neuropsychologia.2007.06.022.

- 127. Haxby JV, Hoffman EA, Gobbini MI. Human neural systems for face recognition and social communication. Biol Psychiatry. 2002;51:59–67. doi: 10.1016/s0006-3223(01)01330-0.

- 128. Calder AJ, Young AW. Understanding the recognition of facial identity and facial expression. Nat Rev Neurosci. 2005;6:641–651. doi: 10.1038/nrn1724.

- 129. Calder AJ, Young AW, Keane J, Dean M. Configural information in facial expression perception. J Exp Psychol Hum Percept Perform. 2000;26:527–551. doi: 10.1037//0096-1523.26.2.527.

- 130. Ambadar Z, Schooler JW, Cohn JF. Deciphering the enigmatic face: the importance of facial dynamics in interpreting subtle facial expressions. Psychol Sci. 2005;16:403–410. doi: 10.1111/j.0956-7976.2005.01548.x.

- 131. Adams RBJ, Kleck RE. Perceived gaze direction and the processing of facial displays of emotion. Psychol Sci. 2003;14:644–647. doi: 10.1046/j.0956-7976.2003.psci_1479.x.

- 132. Campanella S, Belin P. Integrating face and voice in person perception. Trends Cogn Sci. 2007;11:535–543. doi: 10.1016/j.tics.2007.10.001.

- 133. Kreifelts B, Ethofer T, Grodd W, Erb M, Wildgruber D. Audiovisual integration of emotional signals in voice and face: an event-related fMRI study. Neuroimage. 2007;37:1445–1456. doi: 10.1016/j.neuroimage.2007.06.020.

- 134. Puce A, Perrett D. Electrophysiology and brain imaging of biological motion. Philos Trans R Soc Lond B Biol Sci. 2003;358:435–445. doi: 10.1098/rstb.2002.1221.

- 135. Möttönen R, et al. Perceiving identical sounds as speech or non-speech modulates activity in the left posterior superior temporal sulcus. Neuroimage. 2006;30:563–569. doi: 10.1016/j.neuroimage.2005.10.002.