Abstract

To examine the ontogeny of emotional face processing, event-related potentials (ERPs) were recorded from adults and 7-month-old infants while viewing pictures of fearful, happy, and neutral faces. Face-sensitive ERPs at occipital-temporal scalp regions differentiated between fearful and neutral/happy faces in both adults (N170 was larger for fear) and infants (P400 was larger for fear). Behavioral measures showed no overt attentional bias toward fearful faces in adults, but in infants, the duration of the first fixation was longer for fearful than happy faces. Together, these results suggest that the neural systems underlying the differential processing of fearful and happy/neutral faces are functional early in life, and that affective factors may play an important role in modulating infants’ face processing.

Keywords: Brain Development, Electrophysiology, Emotion, Face Perception

Humans glean a wealth of behaviorally and biologically significant information from others’ facial expressions. Infants will crawl over a visual cliff to approach a novel toy if their mother’s face displays a happy expression, whereas they avoid the cliff if their mother poses a fearful facial expression (Sorce, Emde, Campos, & Klinnert, 1985). Investigation into the neural mechanisms that underlie the processing of facial expressions may, therefore, provide a useful model as to how behaviorally and emotionally significant stimuli are processed in the human brain, and how the processing of such stimuli differs from the processing of other types of visual stimuli.

Recording of event-related potentials (ERPs) provides one tool to examine the neural mechanisms of facial expression processing. ERP studies of face processing in adults have revealed that, compared to other visual objects, faces typically elicit a larger negative deflection at occipital-temporal recording sites approximately 170 ms after the stimulus onset (Bentin, Allison, Puce, Perez, & McCarthy, 1996). This component is known as the N170, and it presumably reflects perceptual processing of structural information from faces in specialized occipital-temporal brain areas (e.g., Allison, Puce, & McCarthy, 2000; Haxby, Hoffman, & Gobbini, 2002). Recent studies have shown that the amplitude of the N170 can differ as a function of the emotional significance of the facial stimulus. Specifically, the N170 is larger to fearful compared to neutral or happy facial expressions (Batty & Taylor, 2003; Rossignol, Philippot, Douilliez, Crommelinck, & Campanella, 2005), to affectively significant biological motion (e.g., mouth opening indicative of an utterance) relative to various types of neutral control stimuli (Puce et al., 2003; Puce & Perrett, 2003; Puce, Smith, & Allison, 2000; Wheaton, Pipingas, Silberstein, & Puce, 2001), and to liked relative to neutral/disliked faces (Pizzagalli et al., 2002). Other studies have shown differential ERPs to emotional and neutral stimuli beyond the latency of the N170. A well-replicated finding in these studies has been that both negative and positive facial expressions elicit a negative shift in ERP activity at occipital-temporal scalp regions approximately 200 ms after stimulus presentation (Eimer, Holmes, & McGlone, 2003; Krolak-Salmon, Fischer, Vighetto, & Mauguière, 2001; Sato, Kochiyama, Yoshikawa, & Matsumura, 2001; Schupp et al., 2004). This negative shift is similar to that observed in response to emotional visual cues other than faces (e.g., pictures of mutilations, see Schupp, Junghöfer, Weike, & Hamm, 2004).

The enhanced ERPs in response to emotionally salient faces are typically interpreted as reflecting differential processing of emotionally meaningful and neutral stimuli in cortical visual systems. Emotionally significant stimuli may engage subcortical emotion-related brain structures (e.g., the amygdala), and the activation of these structures, in turn, may modulate and enhance cortical information processing and guide limited processing resources to emotionally significant stimuli (Amaral, Behniea, & Kelly, 2003; Morris et al., 1998; Vuilleumier, Richardson, Armony, Driver, & Dolan, 2004; Whalen, 1998). It is also possible that the amygdala and the face-specific cortical areas are sensitive to some low-level features in emotional faces such as the wide-open eyes and increased size of the white sclera around the dark pupil in fearful faces (Johnson, 2005; Whalen et al., 2004). Recent neuroimaging studies with human adults have shown differential amygdalar response to fearful and happy eyes presented in isolation (Whalen et al., 2004). There is also evidence showing that the N170 component in adults responds primarily to the eyes within a face (Schyns, Jentzsch, Johnson, Schweinberger, & Gosselin, 2003). These results raise a possibility that the N170 is particularly sensitive to expression changes in the eye region.

Hence, progress has been made in understanding the neural mechanisms underlying emotional information processing in adults. Little is known, however, about how these mechanisms develop and when the developing brain starts to respond differentially to emotional versus neutral faces. Human infants show an early interest in the faces of other people and by the age of 5 to 7 months, infants’ visual system is sufficiently developed to support discrimination of most facial expression contrasts (for reviews, see Leppänen & Nelson, in press; Nelson, 1987; Walker-Andrews, 1997). There are also some indications that emotional factors may influence infants’ visual behavior toward faces. Seven-month-old infants, for example, preferentially allocate attention to a fearful face over a simultaneously presented happy face in behavioral visual paired comparison tasks (Nelson & Dolgin, 1985). There is also evidence that viewing angry facial expressions increases the magnitude of an eye blink startle to loud noise in 5-month-old infants (Balaban, 1995). The augmentation of the startle reflex by negative stimulation is known to be mediated by amygdala circuitry (Angrilli et al., 1996; Funayama, Grillon, Davis, & Phelps, 2001; Pissiota et al., 2003) and, hence, these findings may suggest that amygdala circuitry is already functional and responsive to facial expressions in the early stages of postnatal development. Further support for this possibility comes from studies with macaque monkeys showing that cortico-amygdalar connections and efferent connections from the amygdala back to sensory and other cortical regions are established soon after birth (Amaral & Bennett, 2000; Nelson et al., 2002).

Few studies examining the electrophysiological correlates of emotional face processing in infants have been reported. Nelson and de Haan (1996) found that the “negative central” (NC) component of the infant ERP was of larger amplitude in response to fearful compared to happy facial expressions. This finding was recently replicated by de Haan and colleagues (de Haan, Belsky, Reid, Volein, & Johnson, 2004). Given that the NC reflects the orienting of processing resources to attention-grabbing stimuli (Courchesne, Ganz, & Norcia, 1981; Nelson & Monk, 2001; Richards, 2003), the finding of a larger NC may reflect greater allocation of processing resources to fearful faces. Although these findings point to differential cortical processing of fearful and happy faces in early infancy, it is not known how the ERP findings in infants compare to the results of the adult ERP studies described above. Comparison of the results of the existing adult and infant studies is difficult because of the differences in the employed stimuli and task procedures between the studies (e.g., adult studies typically compare ERPs to emotionally salient and neutral faces whereas infant studies have compared ERPs to different emotional expressions such as expressions of fear and happiness). Thus, further research is needed to examine developmental differences in emotional information processing by measuring ERPs to facial expressions in adults and infants under equivalent testing conditions.

Even when recorded under the same testing conditions, comparison of the infant and adult ERPs is complicated by the fact that infant and adult ERP waveforms differ in morphology, most likely due to physiological differences in the head, skull, and underlying brain tissue (DeBoer, Scott, & Nelson, 2004). Considerable developmental changes also occur in the timing, amplitude, and scalp-topography of individual ERP components (DeBoer et al., 2004). Due to these factors, comparisons across age groups cannot be based on components that are similar in terms of the latency and scalp-topography, but rather on components that have similar response properties and that, hence, reflect functionally equivalent processes (de Haan, Johnson, & Halit, 2003). The adult literature reviewed above suggests that the ERP differentiation between facial expressions is observed for those occipital-temporal ERP components that respond preferentially to faces as compared to other visual objects. Recent ERP studies in infants have found that the N290 (i.e., a negative deflection occurring at 290 ms) and P400 (i.e., a positive deflection occurring at 400 ms), recorded at occipital temporal scalp regions in 3- to 12-month-old infants, are sensitive to faces and may reflect processes similar to those reflected in the adult N170 (de Haan et al., 2003). The evidence for this comes from findings that the amplitude of the N290 is larger for human faces relative to monkey faces or matched visual noise stimuli (de Haan, Pascalis, & Johnson, 2002; Halit, Csibra, & Johnson, 2004; Halit, de Haan, & Johnson, 2003), the P400 peaks earlier for faces than for other objects (de Haan & Nelson, 1999), and the amplitude of the P400 is larger for upright compared to inverted faces (de Haan et al., 2002). It is not known whether the amplitude or latency of these face-sensitive components is affected by facial emotional expressions. 
Given the adult studies suggesting that posterior face-sensitive ERPs differentiate between facial expressions (Batty & Taylor, 2003), it is of great interest to examine whether the amplitude and/or latency of the N290 and P400 differ between facial expressions. It is possible that the posterior face-sensitive components in infants are particularly sensitive to the expressive changes in the eye region as infants show early sensitivity to information in the eye region (Farroni, Johnson, & Csibra, 2004) and there are electrophysiological data showing that ERP responses to eyes mature relatively early in development (Taylor, Edmonds, McCarthy, & Allison, 2001).

To summarize, ERPs recorded over occipital-temporal scalp regions have been found to be sensitive to the emotional signal value of faces in adults. The present study was designed to examine whether this ERP differentiation between facial expressions is observed in infants and thus, whether it reflects an early-developing characteristic of face processing. We recorded ERPs to fearful, happy, and neutral faces in young adults and 7-month-old infants under equivalent viewing conditions. Since young infants were tested, the task procedure used differed in two ways from those typically used in adult ERP studies. First, participants were not given any specific instructions to categorize or label the facial expressions. In some studies, ERP differentiation between emotional and neutral faces has been shown to be specifically contingent upon this task feature (e.g., Krolak-Salmon et al., 2001) but other findings have shown that reliable effects can also be obtained in a passive task (Batty & Taylor, 2003). Second, in the present task participants viewed repeated presentations of fearful, happy, and neutral expressions of one individual female model (the model differed across participants). Although adult studies routinely use multiple exemplars of each expression, studies with developing populations have typically used only one model to avoid possible confounds arising from using a demanding perceptual task (e.g., Dawson, Webb, Carver, Panagiotides, & McPartland, 2004; Nelson & de Haan, 1996). Based on the literature reviewed above, we predicted that, in adults, the amplitude of the ERPs recorded at lateral occipital-temporal sites would differ between emotionally salient (fearful) and neutral faces starting 170 ms after the stimulus onset. 
Of particular interest in the present study was to establish whether ERPs also differentiate between emotional and neutral faces in infants, and whether it is manifested in those occipital-temporal components of the infant ERP that constitute the developmental precursors of the mature face-sensitive N170.

In addition to recording ERPs, a behavioral visual paired comparison (VPC) task was used to examine participants’ overt visual attention to fearful and happy facial expressions. Participants were presented with a pair of faces showing happy and fearful facial expressions and looking times toward each of the faces were measured. Earlier studies have shown that infants spontaneously prefer to look at a fearful face compared to a simultaneously presented happy face (Kotsoni, de Haan, & Johnson, 2002; Nelson & Dolgin, 1985). The purpose of the present study was to replicate this finding and also to examine whether there is any association between electrophysiological responses to fearful faces and a behavioral bias towards fearful faces.

Method

Participants

The final group of participants consisted of 10 young adults (M = 19.3 years, range = 18–21 years; 7 females) and fifteen 7-month-old infants (M = 214 days, range = 207–219 days; 8 females). All of the infants were born full-term (i.e., between 38 and 42 weeks gestational age), were of normal birth weight, and had no history of visual or neurological abnormalities. Adult participants were recruited from the University of Minnesota introductory psychology courses by using an on-line sign-up system. Infant participants were recruited from an existing list of families who had volunteered for research after being contacted by letter following the birth of their child. Participants were primarily White and of middle socioeconomic status. An additional 2 adults were tested but were excluded for technical problems (n = 1) or falling asleep during the ERP recording (n = 1). An additional 30 infants were excluded for fussiness (n = 14), movement artifact (n = 12), or computer/procedural error (n = 4). The attrition rate of this study is similar to those of previous ERP studies in infants of similar age (Courchesne et al., 1981; de Haan et al., 2002; 2004; Hirai & Hiraki, 2005).

Stimuli and Task Procedure

ERPs were recorded while participants viewed a color picture of an individual female model posing fearful, happy, and neutral facial expressions. The model differed across participants. The pictures were selected from the MacBrain Face Stimulus Set. Development of the MacBrain Face Stimulus Set was overseen by Nim Tottenham and supported by the John D. and Catherine T. MacArthur Foundation Research Network on Early Experience and Brain Development. The expressions in the MacBrain Face Stimulus Set have been shown to be highly recognizable by children and adults (Tottenham, Borscheid, Ellertsen, Marcus, & Nelson, 2002). Stimulus presentation was controlled by E-Prime software running on a desktop computer, and the stimuli subtended approximately 12° × 16° when viewed from a distance of 60 cm. The stimuli were presented on a monitor surrounded by black panels that blocked the participant’s view of the room behind the screen and to his/her sides.
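The relation between visual angle, viewing distance, and physical stimulus size can be sketched as follows. This is only an illustrative calculation from the reported 12° × 16° and 60 cm; the function name is hypothetical, and the resulting sizes in centimeters are derived here rather than reported in the study.

```python
import math


def size_from_visual_angle(angle_deg: float, distance_cm: float) -> float:
    """Physical extent (cm) that subtends angle_deg when viewed from distance_cm."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


# Reported values: approximately 12 deg x 16 deg at a 60 cm viewing distance.
extent_12deg_cm = size_from_visual_angle(12.0, 60.0)
extent_16deg_cm = size_from_visual_angle(16.0, 60.0)
print(f"{extent_12deg_cm:.1f} cm x {extent_16deg_cm:.1f} cm")  # 12.6 cm x 16.9 cm
```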

For adult participants, the presentation of each facial stimulus was preceded by the presentation of a fixation cross on the center of the screen. After a randomly varying interval ranging from 500 to 700 ms, the fixation was replaced by a facial stimulus presented for 500 ms followed by a 1000-ms post-stimulus recording period. Each facial expression was presented 32 times, making a total of 96 test trials. In addition, 4 trials were run on which a color picture of a monkey face with a neutral expression was presented on the screen. Pictures of monkey faces served as target stimuli to which adult participants were asked to respond by a button press. Although the task was designed to examine ERPs evoked during passive observation of different facial expressions, we felt it important to ensure that the participants attended to the screen throughout the testing session. Subsequent analyses revealed that participants detected an average of 98% of the targets, indicating that all participants were highly attentive to the task.

Infants were tested while sitting in their parent’s lap. The presentation of each stimulus was preceded by a fixation cross on the center of the screen, followed by the presentation of the facial stimulus for 500 ms, and a 1000-ms post-stimulus recording period. The experimenter monitored participants’ eye movements and attentiveness through a hidden video camera mounted on top of the computer screen and initiated stimulus presentation when the infant was attending to the screen. Another experimenter sat beside the infant and, if needed, tapped the screen to attract the infant’s attention to the screen. Trials during which the infant turned his/her attention away from the screen or showed other types of movement were marked on-line as bad by the experimenter controlling the stimulus presentation. The stimulus presentation was continued until the infant had seen the maximum number of trials or became too fussy or inattentive to continue. A maximum of 96 facial expression trials and 4 monkey face trials were presented. All trials were completed in a single session.

After recording ERPs to the facial expressions, a visual paired-comparison (VPC) task was administered in which a happy facial expression and a fearful facial expression posed by the same female model were presented side-by-side (12 cm apart) for two fixed 10-second periods. A fixation cross was presented prior to presentation of the two faces. The participants were also monitored through a video camera prior to presenting the pictures, and the stimuli were presented when their face and eyes were oriented directly toward the middle of the screen. The left-right arrangement of the two images was counterbalanced across participants during the first period and reversed for the second period. Participants were videotaped during the stimulus presentation for off-line analysis of the length of time spent fixating each of the two faces.

Analysis of Electrophysiological Data

Continuous EEG was recorded using a Geodesic Sensor Net (Electrical Geodesics, Inc.) with 128 (adults) or 64 (infants) electrodes, and referenced to vertex (Cz). The electrical signal was amplified with a 0.1- to 100-Hz band-pass, digitized at 250 Hz, and stored on a computer disk. The data were analyzed offline by using NetStation 4.0.1 analysis software (Electrical Geodesics, Inc.).

For adult participants, the continuous EEG signal was segmented to 900-ms periods starting 100 ms prior to stimulus presentation. The segments were then digitally filtered by using a 30-Hz lowpass elliptical filter and baseline-corrected against the mean voltage during the 100-ms prestimulus period. Segments with eye movements and blinks were detected and excluded from further analysis by using NetStation’s eyeblink and eye-movement algorithms (±70 µV thresholds). Artifact detection was restricted to the first 600 ms following stimulus onset to avoid excessive data rejection due to blinking and eye movements occurring after stimulus offset. Note that restricting the eye movement analysis to the first 600 ms was not problematic as all the adult ERP components of interest occurred within 500 ms after stimulus onset. After excluding segments with eye movements and blinks, the remaining segments were visually scanned for bad channels and other artifacts (i.e., off-scale activity, eye movement, body movements, or high-frequency noise). If more than 10% of the channels (i.e., ≥ 13 channels) were marked as bad, the whole segment was excluded from further analysis. If fewer than 13 channels were marked as bad in a segment, the bad channels were replaced using spherical spline interpolation. Finally, average waveforms for each individual participant within each experimental condition were calculated and re-referenced to the average reference. The average number of good trials was 26 (SD = 3.7, range = 17–31) for fearful, 27 (SD = 4.1, range = 19–31) for happy, and 26 (SD = 4.8, range = 14–32) for neutral facial expressions.
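Two of the steps described above, baseline correction against the prestimulus mean and re-referencing to the average reference, can be illustrated with a minimal sketch. It assumes a segment stored as a list of channels, each a list of voltages; the function names and toy values are hypothetical, and this is not the NetStation implementation (which, for instance, may treat the vertex reference channel differently).

```python
from statistics import mean

SAMPLING_RATE_HZ = 250  # digitization rate reported for the recording
PRESTIM_MS = 100        # length of the prestimulus baseline

# Number of samples in the 100-ms baseline at 250 Hz (4 ms per sample).
n_baseline = PRESTIM_MS * SAMPLING_RATE_HZ // 1000  # 25


def baseline_correct(segment, n_baseline_samples):
    """Subtract each channel's mean prestimulus voltage from that channel."""
    corrected = []
    for channel in segment:
        base = mean(channel[:n_baseline_samples])
        corrected.append([v - base for v in channel])
    return corrected


def average_reference(segment):
    """Subtract the across-channel mean from every channel at each time point."""
    n_samples = len(segment[0])
    ref = [mean(ch[t] for ch in segment) for t in range(n_samples)]
    return [[ch[t] - ref[t] for t in range(n_samples)] for ch in segment]


# Toy segment: 2 channels x 4 samples, with the first 2 samples as "baseline".
toy = [[1.0, 3.0, 5.0, 7.0], [2.0, 2.0, 4.0, 0.0]]
toy_bc = baseline_correct(toy, 2)
toy_ref = average_reference(toy_bc)
print(toy_bc)
print(toy_ref)
```

After average referencing, the channels sum to zero at every time point, which is a quick sanity check on any implementation of this step.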

For infants, the analysis of the ERP data followed the same general steps as those described above. First, the continuous EEG signal was segmented to 1100-ms periods starting 100 ms prior to stimulus presentation and extending for 1000 ms after the onset of the face presentation. Digitally filtered (30-Hz lowpass elliptical filter) and baseline-corrected segments were visually inspected for artifacts. Channels with off-scale activity and channels contaminated by eye movement, body movements, or high frequency noise were marked as bad. If more than 15% of the channels (≥ 10 channels) were marked as bad, the whole segment was excluded from further analysis. If fewer than 10 channels were marked as bad in a segment, the bad channels were replaced using spherical spline interpolation. For infants, the criterion for trial exclusion due to bad channels was raised from 10 to 15% because the 10% threshold would have resulted in an excessive loss of data. Note that the 15% criterion is consistent with previous high-density ERP studies with infants (e.g., de Haan et al., 2002; Halit et al., 2003). Participants with fewer than 10 good trials in an experimental condition were excluded from further analysis. For the remaining participants, the average waveforms for each experimental condition were calculated and re-referenced to the average reference. The average number of good trials was 15 (SD = 3.1, range = 11–22) for fearful, 14 (SD = 3.8, range = 10–22) for happy, and 15 (SD = 2.5, range = 10–19) for neutral facial expressions. Thus, in infants, the average waveforms were calculated from fewer trials than in adults. 
Although this difference raises a concern about lower signal-to-noise ratio and likelihood of finding significant differences in infants, the group differences in the power of the measurement are balanced by the fact that infant ERPs are typically much larger than adult ERPs (due to thinner skulls, less dense cell packing in brain tissue etc., DeBoer et al., 2004), and the infant sample was larger than the adult sample in the present study.
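The segment-rejection rule described above for the two age groups can be written as a single comparison. The sketch below is an assumed formalization (the function name is hypothetical); it simply checks that "more than 10% of 128 channels" and "more than 15% of 64 channels" correspond to the reported cutoffs of 13 and 10 bad channels.

```python
def segment_rejected(n_bad_channels: int, n_channels: int, max_bad_fraction: float) -> bool:
    """True if the share of bad channels exceeds the allowed fraction."""
    return n_bad_channels > max_bad_fraction * n_channels


# Adults: 128 channels, 10% criterion (12.8 channels), so 13 or more bad channels reject.
print(segment_rejected(12, 128, 0.10), segment_rejected(13, 128, 0.10))  # False True
# Infants: 64 channels, 15% criterion (9.6 channels), so 10 or more bad channels reject.
print(segment_rejected(9, 64, 0.15), segment_rejected(10, 64, 0.15))     # False True
```

Segments that pass this check would then have their remaining bad channels interpolated, as described in the text.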

Statistical analyses of the ERP data were targeted at examining emotional expression effects on the ERPs at occipital-temporal electrode sites. For adults, the peak amplitude and latency of the N170 were determined by detecting the minimum amplitude of the ERP activity within a time window extending from 120 to 200 ms post-stimulus onset. For infants, the peak amplitudes and latencies to peak were determined within two time windows: 192–336 ms (N290) and 288–484 ms (P400). The data obtained from individual electrodes at posterior regions were averaged to create groups of electrodes that covered lateral, semi-medial, and medial occipital-temporal scalp regions over the left and right hemispheres. The individual electrodes that were included in these groups were: 57, 58, 59, 64 (left lateral), 65, 66, 70 (left semi-medial), 53, 61, 67, 72 (left medial), 92, 96, 97, 101 (right lateral), 85, 90, 91 (right semi-medial), and 77, 78, 79, 87 (right medial) in adults; and 26, 27, 31 (left lateral), 32, 36 (left semi-medial), 29, 33, 37 (left medial), 48, 49, 51 (right lateral), 44, 45 (right semi-medial), and 40, 41, 42 (right medial) in infants. These electrodes were selected in order to cover approximately similar occipital scalp regions for adults and infants. The lateral electrodes were selected because they are located around T5 and T6 in the international 10–20 system, which are the scalp locations where the face-sensitive N170 and the expected emotional expression effects on the N170 have been found in adults (Batty & Taylor, 2003; Bentin et al., 1996). The semi-medial and medial electrodes were added to cover the areas where the face-sensitive ERP components in infants (N290/P400) have been found (de Haan et al., 2002; Halit et al., 2003). It is important to note that the medial electrode groups were not only more medial but also more superior than the semi-medial and lateral electrode groups.
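The window-based peak detection described above can be sketched as follows. This is a minimal illustration that assumes a waveform sampled at 250 Hz with a 100-ms prestimulus period, as reported; the function names and the toy waveform are hypothetical and do not reproduce the analysis software used in the study.

```python
SAMPLING_RATE_HZ = 250  # 4 ms per sample
PRESTIM_MS = 100        # segments start 100 ms before stimulus onset


def ms_to_index(t_ms: float) -> int:
    """Convert a post-stimulus time in ms to a sample index within the segment."""
    return round((t_ms + PRESTIM_MS) * SAMPLING_RATE_HZ / 1000)


def find_peak(waveform, start_ms, end_ms, polarity):
    """Return (amplitude, latency_ms) of the extreme value inside the window.

    polarity: "negative" picks the minimum (e.g., N170, N290),
              "positive" picks the maximum (e.g., P400).
    """
    lo, hi = ms_to_index(start_ms), ms_to_index(end_ms)
    window = waveform[lo:hi + 1]
    pick = min if polarity == "negative" else max
    idx = pick(range(len(window)), key=window.__getitem__)
    latency_ms = (lo + idx) * 1000 / SAMPLING_RATE_HZ - PRESTIM_MS
    return window[idx], latency_ms


# Toy adult-style segment: 900 ms at 250 Hz = 225 samples, flat except for
# a trough at 164 ms and a deeper deflection at 300 ms (outside the window).
erp = [0.0] * 225
erp[ms_to_index(164)] = -4.0
erp[ms_to_index(300)] = -6.0

print(find_peak(erp, 120, 200, "negative"))  # (-4.0, 164.0)
```

Restricting the search to the component's window is what keeps the later, larger deflection from being mistaken for the N170 in this toy example. In the study, the input to such a step would be the waveform averaged over the electrodes of one group.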

The amplitude and latency scores of the N170 (adults) and N290/P400 (infants) were analyzed by a 3 × 2 × 3 repeated measures analysis of variance (ANOVA) with emotional expression (happy, neutral, and fearful), hemisphere (left, right), and electrode group (lateral, semi-medial, medial) as within-subject factors. When necessary, the degrees of freedom were adjusted by using Greenhouse-Geisser epsilon. When the ANOVA yielded significant effects, pair-wise comparisons including ≤ 3 means were carried out by using t-tests (Fisher’s Least Significant Difference procedure, Howell, 1987). An alpha level of .05 was used for all statistical tests.
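The follow-up comparisons described above amount to paired t-tests that are run only after a significant omnibus effect. The sketch below shows the paired t statistic for two within-subject conditions; the data are made up purely for illustration and are not values from the study, the function name is hypothetical, and the textbook LSD procedure can also be computed with the ANOVA error term, which this simplified version does not do.

```python
import math
from statistics import mean, stdev


def paired_t(x, y):
    """Paired-samples t statistic and degrees of freedom for matched scores."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Hypothetical amplitudes for four participants in two conditions.
condition_a = [1.0, 2.0, 3.0, 4.0]
condition_b = [0.0, 0.0, 1.0, 1.0]

t_value, df = paired_t(condition_a, condition_b)
print(f"t({df}) = {t_value:.2f}")  # t(3) = 4.90
```

With 10 adults or 15 infants, the same computation yields the 9 and 14 degrees of freedom that accompany the paired comparisons in the Results.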

Analysis of the Behavioral Data

The videotaped records of participants’ eye movements during the administration of the two VPC trials were analyzed by an observer who was blind to the left/right positioning of the happy and fearful stimulus expressions. The observer recorded the time each participant spent looking at the stimulus on the left and the stimulus on the right for each trial. Three infant participants were excluded due to a side bias (i.e., on each trial, they looked at one of the stimuli for less than 5% of the total looking time). For the remaining participants, the total time spent fixating the fearful and happy facial expressions as well as the duration of participants’ first visual fixations for fearful and happy faces were calculated and averaged across the two VPC trials. Although previous studies using VPC tasks to examine attention to happy and fearful faces have used total looking times as a measure of visual preference, it is possible that the duration of the first fixation provides a more reliable measure of visual preference than the total looking time (Kujawski & Bower, 1993). To check the reliability of the looking time judgments, another independent observer recorded the looking times of 30% of the participants. Pearson correlations between the two coders’ measurements of the participants’ total looking times during individual VPC trials ranged from .93 to 1.
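The side-bias exclusion and the averaging of looking times across the two VPC trials can be sketched as follows. The data layout, function names, and example values are hypothetical and chosen only to illustrate the rule as described (a participant is excluded when, on each trial, one side receives less than 5% of the total looking time).

```python
def trial_has_side_bias(left_s: float, right_s: float, min_fraction: float = 0.05) -> bool:
    """True if one side received less than min_fraction of the trial's looking time."""
    total = left_s + right_s
    if total <= 0:
        raise ValueError("trial contains no looking time")
    return min(left_s, right_s) / total < min_fraction


def excluded_for_side_bias(trials) -> bool:
    """Exclude a participant only if every trial shows the side bias."""
    return all(trial_has_side_bias(t["left_s"], t["right_s"]) for t in trials)


def mean_looking_times(trials):
    """Average looking time (s) to the fearful and happy face across trials."""
    fearful, happy = [], []
    for t in trials:
        on_left = t["fearful_side"] == "left"
        fearful.append(t["left_s"] if on_left else t["right_s"])
        happy.append(t["right_s"] if on_left else t["left_s"])
    return sum(fearful) / len(fearful), sum(happy) / len(happy)


# Hypothetical participant; the fearful face switches sides between trials.
participant = [
    {"left_s": 4.0, "right_s": 3.0, "fearful_side": "left"},
    {"left_s": 2.0, "right_s": 5.0, "fearful_side": "right"},
]
print(excluded_for_side_bias(participant))  # False
print(mean_looking_times(participant))      # (4.5, 2.5)
```

Because the left-right arrangement is reversed between the two periods, tracking which side held the fearful face on each trial is what separates an expression preference from a position preference.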

Results

Electrophysiological Data

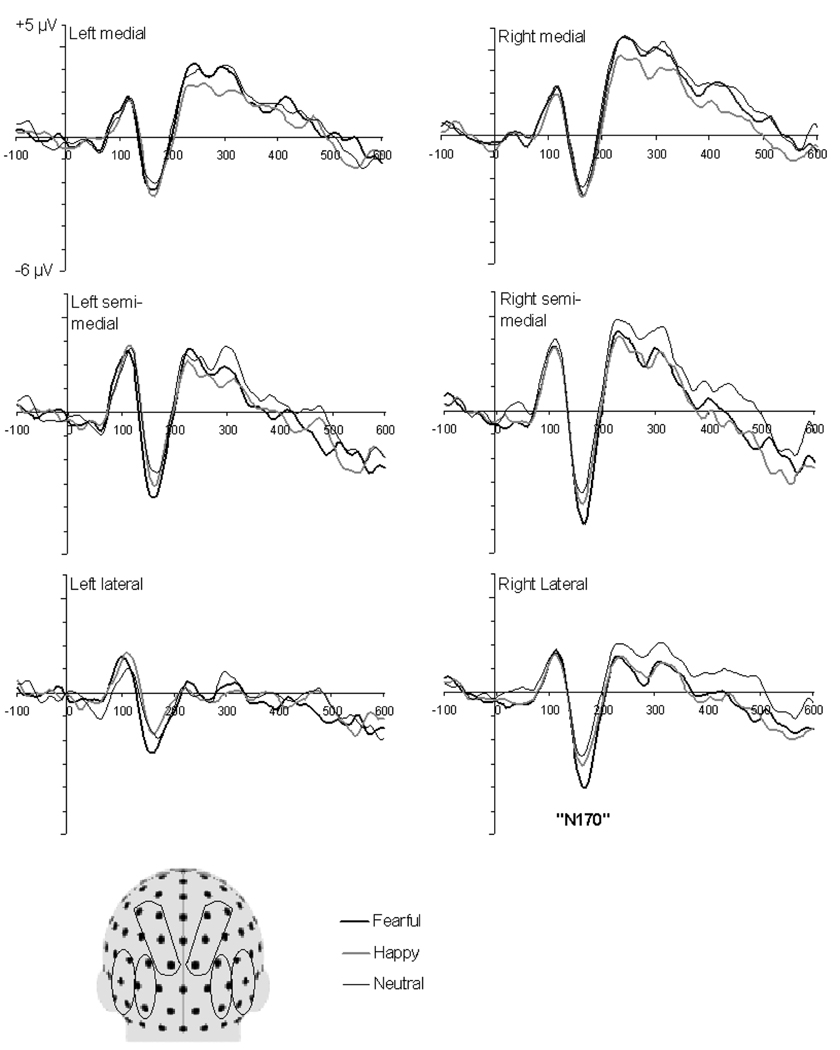

In adults, face stimuli elicited a positive deflection (P1) at 100 ms and a prominent negative peak (N170) at a mean latency of 162 ± 12 ms (Figure 1). There was no significant main effect of emotional expression on the amplitude of the N170. However, there was a trend for an interaction between emotional expression and electrode group, F(4, 36) = 2.47, p = .06. Because of this trend and the predicted emotional expression effects at lateral electrodes, the N170 amplitude scores were further analyzed by examining the effect of emotional expression within each electrode group separately. No significant effects were observed at medial and semi-medial electrode groups (ps > .1) but the expected main effect of emotional expression at lateral electrodes was significant, F(2, 18) = 5.32, p = .02. At lateral electrodes, the N170 amplitude was larger for fearful (M = −4.1 µV, SD = 2.1) relative to neutral (M = −3.2 µV, SD = 2.0) and happy (M = −3.4 µV, SD = 2.1) faces, whereas happy and neutral faces did not differ from one another. Paired comparisons were: fearful vs. neutral, t(9) = 3.63, p < .01, fearful vs. happy, t(9) = 2.21, p = .05, happy vs. neutral, t(9) = 0.48, ns. There were no other main effects or interactions found for the amplitude of the N170, ps > .1. Inspection of Figure 1 shows that the N170 was larger over the right than left recording sites. However, the hemisphere effect was not significant, p > .1. The only significant effect found in the analyses of the peak latency of the N170 was a two-way interaction between emotional expression and hemisphere, F(2, 18) = 5.25, p = .02. This reflected the fact that for happy and neutral faces, the N170 peaked earlier at right relative to left hemisphere (these differences were, however, not statistically significant, ps > .05) whereas a similar hemispheric difference was not observed for fearful expressions.

Figure 1.

Grand average ERP waveforms for fearful, happy, and neutral facial expressions at selected posterior channels in adults. The waveforms represent average activity over groups of electrodes at lateral, semi-medial, and medial recording sites over the left and right hemispheres. The schematic head model indicates the locations of the electrode groups (Head model adapted from Halit et al., 2004).

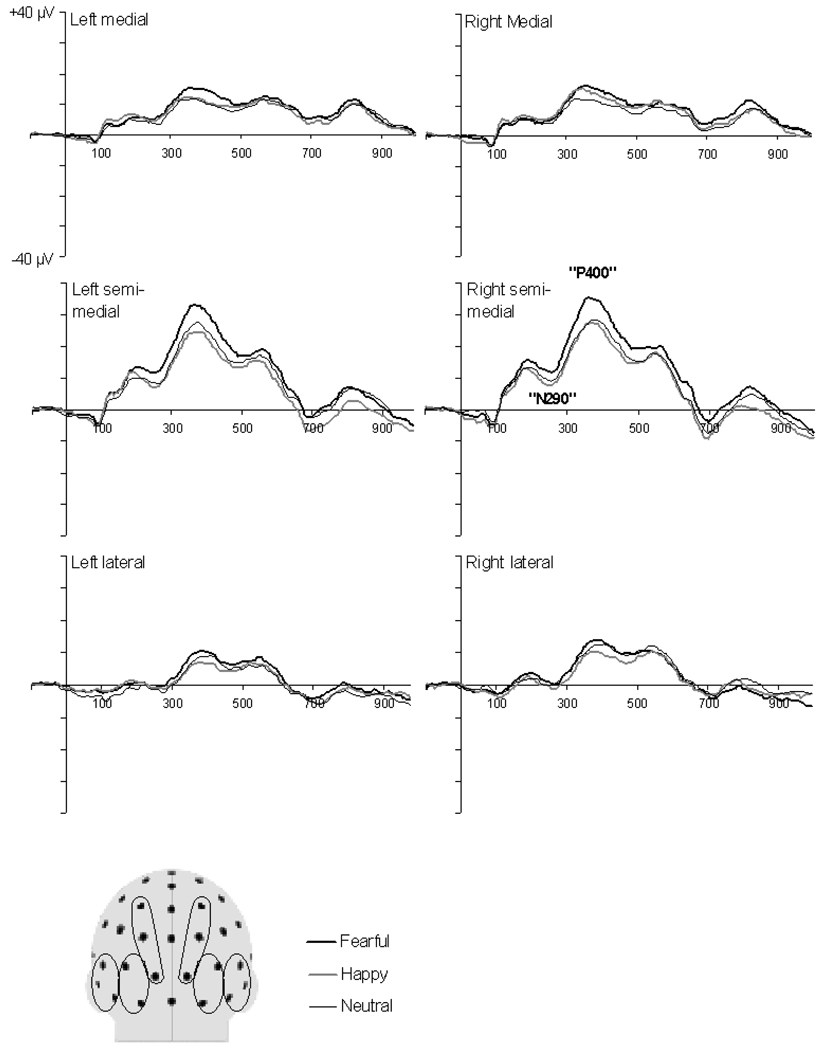

The grand average ERPs to facial expressions at occipitotemporal electrodes in infants are shown in Figure 2. In infants, a positive peak was recorded starting at 120 ms, followed by negative-going (N290) and prominent positive (P400) deflections at mean latencies of 245 ± 14 ms and 388 ± 25 ms, respectively. It is noteworthy that the morphology of the grand average waveforms as well as the timing, amplitude, and scalp topography of the ERP components in the present study are similar to those reported in previous studies with infants of similar age (see Figure 2 in de Haan & Nelson, 1999 and Figure 3 in de Haan et al., 2002).

Figure 2.

Grand average ERP waveforms for fearful, happy, and neutral facial expressions at selected posterior channels in infants. The waveforms represent average activity over groups of electrodes at lateral, semi-medial, and medial recording sites over the left and right hemispheres. The schematic head model indicates the locations of the electrode groups (Head model adapted from Halit et al., 2004).

There were no main or interaction effects involving facial expressions on the amplitude or peak latency of the N290, ps > .1. However, there was a clear effect of facial expression on the P400 amplitude, although this effect was restricted to the semi-medial recording sites (a significant facial expression × electrode group interaction, F(4, 56) = 2.79, p = .04). Separate two-way ANOVAs with emotional expression and hemisphere as within-subject factors showed that there was a significant effect of facial expression at semi-medial recording sites, F(2, 28) = 3.76, p = .04, but not at lateral and medial electrodes, ps > .1. At semi-medial electrodes, the P400 was of larger amplitude for fearful (M = 32.7 µV, SD = 12.3) relative to neutral (M = 27.9 µV, SD = 15.2) and happy (M = 27.3 µV, SD = 14.0) faces, whereas the difference between happy and neutral faces was not significant. Paired comparisons were: fearful vs. neutral, t(14) = 2.20, p = .05, fearful vs. happy, t(14) = 2.97, p = .01, happy vs. neutral, t(14) = 0.24, ns. There was also a significant main effect of electrode group on the P400 amplitude, F(2, 28) = 28.13, p < .01, arising from the fact that this component was larger at inferior occipital regions (the region covered by the semi-medial electrode groups) than at more superior occipital regions and lateral occipital-temporal regions (i.e., the regions covered by the medial and lateral electrode groups, respectively). Paired comparisons showed that the P400 amplitude was significantly larger at semi-medial (M = 29.3 µV, SD = 13.0) compared to medial (M = 16.3 µV, SD = 8.8), t(14) = 5.78, p < .01, and lateral (M = 13.5 µV, SD = 5.8), t(14) = 6.59, p < .01, electrodes. There were no further main or interaction effects on the amplitude of the P400. There were also no effects on the latency of the P400 (all ps > .1).

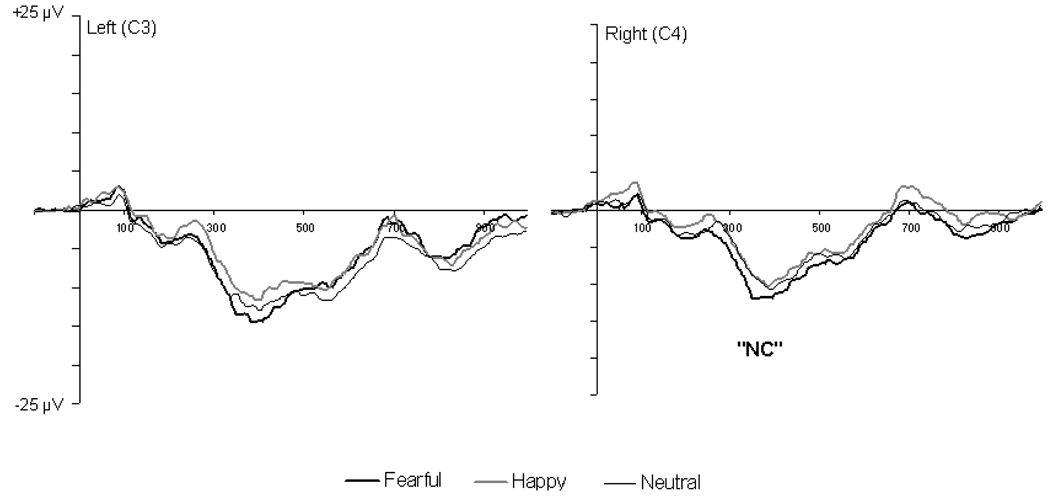

Although the main goal of the present study was to examine emotional expression effects on the face-sensitive components, we also analyzed whether the emotional expression effects found in previous ERP studies with developmental populations were replicated in the present study. Previous studies with 7-month-old infants have shown that the amplitude of the NC at central sites differentiates between fearful and happy faces (de Haan et al., 2004; Nelson & de Haan, 1996). To examine whether this effect was replicated in the present study, the peak amplitude of the NC wave was determined within a 300–600 ms time window for waveforms recorded at electrodes around C3 (electrodes 16, 17, 21) and C4 (electrodes 53, 54, 57) over the left and right central regions (Figure 3). Consistent with previous findings, a 2 × 2 repeated measures ANOVA with facial expression (fearful and happy) and hemisphere as within-subject factors showed that the NC was larger for fearful (M = −14.8 µV, SD = 3.9) than for happy (M = −13.1 µV, SD = 4.7) faces, F(1, 14) = 4.42, p = .05. We also analyzed whether the early positive component at posterior recording sites (P1) differentiated between facial expressions because previous studies with older, 1- to 7-year-old children have shown that this component (Batty & Taylor, 2006; Dawson et al., 2004) or the polarity reversal of it at central recording sites (Parker & Nelson, 2005) is sensitive to facial expressions. The peak amplitude and latency of the P1 were defined by detecting the maximum positive peak within an 80–146 ms (adults) or 116–240 ms (infants) time window for waveforms at medial and semi-medial posterior electrodes. No effects involving facial expressions were found on the amplitude or latency of the P1 in either adults or infants (all ps > .05).
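The window-based peak measures described above (most negative point within 300–600 ms for the NC, maximum positive point within 116–240 ms for the infant P1) can be illustrated with a short sketch. Everything in it other than the two time windows is an assumption made for illustration: the helper name `peak_in_window`, the 250 Hz sampling rate, the epoch starting 100 ms before stimulus onset, and the synthetic waveform are hypothetical and are not taken from the study.

```python
import math


def peak_in_window(samples_uv, srate_hz, epoch_start_ms, win_start_ms, win_end_ms, polarity):
    """Return (amplitude_uv, latency_ms) of the extreme sample inside a time window.

    polarity = +1 finds the most positive sample (e.g., P1 or P400);
    polarity = -1 finds the most negative sample (e.g., NC or N170).
    """
    step_ms = 1000.0 / srate_hz
    best = None
    for i, value in enumerate(samples_uv):
        t_ms = epoch_start_ms + i * step_ms
        if win_start_ms <= t_ms <= win_end_ms:
            if best is None or polarity * value > polarity * best[0]:
                best = (value, t_ms)
    if best is None:
        raise ValueError("time window lies outside the epoch")
    return best


# Hypothetical recording parameters and a synthetic averaged waveform:
# a positive bump near 160 ms plus a broad negative deflection near 440 ms.
SRATE_HZ = 250
EPOCH_START_MS = -100
times = [EPOCH_START_MS + i * 4.0 for i in range(276)]  # -100 ms to 1000 ms
waveform = [
    5.0 * math.exp(-((t - 160.0) / 30.0) ** 2) - 14.0 * math.exp(-((t - 440.0) / 80.0) ** 2)
    for t in times
]

nc_amp, nc_lat = peak_in_window(waveform, SRATE_HZ, EPOCH_START_MS, 300, 600, polarity=-1)
p1_amp, p1_lat = peak_in_window(waveform, SRATE_HZ, EPOCH_START_MS, 116, 240, polarity=+1)
print(f"NC: {nc_amp:.1f} uV at {nc_lat:.0f} ms; P1: {p1_amp:.1f} uV at {p1_lat:.0f} ms")
```

In practice the input would be the per-condition average over the selected electrode group, and the same function would be applied once per participant and condition before the values enter the ANOVA.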

Figure 3.

Grand average ERP waveforms for fearful, happy, and neutral facial expressions at electrodes around C3 (electrodes 16, 17, 21) and C4 (electrodes 53, 54, 57) over the left and right central regions in 7-month-old infants. The waveforms represent average activity over the selected electrodes.

Behavioral Data

Behavioral data for adults showed that the average looking times for fearful (M = 4.6 s, SD = 0.8) and happy (M = 5.1 s, SD = 0.8) faces across the two VPC trials did not differ significantly, p > .1. Also, no significant difference in the duration of first fixations for fearful (M = 0.9 s, SD = 0.6) and happy (M = 1.1 s, SD = 1.0) faces was found in adults, p > .1. In infants, the mean total looking times across the two VPC trials were 3.7 s (SD = 0.9) for fearful faces, and 3.2 s (SD = 1.0) for happy faces. The total looking times were, hence, slightly longer for fearful compared to happy faces but this difference was not significant, p > .1. However, the duration of the first fixation was significantly longer for fearful (M = 1.9 s, SD = 0.8) compared to happy (M = 1.3 s, SD = 0.6) faces, t(11) = 2.37, p = .04. No significant correlations were found between the emotional expression effect on ERPs (i.e., the P400 or NC amplitude difference between fearful and happy faces) and the behavioral looking time bias towards fearful faces (i.e., the difference in the duration of the first fixation for fearful and happy faces).
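The first-fixation comparison in infants is a paired (within-subject) t-test, which operates on each infant's fearful-minus-happy difference score. The sketch below shows that computation under stated assumptions: the function name `paired_t` is hypothetical and the twelve pairs of durations are made-up illustrative values, not the study's data. Only the sample size matches the text, where df = 11 implies 12 infants.

```python
import math


def paired_t(cond_a, cond_b):
    """Paired t statistic and degrees of freedom for two matched samples."""
    if len(cond_a) != len(cond_b):
        raise ValueError("paired samples must have equal length")
    diffs = [a - b for a, b in zip(cond_a, cond_b)]
    n = len(diffs)
    mean_d = sum(diffs) / n
    var_d = sum((d - mean_d) ** 2 for d in diffs) / (n - 1)  # sample variance
    t = mean_d / math.sqrt(var_d / n)  # mean difference over its standard error
    return t, n - 1


# Hypothetical first-fixation durations (seconds) for 12 infants
fearful = [1.2, 2.5, 1.9, 3.1, 0.9, 2.2, 1.6, 2.8, 1.1, 2.0, 1.4, 2.1]
happy = [1.0, 1.4, 1.5, 2.2, 1.1, 1.3, 0.8, 1.9, 0.9, 1.6, 0.7, 1.2]

t_value, df = paired_t(fearful, happy)
print(f"t({df}) = {t_value:.2f}")
```

Because the test uses difference scores, its power depends on the variability of the within-infant differences rather than on the between-infant spread reflected in the reported SDs.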

Discussion

The present study examined the electrophysiological and behavioral correlates of emotional face processing in young adults and 7-month-old infants. In adults, the ERP differentiation between facial expressions was manifested as augmented peak amplitude of the N170 for fearful relative to neutral and happy faces at lateral occipitotemporal scalp regions. This finding is consistent with the results of previous ERP studies in adults (Batty & Taylor, 2003; Rossignol et al., 2005; see also Ashley, Vuilleumier, & Swick, 2004). In infants, a significantly larger positivity was recorded in response to fearful relative to neutral and happy faces approximately 380 ms following stimulus onset at inferior semi-medial regions of the occipitotemporal scalp. Consistent with previous infant studies, it was also found that the frontocentral NC component was of larger amplitude for fearful compared to happy faces, and that infants showed an attentional bias toward fearful faces in the behavioral VPC task (de Haan et al., 2004; Nelson & de Haan, 1996). Together, these findings support the hypothesis that the cortical information processing systems respond differentially to different facial expressions, and they further suggest that the mechanisms supporting this discrimination are functional in the first year of life.

The present results extend previous ERP findings in infants by showing differential ERPs to fearful and happy/neutral faces not only at central recording sites but also at posterior, occipitotemporal recording sites. The present results showed that the ERPs to facial expressions in infants were, in many ways, similar to those obtained from adults under equivalent testing conditions. First, in both groups, the ERPs recorded at occipitotemporal scalp regions were of larger amplitude in response to fearful compared to happy and neutral faces. Second, in both groups, the differences in ERPs to fearful and happy/neutral faces were observed in those ERP components that are known to reflect processes involved in face perception. In adults, the N170 was of larger amplitude to fearful compared to happy and neutral faces at lateral posterior recording sites. In infants, the P400 component, recorded at semi-medial occipital sites, was of larger amplitude to fearful than to happy and neutral faces. Recent ERP studies have suggested that the adult N170 and the infant N290/P400 are sensitive to similar stimulus manipulations and, therefore, they may reflect functionally equivalent processes (for review, see de Haan et al., 2003). Specifically, like the adult N170, the infant P400 has been shown to differentiate between faces and objects and between upright and inverted faces (de Haan et al., 2002; de Haan & Nelson, 1999). It has been suggested that, during the course of development, the processes reflected in the P400 become integrated in time with the processes reflected in the N290 (i.e., the other face-sensitive component in infants) and, therefore, in older children and adults, these processes give rise to a single ERP component (i.e., the N170, see de Haan et al., 2003, for discussion).
The N290 and P400 observed in the present study were largely similar in terms of the scalp topography, peak latency and amplitude to those observed previously in studies with infants of similar age (de Haan et al., 2002, 2003; de Haan & Nelson, 1999).

Therefore, it seems that although the latency and the scalp topography of the emotional expression effects on ERPs differed in adults and infants, these effects were observed in components that have similar response properties and that, hence, reflect functionally equivalent processes (de Haan et al., 2003). From this perspective, our results suggest that similar cortical face processing stages are modulated by affective information in young adults and 7-month-old infants. It is important to note, however, that this conclusion may be qualified as further knowledge is gained about the specific processes underlying the infant N290 and P400 and their equivalence to the adult N170.

Some recent studies with 1- to 7-year-old children have shown that the early positive component (P1), which precedes the face-sensitive components in adults and infants, differs in amplitude and/or latency between fearful and neutral/happy facial expressions (Batty & Taylor, 2006; Dawson et al., 2004). These findings raise the possibility that some discrimination of facial expressions may occur at very early visual processing stages prior to face-specific responses. In the present study, the P1 amplitude or latency did not vary between facial expressions in infants (or adults). Whether the null finding in infants reflects a true developmental difference between infants and children or whether it is accounted for by paradigm differences remains to be resolved.

An important question concerns the brain circuits that mediate the differential cortical responses to fearful faces. Although this question was not directly addressed in the present study and caution must be exercised when interpreting the neural generators of the scalp-recorded ERPs, the existing literature points to the critical role of the amygdala in mediating rapid discrimination of fearful faces. Of different emotional facial expressions, fearful faces have been most consistently shown to activate the amygdala in brain imaging studies in adults (Whalen, 1998). The amygdala may respond to fearful faces in the very early stages of information processing and, when activated, may modulate and enhance cortical processing of emotionally significant stimuli (Morris et al., 1998; Vuilleumier, Armony, Driver, & Dolan, 2003; Vuilleumier et al., 2004). The amygdala may influence cortical face processing via direct feedback projections to ventral visual areas (Amaral et al., 2003). The amygdala also projects to cholinergic neurons in the nucleus basalis that, in turn, release acetylcholine onto cortical sensory systems and thereby increase their excitability and processing capacity (Bentley, Vuilleumier, Thiel, Driver, & Dolan, 2003; Whalen, 1998). In light of these results, one possibility is that the enhanced occipitotemporal ERPs for fearful faces reflect modulatory effects arising from rapid engagement of the amygdala (Batty & Taylor, 2003). Although scalp-recorded ERPs cannot directly reflect amygdala activity (ERPs are primarily generated by pyramidal cells in the cerebral cortex and hippocampus), it is possible that the face-sensitive ERP signals originate from a circuit that involves the amygdala and is under the modulatory control of amygdaloid projections. 
Our findings showing that differential ERPs to fearful faces were observed in a sample of 7-month-old infants raise the possibility that the subcortical emotion-related brain structures and the functional connectivity between these structures and cortical information processing systems come on-line in the early stages of development. This possibility is corroborated by direct anatomical evidence showing that, in monkey infants, afferent connections from higher order sensory cortices to the amygdala and efferent connections from the amygdala back to sensory and other cortical regions are established soon after birth (Amaral & Bennett, 2000; Nelson et al., 2002).

Besides the underlying neural mechanisms, it is important to consider the specific stimulus attributes to which these mechanisms respond. Differences in brain responses to fearful and other facial expressions are often interpreted as reflecting an enhanced sensitivity of the occipitotemporal face processing system to threat-related cues. Given that fearful faces may signal the presence of a threat in the environment, it may be adaptive to allocate processing resources to these cues in order to learn more about the source of the threat. Although this reference to the emotional signal value of fearful faces is often made, there are also other possible explanations for the enhanced responses to fearful faces that must be considered. Whalen (1998), for example, noted that it is unlikely that subtle emotional cues such as fearful faces elicit fear in the perceiver. Whalen further argued that rather than signals of threat, fearful faces may be perceived as ambiguous stimuli that require additional information in order to be understood. Specifically, another person’s fearful face and wide-open eyes may signal to the perceiver that the person is trying to gather more information about the environment. Seeing this expression may initiate a similar information-gathering process in the perceiver (i.e., increased vigilance). Whalen and colleagues (Whalen et al., 2004) have also suggested that the salient low-level features in the eye region in fearful faces (i.e., wide-open eyes and increased size of the white sclera around the dark pupil) are particularly important in rapid differential responding to fearful faces. The importance of the eyes in discriminating facial expressions is also suggested by the evidence showing that the amygdala (Whalen, 1998) and the N170 (Schyns et al., 2003) are particularly responsive to the eyes within a face, and that the processing of information in the eyes may mature relatively early in development (Farroni et al., 2004; Taylor et al., 2001).

A further alternative possibility is that the differential response to fearful faces does not reflect the emotional or social signal value of fearful faces but rather the fact that expressions of fear are relatively novel in normal social environments. Due to their novelty, more processing resources might be required to perceive and recognize fearful faces. Clearly, further work is required to test these alternative possibilities and to tease apart those stimulus features that are critical for eliciting different ERP activity at posterior scalp regions.

Our behavioral visual preference test showed no evidence for an attentional bias toward or away from fearful faces in adults, but in infants, the duration of the first look at faces was longer for fearful compared to happy faces. This result is consistent with the previous results showing longer overall looking times for fearful versus happy faces (de Haan et al., 2004; Kotsoni et al., 2001; Nelson & Dolgin, 1985). It is noteworthy that, in the previous studies, the difference in the looking times for fearful and happy faces was observed in the overall looking times whereas, in the present study, the expected difference was obtained only in the duration of the first fixations. Although there is no clear explanation for this discrepancy, it may relate to some subtle differences in the testing conditions between the studies. The study by de Haan et al. (2004) is most similar to the present study in that the behavioral task was administered after the ERP recordings; however, they used sequential instead of paired presentation of faces (i.e., faces were presented one at a time), and the stimulus presentation times were considerably longer than those used in the present study (i.e., 20 s). Due to these factors, the task used by de Haan et al. was perhaps more sensitive to the looking time biases and, hence, the bias was manifested in the overall looking times. It is important to emphasize, however, that the results obtained in the present study are entirely consistent with previous findings in showing a preferential allocation of attention to fearful over happy faces in infants. The fact that this bias was not observed in adults raises the possibility that the bias ceases to exist at some point in postnatal development, possibly due to increased voluntary control over visual attention.

No significant correlations were found between the ERPs to fearful faces and the looking time bias towards fearful faces. Although this result suggests that ERPs and behavioral measures were sensitive to different processes associated with facial expression processing, further research is needed before this conclusion can be drawn. Only a subset of participants provided good behavioral and ERP data and, therefore, the present sample was perhaps not large enough for correlation analyses. It is also possible that the correlations were reduced due to restricted variance associated with the first look durations.

In summary, the present findings are consistent with previous results showing enhanced ERPs to fearful relative to neutral/happy faces at lateral occipital-temporal scalp regions. Extending these results, the present findings showed that the ERP differentiation between fearful and neutral/happy faces is also observed in young infants. These findings fit with the idea that cortical face processing systems are sensitive to facial expressions and they also suggest that the neural architecture that underlies this discrimination is functional by the age of 7 months. The present findings raise the possibility that affective factors play an important role in guiding infants’ attention towards faces and in shaping the development of the plastic cortical information processing systems. Further research is required to determine the neural source of the scalp-recorded potentials and the specific stimulus attributes that are important for the differential processing of fearful and happy/neutral faces.

Acknowledgments

This study was supported in part by grants from the Academy of Finland and Finnish Cultural Foundation to the first author and NIH (NS32976) to the fourth author. The data for this study were collected during the time the first author was a postdoctoral fellow at the Institute of Child Development, University of Minnesota, and the fourth author was on the faculty at the University of Minnesota. We thank Ethan Schwer for his help in data collection.

Contributor Information

Jukka M. Leppänen, Human Information Processing Laboratory, Department of Psychology, University of Tampere, Finland

Margaret C. Moulson, Institute of Child Development, University of Minnesota and Children’s Hospital Boston

Vanessa K. Vogel-Farley, Children’s Hospital Boston

Charles A. Nelson, Children’s Hospital Boston and Harvard Medical School

References

- Allison T, Puce A, McCarthy G. Social perception from visual cues: role of the STS region. Trends in Cognitive Sciences. 2000;4:267–278. doi: 10.1016/s1364-6613(00)01501-1.

- Amaral DG, Bennett J. Development of amygdalo-cortical connection in the macaque monkey. Society for Neuroscience Abstracts. 2000;26:17–26.

- Amaral DG, Behniea H, Kelly JL. Topographic organization of projections from the amygdala to the visual cortex in the macaque monkey. Neuroscience. 2003;118:1099–1120. doi: 10.1016/s0306-4522(02)01001-1.

- Angrilli A, Mauri A, Palomba D, Flor H, Birbaumer N, Sartori G, et al. Startle reflex and emotion modulation impairment after a right amygdala lesion. Brain. 1996;119:1991–2000. doi: 10.1093/brain/119.6.1991.

- Ashley V, Vuilleumier P, Swick D. Time course and specificity of event-related potentials to emotional expressions. Neuroreport. 2004;19:211–216. doi: 10.1097/00001756-200401190-00041.

- Balaban MT. Affective influences on startle in five-month-old infants: reactions to facial expressions of emotions. Child Development. 1995;66:28–36. doi: 10.1111/j.1467-8624.1995.tb00853.x.

- Batty M, Taylor MJ. Early processing of the six basic facial emotional expressions. Cognitive Brain Research. 2003;17:613–620. doi: 10.1016/s0926-6410(03)00174-5.

- Batty M, Taylor MJ. The development of emotional face processing during childhood. Developmental Science. 2006;9:207–220. doi: 10.1111/j.1467-7687.2006.00480.x.

- Bentin S, Allison T, Puce A, Perez E, McCarthy G. Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience. 1996;8:551–565. doi: 10.1162/jocn.1996.8.6.551.

- Bentley P, Vuilleumier P, Thiel CM, Driver J, Dolan RJ. Cholinergic enhancement modulates neural correlates of selective attention and emotional processing. Neuroimage. 2003;20:58–70. doi: 10.1016/s1053-8119(03)00302-1.

- Courchesne E, Ganz L, Norcia AM. Event-related potentials to human faces in infants. Child Development. 1981;52:804–811.

- Dawson G, Webb SJ, Carver L, Panagiotides H, McPartland J. Young children with autism show atypical brain responses to fearful versus neutral facial expressions of emotion. Developmental Science. 2004;7:340–359. doi: 10.1111/j.1467-7687.2004.00352.x.

- DeBoer T, Scott L, Nelson CA. Event-Related Potentials in developmental populations. In: Handy T, editor. Event-Related Potentials: A Methods Handbook. Massachusetts: MIT Press; 2004. pp. 263–297.

- de Haan M, Belsky J, Reid V, Volein A, Johnson MH. Maternal personality and infants' neural and visual responsivity to facial expressions of emotion. Journal of Child Psychology and Psychiatry. 2004;45:1209–1218. doi: 10.1111/j.1469-7610.2004.00320.x.

- de Haan M, Johnson MH, Halit H. Development of face-sensitive event-related potentials during infancy: a review. International Journal of Psychophysiology. 2003;51:45–58. doi: 10.1016/s0167-8760(03)00152-1.

- de Haan M, Nelson CA. Recognition of the mother's face by six-month-old infants: a neurobehavioral study. Child Development. 1997;68:187–210.

- de Haan M, Nelson CA. Brain activity differentiates face and object processing in 6-month-old infants. Developmental Psychology. 1999;35:1113–1121. doi: 10.1037//0012-1649.35.4.1113.

- de Haan M, Pascalis O, Johnson MH. Specialization of neural mechanisms underlying face recognition in human infants. Journal of Cognitive Neuroscience. 2002;14:199–209. doi: 10.1162/089892902317236849.

- Eimer M, Holmes A, McGlone FP. The role of spatial attention in the processing of facial expressions: an ERP study of rapid brain responses to six basic emotions. Cognitive, Affective, & Behavioral Neuroscience. 2003;3:97–110. doi: 10.3758/cabn.3.2.97.

- Farroni T, Johnson MH, Csibra G. Mechanisms of eye gaze perception during infancy. Journal of Cognitive Neuroscience. 2004;16:1320–1326. doi: 10.1162/0898929042304787.

- Funayama ES, Grillon C, Davis M, Phelps EA. A double dissociation in the affective modulation of startle in humans: Effects of unilateral temporal lobectomy. Journal of Cognitive Neuroscience. 2001;13:721–729. doi: 10.1162/08989290152541395.

- Halit H, Csibra G, Volein Á, Johnson MH. Face-sensitive cortical processing in early infancy. Journal of Child Psychology and Psychiatry. 2004;45:1228–1234. doi: 10.1111/j.1469-7610.2004.00321.x.

- Halit H, de Haan M, Johnson MH. Cortical specialisation for face processing: face-sensitive event-related potential components in 3- and 12-month-old infants. Neuroimage. 2003;19:1180–1193. doi: 10.1016/s1053-8119(03)00076-4.

- Haxby JV, Hoffman EA, Gobbini MI. Human neural systems for face recognition and social communication. Biological Psychiatry. 2002;51:59–67. doi: 10.1016/s0006-3223(01)01330-0.

- Hirai M, Hiraki K. An event-related potentials study of biological motion perception in human infants. Cognitive Brain Research. 2005;22:301–304. doi: 10.1016/j.cogbrainres.2004.08.008.

- Howell DC. Statistical methods for psychology. Boston, MA: PWS-Kent; 1987.

- Johnson MH. Subcortical face processing. Nature Reviews: Neuroscience. 2005;6:766–774. doi: 10.1038/nrn1766.

- Kotsoni E, de Haan M, Johnson MH. Categorical perception of facial expressions by 7-month-old infants. Perception. 2001;30:1115–1125. doi: 10.1068/p3155.

- Krolak-Salmon P, Fischer C, Vighetto A, Mauguiére F. Processing of facial emotional expression: spatio-temporal data as assessed by scalp event-related potentials. European Journal of Neuroscience. 2001;13:987–994. doi: 10.1046/j.0953-816x.2001.01454.x.

- Kujawski J, Bower TG. Same-sex preferential looking during infancy as a function of abstract representation. British Journal of Developmental Psychology. 1993;11:201–209.

- Leppänen JM, Nelson CA. The development and neural bases of facial emotion recognition. In: Kail RV, editor. Advances in Child Development and Behavior. Vol. 34. San Diego, CA: Academic Press; (in press).

- Morris JS, Friston KJ, Buechel C, Frith CD, Young AW, Calder AJ, et al. A neuromodulatory role for the human amygdala in processing emotional facial expressions. Brain. 1998;121:47–57. doi: 10.1093/brain/121.1.47.

- Nelson CA. The recognition of facial expressions in the first two years of life: mechanisms of development. Child Development. 1987;58:889–909.

- Nelson CA, Bloom FE, Cameron J, Amaral D, Dahl R, Pine D. An integrative, multidisciplinary approach to the study of brain-behavior relations in the context of typical and atypical development. Development & Psychopathology. 2002;14:499–520. doi: 10.1017/s0954579402003061.

- Nelson CA, de Haan M. Neural correlates of infants’ visual responsiveness to facial expressions of emotion. Developmental Psychobiology. 1996;29:577–595. doi: 10.1002/(SICI)1098-2302(199611)29:7<577::AID-DEV3>3.0.CO;2-R.

- Nelson CA, Dolgin K. The generalized discrimination of facial expressions by 7-month-old infants. Child Development. 1985;56:58–61.

- Nelson CA, Monk C. The use of event-related potentials in the study of cognitive development. In: Nelson CA, Luciana M, editors. Handbook of Developmental Cognitive Neuroscience. Cambridge, MA: MIT Press; 2001. pp. 125–136.

- Parker SW, Nelson CA, BEIP Core Group. The impact of early institutional rearing on the ability to discriminate facial expressions of emotion: An event-related potential study. Child Development. 2005;76:1–19. doi: 10.1111/j.1467-8624.2005.00829.x.

- Pissiota A, Frans O, Michelgard A, Appel L, Langstrom B, Flaten MA, et al. Amygdala and anterior cingulate cortex activation during affective startle. European Journal of Neuroscience. 2003;18:1325–1331. doi: 10.1046/j.1460-9568.2003.02855.x.

- Pizzagalli DA, Lehman D, Hendrick AM, Regard M, Pascual-Marqui RD, Davidson R. Affective judgments of faces modulate early activity (~160 ms) within the fusiform gyri. Neuroimage. 2002;16:663–677. doi: 10.1006/nimg.2002.1126.

- Puce A, Syngeniotis A, Thompson JC, Abbott DF, Wheaton KJ, Castiello U. The human temporal lobe integrates facial form and motion: evidence from fMRI and ERP studies. Neuroimage. 2003;19:861–869. doi: 10.1016/s1053-8119(03)00189-7.

- Puce A, Perrett D. Electrophysiology and brain imaging of biological motion. Philosophical Transactions of the Royal Society of London: B. 2003;358:435–445. doi: 10.1098/rstb.2002.1221.

- Puce A, Smith A, Allison T. ERPs evoked by viewing facial movements. Cognitive Neuropsychology. 2000;17:221–239. doi: 10.1080/026432900380580.

- Richards JE. Attention affects the recognition of briefly presented visual stimuli in infants: An ERP study. Developmental Science. 2003;6:312–328. doi: 10.1111/1467-7687.00287.

- Rossignol M, Philippot P, Douilliez C, Crommelinck M, Campanella S. The perception of fearful and happy facial expression is modulated by anxiety: an event-related potential study. Neuroscience Letters. 2005;377:115–120. doi: 10.1016/j.neulet.2004.11.091.

- Sato W, Kochiyama T, Yoshikawa S, Matsumura M. Emotional expression boosts early visual processing of the face: ERP recording and its decomposition by independent component analysis. Neuroreport. 2001;26:709–714. doi: 10.1097/00001756-200103260-00019.

- Schupp HT, Junghöfer M, Weike AI, Hamm AO. The selective processing of briefly presented affective pictures: an ERP analysis. Psychophysiology. 2004;41:441–449. doi: 10.1111/j.1469-8986.2004.00174.x.

- Schupp HT, Öhman A, Junghöfer M, Weike AI, Stockburger J, Hamm AO. The facilitated processing of threatening faces: an ERP analysis. Emotion. 2004;4:189–200. doi: 10.1037/1528-3542.4.2.189.

- Schyns PG, Jentzsch I, Johnson M, Schweinberger SR, Gosselin F. A principled method for determining the functionality of brain responses. Neuroreport. 2003;14:1665–1669. doi: 10.1097/00001756-200309150-00002.

- Sorce JF, Emde RN, Campos JJ, Klinnert MD. Maternal emotional signaling: its effect on the visual cliff behavior of 1-year-olds. Developmental Psychology. 1985;21:195–200.

- Taylor MJ, Edmonds GE, McCarthy G, Allison T. Eyes first! Eye processing develops before face processing in children. Neuroreport. 2001;12:1671–1676. doi: 10.1097/00001756-200106130-00031.

- Tottenham N, Borscheid A, Ellertsen K, Marcus DJ, Nelson CA. Categorization of facial expressions in children and adults: Establishing a larger stimulus set. Poster presented at the Annual Meeting of the Cognitive Neuroscience Society; San Francisco, CA. April 2002.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ. Distinct spatial frequency sensitivities for processing faces and emotional expressions. Nature Neuroscience. 2003;6:624–631. doi: 10.1038/nn1057.

- Vuilleumier P, Richardson MP, Armony JL, Driver J, Dolan RJ. Distant influences of amygdala lesion on visual cortical activation during emotional face processing. Nature Neuroscience. 2004;7:1271–1278. doi: 10.1038/nn1341.

- Walker-Andrews AS. Infants' perception of expressive behaviors: differentiation of multimodal information. Psychological Bulletin. 1997;121:437–456. doi: 10.1037/0033-2909.121.3.437.

- Whalen PJ. Fear, vigilance, and ambiguity: initial neuroimaging studies of the human amygdala. Current Directions in Psychological Science. 1998;7:177–188.

- Whalen PJ, Kagan J, Cook RG, Davis FC, Kim H, Polis S, et al. Human amygdala responsivity to masked fearful eye whites. Science. 2004;306:2061. doi: 10.1126/science.1103617.

- Wheaton KJ, Pipingas A, Silberstein RB, Puce A. Human neural responses elicited to observing the actions of others. Visual Neuroscience. 2001;18:401–406. doi: 10.1017/s0952523801183069.