Abstract

Radiologists make many diagnoses, but only sporadically get feedback on the subsequent clinical courses of their patients. We have created a web-based application that empowers radiologists to create and maintain personal databases of cases of interest. This tool integrates with existing information systems to minimize manual input, so that radiologists can quickly flag cases for further follow-up without interrupting their clinical work. We have integrated this case-tracking system with an electronic medical record aggregation and search tool. As a result, radiologists can learn the outcomes of their patients with much less effort. We intend this tool to aid radiologists in their own personal quality improvement and to increase the efficiency of both teaching and research. We also hope to develop the system into a platform for systematic, continuous, quantitative monitoring of performance in radiology.

Key words: Web technology, databases, radiology teaching files, quality assurance, Electronic Medical Record (EMR), productivity, Radiology Information Systems (RIS), radiology workflow

Background

As part of ongoing quality improvement, diligent radiologists frequently seek information on the subsequent clinical course of patients whose studies they have read. Commonly, this takes the form of asking referring clinicians about the outcome of further workup. Alternatively, a radiologist might note the medical record number (MRN) of an interesting or mysterious case so that he or she can later look independently for subsequent operative notes, pathology reports, or imaging results in the electronic medical record (EMR)1. This process of follow-up plays a vital role in developing a radiologist’s diagnostic acumen. Every time a radiologist finds out that she was wrong (or right) about an interpretation, she has an opportunity for self-improvement, both during and after training2. Exploring outcomes also supports many ancillary missions of a radiology practice. Knowing the clinical outcomes of shared patients allows radiologists to build better connections with referring colleagues. Clinical outcomes are also important for creating good teaching material; a case that ends with a description of what actually happened to the patient can be very powerful. Finally, integrating clinical outcomes can be vital for research, as connections that might not otherwise have been apparent reveal themselves after follow-up.

However, in spite of its importance, follow-up does not fit easily into the busy radiology workflow. Because radiologists typically see a large volume of patients with limited continuity of care, aggregating data and inferring outcomes takes substantial time and effort. As radiology workloads have increased and the volume of data contained in the EMR has expanded, the required chart review and data assimilation have become rate-limiting. With current EMR portals, a diligent radiologist could spend several hours each week following up on patients and even then follow only the most remarkable cases. As a result, many opportunities for feedback and improvement are missed1.

Technology should be able to provide a solution. Although information systems at our institution offer some facilities for noting cases, most members of our department have found them unsatisfactory, and they are used only sporadically. Therefore, we set out to create a tool that allows radiologists to add cases to a personal database as they perform their clinical work, and later to return to those cases to review the imaging and search for follow-up clinical information.

Methods

We have created a case-tracking application that we have dubbed “RaceTrack” (for “Radiology Case Tracking”). The RaceTrack system is a database-backed web application that integrates with our department’s existing information systems, including the radiology information system (RIS; IDXRad, GE Healthcare), picture archiving and communication system (PACS; IMPAX, Agfa), and dictation software (RadWhere, Nuance). RaceTrack also interfaces with the Queried Patient Inference Dossier (QPID), which aggregates and indexes electronic medical records to allow efficient semantic search; this search capability is used to link tracked cases with clinical data3. In addition, the system uses a single sign-on service administered by our organization for authentication.

The core RaceTrack system is implemented using Ruby on Rails4, an open-source web application framework with integrated object-relational mapping. Application data, which amounts to radiologist-generated metadata about imaging examinations, is managed by the open-source MySQL database engine5. The Apache web server6 directly handles HTTP requests. This application stack runs in the Ubuntu Linux operating system7. The system is currently deployed as a virtual machine running in a VMware virtualization environment (VMware, Palo Alto, CA, USA) on server hardware (Dell, Round Rock, TX, USA) running Windows Server 2003 (Microsoft, Redmond, WA, USA). The web-based user interface relies on the open-source jQuery JavaScript library8. Scripts that capture radiologist workflow context were created using the open-source AutoHotkey utility9.

The architecture of the system is summarized in Figure 1. On the server side, user requests are routed through the Apache HTTP server to the Ruby-based application. Database requests currently go to the MySQL database engine running on the same machine, although the database can be moved to a dedicated server as usage grows. Users are authenticated over SSL-encrypted HTTP by checking their username and password against an enterprise-provided Web Services Security service10. Information is retrieved from the RIS via ODBC, and medical record information is queried through HTTP web service requests to the QPID system.
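As an illustration of the RIS lookup step only, the following Python sketch retrieves ancillary examination fields for an accession number. It assumes a hypothetical `exams` table with `accession`, `mrn`, `description`, and `exam_date` columns, and it substitutes an in-memory SQLite database for the ODBC connection to the RIS; the actual system is written in Ruby and queries IDXRad, whose schema is not described here.

```python
import sqlite3
from typing import Optional

# Hypothetical column names; the real RIS schema is not given in the text.
EXAM_FIELDS = ("accession", "mrn", "description", "exam_date")


def make_demo_ris() -> sqlite3.Connection:
    """Hypothetical stand-in for the RIS: one in-memory table, one exam."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE exams (accession TEXT PRIMARY KEY, mrn TEXT, "
        "description TEXT, exam_date TEXT)"
    )
    conn.execute(
        "INSERT INTO exams VALUES (?, ?, ?, ?)",
        ("A1000001", "MRN0042", "CT ABDOMEN W/O CONTRAST", "2009-05-04"),
    )
    return conn


def lookup_exam(conn: sqlite3.Connection, accession: str) -> Optional[dict]:
    """Return ancillary exam and patient fields for one accession number."""
    # Parameterized query: the accession number captured on the workstation
    # is passed as data, never spliced into the SQL text.
    row = conn.execute(
        "SELECT accession, mrn, description, exam_date FROM exams "
        "WHERE accession = ?",
        (accession,),
    ).fetchone()
    return dict(zip(EXAM_FIELDS, row)) if row is not None else None


info = lookup_exam(make_demo_ris(), "A1000001")
print(info["mrn"], info["description"])  # MRN0042 CT ABDOMEN W/O CONTRAST
```

With an ODBC driver such as pyodbc, the same parameterized style would apply, since pyodbc also uses `?` placeholders.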

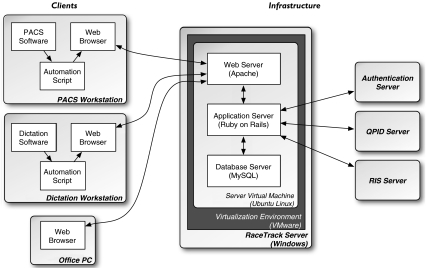

Fig 1.

Architecture of the RaceTrack case-tracking system. Users interact with the RaceTrack system using web browsers via HTTP. On PACS and dictation workstations, scripts extract working context and feed that information into web browsers for more convenient case creation. The RaceTrack application stack consists of an HTTP server, application server, and database server, which all run in a Linux virtual machine. The application communicates with an external authentication system via encrypted HTTP to provide a single enterprise-wide sign-on for users. The RaceTrack application also communicates with the radiology information system (RIS) via ODBC to retrieve information about radiology examinations, and with the Queried Patient Inference Dossier (QPID) server via HTTP to retrieve other medical record information.

On the client side, users at our institution are standardized on Internet Explorer versions 6 and 7. The RaceTrack interfaces, however, are designed to be browser-agnostic. AutoHotkey scripts automatically extract the accession number of the current examination from either the PACS or the dictation system and open a web browser window with this information pre-filled, minimizing manual entry.
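The production context-capture scripts are written in AutoHotkey. Purely to illustrate the idea, this Python sketch pulls an accession number out of a window title and builds a case-entry URL with it pre-filled. The title format, the regular expression, the `https://racetrack.example.org/cases/new` address, and the `accession` query parameter are all hypothetical.

```python
import re
from typing import Optional
from urllib.parse import urlencode

# Hypothetical title convention such as "IMPAX - Acc#: A1000001 - CT ABDOMEN";
# real PACS and dictation window titles will differ.
ACCESSION_PATTERN = re.compile(r"Acc(?:ession)?\s*#?\s*:?\s*([A-Z0-9]{6,12})")

# Hypothetical address of the RaceTrack case-entry form.
NEW_CASE_URL = "https://racetrack.example.org/cases/new"


def extract_accession(window_title: str) -> Optional[str]:
    """Return the first accession-like token in a window title, if any."""
    match = ACCESSION_PATTERN.search(window_title)
    return match.group(1) if match else None


def prefilled_form_url(window_title: str) -> Optional[str]:
    """Build the case-entry form URL with the accession number pre-filled."""
    accession = extract_accession(window_title)
    if accession is None:
        return None  # no exam in context, so nothing to pre-fill
    return NEW_CASE_URL + "?" + urlencode({"accession": accession})


print(prefilled_form_url("IMPAX - Acc#: A1000001 - CT ABDOMEN"))
# https://racetrack.example.org/cases/new?accession=A1000001
```

Passing the accession number in the URL is what lets the browser open with the form already populated, so the radiologist only types the description.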

Results

This system and its integration with other radiology information systems allow radiologists to create, manipulate, and follow their tracked cases with a minimum of effort and interruption of their clinical work (Fig. 2). The process begins when the radiologist presses a “hotkey” in either the PACS or the dictation environment. This opens a simple entry form that is automatically pre-filled with study information gathered from the radiologist’s current work context. The radiologist types a brief description of his or her interest in the case and dismisses the form. The system then creates an entry in the radiologist’s personal database of tracked cases. Later, to review cases, the radiologist logs in to the RaceTrack system from an office computer, a personal laptop, or a home computer via a virtual private network. The system displays a personalized table of tracked cases that includes links directly to the patient’s EMR entry and to a web-based PACS display of the study of interest (Fig. 3). At no time does the radiologist have to type the MRN or accession number.
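A minimal sketch of the personal database that this workflow populates, written in Python with an in-memory store in place of the MySQL tables behind the Rails application. The `TrackedCase` fields and the `CaseStore` class are illustrative assumptions; the point is that each entry is keyed to its owner and that the MRN arrives from the RIS lookup rather than from typing.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Dict, List


@dataclass
class TrackedCase:
    # Illustrative fields only; the actual RaceTrack schema is not published.
    owner: str          # radiologist's single sign-on username
    accession: str      # captured from the PACS or dictation context
    mrn: str            # filled in from the RIS, never typed by the user
    description: str    # the radiologist's brief note of interest
    created: datetime = field(default_factory=datetime.now)


class CaseStore:
    """Hypothetical in-memory stand-in for the per-radiologist case database."""

    def __init__(self) -> None:
        self._cases: Dict[str, List[TrackedCase]] = {}

    def add(self, case: TrackedCase) -> None:
        # Each radiologist's cases are kept separately, keyed by owner.
        self._cases.setdefault(case.owner, []).append(case)

    def cases_for(self, owner: str) -> List[TrackedCase]:
        # Newest first, as a personal case table might be displayed.
        return sorted(self._cases.get(owner, []),
                      key=lambda c: c.created, reverse=True)
```

In the deployed system the owner would come from the single sign-on session rather than being passed in by hand.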

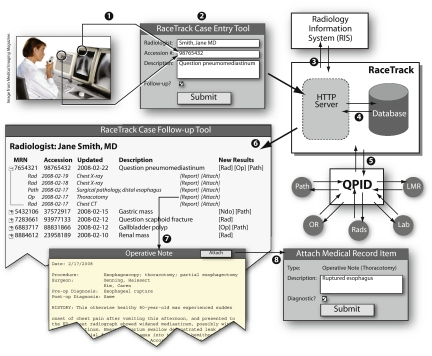

Fig 2.

Case-tracking workflow using the RaceTrack system. A radiologist who sees an examination of interest during her clinical work (1) uses a hotkey to bring up a case-entry tool with the accession number pre-entered. She enters a brief description of her interest in the case (2), and returns to her work. The RaceTrack system retrieves ancillary patient and exam information from the radiology information system (RIS; 3), and stores the description and other metadata in a database for the radiologist (4). The system then periodically queries the patient’s medical records via the Queried Patient Inference Dossier (QPID; 5). At her leisure, the radiologist can return to view her database of cases (6), which includes potentially useful medical record information such as pathology reports and operative notes (7). She can choose to form an annotated link between her description of the case and these medical record items (8).

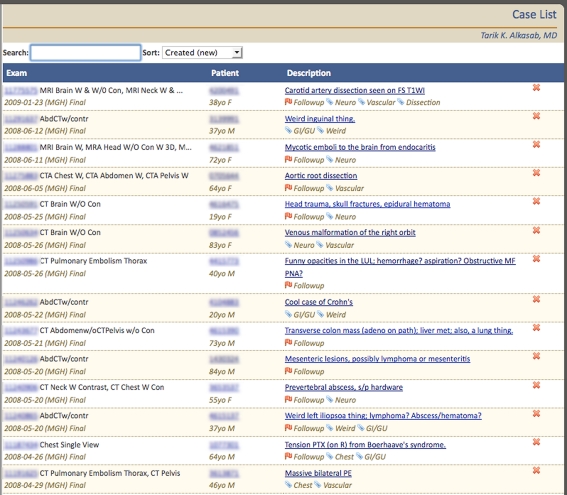

Fig 3.

Personal database of tracked cases. A radiologist’s personal list of cases includes information from the radiology information system (RIS), such as examination description and date, and also patient demographic information, including medical record number (MRN). For each case in the list, the accession number is displayed (blurred in this image for privacy) as a link directly to the examination in a web-based PACS application. The patient MRN is displayed (blurred here) as a link to the patient’s electronic medical record entry. The case description links to a more detailed display of the case (see Fig. 4).

Further, integration with EMR systems helps radiologists place radiology examinations in a broader clinical context. RaceTrack periodically searches the patient’s record via the QPID system for subsequent relevant clinical information such as new operative notes, pathology reports, or follow-up imaging examinations. When new data are found, they are displayed as part of the case in the RaceTrack tool, so the radiologist does not have to pore through medical records manually (Fig. 4). The radiologist can then choose relevant reports and create annotated links to them, which are maintained with the radiologist’s personal description of the case. This substantially reduces the work required for radiologists to learn the subsequent clinical course of their patients and to keep track of subsequent diagnoses.
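The periodic search for new clinical information can be sketched as a filter over the medical record items returned for a patient. In this hypothetical Python version, an item is surfaced if its type is one of those the system currently returns (subsequent radiology exams, pathology reports, operative notes, and endoscopy results), it is dated strictly after the tracked examination, and the radiologist has not already been shown it. The `RecordItem` and `AnnotatedLink` shapes and the type labels are assumptions; QPID’s actual response format is not described here.

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable, List, Set

# Hypothetical labels for the record types the system currently returns.
RELEVANT_TYPES = {"radiology", "pathology", "operative", "endoscopy"}


@dataclass(frozen=True)
class RecordItem:
    item_id: str
    kind: str
    item_date: date
    title: str


@dataclass
class AnnotatedLink:
    item_id: str
    note: str
    diagnostic: bool = False  # marks the item that establishes the diagnosis


def new_followup_items(items: Iterable[RecordItem], exam_date: date,
                       seen_ids: Set[str]) -> List[RecordItem]:
    """Relevant items dated after the exam that have not been shown before."""
    fresh = [it for it in items
             if it.kind in RELEVANT_TYPES
             and it.item_date > exam_date      # strictly later: an assumption
             and it.item_id not in seen_ids]
    return sorted(fresh, key=lambda it: it.item_date)
```

Keeping `seen_ids` per case is what allows a "new information" flag, like the one in the renal-mass example, to appear only when something genuinely new arrives.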

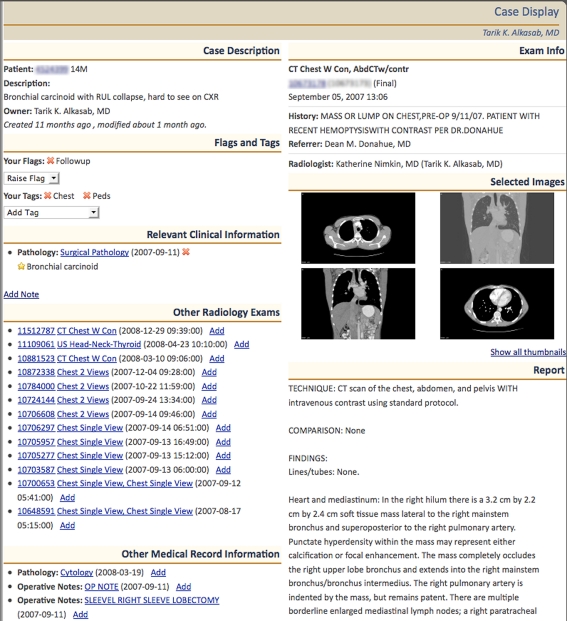

Fig 4.

Detailed information on a tracked case. The detailed case display integrates information from the radiology information system (RIS), the electronic medical record (EMR), and the radiologist’s own metadata. In the case displayed, the radiologist has made an annotated link to a pathology report that diagnosed the tumor seen on the examination as a bronchial carcinoid (under “Relevant Clinical Information”). Other potentially relevant radiology exams and medical record entries are available for linking (“Other Radiology Exams” and “Other Medical Record Information”).

As an example, consider a busy radiologist interpreting a series of CT scans in the reading room. On a non-enhanced scan of a middle-aged man’s abdomen, he sees a renal mass with an unusual but non-specific appearance and recommends follow-up imaging to further characterize it. Because he wants to find out for himself what the subsequent workup reveals, he activates the RaceTrack tool. A form opens, pre-filled with the accession number of the study he is viewing. He types a brief description (“Strange renal mass, not definitely cystic”), indicates that he wishes to receive follow-up, and dismisses the form. The case is now stored in his personal database, and he can return to his queue of studies awaiting interpretation.

Later that week, he logs in to the RaceTrack system from his office computer and peruses his list of cases. He notices the new entry, remembers the case, and uses the link to the web-based PACS system to review the images. He shows the mass to a colleague, who is equally mystified, which further raises his interest in the case. Three weeks later, while again reviewing his list of cases, the radiologist notices a flag on the renal mass case indicating that new clinical information is available. He clicks on the case and finds that there has been a contrast-enhanced renal MR examination (read by one of his colleagues) demonstrating enhancement in a solid portion of the mass, which raises suspicion of neoplasm. He adds an annotated link to the MR report to his record of the case (“Mass shows enhancement”). Finally, a month later, while going through his case list, he finds that a nephrectomy has been performed and the surgical pathology report is available: the lesion is diagnosed as an oncocytoma. The radiologist creates another annotated link to the pathology report with the text “Oncocytoma” and sets a “diagnostic” flag indicating that the item provides the diagnosis for the case. As a result of using the RaceTrack system, the radiologist has learned the clinical and pathological outcome of the workup initiated by his recommendation, and he can return to the case as needed for reference.

At the time of this writing, the system has been deployed for approximately 6 weeks. Eight volunteer radiologists serve as alpha users and have already created more than 600 cases for follow-up. Users report problems and request enhancements through a web-based issue-tracking application. One radiologist, who maintains an extensive teaching-file collection, reports that the system improves her efficiency in tracking potential teaching cases for later follow-up (L. Avery, personal communication). Another user has used the system to maintain lists of cases for an IRB-approved research project and reports that the maintained list and easy access to EMR systems facilitate finding clinical outcomes for included cases (M. Cushing, personal communication).

Discussion

The case-tracking system we have created allows radiologists to receive clinical feedback on more of their cases, more efficiently. Early user reports suggest that this change can already improve ancillary processes in radiology: both teaching-file creation and research have been made easier by lowering barriers between radiologists and outcome information. More importantly, as more radiologists in our department use the system, we hope that receiving diagnostic feedback will become routine for them. We expect that this system will contribute to improved diagnostic quality by giving radiologists more opportunities for self-assessment and by better integrating them into longitudinal patient care. We anticipate that radiologists in training will find the tool especially useful as they develop their skills, and that practicing radiologists undergoing maintenance of certification may also use it to gather material to meet the new requirement for Practice Quality Improvement11,12.

In addition to extending the system to a larger group of users, we intend to make several enhancements. We are adding the ability to organize cases using tags, which will allow users to create virtual folders of cases for easy retrieval. We are also developing a search tool that will allow users to quickly find cases based on text contained in their descriptions and other annotations. As an adjunct to both tagging and searching, we intend to add automatic indexing and tagging against RadLex13 to increase the specificity of the available semantic information. The system will also soon be able to export anonymized representations of cases to presentation software formats (such as PowerPoint), to a Medical Imaging Resource Center server14, or to online systems such as the Radiological Society of North America’s myRSNA service15. We would also like to refine the process by which the RaceTrack system finds and presents potentially relevant entries in the electronic medical record. Currently, the system returns all subsequent radiology exams, pathology reports, operative notes, and endoscopy results. Our goal is to devise algorithms that rank medical record entries by their relevance to the case and to the radiologist’s interest in it, which should further increase the efficiency with which radiologists follow up on their patients.
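Tagging and text search are planned rather than implemented, so the following is only a sketch of one simple way they could work, in Python with hypothetical names: a virtual folder is just the set of cases carrying a tag, and search is a case-insensitive substring match of every query word against a case’s description and annotations. A production version would more likely rely on a database full-text index, and RadLex-based indexing is not modeled.

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Set


@dataclass
class Case:
    # Illustrative fields; "tags" models the planned, not yet built, feature.
    case_id: str
    description: str
    annotations: List[str] = field(default_factory=list)
    tags: Set[str] = field(default_factory=set)


def virtual_folder(cases: Iterable[Case], tag: str) -> List[Case]:
    """All cases carrying a tag: a 'folder' that is really a saved filter."""
    return [c for c in cases if tag in c.tags]


def search(cases: Iterable[Case], query: str) -> List[Case]:
    """Cases whose description or annotations contain every query word."""
    words = query.lower().split()

    def text(c: Case) -> str:
        # Search the radiologist's description plus any link annotations.
        return " ".join([c.description, *c.annotations]).lower()

    return [c for c in cases if all(w in text(c) for w in words)]
```

Because a folder is computed from tags rather than stored, one case can appear in several folders without being duplicated.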

In addition to enhancements that improve the ability of radiologists to organize their cases and access subsequent patient information, we anticipate using the RaceTrack system as a platform for developing new applications for tracking radiology cases. One potential application is a tool that radiologists can use to flag cases as having a technical quality issue. When a radiologist interprets such an examination, he or she could create a “technical quality ticket” for it. This ticket would include relevant information from the RIS without requiring manual re-entry and would then be added to a queue for technical managers to review. Compared with ad hoc workflows, this embedded information should make it more efficient to explore and resolve these issues. In addition, because the tickets are stored in a database, they can be queried to generate metrics and mined for potential sources of systemic problems. We also hope to apply the RaceTrack system to the workflow surrounding radiologist-recommended follow-up examinations. A recommendation-tracking system could automatically capture these recommendations and then monitor patients’ subsequent records to determine whether each recommendation was followed. This information could then be used to send reminders to a patient’s physicians when a recommended study has not been obtained in a timely manner. Conversely, when recommended studies are obtained, the system could bring them to the attention of the radiologist who originally made the recommendation, thereby closing the loop.
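For the proposed recommendation-tracking application, the core check can be sketched as follows in Python. It assumes each captured recommendation records a modality and a time window, and it treats any later study of the same modality as fulfilling the recommendation; both the `Recommendation` fields and this matching rule are simplifying assumptions, not a description of an existing component.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Iterable, List, Optional


@dataclass
class Recommendation:
    # Hypothetical fields: how recommendations would be captured is not
    # specified in the text.
    accession: str        # exam whose report made the recommendation
    recommended_on: date
    modality: str         # e.g. "MR"
    due_within_days: int
    fulfilled_on: Optional[date] = None  # date a matching study was found


def overdue(recs: Iterable[Recommendation], today: date) -> List[Recommendation]:
    """Unfulfilled recommendations whose follow-up window has passed."""
    return [r for r in recs
            if r.fulfilled_on is None
            and today > r.recommended_on + timedelta(days=r.due_within_days)]


def mark_fulfilled(rec: Recommendation, study_modality: str,
                   study_date: date) -> bool:
    """Record a later study of the recommended modality; True if it matched."""
    if (rec.fulfilled_on is None
            and study_modality == rec.modality
            and study_date >= rec.recommended_on):
        rec.fulfilled_on = study_date
        return True
    return False
```

A `True` result from `mark_fulfilled` is the event that would be routed back to the recommending radiologist, while anything still returned by `overdue` would trigger reminders to the patient’s physicians.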

Conclusion

We have implemented a radiology case-tracking system that allows radiologists to create and maintain personal databases of cases of interest. By integrating this tool with a medical record aggregation and search system, we have lowered the barrier for radiologists to follow the clinical outcomes of their patients. Our tool has immediate utility in self-assessment, teaching, and research. In addition, we hope to use the system as a platform for developing applications for quality improvement in radiology.

Acknowledgements

The authors express their thanks to Drs. Laura Avery, Matthew Cushing, and Vincent Flanders for serving as early test users. This work is supported by a Resident Research Grant to TKA from the RSNA Research and Education Foundation.

References

1. Boonn WW, Langlotz CP. Radiologist use of and perceived need for patient data access. J Digit Imaging. 2009;22(4):357–362. doi: 10.1007/s10278-008-9115-2

2. Lau LS. A continuum of quality in radiology. J Am Coll Radiol. 2006;3:233–239. doi: 10.1016/j.jacr.2005.10.006

3. Zalis ME, Harris MA. The Queried Patient Inference Dossier (QPID): an automated, ontology-driven search system for radiology. Program of the 93rd Annual Meeting and Scientific Assembly of the RSNA, 2007

4. Ruby on Rails. Available at http://rubyonrails.org. Accessed 3 June 2009

5. MySQL: The World’s Most Popular Open Source Database. Available at http://www.mysql.org. Accessed 3 June 2009

6. The Apache HTTP Server Project. Available at http://httpd.apache.org. Accessed 3 June 2009

7. Ubuntu Home Page. Available at http://www.ubuntu.com. Accessed 3 June 2009

8. jQuery: The Write Less, Do More, JavaScript Library. Available at http://jquery.com. Accessed 3 June 2009

9. AutoHotkey: Free Mouse and Keyboard Macro Program with Hotkeys and AutoText. Available at http://www.autohotkey.com. Accessed 3 June 2009

10. OASIS Web Services Security (WSS) TC. Available at http://www.oasis-open.org/committees/tc_home.php?wg_abbrev=wss. Accessed 3 June 2009

11. Bosma J, Laszakovits D, Hattery RR. Self-assessment for maintenance of certification. J Am Coll Radiol. 2007;4:45–52. doi: 10.1016/j.jacr.2006.08.014

12. Strife JL, Kun LE, Becker GJ, Dunnick NR, Bosma J, Hattery RR. The American Board of Radiology perspective on maintenance of certification: part IV – Practice Quality Improvement in diagnostic radiology. J Am Coll Radiol. 2007;4:300–304. doi: 10.1016/j.jacr.2007.02.008

13. Langlotz CP. RadLex: a new method for indexing online educational materials. RadioGraphics. 2006;26:1595–1597. doi: 10.1148/rg.266065168

14. Radiological Society of North America. RSNA Medical Imaging Resource Center. Available at http://mirc.rsna.org. Accessed 3 June 2009

15. Radiological Society of North America. RSNA.org: Users Praise myRSNA. Available at http://www.rsna.org/Publications/rsnanews/February-2009/0209_rsnaorg.cfm. Accessed 3 June 2009