Abstract

Reading and speech-in-noise perception, fundamental aspects of human communication, have been linked to neural indices of auditory brainstem function. However, how these factors interact is currently unclear. Multivariate analysis methods (structural equation modeling) were employed to delineate and quantify the relationships among factors that relate to successful reading and speech-in-noise perception in children. Neural measures of subcortical speech encoding that reflect the utilization of stimulus regularities, differentiation of stop consonants, and robustness of neural synchrony predicted 73% of the variance in reading scores. A different combination of neural measures, specifically, utilization of stimulus regularities, strength of encoding of lower harmonics, and the extent of noise-induced timing delays uniquely predicted 56% of the variance in speech-in-noise perception measures. The neural measures relating to reading and speech-in-noise perception were substantially non-overlapping and resulted in poor fitting models when substituted for each other, thereby suggesting distinct neural signatures for the two skills. When phonological processing and working memory measures were added to the models, brainstem measures still uniquely predicted variance in reading ability and speech-in-noise perception, highlighting the robustness of the relationship between subcortical auditory function and these skills. The current study suggests that objective neural markers may prove valuable in the assessment of reading or speech-in-noise abilities in children.

Keywords: electrophysiology, reading, speech-in-noise perception, auditory brainstem, children

1. Introduction

Neural correlates of reading and language function have been substantiated through electrophysiological and functional imaging studies. Reading skill is associated with specific regional activation and white matter microstructure in the left hemisphere [1-4], as well as robust representation of the syllabic rate of speech in the right hemisphere [5]. Additionally, pre-attentive detection of stimulus differences in primary auditory cortex, for both speech and tone stimuli, is related to reading ability, with deficient neural differentiation in dyslexics predicting poor behavioral identification and discrimination performance [6-9]. Subcortically, strength of encoding of stimulus features important for identifying phonemes, such as transient elements and dynamic harmonic content of the stimulus, and neural synchrony in the auditory brainstem vary systematically with language and reading ability [10-13]. These studies suggest that poor readers may have inefficient encoding of complex sounds, in particular speech, which may account for poor translation from phonology to orthography when reading and for the poor phonological processing skills often found in children with reading impairments. Remediation and training of dyslexic populations can lead to improvements in these neural deficiencies, with neural response patterns mimicking those of typically developing children after training [14-17].

Similarly, there are neural correlates of speech-in-noise perception. Encoding of stimulus features important for tracking a voice over time, such as vocal pitch, and the resilience of response timing to the disruptive effects of background noise in the auditory brainstem relate to speech-in-noise perception, being degraded in children with reading and speech-in-noise impairments but enhanced in professional musicians [13, 18-21]. In children, the developmental trajectory of auditory cortical responses to speech stimuli presented in background noise correlates with speech-in-noise perception, with poor speech-in-noise perceivers having more immature cortical responses relative to good speech-in-noise perceivers [22]. Functional imaging shows increased bilateral auditory cortical activity while identifying speech presented in background noise, with greater activation in the left hemisphere for weaker signal-to-noise ratios [23]. Additionally, poor speech-in-noise perceivers have poor auditory efferent function, which in typical listeners can enhance target stimuli relative to background noise [24, 25]. As in reading, auditory training may enhance brainstem representation of speech in noise [16] as well as efferent function [26].

Reading and speech-in-noise perception may be related, particularly in children with reading disorders who are often more susceptible to increases in background noise than their typically developing peers [27-30]. The neural correlates of these two abilities may also be linked. Reading and speech-in-noise perception are both related to auditory brainstem differentiation of stop consonants [11] and the utilization of stimulus regularities [13]. Because dyslexia and language-learning impairments are associated with poor auditory streaming [31] and poor utilization of statistical regularities when learning a pseudo language [32], it may be that children with these impairments lack the neural substrate to benefit from regularities in their auditory environment, possibly affecting early language development, development of phonemic awareness, and speech-in-noise perception [13, 33]. Additionally, the robustness of neural responses to speech presented in background noise, as assessed by the response timing shift due to background noise, has been linked to both speech-in-noise and reading abilities, suggesting that children with better behavioral performance on these measures are less affected by the degrading effects of background noise [18].

Despite the evidence linking the subcortical correlates of reading and speech-in-noise perception, other studies using similar methods have found that these abilities can exhibit distinct subcortical mechanisms. Reading is related to encoding of higher speech harmonics by the brainstem [10, 34], which correspond to the dynamic spectral activity of the transition between a consonant and a vowel [35-37]. Additionally, good and poor readers differ on their ability to represent the transient elements of stop consonants, such as the initial noise burst, through subcortical response timing [10, 34, 38]. Stop consonants are particularly difficult to perceive in background noise and by children with reading and language-learning impairments [39-41] likely because they are unable to adequately represent the dynamic spectral content and brief temporal events corresponding to the consonant [10, 34]. On the other hand, successful speech-in-noise perception requires robust auditory stream segmentation in order to break the acoustic scene into distinct elements and track those elements over time [42]. Vocal pitch has been shown to be a primary cue for differentiating two voices and tracking the continuity of one voice over time [42, 43]. Thus, it is not surprising that the encoding of the fundamental frequency and lower harmonics, which correspond to vocal pitch, is correlated with speech-in-noise perception [19, 21].

Reading and speech-in-noise perception can also share cognitive correlates. Successful reading may depend on the ability to access and manipulate phonological representations, as well as working memory and attention [44-50]. Similarly, better speech-in-noise perception has been linked to greater working memory and attention capacity in both exceptional (musicians) and impaired (hearing loss) populations of adults [51, 52]. Thus, converging evidence suggests that reading and speech-in-noise perception have overlapping but also independent neural correlates in the auditory brainstem, and may share cognitive correlates, but the exact nature of these interrelationships is unknown.

The present study sought to delineate and quantify the relationships among measures of auditory brainstem responses to speech (complex ABR, cABR) and reading and speech-in-noise perception using structural equation modeling. Structural equation modeling (SEM) is a multivariate analysis that evaluates the overall fit of a proposed model of the interrelationships among variables [53, 54]. Relationships among observable variables, e.g. scores on standardized reading measures, are evaluated to determine the relationships among construct variables that are not directly measurable, e.g. reading skill [53, 54]. Previously, SEM methods have been employed to identify relationships among behavioral measures of auditory processing, phonological awareness, working memory, intelligence, speech-in-noise perception, and reading ability [55-57], but these studies have lacked physiological measures of sensory function.

The current study was divided into two experiments; Experiment 1 sought to identify the best-fitting models relating brainstem encoding of speech with reading, and brainstem encoding of speech with speech-in-noise perception, and Experiment 2 sought to determine to what extent the influence of subcortical function on these behaviors (informed by Experiment 1) is mediated by other cognitive factors (phonological processing and working memory). In Experiment 1, we hypothesized that brainstem measures relating to temporal and harmonic encoding that have previously related to reading would contribute significantly to predicting variance in reading ability. Similarly, brainstem measures which have previously related to speech-in-noise perception would contribute significantly to the prediction of variance in speech-in-noise perception. Because these neural indices are largely non-overlapping, we hypothesized that interchanging them would result in poor fitting models. In Experiment 2, we hypothesized that brainstem processing would be a unique predictor of variance in measures of reading and speech-in-noise perception, and not wholly mediated by cognitive processes such as phonological processing or working memory.

2. Methods

All data collection and analysis methods were the same for Experiments 1 and 2.

2.1 Participants

Eighty-one children, ages 8-13 years (mean 10.8 years, 36 girls) participated in the study. All had pure tone, air conduction thresholds of <20 dB HL for octaves from 250-8000 Hz and clinically normal brainstem responses to click stimuli (100 μs, presented at 80 dB SPL). All children also had normal or corrected to normal vision and had scores of 85 or better on the Wechsler Abbreviated Scale of Intelligence (WASI, [58]). The participants ranged in reading ability from below average to superior. Children and their parent or guardian assented and consented, respectively, to participate and were compensated for their participation. All procedures were approved by the Institutional Review Board at Northwestern University.

2.2 Behavioral measures

Reading abilities were assessed with the Woodcock Johnson-III Tests of Achievement Word Attack subtest [59], the Test of Silent Word Reading Fluency (TOSWRF, [60]), and the Test of Oral Word Reading Efficiency (TOWRE, [61]). Additionally, phonological processing skills were assessed with the Comprehensive Test of Phonological Processing (CTOPP, [62]), using the Phonological Awareness and Phonological Memory composite scores. Working memory was assessed with the Woodcock Johnson-III Tests of Cognitive Abilities [63] Digits Reversed subtest and the CTOPP Memory for Digits subtest, requiring digit recall in the reverse or forward direction, respectively.

Speech-in-noise perception was assessed using the Hearing in Noise Test (HINT, Biologic Systems Corp.). Sentence stimuli were presented from the front while speech-shaped noise was presented from the front, 90° to the right, or 90° to the left of the child, yielding HINT Front, HINT Right, and HINT Left scores. Data reported here are age-corrected percentiles of the signal-to-noise ratio (SNR) thresholds for 50% correct sentence recognition in the three conditions.

2.3 Neural measures

2.3.1 Stimuli

Stimuli were stop consonant-vowel syllables synthesized in KLATT [64]. Stop consonant syllables were chosen because they are perceptually challenging in noise and for children with reading and language-learning impairments [39-41], being characterized by transient and rapidly changing spectral cues that are additionally masked by the following vowel [35-37]. The base stimulus was a 170 ms [da] with a fundamental frequency (F0) of 100 Hz. Within the first 50 ms, the first, second, and third formants were dynamic, while the fourth, fifth, and sixth formants were stable across the whole stimulus. The base stimulus was presented in quiet in isolation (Predictable/Quiet condition), in multi-talker background noise (Noise condition), and in quiet intermixed with seven other stop consonant stimuli (Variable condition). The multi-talker background noise was six-speaker babble presented at +10 dB SNR. Among the other stop consonant stimuli, those of interest were the [ba] and [ga] stimuli, which differed from [da] only in the second formant trajectory during the transition to the steady-state vowel. Additionally, responses to a five-formant, 40 ms [da] presented in quiet were collected. See Figure 1 and [10, 11, 13, 18] for further stimulus details.

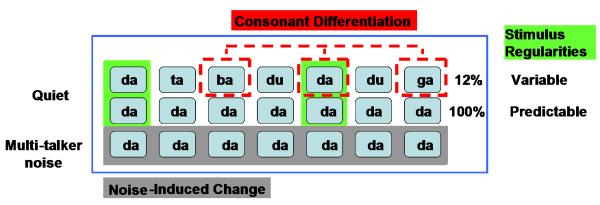

Figure 1.

Schematic of stimulus presentation conditions. A 170 ms [da] was presented in quiet in a Predictable condition (middle row), in a Variable condition (top row), and in Noise (multi-talker babble, bottom row). These conditions allowed for comparisons of responses to [da], [ba], and [ga] (Consonant Differentiation, red), [da] in Variable and trial-matched Predictable contexts (Stimulus Regularities, green), and [da] in quiet and in noise (Noise-Induced Change, gray). Not pictured here is a 40 ms [da] presented in quiet that served as the stimulus for the Onset Precision measure.

Stimuli were presented at 80 dB SPL to the right ear through insert earphones (ER-3, Etymotic Research) in alternating polarities, which allowed stimulus artifact and the cochlear microphonic to be canceled during processing. To facilitate compliance, children watched a movie of their choice with the soundtrack playing quietly from a speaker, audible through the non-test ear. Because auditory input from the soundtrack was not stimulus-locked and the stimuli were delivered directly to the ear at an approximately +40 dB signal-to-noise ratio, the soundtrack had virtually no impact on the recorded responses.

2.3.2 Data Collection and Processing

Electrophysiological responses were collected with Ag-AgCl electrodes in a vertical montage (active Cz, forehead ground, ipsilateral earlobe reference). Responses were bandpass filtered from 70-2000 Hz for the longer stimuli and from 100-2000 Hz for the 40 ms [da] (12 dB/octave), baseline corrected, and sweeps with amplitudes exceeding ±35 μV (±23 μV for the 40 ms [da]) were rejected. Responses to each polarity were averaged separately, and the two averages were then added to eliminate stimulus artifact and the cochlear microphonic (CM) [65]. Both stimulus artifact and the CM are phase-dependent and cancel when responses to alternating polarities are added, leaving the phase-independent neural response. Averages of 6000 sweeps were made for comparing responses in quiet and noise, for comparing responses to the [ba], [da], and [ga] stimuli, and for the responses to the 40 ms [da]. Trial-matched averages of 700 sweeps were made for comparing the Predictable and Variable [da] presentations (see below).
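The rejection and polarity-averaging steps described above can be sketched in a few lines. This is a minimal illustration, not the authors' pipeline: it assumes each sweep is a list of voltage samples in μV that has already been filtered and baseline corrected, and the function names are hypothetical. The ±35 μV limit is the one stated for the longer stimuli (±23 μV would be passed for the 40 ms [da]).

```python
def reject_artifacts(sweeps, limit_uv=35.0):
    """Drop any sweep whose absolute amplitude exceeds limit_uv at any sample."""
    return [s for s in sweeps if max(abs(v) for v in s) <= limit_uv]


def average(sweeps):
    """Point-by-point mean of equally long sweeps."""
    if not sweeps:
        raise ValueError("no sweeps left to average")
    n = len(sweeps)
    return [sum(samples) / n for samples in zip(*sweeps)]


def combine_polarities(pos_sweeps, neg_sweeps, limit_uv=35.0):
    """Average each polarity separately, then add the two averages.

    Components that invert with stimulus polarity (stimulus artifact, CM)
    cancel in the sum; polarity-invariant neural activity is doubled.
    """
    pos_avg = average(reject_artifacts(pos_sweeps, limit_uv))
    neg_avg = average(reject_artifacts(neg_sweeps, limit_uv))
    return [p + n for p, n in zip(pos_avg, neg_avg)]
```

Adding the two averages (rather than averaging them) only changes the overall scale by a factor of two; the cancellation of polarity-dependent components is the same either way.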

The following measures were computed for the present analysis using previously-described methods.

Stimulus Regularities

Auditory brainstem enhancement of regularly occurring acoustic events was previously shown to relate to both reading and speech-in-noise abilities [13]. Trial-matched responses to [da] in the Predictable condition were compared to responses to [da] in the Variable condition by calculating spectral amplitudes within the formant transition portion of the response (7-60 ms). Amplitudes were averaged over the second and fourth harmonics (200 and 400 Hz, combined as Low Harmonics) and over the frequency range 530-590 Hz (High Harmonics), which falls within the dynamic trajectory of the first formant. The impact of stimulus regularity on the neural response was calculated as the difference in spectral amplitude between the two conditions (Predictable - Variable). See [13] for additional details.
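The Predictable - Variable comparison can be sketched as follows. This is a minimal illustration under stated assumptions rather than the procedure of [13]: it evaluates a single-frequency DFT with a rectangular window over 7-60 ms (the published analysis may apply ramping or zero-padding), samples the 530-590 Hz band on a hypothetical 5 Hz grid, and assumes each response array starts at stimulus onset. Evaluating the DFT at arbitrary frequencies sidesteps the fact that a 53 ms window gives bins about 18.9 Hz apart, so 200 and 400 Hz do not fall on exact bins. The sampling rate `fs` is a parameter; all function names are hypothetical.

```python
import cmath
import math


def spectral_amplitude(signal, fs, freq_hz, t_start_ms, t_end_ms):
    """Amplitude of one frequency component inside a time window.

    Single-frequency DFT with a rectangular window, scaled so that a sinusoid
    of amplitude A yields roughly A. Assumes signal[0] is at stimulus onset.
    """
    i0 = round(t_start_ms * fs / 1000)
    i1 = round(t_end_ms * fs / 1000)
    segment = signal[i0:i1]
    acc = sum(x * cmath.exp(-2j * math.pi * freq_hz * k / fs)
              for k, x in enumerate(segment))
    return 2 * abs(acc) / len(segment)


def low_harmonics(resp, fs, window=(7, 60)):
    """Mean amplitude of H2 (200 Hz) and H4 (400 Hz) for a 100 Hz F0."""
    return (spectral_amplitude(resp, fs, 200, *window)
            + spectral_amplitude(resp, fs, 400, *window)) / 2


def high_harmonics(resp, fs, window=(7, 60), step_hz=5):
    """Mean amplitude across 530-590 Hz, sampled every step_hz (assumed grid)."""
    freqs = range(530, 591, step_hz)
    amps = [spectral_amplitude(resp, fs, f, *window) for f in freqs]
    return sum(amps) / len(amps)


def regularity_effect(predictable, variable, fs):
    """Predictable - Variable spectral amplitude; positive = enhancement."""
    return {
        "low": low_harmonics(predictable, fs) - low_harmonics(variable, fs),
        "high": high_harmonics(predictable, fs) - high_harmonics(variable, fs),
    }
```

Because the measure is a difference of amplitudes, positive values indicate stronger encoding in the Predictable condition and values near zero indicate no benefit from stimulus regularity.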

Consonant Differentiation

Stop consonants are especially vulnerable to misperception in noise and by children with language-based learning impairments [39-41]. The stop consonants [ba], [da], and [ga] can be distinguished on the basis of their brainstem response timing, and this temporal precision is related to both reading and speech-in-noise abilities [11]. The consonant differentiation score is a composite metric that weights both the presence and the magnitude of the expected latency pattern ([ga]-[da]-[ba]), which reflects the differing second formant frequencies, across response peaks within the formant transition (10-70 ms). See [11] for more details on its computation.

H2 Magnitude

Lower harmonics, particularly the second harmonic (H2), are known to underlie successful perception of speech in noise by being important object-grouping cues reflecting vocal pitch [42, 66]. The strength of H2 encoding was assessed by calculating the spectral amplitude of the second harmonic (H2, 200 Hz) over 20-60 ms of the response to [da] in quiet. This time period of the response reflects the spectrally-dynamic formant transition while excluding the onset response. See [19] for additional information.
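For concreteness, one common way to define the spectral amplitude at H2 over the 20-60 ms window is the single-frequency DFT magnitude below, where x is the averaged response sampled at rate f_s and n_0 is the sample at 20 ms. This assumes a rectangular window without zero-padding; the analysis in [19] may differ. Because the window spans T = 40 ms, DFT bins are spaced 1/T = 25 Hz apart, so 200 Hz falls exactly on bin 8 and H2 can be read from a single bin.

```latex
A_{H2} = \frac{2}{N}\left|\sum_{n=0}^{N-1} x[n_0 + n]\, e^{-i 2\pi f_{H2} n / f_s}\right|,
\qquad f_{H2} = 2F_0 = 200\ \text{Hz}, \quad N = T f_s, \quad T = 60 - 20 = 40\ \text{ms}

\Delta f = \frac{1}{T} = \frac{1}{0.040\ \text{s}} = 25\ \text{Hz},
\qquad \frac{f_{H2}}{\Delta f} = \frac{200\ \text{Hz}}{25\ \text{Hz}} = 8
```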

Noise-Induced Timing Delay

The well-documented neural timing delays incurred by the addition of background noise [67] vary systematically with the perception of speech in noise (i.e., less delay accompanies better perception in children [18] and in musicians, who have enhanced speech-in-noise perception [20]). Differences in peak latency between responses to [da] presented in quiet and in noise were calculated during the formant transition time period (20-60 ms). Response latencies for [da] presented in noise were subtracted from those for [da] in quiet (Quiet - Noise) for peaks occurring at approximately 42 and 43 ms, so that more negative values indicate greater noise-induced delay. These specific peak timing shifts previously served as the most effective indices for differentiating good and poor speech-in-noise perceivers. See [18] for additional details.
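The Quiet - Noise latency difference can be sketched as a peak search around an expected latency in each response. This is an illustration only: the ±2 ms search window, picking the largest sample, and the function names are assumptions, not the published peak-identification procedure of [18]. Peaks in these responses are often negative-going, in which case the negated waveform would be passed in.

```python
def peak_latency_ms(signal, fs, expected_ms, search_ms=2.0):
    """Latency (ms) of the largest sample within expected_ms +/- search_ms.

    Assumes signal[0] is at stimulus onset. To pick a negative-going peak,
    pass the negated waveform.
    """
    lo = max(round((expected_ms - search_ms) * fs / 1000), 0)
    hi = min(round((expected_ms + search_ms) * fs / 1000) + 1, len(signal))
    idx = max(range(lo, hi), key=lambda i: signal[i])
    return idx * 1000 / fs


def noise_induced_shift(quiet, noise, fs, expected_ms, search_ms=2.0):
    """Quiet - Noise latency difference in ms; negative = later peak in noise."""
    return (peak_latency_ms(quiet, fs, expected_ms, search_ms)
            - peak_latency_ms(noise, fs, expected_ms, search_ms))
```

A negative return value means the peak occurred later in noise, matching the Quiet - Noise sign convention described above.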

Onset Precision

A composite of the onset response measures for the 40 ms [da] was calculated by normalizing the V latency, A latency, and VA slope to norms for 8-12-year-old children. The speech-evoked wave V is analogous to the click-evoked wave V, although it is possibly generated by different mechanisms [68]. Wave A is the trough following wave V, and the VA slope is calculated from the latencies and amplitudes of the two peaks. Earlier responses and sharper (more negative) slopes indicate more precise brainstem timing, and these three metrics have repeatedly been shown to be the most fruitful in differentiating poor readers from good readers [10, 34, 38]. Here the normalized latencies and slope were averaged together to create the Onset Precision metric. See [10] for further information about these response characteristics.
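The Onset Precision composite can be sketched as an average of z-scores. This is a minimal sketch under assumptions: it takes the VA slope as the amplitude difference divided by the latency difference (μV/ms), uses simple z-scoring against caller-supplied (mean, SD) norms, and does not flip signs, so with these conventions earlier latencies and more negative slopes both pull the composite down. The normative values themselves are not given here and must come from the age-matched norms described in [10]; the names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class OnsetPeaks:
    v_latency_ms: float
    v_amplitude_uv: float
    a_latency_ms: float
    a_amplitude_uv: float


def va_slope(p):
    """Slope from wave V to trough A in uV/ms (more negative = sharper)."""
    return (p.a_amplitude_uv - p.v_amplitude_uv) / (p.a_latency_ms - p.v_latency_ms)


def onset_precision(p, norms):
    """Mean z-score of V latency, A latency and VA slope.

    norms maps each metric name to a (mean, sd) pair from an age-matched
    normative sample; the caller must supply it (no published values here).
    """
    values = {
        "v_latency": p.v_latency_ms,
        "a_latency": p.a_latency_ms,
        "va_slope": va_slope(p),
    }
    z = [(values[k] - norms[k][0]) / norms[k][1] for k in values]
    return sum(z) / len(z)
```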

2.4 Statistical Analyses

Pearson’s correlations were calculated using SPSS (SPSS Inc.), and variables were then included in structural equation models using LISREL 8.80 [69]. Although SEM is often thought of as a causal analysis, the present data are from a single time point and do not allow causal inferences beyond those allowed by multivariate regression. Missing values (n = 15 across the entire dataset) were replaced with the mean of the given measure. Model fit was evaluated with a chi-square test of whether the covariance matrix implied by the proposed model differed significantly from the observed covariance matrix; therefore, a non-significant chi-square statistic (p > 0.05) indicated a good fit. However, because chi-square statistics are strongly influenced by sample size, the ratio of the chi-square statistic to its degrees of freedom (χ2/df) and the root mean square error of approximation (RMSEA) are also reported as goodness-of-fit indices. Both are established indices of fit; for a good-fitting model, χ2/df should be approximately 1 and RMSEA should be less than 0.05 [53, 54]. Models were initiated by including all variables that were previously and/or presently correlated with the behavioral measures. Only the single best-fit models for reading and speech-in-noise perception are reported.
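The chi-square p-value, χ2/df, and RMSEA reported in the Results can be recomputed from χ2, its degrees of freedom, and N. The sketch below is an illustration, not LISREL's implementation: it uses the common RMSEA definition sqrt(max(χ2 − df, 0) / (df (N − 1))) (some programs use N instead of N − 1) and a closed-form chi-square tail probability that holds for integer df. The paper does not report df directly; the df in the usage line is inferred from the reported χ2 and χ2/df (8.35 / 1.04 ≈ 8) and is therefore an assumption.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X > x) for a chi-square variable with integer df.

    Uses the closed-form finite series available for integer degrees of freedom.
    """
    if df < 1 or int(df) != df:
        raise ValueError("df must be a positive integer")
    df = int(df)
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)^i / i!
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= half / i
            total += term
        return math.exp(-half) * total
    # Odd df: erfc(sqrt(x/2)) + exp(-x/2) * sum_{i=1}^{(df-1)/2} (x/2)^(i-1/2) / Gamma(i+1/2)
    total = math.erfc(math.sqrt(half))
    for i in range(1, (df - 1) // 2 + 1):
        total += math.exp(-half) * half ** (i - 0.5) / math.gamma(i + 0.5)
    return total


def fit_indices(chi2, df, n):
    """Chi-square p-value, chi2/df ratio and RMSEA (N - 1 convention)."""
    rmsea = math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))
    return {"p": chi2_sf(chi2, df), "chi2_df": chi2 / df, "rmsea": rmsea}


# Reading-cABR: chi2 = 8.35 with df inferred as 8 (assumption), N = 81.
print(fit_indices(8.35, 8, 81))
```

With N = 81 and df = 8, this gives p ≈ 0.40 and RMSEA ≈ 0.023, matching the values reported for Reading-cABR; an inferred df = 4 likewise gives values close to those reported for SIN-cABR and Reading-cABR(SIN).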

3. Results

3.1 Experiment 1

Reading and speech-in-noise perception had overlapping but largely independent neural correlates. In both cases, neural measures predicted upwards of 50% of the variance in the behavioral measures, highlighting the relationship between auditory function and reading, and also speech-in-noise perception.

3.1.1 Reading

Previous results showing that measures of subcortical differentiation of stop consonants, utilization of stimulus regularities, and onset response precision were correlated with measures of reading fluency were replicated (see Table 1). Additionally, the amplitude of the second harmonic (H2 Magnitude) was correlated with TOWRE scores.

Table 1.

Pearson’s correlations between measures of subcortical encoding of speech and reading and speech-in-noise measures. Relationships identified in previous studies are shaded.

| | Stimulus Regularities (Low Harmonics) | Stimulus Regularities (High Harmonics) | Consonant Differentiation | H2 Magnitude | Noise-Induced Timing Shift | Onset Precision |
|---|---|---|---|---|---|---|
| Speech in Noise | | | | | | |
| HINT Front | 0.29 ** | 0.20 ~ | 0.06 | 0.28 * | 0.45 ** | −0.11 |
| HINT Right | 0.19 ~ | −0.02 | −0.08 | 0.05 | −0.24 * | −0.02 |
| HINT Left | 0.21 ~ | −0.06 | 0.07 | 0.16 | 0.08 | 0.00 |
| Reading | | | | | | |
| TOWRE | 0.29 ** | 0.25 * | 0.20 ~ | 0.24 * | 0.06 | −0.27 * |
| TOSWRF | 0.31 ** | 0.23 * | 0.27 * | 0.01 | −0.14 | −0.17 |
| Word Attack | 0.08 | 0.12 | 0.18 | 0.16 | −0.04 | −0.10 |

~ p < 0.1; * p < 0.05; ** p < 0.01
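The significance markers in Tables 1 and 2 follow from r and the sample size. As a sketch, assuming two-tailed tests with N = 81 (df = 79, all participants after mean substitution), the p-value of a Pearson correlation can be obtained from t = r sqrt(df / (1 − r²)). The study used SPSS; this pure-Python stand-in integrates the Student t density numerically with Simpson's rule and is not the authors' procedure. Function names are hypothetical.

```python
import math


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))


def t_two_tailed_p(t, df, steps=2000):
    """Two-tailed p = 1 - 2 * integral of the t density from 0 to |t|.

    The integral uses composite Simpson's rule (steps must be even).
    """
    b = abs(t)
    if b == 0.0:
        return 1.0
    h = b / steps
    s = t_pdf(0.0, df) + t_pdf(b, df)
    for k in range(1, steps):
        s += (4 if k % 2 else 2) * t_pdf(k * h, df)
    return max(0.0, 1.0 - 2.0 * s * h / 3)


def pearson_p(r, n):
    """Two-tailed p-value for Pearson's r with n observations (df = n - 2)."""
    df = n - 2
    t = r * math.sqrt(df / (1 - r * r))
    return t_two_tailed_p(t, df)


def marker(p):
    """Significance markers used in Tables 1 and 2."""
    if p < 0.01:
        return "**"
    if p < 0.05:
        return "*"
    if p < 0.1:
        return "~"
    return ""


for r in (0.19, 0.28, 0.29, 0.45):
    print(r, marker(pearson_p(r, 81)))
```

With N = 81, r = 0.28 falls just above p = 0.01 (one star) and r = 0.29 just below it (two stars), consistent with the markers in Table 1.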

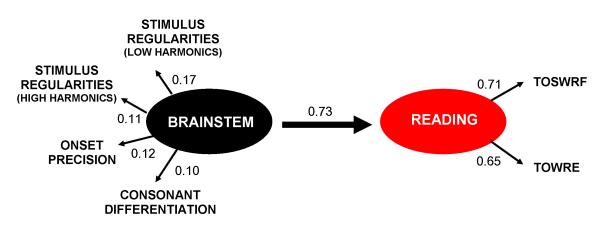

The best-fit model of reading and speech-evoked brainstem response measures (Reading-cABR) included variables that were previously reported to be related and were significantly correlated in the current population. The Reading construct variable was defined by TOSWRF and TOWRE scores, and the Brainstem construct variable was defined by Stimulus Regularities (Low Harmonics), Stimulus Regularities (High Harmonics), Consonant Differentiation, and Onset. Reading-cABR was an excellent fit to the data (χ2 = 8.35, χ2/df = 1.04, p = 0.4, RMSEA = 0.023) with Brainstem predicting 73% of the variance in Reading. All factors contributing to Reading and Brainstem construct variables were significant (see Figure 2).

Figure 2.

Best-fit model of Brainstem encoding of speech and Reading (Reading-cABR). Brainstem measures assessing the ability to utilize stimulus regularities, differentiation of stop consonants, and onset response robustness predicted 73% of the variance in measures of Reading. All relationships between observed and construct factors were significant and R2 values are indicated. (Overall fit: χ2 = 8.35, χ2/df = 1.04, p = 0.4, RMSEA = 0.023).

3.1.2 Speech-in-Noise Perception

As with Reading, previous results indicating that subcortical utilization of stimulus regularities (Low Harmonics) and degree of noise-induced timing delay were correlated with speech-in-noise perception were replicated. Additionally, utilization of stimulus regularities within the high harmonics range was marginally related to speech-in-noise perception (see Table 1).

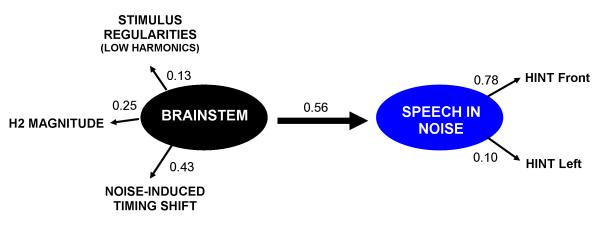

Model SIN-cABR included measures that were previously related to speech-in-noise perception and those that exhibited relationships in the current population, excluding variables that did not significantly contribute to the initial model. The Speech in Noise construct variable comprised HINT Front and HINT Left percentiles, while the Brainstem construct variable comprised Stimulus Regularities (Low Harmonics), H2 Magnitude, and Noise-Induced Timing Shift. Model SIN-cABR was a good fit to the data (χ2 = 5.03, χ2/df = 1.3, p = 0.28, RMSEA = 0.056), with Brainstem predicting 56% of the variance in Speech in Noise. As with Reading-cABR, all factors were significantly related to their construct variables (see Figure 3).

Figure 3.

Best-fit model of Brainstem encoding of speech and Speech in Noise perception (SIN-cABR). Brainstem measures assessing the ability to utilize stimulus regularities, faithfully encode lower harmonics of the stimulus, and resist timing shifts in background noise predicted 56% of the variance in Speech in Noise perception. All relationships between observed and construct factors were significant and R2 values are indicated. (Overall fit: χ2 = 5.03, χ2/df = 1.3, p = 0.28, RMSEA = 0.056).

3.1.3 Exclusivity of the models

In order to test the overlap between Brainstem measures related to Reading and those related to Speech in Noise, models were tested in which the best-fit neural measures were substituted for each other. If reading and speech-in-noise perception have largely distinct neural correlates, then the best-fit Brainstem measures for Speech in Noise would not produce a well fitting model for Reading and vice versa.

Model Reading-cABR(SIN) included TOWRE and TOSWRF scores as measures of Reading, and Stimulus Regularities (Low Harmonics), H2 Magnitude, and Noise-Induced Timing Shift as measures of Brainstem. This model proved to be a poor fit to the data (χ2 = 10.02, χ2/df = 2.5, p = 0.04, RMSEA = 0.14), and Brainstem predicted very little of the variance in Reading (1.5%). Model SIN-cABR(Reading) included HINT Front and HINT Left as measures of Speech in Noise, and Stimulus Regularities (Low Harmonics), Stimulus Regularities (High Harmonics), Consonant Differentiation, and Onset as measures of Brainstem. Interestingly, SIN-cABR(Reading) was an adequate fit to the data (χ2 = 5.75, χ2/df = 0.72, p = 0.68, RMSEA < 0.01), likely because of the correlations between Stimulus Regularities (Low Harmonics) and both measures of Speech in Noise (see Table 1); consistent with this, Stimulus Regularities (Low Harmonics) was the only variable significantly related to the Brainstem construct variable. When the Stimulus Regularities (Low Harmonics) measure was removed, the model no longer captured any meaningful relationship: although the global fit statistics did not indicate misfit (χ2 = 0.37, χ2/df = 0.09, p = 0.99, RMSEA < 0.01), the relationships among all variables were non-significant, and Brainstem predicted only 2.9% of the variance in Speech in Noise.

3.2 Interim Discussion

Reading and speech-in-noise perception are known to involve a combination of sensory and cognitive factors. In Experiment 1, we focused primarily on the sensory factors, as defined by various cABR measures. Auditory brainstem function contributes significantly to the variance for both of these communication skills. Nevertheless, distinct neural indices of reading and speech-in-noise perception were found using structural equation modeling. Enhancement of subcortical encoding of lower harmonics when presented with a predictable stimulus was common to both Reading and Speech in Noise models. The other neural measures were found to be distinctly related to either Reading or Speech in Noise, but not to both. Importantly, when the neural correlates of Reading and Speech in Noise variables were switched, the resulting models were inadequate representations of the data. These analyses support our hypothesis that reading and speech-in-noise perception are related to distinct neural mechanisms. Deficits in language-learning, reading, and speech-in-noise perception have been linked to impaired subcortical encoding of speech and speech-like stimuli [10-13, 18], and although poor readers are often more adversely affected by increasing background noise [27-30], the present study suggests that in children with a wide range of reading ability, reading and speech-in-noise perception have largely distinct neural mechanisms involving subcortical processing of sound.

3.3 Experiment 2

Relationships between auditory function and reading were only weakly mediated by phonological processing and working memory, and no such mediation was found for speech-in-noise perception. The robustness of the original relationships between subcortical auditory function and behavior highlights the contribution of primary sensory function to communication.

3.3.1 Reading

Phonological processing skills and working memory are two cognitive abilities that have been linked to reading and reading impairments [45-50]. To determine the extent to which relationships between cABR measures and reading are mediated by phonological processing or working memory, behavioral measures of these abilities were added to the model. As expected, measures of reading were highly correlated with measures of phonological processing and working memory in the present study (see Table 2).

Table 2.

Pearson’s correlations among measures of reading, speech-in-noise perception, and cognitive factors.

| | Phonological Awareness | Phonological Memory | Memory for Digits | Digits Reversed |
|---|---|---|---|---|
| Speech in Noise | | | | |
| HINT Front | 0.04 | 0.206 ~ | 0.146 | 0.08 |
| HINT Left | 0.197 ~ | 0.17 | 0.14 | 0.17 |
| Reading | | | | |
| TOWRE | 0.441 ** | 0.552 ** | 0.423 ** | 0.322 ** |
| TOSWRF | 0.436 ** | 0.389 ** | 0.456 ** | 0.349 ** |

~ p < 0.1; * p < 0.05; ** p < 0.01

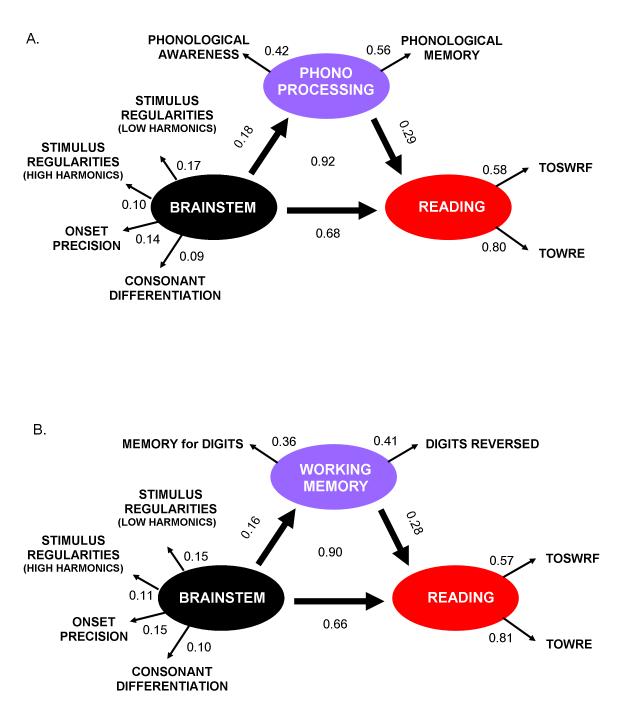

Model Reading-Phonological Processing included the CTOPP Phonological Awareness and Phonological Memory cluster scores in the Phonological Processing construct variable, which was added to the best-fit model for Reading, Reading-cABR. This model was an adequate fit to the data (χ2 = 15.10, χ2/df = 0.89, p = 0.59, RMSEA < 0.01) and Phonological Processing and Brainstem both contributed significantly to the prediction of 92% of the variance in Reading. Individually, Brainstem predicted 69% of the variance in Reading, similar to the amount accounted for by Brainstem without Phonological Processing included in the model (see Figure 4A).

Figure 4.

Relationships between Brainstem encoding of speech and Reading remain robust with the inclusion of Phonological Processing and Working Memory. A. Phonological processing skills contributed to the prediction of variance in Reading, but the relationship between Brainstem and Reading remained significant and robust (Overall fit: χ2 = 15.10, χ2/df = 0.89, p = 0.59, RMSEA < 0.01). Phonological Processing is abbreviated as Phono Processing due to space constraints. B. Working memory skills also contributed to the prediction of variance in Reading, but the relationship between Brainstem and Reading remained significant and robust (Overall fit: χ2 = 12.21, χ2/df = 0.72, p = 0.79, RMSEA < 0.01). All relationships between observed and construct factors were significant in both models, and R2 values are indicated. Note: A model including both cognitive factors was attempted but could not be fitted. SEM methods fail when the number of elements of the model meets or exceeds the number of participants in the data set because each element is uniquely defined or under-defined [53].

In Model Reading-Memory, CTOPP Memory for Digits and WJ-III Digits Reversed scores were included in the Working Memory construct variable, which was added to Reading-cABR. Here, Reading-Memory was also an adequate fit (χ2 = 12.21, χ2/df = 0.72, p = 0.79, RMSEA < 0.01), with both Brainstem and Working Memory significantly contributing to predict 90% of the variance in Reading. As in the previous model, Brainstem still independently predicted 66% of the variance in Reading (see Figure 4B).

In order to determine the joint impact of Working Memory and Phonological Processing on Reading, the two models were combined. Despite the large sample size, the combined model could not be evaluated because too many parameters were included for the data set. SEM methods fail when the number of elements of the model meets or exceeds the number of participants in the data set because each element is uniquely defined or under-defined [53]. Although this joint model could not be evaluated, the individual models suggest that while cognitive factors such as phonological awareness and working memory contribute to reading ability, subcortical measures of neural encoding still significantly impact reading independently.

3.3.2 Speech-in-Noise Perception

As with reading, speech-in-noise perception is often linked to working memory skills, and the concurrence of speech-in-noise difficulties and reading impairments in children suggests that phonological processing may also be related to speech-in-noise perception [27-29, 51]. The speech-in-noise measures in the present study were only marginally correlated with phonological processing measures and were not significantly correlated with working memory measures (see Table 2).

In Model SIN-Phonological Processing, the Phonological Processing construct variable was added to the best-fit model for Speech in Noise (SIN-cABR). Model SIN-Phonological Processing was an adequate fit to the data (χ² = 9.40, χ²/df = 0.85, p = 0.59, RMSEA < 0.01), but Phonological Processing did not significantly predict any of the variance in Speech in Noise. The relationship between Brainstem and Speech in Noise remained significant, with Brainstem predicting 65% of the variance in Speech in Noise.

Model SIN-Working Memory added the Working Memory construct variable described above to the best-fit SIN-cABR model. This model was also an adequate fit to the data (χ² = 10.06, χ²/df = 0.91, p = 0.53, RMSEA < 0.01). Like Phonological Processing, Working Memory did not significantly predict variance in Speech in Noise, while the direct path between the Brainstem and Speech in Noise construct variables remained significant and robust, with Brainstem accounting for 69% of the variance in Speech in Noise.
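
How a nonsignificant second predictor leaves the Brainstem share of variance largely intact can be illustrated with the standardized two-predictor regression identity. This is a minimal sketch that treats the latent constructs as if their correlations were known; all correlations below are hypothetical placeholders, not estimates from this study.

```python
def standardized_paths(r1y, r2y, r12):
    """Standardized coefficients for y regressed on two correlated predictors."""
    denom = 1.0 - r12 ** 2
    b1 = (r1y - r2y * r12) / denom
    b2 = (r2y - r1y * r12) / denom
    return b1, b2


def r_squared(b1, b2, r12):
    """Total variance in y explained by both predictors (standardized units)."""
    return b1 ** 2 + b2 ** 2 + 2.0 * b1 * b2 * r12


# Hypothetical latent correlations: Brainstem-SIN, Memory-SIN, Brainstem-Memory.
r_bs, r_wm, r_bw = 0.83, 0.20, 0.20
b_bs, b_wm = standardized_paths(r_bs, r_wm, r_bw)
print(f"Brainstem path = {b_bs:.2f}, Working Memory path = {b_wm:.2f}")
print(f"total R^2 = {r_squared(b_bs, b_wm, r_bw):.2f}, Brainstem alone = {r_bs ** 2:.2f}")
```

With a weak, nonsignificant second path, the total R² barely exceeds the variance the first predictor explains on its own, which is the pattern described for the Speech in Noise models.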

The nonsignificant contributions of the Phonological Processing and Working Memory variables to these models suggest that the relationships between subcortical encoding and speech-in-noise perception found in the current study are only weakly mediated by cognitive factors.

4. Discussion

Brainstem encoding of speech is a significant factor contributing to both reading and speech perception in noise in school-aged children. Nevertheless, these skills appear to have substantially distinct neural indices. The best-fit models for reading and speech-in-noise perception shared only one measure of subcortical speech encoding: the ability to utilize stimulus regularities, particularly in the encoding of the lower harmonics, when speech is presented in a predictable rather than a variable context. This measure, Stimulus Regularities (Low Harmonics), has been found to correlate with both speech-in-noise perception and reading ability, with poor readers and poor speech-in-noise perceivers showing no enhancement with repetition [13, 70]. Both reading and speech-in-noise perception may depend on the ability of the nervous system to track repeating elements in speech as a means of surmounting the disruptive effects of internal and external noise [27, 29, 33, 71-73]. The adaptation of cortical and subcortical auditory neurons to statistical regularities and behaviorally relevant stimuli is supported by numerous animal studies [74-76]. Language learning, which is thought to rely on tracking statistically predictable elements in spoken language [77, 78], would also be impaired by an inability to represent regularities in speech signals. Supporting this hypothesis, children with language-learning impairments appear to be unable to use statistical regularities when learning a novel language [32]. An inability of the nervous system to identify and track important, repeating elements in speech could contribute to impairments in the phonological representation of speech sounds during language learning, in corticofugal modulation of subcortical encoding, and in tracking a speaker’s voice in background noise (discussed in further detail below).
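
The Stimulus Regularities measures compare spectral magnitudes of the response to the same syllable presented in a predictable versus a variable context. The sketch below illustrates that comparison under stated assumptions: a hypothetical sampling rate, fundamental frequency, and harmonic band, with synthetic sinusoidal "responses" standing in for recorded cABRs. The study's actual analysis windows, band limits, and normalization are not reproduced here.

```python
import cmath
import math


def amplitude_at(signal, fs, freq):
    """Amplitude of one frequency component via a single-bin DFT."""
    n = len(signal)
    acc = sum(x * cmath.exp(-2j * math.pi * freq * k / fs) for k, x in enumerate(signal))
    return 2.0 * abs(acc) / n


def mean_harmonic_amplitude(signal, fs, f0, harmonics):
    """Average amplitude over the listed harmonic numbers of f0."""
    return sum(amplitude_at(signal, fs, h * f0) for h in harmonics) / len(harmonics)


def regularity_enhancement(predictable, variable, fs, f0, harmonics):
    """Positive when the harmonics are stronger in the predictable context."""
    return (mean_harmonic_amplitude(predictable, fs, f0, harmonics)
            - mean_harmonic_amplitude(variable, fs, f0, harmonics))


FS, F0 = 20000, 100   # hypothetical sampling rate (Hz) and fundamental (Hz)
N_SAMPLES = 1000      # 50 ms window, an integer number of F0 cycles


def synthetic_response(amplitudes):
    """Sum of cosines at F0, 2*F0, ... with the given amplitudes (illustration only)."""
    return [sum(a * math.cos(2 * math.pi * (i + 1) * F0 * k / FS)
                for i, a in enumerate(amplitudes)) for k in range(N_SAMPLES)]


predictable = synthetic_response([0.50, 0.40, 0.30])
variable = synthetic_response([0.40, 0.30, 0.25])
low_harmonics = [1, 2, 3]  # hypothetical "low harmonic" band
enh = regularity_enhancement(predictable, variable, FS, F0, low_harmonics)
print(f"enhancement = {enh:.4f}")
```

Choosing a window that spans a whole number of F0 cycles places every harmonic exactly on a DFT bin, so the synthetic amplitudes are recovered without spectral leakage; recorded responses would normally be windowed and the band averaged over several bins.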

4.1 Reading—Onset Precision, Consonant Differentiation, and Stimulus Regularities (High Harmonics)

cABR measures that were exclusively predictive of reading scores were those corresponding to response onset synchrony, consonant differentiation, and enhanced representation of higher harmonics through utilization of stimulus regularities. Subcortical measures of temporal and harmonic encoding have previously been linked to reading ability [10-12], with poor readers showing later and less robust responses than good readers. While conventional click-evoked brainstem responses in these children are normal, the precision with which stop consonant onsets are encoded is disrupted, possibly due to backward masking from the subsequent vowel in the syllable [37]. Poor neural synchrony would also impair the temporal encoding of spectral features in a frequency-specific fashion, resulting in the poor neural and behavioral differentiation of stop consonants seen in children with language-learning or reading impairments [11, 40, 41]. Subcortical encoding deficits in poor readers have been found for the temporal and harmonic elements of the response, which reflect the time-varying phonetic content of the speech signal [79], but not for elements corresponding to the pitch of the stimulus [10, 79]. In the present study, the enhancement of both lower and higher harmonics with stimulus repetition predicted reading scores, which is consistent with the pervasive deficit in statistical learning and environmental adaptability discussed above. However, higher-harmonic enhancement, which corresponds to the dynamic first formant of the stimulus, contributed significantly only to the prediction of reading ability, replicating previous findings of impaired harmonic encoding in poor readers [10, 34]. Taken as a whole, these studies suggest that the elements of the response that represent the phonetic, and ultimately phonemic, content of the speech stimulus are those that relate to reading, and the present study confirms this.

Etiological theories of dyslexia suggest that poorly formed phonological representations, or an impaired ability to access and manipulate those representations, contribute to reading impairments [44-50]. Additionally, attention deficits, which were not assessed in the current study, are frequently comorbid with reading impairments and may affect reading skill [80, 81]. Although measures of phonological processing and working memory were all highly correlated with measures of reading in the current study, brainstem measures still predicted unique variance in reading scores. Brainstem measures did predict a small amount of variance in these cognitive measures, which suggests that the relationship between neural encoding and reading is at least partially mediated by cognitive factors. The direct link between subcortical encoding of speech and reading suggests that neural asynchrony is associated with impaired reading fluency, in accordance with findings that dyslexic children are more adversely affected than their peers as task difficulty increases [27, 29, 72]. This result also supports reports that impaired neural and behavioral measures of auditory processing are risk factors for subsequent reading and language impairments [28, 82, 83]. These findings also highlight the potential utility of electrophysiological methods for identifying children who may be at risk for reading impairments, assessing children with reading deficits more thoroughly, informing the choice of intervention, and monitoring training-related gains. Electrophysiological responses from the auditory brainstem are objective and collected passively, eliminating behavioral task demands such as attention (reviewed in [84]). Additionally, brainstem responses to speech are known to be malleable with short-term auditory training [16, 85] and with lifelong language and musical experience [86, 87], and may indicate which children are most likely to benefit from training [38].
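
The partial-mediation interpretation rests on the standard path-analytic decomposition of a total effect into direct and indirect components. The sketch below covers a single mediator and uses hypothetical standardized path values (Brainstem to a cognitive construct, that construct to Reading, and the direct Brainstem path) that are not estimates from this study.

```python
def decompose_effect(a, b, c_prime):
    """Split a total effect into direct and indirect parts for one mediator.

    a: predictor -> mediator path; b: mediator -> outcome path;
    c_prime: direct predictor -> outcome path (all standardized).
    """
    indirect = a * b
    total = c_prime + indirect
    proportion = indirect / total if total != 0 else float("nan")
    return {"direct": c_prime, "indirect": indirect, "total": total,
            "proportion_mediated": proportion}


# Hypothetical paths: a small Brainstem -> Phono Processing effect, a moderate
# Phono Processing -> Reading effect, and a large direct Brainstem -> Reading effect.
result = decompose_effect(a=0.30, b=0.40, c_prime=0.70)
for key, value in result.items():
    print(f"{key}: {value:.3f}")
```

A small indirect component alongside a large direct path corresponds to partial mediation: the cognitive construct carries some, but not most, of the association between brainstem encoding and reading.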

4.2 Speech in Noise—H2 Magnitude and Noise-Induced Timing Shift

Measures related exclusively to speech-in-noise perception included the encoding of lower harmonics of the speech signal and robustness of response timing in the presence of background noise. Along with utilization of stimulus regularities, these measures likely relate to the ability to robustly represent and track target elements in speech when presented in noise.

Successful speech-in-noise perception requires the ability to segregate the target voice from ongoing background noise [42]. Vocal pitch is one cue that can be used to track a target voice over time [42], and numerous studies have shown that increasing the difference in vocal pitch between competing voices improves their discrimination and their detection in background noise [43, 88]. As with response enhancement under predictable stimulus presentation, robust representation of the lower harmonics likely supports better vocal pitch tracking in background noise.

Noise is known to delay response timing, indicating reduced neural synchrony when encoding degraded stimuli [67]. The resilience of the brainstem to the detrimental effects of background noise, as measured by the extent of the timing delay of response peaks within the formant transition, was related to speech-in-noise perception. Like pitch, temporal processing is important for sound-stream segregation [52], and reduced cABR timing delays in noise have been related to better speech-in-noise perception in adults [20]. Taken together, the measures relating to speech-in-noise perception were those that specifically contribute to parsing a complex auditory scene into trackable elements.
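
The noise-induced timing shift compares peak latencies of the response in quiet and in noise within the formant transition. The sketch below assumes hypothetical analysis windows, sampling rate, and peak polarity, and uses synthetic Gaussian-shaped peaks instead of recorded responses; the study's peak-picking procedure may differ.

```python
import math


def peak_latency_ms(signal, fs, start_ms, end_ms, polarity=1):
    """Latency (ms) of the most extreme sample of the given polarity in [start, end)."""
    i0 = int(round(start_ms * fs / 1000))
    i1 = int(round(end_ms * fs / 1000))
    idx = max(range(i0, i1), key=lambda i: polarity * signal[i])
    return idx * 1000.0 / fs


def mean_timing_shift(quiet, noise, fs, windows, polarity=1):
    """Average latency delay (noise minus quiet) across analysis windows."""
    shifts = [peak_latency_ms(noise, fs, a, b, polarity)
              - peak_latency_ms(quiet, fs, a, b, polarity) for a, b in windows]
    return sum(shifts) / len(shifts)


FS = 20000                                    # hypothetical sampling rate (Hz)
WINDOWS = [(20, 30), (30, 40), (40, 50)]      # hypothetical windows (ms)


def synthetic_response(latencies_ms, dur_ms=60, width_ms=1.0):
    """Gaussian-shaped positive peaks at the given latencies (illustration only)."""
    n = int(dur_ms * FS / 1000)
    return [sum(math.exp(-0.5 * ((k * 1000 / FS - lat) / width_ms) ** 2)
                for lat in latencies_ms) for k in range(n)]


quiet = synthetic_response([24.0, 34.0, 44.0])
noisy = synthetic_response([24.5, 34.5, 44.5])  # each peak delayed by 0.5 ms
print(f"mean noise-induced shift = {mean_timing_shift(quiet, noisy, FS, WINDOWS):.2f} ms")
```

For negative-going peaks, passing `polarity=-1` selects the trough in each window instead of the maximum.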

The current study found no relationship between speech-in-noise perception and either phonological awareness or working memory. Children with reading impairments have often been noted to have speech-in-noise deficits [27, 29, 30], and speech-in-noise perception has been directly correlated with phonological processing [28, 29]. Although the current results appear to contradict these relationships, they are consistent with previous studies that found that speech-in-noise perception and phonological processing scores did not significantly predict one another in typically developing children or when auditory processing ability was taken into account [57, 89]. It is also possible that the speech-in-noise task employed here was not complex or life-like enough to elicit the speech-in-noise impairments documented for reading-impaired children in the classroom. Working memory has been linked to speech-in-noise perception in adults [51], and the absence of such a relationship in the present study may likewise reflect the speech-in-noise measure employed. The Hearing in Noise Test presents simple sentences in speech-shaped noise and likely lacks the cognitive demands of the Quick Speech-in-Noise test, which uses long, complex sentences with rich vocabulary in multi-talker babble and was the measure with which a robust relationship was found in the previous study [51]. Thus, working memory may still be related to speech-in-noise perception in children when more difficult tasks are used, such as those with pseudo-words, more complex sentences, or background noise more similar to the target.
Recent work also suggests that attention and intrasubject variability are predictive of speech-in-noise and communication skills [73]. Although attention was not assessed here, subcortical measures would likely still predict significant variance in speech-in-noise perception with attention taken into account.

4.3 Conclusion

Reading and speech-in-noise perception have overlapping but largely distinct subcortical neural correlates in school-aged children. These neural indices predict more than 50% of the variance in their respective behavioral measures. While phonological processing and working memory are highly related to reading, the contribution of neural measures remains unique and robust when these behavioral measures are added to the model. These results indicate that subcortical encoding of sound offers an easily accessible and non-invasive means of examining the biological bases of reading and speech-in-noise perception. Objective neural markers may be used to assess and monitor children with difficulties in these listening and learning skills, and to identify those at risk for impaired reading or speech-in-noise perception, independent of the attentional and cognitive demands of behavioral assessments.

Acknowledgements

This work was supported by National Institutes of Health (R01DC01510) and the Hugh Knowles Center at Northwestern University. We thank Erika Skoe and Trent Nicol for their assistance in stimulus design and data reduction techniques, Samira Anderson, Judy Song, and Trent Nicol for their careful review of the manuscript, and the children and their families for participating.

References

- [1] Shaywitz BA, Shaywitz SE, Pugh KR, Mencl WE, Fulbright RK, Skudlarski P, et al. Disruption of posterior brain systems for reading in children with developmental dyslexia. Biological Psychiatry. 2002;52:101–10. doi: 10.1016/s0006-3223(02)01365-3.

- [2] Pugh KR, Mencl WE, Jenner AR, Katz L, Frost SJ, Lee JR, et al. Functional neuroimaging studies of reading and reading disability (developmental dyslexia). Mental Retardation and Developmental Disabilities Research Reviews. 2000;6:207–13. doi: 10.1002/1098-2779(2000)6:3<207::AID-MRDD8>3.0.CO;2-P.

- [3] Cao F, Bitan T, Chou T-L, Burman DD, Booth JR. Deficient orthographic and phonological representations in children with dyslexia revealed by brain activation patterns. Journal of Child Psychology and Psychiatry. 2006;47:1041–50. doi: 10.1111/j.1469-7610.2006.01684.x.

- [4] Poldrack RA. A structural basis for developmental dyslexia: Evidence from diffusion tensor imaging. In: Wolf M, editor. Dyslexia, Fluency, and the Brain. Pro-Ed Inc; Austin, TX: 2001. pp. 213–33.

- [5] Abrams DA, Nicol T, Zecker SG, Kraus N. Abnormal cortical processing of the syllable rate of speech in poor readers. Journal of Neuroscience. 2009;29:7686–93. doi: 10.1523/JNEUROSCI.5242-08.2009.

- [6] Bradlow AR, Kraus N, Nicol T, McGee T, Cunningham J, Zecker SG. Effects of lengthened formant transition duration on discrimination and neural representation of synthetic CV syllables by normal and learning-disabled children. Journal of the Acoustical Society of America. 1999;106:2086–96. doi: 10.1121/1.427953.

- [7] Banai K, Nicol T, Zecker SG, Kraus N. Brainstem timing: Implications for cortical processing and literacy. Journal of Neuroscience. 2005;25:9850–7. doi: 10.1523/JNEUROSCI.2373-05.2005.

- [8] Kraus N, McGee TJ, Carrell TD, Zecker SG, Nicol T, Koch DB. Auditory neurophysiologic responses and discrimination deficits in children with learning problems. Science. 1996;273:971–3. doi: 10.1126/science.273.5277.971.

- [9] Nagarajan SS, Mahncke H, Salz T, Tallal P, Roberts T, Merzenich MM. Cortical auditory signal processing in poor readers. Proceedings of the National Academy of Sciences. 1999;96:6483–8. doi: 10.1073/pnas.96.11.6483.

- [10] Banai K, Hornickel J, Skoe E, Nicol T, Zecker SG, Kraus N. Reading and subcortical auditory function. Cerebral Cortex. 2009;19:2699–707. doi: 10.1093/cercor/bhp024.

- [11] Hornickel J, Skoe E, Nicol T, Zecker SG, Kraus N. Subcortical differentiation of stop consonants relates to reading and speech-in-noise perception. Proceedings of the National Academy of Sciences. 2009;106:13022–7. doi: 10.1073/pnas.0901123106.

- [12] Basu M, Krishnan A, Weber-Fox C. Brainstem correlates of temporal auditory processing in children with specific language impairment. Developmental Science. 2010;13:77–91. doi: 10.1111/j.1467-7687.2009.00849.x.

- [13] Chandrasekaran B, Hornickel J, Skoe E, Nicol T, Kraus N. Context-dependent encoding in the human auditory brainstem relates to hearing speech in noise: Implications for developmental dyslexia. Neuron. 2009;64:311–9. doi: 10.1016/j.neuron.2009.10.006.

- [14] Temple E, Deutsch GK, Poldrack RA, Miller SL, Tallal P, Merzenich MM, et al. Neural deficits in children with dyslexia ameliorated by behavioral remediation: Evidence from functional MRI. Proceedings of the National Academy of Sciences. 2003;100:2860–5. doi: 10.1073/pnas.0030098100.

- [15] Shaywitz BA, Shaywitz SE, Blachman B, Pugh KR, Fulbright RK, Skudlarski P, et al. Development of left occipitotemporal systems for skilled reading in children after a phonologically-based intervention. Biological Psychiatry. 2004;55:926–33. doi: 10.1016/j.biopsych.2003.12.019.

- [16] Russo N, Nicol T, Zecker SG, Hayes E, Kraus N. Auditory training improves neural timing in the human brainstem. Behavioural Brain Research. 2005;156:95–103. doi: 10.1016/j.bbr.2004.05.012.

- [17] Gaab N, Gabrieli JDE, Deutsch GK, Tallal P, Temple E. Neural correlates of rapid auditory processing are disrupted in children with developmental dyslexia and ameliorated with training: An fMRI study. Restorative Neurology and Neuroscience. 2007;25:295–310.

- [18] Anderson S, Skoe E, Chandrasekaran B, Kraus N. Neural timing is linked to speech perception in noise. Journal of Neuroscience. 2010;30:4922–6. doi: 10.1523/JNEUROSCI.0107-10.2010.

- [19] Anderson S, Skoe E, Chandrasekaran B, Zecker SG, Kraus N. Brainstem correlates of speech-in-noise perception in children. Hearing Research. In press. doi: 10.1016/j.heares.2010.08.001.

- [20] Parbery-Clark A, Skoe E, Kraus N. Musical experience limits the degradative effects of background noise on the neural processing of sound. Journal of Neuroscience. 2009;29:14100–7. doi: 10.1523/JNEUROSCI.3256-09.2009.

- [21] Song JH, Skoe E, Banai K, Kraus N. Perception of speech in noise: Neural correlates. Journal of Cognitive Neuroscience. In press. doi: 10.1162/jocn.2010.21556.

- [22] Anderson S, Chandrasekaran B, Yi H, Kraus N. Cortical-evoked potentials reflect speech-in-noise perception in children. European Journal of Neuroscience. In press. doi: 10.1111/j.1460-9568.2010.07409.x.

- [23] Wong PCM, Uppunda AK, Parrish TB, Dhar S. Cortical mechanisms of speech perception in noise. Journal of Speech, Language, and Hearing Research. 2008;51:1026–41. doi: 10.1044/1092-4388(2008/075).

- [24] Kumar UA, Vanaja CS. Functioning of olivocochlear bundle and speech perception in noise. Ear and Hearing. 2004;25:142–6. doi: 10.1097/01.aud.0000120363.56591.e6.

- [25] Muchnik C, Roth DA-E, Othman-Jebara R, Putter-Katz H, Shabtai EL, Hildesheimer M. Reduced medial olivocochlear bundle system function in children with auditory processing disorders. Audiology & Neuro-otology. 2004;9:107–14. doi: 10.1159/000076001.

- [26] deBoer J, Thornton ARD. Neural correlates of perceptual learning in the auditory brainstem: Efferent activity predicts and reflects improvement at a speech-in-noise discrimination task. Journal of Neuroscience. 2008;28:4929–37. doi: 10.1523/JNEUROSCI.0902-08.2008.

- [27] Bradlow AR, Kraus N, Hayes E. Speaking clearly for children with learning disabilities: Sentence perception in noise. Journal of Speech, Language, and Hearing Research. 2003;46:80–97. doi: 10.1044/1092-4388(2003/007).

- [28] Boets B, Ghesquiere P, van Wieringen A, Wouters J. Speech perception in preschoolers at family risk for dyslexia: Relations with low-level auditory processing and phonological ability. Brain and Language. 2007;101:19–30. doi: 10.1016/j.bandl.2006.06.009.

- [29] Ziegler JC, Pech-Georgel C, George F, Lorenzi C. Speech-perception-in-noise deficits in dyslexia. Developmental Science. 2009;12:732–45. doi: 10.1111/j.1467-7687.2009.00817.x.

- [30] Brady S, Shankweiler D, Mann V. Speech perception and memory coding in relation to reading ability. Journal of Experimental Child Psychology. 1983;35:345–67. doi: 10.1016/0022-0965(83)90087-5.

- [31] Helenius P, Uutela K, Hari R. Auditory stream segregation in dyslexic adults. Brain. 1999;122:907–13. doi: 10.1093/brain/122.5.907.

- [32] Evans JL, Saffran JR, Robe-Torres K. Statistical learning in children with specific language impairment. Journal of Speech, Language, and Hearing Research. 2009;52:312–35. doi: 10.1044/1092-4388(2009/07-0189).

- [33] Nahum M, Nelken I, Ahissar M. Low-level information and high-level perception: The case of speech in noise. PLoS Biology. 2008;6:978–91. doi: 10.1371/journal.pbio.0060126.

- [34] Wible B, Nicol T, Kraus N. Atypical brainstem representation of onset and formant structure of speech sounds in children with language-based learning problems. Biological Psychology. 2004;67:299–317. doi: 10.1016/j.biopsycho.2004.02.002.

- [35] Delattre PC, Liberman AM, Cooper FS. Acoustic loci and transitional cues for consonants. Journal of the Acoustical Society of America. 1955;27:769–73.

- [36] Summerfield Q, Haggard M. On the dissociation of spectral and temporal cues to the voicing distinction in initial stop consonants. Journal of the Acoustical Society of America. 1977;62:436–48. doi: 10.1121/1.381544.

- [37] Ohde RN, Sharf DJ. Order effect of acoustic segments of VC and CV syllables on stop and vowel identification. Journal of Speech and Hearing Research. 1977;20:543–54. doi: 10.1044/jshr.2003.543.

- [38] King C, Warrier CM, Hayes E, Kraus N. Deficits in auditory brainstem encoding of speech sounds in children with learning problems. Neuroscience Letters. 2002;319:111–5. doi: 10.1016/s0304-3940(01)02556-3.

- [39] Miller GA, Nicely PE. An analysis of perceptual confusions among some English consonants. Journal of the Acoustical Society of America. 1955;27:338–52.

- [40] Tallal P, Piercy M. Developmental aphasia: Rate of auditory processing and selective impairment of consonant perception. Neuropsychologia. 1974;12:83–93. doi: 10.1016/0028-3932(74)90030-x.

- [41] Tallal P, Piercy M. Developmental aphasia: The perception of brief vowels and extended stop consonants. Neuropsychologia. 1975;13:69–74. doi: 10.1016/0028-3932(75)90049-4.

- [42] Bregman AS. Auditory Scene Analysis: The Perceptual Organization of Sound. MIT Press; Cambridge, MA: 1990.

- [43] Darwin CJ, Hukin RW. Effectiveness of spatial cues, prosody, and talker characteristics in selective attention. Journal of the Acoustical Society of America. 2000;107:970–7. doi: 10.1121/1.428278.

- [44] Shapiro B, Church RP, Lewis MEB. Specific Learning Disabilities. In: Batshaw ML, Pellegrino L, Roizen NJ, editors. Children with Disabilities. 6th ed. Paul H. Brookes Publishing Co.; Baltimore, Maryland: 2007. pp. 367–85.

- [45] Gibbs S. Phonological awareness: An investigation into the developmental role of vocabulary and short-term memory. Educational Psychology. 2004;24:13–25.

- [46] Ramus F, Szenkovits G. What phonological deficit? The Quarterly Journal of Experimental Psychology. 2008;61:129–41. doi: 10.1080/17470210701508822.

- [47] Richardson U, Thomson JM, Scott SK, Goswami U. Auditory processing skills and phonological representation in dyslexic children. Dyslexia. 2004;10:215–33. doi: 10.1002/dys.276.

- [48] Paul I, Bott C, Heim S, Wienbruch C, Elbert TR. Phonological but not auditory discrimination is impaired in dyslexia. European Journal of Neuroscience. 2006;24:2945–53. doi: 10.1111/j.1460-9568.2006.05153.x.

- [49] Gathercole SE, Alloway TP, Willis C, Adams A-M. Working memory in children with reading disabilities. Journal of Experimental Child Psychology. 2006;93:265–81. doi: 10.1016/j.jecp.2005.08.003.

- [50] Alloway TP. Working memory, but not IQ, predicts subsequent learning in children with learning difficulties. European Journal of Psychological Assessment. 2009;25:92–8.

- [51] Parbery-Clark A, Skoe E, Lam C, Kraus N. Musician enhancement for speech in noise. Ear and Hearing. 2009;30:653–61. doi: 10.1097/AUD.0b013e3181b412e9.

- [52] Shinn-Cunningham BG, Best V. Selective attention in normal and impaired hearing. Trends in Amplification. 2008;12:283–99. doi: 10.1177/1084713808325306.

- [53] Blunch NJ. Introduction to Structural Equation Modeling Using SPSS and AMOS. SAGE Publications Ltd; London, England: 2008.

- [54] Ullman JB. Structural Equation Modeling. In: Tabachnick BG, Fidell LS, editors. Using Multivariate Statistics. 5th ed. Pearson Education Inc.; Boston, MA: 2007. pp. 676–80.

- [55] Watson BU, Miller TK. Auditory perception, phonological processing, and reading ability/disability. Journal of Speech and Hearing Research. 1993;36:850–63. doi: 10.1044/jshr.3604.850.

- [56] Schulte-Korne G, Deimel W, Bartling J, Remschmidt H. The role of phonological awareness, speech perception, and auditory temporal processing for dyslexia. European Child & Adolescent Psychiatry. 1999;8:III/28–III/34. doi: 10.1007/pl00010690.

- [57] Boets B, Wouters J, Van Wieringen A, De Smedt B, Ghesquiere P. Modelling relations between sensory processing, speech perception, orthographic and phonological ability, and literacy achievement. Brain and Language. 2008;106:29–40. doi: 10.1016/j.bandl.2007.12.004.

- [58] Woerner C, Overstreet K. Wechsler Abbreviated Scale of Intelligence (WASI). The Psychological Corporation; San Antonio, TX: 1999.

- [59] Woodcock RW, McGrew KS, Mather N. Woodcock-Johnson III Tests of Achievement. Riverside Publishing; Itasca, IL: 2001.

- [60] Mather N, Hammill DD, Allen EA, Roberts R. Test of Silent Word Reading Fluency (TOSWRF). Pro-Ed; Austin, TX: 2004.

- [61] Torgesen JK, Wagner RK, Rashotte CA. Test of Word Reading Efficiency (TOWRE). Pro-Ed; Austin, TX: 1999.

- [62] Wagner RK, Torgesen JK, Rashotte CA. Comprehensive Test of Phonological Processing (CTOPP). Pro-Ed; Austin, TX: 1999.

- [63] Woodcock RW, McGrew KS, Mather N. Woodcock-Johnson III Tests of Cognitive Abilities. Riverside Publishing; Itasca, IL: 2001.

- [64] Klatt DH. Software for a cascade/parallel formant synthesizer. Journal of the Acoustical Society of America. 1980;67:971–95.

- [65] Gorga M, Abbas P, Worthington D. Stimulus calibration in ABR measurements. In: Jacobsen J, editor. The Auditory Brainstem Response. College-Hill Press; San Diego: 1985. pp. 49–62.

- [66] Meddis R, O’Mard L. A unitary model of pitch perception. Journal of the Acoustical Society of America. 1997;102:1811–20. doi: 10.1121/1.420088.

- [67] Burkard RF, Don M. The auditory brainstem response. In: Burkard RF, Don M, Eggermont JJ, editors. Auditory Evoked Potentials: Basic Principles and Clinical Applications. Lippincott Williams & Wilkins; Baltimore, MD: 2007. pp. 229–53.

- [68] Song JH, Banai K, Russo N, Kraus N. On the relationship between speech and nonspeech evoked auditory brainstem responses. Audiology & Neuro-otology. 2006;11. doi: 10.1159/000093058.

- [69] Joreskog KG, Sorbom D. LISREL. 8.80 ed. Scientific Software International, Inc.; Lincolnwood, IL: 2006.

- [70] Strait DL, Ashley R, Hornickel J, Kraus N. Context-dependent encoding of speech in the human auditory brainstem as a marker of musical aptitude. Association for Research in Otolaryngology; Baltimore, MD: 2010.

- [71] Sperling AJ, Lu Z-L, Manis FR, Seidenberg MS. Deficits in perceptual noise exclusion in developmental dyslexia. Nature Neuroscience. 2005;8:862–3. doi: 10.1038/nn1474.

- [72] Ahissar M. Dyslexia and the anchoring deficit hypothesis. TRENDS in Cognitive Sciences. 2007;11:458–65. doi: 10.1016/j.tics.2007.08.015.

- [73] Moore D, Ferguson M, Edmondson-Jones A, Ratib S, Riley A. Nature of auditory processing disorder in children. Pediatrics. 2010. doi: 10.1542/peds.2009-2826.

- [74] Dean I, Harper NS, McAlpine D. Neural population coding of sound level adapts to stimulus statistics. Nature Neuroscience. 2005;8:1684–9. doi: 10.1038/nn1541.

- [75] Pressnitzer D, Sayles M, Micheyl C, Winter IM. Perceptual organization of sound begins in the auditory periphery. Current Biology. 2008;18:1124–8. doi: 10.1016/j.cub.2008.06.053.

- [76] Atiani S, Elhilali M, David SV, Fritz J, Shamma S. Task difficulty and performance induce diverse adaptive patterns in gain and shape of primary auditory cortical receptive fields. Neuron. 2009;61:467–80. doi: 10.1016/j.neuron.2008.12.027.

- [77] Storkel HL. Learning new words: Phonotactic probability in language development. Journal of Speech, Language, and Hearing Research. 2001;44:1321–37. doi: 10.1044/1092-4388(2001/103).

- [78] Saffran JR. Statistical language learning: Mechanisms and constraints. Current Directions in Psychological Science. 2003;12:110–4.

- [79] Kraus N, Nicol T. Brainstem origins for cortical “what” and “where” pathways in the auditory system. TRENDS in Neurosciences. 2005;28:176–81. doi: 10.1016/j.tins.2005.02.003.

- [80] Willcutt EG, Pennington BF. Comorbidity of reading disability and attention-deficit/hyperactivity disorder: Differences by gender and subtype. Journal of Learning Disabilities. 2000;33:179–91. doi: 10.1177/002221940003300206.

- [81] Facoetti A, Lorusso ML, Paganoni P, Cattaneo C, Galli R, Umilta C, et al. Auditory and visual automatic attention deficits in developmental dyslexia. Cognitive Brain Research. 2003;16:185–91. doi: 10.1016/s0926-6410(02)00270-7.

- [82] Benasich AA, Tallal P. Infant discrimination of rapid auditory cues predicts later language development. Behavioural Brain Research. 2002;136:31–49. doi: 10.1016/s0166-4328(02)00098-0.

- [83] Raschle NM, Chang M, Lee M, Buchler R, Gaab N. Examining behavioral and neural pre-markers of developmental dyslexia in children prior to reading onset. NeuroImage. 2009;47:S120.

- [84] Skoe E, Kraus N. Auditory brainstem response to complex sounds: A tutorial. Ear and Hearing. 2010;31:302–24. doi: 10.1097/AUD.0b013e3181cdb272.

- [85] Song JH, Skoe E, Wong PCM, Kraus N. Plasticity in the adult human auditory brainstem following short-term linguistic training. Journal of Cognitive Neuroscience. 2008;20:1892–902. doi: 10.1162/jocn.2008.20131.

- [86] Krishnan A, Xu Y, Gandour J, Cariani P. Encoding of pitch in the human brainstem is sensitive to language experience. Cognitive Brain Research. 2005;25:161–8. doi: 10.1016/j.cogbrainres.2005.05.004.

- [87] Chandrasekaran B, Kraus N. Music, noise-exclusion, and learning. Music Perception. 2010;27:297–306.

- [88] Assmann PF. Fundamental frequency and the intelligibility of competing voices. Proceedings of the 14th International Congress of Phonetic Sciences; 1999.

- [89] Lewis D, Hoover B, Choi S, Stelmachowicz P. Relationship between speech perception in noise and phonological awareness skills for children with normal hearing. Ear and Hearing. 2010. doi: 10.1097/AUD.0b013e3181e5d188.