Abstract

The authors introduce a quantitative measure of the capacity of a small biological network to evolve. The measure is applied to a stochastic description of the experimental setup of Guet et al. (Science 2002, 296, pp. 1466), treating chemical inducers as functional inputs to biochemical networks and the expression of a reporter gene as the functional output. The authors take an information-theoretic approach, allowing the system to set parameters that optimise signal processing ability, thus enumerating each network’s highest-fidelity functions. All networks studied are highly evolvable by the measure, meaning that change in function has little dependence on change in parameters. Moreover, each network’s functions are connected by paths in the parameter space along which information is not significantly lowered, meaning a network may continuously change its functionality without completely losing it along the way. This property further underscores the evolvability of the networks.

Many signals in cells are processed using a network of interacting genes: exogenous signals affect expression of genes coding for transcription factor proteins, which in turn regulate the expression of other genes. Although early works have suggested that the connectivity of such regulatory networks dictates their function [1–3], recent studies offer evidence that a network with fixed connectivity can change its function simply by varying its biochemical parameters [4–7]. The diversity of a network’s achievable functions and the ease with which it can realise them are central to its capacity to evolve epigenetically, without slow and costly modifications to the genetic code, and thus central to the evolutionary capacity of the organism as a whole.

The evolvability of a regulatory network has been a topic of much discussion in recent literature [7–12], but little has been done to quantify the concept in a principled way. Here we propose a quantitative measure of network evolvability, and we apply it to a set of small regulatory networks, such that a principled comparison can be made across networks. Networks are taken from the experimental setup of Guet et al. [4] and modelled stochastically. We find biochemical parameters that optimise the information flow between a chemical ‘input’ signal and a particular ‘output’ gene, and we indeed find that a single network performs different functions at different sets of optimal parameters. We argue that a more evolvable network will be able to access a richer diversity of its functions with smaller changes in its parameters, and as such we quantify evolvability using a measure of anti-correlation between parametric and functional change.

We find that while there are small differences among networks’ evolvability scores, all are highly evolvable, meaning that the magnitude of a functional change has little dependence on the parametric change required to produce it. Moreover, we find that transitions among a network’s optimally informative functions can be made without significant loss of the input–output information along the way. By proposing and demonstrating a principled evolvability measure, we reveal these features quantitatively; both features suggest a high capacity of the studied regulatory networks to evolve.

1 Methods

First, we briefly outline the methods used to develop a quantitative measure of evolvability; each step is discussed in more detail in the sections that follow, and further detail can be found in previous work [5]. The system of interest is a small (four-gene) transcriptional regulatory network. As in Guet et al. [4], we treat the presence or absence of chemical inducers (small molecules that affect the efficacy of the transcription factors) as the inputs to the network, and the expression of a particular gene as the output. We use a stochastic model to find expression level distributions at a steady state, and we search for the model parameters that maximise the mutual information between input and output. We characterise the function that the network performs by the order in which the output distributions corresponding to each input state are encoded [13]; a single network can perform different functions at different parameter settings. We define the evolvability of the network as the ability to perform a diverse set of functions with only small changes in parameters, and we quantify evolvability accordingly using a measure of anti-correlation between pairwise function distance and parameter distance.

1.1 Model

Following the experimental setup of Guet et al. [4], we study all networks that can be built out of three genes A, B and C, in which each gene is regulated by one other gene, and regulation edges can be up-regulating or down-regulating. Additionally, as in the experiment, gene C down-regulates a ‘reporter’ gene G (e.g. GFP), whose expression we treat as the functional output of the network. This yields a total of 24 networks, as shown on the horizontal axis of Fig. 2. Also in analogy to the experiment, the efficacy of each transcription factor can be inhibited by the presence of a chemical inducer s, a small molecule that binds to the transcription factor and lowers its affinity for its binding site. The presence or absence of the chemical inducers sA, sB and sC corresponding to each transcription factors A, B and C define the functional input state of the network. The inhibitory effect of each inducer is illustrated for an example network in the top panel of Fig. 1a, and the eight possible input states i, determined by the presence or absence of the inducers, are listed in the bottom panel.

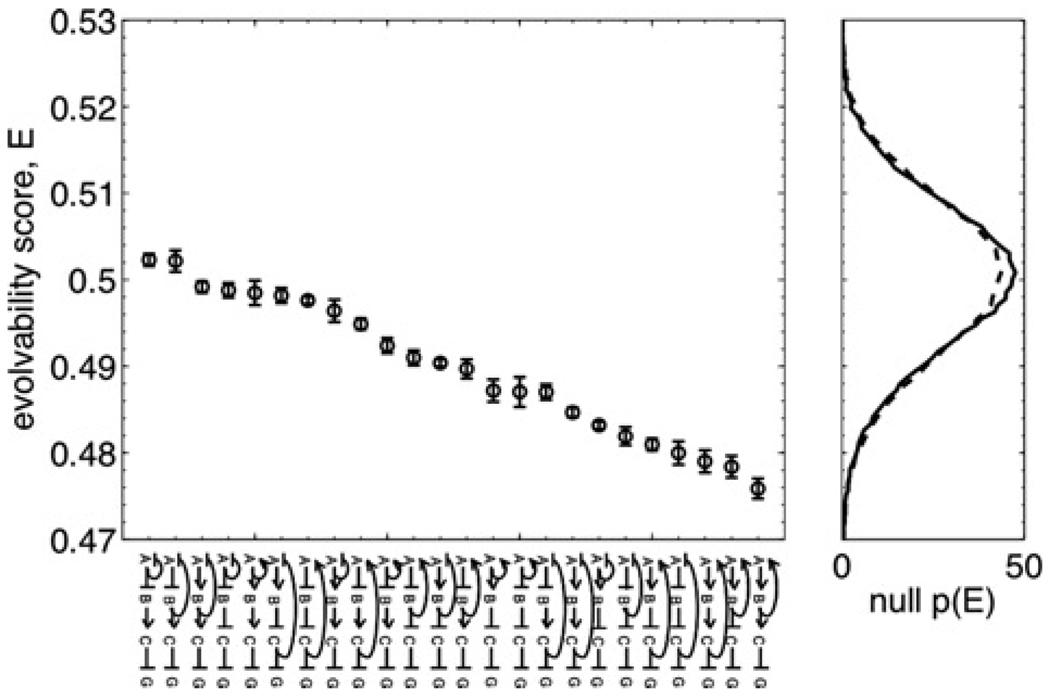

Figure 2. Evolvability scores for all networks.

Left: All 24 regulatory networks studied are shown along the horizontal axis, ranked by evolvability score E (sharp arrows denote up-regulation, and blunt arrows denote down-regulation). E values are calculated via (10), with error bars showing the sampling error, calculated as described in the text.

Right: Two null distributions generated according to the null hypothesis that the function distance is independent of the parameter distance. The solid line is the distribution of E scores calculated from solution sets in which the locations in parameter space were held fixed, and the function assignments were randomly permuted. The dotted line is the distribution of E scores calculated from solution sets in which the locations in parameter space were held fixed, and the function assignments were drawn randomly from the set of possible functions for the given network. Both distributions are averages over the individual distributions for each network, as there was no correlation between the means or variances of the individual distributions and the networks’ E scores

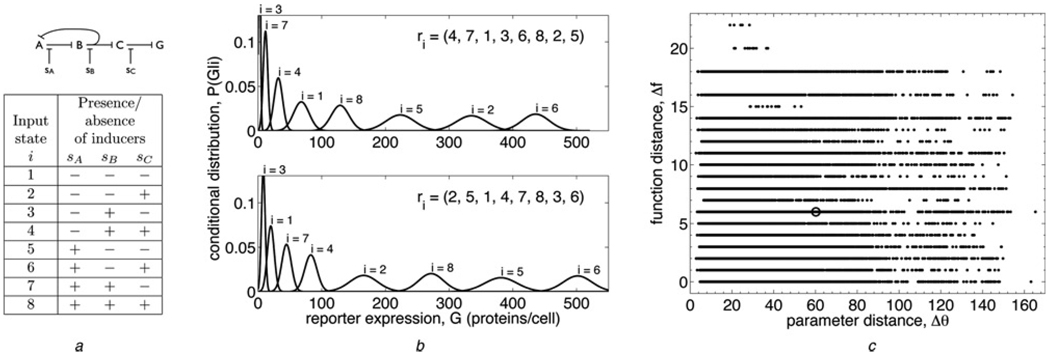

Figure 1. Defining evolvability.

a Top: a sample regulatory network (see Fig. 2 for diagrams of all 24 networks studied). A, B and C are genes whose transcription factors regulate each other’s expression according to the given network topology, and G is a ‘reporter’ gene, such as GFP. Sharp arrows indicate up-regulation, whereas blunt arrows indicate down-regulation (all arrows are blunt in this network). sA, sB and sC are chemical inducers that reduce the efficacy of the corresponding transcription factors. Bottom: table showing the eight input states i that are defined by the presence or absence of each chemical inducer in the cell (+ indicates presence and − indicates absence). In the model, the sA, sB and sC are scale factors that are free parameters (greater than 1, to effect an interference with transcriptional regulation) if the inducer is present, and are set to 1 if the inducer is absent

b Two maximally informative functions performed by the sample network at two different parameter settings. Function is characterized by the order of the output distributions P(G|i), the probability of expressing G proteins per cell given that the system is in input state i. Specifically, the function is quantified by the vector r⃗ of ranks of the P(G|i), as shown for each function in the upper right corner. For example, in the top function, the first output distribution (i = 1) is ranked 4th, the second (i = 2) is ranked 7th, the third (i = 3) is ranked 1st and so on, so the rank vector is r⃗ = (4, 7, 1, …)

c Plot of function distance Δf (9) against parameter distance Δθ (8) for all pairs of maximally informative model solutions (340 solutions were used for this network). Function distance is scaled such that the swapping of two adjacent output states from solution one to solution two gives Δf = 1, and parameter distance is scaled such that the doubling of one parameter from solution one to solution two gives Δθ = 1. The point corresponding to the pair of functions in (b) is circled. The evolvability score for this network, calculated from these data via (10), is E = 0.482 ± 0.001

For a typical prokaryotic regulatory network inside a cell, intrinsic noise arising from fluctuations in the small numbers of species [14, 15] is the primary factor limiting transmission of information from a chemical input to a genetic output [5, 13, 16, 17]. This observation has two important consequences for modelling: (a) a realistic model should capture not just mean protein concentrations, but probability distributions over numbers of proteins [18, 19], and (b) the most biologically relevant model parameters will retain an optimal flow of information in the presence of this noise [5, 20, 21]. Indeed, information seems to be maximised in at least some real biological systems (see e.g. [20]), and theoretical considerations for when information maximisation is important are discussed in detail in [5].

The first consequence is most fully addressed by solving the chemical master equation [22], which describes the time evolution of the joint probability distribution for the numbers of all molecules in the system given the elementary reactions (which are ultimately a function of the network topology). For our systems, the master equation is not analytically solvable. Progress can be made either by Monte-Carlo simulation of the master equation [23], or by approximating the master equation, for example with the linear-noise approximation (LNA) [5, 13, 22, 24, 25]. Since it does not rely on sampling, the LNA is much more computationally efficient (and thus more amenable to a search for high-fidelity model parameters), and in previous work [5] we found the distributions obtained via the LNA were practically indistinguishable from those obtained via stochastic simulation for copy numbers above 10–20.

In the LNA, the reaction rates in the master equation are linearised, and the steady-state solution is a multivariate Gaussian distribution [5, 13, 22]. The individual species’ marginal distributions are thus described at the level of Gaussian fluctuations, with means given by the steady-state solution to the deterministic dynamical system describing mean protein numbers. In this context, mean expression has been modelled with remarkable success by combining transcription and translation into one step [26–28], and accordingly, for each of our networks, we use the following dynamical system in which species are directly coupled to one another

| (1) |

where theXj ∈ {A, B, C, G} are the expression levels (in units of proteins per cell) of the four genes, the Rj are degradation rates, and the αj are production rates which depend on the expression level Xπj and a scale factor sπj of the parent πj of gene j (where the parent–children connectivity is determined by the network topology). The scale factors, sπj ∈ {sA, sB, sC} ≥ 1, incorporate the inhibitory effect of each chemical inducer (when present) by reducing the effective transcription factor concentration; when an inducer is absent, its scale factor is set to 1. Regulation is modelled using Hill functions

| (2) |

where a0 (kept the same for all genes) is the basal production rate, a0 + aj is the maximal production rate, Kj is the binding constant or the protein number at which production is half-maximal and n is the cooperativity, which we set to 2. Since a cooperativity of one is not common in bacterial gene regulation (of which our system is a model), in order to limit the number of optimised parameters we fix n to 2. Fixing the cooperativity at other constant values greater than two has marginal effects compared to n = 2; for further discussion see [5]. Note from (1) and (2) that increasing the scale factor can be equivalently interpreted as increasing the effective binding constant or lowering the binding affinity. Steady states of (1) are found by solving (using MATLAB’s roots) the polynomial equations that result from setting the left-hand side to zero, and keeping only those solutions for which the Jacobian matrix J of (1) has eigenvalues whose real parts are all negative.

The variances of the marginal distributions are the diagonal entries in the covariance matrix Ξ, which under the LNA satisfies a Lyapunov equation

| (3) |

where D is a diagonal matrix with, for the system in (1), the jth entry equal to αj (Xπj/sπj) + RjXj. Equation (3) is solved using a standard Lyapunov solver (MATLAB’s lyap). Since the steady-state distributions are Gaussian under the LNA, the solution is fully specified by the means and the variances. The distributions of particular functional importance are P(G|i), the probability of expressing G reporter proteins per cell given that the chemical inducers are in state i.

To address the second consequence, that biologically relevant solutions often optimise information flow in the presence of intrinsic noise [20, 21], as in previous work [5] we allow the system to set parameters that maximise the mutual information I [29] between input state i and output expression G, where

| (4) |

| (5) |

Here I is measured in bits, and the second step uses P(i, G) = P(G|i)P(i), P(G) = Σi′ P(i′, G), and an assertion that each input state occurs with equal likelihood (i.e. P(i) = 1/|i|, where |i| = 8 is the number of input states) to write I entirely in terms of the model solutions P(G|i).

Two computationally trivial ways for the system to maximise I are (a) to use an unbounded number of reporter proteins G to encode the signal, and (b) to set degradation rates such that G responds on a timescale much longer than that of the upstream genes (called a ‘stiff’ system), which has the effect of averaging out the upstream noise. In contrast, in cells, protein production requires energy, which sets a limit on the number of proteins that a cell can produce, and most protein degradation rates are comparable. Therefore we seek model parameters θ⃗* that optimise a constrained objective function

| (6) |

where the constants λ and γ are a metabolic cost and a constraint against stiffness, respectively, the average 〈Xj 〉 is taken over all genes, and the average 〈Rπj 〉 is taken over upstream genes A, B and C. Optimisation is performed using a simplex algorithm (MATLAB’s fminsearch) in a 15-dimensional parameter space, as

| (7) |

(RG was fixed at 4 × 10−4 s−1 to set a biologically realistic degradation rate scale).

Varying initial parameters yields many local optima θ⃗* at which the input signal may be encoded differently in the output distributions P(G|i). For example, two optimally informative solutions are shown in Fig. 1b for the network in Fig. 1a. Intuitively, maximising mutual information has resulted in sets of distributions that are well separated, such that knowledge of the output G would leave little ambiguity about the original input state i. We point out, however, that the ordering of the output distributions is different between the two solutions, meaning that the network is performing two different functions at two different points in parameter space. The relationship between diversity of such functions and exploration of parameters is crucial to the discussion of evolvability; in the next section we develop a quantitative measure of evolvability in the context of this system.

1.2 Quantifying evolvability

As seen in several experimental and numerical studies [4–7], and in data from the model described above, a single regulatory network can perform different functions simply by varying its biochemical parameters. Intuitively, a network should be deemed more evolvable if it is able to access a richer diversity of its functions with smaller changes in its parameters. Quantification of this concept requires definitions of both parametric and functional change.

As in Barkai et al. [30], we characterise the magnitude of the parametric change in going from one model solution to another by calculating fold changes in the model parameters. Specifically, we define a parameter distance Δ θ between two solutions as the Euclidean distance in the logs of the parameters

| (8) |

where |k| = 15 is the number of parameters. Under this definition, equal fold changes in each parameter constitute equal contributions to Δ θ (for scale, the doubling of one parameter corresponds to Δ θ = 1). We note that although this definition is motivated by published work [30], it is only one of a number of possible definitions of parameter distance, and the results herein should be interpreted within this context.

As in previous work [13] and in the original experiment of Guet et al. [4], we define the function of a network analogously to logic in electrical circuits (AND, OR, XOR etc.), in which the function is determined by the magnitude of the output’s response to each input state (for example, with two inputs, AND would be defined by a ‘high’ output in response to the [++] state, and a ‘low’ output in response to the [−−], [−+] and [+−] states). Since, in our setup, optimising information produces well-separated output distributions P(G|i) (see Fig. 1b), we extend this idea beyond a simple ‘high’ or ‘low’ output classification, and characterise function by the order of the P(G|i). Specifically, we record a vector r⃗ of ranks of the P(G|i); for example, in the top panel of Fig. 1b, the first output distribution (i = 1) is ranked 4th, the second (i = 2) is ranked 7th, the third (i = 3) is ranked 1st and so on, so the rank vector is r⃗ = (4, 7, 1, …). We then define the function distance Δf between two solutions in terms of the vector distance between their rank vectors

| (9) |

(for scale, the swapping of two adjacent output distributions corresponds to Δf = 1). In networks in which the overall sign of the feedback cycle is negative, there can exist parameter values that support multiple stable fixed points. This would correspond to one or more of the output distributions being multimodal. Since we effectively minimise overlap of output states by optimising information transmission, such solutions are rare (13% occurrence in all negative-feedback networks). When they do occur, we equally weight each fixed point in constructing the multimodal Gaussian output, and continue to define r⃗ by the ranks of the means of the output distributions. Other function distances, including other permutation distances between the rank vectors, and a continuous distance measure defined by averaging the Jensen–Shannon divergence [31] between corresponding output distributions in the solution pair, produced similar results, as discussed in the Results section.

It is now clear that, if a network is better able to explore its function set with smaller changes in its parameters (i.e. is more evolvable by our definition), then it will exhibit less correlation between Δf and Δθ than other networks. Therefore we define an evolvability score E for a given network as a measure of anti-correlation between Δf and Δθ, calculated for every pair of its optimal model solutions. If two solutions from the same local information maximum are treated as distinct, they will have the same function but (slightly) different parameters; this will artificially lower E. To correct for this effect, we merge (at their mean parameter location) nearest neighbours whose functions are the same until all nearest neighbours have different functions. This procedure reduced networks’ solution sets by at most ~10%. Specifically, evolvability is defined as

| (10) |

where τ is Kendall’s tau [32], a non-parametric measure of correlation between all pairwise Δf and Δθ; we rescale τ such that 0 < E < 1 and take its complement to obtain an anti-correlation. Using a non-parametric correlation statistic has the advantage that our evolvability measure remains invariant upon any monotonic rescaling in the definitions of either Δθ or Δf (even with this advantage, we again remind the reader that (10) represents a particular choice of correlation function, and the results herein should be interpreted in this context). Additionally, we note that E can be thought of as the probability that a pair of solutions drawn at random have a larger Δf than another pair given that the first pair had a smaller Δθ, or as the fraction of discordant pairs of (Δθ, Δf) data points. Many sources (including MATLAB’s built-in corr) use an adjustment to the calculation of τ in the case of tied data (see e.g. [33]). In keeping with the interpretation of our statistic as a probability, we do not introduce an adjustment; we simply count each tied pair as neither concordant nor discordant (i.e. if, for example, in computing the fraction of concordant pairs, we assigned each concordant pair a 1 and each discordant pair a 0, a tied pair would count as 0.5).

Function distance Δf against parameter distance Δθ for all pairs of model solutions is plotted in Fig. 1c for the example network in Fig. 1a. The evolvability score calculated from these data is E = 0.482 which, since there is little correlation (or anti-correlation) between Δf and Δθ in this case, is near the middle value E = 0.5.

We obtain a fairer estimate of E and an estimate of its error by subsampling. Specifically, using jack-knifing as in [34, 35], we compute the mean Ē and standard error δE in E values calculated on randomly drawn subsets of a given size n (from the full data set of size N). We then repeat for various n, plot Ē ± δE against N/n, and fit with a line (all plots generated were roughly linear). The value and uncertainty of the N/n = 0 intercept give an estimate of E, extrapolated to infinite data, and a measure of sampling error, respectively. The sampling error estimated in this way for the data in Fig. 1c is 0.001.

2 Results

2.1 All networks studied are evolvable

Using the methods described above, between 200 and 500 optimally informative model solutions were obtained, and an evolvability score E was calculated for each of the 24 networks shown on the horizontal axis of Fig. 2. The constraints were set to λ = 0.01 or 0.005, for an average protein count of ~100–200, and γ = 0.001, allowing a maximum of about three orders of magnitude between upstream and reporter degradation rates. Solutions with mutual information values below I = 2 bits were discarded as not transmitting high enough information (for scale, a solution with perfectly overlapping output distributions would have I = 0 bits, and a solution with eight perfectly non-overlapping output states would have I = 3 bits).

Networks’ evolvability scores are shown in Fig. 2. All 24 networks have E values within 5% of 0.5 (recall that E is bounded by 0 ≤ E ≤ 1), which means that, in all cases, there is little correlation between change in function and change in parameters, suggesting that all networks studied are evolvable. Using other function distances, including other permutation distances between the rank vectors, and a continuous distance measure defined by averaging the Jensen–Shannon divergence [31] between corresponding output distributions in the solution pair, produced similar results: E scores very near 0.5, indicating little correlation between functional and parametric distances.

The claim that function has little dependence on parameters can be tested more rigorously by comparison with a null hypothesis. The null hypothesis that function is independent of parameters was implemented in two ways. First, given each network’s solution set, locations of solutions in parameter space were kept the same, but the functions associated with each solution were randomly permuted. Second, locations of solutions in parameter space were again kept the same, but functions were drawn randomly from the set of possible functions for each network (not all 8! rankings of the output distributions are allowed functions for a given network; as shown in previous work [13], the topology of the network constrains the set of possible steady-state functions). Specifically, since each gene is regulated by one other gene, allowed functions are ‘direct’ functions: those in which the output distribution responds to a change in inducer concentration according to the direct path from inducer to reporter (i.e. ignoring feedback pathways). For example, for the network in Fig. 1a, in going from state [−−−] (i = 1) to [− + −] (i = 3), sB increases; the direct path from sB to G consists of a repression–repression–repression chain, which is net repressive, so the output distribution must decrease (as it does in both panels of Fig. 1b). With three inducers, there are 48 direct functions for each network; this is the set from which functions are randomly drawn in the second implementation of the null hypothesis. In each case, the function reassignment was performed many times, and the E value was computed each time to produce a distribution of null E scores. There was no correlation between the means or variances of the networks’ null distributions and their actual E scores, so the individual null distributions were averaged across networks. Averaged null distributions from each of the two implementations are qualitatively similar, and both are shown in Fig. 2. All networks’ actual E values lie well within both null distributions (the smallest p-value is 0.023, and, with 24 networks, we expect at least one to attain a p-value lower than 1/24 = 0.04 simply by chance). This means that none of the networks’ solution sets significantly differ from a set in which the function performed is independent of the setting of the parameters.

Even though all E values lie within the null distribution, only two lie above the null mean of 0.5; the probability of two or fewer values lying above the mean by chance is 2 × 10−5. For a network with E much larger than 0.5, the parameter and the functional distances would be anti-correlated, and the network function would evolve dramatically with very small parameter changes. Thus the vast majority of the networks studied show a statistically significant, yet unexpectedly small, positive correlation among the functional and the parametric distance.

Despite the fact that the E values lie in a narrow range, sampling errors are small (see Fig. 2), meaning that the networks can be ranked with some confidence according to their evolvability. We asked statistically whether this ranking was correlated with any topological features of the network, including the sign of the regulation of each gene, the length and net sign of the feedback cycle, and the total number of activators and repressors in the network, both in and out of the cycle. Correlation was tested for features with categorical values using a Wilcoxon rank-sum test [36, 37] (for two categories) or a Kruskal–Wallis H-test [38] (for more than two categories), and for features with real values using Kendall’s τ [32]. No topological feature significantly correlated with E. The lowest p-value was 0.04, and, since many correlations were tested for at once, a Bonferoni correction [39] showed that the likelihood of obtaining a p-value this low simply by chance was 0.33. Thus we identified no topological aspect that significantly imparted higher or lower evolvability to the networks.

2.2 Changing functions without a large loss of functionality

As described in the previous section, we have found that the networks studied organise their optimally informative solutions in parameter space in such a way that large changes in function do not require large changes in parameters. We further demonstrate here that the networks can change from one function to another in parameter space without significant loss of the input–output information along the way. This further underscores the evolvability of these networks, since it shows that random steps in parameter space not only explore the full variety of a network’s functions, but do so without significant loss of fidelity. In the context of electric logical circuits, such evolvability would correspond to an ability to change a logic gate continuously from performing one logical function to another while remaining a largely functional gate in the interim.

For each network, mutual information I was calculated using (5) along straight-line paths in parameter space between all solutions pairs within a randomly chosen subset of its optimally informative solutions. Examples of these paths are shown in Fig. 3a, for ten solutions from the inset network. The solutions at either end are local maxima in I, and the paths show the loss in information capacity the network would suffer if it were to move from one solution to the other along a straight line in parameter space. Some information loss is unavoidable: changing function requires reordering the output distributions (see Fig. 1b), which means overlapping at least two of them at a time, and with eight distributions the shift of two distributions from fully separated to fully overlapped incurs a minimum loss of 0.25 bits. Seven of the ten functions corresponding to the ten solutions in Fig. 3a are unique; at least 91% of the plotted paths involve a change in function.

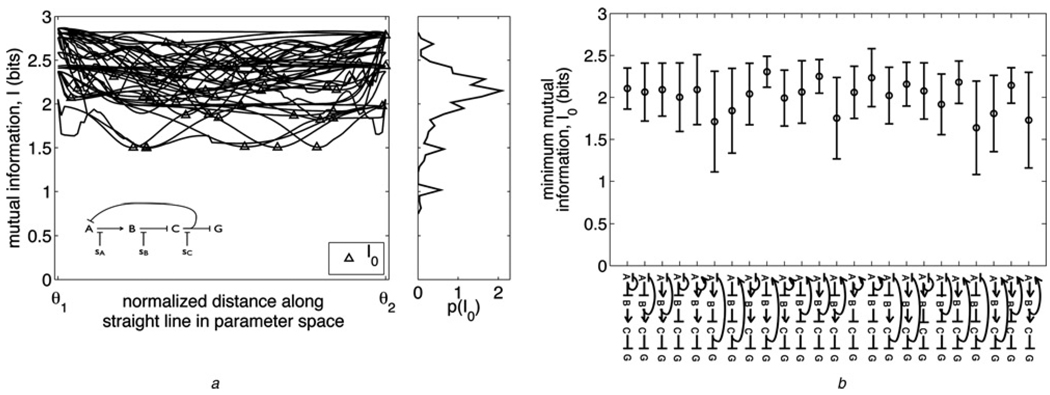

Figure 3. Changing function without losing information.

a Left: Mutual information I along straight-line paths in parameter space between pairs of 10 randomly chosen optimally informative model solutions for a particular network (inset). For each path, the starting and ending solution’s locations in parameter space are denoted θ⃗1 and θ⃗2, respectively, on the horizontal axis. The minimum mutual information I0 along each path is marked with a triangle. A specific function is performed at each of the ten solutions (as characterised in Methods); seven of the ten functions are unique. Right: Distribution of I0 values built from paths between 37 randomly chosen solutions for the inset network, of which the ten solutions used for the left plot are a subset

b Means (circles) and standard deviations (error bars) of I0 distributions like that in (a) (right), for all networks studied; 37 randomly chosen solutions were used to build each network’s distribution. Networks are shown on the horizontal axis, in the same order as in Fig. 2, i.e. ranked by evolvability score E

Nonetheless, we find that the loss in information suffered in going between optimal solutions is surprisingly minimal. The right panel of Fig. 3a shows the distribution of minimal mutual information values I0 along the paths for the inset network, and Fig. 3b shows the means and the standard deviations of I0 distributions for all networks. For only a few networks do a significant portion of the paths drop below 1.5 bits, and almost no paths drop below 1 bit. In contrast, the typical information Irand in networks with random parameters (generated uniformly in log space from the region within which optimisations are initialised) is negligible (the median is Irand = 0.02 bits for the network in Fig. 3a, and Irand = 0.05 bits averaged over all 24 networks). We note in passing that the networks in Fig. 3b are shown as in Fig. 2, i.e. ranked by evolvability score E, and so Fig. 3b also demonstrates that there is no significant correlation between I0 and E.

We emphasise that Fig. 3b represents a lower bound on minimum mutual information encountered in transitioning between solutions. It is by no means necessary (and is most likely biologically unrealistic) for a functional change to proceed via such uniform changes in biochemical parameters. It is more likely that there exist transition paths that are more optimal than the straight-line paths, and that the most optimal I0 distributions are actually shifted higher in information than those generated here (given the large computational cost of implementing an optimal path-finding algorithm in 15 dimensions when each evaluation of the objective function involves finding the steady state of a dynamical system and solving a Lyapunov equation, the lower bound on minimum information obtained from the straight-line paths between maxima suffices to illustrate the claim that information loss is minimal in transitions between functions). Thus it is quite non-trivial (and it is further testament to their evolvability) that even along direct paths between optimal solutions these networks in most cases do not drop below 1.5 bits of processing ability, considering that the solutions themselves operate in the range of ~2–2.8 bits. A network can be evolving and functional at the same time.

3 Discussion

We have quantified the concept of evolvability in the context of regulatory networks by introducing an interpretable measure, and by probing the space of the networks’ most informative functions. Our measure is an anti-correlation between the amount of functional change experienced by a network and the parametric change required to affect it, such that more evolvable networks explore more diverse functions with smaller variation in their biochemical parameters. We have fully defined functional and parametric distances (as well as the characterisation of ‘function’ itself) in the context of a stochastic description of the experimental setup of Guet et al. [4], and we have chosen a correlation measure that is invariant to monotonic transformations in either definition.

We have found that all networks studied share the property that functional change is largely independent of parametric change, meaning that they are highly evolvable by our measure. This property holds for several different definitions of function distance. This means that high-information functions are not organised in parameter space in such a way that similar functions are near each other; instead nearby solutions are approximately as likely to be similar in function as they are to be different in function.

Furthermore, we have found that all networks studied can transition among their maximally informative functions without significant loss of information in the process. Along straight-line paths in parameter space between functions (with mutual information values in the range ~2–2.8 bits), mutual information remains above ~2 bits on average and very rarely drops below 1 bit. Moreover, these values represent a lower bound, since transition paths need not be straight. This suggests that the networks can evolve without losing functionality in the process, which resonates with the idea from evolutionary biology that evolution happens not by crossing high fitness barriers (low-information solutions in our case), but by finding neutral paths [40].

Ultimately, we have uncovered two important properties of the regulatory networks described by our model: (a) high-information solutions do not cluster by function, and (b) transitions among solutions are possible without significant loss of fidelity. Both of these properties underscore the high evolvability of the networks studied. It is possible that these properties are general characteristics of a class of systems extending beyond small transcriptional regulatory networks, particularly systems governed by a large number of tunable parameters. However, we argue that these properties are especially relevant here, as they are critical to a quantitative description of the capacity of biological networks to evolve.

Acknowledgments

We are grateful to the organisers, participants and sponsors of The Second q-bio Conference in Santa Fe, New Mexico, where a preliminary version of this work was presented. AM was supported by NSF Grant No. DGE-0742450. CW and AM were supported by NSF Grant No. ECS-0332479. CW was supported by NIH Grant Nos. 5PN2EY016586-03, 1U54CA121852-01A1, and 5P50GM071558-02. IN was supported by DOE under Contract No. DE-AC52-06NA25396 and by NSF Grant No. ECS-0425850.

References

- 1.Shen-orr SS, Milo R, Mangan S, Alon U. Network motifs in the transcriptional regulation network of escherichia coli. Nat Genet. 2002;31(1):64–68. doi: 10.1038/ng881. [DOI] [PubMed] [Google Scholar]

- 2.Mangan S, Alon U. Structure and function of the feed-forward loop network motif. Proc. Natl. Acad. Sci. USA. 2003;100(21):11980–11985. doi: 10.1073/pnas.2133841100. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Kollmann M, LØvdok L, Bartholomé K, Timmer J, Sourjik V. Design principles of a bacterial signalling network. Nature. 2005;438(7067):504–507. doi: 10.1038/nature04228. [DOI] [PubMed] [Google Scholar]

- 4.Guet CC, Elowitz MB, Hsing W, Leibler S. Combinatorial synthesis of genetic networks. Science. 2002;296(5572):1466–1470. doi: 10.1126/science.1067407. [DOI] [PubMed] [Google Scholar]

- 5.Ziv E, Nemenman I, Wiggins CH. Optimal signal processing in small stochastic biochemical networks. PLoS ONE. 2007;2(10):e1077. doi: 10.1371/journal.pone.0001077. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Wall ME, Dunlop MJ, Hlavacekw S. Multiple functions of a feedforward- loop gene circuit. J. Mol. Biol. 2005;349(3):501–514. doi: 10.1016/j.jmb.2005.04.022. [DOI] [PubMed] [Google Scholar]

- 7.Voigt CA, Wolf DM, Arkin AP. The bacillus subtilis sin operon: an evolvable network motif. Genetics. 2005;169(3):1187–1202. doi: 10.1534/genetics.104.031955. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Ptashne M, Gann A. Imposing specificity by localization: mechanism and evolvability. Curr. Biol. 1998;8(24):R897. doi: 10.1016/s0960-9822(07)00557-x. [DOI] [PubMed] [Google Scholar]

- 9.Kitano H. Biological robustness. Nat. Rev. Genet. 2004;5(11):826–837. doi: 10.1038/nrg1471. [DOI] [PubMed] [Google Scholar]

- 10.Buchler NE, Gerland U, Hwa T. On schemes of combinatorial transcription logic. Proc. Natl. Acad. Sci. USA. 2003;100(9):5136–5141. doi: 10.1073/pnas.0930314100. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Braunewell S, Bornholdt S. Reliability of genetic networks is evolvable. Phys. Rev. E. 2008;77:60902. doi: 10.1103/PhysRevE.77.060902. [DOI] [PubMed] [Google Scholar]

- 12.Kashtan N, Alon U. Spontaneous evolution of modularity and network motifs. Proc. Natl. Acad. Sci. USA. 2005;102(39):13773–13778. doi: 10.1073/pnas.0503610102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Mugler A, Ziv E, Nemenman I, Wiggins CH. Serially regulated biological networks fully realise a constrained set of functions. IET Syst. Biol. 2008;2(5):313–322. doi: 10.1049/iet-syb:20080097. [DOI] [PubMed] [Google Scholar]

- 14.Elowitz MB, Levine AJ, Siggia ED, Swain PS. Stochastic gene expression in a single cell. Science. 2002;297(5584):1183–1186. doi: 10.1126/science.1070919. [DOI] [PubMed] [Google Scholar]

- 15.Thattai M, Van Oudenaarden A. Intrinsic noise in gene regulatory networks. Proc. Natl. Acad. Sci. USA. 2001;98(15):8614–8619. doi: 10.1073/pnas.151588598. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Acar M, Becskei A, Van Oudenaarden A. Enhancement of cellular memory by reducing stochastic transitions. Nature. 2005;435(7039):228–232. doi: 10.1038/nature03524. [DOI] [PubMed] [Google Scholar]

- 17.Pedraza JM, Van Oudenaarden A. Noise propagation in gene networks. Science. 2005;307(5717):1965–1969. doi: 10.1126/science.1109090. [DOI] [PubMed] [Google Scholar]

- 18.Shahrezaei V, Swain PS. Analytical distributions for stochastic gene expression. Proc. Natl. Acad. Sci. USA. 2008;105(45):17256–17261. doi: 10.1073/pnas.0803850105. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Hornos JE, Schultz D, Innocentini GC, et al. Self-regulating gene: an exact solution. Phys. Rev. E. 2005;72:51907. doi: 10.1103/PhysRevE.72.051907. [DOI] [PubMed] [Google Scholar]

- 20.Tkacik G, Callan CG, Bialek W. Information flow and optimization in transcriptional regulation. Proc. Natl. Acad. Sci. USA. 2008;105(34):12265–12270. doi: 10.1073/pnas.0806077105. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Doan T, Mendez A, Detwiler PB, Chen J, Rieke F. Multiple phosphorylation sites confer reproducibility of the rod’s single-photon responses. Science. 2006;313(5786):530–533. doi: 10.1126/science.1126612. [DOI] [PubMed] [Google Scholar]

- 22.Van Kampen NG. Stochastic processes in physics and chemistry. Amsterdam: North-Holland; 1992. [Google Scholar]

- 23.Gillespie DT. Exact stochastic simulation of coupled chemical reactions. J Phys Chem. 1977;81(25):2340–2361. [Google Scholar]

- 24.Paulsson J. Summing up the noise in gene networks. Nature. 2004;427(6973):415–418. doi: 10.1038/nature02257. [DOI] [PubMed] [Google Scholar]

- 25.Elf J, Ehrenberg M. Fast evaluation of fluctuations in biochemical networks with the linear noise approximation. Genome Res. 2003;13(11):2475–2484. doi: 10.1101/gr.1196503. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Elowitz MB, Leibler S. A synthetic oscillatory network of transcriptional regulators. Nature. 2000;403(6767):335–338. doi: 10.1038/35002125. [DOI] [PubMed] [Google Scholar]

- 27.Gardner TS, Cantor CR, Collins JJ. Construction of a genetic toggle switch in escherichia coli. Nature. 2000;403(6767):339–342. doi: 10.1038/35002131. [DOI] [PubMed] [Google Scholar]

- 28.Hasty J, McMillen D, Isaacs F, Collins JJ. Computational studies of gene regulatory networks: in numero molecular biology. Nat. Rev. Genet. 2001;2(4):268–279. doi: 10.1038/35066056. [DOI] [PubMed] [Google Scholar]

- 29.Shannon CE. Communication in the presence of noise. Proc. IRE. 1949;37:10–21. [Google Scholar]

- 30.Barkai N, Leibler S. Robustness in simple biochemical networks. Nature. 1997;387(6636):913–917. doi: 10.1038/43199. [DOI] [PubMed] [Google Scholar]

- 31.Lin J. Divergence measures based on the Shannon entropy. IEEE Trans. Inf. Theory. 1991;37(1):145–151. [Google Scholar]

- 32.Kendall MG. A new measure of rank correlation. Biometrika. 1938;30:81–93. [Google Scholar]

- 33.Kendall MG. Rank correlation methods. Charles Griffin; 1990. [Google Scholar]

- 34.Strong SP, Koberle R, De Ruyter Van Steveninck RR, Bialek W. Entropy and information in neural spike trains. Phys. Rev. Lett. 1998;80:197. [PubMed] [Google Scholar]

- 35.Nemenman I, Lewen GD, Bialek W, De Ruyter Van Steveninck RR. Neural coding of natural stimuli: Information at sub-millisecond resolution. PLoS Comput. Biol. 2008;4:e1000025. doi: 10.1371/journal.pcbi.1000025. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Mann HB, Whitney DR. On a test of whether one of two random variables is stochastically larger than the other. Ann. Math. Stat. 1947;18:50–60. [Google Scholar]

- 37.Wilcoxon F. Individual comparisons by ranking methods. Biometrics Bull. 1945;1(6):80–83. [Google Scholar]

- 38.Kruskal WH, Wallis WA. Use of ranks in one-criterion variance analysis. J. Am. Stat. Assoc. 1952;47(260):583–621. [Google Scholar]

- 39.Salkind N. Encyclopedia of measurement and statistics. Thousand Oaks, CA: Sage; 2007. [Google Scholar]

- 40.Van Nimwegen E, Crutchfield JP. Metastable evolutionary dynamics: crossing fitness barriers or escaping via neutral paths? Bull. Math. Biol. 2000;62(5):799. doi: 10.1006/bulm.2000.0180. [DOI] [PubMed] [Google Scholar]