Abstract

Impulsivity refers to a set of heterogeneous behaviors that are tuned suboptimally along certain temporal dimensions. Impulsive inter-temporal choice refers to the tendency to forgo a large but delayed reward and to seek an inferior but more immediate reward, whereas impulsive motor responses result when subjects fail to suppress inappropriate automatic behaviors. In addition, impulsive actions can be produced when too much emphasis is placed on speed rather than accuracy in a wide range of behaviors, including perceptual decision making. Despite this heterogeneous nature, the prefrontal cortex and its connected areas, such as the basal ganglia, play an important role in gating impulsive actions in a variety of behavioral tasks. Here, we describe key features of the computations necessary for optimal decision making and how their failures can lead to impulsive behaviors. We also review recent findings from neuroimaging and single-neuron recording studies on the neural mechanisms related to impulsive behaviors. Converging approaches in economics, psychology, and neuroscience provide a unique vista for better understanding the nature of behavioral impairments associated with impulsivity.

Keywords: intertemporal choice, temporal discounting, basal ganglia, speed-accuracy tradeoff, response inhibition, switching

Introduction

Decision making refers to the process of choosing, among a number of alternatives, the action that is expected to produce the most beneficial outcome for the decision maker. Given the complexity and diversity of the necessary computations, it is perhaps not surprising that many cortical and subcortical areas in the brain are involved in decision making. In addition, there is substantial variability in the decision-making strategies displayed by different individuals, and many psychiatric disorders, including mood disorders and substance abuse, are thought to result from impairments in some aspects of decision making. Accordingly, understanding the precise nature and neural mechanisms of such variability is an important goal for theories of personality traits and psychiatric disorders (1). In particular, individuals vary substantially in how they deal with time during decision making, and impulsivity often refers to failures to incorporate temporal factors optimally into decisions (2). In the last decade, substantial progress has been made in uncovering the basic mechanisms of decision making, including how the brain synthesizes incoming sensory information and the decision maker’s previous reward history to select the action that is most beneficial to the subject (3–9). This knowledge is essential for understanding the precise nature of dysfunctions in the decision-making circuitry of the brain, including those responsible for impulsive choice behaviors. This review first describes the range of computations required for optimal decision making. Next, it discusses how suboptimal solutions to some of these computational problems can lead to impulsive behaviors. The rest of the article reviews the neural mechanisms of impulsive behaviors.

Computations necessary for optimal decision making

Economic decision making

In general, the outcomes of a particular action might vary depending on the state of the decision maker’s environment. For relatively simple types of decision making, the relationship between actions and their outcomes might be fully known, but often the relationship among the environment, actions, and outcomes changes dynamically and may not be known completely. Nevertheless, formal analyses of decision making tend to share two common features. First, the set of available actions from which the final action can be chosen must be specified. In most behavioral and neurobiological studies of decision making, this set is determined explicitly by the experimenter, although in reality the number of actions that the decision maker needs to consider can potentially be very large. Second, for each action under consideration, the desirability of the outcomes expected from that action must be evaluated. Outcomes of actions can vary along many different dimensions, including not only the type of outcome but also its statistical and temporal properties. For example, performing a particular action or sequence of actions might determine the probability of obtaining a particular amount of money or food, and that outcome might become available only after some delay. In some cases, decision makers might dread the arrival of an aversive outcome and savor the anticipation of a pleasant one. As a result, the preference for a reward might increase as it is delayed, because the delay provides more opportunity to savor its anticipation, whereas the preference for a painful outcome might increase when it occurs sooner (10). Such an anomaly is referred to as negative time preference (11, 12). In most cases, however, humans and animals are more likely to take an action when the reward expected from that action is delivered sooner rather than later, and when the aversive outcome that can be avoided by taking that action is more imminent.
This is referred to as positive time preference, and the process by which the value of a delayed outcome is diminished according to its delay is referred to as delay or temporal discounting (13–15). Similarly, the subjective value of an outcome is commonly depreciated by its uncertainty. Although standard economic choice theory assumes that the utility of an uncertain outcome is weighted linearly by its probability (16), behavioral studies have shown that the decision weight given to a particular outcome might be a non-linear function of its probability (17, 18).
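To make the contrast between linear and non-linear probability weighting concrete, the sketch below compares the linear weight assumed by expected utility theory with the one-parameter weighting function later proposed by Tversky and Kahneman (1992), w(p) = p^γ / (p^γ + (1 − p)^γ)^(1/γ). This specific functional form and the value γ = 0.61 are illustrative assumptions, not taken from this review. With γ < 1, the function overweights small probabilities and underweights large ones; with γ = 1, it reduces to the linear weight.

```python
def expected_utility_weight(p: float) -> float:
    """Standard expected utility: the decision weight equals the probability itself."""
    return p

def tk_weight(p: float, gamma: float = 0.61) -> float:
    """One-parameter probability weighting function (Tversky-Kahneman 1992 form).
    gamma < 1 gives an inverse-S shape; gamma = 0.61 is an illustrative assumption."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    numerator = p ** gamma
    denominator = (p ** gamma + (1.0 - p) ** gamma) ** (1.0 / gamma)
    return numerator / denominator

for p in (0.01, 0.1, 0.5, 0.9, 0.99):
    print(f"p={p:4.2f}  linear={expected_utility_weight(p):.3f}  nonlinear={tk_weight(p):.3f}")
```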

Various cues in the decision maker’s environment provide information about the probabilities and timing of the outcomes expected from each action. In some cases, as in behavioral studies of economic decision making, the statistical and temporal properties of outcomes are explicitly indicated to the decision makers, making it possible to calculate the utilities of alternative actions. More often, however, how the state of the environment alters the relationship between an action and its outcomes has to be learned through experience. Accordingly, the utilities of alternative actions cannot be determined precisely and can only be estimated empirically. To distinguish such estimates from the real utilities of actual outcomes, the empirical estimates of the outcomes expected from a particular action are referred to as value functions in reinforcement learning theory (19). Just as rational decision makers in economic theories select the action with the maximum utility, agents in reinforcement learning theory tend to choose the action with the maximum value function. In contrast to rational economic decision makers, however, reinforcement learning agents must behave stochastically, that is, explore their environment, to gain the opportunity to improve the accuracy of their value functions. This conflict between the need to choose the action with the maximum value function (“exploitation”) and the need to try other actions, sometimes at random (“exploration”), is often referred to as the exploration-exploitation dilemma.
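The value-function and exploration ideas above can be sketched with a two-armed bandit. This minimal example assumes a simple delta-rule update of the chosen action's value, Q ← Q + α(r − Q), and softmax action selection with an inverse temperature β, which is one common way to trade exploitation against exploration; it is not the only one. The reward probabilities (0.8 and 0.2), α = 0.1, and β = 3 are hypothetical values chosen for illustration.

```python
import math
import random

def softmax(values, beta):
    """Choice probabilities; small beta favors exploration, large beta favors exploitation."""
    m = max(values)                                   # subtract the max for numerical stability
    exps = [math.exp(beta * (v - m)) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def run_bandit(reward_probs, n_trials=2000, alpha=0.1, beta=3.0, seed=0):
    """Learn value functions for each action from binary rewards (hypothetical task)."""
    rng = random.Random(seed)
    q = [0.0] * len(reward_probs)          # value functions: empirical estimates of expected reward
    counts = [0] * len(reward_probs)
    for _ in range(n_trials):
        probs = softmax(q, beta)
        action = rng.choices(range(len(q)), weights=probs)[0]
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        q[action] += alpha * (reward - q[action])   # update only the chosen action's value
        counts[action] += 1
    return q, counts

q, counts = run_bandit([0.8, 0.2])
print("value estimates:", [round(v, 2) for v in q])
print("choice counts:", counts)
```

Lowering β makes choices closer to uniform (more exploration), while raising it concentrates choices on the action with the larger value function.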

Perceptual decision making

In a stable environment, decision makers might be able to extract sufficient information from sensory stimuli about the action that produces the most beneficial outcome in a given context. In addition, the outcomes of choosing the correct vs. incorrect actions might be fixed and well known to the decision makers. In such cases, decision making is essentially perceptual, since the outcome of the chosen action depends entirely on identifying the sensory stimulus correctly. However, such perceptual decision making can be challenging when the sensory inputs are noisy and their quality fluctuates over time. In the last two decades, important insights into the neural mechanisms of decision making have come from studies that manipulated the difficulty of decision making by changing the signal strength of sensory stimuli (20–22). Research on two-alternative forced-choice paradigms has generated converging evidence that, during the interval between stimulus onset and motor response, noisy sensory evidence is temporally integrated, analogous to a diffusion process or random walk, and the response is emitted when this accumulated evidence reaches a certain threshold (23, 24). Such models predict, consistent with the findings of many reaction-time studies, that choice accuracy will deteriorate and reaction time will be prolonged as the sensory stimulus becomes noisier.
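The two predictions in the last sentence follow from standard closed-form results for the simplest (pure) drift-diffusion model, in which evidence starts midway between symmetric bounds at ±a and accumulates with drift μ (signal strength) and noise σ: P(correct) = 1/(1 + exp(−2μa/σ²)) and mean decision time = (a/μ)·tanh(μa/σ²). The sketch assumes this idealized model, without trial-to-trial variability in drift or starting point, and treats a noisier stimulus as a smaller drift relative to the noise; the parameter values are illustrative.

```python
import math

def ddm_accuracy(drift: float, bound: float, noise: float = 1.0) -> float:
    """P(correct) for a pure drift-diffusion process starting at 0 with bounds at +/-bound.
    A positive drift means the upper bound is the correct response."""
    return 1.0 / (1.0 + math.exp(-2.0 * drift * bound / noise**2))

def ddm_mean_decision_time(drift: float, bound: float, noise: float = 1.0) -> float:
    """Mean time to reach either bound (non-decision time is excluded)."""
    if drift == 0.0:
        return bound**2 / noise**2   # limit of the expression below as drift -> 0
    return (bound / drift) * math.tanh(drift * bound / noise**2)

# A weaker drift relative to the noise stands in for a noisier, less discriminable stimulus.
for drift in (0.8, 0.4, 0.2, 0.1, 0.05):
    print(f"drift={drift:4.2f}  P(correct)={ddm_accuracy(drift, 1.0):.3f}  "
          f"mean DT={ddm_mean_decision_time(drift, 1.0):.3f}")
```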

Models of impulsivity in decision making

Impulsivity and temporal discounting

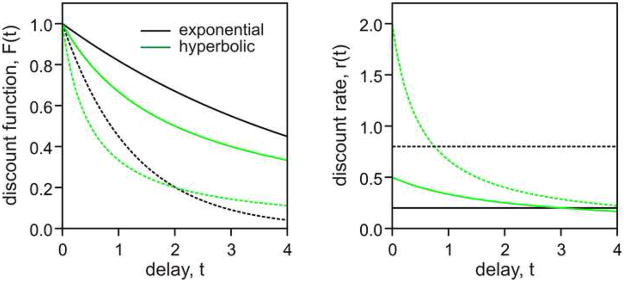

Impulsive decision making can be viewed as a failure to take certain types of temporal factors into account appropriately. However, impulsivity is not a unitary concept; it includes several distinct cases in which time is handled suboptimally. For economic decision making, impulsivity refers to the tendency to weigh immediate outcomes heavily and to discount the value of delayed rewards steeply (13–15, 25). As described above, humans and animals tend to prefer an immediate but smaller reward to a delayed but larger reward when the difference in magnitude is too small or the difference in delay is too large. This pattern of preference is often modeled by a temporal discount function, F(t), which describes how steeply the value of a delayed reward decreases with its delay t. Although several different types of discount functions have been proposed (25), exponential and hyperbolic discount functions have been used in most behavioral studies of inter-temporal choice (Figure 1, left). Each of these discount functions has a single free parameter that controls its steepness, and such parameters can therefore be used as measures of impulsivity. First, the exponential discount function is given by the following.

Figure 1.

Comparison of exponential and hyperbolic discount functions (left) and their corresponding discount rates (right). Exponential discount functions (black) are given by $F_{\mathrm{exp}}(t) = \exp(-k_{\mathrm{exp}} t)$, where $k_{\mathrm{exp}} = 0.2$ (solid) or $0.8$ (dotted), whereas hyperbolic discount functions (green) are given by $F_{\mathrm{hyp}}(t) = 1/(1 + k_{\mathrm{hyp}} t)$, where $k_{\mathrm{hyp}} = 2.0$ (solid) or $0.5$ (dotted). For both types of discount functions, larger values of the $k$ parameter correspond to more impulsive choices.

$$F_{\mathrm{exp}}(t) = \exp(-k_{\mathrm{exp}}\, t),$$

where t denotes reward delay and $k_{\mathrm{exp}}$ corresponds to the discount rate r, defined as the ratio $r = -F'(t)/F(t)$. Therefore, for a given exponential discount function, the discount rate is fixed and independent of reward delay (Figure 1, right). Second, the hyperbolic discount function is given by the following.

$$F_{\mathrm{hyp}}(t) = \frac{1}{1 + k_{\mathrm{hyp}}\, t},$$

where $k_{\mathrm{hyp}}$ is the parameter that controls the steepness of the discount function. In contrast to the exponential discount function, the discount rate of the hyperbolic discount function decreases with delay, according to $r(t) = k_{\mathrm{hyp}}/(1 + k_{\mathrm{hyp}} t)$ (Figure 1, right). Nevertheless, for any delay t > 0, $F_{\mathrm{hyp}}$ decreases as $k_{\mathrm{hyp}}$ increases, so the value of $k_{\mathrm{hyp}}$ can be used as a measure of impulsivity in inter-temporal choice. Many studies have consistently demonstrated that the behavior of humans and other animals during inter-temporal choice is better accounted for by hyperbolic than by exponential discount functions (13, 25–28). In addition, individuals with substance abuse problems tend to display steeper discount functions (29–33), suggesting that such maladaptive behaviors might arise from impulsive choices.
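The difference between the two discount functions can be checked numerically. The sketch below evaluates the discount rate r(t) = −F′(t)/F(t) by a central finite difference for the solid-line parameters in Figure 1 (k_exp = 0.2, k_hyp = 2.0), which should give a constant rate for the exponential function and k_hyp/(1 + k_hyp t) for the hyperbolic one. It also shows one consequence of a declining rate, often called preference reversal: in a hypothetical choice between 5 units now and 10 units 5 time units later, a hyperbolic discounter with k = 0.5 prefers the smaller reward when it is immediate but the larger one when both are pushed 20 units into the future, whereas an exponential discounter's preference does not depend on this common front-end delay. All reward amounts, delays, and k values here are hypothetical.

```python
import math

def f_exp(t: float, k: float) -> float:
    """Exponential discount function F_exp(t) = exp(-k t)."""
    return math.exp(-k * t)

def f_hyp(t: float, k: float) -> float:
    """Hyperbolic discount function F_hyp(t) = 1 / (1 + k t)."""
    return 1.0 / (1.0 + k * t)

def discount_rate(f, t: float, k: float, h: float = 1e-6) -> float:
    """Discount rate r(t) = -F'(t) / F(t), with F'(t) from a central difference."""
    deriv = (f(t + h, k) - f(t - h, k)) / (2.0 * h)
    return -deriv / f(t, k)

def prefers_larger_later(f, k, front_delay, small=5.0, large=10.0, extra_delay=5.0):
    """True if the discounted larger-later reward is worth more than the smaller-sooner one.
    Amounts and delays are hypothetical illustration values."""
    v_small = small * f(front_delay, k)
    v_large = large * f(front_delay + extra_delay, k)
    return v_large > v_small

# Discount rates for the solid-line parameters of Figure 1.
for t in (0.0, 1.0, 2.0, 5.0, 10.0):
    print(f"t={t:4.1f}  r_exp={discount_rate(f_exp, t, 0.2):.3f}  "
          f"r_hyp={discount_rate(f_hyp, t, 2.0):.3f}")

# Preference reversal: only the hyperbolic discounter changes its choice.
for front in (0.0, 20.0):
    print(f"front delay {front:4.1f}: hyperbolic prefers larger-later="
          f"{prefers_larger_later(f_hyp, 0.5, front)}, exponential="
          f"{prefers_larger_later(f_exp, 0.2, front)}")
```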

Impulsivity and response inhibition

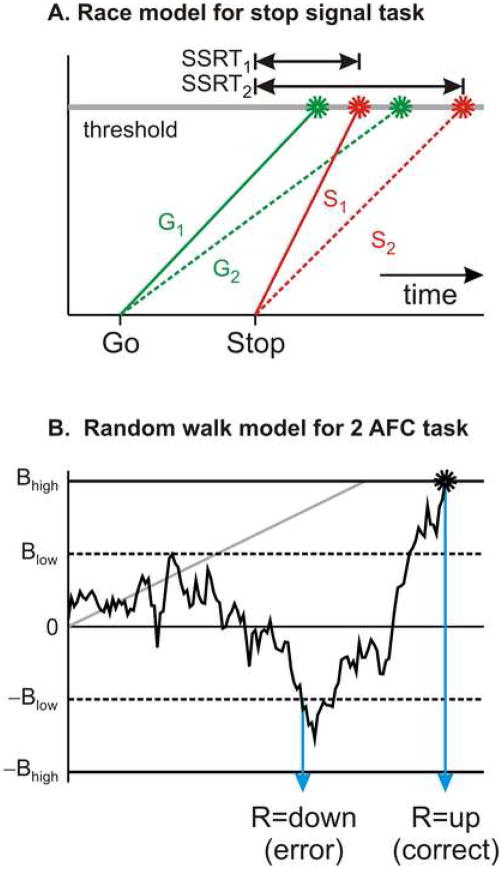

In addition to steep temporal discounting, impulsivity also refers to the tendency to produce a habitual or other default action prematurely. The ability to abort or suppress a movement that was previously prepared but is no longer desired has been studied extensively using the stop-signal task (34, 35). In this task, the subject is required to respond to a particular sensory stimulus, such as a tone or visual target, but in a small proportion of trials, the subject is also required to cancel the movement in response to a stop signal. Whether the subject can successfully abort the movement depends largely on the interval between target onset and stop-signal onset, often referred to as the stop-signal delay (SSD). For a short SSD, namely, when the stop signal immediately follows the target, the subject is more likely to cancel the movement than when the SSD is longer. The performance of humans and monkeys in stop-signal tasks has been well accounted for by the horse-race model (34, 36; Figure 2A). In this model, the onset of the target and the onset of the stop signal trigger go and stop processes, respectively, and the movement is canceled only when the stop process reaches the threshold before the go process. The duration of the go process can be estimated directly from the observed distribution of reaction times in trials without stop signals. The duration of the stop process, referred to as the stop-signal reaction time (SSRT), cannot be observed directly, however, and must be inferred from the probability of cancelling the movement at each SSD. A relatively short SSRT implies that the subject can successfully abort the movement even when the stop signal is somewhat delayed (i.e., a long SSD). In contrast, a long SSRT corresponds to a reduced ability to suppress a movement that is no longer appropriate and can therefore be used as a measure of impulsivity.
In fact, children with attention-deficit/hyperactivity disorder (ADHD) show prolonged SSRTs compared with typically developing children (37), suggesting that impaired response inhibition might be a core deficit in this disorder (38). However, it is not entirely clear whether a longer SSRT is a central characteristic of the behavioral deficit in ADHD, because reaction times in children with ADHD are more variable, and this variability might introduce systematic biases into SSRT estimates (39, 40).
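The inference of SSRT from the horse-race model can be made concrete with a small simulation. This is a minimal sketch assuming an independent race in which go finishing times are Gaussian (mean 400 ms, SD 60 ms) and the stop process has a fixed duration of 200 ms; all of these values are hypothetical. It estimates SSRT with the so-called integration method associated with Logan and Cowan: for each SSD, the go reaction time at the quantile equal to the observed probability of responding on stop trials is taken as the moment the stop process finishes, and the SSD is subtracted. This is one common estimator, not necessarily the one used in the studies cited above.

```python
import math
import random

def simulate_stop_signal(ssrt=200.0, go_mean=400.0, go_sd=60.0,
                         ssds=(150.0, 200.0, 250.0), n_go=5000, n_stop=2000, seed=1):
    """Independent horse race with Gaussian go finishing times (ms) and a fixed SSRT (ms).
    Returns sorted go-trial RTs and P(respond | SSD) for each SSD."""
    rng = random.Random(seed)
    go_rts = sorted(rng.gauss(go_mean, go_sd) for _ in range(n_go))
    p_respond = {}
    for ssd in ssds:
        # On a stop trial the movement escapes if the go process finishes before the stop process.
        n_responded = sum(1 for _ in range(n_stop) if rng.gauss(go_mean, go_sd) < ssd + ssrt)
        p_respond[ssd] = n_responded / n_stop
    return go_rts, p_respond

def estimate_ssrt(go_rts, p_respond):
    """Integration method: for each SSD, take the go RT at quantile P(respond | SSD)
    and subtract the SSD; average the estimates across SSDs."""
    n = len(go_rts)
    estimates = []
    for ssd, p in p_respond.items():
        idx = min(n - 1, max(0, math.ceil(p * n) - 1))
        estimates.append(go_rts[idx] - ssd)
    return sum(estimates) / len(estimates)

go_rts, p_respond = simulate_stop_signal()
print("P(respond | SSD):", p_respond)
print(f"estimated SSRT = {estimate_ssrt(go_rts, p_respond):.1f} ms (simulated value 200 ms)")
```

Because the estimate is read off the go reaction-time distribution, anything that distorts that distribution also affects the SSRT estimate.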

Figure 2.

Models of impulsive behavioral responses. A. Horse-race model for response inhibition in a stop-signal task. Go (green, G1 or G2) and stop (red, S1 or S2) processes are initiated when the target for the movement and stop signal are presented, respectively. Movements are canceled only if the stop process reaches the threshold (gray line) before the go process. Stop-signal reaction time (SSRT) refers to the time needed by the stop process to reach the threshold. In this example, a short SSRT (S1) successfully cancels the movement with a slow go process (G2), whereas a long SSRT (S2) fails to cancel any movement. B. Random-walk or drift-diffusion model for a two-alternative forced choice (2AFC) task. Noisy evidence from a sensory stimulus is temporally integrated and “up” or “down” response is triggered depending on whether this integrated evidence reaches the upper or lower bound. In this example, the average signal is positive (a gray straight line). For a bound closer to the origin (Blow), an error is committed when the integrated evidence crosses the lower bound (−Blow). In contrast, a correct response is made for a bound farther from the origin (Bhigh).

Impulsivity and evidence accumulation

In the horse race model used to account for the behaviors in stop-signal tasks, the rate of increase in the activation for each process is variable across trials but remains constant within a given trial (Figure 2A). In contrast, in the so-called random-walk or drift-diffusion models, it is assumed that the sensory inputs are corrupted by noise and therefore display moment-to-moment fluctuations in their signal strengths (Figure 2B). The process of accumulating noisy sensory evidence is therefore considered analogous to the diffusion of a particle in one dimension, and the ultimate choice is determined by which of the two bounds (upper or lower) is crossed first. The accuracy of identification based on such noisy sensory evidence is enhanced by this temporal integration. These models provide an intuitive explanation for speed-accuracy trade-off, commonly observed in choice reaction time tasks (41). When the bounds are set farther away from the starting point, the choices become more accurate, but the reaction times increase (Figure 2B). Conversely, if the bounds are close to the origin, random fluctuations in the momentary sensory signals are more likely to produce errors, but this decreases the average reaction times. Therefore, within this framework, impulsive behaviors result from the bounds that are set too close to the origin (e.g., Blow in Figure 2B).
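The speed-accuracy trade-off described above can be reproduced by simulating the diffusion process directly. The sketch below is an Euler-Maruyama discretization, assuming unit noise, a time step of 0.005 time units, a drift of 1.0, and bounds of 0.5 and 1.5 standing in for B_low and B_high in Figure 2B; these values are illustrative only, and non-decision time is ignored.

```python
import math
import random

def simulate_ddm(drift, bound, n_trials=1000, noise=1.0, dt=0.005, seed=2):
    """Euler-Maruyama simulation of a drift-diffusion process that starts at 0 and stops
    at +bound (correct, since drift > 0 here) or -bound (error).
    Returns (fraction correct, mean decision time in the same units as dt)."""
    rng = random.Random(seed)
    step_sd = noise * math.sqrt(dt)   # SD of the noise increment per time step
    n_correct = 0
    total_time = 0.0
    for _ in range(n_trials):
        x, t = 0.0, 0.0
        while -bound < x < bound:
            x += drift * dt + rng.gauss(0.0, step_sd)
            t += dt
        n_correct += 1 if x >= bound else 0
        total_time += t
    return n_correct / n_trials, total_time / n_trials

for label, bound in (("B_low", 0.5), ("B_high", 1.5)):
    accuracy, mean_dt = simulate_ddm(drift=1.0, bound=bound)
    print(f"{label}: accuracy={accuracy:.3f}, mean decision time={mean_dt:.3f}")
```

With the bound closer to the origin, more trials terminate at the wrong bound, but they terminate sooner, which is the sense in which a low bound produces impulsive responding in this framework.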

Neural mechanisms of impulsive decision making

Neural basis of temporal discounting

Inter-temporal choice behaviors are relatively well described by models of temporal discounting. If so, the neural substrates of inter-temporal choice should somehow integrate information about the magnitude and delay of the reward expected from each option. Indeed, blood-oxygen-level-dependent (BOLD) signals measured with functional magnetic resonance imaging (fMRI) in the medial frontal cortex and ventral striatum are correlated with the temporally discounted values of the rewards chosen by subjects during inter-temporal choice tasks, suggesting that information about reward magnitude and delay might be combined in these brain areas (42–49). Lesion and neuropharmacological studies have also implicated the orbitofrontal cortex (50, 51) and the nucleus accumbens (52) in temporal discounting. However, the results of neuroimaging and lesion studies do not reveal how information about reward magnitude and delay is combined at the level of individual neurons.

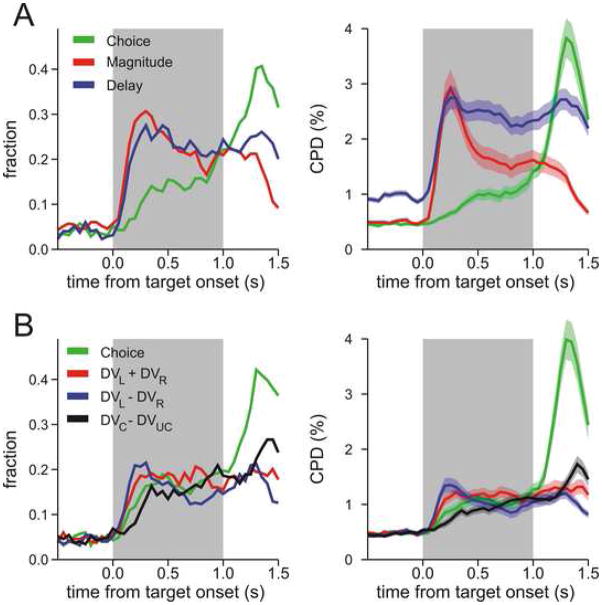

In particular, if the activity of a given neuron related to reward magnitude and delay reflects temporally discounted values, then it should change similarly when the magnitude of a reward is increased and when its delay is decreased. Indeed, single-neuron recording studies in rodents and non-human primates have shown that neurons in the prefrontal cortex, as well as dopamine neurons, change their activity according to the magnitude or delay of the reward expected by the animal (53–60). When the animal’s choice depends on both the magnitude and delay of reward, the activity of neurons in the prefrontal cortex is systematically influenced by both variables, and consequently, prefrontal activity reflects temporally discounted values (7, 59). In addition, the time course and nature of the signals related to temporally discounted values in the prefrontal cortex suggest that this area is involved in multiple computations during inter-temporal choice. For example, immediately after the magnitude and delay of the reward available from each option are specified, neurons in the prefrontal cortex largely encode the sum of the temporally discounted values of the rewards available from the different options as well as their difference, whereas signals related to the animal’s choice and to the temporally discounted value of the chosen reward develop more gradually (Figure 3B). These results suggest that the prefrontal cortex is involved in gradually transforming signals related to the values of individual options into the animal’s choice.

Figure 3.

Time course of signals related to the temporally discounted values and choice in the dorsolateral prefrontal cortex (DLPFC). A. Signals related to the magnitude (red) and delay (blue) of the reward expected from each choice and the choice of the animal (leftward or rightward saccade; green). B. Signals related to the sum of the temporally discounted values of the two alternative rewards (DVL and DVR for the left and right targets, respectively; red), their difference (blue), the difference between the temporally discounted values of the chosen (DVC) and unchosen (DVUC) rewards (black), and the animal’s choice (green). Left panels, fraction of neurons that showed significant modulation of their activity by each variable in a 200-ms sliding window analyzed with a multiple linear regression model. Right panels, coefficient of partial determination (CPD) for each variable in the same regression model, averaged across the population of DLPFC neurons (59).
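The CPD used in Figure 3 measures the fraction of the variance left unexplained by a regression model lacking a given regressor that is accounted for once that regressor is added, CPD = (SSE_reduced - SSE_full) / SSE_reduced. The sketch below applies this standard definition, using NumPy, to a simulated, hypothetical neuron whose firing rate encodes DVL + DVR. The trial structure, the discount parameter k = 0.2, and the firing-rate model are assumptions for illustration, not the design or data of (59), and a single time window is analyzed rather than a sliding window.

```python
import numpy as np

def sse(X: np.ndarray, y: np.ndarray) -> float:
    """Residual sum of squares of an ordinary least-squares fit of y on the columns of X."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    return float(resid @ resid)

def cpd(X: np.ndarray, y: np.ndarray, j: int) -> float:
    """Coefficient of partial determination of column j:
    (SSE without column j - SSE of the full model) / SSE without column j."""
    sse_full = sse(X, y)
    sse_reduced = sse(np.delete(X, j, axis=1), y)
    return (sse_reduced - sse_full) / sse_reduced

# Hypothetical trials: reward magnitude and delay for the left and right targets.
rng = np.random.default_rng(3)
n = 500
k = 0.2                                   # assumed hyperbolic discount parameter
mag_l, mag_r = rng.integers(1, 5, n), rng.integers(1, 5, n)
delay_l, delay_r = rng.choice([0, 2, 4, 8], n), rng.choice([0, 2, 4, 8], n)
dv_l = mag_l / (1.0 + k * delay_l)        # temporally discounted values
dv_r = mag_r / (1.0 + k * delay_r)

# Hypothetical neuron whose firing rate encodes DVL + DVR plus Gaussian noise.
rate = 5.0 + 4.0 * (dv_l + dv_r) + rng.normal(0.0, 1.0, n)

X = np.column_stack([np.ones(n), dv_l + dv_r, dv_l - dv_r])   # intercept, sum, difference
print(f"CPD(sum) = {cpd(X, rate, 1):.3f}, CPD(difference) = {cpd(X, rate, 2):.3f}")
```

Because this simulated neuron encodes only the sum, the CPD for the sum regressor is large while the CPD for the difference stays near zero.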

The convergence of signals related to reward magnitude and delay, and the resulting encoding of temporally discounted values, might also allow the prefrontal cortex to monitor and enhance the decision maker’s ability to pursue long-term benefits by resisting the temptation of immediate reward. Consistent with this possibility, some neuroimaging studies have found that BOLD activity in the dorsolateral prefrontal cortex is enhanced when the subject chooses the larger but more delayed reward during inter-temporal choice (61, 62). Moreover, the involvement of the dorsolateral prefrontal cortex might generalize to other situations in which decision makers must exert self-control to choose the options that maximize their long-term benefits. Such abilities have strong implications for health care, since they might help prevent overeating and substance abuse (29–33). For example, a recent study identified stronger BOLD activity in the dorsolateral prefrontal cortex when dieters successfully chose healthy rather than tasty food (63). In addition, during trials in which the subjects successfully exerted self-control and chose healthy food, activity in the dorsolateral prefrontal cortex influenced activity in the ventromedial prefrontal cortex, a region whose activity commonly reflects the subjective value of the option chosen by the subject. This interaction might be indirect and mediated by Brodmann area 46 in the inferior frontal gyrus (63).

Neural basis of response inhibition

Many of our actions are habitual, and they are often generated automatically and rapidly without much deliberation. To produce the most appropriate behavior, such automatic and habitual responses often must first be suppressed. Numerous lesion, neuroimaging, and single-neuron recording studies have suggested that this ability to suppress undesirable movements might require the prefrontal cortex (64–69) and basal ganglia (70, 71). Currently, however, whether and how the specific cognitive processes involved in response inhibition are localized within different subregions of the prefrontal cortex is not completely understood. For example, a number of studies have suggested that the right inferior frontal gyrus (IFG) or the ventrolateral prefrontal cortex (VLPFC) might be particularly important for response inhibition during a go/no-go or stop-signal task (64–66). However, other studies have also implicated the dorsolateral prefrontal cortex in response inhibition (67–69).

Single-neuron recording studies in non-human primates have focused on the role of the frontal eye field (FEF) and supplementary eye field (SEF) in inhibiting previously planned saccadic eye movements during a stop-signal task (72–74). These studies found that some neurons in the FEF display differential activity early enough to cause the cancellation of upcoming eye movements during stop-signal trials (72), whereas neurons in the SEF seem to play a role largely in monitoring the outcome of unwanted eye movements (73, 74). Single-neuron recordings in the supplementary and pre-supplementary motor areas during a manual stop-signal task also indicated that the medial frontal cortex might be more involved in monitoring the consequences of the animal’s actions and in their motivational control (75). These results suggest that the activation of the human medial frontal cortex observed during a stop-signal task might also reflect functions related to performance monitoring (76). In addition, results from diffusion-weighted imaging tractography studies suggest that a network consisting of the pre-supplementary motor area (pre-SMA), the right IFG, and the subthalamic nucleus might play an important role in inhibiting unwanted movements (77).

Whereas the medial frontal cortex might not play a major role in response inhibition during a stop-signal task, it might be more involved in overcoming the tendency to produce habitual and automatic responses according to various contextual cues (78). For example, neurons in the pre-SMA often increase their activity immediately before the animal switches from an automatic, repeated pattern of behavior to a new pattern in response to sensory cues (79, 80). A lack of phasic activity in these so-called switching neurons was associated with failures to suppress automatic actions. In addition, the activity patterns of switching neurons during a go/no-go task suggest that they are involved in suppressing a particular action as well as in facilitating the desired action (80). In contrast, neurons in the subthalamic nucleus contribute largely to suppressing automatic actions that are no longer appropriate (81). These results are consistent with the finding that disruption of the subthalamic nucleus leads to premature and impulsive actions (78, 82, 83). The subthalamic nucleus is known to mediate a short-latency excitation of the internal segment of the globus pallidus (GPi) induced by cortical stimulation. Because the subthalamic nucleus thus relays cortical signals to the GPi without passing through the striatum, it is considered part of the “hyperdirect” pathway (84). Since GPi output inhibits downstream neurons in the thalamus, this pathway is thought to be capable of suppressing alternative actions and promoting the selection of the most appropriate action (70, 85). Therefore, neurophysiological studies of action switching have highlighted the role of the subthalamic nucleus in response inhibition and the control of impulsive actions. However, whether this function is unique to the subthalamic nucleus or shared by other areas of the basal ganglia, such as the striatum, needs to be investigated further (86).

Neural basis of perceptual decision making

The random-walk or drift-diffusion models describe how both the accuracy and the speed of decision making are influenced by stimulus discriminability (Figure 2B). The same models also provide a parsimonious explanation for the speed-accuracy trade-off in terms of shifts in their thresholds or bounds (Figure 2B). A threshold placed closer to the origin can be crossed more easily by noisy fluctuations in the integrated variable, leading to shorter reaction times but more errors. There is a large body of theoretical as well as empirical evidence for these mathematical models of decision making (5, 87, 88), but the mechanisms for adjusting the threshold are not well understood. Neurophysiological studies have nonetheless identified possible neural correlates of such a threshold. For example, the onset of a saccadic eye movement during a choice reaction time task is tightly coupled to the moment when the activity of individual neurons in the frontal eye field reaches a particular threshold (89). In the posterior parietal cortex, such as the lateral intraparietal area (LIP), the activity of individual neurons tends to rise at a rate that increases with stimulus strength during a perceptual decision-making task, converging to a particular level shortly before the animal produces its behavioral response (5, 90). These results suggest that the bound or threshold postulated in drift-diffusion models might correspond to a fixed level of activity that must be reached by the neurons responsible for implementing a particular choice or action. Accordingly, impulsive behaviors during perceptual decision making might be accompanied by a lower threshold, causing movements to be triggered more quickly even when the rate at which activity rises in cortical areas such as the FEF and LIP remains unchanged. These predictions need to be tested in future studies.
Results from neuroimaging studies have also suggested that the cortical and subcortical network involved in switching from automatic to more controlled behaviors, including the pre-SMA and the subthalamic nucleus, might also be involved in the speed-accuracy trade-off (41, 91–93). However, the underlying neural mechanisms need to be investigated in detail by characterizing the properties of single neurons in these areas.
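The lower-threshold account of impulsive perceptual decisions can be illustrated with a linear rise-to-threshold model in the spirit of Carpenter's LATER model, in which the rate of rise varies from trial to trial but is constant within a trial, as in the horse-race model of Figure 2A. In this hypothetical sketch, reaction time is threshold/rate, rates are Gaussian and truncated to positive values, and all parameter values are assumptions. Lowering the threshold by 20% while leaving the rate distribution unchanged shortens the median reaction time by the same proportion, whereas a weaker stimulus, modeled as a lower mean rate, lengthens it.

```python
import random
import statistics

def simulate_rts(threshold, rate_mean, rate_sd=1.0, n=2000, seed=4):
    """Reaction times for a linear rise to a fixed threshold. The rate of rise is drawn
    once per trial (constant within a trial); non-positive rates are discarded."""
    rng = random.Random(seed)
    rts = []
    while len(rts) < n:
        rate = rng.gauss(rate_mean, rate_sd)
        if rate > 0:
            rts.append(threshold / rate)
    return rts

baseline = statistics.median(simulate_rts(threshold=1.0, rate_mean=5.0))
lowered = statistics.median(simulate_rts(threshold=0.8, rate_mean=5.0))          # same rates, lower threshold
weaker_stimulus = statistics.median(simulate_rts(threshold=1.0, rate_mean=3.0))  # slower rise, same threshold
print(f"median RT: baseline={baseline:.3f}, lower threshold={lowered:.3f}, "
      f"weaker stimulus={weaker_stimulus:.3f}")
```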

Conclusions

Impulsivity is often associated with a number of mental illnesses, ranging from attention-deficit/hyperactivity disorder (94) to substance abuse (95). Although this label applies to a set of heterogeneous behavioral features, these features are related in that impulsive behaviors are suboptimally tuned along certain temporal dimensions. For inter-temporal choice, impulsivity implies placing too much weight on immediate outcomes, whereas other types of impulsivity result from placing a premium on the speed rather than the accuracy of responses, or from failures to suppress inappropriate actions. Data from a number of studies suggest an important role for the prefrontal cortex and basal ganglia in controlling multiple types of impulsivity. Neuroimaging and lesion studies have implicated specific anatomical areas, including the medial frontal cortex and basal ganglia in addition to the prefrontal cortex, in controlling impulsive behaviors. In addition, single-neuron recording studies in non-human primates have characterized the dynamic properties of the neural activity that underlies temporal discounting and the suppression of prepotent but inappropriate behaviors. As we better understand the neural mechanisms underlying various forms of impulsivity, such knowledge should lead to improvements in the diagnosis and treatment of impulsivity-related behavioral disorders.

Acknowledgments

This study was supported by the National Institutes of Health (RL1 DA024855).

References

- 1.Whiteside SP, Lynam DR. The five factor model and impulsivity: using a structural model of personality to understand impulsivity. Pers Indiv Differ. 2001;30:669–689. [Google Scholar]

- 2.Evenden JL. Varieties of impulsivity. Psychopharmacology. 1999;146:348–361. doi: 10.1007/pl00005481. [DOI] [PubMed] [Google Scholar]

- 3.Glimcher PW. The neurobiology of visual-saccadic decision making. Annu Rev Neurosci. 2003;26:133–179. doi: 10.1146/annurev.neuro.26.010302.081134. [DOI] [PubMed] [Google Scholar]

- 4.Lee D. Neural basis of quasi-rational decision making. Curr Op Neurobiol. 2006;16:191–198. doi: 10.1016/j.conb.2006.02.001. [DOI] [PubMed] [Google Scholar]

- 5.Gold JI, Shadlen MN. The neural basis of decision making. Ann Rev Neurosci. 2007;30:535–574. doi: 10.1146/annurev.neuro.29.051605.113038. [DOI] [PubMed] [Google Scholar]

- 6.Seo H, Lee D. Cortical mechanisms for reinforcement learning in competitive games. Philos Trans Roy Soc Lond B Biol Sci. 2008;363:3845–3857. doi: 10.1098/rstb.2008.0158. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Kim S, Hwang J, Seo H, Lee D. Valuation of uncertain and delayed rewards in primate prefrontal cortex. Neural Networks. 2009;22:294–304. doi: 10.1016/j.neunet.2009.03.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Curtis CE, Lee D. Beyond working memory: the role of persistent activity in decision making. Trends Cogn Sci. 2010;14:216–222. doi: 10.1016/j.tics.2010.03.006. [DOI] [PMC free article] [PubMed] [Google Scholar]

9. Rangel A, Hare T. Neural computations associated with goal-directed choice. Curr Opin Neurobiol. 2010;20:262–270. doi: 10.1016/j.conb.2010.03.001.

10. Berns GS, Chappelow J, Cekic M, Zink CF, Pagnoni G, Martin-Skurski ME. Neurobiological substrates of dread. Science. 2006;312:754–758. doi: 10.1126/science.1123721.

11. Loewenstein G, Prelec D. Negative time preference. Am Econ Rev. 1991;81:347–352.

12. Loewenstein GF, Prelec D. Preferences for sequences of outcomes. Psychol Rev. 1993;100:91–108.

13. Frederick S, Loewenstein G, O’Donoghue T. Time discounting and time preference: a critical review. J Econ Lit. 2002;40:351–401.

14. Green L, Myerson J. A discounting framework for choice with delayed and probabilistic rewards. Psychol Bull. 2004;130:769–792. doi: 10.1037/0033-2909.130.5.769.

15. Kalenscher T, Pennartz CMA. Is a bird in the hand worth two in the future? The neuroeconomics of intertemporal decision-making. Prog Neurobiol. 2008;84:284–315. doi: 10.1016/j.pneurobio.2007.11.004.

16. von Neumann J, Morgenstern O. Theory of games and economic behavior. Princeton, NJ: Princeton University Press; 1944.

17. Kahneman D, Tversky A. Prospect theory: an analysis of decision under risk. Econometrica. 1979;47:263–291.

18. Hertwig R, Erev I. The description-experience gap in risky choice. Trends Cogn Sci. 2009;13:517–523. doi: 10.1016/j.tics.2009.09.004.

19. Sutton RS, Barto AG. Reinforcement learning: an introduction. Cambridge, MA: MIT Press; 1998.

20. Britten KH, Shadlen MN, Newsome WT, Movshon JA. The analysis of visual motion: a comparison of neuronal and psychophysical performance. J Neurosci. 1992;12:4745–4765. doi: 10.1523/JNEUROSCI.12-12-04745.1992.

21. Shadlen MN, Britten KH, Newsome WT, Movshon JA. A computational analysis of the relationship between neuronal and behavioral responses to visual motion. J Neurosci. 1996;16:1486–1510. doi: 10.1523/JNEUROSCI.16-04-01486.1996.

22. Romo R, Salinas E. Touch and go: decision-making mechanisms in somatosensation. Annu Rev Neurosci. 2001;24:107–137. doi: 10.1146/annurev.neuro.24.1.107.

23. Laming DRJ. Information theory of choice-reaction times. London: Academic Press; 1968.

24. Smith PL, Ratcliff R. Psychology and neurobiology of simple decisions. Trends Neurosci. 2004;27:161–168. doi: 10.1016/j.tins.2004.01.006.

25. Hwang J, Kim S, Lee D. Temporal discounting and inter-temporal choice in rhesus monkeys. Front Behav Neurosci. 2009;3:9. doi: 10.3389/neuro.08.009.2009.

26. Mazur JE. An adjusting procedure for studying delayed reinforcement. In: Commons ML, Mazur JE, Nevin JA, Rachlin H, editors. Quantitative analyses of behavior: the effect of delay and of intervening events on reinforcement value. Vol. 5. Hillsdale: Erlbaum; 1987. pp. 55–73.

27. Kirby KN. Bidding on the future: evidence against normative discounting of delayed rewards. J Exp Psychol Gen. 1997;126:54–70.

28. Madden GJ, Begotka AM, Raiff BR, Kastern LL. Delay discounting of real and hypothetical rewards. Exp Clin Psychopharmacol. 2003;11:139–145. doi: 10.1037/1064-1297.11.2.139.

29. Madden GJ, Petry NM, Badger GJ, Bickel WK. Impulsive and self-control choices in opioid-dependent patients and non-drug-using control patients: drug and monetary rewards. Exp Clin Psychopharmacol. 1997;5:256–262. doi: 10.1037//1064-1297.5.3.256.

30. Vuchinich RE, Simpson CA. Hyperbolic temporal discounting in social drinkers and problem drinkers. Exp Clin Psychopharmacol. 1998;6:292–305. doi: 10.1037//1064-1297.6.3.292.

31. Mitchell SH. Measures of impulsivity in cigarette smokers and non-smokers. Psychopharmacology. 1999;146:455–464. doi: 10.1007/pl00005491.

32. Kirby KN, Petry NM. Heroin and cocaine abusers have higher discount rates for delayed rewards than alcoholics or non-drug-using controls. Addiction. 2004;99:461–471. doi: 10.1111/j.1360-0443.2003.00669.x.

33. Reynolds BA. A review of delay-discounting research with humans: relations to drug use and gambling. Behav Pharmacol. 2006;17:651–667. doi: 10.1097/FBP.0b013e3280115f99.

34. Logan GD, Cowan WB. On the ability to inhibit thought and action: a theory for an act of control. Psychol Rev. 1984;91:295–327.

35. Verbruggen F, Logan GD. Models of response inhibition in the stop-signal and stop-change paradigms. Neurosci Biobehav Rev. 2009;33:647–661. doi: 10.1016/j.neubiorev.2008.08.014.

36. Band GPH, van der Molen MW, Logan GD. Horse-race model simulations of the stop-signal procedure. Acta Psychol. 2003;112:105–142. doi: 10.1016/s0001-6918(02)00079-3.

37. Oosterlaan J, Logan GD, Sergeant JA. Response inhibition in AD/HD, CD, comorbid AD/HD+CD, anxious, and control children: a meta-analysis of studies with the stop task. J Child Psychol Psychiatry. 1998;39:411–425.

38. Barkley RA. Behavioral inhibition, sustained attention, and executive functions: constructing a unifying theory of ADHD. Psychol Bull. 1997;121:65–94. doi: 10.1037/0033-2909.121.1.65.

39. Lijffijt M, Kenemans L, Verbaten MN, van Engeland H. A meta-analytic review of stopping performance in attention-deficit/hyperactivity disorder: deficient inhibitory motor control? J Abnorm Psychol. 2005;114:216–222. doi: 10.1037/0021-843X.114.2.216.

40. Alderson RM, Rapport MD, Kofler MJ. Attention-deficit/hyperactivity disorder and behavioral inhibition: a meta-analytic review of the stop-signal paradigm. J Abnorm Child Psychol. 2007;35:745–758. doi: 10.1007/s10802-007-9131-6.

41. Bogacz R, Wagenmakers E-J, Forstmann BU, Nieuwenhuis S. The neural basis of the speed-accuracy tradeoff. Trends Neurosci. 2010;33:10–16. doi: 10.1016/j.tins.2009.09.002.

42. Hariri AR, Brown SM, Williamson DE, Flory JD, de Wit H, Manuck SB. Preference for immediate over delayed rewards is associated with magnitude of ventral striatal activity. J Neurosci. 2006;26:13213–13217. doi: 10.1523/JNEUROSCI.3446-06.2006.

43. Wittmann M, Leland DS, Paulus MP. Time and decision making: differential contribution of the posterior insular cortex and the striatum during a delay discounting task. Exp Brain Res. 2007;179:643–653. doi: 10.1007/s00221-006-0822-y.

44. Kable JW, Glimcher PW. The neural correlates of subjective value during intertemporal choice. Nat Neurosci. 2007;10:1625–1633. doi: 10.1038/nn2007.

45. Weber BJ, Huettel SA. The neural substrates of probabilistic and intertemporal decision making. Brain Res. 2008;1234:104–115. doi: 10.1016/j.brainres.2008.07.105.

46. Luhmann CC, Chun MM, Yi D-J, Lee D, Wang X-J. Neural dissociation of delay and uncertainty in intertemporal choice. J Neurosci. 2008;28:14459–14466. doi: 10.1523/JNEUROSCI.5058-08.2008.

47. Pine A, Seymour B, Roiser JP, Bossaerts P, Friston KJ, Curran HV, Dolan RJ. Encoding the marginal utility across time in the human brain. J Neurosci. 2009;29:9575–9581. doi: 10.1523/JNEUROSCI.1126-09.2009.

48. Ballard K, Knutson B. Dissociable neural representations of future reward magnitude and delay during temporal discounting. Neuroimage. 2009;45:143–150. doi: 10.1016/j.neuroimage.2008.11.004.

49. Bickel WK, Pitcock JA, Yi R, Angtuaco EJC. Congruence of BOLD response across intertemporal choice conditions: fictive and real money gains and losses. J Neurosci. 2009;29:8839–8846. doi: 10.1523/JNEUROSCI.5319-08.2009.

50. Mobini S, Body S, Ho M-Y, Bradshaw CM, Szabadi E, Deakin JFW, Anderson IM. Effects of lesions of the orbitofrontal cortex on sensitivity to delayed and probabilistic reinforcement. Psychopharmacology. 2002;160:290–298. doi: 10.1007/s00213-001-0983-0.

51. Winstanley CA, Theobald DEH, Cardinal RN, Robbins TW. Contrasting roles of basolateral amygdala and orbitofrontal cortex in impulsive choice. J Neurosci. 2004;24:4718–4722. doi: 10.1523/JNEUROSCI.5606-03.2004.

52. Cardinal RN, Pennicott DR, Sugathapala CL, Robbins TW, Everitt BJ. Impulsive choice induced in rats by lesions of the nucleus accumbens core. Science. 2001;292:2499–2501. doi: 10.1126/science.1060818.

53. Roesch MR, Olson CR. Neuronal activity related to reward value and motivation in primate frontal cortex. Science. 2004;304:307–310. doi: 10.1126/science.1093223.

54. Roesch MR, Olson CR. Neuronal activity dependent on anticipated and elapsed delay in macaque prefrontal cortex, frontal and supplementary eye fields, and premotor cortex. J Neurophysiol. 2005;94:1469–1497. doi: 10.1152/jn.00064.2005.

55. Roesch MR, Olson CR. Neuronal activity in primate orbitofrontal cortex reflects the value of time. J Neurophysiol. 2005;94:2457–2471. doi: 10.1152/jn.00373.2005.

56. Roesch MR, Taylor AR, Schoenbaum G. Encoding of time-discounted rewards in orbitofrontal cortex is independent of value representation. Neuron. 2006;51:509–520. doi: 10.1016/j.neuron.2006.06.027.

57. Roesch MR, Calu DJ, Schoenbaum G. Dopamine neurons encode the better option in rats deciding between differently delayed or sized rewards. Nat Neurosci. 2007;10:1615–1624. doi: 10.1038/nn2013.

58. Sohn J-W, Lee D. Order-dependent modulation of directional signals in the supplementary and presupplementary motor areas. J Neurosci. 2007;27:13655–13666. doi: 10.1523/JNEUROSCI.2982-07.2007.

59. Kim S, Hwang J, Lee D. Prefrontal coding of temporally discounted values during intertemporal choice. Neuron. 2008;59:161–172. doi: 10.1016/j.neuron.2008.05.010.

60. Kobayashi S, Schultz W. Influence of reward delays on responses of dopamine neurons. J Neurosci. 2008;28:7837–7846. doi: 10.1523/JNEUROSCI.1600-08.2008.

61. McClure SM, Ericson KM, Laibson DI, Loewenstein G, Cohen JD. Time discounting for primary rewards. J Neurosci. 2007;27:5796–5804. doi: 10.1523/JNEUROSCI.4246-06.2007.

62. McClure SM, Laibson DI, Loewenstein G, Cohen JD. Separate neural systems value immediate and delayed monetary rewards. Science. 2004;306:503–507. doi: 10.1126/science.1100907.

63. Hare TA, Camerer CF, Rangel A. Self-control in decision-making involves modulation of the vmPFC valuation system. Science. 2009;324:646–648. doi: 10.1126/science.1168450.

64. Konishi S, Nakajima K, Uchida I, Kikyo H, Kameyama M, Miyashita Y. Common inhibitory mechanism in human inferior prefrontal cortex revealed by event-related functional MRI. Brain. 1999;122:981–991. doi: 10.1093/brain/122.5.981.

65. Aron AR, Fletcher PC, Bullmore ET, Sahakian BJ, Robbins TW. Stop-signal inhibition disrupted by damage to right inferior frontal gyrus in humans. Nat Neurosci. 2003;6:115–116. doi: 10.1038/nn1003.

66. Aron AR, Robbins TW, Poldrack RA. Inhibition and the right inferior frontal cortex. Trends Cogn Sci. 2004;8:170–177. doi: 10.1016/j.tics.2004.02.010.

67. Garavan H, Ross TJ, Stein EA. Right hemispheric dominance of inhibitory control: an event-related functional MRI study. Proc Natl Acad Sci USA. 1999;96:8301–8306. doi: 10.1073/pnas.96.14.8301.

68. Rubia K, Russell T, Overmeyer S, Brammer MJ, Bullmore ET, Sharma T, Simmons A, Williams SCR, Giampietro V, Andrew CM, Taylor E. Mapping motor inhibition: conjunctive brain activations across different versions of go/no-go and stop tasks. Neuroimage. 2001;13:250–261. doi: 10.1006/nimg.2000.0685.

69. Zheng D, Oka T, Bokura H, Yamaguchi S. The key locus of common response inhibition network for no-go and stop signals. J Cogn Neurosci. 2008;20:1434–1442. doi: 10.1162/jocn.2008.20100.

70. Mink JW. The basal ganglia: focused selection and inhibition of competing motor programs. Prog Neurobiol. 1996;50:381–425. doi: 10.1016/s0301-0082(96)00042-1.

71. Frank MJ. Hold your horses: a dynamic computational role for the subthalamic nucleus in decision making. Neural Networks. 2006;19:1120–1136. doi: 10.1016/j.neunet.2006.03.006.

72. Hanes DP, Patterson WF II, Schall JD. Role of frontal eye fields in countermanding saccades: visual, movement, and fixation activity. J Neurophysiol. 1998;79:817–834. doi: 10.1152/jn.1998.79.2.817.

73. Stuphorn V, Brown JW, Schall JD. Role of supplementary eye field in saccade initiation: executive, not direct, control. J Neurophysiol. 2010;103:801–816. doi: 10.1152/jn.00221.2009.

74. Stuphorn V, Taylor TL, Schall JD. Performance monitoring by the supplementary eye field. Nature. 2000;408:857–860. doi: 10.1038/35048576.

75. Scangos KW, Stuphorn V. Medial frontal cortex motivates but does not control movement initiation in the countermanding task. J Neurosci. 2010;30:1968–1982. doi: 10.1523/JNEUROSCI.4509-09.2010.

76. Aron AR, Poldrack RA. Cortical and subcortical contributions to stop signal response inhibition: role of the subthalamic nucleus. J Neurosci. 2006;26:2424–2433. doi: 10.1523/JNEUROSCI.4682-05.2006.

77. Aron AR, Behrens TE, Smith S, Frank MJ, Poldrack RA. Triangulating cognitive control network using diffusion-weighted magnetic resonance imaging (MRI) and functional MRI. J Neurosci. 2007;27:3743–3752. doi: 10.1523/JNEUROSCI.0519-07.2007.

78. Hikosaka O, Isoda M. Switching from automatic to controlled behavior: cortico-basal ganglia mechanisms. Trends Cogn Sci. 2010;14:154–161. doi: 10.1016/j.tics.2010.01.006.

79. Shima K, Mushiake H, Saito N, Tanji J. Role for cells in the presupplementary motor area in updating motor plans. Proc Natl Acad Sci USA. 1996;93:8694–8698. doi: 10.1073/pnas.93.16.8694.

80. Isoda M, Hikosaka O. Switching from automatic to controlled action by monkey medial frontal cortex. Nat Neurosci. 2007;10:240–248. doi: 10.1038/nn1830.

81. Isoda M, Hikosaka O. Role of subthalamic nucleus neurons in switching from automatic to controlled eye movement. J Neurosci. 2008;28:7209–7218. doi: 10.1523/JNEUROSCI.0487-08.2008.

82. Baunez C, Nieoullon A, Amalric M. In a rat model of parkinsonism, lesions of the subthalamic nucleus reverse increases of reaction time but induce a dramatic premature responding deficit. J Neurosci. 1995;15:6531–6541. doi: 10.1523/JNEUROSCI.15-10-06531.1995.

83. Frank MJ, Samanta J, Moustafa AA, Sherman SJ. Hold your horses: impulsivity, deep brain stimulation, and medication in parkinsonism. Science. 2007;318:1309–1312. doi: 10.1126/science.1146157.

84. Nambu A, Tokuno H, Hamada I, Kita H, Imanishi M, Akazawa T, Ikeuchi Y, Hasegawa N. Excitatory cortical inputs to pallidal neurons via the subthalamic nucleus in the monkey. J Neurophysiol. 2000;84:289–300. doi: 10.1152/jn.2000.84.1.289.

85. Nambu A, Tokuno H, Takada M. Functional significance of the cortico-subthalamo-pallidal ‘hyperdirect’ pathway. Neurosci Res. 2002;43:111–117. doi: 10.1016/s0168-0102(02)00027-5.

86. Cameron IGM, Coe BC, Watanabe M, Stroman PW, Munoz DP. Role of the basal ganglia in switching a planned response. Eur J Neurosci. 2009;29:2413–2425. doi: 10.1111/j.1460-9568.2009.06776.x.

87. Wong K-F, Wang X-J. A recurrent network mechanism of time integration in perceptual decisions. J Neurosci. 2006;26:1314–1328. doi: 10.1523/JNEUROSCI.3733-05.2006.

88. Wang X-J. Decision making in recurrent neuronal circuits. Neuron. 2008;60:215–234. doi: 10.1016/j.neuron.2008.09.034.

89. Hanes DP, Schall JD. Neural control of voluntary movement initiation. Science. 1996;274:427–430. doi: 10.1126/science.274.5286.427.

90. Roitman JD, Shadlen MN. Response of neurons in the lateral intraparietal area during a combined visual discrimination reaction time task. J Neurosci. 2002;22:9475–9489. doi: 10.1523/JNEUROSCI.22-21-09475.2002.

91. Forstmann BU, Dutilh G, Brown S, Neumann J, von Cramon DY, Ridderinkhof KR, Wagenmakers E-J. Striatum and pre-SMA facilitate decision making under time pressure. Proc Natl Acad Sci USA. 2008;105:17538–17542. doi: 10.1073/pnas.0805903105.

92. Ivanoff J, Branning P, Marois R. fMRI evidence for a dual process account of the speed-accuracy tradeoff in decision-making. PLoS One. 2008;3:e2635. doi: 10.1371/journal.pone.0002635.

93. van Veen V, Krug MK, Carter CS. The neural and computational basis of controlled speed-accuracy tradeoff during task performance. J Cogn Neurosci. 2008;20:1952–1965. doi: 10.1162/jocn.2008.20146.

94. Winstanley CA, Eagle DM, Robbins TW. Behavioral models of impulsivity in relation to ADHD: translation between clinical and preclinical studies. Clin Psychol Rev. 2006;26:379–395. doi: 10.1016/j.cpr.2006.01.001.

95. Jentsch JD, Taylor JR. Impulsivity resulting from frontostriatal dysfunction in drug abuse: implications for the control of behavior by reward-related stimuli. Psychopharmacology. 1999;146:373–390. doi: 10.1007/pl00005483.