Abstract

People are remarkably smart: they use language, possess complex motor skills, make non-trivial inferences, develop and use scientific theories, make laws, and adapt to complex dynamic environments. Much of this knowledge requires concepts, and this paper focuses on how people acquire concepts. It is argued that conceptual development progresses from simple perceptual grouping to highly abstract scientific concepts. This proposal of conceptual development has four parts. First, it is argued that categories in the world differ in their structure. Second, there might be different learning systems (sub-served by different brain mechanisms) that evolved to learn categories of differing structures. Third, these systems exhibit different maturational courses, which affect how categories of different structures are learned in the course of development. And finally, an interaction of these components may result in the developmental transition from perceptual groupings to more abstract concepts. This paper reviews a large body of empirical evidence supporting this proposal.

Keywords: Cognitive development, category learning, concepts, conceptual development, cognitive neuroscience

1. Knowledge Acquisition: Categories and Concepts

People are remarkably smart: they use language, possess complex motor skills, make non-trivial inferences, develop and use scientific theories, make laws, and adapt to complex dynamic environments. At the same time, they do not exhibit evidence of this knowledge at birth. Therefore, one of the most interesting and exciting challenges in the study of human cognition is to gain an understanding of how people acquire this knowledge in the course of development and learning.

A critical component of knowledge acquisition is the ability to use acquired knowledge across a variety of situations, which requires some form of abstraction or generalization. Examples of abstraction abound. People can recognize the same object under different viewing conditions. They treat different dogs as members of the same class and expect them to behave in fundamentally similar ways. They learn words uttered by different speakers. Upon learning a hidden property of an item, they extend this property to other similar items. And they apply ways of solving familiar problems to novel problems. In short, people can generalize or form equivalence classes by focusing only on some aspects of information while ignoring others.

This ability to form equivalence classes or categories is present in many non-human species (see Zentall et al., 2008, for a review); however, only humans have the ability to acquire concepts – lexicalized groupings that allow ever increasing levels of abstraction (e.g., Cat → Animal → Living thing → Object). These lexicalized groupings may include both observable and unobservable properties. For example, although pre-linguistic infants can acquire a category “cat” by strictly perceptual means (Quinn, Eimas, & Rosenkrantz, 1993), the concept “cat” may include many properties that have to be inferred rather than observed directly (e.g., “mating only with cats, but not with dogs”, “being able to move in a self-propelled manner”, “having insides of a cat”, etc.). Often such properties are akin to latent variables – they are inferred from patterns of correlations among observable properties (cf. Rakison & Poulin-Dubois, 2001). These properties can also be lexicalized, and when lexicalized, they allow non-trivial generalizations (e.g., “plants and animals are alive” or “plants and animals reproduce themselves”). While the existence of pre-linguistic concepts is a matter of considerable debate, it seems rather non-controversial to call lexicalized properties that have to be inferred (rather than observed) conceptual properties, and to call lexicalized categories that include such properties concepts.

Concepts are central to human intelligence as they allow uniquely human forms of expression, such as many forms of reasoning. For example, counterfactuals (e.g., “if the defendant were at home at the time of the crime, she could not have been at the crime scene at the same time”) would be impossible without concepts. According to the present proposal, most concepts develop from perceptual categories and most conceptual properties are inferred from perceptual properties.¹ Therefore, although categories comprise a broader class than concepts (i.e., there are many categories that are not lexicalized and are not based on conceptual properties), there is no fundamental divide between category learning and concept acquisition.

Most of the examples presented in this paper deal with “thing” concepts (these are lexicalized by “nominals”), whereas many other concepts, such as actions, properties, quantities, and conceptual combinations, are left out. This is because nominals are often the most prevalent words in early vocabulary (Nelson, 1973; Gentner, 1982), and the entities they denote are likely to populate early experience. Therefore, these concepts appear to be a good starting point for thinking about conceptual development.

The remainder of the paper consists of four parts. First, I consider what may develop in the course of conceptual development. Second, I consider some of the critical components of category learning: the structure of input, the multiple competing learning systems, and the asynchronous developmental time course of these systems. Third, I consider evidence for interactions among these components in category learning and category representation. And, finally, I consider how conceptual development may proceed from perceptual groupings to abstract concepts.

2. The Origins of Conceptual Knowledge

In an attempt to explain the developmental origins of conceptual knowledge, a number of theoretical accounts have been proposed. Some argue that input is too underconstrained to enable acquisition of complex knowledge, so some knowledge has to come a priori from the organism, thus constraining future knowledge acquisition. Others suggest that there is much regularity (and thus many constraints) in the environment, with additional constraints stemming from biological specifications of the organism (e.g., limited processing capacity, especially early in development). In the remainder of this section I review these theoretical approaches.

2.1. Skeletal Principles, Core Knowledge, Constraints, and Biases

According to this proposal, structured knowledge cannot be recovered from perceptual input because the input is too indeterminate to enable such recovery (cf. R. Gelman, 1990). This approach is based on an influential idea that was originally proposed for the case of language acquisition but was later generalized to some other aspects of cognitive development, including conceptual development. The original idea is that linguistic input does not have enough information to enable the learner to recover a particular grammar, while ruling out alternatives (Chomsky, 1980). Therefore, some knowledge has to be innate to enable fast, efficient, and invariable learning under the conditions of impoverished input. This argument (known as the Poverty of the Stimulus argument) has been subsequently generalized to perceptual, lexical, and conceptual development. If input is too impoverished to constrain possible inductions and to license the concepts that we have, the constraints have to come from somewhere. It has been proposed that these constraints are internal – they come from the organism, and they are a priori and top-down (i.e., they do not come from data). A variety of such constraints have been proposed, including, but not limited to, innate knowledge within “core” domains (Carey, 2009; Carey & Spelke, 1994, 1996; Spelke, 2000; Spelke & Kinzler, 2007), skeletal principles (e.g., R. Gelman, 1990), ontological knowledge (Keil, 1979; Mandler, Bauer, & McDonough, 1991; Pinker, 1984; Soja, Carey, & Spelke, 1991), conceptual assumptions (S. Gelman, 1988; S. Gelman & Coley, 1991; E. Markman, 1989), and word learning biases (E. Markman, 1989; see also Golinkoff, Mervis, & Hirsh-Pasek, 1994).

However, there are several lines of evidence challenging (a) the explanatory machinery of this account with respect to language (Chater & Christiansen, this issue) and (b) the existence of particular core abilities (e.g., Twyman & Newcome, this issue). Furthermore, while the Poverty of the Stimulus argument is formally valid, its premises and therefore its conclusions are questionable. Most importantly, very little is known about the information value of input with respect to the knowledge in question. Therefore, it is not clear whether input is in fact as impoverished as it has been claimed. In addition, there are several lines of evidence suggesting that input might be richer than expected under the Poverty of the Stimulus assumption.

First, the fact that infants, great primates, monkeys, rats, and birds can all learn a variety of basic level perceptual categories (Cook & Smith, 2006; Quinn et al., 1993; Smith, Redford, & Haas, 2008; Zentall et al., 2008) strongly indicates that perceptual input (at least for basic level categories) is not impoverished. Otherwise, one would need to assume that all these species have the same constraints as humans, which seems implausible given the vastly different environments in which these species live.

In addition, there is evidence that perceptual input (Rakison & Poulin-Dubois, 2001) or a combination of perceptual and linguistic input (Jones & Smith, 2002; Samuelson & Smith, 1999; Yoshida & Smith, 2003) can jointly guide acquisition of broad ontological classes. Furthermore, cross-linguistic evidence suggests that ontological boundaries exhibit greater cross-linguistic variability than could be expected if they were fixed (Imai & Gentner, 1997; Yoshida & Smith, 2003). Therefore there might be enough information in the input for the learner to form both basic-level categories and broader ontological classes. There is also modeling work (e.g., Gureckis & Love, 2004; Rogers & McClelland, 2004) offering a mechanistic account of how coherent covariation in the input could guide acquisition of broad ontological classes as well as more specific categories.

In short, there are reasons to doubt that input is in fact impoverished, and if it is not impoverished, then a priori assumptions are not necessary. Therefore, to understand conceptual development, it seems reasonable to shift the focus away from a priori constraints and biases and towards the input and the way it is processed.

2.2. Similarity, Correlations, and Attentional Weights

According to an alternative approach, conceptual knowledge as well as some of the biases and assumptions are a product rather than a precondition of learning (see Rogers & McClelland, 2004, for a connectionist implementation of these ideas). Early in development, cognitive processes are grounded in powerful learning mechanisms, such as statistical and attentional learning (Smith, 1989; Smith, Jones, & Landau, 1996; French, Mareschal, Mermillod, & Quinn, 2004; Mareschal, Quinn, & French, 2002; Rogers & McClelland, 2004; Saffran, Johnson, Aslin, & Newport, 1999; Sloutsky, 2003; Sloutsky & Fisher, 2004a).

According to this view, input is highly regular and the goal of learning is to extract these regularities. For example, category learning could be achieved by detecting multiple commonalities, or similarities, among presented entities. In addition, not all commonalities are the same – features may differ in salience and usefulness for generalization, with both salience and usefulness of a feature reflected in its attentional weight. However, unlike the a priori assumptions, attentional weights are not fixed and can change as a result of learning: attentional weights of more useful features increase, while those of less useful features decrease (Kruschke, 1992; Nosofsky, 1986; Opfer & Siegler, 2004; Sloutsky & Spino, 2004; see also Hammer & Diesendruck, 2005).
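To make the notion of changing attentional weights concrete, the Python sketch below computes similarity as an exponential decay of attention-weighted distance, in the spirit of Nosofsky's (1986) generalized context model, and then applies one reweighting step. The function names, the sensitivity parameter `c`, the `diagnosticity` scores, and the update rule in `shift_attention` are illustrative assumptions. They are not the specific learning rules of the models cited above.

```python
import math

def weighted_similarity(x, y, weights, c=1.0):
    """Similarity as exponential decay of attention-weighted city-block distance
    (GCM-style; c is a sensitivity parameter)."""
    distance = sum(w * abs(a - b) for w, a, b in zip(weights, x, y))
    return math.exp(-c * distance)

def shift_attention(weights, diagnosticity, rate=0.5):
    """Hypothetical update: move weight toward diagnostic dimensions, clip at zero,
    and renormalize so the weights keep summing to 1."""
    raw = [max(w + rate * d, 0.0) for w, d in zip(weights, diagnosticity)]
    total = sum(raw)
    return [r / total for r in raw]

# Dimension 0 predicts category membership; dimension 1 is irrelevant (hypothetical items).
reference = (1, 0)
differs_on_irrelevant = (1, 1)
differs_on_relevant = (0, 0)

weights = [0.5, 0.5]
before = (weighted_similarity(reference, differs_on_irrelevant, weights),
          weighted_similarity(reference, differs_on_relevant, weights))
weights = shift_attention(weights, diagnosticity=[1.0, 0.0])   # weights become [2/3, 1/3]
after = (weighted_similarity(reference, differs_on_irrelevant, weights),
         weighted_similarity(reference, differs_on_relevant, weights))
```

Before the update, the two comparison items are equally similar to the reference item. After attention shifts toward the diagnostic dimension, the item that differs only on the irrelevant dimension becomes the more similar of the two.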

There are several lines of research presenting evidence that both basic level categories (e.g., dogs) and broader ontological classes (e.g., animates vs. inanimates) have multiple perceptual within-category commonalities and between-category differences (French et al., 2004; Rakison & Poulin-Dubois, 2001; Samuelson & Smith, 1999). Some researchers argue that additional statistical constraints come from language in the form of syntactic cues, such as count noun and mass noun syntax (Samuelson & Smith, 1999). Furthermore, cross-linguistic differences in the syntactic cues (e.g., between English and Japanese) can push ontological boundaries in speakers of respective languages (Imai & Gentner, 1997; Yoshida & Smith, 2003). Finally, different categories could be organized differently (e.g., living things could be organized by multiple similarities, whereas artifacts could be organized by shape), and there might be multiple correlations between category structure, perceptual cues, and linguistic cues. All this information could be used to distinguish between different kinds. As children acquire language, they may become sensitive to these correlations, which may affect their attention to shape in the context of artifacts versus living things (Jones & Smith, 2002).

This general approach may offer an account of conceptual development that does not posit a priori knowledge structures. It assumes that input is sufficiently rich to enable the young learner to form perceptual groupings. Language provides learners with an additional set of cues that allow them to form more abstract distinctions. Finally, lexicalization of such groupings as well as of some unobservable conceptual features could result in acquisition of concepts at progressively increasing levels of abstraction. In the next section, I will outline how conceptual development could proceed from perceptual groupings to abstract concepts.

2.3. From Percepts to Concepts: What Develops?

If people start out with perceptual groupings, how do they end up with sophisticated conceptual knowledge? According to the proposal presented here, conceptual development hinges on several critical steps. These include the ability to learn similarity-based uni-modal categories, the ability to integrate cross-modal information, the lexicalization of learned perceptual groupings, the lexicalization of conceptual features, and the development of executive function. The latter development is of critical importance for acquiring abstract concepts that are not grounded in similarity. Examples of such concepts are unobservables (e.g., love, doubt, thought), relational concepts (e.g., enemy or barrier), as well as a variety of rule-based categories (e.g., island, uncle, or acceleration). Because these concepts require focusing on unobservable abstract features, their acquisition may depend on the maturity of executive function.

This developmental time course is determined in part by an interaction of several critical components. These components include: (a) the structure of the to-be-learned category, (b) the competing learning systems that might sub-serve learning categories of different structures, and (c) the developmental course of these learning systems. First, categories differ in their structure. For example, some categories (e.g., most natural kinds, such as cat or dog) have multiple intercorrelated features relevant for category membership. These features are jointly predictive, thus yielding a highly redundant (or statistically dense) category. These categories often have graded membership (i.e., a typical dog is a better member of the category than an atypical dog) and fuzzy boundaries (i.e., it is not clear whether a cross between a dog and a cat is a dog). At the same time, other categories are defined by a single dimension or a relation between or among dimensions. Members of these categories have very few common features, with the rest of the features varying independently and thus contributing to irrelevant or “surface” variance. Good examples of such sparse categories are mathematical and scientific concepts. Consider two situations: (1) an increase in a population of fish in a pond and (2) interest accumulation in a bank account. Only a single commonality – exponential growth – makes both events instances of the same mathematical function. All other features are irrelevant for membership in this category and can vary greatly.
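The fish-and-interest example can be made concrete with a short Python sketch. It assumes that the only category-relevant feature is a constant ratio between successive values, which is the signature of exponential growth. Both series below meet this criterion even though they share no surface features, whereas a linearly growing series does not. All numbers are hypothetical.

```python
import math

def successive_ratios(series):
    """Ratio of each value to the value before it."""
    return [later / earlier for earlier, later in zip(series, series[1:])]

def is_exponential(series, rel_tol=1e-9):
    """A series of positive values grows exponentially when the ratio between
    successive values is constant."""
    ratios = successive_ratios(series)
    return all(math.isclose(r, ratios[0], rel_tol=rel_tol) for r in ratios)

# Hypothetical numbers: the two situations share no surface features...
fish = [200 * 1.5 ** t for t in range(6)]        # fish population, +50% per season
balance = [1000 * 1.03 ** t for t in range(6)]   # account balance, 3% compound interest per year
# ...but both pass the same relational test, whereas simple (non-compounding) interest does not.
simple_interest = [1000 + 30 * t for t in range(6)]

print(is_exponential(fish), is_exponential(balance), is_exponential(simple_interest))
```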

Second, there might be multiple systems of category learning (e.g., Ashby et al., 1998) that evolved to learn categories of different structures. In particular, a compression-based system may sub-serve category learning by reducing perceptually-rich input to a more basic format. As a result of this compression, features that are common to category members (but not to non-members) become part of the representation, whereas idiosyncratic features get washed out. In contrast, the selection-based system may sub-serve category learning by shifting attention to category-relevant dimension(s) and away from irrelevant dimension(s). Such selectivity may require the involvement of brain structures associated with executive function. The compression-based system could have an advantage for learning dense categories, which could be acquired by mostly perceptual means. At the same time, the selection-based system could have an advantage for learning sparse categories, which require focusing on a few category-relevant features (Kloos & Sloutsky, 2008; see also Blair, Watson, & Meier, 2009, for a discussion).
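A toy Python sketch can illustrate the proposed trade-off. Prototype averaging stands in for the compression-based system, and comparison on a single attended dimension stands in for the selection-based system. Both are simplifying assumptions rather than models of the underlying circuits. The training items are hypothetical, and the attended dimension is supplied by hand, as if supervision had directed attention to it.

```python
def prototype(items):
    """Compression stand-in: summarize a category by the per-feature mean of its members."""
    return [sum(column) / len(items) for column in zip(*items)]

def classify_by_prototype(x, protos):
    """Nearest prototype using city-block distance over ALL features."""
    return min(protos, key=lambda label: sum(abs(a - b) for a, b in zip(x, protos[label])))

def classify_by_selection(x, protos, dim):
    """Selection stand-in: compare x with each prototype on the attended dimension only."""
    return min(protos, key=lambda label: abs(x[dim] - protos[label][dim]))

# Dense (hypothetical): members of A share most of four features; members of B lack most of them.
dense = {"A": prototype([(1, 1, 1, 1), (0, 1, 1, 1), (1, 0, 1, 1), (1, 1, 0, 1), (1, 1, 1, 0)]),
         "B": prototype([(0, 0, 0, 0), (1, 0, 0, 0), (0, 1, 0, 0), (0, 0, 1, 0), (0, 0, 0, 1)])}
# Sparse (hypothetical): only feature 0 defines membership; the other features are surface
# features that happen to be shared by the training examples of each category.
sparse = {"A": prototype([(1, 1, 1, 1), (1, 1, 1, 0), (1, 1, 0, 1)]),
          "B": prototype([(0, 0, 0, 0), (0, 0, 0, 1), (0, 0, 1, 0)])}

atypical_dense_member = (0, 1, 1, 1)   # family resemblance says A; feature 0 alone says B
novel_sparse_member = (1, 0, 0, 0)     # the defining feature says A; surface features look like B
```

In the dense case, the prototype accepts the atypical member that the single-dimension rule rejects. In the sparse case, the prototype is misled by surface features that the selective rule ignores.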

The involvement of each system may also affect what information is encoded in the course of category learning, and, subsequently, how a learned category is represented. In particular, the involvement of the compression-based system may result in a reduced yet fundamentally perceptual representation of a category, whereas the involvement of the selection-based system may result in a more abstract (e.g., lexicalized) representation. Given that many real-life categories (e.g., dogs, cats, or cups) are acquired by perceptual means and later undergo lexicalization, there are reasons to believe that these categories combine a perceptual representation with a more abstract lexicalized representation. These abstract lexicalized representations are critically important for the ability to reason and form arguments that could be all but impossible to form by strictly perceptual means. For example, it is not clear how a purely perceptual representation of constituent entities would support a counterfactual of the form “If my grandmother were my grandfather…”

And third, the category learning systems and associated brain structures may come on-line at different points in development, with the system sub-serving learning of dense categories coming on-line earlier than the system sub-serving learning of sparse categories. In particular, there is evidence that many components of executive function critical for learning sparse categories exhibit late developmental onset (e.g., Davidson, Amso, Anderson, & Diamond, 2006). If this is the case, then the ability to learn and represent dense categories should develop before the ability to learn and represent sparse categories. Under this view, “conceptual” assumptions do not have to underlie category learning, as most categories that children acquire spontaneously are dense and can be acquired implicitly, without a teaching signal or supervision. At the same time, some of these “conceptual” assumptions could be a product of later development.

The current proposal of conceptual development has three parts (see Sections 3-5). In the next section (Section 3), I consider in detail the components of category learning: category structure, the multiple competing learning systems, and the potentially different maturational courses of these systems. I suggest that categories in the world differ in their structure and consider ways of quantifying this structure. I then argue that there might be different learning systems (sub-served by different brain mechanisms) that evolved to learn categories of differing structures. Finally, I argue that these systems exhibit different maturational courses, which affect how categories of different structures are learned in the course of development. Then, in Section 4, I consider an interaction of these components. This interaction is important because it may result in the developmental transition from perceptual groupings to abstract concepts. These arguments point to a more nuanced developmental picture (presented in Section 5), in which learning of perceptual categories, cross-modal integration, lexicalization, learning of conceptual properties, the ability to focus and shift attention, and the development of lexicalized concepts are logical steps in conceptual development.

3. Components of Category Learning: Input, Learning System, and the Learner

3.1. Characteristics of Input: Category Structure

It appears almost self-evident that categories differ in their structure. Some categories are coherent: their members have multiple overlapping features and are often similar (e.g., cats or dogs are good examples of such categories). Other categories seem to be less coherent: their members have few overlapping features (e.g., square things). These differences have been noted by a number of researchers who pointed to different category structures between different levels of ontology (e.g., Rosch, Mervis, Gray, Johnson, & Boyes-Braem, 1976) and between animal and artifact categories (Jones & Smith, 2002; Jones, Smith, & Landau, 1991; E. Markman, 1989). Category structure can be captured formally and one such treatment of category structure has been offered recently (Kloos & Sloutsky, 2008). The focal idea of this proposal is that category structure can be measured by statistical density of a category. Statistical density is a function of within-category compactness and between-category distinctiveness, and may have profound effects on category learning. In what follows, I flesh out this idea.

3.1.1. Statistical Density as a Measure of Category Structure

Any set of items can have a number of possible dimensions (e.g., color, shape, size), some of which might vary and some of which might not. Categories that are statistically dense have multiple intercorrelated (or covarying) features relevant for category membership, with only a few features being irrelevant. Good examples of statistically dense categories are basic-level animal categories such as cat or dog. Category members have particular distributions of values on a number of dimensions (e.g., shape, size, color, texture, number of parts, type of locomotion, type of sounds they produce, etc.). These distributions are jointly predictive, thus yielding a dense (albeit probabilistic) category. Categories that are statistically sparse have very few relevant features, with the rest of the features varying independently. Good examples of sparse categories are dimensional groupings (e.g., “round things”), relational concepts (e.g., “more”), scientific concepts (e.g., “accelerated motion”), or role-governed concepts (e.g., cardinal number, see A. Markman & Stillwell, 2001, for a discussion of role-governed categories).

Conceptually, statistical density is a ratio of variance relevant for category membership to the total variance across members and non-members of the category. Therefore, density is a measure of statistical redundancy (Shannon & Weaver, 1948), which is an inverse function of relative entropy.

Density can be expressed as

$$D = 1 - \frac{H_{\text{within}}}{H_{\text{between}}} \qquad (1)$$

where $H_{\text{within}}$ is the entropy observed within the target category, and $H_{\text{between}}$ is the entropy observed between target and contrasting categories.

A detailed treatment of statistical density and ways of calculating it is presented elsewhere (Kloos & Sloutsky, 2008); thus, only a brief overview of statistical density is presented below. Three aspects of stimuli are important for calculating statistical density: variation in stimulus dimensions, variation in relations among dimensions, and attentional weights of stimulus dimensions.

First, a stimulus dimension may vary either within a category (e.g., members of a target category are either black or white) or between categories (e.g., all members of a target category are black, whereas all members of a contrasting category are white). Within-category variance decreases density, whereas between-category variance increases density.

Second, dimensions of variation may be related (e.g., all items are black circles), or they may vary independently of each other (e.g., items can be black circles, black squares, white circles, or white squares). Co-varying dimensions result in smaller entropy than dimensions that vary independently. It is not unreasonable to assume that only dyadic relations (i.e., relations between two dimensions) are detected spontaneously, whereas relations of higher arity (e.g., a relation among color, shape, and size) are not (cf. Whitman & Garner, 1962). Therefore, only dyadic relations are included in the calculation of entropy.
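The claim that co-varying dimensions yield smaller entropy than independently varying ones can be checked directly. In the Python sketch below, which uses hypothetical items, color and shape each carry 1 bit of entropy in both sets. The joint (dyadic) entropy, however, is 1 bit when color predicts shape and 2 bits when the two dimensions vary independently.

```python
import math
from collections import Counter

def entropy(outcomes):
    """Shannon entropy (bits) of the empirical distribution of `outcomes`."""
    n = len(outcomes)
    return -sum((k / n) * math.log2(k / n) for k in Counter(outcomes).values())

# Four hypothetical items per set, described by (color, shape).
covarying = [("black", "circle"), ("black", "circle"), ("white", "square"), ("white", "square")]
independent = [("black", "circle"), ("black", "square"), ("white", "circle"), ("white", "square")]

for name, items in [("covarying", covarying), ("independent", independent)]:
    colors = [color for color, _ in items]
    shapes = [shape for _, shape in items]
    print(name, entropy(colors), entropy(shapes), entropy(items))
# covarying 1.0 1.0 1.0
# independent 1.0 1.0 2.0
```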

The total entropy is the sum of the entropy due to varying dimensions ($H^{\text{dim}}$) and the entropy due to varying relations among the dimensions ($H^{\text{rel}}$). More specifically,

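$$H_{\text{within}} = H^{\text{dim}}_{\text{within}} + H^{\text{rel}}_{\text{within}} \qquad (2a)$$

$$H_{\text{between}} = H^{\text{dim}}_{\text{between}} + H^{\text{rel}}_{\text{between}} \qquad (2b)$$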

The concept of entropy was formalized by information theory (Shannon & Weaver, 1948), and we use these formalisms here. First, consider the entropy due to dimensions. The within-category and between-category entropies are given in Equations 3a and 3b, respectively.

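$$H^{\text{dim}}_{\text{within}} = -\sum_{i=1}^{M} w_i \sum_{j} p_j^{\text{within}} \log_2 p_j^{\text{within}} \qquad (3a)$$

$$H^{\text{dim}}_{\text{between}} = -\sum_{i=1}^{M} w_i \sum_{j} p_j^{\text{between}} \log_2 p_j^{\text{between}} \qquad (3b)$$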

where $M$ is the total number of varying dimensions, $w_i$ is the attentional weight of dimension $i$ (the attentional weights sum to a constant), and $p_j$ is the probability of value $j$ on dimension $i$ (e.g., the probability of a color being white). The probabilities are calculated either within a category (Equation 3a) or between categories (Equation 3b).
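Equations 3a and 3b can be sketched in Python as follows. The sketch makes three assumptions: features are coded as discrete values, probabilities are estimated from observed frequencies, and between-category probabilities are computed over the pooled members of the target and contrasting categories. The function names are hypothetical.

```python
import math
from collections import Counter

def value_entropy(values):
    """-sum_j p_j log2 p_j over the observed values of one dimension."""
    n = len(values)
    return -sum((k / n) * math.log2(k / n) for k in Counter(values).values())

def dimension_entropy(items, weights):
    """Equations 3a/3b: attention-weighted sum of per-dimension entropies.
    items: equal-length tuples of feature values; weights[i]: attentional weight of dimension i."""
    return sum(w * value_entropy([item[i] for item in items])
               for i, w in enumerate(weights))

# Hypothetical items coded (color, shape): white = 0, black = 1, circle = 0, square = 1.
target = [(1, 0), (1, 1)]      # black circle, black square
contrast = [(0, 0), (0, 1)]    # white circle, white square
weights = [0.5, 0.5]           # equal attention; the weights sum to a constant (here 1)

h_dim_within = dimension_entropy(target, weights)              # probabilities within the target
h_dim_between = dimension_entropy(target + contrast, weights)  # assumption: pooled items
```

Because color does not vary within this "black things" category, the weighted within-category entropy is 0.5 bits, compared with 1 bit between categories.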

The attentional-weight parameter is of critical importance – without this parameter, it would be impossible to account for learning of sparse categories. In particular, when a category is dense, even relatively small attentional weights of individual dimensions add up across many dimensions. This makes it possible to learn the category without supervision. Conversely, when a category is sparse, only a few dimensions are relevant. If the attentional weight of each of these dimensions is too small, supervision may be needed to direct attention to them.
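The role of attentional weights can be illustrated with a dimension-only version of Equation 1. Relations are omitted here for brevity, which is a simplification. In this Python sketch with hypothetical binary stimuli, the dense category remains dense under uniform weights. The sparse category becomes substantially denser only when attention is concentrated on its single relevant feature, as supervision might do.

```python
import math
from collections import Counter
from itertools import product

def value_entropy(values):
    """-sum_j p_j log2 p_j over the observed values of one dimension."""
    n = len(values)
    return -sum((k / n) * math.log2(k / n) for k in Counter(values).values())

def dim_density(target, contrast, weights):
    """Dimension-only density: 1 - H_within / H_between, with each H computed as in
    Equations 3a/3b and between-category entropy taken over the pooled items (an assumption)."""
    def weighted_entropy(items):
        return sum(w * value_entropy([x[i] for x in items]) for i, w in enumerate(weights))
    return 1 - weighted_entropy(target) / weighted_entropy(target + contrast)

# Hypothetical dense category: members share four of five binary features.
dense_target = [(1, 1, 1, 1, 0), (1, 1, 1, 1, 1)]
dense_contrast = [(0, 0, 0, 0, 0), (0, 0, 0, 0, 1)]
# Hypothetical sparse category: only feature 0 matters; features 1-4 take every combination.
sparse_target = [(1,) + rest for rest in product((0, 1), repeat=4)]
sparse_contrast = [(0,) + rest for rest in product((0, 1), repeat=4)]

uniform = [0.2] * 5
focused = [0.6, 0.1, 0.1, 0.1, 0.1]   # e.g., after feedback directs attention to feature 0

for w in (uniform, focused):
    print(round(dim_density(dense_target, dense_contrast, w), 2),
          round(dim_density(sparse_target, sparse_contrast, w), 2))
# 0.8 0.2
# 0.9 0.6
```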

Next, consider entropy that is due to a relation between dimensions. To express this entropy, we need to consider the co-occurrences of dimensional values. If dimensions are binary, with each value coded as 0 or 1 (e.g., white = 0, black = 1, circle = 0, and square = 1), then the following four co-occurrence outcomes are possible: 00 (i.e., white circle), 01 (i.e., white square), 10 (i.e., black circle), and 11 (i.e., black square). The within-category and between-category entropy that is due to relations are presented in equations 4a and 4b, respectively.

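$$H^{\text{rel}}_{\text{within}} = -\sum_{k=1}^{O} w_k \sum_{m=0}^{1} \sum_{n=0}^{1} p_{mn}^{\text{within}} \log_2 p_{mn}^{\text{within}} \qquad (4a)$$

$$H^{\text{rel}}_{\text{between}} = -\sum_{k=1}^{O} w_k \sum_{m=0}^{1} \sum_{n=0}^{1} p_{mn}^{\text{between}} \log_2 p_{mn}^{\text{between}} \qquad (4b)$$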

where $O$ is the total number of possible dyadic relations among the varying dimensions, $w_k$ is the attentional weight of relation $k$ (again, the attentional weights sum to a constant), and $p_{mn}$ is the probability of a co-occurrence of values $m$ and $n$ on binary relation $k$ (which conjoins two dimensions of variation).
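Putting Equations 1-4 together, the full density computation might be sketched in Python as follows. The sketch assumes binary features, equal-length item tuples, and one weight per dimension and per dyadic relation. It also assumes that between-category entropy is computed over the pooled target and contrasting items. For the exact procedure, see Kloos and Sloutsky (2008).

```python
import math
from collections import Counter
from itertools import combinations

def entropy(outcomes):
    """Shannon entropy (bits) of the empirical distribution of `outcomes`."""
    n = len(outcomes)
    return -sum((k / n) * math.log2(k / n) for k in Counter(outcomes).values())

def total_entropy(items, dim_weights, rel_weights):
    """Equation 2: H = H_dim (Equation 3) + H_rel (Equation 4) for tuples of binary features."""
    n_dims = len(dim_weights)
    h_dim = sum(w * entropy([x[i] for x in items]) for i, w in enumerate(dim_weights))
    relations = list(combinations(range(n_dims), 2))          # the O dyadic relations
    if len(rel_weights) != len(relations):
        raise ValueError("need one weight per dyadic relation")
    h_rel = sum(w * entropy([(x[i], x[j]) for x in items])     # co-occurrences 00, 01, 10, 11
                for w, (i, j) in zip(rel_weights, relations))
    return h_dim + h_rel

def density(target, contrast, dim_weights, rel_weights):
    """Equation 1: D = 1 - H_within / H_between."""
    h_within = total_entropy(target, dim_weights, rel_weights)
    h_between = total_entropy(target + contrast, dim_weights, rel_weights)  # assumption: pooled
    if h_between == 0:
        raise ValueError("nothing varies across the categories; density is undefined")
    return 1 - h_within / h_between

# (color, shape) coded as in the text: white = 0, black = 1, circle = 0, square = 1.
w = 1 / 3   # equal weights on two dimensions and one relation, summing to 1
black_things = density([(1, 0), (1, 1)], [(0, 0), (0, 1)], [w, w], [w])
black_circles = density([(1, 0), (1, 0)], [(0, 1), (0, 1)], [w, w], [w])
```

With equal weights, the "black things" category (shared color, varying shape) has a density of 0.5. A category whose members share both color and shape reaches the maximum density of 1.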

3.1.2. Density, Salience, and Similarity

The concept of density is closely related to the ideas of salience and similarity, and it is necessary to clarify these relations. First, density is a function of weighted entropy, with attentional weight corresponding closely to the salience of a feature. Therefore, feature salience can affect density by affecting the attentional weight of the feature in question. Of course, as mentioned above, attentional weights are not fixed and can change as a result of learning. Second, perceptual similarity is a sufficient but not necessary condition for density – all categories bound by similarity are dense, but not all dense categories are bound by similarity. For example, some categories could have multiple overlapping relations rather than overlapping features (e.g., members of a category have short legs and a short neck or long legs and a long neck). It is conceivable that such non-linearly-separable categories could be relatively dense, yet not bound by similarity.
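The legs-and-neck example can be worked through with the same entropy measure. This Python sketch assumes a hypothetical coding (0 = short, 1 = long), equal weights for the two dimensions and their relation, and between-category entropy computed over pooled items. Each dimension varies as much within the category as across categories, so all of the category's density comes from the relation. Category members also share fewer features with each other than with members of the contrasting category.

```python
import math
from collections import Counter

def entropy(outcomes):
    """Shannon entropy (bits) of the empirical distribution of `outcomes`."""
    n = len(outcomes)
    return -sum((k / n) * math.log2(k / n) for k in Counter(outcomes).values())

def two_dim_entropy(items, w_dim, w_rel):
    """Equations 2-4 for two binary dimensions and their single dyadic relation."""
    legs = [x[0] for x in items]
    necks = [x[1] for x in items]
    return w_dim * (entropy(legs) + entropy(necks)) + w_rel * entropy(items)

def density(target, contrast, w_dim, w_rel):
    """Equation 1, with between-category entropy computed over the pooled items."""
    return 1 - two_dim_entropy(target, w_dim, w_rel) / two_dim_entropy(target + contrast, w_dim, w_rel)

def hamming(x, y):
    """Number of mismatching features (a crude inverse of feature overlap)."""
    return sum(a != b for a, b in zip(x, y))

# (legs, neck) with 0 = short and 1 = long
target = [(0, 0), (1, 1)]     # short legs + short neck, long legs + long neck
contrast = [(0, 1), (1, 0)]   # mismatched legs and neck

with_relation = density(target, contrast, 1 / 3, 1 / 3)   # about 0.25
without_relation = density(target, contrast, 0.5, 0.0)    # 0.0: dimensions alone carry no density
```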

3.1.3. Category Structure and Early Learning

Although it is difficult to calculate precisely the density of categories surrounding young infants, some estimates can be made. It seems that many of these categories, while exhibiting within-category variability in color (and sometimes in size), have similar within-category shape, material, and texture (ball, cup, bottle, shoe, book, or apple are good examples of such categories); these categories should be relatively dense. As I show below, dense categories can be learned implicitly, without supervision. Therefore, it is possible that pre-linguistic infants implicitly learn many of the categories surrounding them. Incidentally, the very first nouns that infants learn denote these dense categories (see Dale & Fenson, 1996; Nelson, 1973). Therefore, it is possible that some of early word learning consists of learning lexical entries for already known dense categories. This possibility, however, is yet to be tested empirically.

3.2. Characteristics of the Learning System: Multiple Competing Systems of Category Learning

The role of category structure in category learning has been a focus of the neuroscience of category learning. Recent advances in that field suggest that there might be multiple systems of category learning (e.g., Ashby et al., 1998; Cincotta & Seger, 2007; Nomura & Reber, 2008; Seger, 2008; Seger & Cincotta, 2002), and an analysis of these systems may elucidate how category structure interacts with category learning. I consider these systems in this section.

There is an emerging body of research on brain mechanisms underlying category learning (see Ashby & Maddox, 2005; Seger, 2008, for reviews). While the anatomical localization and the involvement of specific circuits remain a matter of considerable debate, there is substantial agreement that “wholistic” or “similarity-based” categories (which are typically dense) and “dimensional” or “rule-based” categories (which are typically sparse) could be learned by different systems in the brain.

There are several specific proposals identifying brain structures that comprise each system of category learning (Ashby et al., 1998; Cincotta & Seger, 2007; Nomura & Reber, 2008; Seger, 2008; Seger & Cincotta, 2002). Most of the proposals involve three major hierarchical structures: cortex, basal ganglia, and thalamus. There is also evidence for the involvement of the medial temporal lobe (MTL) in category learning (e.g., Nomura et al., 2007; see also Love & Gureckis, 2007). However, because the maturational time course of the MTL is not well understood (Alvarado & Bachevalier, 2000), I will not focus here on this area of the brain.

One influential proposal (e.g., Ashby et al., 1998) posited two cortical-striatal-pallidal-thalamic-cortical loops, which define two circuits acting in parallel. The circuit responsible for learning of similarity-based categories originates in extrastriate visual areas of the cortex (such as inferotemporal cortex) and includes the posterior body and tail of the caudate nucleus. In contrast, the circuit responsible for the learning of rule-based categories originates in the prefrontal and anterior cingulate cortices (ACC) and includes the head of the caudate (Lombardi et al., 1999; Rao et al., 1997; Rogers et al., 2000).

In a similar vein, Seger and Cincotta (2002) propose the visual loop, which originates in the inferior temporal areas and passes through the tail of the caudate nucleus in the striatum, and the cognitive loop, which passes through the prefrontal cortex and the head of the caudate nucleus. The visual loop has been shown to be involved in visual pattern discrimination in nonhuman animals (Buffalo et al., 1999; Fernandez-Ruiz et al., 2001; Teng et al., 2000), and Seger and Cincotta (2002) have proposed that this loop may sub-serve learning of similarity-based visual categories. The cognitive loop has been shown to be involved in learning of rule-based categories (e.g., Rao et al., 1997; Seger & Cincotta, 2002; see also Seger, 2008).

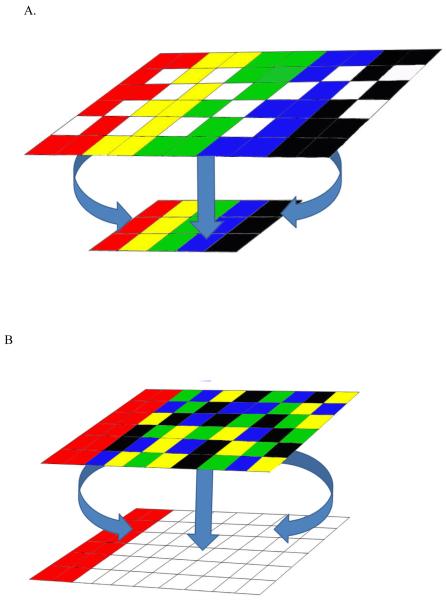

There is also evidence that category learning is achieved differently in the two systems. The critical feature of the visual system is the reduction of information or compression, with only some but not all stimulus features being encoded. Therefore, I will refer to this system as the compression-based system of category learning. A schematic representation of processing in this system is depicted in Figure 1A. The feature map in the top layer gets compressed in the bottom layer, with only some features of the top layer represented in the bottom layer.

Figure 1.

A. Schematic depiction of the compression-based system. The top layer represents stimulus encoding in the inferotemporal cortex. This rich encoding is compressed into a more basic form in the striatum, represented by the bottom layer. Although some of the features are left out, much of the perceptual information present in the top layer is retained in the bottom layer. B. Schematic depiction of the selection-based system. The top layer represents selective encoding in the prefrontal cortex. The selected dimension is then projected to the striatum, represented by the bottom layer. Only the selected information is retained in the bottom layer.

This compression is achieved by many-to-one projections from visual cortical neurons in the inferotemporal cortex onto neurons in the tail of the caudate (Bar-Gad, Morris, & Bergman, 2003; Wilson, 1995). In other words, many cortical neurons converge on an individual caudate neuron. As a result of this convergence, information is compressed into a more basic form: redundant and highly probable features are likely to be encoded (and thus learned), whereas idiosyncratic and rare features are likely to be filtered out.

Category learning in this system results in a reduced (or compressed), yet fundamentally perceptual, representation of stimuli. If every stimulus is compressed, then features and feature relations that are frequent among category members should survive the compression, whereas rare or unique features and relations should not. Because compression does not require selectivity, compression-based learning could be achieved implicitly, without supervision (such as feedback or more explicit forms of training), and it should be particularly successful in learning dense categories.
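The frequency-filtering idea can be illustrated with a minimal Python sketch. It is a toy under stated assumptions, not a model of inferotemporal-striatal projections: each exemplar is treated as a set of features, and "compression" simply keeps features that occur in at least a hypothetical `threshold` fraction of exemplars. The function name `compress`, the threshold, and the feature labels are all illustrative.

```python
from collections import Counter


def compress(exemplars, threshold=0.5):
    """Toy compression: keep features shared by at least `threshold` of the exemplars.

    No feedback or selective attention is involved; the filter depends only on
    how often each feature recurs across the exemplars it is given.
    """
    n = len(exemplars)
    # Count each feature at most once per exemplar.
    counts = Counter(feature for exemplar in exemplars for feature in set(exemplar))
    return {feature for feature, count in counts.items() if count / n >= threshold}


# A hypothetical dense category: most features recur across members.
dogs = [
    {"fur", "four_legs", "tail", "barks", "spots"},
    {"fur", "four_legs", "tail", "barks", "collar"},
    {"fur", "four_legs", "tail", "barks"},
    {"fur", "four_legs", "barks", "eye_patch"},
]
print(sorted(compress(dogs)))  # ['barks', 'four_legs', 'fur', 'tail']
```

Recurring features survive and the idiosyncratic ones (spots, collar, eye patch) are dropped without any supervision, which is why such a filter favors dense categories.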

In short, the critical feature of the compression-based system is that it can learn dense categories without supervision. Under some conditions, the compression-based system may also learn structures defined by a single dimension of variation (e.g., color or shape). For example, when there are only a few dimensions of variation (e.g., color and shape, with shape distinguishing among categories), compression may be sufficient for learning the category-relevant dimension. However, if categories are sparse, with only a few relevant dimensions and multiple irrelevant dimensions, learning the relevant dimensions by compression could take exceedingly long or fail altogether.

The critical aspect of the second system of category learning is the cognitive loop, which involves (in addition to the striatum) the dorsolateral prefrontal cortex and the ACC, the cortical areas that sub-serve attentional selectivity, working memory, and other aspects of executive function (cf. Posner & Petersen, 1990). I will therefore refer to this system as selection-based. The selection-based system enables attentional learning: allocating attention to some stimulus dimensions while ignoring others (e.g., Kruschke, 1992, 2001; Mackintosh, 1975; Nosofsky, 1986). Unlike the compression-based system, where learning is driven by the reduction and filtering of idiosyncratic features (while features and feature correlations that recur across instances are retained), learning in the selection-based system could be driven by error reduction. As schematically depicted in Figure 1B, attention is shifted toward dimensions that predict error reduction and away from those that do not (e.g., Kruschke, 2001; but see Blair et al., 2009).
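Error-driven attention shifting can be sketched in a few lines of Python. This is a deliberately simplified stand-in, not Kruschke's ALCOVE or any published model: it assumes binary dimensions, scores each dimension by the error of the best one-dimensional rule, and applies a multiplicative update so that attention drains away from dimensions whose rules leave more error. The names `dimension_errors`, `shift_attention`, `steps`, and `rate` are hypothetical.

```python
import math


def dimension_errors(items, labels):
    """Error rate of the best one-dimensional rule on each binary dimension."""
    errors = []
    for d in range(len(items[0])):
        # Rule "value 1 -> label 1"; the mirror-image rule has error 1 - err.
        mismatches = sum((item[d] == 1) != (label == 1) for item, label in zip(items, labels))
        err = mismatches / len(items)
        errors.append(min(err, 1 - err))
    return errors


def shift_attention(items, labels, steps=20, rate=2.0):
    """Start with uniform attention, then repeatedly move it toward low-error dimensions."""
    errors = dimension_errors(items, labels)
    weights = [1.0 / len(errors)] * len(errors)
    for _ in range(steps):
        # Multiplicative update: a dimension whose rule leaves more error loses weight faster.
        weights = [w * math.exp(-rate * e) for w, e in zip(weights, errors)]
        total = sum(weights)
        weights = [w / total for w in weights]  # attention treated as a limited resource summing to 1
    return weights


# Hypothetical sparse structure: dimension 0 predicts the category; dimensions 1 and 2 do not.
items = [(0, 0, 1), (0, 1, 0), (0, 1, 1), (1, 0, 0), (1, 1, 1), (1, 0, 1)]
labels = [0, 0, 0, 1, 1, 1]
print(dimension_errors(items, labels))
print([round(w, 3) for w in shift_attention(items, labels)])  # attention concentrates on dimension 0
```

The multiplicative form was chosen only because it keeps the weights positive and normalized; a gradient-based attention update would illustrate the same point.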

Given that attention has to be shifted to a relevant dimension, category learning within the selection-based system should be easier when there are fewer relevant dimensions (see Kruschke, 1993, 2001, for related arguments). This is because it is easier to shift attention to a single dimension than to allocate it across multiple dimensions. Therefore, the selection-based system is better suited to learning sparse categories (recall that the compression-based system is better suited to learning dense categories). For example, Kruschke (1993) describes an experiment in which participants learned categories in a supervised manner, with feedback presented on every trial. For some categories, a single dimension was relevant, whereas for other categories, two related dimensions were relevant for categorization. Participants learned better in the former than in the latter condition. Given that learning was supervised (i.e., category learning and the stimulus dimensions that might be relevant for categorization were mentioned explicitly, and feedback was given on every trial), it is likely that the selection-based system was engaged.

The selection-based system depends critically on prefrontal circuits because these circuits enable the selection of a relevant stimulus dimension (or rule) while inhibiting irrelevant dimensions. The selected (and perhaps amplified) dimension is likely to survive the compression in the striatum, whereas the non-selected (and perhaps weakened) dimensions may not. It is therefore not surprising that young children (whose selection-based system is still immature) tend to categorize more successfully when categories are based on multiple dimensions than when they are based on a single dimension (e.g., L. B. Smith, 1989).

How are the systems deployed? Although the precise mechanism remains unknown, several ideas have been proposed. For example, Ashby et al. (1998) posited competition between the systems, with the selection-based system being deployed by default. This idea stems from evidence that participants learned more readily when categories were based on a single dimension than when they were based on multiple dimensions (e.g., Ashby et al., 1998; Kruschke, 1993). However, it is possible that the selection-based system was triggered by feedback and an explicit learning regime, whereas in the absence of supervision the compression-based system is the default (cf. Kloos & Sloutsky, 2008). Furthermore, it seems unlikely that default deployment of the selection-based system accurately describes what happens early in development. As I argue in the next section, because some critical cortical components of the selection-based system mature relatively late, it is likely that early in development the competition is weakened (or even absent), thus making the compression-based system the default.

If the compression-based system is deployed by default early in development (and in adults as well when supervision is absent), this default deployment may have consequences for category learning. In particular, if a category is sparse, the compression-based system may fail to learn it because of the category's low signal-to-noise ratio. In contrast, the selection-based system may be able to increase the signal-to-noise ratio by shifting attention to the signal, either by amplifying the signal or by inhibiting noise.
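A back-of-the-envelope Python sketch shows why attention can raise the signal-to-noise ratio of a sparse category. It assumes, purely for illustration, that each relevant dimension contributes a fixed "signal" and each irrelevant dimension a fixed "noise", so that only the attention weights differ between a non-selective (uniform) and a selective weighting. The function `attention_snr` and all numbers are hypothetical.

```python
def attention_snr(weights, relevant, signal=1.0, noise=1.0):
    """Attention-weighted signal (relevant dims) divided by attention-weighted noise (irrelevant dims).

    `signal` and `noise` are hypothetical per-dimension magnitudes held constant,
    so any difference in the ratio comes from the attention weights alone.
    """
    s = sum(w * signal for d, w in enumerate(weights) if d in relevant)
    n = sum(w * noise for d, w in enumerate(weights) if d not in relevant)
    return s / n


n_dims, relevant = 8, {0}             # sparse: 1 relevant and 7 irrelevant dimensions
uniform = [1 / n_dims] * n_dims       # no selectivity: every dimension weighted equally
selective = [0.9] + [0.1 / 7] * 7     # attention shifted onto dimension 0

print(round(attention_snr(uniform, relevant), 3))    # 0.143
print(round(attention_snr(selective, relevant), 3))  # 9.0
```

With uniform weighting the single relevant dimension is swamped by the seven irrelevant ones; shifting attention amplifies the signal and suppresses the noise in the same step.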

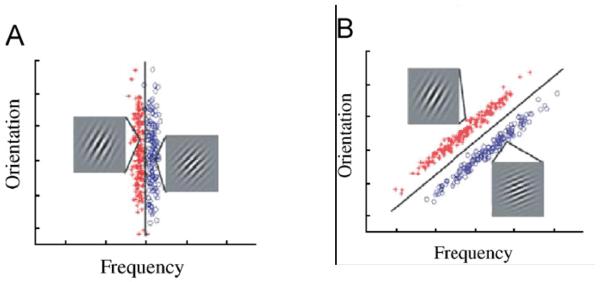

The idea of multiple systems of category learning has been supported by both fMRI and neuropsychological evidence. In one neuroimaging study, Nomura et al. (2007) scanned participants while they learned two categories of sine-wave gratings. The gratings varied on two dimensions: the spatial frequency and the orientation of the lines. In the rule-based condition, category membership was defined only by the spatial frequency of the lines (see Figure 2a), whereas in the “wholistic” condition, both frequency and orientation determined category membership (see Figure 2b). Each point in Figure 2 represents an item, and the colors represent distinct categories. Rule-based categorization showed greater differential activation in the hippocampus, the ACC, and the medial frontal gyrus, whereas wholistic categorization showed greater differential activation in the head and tail of the caudate.

Figure 2.

(After Nomura & Reber, 2008.) Rule-based (RB; A) and information-integration (II; B) stimuli. Each point represents a distinct Gabor patch (sine-wave) stimulus defined by orientation (tilt) and frequency (thickness of lines). In both stimulus sets, there are two categories (red and blue points). RB categories are defined by a vertical boundary (only frequency is relevant for categorization), whereas II categories are defined by a diagonal boundary (both orientation and frequency are relevant). Each panel also shows an example stimulus from each category.
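The two boundary types in Figure 2 can be written as two tiny Python classifiers. The sketch assumes that both dimensions are rescaled to the range 0 to 1, that the RB boundary sits at a hypothetical frequency of 0.5, and that the II boundary is the diagonal where frequency equals orientation; none of these values come from the original stimulus sets.

```python
def rb_category(frequency, orientation, boundary=0.5):
    """Rule-based: a vertical boundary, so `orientation` is accepted but never used."""
    return "A" if frequency < boundary else "B"


def ii_category(frequency, orientation):
    """Information-integration: a diagonal boundary where frequency equals orientation."""
    return "A" if frequency < orientation else "B"


# Same frequency, different orientations: only the II assignment changes.
for orientation in (0.2, 0.8):
    print(orientation, rb_category(0.4, orientation), ii_category(0.4, orientation))
# 0.2 A B
# 0.8 A A
```

A single verbalizable rule ("low frequency means A") suffices for the RB structure, whereas the II structure can only be solved by combining both dimensions.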

Further evidence for two systems of category learning stems from neuropsychological research. One of the most frequently studied populations is patients with Parkinson’s disease (PD), because the disease often affects frontal cortical areas in addition to striatal areas (e.g., van Domburg & ten Donkelaar, 1991). As a result, these patients often exhibit impairments in both the compression-based and the selection-based systems of category learning. Therefore, this group provides indirect rather than clear-cut evidence for the dissociation between the systems. For example, impairments of the compression-based system in PD were demonstrated by Knowlton, Mangels, and Squire (1996): patients with Parkinson’s disease (which affects the release of dopamine in the striatum) had difficulty learning probabilistic categories determined by the co-occurrence of multiple perceptual cues. Impairments of the selection-based system have been demonstrated in patients with damage to the prefrontal cortex (a group that often includes PD patients). Specifically, multiple studies using the Wisconsin Card Sorting Test (WCST; Berg, 1948; Brown & Marsden, 1988; Cools et al., 1984) found that these patients often exhibit impaired learning of categories based on verbal rules, as well as impairments in shifting attention from successfully learned rules to new rules (see Ashby et al., 1998, for a review).

In the WCST, participants have to discover an experimenter-defined matching rule (e.g., “objects with the same shape go together”) and respond according to the rule. In the middle of the task, the rule may change, and participants must then sort according to the new rule. Two aspects of the task are of interest, rule learning and response shifting, and both are likely to be sub-served by the selection-based system (see Ashby et al., 1998, for a discussion). There are several types of shifts, two of which are of particular interest for understanding the selection-based system: the reversal shift and the extradimensional shift.

The reversal shift consists of reassigning the values of the same dimension to different responses. For example, a participant could initially learn that “if Category A (say the color is green), then press button 1, and if Category B (say the color is red), then press button 2.” The reversal shift requires the participant to change the pattern of responding, such that “if Category A, then press button 2, and if Category B, then press button 1.” In contrast, the extradimensional shift consists of a change in which dimension is relevant. For example, if a participant initially learned that “if Category A (say the color is green), then press button 1, and if Category B (say the color is red), then press button 2,” the extradimensional shift would require a different pattern of responding: “if Category K (say the size is small), then press button 1, and if Category M (say the size is large), then press button 2.” Findings indicate that patients with lesions to the prefrontal cortices had substantial difficulties with extradimensional but not with reversal shifts on the WCST (e.g., Rogers, Andrews, Grasby, Brooks, & Robbins, 2000). Therefore, these patients did not have difficulty inhibiting the previously learned pattern of responding; rather, they had difficulty shifting attention to a formerly irrelevant dimension, which is indicative of an impairment of the selection-based system.
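The difference between the two shifts can be made explicit by representing a sorting rule as a relevant dimension plus a value-to-button mapping. In the Python sketch below, `Rule` and `shift_type` are hypothetical names, and the classification assumes that two rules on the same dimension use the same set of values.

```python
from typing import Dict, NamedTuple


class Rule(NamedTuple):
    dimension: str           # the stimulus dimension that is currently relevant
    mapping: Dict[str, int]  # value on that dimension -> response button


def shift_type(old: Rule, new: Rule) -> str:
    """Classify a rule change; assumes rules on the same dimension share the same values."""
    if new.dimension != old.dimension:
        return "extradimensional"  # a formerly irrelevant dimension now determines the response
    if new.mapping == old.mapping:
        return "no shift"
    return "reversal"  # same dimension, values reassigned to different buttons


color_rule = Rule("color", {"green": 1, "red": 2})
reversed_rule = Rule("color", {"green": 2, "red": 1})
size_rule = Rule("size", {"small": 1, "large": 2})
print(shift_type(color_rule, reversed_rule))  # reversal
print(shift_type(color_rule, size_rule))      # extradimensional
```

A reversal keeps attention on the same dimension and only remaps responses, whereas an extradimensional shift requires attention to move to a different dimension, which is the component attributed to the selection-based system.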

In sum, there is evidence that the compression-based and the selection-based systems may be dissociated in the brain. Furthermore, although both systems involve parts of the striatum, they differ with respect to other areas of the brain: whereas the selection-based system relies critically on the prefrontal cortex and the ACC, the compression-based system relies on the inferotemporal cortex. As I argue in the next section, the inferotemporal and prefrontal cortices may exhibit different maturational time courses. The relative immaturity of the prefrontal cortices early in development, coupled with the relative maturity of the inferotemporal cortex and the striatum, should result in young children having a more mature compression-based than selection-based system, and thus being more efficient in learning dense than sparse categories (cf. L. B. Smith, 1989; J. D. Smith & Kemler-Nelson, 1984).

3.3. Characteristics of the Learner: Differential Maturational Course of Brain Systems Underlying Category Learning

Many vertebrates have brain structures analogous to the inferotemporal cortex (IT) and the striatum, whereas only mammals have a developed prefrontal cortex (Striedter, 2005). Studies of normal brain maturation (Jernigan et al., 1991; Pfefferbaum et al., 1994; Caviness et al., 1996; Giedd et al., 1996a, 1996b; Sowell & Jernigan, 1999; Sowell et al., 1999a, 1999b) have indicated that brain morphology continues to change well into adulthood. As noted by Sowell et al. (1999a), maturation progresses in a programmed way, with phylogenetically more primitive regions of the brain (e.g., the brain stem and cerebellum) maturing earlier and more advanced regions (e.g., the association circuits of the frontal lobes) maturing later. In addition to studying the anatomy, physiology, and chemistry of the developing brain, researchers have studied the development of the functions sub-served by particular brain areas.

Given that the two learning systems differ primarily with respect to the cortical structures involved (the basal ganglia structures are involved in both systems), I will focus primarily on the maturational course of these cortical systems. I will first review data pertaining to the maturational course of IT and associated visual recognition functions and then pertaining to the prefrontal cortex and associated executive function.

3.3.1. Maturation of the Inferotemporal (IT) Cortex

Maturation of the IT cortex has been extensively studied in monkeys using single-cell recording techniques. As demonstrated by several researchers (e.g., Rodman, 1994; Rodman, Skelly, & Gross, 1991), many fundamental properties of IT emerge quite early. Most importantly, as early as 6 weeks of age, neurons in this cortical area exhibit adult-like patterns of responsiveness. In particular, researchers presented subjects with different images (e.g., monkey faces and objects varying in spatial frequency) while recording the electrical activity of IT neurons. They found that in both infant and adult monkeys, IT neurons exhibited a pronounced form of tuning, with different neurons responding selectively to different types of stimuli. These and similar findings led researchers to conclude that the IT cortex is predisposed to rapidly develop the major neural circuitry necessary for basic visual processing. Therefore, while some aspects of the IT circuitry may exhibit more prolonged development, the basic components develop relatively early. These findings contrast sharply with findings indicating a lengthy developmental time course of the prefrontal cortices (e.g., Bunge & Zelazo, 2006).

3.3.2. Maturation of the Prefrontal Cortex (PFC)

There is a wide range of anatomical, neuroimaging, neurophysiological, and neurochemical evidence indicating that the development of the PFC continues well into adolescence (e.g., Sowell et al., 1999b; see also Luciana & Nelson, 1998; Rueda, Fan, McCandliss, Halparin, Gruber, Lercari, & Posner, 2004; Davidson et al., 2006, for extensive reviews).

The maturational course of the PFC has been studied in conjunction with research on executive function, a cognitive function that depends critically on the maturity of the PFC (Davidson et al., 2006; Diamond & Goldman-Rakic, 1989; Fan, McCandliss, Sommer, Raz, & Posner, 2002; Goldman-Rakic, 1987; Posner & Petersen, 1990). Executive function comprises a cluster of abilities, such as holding information in mind while performing a task, switching between tasks or between different demands of a task, inhibiting a dominant response, deliberately selecting some information while ignoring other information, selecting among different responses, and resolving conflicts between competing stimulus properties and competing responses.

There is a large body of behavioral evidence that early in development children exhibit difficulties in deliberately focusing on relevant stimuli, inhibiting irrelevant stimuli, and switching attention between stimuli or stimulus dimensions (Diamond, 2002; Kirkham, Cruess, & Diamond, 2003; Napolitano & Sloutsky, 2004; Shepp & Swartz, 1976; Zelazo, Frye, & Rapus, 1996; Zelazo, Müller, Frye, & Marcovitch, 2003; see also Fisher, 2007, for a more recent review).

Maturation of the prefrontal structures in the course of individual development results in progressively more efficient executive function, including the ability to deliberately focus on what is relevant while ignoring what is irrelevant. This is a critical step in acquiring the ability to form abstract, similarity-free representations of categories and to use these representations in both category and property induction. Therefore, the development of relatively abstract category-based generalization may hinge on the development of executive function. As suggested above, while the selection-based system could be deployed by default in adults when learning is supervised (e.g., Ashby et al., 1998), it could be that early in development, it is the compression-based system that is deployed by default.

Therefore, there are reasons to believe that the cortical circuits that sub-serve the compression-based learning system (i.e., IT) come on-line earlier than the cortical circuits that sub-serve the selection-based learning system (i.e., PFC). Thus, it seems likely that early in development children would be more efficient in learning dense, similarity-bound categories (as these could be efficiently learned by the compression-based system) than sparse, similarity-free ones (as these require the involvement of the selection-based system).

In sum, understanding category learning requires understanding the interaction of at least three components: (a) the structure of the input, (b) the learning system that evolved to process this input, and (c) the characteristics of the learner in terms of the availability and maturity of each system. Understanding the interaction among these components leads to several important predictions. First, dense categories should be learned more efficiently by the non-deliberate, compression-based system, whereas sparse categories should be learned more efficiently by the more deliberate, selection-based system. Second, because the critical components of the selection-based system develop late (both phylo- and ontogenetically) relative to the compression-based system, learning of dense categories should be more universal, whereas learning of sparse categories should be limited to organisms that have a developed PFC. Third, because the selection-based system undergoes a more radical developmental transformation, learning of sparse categories should exhibit greater developmental change than learning of dense categories. Fourth, young children should be able to spontaneously learn dense categories that are based on multiple overlapping features, whereas they should have difficulty spontaneously learning sparse categories that have few relevant features or dimensions and multiple irrelevant ones. Note that the critical aspect here is not whether a category is defined by a single dimension or by multiple dimensions, but whether the category is dense or sparse. For example, it should be less difficult to learn a color-based categorization if color is the only dimension that varies across the categories, whereas it should be very difficult to learn a color-based categorization if items also vary on multiple irrelevant dimensions. And finally, given the immaturity of the selection-based system of category learning and of executive function, it seems implausible that early in development children can spontaneously use a single predictor as a category marker that overrides all other predictors. In particular, this immaturity casts doubt on the ability of infants or even young children to spontaneously use linguistic labels as category markers in category representation. Because the role of category labels in category representation is of critical importance for understanding conceptual development, I will focus on it in one of the sections below.

In what follows, I review empirical evidence that has accumulated over the years, with particular focus on research generated in my lab. Although many issues remain unresolved, I will present two lines of evidence supporting these predictions. First, I present evidence that category structure, learning system, and the developmental characteristics of the learner interact in category learning and category representation. In particular, early in development the compression-based system exhibits greater efficiency than the selection-based system. In addition, early in development, categories are represented perceptually, and only later do participants form more abstract, dimensional, rule-based, or lexicalized representations of categories. Second, the role of words in category learning is not fixed; rather, it undergoes developmental change: words initially affect the processing of visual input, and only gradually do they become category markers.

4. Interaction among Category Structure, Learning System and Characteristics of the Learner: Evidence from Category Learning and Category Representation

Recall that I hypothesized an interaction among (a) the structure of the category (in particular, its density), (b) the learning system that evolved to process this input, and (c) the characteristics of the learner in terms of the availability and maturity of each system. In what follows, I consider the components of this interaction with respect to category learning and category representation.

4.1. Category Learning

As discussed above, there are reasons to believe that in the course of individual development, the compression-based system comes online earlier than the selection-based system (i.e., because of the protracted immaturity of executive function, which sub-serves the selection-based system). Therefore, it seems plausible that, at least early in development, the compression-based system is deployed by default, whereas the selection-based system has to be triggered explicitly (see Ashby et al., 1998, for arguments that this may not be the case in adults). It is also possible that certain experimental manipulations could trigger the non-default system. In particular, the selection-based system could be triggered by explicit supervision or an error signal.

If the systems are dissociated, then sparse categories that depend critically on selective attention (as they require focusing on a few relevant dimensions, while ignoring irrelevant dimensions) may be learned better under the conditions triggering the selection-based system. At the same time, dense categories that have much redundancy may be learned better under the conditions of implicit learning. Finally, because dense categories could be efficiently learned by the compression-based system, which is more primary, both phylo- and ontogenetically, learning of dense categories should be more universal than learning of sparse categories. In what follows, I review evidence exemplifying these points.

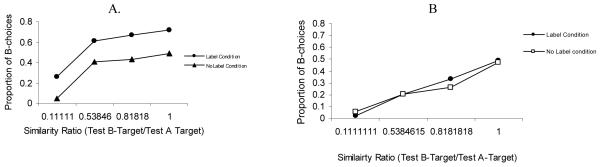

4.1.1. Interactions between Category Structure and the Learning System

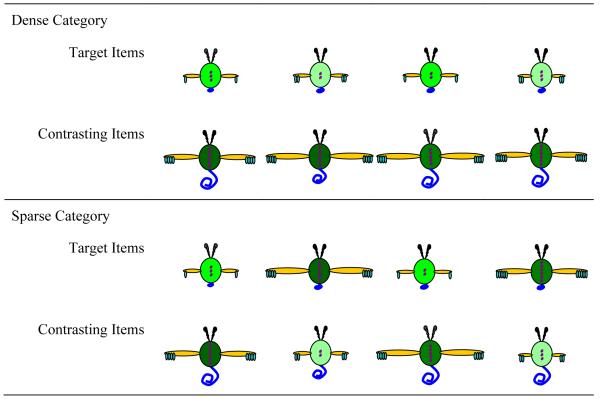

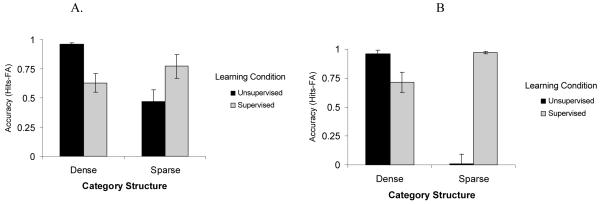

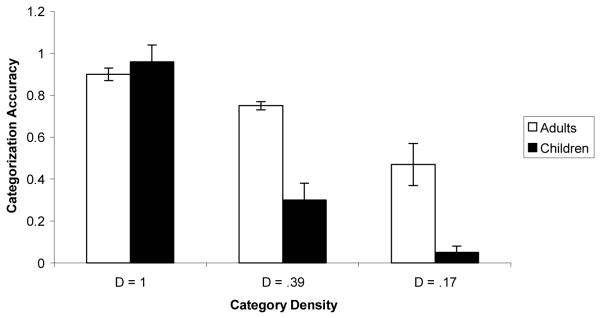

In a recent study (Kloos & Sloutsky, 2008), we demonstrated that category structure interacts with the learning system as well as with the characteristics of the learner. In this study, 5-year-olds and adults were presented with a category learning task in which they learned either dense or sparse categories. These categories consisted of artificial bug-like creatures with a number of varying features: the sizes of the tail, wings, and fingers; the shadings of the body, antenna, and buttons; and the numbers of fingers and buttons (see Figure 3 for examples of categories). Category learning was administered under either an unsupervised, spontaneous learning condition (i.e., participants were merely shown the items) or a supervised, deliberate learning condition (i.e., participants were told the category inclusion rule). Recall that the former learning condition was expected to trigger the compression-based system of category learning, whereas the latter was expected to trigger the selection-based system. If category structure interacts with the learning system, then implicit, unsupervised learning should be better suited to learning dense categories, whereas explicit, supervised learning should be better suited to learning sparse categories. This is exactly what was found: for both children and adults, dense categories were learned better under the unsupervised, spontaneous learning regime, whereas sparse categories were learned more efficiently under the supervised learning regime. Critical data from this study are presented in Figure 4. The figure presents categorization accuracy after the category learning phase, computed as the proportion of hits (correct identification of category members) minus the proportion of false alarms (acceptance of non-members as members).
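The accuracy measure used in Figure 4 (hit rate minus false-alarm rate) can be computed as in the Python sketch below. The function name and the eight test responses are hypothetical and are not data from Kloos and Sloutsky (2008).

```python
def categorization_accuracy(responses, is_member):
    """Hit rate minus false-alarm rate.

    responses: True where the participant accepted the item as a category member.
    is_member: True where the item really is a category member.
    """
    members = [r for r, m in zip(responses, is_member) if m]
    non_members = [r for r, m in zip(responses, is_member) if not m]
    hits = sum(members) / len(members)                  # members correctly accepted
    false_alarms = sum(non_members) / len(non_members)  # non-members wrongly accepted
    return hits - false_alarms


# Hypothetical test phase: 4 members followed by 4 non-members.
is_member = [True, True, True, True, False, False, False, False]
responses = [True, True, True, False, True, False, False, False]
print(categorization_accuracy(responses, is_member))  # 0.5
```

Subtracting false alarms corrects for a bias toward accepting every item: a participant who says "yes" to everything scores 1 - 1 = 0, not 1.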

Figure 3.

Examples of items used in Kloos and Sloutsky (2008), Experiment 1. In the dense category, items are bound by similarity, whereas in the sparse category, the length of the tail predicts category membership.

Figure 4.

Mean accuracy scores by category type and learning condition in adults (A) and in children (B). In this and all other figures, error bars represent standard errors of the mean. For the dense category, D = 1; for the sparse category, D = 0.17.

These findings dovetail with results reported by Yamauchi, Love, & A. Markman (2002) and Yamauchi & A. Markman (1998) in adults. In these studies, participants completed a category learning task that had two learning conditions, classification and inference. In the classification condition, participants learned categories by predicting category membership of each study item. In the inference condition, participants learned categories by predicting a feature shared by category members. Across the conditions, results revealed a category structure by learning condition interaction. In particular, non-linearly-separable (NLS) categories (which are typically sparser) were learned better in the classification condition, whereas prototype-based categories (which are typically denser) were learned better in the inference condition.

The interaction between category structure and the learning system has recently been demonstrated by Hoffman and Rehder (submitted) with respect to the cost of selectivity in category learning. Similar to Yamauchi and A. Markman (1998), participants learned categories either by classification or by feature inference. In the classification condition, participants were presented with two categories (e.g., A and B). On each trial, they saw an item, and their task was to predict whether it was a member of A or B. In the inference condition, participants were also presented with categories A and B. On each trial, they saw an item with one missing feature, and their task was to predict whether the missing feature was the one common to A or the one common to B. In both conditions, participants received feedback after responding.

Each category had three binary dimensions whose values were designated as 0 or 1. There were two learning phases. In Phase 1, participants learned two categories, A and B, with dimensions 1 and 2 distinguishing between the categories and dimension 3 fixed across them (e.g., all items had a value of 0 on dimension 3). In Phase 2, participants learned two other categories, C and D, with dimensions 1 and 2 again distinguishing between the categories and dimension 3 again fixed (e.g., now all items had a value of 1 on dimension 3). After the two training phases, participants were given categorization trials involving contrasts between categories that had not been paired during training (e.g., A vs. C). Note that correct responding on these novel contrasts required attending to dimension 3, which had been irrelevant during training. If participants attend selectively to dimensions, their attention should have been allocated to dimensions 1 and 2 during learning, which should have attenuated attention to dimension 3. This attenuated attention represents the cost of selectivity. Alternatively, if no selectivity is involved, there should be little or no attenuation and, therefore, little or no cost. The cost was found to be higher for classification learners than for inference learners, suggesting that classification learning, but not inference learning, engages the selection-based system.
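The logic of the cost of selectivity can be sketched with attention-weighted distances between category prototypes. The prototype values below are a hypothetical assignment consistent with the design just described (dimensions 1 and 2 separate the paired categories, dimension 3 is fixed within each phase), not the actual stimuli used by Hoffman and Rehder, and the two attention profiles are illustrative.

```python
def weighted_distance(x, y, attention):
    """City-block distance in which each dimension is scaled by its attention weight."""
    return sum(w * abs(a - b) for w, a, b in zip(attention, x, y))


# Hypothetical prototypes: dims 1-2 separate A from B (and C from D); dim 3 is 0 in Phase 1, 1 in Phase 2.
prototypes = {"A": (0, 0, 0), "B": (1, 1, 0), "C": (0, 0, 1), "D": (1, 1, 1)}

selective = (0.5, 0.5, 0.0)      # attention confined to the dimensions that mattered during training
diffuse = (1 / 3, 1 / 3, 1 / 3)  # no attenuation of dimension 3

for name, attention in (("selective", selective), ("diffuse", diffuse)):
    trained = weighted_distance(prototypes["A"], prototypes["B"], attention)  # contrast seen in training
    novel = weighted_distance(prototypes["A"], prototypes["C"], attention)    # contrast needing dimension 3
    print(name, round(trained, 3), round(novel, 3))
```

Under selective attention, A and C become indistinguishable even though A and B are maximally separated; that lost discriminability on the novel contrast is the cost of selectivity.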

4.1.2. Developmental Primacy of the Compression-Based System

Zentall et al. (2008) present an extensive literature review indicating that although birds, monkeys, apes, and humans are capable of learning categories consisting of highly similar yet discriminable items (i.e., dense categories), only some apes and humans could learn sparse relational categories, such as “sameness,” in which an equivalence class consists of dissimilar items (e.g., a pair of red squares and a pair of blue circles are members of the same sparse category). However, even here it is not clear that subjects were learning a sparse category. As shown by Wasserman and colleagues (e.g., Wasserman, Young, & Cook, 2004), non-human animals readily distinguish situations with no variability in the input (i.e., zero entropy) from situations where the input has stimulus variability (i.e., non-zero entropy). Therefore, it is possible that learning was based on the distinction between zero entropy in each of the “same” displays and non-zero entropy in each of the “different” displays.
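The entropy distinction can be made concrete with Shannon entropy over the icons in a single display. The Python sketch assumes, for illustration, displays of 16 icons; it says nothing about how animals might compute such a quantity, only that "same" and "different" displays are separable by it without any relational concept.

```python
import math
from collections import Counter


def display_entropy(icons):
    """Shannon entropy, in bits, of the icon distribution within one display."""
    n = len(icons)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(icons).values())


same = ["square"] * 16                        # every icon identical
different = [f"icon_{i}" for i in range(16)]  # every icon distinct
print(display_entropy(same), display_entropy(different))  # 0.0 4.0
```

Any "same" display has zero entropy regardless of which icon it repeats, so a learner keyed to this single statistic could sort displays correctly without representing sameness as a sparse category.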

The idea of the developmental primacy of the compression-based system is supported by data from Kloos and Sloutsky (2008) reviewed above. In particular, data presented in Figure 4 clearly indicate that for both children and adults, sparse categories were learned better under the explicit, supervised condition, whereas dense categories were learned better under the implicit, unsupervised condition. Also note that adults learned the sparse category even in the unsupervised condition, whereas young children exhibited no evidence of learning. These data support the contention that the compression-based system is the default in young children.

In addition, data from Kloos and Sloutsky (2008) indicate that while both children and adults readily learned a dense category spontaneously, there were marked developmental differences in spontaneous learning of sparse categories. Categorization accuracy in the spontaneous condition by category density and age is presented in Figure 5. Two aspects of these data are worth noting. First, there was no developmental difference in spontaneous learning of the very dense category, which suggests that the compression-based system of category learning functions at the adult level in 4- to 5-year-olds. Second, there were substantial developmental differences in spontaneous learning of sparser categories, which suggests that adults, but not young children, may spontaneously deploy the selection-based system of category learning. Therefore, the marked developmental differences pertain mainly to the deployment and functioning of the selection-based system, not the compression-based system (see also Hammer, Diesendruck, Weinshall, & Hochstein, 2009, for related findings).

Figure 5.

Unsupervised category learning by density and age group in Kloos and Sloutsky (2008).

Additional evidence for the developmental primacy of the compression-based learning system comes from research demonstrating that young children can learn complex contingencies implicitly, but not explicitly (Sloutsky & Fisher, 2008). The main idea behind the Sloutsky and Fisher (2008) experiments was that implicit (and perhaps compression-based) learning of complex contingencies might underlie seemingly selective generalization behaviors of young children. There is much evidence suggesting that even early in development, people’s generalization can be selective: depending on the situation, people may rely on different kinds of information. This selectivity has been found in a variety of generalization tasks, including lexical extension, categorization, and property induction. For example, in a lexical extension task (Jones, Smith, & Landau, 1991), 2- and 3-year-olds were presented with a named target (i.e., “this is a dax”) and were then asked to find another dax among test items. Children extended the label by shape alone when the target and test objects were presented without eyes. However, they extended the label by shape and texture when the objects were presented with eyes.

Similarly, in a categorization task, 3- and 4-year-olds were more likely to group items on the basis of color if the items were introduced as food, but on the basis of shape if the items were introduced as toys (Macario, 1991). More recently, Opfer and Bulloch (2007) examined flexibility in lexical extension, categorization, and property induction tasks. They found that across these tasks, 4- to 5-year-olds relied on one set of perceptual predictors when the items were introduced as “parents and offspring,” but on another set of perceptual predictors when the items were introduced as “predators and prey.” These findings pose an interesting problem: is this putative selectivity sub-served by the selection-based system or by the compression-based system? Given the critical immaturity of the selection-based system early in development, the latter possibility seems more plausible. Sloutsky and Fisher’s (2008) study supported this possibility.

A key idea is that many stimulus properties inter-correlate, such that some clusters of properties co-occur with particular outcomes and other clusters co-occur with different outcomes, resulting in a dense “context-outcome” structure (cf. the idea of “coherent covariation” presented in Rogers & McClelland, 2004). Learning these correlations may result in differential allocation of attention to different stimulus properties in different situations or contexts, with flexible generalization being a result of this learning. In particular, participants could learn the following set of contingencies: in Context 1, Dimension 1 (say, color) is predictive, whereas Dimension 2 (say, shape) is not, and the reverse is true in Context 2. If, as argued above, the system of implicit compression-based learning is fully functioning even early in development, then the greater the number of contextual variables correlating with the relevant dimension (i.e., the greater the density), the greater the likelihood of learning. However, if learning is selection-based, the reverse may be the case, because the larger the number of relevant dimensions, the more difficult it could be to formulate the contingency as a simple rule.
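The density prediction can be illustrated with a simple associative learner. The sketch below assumes a Rescorla-Wagner-style delta rule as a stand-in for compression-based learning. It is not the model used by Sloutsky and Fisher (2008), and the learning rate, number of trials, and abstract contextual cues are hypothetical. Because all active cues share a single prediction error, each additional cue that correlates with the predictive dimension speeds up learning of the context-dimension contingency, and no rule ever has to be formulated.

```python
def train_context_learner(cues_per_context, n_trials=20, alpha=0.05):
    """Delta-rule learner mapping contextual cues to the predictive dimension.

    Features 0..k-1 are the cues of Context 1 and k..2k-1 the cues of Context 2
    (hypothetical cues such as background color or screen location).
    Each feature has two weights: [shape is predictive, color is predictive].
    Assumes alpha * cues_per_context < 1 so that learning does not overshoot.
    """
    k = cues_per_context
    weights = [[0.0, 0.0] for _ in range(2 * k)]
    for trial in range(n_trials):
        context = trial % 2  # alternate Context 1 (0) and Context 2 (1)
        active = range(context * k, (context + 1) * k)
        # Shape is predictive in Context 1, color in Context 2.
        target = (1.0, 0.0) if context == 0 else (0.0, 1.0)
        for dim in range(2):
            prediction = sum(weights[f][dim] for f in active)
            error = target[dim] - prediction  # one error shared by all active cues
            for f in active:
                weights[f][dim] += alpha * error
    return weights


def shape_bias(weights, context, cues_per_context):
    """Positive: the learner attends to shape in this context; negative: to color."""
    k = cues_per_context
    active = range(context * k, (context + 1) * k)
    return sum(weights[f][0] - weights[f][1] for f in active)


for k in (1, 2, 3):
    w = train_context_learner(k)
    print(k, round(shape_bias(w, 0, k), 3), round(shape_bias(w, 1, k), 3))
```

With these settings, the shape bias in Context 1 increases as the number of correlated cues goes from 1 to 3. The Context 2 bias is its mirror image: negative, favoring color.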

These possibilities were tested in multiple experiments reported in Sloutsky and Fisher (2008). In these experiments, 5-year-olds were presented with triads of geometric objects differing in color and shape. Each triad consisted of a Target and two Test items. Participants were told that a prize was hidden behind the Target, and their task was to determine which Test item had a prize behind it, that is, to generalize information about the Target to one of the Test items. Children were trained that in Context 1 the shape of an item was predictive of the outcome, whereas in Context 2 color was predictive. Context was defined by the color of the background on which the stimuli appeared and by the location of the stimuli on the screen: in Context 1, training stimuli appeared on a yellow background in the upper-right corner of the computer screen, whereas in Context 2 they appeared on a green background in the bottom-left corner. Each participant was given three training blocks: in one block only color was predictive, in another only shape was predictive, and the third block was a mixture of the former two. Participants were then presented with testing triads that differed from the training triads in an important way. Training triads were “unambiguous”: only one dimension of variation (either color or shape) was predictive, and only one Test item matched the Target on the predictive dimension. Testing triads, in contrast, were “ambiguous”: one Test item matched the Target on one dimension, and the other Test item matched the Target on the other dimension. The only disambiguating factor was the context.
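The logic of the training and testing triads can be sketched as a small data structure. In the Python sketch below, the particular colors and shapes are hypothetical. For brevity, the two contextual variables (background color and screen location) are collapsed into a single context identifier. For an ambiguous testing triad, the items alone do not determine the answer; only the learned context-dimension mapping does.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    color: str
    shape: str


# Trained contingency: shape is predictive in Context 1, color in Context 2.
PREDICTIVE_DIMENSION = {1: "shape", 2: "color"}


def matches(a, b, dimension):
    return getattr(a, dimension) == getattr(b, dimension)


def is_ambiguous(target, test_a, test_b):
    """True for testing-style triads: each Test item matches the Target on
    exactly one dimension, and the two Test items match on different ones."""
    a_shape, a_color = matches(target, test_a, "shape"), matches(target, test_a, "color")
    b_shape, b_color = matches(target, test_b, "shape"), matches(target, test_b, "color")
    return (a_shape and not a_color and b_color and not b_shape) or (
        a_color and not a_shape and b_shape and not b_color
    )


def choose(context, target, test_a, test_b):
    """Pick the Test item matching the Target on the context's predictive dimension.

    Assumes, as in both training and testing triads, that exactly one Test item
    matches on that dimension.
    """
    dim = PREDICTIVE_DIMENSION[context]
    return test_a if matches(target, test_a, dim) else test_b


# A hypothetical ambiguous testing triad.
target = Item("red", "circle")
test_a, test_b = Item("red", "square"), Item("blue", "circle")
```

For this triad, `choose` selects the blue circle in Context 1 (shape match) and the red square in Context 2 (color match). A training-style triad such as a red square and a blue triangle paired with a red circle target is not ambiguous, because only one Test item matches at all.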

Participants had no difficulty learning the contingency between the context and the predictive dimension when multiple contextual variables correlated with the predictive dimension: children tested in Context 1 primarily relied on shape, and children tested in Context 2 primarily relied on color. Learning, however, attenuated markedly when the number of contextual variables was reduced, which should not have happened if learning had been selection-based. Finally, when presented with testing triads and explicitly given a simple rule (e.g., asked to make choices by focusing either on color or on shape), children were unable to focus on the required dimension. These findings present further evidence for the developmental asynchrony of the two learning systems: while 5-year-old children could readily perform the task when relying on the compression-based learning system, they were unable to perform it when they had to rely on the selection-based system. In sum, an emerging body of evidence from category learning suggests an interaction between category structure and learning system, pointing to developmental asynchronies between the two systems. Future research should re-examine category structure and category learning in infancy. In particular, given the critical immaturity of the selection-based system, most (if not all) category learning in infancy should be accomplished by the compression-based system.

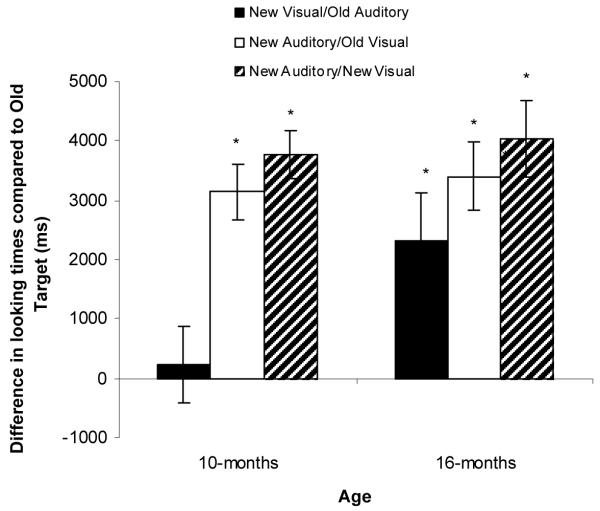

4.2. Category Representation

In the previous section, I reviewed evidence indicating that category learning is affected by an interaction among category structure, the learning systems processing this structure, and the characteristics of the learner. In this section, I review evidence that components of this interaction also shape category representation. Most of the evidence reviewed in this section pertains to developmental asynchronies between the learning systems. Two interrelated lines of evidence will be presented: (1) the development of selection-based category representation and (2) the changing role of linguistic labels in category representation.

4.2.1. The Development of Selection-based Category Representation

If the compression-based and the selection-based learning systems mature asynchronously, such that early in development the former system exhibits greater maturity than the latter, then it is likely that most spontaneously acquired categories are learned implicitly by the compression-based learning system. If this is the case, young children are unlikely to form abstract rule-based representations of spontaneously acquired categories and are instead likely to form perceptually rich representations. A representation of a category is abstract if category items are represented by either a category inclusion rule or a lexical entry. A representation of a category is perceptually rich if it retains (more or less fully) the perceptual detail of individual exemplars.

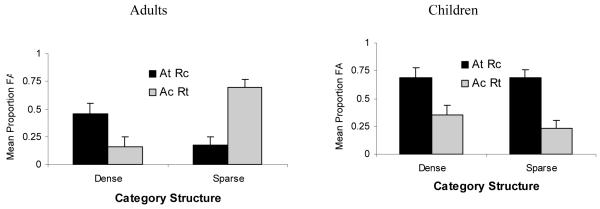

One way of examining category representation is to focus on what people remember about category members. For example, Kloos and Sloutsky (2008, Experiment 4B) presented 5-year-olds and adults with a category learning task. As in the Kloos and Sloutsky (2008) experiment described above, there were two between-subjects conditions, with some participants learning a dense category and some learning a sparse category. Both categories consisted of the artificial bug-like creatures described above, which had a number of varying features: sizes of tail, wings, and fingers; the shadings of body, antenna, and buttons; and the numbers of fingers and buttons. The relation between the latter two features defined the arbitrary rule: members of the target category had either many buttons and many fingers or few buttons and few fingers. All the other features constituted the appearance features. Members of the target category had a long tail, long wings, short fingers, dark antennas, a dark body, and light buttons (target appearance, AT), whereas members of the contrasting category had a short tail, short wings, long fingers, light antennas, a light body, and dark buttons (contrasting appearance, AC). All participants were presented with the same set of items; however, in the sparse condition participants’ attention was focused on the inclusion rule, whereas in the dense condition it was focused on appearance information. This was achieved by varying the description of the items across conditions. In the sparse-category condition, the description was: “Ziblets with many aqua fingers on each yellow wing have many buttons, and Ziblets with few aqua fingers on each yellow wing have few buttons.” In the dense-category condition, the appearance of exemplars was described in addition to the rule. In both conditions, appearance features were probabilistically related to category membership, whereas the rule was fully predictive.
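The category structure can be summarized in code. The Python sketch below encodes a Ziblet by its two rule-relevant features (many vs. few fingers and buttons) and a tuple of six appearance features, each marked True when it takes the target-appearance value. This encoding is a hypothetical simplification of the actual drawings. The rule is an equivalence between the two numerosity features, whereas appearance yields only a graded score. As a result, an item can follow the target rule while looking like a contrast-category member, or the reverse.

```python
from dataclasses import dataclass

# Appearance features, each True when it has the target-appearance (AT) value:
# long tail, long wings, short fingers, dark antennas, dark body, light buttons.
APPEARANCE_FEATURES = ("tail", "wings", "finger_size", "antennas", "body", "button_shade")


@dataclass(frozen=True)
class Ziblet:
    many_fingers: bool
    many_buttons: bool
    appearance: tuple  # one bool per entry in APPEARANCE_FEATURES

    def __post_init__(self):
        if len(self.appearance) != len(APPEARANCE_FEATURES):
            raise ValueError("expected one value per appearance feature")


def follows_target_rule(z: Ziblet) -> bool:
    """Fully predictive rule: many fingers with many buttons, or few with few."""
    return z.many_fingers == z.many_buttons


def target_appearance_score(z: Ziblet) -> float:
    """Proportion of appearance features with the target value (0.0 to 1.0).

    Appearance is only probabilistically related to membership, so this is a
    graded cue rather than a definition.
    """
    return sum(z.appearance) / len(z.appearance)


# Full target appearance but violating the target rule (an ATRC-like item).
lure = Ziblet(many_fingers=True, many_buttons=False, appearance=(True,) * 6)
```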
After training, participants were tested on their category learning and then presented with a surprise recognition task. During the recognition phase, they were presented with four types of recognition items: ATRT (items that had both the appearance and the rule of the Target category), ACRC (items that had both the appearance and the rule of the Contrast category), ATRC (items that had the appearance of the Target category and the rule of the Contrast category), and ACRT (items that had the appearance of the Contrast category and the rule of the Target category). If participants learned the category, they should accept ATRT items and reject ACRC items. In addition, if participants’ representation of the category is based on the rule, they should false alarm on ACRT, but not on ATRC, items. Conversely, if participants’ representation of the category is based on appearance, they should false alarm on ATRC, but not on ACRT, items.
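The predicted false-alarm patterns follow directly from which information the representation encodes. The Python sketch below is a deterministic simplification of the predictions stated above. It assumes that a learner accepts any item that matches the Target category on whatever its representation encodes. The function names are hypothetical.

```python
# Recognition item types: (appearance, rule), where "T" = Target and "C" = Contrast.
ITEM_TYPES = {
    "ATRT": ("T", "T"),
    "ACRC": ("C", "C"),
    "ATRC": ("T", "C"),
    "ACRT": ("C", "T"),
}


def accepts(item_type: str, representation: str) -> bool:
    """Would a learner with this representation accept the item?

    Simplifying assumption: acceptance depends only on whether the item matches
    the Target category on the information the representation encodes.
    """
    appearance, rule = ITEM_TYPES[item_type]
    if representation == "rule":
        return rule == "T"
    if representation == "appearance":
        return appearance == "T"
    raise ValueError(f"unknown representation: {representation!r}")


def predicted_false_alarms(representation: str) -> list:
    """Critical lures (mixed items) that would be wrongly accepted."""
    return [t for t in ("ATRC", "ACRT") if accepts(t, representation)]


for rep in ("rule", "appearance"):
    print(rep, predicted_false_alarms(rep))
```

Under this simplification, both representations accept ATRT and reject ACRC. The rule-based representation predicts false alarms on ACRT only, and the appearance-based representation predicts false alarms on ATRC only.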

False alarm rates by age and test item type are presented in Figure 6. As can be seen in the figure, adults were more likely to false alarm on same-appearance items (i.e., ATRC) in the dense condition and on same-rule items (i.e., ACRT) in the sparse condition. In contrast, young children were likely to false alarm on same-appearance items (i.e., ATRC) in both conditions. These results suggest that in adults, dense and sparse categories could be represented differently: the former perceptually and the latter more abstractly. At the same time, 5-year-old children are likely to represent both dense and sparse categories perceptually. These data suggest that the representation of sparse (but not dense) categories changes in the course of development.

Figure 6.

False alarm rate by category structure and foil type in adults and children in Kloos and Sloutsky (2008), Experiment 4.

These findings, however, were limited to newly learned categories that were not lexicalized. What about the representation of lexicalized dense categories? One possibility is that lexicalized dense categories are also represented perceptually, similar to newly learned dense categories. In this case, there should be no developmental differences in the representation of lexicalized dense categories. Alternatively, representations of lexicalized dense categories may include the linguistic label (which could be the most reliable guide to category membership). In particular, it is possible that lexicalization of a perceptual grouping eventually results in an abstract label-based representation (in the limit, a member of a category could be represented just by its label). If this is the case, then there should be substantial developmental differences in the representation of lexicalized dense categories. Furthermore, adults should then represent highly familiar lexicalized dense categories (e.g., cat) differently from newly learned non-lexicalized dense categories (e.g., categories consisting of bug-like creatures): they should form an abstract representation of the former, but not the latter.