Summary

In semiparametric models, the dimension d of the maximum likelihood problem is potentially unlimited. Conventional estimation methods generally behave like O(d3). A new O(d) estimation procedure is proposed for a large class of semiparametric models. Potentially unlimited dimension is handled in a numerically efficient way through a Nelson–Aalen-like estimator. Discussion of the new method is put in the context of recently developed minorization–maximization algorithms based on surrogate objective functions. The procedure for semiparametric models is used to demonstrate three methods to construct a surrogate objective function: using the difference of two concave functions, the EM way and the new quasi-EM (QEM) approach. The QEM approach is based on a generalization of the EM-like construction of the surrogate objective function so it does not depend on the missing data representation of the model. Like the EM algorithm, the QEM method has a dual interpretation, a result of merging the idea of surrogate maximization with the idea of imputation and self-consistency. The new approach is compared with other possible approaches by using simulations and analysis of real data. The proportional odds model is used as an example throughout the paper.

Keywords: EM algorithm, Frailty, Nonparametric maximum likelihood estimation, Profile likelihood, Semiparametric models

1. Introduction

Potentially unlimited dimension has been the most critical deterrent to the use of maximum likelihood estimation (MLE) in semiparametric regression models. In survival analysis, methods based on the partial likelihood (Cox, 1972) are specific to the proportional hazards (PH) model and do not extend to other models. Straightforward Newton-type methods of maximizing the likelihood for the full model generally require O(d3) operations to solve the system of score equations, where d is the number of model parameters. The principal part of the set of d parameters in a semiparametric model is used to specify a stepwise function H which approaches a continuous ‘true’ H in probability, as d → ∞. Although theoretically almost any likelihood can be maximized by a Newton-type method, its high complexity makes the problem computationally difficult for large d. The development of general, stable and numerically efficient algorithms for semiparametric MLE has been a long-standing problem (Fleming and Lin, 2000). Such algorithms are the subject of this paper. The argument goes as follows. The bottle-neck of a maximization algorithm for a semiparametric likelihood is the estimation of H. Let l be the log-likelihood of a semiparametric model, treated as a functional of H. Consider a class of continuous semiparametric models with the log-likelihood of the form (informally)

| (1) |

where Dt is the number of exact observations at t (failures), z is a vector of covariates and ϑ > 0 is some functional of H. The basic assumption that contributes to equation (1) is that the probability of failure in [t, t + dt] is proportional to dH(t), which is differentiability. To obtain an estimator for H, we differentiate l with respect to the set of {dH(τ)}. Informally, we arrive at the so-called self-consistency equation

| (2) |

where Θ is a functional representing a negative ‘derivative’ of log(ϑ). Since both sides of equation (2) depend on H, an iterative procedure is required to make the equation self-consistent,

| (3) |

where k counts iterations. Iterative updating of H by using equation (3) is the basic idea behind the algorithm. As we shall see, the above procedure is intimately linked to the EM algorithm as used to fit certain PH frailty models in survival analysis (Oakes, 1989; Klein, 1992; Nielsen et al., 1992). The EM algorithm handles H in an O(d) way through the use of the Nelson–Aalen–Breslow estimator (Andersen et al., 1993) for the cumulative hazard H. This is made possible as the M-step reduces to the PH model. However, a large amount of analytic work would be required to specify an estimation procedure for a new non-PH model. Expectation at the E-step may prove to be inaccessible in a closed form, and Monte Carlo extensions of the EM approach are much less computationally attractive. Recently, an optimization transfer approach (Lange et al., 2000) was proposed that allows us to construct EM-like procedures without the use of missing data. For a target function l(x), x ∈ ℝn, the minorization–maximization (MM) algorithm (Lange et al., 2000) proceeds by construction of the so-called surrogate objective function Q(x|y) such that Q(y|y) = l(y), and Q(x|y) ≤ l(y), for any x, to ensure monotonicity of the procedure. Maximization of the target function l proceeds iteratively as

| (4) |

The MM algorithm converges in l and in x under fairly general conditions (Lange et al., 2000). In the likelihood interpretation, the EM algorithm is a particular case of the MM algorithm. Unfortunately, there is no automatic way to construct Q. The procedure (3) interpreted as an MM algorithm is used to highlight three methods to construct a surrogate objective function: using the difference of two concave functions, the EM way and a new quasi-EM (QEM) approach. These methods link the EM algorithm for frailty models and its modifications with the MM algorithms. In the QEM approach, ‘E’ in the EM is replaced by the quasi-expectation operator QE, which is not based on the concept of a random variable. The result is the so-called QEM algorithm, which presents us with a recipe of generalizing an EM procedure into a ‘distribution-free’ one, representing a particular MM algorithm.

2. Profile likelihood approach

The problem of nonparametric maximum likelihood estimation (NPMLE) with the semiparametric model is to find estimates of regression coefficients β and an NPMLE estimate of H such that they deliver the maximum of a suitably defined likelihood function l = l(β, H). In this paper we use a profile likelihood approach to maximize l. The profile likelihood is defined as a supremum of the full likelihood taken over the nonparametric part of the model

| (5) |

Assuming that we can find the global maximum of l with respect to H, given β, we may write the profile likelihood as an implicit function of β

| (6) |

where H(β) is the solution of equation (5). Our algorithms will be designed following a straightforward nested procedure:

maximize lpr(β) by a conventional non-linear programming method (e.g. a directions set method);

for any β as demanded in the above maximization procedure, solve problem (5).

As the number of parameters of a semiparametric model is potentially unlimited, obtaining the inverse of the full information matrix can be computationally prohibitive. Therefore, we use the profile information matrix

| (7) |

to derive a standard error estimator for β. In this paper we adopt a pragmatic numerical approach. In the course of maximization of the profile likelihood with respect to β, a dense sample of the profile likelihood surface is generated near a stationary point. The curvature of the profile likelihood surface at the stationary point can be estimated by fitting a quadratic function to some domain around the point by using least squares. For example, the domain can be limited to points that cannot be rejected by using the likelihood ratio test (applied informally). Alternatively, a more sophisticated approach can be used based on implicit differentiation of lpr.

The rest of the paper will be devoted to constructing efficient NPMLE methods for obtaining lpr, i.e. for maximizing l with respect to H, given β, as this is the crux of the matter.

Practically, inference based on the profile likelihood is similar to that based on the partial likelihood for the PH model, which is quite convenient. Theoretically, however, inference based on the profile likelihood is not straightforward, as the usual theory of MLE does not apply to unlimited dimension. Important results have been obtained regarding a theoretical justification for the NPMLE method and the profile likelihood for semiparametric models (Murphy, 2000; van der Vaart, 1998; Murphy and van der Vaart, 1997). It was shown that profile likelihoods with nuisance parameters estimated out behave like ordinary likelihoods under some conditions. In particular, these results apply to the PH model, the proportional odds (PO) model (Murphy, 2000; Murphy et al., 1997) and the PH frailty model (Murphy, 1994, 1995), and presumably to most other models.

Let ti, i = 1,…,n, be a set of times, arranged in increasing order, and define tn+1 ≔ ∞. Associated with each ti is a set of individuals 𝒟i with time-independent covariates zij, j ∈ 𝒟i, who fail at ti, and a similar set of individuals 𝒞i with covariates zij, j ∈ 𝒞i, who are censored at ti. The observed event ℰij for the subject ij is a triple (ti, zij, cij), where c is a censoring indicator: c = 1 if failure; c = 0 if right censored. For any function A(t), let Ai = A(ti), ΔAi = |A(ti) − A(ti −0)|. A stepwise function H can be characterized by two vectors ΔH = (ΔH1,…,ΔHn)T and t = (t1,…,tn)T. With this notation, the likelihood of survival data under non-informative censoring takes the form

| (8) |

where Di is the number of failures that are associated with ti, and the function ϑ will be specified later for the class of non-linear transformation models (NTMs).

3. EM algorithm for a semiparametric model

For example, consider a PO model for the survival function G, given covariates z,

| (9) |

where θ is a predictor and H is some nonparametrically specified base-line cumulative hazard. The model is named after the PO property that for any two values of the predictor, θ1 and θ2, with corresponding survival functions Gi(t) = G(t|θi), i = 1, 2, the odds ratio

is a constant in t, where odds(a) = a/(1 − a).

This paper was inspired by the idea of representing a semiparametric model as a mixture (frailty) model, and to use the EM algorithm to fit it. With this idea in mind, consider a PH mixture model

| (10) |

where F = exp(−H) is the base-line survival function corresponding to H, and U = U(β, z) is used to indicate that the distribution of random variable U depends on covariates and regression coefficients. This model can be considered a compact expression for a family of so-called PH frailty models, or PH models with random effects considered by Hougaard (1984), Klein (1992), Nielsen et al. (1992), Wassel and Moeschberger (1993), Clayton and Cuzick (1985) and many others, for different distributions of U, possibly dependent on covariates.

To construct the EM algorithm for a particular model (PO in the example), we represent it as a PH mixture model (inverse transform), and then follow the usual logic of the EM algorithm construction for frailty models, as for example in Nielsen et al. (1992).

3.1. Inverse transform

We note that ℒ(s|·) = E[exp{−s U(·)}] is the Laplace transform of U(·), and that for the PH mixture model (10)

From the latter equation and equation (9), we conclude that U for the PO model represents exponential regression, as ℒ(s|·) = θ(·)/{θ(·) + s} is the Laplace transform of an exponential distribution with mean θ−1.

3.2. Complete-data likelihood

With the PH mixture model (10), pretend that U is known for each subject ij, continuing the notation of Section 2. The complete-data likelihood under non-informative right censoring corresponds to the PH model with predictors Uij

| (11) |

3.3. E-step

Since the complete-data likelihood (11) is linear in missing data Uij, the E-step reduces to imputation of each U by the corresponding Û, the conditional expectation of U, given the observed event. Using the exponential distribution of U with mean θ−1, after a little algebra, we obtain

| (12) |

where h is the hazard function corresponding to H. A similar derivation of Û for a gamma frailty model can be found, for example, in Parner (1998).

3.4. M-step

Maximization of the complete-data likelihood (11) with respect to H, and with Uij imputed by Ûij, results in the Nelson–Aalen estimator

where ℛm = {ij : j ∈ 𝒟i ∪ 𝒞i, i ≥ m} is the set of subjects at risk just before tm.

3.5. EM procedure for the proportional odds model

Finally, for the PO model we have the iterative EM procedure

| (13) |

where k counts iterations.

3.6. Alternative derivation of procedure (13)

It is intriguing that we can formally derive procedure (13) as an immediate corollary of the argument presented in Section 1. Indeed, using equation (9), we write the likelihood for the PO model as

| (14) |

On differentiating equation (14) with respect to ΔHm, and assigning the iteration index k as in equations (1)–(3), we obtain expression (13).

This observation deserves discussion. The EM derivation presented above for the PO model is model specific and its feasibility depends on the success and simplicity of the inverse Laplace transformand the integrals that are evaluated at the E-step (12). The PH mixture representation of a semiparametric model may not exist, in which case the EM derivation ultimately fails. Necessary and sufficient conditions for this representation to exist are a corollary of the Bernstein theorem (Feller, 1971): the survival function must be a completely monotonic function of H. A function ψ(H) is called completely monotonic if all its derivatives ψ(i) exist, i = 1, 2,…, and (−1)i ψ(i)(H) ≥ 0, H > 0. In particular, the survival function (10) of the PH mixture model is an infinitely differentiable function of F. The alternative derivation of procedure (13) bypasses all the above-mentioned difficulty and formally works for any model in a straightforward and simple fashion. This raises a series of questions. Does the procedure of Section 1 work for any model? What is its relationship to the EM algorithm? Does it inherit the monotonicity, stability and convergence of the EM algorithm?

A clue to generalizing the EM algorithm described above is the observation that the derivation of the E-step (12) does not require knowledge of the distribution of U. Indeed, denote by γ(x) the moment-generating function of U (other arguments are omitted), so that γ(x) = E(xU) = ℒ{−log(x)}. Observe that the first equation in expression (12) can be written as

| (15) |

where γ(c) denotes the derivative of order c; γ(0) ≔ γ, c = 0, 1. Expression (15) represents a variation on the topic of the derivation of moments from the transform of a distribution. The consequence of equation (15) is a straightforward and general specification of the E-step for any mixture model formulated in terms of the moment-generating function. In fact, it is even more general as will be shown in what follows. To elaborate further on the issues raised above, we need to make the few theoretical observations considered in the next section.

4. General concepts

4.1. Construction using the difference of two concave functions

For studying procedure (3), the following MM construction (Lange et al., 2000) is useful. Let l(x) = B(x) − A(x), where A and B are differentiable concave functions. The iterative maximization procedure,

| (16) |

where ∇A(x) = ∂A/∂x is the gradient of A, represents an MM algorithm, as follows from convexity arguments. The surrogate objective function for the above construction has the form

| (17) |

4.2. EM construction

Let ℰ be the observed event, and U be a random variable (vector) representing missing data. The EM algorithm is a method to maximize the log-likelihood function l(x) = log{L(x)} of the form

| (18) |

where L0(x|U) is the conditional likelihood, given missing data (the likelihood constructed to estimate x as if U were a covariate), and x does not include parameters of the distribution of U. To facilitate ‘distribution-free’ generalizations, we intentionally avoid explicit expressions involving the distribution of U, and we use the conditional rather than the joint likelihood of U and ℰ to represent the EM procedure. In this construction, unknown parameters entering the distribution of U do not participate in the procedure, and the maximization is considered with respect to parameters x only. For any function f(U), the conditional expectation of f(U), given observed event ℰ and x, is represented as

This suggests the following explicit functional notation for the conditional expectation operator

| (19) |

for any functions f and g of U, where U is a random variable, and E(g) is the probability of the condition. A standard Jensen inequality argument shows that, with this notation,

| (20) |

is a surrogate objective function for the target function l(x). The operation of finding Û such that l0(x|Û) = E{l0(x|U)|L0(y|U)} is referred to as missing data imputation. If imputation is easy (E-step), maximization of Q with respect to x reduces to that of l0(x|Û), a complete-data problem.

To prove that any converging sequence x(k) → x*, designed according to equation (4), gives us a stationary point in the limit, we follow the argument at x = y = x*

| (21) |

which implies that the score equation ∂L(x)/∂x = 0 is satisfied in the limit.

The EM algorithm proceeds by iterations , where at the kth iteration this equation is solved for x(k+1).

4.3. Quasi-EM construction

Let us revisit the EM construction under the question what properties of the E-operator did we actually use in the derivation of Section 4.2? They are conditional expectation performed according to expression (19), linearity and the Jensen inequality in equation (20) and interchangeability of E and ∂/∂x in equation (21). Operators satisfying these properties will be called QE. As soon as a QE operator satisfying these requirements and such that L(x) = QE{L0(x|U)} is constructed, the likelihood function L can be maximized by an MM algorithm with the surrogate objective function as in equation (20) with E replaced by QE. The rationale behind this substitution is that the evaluation of E requires that U be a random variable, and that we know its distribution, whereas that of QE does not.

Formally, let ℬ be some set of basis functions (including the function f(u) ≡ 1), and 𝒮 be a set of all admissible functions stretched on ℬ using linear combinations. In other words 𝒮 consists of functions f such that f = Σiaifi for any sequence (finite or infinite) of functions {fi}, fi ∈ ℬ, and real numbers {ai}.

Define QE as a linear functional mapping 𝒮 to real numbers such that

QE(1) ≔ 1, where 1 means a function that is equivalent to 1 (normalization),

for any f = Σiaifi ∈ 𝒮, fi ∈ ℬ, QE(f) ≔ Σiai QE(fi) (linearity),

- for any function f(u, a) ∈ 𝒮, and such that ∂f(u, a)/∂a ∈ 𝒮,

(interchangeability), - as in expression (19), for any functions g and fg ∈ 𝒮 and such that QE(g) ≠ 0,

(conditional QE) and(22) - given the functions g, fg and g log(f) ∈ 𝒮,

(Jensen inequality).(23)

Let us consider the QE requirements more closely. We start by postulating one basis function f0(U, a) and the value of QE on that basis function QE(f0) ≔ γ(a), where γ is some function of a. Dependent on how many times we are allowed to differentiate under the QE symbol, the derivatives

must also be included in the set of admissible functions 𝒮, so that derivatives of QE(f0) can be defined. Moreover, QE on f(i), i = 1, 2,…, are automatically defined by the interchangeability property through derivatives of γ, as QE{f(i)} = γ(i). As we can see, QE construction is cloned from f0 and γ. Mathematical expectation E{f0(U, a)} is an integral transform of U, where a is an argument of the transform. Dependent on the choice of the function f0, QE mimics differential properties of the corresponding transform, whereas the function γ is not necessarily an integral transform.

To study procedure (3), a QE construction based on moment-generating functions is useful. This construction is cloned from the basis function f0(U, a) = aU, 0 ≤ a ≤ 1, U ≥ 0. For a mathematical expectation, E(f0) = γ is the moment-generating function of U. If we want to be able to differentiate twice under the QE symbol, we need two more basis functions UaU and U2aU, so that

| (24) |

by the interchangeability property with two derivatives of γ allowed. We now derive the Jensen inequality for this construction. First, noting equation (15), introduce the conditional QE

| (25) |

where we used expressions (22) and (24) to obtain the right-hand part of expression (25), c = 0, 1. The function Θ is a surrogate of conditional expectation of U, given observed data, and it serves as an imputation operator in the QEM construction. Next, let ℬk be a family of functions ℬk = {UkaU, 0 ≤ a ≤ 1, U ≥ 0}. Then by using the fact that, for f = f(U, a) = aU and g = g(U, b) = UcbU,

the following can be proved.

Theorem 1 (Jensen inequality for QE). Let Θ(x|c) as defined by expression (25) be a non-decreasing function of x, c = 0, 1. Then inequality (23) holds true for any f ∈ ℬ0 and g ∈ ℬ0 ∪ ℬ1.

It is interesting that mathematical expectation satisfies the assumption of non-decreasing Θ, which we call the convexity assumption for reasons that will become clear later as we discuss an application of the above theory to semiparametric likelihood.

Theorem 2 (convexity for mathematical expectation). Let γ be a function defined by using the mathematical expectation operator E as γ(x) ≔ E(xU), where U is a non-negative random variable. Then Θ(x|c) as defined in expression (25) is non-decreasing in x for any c = 0, 1.

Proof. The Cauchy–Schwartz inequality with functions ξ(U, x) = U1+c/2xU/2 and ζ(U, x) = Uc/2xU/2 can be used to show that Θ′(x|c) ≥ 0.

5. Non-linear transformation models

5.1. Definition

To study procedure (1)–(3) in more detail using the developments of Section 4, we need to specify a certain structure of the likelihood function to be optimized. To do this, let us confine ourselves to a large, yet specific, class of semiparametric survival models. Consider a parametric regression model with support on [0, 1]. Let γ(x|β, z), x ∈ [0, 1], be a parametrically specified distribution function in x, conditional on covariates z. We require that γ be twice differentiable with respect to x and regression coefficients β.

We can nowdefine a semiparametrically specified survival function G(t|β, z), given covariates, as

| (26) |

where the base-line survival function F is specified nonparametrically. The class of models (26) will be called NTMs, to give it a name. Functions like γ will be called NTM-generating functions. An NTM is obtained by plugging a nonparametrically specified survival function F into a parametric distribution function γ with the support compatible with the range of F. One important subclass of NTMs is the family of PH mixture models (10), for which γ is a moment-generating function of U,

To represent a semiparametric model in the NTM form, we need to express its survival function G as a function γ of a base-line survival function F (this representation is not unique and is not always possible). For example, from equation (9) we obtain the PO model in the form G(t|·) = θ(·)/[θ(·) − log{F(t)}], which gives

| (27) |

The class of NTMs includes the class of linear transformation models (Cheng et al., 1995, 1997). It is easy to show that a linear transformation model can be represented as γ(x|β, z) = p[log{θ(β, z)} + q(x)], where p is a parametrically specified tail function, q is an inverse tail function and θ is a predictor (it is convenient to specify q as the inverse of p; then θ = 1 corresponds to the base-line γ(x|·) = x).

5.2. Algorithm

In the survival analysis formulation, under non-informative right censoring, the contribution of observations sampled from an NTM (26) to the likelihood are log(−dG) and log(G), for a failure and censored observation respectively. We have

where γ′(x|·) = ∂γ(x|·)/∂x, differentials are taken with respect to t under a continuous model and we recall that F = exp(−H). We may now rewrite the likelihood (8) for an NTM as

| (28) |

where

and ΔHi is substituted for dH(ti). It is easy to check that a negative derivative of log{ϑ(Fi|·, c)} with respect to ΔHm is represented by Θ(Fi|·, c), if m ≤ i, and is equal to 0 otherwise, where the function Θ is defined by expression (25). Therefore, the construction (1)–(3) of Section 1 leads us to the iteration scheme

| (29) |

This procedure is a generalization of procedure (13) to the NTM family. For the PO model, substituting equation (27) into expression (25), we obtain

| (30) |

It is clear that, with Θ given by equation (30), the general procedure (29) turns into the procedure (13) derived for the PO model in Section 3.

5.3. Quasi-expectation form of a non-linear transformation model

We now make use of the QE theory of Section 4.3 to provide a link between NTMs and the QE operator. Equations (24) summarizing second-order differential properties of the QE operator will be the main instrument of this section.

First, let us synchronize the development of Section 4.3 and the definition of NTM (26) in Section 5.1 by assuming that the function γ that is used in both sections is the same function. In fact, we already used this synchronization when we noticed in the previous section that Θ in equation (29) and in expression (25) is the same function. Now, from the first line of expression (24), with F(t) instead of a, we obtain the QE form of the NT model

| (31) |

where the subscript β, z to the QE operator indicates that QE is defined by using the function γ(x|β, z). Equation (31) is a postulate in the definition of the QE operator, and its link to the NTMs is established as we assume that QE is defined by using an NTM-generating function γ.

Now, consider the likelihood function l (28). Given an observation (t, z, c), c = 0, 1, its contribution υ{F(t)|β, z, c} to the likelihood L = exp(l) can be written as

where the last equation follows from the first two lines of expression (24) and linearity of QE. As a result, the likelihood of an NTM mimics that of a mixture model

Consider the hazard function λ(t|z), corresponding to the survival function G(t|z). Differentiating the survival function (26), and using expression (24), we have

where h is the hazard function corresponding to F. Applying the definition of conditional QE (22) to this expression, and using expression (25), we obtain

This is a generalization of the fact that the population hazard function at time t in a heterogeneous population is represented as a conditional average, given survival up to time t.

Bringing these derivations together with expression (25), we have the following theorem.

Theorem 3 (QEM construction). Consider a survival analysis problem for an NTM generated by the function γ(x|β, z), with fixed covariates. With the QE operator as defined in Section 4.3, and using the same NTM-generating function γ in its definition, the following representations are valid: survival function,

hazard function,

where λ and h are hazards functions corresponding to G and F respectively; likelihood function,

imputation operator,

where Û denotes U, imputed by using the conditional QE operator.

6. Summary and justification of the procedure

Let us now go back to the EM algorithm of Section 3 and see how the results obtained since then allow us to streamline and justify our algorithm construction, using the PO model as an example. In summary, we now have the following procedure.

- Obtain the NTM-generating function, representing the model survival function as a function of F. For the PO model (9) G(t|·) = θ(·)[θ(·) − log{F(t)}]−1, we have equation (27),

-

Obtain the imputation operator (25)For the PO model, this results in equation (30),

Check that Θ(x|·) is a non-decreasing function of x (see the justification below).

- Obtain the profile likelihood by iterations (29),

Maximize the profile likelihood with respect to β as in Section 2.

For the PH mixture model, QE is equivalent to E (compare equations (31) and (10)), which makes Θ the conditional expectation of U, given observed data (compare expressions (25) and (15)). In this case, the above procedure is an EM algorithm.

Justification of this procedure works through the proof of monotonicity (i.e. the likelihood is improved at each step) under the following assumption.

6.1. Convexity assumption

Consider an NTM with the NTM-generating function γ. Assume that

| (32) |

We have two ways to show monotonicity.

Observe that, under assumption (32), the likelihood (28), as a function of the vector ΔH, represents a difference between two concave functions Σi Di log(ΔHi) and −Σij log{ϑ(Fi|·)}. This follows from the fact that Θ is the negative derivative of log(ϑ) with respect to H. Therefore, monotonicity follows from the results of Section 4.1.

Observe that the likelihood is represented as a QE L = Π QE(ΔHcUcFU) (Section 5.3), and that under assumption (32) the QE operator satisfies the Jensen inequality (Section 4.3). Therefore, the EM proof of monotonicity works.

Convergence of the algorithm under monotonicity follows from the results of Lange et al. (2000) and Wu (1983) under fairly general conditions.

7. Real data example

As an example we use data from the National Cancer Institute’s ‘Surveillance epidemiology and end results’ programme. Using the publicly available database for the programme, 11621 cases of primary prostate cancer diagnosed in the state of Utah between 1988 and 1999 were identified. The following selection criteria were applied to a total of 19819 Utah cases registered in the database: valid positive survival time, valid stage of the disease and age 18 years or more. Prostate cancer specific survival was analysed by the stage of the disease (localized or regional versus distant). For the definition of stages as well as for other details of the data we refer the reader to the documentation for the programme at http://seer.cancer.gov/.

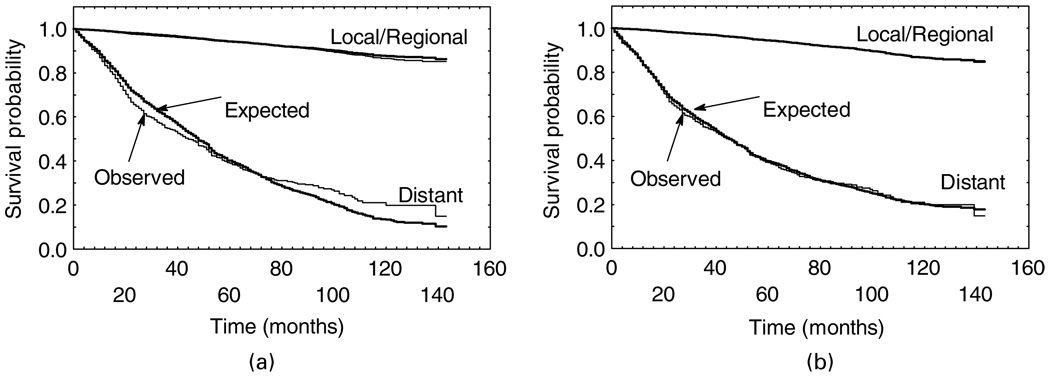

The PH and the PO models with z representing two groups corresponding to the localized or regional stage (10765 cases) and distant stage (856 cases) respectively were fitted by using the profile MM algorithm. The log-odds-ratio was estimated as β̂ = −3:251 with 95% asymptotic confidence interval (−3:416, −3:086).A likelihood ratio test showed that the difference between groups is highly significant (p < 0:0001). Observed (Kaplan–Meier) and expected model-based estimates of the survival functions by group are shown in Fig. 1. The PO model showed a superior fit to the data.

Fig. 1.

Observed (————) versus expected (————) plots corresponding to (a) the PH and (b) the PO model fitted to the prostate cancer data

On the basis of the PO model, four different approaches to model fitting are compared.

MM or QEM: the method is described in Section 6. Maximization of the profile likelihood is performed by the Powell method (Press et al., 1994).

- EM: the EM method is similar to the EM algorithm as used to fit frailty models with predictor θ(β, z). Using the QEM formulation, the procedure is as follows.

- With the current iteration β(k) and F(k) compute for each subject ij.

- Maximize the partial likelihood for a PH model with (imputed) predictor with respect to β. Set β(k+1) at the solution.

- Update the function F by using the Nelson–Aalen estimator for the PH model fitted at the previous step. Denote the solution by F(k+1).

- Set k = k + 1. Continue iterations until convergence.

Parametric: in the parametric method, the function F is specified as a Weibull survival function. The parametric regression model is fitted by using the Powell method applied to all model parameters.

Full model (Powell): apply the Powell method to maximize the log-likelihood of the full semiparametric model with respect to the joint vector of regression coefficients β and the base-line hazard ΔH.

Computation of θ, Θ or γ is counted as one operation. For a given tolerance ε, the stopping rule is defined as l(k+1) − l(k) < ε. If the method required solving a nested numerical problem (MM or EM), the tolerance for the nested problem is specified as ε/100.

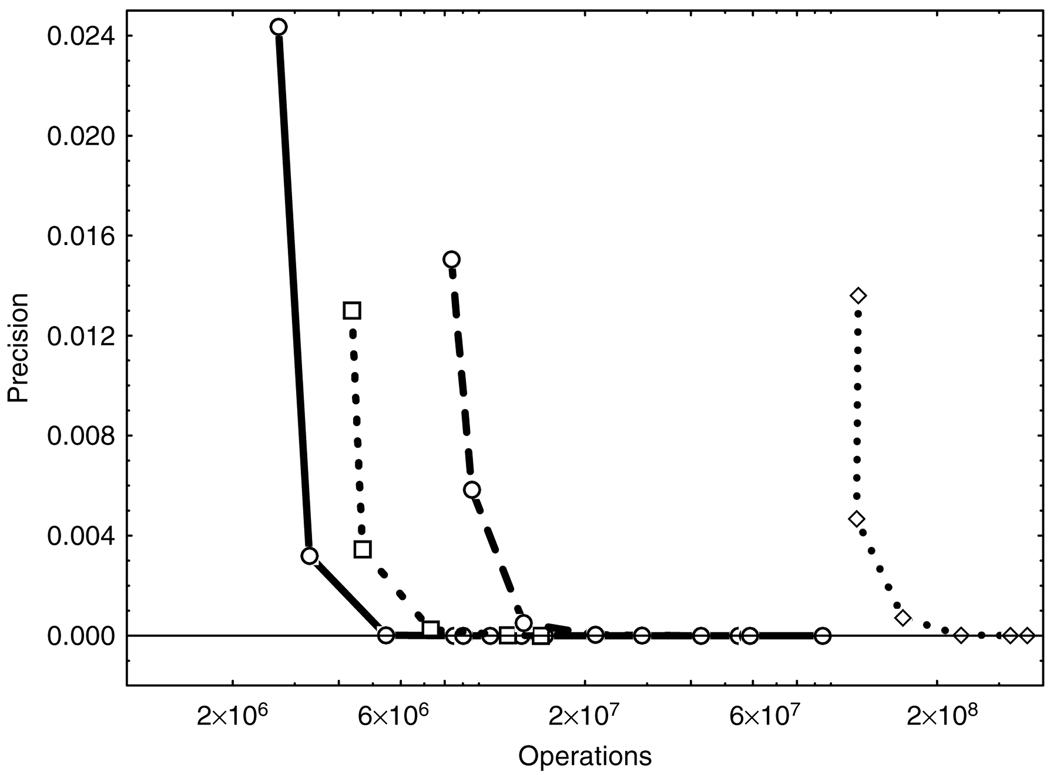

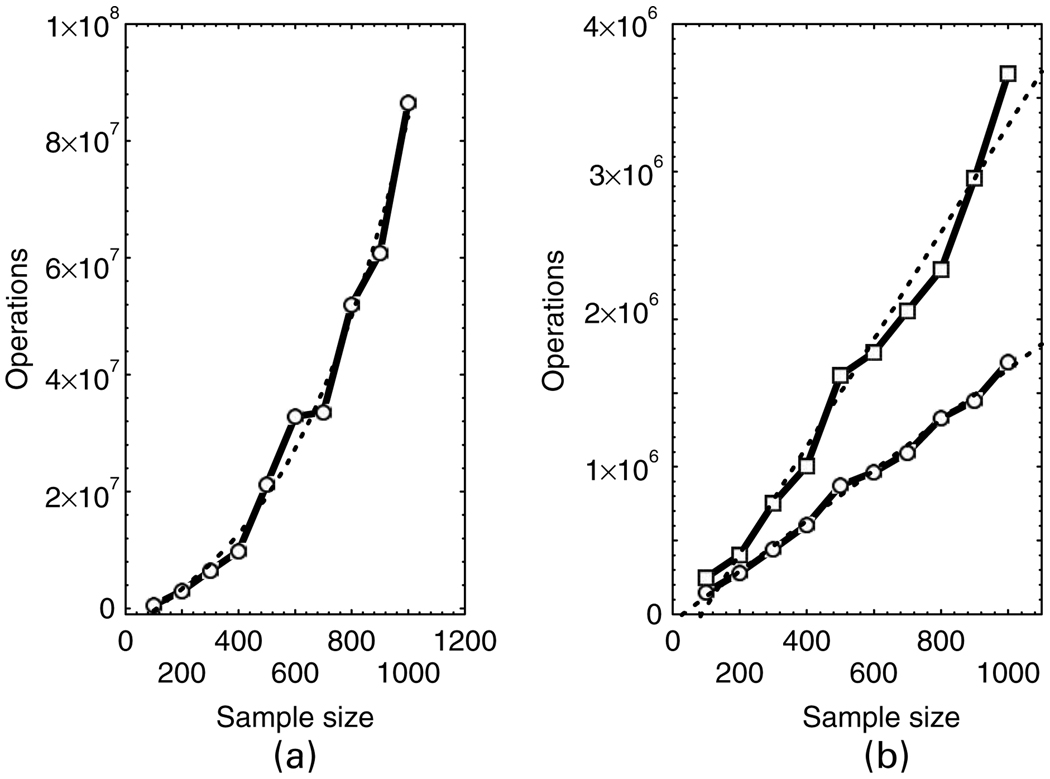

First, we evaluate the precision by operations characteristics of the above numerical methods. The precision is measured by l* − l(k), where the exact solution l* was approximated by the solution obtained for ε = 10−20. Shown in Fig. 2 are the precision by operations curves for the four methods, obtained by varying ε. It is clear from Fig. 2 that the profile MM algorithm outperforms the other approaches in the number of operations that are required to reach a given precision. The profile MM method is closely followed by the frailty EM algorithm. Fitting the full semiparametric model by the Powell method shows the worst performance. The advantage of the EM-like approaches compared with methods that invoke the function F into a conventional maximization is explained by the utilization of a closed form solution for F in the form of the Nelson–Aalen–Breslow estimator. For the same reason, the MM and the EM procedures show a linear trend with increasing dimension, given fixed precision as shown in Fig. 3. To obtain Fig. 3, samples of size 100–1000 were generated from the parametric (Weibull) PO model fitted to the same data. The MM, EM and full model (Powell) procedures were applied to each such sample. The tolerance ε = 10−3 was used for the MM algorithm. The tolerance for the other two methods was tuned to give a likelihood that was as close as possible yet smaller than the likelihood achieved by the MM method (to keep the comparison conservative). The profile MM algorithm shows the most favourable behaviour with increasing dimension, followed by the EM procedure. It comes as no surprise that the full model (Powell) method shows the greatest complexity.

Fig. 2.

Precision of likelihood maximization by the number of operations (precision is measured as the difference between the limiting value of the likelihood as operations tend to ∞ and the maximal likelihood value achieved under a stopping rule; curves closer to the y-axis correspond to more efficient numerical methods): ————, MM; -------, EM;– – –, parametric; ·······, full model

Fig. 3.

Numerical efficiency by sample size for (a) the full model (·······, polynomial fit) and (b) the EM (□) and MM (○) methods (·······, linear fits): ————, number of operations needed to achieve a fixed precision by sample size (each point corresponds to a sample generated from a parametric PO model with a Weibull base-line survival function with parameters specified by using the model fit to data described in Section 7)

8. Conclusion

We presented an application of the general MM principle to a class of semiparametric models. Three methods of specifying the surrogate objective function were demonstrated. In particular, we clarified the connection between the likelihood-based MM principle and the imputation-based self-consistency principle that is used in EM algorithms for semiparametric models. To study this connection, we built an EM-like world behind the MM algorithm by using the QEM construction. The approaches were illustrated by using continuous NTMs in a survival analysis context. This is just one example of how these constructions can be used. Discrete survival models, cure models, multivariate semiparametric models, models with time-dependent covariates and many other statistical models can be treated by application of the principles presented in this paper. Construction of surrogate objective functions is not straightforward. Having an option to work a particular problem from both ends (likelihood or convexity versus imputation or self-consistency) may increase the chance of finding efficient and general procedures that are applicable to large classes of models.

Acknowledgements

The author thanks Dr Ken Boucher, Dr Marvin Zelen, the referees and the Joint Editor for many helpful comments. This research is partially supported by National Cancer Institute grant 1U01 CA97414-01 (Cancer Intervention and Surveillance Modeling Network), Department of Defence grant DAMD17-03-1-0034 and National Institute of Ageing grant 1RO1 AG14650-01A2.

References

- Andersen P, Borgan Ø, Gill R, Keiding N. Statistical Models based on Counting Processes. New York: Springer; 1993. [Google Scholar]

- Cheng S, Wei L, Ying Z. Analysis of transformation models with censored data. Biometrika. 1995;82:835–845. [Google Scholar]

- Cheng S, Wei L, Ying Z. Predicting survival probabilities with semiparametric transformation models. J. Am. Statist. Ass. 1997;92:227–235. [Google Scholar]

- Clayton D, Cuzick J. Multivariate generalizations of the proportional hazards model (with discussion) J. R. Statist. Soc. A. 1985;148:82–117. [Google Scholar]

- Cox D. Regression models and life-tables (with discussion) J. R. Statist. Soc. B. 1972;34:187–220. [Google Scholar]

- Feller W. An Introduction to Probability Theory and Its Applications. New York: Wiley; 1971. [Google Scholar]

- Fleming T, Lin D. Survival analysis in clinical trials: past developments and future directions. Biometrics. 2000;56:971–983. doi: 10.1111/j.0006-341x.2000.0971.x. [DOI] [PubMed] [Google Scholar]

- Hougaard P. Life table methods for heterogeneous populations: distributions describing the heterogeneity. Biometrika. 1984;71:75–83. [Google Scholar]

- Klein J. Semiparametric estimation of random effects using the Cox model based on the EM algorithm. Biometrics. 1992;48:795–806. [PubMed] [Google Scholar]

- Lange K, Hunter D, Yang I. Optimization transfer using surrogate objective functions (with discussion) J. Comput. Graph. Statist. 2000;9:1–59. [Google Scholar]

- Murphy S. Consistency in a proportional hazards model incorporating a random effect. Ann. Statist. 1994;22:712–731. [Google Scholar]

- Murphy S. Asymptotic theory for the frailty model. Ann. Statist. 1995;23:182–198. [Google Scholar]

- Murphy S. On profile likelihood. J. Am. Statist. Ass. 2000;95:449–485. [Google Scholar]

- Murphy S, Rossini A, van der Vaart A. Maximum likelihood estimation in the proportional odds model. J. Am. Statist. Ass. 1997;92:968–976. [Google Scholar]

- Murphy S, van der Vaart A. Semiparametric likelihood ratio inference. Ann. Statist. 1997;25:1471–1509. [Google Scholar]

- Nielsen G, Gill R, Andersen P, Sorensen T. A counting process approach to maximum likelihood estimation in frailty models. Scand. J. Statist. 1992;19:25–43. [Google Scholar]

- Oakes D. Bivariate survival models induced by frailties. J. Am. Statist. Ass. 1989;84:487–493. [Google Scholar]

- Parner E. Asymptotic theory for the correlated gamma frailty model. Ann. Statist. 1998;26:183–214. [Google Scholar]

- Press W, Flannery B, Teukolsky S, Vetterling W. Numerical Recipes in Pascal: the Art of Scientific Computing. New York: Cambridge University Press; 1994. [Google Scholar]

- van der Vaart A. Asymptotic Statistics. Cambridge: Cambridge University Press; 1998. [Google Scholar]

- Wassel J, Moeschberger M. A bivariate survival model with modified gamma frailty for assessing the impact of interventions. Statist. Med. 1993;12:241–248. doi: 10.1002/sim.4780120308. [DOI] [PubMed] [Google Scholar]

- Wu C. On the convergence properties of the EM algorithm. Ann. Statist. 1983;11:95–103. [Google Scholar]