Abstract

Objective

To improve identification of pertussis cases by developing a decision model that incorporates recent, local, population-level disease incidence.

Design

Retrospective cohort analysis of 443 infants tested for pertussis (2003–7).

Measurements

Three models (based on clinical data only, local disease incidence only, and a combination of clinical data and local disease incidence) to predict pertussis positivity were created with demographic, historical, physical exam, and state-wide pertussis data. Models were compared using sensitivity, specificity, area under the receiver-operating characteristics (ROC) curve (AUC), and related metrics.

Results

The model using only clinical data included cyanosis, cough for at least 1 week, and absence of fever, and was 89% sensitive (95% CI 79 to 99) and 27% specific (95% CI 22 to 32), with an area under the ROC curve of 0.80. The model using only local incidence data performed best when the proportion positive of pertussis cultures in the region exceeded 10% in the 8–14 days prior to the infant's associated visit, achieving 13% sensitivity, 53% specificity, and AUC 0.65. The combined model, built with patient-derived variables and local incidence data, included cyanosis, cough for at least 1 week, and the variable indicating that the proportion positive of pertussis cultures in the region exceeded 10% in the 8–14 days prior to the infant's associated visit. This model was 100% sensitive (p<0.04, 95% CI 92 to 100) and 38% specific (p<0.001, 95% CI 33 to 43), with AUC 0.82.

Conclusions

Incorporating recent, local population-level disease incidence improved the ability of a decision model to correctly identify infants with pertussis. Our findings support fostering bidirectional exchange between public health and clinical practice, and validate a method for integrating large-scale public health datasets with rich clinical data to improve decision-making and public health.

Keywords: Bordetella pertussis, decision modeling, public health surveillance

Introduction

Bordetella pertussis outbreaks can infect hundreds of people across all age groups, though the infection is most dangerous for young infants.1 2 3 Pertussis is difficult to diagnose, especially in its early stages, and definitive test results are not available for several days. Timely administration of antibiotics decreases transmissibility of the disease.4 Most patients with cough do not have pertussis, but a missed case of this contagious disease is likely to have important consequences for the patient, the patient's contacts, and public health.5 A patient's risk of exposure to infection varies by local disease burden,6 7 yet clinicians rarely have ready access to information about epidemiologic context8 (the recent regional incidence of an infectious disease) when making management decisions. The proliferation of real-time infectious disease surveillance systems9 10 and electronic laboratory reporting systems11 creates an opportunity to open a key communications channel between public health agencies and point-of-care providers. Currently there are no clinical decision-support systems that integrate public health incidence data into management algorithms in real time.12

Because of the temporal and geographic variability of pertussis outbreaks, the delay in diagnostic test results, and the personal and public health ramifications13 of incorrect management decisions at the point of care, pertussis is a prototypical disease for which real-time public health incidence data might inform, guide, and improve clinical decision-making. The purpose of this study is to quantify the value of recent, local disease incidence, derived from public health sources, in improving management of pertussis in the clinical setting.

Methods

Design, setting, and subjects

A retrospective review was conducted of charts for infants tested for pertussis by culture, presenting to the pediatric emergency department (ED) of a large urban tertiary care US hospital from 1 January 2003 to 31 December 2007. The ED volume exceeds 50 000 patients per year. The study received institutional review board approval.

Inclusion and exclusion criteria

Subjects included all infants tested for pertussis by culture from 2003 to 2007. If a patient had multiple pertussis cultures from 2003 to 2007, only the first test was included.

Case definition

An infant was defined as pertussis-positive or pertussis-negative based on culture result, which is widely regarded as the gold standard.14 15 Alternative tests such as PCR, serology, and direct fluorescent antibody (DFA) were not used in the case definition. Positive culture from a nasopharyngeal specimen is 100% specific for pertussis.4 16 Sensitivity, however, may be limited for several reasons, including the organism's fastidious nature, specimen collection technique, the timing of testing in the course of the illness, and prior or concurrent use of antibiotics.16 17 While PCR may have better sensitivity, we did not rely on it because there is no FDA-approved test kit available, because test characteristics vary widely by laboratory, and because outbreaks have recently been attributed to PCR false positives.4 18 PCR may, in fact, be oversensitive, and requires correlation with at least 2 weeks of cough and paroxysm, whoop, or post-tussive emesis,4 which are difficult to assess accurately in a retrospective review. Serology is not recommended for infants, and DFA is not widely available.19

Clinical data collection

Demographics, signs, and symptoms commonly associated with infant pertussis, local disease incidence data and outcomes were collected for each patient.4 20 21 Demographics included visit date, gender, and age (months). Signs and symptoms included cough duration (days), fever duration (days), history of apnea, post-tussive emesis, cyanosis, seizure, and contact with a person with known pertussis. If the record did not contain information about these symptoms, they were coded as absent. Cough descriptors like paroxysm, staccato, and “whoop” were not included because they could not be measured accurately by chart review. Outcome data including antibiotic use, hospitalization, and mortality were collected to help describe the study population.

In the initial review, the pertussis culture result for each patient was obtained from the hospital laboratory information system. Subsequently, the chart abstractor (an attending physician specializing in pediatric emergency medicine) responsible for collecting and entering patient data into structured forms was blinded to the culture result. The culture result was accessible only through a unique laboratory link to a PDF file from the external laboratory that performed the culture. These results were kept separate from the portion of the electronic chart used for the ED clinical encounter. The culture result was not linked to the clinical portion of the chart until after all clinical charts had been reviewed. Historical and physical exam features were based on the electronic medical record (EMR) generated during the ED encounter. Outcome data were collected from the ED EMR, inpatient discharge summaries, and outpatient follow-up visits. To assess inter-rater reliability, a second abstractor (also an attending physician specializing in pediatric emergency medicine) reviewed a random sample of 7% of charts.22 23 The two chart abstractors had over 90% agreement (range 91–97%), with κ24 ranging from 0.52 to 0.87 across all candidate predictors.

Local disease-incidence data collection

A query of the State Laboratory of the Massachusetts Department of Public Health database yielded 19 907 pertussis culture results from patients of all ages over the study period (2003–7). These data were obtained through a limited data sharing agreement. State data about cultures included date sent and culture result, but not demographics, clinical findings or outcomes.

Aggregate disease incidence variables were created for the number of pertussis cultures performed, the number of positives and the proportion positive at the state laboratory. Each of these variables was tabulated over a range of different timescales: 1–7, 8–14, 15–21, and 22–28 days prior to each visit date. Based on date of presentation, the corresponding public health incidence variables (number of cultures performed, positive, and proportion positive in the prior and cumulative 1–4 weeks) were assigned to each infant.
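
The per-window tabulation described above can be sketched as follows. This is a minimal illustration, not the study's code: the function name `incidence_features`, the `(date_sent, is_positive)` record layout, and the toy data are all assumptions, and only the four non-cumulative windows are shown.

```python
from datetime import date, timedelta

# Lag windows (days before the visit) named in the text.
WINDOWS = [(1, 7), (8, 14), (15, 21), (22, 28)]


def incidence_features(visit_date, state_cultures):
    """Tabulate state-laboratory cultures in each lag window before one visit.

    state_cultures: iterable of (date_sent, is_positive) pairs (hypothetical layout).
    Returns {(start, end): (n_performed, n_positive, proportion_positive)}.
    """
    features = {}
    for start, end in WINDOWS:
        earliest = visit_date - timedelta(days=end)
        latest = visit_date - timedelta(days=start)
        in_window = [pos for sent, pos in state_cultures if earliest <= sent <= latest]
        n_performed = len(in_window)
        n_positive = sum(in_window)
        # The proportion is undefined when no cultures were sent; None marks that case.
        proportion = n_positive / n_performed if n_performed else None
        features[(start, end)] = (n_performed, n_positive, proportion)
    return features


# Toy data, not study data: one culture per day for 28 days, every fourth one positive.
visit = date(2005, 3, 29)
cultures = [(visit - timedelta(days=d), d % 4 == 0) for d in range(1, 29)]
features = incidence_features(visit, cultures)
print(features[(8, 14)])
```

Each infant would receive one such set of values keyed by visit date; the cumulative 1–4 week variables could be built the same way by widening the window to start at day 1.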

Building the decision models

The same sequence of steps was used to build three decision models: (1) “clinical only” model—candidate predictors included only clinical data based on demographics, history, and physical exam; (2) “local disease incidence” model—candidate predictors included only public health incidence data; and (3) “contextualized” model—all clinical and public health predictors were considered.

Variable discretization and selection

Dichotomous variables (history of apnea, post-tussive emesis, cyanosis, and seizure) associated with positive pertussis culture in the clinical data set were identified. Significance of association was tested with a χ² goodness-of-fit test (p<0.05). Continuous variables (duration of cough, duration of fever, and local disease incidence variables) were dichotomized at categorical cut-offs considered by the clinical investigators to be clinically useful and easy to remember (eg, cough at least 1 week, presence of fever, and proportion positive past 21 days >0.10).
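
As a worked illustration of the univariate test, the sketch below computes a Pearson chi-square statistic for a 2×2 table using the cyanosis counts reported in table 1. It assumes the standard test of association with 1 degree of freedom and no continuity correction; the exact variant the authors ran is not specified, and the function name is hypothetical.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (1 df, no continuity correction) for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    denominator = (a + b) * (c + d) * (a + c) * (b + d)
    if denominator == 0:
        raise ValueError("a row or column total is zero")
    statistic = n * (a * d - b * c) ** 2 / denominator
    # For 1 degree of freedom the upper-tail probability is erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Counts from table 1: cyanosis in 24 of 38 culture-positive
# and 73 of 405 culture-negative infants.
statistic, p_value = chi_square_2x2(24, 38 - 24, 73, 405 - 73)
print(f"chi-square = {statistic:.1f}, p = {p_value:.2g}")
```

The resulting p value falls well below 0.0001, in line with the entry for history of cyanosis in table 1.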

In the multivariate analysis, candidate variables were entered into a forward stepwise logistic regression to identify independent predictors of infants testing pertussis positive. Significance cut-offs for entry into and removal from the logistic regression model were 0.25 and 0.10, respectively.

For the local disease incidence model, each variable was considered for entry into the model as an independent predictor. Because of the interdependence of these variables, it was established a priori that no more than one candidate incidence variable would be contained in the final model. Thresholds were defined for the numbers of tests performed, positive, and proportion positive over 1–4 weeks. For proportion positive, thresholds were tested from 0.01 to 0.20 in increments of 0.01.
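
The threshold search over proportion positive can be sketched as below. This is an illustration under stated assumptions: it scores each cut-off by the AUC of the single dichotomized predictor on its own (which, for one yes/no predictor, equals the mean of sensitivity and specificity), it uses toy data rather than study data, and the function names are hypothetical. It also assumes at least one positive and one negative outcome.

```python
def sensitivity_specificity(flags, outcomes):
    """Sensitivity and specificity of a yes/no predictor against culture results."""
    tp = sum(1 for f, y in zip(flags, outcomes) if f and y)
    fn = sum(1 for f, y in zip(flags, outcomes) if not f and y)
    tn = sum(1 for f, y in zip(flags, outcomes) if not f and not y)
    fp = sum(1 for f, y in zip(flags, outcomes) if f and not y)
    return tp / (tp + fn), tn / (tn + fp)


def scan_thresholds(proportions, outcomes):
    """Try cut-offs 0.01, 0.02, ..., 0.20 and return (best_cutoff, best_auc)."""
    best = None
    for step in range(1, 21):
        cutoff = step / 100
        flags = [p > cutoff for p in proportions]
        sens, spec = sensitivity_specificity(flags, outcomes)
        # A single dichotomous predictor gives an ROC curve with one interior
        # point, so its area is (sensitivity + specificity) / 2.
        auc = (sens + spec) / 2
        if best is None or auc > best[1]:
            best = (cutoff, auc)
    return best


# Toy data, not study data: proportion positive 8-14 days before each visit,
# paired with that infant's culture result.
proportions = [0.02, 0.04, 0.05, 0.06, 0.08, 0.09, 0.11, 0.12, 0.15, 0.18]
outcomes = [False, False, False, False, False, True, False, True, True, True]
best_cutoff, best_auc = scan_thresholds(proportions, outcomes)
print(best_cutoff, round(best_auc, 3))
```

Ties are resolved in favor of the lowest cut-off because only a strictly larger AUC replaces the current best; that tie-breaking rule is a choice made for this sketch.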

For the contextualized model, each clinical and local disease incidence variable was considered for entry into the multivariate model. Variables not included in the final clinical only or final local disease incidence model were still considered for inclusion into the contextualized model.

Validation

After selecting the best final model for each analysis (clinical, local disease incidence, or contextualized), a bootstrap validation was performed. Predictors that were selected in over 50% of the 1000 bootstrap samples were retained in the final model.25 26 27
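
The retention rule (keep predictors selected in more than 50% of bootstrap samples) can be sketched as follows. Note the substitution: in place of the stepwise logistic regression used in the study, this sketch uses a simple univariate chi-square screen as a stand-in selection step, purely to keep the example self-contained. All names and the toy cohort are hypothetical.

```python
import random


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square for [[a, b], [c, d]]; returns 0.0 if any margin is empty."""
    denominator = (a + b) * (c + d) * (a + c) * (b + d)
    if denominator == 0:
        return 0.0
    return (a + b + c + d) * (a * d - b * c) ** 2 / denominator


def select_predictors(rows, names, critical_value=3.841):
    """Stand-in selection: keep predictors whose chi-square exceeds the 1-df 5% critical value."""
    selected = []
    for name in names:
        a = sum(1 for r in rows if r["positive"] and r[name])
        b = sum(1 for r in rows if r["positive"] and not r[name])
        c = sum(1 for r in rows if not r["positive"] and r[name])
        d = sum(1 for r in rows if not r["positive"] and not r[name])
        if chi_square_2x2(a, b, c, d) > critical_value:
            selected.append(name)
    return selected


def bootstrap_selection_frequency(rows, names, n_samples=1000, seed=0):
    """Fraction of bootstrap resamples in which each predictor is selected."""
    rng = random.Random(seed)
    counts = {name: 0 for name in names}
    for _ in range(n_samples):
        resample = rng.choices(rows, k=len(rows))  # sample with replacement
        for name in select_predictors(resample, names):
            counts[name] += 1
    return {name: counts[name] / n_samples for name in names}


# Toy cohort, not study data: 20 positives and 180 negatives.
rows = []
for i in range(200):
    positive = i < 20
    rows.append({
        "positive": positive,
        "cyanosis": (i < 16) if positive else (i % 6 == 0),  # strongly associated
        "noise": i % 2 == 0,  # unrelated to the outcome by construction
    })

frequency = bootstrap_selection_frequency(rows, ["cyanosis", "noise"])
retained = [name for name, f in frequency.items() if f > 0.5]
print(frequency, retained)
```

In an actual replication the body of `select_predictors` would be replaced by the full model-building procedure, rerun from scratch on every resample.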

Measurement of model performance

Sensitivity, specificity, positive and negative predictive values, area under the receiver-operating characteristics (ROC) curve (AUC), and percent correct classification were used to compare performance. The best model was defined as that with the greatest specificity among those with highest sensitivity, in order to minimize missed pertussis cases, and also minimize misclassification of those without pertussis.
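
For reference, these measures and the stated definition of the "best" model can be written out as in the sketch below. The counts are illustrative only and are not taken from the study; the function names are hypothetical.

```python
def performance(tp, fp, fn, tn):
    """Performance measures for a yes/no model from confusion-matrix counts."""
    total = tp + fp + fn + tn
    return {
        "sensitivity": tp / (tp + fn),  # positives correctly flagged
        "specificity": tn / (tn + fp),  # negatives correctly cleared
        "ppv": tp / (tp + fp),          # flagged infants who are truly positive
        "npv": tn / (tn + fn),          # cleared infants who are truly negative
        "percent_correct": 100 * (tp + tn) / total,
    }


def better_model(first, second):
    """Pick by highest sensitivity, then by greatest specificity."""
    return max(first, second, key=lambda m: (m["sensitivity"], m["specificity"]))


# Illustrative counts only, not taken from the study.
model_a = performance(tp=30, fp=50, fn=10, tn=110)
model_b = performance(tp=40, fp=80, fn=0, tn=80)
print(better_model(model_a, model_b) is model_b)
```

Ordering by sensitivity first reflects the priority on not missing pertussis cases; specificity only breaks ties among equally sensitive models.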

Comparing clinician performance with decision models

Clinicians' actual performance was compared with the clinical, local disease incidence, and contextualized models by measuring correct classification. Clinician performance of correct classification was judged by utilization or omission of antibiotics in the clinical encounter. The clinical actions taken, as determined by chart review, were compared with what would have been recommended based on the three decision models generated.

Results

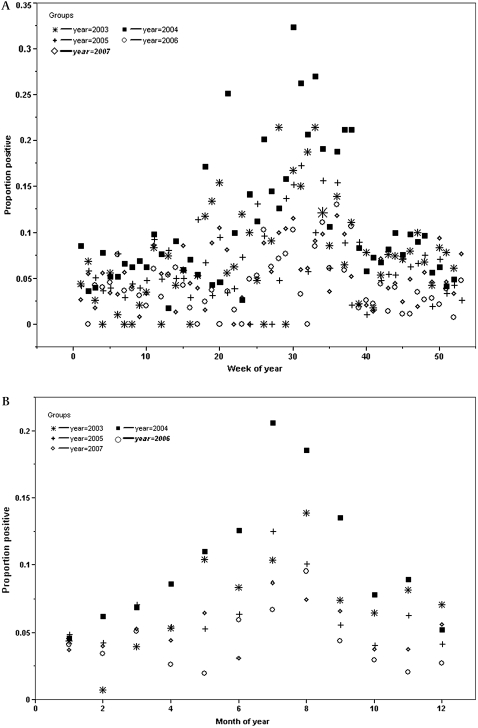

Four hundred and forty-three infants had a pertussis culture sent from 2003 to 2007, and 38 (8.4%) tested positive. Nineteen thousand nine hundred and seven cultures were performed at the State Laboratory Institute of the Massachusetts Department of Public Health during the study; 1103 (5.5%) tested positive. For these 19 907 cultures, the weekly proportion positive ranged from 0 to 32% (mean 6.8%, median 5.6%, interquartile range (IQR) 3.3 to 8.8%). A mean of 4.2 cultures tested positive each week (median 4, range 0 to 20, IQR 2 to 6). Weekly and monthly (figure 1A,B) proportion positive at the state laboratory demonstrate that the timing, height, width, and total number of pertussis peaks vary annually.

Figure 1.

(A) Weekly proportion of positive pertussis cultures, 2003–7. (B) Monthly proportion of positive pertussis cultures, 2003–7. Both panels demonstrate that the number, timing, height, and duration of pertussis peaks vary annually. While the graphs suggest some seasonality, they also show that pertussis varies substantially from year to year.

Development of “clinical only” decision model

Univariate analysis

Infants testing positive for pertussis were younger, more likely to have a history of apnea or cyanosis, or cough for at least 1 week and were less likely to have fever than those who tested negative (table 1). There were no significant differences between those testing positive and negative for gender, history of post-tussive emesis or seizure, or exposure to a contact with known pertussis.

Table 1.

Subject characteristics by culture result

| Variable | Pertussis culture* negative (n=405), N (%) | Pertussis culture* positive (n=38), N (%) | p Value† |

| Male gender | 216 (53%) | 19 (50%) | 0.40 |

| Mean age (months) (median/IQR) | 3.4 (3, 1 to 5) | 2.3 (2, 1 to 3) | 0.02 |

| Mean days of cough (median/IQR) | 8.8 (4, 1 to 10) | 9.6 (7, 5 to 14) | 0.71 |

| Cough ≥1 week | 147 (36%) | 26 (68%) | 0.002 |

| History of apnea | 72 (17%) | 13 (34%) | 0.04 |

| Post-tussive emesis | 85 (21%) | 12 (32%) | 0.22 |

| History of cyanosis | 73 (18%) | 24 (63%) | <0.0001 |

| History of fever | 86 (21%) | 1 (2.6%) | 0.019 |

| History of seizure | 4 (1.0%) | 0 (0%) | 0.38 |

| Hospitalized | 244 (59%) | 31 (82%) | 0.04 |

| Died | 6 (1.5%) | 0 (0%) | 0.88 |

| Exposure to pertussis | 12 (2.9%) | 2 (5.3%) | 0.42 |

| Antibiotics | 226 (55%) | 33 (87%) | 0.0009 |

| Public health incidence mean percent positive pertussis tests 2 weeks prior (median, IQR) | 5.6 (4.8, 2.9 to 7.7) | 9.9 (8.8, 5.8 to 14) | |

*Pertussis culture has 100% specificity and variable sensitivity.4 16

†χ² test.

IQR, interquartile range.

Multivariate analysis

History of cyanosis was the best predictor of pertussis, followed by history of cough for at least 1 week and absence of fever (table 2). Gender, history of post-tussive emesis or seizure, exposure to pertussis contact, and age in months were not included in the final clinical only model.

Table 2.

Multivariate logistic regression analysis (“clinical only” model)

| Predictor | OR | 95% CI | p Value |

| Cyanosis | 6.3 | 2.9 to 13.4 | <0.0001 |

| Cough ≥1 week | 3.1 | 1.4 to 7.0 | 0.0036 |

| Absence of fever | 6.5 | 1.3 to 118 | 0.019 |

Development of “local disease incidence” decision model

Selection of incidence variables

In the 1–4 weeks prior to each visit date, the mean numbers of cultures performed (102 to 110) and positive (4.7 to 4.9) and the mean proportion positive (0.058 to 0.060) showed little variation across intervals. Because means, medians, ranges, and SDs correlated closely for the 1–7, 8–14, 15–21, and 22–28 day intervals, metrics from a single time interval were chosen to represent “local disease incidence.” Because of the time required to definitively process a pertussis culture,4 the interval 8–14 days prior (2 weeks) was chosen as the best metric available for a clinical application. A range of thresholds was examined to determine the cut-off that would optimize specificity for maximum sensitivity. The area under the ROC curve for these variables was maximized when the proportion positive from 8 to 14 days prior exceeded 0.10 (p<0.0001).

Development of contextualized decision model

Among clinical variables, presence of cyanosis and cough for at least 1 week met criteria for selection into the final logistic regression model (table 3). The proportion positive 8–14 days prior to the test date also met criteria for selection into the final logistic regression model. Proportion positive thresholds ranging from 0.01 to 0.20 in increments of 0.01 were tested. In conjunction with the clinical variables above, the maximal area under the ROC curve occurred with a cut-off of 0.10. The best contextualized model contained three variables: history of cyanosis, cough for at least 1 week, and proportion positive >0.10 in the 8–14 days earlier. The incidence variable was a stronger predictor than any clinical factor considered, except for history of cyanosis.

Table 3.

Multivariate logistic regression analysis with local disease incidence variables included (contextualized model)

| Predictor | OR | 95% CI | p Value |

| Cyanosis | 7.0 | 3.3 to 16 | <0.0001 |

| Proportion positive prior 8–14 days >10% | 5.5 | 2.3 to 13 | 0.0001 |

| Cough ≥1 week | 3.3 | 1.5 to 7.9 | 0.0045 |

Validation

All predictors from the multivariate analyses were validated by the bootstrap method and retained in the final models. Cyanosis was selected in over 99%, public health pertussis proportion positive >0.10 in the 8–14 days prior in over 90%, and cough for at least 1 week in over 80% of the 1000 bootstrap samples.

Measurement of performance of decision models

The best model derived in the clinical only analysis (history of cyanosis, cough for at least 1 week, and absence of fever) generated an area under the ROC curve of 0.80, with 89% sensitivity and 27% specificity (table 4). Addition of the remaining candidate variables (gender, history of post-tussive emesis, history of apnea, history of seizure, exposure to known pertussis case, and age under 3 months) did not improve the area under the ROC curve. The best local disease incidence model achieved only 13% sensitivity and 53% specificity. In the best contextualized model (history of cyanosis, proportion positive 8–14 days earlier >0.10, and cough for at least 1 week), the area under the ROC curve was 0.82, with 100% sensitivity and 38% specificity.

Table 4.

Performance of decision models with 95% CIs

| Metric | Clinical only | Local disease incidence only | Contextualized |

| Sensitivity | 89 (79 to 99) | 13 | 100 (92 to 100) |

| Specificity | 27 (22 to 32) | 53 | 38 (33 to 43) |

| Positive predictive value | 12 (8 to 16) | 26 | 15 (11 to 19) |

| Negative predictive value | 96 (92 to 99.96) | 94 | 100 (98 to 100) |

| Area under receiver operator curve | 0.80 | 0.65 | 0.82 |

Sensitivity, specificity, positive and negative predictive value, and area under the receiver-operating characteristics curve for the best model in each category. The contextualized model was superior to the clinical model for all metrics, and was statistically better for sensitivity (p<0.04), specificity (p<0.001), and negative predictive value (p<0.02).

The contextualized model outperformed the clinical and local disease incidence models across all metrics (table 4). Compared with the clinical model, the contextualized model achieved superior sensitivity (100% vs 89%), specificity (38% vs 27%), positive predictive value (15% vs 12%), negative predictive value (100% vs 96%), and area under the ROC curve (0.82 vs 0.80).

Comparing clinician performance with decision models

The percent of positives treated with antibiotics and percent of negatives not treated with antibiotics were compared with hypothetical outcomes generated by the three decision models (table 5). The contextualized model missed no patients with pertussis. Among models that did not miss any cases (100% sensitivity), the contextualized model misclassified the fewest patients without pertussis (62%, 95% CI 57% to 67%), which would have resulted in the most judicious antibiotic use and correct categorization of infants. While clinicians did not do as well as the contextualized model, clinicians outperformed the clinical only model, misclassifying about the same proportion of patients with pertussis (13% vs 11%) but misclassifying fewer patients without the disease (60% vs 73%) (table 5).

Table 5.

Misclassification of best models versus actual clinician performance

| Model | Percent of patients pertussis positive but not treated (95% CI) | Percent of patients pertussis negative but treated (95% CI) |

| Clinician's actual treatment per electronic chart review | 13% (2 to 23) | 60% (55 to 65) |

| Best clinical only model | 11% (1 to 21) | 73% (69 to 78) |

| Best local disease incidence only model | 61% (45 to 76) | 15% (11 to 18) |

| Best contextualized model | 0% (0 to 8) | 62% (57 to 67) |
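
The text does not state how the intervals in table 5 were computed. As a hedged aside, one common way to bound a proportion when no events are observed is the exact (Clopper-Pearson) one-sided upper limit, sketched below for 0 missed cases among the 38 culture-positive infants. The function name is hypothetical, and the choice of method is an assumption rather than the authors' documented approach.

```python
def exact_upper_bound_zero_events(n, confidence=0.95):
    """One-sided exact upper bound on a proportion when 0 of n events occur."""
    # Solve (1 - p) ** n = 1 - confidence for p.
    return 1 - (1 - confidence) ** (1 / n)


# 38 culture-positive infants, none missed by the contextualized model.
upper = exact_upper_bound_zero_events(38)
print(f"{upper:.3f}")
```

The bound comes out near 7.6%, which is numerically consistent with the reported 0 to 8 interval; that agreement does not by itself confirm which method was used.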

Discussion

Clinicians make critical decisions in the face of uncertainty, and typically rely on individual clinical experience and discussion with close colleagues when making decisions about diagnosis and treatment.28 29 30 Previously, we showed that local disease incidence information about meningitis from a single hospital provides valuable epidemiologic context and enhances a decision model for distinguishing aseptic from bacterial meningitis.8 Here, we demonstrate for the first time how an external public health surveillance source improves a clinical decision model, by incorporating state-wide “epidemiologic context.” Previous prediction models, derived from small numbers of patients, have identified clinical predictors of infantile pertussis like cyanosis and cough, and some models have considered seasonality, but none have incorporated local disease incidence.20 21 Seasonality is not a substitute for accurate real-time information about pertussis incidence as pertussis outbreaks are sporadic and do not follow consistent seasonal or geographic patterns.15 31 32 33 34 In our analysis, epidemiologic context was stronger than all but one clinical predictor (cyanosis). This finding underscores the importance of “situational awareness” in the clinical setting. Understanding the epidemiologic context in which a patient presents may provide critical information about the etiology of the patient's problem, but currently, this type of information is not formally processed, considered, or utilized in clinical decision-making.

Our findings support a general approach of estimating clinical risk of disease at the point of care, accounting for local disease incidence. This approach uses epidemiologic context in the clinical decision-making process rather than relying solely on history, physical exam, heuristics, and preliminary diagnostic test results.10 29 35 36 It is becoming increasingly feasible to deliver public health information to clinicians at the point of care. The emergence of robust, real-time surveillance systems, automated reporting to public health, and widespread adoption of electronic health records present opportunities for bidirectional communication between clinical practice and public health. Our study also promotes the value of disease reporting and surveillance at the state level.

We demonstrate a useful synergy between clinical and public health information in the generation and refinement of clinical decision rules. Public health data have not previously been used to generate decision models because, while they contain detailed information about those who test positive, they contain limited information about those testing negative. Public health efforts focus on tracking, interviewing, and following patients with reportable diseases, so public health data sets contain far greater detail about individuals who test positive. Data about those testing negative are limited even further by patient privacy laws, which prohibit collection of detailed information about people without the reportable disease. This unbalanced data stream creates a unique challenge to the use of public health datasets for the creation of decision models, which rely on rich information about patients both with and without the disease.37 In an effort to use available high-quality data, we approached this problem by integrating a large statewide public health dataset with a detailed hospital-based clinical dataset to develop a decision model for a disease with major public health importance—pertussis.

Limitations

The design of this study was retrospective, so further validation would be necessary prior to integration into a clinical setting.38 The retrospective nature of the study also required basing the clinical models on patients who had pertussis tests ordered, potentially biasing the sample toward subjects in whom clinicians already suspected pertussis. While the contextualized model outperformed the clinical only model, the clinical only model is most limited by its retrospective nature. First, a prospectively derived clinical model where testing was based on symptoms and structured data were acquired systematically might improve the performance of a clinical model. Second, while the study was carried out at a single site, this site provides care for 75% of the children who live in and around this large metropolitan area. Third, the incidence data are state-wide, while the patients are from a single, large metropolitan area. Fourth, we relied on the most conservative method for evaluating pertussis, culture, because it is widely regarded as the gold standard.14 15 As delineated in the case definition section of the methods, PCR may be oversensitive, and requires correlation with at least 2 weeks of cough and paroxysm, whoop, or post-tussive emesis,4 which are difficult to assess accurately in a retrospective review. Serology is not recommended for infants, and DFA is not widely available.19 Fifth, the study was limited by a lack of immunization data on the subjects because primary care records were not accessible for these ED patients. Sixth, most patients did not have blood tests performed as part of the evaluation, and so lymphocytosis could not be included in the models; however, while lymphocytosis is classically associated with pertussis, it has been shown to be neither sensitive nor specific.39

Conclusion

This study validates a scientific method for integrating incidence data into a clinical decision model and suggests that “epidemiologic context” could be an important component of future clinical decision-support systems. A software application integrated with an electronic health record might display data to physicians about ambient public health conditions and prompt appropriate management, treatment and reporting processes based on a calculation that considered patient factors in a specific epidemiologic situation. This important refinement of clinical decision-making requires communication between public health and clinical settings, and programs to enable integration of public health data with clinical environments.

Footnotes

Funding: This work was supported by grants K01HK000055 and 1 P01 HK000088 from the Centers for Disease Control and Prevention and by G08LM009778 and R01 LM007677 from the National Library of Medicine.

Competing interests: None.

Ethics approval: The Committee on Clinical Investigation of Children's Hospital Boston approved the study.

Contributors: All authors made substantial contributions to conception, design, analysis, and interpretation of data. AMF and KDM drafted the manuscript, and all authors were involved in revising it critically for important intellectual content and final approval. AMF is guarantor.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. Cherry JD. Pertussis in adults. Ann Intern Med 1998;128:64–6

- 2. Lee GM, Lett S, Schauer S, et al. Societal costs and morbidity of pertussis in adolescents and adults. Clin Infect Dis 2004;39:1572–80

- 3. Sotir MJ, Cappozzo DL, Warshauer DM, et al. A countywide outbreak of pertussis: initial transmission in a high school weight room with subsequent substantial impact on adolescents and adults. Arch Pediatr Adolesc Med 2008;162:79–85

- 4. Murphy TV, Slade BA, Broder KR, et al. Prevention of pertussis, tetanus, and diphtheria among pregnant and postpartum women and their infants: recommendations of the Advisory Committee on Immunization Practices (ACIP). MMWR Morb Mortal Wkly Rep 2008;57:1–47

- 5. Davis JP. Clinical and economic effects of pertussis outbreaks. Pediatr Infect Dis J 2005;24(6 Suppl):S109–16

- 6. Fiore AE, Shay DK, Haber P, et al. Prevention and control of influenza. Recommendations of the Advisory Committee on Immunization Practices (ACIP), 2007. MMWR Recomm Rep 2007;56:1–54

- 7. Fisman DN. Seasonality of infectious diseases. Annu Rev Public Health 2007;28:127–43

- 8. Fine AM, Nigrovic LE, Reis BY, et al. Linking surveillance to action: incorporation of real-time regional data into a medical decision rule. J Am Med Inform Assoc 2007;14:206–11

- 9. Brownstein JS, Kleinman KP, Mandl KD. Identifying pediatric age groups for influenza vaccination using a real-time regional surveillance system. Am J Epidemiol 2005;162:686–93

- 10. Reis BY, Pagano M, Mandl KD. Using temporal context to improve biosurveillance. Proc Natl Acad Sci U S A 2003;100:1961–5

- 11. Overhage JM, Grannis S, McDonald CJ. A comparison of the completeness and timeliness of automated electronic laboratory reporting and spontaneous reporting of notifiable conditions. Am J Public Health 2008;98:344–50

- 12. Kukafka R, Ancker JS, Chan C, et al. Redesigning electronic health record systems to support public health. J Biomed Inform 2007;40:398–409

- 13. Calugar A, Ortega-Sanchez IR, Tiwari T, et al. Nosocomial pertussis: costs of an outbreak and benefits of vaccinating health care workers. Clin Infect Dis 2006;42:981–8

- 14. Baughman AL, Bisgard KM, Cortese MM, et al. Utility of composite reference standards and latent class analysis in evaluating the clinical accuracy of diagnostic tests for pertussis. Clin Vaccine Immunol 2008;15:106–14

- 15. Centers for Disease Control and Prevention. Manual for the surveillance of vaccine-preventable diseases. 3rd edn. Atlanta, GA: CDC, 2002

- 16. Halperin SA. The control of pertussis—2007 and beyond. N Engl J Med 2007;356:110–13

- 17. Mattoo S, Cherry JD. Molecular pathogenesis, epidemiology, and clinical manifestations of respiratory infections due to Bordetella pertussis and other Bordetella subspecies. Clin Microbiol Rev 2005;18:326–82

- 18. Centers for Disease Control and Prevention (CDC). Outbreaks of respiratory illness mistakenly attributed to pertussis—New Hampshire, Massachusetts, and Tennessee, 2004–2006. MMWR Morb Mortal Wkly Rep 2007;56:837–42

- 19. American Academy of Pediatrics. Section 3: Summaries of infectious diseases: pertussis (whooping cough). In: Pickering LK, Baker CJ, Long SS, et al., eds. Red book 2006: report of the committee on infectious diseases. 27th edn. Elk Grove Village: AAP, 2006

- 20. Guinto-Ocampo H, Bennett JE, Attia MW. Predicting pertussis in infants. Pediatr Emerg Care 2008;24:16–20

- 21. Mackey JE, Wojcik S, Long R, et al. Predicting pertussis in a pediatric emergency department population. Clin Pediatr (Phila) 2007;46:437–40

- 22. Gilbert EH, Lowenstein SR, Koziol-McLain J, et al. Chart reviews in emergency medicine research: where are the methods? Ann Emerg Med 1996;27:305–8

- 23. Gorelick MH, Yen K. The kappa statistic was representative of empirically observed inter-rater agreement for physical findings. J Clin Epidemiol 2006;59:859–61

- 24. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics 1977;33:159–74

- 25. Austin PC, Tu JV. Bootstrap methods for developing predictive models. Am Stat 2004;58:131–7

- 26. Efron B, Gong G. A leisurely look at the bootstrap, the jackknife, and cross-validation. Am Stat 1983;37:36–48

- 27. Glaser N, Barnett P, McCaslin I, et al. Risk factors for cerebral edema in children with diabetic ketoacidosis. N Engl J Med 2001;344:264–9

- 28. Patel VL, Kaufman DR, Arocha JF. Emerging paradigms of cognition in medical decision-making. J Biomed Inform 2002;35:52–75

- 29. Tversky A, Kahneman D. Judgment under uncertainty: heuristics and biases. Science 1974;186:1124–31

- 30. Wolf FM, Gruppen LD, Billi JE. Differential diagnosis and the competing-hypotheses heuristic. A practical approach to judgment under uncertainty and Bayesian probability. JAMA 1985;253:2858–62

- 31. Crowcroft NS, Pebody RG. Recent developments in pertussis. Lancet 2006;367:1926–36

- 32. Fine PE, Clarkson JA. Seasonal influences on pertussis. Int J Epidemiol 1986;15:237–47

- 33. Skowronski DM, De Serres G, MacDonald D, et al. The changing age and seasonal profile of pertussis in Canada. J Infect Dis 2002;185:1448–53

- 34. Tanaka M, Vitek CR, Pascual FB, et al. Trends in pertussis among infants in the United States, 1980–1999. JAMA 2003;290:2968–75

- 35. Croskerry P. The importance of cognitive errors in diagnosis and strategies to minimize them. Acad Med 2003;78:775–80

- 36. Morse SS. Global infectious disease surveillance and health intelligence. Health Aff (Millwood) 2007;26:1069–77

- 37. Wasson JH, Sox HC, Neff RK, et al. Clinical prediction rules. Applications and methodological standards. N Engl J Med 1985;313:793–9

- 38. Charlson ME, Ales KL, Simon R, et al. Why predictive indexes perform less well in validation studies. Is it magic or methods? Arch Intern Med 1987;147:2155–61

- 39. Long S, Pickering L, Prober C. Principles and practice of pediatric infectious diseases. 3rd edn. New York: Churchill Livingstone, 2008