Abstract

Objective

Electronic medical records (EMRs) facilitate abnormal test result communication through “alert” notifications. The aim was to evaluate how primary care providers (PCPs) manage alerts related to critical diagnostic test results on their EMR screens, and compare alert-management strategies of providers with high versus low rates of timely follow-up of results.

Design

28 PCPs from a large, tertiary care Veterans Affairs Medical Center (VAMC) were purposively sampled according to their rates of timely follow-up of alerts, determined in a previous study. Using techniques from cognitive task analysis, participants were interviewed about how and when they manage alerts, focusing on four alert-management features to filter, sort and reduce unnecessary alerts on their EMR screens.

Results

Provider knowledge of alert-management features ranged between 4% and 75%. Almost half (46%) of providers did not use any of these features, and none used more than two. Providers with higher versus lower rates of timely follow-up used the four features similarly, except one (customizing alert notifications). Providers with low rates of timely follow-up tended to manually scan the alert list and process alerts heuristically using their clinical judgment. Additionally, 46% of providers used at least one workaround strategy to manage alerts.

Conclusion

Considerable heterogeneity exists in provider use of alert-management strategies; specific strategies may be associated with lower rates of timely follow-up. Standardization of alert-management strategies including improving provider knowledge of appropriate tools in the EMR to manage alerts could reduce the lack of timely follow-up of abnormal diagnostic test results.

Keywords: medical records systems, computerized, task performance and analysis, diagnostic errors/classification, primary healthcare, software

The purpose of this study is to document how primary care providers working with an electronic medical record (EMR) manage alerts related to critical diagnostic test results, and compare differences in alert-management strategies among providers who had high versus low rates of timely follow-up of these results.

Background

Breakdowns in the diagnostic process may arise when critical results (both imaging and laboratory) are not communicated to the ordering providers, a scenario not uncommon in the outpatient setting where care is fragmented.1 2 For instance, in paper-based result transmission systems, communication breakdowns between the ordering clinician and the laboratory or radiologist may occur when results are lost in transit. Integrated EMR systems can notify providers about abnormal test results directly on their desktops.3 This type of communication involves using automated notifications or “alerts” and can facilitate prompt review and action on test results.4 Nevertheless, recent literature has revealed that outpatient test results continue to be missed in systems that use computerized notifications, including the Computerized Patient Record System (CPRS),4 5 an integrated EMR used in Veterans Affairs (VA) facilities. For instance, we recently found that almost 8% of critical imaging results may not receive timely follow-up actions despite evidence of transmission of the alert to the ordering provider through a “View Alert” notification window in CPRS.6

Little is known about how providers manage abnormal diagnostic test alerts they receive on their EMR screens.7 Factors including information overload from too many alerts could lead to a lack of alert review and may be partially responsible for the lack of follow-up actions, although we found a comparable rate of lack of follow-up even when providers reviewed their alerts.8 We also found some providers to have lower rates of timely follow-up than others, suggesting that they may process their alerts differently.

What would lead to a variation in follow-up timeliness? Assuming that this variation is related to differences in how alerts are processed, differences in alert processing could be the result of multiple factors, including differences in information-processing strategies (such as a heuristic vs an algorithmic approach), or variability in workload. Sittig and colleagues9 found that providers are less likely to acknowledge alerts when they are behind schedule, and that they pay differential attention to alerts concerning patients of different clinical burden (eg, providers were more likely to acknowledge alerts about elderly or highly comorbid patients than about younger or less complex patients). This suggests a clinically based, yet heuristic, strategy for managing alerts. Additionally, workload has been identified in multiple settings as a factor in medical errors, including pharmacy,10 inpatient/surgical settings11 and primary care.12 In the case of managing alerts, both the volume of alert notifications and the clinical workload associated with those notifications are likely contributors to response differences.

Research suggests that primary care providers are not utilizing all of the features and functions available in their EMR to their full extent.13 As a result, they spend considerable time on EMR tasks, as much as 30 min a day in the case of managing alerts.14 Currently, electronic alert-management features exist in EMRs to help process alerts more efficiently, freeing providers' time and cognitive resources for the more analytical task of clinical follow-up. However, we are unaware of the degree to which these existing features play a role in providers' alert-management activities. An understanding of how providers process and manage their alerts, and factors influencing the interaction between providers and their EMR screens (human–computer interface issues) could improve timely follow-up in these systems and lay groundwork for potential interventions.

Research question

Using techniques from cognitive task analysis,15 we systematically documented how providers process alerts through an EMR View Alert system, the problems they encountered while performing the task, and whether management strategies of providers with higher rates of timely follow-up differ from those with lower rates.

Methods

Participants

Twenty-eight primary care providers (50% men) participated individually in the task analysis interviews. Providers were purposively sampled according to their rates of timely follow-up in our previous study.8 Within each follow-up group, we sampled residents (n=6), attending physicians (n=11), and allied health professionals (physician assistants and nurse practitioners) (n=11).

Setting and EMR training environment

This study was conducted at the Michael E DeBakey VA Medical Center (MEDVAMC) and its five satellite clinics in Houston, Texas. Being a large tertiary care VA facility, it serves as the primary healthcare provider for more than 116 000 veterans in southeast Texas logging more than 800 000 outpatient visits a year.

Providers receive a 2 h introductory training to CPRS as part of new employee orientation, to acquaint users with the program's basic features. Training content related to alert notifications usually consists of less than 5 min in the 2 h training, which orients users to the contents (but not the alert-management features) of the alert-notifications window, and how to acknowledge alerts they receive. Web-based training is also available on users' own time, though provider awareness and use of this resource appear to be low. An information-technology help desk is available to clinicians for questions, as are clinical application coordinators, who serve as technical liaisons between information technology and clinicians.

Measures

Timely follow-up of alerts

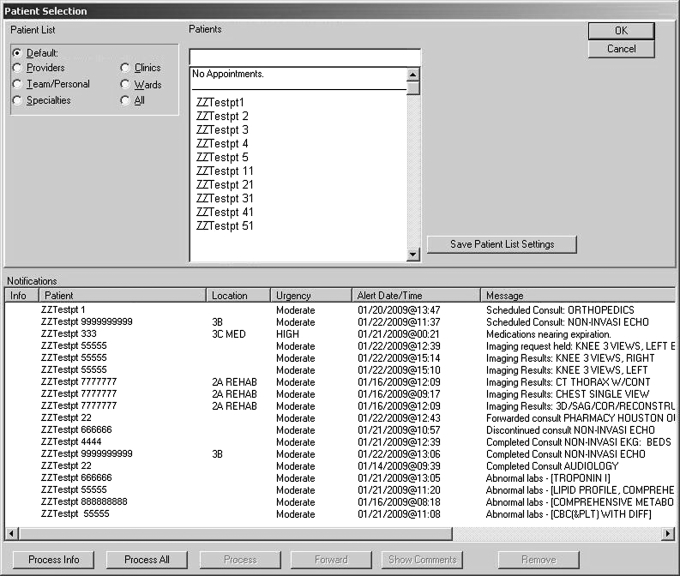

We recently studied follow-up of 1196 electronic alerts (“View Alerts”) of five types of critical imaging results (critically abnormal x-ray, CT scan, MRI, mammography and ultrasound) at the facility.8 The View Alerts system has been used in all VA facilities for several years (figure 1 displays an example of the CPRS alert notification window). Because of computerized provider order entry, the ordering clinician is always known and notified of these results. Additionally, all types of alerts included in the study were mandatory in nature, such that the receiving provider (attending physicians, allied healthcare providers and trainees working in a variety of specialties) did not have an option to stop receiving them. All primary care providers were initially eligible to be included for subsequent task analysis.

Figure 1.

Alert notification window in CPRS.

Results of this study were used to classify providers into two groups: providers with two or more alerts without follow-up after 4 weeks were classified as the “untimely” follow-up group; providers with only one alert (or zero) lacking timely follow-up at 4 weeks were classified as the “timely” follow-up group. To check for timeliness of follow-up, alert-management-tracking software determined whether the alert was “acknowledged” (ie, the provider clicked on and opened the alert message), within 2 weeks of transmission. Evidence of response and follow-up actions by providers for both “acknowledged” and “unacknowledged” alerts was then determined by chart review; actions included ordering a follow-up test or referral, or contacting the patient within 4 weeks of alert transmission. In the absence of a documented response confirming a follow-up action, providers were called to determine their awareness of the test result and any undocumented or planned follow-up action. If the provider was unaware of the test result, the alert was considered without timely follow-up (determined at 4 weeks after alert transmission).
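The grouping rule above can be sketched in a few lines of Python. This is a minimal illustration, not the software used in the study: the `AlertOutcome` record, its field names and the `classify_providers` helper are hypothetical, and the two boolean fields compress the chart review and the follow-up phone call into a single yes/no each.

```python
from dataclasses import dataclass

# Threshold taken from the text: two or more alerts lacking follow-up at
# 4 weeks places a provider in the "untimely" group.
UNTIMELY_THRESHOLD = 2


@dataclass
class AlertOutcome:
    """One alert as assessed at 4 weeks (field names are hypothetical)."""
    provider_id: str
    follow_up_documented: bool  # chart review found a follow-up action
    provider_confirmed: bool    # phone call confirmed awareness or a planned action


def lacks_timely_follow_up(alert: AlertOutcome) -> bool:
    """An alert lacks timely follow-up when neither source shows a response."""
    return not (alert.follow_up_documented or alert.provider_confirmed)


def classify_providers(alerts: list[AlertOutcome]) -> dict[str, str]:
    """Map each provider to 'timely' or 'untimely' from their alert outcomes."""
    missed: dict[str, int] = {}
    for alert in alerts:
        missed.setdefault(alert.provider_id, 0)
        if lacks_timely_follow_up(alert):
            missed[alert.provider_id] += 1
    return {
        pid: "untimely" if count >= UNTIMELY_THRESHOLD else "timely"
        for pid, count in missed.items()
    }
```

Under this sketch, a provider with exactly one alert lacking follow-up remains in the timely group, which mirrors the "only one alert (or zero)" wording of the rule.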

Workload volume

Workload was assessed via the volume of alerts received by each provider, available from CPRS.

Alert-management strategies

Provider alert-management strategies were captured using techniques from cognitive task analysis, elaborated upon in the Procedure section.

Procedure

We used techniques from cognitive task analysis15 to interview each provider on how they manage alerts received in CPRS, with specific emphasis on the strategies they use to filter alerts, reduce unnecessary alerts and sort alerts for easier processing. Cognitive task analysis is a family of task analysis techniques that yields “information about the knowledge, thought processes and goal structures that underlie observable task performance”16 and is particularly suitable for gaining detailed information about work that is highly cognitive in nature (such as processing clinical alerts). We used a combination of participant demonstration (ie, we asked them to demonstrate in CPRS how they managed their alerts) and verbal protocols (ie, we asked participants to verbalize the strategies they use to manage alerts)17 to elicit the decision-making processes they used in alert-management (a study protocol with greater details about our task analysis procedures is currently in press).18 Two pilot interviews were conducted to test our procedures and question protocol; no difficulties or problems were experienced during these pilot interviews, so we proceeded with our protocol as planned.

We were particularly interested in four currently existing alert-management features in CPRS: (1) the alert-notification settings window (notification feature), which allows providers to customize notification settings to reduce alerts deemed unnecessary, (2) the ability to sort alerts for faster and easier processing (sorting feature), (3) appropriate use of the alert-processing function (“process all” feature), which allows batch processing of alerts, and (4) the “alert when results” feature, which allows providers to alert additional providers on a particular test result when not in office. Appendix A (available as an online data supplement at http://www.jamia.org/) lists the questions asked of each participant. Interviews were audio-recorded; each interview was conducted by a primary interviewer, and a secondary note-taker to capture responses and make field notes as the interview occurred. Interview recordings were transcribed for analysis.

We also compared the volume of alerts received by all study providers on a daily basis. To estimate this, we extracted the number of alerts received by each participating provider from the CPRS alerts data file for a non-consecutive 2-month period and calculated the mean number of alerts each provider received daily.
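As a rough illustration of this calculation, the sketch below averages daily alert counts per provider. The input layout (one `(provider_id, alert_date)` pair per alert) is an assumption made for the example and is not the format of the CPRS alerts data file; the function name is likewise hypothetical.

```python
from collections import defaultdict
from datetime import date
from statistics import mean


def mean_daily_alerts(records: list[tuple[str, date]]) -> dict[str, float]:
    """Average alerts per day for each provider.

    `records` is a hypothetical extract: one (provider_id, alert_date) pair
    per alert. Days on which a provider received no alerts are absent from the
    extract and therefore do not enter the average in this sketch.
    """
    per_day: dict[str, dict[date, int]] = defaultdict(lambda: defaultdict(int))
    for provider_id, alert_date in records:
        per_day[provider_id][alert_date] += 1
    return {pid: mean(days.values()) for pid, days in per_day.items()}
```

Whether alert-free days should count toward the denominator is a design choice the text does not settle; including them would lower the averages for providers who are in clinic only part of the week.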

Analysis

We used techniques from grounded theory19 and content analysis20 to analyze our interviews and identify patterns in how participants manage their alerts. This included the development of an initial coding taxonomy, open coding (where the text passages were examined for recurring themes and ideas), artifact correction and validation, and quantitative tabulation of coded passages.

Coding taxonomy development

Immediately after each interview, the interviewing team organized and summarized the responses from each interviewee into a structured data form to develop an initial taxonomy to be used in coding the full transcripts. The structured data forms were analyzed for content using ATLAS.ti (version 5.0)21 by the lead author, an industrial/organizational psychologist experienced in task analysis and qualitative research methods. To minimize bias in code development, the lead author was not present during the interviews. Table 1 displays the resulting initial code list used to analyze the full transcripts.

Table 1.

Initial code taxonomy for view alerts task analysis

| Code category | Code |
|---|---|
| Ability to turn off nonmandatory notifications (notification feature) | Not familiar with “turn off” feature |
| | Familiar with feature but uses default settings |
| | Familiar with feature |
| “Alert when results” feature | Not familiar with feature |
| | Familiar with feature |
| “Process all” feature | Not familiar with feature |
| | Familiar with feature |
| | Familiar with feature but does not use it |
| | Uses feature |
| Sorting feature | Does not know how |
| | Does not prioritize/sort |
| | Knows how |
| | Preferred order: newest first |
| | Preferred order: oldest first |
| | Sorts manually by urgency/clinical priority |
| When do you manage your alerts? | First thing in the morning |
| | In between patients |
| | Lets them stack up |
| | Not reported |

Open coding

Two coders independently coded all 28 interviews using the initial taxonomy (see table 1) developed from the response summaries. Both coders had a background in health and previous experience coding transcripts for qualitative analysis, and were trained in the use of the coding taxonomy. Furthermore, transcripts from the two pilot interviews were used to calibrate the coders before beginning coding work on the actual transcripts. Coders were required to use the existing taxonomy first but were permitted to create additional codes if interesting material appeared in the transcripts that did not fit into any of the existing code categories.

Artifact correction and validation

The two independent coding sets were then reviewed by a third coder with a clinical background (trained similarly in the use of the coding taxonomy) for correcting coding artifacts, validation, and inter-rater agreement. The goal of correcting coding artifacts was to prepare the two independent coders' transcripts for validation and to facilitate the calculation of inter-rater agreement. This involved (a) mechanically merging the two coders' coded transcripts using the ATLAS.ti software (so that all data appear in a single, analyzable file), (b) identifying and reconciling nearly identical quotations (eg, each coder may have captured a slightly longer or shorter piece of the same text) that were assigned the same codes by each coder and (c) correcting misspellings or extraneous characters in the code labels.

The goal of validation was to ensure that pre-existing codes were being used by both coders in the same way, to reconcile newly created codes from each coder that referred to the same phenomenon but were labeled differently, and to resolve discrepancies between the coders. For quotations that did not converge (ie, did not receive identical codes from each coder), the validator (a) identified quotations common to both coders that received discrepant codes, and selected the best-fitting code and (b) identified discrepant quotations (eg, quotations identified by one coder but not the other) for resolution by team consensus.
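The triage described in this paragraph can be sketched as follows. The data layout (a dictionary from quotation id to the set of codes a coder assigned) and the function name are assumptions for illustration; they do not reflect the ATLAS.ti file format or the tooling used by the study team.

```python
def triage_quotations(
    coder_a: dict[str, frozenset[str]],
    coder_b: dict[str, frozenset[str]],
) -> dict[str, list[str]]:
    """Sort quotation ids into the three validation outcomes described above.

    Each argument maps a quotation id to the set of codes that coder assigned;
    the ids and the dict layout are hypothetical, not the ATLAS.ti format.
    """
    outcome: dict[str, list[str]] = {
        "converged": [],       # same quotation, identical codes
        "validator": [],       # same quotation, discrepant codes
        "team_consensus": [],  # quotation found by only one coder
    }
    for qid in sorted(coder_a.keys() | coder_b.keys()):
        if qid in coder_a and qid in coder_b:
            key = "converged" if coder_a[qid] == coder_b[qid] else "validator"
        else:
            key = "team_consensus"
        outcome[key].append(qid)
    return outcome
```

The sketch only routes quotations to the appropriate resolution path; choosing the best-fitting code and reaching team consensus remain human judgments.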

Code tabulation and statistics

After all quotations were resolved and coded, a code/subject matrix was created, tabulating the number of quotations identified from each subject about each code. This matrix was then used to perform quantitative analyses. Descriptive statistics were calculated for three types of alert-management strategies: alert-management schedules (ie, when providers manage their alerts), use of computerized alert-management software features and use of workarounds. Non-parametric statistics (χ2) were computed to identify differences in the alert-management strategies of providers with timely and untimely follow-up.
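A code/subject matrix of this kind can be built with a simple tally. The sketch below assumes each resolved quotation is available as a `(subject_id, code)` pair; that layout and the function name are hypothetical.

```python
from collections import Counter


def code_subject_matrix(
    quotations: list[tuple[str, str]],
) -> tuple[list[str], list[str], list[list[int]]]:
    """Tabulate quotation counts with one row per subject and one column per code.

    `quotations` holds one (subject_id, code) pair per coded quotation; the
    layout is an assumption for illustration.
    """
    counts = Counter(quotations)
    subjects = sorted({subject for subject, _ in quotations})
    codes = sorted({code for _, code in quotations})
    matrix = [[counts[(s, c)] for c in codes] for s in subjects]
    return subjects, codes, matrix
```

Each cell then gives the number of quotations from one subject about one code, and a nonzero cell can be read as that subject endorsing the code when counting participants per strategy.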

Results

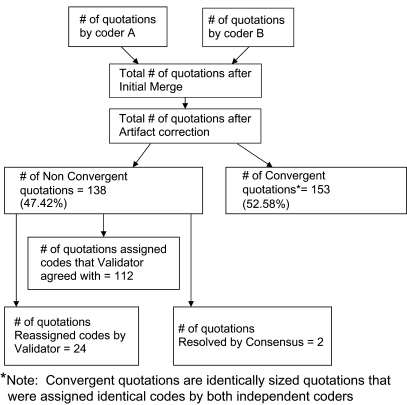

Inter-rater agreement

Figure 2 summarizes the validation process and the resulting inter-rater agreement. The two coders identified 248 and 233 quotations, respectively. Mechanically merging the coders' coded transcripts using the ATLAS.ti software resulted in 479 quotations; as shown in the figure, however, the majority of discrepancies between the two coders were artifacts of the coding process, for example, misspellings, label differences in codes for the same concept, or capturing a longer versus shorter piece of the same text. Correcting these artifacts resulted in 291 quotations to be validated. One hundred and fifty-three of the 291 validated quotations (53%) converged exactly (ie, identical quotations by both coders, assigned identical codes). Of the remaining 138 quotations, only 26 quotations needed either code reassignment by the validator (24) or resolution by group consensus (2). Thus, the validator/coder team agreed on 265 out of 291 quotations (91%).
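The percentages in this paragraph follow directly from the reported counts, as the short check below illustrates. The variable names are ours; the numbers are those given in the text.

```python
# Counts reported in the inter-rater agreement paragraph.
validated = 291   # quotations remaining after artifact correction
converged = 153   # identical quotations with identical codes
reassigned = 24   # code reassigned by the validator
consensus = 2     # resolved by group consensus

remaining = validated - converged
needed_resolution = reassigned + consensus
agreed = validated - needed_resolution

print(f"exact convergence: {converged}/{validated} = {converged / validated:.0%}")
print(f"remaining after exact matches: {remaining}")
print(f"final agreement: {agreed}/{validated} = {agreed / validated:.0%}")
```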

Figure 2.

Validation process and inter-rater agreement flow diagram.

Daily alert volume

In general, providers received a mean of 57.5 alerts per day (SD=27.9). On average, providers in the untimely group received almost twice as many alerts daily (M=64.8, SD=30.7) as providers in the timely group (M=36.8, SD=29.1, F=4.72, p=0.04). However, this difference was mostly accounted for by provider type; residents generally tend to receive a low volume of alerts because the residents in the study were seeing outpatients only about half a day per week in those 2 months. After removing the residents from the sample, alert volume between groups was far more comparable (Mtimely=50.1, SDtimely=24.4; Muntimely=64.8; SDuntimely=30.7; F=1.26, p=0.278). Though providers in the untimely group still received 28% more alerts than the timely group did, our sample size was too small to determine whether this difference was statistically significant.

Alert-management strategies

Schedule patterns

We first examined providers' alert-management schedule patterns by analyzing differences in their responses to the question of when they manage their alerts. Table 2 presents the alert-management schedules emergent from these responses as well as the number of participants endorsing each strategy by provider follow-up timeliness. As shown in the table, the most common times to manage alerts are in between patients (n=11) and first thing in the morning (n=9), though considerable variability existed. Twice as many providers used multiple schedules (eg, both first thing in the morning and between patients) as opposed to a single schedule (eg, only in between patients). However, there were no significant differences in alert-management schedule patterns of timely and untimely follow-up providers.

Table 2.

Alert-management schedules by provider follow-up timeliness

| Schedule | Timely (n) | Timely (%) | Untimely (n) | Untimely (%) | Total |
|---|---|---|---|---|---|
| At the end of the day | 2 | 40% | 3 | 60% | 5 |
| Clears alerts by the end of the day | 5 | 71.4% | 2 | 28.6% | 7 |
| Does not process alerts | 2 | 100% | 0 | 0% | 2 |
| First thing in the morning | 4 | 44.4% | 5 | 55.6% | 9 |
| In between patients | 4 | 36.4% | 7 | 63.6% | 11 |
| Lets them stack up | 2 | 100% | 0 | 0% | 2 |
| Not reported | 1 | 100% | 0 | 0% | 1 |
| As soon as they appear | 3 | 75% | 1 | 25% | 4 |

Totals add up to more than 28 (the total number of participants) because participants may have reported using more than one alert-management schedule.

Use of alert-management software features

Next, we examined providers' use of the four CPRS features described earlier (notification settings, sorting, “process all,” and “alert when results”) specifically designed to help manage critical alerts. Different providers reported different levels of awareness and proficiency with each feature; table 3 shows the number of participants who reported using each feature at various levels of proficiency in the timely and untimely groups. Between 25% (for notifications) and 96% (for “alert when results”) of all respondents specifically reported they were unaware of the alert-management features studied; only two providers reported actively using more than one of these features (both used sorting and notifications) to manage their alerts. Providers in the untimely group did not use these features in significantly different ways than timely providers; overall, almost half of the providers did not use any of the alert-management features provided by CPRS, and no providers used more than two features. The one exception is the notification feature: whereas everyone in the untimely group reported being aware of the notifications feature, almost half of the providers in the timely group (n=7) reported being unaware (χ2 (1)=10.09, p=0.039).

Table 3.

Provider use of Computerized Patient Record System (CPRS) features by provider follow-up timeliness

| CPRS feature | Timely providers (n=15) | Untimely providers (n=11) |
|---|---|---|
| Sorting | | |
| Unaware | 8 | 6 |
| Aware | 4 | 1 |
| Uses feature | 3 | 4 |
| Notification | | |
| Unaware | 7 | 0 |
| Aware | 3 | 5 |
| Uses feature | 5 | 6 |
| Process all | | |
| Unaware | 4 | 6 |
| Aware | 11 | 5 |
| Uses feature | 0 | 0 |
| Alert when results | | |
| Unaware | 14 | 11 |
| Aware | 1 | 0 |
| Uses feature | 0 | 0 |
| No of CPRS features used | | |
| 0 | 8 | 5 |
| 1 | 5 | 6 |
| 2 | 2 | 0 |

Timely providers are those who have lost one or fewer alerts to follow-up during a 4-month period. Untimely providers are those who have lost two or more alerts to follow-up during a 4-month period.
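For readers who want to see how a χ2 comparison on table 3 might be set up, the sketch below computes an uncorrected Pearson χ2 for the notification rows. Pooling “Aware” and “Uses feature” into a single category is an assumption made for this example; the article does not state how its own statistic was tabulated or whether a correction was applied, so the value computed here is not expected to reproduce the reported figure. The function name is hypothetical.

```python
def pearson_chi_square_2x2(a: int, b: int, c: int, d: int) -> float:
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]].

    Assumes every row and column total is nonzero.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    return n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)


# Notification rows of table 3, with "Aware" and "Uses feature" pooled
# (the pooling is an assumption made for this sketch).
timely_unaware, timely_aware = 7, 3 + 5
untimely_unaware, untimely_aware = 0, 5 + 6

statistic = pearson_chi_square_2x2(
    timely_unaware, timely_aware, untimely_unaware, untimely_aware
)
print(f"chi-square(1) = {statistic:.2f}")
```

With cell counts this small, an exact test would often be preferred over the χ2 approximation; the sketch is intended only to make the structure of the comparison concrete.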

Use of workarounds

Finally, we examined providers' use of “workarounds,” that is, “staff actions that do not follow explicit or implicit rules, assumptions, workflow regulations or intentions of system designers.”22 Forty-six percent of providers used at least one of two workaround strategies: (a) handwritten notes as reminders (n=5) and (b) another electronic method to process alerts (n=8). One provider used both workarounds. Currently, CPRS does not provide any tools that allow providers to easily track their alerts over time, hence the use of these strategies. Perhaps most striking, however, was the providers' heuristic reliance on their professional judgment rather than using CPRS's automated sorting and processing features algorithmically. We found that many providers visually scanned the alert list and first attended to what they deemed higher clinical priority or urgent alerts, as opposed to using CPRS's alert-management features to process their alerts in a more systematic, algorithmic manner. Only one provider in the timely follow-up group employed this strategy, compared with nearly half of the providers in the untimely follow-up group (n=5 of 12) (χ2 (1)=5.19, p=0.024).

Discussion

We sought to identify differences in the alert-management strategies of providers with high versus low rates of timely follow-up of critical diagnostic imaging alerts, with particular attention to their use of computerized alert-management tools. Using techniques from cognitive task analysis, we found that providers of both types were relatively unaware of important alert-management features and used workarounds (such as handwritten notes as reminders) to process alerts. Although more providers in the untimely than in the timely group were aware of the notifications feature, more providers from the untimely group also reported manually scanning the alert list and heuristically processing alerts according to their judgment of clinical priority. Overall, we found a lack of standardization in the management of critical diagnostic test alerts.

The results of this study are consistent with research and theory on cognitive workload and attention. Managing large numbers of alerts (on average, over 50 per day in our sample) is cognitively complex because making a decision about any given alert requires processing many variables at the same time (eg, criticality, urgency, date received, whether the alert is informational or requires action).14 Related research suggests that decision-makers (such as providers trying to prioritize alerts) cannot cognitively evaluate more than about four to five variables at a time in making their decisions.23 Thus, busy providers who are short on time and who rely on heuristics based on multiple clinical and time-based criteria to decide which alerts to address first are likely to have difficulty managing a large volume of alerts.

Though managing alerts overall requires a high level of reasoning due to the complexity of interaction with the clinical data, deciding which alerts to address first requires far less complex reasoning. Providers compile, rather than analyze, information to make this kind of decision;14 this type of compiling activity could be offloaded to some degree to a computer, thus saving precious cognitive resources for more complicated clinical analysis that only the provider can accomplish. Alert-management features such as those we studied provide an algorithmic, systematic means of prioritizing and filtering alerts, so as to reduce cognitive workload and allow the provider to devote more cognitive resources to the task of following up on an alert, instead of deciding which alert needs attention first. This could explain why providers who manually prioritized alerts were more likely to be in the untimely group compared to providers who relied on the systematic use of alert-management tools.

In addition to cognitive workload, other explanations for the observed results such as “clinical inertia”24 and cognitive style are also possible. In our previous work,6 we found that a lack of timely follow-up was minimal when the radiologist notified the provider by phone, which at the study site is required only when test results are life-threatening or require an emergent intervention. This “inertia” has been described in other settings and associated with a lack of action on results that have less immediate consequential implications.

Cognitive style (a concept increasingly studied in the technology acceptance literature) has also been posited as a potential source of variation in technology usage. Chakraborty and colleagues25 found that people with innovative cognitive styles are more likely than people with adaptive styles to perceive a new technology (such as alert-management features) as useful (and thus more likely to use it). However, a contemporary study26 found that after accounting for computer anxiety, self-efficacy, and gender (variables common in early adoption and use models), cognitive style accounts for no significant incremental variance, though personality characteristics do. Thus, the evidence is mixed, and more studies are needed before firm conclusions can be drawn from the cognitive style literature.

Implications

Our findings highlight the importance of usability, user knowledge, and adequate provider training in utilization and integration of EMRs into their clinical work. A significant portion of participants were unaware of the alert-management features we studied, and none were aware of all of them; providers often employed workarounds, the most common being handwritten notes and reminders, to aid them in managing their alerts. In our ongoing work, we have demonstrated several of the features discussed in the paper to participants and found that many were unaware that CPRS had these functionalities and regarded them as “new” tools. Even the most perfectly designed user interface is useless if the user is unaware of its features, or if the user does not know how to use the feature properly.

An excellent example of this from our study is the notifications feature. This feature displays a list of all available event types (eg, notifications that certain lab results were completed, that a patient was seen by a specialty clinic, etc) for which an alert can be generated; users can then select the types of events of which they want to be notified. At the study site, 10 types of notifications are mandatory (ie, the user cannot opt out of receiving them); on average, providers have approximately 15 types of notifications turned on, though we found some providers with as many as 50. When a new user account is created, only a set of institutionally determined “mandatory” alert notifications is turned on by default. However, providers often want additional alerts about clinical events they consider important. If users are unaware of the notifications feature and do not change the default settings, then they may be missing important information impacting patient safety; conversely, if users are not selective about what is most relevant to them, they could inadvertently increase their overall alert volume and lower their signal-to-noise ratio, making it more difficult to address alerts in a timely fashion. This could partially explain the difference between groups in knowledge of the notifications feature, and why providers in the untimely group had 28% greater alert volume.

User knowledge of and targeted training on EMR features may also help reduce the observed follow-up variation. Many institutions (including our study site) provide a single, cursory training on the basic features of their EMR; this training usually occurs during new employee orientation, when providers are cognitively overloaded with a myriad of other logistical details. Decades of training and skill acquisition research suggest the need for activating multiple sensory modalities during training, accommodating trainee learning styles, distributed practice schedules, and opportunities to practice newly learnt skills on the job.27 28 29 Thus, institutions should consider strengthening their EMR training programs to include features such as audiovisual or computerized media, and periodic refresher trainings (targeted to the needs of the individual). Further, research also demonstrates that pretraining conditions such as the availability of protected training time, trainee readiness and strategically framing the purpose of the training can have a considerable impact on training effectiveness.29 30 Training could also be improved by involving clinical “super users” from the institution in the training process. EMR training sessions framed to help providers view the EMR as a natural part of their clinical work (eg, a tool as basic as a stethoscope) rather than a bureaucratic barrier are likely to have a greater impact, and thus improve the way physicians manage alerts (and perhaps other EMR components). Though the study site offered several training tools (initial instructor led workshop, web-based training, super users in the form of clinical applications coordinators), barriers such as the lack of protected time for training and improper framing of the training tools offered may have significantly reduced their effectiveness.

Finally, this research highlights that clinicians are using the alert system as a clinical task-management system even though it was not designed as such, and that it therefore has significant limitations in this role. Regardless, clinical information system designers should begin to devote far more resources to building in methods for capturing user actions along with the clinical context in which they take place. Such data would enable clinical system designers, along with researchers, to reconstruct user actions and thereby better understand what is working and what is not as these complex systems are used for routine clinical activities. This increased understanding should greatly facilitate the process of system redesign and thus allow future systems to better meet clinicians' needs.

Limitations

This work has three important limitations. First, the study was conducted at a single VA medical center, which limits generalizability. However, the basic features of CPRS (including which alert-management features are available) are consistent across VAMCs throughout the USA. Thus, the alert-management strategies observed at this VAMC are likely observable at other VAMCs. Although our findings may not generalize to EMRs in the private sector, users in the private sector can benefit from the implications of this work.

Second, with a sample size of 28, it is difficult to identify probabilistic differences between the timely and untimely follow-up groups. A larger sample size may have allowed for better detection of some of the more subtle effects, such as differences in exactly which alert-management tools were used by each group. Because of its labor intensiveness, the cognitive task analysis technique used for this research does not lend itself to large-scale data collection. However, in exchange for labor intensiveness, this technique offers a rich depth of information not obtainable from a survey or structured simulation. Indeed, one of the most interesting findings (that providers in the untimely group manually prioritize alerts whereas timely providers do not) was not an a priori hypothesis; it emerged from the data. Further, significant findings, despite a small sample size, are often considered stronger evidence of an effect than significant findings based on a large sample size, where small effects are easy to find.31

Finally, we were unable to ascertain individual differences among providers outside of provider type, such as differences in cognitive style, personality characteristics, or usage habits. Future studies taking these factors into account could clarify some of the mixed findings currently in the literature.

Conclusion

We found that providers do not use the full capabilities of the EMR tools available to them to help manage their alerts, mainly due to lack of awareness, and that the strategies they use to manage critical diagnostic alerts are not standardized. Healthcare organizations should consider improving the design of existing interventions (eg, their training programs) as well as developing new interventions to improve awareness, knowledge, and use of electronic alert-management software features in an effort to reduce the number of alerts that lack timely follow-up. Future research should examine broader factors, such as workflow, personnel, and policy-related issues, that could similarly affect the alert-management process and consequently patient safety.

Footnotes

Funding: The research reported here was supported in part by a VA Career Development Award (CD2-07-0818) to SJH, the VA National Center of Patient Safety, and the Houston VA HSR&D Center of Excellence (HFP90-020).

Competing interests: None.

Provenance and peer review: Not commissioned; not externally peer reviewed.

References

- 1. Poon EG, Haas JS, Louise PA, et al. Communication factors in the follow-up of abnormal mammograms. J Gen Intern Med 2004;19:316–23

- 2. Poon EG, Gandhi TK, Sequist TD, et al. “I wish I had seen this test result earlier!”: dissatisfaction with test result management systems in primary care. Arch Intern Med 2004;164:2223–8

- 3. Poon EG, Wang SJ, Gandhi TK, et al. Design and implementation of a comprehensive outpatient Results Manager. J Biomed Inform 2003;36:80–91

- 4. Singh H, Arora H, Vilhjalmsson R, et al. Communication outcomes of critical imaging results in a computerized notification system. J Am Med Inform Assoc 2007;14:459–66

- 5. Wahls TL, Cram PM. The frequency of missed test results and associated treatment delays in a highly computerized health system. BMC Fam Pract 2007;8:32.

- 6. Singh H, Thomas E, Mani S, et al. Timely follow-up of abnormal diagnostic imaging test results in an outpatient setting: are electronic medical records achieving their potential? Arch Intern Med 2009. In press.

- 7. Murff HJ, Gandhi TK, Karson AK, et al. Primary care physician attitudes concerning follow-up of abnormal test results and ambulatory decision support systems. Int J Med Inform 2003;71:137–49

- 8. Singh H, Thomas E, Mani S, et al. Will providers follow-up on abnormal test result alert if they read it? Society for General Internal Medicine; 31st Annual Meeting, Pittsburgh, Pennsylvania April 9–12, 2008. J Gen Intern Med 2008;23(Suppl 2):374

- 9. Sittig DF, Krall MA, Dykstra RH, et al. A survey of factors affecting clinician acceptance of clinical decision support. BMC Med Inform Decis Mak 2006;6:6.

- 10. Malone DC, Abarca J, Skrepnek GH, et al. Pharmacist workload and pharmacy characteristics associated with the dispensing of potentially clinically important drug-drug interactions. Med Care 2007;45:456–62

- 11. Weissman JS, Rothschild JM, Bendavid E, et al. Hospital workload and adverse events. Med Care 2007;45:448–55

- 12. Singh H, Thomas EJ, Petersen LA, et al. Medical errors involving trainees: a study of closed malpractice claims from 5 insurers. Arch Intern Med 2007;167:2030–6

- 13. Simon SR, McCarthy ML, Kaushal R, et al. Electronic health records: which practices have them, and how are clinicians using them? J Eval Clin Pract 2008;14:43–7

- 14. Best RG, Pugh JA. VHA primary care task database [CD-ROM]. San Antonio: South Texas Veterans Health Care System, 2006

- 15. Schraagen JM, Chipman SF, Shalin VL, eds. Cognitive task analysis. Mahwah, NJ: Lawrence Erlbaum Associates, 2000

- 16. Chipman SF, Schraagen JM, Shalin VL. Introduction to cognitive task analysis. In: Schraagen JM, Chipman SF, Shalin VL, eds. Cognitive task analysis. Mahwah: Lawrence Erlbaum Associates, 2000:3–23

- 17. DuBois D, Shalin VL. Describing job expertise using cognitively oriented task analyses. In: Schraagen JM, Chipman SF, Shalin VL, eds. Cognitive task analysis. Mahwah: Lawrence Erlbaum Associates, 2000:41–56

- 18. Hysong SJ, Sawhney M, Wilson L, et al. Improving outpatient safety through effective electronic communication: a study protocol. Implementation Sci. In press.

- 19. Strauss AL, Corbin J. Basics of qualitative research: techniques and procedures for developing grounded theory. 2nd edn. Thousand Oaks: Sage Publications, 1998

- 20. Weber RP. Basic content analysis. 2nd edn. Newbury Park: Sage, 1990

- 21. Muhr T. User's Manual for ATLAS.ti 5.0 [computer program]. Version 5. Berlin: Scientific Software Development GmbH, 2004

- 22. Koppel R, Wetterneck T, Telles JL, et al. Workarounds to barcode medication administration systems: their occurrences, causes, and threats to patient safety. J Am Med Inform Assoc 2008;15:408–23

- 23. Shanteau J, Stewart TR. Why study expert decision making? Some historical perspectives and comments. Organ Behav Hum Decis Process 1992;53:95–106

- 24. Phillips LS, Branch WT, Cook CB, et al. Clinical inertia. Ann Intern Med 2001;135:825–34

- 25. Chakraborty I, Hu PJH, Cui D. Examining the effects of cognitive style in individuals' technology use decision making. Decision Support Systems 2008;45:228–41

- 26. McElroy JC, Hendrickson AR, Townsend AM, et al. Dispositional factors in internet use: personality versus cognitive style. MIS Q 2007;31:809–20

- 27. Arthur W, Bennett W, Edens PS, et al. Effectiveness of training in organizations: a meta-analysis of design and evaluation features. J Appl Psychol 2003;88:234–45

- 28. Salas E, Cannon-Bowers JA. Methods, tools, and strategies for team training. In: Quiñones MA, Ehrenstein A, eds. Training for a rapidly changing workplace: applications of psychological research. Washington: American Psychological Association, 1997:249–79

- 29. Salas E, Cannon-Bowers JA. The science of training: a decade of progress. Annu Rev Psychol 2001;52:471–99

- 30. Bryan R, Kreuter M, Brownson R. Integrating adult learning principles into training for community partners. Health Promot Pract. In press.

- 31. Darlington RB. Regression and linear models. New York: McGraw-Hill, 1990