Abstract

Health IT implementations often introduce radical changes to clinical work processes and workflow. Prior research investigating this effect has shown conflicting results. Recent time and motion studies have consistently found that this impact is negligible, whereas qualitative studies have repeatedly revealed negative end-user perceptions suggesting decreased efficiency and disrupted workflow.

We speculate that this discrepancy may be due in part to the design of the time and motion studies, which is focused on measuring clinicians' ‘time expenditures’ among different clinical activities rather than inspecting clinical ‘workflow’ from the true ‘flow of the work’ perspective. In this paper, we present a set of new analytical methods consisting of workflow fragmentation assessments, pattern recognition, and data visualization, which are accordingly designed to uncover hidden regularities embedded in the flow of the work. Through an empirical study, we demonstrate the potential value of these new methods in enriching workflow analysis in clinical settings.

Introduction

Adoption of health IT (HIT) applications, such as electronic health records (EHR) and computerized provider order entry (CPOE), often introduces radical changes to clinical work processes and workflow.1 These changes could have an undesirable impact on user satisfaction, time efficiency, quality of care, and patient safety.1 2 Qualitative studies investigating HIT-related ‘unintended consequences’ have amply demonstrated that disruption to established work processes and workflow introduced by HIT adoption is a principal cause of these suboptimal or adverse outcomes.3–5

Time and motion (T&M) is a commonly used approach for quantifying workflow to assess the potential impact associated with HIT. Recent T&M studies have consistently shown that this impact is either non-significant or only marginal, suggesting that HIT implementations do not adversely affect clinicians' time utilization and clinical workflow.6–10

This suggests a paradox: why have qualitative studies repeatedly reported negative end-user perceptions with suggestions of decreased efficiency and disrupted workflow whereas quantitative studies have consistently shown that the impact is negligible? We speculate that this discordance may be due in part to the oversimplified workflow quantifier used in the previous T&M studies: ‘average aggregated clinician time.’ While this ‘time expenditures’ measure can generate valuable insights into whether HIT adoption may cause a redistribution of clinician time spent in various clinical activities (eg, direct patient care vs documentation or ordering), it is not capable of uncovering the temporal dynamics embedded in workflow. In other words, this measure is useful for studying clinicians' ‘time utilization’ but not the ‘flow of the work.’

As defined in the workflow literature, a workflow process refers to ‘a predefined set of work steps, and partial ordering of these steps,’11 and workflow refers to ‘systems that help organizations to specify, execute, monitor, and coordinate flow of the work cases within a distributed office environment.’11 Inspired by this view, we developed a set of new analytical methods to quantify HIT's impact on workflow from the true ‘flow of the work’ perspective, through the lens of the sequential ordering among different clinical tasks.

Background

Despite the great potential,12–19 deployment of HIT applications such as EHR and CPOE may not always lead to desirable outcomes.20–25 Further, a significant body of literature has shown that adoption of HIT is often associated with unintended adverse consequences (UACs), which could result in diminished quality of care and escalated risks to patient safety.3 4 26 27 Among the UACs reported to date, ‘more/new work’ and ‘unfavorable workflow change’ are most common and most disruptive.3–5 They are generally attributable to problematic human-machine interfaces,27 overly simplistic workflow models,1 and other unfavorable implementation characteristics, such as inconvenient locations of computer workstations,26 disrupted power structures among clinicians,28 and unexpected changes introduced to the patterns of team coordination.29

While these UAC studies have produced detailed user accounts regarding encountered problems and suspected causes, most of them are qualitative investigations soliciting end-users' self-reported perceptions of how HIT adoption may have influenced their work. Therefore, they are not adequate to quantify the impact to assess its magnitude and prevalence. Time and motion, which collects scrupulous details of how clinicians spend their time performing each of the clinical tasks (what, when, for how long), is a useful approach for quantifying workflow to inspect for pre-post nuances. As compared to other quantitative workflow assessment methods, such as work sampling and time efficiency questionnaires, T&M has been shown to yield the most accurate results.30–32

We reviewed prior T&M studies that have investigated the workflow impact introduced by implementing HIT applications such as EHR, CPOE, and ambulatory care e-prescribing modules. With a few exceptions, the results of this stream of work suggest a clear time divide: studies published before 2001 have generally reported that HIT implementations were associated with an increase in clinician time,33–36 whereas studies published after 2001 have consistently shown that HIT implementations do not adversely affect clinicians' time utilization in significant ways.6–10 For example, ‘little extra time, if any, was required for physicians to use (the POE system);’6 ‘(the EHR system) does not require more time than a paper-based system during a primary care session;’8 ‘(implementation of ambulatory e-prescribing) was not associated with an increase in combined computer and writing time;’9 and ‘following EHR implementation, the average adjusted total time spent per patient across all specialties increased slightly but not significantly.’10

The discordance between the qualitative findings and the quantitative results is thus evident. This discordance, in fact, has already been noted in some of the T&M studies. For example, Pizziferri et al8 surveyed the physicians of the study practice where their T&M data were collected. They found that a majority of the survey respondents (71%) reported perceptions of increased time spent on patient documentation contradicting the fact that no significant differences were indicated in the T&M data.

What may account for this discordance? We speculate that the previous T&M studies have been overly focused on evaluating whether HIT adoption may affect how clinicians allocate their time among different clinical activities. This ‘time expenditures’ focus neglects that HIT's impact on clinical workflow may also originate from the changed sequence of task execution—that is, disruption to the flow of the work. In this paper, we present a set of new analytical methods consisting of workflow fragmentation assessments, pattern recognition, and data visualization, which are accordingly designed to uncover hidden regularities embedded in clinical workflow.

Method description

Workflow fragmentation assessments

First, we propose a new workflow quantifier, average continuous time (ACT), to assess the magnitude of workflow fragmentation. Average continuous time is herein defined as the average amount of time continuously spent on performing a single clinical activity (or similar activities belonging to a single category or theme; see the study design and empirical setting section for category and theme definitions). Workflow fragmentation, also referred to as frequency of task switching, is defined as the rate at which clinicians switch between tasks. The shorter the continuous time spent on performing a single task, the higher the frequency of task switching. Note that ‘activity’ and ‘task’ are used interchangeably in this paper unless otherwise specified.
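As an illustration of the definition above, the following minimal Python sketch computes ACT from time-stamped records. The record layout `(label, start_seconds, end_seconds)`, the function names, and the decision to merge back-to-back records that share a label into one continuous run are assumptions made for this example; they are not taken from the CWAT implementation.

```python
from itertools import groupby

def continuous_runs(session):
    """Durations (seconds) of uninterrupted runs of the same label.

    `session` is a chronologically ordered list of
    (label, start_seconds, end_seconds) tuples (assumed layout).
    """
    runs = []
    for _label, group in groupby(session, key=lambda rec: rec[0]):
        records = list(group)
        # A run lasts from the first record's start to the last record's end.
        runs.append(records[-1][2] - records[0][1])
    return runs

def average_continuous_time(sessions):
    """Pool the runs of all sessions and return their mean duration."""
    pooled = [d for session in sessions for d in continuous_runs(session)]
    return sum(pooled) / len(pooled) if pooled else 0.0

example_session = [
    ("talking/rounding", 0, 120),
    ("talking/rounding", 120, 200),  # same label: merged with the previous record
    ("computer-read", 200, 260),
    ("talking/rounding", 260, 400),
]
act = average_continuous_time([example_session])
print(f"ACT = {act:.1f} seconds")
```

Passing category or theme labels instead of activity labels yields ACT at the corresponding level of specificity, because runs are delimited only by a change of label.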

This new quantifier, and the magnitude of workflow fragmentation it measures, is potentially important in several ways. First, it has been shown in the cognition literature that frequent task switching is often associated with additional mental burden on the performer (eg, task prioritizing and task activation).37–40 Second, in a clinical setting, frequent task switching may cause increased amounts of physical activities (eg, locating a nearby computer workstation) and, hence, more frequent interruptions. Third, switching between tasks that are of distinct natures could result in a higher likelihood of cognitive slips and mistakes; for example, the loss-of-activation error manifesting as forgetting what the preceding task was about in a task execution sequence.41 42 Therefore, using this new quantifier to study workflow disruption may provide insights as to why HIT users may perceive decreased efficiency and disrupted workflow even though the total amount of clinical time and its distribution among different tasks are not significantly affected.

Recognition of workflow patterns

We define workflow patterns as hidden regularities embedded in the sequential order of a series of clinical task execution. Workflow patterns are collectively determined by multiple factors, such as individual physicians' practice styles, regulatory requirements, team coordination needs, and even the physical layout of a medical facility. As a result, workflow patterns are sensitive to any new changes introduced into the environment such as adoption of novel HIT systems.

To uncover workflow patterns from time-stamped T&M data, we use two pattern recognition techniques: consecutive sequential pattern analysis (CSPA) and transition probability analysis (TPA). The CSPA searches for workflow segments that reoccur frequently both within and across observations—referred to as consecutive sequential patterns.43 Each consecutive sequential pattern is composed of a sequence of clinical activities carried out one after another in a given sequential order. Further, we define the support for a consecutive sequential pattern as its hourly occurrence rate. For example, if the sequence ‘talking/rounding’ → ‘paper—writing’ → ‘talking/rounding’ appears twice per hour in workflow data on average, we note that this is a plausible pattern with a support of 2.
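The support computation can be sketched as follows. This is a simplified illustration, not the published algorithm: it assumes each session has already been reduced to an ordered list of category labels, counts overlapping occurrences of a pattern, and takes the total observation time as a separate argument. The function and parameter names are hypothetical.

```python
from collections import Counter

def consecutive_patterns(sessions, total_hours, length=2, min_support=1.0):
    """Return {pattern: support} for consecutive patterns of a given length.

    Support is the hourly occurrence rate: occurrences across all sessions
    divided by the total observed hours.
    """
    counts = Counter()
    for seq in sessions:
        # Slide a window of `length` tasks over the session.
        for i in range(len(seq) - length + 1):
            counts[tuple(seq[i:i + length])] += 1
    supports = {pattern: n / total_hours for pattern, n in counts.items()}
    return {p: s for p, s in supports.items() if s >= min_support}

example_sessions = [[
    "talking/rounding", "paper-writing", "talking/rounding",
    "walking/moving", "talking/rounding", "paper-writing",
    "talking/rounding",
]]
patterns = consecutive_patterns(example_sessions, total_hours=2.0, length=3)
print(patterns)
```

In this toy input the 3-length pattern talking/rounding → paper-writing → talking/rounding occurs twice in two observed hours, so it is the only pattern that reaches the support threshold of 1.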

The TPA, on the other hand, computes the probabilities of transitioning among pairs of tasks. The transition probabilities can be estimated using the maximum-likelihood estimation method based on empirical data. For example, the transition probability of ‘talking/rounding’ → ‘computer—writing’ is calculated as the number of times that this transition is observed in the field, divided by the total number of transitions observed originating from ‘talking/rounding.’ As compared to CSPA, the results of the TPA analysis provide an overall probabilistic view of the sequential relations among different clinical tasks.
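The maximum-likelihood estimate described above amounts to dividing each observed transition count by the total number of transitions leaving the same source task. A minimal sketch, assuming each session is an ordered list of category labels (the function name and input layout are illustrative):

```python
from collections import Counter, defaultdict

def transition_probabilities(sessions):
    """Estimate first-order transition probabilities from label sequences.

    P(dst | src) = count(src -> dst) / count(src -> anything)
    """
    counts = defaultdict(Counter)
    for seq in sessions:
        # Pair each task with the task that immediately follows it.
        for src, dst in zip(seq, seq[1:]):
            counts[src][dst] += 1
    return {
        src: {dst: n / sum(row.values()) for dst, n in row.items()}
        for src, row in counts.items()
    }

example_sessions = [[
    "talking/rounding", "computer-writing", "talking/rounding",
    "paper-writing", "talking/rounding", "computer-writing",
]]
probs = transition_probabilities(example_sessions)
print(probs["talking/rounding"])
```

Here three transitions originate from talking/rounding, two of which lead to computer-writing, giving an estimated probability of 2/3. Transitions are counted within sessions only, so the last task of one session is never paired with the first task of the next.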

Note that in this study we did not consider lagged sequential patterns (non-consecutive sequential patterns). Performing a useful lagged sequential pattern analysis requires fine-tuning of multiple parameters; for example, what lag constraints should be set in order to make sure that the resulting patterns are empirically meaningful (eg, whether a pattern A…B that receives sufficient support should be considered even if A and B occur many steps apart) and whether observing a lagged sequential pattern is due to chance (eg, in a randomly generated event sequence of infinite length, any event combinations may be recognized as plausible patterns). Without a priori knowledge of what settings are empirically meaningful, such decisions need to be arbitrarily made yet can have a significant impact on pattern recognition results. Higher-order transition probabilities (predicting the likelihood of observing a clinical activity based on multiple preceding events) were not considered in this study for the same reason.

Data visualization

Data visualization and visual analytics provide a means for transforming large quantities of numeric or textual data into graphical formats to facilitate human exploration and hypothesis generation.44 They have been widely applied in many fields, such as the analysis of gene expressions,45 structure of biomedical databases,46 and, increasingly, temporal relations among patient records.47 48

In this study, we use three visualization techniques to turn complex clinical workflow data into more easily comprehensible and more informative visual representations: (1) A ‘timeline belt’ diagram using distinct colors to delineate the sequential execution of a series of clinical tasks (figure 1). In this representation, the ‘flow of the work’ becomes apparent and the magnitude of workflow fragmentation becomes readily observable. (2) A network plot exhibiting the transition frequencies between pairs of tasks (figure 3). In this visualization, the temporal relations among different activities and the pre-post nuances can be easily told. (3) Heatmap visualizations displaying transition probabilities between different tasks using varied density of colors (figure 4). In these heatmaps, higher transition probabilities and significant pre-post differences can be instantly recognized.

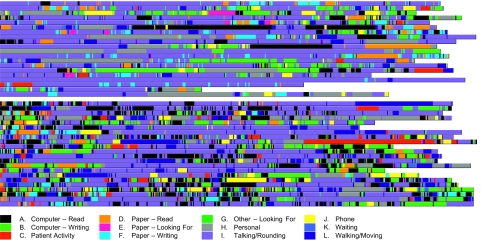

Figure 1.

A ‘timeline belt’ visualization exhibiting workflow fragmentation before and after the computerized provider order entry (CPOE) implementation. Each row (belt) represents a time and motion (T&M) observation session. Colored stripes designate the execution of clinical activities belonging to different task categories. For example, the purple stripes represent ‘talking/rounding’ activities and the black stripes represent ‘computer—read.’ Hence, color transitions indicate cross-category task switches. Length of a colored stripe is proportional to how long the task lasted.

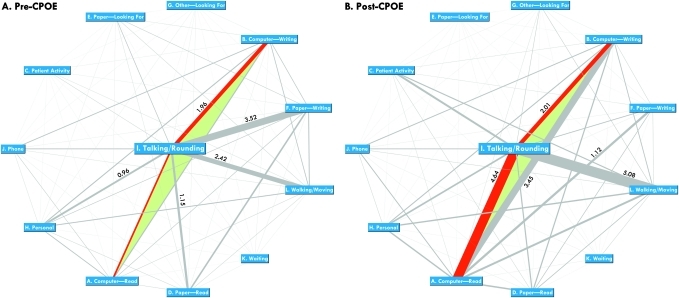

Figure 3.

Network plots exhibiting bidirectional task transition frequencies. Nodes=task categories; edges=transitions between pairs of task categories. Width of an edge is proportional to the transition frequency between the pair (number of bidirectional transitions observed per hour between the two task categories). The edges representing the transitions between ‘talking/rounding’ and ‘computer—read’ or ‘computer—writing’ are highlighted in red because they are most relevant in studying health IT's impact on clinical workflow. In both graphs, numeric labels are provided for the top five most frequent task transitions.

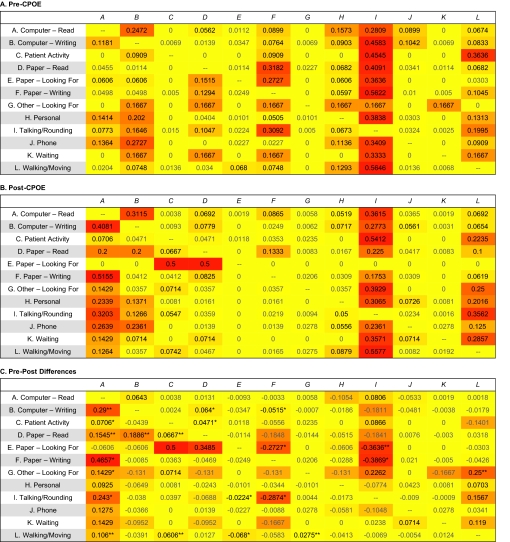

Figure 4.

Heatmaps exhibiting task transition probabilities (*p<0.05, **p<0.01, ***p<0.001; based on Welch's t test). Formula for color determination = red: 255; green: 255−transition probability×500, rounded to integers; blue: 0.
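The color formula in the caption can be written as a small function. The sketch below follows the stated formula; clamping the green channel to the valid 0–255 range is an assumption added here, because the formula as written would yield a negative value for probabilities above 0.51.

```python
def heatmap_rgb(probability):
    """Map a transition probability to an (R, G, B) color.

    Red is fixed at 255 and blue at 0; green falls from 255 (yellow)
    toward 0 (pure red) as the probability rises.
    """
    green = round(255 - probability * 500)
    # Clamp to the valid channel range (assumption, not stated in the caption).
    green = max(0, min(255, green))
    return (255, green, 0)

print(heatmap_rgb(0.0))
print(heatmap_rgb(0.2472))
print(heatmap_rgb(0.8))
```

Under this mapping a probability of 0 is rendered as yellow, and every probability of roughly 0.51 or higher saturates at pure red.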

The visualization algorithms, as well as the other methods presented in this paper, were implemented in an analytical tool called Clinical Workflow Analysis Tool (CWAT)—available online at http://sitemaker.umich.edu/workflow/. This tool was programmed using Microsoft ASP.net and C# 2.0 (Redmond, Washington, USA). The statistical procedures are based on Visual Numerics IMSL C# Numerical Library 2.0 (Houston, Texas, USA). Source code is available upon request.

Empirical validation

Study design and empirical setting

To demonstrate the purpose and the value of these new analytical methods, we conducted an empirical validation study using the T&M approach to assess the workflow impact introduced by a recent CPOE implementation at our institution. The University of Michigan Institutional Review Board reviewed and approved the research protocol.

The study setting is a 16-bed level-1 pediatric intensive care unit (PICU) at the University of Michigan Health System (UMHS). A commercially sold CPOE system (Sunrise Clinical Manager, Eclipsys, Atlanta, Georgia, USA) was deployed in the unit in July 2007. Eight independent observers shadowed a convenience sample of second- and third-year resident physicians rotating through the study unit two months before and six months after the system implementation. These observers were paid medical students and graduate students enrolled in the Programs and Operations Analysis Department at the University of Michigan Hospitals. They were uniformly trained by the last author (DAH) and each conducted a few hours of training observations in the field before the actual study data collection took place.

The observation sessions started 7:30–8:00 am or 11:30–12:00 pm; each lasted approximately three to four hours. The morning and afternoon sessions were roughly equally split. At the beginning of a session, the observer randomly approached a resident physician who was working in the unit at the time. With the resident's consent, the observer started shadowing the subject and recording T&M data using a portable tablet computer equipped with a standard data acquisition tool. Elements of the data captured included date of observation, tasks performed, starting and ending time, and a unique study code assigned to each of the study participants.

The data acquisition tool, initially developed by Overhage et al and subsequently refined by Pizziferri et al,6 8 is recommended by the Agency for Healthcare Research and Quality for collecting T&M data in clinical workflow studies.49 In this tool, the clinical work is characterized as 60 distinct activities, which are further grouped into 12 categories and 6 themes to allow for analysis at different levels of specificity. The 12 categories include: ‘computer—read,’ ‘computer—writing,’ ‘patient activity,’ ‘paper—read,’ ‘paper—looking for,’ ‘paper—writing,’ ‘other—looking for,’ ‘personal,’ ‘talking/rounding,’ ‘phone,’ ‘waiting,’ and ‘walking/moving’. The six themes are: ‘direct patient care,’ ‘indirect patient care—write,’ ‘indirect patient care—read,’ ‘indirect patient care—other,’ ‘administration,’ and ‘miscellaneous.’ In this study, we modified this classification schema slightly to reflect special data acquisition needs in inpatient settings (provided in Appendix 1 of the online supplementary data).

Results from the empirical validation study

Descriptive statistics

The pre-implementation T&M data contain 67.8 hours of observations of a cohort of two second-year and two third-year resident physicians (all female) over 20 clinical sessions. The post-implementation data, consisting of 86.7 hours of observations over 22 clinical sessions, were collected from another cohort of ten second-year and two third-year residents (7 females and 5 males). The residents participating in the pre-implementation data collection were not part of the post-implementation observations (or vice versa) because none of them rotated in the study unit during both study phases. The average patient census did not change significantly in the study unit before and after the implementation (15.3±0.1 pre and 14.6±0.9 post, p=0.13).

The ‘timeline belt’ visualization

Figure 1 depicts the ‘timeline belt’ visualization. Each row (belt) represents an observation session composed of colored stripes designating the execution of clinical activities belonging to different task categories. Length of a colored stripe is proportional to how long the task lasted. Note that several observations were right-truncated to fit the graph for print. Further, all observations are left-aligned regardless of their actual starting time. The online tool provides more alignment options.
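The geometry of one belt can be sketched as below. This is only an illustration of the layout rules described in the text (left alignment, stripe length proportional to duration, right truncation); the record layout, the `seconds_per_pixel` scale, and the `max_pixels` cutoff are hypothetical parameters, and the actual rendering in CWAT may differ.

```python
def belt_stripes(session, seconds_per_pixel=10.0, max_pixels=None):
    """Return (label, x_offset, width) stripes for one left-aligned belt.

    `session` is a chronologically ordered list of
    (label, start_seconds, end_seconds) tuples (assumed layout).
    """
    if not session:
        return []
    origin = session[0][1]  # left-align: the first task starts at x = 0
    stripes = []
    for label, start, end in session:
        x = (start - origin) / seconds_per_pixel
        width = (end - start) / seconds_per_pixel
        if max_pixels is not None:
            if x >= max_pixels:
                break  # everything further right is truncated
            width = min(width, max_pixels - x)
        stripes.append((label, x, width))
    return stripes

example_session = [
    ("talking/rounding", 1000, 1120),
    ("computer-read", 1120, 1180),
    ("talking/rounding", 1180, 1400),
]
print(belt_stripes(example_session))
print(belt_stripes(example_session, max_pixels=30))
```

Each returned stripe can then be drawn in the color assigned to its task category, so that a color change along the belt marks a cross-category task switch.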

In the ‘timeline belt’ visualization, it can be easily observed that the post-CPOE representation contains more densely populated color transitions each corresponding to a task switch. This suggests that the CPOE implementation might have caused an increased level of workflow fragmentation. We further quantified this visual observation using statistical analysis methods and pattern recognition techniques.

Workflow impact measured as ‘time expenditures’

Before performing the workflow fragmentation analysis, we first applied the traditional ‘average aggregated clinician time’ measure to study how the physician participants allocated their time among different clinical tasks before and after the CPOE implementation. Key findings at the activity level are presented in table 1. A full report of the results is provided in Appendix 2A of the online supplementary data.

Table 1.

Time distribution among clinical activities (%): summary of key findings

| Activity | Pre-CPOE mean | Pre-CPOE SEM | Post-CPOE mean | Post-CPOE SEM | Welch's t | p Value |
|---|---|---|---|---|---|---|
| Computer—read/chart, data, labs** | 2.47 | 0.69 | 6.3 | 0.74 | 3.77 | <0.001 |
| Computer—writing/orders** | 0.03 | 0.03 | 2.69 | 0.53 | 5.04 | <0.001 |
| Paper—read/chart, data, labs* | 1.1 | 0.33 | 1.89 | 0.29 | 1.81 | 0.039 |
| Paper—writing/orders** | 4.21 | 1.06 | 0.11 | 0.06 | −3.88 | <0.001 |
| Writing orders (combining computer- and paper-based ordering activities) | 4.24 | 1.05 | 2.81 | 0.52 | −1.23 | 0.12 |

*p<0.05; **p<0.001. CPOE, computerized provider order entry; SEM, standard error of the mean.

As table 1 shows, the proportion of physician time spent on using computers to read ‘chart, data, labs’ (2.47% pre vs 6.3% post, p<0.001) and ‘write orders’ (0.03% pre vs 2.69% post, p<0.001) significantly increased after the CPOE implementation. These increases were, not surprisingly, compensated by a nearly fortyfold drop in the proportion of time allocated to ‘paper—writing orders’ (4.21% pre vs 0.11% post, p<0.001) as well as decreases in other paper-based activities (reported in Appendix 2A of the online supplementary data). With both computer-based and paper-based ordering activities combined, the proportion of physician time spent on writing orders decreased after the CPOE implementation (4.24% pre vs 2.81% post), although this decrease did not reach statistical significance (p=0.115).
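The Welch's t values in table 1 can be approximately reproduced from the reported means and SEMs alone, since the statistic is the difference in means divided by the square root of the summed squared SEMs. The sketch below also computes the Welch–Satterthwaite degrees of freedom; treating the observation session as the unit of analysis (20 pre and 22 post) is an assumption made for this example, as are the function names.

```python
import math

def welch_t_from_sem(mean_pre, sem_pre, mean_post, sem_post):
    """Welch's t statistic from two group means and their standard errors."""
    return (mean_post - mean_pre) / math.sqrt(sem_pre ** 2 + sem_post ** 2)

def welch_degrees_of_freedom(sem_pre, n_pre, sem_post, n_post):
    """Welch-Satterthwaite approximation expressed in terms of SEMs."""
    numerator = (sem_pre ** 2 + sem_post ** 2) ** 2
    denominator = sem_pre ** 4 / (n_pre - 1) + sem_post ** 4 / (n_post - 1)
    return numerator / denominator

# 'Computer-read/chart, data, labs' row of table 1.
t_value = welch_t_from_sem(2.47, 0.69, 6.3, 0.74)
# Assumed group sizes: 20 pre and 22 post observation sessions.
df = welch_degrees_of_freedom(0.69, 20, 0.74, 22)
print(f"t = {t_value:.2f}, df = {df:.1f}")
```

For this row the sketch gives a t of about 3.79 against the published 3.77; the small gap is plausibly due to rounding of the tabulated means and SEMs.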

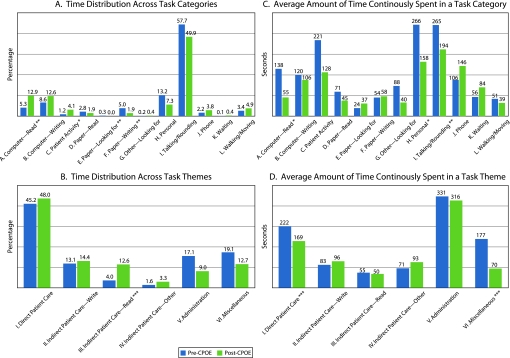

Figure 2A shows the results at the category level. Significant pre-post differences were found in four task categories. First, the proportion of physician time spent on retrieving data at computer terminals more than doubled (‘computer—read’: 5.33% pre vs 12.87% post, p<0.01). Further, significant decreases were found in the proportion of time allocated to paper documentation activities and finding paper forms (‘paper—writing’: 5% pre vs 1.89% post, p<0.01; ‘paper—looking for’: 0.32% pre vs 0.02% post, p<0.01). These changes were natural consequences of the transition from a paper-based operation to computerized order entry. The other significantly affected task category is ‘patient activity.’ After the CPOE implementation, the physicians were able to spend more time interacting with patients (1.18% pre vs 4.05% post, p<0.05). At the theme level (figure 2B), the only significant change was a more than threefold increase in the proportion of physician time spent in ‘indirect patient care—read’ activities (3.99% pre vs 12.59% post, p<0.001). The proportion of time distributed to ‘direct patient care’ was barely affected (45.17% pre vs 48.01% post, p=0.34).

Figure 2.

Pre-post comparison: multiple measures (*p<0.05, **p<0.01, ***p<0.001; based on Welch's t test).

These findings based on the ‘time expenditures’ analysis are consistent with the results of the recent T&M studies.6–10 Therefore, the conclusions are similar—the CPOE implementation was neither associated with an increase in clinician time spent on writing orders nor did it cause a reduction in clinician time allocated to direct patient care activities.

Results of workflow fragmentation assessments

Overall, the average amount of time continuously spent performing a single task significantly decreased from 163 seconds before the CPOE implementation to 107 seconds after the implementation (p<0.001). Figures 2C, D display the workflow fragmentation analysis results at the category and the theme level, respectively.

Among the 12 task categories, significant decreases in ACT were found in three categories: ‘computer—read’ (138 seconds pre vs 55 seconds post, p<0.05), ‘personal’ (266 seconds pre vs 158 seconds post, p<0.05), and ‘talking/rounding’ (265 seconds pre vs 194 seconds post, p<0.01). The largest relative decrease occurred in the ‘computer—read’ category where average task duration dropped more than 60%. Similarly, significantly shorter durations were observed on conducting tasks related to the ‘direct patient care’ theme (222 seconds pre vs 169 seconds post, p=0.001) as well as ‘miscellaneous’ (177 seconds pre vs 70 seconds post, p=0.001). These findings confirm the visual observation of the ‘timeline belt’ diagram that the post-CPOE clinical workflow had become more fragmented.

Results of workflow pattern recognition

Table 2 reports the consecutive sequential patterns uncovered that received a support of 1 or above. Five CSPA patterns were identified in the pre-implementation phase of the study and eleven in post-implementation. For example, after the CPOE system was implemented, the hourly rates of observing the transitions ‘talking/rounding’ → ‘walking/moving’ (1.19 pre vs 2.68 post), ‘walking/moving’ → ‘talking/rounding’ (1.24 pre vs 2.39 post), ‘talking/rounding’ → ‘computer—read’ (<1 pre vs 2.41 post), and ‘computer—read’ → ‘talking/rounding’ (<1 pre vs 2.21 post) increased nearly or more than twofold. These prominent transitions were all centered on the ‘talking/rounding’ activity (either from or to), which is the most essential clinical process in inpatient settings.50

Table 2.

Consecutive sequential patterns discovered

| Pattern | Support |
|---|---|
| **A. 2-Length sequential pattern, pre-CPOE** | |
| Talking/rounding → paper—writing | 1.84 |
| Paper—writing → talking/rounding | 1.68 |
| Walking/moving → talking/rounding | 1.23 |
| Talking/rounding → walking/moving | 1.19 |
| **B. 3-Length sequential pattern, pre-CPOE** | |
| Talking/rounding → paper—writing → talking/rounding | 1.22 |
| **C. 2-Length sequential pattern, post-CPOE** | |
| Talking/rounding → walking/moving | 2.69 |
| Talking/rounding → computer—read | 2.42 |
| Walking/moving → talking/rounding | 2.39 |
| Computer—read → talking/rounding | 2.22 |
| Computer—read → computer—writing | 1.91 |
| Computer—writing → computer—read | 1.54 |
| Computer—writing → talking/rounding | 1.05 |
| **D. 3-Length sequential pattern, post-CPOE** | |
| Talking/rounding → walking/moving → talking/rounding | 1.56 |
| Computer—read → talking/rounding → computer—read | 1.27 |
| Walking/moving → talking/rounding → walking/moving | 1.21 |
| Talking/rounding → computer—read → talking/rounding | 1.05 |

We further plotted the bidirectional transition frequencies as two network graphs (figures 3A, B). The purpose was to exhibit the pre-post differences more effectively. In both graphs, the network nodes represent task categories; width of an edge is proportional to the bidirectional transition frequency (hourly occurrence rate) between the two task categories. Further, the network nodes are distributed using a circular layout and the ‘talking/rounding’ activity is placed in the middle because of its central role.
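The edge weights of such a network can be derived by pooling the two directions of each transition and dividing by the observed hours. A minimal sketch, assuming each session is an ordered list of category labels and using an unordered pair as the edge key (names are illustrative, not taken from CWAT or GUESS):

```python
from collections import Counter

def bidirectional_rates(sessions, total_hours):
    """Hourly rate of transitions between each unordered pair of categories."""
    counts = Counter()
    for seq in sessions:
        for a, b in zip(seq, seq[1:]):
            if a != b:  # a repeated label is not a cross-category switch
                counts[frozenset((a, b))] += 1
    return {pair: n / total_hours for pair, n in counts.items()}

example_sessions = [[
    "talking/rounding", "walking/moving", "talking/rounding",
    "computer-read", "talking/rounding",
]]
rates = bidirectional_rates(example_sessions, total_hours=2.0)
for pair, rate in rates.items():
    print(sorted(pair), rate)
```

Each resulting rate can be used directly as the width of the edge joining the two categories, so a thicker edge indicates a more frequent back-and-forth between the pair.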

By contrasting figures 3A and 3B, representing the pre-CPOE and post-CPOE clinical environment, respectively, it can be easily observed that several task transitions had become much more frequent (thicker edges) after the CPOE system was implemented. These include ‘talking/rounding’ ↔ ‘computer—read,’ ‘talking/rounding’ ↔ ‘computer—writing,’ and ‘talking/rounding’ ↔ ‘walking/moving,’ as well as between ‘computer—read’ and ‘computer—writing.’

The network plots shown in figure 3 were produced using GUESS (v0.5-alpha), an open-source graph exploration system (http://graphexploration.cond.org). A full report of the transition frequencies among all pairs of task categories is provided in Appendix 2C of the online supplementary data.

Next, we performed the TPA analysis to compute the transition probabilities between different pairs of clinical tasks. Figure 4 visualizes the results as three heatmaps (pre-CPOE, post-CPOE, and pre-post comparison). On these heatmaps, varied density of color designates transition probabilities estimated based on the empirical data. The probabilities are also reported in each of the cells; for example, the second cell in the first row in figure 4A can be read as ‘before the CPOE implementation, the probability of observing the task context changed from computer—read to computer—writing was 0.2472, out of all possible transitions originating from computer—read.’

In these heatmap representations, it can be easily observed that the CPOE implementation might have recalibrated the probabilities of transitioning between different task pairs. For example, the likelihood of observing the ‘talking/rounding’ → ‘computer—read’ transition significantly increased from 0.077 pre-implementation to 0.32 post-implementation (p<0.05), compensated by a similar level of decrease in the likelihood of observing the transition of ‘talking/rounding’ → ‘paper—writing’ (0.31 pre vs 0.022 post, p<0.05). Depending on the implementation characteristics (eg, where the CPOE workstations were located, whether mobile computing devices were available), these changes may potentially introduce significant disruption to clinical workflow.

Discussion

The limitations of the empirical validation study, such as the small sample size (hence unbalanced pre and post sample characteristics), idiosyncratic features of the CPOE system studied, and unique settings of the PICU site, constrain the power and generalizability of practical inferences that can be drawn. Therefore, the empirical study should only be interpreted as a demonstration of how the new methods presented in this paper may be used in future research to enrich workflow analysis in clinical settings.

The T&M data contain rich time-stamped information that can be used to examine the sequential ordering of distinct tasks in a task execution sequence. Analyzing T&M data from this ‘flow of the work’ perspective makes use of this information to allude to the actual impact HIT adoption may introduce to clinical ‘workflow.’ As shown in the empirical validation study, using the traditional ‘time expenditures’ measure—that is, lumping clinician time spent in different clinical activities to assess whether introduction of HIT may cause a redistribution—does not use this temporal information and, therefore, loses the ‘flow of the work’ insights derivable from T&M data. This fact may account for the discordance discussed earlier between the quantitative results and the qualitative findings. For example, our T&M data collected in the empirical study seem to suggest that the post-CPOE environment contained shorter, more fragmented task execution episodes. The direct consequence is a higher frequency of task switching, which may be associated with more rapid swapping of task rules in clinicians' memory, increased rates of running into distinct task contexts, extra physical activities (eg, locating a nearby computer terminal), and more frequent waiting and idling (eg, waiting for the computer system to respond). Consequently, clinicians may perceive decreased time efficiency and disrupted workflow even though the time utilization analysis does not suggest an adverse impact. To confirm this speculation, we encourage other researchers to consider using the new methods presented in this paper to analyze T&M data collected in future studies as well as those collected in prior efforts. We believe that this exercise may help illuminate the quantitative-qualitative paradox found in the literature.

It must be noted that, although these new methods may help enrich T&M analysis, several limitations intrinsic to the T&M approach (eg, observer bias and difficulty in observing multitasking activities) could undermine the validity or generalizability of T&M-based research findings. In addition to improving the accuracy and consistency of T&M observations, researchers have shown that using automated activity recognition tools, such as radio-frequency identification (RFID) tags, can greatly enhance the quality and efficiency in collecting workflow data.51 Further, quantitative methods such as T&M are not capable of revealing the root causes of HIT workflow impact and whether the impact may exert an actual influence on user satisfaction, time efficiency, clinician performance, and patient outcomes. Additional research is needed to relate HIT-associated clinical workflow changes to these outcome variables. Researchers have demonstrated that some of these facets can be studied using ethnographically based investigations,52 53 questionnaires and interviews,52–55 cognitive engineering56 57 and computer-supported cooperative work (CSCW) approaches,58 or even using physiological devices to directly measure the level of clinicians' brain activities in different task situations.59 60

Conclusion

Recent quantitative studies applying the time and motion approach to assess HIT workflow impact have shown non-significant effects, conflicting with the guarded end-user perceptions reported in qualitative investigations. The workflow measure used in the recent T&M studies, ‘average aggregated clinician time,’ may be a factor accounting for the inconsistency. In this paper, we introduce a set of new analytical methods, consisting of workflow fragmentation assessments, pattern recognition, and data visualization, that are designed to address this limitation. Through an empirical validation study, we show that applying these new methods can enrich workflow analysis and may point to potential workflow deficiencies and corresponding re-engineering insights. We also demonstrate the value of using data visualization techniques to turn complex workflow data into more comprehensible and more informative graphical representations.

Acknowledgments

We would like to thank Mary Duck, John Schumacher, Heidi Reichert, Jackie Aeto, Richard Loomis, Samuel Clark, Barry DeCiccio, Michelle Morris, Amy Shanks, Frank Manion, Anwar Hussain, and the entire PICU team at the University of Michigan Mott Children's Hospital as well as the patients and their families for helping to make this study possible.

Footnotes

Funding: This project was supported in part by Grant # UL1RR024986 from the National Center for Research Resources (NCRR), a component of the National Institutes of Health (NIH), and NIH Roadmap for Medical Research.

Ethics approval: This study was conducted with the approval of the Medical School Institutional Review Board, The University of Michigan.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. Niazkhani Z, Pirnejad H, Berg M, et al. The impact of computerized provider order entry (CPOE) systems on inpatient clinical workflow: a literature review. J Am Med Inform Assoc 2009;16:539–49

- 2. National Research Council. Computational technology for effective health care: immediate steps and strategic directions. Washington, DC: National Academies Press; 2009

- 3. Campbell EM, Sittig DF, Ash JS, et al. Types of unintended consequences related to computerized provider order entry. J Am Med Inform Assoc 2006;13:547–56

- 4. Ash JS, Sittig DF, Poon EG, et al. The extent and importance of unintended consequences related to computerized provider order entry. J Am Med Inform Assoc 2007;14:415–23

- 5. Campbell EM, Guappone KP, Sittig DF, et al. Computerized provider order entry adoption: implications for clinical workflow. J Gen Intern Med 2009;24:21–6

- 6. Overhage JM, Perkins S, Tierney WM, et al. Controlled trial of direct physician order entry: effects on physicians' time utilization in ambulatory primary care internal medicine practices. J Am Med Inform Assoc 2001;8:361–71

- 7. Wong DH, Gallegos Y, Weinger MB, et al. Changes in intensive care unit nurse task activity after installation of a third-generation intensive care unit information system. Crit Care Med 2003;31:2488–94

- 8. Pizziferri L, Kittler AF, Volk LA, et al. Primary care physician time utilization before and after implementation of an electronic health record: A time-motion study. J Biomed Inform 2005;38:176–88

- 9. Hollingworth W, Devine EB, Hansen RN, et al. The impact of e-Prescribing on prescriber and staff time in ambulatory care clinics: A time motion study. J Am Med Inform Assoc 2007;14:722–30

- 10. Lo HG, Newmark LP, Yoon C, et al. Electronic health records in specialty care: A time-motion study. J Am Med Inform Assoc 2007;14:609–15

- 11. Ellis CA. Workflow technology. In: Beaudouin-Lafon M, ed. Computer supported cooperative work. Chichester: John Wiley & Sons, 1999:29–54

- 12. Bates DW, Leape LL, Cullen DJ, et al. Effect of computerized physician order entry and a team intervention on prevention of serious medication errors. JAMA 1998;280:1311–16

- 13. Mekhjian HS, Kumar RR, Kuehn L, et al. Immediate benefits realized following implementation of physician order entry at an academic medical center. J Am Med Inform Assoc 2002;9:529–39

- 14. Kaushal R, Jha AK, Franz C, et al. Return on investment for a computerized physician order entry system. J Am Med Inform Assoc 2006;13:261–6

- 15. Holdsworth MT, Fichtl RE, Raisch DW, et al. Impact of computerized prescriber order entry on the incidence of adverse drug events in pediatric inpatients. Pediatrics 2007;120:1058–66

- 16. Guss DA, Chan TC, Killeen JP. The impact of a pneumatic tube and computerized physician order management on laboratory turnaround time. Ann Emerg Med 2008;51:181–5

- 17. Shamliyan TA, Duval S, Du J, et al. Just what the doctor ordered. Review of the evidence of the impact of computerized physician order entry system on medication errors. Health Serv Res 2008;43:32–53

- 18. Taylor JA, Loan LA, Kamara J, et al. Medication administration variances before and after implementation of computerized physician order entry in a neonatal intensive care unit. Pediatrics 2008;121:123–8

- 19. Amarasingham R, Plantinga L, Diener-West M, et al. Clinical information technologies and inpatient outcomes: A multiple hospital study. Arch Intern Med 2009;169:108–14

- 20. Ali NA, Mekhjian HS, Kuehn PL, et al. Specificity of computerized physician order entry has a significant effect on the efficiency of workflow for critically ill patients. Crit Care Med 2005;33:110–14

- 21. Chaudhry B, Wang J, Wu S, et al. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med 2006;144:742–52

- 22. Ammenwerth E, Talmon J, Ash JS, et al. Impact of CPOE on mortality rates—contradictory findings, important messages. Methods Inf Med 2006;45:586–93

- 23. Bonnabry P, Despont-Gros C, Grauser D, et al. A risk analysis method to evaluate the impact of a computerized provider order entry system on patient safety. J Am Med Inform Assoc 2008;15:453–60

- 24. Ammenwerth E, Schnell-Inderst P, Machan C, et al. The effect of electronic prescribing on medication errors and adverse drug events: a systematic review. J Am Med Inform Assoc 2008;15:585–600

- 25. Walsh KE, Landrigan CP, Adams WG, et al. Effect of computer order entry on prevention of serious medication errors in hospitalized children. Pediatrics 2008;121:e421–7

- 26. Han YY, Carcillo JA, Venkataraman ST, et al. Unexpected increased mortality after implementation of a commercially sold computerized physician order entry system. Pediatrics 2005;116:1506–12

- 27. Koppel R, Metlay JP, Cohen A, et al. Role of computerized physician order entry systems in facilitating medication errors. JAMA 2005;293:1197–203

- 28. Ash JS, Sittig DF, Campbell E, et al. An unintended consequence of CPOE implementation: Shifts in power, control, and autonomy. AMIA Annu Symp Proc 2006:11–15

- 29. Cheng CH, Goldstein MK, Geller E, et al. The effects of CPOE on ICU workflow: an observational study. AMIA Annu Symp Proc 2003:150–4

- 30. Finkler SA, Knickman JR, Hendrickson G, et al. A comparison of work-sampling and time-and-motion techniques for studies in health services research. Health Serv Res 1993;28:577–97

- 31. Burke T, McKee J, Wilson H, et al. A comparison of time-and-motion and self-reporting methods of work measurement. J Nurs Adm 2000;30:118–25

- 32. Ampt A, Westbrook J, Creswick N, et al. A comparison of self-reported and observational work sampling techniques for measuring time in nursing tasks. J Health Serv Res Policy 2007;12:18–24

- 33. Bates DW, Boyle DL, Teich JM. Impact of computerized physician order entry on physician time. Proc Annu Symp Comput Appl Med Care 1994:996

- 34. Tierney WM, Miller ME, Overhage JM, et al. Physician inpatient order writing on microcomputer workstations. Effects on resource utilization. JAMA 1993;269:379–83

- 35. Evans KD, Benham SW, Garrard CS. A comparison of handwritten and computer-assisted prescriptions in an intensive care unit. Crit Care 1998;2:73–8

- 36. Shu K, Boyle D, Spurr C, et al. Comparison of time spent writing orders on paper with computerized physician order entry. Stud Health Technol Inform 2001;84:1207–11

- 37. Kirmeyer SL. Coping with competing demands: interruption and the type A pattern. J Appl Psychol 1988;73:621–9

- 38. Afterword RJ. In: Bogner MS, ed. Human error in medicine. Hillsdale, NJ: Lawrence Erlbaum Associates, 1994:385–93

- 39. Weingart SN. House officer education and organizational obstacles to quality improvement. Jt Comm J Qual Improv 1996;22:640–6

- 40. Edwards MB, Gronlund SD. Task interruption and its effects on memory. Memory 1998;6:665–87

- 41. Norman DA. The psychology of everyday things. New York: Basic Books, 1988

- 42. Reason J. Human error. Cambridge, UK: Cambridge University Press, 1992

- 43. Zheng K, Padman R, Johnson MP, et al. An interface-driven analysis of user interactions with an electronic health records system. J Am Med Inform Assoc 2009;16:228–37

- 44. Card SK, Mackinlay JD, Shneiderman B. Readings in information visualization: using vision to think. San Francisco, CA: Morgan Kaufmann Publishers, 1999

- 45. Chesler EJ, Lu L, Shou S, et al. Complex trait analysis of gene expression uncovers polygenic and pleiotropic networks that modulate nervous system function. Nat Genet 2005;37:233–42

- 46. Mudunuri U, Stephens R, Bruining D, et al. botXminer: Mining biomedical literature with a new web-based application. Nucleic Acids Res 2006;34:W748–52

- 47. Bui AA, Aberle DR, Kangarloo H. TimeLine: Visualizing integrated patient records. IEEE Trans Inf Technol Biomed 2007;11:462–73

- 48. Plaisant C, Lam SJ, Shneiderman B, et al. Searching electronic health records for temporal patterns in patient histories: a case study with Microsoft Amalga. AMIA Annu Symp Proc 2008:601–5

- 49. Time and motion study tool: ambulatory practice (TMS-AP), http://healthit.ahrq.gov/portal/server.pt/gateway/PTARGS_0_1248_216071_0_0_18/AHRQ%20NRC%20Time-Motion%20Study%20Tool%20Guide.pdf (accessed 10 Jun 2009).

- 50. Kirkpatrick JN, Nash K, Duffy TP. Well rounded. Arch Intern Med 2005;165:613–16

- 51. Vankipuram M, Kahol K, Cohen T, et al. Visualization and analysis of activities in critical care environments. AMIA Annu Symp Proc 2009:662–6

- 52. Johnson CD, Zeiger RF, Das AK, et al. Task analysis of writing hospital admission orders: Evidence of a problem-based approach. AMIA Annu Symp Proc 2006:389–93

- 53. Georgiou A, Westbrook J, Braithwaite J, et al. When requests become orders—a formative investigation into the impact of a computerized physician order entry system on a pathology laboratory service. Int J Med Inform 2007;76:583–91

- 54. Aarts J, Ash J, Berg M. Extending the understanding of computerized physician order entry: Implications for professional collaboration, workflow and quality of care. Int J Med Inform 2007;76(Suppl 1):S4–13

- 55. Weir CR, Nebeker JJ, Hicken BL, et al. A cognitive task analysis of information management strategies in a computerized provider order entry environment. J Am Med Inform Assoc 2007;14:65–75

- 56. Patel VL, Kaufman DR. Medical informatics and the science of cognition. J Am Med Inform Assoc 1998;5:493–502

- 57. Kushniruk AW, Patel VL. Cognitive and usability engineering methods for the evaluation of clinical information systems. J Biomed Inform 2004;37:56–76

- 58. Pratt W, Reddy MC, McDonald DW, et al. Incorporating ideas from computer-supported cooperative work. J Biomed Inform 2004;37:128–37

- 59. Wilson GF, Eggemeier FT. Psychophysiological assessment of workload in multi-task environments. In: Damos DL, ed. Multiple-task performance. London: Taylor & Francis, 1991:329–60

- 60. Prinze LJ, Parasuraman R, Freeman FG, et al. Three experiments examining the use of electroencephalogram, event-related potentials, and heart-rate variability for real-time human-centered adaptive automation design. Hanover, MD: NASA Center for AeroSpace Information (CASI), 2003
