Abstract

Introductory biology courses are widely criticized for overemphasizing details and rote memorization of facts. Data to support such claims, however, are surprisingly scarce. To determine whether this claim is supported by evidence, we quantified the cognitive level of learning targeted by faculty in introductory-level biology courses. We used Bloom's Taxonomy of Educational Objectives to assign cognitive learning levels to course goals as articulated on syllabi and to individual items on high-stakes assessments (i.e., exams and quizzes). Our investigation revealed the following: 1) assessment items overwhelmingly targeted lower cognitive levels, 2) the cognitive level of articulated course goals was not predictive of the cognitive level of assessment items, and 3) assessments targeted lower cognitive levels regardless of class size or institution type. These results support the claim that introductory biology courses emphasize facts more than higher-order thinking.

INTRODUCTION

Contemporary biology has experienced a paradigm shift away from linear, reductionist thinking toward the study of complex, interconnected systems (Woese, 2004; Goldenfeld and Woese, 2007). Research in the basic life sciences requires quantitative methodologies and the integration of knowledge across scales of time and space. This changing landscape has raised expectations for students majoring in the life sciences. Practitioners in both academia and industry place a premium on students who not only know biology but also are skilled in communication, critical thinking, and problem solving (National Science Foundation [NSF], 1996; National Academy of Sciences, National Academy of Engineering, and Institute of Medicine, 2007; Association of American Medical Colleges and Howard Hughes Medical Institute, 2009). Indeed, the particular relevance of biological science to society merits attention to these skill sets for all students, regardless of discipline (National Research Council [NRC], 2002, 2003).

Undergraduate biology courses are widely criticized for overemphasizing details and rote memorization of facts, especially at the introductory level (American Association for the Advancement of Science [AAAS], 1989; Bransford et al., 1999). “Large-enrollment, fact-oriented, instructor-centered, lecture-based biology courses” (Wood, 2009b) persist at the expense of helping students develop higher-level cognitive skills. But on what evidence do such claims rest?

Critics of undergraduate biology education often blame standardized tests, such as the Medical College Admission Test, the Graduate Record Examination, and the Advanced Placement (AP) exam, for promoting the assessment of factual minutiae in introductory courses (NRC, 2002; Wood, 2002; NRC, 2003). Yet a recent analysis of the cognitive levels targeted by these exams (Zheng et al., 2008) debunks this claim, finding that the standardized exams routinely include items at higher cognitive levels. Furthermore, revisions of the AP courses and associated assessments are expected to shift the emphasis in biology from memorization of facts to understanding big ideas through the application of science practices such as quantitative reasoning, analyzing data, and evaluating evidence (Mervis, 2009; Wood, 2009b).

All of these criticisms of undergraduate biology lack support from rigorous studies of the assessments actually used in undergraduate biology courses. Faculty often acknowledge the importance of students gaining higher-level cognitive skills, such as using models, integrating knowledge, and transferring concepts to novel problems. However, the only available data describing the cognitive skills actually taught and assessed in introductory biology are institution-specific (Fuller, 1997; Evans, 1999).

Our review of the literature revealed no national-level baseline data describing the cognitive skills assessed and taught in undergraduate biology courses. Recent research (Zheng et al., 2008) calls for comprehensive studies of this kind, and our study is one effort to fill that gap. Here, we present an analysis of course goals and assessments from a national sample of universities and colleges to address three questions. First, what mean cognitive level do faculty routinely target in introductory undergraduate biology, as evidenced by course syllabi and assessments? Second, are faculty's course goals aligned with their assessments, such that the assessments measure the degree to which students achieve the stated goals? Third, what factors (class size, institution type, or articulating objectives on the course syllabus) predict the cognitive levels of assessment items used on exams?

Bloom's Taxonomy

We used Bloom's (1956) Taxonomy of Educational Objectives: The Classification of Educational Goals, Handbook I, Cognitive Domain (hereafter, Bloom's taxonomy) to classify the cognitive skills targeted by introductory biology courses. Created as a tool to facilitate communication among assessment experts, Bloom's taxonomy enables the classification of assessment items into one of six cognitive skill levels (knowledge, comprehension, application, analysis, synthesis, and evaluation), which represent a continuum from simple to complex cognitive tasks and from concrete to abstract thinking (Krathwohl, 2002). These levels are organized into a hierarchy in which higher levels encompass the preceding ones; an analysis-level question, for example, requires mastery of application, comprehension, and knowledge. Research has confirmed the hierarchical nature of the first four levels, but strong support for a strict ordering of synthesis and evaluation is currently lacking; thus, these two levels are often combined (Kreitzer and Madaus, 1994).
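As a minimal sketch of the ordinal coding this hierarchy implies, the six levels can be encoded as the integers 1 through 6, with an optional step that merges synthesis and evaluation into a single code. This is an illustration only, not a tool used in the study; the names `BloomLevel`, `prerequisite_levels`, and `collapse_top_levels` are hypothetical.

```python
from enum import IntEnum


class BloomLevel(IntEnum):
    """Ordinal codes for the six levels of Bloom's (1956) cognitive domain."""
    KNOWLEDGE = 1
    COMPREHENSION = 2
    APPLICATION = 3
    ANALYSIS = 4
    SYNTHESIS = 5
    EVALUATION = 6


def prerequisite_levels(level: BloomLevel) -> list[BloomLevel]:
    """Levels assumed to be mastered before `level` under a strict hierarchy."""
    return [lvl for lvl in BloomLevel if lvl < level]


def collapse_top_levels(level: BloomLevel) -> int:
    """Merge synthesis and evaluation into one code (5), because their
    relative ordering is not well supported empirically."""
    return min(int(level), int(BloomLevel.SYNTHESIS))


print([lvl.name for lvl in prerequisite_levels(BloomLevel.ANALYSIS)])
# ['KNOWLEDGE', 'COMPREHENSION', 'APPLICATION']
print(collapse_top_levels(BloomLevel.EVALUATION))  # 5
```

Using an integer-valued enumeration keeps the ordering explicit, so comparisons such as "at or above application" reduce to ordinary integer comparisons.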

In the more than 50 years since its publication, Bloom's taxonomy has become widely cited and influential in K–16 education (Anderson et al., 2001) as a useful tool for identifying the cognitive processing levels of objectives and assessments, irrespective of assessment type (e.g., multiple choice or open-ended response). Although many in education consider it overly simplistic (Furst, 1981), Bloom's taxonomy is an approachable tool that scientists can readily use (Crowe et al., 2008). In this study, we use Bloom's taxonomy to categorize the cognitive processing levels targeted by learning objectives and assessments. Others have used Bloom's taxonomy in this way (Fuller, 1997; Evans, 1999; Zheng et al., 2008), and state departments of education use cognitive taxonomies such as Bloom's in their assessment programs as part of the alignment process (La Marca et al., 2000; Bhola et al., 2003; Martone and Sireci, 2009).

METHODS

Data Sources

Course materials analyzed in this study were voluntarily submitted by faculty who had completed one of two nationally recognized professional development workshops: the Faculty Institutes for Reforming Science Teaching (FIRST II, 2009) and the Summer Institutes on Undergraduate Education in Biology (SI; Pfund et al., 2009). After each workshop, participants were contacted by email and invited to participate in this study. Faculty who volunteered gave permission for the analysis of all submitted course materials (i.e., assessments and syllabi).

Both FIRST and SI sought to engage faculty in the process of scientific teaching by providing a theoretical foundation in how people learn and opportunities to design and revise instructional units. Faculty worked in collaborative teams to apply the principles of backward design (Wiggins and McTighe, 2005) and Bloom's taxonomy (Bloom, 1956) to develop learning objectives, assessments, and instructional activities. Workshop participants peer reviewed the completed modules, which were then revised.

The teaching experience of our study participants ranged from 3 to 36 years, with an average of 13 years, and their appointments ranged from lecturer to full professor. These faculty represent a broad range of public and private institutions, including Associate's colleges, Baccalaureate institutions, Master's colleges and universities, and Doctoral universities (Table 1). Because SI recruited faculty exclusively from research-intensive and research-extensive universities, more than half of the courses analyzed for this study (43 of 77) were taught at Doctoral institutions.

Table 1.

Courses, assessments, and instructors by institution type

| Institution typeᵃ | No. institutions | No. coursesᵇ | No. instructorsᶜ | No. assessments | No. items | Weighted mean Bloom's level (±SEM)ᵈ |
|---|---|---|---|---|---|---|
| Associate | 5 | 11 | 8 | 39 | 923 | 1.53 ± 0.09 |
| Baccalaureate | 4 | 12 | 7 | 26 | 601 | 1.95 ± 0.10* |
| Master's | 9 | 11 | 9 | 25 | 883 | 1.43 ± 0.05 |
| Doctoral | 26 | 43 | 26 | 289 | 7306 | 1.38 ± 0.02 |
| Total | 44 | 77 | 50 | 379 | 9713 | 1.45 ± 0.02 |

ᵃ We used the 2000 Carnegie Classification to group institutions into four broad types.

ᵇ Several faculty taught multiple iterations of the same course. We include all iterations of a course in our analysis.

ᶜ Several faculty submitted materials for the same course taught at two different times. In addition, several faculty submitted materials from two distinct courses (e.g., Introduction to Biology and Introduction to Plant Biology). For these reasons, the number of courses exceeds the number of instructors.

ᵈ The asterisk indicates a significantly higher weighted mean Bloom's level (Kruskal–Wallis one-way analysis of variance followed by post hoc pairwise Wilcoxon tests; p < 0.05).

Collectively, 50 faculty taught 77 introductory biology courses (defined here as 100- and 200-level courses and their equivalents) over two years. Based on course titles, we classified more than half of the courses as general biology (Table 2); the remaining courses represented a broad range of biological disciplines, from environmental science to cell and molecular biology. The mean class size was 192 students, ranging from 14 students in the smallest course to nearly 500 students in the largest.

Table 2.

Course demographics

| Course typeᵃ | Proportion of courses | Mean class size (±SEM) | No. courses reporting class size |
|---|---|---|---|
| Cell and molecular biology | 11.7% | 144 ± 37 | 9 |
| Environmental science | 10.4% | 70 ± 15 | 6 |
| Ecology | 5.2% | n.d. | 0 |
| General biology | 55.8% | 253 ± 20 | 34 |
| Genetics | 3.9% | 70 ± 10 | 3 |
| Otherᵇ | 13.0% | 81 ± 37 | 5 |

ᵃ Courses were categorized based on course title.

ᵇ Courses categorized as “Other” included plant biology, microbiology, and zoology.

n.d., no data (no ecology course reported a class size).
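As a quick arithmetic check, the overall mean class size of 192 students can be recovered from Table 2 by weighting each course type's mean class size by the number of courses that reported a size. This sketch assumes the overall mean was computed over those reporting courses only; because the subgroup means in the table are themselves rounded, agreement is expected only to the nearest student.

```python
# Rows from Table 2: (course type, mean class size, no. courses reporting size).
# Ecology is omitted because no ecology course reported a class size (n.d.).
table2 = [
    ("Cell and molecular biology", 144, 9),
    ("Environmental science", 70, 6),
    ("General biology", 253, 34),
    ("Genetics", 70, 3),
    ("Other", 81, 5),
]

# Pooled mean = sum(mean_j * n_j) / sum(n_j) over course types j.
n_reporting = sum(n for _, _, n in table2)
total_students = sum(mean * n for _, mean, n in table2)
pooled_mean = total_students / n_reporting

print(n_reporting)            # 57
print(round(pooled_mean, 1))  # 191.8
```

The 57 reporting courses also match the sample size of the class-size correlation reported in the Results.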

Data Collection

All data (syllabi and assessments) were collected from faculty within four semesters of their participation in the professional development program. We focused on two types of data: high-stakes course assessments (i.e., quizzes and exams), which accounted for a large proportion (60–80%) of the course grade, and course goals as described in course syllabi. These data provide evidence of what faculty consider important in their courses: goals stated in syllabi reflect faculty priorities about what students should know and be able to do, and assessments reflect how faculty evaluate students' achievement of those goals (Wiggins and McTighe, 2005). In practice, good course design aligns course goals, assessments, and learning activities through the process of backward design (Fink, 2003; Tanner and Allen, 2004; Wiggins and McTighe, 2005), which supports our decision to use these data to address our questions. Indeed, SI and FIRST participants were explicitly trained in backward design, so we expected them to articulate course learning goals that aligned with their subsequent assessments.

Rating Course Goals and Assessment Items

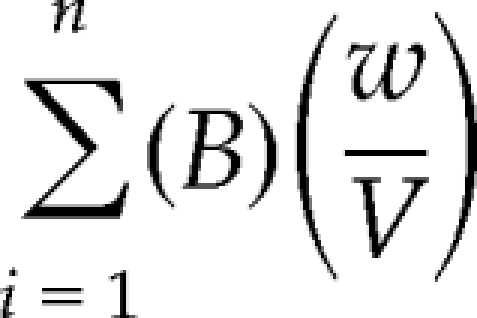

Two scientists independently assigned a Bloom's level (1 through 6, where 1 = knowledge, 2 = comprehension, 3 = application, 4 = analysis, 5 = synthesis, and 6 = evaluation) to each goal and assessment item. Agreement between raters was substantial for both assessment items (Cohen's kappa = 0.64) and course goals (Cohen's kappa = 0.65). For each syllabus goal, we used the simple average of the two raters' ratings. For assessments, we averaged the two raters' ratings for each item and then weighted each item by its point value, because questions written at higher cognitive levels are often worth more points than questions written at the knowledge or comprehension level. We then used these weighted values to compute a single score for each assessment (i.e., exam or quiz). The Bloom's score for an assessment is therefore the point-weighted average of its item ratings, where B is the average Bloom's rating of a particular item, w is the point value of that item, and V is the total points possible on the assessment:

$$\text{Bloom's score} = \sum_{i} \frac{B_i \, w_i}{V}, \qquad V = \sum_{i} w_i$$
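The two computations described above, inter-rater agreement and the point-weighted assessment score, can be sketched as follows. This is an illustration rather than the study's own code; it assumes unweighted Cohen's kappa (the text does not say whether a weighted variant was used) and assumes each item's B is the mean of the two raters' ratings. The function names, ratings, and three-item quiz are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a: list[int], rater_b: list[int]) -> float:
    """Unweighted Cohen's kappa for two raters coding the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must code the same non-empty set of items")
    n = len(rater_a)
    # Proportion of items on which the two raters assigned the same level.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's marginal frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    expected = sum(freq_a[level] * freq_b[level] for level in freq_a) / n**2
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1 - expected)


def assessment_bloom_score(items: list[tuple[int, int, float]]) -> float:
    """Point-weighted Bloom's score for one exam or quiz.

    Each item is (rater_a_level, rater_b_level, points). B is the mean of the
    two ratings, w is the item's point value, and V is the total points.
    """
    total_points = sum(points for _, _, points in items)  # V
    return sum((a + b) / 2 * points for a, b, points in items) / total_points


# Hypothetical ratings for six items and a hypothetical three-item quiz.
print(round(cohens_kappa([1, 1, 2, 2, 3, 3], [1, 1, 2, 3, 3, 3]), 2))  # 0.75
print(assessment_bloom_score([(1, 1, 2), (2, 1, 2), (3, 4, 6)]))  # 2.6
```

In the quiz example, the 6-point item rated 3 and 4 pulls the score up to 2.6, whereas an unweighted mean of the item averages (1, 1.5, and 3.5) would be 2.0; this is the effect of weighting by point value.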

Statistical Analyses

To test the alignment between the mean Bloom's level of the course goals and the weighted mean Bloom's level of the corresponding assessments, we used a one-way intraclass correlation, focusing on the consistency between course goals and assessments; perfect consistency between course goals and assessments would yield a coefficient of 1. To investigate whether class size influences the cognitive level of assessment items on exams, we used a Pearson product-moment correlation. To test for differences in cognitive level among institution types, we used the Kruskal–Wallis one-way analysis of variance, a nonparametric test for comparing groups defined by a categorical variable, and we examined significant differences with post hoc pairwise Wilcoxon tests. Finally, to compare the Bloom's level assessed by courses with and without explicit goals stated on syllabi, we used a Wilcoxon rank sum test, a nonparametric test for comparing two independent groups that does not require equal sample sizes. All statistical analyses were conducted in the R statistical environment (R Development Core Team, 2009).
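To make two of these statistics concrete, the following is a minimal pure-Python sketch, not the R code used in the study. It assumes the one-way random-effects form of the intraclass correlation, ICC(1), since the text specifies only a one-way model, and it computes the Kruskal–Wallis H statistic without the tie correction that standard implementations apply. All function names and example values are hypothetical.

```python
from statistics import mean


def icc_oneway(pairs: list[tuple[float, float]]) -> float:
    """One-way random-effects ICC(1) for n targets each measured k = 2 times
    (e.g., mean goal level and weighted assessment level for each course)."""
    n, k = len(pairs), 2
    if n < 2:
        raise ValueError("need at least two targets")
    grand = mean(x for pair in pairs for x in pair)
    row_means = [mean(pair) for pair in pairs]
    ss_between = k * sum((m - grand) ** 2 for m in row_means)
    ss_within = sum((x - m) ** 2 for pair, m in zip(pairs, row_means) for x in pair)
    ms_between = ss_between / (n - 1)
    ms_within = ss_within / (n * (k - 1))
    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)


def average_ranks(values: list[float]) -> list[float]:
    """1-based ranks, with tied values sharing the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for idx in order[i:j + 1]:
            ranks[idx] = (i + j) / 2 + 1  # mean of 1-based ranks i+1 .. j+1
        i = j + 1
    return ranks


def kruskal_wallis_h(groups: list[list[float]]) -> float:
    """Kruskal-Wallis H (no tie correction); compare with a chi-square
    distribution on len(groups) - 1 degrees of freedom."""
    pooled = [x for g in groups for x in g]
    ranks = average_ranks(pooled)
    big_n = len(pooled)
    h, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        h += rank_sum ** 2 / len(g)
        start += len(g)
    return 12 / (big_n * (big_n + 1)) * h - 3 * (big_n + 1)


print(icc_oneway([(1.0, 1.0), (2.0, 2.0), (3.0, 3.0)]))  # 1.0
print(round(kruskal_wallis_h([[1, 2, 3], [4, 5, 6]]), 3))  # 3.857
```

The first example shows the ceiling case the Methods describe: when each course's goal level equals its assessment level, the ICC is exactly 1.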

RESULTS

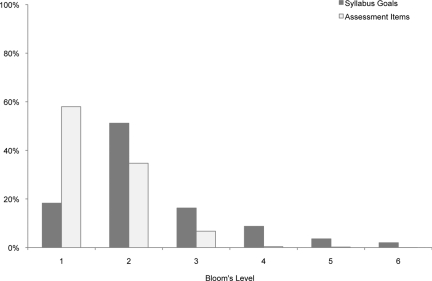

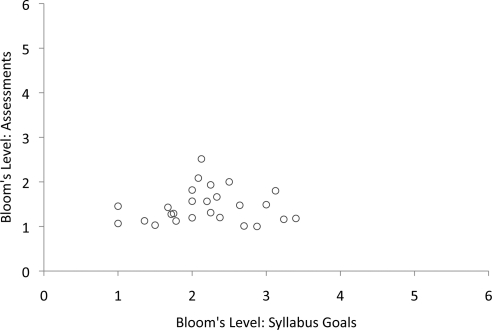

Of the 9713 assessment items submitted to this study by 50 faculty teaching introductory biology, 93% were rated Bloom's level 1 or 2 (knowledge or comprehension; Figure 1). A further 6.7% were rated level 3, and fewer than 1% were rated level 4 or above. Of the 250 course goals submitted, 69% were rated Bloom's level 1 or 2. The mean Bloom's level of course goals was not significantly correlated with that of the corresponding assessments, indicating a lack of alignment (n = 26; p = 0.92; Figure 2).

Figure 1.

Assessment items and syllabus goals binned by cognitive (Bloom's) level. Level 1: knowledge; 2: comprehension; 3: application; 4: analysis; 5: synthesis; 6: evaluation. The frequencies show that course goals target higher cognitive levels more often than assessment items do.

Figure 2.

Alignment of course learning goals with assessments. There is no significant correlation between the Bloom's levels of syllabus goals and assessment items (one-way intraclass correlation, p = 0.92).

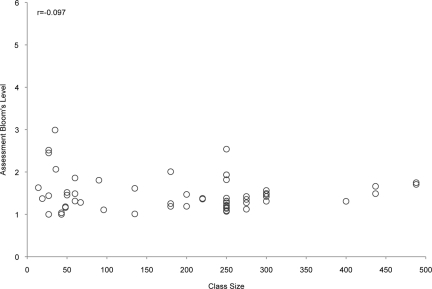

The cognitive level of assessments was not related to class size (n = 57; r = −0.097, p = 0.47; Figure 3); both large- and small-enrollment courses focused assessments on lower-level cognitive skills. Institution type had a significant effect on the cognitive level of assessments (Kruskal–Wallis χ² = 38.7794, df = 3, p < 0.0001; Table 1): Baccalaureate institutions had a significantly higher weighted mean Bloom's level than the other three institution types (post hoc Wilcoxon tests, p < 0.05). However, the weighted mean Bloom's level of assessments from Baccalaureate institutions was only 1.95 ± 0.10 (SEM), indicating that, regardless of institution type, introductory courses overwhelmingly assess students at lower Bloom's levels (knowledge and comprehension).

Figure 3.

Relationship between class size and weighted mean Bloom's level of assessments. Circles represent individual courses. Class size was unrelated to the Bloom's level of assessments (Pearson product-moment correlation, r = −0.097, p = 0.47).

Assessments by faculty who included goals on their course syllabi differed significantly from those of faculty who listed no course goals or did not submit a syllabus (Wilcoxon rank sum test, p = 0.03). However, the weighted mean (±SEM) Bloom's levels were 1.40 (±0.04) for faculty who included goals on their syllabi and 1.43 (±0.02) for faculty who did not, a difference that, although statistically significant, is too small to be educationally meaningful.

DISCUSSION

This study offers the first national analysis of learning objectives and related assessments routinely used in introductory biology courses. Our data support the typically anecdotal claim that introductory biology courses, regardless of class size or institution type, focus on the recall and comprehension of facts. Although course goals are written to address a wide range of cognitive skills, students are primarily assessed at low cognitive levels (Bloom's levels 1 and 2).

We recognize that learning goals and assessments alone do not represent the totality of the teaching and learning experiences in introductory biology courses. Other activities or experiences not accounted for in this study may engage students at higher cognitive levels. Term papers and group projects, for example, often ask students to synthesize multiple biological concepts. Variables such as class size and institution type may influence how often such activities are used and how they are implemented. Faculty teaching large lecture courses with minimal support staff may feel constrained to use machine-scored assessments, which are simple and quick to grade but typically assess lower cognitive levels. Although it is possible to write well-constructed, high-level multiple-choice questions, doing so is both challenging and time-consuming (Haladyna et al., 2002). Still, we contend that the goals faculty articulate on their syllabi and the assessments they give their students together reflect their expectations for student learning.

Our results extend the analysis of Zheng et al. (2008). Although the weighted mean Bloom's level of assessments analyzed in this study (1.45 ± 0.02) is lower than that reported by Zheng et al. (2.43 ± 0.14), this gap reflects differences in sampling. Indeed, Zheng et al. acknowledged the limits of their data and called for additional analysis across a larger sample of institution types. Still, Zheng et al. recognized that many of the introductory biology courses they sampled relied too heavily on lower cognitive-level assessments. Thus, we join Zheng et al. in calling for the reform of many introductory biology courses so that a broader range of cognitive skills is assessed.

The redefined vision of undergraduate biology education holds that understanding the nature of science, participating in scientific practices, and using evidence and logic to reach conclusions are critical to learning biology (NRC, 2007; Alberts, 2009; AAAS, 2009; Wood, 2009a). A focus on lower-level cognitive processes (i.e., recalling and comprehending facts) may not prepare students to transfer knowledge to new contexts or to solve realistic problems (Bransford et al., 1999; Handelsman et al., 2007). We believe that students should begin practicing the skills of connecting, transferring, and modeling scientific concepts at the start, not the end, of their degree programs.

We do not have a prescription for the “right” cognitive level of goals and assessments in an introductory biology course. However, we contend that “practice makes perfect.” Students need an early introduction to higher-level cognitive tasks and multiple opportunities to practice these skills (NRC, 2007). Introductory biology courses must be part of that practice.

Many faculty argue that introductory college science courses must focus primarily on knowledge and comprehension before students can complete higher-level thinking tasks (e.g., Guo, 2008). Evidence to support such claims, however, is lacking. Without question, experts have ready access to a deep body of knowledge. But experts can also build connections between ideas, recognize patterns and relationships that are often not apparent, and transfer knowledge to novel situations (Bransford et al., 1999). Novice students must learn and practice these skills with feedback from their instructors (Krathwohl et al., 1964). Only through questions that aspire to higher cognitive levels will students have the opportunity to hone these skills while learning content within a meaningful context.

Indeed, the approaches students take to studying course material are strongly influenced by classroom activities and assessments (Eley, 1992; Kember et al., 2008). Students adopt deep learning approaches for a variety of reasons, including the perceived demands of a course and its assessments (Entwistle and Ramsden, 1983). Courses that offer students a variety of cognitive tasks therefore have the potential to improve students' study habits and, in turn, their learning.

In an environment where time, energy, and resources are limited, current and future faculty are confronted with the seemingly insurmountable challenge of doing more in the introductory biology classroom with less. Faculty need to create assessments that target a broad range of cognitive skills. Such assessments establish expectations for student learning and provide meaningful feedback to both student and instructor. Further, faculty must develop learning goals at multiple cognitive levels, along with corresponding classroom activities, to support the development of students' cognitive skills. Investing in the infrastructure of introductory biology courses will give students multiple opportunities to develop and practice critical thinking skills while holding them accountable for their learning through rigorous assessments that pose real-world problems spanning multiple cognitive levels.

ACKNOWLEDGMENTS

This research received approval from the local Institutional Review Board (IRB protocol #03-700). Supported in part by NSF grant DUE 0088847.

REFERENCES

- Alberts B. Redefining science education. Science. 2009;323:437. doi: 10.1126/science.1170933.

- American Association for the Advancement of Science (AAAS). Science for All Americans: A Project 2061 Report on Literacy Goals in Science, Mathematics, and Technology. Washington, DC: 1989.

- AAAS. Vision and Change: A Call to Action, A Summary of Recommendations. Vision and Change Conference; Washington, DC: 2009. www.visionandchange.org.

- Anderson L. W., Krathwohl D. R., Bloom B. S. A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom's Taxonomy of Educational Objectives. New York: Longman; 2001.

- Association of American Medical Colleges and Howard Hughes Medical Institute. Scientific Foundations for Future Physicians. Washington, DC: AAMC; 2009.

- Bhola D. S., Impara J. C., Buckendahl C. W. Aligning tests with states' content standards: methods and issues. Educational Measurement: Issues and Practice. 2003;22:21–29.

- Bloom B. S. Taxonomy of Educational Objectives: The Classification of Educational Goals, Handbook I: Cognitive Domain. New York: Longmans, Green; 1956.

- Bransford J., Brown A. L., Cocking R. R., National Research Council Committee on Developments in the Science of Learning. How People Learn: Brain, Mind, Experience, and School. Washington, DC: National Academy Press; 1999.

- Crowe A., Dirks C., Wenderoth M. P. Biology in Bloom: implementing Bloom's taxonomy to enhance student learning in biology. CBE Life Sci. Educ. 2008;7:368–381. doi: 10.1187/cbe.08-05-0024.

- Eley M. G. Differential adoption of study approaches within individual students. Higher Educ. 1992;23:231–254.

- Entwistle N. J., Ramsden P. Understanding Student Learning. London, UK: Croom Helm; 1983.

- Evans C. Improving test practices to require and evaluate higher levels of thinking. Education. 1999;119:616–618.

- Faculty Institutes for Reforming Science Teaching II (FIRST II). 2009. http://first2.plantbiology.msu.edu [accessed 1 December 2009].

- Fink L. D. Creating Significant Learning Experiences: An Integrated Approach to Designing College Courses. San Francisco, CA: Jossey-Bass; 2003.

- Fuller D. Critical thinking in undergraduate athletic training education. J. Athl. Train. 1997;32:342–347.

- Furst E. J. Bloom's taxonomy of educational objectives for the cognitive domain: philosophical and educational issues. Rev. Educ. Res. 1981;51:441–453.

- Goldenfeld N., Woese C. Biology's next revolution. Nature. 2007;445:369. doi: 10.1038/445369a.

- Guo S. S. Science education: should facts come first? Science. 2008;320:1012. doi: 10.1126/science.320.5879.1012a.

- Haladyna T. M., Downing S. M., Rodriguez M. C. A review of multiple-choice item-writing guidelines for classroom assessment. Applied Measurement in Education. 2002;15:309–333.

- Handelsman J., Miller S., Pfund C. Scientific Teaching. New York: W. H. Freeman & Co; 2007.

- Kember D., Leung D. Y., McNaught C. A workshop activity to demonstrate that approaches to learning are influenced by the teaching and learning environment. Active Learning in Higher Education. 2008;9:43–56.

- Krathwohl D. R. A revision of Bloom's taxonomy: an overview. Theory into Practice. 2002;41:212.

- Krathwohl D. R., Bloom B. S., Masia B. B. Taxonomy of Educational Objectives. Handbook II: The Affective Domain. New York: David McKay Company; 1964.

- Kreitzer A. E., Madaus G. F. Empirical investigations of the hierarchical structure of the taxonomy. In: Anderson L. W., Sosniak L. A., editors. Bloom's Taxonomy: A Forty-Year Retrospective. Chicago, IL: University of Chicago Press; 1994. pp. 64–81.

- La Marca P. M., Redfield D., Winter P. C., Despriet L. State Standards and State Assessment Systems: A Guide to Alignment. Series on Standards and Assessments. Washington, DC: Council of Chief State School Officers; 2000.

- Martone A., Sireci S. G. Evaluating alignment between curriculum, assessment, and instruction. Rev. Educ. Res. 2009;79:1332–1361.

- Mervis J. U.S. STEM education: Obama's science advisers look at reform of schools. Science. 2009;326:654. doi: 10.1126/science.326_654b.

- National Academy of Sciences, National Academy of Engineering, and Institute of Medicine. Rising Above the Gathering Storm: Energizing and Employing America for a Brighter Economic Future. Washington, DC: National Academies Press; 2007.

- National Research Council (NRC). Learning and Understanding: Improving Advanced Study of Mathematics and Science in U.S. High Schools. Washington, DC: National Academies Press; 2002.

- NRC. BIO2010: Transforming Undergraduate Education for Future Research Biologists. Washington, DC: National Academies Press; 2003.

- NRC. Taking Science to School: Learning and Teaching Science in Grades K-8. Washington, DC: National Academies Press; 2007.

- National Science Foundation (NSF). Shaping the Future: New Expectations for Undergraduate Education in Science, Mathematics, Engineering, and Technology. Arlington, VA: 1996.

- Pfund C., et al. Summer institute to improve university science teaching. Science. 2009;324:470–471. doi: 10.1126/science.1170015.

- R Development Core Team. R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing; 2009.

- Tanner K., Allen D. Approaches to biology teaching and learning: from assays to assessments—on collecting evidence in science teaching. Cell Biol. Educ. 2004;3:69–74. doi: 10.1187/cbe.04-03-0037.

- Wiggins G. P., McTighe J. Understanding by Design. Danvers, MA: Association for Supervision and Curriculum Development; 2005.

- Woese C. R. A new biology for a new century. Microbiol. Mol. Biol. Rev. 2004;68:173–186. doi: 10.1128/MMBR.68.2.173-186.2004.

- Wood W. B. Advanced high school biology in an era of rapid change: a summary of the biology panel report from the NRC Committee on Programs for Advanced Study of Mathematics and Science in American High Schools. Cell Biol. Educ. 2002;1:123–127. doi: 10.1187/cbe.02-09-0038.

- Wood W. B. Innovations in teaching undergraduate biology and why we need them. Annu. Rev. Cell Dev. Biol. 2009a;25:93–112. doi: 10.1146/annurev.cellbio.24.110707.175306.

- Wood W. B. Revising the AP biology curriculum. Science. 2009b;325:1627–1628. doi: 10.1126/science.1180821.

- Zheng A. Y., Lawhorn J. K., Lumley T., Freeman S. Application of Bloom's taxonomy debunks the “MCAT myth.” Science. 2008;319:414–415. doi: 10.1126/science.1147852.