Abstract

Speech stimuli give rise to neural activity in the listener that can be observed as waveforms using magnetoencephalography. Although waveforms vary greatly from trial to trial due to activity unrelated to the stimulus, it has been demonstrated that spoken sentences can be discriminated based on theta-band (3–7 Hz) phase patterns in single-trial response waveforms. Furthermore, manipulations of the speech signal envelope and fine structure that reduced intelligibility were found to produce correlated reductions in discrimination performance, suggesting a relationship between theta-band phase patterns and speech comprehension. This study investigates the nature of this relationship, hypothesizing that theta-band phase patterns primarily reflect cortical processing of low-frequency (<40 Hz) modulations present in the acoustic signal and required for intelligibility, rather than processing exclusively related to comprehension (e.g., lexical, syntactic, semantic). Using stimuli that are quite similar to normal spoken sentences in terms of low-frequency modulation characteristics but are unintelligible (i.e., their time-inverted counterparts), we find that discrimination performance based on theta-band phase patterns is equal for both types of stimuli. Consistent with earlier findings, we also observe that whereas theta-band phase patterns differ across stimuli, power patterns do not. We use a simulation model of the single-trial response to spoken sentence stimuli to demonstrate that phase-locked responses to low-frequency modulations of the acoustic signal can account not only for the phase but also for the power results. The simulation offers insight into the interpretation of the empirical results with respect to phase-resetting and power-enhancement models of the evoked response.

INTRODUCTION

Natural speech gives rise to a cascade of neuronal responses in the listener that reflect a multitude of computations associated with extracting and processing information contained in the input signal. When the speech signal is intelligible, these computations may include all processing elements required for comprehension, such as the analysis of acoustic, phonological, lexical, syntactic, semantic, and indexical content. The composite of these neuronal responses contributes to electromagnetic waveforms that can be observed extracranially via electroencephalographic (EEG) and magnetoencephalographic (MEG) imaging. These waveforms also inherently reflect neuronal activity unrelated to the processing of the stimulus that varies randomly across presentations, such that waveforms associated with a repeated stimulus vary greatly from trial to trial (Arieli et al. 1996; Hu et al. 2009). Despite this intrinsic variation, Luo and Poeppel (2007) demonstrated that phase patterns for the theta-band (3–7 Hz) frequency components of single-trial waveforms can be used to discriminate between different spoken sentences, implying the existence of phase-locked theta-band responses to stimulus features that differ across stimuli. In addition, they found that manipulations of the speech signal envelope and fine structure that reduce sentence intelligibility produce similar reductions in discrimination performance, thus revealing a relationship between theta-band phase patterns and speech comprehension. 
The nature of this relationship was not determined but could depend on any of the computational processes involved in speech comprehension.¹ Although Luo and Poeppel found significant dissimilarity in response phase patterns for different speech stimuli (in the theta band), they found no significant dissimilarity in power patterns, suggesting that theta-band responses involve phase-resetting of an ongoing theta oscillation, but no additive activity that would increase power (Fuentemilla et al. 2006; Hamada 2005; Penny et al. 2002).

In this study, we hypothesize that the theta-band phase patterns in the response waveform primarily reflect processing related to acoustic properties of speech that are essential to intelligibility—i.e., slow modulations of the speech envelope (<40 Hz)—rather than processing directly related to lexical, syntactic, or semantic analysis. Our hypothesis derives from the conjunction of several findings related to slow temporal modulations. First, converging evidence indicates that the auditory cortex produces phase-locked responses to slow temporal modulations and that these responses are especially widespread and robust for theta-band modulation frequencies (Giraud et al. 2000; Liégeois-Chauvel et al. 2004; Lu et al. 2001). Furthermore, slow modulations of the speech envelope appear to contain the acoustic features most essential to intelligibility (Drullman 2006; Drullman et al. 1994a,b), particularly in the theta-band where power in the temporal modulation spectrum is concentrated (Greenberg 2003, 2006; Houtgast and Steeneken 1985). In addition, theta-band modulations of the envelope are thought to be associated with syllabic structure (Greenberg 2003) and thus will typically differ between stimuli. Finally, phase-locking to slow modulations of the speech envelope has been observed in both EEG (Abrams et al. 2008; Aiken and Picton 2008) and MEG (Ahissar et al. 2001) responses to speech stimuli. Together, these findings suggest that slow modulations in the speech envelope evoke phase-locked responses that contribute to the poststimulus waveform, potentially creating phase patterns that are particularly salient and stimulus-differentiated in the theta band.

The hypothesis implies that theta-band phase discrimination performance for auditory stimuli that are similar to speech in terms of slow envelope modulations, but are completely unintelligible, should be comparable to that for speech stimuli. That is, the neuronal response pattern should be driven by acoustic patterns that potentially underlie intelligibility but are not an index of higher-order linguistic operations. We created such stimuli by time-inverting normal speech stimuli, a transformation that eliminates intelligibility (Saberi and Perrott 1999) while retaining the envelope modulation structure in a reversed temporal order. We then tested the hypothesis by replicating the Luo and Poeppel (2007) experiment using both normally spoken sentences and their time-inverted counterparts as stimuli and comparing discrimination performance based on power and phase of the MEG response for the two types of stimuli.

In addition to the experimental investigation, we developed a model to examine whether phase-locked responses to slow modulations in the signal envelope can account for the empirical phase–power analysis results obtained in both this and the Luo and Poeppel (2007) study. Specifically, the model allowed us to simulate single-trial response data for a set of sentence stimuli based on the hypothesis that the evoked response contains phase-locked responses to slow envelope modulations. The simulated data were then subjected to the same analyses performed on the empirical data, and the results were compared directly. The model deepens our understanding of the empirical results by providing evidence related to the interpretation of the phase–power findings with regard to phase-resetting and power-enhancement models of the evoked response (for a review of these models, see Sauseng et al. 2007).

METHODS

Subjects

Six subjects (two male, mean age 25 yr, range 20–41 yr) took part in the experiment after providing informed consent. All were right-handed (Oldfield 1971), native English speakers, reported normal hearing, and had no history of neurological disorders. The experiment was conducted in accordance with the Institutional Review Board of the University of Maryland.

Neuromagnetic recording

The neuromagnetic data were acquired in a magnetically shielded room (Yokogawa, Hiroshima, Japan) using a 160-channel, whole-head axial gradiometer system (5 cm baseline, SQUID-based sensors; KIT, Kanazawa, Japan). MEG channels included 157 head channels plus 3 reference channels that recorded the environmental magnetic field data for noise reduction purposes. Data were continuously recorded using a sampling rate of 1,000 Hz, on-line filtered between 1 and 100 Hz, with a notch at 60 Hz.

Stimuli

The Luo and Poeppel (2007) study used a mix of male and female voices within the set of three spoken sentences to be discriminated, thus incorporating a potential confound. In this study we eliminated this confound, while continuing to use both male and female voices as stimuli, by using one set of sentences voiced by males and another voiced by females. The two sets were selected from the DARPA TIMIT Acoustic-Phonetic Continuous Speech Corpus (sampling frequency 16 kHz) such that all stimuli had lengths exceeding 4,000 ms. Set 1 sentences (male voice) were: 1) “Maybe it's taking longer to get things squared away than the bankers expected.”; 2) “His tough honesty condemned him to a difficult and solitary existence.”; and 3) “The most recent geological survey found seismic activity.” Set 2 sentences (female voice) were: 1) “She had your dark suit in greasy wash water all year.”; 2) “Ants carry the seeds so better be sure there are no ant hills nearby.”; and 3) “Buying a thoroughbred horse requires intuition and expertise.” Two corresponding sets of target stimuli were constructed by including a normal and a time-reversed version of each sentence. A set of 22 distractor stimuli consisted of acoustically altered versions of other sentences drawn from the TIMIT database, specifically sine-wave speech (Remez et al. 1981) or speech-noise chimaeras (Smith et al. 2002) based on sine-wave speech. The distractor stimuli were speech-like stimuli that had been constructed for other experiments and were used in this experiment simply as a means of reducing monotony in the total stimulus set. Only the responses to target stimuli were analyzed.

Procedure

All stimuli were delivered at a comfortable loudness level (∼70 dB) through plastic tubing connected to ear pieces inserted in the ear canals of the subjects (EAR Tone 3A System). Presentation of the experimental stimuli was preceded by pretesting to check subject hearing and head position. The pretest included the binaural presentation of tone stimuli (1,000 Hz, 50 ms, 131–153 per subject). The data recorded for these stimuli were used to localize channels reflecting response activity originating in the auditory cortex (see Data processing).

The experimental stimuli were presented binaurally under the control of a Presentation version 11.3 (Neurobehavioral Systems) script. Three subjects (one male) were assigned to Set 1 stimuli (male speaker) and three subjects (one male) to Set 2 stimuli (female speaker). The experiment consisted of 88 fixed-length (13,000 ms) presentations of stimulus pairs with the first stimulus presented at 500 ms, the second stimulus at 6,500 ms, and a beep signal at 11,800 ms. Each stimulus in the pair could be either a distractor or a member of the assigned stimulus set. Stimuli were presented in pseudorandom order, with each member of the assigned stimulus set presented 21 times. Subjects were instructed to decide whether the stimuli in a presentation pair were the same or different and to respond accordingly via a handheld button press following the beep. Because the sole purpose of this task was to keep subjects alert and engaged, the behavioral results were not analyzed.

Data processing

The recorded responses to the pretest tone stimuli were averaged, noise-reduced off-line using the CALM (continuously adjusted least-squares method) algorithm (Adachi et al. 2001), and baseline corrected. The results were then averaged across the six subjects and low-pass filtered (22 Hz filter, 91-point Hamming window) to extract the grand-average slow-wave response. Because the M100 (also referred to as N1m and N100m) component of the slow-wave response, generated roughly 100 ms after stimulus onset, reflects activity arising in the auditory cortex (Lütkenhöner and Steinsträter 1998), the topography at the M100 peak (96 ms) was used to determine a set of 80 auditory cortex activity channels, with 20 selected from each quadrant (Fig. 1).

Fig. 1.

Topography of grand-average M100 peak response to 1,000 Hz tones based on grayscale map of magnetic field absolute values from highest (white) to lowest (black). Auditory cortex activity channel set (80 channels) is indicated by white marks.

The recorded responses to the experimental stimuli were noise-reduced using the time-shift principal component analysis (TSPCA) algorithm (de Cheveigné and Simon 2007). For each subject, responses to the three normal and the three time-reversed versions of the sentences were analyzed separately. The analysis methods used were essentially those described in Luo and Poeppel (2007). Specifically, for each response to one of the three stimuli included in a particular analysis, spectrograms of the initial 4,000 ms, based on a 500 ms time window and 100 ms steps, were calculated for each of the 157 MEG recording channels. The resulting values for phase and power as a function of frequency and time were retained for further analysis. Three within-sentence groups were formed, each comprising the spectrogram response data for a particular sentence (21 trials). For each channel, three across-sentence groups were also constructed, each comprising the spectrogram response data for seven randomly selected trials for each of the three sentences (21 trials in total). Cross-trial phase and power coherence values for each of the three within-sentence and the three across-sentence groups were computed as

where θknij and Aknij are, respectively, the phase and amplitude for frequency bin i and time bin j in trial n and group k with N = 21 for this analysis. Cphase is in the range of [0 1]. For Cpower, the SD of the distribution of power values is normalized by the distribution mean, to produce a similar range of coherence values for all frequency bins (SD varies directly with mean power, which is roughly proportional to 1/f). Note that a larger Cphase value corresponds to strong cross-trial phase coherence, whereas a smaller Cpower value corresponds to strong cross-trial power coherence. The cross-trial coherence values were used to compute a dissimilarity function for each frequency bin i, defined as
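The display equations for the coherence measures and the dissimilarity function do not appear in this copy. One reconstruction that is consistent with the verbal definitions in this section is sketched here. The notation is an assumption rather than the authors' typeset form: the overbar denotes the cross-trial mean of power, the SD uses a 1/N normalization, and the labels "within" and "across" mark the two kinds of group.

```latex
\begin{align}
C_{\mathrm{phase},kij} &= \left(\frac{1}{N}\sum_{n=1}^{N}\cos\theta_{knij}\right)^{2}
                        + \left(\frac{1}{N}\sum_{n=1}^{N}\sin\theta_{knij}\right)^{2} \\
C_{\mathrm{power},kij} &= \frac{1}{\overline{A^{2}_{kij}}}
                          \sqrt{\frac{1}{N}\sum_{n=1}^{N}\left(A^{2}_{knij}-\overline{A^{2}_{kij}}\right)^{2}},
\qquad \overline{A^{2}_{kij}} = \frac{1}{N}\sum_{n=1}^{N}A^{2}_{knij} \\
D_{\mathrm{phase},i} &= \frac{1}{JK}\sum_{k=1}^{K}\sum_{j=1}^{J}
                        \left(C^{\mathrm{within}}_{\mathrm{phase},kij}-C^{\mathrm{across}}_{\mathrm{phase},kij}\right) \\
D_{\mathrm{power},i} &= \frac{1}{JK}\sum_{k=1}^{K}\sum_{j=1}^{J}
                        \left(C^{\mathrm{across}}_{\mathrm{power},kij}-C^{\mathrm{within}}_{\mathrm{power},kij}\right)
\end{align}
```

The power index is written as across minus within because a smaller Cpower value indicates stronger coherence. With that sign convention, both indices are positive when coherence is greater within sentences than across them.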

For this analysis, J = 36 and K = 3. A dissimilarity index value >0 for a frequency bin indicates larger cross-trial coherence for the within-sentence groups than for the across-sentence groups at that frequency. In other words, a positive index value means that the phase or power pattern is more consistent across trials of the same sentence than across trials of mixed sentences, and therefore that the pattern differs between sentences. Dissimilarity index values were computed for each of the 157 MEG channels. Mean dissimilarity index values were computed across the 80 auditory cortex activity channels (see Fig. 1).
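The coherence and dissimilarity computations described above can be illustrated with a minimal Python sketch. It is not the authors' code: the function names, the 1/N form of the SD, and the toy phase data are assumptions made for illustration. The helper `n_time_bins` shows how a 4,000 ms segment analyzed with a 500 ms window and 100 ms steps gives the J = 36 time bins quoted in the text.

```python
import math
import random

def phase_coherence(phases):
    """Cross-trial phase coherence for one time-frequency bin (near 0 = random, 1 = identical)."""
    n = len(phases)
    c = sum(math.cos(p) for p in phases) / n
    s = sum(math.sin(p) for p in phases) / n
    return c * c + s * s

def power_coherence(powers):
    """SD of cross-trial power divided by its mean (smaller = more coherent)."""
    n = len(powers)
    mean = sum(powers) / n
    sd = math.sqrt(sum((p - mean) ** 2 for p in powers) / n)
    return sd / mean

def n_time_bins(duration_ms=4000, window_ms=500, step_ms=100):
    """Number of spectrogram frames that fit in the analyzed segment."""
    return (duration_ms - window_ms) // step_ms + 1

def phase_dissimilarity(within, across):
    """within/across: K groups x J time bins x N trial phases, for one frequency bin."""
    def mean_coherence(groups):
        vals = [phase_coherence(trials) for group in groups for trials in group]
        return sum(vals) / len(vals)
    return mean_coherence(within) - mean_coherence(across)

# Toy data (hypothetical): within-sentence trials share a phase plus jitter,
# across-sentence trials have unrelated phases.
rng = random.Random(0)
K, J, N = 3, n_time_bins(), 21
within = [[[1.0 + rng.gauss(0, 0.5) for _ in range(N)] for _ in range(J)] for _ in range(K)]
across = [[[rng.uniform(-math.pi, math.pi) for _ in range(N)] for _ in range(J)] for _ in range(K)]
d_phase = phase_dissimilarity(within, across)
print(J, round(d_phase, 3))
```

In this toy case the index is positive because only the within-sentence groups contain a shared phase.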

For each subject, the 20 channels with the highest Dphase values for the theta band (3–7 Hz, frequency bins 4 and 6 Hz), within the set of 80 auditory cortex activity channels, formed the basis of the classification analysis. In this analysis, each single-trial response observed in the top 20 channels was classified as a response to sentence 1, sentence 2, or sentence 3 by comparing the trial's theta-band phase values to the phase values for a template of three single-trial responses (one for each sentence) and determining the closest match in terms of distance. The classification for each single-trial response was computed for templates that included all possible combinations of the responses to the three sentences, excluding the response being classified (8,820 in total). The results for each set of sentence responses were divided by the total number of classifications for those responses to find the sentence's classification distribution with respect to the three template categories. A correct classification value of 0.33 corresponds to chance level, given three possible choices for each classification. Aggregate correct classification rates for both normal and reversed sentences were determined by averaging across the individual correct classification rates obtained for the three sentences in the respective stimulus sets.
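The template-matching step can be sketched as follows. The distance measure is not specified in this section, so the mean circular distance used here (1 − cos of the phase difference) is an assumption, as are the function names and the toy data. The sketch also shows where the figure of 8,820 templates comes from: 20 remaining trials of the trial's own sentence times 21 trials of each of the other two sentences.

```python
import math
import random
from itertools import product

def phase_distance(a, b):
    """Mean circular distance between two phase vectors (assumed metric)."""
    return sum(1 - math.cos(x - y) for x, y in zip(a, b)) / len(a)

def classify(trial, template):
    """Return the index (0, 1, or 2) of the template entry closest to the trial."""
    dists = [phase_distance(trial, t) for t in template]
    return dists.index(min(dists))

def classification_distribution(trials, sentence, index):
    """Classify trials[sentence][index] against every template that excludes it."""
    counts = [0, 0, 0]
    pools = [[t for i, t in enumerate(trials[s]) if not (s == sentence and i == index)]
             for s in range(3)]
    for template in product(*pools):  # one single-trial response per sentence
        counts[classify(trials[sentence][index], template)] += 1
    total = sum(counts)
    return [c / total for c in counts], total

# Toy data (hypothetical): each sentence has its own phase pattern plus trial noise.
rng = random.Random(1)
n_trials, n_bins = 21, 8
bases = [[rng.uniform(-math.pi, math.pi) for _ in range(n_bins)] for _ in range(3)]
trials = [[[b + rng.gauss(0, 0.8) for b in base] for _ in range(n_trials)] for base in bases]
dist, n_templates = classification_distribution(trials, sentence=0, index=0)
print(n_templates, [round(p, 2) for p in dist])
```

Averaging such distributions over all trials of a sentence would give that sentence's classification distribution across the three template categories.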

Simulation model

A basic “signal plus noise” model was used to simulate the single-trial response to spoken sentences in a single MEG channel (Sauseng et al. 2007; Yeung et al. 2004). In this model, the response is formulated as the linear sum of phase-locked activity evoked by the stimulus that remains constant from trial to trial (signal) and background activity uncorrelated with the stimulus that varies randomly from trial to trial (noise). Thus the model is based on the finding that the relationship between ongoing and evoked activity is linear to a first approximation (Arieli et al. 1996).

Two separate simulations were performed corresponding to two assumptions regarding the nature of the evoked response. In simulation 1, the evoked response was assumed to replicate the low-frequency modulations of the stimulus envelope. For this formulation, the envelope of each stimulus signal was extracted by computing the magnitude of its Hilbert transform and then low-pass filtered with a 40 Hz cutoff (order 5 digital Butterworth filter with normalized cutoff frequency), to retain the modulation frequencies that produce substantial phase-locked responses in the auditory cortex. The filtered envelope was down-sampled from 16 kHz to 1 kHz and used as the evoked response. In simulation 2, the evoked response was assumed to consist of theta-band responses to sound energy transients in the stimulus. This formulation was based on evidence that auditory transitions, including onsets (Goff et al. 1969; Hari et al. 1987; Pantev et al. 1986; Picton et al. 1974), offsets (Davis and Zerlin 1966; Hari et al. 1987; Pantev et al. 1996; Pratt et al. 2005), and changes of amplitude or frequency (Jones and Perez 2002; Mäkelä et al. 1987; Martin and Boothroyd 2000; Pratt et al. 2009) evoke a low-frequency complex in the EEG/MEG response² with energy concentrated in the theta band (Howard and Poeppel 2009; Hu et al. 2009; Makinen et al. 2005; Riecke et al. 2009; Stampfer and Bašar 1985). For this formulation, sound energy transients were represented by the absolute value of the derivative of the stimulus envelope (as computed for the response in simulation 1) and the theta-band response was represented by a single-cycle sine wave with a period of 200 ms (5 Hz). The sound energy transient representation was then convolved with the theta-band response and the result used as the evoked response.
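The simulation 2 formulation can be illustrated with a short, dependency-free Python sketch. It starts from an already extracted envelope at 1 kHz; the Hilbert and Butterworth steps are not shown. The use of a first difference for the derivative, the function names, and the rectangular toy envelope are assumptions made for illustration.

```python
import math

FS = 1000  # samples per second after down-sampling (1 kHz in the text)

def theta_kernel(period_ms=200, fs=FS):
    """Single-cycle sine wave with a 200 ms period (5 Hz)."""
    n = period_ms * fs // 1000
    return [math.sin(2 * math.pi * i / n) for i in range(n)]

def abs_derivative(envelope):
    """Absolute first difference of the envelope, standing in for sound energy transients."""
    return [abs(b - a) for a, b in zip(envelope, envelope[1:])]

def convolve(signal, kernel):
    """Full linear convolution, written out to avoid external libraries."""
    out = [0.0] * (len(signal) + len(kernel) - 1)
    for i, s in enumerate(signal):
        if s == 0.0:
            continue  # skip samples that contribute nothing
        for j, k in enumerate(kernel):
            out[i + j] += s * k
    return out

# Toy envelope (hypothetical): silence, a 300 ms plateau, silence,
# which gives exactly one onset and one offset.
envelope = [0.0] * 200 + [1.0] * 300 + [0.0] * 500
transients = abs_derivative(envelope)
evoked = convolve(transients, theta_kernel())
```

Because the absolute value is taken, the onset and the offset each trigger the same single-cycle theta response, which is why this formulation concentrates evoked power near 5 Hz.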

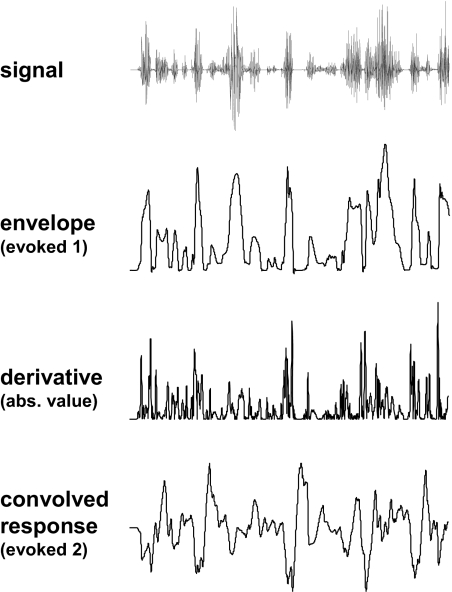

Evoked responses were computed for each of the three stimuli included in set 1 (see Stimuli section) using both formulations. An example derivation of the two formulations is shown in Fig. 2. For both simulations, background activity was represented as random 1/f noise characterized by a uniform distribution of phase between −π and π and a chi-square distribution of power around the 1/f spectrum (Little et al. 2007).

Fig. 2.

Example waveforms for the signal, the envelope of the signal, the derivative of the envelope (absolute value), and the convolution of the derivative of the envelope (absolute value) with the theta-band response (sentence 1 in stimulus set 1).

The mean power spectra (normalized) were computed for the envelope, the absolute value of the envelope derivative, and the convolved response for the set 1 stimuli (first 4 s, fast Fourier transform). Power was concentrated below 5 Hz for the envelope, but was more broadly distributed for the derivative formulation, extending to frequencies >5 Hz (Fig. 3A). The convolution of the envelope derivative formulation with the transient theta-band response essentially band-pass filtered the derivative modulations, concentrating power in the theta band. Thus power for both the envelope (evoked response in simulation 1) and the convolution (evoked response in simulation 2) was concentrated below 8 Hz (Fig. 3B).

Fig. 3.

Mean power for stimulus set 1 of (A) envelope and derivative of envelope (absolute value) and (B) evoked responses used in simulations. The evoked response was the envelope in simulation 1 and the convolution of the envelope derivative (absolute value) with the transient theta-band response in simulation 2.

For both simulations, 21 single-trial responses were generated for each of the three stimuli. A single-trial response was formed by adding a random 4-s segment of normalized background activity to the first 4 s of an amplitude-scaled evoked response representation with a single scaling factor applied to all three evoked responses. The data for simulation 1 were subjected to the same phase–power dissimilarity analysis performed on the empirical data and the amplitude-scaling factor was manually adjusted to yield theta-band phase dissimilarity index values comparable to those observed in the top channel for the six experiment participants (index values given in results). The six scaling factors determined by this process were used to generate six sets of simulated data that could be qualitatively compared and contrasted with the empirical data. For simulation 2, the amplitude-scaling factors determined for simulation 1 were proportionally adjusted to yield theta-band phase dissimilarity index values comparable to those observed empirically. For simulation 1, the amplitude-scaling factors were 0.44, 0.44, 0.46, 0.54, 0.65, and 0.85 and for simulation 2, 0.19, 0.19, 0.20, 0.24, 0.28, and 0.37. These scaling factors produced an evoked response-to-background activity power ratio of about 7.5–8% in the theta-band for both simulations and ratios of roughly 9% for simulation 1 and roughly 1.6% for simulation 2 in the delta band.
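The signal-plus-noise construction of a single trial can be sketched in a few lines of Python. Several choices here are assumptions rather than details given in the text: the noise is built as a sum of sinusoids up to 50 Hz, "normalized" is read as zero mean and unit variance, fresh noise is drawn for each trial instead of cutting random segments from a longer record, and a 5 Hz sinusoid stands in for the evoked response. A 200 Hz toy sampling rate keeps the pure-Python loop fast; the text uses 1 kHz.

```python
import math
import random

def one_over_f_noise(n_samples, fs, rng, f_max=50):
    """Sum of sinusoids with uniform random phase and chi-square (2 df) power around 1/f."""
    duration = n_samples / fs
    noise = [0.0] * n_samples
    for k in range(1, int(f_max * duration) + 1):
        f = k / duration
        power = (1.0 / f) * rng.expovariate(0.5) / 2.0  # chi-square(2) rescaled to mean 1
        amp = math.sqrt(2.0 * power)
        phase = rng.uniform(-math.pi, math.pi)
        for t in range(n_samples):
            noise[t] += amp * math.cos(2 * math.pi * f * t / fs + phase)
    return noise

def normalize(x):
    """Zero-mean, unit-variance scaling (assumed meaning of 'normalized')."""
    m = sum(x) / len(x)
    sd = math.sqrt(sum((v - m) ** 2 for v in x) / len(x))
    return [(v - m) / sd for v in x]

def single_trial(evoked, scale, rng, fs):
    """Amplitude-scaled evoked response plus independently drawn background activity."""
    noise = normalize(one_over_f_noise(len(evoked), fs, rng))
    return [scale * e + n for e, n in zip(evoked, noise)]

rng = random.Random(0)
fs = 200  # toy sampling rate
evoked = normalize([math.sin(2 * math.pi * 5 * t / fs) for t in range(4 * fs)])  # stand-in
trials = [single_trial(evoked, 0.44, rng, fs) for _ in range(3)]
```

The scaling factor 0.44 is the smallest simulation 1 value quoted above. In this sketch it simply sets the evoked amplitude relative to unit-variance noise.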

Statistical analysis

Grand averages across the six subjects were computed for the 80-channel mean dissimilarity index results. Positivity in a grand-average dissimilarity index value for a particular frequency bin was considered significant if two criteria were met: 1) the bin index value was positive for every subject and 2) the index value exceeded the random-effects threshold. The random-effects threshold value was set to the magnitude of the largest negative dissimilarity index value for frequency bins 2–50 Hz in a grand-average result. This determination was based on the assumption that negative dissimilarity is attributable solely to random effects and is thus representative of the magnitude of positive dissimilarity that could be attributable to random effects (in the case of power dissimilarity, 2 Hz bin values appeared to be excessively noisy and were not used as thresholds).
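The two significance criteria can be expressed compactly. The following Python sketch uses hypothetical index values and function names, and it assumes that the list passed in already covers only the frequency bins used for thresholding (2–50 Hz in the text).

```python
def random_effects_threshold(grand_avg):
    """Magnitude of the most negative grand-average index value (0 if none is negative)."""
    return max(0.0, -min(grand_avg))

def significant_bins(subject_values):
    """subject_values: one list per subject, holding one index value per frequency bin."""
    n_subjects = len(subject_values)
    n_bins = len(subject_values[0])
    grand_avg = [sum(s[i] for s in subject_values) / n_subjects for i in range(n_bins)]
    threshold = random_effects_threshold(grand_avg)
    # Criterion 1: positive in every subject. Criterion 2: grand average above threshold.
    return [all(s[i] > 0 for s in subject_values) and grand_avg[i] > threshold
            for i in range(n_bins)]

# Hypothetical index values for three subjects and four frequency bins.
subjects = [[0.030, 0.002, -0.002, 0.001],
            [0.020, 0.003, -0.004, -0.001],
            [0.040, 0.001, -0.003, 0.002]]
flags = significant_bins(subjects)
print(flags)
```

In this toy case the second bin is positive in every subject but fails the threshold criterion, and the fourth bin fails the consistency criterion.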

The grand-average correct classification rates were computed across subjects based on their aggregate correct classification rates. Z-tests were applied to the subject data to test the significance of performance above chance level (0.33) in the grand-average results for both stimulus types. The significance of the difference in correct classification performance for the two stimulus types was examined using a paired t-test (two-tailed).

A composite theta-band phase dissimilarity index value was computed for each subject as the average of the 4 and 6 Hz frequency bin values both for the top 20-channel mean and for the top channel in each hemisphere. Subject grand averages were then computed for these three (top 20 channels, top right hemisphere channel, top left hemisphere channel) composite values. The significance of the difference in the grand-average top 20 channel composite values for the two stimulus types was examined by applying a paired t-test (two-tailed) to the subject data. The effects of stimulus type and hemisphere on the composite theta-band phase dissimilarity index value were examined by applying two-way repeated measures ANOVA to the subject data for the top channel in each hemisphere.

In the modeling investigation, six data sets simulating single-trial response data for the top theta phase dissimilarity channel in six subjects were generated and the grand average was computed for both simulation 1 and simulation 2. The significance of positivity in grand-average dissimilarity index values was evaluated based on 95% confidence limits as determined by bootstrap resampling (1,000 repetitions).
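A minimal sketch of bootstrap confidence limits for a grand average over six data sets follows. The text does not say which bootstrap variant was used, so the percentile method here is an assumption, as are the function name and the example values.

```python
import random

def bootstrap_ci(values, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence limits for the mean of a small sample."""
    rng = random.Random(seed)
    n = len(values)
    # Resample the data sets with replacement and record the mean of each resample.
    means = sorted(sum(rng.choice(values) for _ in range(n)) / n for _ in range(n_boot))
    low = means[int((alpha / 2) * n_boot)]
    high = means[int((1 - alpha / 2) * n_boot) - 1]
    return low, high

# Hypothetical dissimilarity index values for six data sets at one frequency bin.
values = [0.041, 0.058, 0.027, 0.033, 0.029, 0.038]
low, high = bootstrap_ci(values)
significantly_positive = low > 0
```

Under this reading, a grand-average index value counts as significantly positive when the lower 95% confidence limit lies above zero.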

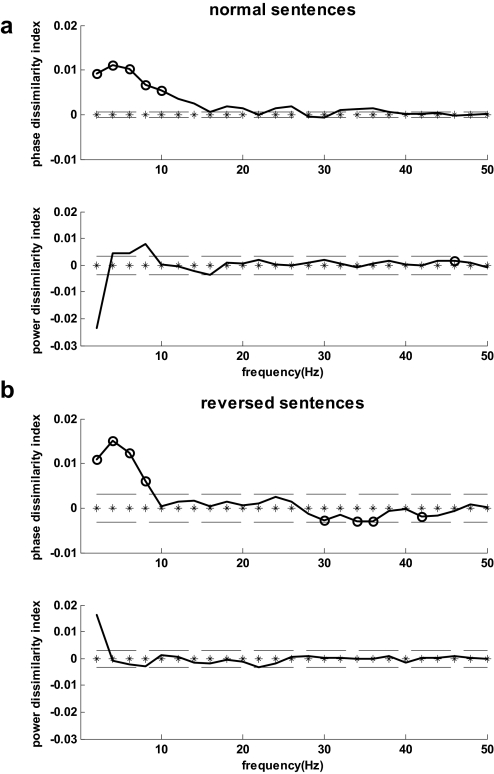

RESULTS

For normally spoken sentences, the phase dissimilarity function based on the 80 auditory cortex activity channels (Fig. 1) was consistently positive across all subjects for frequencies <11 Hz. Furthermore, the grand-average phase dissimilarity value for each frequency <11 Hz exceeded the random-effects threshold. For the grand average, phase dissimilarity peaked at 4 Hz, followed closely by 6 Hz and then 2 Hz (Fig. 4A). Thus the highest phase dissimilarity appeared, on average, in the theta-band frequency bins (4 and 6 Hz). The power dissimilarity function was consistently positive at 46 Hz, but the grand-average value did not exceed the random-effects threshold at this frequency.

Fig. 4.

Grand averages for phase and power dissimilarity index spectra based on 80 auditory channels. A: normal sentences. B: time-reversed sentences. Circle markers indicate consistent positive or negative values across all subjects. Dashed lines represent the random-effects threshold.

Similar results were observed for the time-reversed sentences (Fig. 4B). In this case, consistent positivity for phase dissimilarity was limited to frequencies <9 Hz for which all grand-average phase dissimilarity values exceeded the random-effects threshold. No consistent positive dissimilarity effects were observed for power.

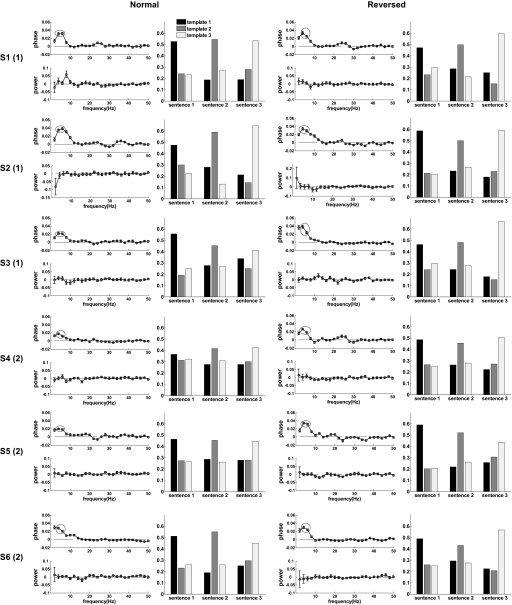

The topography of theta-band phase dissimilarity for both normally spoken sentences and their time-reversed counterparts is shown in Fig. 5. Comparison of this topography with that for the M100 response to an auditory stimulus (see Fig. 1) indicates that, in all cases, the channels with the highest index values were clustered in locations consistent with an auditory cortex origin. Furthermore, within-subject clustering tended to be similar for the two sentence types.

Fig. 5.

Theta-band phase dissimilarity index topography. Subject number followed by stimulus number in parentheses shown on left. Grayscale map of dissimilarity index values from highest (white) to lowest (black) shown for normal (left map column) and time-reversed (right map column) sentences. Note clusters of highest dissimilarity in vicinity of auditory cortex channels in all cases. The top 20 theta phase dissimilarity channels within the set of auditory channels are indicated by black marks.

Phase and power dissimilarity functions averaged over the 20 auditory channels exhibiting the highest phase dissimilarity values for the theta band (4 and 6 Hz bins) in each subject are shown for both normal and reversed sentences in Fig. 6. Phase dissimilarity was consistently positive in the range of 1–9 Hz, with a peak in the theta band for both stimulus types in all subjects. The power dissimilarity function was not consistently positive for any frequency. The strength of the theta-band phase dissimilarity effect varied across subjects from relatively strong index values near 0.04 to relatively weak values near 0.02. Classification performance (also shown in Fig. 6) based on the closest match of single trials to target templates with respect to theta-band phase for the top 20 channels varied with theta-band phase dissimilarity, as expected, and was well above chance level (0.33) for both stimulus types in all subjects [total correct classification results: 1) normal: 0.535, 0.571, 0.474, 0.402, 0.454, 0.505; 2) reversed: 0.523, 0.561, 0.538, 0.483, 0.516, 0.496]. One-tailed z-tests indicated that the grand-average correct classification rates of 0.49 for normal sentences and 0.52 for reversed sentences were significantly above chance level in both cases (P < 1.0E-14).

Fig. 6.

Dissimilarity indices and classification performance. Subject number followed by stimulus number in parentheses shown on the left. Graph pairs shown for normal (2 left columns) and time-reversed (2 right columns) sentences. First graph in pair displays phase and power dissimilarity indices averaged across 20 auditory channels having the strongest theta-band phase dissimilarity (theta-band phase dissimilarity values circled; error bars represent SE). Second graph in pair displays fraction of trials for each sentence classified as matching template 1 (black), 2 (gray), and 3 (white) based on theta-band phase information for the 20 channels.

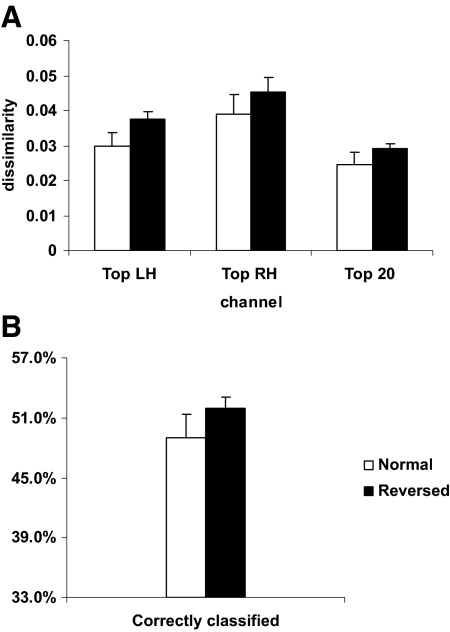

The composite theta-band phase dissimilarity index values (average of the 4 and 6 Hz frequency bin values) for the normal stimuli in the top channel (either hemisphere), used in the determination of the amplitude-scaling factors for the simulations as described in methods, were 0.0439, 0.0654, 0.0297, 0.0309, 0.0298, and 0.0362. The grand averages of these composite values for the top 20-channel mean and for the top channel in each hemisphere, together with the grand average of the percentage of trials correctly classified (aggregate), are shown in Fig. 7. Comparison of these results for normal and reversed sentences (paired t-test, two-tailed) revealed a slight trend toward better performance for reversed sentences in both the top 20-channel mean dissimilarity values (t = 1.49, degrees of freedom [df] = 5, P = 0.20, α = 0.05) and correct classification (t = 1.63, df = 5, P = 0.16, α = 0.05) that did not rise to the level of significance. Analysis of the theta phase dissimilarity values for the top channel in each hemisphere using two-way ANOVA with repeated measures indicated that the slight trends toward better performance for reversed sentences and for the right hemisphere were not statistically significant: sentence type [F(1,5) = 3.477, P = 0.12, α = 0.05] and hemisphere [F(1,5) = 3.477, P = 0.12, α = 0.05]. No significant interaction between hemisphere and sentence type was observed [F(1,5) = 0.105, P = 0.76, α = 0.05].
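As an arithmetic check, the paired comparison of correct classification rates can be recomputed from the per-subject values listed earlier in this section. The sketch assumes that the two lists are in the same subject order; the function name is illustrative.

```python
import math

# Per-subject total correct classification rates as reported in the text.
normal = [0.535, 0.571, 0.474, 0.402, 0.454, 0.505]
reversed_ = [0.523, 0.561, 0.538, 0.483, 0.516, 0.496]

def paired_t(a, b):
    """Paired t statistic and degrees of freedom for the differences b - a."""
    d = [y - x for x, y in zip(a, b)]
    n = len(d)
    mean = sum(d) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in d) / (n - 1))  # sample SD
    return mean / (sd / math.sqrt(n)), n - 1

t_stat, df = paired_t(normal, reversed_)
mean_normal = sum(normal) / len(normal)
mean_reversed = sum(reversed_) / len(reversed_)
print(round(mean_normal, 2), round(mean_reversed, 2), round(t_stat, 2), df)
```

Rounded to two decimals, this reproduces the grand-average rates of 0.49 and 0.52 and the reported t = 1.63 with df = 5.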

Fig. 7.

Grand-average theta-band phase dissimilarity and classification results for normal (white) and time-reversed (black) sentences. Error bars represent SE. A: theta-band phase dissimilarity index values for the top channel in the left and right hemispheres and the top 20-channel mean. B: percentage correct classification for all trials.

Simulation analysis

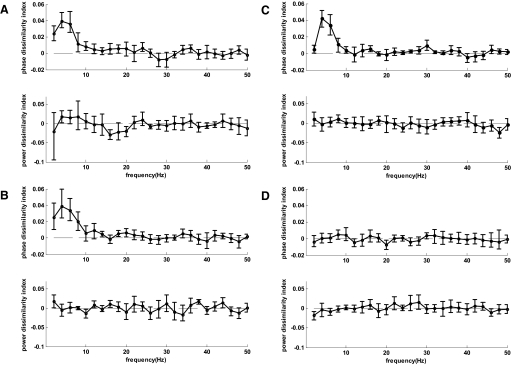

The grand-average results for the empirical data (normal stimuli, top channel) are shown in Fig. 8A, together with the grand-average results of the phase–power dissimilarity analysis for the two simulations (Fig. 8, B and C). The results of a phase–power dissimilarity analysis performed on six simulated data sets of single trials consisting only of background activity (no evoked response added) are also shown (Fig. 8D). The results for background activity established baselines against which the empirical and simulated response results can be compared.

Fig. 8.

Grand average of phase and power dissimilarity results for 6 data sets. Error bars show 95% confidence limits based on bootstrap resampling. A: empirical results for top channel response to normal stimuli in 6 subjects. B: simulation 1 results. Evoked response is proportional to stimulus envelope. C: simulation 2 results. Evoked response is proportional to derivative of envelope (absolute value) convolved with a theta-band response. D: simulation results assuming single-trial responses consist of noise only (no evoked response).

The results for simulation 1 (Fig. 8B), which used the filtered envelope of the stimulus as the evoked response, displayed the same characteristics as those of the empirical results. Specifically, significant positive phase dissimilarity (based on 95% confidence limits) was observed at low frequencies, clearly exceeding baseline level for frequencies <9 Hz, but no significant power dissimilarity exceeding baseline level was observed for any frequency. Phase dissimilarity was greatest in the theta band, peaking at 4 Hz, even though evoked response power was much greater in the delta band for this simulation. The results for simulation 2 (Fig. 8C), which used the convolution of the envelope derivative with a transient theta-band response (5 Hz sine wave) as the evoked response, were quite similar but with phase dissimilarity even more concentrated in the theta band.

DISCUSSION

The results of this study strongly support the Luo and Poeppel (2007) finding that theta-band phase patterns in single trials of MEG-derived auditory cortical responses suffice to discriminate among acoustic stimuli consisting of spoken sentences. Using a different set of subjects and sentences, we observed dissimilarity indices that are a close match both quantitatively and qualitatively to those observed for the original experiment. In particular, we found consistent positive phase dissimilarity that exceeded the random-effects threshold for theta-band frequencies but no consistent positive power dissimilarity exceeding the random-effects threshold for any frequency. Likewise, classification of single-trial response data based on theta-band phase patterns produced results quite similar to those obtained previously, significantly exceeding chance performance levels. Finally, the topography associated with theta-band phase dissimilarity in the present study was also consistent in suggesting that the discriminable phase patterns originate in auditory cortex. Although we detected a trend toward right hemisphere lateralization in theta-band phase dissimilarity, as observed by Luo and Poeppel, it was not found to be significant.

The findings on phase dissimilarity are consistent with the results of a MEG study that identified the response frequencies most critical to the stimulus–response classification of a set of spoken sentences (Suppes et al. 1998). In this study, test samples consisting of the average of five responses to a particular sentence were classified based on the closest least-squares fit to templates formed by averaging 50 responses for each sentence. Both the test sample and the templates were filtered to find the frequencies that optimized classification performance. Best performance was obtained for a 3- to 10-Hz filter, suggesting that maximum phase pattern dissimilarity falls in this range.
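The template-matching scheme described above can be sketched as follows. This is a simplified illustration and not the procedure of Suppes et al. (1998): the band-pass filtering step is omitted, and the simulated trials (a 5 Hz component with a stimulus-specific phase plus white noise), the sampling rate, and the labels `sentence_a` and `sentence_b` are hypothetical. Only the averaging counts (5 test responses, 50 template responses) and the least-squares criterion are taken from the text.

```python
import math
import random

def average(trials):
    """Point-by-point average of equal-length waveforms."""
    return [sum(col) / len(col) for col in zip(*trials)]

def sum_squared_error(a, b):
    """Sum of squared differences between two equal-length waveforms."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def classify(sample, templates):
    """Return the label of the template with the smallest squared error to the sample."""
    return min(templates, key=lambda label: sum_squared_error(sample, templates[label]))

def simulate_trial(phase, rng, n_samples=100, fs=200.0, noise_sd=1.0):
    """Hypothetical 500 ms trial: 5 Hz component with a stimulus-specific phase plus white noise."""
    return [math.sin(2 * math.pi * 5.0 * k / fs + phase) + rng.gauss(0.0, noise_sd)
            for k in range(n_samples)]

rng = random.Random(0)
stimulus_phases = {"sentence_a": 0.0, "sentence_b": math.pi / 2}

# Templates: average of 50 responses per sentence
templates = {label: average([simulate_trial(ph, rng) for _ in range(50)])
             for label, ph in stimulus_phases.items()}

# Test sample: average of 5 responses to one sentence
test_sample = average([simulate_trial(stimulus_phases["sentence_b"], rng) for _ in range(5)])
print(classify(test_sample, templates))
```

Because the two simulated sentences differ only in the phase of a low-frequency component, successful classification in this sketch depends entirely on phase-locked activity surviving the averaging.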

The findings are also consistent with the results of a study that performed an information theoretic analysis on local field potential (LFP) responses to naturalistic sound stimuli in the auditory cortex of alert monkeys (Kayser et al. 2009). This study examined the signal information provided by phase and power for response frequencies ranging from 4 to 72 Hz and found that frequencies in the theta band (4–8 Hz) provided the most information. Furthermore, phase provided three times as much information as power.

If distinct intertrial phase or power synchronies are present for higher frequencies only over short intervals, it seems possible that dissimilarity effects at higher frequencies could be somewhat obscured by the use of the 500 ms analysis window in both this and the Luo and Poeppel (2007) study. However, the findings of Suppes et al. (1998) and Kayser et al. (2009) imply that the greatest dissimilarity effects will be found at low frequencies (in phase), regardless of analysis window size. For the same reasons, a reformulation of the dissimilarity analysis based on wavelet analysis could potentially mitigate any bias introduced by window size, but would not be expected to materially change the results.

Phase tracking and intelligibility

This study extends the finding that discriminability based on theta-band phase patterns correlates with speech intelligibility (Luo and Poeppel 2007) by providing evidence that processing associated with acoustical attributes critical to intelligibility, rather than processing associated exclusively with speech comprehension, underlies the correlation. Our results demonstrate that auditory stimuli with speech-like envelope properties but totally lacking in intelligibility (i.e., time-reversed versions of spoken sentences) can be discriminated based on theta-band phase patterns in single trials of MEG-derived auditory cortical responses as well as their normal counterparts. Indeed, for the two types of stimuli, we observed very similar topographical patterns for theta-band phase dissimilarity within subjects and found no significant differences in either the theta-band phase dissimilarity or the single-trial classification results. These findings support the view that theta-band phase tracking is linked to early processing in primary and secondary auditory cortex that is strongly phase-locked to stimulus acoustics and largely independent of comprehension.

Strong phase-locking is associated with the processing of acoustic transients (i.e., edges) in the auditory signal. These transients may contribute critically to speech intelligibility by permitting the signal to be parsed into chunks of appropriate granularity to support comprehension. That is to say, acoustic transients may provide a temporal structure that can be exploited by cortical processing properties to create analysis windows of appropriate size. Thus if theta-phase tracking reflects the presence of transients required for parsing the speech signal, manipulations that eliminate them will result in a decrease in both theta-phase discrimination performance and intelligibility, as observed by Luo and Poeppel (2007). Conversely, assuming that all spoken languages incorporate such transients, the results of the present study suggest that theta-phase tracking in a particular listener will be independent of the listener's comprehension of the language, reflecting the presence of temporal structure properties that support intelligibility.

The conclusion that theta-band phase tracking is linked to early cortical processing that is strongly phase-locked to stimulus acoustics and largely independent of comprehension is consistent with EEG findings that suggest that the N1 response is independent of phonetic perception. Specifically, a study of the perception of voicing in English speakers using brief consonant–vowel (C–V) stimuli found that N1 characteristics, such as latency, amplitude, and the presence of a double peak, were not influenced by phonetic categorization of the stimulus (as indicated by a behavioral response), but rather appeared to depend principally on stimulus acoustic properties (Sharma et al. 2000; for related evidence, see also Gage et al. 1998). Furthermore, a similar study that examined the neurophysiological correlates of native and nonnative phonemic categories defined by voicing found that, although behavioral discrimination of the phonemic categories differed between native and nonnative speakers, no differences were observed in N1 features between the two subject groups (Sharma and Dorman 2000).

Phase and power tracking and the nature of the evoked response

The discrimination of speech stimuli based on phase patterns present in single-trial responses depends on the evoked response, that is, activity that is phase-locked to the stimulus. The exact nature of the evoked response is not yet fully understood, but proposed sources include additive activity, phase-resetting of ongoing oscillations, and baseline shifts in oscillatory activity (Fuentemilla et al. 2006; Hu et al. 2009; Jansen et al. 2003; Nikulin et al. 2007; Penny et al. 2002; Sauseng et al. 2007; Yeung et al. 2004). The finding of phase dissimilarity in the absence of power dissimilarity in the empirical data would appear to support a pure phase-resetting model of the evoked response. However, the same finding in the simulations, which were based on a formulation of the single-trial response that can be interpreted as an additive model (i.e., response activity added to ongoing activity), demonstrates that such a result is compatible with stimulus-evoked power enhancement. Thus the finding of dissimilarity in phase but not power patterns cannot be interpreted as evidence in favor of the pure phase-resetting model.

The somewhat counterintuitive absence of power dissimilarity in the simulated data based on the added activity model can be understood in terms of how the addition of evoked activity affects phase and power in total activity relative to background activity within an analysis window across a set of trials. The addition of evoked activity consistently shifts the phase of total activity away from the phase of the background activity, which is random, toward the phase of the evoked activity, which is fixed. As a result, the distribution of phase in total activity coheres around the phase of the evoked activity, with phase coherence varying as a function of the ratio of evoked-to-mean background power. For the ratio assumed in the simulations for theta-band power (∼8%), the difference in the cross-trial phase distributions should be quite salient (based on the results from 10k simulated trials; Fig. 9A). Because the phase patterns of the stimuli differ, phase coherence will be greater for within-stimulus trial sets than that for across-stimulus trial sets. In contrast, the addition of evoked activity to background activity produces an increase in the power if evoked activity is in-phase with background activity but a decrease if it is out-of-phase (assuming that the ratio of evoked-to-background power is <2). Consequently, the addition of evoked activity increases the cross-trial power distribution spread for total activity relative to that for background activity, resulting in a decrease in power coherence. For the relative level of evoked power assumed in the simulation, the difference in the power distributions for the 4 Hz frequency bin should be modest (Fig. 9B), with the SD increasing from 1.76 to 1.89 for 10k simulated trials. Because the mean also increases from 1.82 to 1.98, there is essentially no difference in the normalized power coherence measure used in the analysis (Cpower = SD/mean), which is 0.97 for both distributions. Thus, although the evoked response power patterns vary for the stimuli, power coherence for within-stimulus trial sets will be indistinguishable from coherence for across-stimulus trial sets.
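The argument can be checked qualitatively with a much simpler model than the one used in the simulations. The sketch below treats a single frequency bin as a complex phasor: background activity is drawn as a circular Gaussian value with random phase, and the evoked activity is a fixed phasor whose power is 8% of the mean background power. This is an assumption-laden simplification (it is not the 1/f time-domain model described in methods, and the function names are hypothetical), so the numerical values are not expected to match those in Fig. 9.

```python
import cmath
import math
import random
import statistics

def simulate_bin(n_trials, power_ratio, evoked_phase, rng):
    """Return (background, total) complex values for one frequency bin.

    Background: circular Gaussian phasor with unit mean power and random phase.
    Evoked: fixed phasor whose power is power_ratio times the mean background power.
    """
    evoked = cmath.rect(math.sqrt(power_ratio), evoked_phase)
    sd = math.sqrt(0.5)  # per-component SD that gives unit mean power
    background = [complex(rng.gauss(0.0, sd), rng.gauss(0.0, sd)) for _ in range(n_trials)]
    total = [b + evoked for b in background]  # additive model
    return background, total

def phase_coherence(values):
    """Squared length of the mean unit phasor across trials."""
    mean_vec = sum(v / abs(v) for v in values) / len(values)
    return abs(mean_vec) ** 2

def power_cv(values):
    """Normalized power spread (SD/mean) across trials."""
    powers = [abs(v) ** 2 for v in values]
    return statistics.pstdev(powers) / statistics.fmean(powers)

rng = random.Random(0)
background, total = simulate_bin(10_000, 0.08, 1.0, rng)
print("phase coherence:", phase_coherence(background), phase_coherence(total))
print("power SD/mean:  ", power_cv(background), power_cv(total))
```

In this simplified setting, adding the evoked phasor raises phase coherence well above the background level while leaving SD/mean for power almost unchanged, which mirrors the pattern described above.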

Fig. 9.

Theta (4 Hz bin) phase and power distributions over 10k random trials based on fast Fourier transform for 500 ms data segment (500 ms window). Signal is a single one-cycle 5 Hz sine wave of amplitude 0.082 that begins at 100 ms. Noise is random 1/f noise as described in methods. A: phase. For noise only, mean = 0, SD = 1.8. For signal plus noise, mean = −0.12, SD = 1.5. B: power. For noise only, mean = 1.82, SD = 1.76. For signal plus noise, mean = 1.98, SD = 1.89.

The simulation results establish only that power enhancement in the poststimulus response is compatible with the phase and power dissimilarity effects observed empirically, but not that power enhancement actually occurs. In fact, the “signal plus noise” model used to simulate the single-trial response could be interpreted as a phase-resetting model. Under this interpretation, “signal” represents theta-band activity present in prestimulus activity that is phase reset in response to the stimulus (with no change in mean power), whereas “noise” represents all other background activity, including theta-band activity that is not phase-locked to the stimulus. Nevertheless, the model findings suggest that, even in the absence of significant pre-to-post stimulus power enhancement, a correlation will exist between transient changes in phase coherence and in mean power across a large set of poststimulus responses. The investigation of this possibility requires more trials per stimulus than acquired in the present experiment but will be included in a follow-up study that closely examines the power and phase characteristics of pre- versus poststimulus activity for spoken sentences.

Basis of theta-band phase pattern dissimilarity

The simulation results demonstrate that phase-locked activity evoked by the slow temporal modulations of the envelope (<40 Hz) can account for the discrimination of speech stimuli based on theta-band phase patterns. The activity could comprise a high fidelity replication of the low frequencies present in the stimulus envelope, as assumed in simulation 1. Alternatively, it could involve transient theta-band responses evoked by sound energy transitions that effectively produce an ongoing evoked response that is band-pass filtered in the theta band, as assumed in simulation 2. In the former case, the power spectrum of the evoked response would be determined by stimulus characteristics, whereas in the latter case, cortical response properties would concentrate power in the evoked response within the theta band. Because power in the modulation spectrum for normal speech is concentrated between 2 and 10 Hz (Greenberg 2003, 2006; Houtgast and Steeneken 1985), the phase–power dissimilarity analysis results for speech stimuli would be similar under either alternative. In addition, as the results of simulation 1 demonstrate, even if evoked response power is greater for delta- than that for theta-band frequencies, phase pattern dissimilarity between stimuli may be greater for theta- than that for delta-band frequencies. Thus phase dissimilarity that peaks in the theta band is consistent with, but not conclusive evidence of, the involvement of theta-band oscillatory activity in the response to auditory stimuli.
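The two alternatives can be contrasted in a minimal sketch. The code below is illustrative only and does not reproduce the simulation model in methods: the sampling rate, the toy envelope, and the use of a short moving average as a stand-in for low-pass filtering are all assumptions. What it takes from the text is the form of each evoked response, namely a filtered copy of the envelope (simulation 1) versus the absolute envelope derivative convolved with a one-cycle 5 Hz sine wave (simulation 2).

```python
import math

FS = 100.0  # assumed sampling rate in Hz (hypothetical)

def moving_average(x, width):
    """Crude low-pass stand-in: centered moving average with truncated edges."""
    half = width // 2
    out = []
    for i in range(len(x)):
        seg = x[max(0, i - half): i + half + 1]
        out.append(sum(seg) / len(seg))
    return out

def one_cycle_sine(freq_hz, fs):
    """One full cycle of a sine wave, used as a transient theta-band response."""
    n = int(round(fs / freq_hz))
    return [math.sin(2 * math.pi * freq_hz * k / fs) for k in range(n)]

def convolve_causal(x, kernel):
    """Causal convolution of x with kernel, truncated to len(x)."""
    out = [0.0] * len(x)
    for i, xi in enumerate(x):
        if xi == 0.0:
            continue
        for j, kj in enumerate(kernel):
            if i + j < len(x):
                out[i + j] += xi * kj
    return out

def evoked_sim1(envelope):
    """Simulation-1 style: evoked response follows the smoothed envelope."""
    return moving_average(envelope, 5)

def evoked_sim2(envelope, fs=FS):
    """Simulation-2 style: |d(envelope)/dt| convolved with a one-cycle 5 Hz sine."""
    deriv = [0.0] + [abs(b - a) * fs for a, b in zip(envelope, envelope[1:])]
    return convolve_causal(deriv, one_cycle_sine(5.0, fs))

# Toy envelope: two syllable-like bursts within 1 s
envelope = [1.0 if 10 <= k < 30 or 55 <= k < 80 else 0.0 for k in range(100)]
sim1 = evoked_sim1(envelope)
sim2 = evoked_sim2(envelope)
```

In the first case the spectrum of the evoked response is inherited from the stimulus; in the second, every onset and offset triggers the same 5 Hz transient, so the response spectrum is shaped by the kernel regardless of how fast the envelope changes.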

Evidence that the evoked response is not simply a high-fidelity replication of the low frequencies present in the stimulus envelope but is constrained by cortical response properties that may involve theta-band oscillations was found in an investigation of the MEG response to highly compressed speech (Ahissar et al. 2001). The study examined the average evoked response for sets of stimuli with very similar temporal envelopes at four compression levels (0.2, 0.35, 0.5, 0.75), specified as the ratio of the duration of the compressed stimulus to the duration of the original stimulus. The study observed that the frequency spectrum of cortical-evoked modulations in the response (averaged over all accepted trials) remained the same or shifted downward even as the stimulus frequency spectrum shifted upward with increasing compression. Moreover, power was found to be concentrated in the theta band or lower, with theta-band power maintaining prominence over power in higher-frequency bands, at all compression rates. Because power in the response extracted by averaging over many trials depends, to a great extent, on the presence of phase-locked responses in the single-trial data, the findings on compressed speech imply the existence of a greater amount of phase-locked response activity within the theta band than at higher frequencies for all compression levels. Thus phase dissimilarity could be expected to continue to peak in or below the theta band rather than shift toward higher frequencies for compressed speech stimuli.

Further support for the hypothesis that the evoked response to speech involves theta-band oscillations determined by cortical processing properties is found in a study that examined the evoked response to syllables in continuous speech (Sanders and Neville 2003). This study observed that syllable onsets evoke the theta-band response complex associated with the processing of acoustic transients (see Simulation model in methods). If the theta-band response reflects the existence of a cortical analysis window on a timescale of roughly 150 to 250 ms that subserves speech decoding on the syllabic level (Poeppel 2003), then the intelligibility of compressed speech should decline dramatically as syllabic rates exceed theta-band frequencies, a prediction consistent with empirical observations (Beasley et al. 1980).
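The arithmetic behind this prediction can be made explicit. The sketch below converts an analysis window duration to its equivalent frequency and scales a nominal syllabic rate by the compression ratio (compressed duration divided by original duration). The 4 Hz nominal rate is a hypothetical value chosen for illustration, and the theta band limits are those given in the abstract (3–7 Hz).

```python
THETA_BAND_HZ = (3.0, 7.0)  # theta band as defined in the abstract

def window_to_frequency(window_ms):
    """Frequency (Hz) whose period equals the given window duration."""
    return 1000.0 / window_ms

def compressed_rate(rate_hz, compression_ratio):
    """Rate after time compression; ratio = compressed duration / original duration."""
    return rate_hz / compression_ratio

def in_band(freq_hz, band=THETA_BAND_HZ):
    """True if freq_hz lies within the band limits (inclusive)."""
    return band[0] <= freq_hz <= band[1]

NOMINAL_SYLLABLE_RATE_HZ = 4.0  # hypothetical uncompressed syllabic rate

print("250 ms window:", window_to_frequency(250.0), "Hz")
print("150 ms window:", round(window_to_frequency(150.0), 2), "Hz")
for ratio in (0.75, 0.5, 0.35, 0.2):
    rate = compressed_rate(NOMINAL_SYLLABLE_RATE_HZ, ratio)
    print(f"ratio {ratio}: {rate:.1f} syllables/s, within theta band: {in_band(rate)}")
```

With this nominal rate, the syllabic rate stays within the theta band at a ratio of 0.75 but leaves it at 0.5 and below; a different assumed rate would move that boundary.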

Some of the questions that cannot be resolved in this investigation can be addressed in experiments using compressed speech as stimuli. First, the results of such a study could confirm or contradict the prediction that peak phase dissimilarity will remain in the theta band even as the degree of compression increases and thus provide further evidence of cortical response constraints. In addition, the results could supply a basis for determining which of the two simulation models presented here more accurately represents the qualities of the MEG response evoked by auditory stimuli. Finally, because intelligibility degrades rapidly as stimuli are compressed beyond a ratio of 0.5, i.e., to less than half their original duration (Beasley et al. 1980), phase dissimilarity results for compressed speech could offer additional evidence that theta-band phase patterns are linked to processing that is phase-locked to stimulus features and largely independent of comprehension.

GRANTS

This work was supported by National Institute on Deafness and Other Communication Disorders Grant 2R01-DC-05660 to D. Poeppel.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the author(s).

ACKNOWLEDGMENTS

We thank H. Luo for analytical support and insightful discussions; G. Cogan, Y. Zhang, and N. Ding for valuable comments; and J. Walker for excellent technical assistance.

Footnotes

In the Luo and Poeppel (2007) experiment, English stimuli were presented to native English speakers such that all processes involved in speech comprehension would be activated.

Identified as the P1-N1-P2-N2 complex, this response consists of alternating deflections of opposite polarity that occur within 300 ms following the stimulus transient.

REFERENCES

- Abrams DA, Nicol T, Zecker S, Kraus N. Right-hemisphere auditory cortex is dominant for coding syllable patterns in speech. J Neurosci 28: 3958–3965, 2008.

- Adachi Y, Shimogawara M, Higuchi M, Haruta Y, Ochiai M. Reduction of nonperiodic environmental magnetic noise in MEG measurement by continuously adjusted least square method. IEEE Trans Appl Superconduct 11: 669–672, 2001.

- Ahissar E, Nagarajan S, Ahissar M, Protopapas A, Mahncke H, Merzenich MM. Speech comprehension is correlated with temporal response patterns recorded from auditory cortex. Proc Natl Acad Sci USA 98: 13367–13372, 2001.

- Aiken SJ, Picton TW. Human cortical responses to the speech envelope. Ear Hear 29: 139–157, 2008.

- Arieli A, Sterkin A, Grinvald A, Aertsen A. Dynamics of ongoing activity: explanation of the large variability in evoked cortical responses. Science 273: 1868–1871, 1996.

- Beasley DS, Bratt GW, Rintelmann WF. Intelligibility of time-compressed sentential stimuli. J Speech Hear Res 23: 722–731, 1980.

- Davis H, Zerlin S. Acoustic relations of the human vertex potential. J Acoust Soc Am 39: 109–116, 1966.

- de Cheveigné A, Simon JZ. Denoising based on time-shift PCA. J Neurosci Methods 165: 297–305, 2007.

- Drullman R. The significance of temporal modulation frequencies for speech intelligibility. In: Listening to Speech: An Auditory Perspective, edited by Greenberg S, Ainsworth W. Mahwah, NJ: Erlbaum, 2006, p. 39–47.

- Drullman R, Festen JM, Plomp R. Effect of temporal envelope smearing on speech reception. J Acoust Soc Am 95: 1053–1064, 1994a.

- Drullman R, Festen JM, Plomp R. Effect of reducing slow temporal modulations on speech reception. J Acoust Soc Am 95: 2670–2680, 1994b.

- Fuentemilla Ll, Marco-Pallarés J, Grau C. Modulation of spectral power and of phase resetting of EEG contributes differentially to the generation of auditory event-related potentials. NeuroImage 30: 909–916, 2006.

- Gage N, Poeppel D, Roberts TP, Hickok G. Auditory evoked M100 reflects onset acoustics of speech sounds. Brain Res 814: 236–239, 1998.

- Giraud AL, Lorenzi C, Ashburner J, Wable J, Johnsrude I, Frackowiak R, Kleinschmidt A. Representation of the temporal envelope of sounds in the human brain. J Neurophysiol 84: 1588–1598, 2000.

- Goff WR, Matsumiya Y, Allison T, Goff GD. Cross-modality comparisons of average evoked potentials. In: Average Evoked Potentials: Methods, Results, and Evaluations, edited by Donchin E, Lindsley DB. Washington, DC: NASA, 1969, p. 95–141.

- Greenberg S. A multi-tier framework for spoken language. In: Listening to Speech: An Auditory Perspective, edited by Greenberg S, Ainsworth W. Mahwah, NJ: Erlbaum, 2006, p. 411–433.

- Greenberg S, Carvey H, Hitchcock L, Chang S. Temporal properties of spontaneous speech: a syllable-centric perspective. J Phonetics 31: 465–485, 2003.

- Hamada T. A neuromagnetic analysis of the mechanism for generating auditory evoked fields. Int J Psychophysiol 56: 93–104, 2005.

- Hari R, Pelizzone M, Mäkelä JP, Hällström J, Leinonen L, Lounasmaa OV. Neuromagnetic responses of the human auditory cortex to on- and offsets of noise bursts. Audiology 26: 31–43, 1987.

- Houtgast T, Steeneken H. A review of the MTF concept in room acoustics and its use for estimating speech intelligibility in auditoria. J Acoust Soc Am 77: 1069–1077, 1985.

- Howard MF, Poeppel D. Hemispheric asymmetry in mid and long latency neuromagnetic responses to single clicks. Hear Res 257: 41–52, 2009.

- Hu L, Boutros NN, Jansen BH. Evoked potential variability. J Neurosci Methods 178: 228–236, 2009.

- Jansen BH, Agarwal G, Hegde A, Boutros NN. Phase synchronization of the ongoing EEG and auditory EP generation. Clin Neurophysiol 114: 79–85, 2003.

- Jones SJ, Perez N. The auditory “C-process” of spectral profile analysis. Clin Neurophysiol 113: 1558–1565, 2002.

- Kayser C, Montemurro MA, Logothetis NK, Panzeri S. Spike-phase coding boosts and stabilizes information carried by spatial and temporal spike patterns. Neuron 61: 597–608, 2009.

- Liégeois-Chauvel C, Lorenzi C, Trébuchon A, Régis J, Chauvel P. Temporal envelope processing in the human left and right auditory cortices. Cereb Cortex 14: 731–740, 2004.

- Little MA, McSharry PE, Roberts SJ, Costello DAE, Moroz IM. Exploiting nonlinear recurrence and fractal scaling properties for voice disorder detection. Biomed Eng Online 6: 23, 2007.

- Lu T, Liang L, Wang X. Temporal and rate representations of time-varying signals in the auditory cortex of awake primates. Nat Neurosci 4: 1131–1138, 2001.

- Luo H, Poeppel D. Phase patterns of neuronal responses reliably discriminate speech in human auditory cortex. Neuron 54: 1001–1010, 2007.

- Lütkenhöner B, Steinsträter O. High-precision neuromagnetic study of the functional organization of the human auditory cortex. Audiol Neurootol 3: 191–213, 1998.

- Mäkelä JP, Hari R, Linnankivi A. Different analysis of frequency and amplitude modulations of a continuous tone in the human auditory cortex: a neuromagnetic study. Hear Res 27: 257–264, 1987.

- Mäkinen V, Tiitinen H, May P. Auditory event-related responses are generated independently of ongoing brain activity. NeuroImage 24: 961–968, 2005.

- Martin BA, Boothroyd A. Cortical, auditory, evoked potentials in response to change of spectrum and amplitude. J Acoust Soc Am 107: 2155–2161, 2000.

- Nikulin VV, Linkenkaer-Hansen K, Nolte G, Lemm S, Müller KR, Ilmoniemi RJ, Curio G. A novel mechanism for evoked responses in the human brain. Eur J Neurosci 25: 3146–3154, 2007.

- Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9: 97–113, 1971.

- Pantev C, Eulitz C, Hampton S, Ross B, Roberts LE. The auditory evoked “off” response: sources and comparison with the “on” and the “sustained” responses. Ear Hear 17: 255–265, 1996.

- Pantev C, Lütkenhöner B, Hoke M, Lehnertz K. Comparison between simultaneously recorded auditory-evoked magnetic fields and potentials elicited by ipsilateral, contralateral and binaural tone burst stimulation. Audiology 25: 54–61, 1986.

- Penny WD, Kiebel SJ, Kilner JM, Rugg MD. Event-related brain dynamics. Trends Neurosci 25: 387–389, 2002.

- Picton TW, Hillyard SA, Krausz HI, Galambos R. Human auditory evoked potentials. I. Evaluation of components. Electroencephalogr Clin Neurophysiol 36: 179–190, 1974.

- Poeppel D. The analysis of speech in different temporal integration windows: cerebral lateralization as “asymmetric sampling in time.” Speech Commun 41: 245–255, 2003.

- Pratt H, Bleich N, Mittelman N. The composite N1 component to gaps in noise. Clin Neurophysiol 116: 2648–2663, 2005.

- Pratt H, Starr A, Michalewski HJ, Dimitrijevic A, Bleich N, Mittelman N. Auditory-evoked potentials to frequency increase and decrease of high- and low-frequency tones. Clin Neurophysiol 120: 360–373, 2009.

- Remez RE, Rubin PE, Pisoni DB, Carrell TD. Speech perception without traditional speech cues. Science 212: 947–950, 1981.

- Riecke L, Esposito F, Bonte M, Formisano E. Hearing illusory sounds in noise: the timing of sensory-perceptual transformations in auditory cortex. Neuron 64: 550–561, 2009.

- Saberi K, Perrott DR. Cognitive restoration of reverse speech (Letter). Nature 398: 760, 1999.

- Sanders LD, Neville HJ. An ERP study of continuous speech processing. I. Segmentation, semantics, and syntax in native speakers. Cogn Brain Res 15: 228–240, 2003.

- Sauseng P, Klimesch W, Gruber WR, Hanslmayr S, Freunberger R, Doppelmayr M. Are event-related potential components generated by phase resetting of brain oscillations? A critical discussion. Neuroscience 146: 1435–1444, 2007.

- Sharma A, Dorman M. Neurophysiologic correlates of cross-language phonetic perception. J Acoust Soc Am 107: 2697–2703, 2000.

- Sharma A, Marsh C, Dorman M. Relationship between N1 evoked potential morphology and the perception of voicing. J Acoust Soc Am 108: 3030–3035, 2000.

- Smith ZM, Delgutte B, Oxenham AJ. Chimaeric sounds reveal dichotomies in auditory perception. Nature 416: 87–90, 2002.

- Stampfer HG, Bašar E. Does frequency analysis lead to better understanding of human event related potentials? Int J Neurosci 26: 181–196, 1985.

- Suppes P, Han B, Lu ZL. Brain-wave recognition of sentences. Proc Natl Acad Sci USA 95: 15861–15866, 1998.

- Yeung N, Bogacz R, Holroyd CB, Cohen JD. Detection of synchronized oscillations in the electroencephalogram: an evaluation of methods. Psychophysiology 41: 822–832, 2004.