Abstract

Children often have difficulty understanding speech in challenging listening environments. In the absence of peripheral hearing loss, these speech perception difficulties may arise from dysfunction at more central levels in the auditory system, including subcortical structures. We examined brainstem encoding of pitch in a speech syllable in 38 school-age children. In children with poor speech-in-noise perception, we find impaired encoding of the fundamental frequency and the second harmonic, two important cues for pitch perception. Pitch, an important factor in speaker identification, aids the listener in tracking a specific voice from a background of voices. These results suggest that the robustness of subcortical neural encoding of pitch features in time-varying signals is an important factor in determining success with speech perception in noise.

Keywords: speech-in-noise perception, brainstem encoding, fundamental frequency, harmonics, noise exclusion, pitch

Introduction

In our industrial society, increased noise levels can interfere with communication and learning. The noise levels and reverberation times in today's classrooms commonly exceed recommended levels (Summers and Leek, 1998; ANSI, 2002; Knecht et al., 2002; Krishnan and Gandour, 2009), hindering communication and putting children at risk for academic failure. In fact, classroom noise levels are directly related to scholastic achievement (Shield and Dockrell, 2003; Shield and Dockrell, 2008), and increases in noise of as little as 10 dB can result in a significant decrease in test scores. Some children seem to have more trouble adapting to background noise than others, and many explanations for these difficulties have been proposed, including the presence of auditory processing disorders (Moore, 2007; Lagace et al., 2010), language-based learning disorders (Bradlow et al., 2003; Ziegler et al., 2005; Ziegler et al., 2009), attention deficit disorders (Abdo et al., 2010), or noise exclusion deficits (Sperling et al., 2005). In the absence of peripheral hearing loss, these speech perception difficulties may arise from dysfunction at more central levels in the auditory system, including subcortical structures. In the current study, we explored the role of subcortical function in speech-in-noise (SIN) perception by measuring brainstem responses to a speech syllable in a group of children with a broad range of SIN abilities. We expected to see group differences between top and bottom SIN perceivers in their encoding of the acoustic cues linked to SIN perception, particularly those involving pitch.

Brainstem testing is ideally suited for pediatric evaluation, as it is objective, noninvasive and pre-attentive (Hall and Mueller, 1997). The auditory brainstem response to speech (speech-ABR) is a highly replicable representation of the stimulus (Kraus and Nicol, 2005; Song et al., in press-a); as with click-evoked responses, latency differences on the order of fractions of a millisecond are clinically significant (Wible et al., 2004). The brainstem's representation of the stimulus is remarkably robust; in addition to the brainstem response waveform being visually similar to the stimulus waveform (Kraus et al., 2009; Krishnan and Gandour, 2009), the audio file of the brainstem response is also acoustically similar to the stimulus (Galbraith et al., 1995). Importantly, brainstem encoding of speech features is known to be experience-dependent (Krishnan et al., 2005; Song et al., 2008; Tzounopoulos and Kraus, 2009) and relates to cortical (Banai et al., 2005; Wible et al., 2005; Musacchia et al., 2009) and cognitive processes such as language (Krishnan et al., 2005; Banai et al., 2009; Krishnan and Gandour, 2009) and music experience (reviewed in Chandrasekaran and Kraus, 2010a).

The brainstem response to speech syllables can be used as a neural index of asynchrony in impaired populations. For example, children with learning impairments (Cunningham et al., 2001; Wible et al., 2004; Krizman et al., 2010) and reading disabilities (Banai et al., 2005; Banai et al., 2009; Chandrasekaran et al., 2009a; Hornickel et al., 2009) exhibit neural asynchrony, or temporal jitter, leading to delayed peak timing and/or broadened peaks compared to controls. These response differences are especially apparent in the response to the syllable onset and to the consonant-vowel formant transition.

Given the demonstrated utility of the brainstem response in measuring neural asynchrony in children with language-based learning impairments and the findings that SIN perception relates to brainstem encoding of stop consonants (Hornickel et al., 2009; Anderson et al., 2010), we hypothesized that the speech-ABR may provide a biological marker for children with impaired SIN perception. Although previous studies have not demonstrated a relationship between fundamental frequency (F0) encoding and reading (Banai et al., 2009), the strength of the F0 plays an important role in SIN perception in young adults (Song et al., in press-b).

Many acoustic ingredients contribute to the perception of pitch (Fellowes et al., 1997). In particular, the F0 and the lower harmonics provide essential acoustic cues (Meddis and O'Mard, 1997), and pitch cues, along with spatial, timing, harmonic, and other cues, aid in speaker identification and object formation, two processes that are integral for SIN perception (Oxenham, 2008; Shinn-Cunningham and Best, 2008). Object formation (grouping of spectrotemporal cues to form a perceptual identity) is a necessary step in stream segregation, a process that allows the listener to extract meaning from an auditory scene consisting of simultaneous inputs (Bregman, 1990). When listening to one voice in a background of voices and other noises, the listener must perceive that voice as a distinct auditory stream. According to Oxenham (2008), auditory streaming involves two stages: simultaneous grouping (perceiving simultaneously-presented sounds as separate entities) and sequential grouping (binding together of elements that belong to the same sound source). The F0 directly influences both sequential and simultaneous grouping. For example, when listeners are presented with two vowels played simultaneously, vowel identification improves as the F0 differences increase (Brokx and Nooteboom, 1982; Scheffers, 1983; Assmann and Summerfield, 1987; Culling and Darwin, 1993; de Cheveigne, 1997), and the extent to which F0 separation aids identification is related to F0 discrimination thresholds in normal-hearing and hearing-impaired listeners (Summers and Leek, 1998). Similarly, when listening to competing sentences, increasing the F0 separation between concurrent sentences improves performance (Brokx and Nooteboom, 1982; Bird and Darwin, 1998). The importance of the lower harmonics in pitch perception has also been demonstrated in behavioral (Moore et al., 1985; Lin and Hartmann, 1998; Moore et al., 2005) and electrophysiological studies (Alain et al., 2001; Krishnan et al., 2005). 
This behavioral advantage with greater separation in the F0 may reflect neurobiological processes at both cortical (Alain et al., 2005) and subcortical levels (Song et al., in press-b). Thus, impairments in object formation and pitch representation may be a factor in the SIN perception deficits experienced by clinical populations such as children with language-based learning disabilities and hearing-impaired listeners.

We hypothesized that poor SIN perception results in part from impaired neural mechanisms responsible for pitch encoding and that we would therefore see neural correlates of degraded pitch representation in the brainstem responses of normal-hearing children with poor SIN perception.

Materials and methods

Participants

Participants were recruited from public and private schools in the Chicago area as part of an on-going study examining neural encoding of speech in children. Thirty-eight typically developing children (ages 8 to 14; 22 male) with a wide range of SIN perception abilities participated. All children underwent otoscopic examination and were excluded if they had tympanic membrane perforation, cerumen occlusion, or middle ear effusion. Audiometric thresholds were measured at octave intervals from 250 to 8000 Hz using air conduction and from 500 to 4000 Hz using bone conduction. Participants were excluded if they had pure-tone thresholds greater than 15 dB in either ear or air-bone gaps ≥ 15 dB at two or more frequencies in either ear. Inclusionary criteria included normal Wave V click-evoked ABR latencies and normal cognitive abilities as evidenced by standard scores of ≥ 85 on the Wechsler Abbreviated Scales of Intelligence (WASI) Verbal, Performance and Full Scale scores (Zhu and Garcia, 1999). Based on these criteria, no children were excluded from participating in this study. The children were paid for their participation. Northwestern University's institutional review board approved all experimental procedures.

Behavioral Measures

Speech understanding in noise was evaluated with a well-vetted, commonly used clinical instrument, the Hearing in Noise Test (HINT; Bio-logic Systems Corp., Mundelein, IL). HINT uses the Bamford-Kowal-Bench (BKB) phonetically balanced sentences (Bench et al., 1979), which are appropriate for use with children at the first-grade reading level and above, and age-corrected percentile scores are available. HINT uses an adaptive paradigm that varies the intensity of the target sentence relative to the fixed speech-shaped noise masker until a threshold signal-to-noise ratio (SNR) is determined. Participants are told to ignore the noise and repeat lists of 10 BKB sentences. The experimenter counts a sentence as correct only when all words are repeated correctly. The full HINT protocol includes three conditions: HINT-Front (target sentences and noise are presented from a single loudspeaker placed directly in front of the child), HINT-Right (target sentences are presented from the loudspeaker in front of the child, and speech-shaped noise is presented from a loudspeaker placed 90 degrees to the right of the child), and HINT-Left (same as HINT-Right, except that the speech-shaped noise is presented from a loudspeaker placed 90 degrees to the left of the child). We chose to use the HINT-Front subtest in our analysis in order to eliminate the effect of the spatial cues available in the HINT-Right and HINT-Left conditions, thus forcing the listeners to rely only on acoustic cues such as pitch. Because previous studies have demonstrated a relationship between working memory and SIN performance (Pichora-Fuller and Souza, 2003; Heinrich et al., 2007; Parbery-Clark et al., 2009b), we assessed working memory using the Woodcock Johnson III Digits Reversed subtest (Woodcock et al., 2001) to determine whether memory was a factor in our results.

Participant Groups

The top SIN group included children with HINT-Front SNR thresholds ≤ -1.0 dB (N = 18), and the bottom SIN group included children with HINT-Front SNR thresholds > -1.0 dB (N = 20); lower thresholds indicate better SIN perception. There were no significant differences between the top and bottom SIN groups for pure-tone average audiometric thresholds (0.5 to 4 kHz) (t = -0.500, p = 0.621), click-evoked ABR latencies (t = -0.812, p = 0.428), WASI Full Scale IQ standard scores (t = 1.272, p = 0.212), or Digits Reversed (t = -0.471, p = 0.640). See Table 1 for means and standard deviations for each measure.

Table 1.

The means and standard deviations for top and bottom SIN groups are listed for HINT-Front scores, click latencies, WASI standard scores, WJIII Digits Reversed standard scores, and pure-tone averages (.5 to 4 kHz).

| Group | HINT-Front (dB SNR) | Click latency, Wave V (ms) | WASI Full Scale (standard score) | Digits Reversed (standard score) | PTA, 0.5–4 kHz (dB HL) |
|---|---|---|---|---|---|
| Total group, mean (SD) | -1.11 (1.42) | 5.89 (0.11) | 125.21 (13.34) | 111.11 (17.82) | 1.85 (3.53) |
| Top SIN, mean (SD) | -2.23 (1.19) | 5.90 (0.12) | 122.33 (12.60) | 112.55 (11.87) | 2.13 (4.21) |
| Bottom SIN, mean (SD) | -0.09 (0.63) | 5.86 (0.07) | 127.80 (13.79) | 109.80 (22.10) | 1.45 (2.37) |

Electrophysiology

Stimuli

The speech syllable [da] is a six-formant syllable synthesized at a 20 kHz sampling rate using a Klatt synthesizer (Klatt, 1980). The duration of the syllable is 170 ms, the first 5 ms of which is a noise burst representing the initial unvoiced portion of the stop consonant preceding voicing. The formant transition from the [d] to the [a] is 50 ms in duration and is characterized by the following formant frequency trajectories: linearly rising F1 (400 to 720 Hz) and linearly falling F2 (1,700 to 1,240 Hz) and F3 (2,580 to 2,500 Hz). F4 (3,300 Hz), F5 (3,750 Hz), and F6 (4,900 Hz) remain constant for the duration of the stimulus. The steady-state region has a constant F0 of 100 Hz as well as a constant F1 (720 Hz), F2 (1,240 Hz), F3 (2,500 Hz), F4 (3,300 Hz), F5 (3,750 Hz), and F6 (4,900 Hz).
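As an illustration only, the linear formant trajectories described above can be sketched in Python. The endpoint frequencies and the 50-ms transition come from the stimulus description; the function names, and the assumption that the transition is timed from 0 ms, are hypothetical and are not taken from the synthesizer settings.

```python
# Transition endpoints in Hz (start, end), taken from the stimulus description.
TRANSITIONS = {"F1": (400, 720), "F2": (1700, 1240), "F3": (2580, 2500)}

def formant_hz(t_ms, start_hz, end_hz, transition_ms=50.0):
    """Linear trajectory from start_hz to end_hz over the transition,
    then constant at end_hz for the steady state.
    Assumption: the transition is timed from t = 0 ms."""
    if t_ms <= 0.0:
        return float(start_hz)
    if t_ms >= transition_ms:
        return float(end_hz)
    return start_hz + (end_hz - start_hz) * (t_ms / transition_ms)

def formants_at(t_ms):
    """F1 to F3 (Hz) at a given time; F4 to F6 are constant and omitted."""
    return {name: formant_hz(t_ms, lo, hi) for name, (lo, hi) in TRANSITIONS.items()}
```

Under these assumptions, `formants_at(25)` gives the midpoint of each trajectory, and any time at or beyond 50 ms returns the steady-state values.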

Recording

Using NeuroScan Acquire 4.3 (NeuroScan Compumedics Inc., Charlotte, NC), evoked brainstem responses were differentially recorded from Cz to right earlobe with forehead as ground at a sampling rate of 20 kHz. Stimuli were presented in the right ear through an insert earphone via an electromagnetically-shielded transducer (ER-3, Etymotic Research, Elk Grove Village, IL) using the stimulus presentation software NeuroScan Stim2. The left ear was unoccluded enabling the participants to hear movies or cartoons of their choice with the sound presented at < 40 dB SPL. The use of movies ensured participant cooperation and enabled them to sit quietly for two-hour sessions.

The [da] syllable was presented at 80 dB SPL with a 60 ms inter-stimulus interval using interleaved alternating polarities. Because the two polarities are 180° out of phase, they can be added together to cancel out the cochlear microphonic and stimulus artifact (Gorga et al., 1985; Russo et al., 2004). Our approach emphasizes the envelope following response (Aiken and Picton, 2008; Skoe and Kraus, 2010), which does not invert between the two stimulus polarities.
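The logic of combining the two polarities can be illustrated with a toy Python sketch. It assumes a response made of one component that does not invert with stimulus polarity (standing in for the envelope following response) and one that does invert (standing in for the cochlear microphonic and stimulus artifact). The 700 Hz inverting component, the amplitudes, and the function names are invented for illustration; the division by two is a scaling choice of this sketch.

```python
import math

FS = 20000  # sampling rate in Hz, as in the recording description

def simulate(polarity, n=2000):
    """Toy response to one stimulus polarity (+1 or -1): a 100 Hz part
    that is identical for both polarities plus a 700 Hz part whose sign
    follows the stimulus polarity."""
    out = []
    for i in range(n):
        t = i / FS
        non_inverting = math.sin(2 * math.pi * 100 * t)
        inverting = 0.5 * math.sin(2 * math.pi * 700 * t)
        out.append(non_inverting + polarity * inverting)
    return out

def add_polarities(resp_a, resp_b):
    """Adding cancels the inverting part and keeps the non-inverting part."""
    return [(a + b) / 2 for a, b in zip(resp_a, resp_b)]

def subtract_polarities(resp_a, resp_b):
    """Subtracting does the opposite: it isolates the inverting part."""
    return [(a - b) / 2 for a, b in zip(resp_a, resp_b)]
```

In this toy case, `add_polarities(simulate(+1), simulate(-1))` recovers only the 100 Hz component, which is the behavior the added-polarity approach relies on.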

Data Analysis

Following data collection, the continuous response was filtered from 70 to 2000 Hz (12 dB/octave, zero phase-shift) and averaged over a window of -40 to 190 ms, with time 0 corresponding to the stimulus onset. This bandpass range was chosen to minimize low-frequency myogenic noise and cortical activity and to include energy that would be expected in the brainstem response given its phase-locking properties (Chandrasekaran et al., 2009a; Skoe and Kraus, 2010). An artifact reject criterion of ±35 μV was applied, and for each stimulus polarity 3000 artifact-free responses were averaged together. The final average was generated by adding the averaged responses to the two polarities. To ensure response replicability, two sub-averages, representing the first half and last half of the recordings, were created for comparison purposes. The two sub-averages and final added average waveforms were overlaid, and the response was considered reliable if the three waveforms were visually aligned and the first and last halves of the recording were replicable as quantified by a correlation coefficient of at least 0.55.
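The rejection, averaging, and replicability steps can be sketched as follows. This is a minimal Python illustration rather than the NeuroScan processing chain: it assumes the epochs are already filtered and expressed in microvolts, treats the ±35 μV limit as inclusive, and omits the visual overlay check. All function names are hypothetical.

```python
import math

def reject_artifacts(epochs, limit_uv=35.0):
    """Keep only epochs in which every sample lies within +/- limit_uv."""
    return [ep for ep in epochs if max(abs(v) for v in ep) <= limit_uv]

def average(epochs):
    """Point-by-point mean across epochs of equal length."""
    n = len(epochs)
    return [sum(column) / n for column in zip(*epochs)]

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def replicability(epochs):
    """Correlate the sub-average of the first half of the recording
    with the sub-average of the last half."""
    half = len(epochs) // 2
    return pearson_r(average(epochs[:half]), average(epochs[half:]))
```

A recording would then count as replicable under the stated criterion when `replicability(reject_artifacts(epochs))` is at least 0.55.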

The SNR of the final average response, which was assessed by dividing the root mean square (RMS) of the response portion (0 to 190 ms) of the waveform by the RMS of the prestimulus portion (-40 to 0 ms), was used as a criterion for determining if the response was adequately free of extraneous myogenic and electrical noise. All subjects had a minimum SNR of 1.35 (mean: 2.31, S.D.: 0.54).
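The SNR criterion amounts to a ratio of two RMS values. A minimal Python sketch, assuming the averaged waveform is a list of samples spanning -40 to 190 ms at the 20 kHz sampling rate (so the first 800 samples are prestimulus), is given below; the function names are hypothetical.

```python
import math

def rms(samples):
    """Root mean square of a sequence of samples."""
    return math.sqrt(sum(v * v for v in samples) / len(samples))

def response_snr(waveform, fs_hz=20000, epoch_start_ms=-40.0):
    """RMS of the post-stimulus portion (0 ms onward) divided by the
    RMS of the prestimulus portion (epoch start to 0 ms)."""
    n_pre = round(-epoch_start_ms * fs_hz / 1000)  # samples before time 0
    prestimulus, response = waveform[:n_pre], waveform[n_pre:]
    return rms(response) / rms(prestimulus)
```

A value above 1 indicates that the response period carries more energy than the prestimulus baseline.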

Measurement of the brainstem response

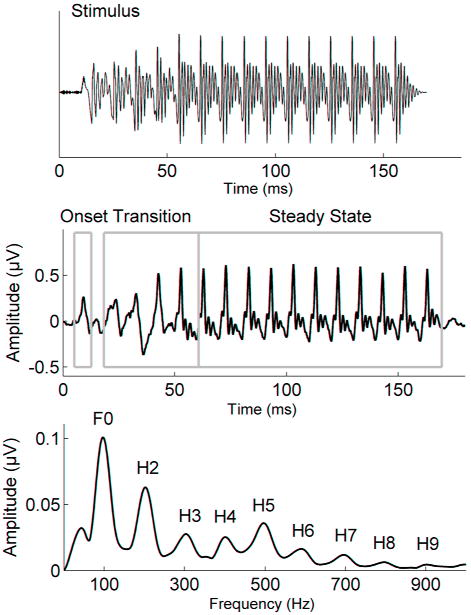

The brainstem evoked response to this 170-ms [da] syllable is characterized by two general components: the onset response and the frequency following response (FFR) to the consonant-vowel transition and vowel. Neural phase locking that underlies the FFR represents the periodicity of the stimulus up to about 1500 Hz, the phase locking limit of the brainstem (Moushegian et al., 1973; Chandrasekaran and Kraus, 2010b). The onset response occurs within 8 to 11 ms after the sound begins and is analogous to Wave V in the click-evoked ABR (Song et al., 2006; Chandrasekaran et al., 2009a). The FFR includes the transition response and the steady-state response. The transition response occurs within the range of 20 to 60 ms and corresponds to the consonant-vowel transition in the stimulus. The steady-state response, from 60 to 170 ms, corresponds to the vowel [a]. The transition and the steady state are characterized by large, periodic peaks occurring approximately every 10 ms, corresponding to the periodicity of the syllable's fundamental frequency of 100 Hz. The morphology of the transition peaks becomes sharper and more defined approaching 60 ms, the point at which the formants become constant and the response becomes less variable. The amplitudes of the peaks in the transition are modulated by changing formant frequencies (Johnson et al., 2008), while the peaks during the steady state are relatively stable in amplitude. Figure 1, middle, depicts the major regions of the response.

Figure 1.

Top: The stimulus waveform of the speech syllable [da]. Middle: Grand average brainstem responses (N = 38) to the speech syllable [da]. Bottom: The spectrum of the brainstem response to the speech syllable [da] contains energy at the fundamental frequency (F0 = 100 Hz) and integer multiples.

The spectral energy in the frequency domain was analyzed in MATLAB (The MathWorks, Inc., Natick, MA) by computing fast Fourier transforms (FFTs) with zero padding to increase spectral resolution (Skoe and Kraus, 2010). Average spectral amplitudes were calculated for the formant transition period and the steady-state period using 100 Hz bins centered around the fundamental frequency (100 Hz) and its harmonics (integer multiples of 100 Hz). The resulting spectral magnitudes provided information regarding the representation of F0 and its harmonics in the brainstem response as seen in Figure 1, bottom.
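The binned spectral measure can be illustrated with a short Python sketch (the study itself used MATLAB). Evaluating the discrete Fourier transform on a frequency grid finer than the natural resolution of the segment plays the same role as zero-padding before an FFT. The rectangular window, the grid spacing, the amplitude scaling, and the function names are assumptions of this sketch; the text does not specify them.

```python
import cmath
import math

def dft_magnitude(signal, freq_hz, fs_hz):
    """Single-sided amplitude of `signal` at one frequency, computed by
    direct evaluation of the DFT sum (no window applied)."""
    w = -2j * math.pi * freq_hz / fs_hz
    acc = sum(v * cmath.exp(w * n) for n, v in enumerate(signal))
    return 2 * abs(acc) / len(signal)

def bin_amplitude(signal, center_hz, fs_hz, bin_width_hz, resolution_hz=1.0):
    """Mean amplitude across a bin centered on center_hz, sampled every
    resolution_hz (an assumed stand-in for the zero-padded FFT grid)."""
    half = bin_width_hz / 2
    n_steps = int(round(bin_width_hz / resolution_hz))
    freqs = [center_hz - half + k * resolution_hz for k in range(n_steps + 1)]
    return sum(dft_magnitude(signal, f, fs_hz) for f in freqs) / len(freqs)

def harmonic_amplitudes(signal, fs_hz, f0_hz=100.0, n_harmonics=10, bin_width_hz=100.0):
    """Binned amplitude for F0 (index 0) and each harmonic up to H10."""
    return [bin_amplitude(signal, f0_hz * h, fs_hz, bin_width_hz)
            for h in range(1, n_harmonics + 1)]
```

Applied separately to the transition and steady-state segments of a response, `harmonic_amplitudes` would return one value per harmonic, with the first two entries corresponding to F0 and H2.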

Statistical Analyses

Multivariate analyses of variance (MANOVA) were conducted using SPSS (SPSS Inc., Chicago, IL) with group (top SIN vs. bottom SIN) serving as the between-group independent variable. Consistent with our hypotheses, we expected to see significant differences in the low frequencies between the SIN groups. We therefore conducted two separate MANOVAs, one using the harmonic amplitudes associated with pitch (F0 and H2) and one using harmonics not associated with pitch (H3–H10). Post-hoc t-tests were employed where appropriate. To assess the relationships between behavior and brainstem function, Pearson r correlations were calculated for the entire group between F0/H2 and the HINT-Front SNR scores.

Results

Transition Region

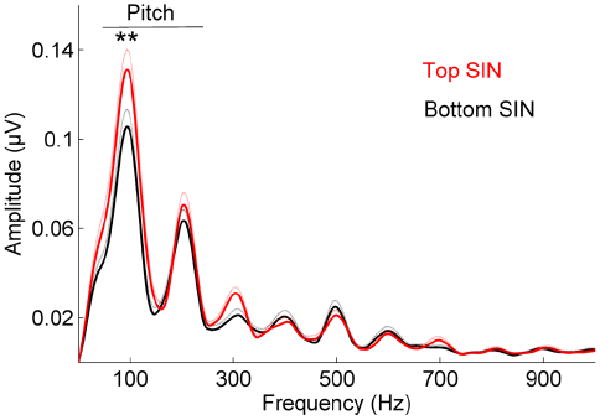

We found significant differences in low-frequency spectral encoding between top and bottom SIN perceivers in the region of the response corresponding to the formant transition period. There was a main effect of group in the frequency regions associated with pitch, with the top SIN group having greater spectral magnitudes for the F0 and H2 compared to the bottom SIN group (F(2,35) = 3.926, p = 0.029), and post-hoc t-tests revealed significant SIN group differences for the F0 (F(1,36) = 8.057, p = 0.007) but not for H2 (F(1,36) = 0.547, p = 0.464) (Figure 2). There was no main effect of group when we included the higher harmonics (H3 – H10) as dependent variables in the MANOVA (F(10,27) = 1.727, p = 0.126).

Figure 2.

Comparison of response spectra in top (red) and bottom (black) SIN perceivers. Group differences are found in the lower harmonics (F(2,35) = 3.926, p = 0.029). Standard errors are plotted with dotted lines.

Steady State Region

The top and bottom SIN groups did not differ in their brainstem responses to the steady-state portion of the stimulus, either for F0 and H2 (F(2,35) = 1.298, p = 0.286) or for the higher harmonics (F(10,27) = 1.232, p = 0.316).

There were no significant differences in RMS (t= -0.846, p = 0.403) or SNR (t = 0.798, p = 0.430) between the groups. Therefore, the group differences in F0 and H2 encoding did not result from differences in overall neural activity.

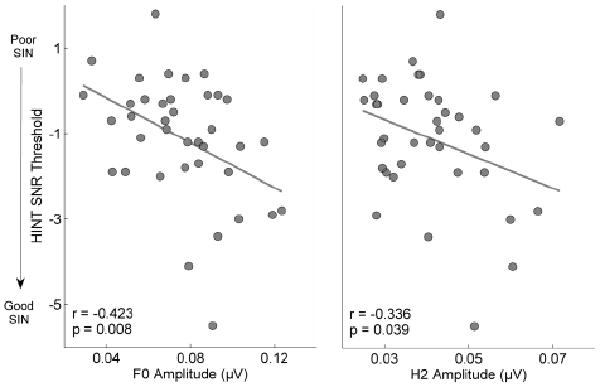

Correlations

Pearson r correlations using the entire group (N = 38) were significant between HINT-Front and the spectral magnitudes of F0 (r = -0.424, p = 0.008) and H2 (r = -0.336, p = 0.039) in the response period corresponding to the formant transition, indicating that better performance on SIN tasks is associated with greater amplitudes of the frequency components contributing to pitch (Figure 3). Working memory, assessed with the Woodcock Johnson III Digits Reversed subtest, was not related to performance on the HINT (r = -0.114, p = 0.495). Furthermore, working memory did not drive the relationships between HINT and the pitch-related brainstem measures; both correlations remained significant when partialling out the effect of working memory (F0: r = -0.430, p = 0.008; H2: r = -0.334, p = 0.044).
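For readers unfamiliar with partialling out a covariate, the first-order partial correlation can be written directly in terms of the three pairwise Pearson correlations. The Python sketch below is illustrative only (the reported analyses were run in SPSS), and the function names are hypothetical.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def partial_r(x, y, z):
    """Correlation between x and y after removing the linear effect of z
    from both, for example x = HINT-Front threshold, y = F0 magnitude,
    z = Digits Reversed score."""
    rxy = pearson_r(x, y)
    rxz = pearson_r(x, z)
    ryz = pearson_r(y, z)
    return (rxy - rxz * ryz) / math.sqrt((1 - rxz ** 2) * (1 - ryz ** 2))
```

When the covariate is uncorrelated with both measures, the partial correlation equals the ordinary correlation, which matches the pattern reported here, where the coefficients changed little after controlling for working memory.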

Figure 3.

HINT SNR thresholds are correlated with magnitudes of F0 (r = -0.424, p = 0.008) and H2 (r = -0.336, p = 0.039), indicating a relationship between SIN perception and subcortical encoding of pitch.

Discussion

The novel finding of the current study is that the strength of subcortical pitch encoding is a factor in successful hearing in noise. Pitch (determined by the low harmonics) has previously been identified in behavioral studies as an important component of object formation and speech-in-noise perception; the present study provides a neural mechanism for this observation. This neural mechanism was evident only in the response to the time-varying formant transition, a region that is particularly important for syllable identification and that is most vulnerable to masking by background noise (Tallal and Piercy, 1974; Tallal and Stark, 1981; Basu et al., 2010).

Interestingly, as noted previously, stronger brainstem encoding of the F0 has not been associated with good reading (Banai et al., 2009); good readers have greater representation of formant-related higher harmonics (e.g., H4-H7) in brainstem responses than poor readers, but the lower harmonics have not been implicated in poor reading. The differing importance of pitch-related lower harmonics for SIN perception and formant-related higher harmonics for reading suggests the existence of distinct neural signatures for these tasks.

Pitch cues may serve as perceptual anchors formed in response to stimulus regularities, and perceptual anchors enable the comparative discriminations needed for SIN perception (Ahissar et al., 2006; Ahissar, 2007; Chandrasekaran et al., 2009a). Ahissar et al. (2006) compared normal-learning children to children who had dyslexia combined with oral language disorders and found that the children with dyslexia had poorer SIN performance only when using small sets of stimuli but not when using large sets. They reasoned that the small set of stimuli allowed normal-learning children to form perceptual anchors, thereby profiting from repetition of the stimuli in order to improve performance. This context-dependent enhancement was recently noted in children using speech-evoked brainstem responses (Chandrasekaran et al., 2009a), in which greater brainstem representation of pitch-related spectral amplitudes in a repetitive vs. a variable stimulus context was associated with better SIN perception. The ability to modulate representation of pitch based on stimulus regularities is important for “tagging” relevant speech features, a key component of object identification and SIN perception. Therefore, in addition to difficulties with pitch perception and subsequent auditory grouping, children with diminished F0 and H2 representation are likely to have reduced ability to lock onto the pitch of the target signal, contributing to poorer SIN performance.

Subcortical spectral magnitude likely reflects an interplay of central and peripheral processes. Brainstem responses to speech are determined by the acoustics of the incoming signal, and peripheral sensory processes may be impacted by environmental noise and/or hearing loss. However, cognitive processes such as attention, memory and object formation likely also affect subcortical encoding of sound (Lukas, 1981; Bauer and Bayles, 1990; Galbraith et al., 1997; Galbraith et al., 1998; Parbery-Clark et al., 2009a). When a listener judges a signal as important, auditory attention works to extract relevant signal elements from the competing background noise and stores them in working memory (Johnson and Zatorre, 2005). The cortex then uses this information to make predictions regarding the most relevant features of the stimulus, likely resulting in corticofugal enhancement in lower structures (Ahissar and Hochstein, 2004; Musacchia et al., 2007; Wong et al., 2007; de Boer and Thornton, 2008; Nahum et al., 2008; Song et al., 2008; Chandrasekaran et al., 2009b; Hill and Miller, 2010). Subcortical enhancement of relevant features in turn provides improved signal quality to the auditory cortex. Thus, children with poor SIN perception likely have deficient encoding of sound due in part to a failure of cognitively based processes such as attention, memory, and noise exclusion to shape effective subcortical sensory function. The differences in F0 representation were seen only in response to the time-varying transition region of the stimulus, likely reflecting a selective enhancement of this response component rather than an overall gain effect. This kind of top-down selective enhancement has also been proposed to explain differences between musicians' and non-musicians' subcortical responses to specific salient and behaviorally relevant aspects of the signal (Lee et al., 2009; Strait et al., 2009; Kraus and Chandrasekaran, 2010).

It would be useful to determine which children have biological signatures that may underlie an excessive difficulty hearing in background noise. Perhaps these children could benefit more from auditory training programs if the underlying issue (i.e., noise exclusion) was more directly addressed. Moreover, training gains should transfer to typical learning environments that tend to be noisy (e.g. classrooms). Consequently, some children may benefit from speech-in-noise training, training to strengthen the detection of relevant signals from complex soundscapes (e.g. music) and/or the use of augmentative communication devices (e.g., wireless assistive listening devices that provide an enhanced SNR). Objective neural indices, such as auditory brainstem responses, can aid the development and ongoing assessment of individual-specific training approaches that are needed to address the heterogeneous disorders associated with difficulty hearing speech in noise.

Acknowledgments

This work was funded by the National Institutes of Health (RO1 DC01510) and the Hugh Knowles Center of Northwestern University. We would like to thank Trent Nicol, Judy Song, Jane Hornickel, Alexandra Parbery-Clark, Jennifer Krizman, and Kyung Myun Lee for their helpful comments and suggestions regarding the manuscript. We would especially like to thank the children and their families who participated in the study.

Abbreviations

- SIN: speech-in-noise
- F0: fundamental frequency
- H2: second harmonic
- HINT: Hearing in Noise Test
- FFT: fast Fourier transform
- ABR: auditory brainstem response
- SNR: signal-to-noise ratio
- RMS: root mean square


References

- Abdo AGR, Murphy CFB, Schochat E. Habilidades auditivas em crianças com dislexia e transtorno do déficit de atenção e hiperatividade. Pró-Fono Revista de Atualização Científica. 2010;22:25–30. [Google Scholar]

- Ahissar M. Dyslexia and the anchoring-deficit hypothesis. Trends Cog Sci. 2007;11:458–465. doi: 10.1016/j.tics.2007.08.015. [DOI] [PubMed] [Google Scholar]

- Ahissar M, Hochstein S. The reverse hierarchy theory of visual perceptual learning. Trends Cog Sci. 2004;8:457–464. doi: 10.1016/j.tics.2004.08.011. [DOI] [PubMed] [Google Scholar]

- Ahissar M, Lubin Y, Putter-Katz H, Banai K. Dyslexia and the failure to form a perceptual anchor. Nat Neurosci. 2006;9:1558–1564. doi: 10.1038/nn1800. [DOI] [PubMed] [Google Scholar]

- Aiken SJ, Picton TW. Envelope and spectral frequency-following responses to vowel sounds. Hear Res. 2008;245:35–47. doi: 10.1016/j.heares.2008.08.004. [DOI] [PubMed] [Google Scholar]

- Alain C, Arnott SR, Picton TW. Bottom-up and top-down influences on auditory scene analysis: Evidence from event-related brain potentials. J Exp Psych-Human Perception and Performance. 2001;27:1072–1089. doi: 10.1037//0096-1523.27.5.1072. [DOI] [PubMed] [Google Scholar]

- Alain C, Reinke K, He Y, Wang C, Lobaugh N. Hearing two things at once: Neurophysiological indices of speech segregation and identification. J Cog Neurosci. 2005;17:811–818. doi: 10.1162/0898929053747621. [DOI] [PubMed] [Google Scholar]

- Anderson S, Skoe E, Chandrasekaran B, Kraus N. Neural timing is linked to speech perception in noise. J Neurosci. 2010;30:4922–4926. doi: 10.1523/JNEUROSCI.0107-10.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ansi. Acoustical performance criteria, design requirements and guidelines for schools. In: Institute, A. N. S., editor. ANSI S12.60. 2002. [Google Scholar]

- Assmann PF, Summerfield Q. Perceptual segregation of concurrent vowels. J Acoust Soc Am. 1987;82:S120. [Google Scholar]

- Banai K, Hornickel J, Skoe E, Nicol T, Zecker S, Kraus N. Reading and subcortical auditory function. Cereb Cort. 2009;19:2699–2707. doi: 10.1093/cercor/bhp024. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Banai K, Nicol T, Zecker SG, Kraus N. Brainstem timing: Implications for cortical processing and literacy. J Neurosci. 2005;25:9850–9857. doi: 10.1523/JNEUROSCI.2373-05.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Basu M, Krishnan A, Weber-Fox C. Brainstem correlates of temporal auditory processing in children with specific language impairment. Dev Sci. 2010;13:77–91. doi: 10.1111/j.1467-7687.2009.00849.x. [DOI] [PubMed] [Google Scholar]

- Bauer LO, Bayles RL. Precortical filtering and selective attention: An evoked potential analysis. Biol Psych. 1990;30:21–33. doi: 10.1016/0301-0511(90)90088-e. [DOI] [PubMed] [Google Scholar]

- Bench J, Kowal A, Bamford J. The BKB (Bamford-Kowal-Bench) sentence lists for partially-hearing children. Brit J Audiol. 1979;13:108–112. doi: 10.3109/03005367909078884. [DOI] [PubMed] [Google Scholar]

- Bird J, Darwin CJ. Effects of a difference in fundamental frequency in separating two sentences. In: Palmer AR, Rees A, Summerfield AQ, Meddis R, editors. Psychophysical and physiological advances in hearing. London: Whurr; 1998. [Google Scholar]

- Bradlow AR, Kraus N, Hayes E. Speaking clearly for children with learning disabilities: Sentence perception in noise. J Speech Lang Hear Res. 2003;46:80–97. doi: 10.1044/1092-4388(2003/007). [DOI] [PubMed] [Google Scholar]

- Bregman AS. Auditory scene analysis. Cambridge: MIT; 1990. [Google Scholar]

- Brokx JP, Nooteboom S. Intonation and the perceptual separation of simultaneous voices. J Phonetics. 1982;10:23–36.

- Chandrasekaran B, Hornickel J, Skoe E, Nicol TG, Kraus N. Context-dependent encoding in the human auditory brainstem relates to hearing speech in noise: Implications for developmental dyslexia. Neuron. 2009a;64:311–319. doi: 10.1016/j.neuron.2009.10.006.

- Chandrasekaran B, Kraus N. Music, noise-exclusion, and learning disabilities. Music Perception. 2010a;27:297–306.

- Chandrasekaran B, Kraus N. The scalp-recorded brainstem response to speech: Neural origins and plasticity. Psychophysiology. 2010b;47:236–246. doi: 10.1111/j.1469-8986.2009.00928.x.

- Chandrasekaran B, Krishnan A, Gandour JT. Relative influence of musical and linguistic experience on early cortical processing of pitch contours. Brain Lang. 2009b;108:1–9. doi: 10.1016/j.bandl.2008.02.001.

- Culling JF, Darwin CJ. Perceptual separation of simultaneous vowels: Within and across-formant grouping by f0. J Acoust Soc Am. 1993;93:3454–3467. doi: 10.1121/1.405675.

- Cunningham J, Nicol TG, Zecker SG, Bradlow AR, Kraus N. Neurobiologic responses to speech in noise in children with learning problems: Deficits and strategies for improvement. Clin Neurophysiol. 2001;112:758–767. doi: 10.1016/s1388-2457(01)00465-5.

- De Boer J, Thornton ARD. Neural correlates of perceptual learning in the auditory brainstem: Efferent activity predicts and reflects improvement at a speech-in-noise discrimination task. J Neurosci. 2008;28:4929–4937. doi: 10.1523/JNEUROSCI.0902-08.2008.

- De Cheveigne A. Concurrent vowel identification. III. A neural model of harmonic interference cancellation. J Acoust Soc Am. 1997;101:2857–2865.

- Fellowes J, Remez R, Rubin P. Perceiving the sex and identity of a talker without natural vocal timbre. Perception & Psychophysics. 1997;59:839–849. doi: 10.3758/bf03205502.

- Galbraith GC, Arbagey PW, Branski R, Comerci N, Rector PM. Intelligible speech encoded in the human brain stem frequency-following response. Neuroreport. 1995;6:2363–2367. doi: 10.1097/00001756-199511270-00021.

- Galbraith GC, Bhuta SM, Choate AK, Kitahara JM, Mullen TAJ. Brain stem frequency-following response to dichotic vowels during attention. Neuroreport. 1998;9:1889–1893. doi: 10.1097/00001756-199806010-00041.

- Galbraith GC, Jhaveri SP, Kuo J. Speech-evoked brainstem frequency-following responses during verbal transformations due to word repetition. Electroencephalography Clin Neurophysiol. 1997;102:46–53. doi: 10.1016/s0013-4694(96)96006-x.

- Gorga M, Abbas P, Worthington D. Stimulus calibration in ABR measurements. In: Jacobsen J, editor. The Auditory Brainstem Response. San Diego: College Hill Press; 1985.

- Hall JW, Mueller HG. Audiologists' desk reference. San Diego: Singular; 1997.

- Heinrich A, Schneider BA, Craik FI. Investigating the influence of continuous babble on auditory short-term memory performance. Quart J Exp Psych. 2007;61:735–751. doi: 10.1080/17470210701402372.

- Hill KT, Miller LM. Auditory attentional control and selection during cocktail party listening. Cereb Cort. 2010;20:583–590. doi: 10.1093/cercor/bhp124.

- Hornickel J, Skoe E, Nicol T, Zecker S, Kraus N. Subcortical differentiation of stop consonants relates to reading and speech-in-noise perception. Proc Nat Acad Sci. 2009;106:13022–13027. doi: 10.1073/pnas.0901123106.

- Johnson JA, Zatorre RJ. Attention to simultaneous unrelated auditory and visual events: Behavioral and neural correlates. Cereb Cort. 2005;15:1609–1620. doi: 10.1093/cercor/bhi039.

- Johnson KL, Nicol T, Zecker SG, Bradlow AR, Skoe E, Kraus N. Brainstem encoding of voiced consonant-vowel stop syllables. Clin Neurophysiol. 2008;119:2623–2635. doi: 10.1016/j.clinph.2008.07.277.

- Klatt D. Software for a cascade/parallel formant synthesizer. J Acoust Soc Am. 1980;67:971–995.

- Knecht HA, Nelson PB, Whitelaw GM, Feth LL. Background noise levels and reverberation times in unoccupied classrooms: Predictions and measurements. Am J Audiol. 2002;11:65. doi: 10.1044/1059-0889(2002/009).

- Kraus N, Chandrasekaran B. Music training for the development of auditory skills. Nat Rev Neurosci. 2010;11:599–605. doi: 10.1038/nrn2882.

- Kraus N, Nicol TG. How can the neural encoding and perception of speech be improved? In: Merzenich M, Syka J, editors. Plasticity and signal representation in the auditory system. New York: Springer; 2005.

- Kraus N, Skoe E, Parbery-Clark A, Ashley R. Experience-induced malleability in neural encoding of pitch, timbre, and timing: Implications for language and music. Annals New York Acad Sci. 2009;1169:543–557. doi: 10.1111/j.1749-6632.2009.04549.x.

- Krishnan A, Gandour JT. The role of the auditory brainstem in processing linguistically-relevant pitch patterns. Brain Lang. 2009;110:135–148. doi: 10.1016/j.bandl.2009.03.005.

- Krishnan A, Xu Y, Gandour J, Cariani P. Encoding of pitch in the human brainstem is sensitive to language experience. Cog Brain Res. 2005;25:161–168. doi: 10.1016/j.cogbrainres.2005.05.004.

- Krizman J, Skoe E, Kraus N. Stimulus rate and subcortical auditory processing of speech. Audiol Neuro-Otol. 2010;15:332–342. doi: 10.1159/000289572.

- Lagace J, Jutras B, Gagne J. Auditory processing disorder and speech perception problems in noise: Finding the underlying origin. Am J Audiol. 2010;1:1059. doi: 10.1044/1059-0889(2010/09-0022).

- Lee KM, Skoe E, Kraus N, Ashley R. Selective subcortical enhancement of musical intervals in musicians. J Neurosci. 2009;29:5832–5840. doi: 10.1523/JNEUROSCI.6133-08.2009.

- Lin JY, Hartmann WM. The pitch of a mistuned harmonic: Evidence for a template model. J Acoust Soc Am. 1998;103:2608–2617. doi: 10.1121/1.422781.

- Lukas JH. The role of efferent inhibition in human auditory attention: An examination of the auditory brainstem potentials. Int'l J Neurosci. 1981;12:137–145. doi: 10.3109/00207458108985796.

- Meddis R, O'Mard L. A unitary model of pitch perception. J Acoust Soc Am. 1997;102:1811–1820. doi: 10.1121/1.420088.

- Moore BC, Peters RW, Glasberg BR. Thresholds for the detection of inharmonicity in complex tones. J Acoust Soc Am. 1985;77:1861–1867. doi: 10.1121/1.391937.

- Moore DR. Auditory processing disorders: Acquisition and treatment. J Comm Dis. 2007;40:295–304. doi: 10.1016/j.jcomdis.2007.03.005.

- Moore DR, Rosenberg JF, Coleman JS. Discrimination training of phonemic contrasts enhances phonological processing in mainstream school children. Brain Lang. 2005;94:72–85. doi: 10.1016/j.bandl.2004.11.009.

- Moushegian G, Rupert AL, Stillman RD. Scalp-recorded early responses in man to frequencies in the speech range. Electroencephal Clin Neurophysiol. 1973;35:665–667. doi: 10.1016/0013-4694(73)90223-x.

- Musacchia G, Arum L, Nicol T, Garstecki D, Kraus N. Audiovisual deficits in older adults with hearing loss: Biological evidence. Ear Hear. 2009;30:505–514. doi: 10.1097/AUD.0b013e3181a7f5b7.

- Musacchia G, Sams M, Skoe E, Kraus N. Musicians have enhanced subcortical auditory and audiovisual processing of speech and music. Proc Nat Acad Sci. 2007;104:15894–15898. doi: 10.1073/pnas.0701498104.

- Nahum M, Nelken I, Ahissar M. Low-level information and high-level perception: The case of speech in noise. PLoS Biol. 2008;6:978–991. doi: 10.1371/journal.pbio.0060126.

- Oxenham AJ. Pitch perception and auditory stream segregation: Implications for hearing loss and cochlear implants. Trends Amplif. 2008;12:316–331. doi: 10.1177/1084713808325881.

- Parbery-Clark A, Skoe E, Kraus N. Musical experience limits the degradative effects of background noise on the neural processing of sound. J Neurosci. 2009a;29:14100–14107. doi: 10.1523/JNEUROSCI.3256-09.2009.

- Parbery-Clark A, Skoe E, Lam C, Kraus N. Musician enhancement for speech-in-noise. Ear Hear. 2009b;30:653–661. doi: 10.1097/AUD.0b013e3181b412e9.

- Pichora-Fuller MK, Souza PE. Effects of aging on auditory processing of speech. Inter J Audiol. 2003;42:S11–S16.

- Russo N, Nicol T, Musacchia G, Kraus N. Brainstem responses to speech syllables. Clin Neurophysiol. 2004;115:2021–2030. doi: 10.1016/j.clinph.2004.04.003.

- Scheffers MTM. Simulation of auditory analysis of pitch: An elaboration on the DWS pitch meter. J Acoust Soc Am. 1983;74:1716–1725. doi: 10.1121/1.390280.

- Shield BM, Dockrell JE. The effects of noise on children at school: A review. Building Acoustics. 2003;10:97–116.

- Shield BM, Dockrell JE. The effects of environmental and classroom noise on the academic attainments of primary school children. J Acoust Soc Am. 2008;123:133–144. doi: 10.1121/1.2812596.

- Shinn-Cunningham BG, Best V. Selective attention in normal and impaired hearing. Trends Amplif. 2008;12:283–299. doi: 10.1177/1084713808325306.

- Skoe E, Kraus N. Auditory brain stem response to complex sounds: A tutorial. Ear Hear. 2010;31:302–324. doi: 10.1097/AUD.0b013e3181cdb272.

- Song JH, Banai K, Russo NM, Kraus N. On the relationship between speech- and nonspeech-evoked auditory brainstem responses. Audiol Neuro-Otol. 2006;11:233–241. doi: 10.1159/000093058.

- Song JH, Nicol T, Kraus N. Test-retest reliability of the speech-evoked auditory brainstem response in young adults. Clin Neurophysiol. In press-a. doi: 10.1016/j.clinph.2010.07.009.

- Song JH, Skoe E, Banai K, Kraus N. Perception of speech in noise: Neural correlates. J Cog Neurosci. In press-b. doi: 10.1162/jocn.2010.21556.

- Song JH, Skoe E, Wong PCM, Kraus N. Plasticity in the adult human auditory brainstem following short-term linguistic training. J Cog Neurosci. 2008;20:1892–1902. doi: 10.1162/jocn.2008.20131.

- Sperling AJ, Lu ZL, Manis FR, Seidenberg MS. Deficits in perceptual noise exclusion in developmental dyslexia. Nat Neurosci. 2005;8:862–863. doi: 10.1038/nn1474.

- Strait DL, Kraus N, Skoe E, Ashley R. Musical experience and neural efficiency: Effects of training on subcortical processing of vocal expressions of emotion. Eur J Neurosci. 2009;29:661–668. doi: 10.1111/j.1460-9568.2009.06617.x.

- Summers V, Leek MR. F0 processing and the separation of competing speech signals by listeners with normal hearing and with hearing loss. J Speech Lang Hear Res. 1998;41:1294–1306. doi: 10.1044/jslhr.4106.1294.

- Tallal P, Piercy M. Developmental aphasia: Rate of auditory processing and selective impairment of consonant perception. Neuropsychologia. 1974;12:83–93. doi: 10.1016/0028-3932(74)90030-x.

- Tallal P, Stark RE. Speech acoustic-cue discrimination abilities of normally developing and language-impaired children. J Acoust Soc Am. 1981;69:568–574. doi: 10.1121/1.385431.

- Tzounopoulos T, Kraus N. Learning to encode timing: Mechanisms of plasticity in the auditory brainstem. Neuron. 2009;62:463–469. doi: 10.1016/j.neuron.2009.05.002.

- Wible B, Nicol T, Kraus N. Atypical brainstem representation of onset and formant structure of speech sounds in children with language-based learning problems. Biol Psych. 2004;67:299–317. doi: 10.1016/j.biopsycho.2004.02.002.

- Wible B, Nicol T, Kraus N. Correlation between brainstem and cortical auditory processes in normal and language-impaired children. Brain. 2005;128:417–423. doi: 10.1093/brain/awh367.

- Wong PCM, Skoe E, Russo NM, Dees T, Kraus N. Musical experience shapes human brainstem encoding of linguistic pitch patterns. Nat Neurosci. 2007;10:420–422. doi: 10.1038/nn1872.

- Woodcock RW, McGrew KS, Mather N. Woodcock-Johnson III Tests of Cognitive Abilities. Itasca, IL: Riverside Publishing; 2001.

- Zhu J, Garcia E. The Wechsler Abbreviated Scale of Intelligence (WASI). New York: Psychological Corporation; 1999.

- Ziegler JC, Pech-Georgel C, George F, Alario FX, Lorenzi C. Deficits in speech perception predict language learning impairment. Proc Nat Acad Sci. 2005;102:14110–14115. doi: 10.1073/pnas.0504446102.

- Ziegler JC, Pech-Georgel C, George F, Lorenzi C. Speech-perception-in-noise deficits in dyslexia. Dev Sci. 2009;12:732–745. doi: 10.1111/j.1467-7687.2009.00817.x.