Abstract

We manipulated relative reinforcement for problem behavior and appropriate behavior using differential reinforcement of alternative behavior (DRA) without an extinction component. Seven children with developmental disabilities participated. We manipulated duration (Experiment 1), quality (Experiment 2), delay (Experiment 3), or all three dimensions in combination (Experiment 4), such that reinforcement favored appropriate behavior rather than problem behavior even though problem behavior still produced reinforcement. Results of Experiments 1 to 3 showed that behavior was often sensitive to manipulations of duration, quality, and delay in isolation, but the largest and most consistent behavior change was observed when several dimensions of reinforcement were combined to favor appropriate behavior (Experiment 4). Results suggest strategies for reducing problem behavior and increasing appropriate behavior without extinction.

Keywords: attention deficit hyperactivity disorder, autism, concurrent schedules, differential reinforcement, extinction, problem behavior

Differential reinforcement is a fundamental principle of behavior analysis that has led to the development of a set of procedures used as treatment for problem behavior (Cooper, Heron, & Heward, 2007). One of the most frequently used of these procedures is the differential reinforcement of alternative behavior (DRA). DRA typically involves withholding reinforcers following problem behavior (extinction) and providing reinforcers following appropriate behavior (Deitz & Repp, 1983). Pretreatment identification of the reinforcers that maintain problem behavior (i.e., functional analysis) permits the development of extinction procedures, which, by definition, must match the function of problem behavior (Iwata, Pace, Cowdery, & Miltenberger, 1994). In addition, the reinforcer maintaining problem behavior can be delivered contingent on the occurrence of an alternative, more appropriate response. Under these conditions, DRA has been successful at reducing problem behavior (Dwyer-Moore & Dixon, 2007; Vollmer & Iwata, 1992).

Although extinction is an important and powerful component of DRA, it is, unfortunately, not always possible to implement it (Fisher et al., 1993; Hagopian, Fisher, Sullivan, Acquisto, & LeBlanc, 1998). For example, a caregiver may be physically unable to prevent escape with a large or combative individual, compromising the integrity of escape extinction. It would also be difficult to withhold reinforcement for behavior maintained by attention in the form of physical contact if physical blocking is required to protect the individual or others. If, for instance, an individual's attention-maintained eye gouging threatens his or her eyesight, intervention is necessary to protect vision.

Several studies have found that DRA is less effective at decreasing problem behavior when implemented without extinction (Volkert, Lerman, Call, & Trosclair-Lasserre, 2009). For example, Fisher et al. (1993) evaluated functional communication training (FCT; a specific type of DRA procedure) without extinction, with extinction, and with punishment contingent on problem behavior. Results showed that when FCT was introduced without an extinction or punishment component for problem behavior, the predetermined goal of a 70% reduction in problem behavior was met for only one of three participants. FCT was more effective at reducing problem behavior when extinction was included, and the largest and most consistent reduction was observed when punishment was included.

Hagopian et al. (1998) conducted a replication of the Fisher et al. (1993) study and found that a predetermined goal of a 90% reduction in problem behavior was not achieved for any of the 11 participants exposed to FCT without extinction. When FCT was implemented with extinction, a 90% reduction in problem behavior was obtained in 11 of 25 applications, with a mean reduction in problem behavior of 69% across all applications.
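The reduction criteria in these studies are percentages relative to baseline. As a minimal sketch, assuming reduction is computed from mean baseline and mean treatment response rates (the summaries above do not specify the exact computation), the arithmetic might look like the following; the function names and example rates are hypothetical.

```python
def percent_reduction(baseline_rate: float, treatment_rate: float) -> float:
    """Percentage reduction in problem behavior relative to baseline.

    A negative value would indicate that problem behavior increased.
    """
    if baseline_rate <= 0:
        raise ValueError("baseline rate must be positive")
    return 100.0 * (baseline_rate - treatment_rate) / baseline_rate


def meets_goal(baseline_rate: float, treatment_rate: float, goal_percent: float) -> bool:
    """True if the reduction reaches a predetermined goal (e.g., 70 or 90)."""
    return percent_reduction(baseline_rate, treatment_rate) >= goal_percent


def mean_reduction(applications):
    """Mean percentage reduction across (baseline, treatment) rate pairs."""
    values = [percent_reduction(b, t) for b, t in applications]
    return sum(values) / len(values)


# Hypothetical rates in responses per minute, not data from the cited studies.
print(percent_reduction(4.0, 1.0))  # 75.0
```

Note that a mean reduction across applications (as in the 69% figure) averages per-application percentages, so one application with a large reduction can offset another with little change.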

McCord, Thomson, and Iwata (2001) found that DRA without extinction had limited effects on the self-injurious behavior of two individuals, one whose behavior was reinforced by avoidance of transition and another whose behavior was reinforced by avoidance of transition and avoidance of task initiation. In both cases, DRA with extinction and response blocking produced sustained decreases in self-injury. Together, these studies of DRA without extinction show a bias in responding toward problem behavior when the rate and immediacy of reinforcement for problem and appropriate behavior are equivalent.

When considering variables that contribute to the effectiveness (or ineffectiveness) of DRA without extinction as a treatment for problem behavior, it is helpful to conceptualize differential reinforcement procedures in terms of a concurrent-operants arrangement (e.g., Fisher et al., 1993; Mace & Roberts, 1993). Concurrent schedules are two or more schedules in effect simultaneously. Each schedule independently arranges reinforcement for a different response (Ferster & Skinner, 1957). The matching law provides a quantitative description of responding on concurrent schedules of reinforcement (Baum, 1974; Herrnstein, 1961). In general, the matching law states that the relative rate of responding on one alternative will approximate the relative rate of reinforcement provided on that alternative. Consistent with the predictions of the matching law, some studies have reported reductions in problem behavior without extinction when differential reinforcement favors appropriate behavior rather than problem behavior (Piazza et al., 1997; Worsdell, Iwata, Hanley, Thompson, & Kahng, 2000).
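Stated formally, strict matching is B1/(B1 + B2) = R1/(R1 + R2), where B is responding and R is obtained reinforcement on each alternative. The Python sketch below computes that prediction alongside the power-function form of the generalized matching law associated with Baum (1974), B1/B2 = b(R1/R2)^a, in which a indexes sensitivity to relative reinforcement and b indexes bias toward alternative 1. Function and parameter names are illustrative assumptions.

```python
def strict_matching(r1: float, r2: float) -> float:
    """Predicted proportion of responses on alternative 1 under strict matching."""
    total = r1 + r2
    if total <= 0:
        raise ValueError("at least one alternative must deliver reinforcement")
    return r1 / total


def generalized_matching(r1: float, r2: float, a: float = 1.0, b: float = 1.0) -> float:
    """Predicted proportion on alternative 1 from B1/B2 = b * (R1/R2) ** a.

    a: sensitivity (a < 1 is undermatching); b: bias (b > 1 favors alternative 1).
    """
    if r1 <= 0 or r2 <= 0:
        raise ValueError("the ratio form requires reinforcement on both alternatives")
    ratio = b * (r1 / r2) ** a
    return ratio / (1.0 + ratio)


# Equal reinforcement on both alternatives: strict matching predicts 0.5.
print(strict_matching(1.0, 1.0))  # 0.5
```

With equal reinforcement on both alternatives, any b > 1 predicts allocation above 0.5 toward alternative 1; in this framework, a preference for problem behavior under equal schedules would appear as bias rather than as sensitivity to reinforcement.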

For example, Worsdell et al. (2000) examined the effect of reinforcement rate on response allocation. Five individuals whose problem behavior was reinforced by social positive reinforcement were first exposed to an FCT condition in which both problem and appropriate behavior were reinforced on fixed-ratio (FR) 1 schedules. During subsequent FCT conditions, reinforcement for problem behavior was made more intermittent (e.g., FR 2, FR 3, FR 5), while appropriate behavior continued to be reinforced on an FR 1 schedule. Four of the participants showed shifts in response allocation to appropriate behavior as the schedule of reinforcement for problem behavior became more intermittent. There were several limitations to this research. For example, reinforcement rate was thinned in the same order for each participant such that reductions in problem behavior may have been due in part to sequence effects. In addition, the reinforcement schedule was thinned to FR 20 for two individuals. For these two participants, problem behavior rarely contacted reinforcement. The schedule in these cases may have been functionally equivalent to extinction rather than intermittent reinforcement. Nevertheless, these results suggest that extinction may not be a necessary treatment component when the rate of reinforcement favors appropriate behavior rather than problem behavior.

In another example of DRA without extinction, Piazza et al. (1997) examined the effects of increasing the quality of reinforcement for compliance relative to reinforcement associated with problem behavior. Three individuals whose problem behavior was sensitive to negative reinforcement (break from tasks) and positive reinforcement (access to tangible items, attention, or both) participated. Piazza et al. systematically evaluated the effects of reinforcing appropriate behavior with one, two, or three of the reinforcing consequences (a break, tangible items, attention), both when problem behavior produced a break and when it did not (escape extinction). For two of the three participants, appropriate behavior increased and problem behavior decreased when appropriate behavior produced a 30-s break with access to tangible items and problem behavior produced a 30-s break. The authors suggested that one potential explanation for these findings is that the relative rates of appropriate behavior and problem behavior were a function of the relative value of the reinforcement produced by escape. It is unclear, however, whether the intervention would be effective with individuals whose problem behavior was sensitive to only one type of reinforcement.

Together, these and other studies have shown that response allocation covaries with the rate, quality, magnitude, and delay of reinforcement. Responding will favor the alternative associated with a higher reinforcement rate (Conger & Killeen, 1974; Lalli & Casey, 1996; Mace, McCurdy, & Quigley, 1990; Neef, Mace, Shea, & Shade, 1992; Vollmer, Roane, Ringdahl, & Marcus, 1999; Worsdell et al., 2000), greater quality of reinforcement (Hoch, McComas, Johnson, Faranda, & Guenther, 2002; Lalli et al., 1999; Neef et al., 1992; Piazza et al., 1997), greater magnitude of reinforcement (Catania, 1963; Hoch et al., 2002; Lerman, Kelley, Vorndran, Kuhn, & LaRue, 2002), or more immediate delivery of reinforcement (Mace, Neef, Shade, & Mauro, 1994; Neef, Mace, & Shade, 1993; Neef, Shade, & Miller, 1994).

Although previous research suggests that extinction may not always be a necessary component of differential reinforcement treatment packages, these investigations had certain limitations, as described above. In addition, no study has comprehensively analyzed several different reinforcement dimensions both singly and in combination. The current study sought to extend this research by examining the influence of multiple dimensions of reinforcement and by incorporating variable-interval (VI) reinforcement schedules.

Interval schedules are less likely than ratio schedules to push response allocation exclusively toward one response over another. Under ratio schedules, reinforcer delivery is maximized when responding favors one alternative (Herrnstein & Loveland, 1975). Under interval schedules, reinforcer delivery is maximized by varying response allocation across alternatives (MacDonall, 2005). If responding favors one response alternative over another under an interval schedule, this would indicate a bias in responding that is independent of the schedule of reinforcement. This bias would not be as easily observable during ratio schedules of reinforcement. In the current application, an interval schedule allowed us to identify potential biases in responding that were independent of the reinforcement schedule. In addition, the application of a VI schedule mimics, to a degree, the integrity failures that could occur in the natural environment.
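To illustrate why concurrent interval schedules favor distributing responses, here is a hypothetical simulation, not the authors' procedure. It assumes one response per second, two independent VI 20-s clocks with intervals drawn uniformly from 1 to 39 s, an armed reinforcer that is held until the next response on that alternative, and no time lost to reinforcer consumption.

```python
import random


def simulate(policy, session_s=600, mean_interval=20, seed=0):
    """Count reinforcers earned per alternative in one concurrent VI VI session.

    policy(t) returns 0 or 1, the alternative responded on at second t
    (one response per second is an illustrative assumption).
    """
    rng = random.Random(seed)

    def draw():
        # Uniform integers 1..(2 * mean - 1); for mean 20 this is 1..39 s.
        return rng.randint(1, 2 * mean_interval - 1)

    remaining = [draw(), draw()]  # seconds until each alternative is armed
    earned = [0, 0]
    for t in range(session_s):
        # Both VI clocks run regardless of where responding is allocated.
        for k in (0, 1):
            if remaining[k] > 0:
                remaining[k] -= 1
        k = policy(t)
        if remaining[k] <= 0:  # reinforcer was armed and waiting
            earned[k] += 1
            remaining[k] = draw()
    return earned


exclusive = simulate(lambda t: 0)        # respond only on alternative 0
alternating = simulate(lambda t: t % 2)  # switch every second
print(exclusive, alternating)
```

Under these assumptions, exclusive responding forfeits every reinforcer armed on the unused alternative, whereas alternating collects reinforcers from both clocks at the cost of at most a 1-s wait. This is why exclusive preference under equal interval schedules is interpreted as bias.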

In the natural environment, caregivers may not always implement extinction procedures accurately. They also may fail to implement reinforcement procedures accurately (Shores et al., 1993). Therefore, it may be important to identify a therapeutic differential reinforcement procedure that is effective despite intermittent reinforcement of both appropriate and problem behavior. The use of concurrent VI schedules in the current experiments allowed us to examine, in a highly controlled analogue setting, the effects of failing to withhold reinforcement following every problem behavior and failing to reinforce every appropriate behavior.

We evaluated several manipulations that could be considered in the event that extinction either cannot or will not be implemented. In Experiments 1 to 3, we manipulated a single dimension of reinforcement such that reinforcement favored appropriate behavior along the lines of duration (Experiment 1), quality (Experiment 2), or delay (Experiment 3). In Experiment 4, we combined all three dimensions such that reinforcement favored appropriate behavior.

GENERAL METHOD

Participants and Setting

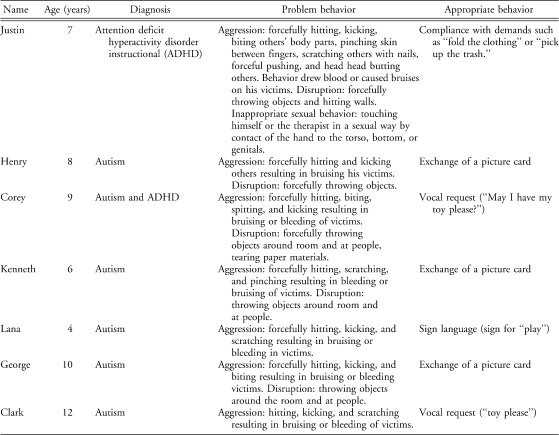

Seven individuals with developmental disorders who engaged in severe problem behavior participated. These were the first seven individuals who engaged in problem behavior sensitive to socially mediated reinforcement (as identified via functional analysis) and were admitted to an outpatient clinic (Justin, Henry, Corey, Kenneth, Lana) or referred for behavioral consultation services at local elementary schools (George, Clark). (See Table 1 for each participant's age, diagnosis, problem behavior, and appropriate behavior.) We selected the targeted appropriate behavior for each participant based on the function of problem behavior. For example, if an individual engaged in problem behavior to access attention, we selected a mand for attention as the appropriate behavior. Targeted response forms were in the participants' repertoires, although the behavior typically occurred at low rates.

Table 1.

Participants' Characteristics

Session rooms in the outpatient clinic (3 m by 3 m) were equipped with a one-way observation window and sound monitoring. We conducted sessions for George and Clark in a classroom at their elementary schools. The rooms for all participants contained materials necessary for a session (e.g., toys, task materials), and the elementary school classrooms contained materials such as posters and tables (George and Clark only). With the exception of the final experimental condition assessing generality, no other children were in the room during the analyses with George and Clark.

Trained clinicians served as therapists and conducted sessions 4 to 16 times per day, 5 days per week. Sessions were 10 min in duration, and there was a minimum 5-min break between each session. We used a multielement design during the functional analysis and a reversal design during all subsequent analyses.

Response Measurement and Interobserver Agreement

Observers were clinicians who had received training in behavioral observation and had previously demonstrated high interobserver agreement scores (>90%) with trained observers. Observers in the outpatient clinic sat behind a one-way observation window. Observers in the school sat out of the direct line of sight of the child. All observers collected data on desktop or laptop computers that provided real-time data and scored events as either frequency (e.g., aggression, disruption, self-injury, and screaming) or duration (e.g., delivery of attention, escape from instructions; see Table 1 for operational definitions of behavior). Observations were divided into 10-s bins, and observers scored the number (or duration) of observed responses for each bin. The smaller number (or duration) of observed responses within each bin was divided by the larger number and converted to agreement percentages for frequency measures (Bostow & Bailey, 1969). Agreement on the nonoccurrence of behavior within any given bin was scored as 100% agreement. The agreement scores for bins were then averaged across the session.
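The bin-by-bin proportional agreement computation described above can be sketched in Python as follows, assuming event timestamps in seconds and a 600-s (10-min) session. The function names are hypothetical. The same agreement function would apply to per-bin durations, although the text specifies the computation only for frequency measures.

```python
def bin_counts(event_times, session_s=600, bin_s=10):
    """Count scored events (timestamps in seconds) in consecutive 10-s bins."""
    n_bins = -(-session_s // bin_s)  # ceiling division
    counts = [0] * n_bins
    for t in event_times:
        if 0 <= t < session_s:
            counts[int(t // bin_s)] += 1
    return counts


def proportional_agreement(obs1, obs2):
    """Mean per-bin agreement (%): smaller / larger, with 0 vs 0 scored as 100%."""
    if len(obs1) != len(obs2) or not obs1:
        raise ValueError("observers must supply the same, nonzero number of bins")
    scores = []
    for a, b in zip(obs1, obs2):
        if a == 0 and b == 0:
            scores.append(100.0)  # agreement on nonoccurrence
        else:
            scores.append(100.0 * min(a, b) / max(a, b))
    return sum(scores) / len(scores)


# Three bins: exact agreement, agreement on nonoccurrence, 1 vs 2 responses.
print(proportional_agreement([2, 0, 1], [2, 0, 2]))
```

The third bin contributes 50%, so the session score is (100 + 100 + 50) / 3, or about 83.3%.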

A second observer scored the target responses simultaneously with, but independently of, the primary observer during a mean of 37% of functional analysis sessions (range, 27% to 49%) and 29% of experimental analysis sessions (range, 25% to 32%). We assessed interobserver agreement for problem behavior (aggression, disruption, inappropriate sexual behavior) and appropriate behavior (compliance and mands) of all participants and for the therapist's behavior, which included therapist attention, delivery of tangible items, and escape from demands.

For Justin, mean agreement was 98% for aggression (range, 87% to 100%), 96% for disruption (range, 85% to 100%), 100% for inappropriate sexual behavior, and 98% for compliance (range, 86% to 100%). For Henry, mean agreement was 100% for aggression, 99.9% for disruption (range, 99.7% to 100%), and 97% for mands (range, 95% to 99%). For Corey, mean agreement was 100% for aggression and disruption and 97% for mands (range, 95% to 100%). For Kenneth, mean agreement was 98% for aggression (range, 94% to 100%), 99% for disruption (range, 97% to 100%), and 99% for mands (range, 95% to 100%). For Lana, mean agreement was 99% for aggression (range, 99% to 100%) and 100% for mands. For George, mean agreement was 99% for aggression (range, 98% to 100%), 99% for disruption (range, 98% to 100%), and 93% for mands (range, 88% to 99%). Mean interobserver agreement for therapist behavior, assessed during 39% of all sessions, was 100% for therapist attention, 99.9% for access to tangible items (range, 99% to 100%), and 100% for escape from instructions.

Stimulus Preference Assessment

We conducted a paired-stimulus preference assessment for each participant to identify a hierarchy of preferred items for use in the functional analysis (Fisher et al., 1992). In addition, for those participants whose problem behavior was reinforced by tangible items (Corey, Lana, and Clark), a multiple-stimulus-without-replacement (MSWO) preference assessment (DeLeon & Iwata, 1996) was conducted immediately prior to each session of the treatment analyses. We used informal caregiver interviews to select items used in the preference assessments, and a minimum of six items were included in the assessments.

Functional Analysis

We conducted functional analyses prior to the treatment evaluation. Procedures were similar to those described by Iwata, Dorsey, Slifer, Bauman, and Richman (1982/1994), with one exception for George. His aggression was severe and primarily directed toward therapists' heads; therefore, a blocking procedure was in place throughout the functional analysis for the safety of the therapist. Blocking consisted of the therapist holding up an arm to prevent a hit from directly contacting the therapist's head. During the functional analysis, four test conditions (attention, tangible, escape, and ignore) were compared to a control condition (play) using a multielement design.

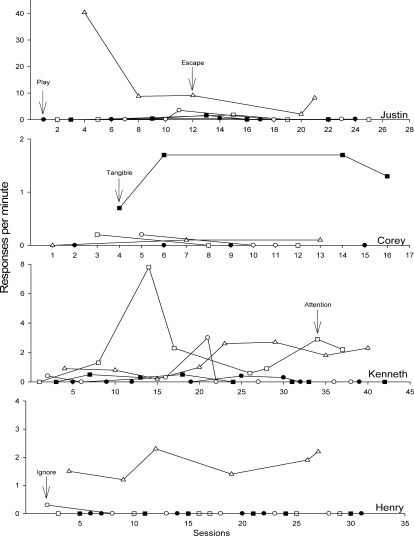

Figure 1 shows response rates of problem behavior during the functional analyses for Justin, Corey, Kenneth, and Henry. We collected data for aggression and disruption separately and obtained similar results for each topography for all participants; therefore, both topographies were combined in these data presentations. We obtained similar results for inappropriate sexual behavior for Justin, which we combined with aggression and disruption.

Figure 1.

Response rates during the functional analysis for Justin, Corey, Kenneth, and Henry.

Justin engaged in the highest rates of problem behavior in the escape condition. Although the overall trend in the escape condition is downward, inspection of the data showed that he was becoming more efficient in escape behavior by responding only when the therapist presented demands. Corey engaged in the highest rates of problem behavior during the tangible condition. Kenneth engaged in the highest rates of aggression and disruption during the attention and escape conditions. Henry displayed the highest rates of aggression and disruption in the escape condition.

Figure 2 shows the results of the functional analyses for Lana, Clark, and George. Lana and Clark displayed the highest rates of aggression during the tangible condition. George engaged in the highest rates of aggression and disruption during the attention condition.

Figure 2.

Response rates during the functional analysis for Lana, Clark, and George.

Baseline

During baseline and all subsequent conditions of Experiments 1 to 4, equal concurrent VI schedules of reinforcement (VI 20 s VI 20 s) were in place for both problem and appropriate behavior. A random number generator selected intervals between 1 s and 39 s, with a mean interval length of 20 s, and the programmed intervals for each session were available on a computer printout. A trained observer timed intervals using two timers set according to the programmed intervals. The first instance of behavior following availability of a reinforcer resulted in delivery of the reinforcer for 30 s (for an exception, see Experiment 1 involving manipulations of reinforcer duration). When reinforcement was available for a response (i.e., the interval elapsed) and the behavior occurred, the observer discreetly tapped on the one-way window from the observation room (clinic) or briefly nodded his head (classroom) to prompt the therapist to reinforce the response. After 30 s of reinforcer access (or the pertinent duration value in Experiment 1), the therapist removed the reinforcer and reset the timer for that response. The VI clock for one response (e.g., appropriate behavior) stopped while the participant consumed the reinforcer for the other response (e.g., problem behavior). The therapist reinforced responses regardless of the time elapsed since the last changeover from the other response alternative (i.e., no changeover delay was in effect). The reinforcer identified for problem behavior in the functional analysis served as the reinforcer for both responses during baseline. In Experiments 2 and 4, which involved manipulations of quality, participants received the same high-quality toy contingent on appropriate or problem behavior during baseline.
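The timer bookkeeping described above can be sketched as a small per-alternative clock. This is a hypothetical illustration, not the authors' software. It assumes intervals drawn uniformly from the integers 1 to 39 s (whose expected mean is 20 s), and it leaves pausing to the caller. The class and function names are invented for the example.

```python
import random


def programmed_intervals(n, low=1, high=39, seed=None):
    """Draw n VI intervals (s) uniformly from low..high (expected mean 20 s)."""
    rng = random.Random(seed)
    return [rng.randint(low, high) for _ in range(n)]


class VIClock:
    """One response alternative's VI timer.

    The clock arms when its programmed interval elapses, holds the reinforcer
    until the next response, and stands still while paused. The intervals list
    must be long enough for the session; running out raises StopIteration.
    """

    def __init__(self, intervals):
        self._intervals = iter(intervals)
        self.remaining = next(self._intervals)
        self.paused = False

    def tick(self, seconds=1.0):
        """Advance the clock unless it is paused or already armed."""
        if not self.paused and self.remaining > 0:
            self.remaining = max(0.0, self.remaining - seconds)

    @property
    def armed(self):
        return self.remaining <= 0

    def respond(self):
        """Return True (deliver the reinforcer) if armed, then load the next interval."""
        if not self.armed:
            return False
        self.remaining = next(self._intervals)
        return True


clock = VIClock([5, 10])
clock.tick(4)
print(clock.respond())  # False: interval not yet elapsed
clock.tick(1)
print(clock.respond())  # True: first response after the interval elapses
```

In a session, one clock per response alternative would be ticked each second. When a reinforcer is delivered, the caller would set `paused` on both clocks for the access period: the reinforced alternative's timer was reset only after access ended, and the other alternative's clock stopped during consumption.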

We conducted every baseline as described above but labeled the baselines differently to highlight the dimensions of reinforcement that varied across experiments. For example, in Experiment 1 we manipulated duration of reinforcement, and baseline is labeled 30-s/30-s dur to indicate that reinforcement was provided for 30 s (duration) following problem and appropriate behavior. In Experiment 2, we manipulated quality of reinforcement, and baseline is labeled 1 HQ/1 HQ to indicate that a high-quality reinforcer was delivered following appropriate and problem behavior. In Experiment 3, we manipulated delay to reinforcement, and baseline is labeled 0-s/0-s delay. In Experiment 4, we manipulated duration, quality, and delay in combination, and baseline is labeled 30-s dur 1 HQ 0-s delay/30-s dur 1 HQ 0-s delay.

EXPERIMENT 1: DURATION

Method

The purpose of Experiment 1 was to examine whether we could obtain clinically acceptable changes in behavior by providing a longer duration of access to the reinforcer following appropriate behavior and shorter duration of access to the reinforcer following problem behavior.

30-s/10-s dur

Justin and Lana participated in the 30-s/10-s dur condition. For Justin, appropriate behavior produced a 30-s break from instructions. Problem behavior produced a 10-s break from instructions. For Lana, appropriate behavior produced access to the most preferred toy for 30 s, and problem behavior produced access to the same toy for 10 s.

45-s/5-s dur

Justin participated in the 45-s/5-s dur condition during which the duration of reinforcement was more discrepant across response alternatives. Appropriate behavior produced a 45-s break from instructions, and problem behavior produced a 5-s break from instructions.
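As a worked illustration of how these conditions shift relative access time, let d_A and d_P be the access durations produced by appropriate and problem behavior. Assume, as an idealization, that each alternative earns the same number n of reinforcers per session under the equal VI 20-s schedules, which requires responding on both alternatives. The share of total reinforcer access produced by appropriate behavior is then:

```latex
\[
p_A \;=\; \frac{n\,d_A}{n\,d_A + n\,d_P} \;=\; \frac{d_A}{d_A + d_P}
\]
\[
\text{30-s/30-s: } \tfrac{30}{60} = 0.50, \qquad
\text{30-s/10-s: } \tfrac{30}{40} = 0.75, \qquad
\text{45-s/5-s: } \tfrac{45}{50} = 0.90
\]
```

If relative responding matched relative access time, these proportions would be the predicted shares of appropriate behavior. They are model predictions under the stated assumption, not obtained data.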

Results and Discussion

Figure 3 shows the results for Justin and Lana. For Justin, during the 30-s/30-s dur baseline condition, problem behavior occurred at higher rates than appropriate behavior. In the 30-s/10-s dur condition, there was a slight decrease in the rate of problem behavior, and some appropriate behavior occurred. Because problem behavior still occurred at a higher rate than appropriate behavior, we conducted the 45-s/5-s dur condition. In the last five sessions of this condition, problem behavior decreased to low rates, and appropriate behavior increased. In a reversal to the 30-s/30-s dur baseline, problem behavior returned to levels higher than appropriate behavior. In the subsequent return to the 45-s/5-s dur condition, the favorable effects were replicated. Responding stabilized in the last five sessions of this condition, with problem behavior remaining low and appropriate behavior remaining high. In a reversal to the 30-s/30-s dur baseline, however, there was a failure to replicate previous baseline levels of responding. Instead, low rates of problem behavior and high rates of appropriate behavior occurred.

Figure 3.

Justin's and Lana's response rates during the duration analysis for problem behavior and appropriate behavior.

During the 30-s/30-s dur baseline, Lana's problem behavior occurred at higher rates than appropriate behavior. During the 30-s/10-s dur condition, appropriate behavior occurred at higher rates, and problem behavior decreased to zero. The effects of the 30-s/30-s dur baseline and the 30-s/10-s dur condition were replicated in the final two conditions.

In summary, the duration analysis indicated that for both participants, the relative rates of problem behavior and appropriate behavior were sensitive to the reinforcement duration available for each alternative in four of the five applications in which duration of reinforcement was unequal. This finding replicates the findings of previous investigations on the effects of reinforcement duration on choice responding (Catania, 1963; Lerman et al., 2002; Ten Eyck, 1970).

There were several limitations to this experiment. For example, the participants did not show sensitivity to the concurrent VI schedules when both the rate and duration of reinforcement were equal. Under this arrangement, the participants would have collected all of the available reinforcers had they distributed their responding roughly equally between the two response options. The failure to distribute responding across responses indicates a bias toward problem behavior. Additional research into this failure to show sensitivity to the concurrent VI schedules is warranted but was outside the scope of this experiment.

With Justin, we were unable to recapture baseline rates of problem and appropriate behavior in our final reversal to the 30-s/30-s dur baseline. This failure to replicate previous rates of responding may be a result of his recent history with a condition in which reinforcement favored appropriate behavior (i.e., the 45-s/5-s dur condition). Nevertheless, this lack of replication weakens the demonstration of experimental control with this participant. With both participants, there was a gradual change in responding in the condition that ultimately produced a change favoring the alternative behavior, which is not surprising given that extinction was not in place. Responding under intermittent schedules of reinforcement can be more resistant to change (Ferster & Skinner, 1957).

EXPERIMENT 2: QUALITY

The purpose of Experiment 2 was to examine whether we could obtain clinically acceptable changes in behavior by providing a higher quality reinforcer following appropriate behavior and a lower quality reinforcer following problem behavior.

Method

Reinforcer assessment

We conducted a reinforcer assessment using procedures described by Piazza et al. (1999) before conducting the quality analysis with Kenneth. The assessment identified the relative efficacy of two reinforcers (i.e., praise and reprimands) in a concurrent-operants arrangement. During baseline, the therapist stood in the middle of a room that was divided by painter's tape and provided no social interaction; toy contact (e.g., playing with green or orange blocks on either side of the divided room) and problem behavior resulted in no arranged consequences. Presession prompting occurred prior to the beginning of the initial contingent attention phase and the reversal (described below). During presession prompting, the experimenter prompted Kenneth to make contact with the green and orange toys. Prompted contact with green toys resulted in praise (e.g., “Good job, Kenneth,” delivered in a high-pitched, loud voice with an excited tone). Prompted contact with the orange toys resulted in reprimands (e.g., “Don't play with that,” delivered in a deeper pitched, loud voice with a harsh tone). Following presession prompting, we implemented the contingent attention phase. The therapist stood in the middle of a room divided by painter's tape and delivered the consequences to which Kenneth had been exposed in presession prompting. The therapist delivered continuous reprimands or praise for the duration of toy contact and blocked attempts to play with two different-colored toys simultaneously.

During the second contingent attention phase, we reversed the consequences associated with each color of toys such that green toys were associated with reprimands and orange toys with praise. The different-colored toys were always associated with a specific side of the room, and the therapist ensured that they remained on that side. Kenneth selected the colored toy associated with praise on a mean of 98% of all contingent attention sessions.

1 HQ/1 LQ

For Justin, problem behavior produced 30 s of escape with access to one low-quality tangible item identified in a presession MSWO. Appropriate behavior produced 30 s of escape with access to one high-quality tangible item identified in a presession MSWO. Although the variable that maintained his problem behavior was escape, we used disparate quality toys as a way of creating a qualitative difference between the escape contingencies for appropriate and problem behavior.

For Kenneth, problem behavior produced reprimands (e.g., “Don't do that, I really do not like it, and you could end up hurting someone”), which the reinforcer assessment identified as a less effective form of reinforcement than social praise. Appropriate behavior produced praise (e.g., “Good job handing me the card; I really like it when you hand it to me so nicely.”), which was identified as a more effective form of reinforcement in the reinforcer assessment.

3 HQ/1 LQ

For Justin and Kenneth, problem behavior did not decrease to therapeutic levels in the 1 HQ/1 LQ condition. For Justin, within-session analysis showed that as sessions progressed during the 1 HQ/1 LQ condition, he stopped playing with the toy and showed decreases in compliance, possibly due to reinforcer satiation. Unfortunately, we did not have access to potentially higher quality toys that Justin had requested (e.g., video game systems). Given this limited access, we increased the number of preferred toys provided contingent on appropriate behavior as a way of addressing potential satiation with the toys. We provided the three toys selected most frequently in presession MSWO assessments. Therefore, for Justin, in the 3 HQ/1 LQ condition, appropriate behavior produced 30 s of escape with access to three high-quality toys. Problem behavior produced 30 s of escape with access to one low-quality tangible item.

For Kenneth, anecdotal observations between sessions showed that he frequently requested physical attention in the forms of hugs and tickles by guiding the therapist's hands around him or to his stomach. Based on this observation, we added physical attention to the social praise available following appropriate behavior. Therefore, during the 3 HQ/1 LQ condition, appropriate behavior produced praise and the addition of physical attention (e.g., “Good job handing me the card,” hugs and tickles). Problem behavior produced reprimands.

Results and Discussion

During the 1 HQ/1 HQ baseline condition, Justin (Figure 4, top) engaged in higher rates of problem behavior than appropriate behavior. In the 1 HQ/1 LQ condition, rates of problem behavior decreased, and appropriate behavior increased. However, toward the end of the phase, problem behavior increased, and appropriate behavior decreased. Lower rates of problem behavior than appropriate behavior were obtained in the 3 HQ/1 LQ condition. During the subsequent 1 HQ/1 HQ baseline reversal, there was a failure to recapture previous rates of problem and appropriate behavior. Instead, problem behavior occurred at a lower rate than appropriate behavior. Despite this, problem behavior increased relative to what was observed in the immediately preceding 3 HQ/1 LQ condition. Problem behavior decreased, and appropriate behavior increased to high levels during the return to the 3 HQ/1 LQ condition.

Figure 4.

Response rates for Justin and Kenneth during the quality analysis for problem behavior and appropriate behavior.

Kenneth (Figure 4, bottom) engaged in higher rates of problem behavior than appropriate behavior in the 1 HQ/1 HQ baseline. In the 1 HQ/1 LQ condition, rates of problem behavior decreased, and appropriate behavior increased. During the last five sessions, however, the predominant response alternated between problem and appropriate behavior from session to session. During a replication of the 1 HQ/1 HQ baseline, we observed high rates of problem behavior and relatively lower rates of appropriate behavior. During a subsequent replication of the 1 HQ/1 LQ condition, slightly higher rates of problem behavior than appropriate behavior were obtained, with the predominant response again alternating from session to session. In a replication of the 1 HQ/1 HQ baseline, high rates of problem behavior and lower rates of appropriate behavior were obtained. Following this replication, we conducted the 3 HQ/1 LQ condition; problem behavior decreased to rates lower than observed in previous conditions, and appropriate behavior increased to high rates. The effects of the 1 HQ/1 HQ baseline and the 3 HQ/1 LQ condition were replicated in the final two conditions.

In summary, results of the quality analyses indicated that for both participants, the relative rates of both problem behavior and appropriate behavior were sensitive to the quality of reinforcement available for each alternative. These results replicate the findings of previous investigations on the relative effects of quality of reinforcement on choice responding (Conger & Killeen, 1974; Hoch et al., 2002; Martens & Houk, 1989; Neef et al., 1992; Piazza et al., 1997).

One drawback of this experiment was that both the magnitude and the quality of reinforcement were manipulated with Justin. Given the circumstances described above, he received a greater number of higher quality toys contingent on appropriate behavior than on problem behavior (3 HQ vs. 1 LQ) before we obtained a consistent shift in response allocation.

As in Experiment 1, our failure to recapture prior rates of appropriate behavior in the final reversal to the 1 HQ/1 HQ baseline weakened experimental control with Justin; again, baseline levels of behavior were not recaptured after an intervening history in which the quality and magnitude of reinforcement favored appropriate behavior.

EXPERIMENT 3: DELAY

Method

The purpose of Experiment 3 was to examine whether we could produce clinically acceptable changes in behavior by providing immediate reinforcement following appropriate behavior and delayed reinforcement following problem behavior.

0-s/30-s delay

Corey and Henry participated in the 0-s/30-s delay condition. For Corey, appropriate behavior produced immediate 30-s access to a high-quality toy (selected from a presession MSWO), and problem behavior produced 30-s access to the same toy after a 30-s unsignaled delay. For Henry, appropriate behavior produced an immediate 30-s break from instructions, and problem behavior produced a 30-s break from instructions after a 30-s unsignaled delay. For both participants, once a delay interval had started, additional instances of problem behavior did not reset it. When problem behavior occurred, the data collector started a timer and, when the timer elapsed, signaled the therapist to deliver reinforcement by tapping discreetly on the one-way window. If a participant engaged in appropriate behavior during the delay interval for problem behavior, the therapist immediately delivered the reinforcer for appropriate behavior (as programmed); the delay clock for problem behavior paused and then resumed after the reinforcement interval for appropriate behavior ended.
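The delay-timing rule described above can be sketched as a small state machine. This is a hypothetical illustration, not the timing method used in the study (there, a data collector ran a timer and signaled the therapist by hand); the class `DelayTimer`, the helper `delivery_time`, and the 1-s time step are assumptions. The sketch captures the two features of the procedure: later problem behavior does not restart a running delay, and the delay clock pauses while reinforcement for appropriate behavior is in effect.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Set, Tuple


@dataclass
class DelayTimer:
    """Hypothetical non-resetting, pausable delay for problem-behavior reinforcement."""
    delay_s: float
    remaining: Optional[float] = None  # None means no delay is currently running
    paused: bool = False               # True while appropriate behavior's reinforcer is in effect

    def problem_behavior(self) -> None:
        # Start a delay only if none is running; later responses do not reset it.
        if self.remaining is None:
            self.remaining = self.delay_s

    def tick(self, dt: float) -> bool:
        """Advance the clock by dt seconds; return True when reinforcement is due."""
        if self.remaining is None or self.paused:
            return False
        self.remaining -= dt
        if self.remaining <= 0:
            self.remaining = None
            return True
        return False


def delivery_time(delay_s: float,
                  problem_times: Set[int],
                  pause_windows: Iterable[Tuple[int, int]],
                  horizon_s: int = 600) -> Optional[int]:
    """Second at which the first delayed reinforcer is delivered (1-s steps)."""
    windows = list(pause_windows)
    timer = DelayTimer(delay_s)
    for t in range(horizon_s):
        # Pause during [start, end) windows of reinforcement for appropriate behavior.
        timer.paused = any(start <= t < end for start, end in windows)
        if t in problem_times:
            timer.problem_behavior()
        if timer.tick(1.0):
            return t + 1
    return None


# Problem behavior at 0 s and 15 s with a 30-s delay: the second response
# does not restart the clock.
print(delivery_time(30, {0, 15}, []))         # 30
# Appropriate behavior reinforced from 10 s to 40 s pauses the clock for 30 s.
print(delivery_time(30, {0}, [(10, 40)]))     # 60
```

Treating the pause as a window passed in from outside keeps the timer itself simple; in a live session the same flag would be set when the therapist begins delivering the reinforcer for appropriate behavior.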

0-s/60-s delay

When the 30-s delay did not produce therapeutic decreases in problem behavior, we lengthened the delay to 60 s. For Corey, problem behavior produced 30-s access to a high-quality toy (selected from a presession MSWO) after a 60-s unsignaled delay, and appropriate behavior continued to produce immediate 30-s access to the same toy. For Henry, problem behavior produced a 30-s break from instructions after a 60-s unsignaled delay, and appropriate behavior continued to produce an immediate 30-s break.

Results and Discussion

During the 0-s/0-s delay baseline, Corey (Figure 5, top) engaged in higher rates of problem behavior than appropriate behavior. In the 0-s/30-s delay condition, problem behavior continued to occur at higher rates than appropriate behavior. We therefore implemented the 0-s/60-s delay condition, which produced a gradual decrease in problem behavior and increase in appropriate behavior. During a reversal to the 0-s/0-s delay baseline, problem behavior increased and appropriate behavior decreased. During the final reversal to the 0-s/60-s delay condition, Corey became ill with strep throat; his caregiver continued to bring him to the clinic and did not inform us of the illness until after he had begun treatment. (This period is indicated in Figure 5.) Following his illness, problem behavior ceased, and appropriate behavior increased to high, steady rates.

Figure 5.

Corey's and Henry's response rates during the delay analysis for problem behavior and appropriate behavior.

During the 0-s/0-s delay baseline, Henry (Figure 5, bottom) engaged in higher rates of problem behavior than appropriate behavior, and this pattern continued in the 0-s/30-s delay condition. In a reversal to the 0-s/0-s delay baseline, problem behavior increased slightly relative to the previous condition, and appropriate behavior decreased. During the 0-s/60-s delay condition, problem behavior decreased to zero, and appropriate behavior increased to a steady rate of two responses per minute (perfectly efficient responding given 30-s reinforcer access). These results were replicated in the subsequent reversals to the 0-s/0-s delay baseline and the 0-s/60-s delay condition.

In summary, results of the delay analysis indicate that the relative rates of problem behavior and appropriate behavior were sensitive to the delay to reinforcement following each alternative. These results replicate previous findings on the effects of unsignaled delays to reinforcement (Sizemore & Lattal, 1978; Vollmer, Borrero, Lalli, & Daniel, 1999; Williams, 1976). For example, Vollmer, Borrero, et al. (1999) showed that aggression occurred when it produced small, immediate reinforcers even though mands produced larger reinforcers after an unsignaled delay; in their study, participants displayed self-control when therapists signaled the delay to reinforcement.

It is important to note that the programmed delays were not necessarily the delays the participants experienced. An instance of problem behavior started a timer that, when it elapsed, resulted in delivery of reinforcement. To avoid extinction-like conditions, additional problem behavior during the delay did not restart or extend it. As a result, any problem behavior that occurred after the timer had started was followed by reinforcement after a delay shorter than the programmed value. This rarely occurred with Henry. By contrast, Corey's problem behavior sometimes occurred in bursts or at high rates, so some of his problem behavior was reinforced after delays shorter than the programmed 30 s or 60 s. Nevertheless, the differential delays to reinforcement following problem and appropriate behavior eventually shifted allocation toward appropriate responding. One way to address this potential limitation would be to add a differential reinforcement of other behavior (DRO) component with a resetting interval, in which each instance of problem behavior during the interval would restart it and thereby further delay reinforcement. With high-rate problem behavior, however, this DRO contingency would initially produce very low rates of reinforcement, making the condition similar to extinction. We did not add a DRO component because our aim was to evaluate treatments without extinction.
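The gap between programmed and experienced delays can be made concrete with a short sketch comparing the non-resetting rule used in Experiment 3 with a resetting rule of the kind a DRO component would impose. Assumptions: the function names are hypothetical, the VI schedule and the pause for appropriate-behavior reinforcement are ignored, and the "experienced" delay is taken to be the time from the most recent problem response to reinforcer delivery.

```python
from typing import List


def deliveries(response_times: List[float], delay_s: float,
               resetting: bool = False) -> List[float]:
    """Reinforcer delivery times for problem behavior under a delay rule.

    Non-resetting: the first response starts the delay and later responses
    within it have no effect. Resetting (DRO-like): every response restarts
    the delay.
    """
    out: List[float] = []
    due = None
    for t in sorted(response_times):
        if due is not None and t >= due:
            out.append(due)  # the pending reinforcer was delivered before this response
            due = None
        if due is None:
            due = t + delay_s
        elif resetting:
            due = t + delay_s
    if due is not None:
        out.append(due)
    return out


def experienced_delays(response_times: List[float],
                       delivery_times: List[float]) -> List[float]:
    """Time from the most recent problem response to each delivery."""
    times = sorted(response_times)
    return [d - max(t for t in times if t <= d) for d in delivery_times]


burst = [0, 10, 20]
for rule in (False, True):
    d = deliveries(burst, 30, resetting=rule)
    print("resetting" if rule else "non-resetting", d, experienced_delays(burst, d))
# non-resetting [30] [10]
# resetting [50] [30]
```

With a three-response burst, the non-resetting rule delivers the reinforcer 10 s after the last response rather than 30 s, which is the shortening described for Corey. The resetting rule preserves the full delay from the last response, but with continuous high-rate responding it postpones reinforcement indefinitely, which is why it approaches extinction.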

Another potential limitation of the current experiment was the possibility of adventitious reinforcement of chains of problem and appropriate behavior. For example, when appropriate behavior occurred during the delay interval for problem behavior and the VI schedule indicated that reinforcement was available for appropriate behavior, that response was reinforced immediately, which could have strengthened a chain of problem behavior followed by appropriate behavior. Although this did not appear to be a concern in the current experiment, one way to control for it would be to add a changeover delay (COD), which allows a response to be reinforced only if a specified interval has passed since the last changeover from the other response alternative. A COD could prevent adventitious reinforcement of such chains and produce longer runs of responding on a given alternative, and thus greater control by the relative reinforcement available for each alternative (Catania, 1966).
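A changeover delay amounts to one extra condition on reinforcement. The sketch below is hypothetical: the class, its method, and the 3-s COD value are assumptions, not parameters from this study, and `armed` stands for whether the VI schedule on that alternative has made a reinforcer available (the caller is assumed to track this). It shows how a COD blocks reinforcement of an appropriate response that immediately follows problem behavior.

```python
from typing import Optional


class ConcurrentWithCOD:
    """Hypothetical two-alternative arrangement with a changeover delay (COD)."""

    def __init__(self, cod_s: float) -> None:
        self.cod_s = cod_s
        self.current: Optional[str] = None            # alternative responded on last
        self.last_changeover: Optional[float] = None  # time of the last switch

    def respond(self, alternative: str, t: float, armed: bool) -> bool:
        """Record a response at time t; return True if it is reinforced."""
        if alternative != self.current:
            if self.current is not None:
                self.last_changeover = t  # switching alternatives starts the COD
            self.current = alternative
        cod_met = (self.last_changeover is None
                   or t - self.last_changeover >= self.cod_s)
        # Reinforce only if the VI schedule is armed AND the COD has elapsed.
        return bool(armed and cod_met)


session = ConcurrentWithCOD(cod_s=3.0)
print(session.respond("problem", 0.0, armed=False))     # False
print(session.respond("appropriate", 1.0, armed=True))  # False: within the COD
print(session.respond("appropriate", 4.0, armed=True))  # True: COD elapsed
```

Without the COD, the second response would have been reinforced 1 s after problem behavior, which is exactly the problem-then-appropriate sequence the text identifies as a candidate for adventitious reinforcement.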

EXPERIMENT 4: DURATION, QUALITY, AND DELAY

The purpose of Experiment 4 was to evaluate the effects of delivering immediate, longer duration access to high-quality reinforcement following appropriate behavior and delayed, shorter duration access to low-quality reinforcement following problem behavior. The treatment effects in the previous experiments were gradual. This was to be expected, because both responses were reinforced, but it is not an ideal clinical outcome. In addition, experimental control was unclear in several cases, and none of the experiments clearly demonstrated how reinforcement that favored appropriate behavior could be used practically as a treatment for problem behavior. Experiment 4 therefore combined all three dimensions to examine whether clinically acceptable changes in behavior could be produced by making reinforcement for appropriate behavior greater along several dimensions at once. We also assessed the maintenance and generality of treatment effects. George and Clark participated in Experiment 4.

Method

Reinforcer assessment

Before conducting the experimental analyses with George, we conducted a reinforcer assessment using procedures similar to those described in Experiment 2. We compared the reinforcing efficacy of praise (e.g., “Good job, George”) and physical contact (e.g., high fives, pats on the back) with that of reprimands (e.g., “Don't do that”) and physical contact (e.g., the therapist using his hands to block George's aggression for safety reasons). George allocated a mean of 96% of his responses to the colored toys associated with praise and physical contact.

30-s dur HQ 0-s delay/5-s dur LQ 10-s delay

As in previous experiments, equal concurrent VI 20-s VI 20-s schedules of reinforcement were in place for problem and appropriate behavior throughout the experiment. For George, appropriate behavior immediately produced 30 s of high-quality attention in the form of social praise and physical contact (e.g., high fives, pats on the back). Problem behavior produced 5 s of low-quality attention in the form of social disapproval and brief blocking of aggression after a 10-s unsignaled delay. For Clark, appropriate behavior produced immediate 30-s access to a high-preference toy, and problem behavior produced 5-s access to a low-preference toy after a 10-s unsignaled delay. Delays to reinforcement were timed in the same manner as described in Experiment 3. With both participants, we assessed maintenance of treatment effects and extended treatment across therapists. George's participation concluded with a 1-month follow-up to evaluate maintenance; his teacher conducted the final three sessions of this condition. Clark's participation concluded with a 2-month follow-up during which his teacher conducted sessions. Teachers received written descriptions of the protocol, one-on-one training with modeling of the procedures, and feedback after each session on the accuracy of their implementation.
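The Experiment 4 arrangement can be summarized as a pair of contingencies that differ on duration, quality, and delay while the VI rate stays equal. A minimal sketch under stated assumptions: quality is coded as a two-level label (`"HQ"`/`"LQ"`) standing in for whatever the preference or reinforcer assessment identified, and the names `Contingency` and `favored_dimensions` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Contingency:
    duration_s: float   # reinforcer access time
    quality: str        # "HQ" or "LQ", as identified in preference/reinforcer assessments
    delay_s: float      # unsignaled delay before delivery
    vi_s: float = 20.0  # mean interval of the VI schedule (VI 20 s for both responses)


def favored_dimensions(appropriate: Contingency, problem: Contingency) -> List[str]:
    """Dimensions on which reinforcement for appropriate behavior is better."""
    favored = []
    if appropriate.duration_s > problem.duration_s:
        favored.append("duration")
    if appropriate.quality == "HQ" and problem.quality == "LQ":
        favored.append("quality")
    if appropriate.delay_s < problem.delay_s:
        favored.append("delay")
    if appropriate.vi_s < problem.vi_s:
        favored.append("rate")
    return favored


# (appropriate, problem) pairs for the baseline and treatment conditions.
baseline: Tuple[Contingency, Contingency] = (Contingency(30, "HQ", 0), Contingency(30, "HQ", 0))
treatment: Tuple[Contingency, Contingency] = (Contingency(30, "HQ", 0), Contingency(5, "LQ", 10))

print(favored_dimensions(*baseline))   # []
print(favored_dimensions(*treatment))  # ['duration', 'quality', 'delay']
```

Listing "rate" as a checked dimension makes explicit that, in this arrangement, the rate of reinforcement never favored appropriate behavior; only the other three dimensions did.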

Results and Discussion

During the 30-s dur 1 HQ 0-s delay/30-s dur 1 HQ 0-s delay baseline, George (Figure 6, top) engaged in higher rates of problem behavior than appropriate behavior. In the 30-s dur HQ 0-s delay/5-s dur LQ 10-s delay condition, there was a decrease in problem behavior and an increase in appropriate behavior. In a reversal to baseline, there was an increase in problem behavior and a decrease in appropriate behavior. In the final reversal to the 30-s dur HQ 0-s delay/5-s dur LQ 10-s delay condition, there was a further decrease in problem behavior and an increase in appropriate behavior. At the 1-month follow-up, no problem behavior occurred, and appropriate behavior remained high.

Figure 6.

George's and Clark's response rates for problem behavior and appropriate behavior.

During the 30-s dur 1 HQ 0-s delay/30-s dur 1 HQ 0-s delay baseline, Clark (Figure 6, bottom) engaged in higher rates of problem behavior than appropriate behavior. In the initial 30-s dur HQ 0-s delay/5-s dur LQ 10-s delay condition, there was a decrease in problem behavior and an increase in appropriate behavior. In a reversal to baseline, there was an increase in problem behavior and a decrease in appropriate behavior. In a reversal to the 30-s dur HQ 0-s delay/5-s dur LQ 10-s delay condition, there was a further decrease in problem behavior and an increase in appropriate behavior. At the 2-month follow-up, no problem behavior occurred, and appropriate behavior remained high.

In summary, results of the combined analyses indicate that for these participants the relative rates of problem behavior and appropriate behavior were sensitive to a combination of the quality, delay, and duration of reinforcement following each alternative. Compared to the first three experiments, Experiment 4 resulted in clear experimental control; there were rapid changes in response allocation across conditions and consistent replications of responding under previous conditions, despite the fact that we did not include an extinction component.

There were several limitations to this experiment. We did not conduct within-subject comparisons of manipulating single versus multiple dimensions of reinforcement. In addition, the response blocking included in George's case limits conclusions about the efficacy of treatments that do not include extinction, because response blocking may function as either extinction or punishment (Lerman & Iwata, 1996). Unfortunately, George's aggression tended to cause substantial harm to others and warranted the briefest block sufficient to prevent harm. The blocking used during treatment was the same as that used in the functional analysis. Blocking did not suppress aggression in the functional analysis, and it is doubtful that it exerted any such suppressive effect during the intervention. We attempted to control for the physical contact that blocking added after problem behavior by also providing physical contact contingent on appropriate behavior.

A potential strength of this investigation was that we assessed both the maintenance and the generality of the procedures in follow-ups (1 month for George, 2 months for Clark), with George's and Clark's teachers serving as therapists in several of the sessions. George's teacher reported that he had a history of attacking peers, which made his behavior too severe to ignore. She also indicated that the presence of four other children in the room limited the amount of attention she could deliver following appropriate behavior. Clark's behavior was so severe that, prior to this investigation, he had been moved to a classroom in which he was the only student; following the investigation, he returned to a small classroom with four peers. The current procedure identified an effective treatment in which teachers delivered a relatively long duration of high-quality reinforcement immediately following some appropriate behavior and brief, low-quality reinforcement after a short delay following some problem behavior. Our specific recommendation to both teachers was to follow the procedures in Experiment 4 as closely as possible, with the caveat that each should intervene immediately for aggression that was directed toward peers or was likely to cause severe harm.

GENERAL DISCUSSION

The current experiments attempted to identify differential reinforcement procedures that were effective without extinction by manipulating several dimensions of reinforcement. We sought to extend prior research that focused solely on multiply maintained problem behavior (Piazza et al., 1997) and examined only single manipulations of reinforcement (Lalli & Casey, 1996; Piazza et al.). The present experiments showed the effectiveness of DRA that provided some combination of more immediate, longer duration, or higher quality reinforcement for appropriate behavior relative to reinforcement for problem behavior. In cases in which extinction is not feasible, the current experiments offer a method of decreasing problem behavior and increasing appropriate behavior without it. For example, if problem behavior is so severe (e.g., severe aggression, head banging on hard surfaces) that it is not possible to withhold or even delay reinforcement, it may be possible to manipulate other parameters of reinforcement, such as duration and quality, to favor appropriate behavior. If severe self-injury is maintained by attention, for example, problem behavior could result in brief social attention, whereas appropriate behavior could result in a longer duration of attention in the form of praise, smiles, conversation, laughter, and physical attention such as hugs and tickling.

One potential contribution of the current experiments was procedural. The use of intermittent schedules of reinforcement in the treatment of problem behavior had several benefits. First, these schedules likely approximate, to a degree, the schedules of reinforcement in the natural environment. At home or school, for example, it is unlikely that each instance of behavior produces reinforcement; it is more likely that only some instances of appropriate and problem behavior are reinforced and that varying amounts of time pass between reinforced episodes. Second, concurrent VI arrangements allow comparisons to and translations from experimental work on the matching law.
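For reference, the strict matching relation from that literature (Herrnstein, 1961) states that the proportion of responses allocated to an alternative equals the proportion of reinforcers obtained from it, where $B_1$ and $B_2$ are the rates of the two responses and $R_1$ and $R_2$ the rates of reinforcement they produce. The worked case below is an illustration rather than an analysis reported here: under equal programmed VI 20-s VI 20-s schedules, rate alone predicts indifference, so allocation that departs from 50% would have to reflect the other dimensions that were manipulated (duration, quality, delay).

```latex
\frac{B_1}{B_1 + B_2} = \frac{R_1}{R_1 + R_2},
\qquad
R_1 = R_2 = \frac{60\ \text{s/min}}{20\ \text{s}} = 3\ \text{per min}
\;\Rightarrow\;
\frac{B_1}{B_1 + B_2} = \frac{3}{3 + 3} = 0.5
```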

One limitation of these experiments is the brevity and varying length of the conditions. In a laboratory, it may be possible to continue each condition until a stability criterion is met (e.g., a difference of less than 5% between data points); in a clinical setting, however, it is not always possible to bring each condition to stability before exposing behavior to another condition, as was the case with Corey and Kenneth.
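The example stability criterion can be operationalized in several ways. One hypothetical reading, sketched below, treats a condition as stable when every pair of consecutive sessions among the last few differs by less than 5% of the larger value. The three-session window, the relative-difference rule, and the handling of zero rates are all assumptions, not criteria used in this study.

```python
from typing import Sequence


def is_stable(rates: Sequence[float], window: int = 3, tolerance: float = 0.05) -> bool:
    """Hypothetical stability check over the last `window` session rates."""
    if len(rates) < window:
        return False
    recent = list(rates[-window:])
    for a, b in zip(recent, recent[1:]):
        larger = max(abs(a), abs(b))
        if larger == 0:
            continue  # two zero-rate sessions are treated as identical
        if abs(a - b) / larger >= tolerance:
            return False
    return True


print(is_stable([5.0, 2.0, 2.0, 2.02, 2.01]))  # True
print(is_stable([1.0, 2.0, 1.0]))              # False
```

A relative rather than absolute tolerance is used so that the same rule can be applied to low-rate and high-rate responding; a laboratory criterion might instead compare means of successive blocks of sessions.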

A second potential limitation of the current experiments is the difference between obtained and programmed schedules of reinforcement. On a VI schedule, a reinforcer is delivered for the first response after an interval has elapsed since the last reinforcer, with the interval varying around a specified mean. Participants did not always respond immediately after the interval elapsed, which at times resulted in a leaner reinforcement schedule than programmed. However, the differences between obtained and programmed schedules were neither large nor consistent.
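The source of the programmed/obtained discrepancy can be shown numerically. The article does not state how its VI intervals were generated; the sketch below assumes the Fleshler-Hoffman progression, a common way to construct a list of VI intervals with a specified mean, and models each obtained inter-reinforcer time as the programmed interval plus the participant's latency to respond once the reinforcer became available. The function names and the example latencies are hypothetical.

```python
import math
from typing import List, Sequence


def fleshler_hoffman(mean_s: float, n: int = 12) -> List[float]:
    """n VI intervals whose arithmetic mean is mean_s (Fleshler-Hoffman progression)."""
    def xlnx(x: float) -> float:
        return 0.0 if x == 0 else x * math.log(x)

    return [mean_s * (1 + math.log(n) + xlnx(n - i) - xlnx(n - i + 1))
            for i in range(1, n + 1)]


def obtained_times(intervals: Sequence[float], latencies: Sequence[float]) -> List[float]:
    """The reinforcer goes to the first response after the interval elapses."""
    return [iv + lat for iv, lat in zip(intervals, latencies)]


def per_minute(inter_reinforcer_s: Sequence[float]) -> float:
    """Reinforcement rate implied by a list of inter-reinforcer times."""
    return 60.0 / (sum(inter_reinforcer_s) / len(inter_reinforcer_s))


vi20 = fleshler_hoffman(20.0)
print(round(sum(vi20) / len(vi20), 6))                       # programmed mean, 20.0
print(per_minute([10, 20, 30]))                              # programmed: 3.0 per min
print(per_minute(obtained_times([10, 20, 30], [0, 5, 10])))  # obtained: 2.4 per min
```

Because latencies can only add time, the obtained rate under this simplified model is never richer than the programmed one, which matches the direction of the discrepancy described above.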

Our study suggests several areas for future research. These experiments arranged concurrent VI 20-s VI 20-s schedules of reinforcement for problem and appropriate behavior. Future research could conduct similar analyses using concurrent-schedule arrangements based on naturalistic observations. The extent to which relative response allocation is similar under descriptive and experimental arrangements may suggest values of reinforcement parameters that increase both the acceptability and the integrity of treatment implementation by caregivers. For example, researchers could conduct descriptive analyses (Bijou, Peterson, & Ault, 1968) with caregivers and analyze the results in terms of reinforcers identified in a functional analysis, using procedures similar to those described by Borrero, Vollmer, Borrero, and Bourret (2005). If descriptive analysis data show that problem behavior is reinforced on average every 15 s and appropriate behavior on average every 30 s, treatment might involve reinforcing appropriate behavior on average every 15 s and problem behavior on average every 30 s.
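The 15-s/30-s example amounts to a simple calculation. A minimal sketch, assuming reinforcer delivery times (in seconds) have already been coded from a descriptive analysis and that the mean interval between successive reinforcers is an adequate summary; the helper names are hypothetical, and the rule of assigning the denser observed schedule to appropriate behavior generalizes the example rather than reproducing a published method.

```python
from typing import Dict, Sequence


def mean_interval(reinforcer_times: Sequence[float]) -> float:
    """Mean time (s) between successive observed reinforcers."""
    times = sorted(reinforcer_times)
    if len(times) < 2:
        raise ValueError("need at least two reinforcers to estimate an interval")
    gaps = [b - a for a, b in zip(times, times[1:])]
    return sum(gaps) / len(gaps)


def treatment_schedule(problem_times: Sequence[float],
                       appropriate_times: Sequence[float]) -> Dict[str, float]:
    """Assign the denser observed schedule to appropriate behavior."""
    p = mean_interval(problem_times)
    a = mean_interval(appropriate_times)
    return {"appropriate_vi_s": min(p, a), "problem_vi_s": max(p, a)}


# Problem behavior reinforced about every 15 s, appropriate behavior every 30 s.
print(treatment_schedule([0, 15, 30, 45, 60], [0, 30, 60]))
# {'appropriate_vi_s': 15.0, 'problem_vi_s': 30.0}
```

Intervals are measured between successive reinforcers only, so time before the first observed reinforcer is ignored; a fuller analysis would also account for observation length.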

Investigations similar to the current experiments could further explore the dimensions of quality, duration, and delay with more participants and with additional values of these dimensions. Future researchers could also investigate the effects of simultaneous manipulations of several dimensions of reinforcement as treatment for problem behavior. For example, when it is not possible to withhold reinforcement for problem behavior, treatment may still be effective even if the rate of reinforcement continues to favor problem behavior, provided that several other dimensions of reinforcement, such as magnitude, quality, and duration, favor appropriate behavior. This area of research may lead to more practical and widely adopted interventions for problem behavior.
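One way to frame this question quantitatively, offered as an assumption rather than a model tested in these experiments, is the concatenated form of the generalized matching law, in which the ratio of responses is a product of reinforcer ratios on each dimension, each raised to a sensitivity exponent. The sensitivity values below are invented for illustration and are not estimates from any participant; the function name is hypothetical.

```python
from typing import Dict


def predicted_ratio(ratios: Dict[str, float],
                    sensitivities: Dict[str, float],
                    bias: float = 1.0) -> float:
    """Predicted B_appropriate / B_problem under concatenated generalized matching.

    Each ratio is expressed as appropriate:problem, so values > 1 favor
    appropriate behavior. Missing sensitivities default to 1 (strict matching).
    """
    result = bias
    for dimension, ratio in ratios.items():
        result *= ratio ** sensitivities.get(dimension, 1.0)
    return result


# Hypothetical case: problem behavior is reinforced twice as often (rate ratio 0.5),
# but appropriate behavior earns 30-s rather than 5-s access (duration ratio 6).
r = predicted_ratio({"rate": 0.5, "duration": 6.0}, {"rate": 0.8, "duration": 0.5})
print(round(r, 2), round(r / (1 + r), 2))  # response ratio and proportion to appropriate behavior
```

Delay is left out of the example because it would enter as an immediacy ratio (the inverse of the delay ratio), which is undefined when one alternative's delay is 0 s, as in Experiment 4.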

Acknowledgments

We thank Brian Iwata, Lise Abrams, and Stephen Smith for their comments on an earlier draft of this manuscript. Portions of this manuscript were included as part of the dissertation of the first author at the University of Florida.

REFERENCES

- Baum W.M. Choice in free-ranging wild pigeons. Science. 1974;185:78–79. doi: 10.1126/science.185.4145.78.

- Bijou S.W, Peterson R.F, Ault M.H. A method to integrate descriptive and experimental field studies at the level of data and empirical concepts. Journal of Applied Behavior Analysis. 1968;1:175–191. doi: 10.1901/jaba.1968.1-175.

- Borrero C.S.W, Vollmer T.R, Borrero J.C, Bourret J. A method for evaluating the dimensions of reinforcement in parent-child interactions. Research in Developmental Disabilities. 2005;26:577–592. doi: 10.1016/j.ridd.2004.11.010.

- Bostow D.E, Bailey J.B. Modification of severe disruptive and aggressive behavior using brief timeout and reinforcement procedures. Journal of Applied Behavior Analysis. 1969;2:31–37. doi: 10.1901/jaba.1969.2-31.

- Catania A.C. Concurrent performances: A baseline for the study of reinforcement magnitude. Journal of the Experimental Analysis of Behavior. 1963;6:299–300. doi: 10.1901/jeab.1963.6-299.

- Catania A.C. Concurrent operants. In: Honig W.K, editor. Operant behavior: Areas of research and application. Englewood Cliffs, NJ: Prentice Hall; 1966. pp. 213–270.

- Conger R, Killeen P. Use of concurrent operants in small group research. Pacific Sociological Review. 1974;17:399–416.

- Cooper J.O, Heron T.E, Heward W.L. Applied behavior analysis (2nd ed.). Upper Saddle River, NJ: Prentice Hall; 2007.

- Deitz D.E.D, Repp A.C. Reducing behavior through reinforcement. Exceptional Education Quarterly. 1983;3:34–46.

- DeLeon I.G, Iwata B.A. Evaluation of a multiple-stimulus presentation format for assessing reinforcer preferences. Journal of Applied Behavior Analysis. 1996;29:519–532. doi: 10.1901/jaba.1996.29-519.

- Dwyer-Moore K.J, Dixon M.R. Functional analysis and treatment of problem behavior of elderly adults in long-term care. Journal of Applied Behavior Analysis. 2007;40:679–683. doi: 10.1901/jaba.2007.679-683.

- Ferster C.B, Skinner B.F. Schedules of reinforcement. New York: Appleton-Century-Crofts; 1957.

- Fisher W, Piazza C.C, Bowman L.G, Hagopian L.P, Owens J.C, Slevin I. A comparison of two approaches for identifying reinforcers for persons with severe and profound disabilities. Journal of Applied Behavior Analysis. 1992;25:491–498. doi: 10.1901/jaba.1992.25-491.

- Fisher W, Piazza C.C, Cataldo M.F, Harrell R, Jefferson G, Conner R. Functional communication training with and without extinction and punishment. Journal of Applied Behavior Analysis. 1993;26:23–36. doi: 10.1901/jaba.1993.26-23.

- Hagopian L.P, Fisher W.W, Sullivan M.T, Acquisto J, LeBlanc L.A. Effectiveness of functional communication training with and without extinction and punishment: A summary of 21 inpatient cases. Journal of Applied Behavior Analysis. 1998;31:211–235. doi: 10.1901/jaba.1998.31-211.

- Herrnstein R.J. Relative and absolute strength of response as a function of frequency of reinforcement. Journal of the Experimental Analysis of Behavior. 1961;4:267–272. doi: 10.1901/jeab.1961.4-267.

- Herrnstein R.J, Loveland D.H. Maximizing and matching on concurrent ratio schedules. Journal of the Experimental Analysis of Behavior. 1975;24:107–116. doi: 10.1901/jeab.1975.24-107.

- Hoch H, McComas J.J, Johnson L, Faranda N, Guenther S.L. The effects of magnitude and quality of reinforcement on choice responding during play activities. Journal of Applied Behavior Analysis. 2002;35:171–181. doi: 10.1901/jaba.2002.35-171.

- Iwata B.A, Dorsey M.F, Slifer K.J, Bauman K.E, Richman G.S. Toward a functional analysis of self-injury. Journal of Applied Behavior Analysis. 1994;27:197–209. doi: 10.1901/jaba.1994.27-197. (Reprinted from Analysis and Intervention in Developmental Disabilities, 2, 3–20, 1982)

- Iwata B.A, Pace G.M, Cowdery G.E, Miltenberger R.G. What makes extinction work: An analysis of procedural form and function. Journal of Applied Behavior Analysis. 1994;27:131–144. doi: 10.1901/jaba.1994.27-131.

- Lalli J.S, Casey S.D. Treatment of multiply controlled problem behavior. Journal of Applied Behavior Analysis. 1996;29:391–395. doi: 10.1901/jaba.1996.29-391.

- Lalli J.S, Vollmer T.R, Progar P.R, Wright C, Borrero J, Daniel D, et al. Competition between positive and negative reinforcement in the treatment of escape behavior. Journal of Applied Behavior Analysis. 1999;32:285–296. doi: 10.1901/jaba.1999.32-285.

- Lerman D.C, Iwata B.A. A methodology for distinguishing between extinction and punishment effects of response blocking. Journal of Applied Behavior Analysis. 1996;29:231–233. doi: 10.1901/jaba.1996.29-231.

- Lerman D.C, Kelley M.E, Vorndran C.M, Kuhn S.A.C, LaRue R.H.J. Reinforcement magnitude and responding during treatment with differential reinforcement. Journal of Applied Behavior Analysis. 2002;35:29–48. doi: 10.1901/jaba.2002.35-29.

- MacDonall J.S. Earning and obtaining reinforcers under concurrent interval scheduling. Journal of the Experimental Analysis of Behavior. 2005;84:167–183. doi: 10.1901/jeab.2005.76-04.

- Mace F.C, McCurdy B, Quigley E.A. A collateral effect of reward predicted by matching theory. Journal of Applied Behavior Analysis. 1990;23:197–205. doi: 10.1901/jaba.1990.23-197.

- Mace F.C, Neef N.A, Shade D, Mauro B.C. Limited matching on concurrent-schedule reinforcement of academic behavior. Journal of Applied Behavior Analysis. 1994;27:585–596. doi: 10.1901/jaba.1994.27-585.

- Mace F.C, Roberts M.L. Factors affecting selection of behavioral interventions. In: Reichle J, Wacker D.P, editors. Communicative alternatives to challenging behavior: Integrating functional assessment and intervention strategies. Baltimore: Brookes; 1993. pp. 113–134.

- Martens B.K, Houk J.L. The application of Herrnstein's law of effect to disruptive and on-task behavior of a retarded adolescent girl. Journal of the Experimental Analysis of Behavior. 1989;51:17–27. doi: 10.1901/jeab.1989.51-17.

- McCord B.E, Thomson R.J, Iwata B.A. Functional analysis and treatment of self-injury associated with transitions. Journal of Applied Behavior Analysis. 2001;34:195–210. doi: 10.1901/jaba.2001.34-195.

- Neef N.A, Mace F.C, Shade D. Impulsivity in students with serious emotional disturbance: The interactive effects of reinforcer rate, delay, and quality. Journal of Applied Behavior Analysis. 1993;26:37–52. doi: 10.1901/jaba.1993.26-37.

- Neef N.A, Mace F.C, Shea M.C, Shade D. Effects of reinforcer rate and reinforcer quality on time allocation: Extensions of matching theory to educational settings. Journal of Applied Behavior Analysis. 1992;25:691–699. doi: 10.1901/jaba.1992.25-691.

- Neef N.A, Shade D, Miller M.S. Assessing influential dimensions of reinforcers on choice in students with serious emotional disturbance. Journal of Applied Behavior Analysis. 1994;27:575–583. doi: 10.1901/jaba.1994.27-575.

- Piazza C.C, Bowman L.G, Contrucci S.A, Delia M.D, Adelinis J.D, Goh H. An evaluation of the properties of attention as reinforcement for destructive and appropriate behavior. Journal of Applied Behavior Analysis. 1999;32:437–449. doi: 10.1901/jaba.1999.32-437.

- Piazza C.C, Fisher W.W, Hanley G.P, Remick M.L, Contrucci S.A, Aitken T.L. The use of positive and negative reinforcement in the treatment of escape-maintained destructive behavior. Journal of Applied Behavior Analysis. 1997;30:279–298. doi: 10.1901/jaba.1997.30-279.

- Shores R.E, Jack S.L, Gunter P.L, Ellis D.N, DeBriere T.J, Wehby J.H. Classroom interactions of children with behavior disorders. Journal of Emotional and Behavioral Disorders. 1993;1:27–39.

- Sizemore O.J, Lattal K.A. Unsignalled delay of reinforcement in variable-interval schedules. Journal of the Experimental Analysis of Behavior. 1978;30:169–175. doi: 10.1901/jeab.1978.30-169.

- Ten Eyck R.L, Jr. Effects of rate of reinforcement-time upon concurrent operant performance. Journal of the Experimental Analysis of Behavior. 1970;14:269–274. doi: 10.1901/jeab.1970.14-269.

- Volkert V.M, Lerman D.C, Call N.A, Trosclair-Lasserre N. An evaluation of resurgence during treatment with functional communication training. Journal of Applied Behavior Analysis. 2009;42:145–160. doi: 10.1901/jaba.2009.42-145.

- Vollmer T.R, Borrero J.C, Lalli J.S, Daniel D. Evaluating self-control and impulsivity in children with severe behavior disorders. Journal of Applied Behavior Analysis. 1999;32:451–466. doi: 10.1901/jaba.1999.32-451.

- Vollmer T.R, Iwata B.A. Differential reinforcement as treatment for behavior disorders: Procedural and functional variations. Research in Developmental Disabilities. 1992;13:393–417. doi: 10.1016/0891-4222(92)90013-v.

- Vollmer T.R, Roane H.S, Ringdahl J.E, Marcus B.A. Evaluating treatment challenges with differential reinforcement of alternative behavior. Journal of Applied Behavior Analysis. 1999;32:9–23.

- Williams B.A. The effects of unsignalled delayed reinforcement. Journal of the Experimental Analysis of Behavior. 1976;26:441–449. doi: 10.1901/jeab.1976.26-441.

- Worsdell A.S, Iwata B.A, Hanley G.P, Thompson R.T, Kahng S.W. Effects of continuous and intermittent reinforcement for problem behavior during functional communication training. Journal of Applied Behavior Analysis. 2000;33:167–179. doi: 10.1901/jaba.2000.33-167.