Abstract

While there seems to be relatively wide agreement about perceptual decision making relying on integration-to-threshold mechanisms, proposed models differ in a variety of details. This study compares a range of mechanisms for multi-alternative perceptual decision making, including integration with and without leakage, feedforward and feedback inhibition for mediating the competition between integrators, as well as linear and non-linear mechanisms for combining signals across alternatives. It is shown that a number of mechanisms make very similar predictions for the decision behavior and are therefore able to explain previously published data from a multi-alternative perceptual decision task. However, it is also demonstrated that the mechanisms differ in their internal dynamics and therefore make different predictions for neurophysiological experiments. The study further addresses the relationship of these mechanisms with decision theory and statistical testing and analyzes their optimality.

Keywords: behavior, decision theory, mathematical models, multiple alternatives, neurophysiology, perceptual decisions

Introduction

Perceptual decision making involves selecting an appropriate action based on sensory evidence at an appropriate time. While there seems to be general agreement that perceptual decisions, at least those with response times that clearly exceed simple motor reaction times, involve some kind of integration-to-threshold mechanism, which accumulates sensory evidence until a decision boundary is crossed, there is still considerable debate about the details of the decision mechanism and their neural implementation. Furthermore, an optimal algorithm is known for decisions between two alternatives based on sensory evidence that fluctuates over time: the sequential probability ratio test (SPRT; Wald, 1945). For decisions between more than two alternatives, in contrast, we currently know only an algorithm that is asymptotically optimal in the limit of negligible error rates (Dragalin et al., 1999).

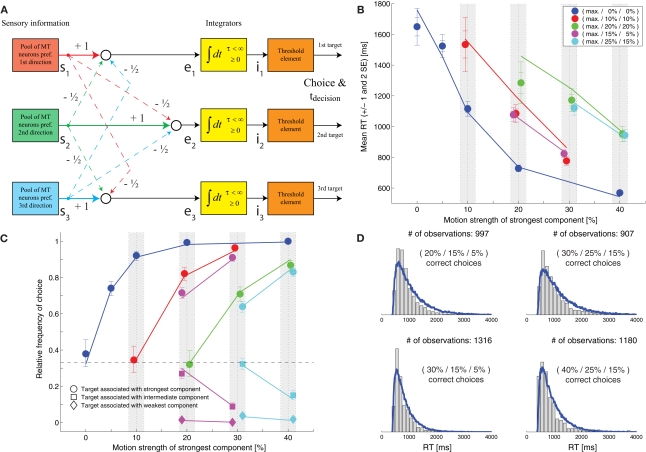

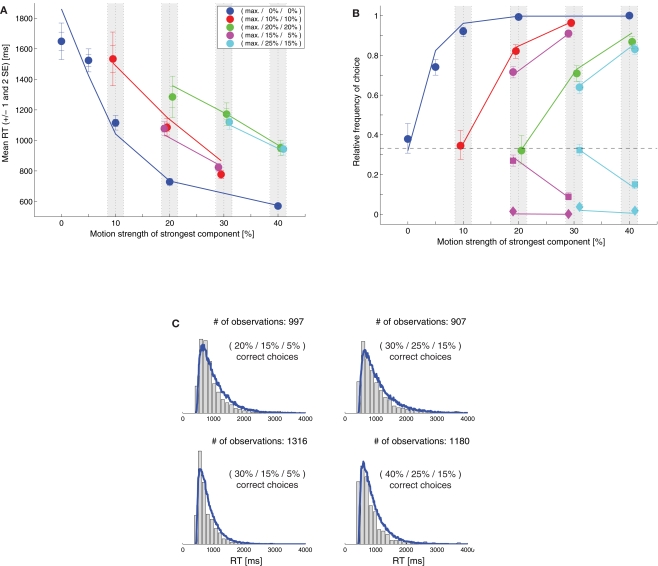

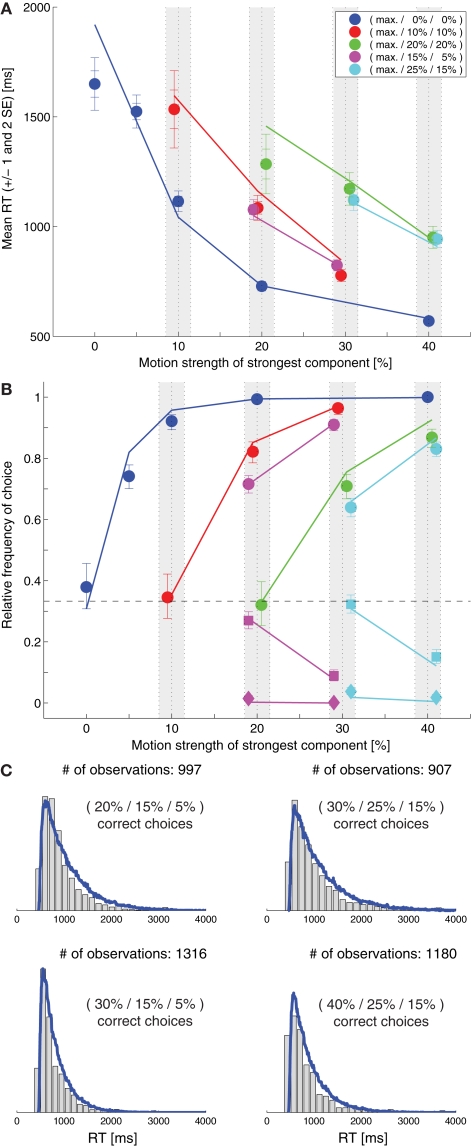

Niwa and Ditterich (2008) presented a human behavioral dataset from a three-alternative random-dot motion direction-discrimination task, spanning a wide range of accuracy levels (from chance to perfect) and mean response times (with more than a second difference between the fastest and the slowest task condition). The observers made a judgment about the direction of the strongest motion component in a multi-component stimulus, allowing independent control of how much sensory evidence was provided for each of the alternatives and therefore an independent manipulation of speed and accuracy. Details on the behavioral dataset can be found in Niwa and Ditterich (2008). Briefly, human observers watched a random-dot motion stimulus with three embedded coherent motion components, moving in three different directions all separated by 120°. The task was to identify the direction of the strongest motion component. The subjects were allowed to watch the stimulus as long as they wanted and responded with a goal-directed eye movement to one of three choice targets. Choices and response times were measured. On each trial the computer randomly picked a combination of coherences from 51 possible trial types. The symbols in Figure 1C show the choice data; mean response times (RTs) are shown in Figure 1B. The position on the horizontal axis indicates the coherence of the strongest motion component in the stimulus; the other two coherences are defined by the color of the symbol (see legend). In the choice plot, circles represent correct choices, squares are choices of the direction of the intermediate motion component, and diamonds are choices of the direction of the weakest motion component.
Figure 1C indicates that accuracy improved with stronger sensory evidence for making a particular choice (without changing the coherences of the components providing evidence for one of the alternative choices; same color in the plot), that the psychometric function was getting shallower the more distracting sensory evidence was present (from blue to red to green), and that the relative frequencies of the three possible choices followed the order of the coherences of the three motion components (circles above squares above diamonds). Figure 1B shows that responses were, on average, faster when stronger sensory evidence was presented for a particular choice (again without changing the coherences of the components providing evidence for one of the alternative choices; same color in the plot). One of the striking features of our behavioral dataset is that accuracy and mean RT can change independently. For example, all stimuli with three identical motion strengths are associated with choice performance at chance level, but the mean RTs are quite different for the different coherences (faster responses for higher coherence; see the leftmost blue, red, and green symbols in Figures 1B,C). Likewise, whereas mean RT was largely governed by the average of the two lower coherences (high degree of overlap between red and magenta symbols and green and cyan symbols in Figure 1B), accuracy was more sensitive to the individual coherences (larger separation between red and magenta circles and green and cyan circles in Figure 1C).

Figure 1.

Slightly leaky feedforward inhibition model. (A) Structure of the model. Solid lines indicate excitatory connections, dashed lines inhibitory connections. (B) Fit of the model (lines) to the mean response time data (symbols). The coherence of the strongest motion component is plotted on the horizontal axis. The color codes for the strengths of the other two components. Data points within the light gray bars, which would normally all be aligned with the center of the bar, have been shifted horizontally for presentation purposes to reduce overlap. (C) Comparison between the model's choice predictions (lines) and the behavioral data (symbols). The error bars indicate 95% confidence intervals. (D) Comparison between the model's predictions for some RT distributions (blue lines) and the behavioral data (gray histograms).

In Niwa and Ditterich (2008) we also demonstrated that both the datasets from individual subjects and the pooled dataset (which we focus on in this study) are consistent with a decision mechanism that assumes a race-to-threshold between three independent integrators, each of which accumulates a different linear combination of three relevant sensory signals. Our model made use of perfect integrators, and feedforward inhibition was responsible for mediating competition between the alternative choices. However, many alternative models of multi-alternative perceptual decision making assume purely excitatory feedforward connections, propose feedback inhibition as the mechanism responsible for mediating competition between the choice alternatives, and involve imperfections of the integrators, like leakiness or saturation effects (Usher and McClelland, 2001; McMillen and Holmes, 2006; Bogacz et al., 2007; Beck et al., 2008; Furman and Wang, 2008; Albantakis and Deco, 2009).

It was therefore natural to ask whether our behavioral dataset was also consistent with some of these alternative model architectures. We chose to use one aspect of the behavioral dataset, the mean RTs, to fit these models and to test their predictive power for the remaining aspects of the dataset, the choice proportions and the RT distributions. As we will see, the behavioral data cannot distinguish well between different decision mechanisms. We therefore wanted to know whether different models would make different predictions for decision-related internal signals, which should allow a dissociation of the competing models based on neurophysiological experiments. Furthermore, we wanted to discuss how different model structures relate to decision theory, in particular the multihypothesis sequential probability ratio test (MSPRT; Dragalin et al., 1999). To be able to fit models to our data we will focus on abstract stochastic process models with only a small number of parameters rather than more complex biologically inspired models like Furman and Wang (2008) or Albantakis and Deco (2009), which require a different approach, but we will consider decision mechanisms based on both feedforward and feedback inhibition, integration with and without leakage, saturating integration, and non-linear mechanisms for a closer approximation of MSPRT (Bogacz and Gurney, 2007; Bogacz, 2009; Zhang and Bogacz, 2010). Finally, since MSPRT is only known to be optimal for the asymptotic case of negligible error rates, we wanted to address the optimality of different decision mechanisms over a wider range of error rates since human/animal decisions do not tend to be perfect.

Materials and Methods

Analysis of the behavioral data

Details on the data analysis can be found in Niwa and Ditterich (2008). The 95% confidence limits for the probabilities of making a particular choice are based on a method proposed by Goodman (1965).

Model of the sensory response to the multi-component random-dot patterns

All of the models for explaining the behavioral data rely on the same representation of the sensory information as the input to the decision stage. As in Niwa and Ditterich (2008), the response of three pools of sensory neurons tuned to the three possible directions of coherent motion in the stimulus was modeled as three independent normal (Gaussian) random processes with means of

with j ranging from 1 to 3. Each stimulus is characterized by a set of three coherences c1, c2, and c3. cj is the coherence (between 0 and 1) of the motion component in the preferred direction of a particular pool of neurons. The first term of the sum in the numerator represents a strong linear response to coherent motion in the preferred direction. The second term reflects a weaker linear response to the noise component of the stimulus. The denominator reflects a divisive normalization process. Most of the models discussed here assume that the variance of each stochastic process scales linearly with its mean:

$$\sigma_j^2 = k_v \, \mu_j$$

Only the LCA model with fixed variance assumes that the variances of the random processes are all the same and do not depend on the stimulus.

The means and the variances expressed by these formulas are means and variances per unit time, which we defined to be 1 ms. Thus, when performing a simulation with larger time steps, as we did (see below), both means and variances need to be multiplied accordingly when generating the random samples (by a factor of 5 for a time step of 5 ms).
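
The scaling with the time step can be illustrated with a minimal Python sketch (the simulations in this study were run in MATLAB; the function name `sample_sensory_input` and the per-millisecond mean used below are hypothetical and serve only as an illustration). Both the mean and the variance are multiplied by the step size, so the standard deviation grows only with its square root.

```python
import numpy as np

def sample_sensory_input(mean_per_ms, var_per_ms, dt_ms, n_steps, rng):
    """Draw Gaussian samples for simulation steps of dt_ms milliseconds.

    Mean and variance are given per 1 ms and are both multiplied by dt_ms,
    which means the standard deviation scales with sqrt(dt_ms).
    """
    step_mean = mean_per_ms * dt_ms
    step_sd = np.sqrt(var_per_ms * dt_ms)
    return rng.normal(step_mean, step_sd, size=n_steps)

rng = np.random.default_rng(0)
mu = 0.004   # hypothetical mean per ms (not a value from the paper)
kv = 0.332   # Fano factor of the first model listed in Table 1
samples = sample_sensory_input(mu, kv * mu, dt_ms=5, n_steps=200_000, rng=rng)

# The empirical mean and variance should be close to 5 * mu and 5 * kv * mu.
print(samples.mean(), samples.var())
```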

Simulation of the decision models and fit to the mean response time data

All models were simulated in MATLAB (The MathWorks, Natick, MA, USA) using the Stochastic Integration Modeling Toolbox developed by the author, which can be downloaded from http://master.peractionlab.org/software/. A temporal resolution of 5 ms was chosen for all simulations. Optimal model parameter sets were obtained through an optimization procedure using a multi-dimensional simplex algorithm (provided by MATLAB's Optimization Toolbox). Simulations with 10,000 trials per experimental condition were run in parallel on a small computer cluster (using MATLAB's Parallel Computing Toolbox and Distributed Computing Server) to minimize the sum of the squared differences between the mean RTs in the data and the mean RTs predicted by the model, taking the standard errors of the estimated means into account. We used the mean RTs for each combination of coherences, regardless of choice (15 data points). For the model these were obtained by calculating a weighted sum of the predicted mean RTs for the different choices based on the predicted probabilities of these choices. The set of model parameters yielding the smallest error (shown in brackets in the column “Remaining error” in Table 1) was then used to perform a higher-resolution simulation with 50,000 trials per condition to obtain the model's predicted behavior. The remaining error resulting from this simulation is shown in front of the brackets in the column “Remaining error” in Table 1. These simulations yield distributions of decision times. A fixed residual time tres was added to convert these into distributions of response times.

Leaky integrators are characterized by an exponential decay of their state toward 0 in the absence of any other input. How quickly an integrator decays is defined by the integration time constant τ. After a time τ the state has decayed to 37% of its initial value.
Mathematically, the leakage shows up in the differential equation describing the integrator as a term that is proportional to the current integrator state with a negative sign:
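
A leaky integrator of this kind can be sketched as follows, assuming that x denotes the integrator state and e(t) its input (both symbols are chosen here for illustration):

$$\frac{dx}{dt} = -\frac{x}{\tau} + e(t)$$

Without input (e = 0) the solution is

$$x(t) = x(0)\, e^{-t/\tau}, \qquad x(\tau) = x(0)\, e^{-1} \approx 0.37\, x(0),$$

which is the 37% mentioned above. Under the same assumption the leak coefficient per unit time equals 1/τ. For example, τ = 714 ms corresponds to 1/714 ≈ 0.00140 per ms, which agrees with the value of b listed for the feedback inhibition model with scaling variance in Table 1.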

Table 1.

Model parameters.

| Model | Gain of sensory response (g) | Response to stimulus noise (kn) | Strength of divisive normalization (ks) | (Population) Fano factor (kv) | Fixed variance | Offset | (Effective) integration time constant (ms; τ or τeff) | Decision threshold | Residual time (ms; tres) | Remaining error after mean RT fit | Percentage of predicted choice data points within 95% confidence intervals | Quality of RT distribution match: mean intersection/mean fidelity |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Slightly leaky feedforward inhibition model (Figure 1) | 0.0101 | 0.120 | 2.01 | 0.332 | N/A | N/A | 714 (imposed; chosen to match feedback inhibition model) | 1 (imposed) | 353 | 97 (49) | 87 | 0.858/0.984 |
| Very leaky feedforward inhibition model (Figure 2) | 0.0315 | 0.135 | 1.83 | 0.675 | N/A | N/A | 50 (imposed) | 1 (imposed) | 477 | 80 (50) | 83 | 0.822/0.926 |
| Feedback inhibition (LCA) model with scaling variance (Figure 4) | 0.0117 | 0.0928 | 2.78 | 0.417 | N/A | N/A | 714 (b = 0.00140 for a time unit of 1 ms) | 1 (imposed) | 404 | 84 (75) | 65 | 0.829/0.935 |
| Feedback inhibition (LCA) model with fixed variance (Figure 5) | 0.0131 | 0.0967 | 2.29 | N/A | 0.000407 | N/A | 694 (b = 0.00144 for a time unit of 1 ms) | 1 (imposed) | 392 | 61 (44) | 74 | 0.751/0.920 |
| Feedforward MSPRT model (Figure 6) | 0.0192 | 0.0975 | 2.08 | 0.434 | N/A | N/A | ∞ (imposed) | −0.383 | 390 | 92 (65) | 61 | 0.773/0.928 |
| Feedback MSPRT model (Figure 8) | 0.0174 | 0.101 | 2.19 | 0.696 | N/A | 1.47 | ∞ (imposed) | 1 (imposed) | 447 | 81 (69) | 57 | 0.841/0.926 |

Parameters in bold have been determined through optimization. The first number in the “Remaining error” column indicates the remaining error after a high-resolution simulation (50,000 trials per experimental condition) of the model with the indicated parameter set. The second number in brackets indicates the smallest error that was seen during the optimization process based on a lower-resolution simulation (10,000 trials per condition).

None of the simulations of models that were also used for making predictions for neurophysiology allowed negative integrator states (indicated as “≥0” in the signal flow diagrams). This was implemented by setting an integrator state to 0 in any given time step if, without restrictions, it would have ended up with a negative state. Only the simulations of the model shown in Figure 6 permitted negative integrator states, since this model was excluded from the discussion of neurophysiological predictions because of its unrealistic predictions for the threshold-crossing activity of the winning integrator (shown in Figure 6D). All of the shown trajectories of internal signals are based on simulations with 10,000 trials per condition.
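
The restriction to non-negative integrator states described above can be sketched in a few lines of Python (an illustration only; the function name `step_nonnegative` and the example numbers are hypothetical):

```python
import numpy as np

def step_nonnegative(x, dx):
    """Advance the integrator states by dx and reset any negative result to 0."""
    return np.maximum(x + dx, 0.0)

x = np.array([0.02, 0.30, 0.10])     # current states of the three integrators
dx = np.array([-0.05, 0.01, -0.10])  # net change in this time step
x_next = step_nonnegative(x, dx)     # the first state would be negative and is set to 0
```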

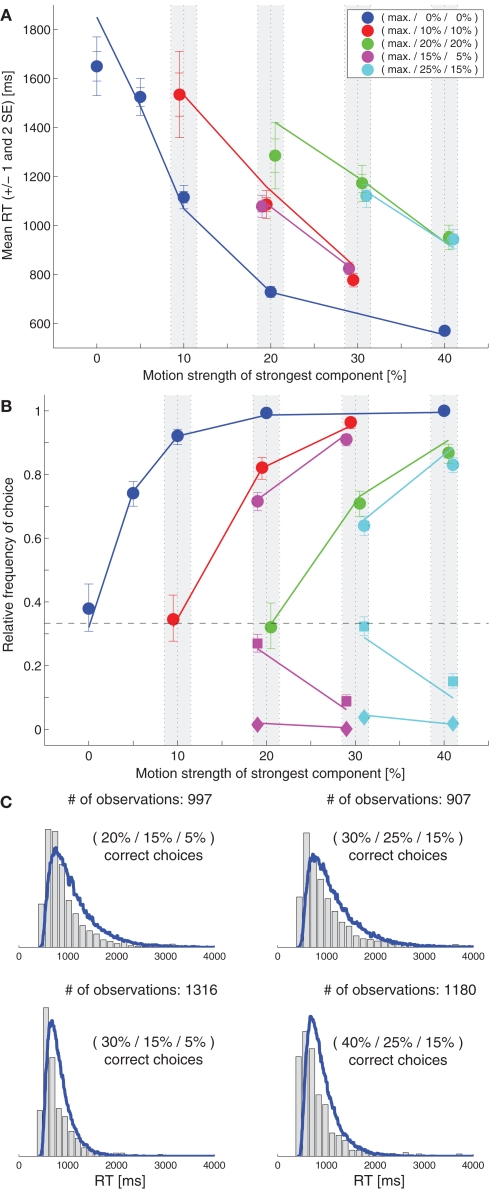

Figure 6.

Feedforward MSPRT model. (A) Structure of the model. (B) Fit of the model (lines) to the mean response time data (symbols). (C) Comparison between the model's choice predictions (lines) and the actual data (symbols). (D) Model predictions for the state of the winning integrator just prior to decision threshold crossing. Line width codes for the coherence of the strongest motion component (thicker = higher coherence), line color codes for the other two motion strengths. The integrator state at threshold crossing is very different for different combinations of motion coherence.

Simulations for comparing the optimality of different decision mechanisms

To obtain the relationship between error rate and mean sample size for each of the compared mechanisms, 20,000 trials were simulated for a variety of decision thresholds. For these simulations the integration range was unlimited, meaning that any integrator state was allowed, to provide a fair comparison between the feedforward and the feedback inhibition models and MSPRT, which also requires non-saturating integrators for a true implementation. For the feedforward inhibition mechanism the integrators xk (k = 1…3) were updated in each time step n according to

with l defining the leakiness of the integrators and ek,n being the current sensory evidence (linear combinations of random samples). For the feedback inhibition mechanism the integrators were updated according to

with b being the strength of the inhibitory feedback and ek,n again being the current sensory evidence, which, in this case, are just the random samples.
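
The two update rules can be sketched in Python as follows. This is a sketch under stated assumptions rather than the original implementation: the exact form of the updates (leak term `-leak * x`, shared inhibitory term `-b * sum(x)`), the feedforward weights (excitation from one pool, inhibition by the mean of the other two), the starting point of 0, and all numerical values are assumptions chosen for illustration.

```python
import numpy as np

def feedforward_step(x, samples, leak):
    """Leaky integration of evidence formed by feedforward inhibition.

    Assumed evidence: own sample minus the mean of the other two samples.
    """
    evidence = samples - (samples.sum() - samples) / 2.0
    return x - leak * x + evidence

def feedback_step(x, samples, b):
    """Balanced feedback inhibition: leak and lateral inhibition share strength b,
    so every integrator is inhibited by b times the summed state."""
    return x - b * x.sum() + samples

def run_trial(step, param, means, sd, threshold, rng, max_steps=100_000):
    """Simulate one trial with an unlimited integration range.

    Returns the index of the winning integrator and the number of samples used.
    """
    x = np.zeros(3)
    for n in range(1, max_steps + 1):
        x = step(x, rng.normal(means, sd), param)
        if x.max() >= threshold:
            return int(x.argmax()), n
    return -1, max_steps  # no decision within max_steps

rng = np.random.default_rng(1)
means = np.array([0.03, 0.02, 0.02])  # hypothetical mean sensory samples per step
choice, n_samples = run_trial(feedback_step, 0.007, means, 0.1, 1.0, rng)
```

Repeating `run_trial` many times for a range of thresholds and recording the proportion of trials with `choice != 0` together with the average of `n_samples` would give an error rate versus mean sample size curve of the kind described above.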

Results

Models based on feedforward inhibition

We will start with a model that is similar to the one presented in Niwa and Ditterich (2008). The structure of this model is shown in Figure 1A. Three independent integrators race against each other until the first one reaches a fixed decision threshold, which determines the choice and the decision time. Each of the integrators accumulates a different linear combination of three relevant sensory signals (with one sensory pool providing feedforward excitation and the other two pools providing feedforward inhibition). Each sensory pool shows a linear response to the coherent motion in its preferred direction as well as a weaker linear response to the noise (non-coherent) component of the stimulus. Simultaneous activation of multiple sensory pools by coherent motion induces divisive normalization, and the variance of the response of a sensory pool is proportional to its mean. The formulas describing the sensory response can be found in section “Materials and Methods.” The response time is determined by adding a constant residual (non-decision) time to the decision time. The model shown in Figure 1 is different from the one presented in Niwa and Ditterich (2008) in two aspects: the integrators are leaky and saturate. The leakiness was chosen to be identical to the one of a feedback inhibition model, which will be discussed later, to be able to make a direct comparison between both models. As we will see below, the feedforward inhibition model is able to explain the behavioral data for a wide range of integration time constants. The second modification is motivated by a biological constraint: since we want to look at model predictions for neurophysiological data later in this manuscript and since neural integrators cannot represent negative values by their firing rates, we imposed the constraint that the integrators cannot assume negative values. An upper limit is naturally given by the decision threshold. 
Since one of the model parameters can be chosen arbitrarily we defined the decision threshold to be 1. The starting point of the integration was chosen to be 1/3 of the decision threshold. This choice was informed by a preliminary analysis of recordings from the lateral intraparietal area (LIP) in monkeys performing the same task (Bollimunta and Ditterich, 2009), but is also consistent with the findings of Churchland et al. (2008). The exact location of the starting point turns out not to be too critical for being able to explain the behavioral data (data not shown). Figure 1B shows a fit of the model to the mean RT data. The symbols represent the data, the lines the model. The model parameters can be found in Table 1. This table also lists the remaining error after the fit as a goodness of fit measure with smaller numbers indicating a better fit. However, these numbers need to be taken with a grain of salt. All model calculations are based on simulations and even when simulating 50,000 trials per experimental condition this number shows a standard deviation of roughly 2.5 when repeating simulations with the same parameter set. Furthermore, the model fitting procedure had to be based on lower-resolution simulations (10,000 trials per condition), making the error during the optimization process even more variable. Once the mean RT data have been used for fitting the model, other aspects of the dataset can be used for testing the model. Figure 1C shows a comparison between the model's predictions for the choice data (lines) with the actual choice data (symbols). Figure 1D compares the model's predictions for the RT distributions (blue lines) with the actual RT distributions (gray histograms) for the four combinations of coherences for which we have the largest number of trials. 
The results indicate that adding some leakiness to the integrators and limiting the integration range to positive values (prohibiting negative integrator states) does not impact the model's ability to account for the behavioral data.

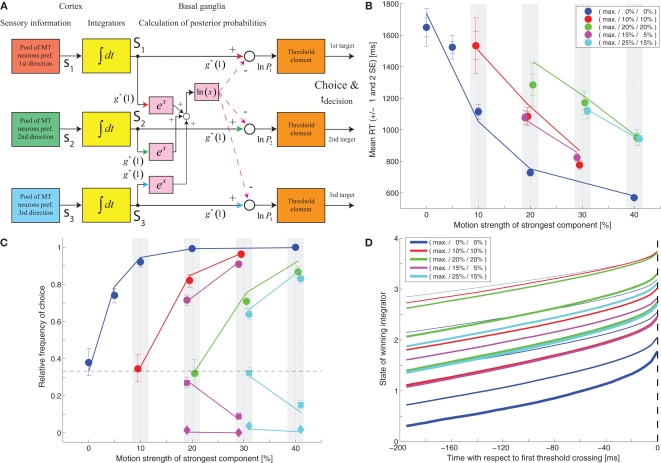

We had previously demonstrated that a feedforward inhibition model was able to capture the behavior of monkeys performing a two-choice random-dot motion discrimination task (Roitman and Shadlen, 2002) over a wide range of integration time constants (degrees of leakiness of the integrators; see section “Simulation of the decision models and fit to the mean response time data” for an explanation; Ditterich, 2006). It turns out that the same is true for our human three-choice dataset. Figure 2A shows the results of fitting a model with the same structure as the one shown in Figure 1, but with very leaky integrators (integration time constant of just 50 ms), to the mean RT data. The model parameters can again be found in Table 1. Figures 2B,C show a comparison between the model's predictions and the choice data and RT distributions. The match is not as good as the one for the longer integration time constant. In particular, the model's mean RTs for the hardest trials are too long and the shape of the RT distributions approaches the typical exponential distribution of a probability summation model, a memory-free mechanism that keeps comparing incoming samples with a threshold until a threshold crossing occurs. However, overall, the results indicate that the behavioral data are consistent with a surprisingly wide range of integration time constants. To determine how other model parameters change with the integration time constant (τ) we fitted a number of models with different time constants to the mean RT data. The results are shown in Figure 2D. All model parameters but the strength of the divisive normalization (ks; blue) changed systematically with τ (significant correlations with p < 0.01). As the leakiness increases (smaller τ) the strength of the sensory input (g; red solid line) has to increase, as does the Fano factor, the ratio between the variance of a sensory input and its mean (kv; black solid line).
However, g changes faster than kv, leading to an improved signal-to-noise ratio (red dashed line) for smaller time constants. Being able to achieve the same performance requires better quality sensory signals when the integrators are not as efficient (leakier). At the same time, the residual time (tres; magenta) also increases, meaning that the mean decision time is getting shorter for leakier integrators.

Figure 2.

Very leaky feedforward inhibition model. (A) Fit of the model (lines) to the mean response time data (symbols). (B) Comparison between the model's choice predictions (lines) and the actual data (symbols). (C) Comparison between the model's predictions for some RT distributions (blue lines) and the behavioral data (gray histograms). (D) Dependency of remaining model parameters on the integration time constant. All parameters but the strength of the divisive normalization (ks; blue) were significantly correlated with τ.

Models based on feedback inhibition

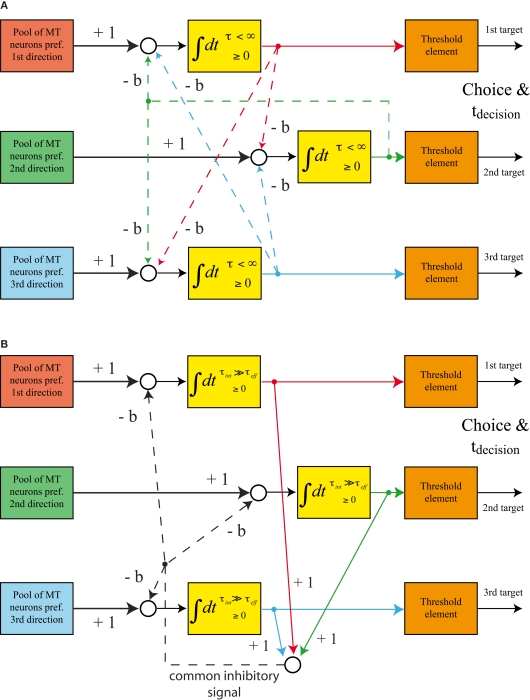

Rather than basing the competition between the integrators on feedforward inhibition, the majority of published models of perceptual decisions between multiple alternatives rely on feedback inhibition. One of the most influential models in this area is the “leaky, competing accumulator (LCA) model” (Usher and McClelland, 2001). The structure of this model is shown in Figure 3A. Several leaky integrators compete against each other by laterally inhibiting their competitors. The integrators have an intrinsic leakiness (given by the inverse of the integration time constant τ) and inhibit each other with strength b. It has been pointed out that in the case of decisions between two alternatives a balanced LCA model (strength of inhibition equals leakiness of integrators) generates a decision behavior that is very similar to the one generated by a drift-diffusion model, which, in turn, is equivalent to a feedforward inhibition model (Bogacz et al., 2006). Here, we will also focus on the balanced version of a multi-alternative LCA model. A particularly interesting alternative implementation of this model is shown in Figure 3B. The need for tuning the network parameters in such a way that the intrinsic leak of the integrators matches the strength of the lateral inhibition disappears when the effective leakiness of the integrators is provided by an explicit inhibitory feedback rather than by intrinsic leakage. Mathematically, leaky integration simply means that the differential equation for the change of the state of the integrator has a term that is proportional to the current state with a negative coefficient. Whether this term is provided by an intrinsic leak of the integrator or by an external feedback does not matter. 
Thus, it is possible to start out with integrators whose intrinsic leakiness (yielding an intrinsic integration time constant τint) is substantially lower than the desired effective leakiness and to set the effective leakiness (yielding an effective integration time constant τeff) by feedback inhibition from the output of an integrator to its own input. A balance between the effective leakiness of the integrators and the strength of lateral inhibition means that the same amount of inhibitory feedback has to be provided from the output of an integrator to its own input as well as the inputs of all other integrators. Thus, this kind of inhibition can be provided by a common inhibitory signal that can be derived from the sum of all current states of the integrators. The structure of such a model (Figure 3B) is therefore very similar to the structure of more biologically inspired models that make use of a common pool of inhibitory interneurons (Furman and Wang, 2008; Albantakis and Deco, 2009).
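
The equivalence between the two implementations in the balanced case can be checked numerically. The following Python sketch assumes discrete-time updates, a negligible intrinsic leak in the common-inhibition version, and hypothetical function names and numbers:

```python
import numpy as np

def lca_step(x, inputs, leak, b):
    """Figure 3A style update: intrinsic leak plus inhibition from the competitors."""
    lateral = b * (x.sum() - x)  # each integrator is inhibited by the other two
    return x - leak * x - lateral + inputs

def common_inhibition_step(x, inputs, b):
    """Figure 3B style update: one shared inhibitory signal from the summed state."""
    return x - b * x.sum() + inputs

x = np.array([0.2, 0.5, 0.1])
inputs = np.array([0.01, 0.03, 0.02])
b = 0.007

balanced = lca_step(x, inputs, leak=b, b=b)    # leak equals lateral inhibition
shared = common_inhibition_step(x, inputs, b)  # same result from a common signal
print(np.allclose(balanced, shared))
```

With `leak == b` the self-inhibition term `b * x` and the lateral term `b * (sum(x) - x)` add up to `b * sum(x)`, which is why a single feedback signal is sufficient.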

Figure 3.

Structure of feedback inhibition models. (A) “Leaky, competing accumulator (LCA) model”: the integrators are intrinsically leaky, lateral inhibition is provided by individual feedback projections from the output of each integrator to the inputs of the other two integrators. (B) Alternative implementation of a balanced LCA model. The integrators’ intrinsic leak (determining τint) is substantially smaller than the effective leak (determining τeff) caused by the circuitry. The balance between leakiness and lateral inhibition is achieved by generating one common inhibitory feedback signal.

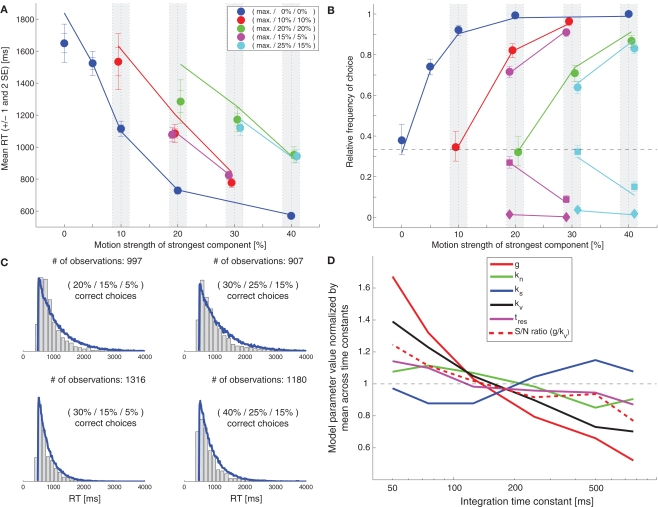

We next asked whether our behavioral dataset was consistent with such a balanced LCA model. Using the same formalism for describing the sensory responses as the one used in the feedforward inhibition model, we again fitted the feedback inhibition model to the mean RT data (Figure 4A). The resulting model parameters can be found in Table 1. Figure 4B shows a comparison between the model's predictions for the choice behavior (lines) and the actual choice data (symbols). The model's predictions for some RT distributions (blue lines) are compared with the actual data (gray histograms) in Figure 4C. While the overall match seems slightly worse than in the case of the feedforward inhibition model, the results indicate that our behavioral dataset is consistent with both feedforward and feedback inhibition models of multi-alternative perceptual decision making.

Figure 4.

Feedback inhibition model with scaling variance. (A) Fit of the model (lines) to the mean response time data (symbols). (B) Comparison between the model's choice predictions (lines) and the actual data (symbols). (C) Comparison between the model's predictions for some RT distributions (blue lines) and the behavioral data (gray histograms).

As mentioned in the section “Introduction,” one of the striking features of our behavioral dataset is that in the case of three motion components with identical coherence faster mean RTs were observed for higher coherence. As we have pointed out in Niwa and Ditterich (2008), in the context of the feedforward inhibition model this observation can only be explained through changes in the variance of the combined sensory signals (e1, e2, and e3 in Figure 1A) since the means are always 0 for three identical coherences. We therefore concluded that a significant scaling of the variance of the sensory signals with their mean has to be postulated. However, the situation is different in the case of the feedback inhibition model. The excitatory input to a particular integrator changes whenever the mean of the associated sensory signal changes. Thus, changes in the means only (without any changes of the variance) could, in principle, be driving the behavioral effects. To test whether such an alternative explanation would be consistent with our data we also fitted a version of the feedback inhibition model with fixed (rather than scaling) variance sensory signals. The results are shown in Figure 5A; the model parameters can be found in Table 1. Figures 5B,C show comparisons of the model's predictions for choice and RT distributions with the actual data. The results indicate that a feedback inhibition model could explain the observed independent changes of accuracy and mean RT on the basis of changes of the mean sensory signals only without having to postulate additional changes of the variance (although, as we have seen in Figure 4, a scaling of the variance does not hurt).
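
The role of the variance in the feedforward inhibition model can be made concrete with a short calculation. It assumes, for illustration only, that each combined signal has the form e_k = s_k − w(s_i + s_j), where s_1, s_2, and s_3 are the independent sensory signals and w is a hypothetical common inhibitory weight; neither symbol is taken from the model description. For three identical coherences all sensory signals share the same mean μ and, with scaling variance, the same variance k_v μ, so that

$$E[e_k] = (1 - 2w)\,\mu, \qquad \mathrm{Var}[e_k] = (1 + 2w^2)\,k_v\,\mu .$$

The mean vanishes for every μ only if w = 1/2, in which case Var[e_k] = 1.5 k_v μ. Under these assumptions, and provided that μ grows with the common coherence, a higher coherence leaves the mean input to each integrator at 0 but increases the noise that drives the integrators toward the threshold, which shortens the mean decision time.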

Figure 5.

Feedback inhibition model with fixed variance. (A) Fit of the model (lines) to the mean response time data (symbols). (B) Comparison between the model's choice predictions (lines) and the actual data (symbols). (C) Comparison between the model's predictions for some RT distributions (blue lines) and the behavioral data (gray histograms).

Models implementing or approximating MSPRT

For choices between two alternatives Gold and Shadlen (2001) were able to demonstrate that, under reasonable assumptions about how sensory neurons represent the state of the sensory world, integrating the difference between the activities of two pools of opposing sensory neurons up to a decision threshold provides a good approximation of the SPRT, which has been shown to be optimal in the sense of minimizing the average decision time for any desired accuracy level (Wald, 1945). This is because the difference between the activities of the two sensory pools can be shown to be (roughly) proportional to the logarithm of the likelihood ratio between the two competing hypotheses based on the momentary sensory evidence. Assuming statistical independence, summing these differences over time yields a value proportional to the logarithm of the likelihood ratio based on the complete sensory evidence provided so far. According to SPRT the decision process should be terminated when this likelihood ratio exceeds a decision threshold.
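
The proportionality can be illustrated with a worked example under a simplifying assumption that is made here for illustration and not taken from Gold and Shadlen (2001): the two pools produce independent Gaussian responses x_A and x_B with a common variance σ², with means μ₊ and μ₋ under hypothesis H₁ and with the two means swapped under H₂. Then

$$\log\frac{p(x_A, x_B \mid H_1)}{p(x_A, x_B \mid H_2)} = \frac{(x_A-\mu_-)^2 - (x_A-\mu_+)^2 + (x_B-\mu_+)^2 - (x_B-\mu_-)^2}{2\sigma^2} = \frac{\mu_+ - \mu_-}{\sigma^2}\,(x_A - x_B).$$

In this case the momentary log likelihood ratio is exactly proportional to the difference x_A − x_B, and for independent samples the accumulated log likelihood ratio is the same constant times the sum of the differences over time.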

While the optimal algorithm for decisions between more than two alternatives for any desired accuracy level is still unknown, an extension of SPRT, the MSPRT, has been shown to be asymptotically optimal for negligible error rates (Dragalin et al., 1999). As will be discussed later in this manuscript, the models that we have been looking at so far do not implement MSPRT. However, Bogacz and Gurney (2007) have proposed that a circuit involving the basal ganglia could help implement or at least approximate this algorithm. Dragalin et al. (1999) have proposed two versions of MSPRT: MSPRTa calculates the posterior probability of each hypothesis and stops the decision process when one of these exceeds a decision threshold; MSPRTb is based on multiple pairwise comparisons and stops the decision process when all likelihood ratios between a given hypothesis and all alternative hypotheses exceed a decision threshold. Bogacz and Gurney (2007) describe a potential implementation of MSPRTa. Cortex is assumed to integrate sensory evidence for each hypothesis independently and the basal ganglia are assumed to perform the calculations that are necessary for obtaining the posterior probabilities. The decision process is assumed to terminate when one of the posterior probabilities exceeds a critical threshold. The structure of such a model is shown in Figure 6A. Calculating the posterior probabilities involves obtaining the logarithm of a sum of exponentials. A truthful implementation of MSPRT would require the weight (g*) of the excitatory connections with the red, green, and blue arrowheads to change with the statistics of the sensory input. However, Bogacz and Gurney (2007) have demonstrated that the model is relatively robust with respect to how this weight is set. Here we only consider a model where these weights are set to 1. We used perfect integrators (since this is what a true implementation of MSPRT would require) and initialized them with a value of 0. 
The starting point of the integration can actually be chosen arbitrarily since it is easy to demonstrate that adding the same offset to the states of all integrators does not alter the result of calculating the posterior probabilities (see Bogacz and Gurney, 2007, Appendix D or Bogacz, 2009, Appendix E). The result of fitting this model to the mean RT data is shown in Figure 6B and the resulting model parameters can be found in Table 1. Note that the decision threshold is negative. This is because the logarithm of a probability is a negative number. Bogacz and Gurney (2007) have therefore proposed that the output of the basal ganglia should be the negative of this logarithm and that a threshold crossing would then occur when one of the outputs drops below a critical value. Figure 6C shows a comparison between this model's predictions for the choice behavior and the actual choice data. As can be seen, our behavioral dataset also seems consistent with an MSPRT model.
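The computation performed downstream of the integrators can be sketched as follows. This is a minimal illustration, not the fitted model: the integrator states and the thresholds are made-up numbers, and the function names are hypothetical. It shows the logarithm of the posterior probability obtained from a sum of exponentials, the negative threshold on that logarithm, and the invariance to a common offset.

```python
import math

def log_posteriors(integrated, gain=1.0):
    """ln P_i = g*S_i - ln(sum_j exp(g*S_j)) for integrated evidence S."""
    scaled = [gain * s for s in integrated]
    m = max(scaled)  # subtract the maximum for numerical stability
    log_sum = m + math.log(sum(math.exp(v - m) for v in scaled))
    return [v - log_sum for v in scaled]

def crossed(log_post, log_threshold):
    """Index of the first hypothesis whose log posterior reaches the
    (negative) threshold, or None if no hypothesis has reached it."""
    for i, lp in enumerate(log_post):
        if lp >= log_threshold:
            return i
    return None

S = [1.2, 0.3, -0.4]                                 # illustrative integrator states
lp = log_posteriors(S)                               # gain of 1, as in the text
lp_shifted = log_posteriors([s + 5.0 for s in S])    # same offset on all integrators
```

In this sketch `lp` and `lp_shifted` agree to within rounding error, which is the offset invariance mentioned above, and all entries of `lp` are negative because they are logarithms of probabilities.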

This feedforward implementation of MSPRT, however, makes a prediction that is incompatible with experimental observations that have been made in non-human primates performing random-dot motion discrimination tasks. Figure 6D shows the model's prediction for the state of the winning integrator immediately prior to the decision threshold crossing for different combinations of motion coherences. The dashed vertical line marks the time of the threshold crossing. It is obvious that, at this point in time, the state of the winning integrator spans a wide range for different coherence combinations. This is because the thresholding is not performed on the state of the integrator, but on the output of the circuit calculating the posterior probabilities. In contrast, recordings from parietal cortex of monkeys performing such tasks, which have revealed neurons that appear to reflect the state of the integrator, suggest that the activity of the winning integrator is very stereotyped around the time when the decision is made (Roitman and Shadlen, 2002; Churchland et al., 2008; Bollimunta and Ditterich, 2009). Aware of this issue, Bogacz (2009) has considered an alternative implementation: rather than having the basal ganglia circuit operate downstream from the cortical integrators, it could also be part of a cortico-cortical feedback loop. Thus, if the circuit could be arranged such that the integrators themselves carry the information about the posterior probabilities, the thresholding could be moved back to the cortical level.

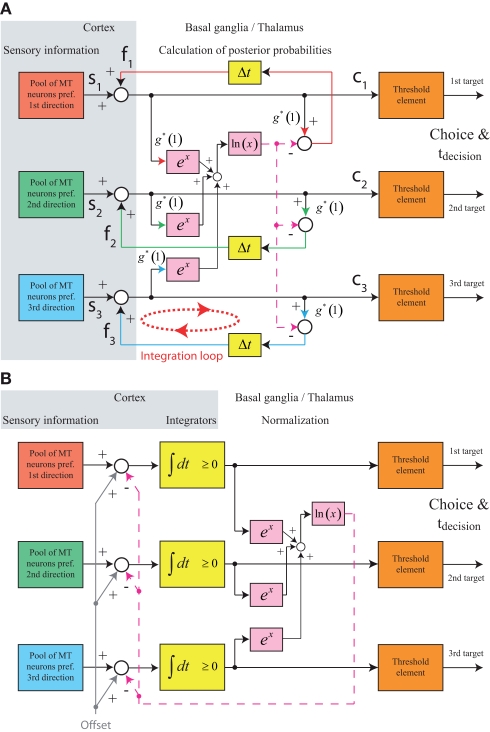

Figure 7A shows a feedback implementation of MSPRT that closely follows the suggestion in Bogacz (2009). What does this mechanism calculate compared to the mechanism shown in Figure 6A? The feedforward mechanism in Figure 6A takes an integrated sensory evidence signal Si, multiplies it by g*, and subtracts the logarithm of the sum of the exponentials of all integrated evidence signals, again multiplied by g*, to obtain the logarithm of the posterior probability Pi:

$$\ln P_i(t) = g^* S_i(t) - \ln \sum_{j=1}^{3} \exp\left[g^* S_j(t)\right], \qquad S_i(t) = \int_0^t s_i(\tau)\,d\tau$$

Figure 7.

Structure of feedback MSPRT models. (A) Feedback implementation of MSPRT following Bogacz (2009). The basal ganglia are part of a cortico-cortical feedback loop that provides an excitatory feedback signal representing the logarithm of the posterior probability. Note that in this implementation the integration of the sensory evidence is mediated by the cortico-cortical feedback loop involving the basal ganglia. The yellow boxes with “Δt” in them indicate some time delay in the feedback loop. (B) Alternative feedback implementation of MSPRT. The feedback signals in (A) have been separated into an excitatory component responsible for integrating the sensory evidence and an inhibitory component responsible for normalizing the posterior probabilities. This implementation no longer requires the basal ganglia to participate in the temporal accumulation process. Furthermore, an excitatory offset has been added to the inputs of all integrators to move the operating point of the circuit into the positive range (recall that the logarithm of a probability is a negative value). As long as the same offset is added to the input of all integrators, this does not affect the result of calculating the posterior probabilities. Thus, each integrator now carries the sum of the logarithm of the posterior probability and the offset. The integrators now also have the property that they cannot represent negative values (like the integrators in the earlier models).

Thinking of this as a discrete time process, which will make it easier to compare it with the feedback process, the integrals over the sensory signals up to time t would be replaced by sums of sensory samples up to some index n:

$$\ln P_{i,n} = g^* \sum_{k=1}^{n} s_{i,k} - \ln \sum_{j=1}^{3} \exp\left(g^* \sum_{k=1}^{n} s_{j,k}\right)$$

Let us now have a look at the mechanism in Figure 7A. As demonstrated in the Appendix, for a g* of 1 the feedback signal fi,n is always the logarithm of the posterior probability from the previous iteration, ln Pi,n−1. The summation nodes update these with the current sensory evidence and the feedback loops again normalize the results. Note that in this implementation the accumulation of the sensory evidence (temporal integration) is performed by the feedback loops involving the basal ganglia.
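The equivalence between the two schemes for a g* of 1 can be checked numerically. The sketch below is an illustration under assumed inputs (normal samples with hypothetical means of 0.1, 0, and 0 and unit variance) with hypothetical function names: one function sums each stream and normalizes once, the other adds each new sample to the fed-back log posteriors and renormalizes at every step.

```python
import math
import random

def log_sum_exp(values):
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))

def feedforward_log_posteriors(samples):
    """Sum each stream over all samples, then normalize once."""
    totals = [sum(stream) for stream in zip(*samples)]
    norm = log_sum_exp(totals)
    return [t - norm for t in totals]

def feedback_log_posteriors(samples):
    """Add each sample to the fed-back log posteriors and renormalize."""
    n_alt = len(samples[0])
    log_post = [math.log(1.0 / n_alt)] * n_alt  # flat prior as initial feedback
    for sample in samples:
        updated = [lp + s for lp, s in zip(log_post, sample)]
        norm = log_sum_exp(updated)
        log_post = [u - norm for u in updated]
    return log_post

rng = random.Random(1)
samples = [[rng.gauss(mu, 1.0) for mu in (0.1, 0.0, 0.0)] for _ in range(200)]
ff = feedforward_log_posteriors(samples)
fb = feedback_log_posteriors(samples)
```

Both lists agree to within rounding error, because the normalization term subtracted at each feedback step is common to all alternatives and is removed again by the next normalization.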

However, the integration does not necessarily have to be performed by the cortico-cortical loop involving the basal ganglia. A closer inspection of the feedback signals reveals that they are composed of two additive components: an excitatory component with a gain of 1 for feeding back each individual signal and a shared inhibitory component that carries the logarithm of the sum of exponentials. The individual excitatory component simply ensures perfect integration and can be separated from the shared inhibitory component, removing the function of temporal integration from the basal ganglia loop and using it only for the normalization process. Such an implementation is shown in Figure 7B. Another modification has been applied to this implementation. Recall that the logarithm of a probability is a negative number. However, we want the integrators to operate in a positive range. To achieve this, we are adding an identical excitatory offset to the inputs of all integrators. Remember that adding an identical offset to all integrators does not change the outcome of the posterior probability calculation (see above). This circuit has an interesting property: Assume that the actual decision thresholds are fixed. (We set them to 1 as in the earlier models.) To change the effective decision threshold the excursion from the starting point of integration to the actual threshold has to be adjusted. In this circuit this is achieved by changing the additive offset, since the combination of the additive offset with the feedback circuit takes care of automatically setting the starting point of integration. Why is this? Let us assume that the integrators start out with any arbitrary activity A. What exactly A is does not matter as long as the activation of all integrators is identical. This leads to an inhibitory feedback signal of size ln[3 exp(A)] = A + ln 3. 
In the absence of sensory input the integrators therefore receive an excitatory input of size “Offset” and an inhibitory input of size A + ln 3. Thus, the current integrator activity is subtracted and the integration starting point is set at Offset − ln 3.
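The automatic setting of the starting point can be reproduced with a one-step sketch of such a circuit. The update rule below is a simplified discrete-time reading of the description above (perfect self-excitation, a shared inhibitory log-sum-of-exponentials feedback, and a lower bound at zero), and the offset of 1.5 is a hypothetical value chosen for illustration, not the fitted parameter from Table 1.

```python
import math

def feedback_step(states, inputs, offset):
    """One discrete update: each integrator keeps its state, adds its sensory
    input and the common offset, and is inhibited by ln(sum_j exp(S_j))."""
    inhibition = math.log(sum(math.exp(s) for s in states))  # shared feedback
    # Integrators cannot represent negative values.
    return [max(0.0, s + x + offset - inhibition) for s, x in zip(states, inputs)]

OFFSET = 1.5  # hypothetical offset, for illustration only

# Start from several arbitrary common activities A, with no sensory input.
starts = {a: feedback_step([a] * 3, [0.0] * 3, OFFSET) for a in (0.0, 0.7, 2.0)}
expected_start = OFFSET - math.log(3)
```

Whatever common activity A the integrators start from, a single update without sensory input moves all of them to Offset − ln 3 (about 0.40 for the offset assumed here), and this state is then maintained until sensory input arrives.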

Figure 8A shows a fit of the model in Figure 7B to the mean RT data, again using a more realistic integrator that cannot assume negative values. The parameter values can be found in Table 1. Figures 8B,C compare the model's predictions for choice behavior and RT distributions with the actual data. The automatically set starting point of integration turns out to be roughly 0.38 (see Figure 11), which is not unrealistic and similar to the imposed starting point of 0.33, which has been applied to all other models on the basis of neurophysiological observations in animal experiments.

Figure 8.

Results of the feedback MSPRT model (shown in Figure 7B). (A) Fit of the model (lines) to the mean response time data (symbols). (B) Comparison between the model's choice predictions (lines) and the actual data (symbols). (C) Comparison between the model's predictions for some RT distributions (blue lines) and the behavioral data (gray histograms).

Figure 11.

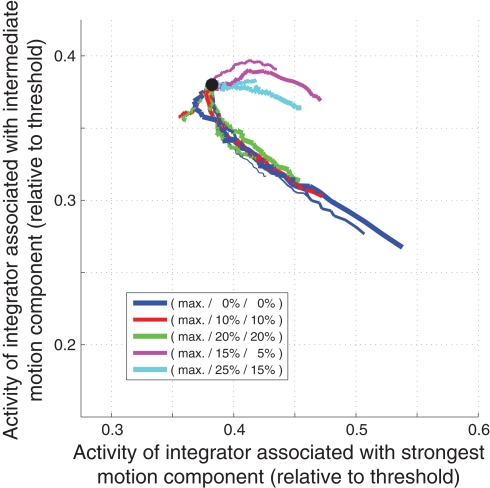

Early state space of the feedback MSPRT model (shown in Figures 7B and 8). Note that the integration starting point (approx. 0.38) is set by the feedback mechanism and not imposed as in the other models.

Predictions for neurophysiology

What we have seen so far is that the pattern of behavioral data that we have observed in our multi-alternative perceptual decision-making task seems to be consistent with a number of integration-to-threshold model structures that involve a broad range of integration time constants, feedforward as well as feedback inhibition mechanisms, as well as linear and non-linear mechanisms for combining signals across alternatives. Since it seems to be virtually impossible to discriminate between these different options based on the behavioral data alone, the question arises whether other types of data would help with this discrimination. What I am going to demonstrate in the following is that, although the models make almost identical predictions for behavior, the predictions for how the internal state of the system should evolve over time are quite different. Thus, invasive recordings in animal models that aim at tapping into the state of the integrator (like Roitman and Shadlen, 2002; Churchland et al., 2008) should be helpful in discriminating the alternatives.

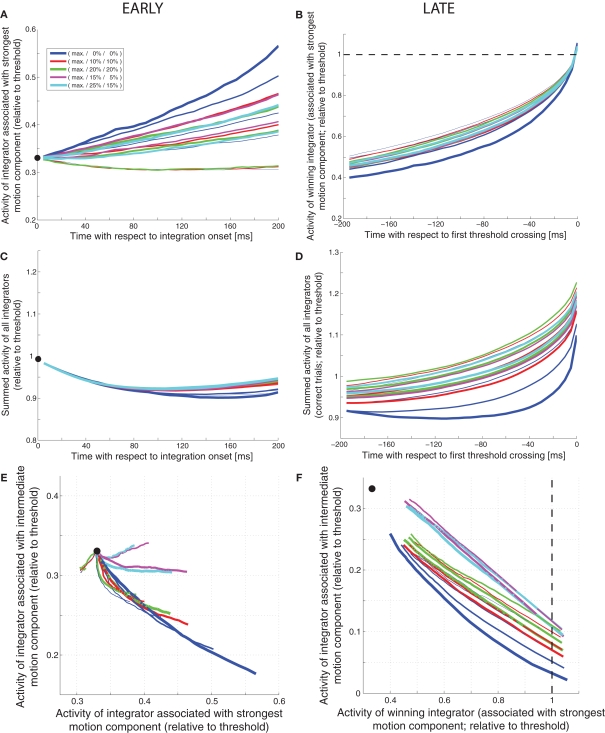

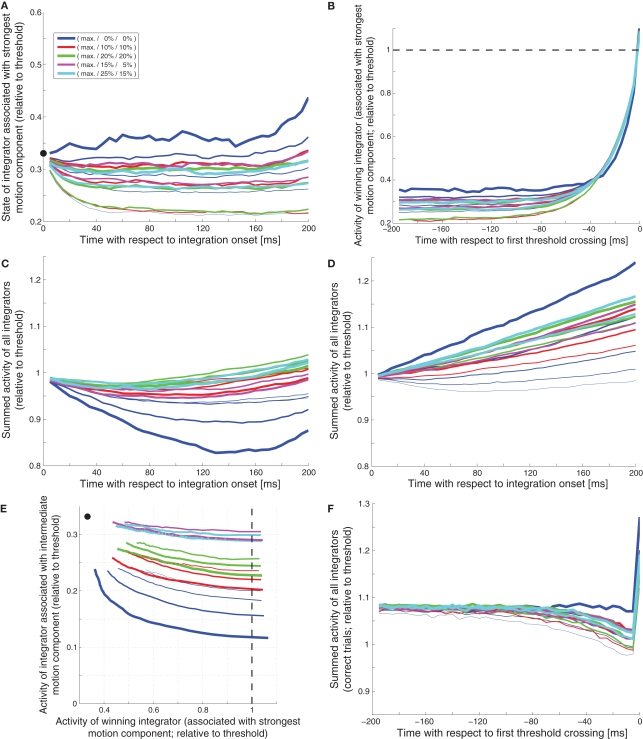

We will be looking at a number of measures for assessing the internal dynamics of a decision mechanism: how the activity of the integrator associated with the strongest motion component evolves over time, how the summed activity of all integrators evolves over time, and how the states of the two integrators associated with the two larger motion coherences move through state space over time. Figure 9 shows all of these measures for the feedforward inhibition model presented in Figure 1. The left column indicates the expected trajectories during the first 200 ms after integration onset (ignoring the outcome of the decision); the right column presents the expected trajectories during the last 200 ms before the decision threshold crossing (for correct trials only). Different trajectories are associated with different combinations of coherences, with the width of the line representing the coherence of the strongest motion component and the color representing the coherences of the other two components.

Figure 9.

Internal dynamics of feedforward inhibition mechanism (shown in Figure 1). (A) Expected activity (relative to threshold) of the integrator associated with the strongest motion component during the first 200 ms after integration onset. The thickness of the line represents the coherence of the strongest motion component (thicker = higher coherence), the color codes for the coherences of the other two components (see legend). The black dot marks the starting point of integration. (B) Expected activity of the integrator associated with the strongest motion component during the last 200 ms before threshold crossing on correct trials. The dashed line indicates the decision threshold. (C) Expected sum of the activities of all integrators during the first 200 ms. (D) Expected sum of the activities of all integrators during the last 200 ms on correct trials. (E) Expected movement through state space (states of the integrators associated with the two stronger motion components) during the first 200 ms. (F) Expected movement through state space during the last 200 ms.

How can these measures be used to differentiate between different decision mechanisms? How, for example, can we dissociate a slightly leaky feedforward inhibition mechanism (Figure 1) from a very leaky feedforward inhibition mechanism (Figure 2)? As shown in Figure 9A, the slightly leaky mechanism predicts early trajectories that are roughly linear ramps with different slopes for different combinations of coherences. The very leaky mechanism on the other hand, as shown in Figure 10A, predicts that the integrator assumes different steady states for different combinations of coherences. Likewise, the slightly leaky mechanism predicts continuously rising late trajectories (Figure 9B), whereas the very leaky mechanism again predicts different steady states during the second to last 100 ms before the threshold crossing (Figure 10B). Is there a way to tell a feedback inhibition mechanism with scaling variance (Figure 4) from one with fixed variance (Figure 5)? Figure 10C shows the early expected summed activity of all integrators for the scaling variance model. It can be seen that for trials with only a single coherent motion component (blue lines) the summed activity is expected to be lowest for the highest coherence (thicker lines below thinner lines). On the contrary, Figure 10D shows that in the fixed variance case the summed activity is expected to be highest for the highest coherence (thicker blue lines above thinner blue lines). Can we also tell a feedforward inhibition model (Figure 1) from a feedback inhibition model (Figure 2)? Figure 9F shows how a feedforward inhibition mechanism (regardless of the leakiness) approaches the decision threshold: the activity of the integrator associated with the intermediate motion component keeps falling as the activity of the winning integrator keeps rising. 
In contrast, Figure 10E shows the situation for the feedback inhibition mechanism (regardless of whether the variance scales or not): the integrator associated with the intermediate motion component tends to assume different steady states as the winning integrator approaches threshold. Finally, how can we tell a feedback MSPRT mechanism (Figure 8) from any of the mechanisms based on linear combinations of signals (regardless of feedforward or feedback inhibition)? With the exception of high coherence single component trials, most of the presented decision mechanisms predict a continuous increase in the summed activity of all integrators during the last 200 ms before threshold crossing. Only two mechanisms are different: the very leaky feedforward inhibition mechanism (Figure 2), which predicts a roughly flat sum up to approx. 50 ms before threshold crossing when the sum starts rising continuously, and the feedback MSPRT mechanism (Figure 8), which also predicts a roughly flat sum up to approx. 100 ms before threshold crossing when the sum tends to start dropping (see Figure 10F). Thus, although all of the discussed decision mechanisms predicted virtually indistinguishable behavior, the predictions for the internal dynamics are quite different. These differences are summarized in Table 2.
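The contrast between ramping and steady-state trajectories follows from the leak alone and can be illustrated with the expected state of a single leaky integrator driven by a constant mean input. The sketch below ignores noise, inhibition, the lower bound, and the conditioning on threshold crossing; the time constants (5 s and 10 ms) and the unit input are hypothetical values, not the fitted parameters.

```python
import math

def expected_leaky_trajectory(mean_input, tau, times):
    """Expected state of dx/dt = -x/tau + mean_input with x(0) = 0,
    i.e. activity measured relative to the starting point of integration."""
    return [mean_input * tau * (1.0 - math.exp(-t / tau)) for t in times]

times = [0.05, 0.10, 0.15, 0.20]  # seconds after integration onset
slightly_leaky = expected_leaky_trajectory(mean_input=1.0, tau=5.0, times=times)
very_leaky = expected_leaky_trajectory(mean_input=1.0, tau=0.01, times=times)
```

With the long time constant the trajectory is close to a linear ramp whose slope is set by the mean input, whereas with the short time constant it has settled at the steady state `mean_input * tau` well within the first 50 ms, so that different inputs produce different plateaus rather than different slopes.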

Figure 10.

Deviating internal dynamics of other decision mechanisms. (A) Very leaky feedforward inhibition model (Figure 2): Early activity of leading integrator. (B) Very leaky feedforward inhibition model (Figure 2): Late activity of winning integrator. (C) LCA model with scaling variance (Figure 4): Early summed activity of all integrators. (D) LCA model with fixed variance (Figure 5): Early summed activity of all integrators. (E) LCA model with scaling variance (Figure 4): Late state space. (F) Feedback MSPRT model (Figure 8): Late summed activity of all integrators.

Table 2.

Comparison of internal model dynamics.

| Model | Early trajectories of leading integrator | Late trajectories of winning integrator | Early sum of activities | Late sum of activities | Early state space | Late state space |
|---|---|---|---|---|---|---|
| Slightly leaky feedforward inhibition model (Figure 1) | Approximately linear ramps with different slopes for different coherence combinations (see Figure 9A) | Tend to rise throughout the 200 ms interval (see Figure 9B) | Tends to rise between 100 and 200 ms after integration onset (see Figure 9C) | Tends to rise between 200 and 100 ms before threshold crossing (see Figure 9D) | See Figure 9E | Activity of losing integrator keeps falling while activity of winning integrator keeps rising; activities of losing integrator at threshold crossing relatively similar across coherence combinations (within approx. 10% of threshold activity; see Figure 9F) |
| Very leaky feedforward inhibition model (Figure 2) | Assumes different steady states for different coherence combinations (see Figure 10A) | Flat between 200 and 100 ms before threshold crossing (see Figure 10B) | Flat between 100 and 200 ms after integration onset | Flat between 200 and 100 ms before threshold crossing | Dominant immediate drop of the activity of the integrator associated with the intermediate motion component | Similar to Figure 9F |
| Feedback inhibition (LCA) model with scaling variance (Figure 4) | Approximately linear ramps… (similar to Figure 9A) | Tend to rise throughout the 200 ms interval (similar to Figure 9B) | Tends to rise between 100 and 200 ms after integration onset; for single component trials the summed activity is lowest for high coherence trials (see Figure 10C) | Tends to rise between 200 and 100 ms before threshold crossing (similar to Figure 9D) | Similar to Figure 9E | Losing integrator tends to saturate at different levels (spanning approx. 20% of threshold activity) for different coherence combinations as winning integrator approaches threshold (see Figure 10E) |
| Feedback inhibition (LCA) model with fixed variance (Figure 5) | Approximately linear ramps… (similar to Figure 9A) | Tend to rise throughout the 200 ms interval (similar to Figure 9B) | Tends to rise between 100 and 200 ms after integration onset; for single component trials the summed activity is lowest for low coherence trials (see Figure 10D) | Tends to rise between 200 and 100 ms before threshold crossing (similar to Figure 9D) | Similar to Figure 9E | Similar to Figure 10E |
| Feedback MSPRT model (Figure 8) | Approximately linear ramps… (similar to Figure 9A) | Tend to rise throughout the 200 ms interval (similar to Figure 9B) | Flat between 100 and 200 ms after integration onset | Flat between 200 and 100 ms before threshold crossing; tends to drop immediately before threshold crossing (see Figure 10F) | See Figure 11 | Similar to Figure 9F |

Figure 11 shows the early state space trajectories for the feedback MSPRT model (Figure 8). Qualitatively, these trajectories are similar to the ones predicted by most of the other decision mechanisms. The reason for showing these trajectories is to demonstrate the result of the automatic setting of the integration starting point by the feedback mechanism, as discussed above.

Another difference between the considered decision models pertains to the correlation between the input signals to the integrators. This correlation structure could potentially show up in spike time correlations between simultaneously recorded neurons representing the states of two different integrators (de la Rocha et al., 2007; Ostojic et al., 2009). There are too many unknowns (see section Discussion) to make a quantitative prediction for the expected correlations, but we will discuss the qualitative pattern that should be seen if correlations can be observed at all. A hallmark of the feedforward inhibition mechanism shown in Figure 1A is that a sensory signal that provides excitatory input to one of the integrators also provides inhibitory input to the other integrators. Thus, pairs of integrators share an anti-correlated input component that could show up as a reduced likelihood of (nearly) simultaneous spiking of neurons belonging to different integrator populations. In contrast, in the feedback inhibition mechanisms shown in Figure 3 different integrators only share inhibitory input. Thus, these positively correlated input components could show up as an increased likelihood of simultaneous spiking of neurons belonging to different integrator populations. In the feedback MSPRT mechanism shown in Figure 7A the feedback signals to the different integrators are largely independent and we therefore would not expect any significant correlation between the spike timing of neurons belonging to different integrator populations. In contrast, the integrators in the alternative implementation shown in Figure 7B share both an excitatory offset input as well as an inhibitory feedback input. The input signals to different integrators are therefore expected to be positively correlated, which could again show up as an increased likelihood of simultaneous spiking of neurons belonging to different integrator populations.
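The sign of the expected input correlations can be illustrated with a small simulation. It assumes independent, zero-mean, unit-variance sensory fluctuations, feedforward inputs of the form s1 − (s2 + s3)/2, and, as a strong simplification of the feedback case, a single shared inhibitory fluctuation of unit variance subtracted from every integrator's input. All of these choices are assumptions made for illustration only; they say nothing about the magnitude of any spike time correlation.

```python
import random

def pearson(a, b):
    """Sample Pearson correlation coefficient of two equally long lists."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / (va * vb) ** 0.5

rng = random.Random(2)
n = 20000
s = [[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(n)]
shared = [rng.gauss(0.0, 1.0) for _ in range(n)]  # hypothetical shared inhibition

# Feedforward inhibition: each signal excites one integrator, inhibits the others.
ff1 = [a - 0.5 * (b + c) for a, b, c in s]
ff2 = [b - 0.5 * (a + c) for a, b, c in s]

# Feedback inhibition (simplified): private excitation, shared inhibitory input.
fb1 = [a - h for (a, _, _), h in zip(s, shared)]
fb2 = [b - h for (_, b, _), h in zip(s, shared)]

r_ff = pearson(ff1, ff2)
r_fb = pearson(fb1, fb2)
```

Under these assumptions the feedforward inputs are negatively correlated (the expected coefficient is −0.5) and the inputs with shared inhibition are positively correlated (expected coefficient 0.5), matching the qualitative pattern described above.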

Relationship with decision theory

We have seen that a variety of integration-to-threshold models are apparently able to explain behavioral data from multi-alternative perceptual decision making, but what is it that these mechanisms are calculating and what is the relationship between the underlying algorithms and decision theory? Making a decision has two essential aspects: deciding when one has collected enough evidence for being able to make an informed choice, which we will refer to as the “stopping rule,” and choosing one out of a limited set of alternative hypotheses, which we will refer to as the “decision rule.” For example, when making a decision between two possible alternatives based on sequential sampling of sensory evidence, it has been demonstrated that the SPRT is the optimal algorithm in the sense of minimizing the mean sample size for any given desired accuracy level (Wald, 1945). In this case the stopping rule is to terminate the decision process when the likelihood ratio for the two alternatives exceeds a particular critical threshold that depends on the desired accuracy. And the decision rule is to pick the alternative with the larger likelihood.

To address the relationship between the discussed multi-alternative decision mechanisms and decision theory, I will start by developing an argument that is analogous to what Gold and Shadlen (2001) have done for the case of two alternatives: we will see that under certain circumstances the integration of the difference between the activities of two pools of sensory neurons yields a result that is proportional to the log-likelihood ratio between two hypotheses. To keep the argument as simple as possible, let us consider a slightly less complex problem than what the discussed experimental task requires: assume that the brain is listening to the outputs of three pools of sensory neurons, which are emitting random samples that can be described as originating from a stochastic process. The mean of one of these processes μ+ is slightly larger than the means of the other two, which are identical (μ−; this is the simplification). The brain's job is to find out which of the three streams is the one with the larger mean. Thus, there are three possible hypotheses H1, H2, and H3 with Hi meaning that stream i is the one with the larger mean:

$$\begin{aligned}
H_1 &: (\mu_1, \mu_2, \mu_3) = (\mu_+, \mu_-, \mu_-) \\
H_2 &: (\mu_1, \mu_2, \mu_3) = (\mu_-, \mu_+, \mu_-) \\
H_3 &: (\mu_1, \mu_2, \mu_3) = (\mu_-, \mu_-, \mu_+)
\end{aligned}$$

where μi denotes the mean of stream i.

Each time step k we observe a sample xk from the first stream, a sample yk from the second stream, and a sample zk from the third stream. In the following we will consider two special cases: (1) all stochastic processes are Poisson and (2) all stochastic processes are normal with equal variance.

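Let us first look at the Poisson case. The conditional probabilities of observing a sample xk given the different hypotheses are

$$P(x_k \mid H_1) = \frac{\mu_+^{x_k} e^{-\mu_+}}{x_k!}, \qquad P(x_k \mid H_2) = P(x_k \mid H_3) = \frac{\mu_-^{x_k} e^{-\mu_-}}{x_k!}$$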

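The conditional probabilities for the samples from the other two streams can be written analogously. We can thus establish a number of log-likelihood ratios:

$$\begin{aligned}
\ln\frac{P(x_k \mid H_1)}{P(x_k \mid H_2)} &= \ln\frac{P(x_k \mid H_1)}{P(x_k \mid H_3)} = x_k \ln\frac{\mu_+}{\mu_-} - (\mu_+ - \mu_-) \\
\ln\frac{P(y_k \mid H_1)}{P(y_k \mid H_2)} &= -y_k \ln\frac{\mu_+}{\mu_-} + (\mu_+ - \mu_-), \qquad \ln\frac{P(y_k \mid H_1)}{P(y_k \mid H_3)} = 0 \\
\ln\frac{P(z_k \mid H_1)}{P(z_k \mid H_2)} &= 0, \qquad \ln\frac{P(z_k \mid H_1)}{P(z_k \mid H_3)} = -z_k \ln\frac{\mu_+}{\mu_-} + (\mu_+ - \mu_-)
\end{aligned}$$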

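Assuming statistical independence between the samples obtained from the different streams, the total log-likelihood ratios for a set of observations (xk, yk, zk) can be written as

$$\begin{aligned}
\ln\frac{P(x_k, y_k, z_k \mid H_1)}{P(x_k, y_k, z_k \mid H_2)} &= (x_k - y_k) \ln\frac{\mu_+}{\mu_-} \\
\ln\frac{P(x_k, y_k, z_k \mid H_1)}{P(x_k, y_k, z_k \mid H_3)} &= (x_k - z_k) \ln\frac{\mu_+}{\mu_-} \\
\ln\frac{P(x_k, y_k, z_k \mid H_2)}{P(x_k, y_k, z_k \mid H_3)} &= (y_k - z_k) \ln\frac{\mu_+}{\mu_-}
\end{aligned}$$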

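Furthermore, assuming statistical independence between successive observations, the log-likelihood ratios based on the sensory observations up to time index n can be written as

$$\begin{aligned}
\Lambda_{12}(n) &= \ln\frac{\mu_+}{\mu_-} \sum_{k=1}^{n} (x_k - y_k) \\
\Lambda_{13}(n) &= \ln\frac{\mu_+}{\mu_-} \sum_{k=1}^{n} (x_k - z_k) \\
\Lambda_{23}(n) &= \ln\frac{\mu_+}{\mu_-} \sum_{k=1}^{n} (y_k - z_k)
\end{aligned}$$

where Λij(n) denotes the log-likelihood ratio between Hi and Hj based on all observations up to index n.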

This means that the log-likelihood ratio between two hypotheses is proportional to the sum of the differences between the samples obtained from two sensory streams or, in the continuous case, to the integral over the difference between two sensory streams. Equivalently, it is also possible to sum/integrate each stream independently and then calculate the difference afterward. The proportionality factor, which we will refer to as g*, in the case of Poisson processes is $\ln(\mu_+/\mu_-)$. Thus, we see that the same principal result that was developed by Gold and Shadlen (2001) for the 2AFC case, namely that the log-likelihood ratio between two hypotheses is proportional to the integral of the difference between the activities of two pools of sensory neurons, also holds for multiple alternatives.
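The proportionality can be verified numerically for a single set of observations. The sketch below evaluates the Poisson log-likelihoods directly and compares their difference with (x − y) ln(μ+/μ−); the sample values and the means (12 and 10) are arbitrary illustrative numbers and the function names are hypothetical.

```python
import math

def poisson_log_pmf(k, mu):
    """Logarithm of the Poisson probability of observing count k given mean mu."""
    return k * math.log(mu) - mu - math.lgamma(k + 1)

def llr_h1_vs_h2(x, y, z, mu_plus, mu_minus):
    """ln[P(x, y, z | H1) / P(x, y, z | H2)] for independent Poisson streams."""
    log_p_h1 = (poisson_log_pmf(x, mu_plus) + poisson_log_pmf(y, mu_minus)
                + poisson_log_pmf(z, mu_minus))
    log_p_h2 = (poisson_log_pmf(x, mu_minus) + poisson_log_pmf(y, mu_plus)
                + poisson_log_pmf(z, mu_minus))
    return log_p_h1 - log_p_h2

x, y, z = 7, 3, 4                  # one illustrative set of counts
mu_plus, mu_minus = 12.0, 10.0     # illustrative means
direct = llr_h1_vs_h2(x, y, z, mu_plus, mu_minus)
via_difference = (x - y) * math.log(mu_plus / mu_minus)
```

The two values coincide up to rounding error: the terms involving the third stream, the factorials, and the means themselves cancel, leaving only the sample difference scaled by ln(μ+/μ−).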

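Let us now consider the case of normal processes with equal variance. Under these circumstances the conditional probabilities for observing an individual sample can be written as

$$P(x_k \mid H_1) = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left[-\frac{(x_k - \mu_+)^2}{2\sigma^2}\right], \qquad P(x_k \mid H_2) = P(x_k \mid H_3) = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left[-\frac{(x_k - \mu_-)^2}{2\sigma^2}\right]$$

with σ² denoting the common variance.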

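Following the same steps as in the case of the Poisson processes above, one arrives at the following final expressions:

$$\begin{aligned}
\Lambda_{12}(n) &= \frac{\mu_+ - \mu_-}{\sigma^2} \sum_{k=1}^{n} (x_k - y_k) \\
\Lambda_{13}(n) &= \frac{\mu_+ - \mu_-}{\sigma^2} \sum_{k=1}^{n} (x_k - z_k) \\
\Lambda_{23}(n) &= \frac{\mu_+ - \mu_-}{\sigma^2} \sum_{k=1}^{n} (y_k - z_k)
\end{aligned}$$

where Λij(n) denotes the log-likelihood ratio between Hi and Hj based on all observations up to index n and σ² is the common variance.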

Thus, the same principal result holds, but the proportionality factor g* is now $(\mu_+ - \mu_-)/\sigma^2$, where σ² is the common variance of the normal processes.
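The normal case can be checked in the same way. The sketch below compares the directly evaluated log-likelihood ratio between H1 and H3 with (x − z)(μ+ − μ−)/σ², where σ² is the common variance; the sample values and parameters are arbitrary illustrative numbers and the function names are hypothetical.

```python
import math

def normal_log_pdf(x, mu, sigma):
    """Logarithm of the normal density with mean mu and standard deviation sigma."""
    return -0.5 * math.log(2.0 * math.pi * sigma**2) - (x - mu) ** 2 / (2.0 * sigma**2)

def llr_h1_vs_h3(x, y, z, mu_plus, mu_minus, sigma):
    """ln[p(x, y, z | H1) / p(x, y, z | H3)] for independent normal streams."""
    log_p_h1 = (normal_log_pdf(x, mu_plus, sigma) + normal_log_pdf(y, mu_minus, sigma)
                + normal_log_pdf(z, mu_minus, sigma))
    log_p_h3 = (normal_log_pdf(x, mu_minus, sigma) + normal_log_pdf(y, mu_minus, sigma)
                + normal_log_pdf(z, mu_plus, sigma))
    return log_p_h1 - log_p_h3

x, y, z = 0.8, -0.3, 0.1                  # one illustrative set of samples
mu_plus, mu_minus, sigma = 0.1, 0.0, 1.0  # illustrative parameters
direct = llr_h1_vs_h3(x, y, z, mu_plus, mu_minus, sigma)
via_difference = (x - z) * (mu_plus - mu_minus) / sigma**2
```

As in the Poisson case, the sample from the stream that has the same mean under both hypotheses (here y) drops out, and only the difference between the two relevant streams remains.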

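Given these results, what is it that the feedforward inhibition model (Figure 1A) computes? It calculates the integrals over

$$e_1 = s_1 - \frac{s_2 + s_3}{2}, \qquad e_2 = s_2 - \frac{s_1 + s_3}{2}, \qquad e_3 = s_3 - \frac{s_1 + s_2}{2}$$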

Assuming that the outputs of the sensory pools can be approximated by either Poisson processes or by normal processes with roughly equal variance and ignoring imperfections of the integrators, the integrators then carry signals that are roughly proportional to the average log-likelihood ratio between one hypothesis and the other two hypotheses. The integration process stops once one of these values exceeds a threshold and the corresponding hypothesis is picked. This is what McMillen and Holmes (2006) have referred to as a max-vs-average test. Note that this is different from what MSPRT does. Recall that there are two versions of MSPRT: MSPRTa, which stops when the posterior probability of one hypothesis exceeds a threshold and picks the corresponding hypothesis, and MSPRTb, which stops when all of the likelihood ratios between a given hypothesis and all alternatives exceed a threshold and picks the corresponding hypothesis. This is what McMillen and Holmes have referred to as a max-vs-next test. What does the calculation of the posterior probability look like? Assuming flat priors, each posterior can be expressed as the corresponding likelihood multiplied by some factor. Since the posterior probabilities have to sum to 1 (one of the hypotheses has to be true and only one of them can be true at a time), this factor has to be 1 divided by the sum of the likelihoods. Thus the posteriors can be written as

$$P(H_i \mid \text{obs}) = \frac{P(\text{obs} \mid H_i)}{\sum_{j=1}^{3} P(\text{obs} \mid H_j)}$$

Here, “obs” stands for all sensory observations. Note that implementing this algorithm would require either $N(N-1)/2$ integrators for N alternatives (leading to an explosion in the number of required integrators with increasing number of alternatives) if the system wanted to keep track of all possible differences or N integrators for N alternatives if each sensory signal were integrated independently, plus calculating exponentials.
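The three test statistics mentioned above can be compared side by side for a given set of independently integrated signals. The sketch below is an illustration only: the integrated values are made-up numbers, g is set to 1, and the function names are hypothetical labels for the max-vs-average statistic, the max-vs-next statistic (smallest log-likelihood ratio against any alternative), and the log posterior under flat priors.

```python
import math

def max_vs_average(S, g=1.0):
    """Average log-likelihood ratio of each hypothesis against all others."""
    n = len(S)
    return [g * (S[i] - (sum(S) - S[i]) / (n - 1)) for i in range(n)]

def max_vs_next(S, g=1.0):
    """Smallest log-likelihood ratio of each hypothesis against any alternative."""
    n = len(S)
    return [g * min(S[i] - S[j] for j in range(n) if j != i) for i in range(n)]

def log_posterior(S, g=1.0):
    """Log posterior probability of each hypothesis, assuming flat priors."""
    m = max(S)
    norm = g * m + math.log(sum(math.exp(g * (s - m)) for s in S))
    return [g * s - norm for s in S]

S = [2.0, 1.5, -1.0]        # illustrative integrated signals
mva = max_vs_average(S)     # [1.75, 1.0, -2.75]
mvn = max_vs_next(S)        # [0.5, -0.5, -3.0]
lpost = log_posterior(S)
```

In this example the leading hypothesis has a large average advantage (1.75) but only a small advantage over its closest competitor (0.5), so a max-vs-average test and a max-vs-next test with the same threshold would not necessarily stop at the same time.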

For a thorough comparison between the LCA model and MSPRT please see McMillen and Holmes (2006). Briefly, the authors demonstrated that, similar to our feedforward inhibition model, the LCA model also approximates a max-vs-average test.

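Why is it that the mechanism shown in Figure 6A implements/approximates MSPRT? The calculation looks quite different from the formula for the posterior probabilities shown above. We can rewrite the posterior probability of the first hypothesis as

$$P(H_1 \mid \text{obs}) = \frac{1}{1 + \frac{P(\text{obs} \mid H_2)}{P(\text{obs} \mid H_1)} + \frac{P(\text{obs} \mid H_3)}{P(\text{obs} \mid H_1)}} = \frac{1}{1 + \exp\left(g^* \int s_2 - g^* \int s_1\right) + \exp\left(g^* \int s_3 - g^* \int s_1\right)}$$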

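Multiplying both the numerator as well as the denominator by exp (g* ∫s1) yields

$$P(H_1 \mid \text{obs}) = \frac{\exp\left(g^* \int s_1\right)}{\exp\left(g^* \int s_1\right) + \exp\left(g^* \int s_2\right) + \exp\left(g^* \int s_3\right)}$$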

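Taking the logarithm yields

$$\ln P(H_1 \mid \text{obs}) = g^* \int s_1 - \ln\left[\exp\left(g^* \int s_1\right) + \exp\left(g^* \int s_2\right) + \exp\left(g^* \int s_3\right)\right]$$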

This is what the circuit shown in Figure 6A computes. It therefore implements MSPRTa.

Optimality of the discussed decision mechanisms at different accuracy levels

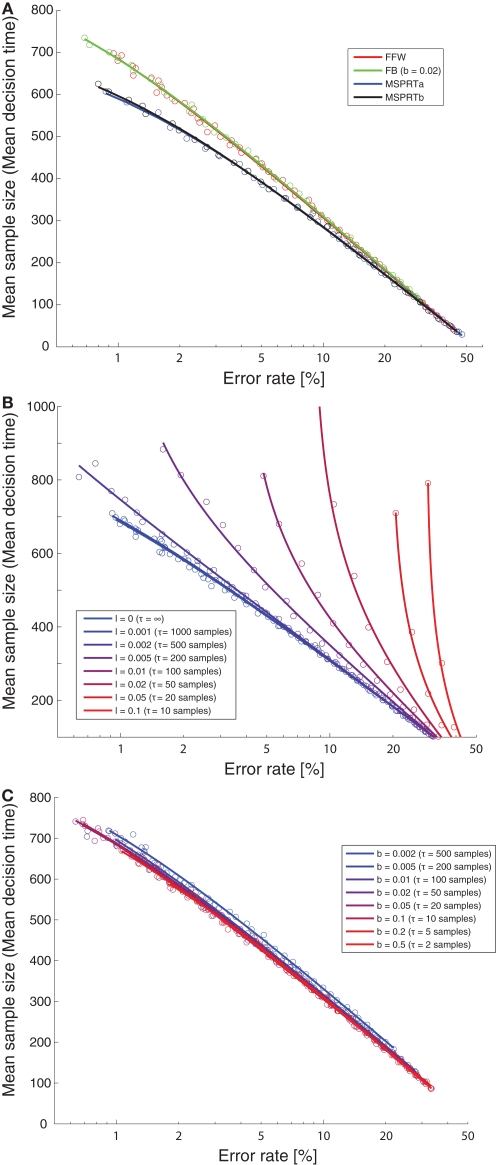

The multihypothesis sequential probability ratio test (MSPRT) has been shown to be optimal in the sense of minimizing sample size or decision time for the asymptotic case of negligible error rates. However, when facing difficult decisions, humans or animals often do not operate in a regime of no or almost no errors. For example, our human subjects had an error rate of 20%. Our monkeys tend to be closer to a 30% error rate (Bollimunta and Ditterich, 2009). It is still unknown what the optimal multi-alternative decision algorithm in such a regime is. We therefore wanted to perform an empirical comparison of the optimality of the different decision mechanisms that are being discussed here for a range of biologically plausible error rates. The decision mechanisms had to solve the following challenging problem: From listening to three streams of random numbers they had to find out which of the streams had a slightly higher mean than the other two. All of the random processes were normal processes with a variance of 1. One of them had a mean of 0.1, the other two means were 0. The decision threshold was varied systematically to obtain the relationship between mean sample size and error rate for error rates ranging from roughly 1% to 40% for each of the decision mechanisms. Figure 12A shows a comparison between the optimality of a feedforward inhibition mechanism (“FFW”, red), a feedback inhibition mechanism (“FB”, green), MSPRTa (blue), and MSPRTb (black). FFW and FB showed identical performance. The same was true for the two MSPRT algorithms. For low error rates, the MSPRT algorithms clearly outperformed the integration-to-threshold mechanisms based on linear combinations. However, for error rates of 20% and more the performance was virtually indistinguishable. 
This indicates that an implementation based on linear combinations (using either feedforward or feedback inhibition) might no longer have a serious optimality disadvantage compared to MSPRT when looking at a biologically more relevant accuracy regime. Figure 12B shows how the optimality of the feedforward inhibition mechanism changes with the integration time constant. As can be seen, the optimality quickly declines with increasing leakiness of the integrators. The feedback inhibition mechanism's optimality, on the other hand, is largely insensitive to changes in the leakiness of the integrators (Figure 12C).
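A comparison of this kind can be sketched in a few lines. The code below is not the simulation code used for Figure 12; it is a simplified illustration that assumes perfect integrators without a lower bound, covers only a max-vs-average rule (labeled "ffw") and a posterior-probability rule (labeled "msprt_a"), and expresses both statistics in log-likelihood units using g = μ/σ². The function name, the rule labels, and the thresholds are hypothetical.

```python
import math
import random

def simulate(rule, threshold, n_trials, rng, mu=0.1, sigma=1.0, max_steps=100_000):
    """Return (error_rate, mean_sample_size) for one decision rule and threshold.

    rule "ffw":     max-vs-average statistic, stop when it reaches threshold.
    rule "msprt_a": log posterior probability, threshold must be negative.
    """
    g = mu / sigma**2  # proportionality factor for normal streams with means mu and 0
    errors, total_n = 0, 0
    for _ in range(n_trials):
        S = [0.0, 0.0, 0.0]
        choice, n = None, 0
        while choice is None and n < max_steps:
            n += 1
            S[0] += rng.gauss(mu, sigma)   # stream 0 carries the higher mean
            S[1] += rng.gauss(0.0, sigma)
            S[2] += rng.gauss(0.0, sigma)
            best = max(range(3), key=lambda i: S[i])
            if rule == "ffw":
                # integrated signal minus the average of the other two
                stat = g * (S[best] - 0.5 * (sum(S) - S[best]))
            else:
                # log posterior of the leading hypothesis under flat priors
                stat = -math.log(sum(math.exp(g * (s - S[best])) for s in S))
            if stat >= threshold:
                choice = best
        errors += choice != 0  # trials without a decision count as errors
        total_n += n
    return errors / n_trials, total_n / n_trials
```

Calling `simulate` for a range of thresholds, for example `simulate("msprt_a", math.log(0.9), 2000, random.Random(0))`, yields pairs of error rate and mean sample size that can be plotted against each other in the manner of Figure 12A.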

Figure 12.

Optimality of decision mechanisms. (A) Mean sample size (decision time) as a function of error rate for different decision mechanisms. A feedforward inhibition mechanism (“FFW”, red) and a feedback inhibition mechanism (“FB”, green) are compared with MSPRTa (blue) and MSPRTb (black). The circles are the simulation results; the lines are fits of the form mean sample size = α + log(β + γ · error rate) (with α, β, and γ as free parameters), which provided good empirical fits for all simulations. (B) Effect of leakiness of the integrators on optimality of the feedforward inhibition mechanism. The leakiness ranges from 0 (perfect integration; blue) to very leaky (integration time constant of 10 samples; red). As can be seen, the optimality quickly declines with increasing leakiness. See (A) for an explanation of the circles and the lines. (C) Effect of leakiness of the integrators on optimality of the feedback inhibition mechanism. The leakiness ranges from slightly leaky (integration time constant of 500 samples; blue) to very leaky (integration time constant of two samples; red). As can be seen, over a wide range of time constants, the optimality is insensitive to the leakiness of the integrators. See (A) for an explanation of the circles and the lines.

Discussion

In this manuscript we have examined a number of different integration-to-threshold mechanisms that could potentially underlie perceptual decisions between multiple alternatives. These mechanisms included integrators with and without leakage, competition mechanisms based on either feedforward or feedback inhibition, and both linear and non-linear mechanisms for combining signals across alternatives. Overall, we have seen that these mechanisms can produce virtually indistinguishable decision behavior, which can make it difficult, if not impossible, to distinguish between alternative implementations based on behavioral data alone, at least with the dataset from our perceptual decision task. On the other hand, we have seen that these mechanisms make quite different predictions for the internal dynamics during the decision process. Thus, it is likely that high-resolution neural recordings will be helpful in determining which implementation is actually being used by the brain. Furthermore, we have discussed which statistical tests are implemented or approximated by these mechanisms, and we have seen that the optimality advantage of the MSPRT tends to disappear once error rates on the order of 20% are reached. In the following, we discuss in more detail the observations made in this study and how they relate to previous work.

Similarity of the decision behavior predicted by different integration-to-threshold mechanisms

For perceptual decisions between two alternatives it has been shown previously that a balanced LCA model can mimic the choice behavior of a drift-diffusion model, which is equivalent to a feedforward inhibition model (Bogacz et al., 2006). Similarly, McMillen and Holmes (2006) have pointed out that, in the case of decisions between multiple alternatives, the LCA model approximates a max-vs-average test, which is, as demonstrated in this manuscript, the statistical test that is being implemented/approximated by the feedforward inhibition model. It is therefore perhaps not too surprising that feedforward and feedback inhibition models can also mimic each other's decision behavior in multi-alternative perceptual decision making and that both of these mechanisms can explain our behavioral dataset (Niwa and Ditterich, 2008).

For choices between two alternatives I have shown previously that feedforward inhibition mechanisms with a wide range of integration time constants are able to account for the choice behavior observed in a random-dot motion discrimination task (Ditterich, 2006). We have seen here that the same is true for decisions between more than two alternatives. What is becoming clear from both of these studies, and what makes intuitive sense, is that, although a wide range of integration time constants can produce very similar behavior, the fidelity or signal-to-noise ratio (SNR) of the sensory evidence signal has to change with the leakiness. A very leaky (feedforward) integration mechanism requires better quality (less noisy) sensory evidence signals to achieve the same performance (accuracy) as perfect or only slightly leaky integrators, but can do so in a shorter period of time (shorter mean decision times). Therefore, if the SNR is known, the integration time constant can, in principle, be determined from the decision behavior. I have used this type of argument in Ditterich (2006) to make the claim that, for the 2AFC case, the SNR required by an integration mechanism with a long integration time constant was closer to the expected SNR of a pool of extrastriate visual neurons representing the motion information than the SNR required by a very leaky integration mechanism. Recordings of the sensory evidence signal from monkeys performing the same task as our human subjects are currently under way, and we expect these recordings to be helpful in addressing the integration time constant question for the multi-alternative case.

What is perhaps a bit more surprising is that the implementations of MSPRT that we have looked at in this study, which actually perform different statistical tests, also produced very similar decision behavior. Whereas, as already mentioned above, the feedforward inhibition mechanism and LCA approximate a max-vs-average test, MSPRT comes in two flavors and either performs a max-vs-next test (MSPRTb) or evaluates the posterior probability of each hypothesis (MSPRTa). Although our experimental task was designed such that we had control over how much sensory evidence was provided for each of the alternatives, and although the experimental protocol included trials with different amounts of sensory evidence for the two not-maximally supported hypotheses, the dataset seems consistent with both max-vs-average-style and MSPRT-style mechanisms.
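
To make the difference between these tests concrete, the following Python sketch computes the three decision statistics from a vector of accumulated evidence totals, one per alternative. It is an illustration only: the function names and the `gain` parameter are hypothetical, and the posterior computation assumes equal priors and evidence totals that are proportional to log-likelihoods up to a common constant (as is the case for Gaussian streams with equal variance).

```python
import math


def max_vs_average(y):
    """Largest evidence total minus the mean of the remaining totals."""
    best = max(y)
    rest = (sum(y) - best) / (len(y) - 1)
    return best - rest


def max_vs_next(y):
    """Largest evidence total minus the second largest."""
    top, second = sorted(y, reverse=True)[:2]
    return top - second


def posteriors(y, gain=1.0):
    """Posterior probability of each hypothesis (assumes equal priors).

    gain scales evidence totals into log-likelihood units; its value is an
    assumption of this sketch.
    """
    m = max(y)  # subtract the maximum for numerical stability
    w = [math.exp(gain * (v - m)) for v in y]
    z = sum(w)
    return [v / z for v in w]


if __name__ == "__main__":
    # Two hypothetical evidence states with the same mean for the two losers.
    unequal = [4.0, 3.0, -1.0]
    equal = [4.0, 1.0, 1.0]
    for name, y in (("unequal", unequal), ("equal", equal)):
        print(name, max_vs_average(y), max_vs_next(y), posteriors(y))
```

In the two example states the max-vs-average statistic is identical, whereas the max-vs-next statistic and the posterior of the leading alternative differ. Stimuli with unequal support for the two non-maximal alternatives are therefore the kind of condition in which the tests could, in principle, come apart.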

Overall, the observation that different multi-alternative decision mechanisms are difficult to differentiate on the basis of behavioral data alone seems consistent with a recent study by Leite and Ratcliff (2010), which also revealed a relatively high degree of mimicking when modeling data from a multi-alternative letter discrimination task. This does not necessarily mean that these mechanisms can mimic each other for any given parameter set. It might still be possible to design a clever experiment that drives these mechanisms into an operating range where the mimicking breaks down.

Do any of the discussed decision mechanisms provide a better account of our behavioral dataset than others?

Our strategy was to fit the models on the basis of the mean response time (RT) data and to compare the models’ predictions for choice behavior and RT distributions with the actual data. Since all model predictions had to be simulated and since the required computing time had to be limited, the results are necessarily noisy. The reported model parameter sets are the ones that provided the smallest error during the optimization procedure, but due to the simulation-induced noise they probably deviate somewhat from the true optimum. It is therefore difficult to use small differences in the remaining error after the fit to argue in favor of or against particular models. This becomes obvious when looking at the slightly leaky feedforward inhibition model, which achieved the second smallest error during the fitting procedure, but ended up with the largest error after the final higher-resolution simulation (see Table 1). The model with the lowest remaining error both during and after the fit was the LCA model with fixed variance. A visual inspection of the fits does not reveal any major qualitative differences. All of the models tended to show a bigger difference in mean RT between stimuli with equal distracting coherences (red and green in the figures) and stimuli with unequal distracting coherences (magenta and cyan in the figures) than the dataset. Why do the models predict faster responses for unequal distracting coherences than for equal coherences (with the same mean)? The closer race between the two leading integrators in the unequal coherence case tends to cut off some slower correct responses that are still possible in the not so close race in the equal coherence case. This conditionalization effect (the integrator associated with the strongest motion component can only win the race if the integrator associated with the intermediate motion component has not crossed threshold yet) leads to an overall speedup of the responses.
A variance of the sensory signals that scales with the mean tends to increase the size of this effect. This is potentially why the only discussed model with a fixed variance provided a slightly better fit to the mean RT data.

To address how well a model predicts the choice behavior I have decided to assess the proportion of predicted choice data points that are located within the 95% confidence intervals of the actual data. This assessment shows a slightly clearer ranking of the models than the quality of the mean RT fit. The feedforward inhibition models provided the best accuracy predictions (approx. 85%), followed by the feedback inhibition models (approx. 70%), and the MSPRT models (approx. 60%; see Table 1). The LCA model with scaling variance and the MSPRT models tended to predict somewhat better choice performance than was actually observed.
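
The coverage measure described above can be sketched as follows. The text does not state how the 95% confidence intervals were computed, so this example assumes Wilson score intervals for binomial proportions; the function names and the example counts are hypothetical.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (assumed CI method)."""
    p = successes / n
    denom = 1.0 + z * z / n
    center = (p + z * z / (2.0 * n)) / denom
    half = z * math.sqrt(p * (1.0 - p) / n + z * z / (4.0 * n * n)) / denom
    return center - half, center + half


def coverage(predicted, observed):
    """Fraction of predicted proportions that fall inside the observed CIs.

    predicted: list of model-predicted choice proportions
    observed:  list of (number of choices, number of trials) per condition
    """
    hits = 0
    for p, (k, n) in zip(predicted, observed):
        lo, hi = wilson_interval(k, n)
        if lo <= p <= hi:
            hits += 1
    return hits / len(predicted)


if __name__ == "__main__":
    # Made-up example: two conditions with 100 trials each.
    observed = [(80, 100), (50, 100)]
    predicted = [0.82, 0.70]
    print(coverage(predicted, observed))
```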

For quantifying the similarity of probability distributions a large number of potential measures have been proposed (Cha, 2007). I have picked two relatively simple measures that, as Vegelius et al. (1986) have pointed out, have a number of desirable properties: the “proportional similarity” (Vegelius et al., 1986) or “intersection” (Cha, 2007), which is simply the shared area of two compared histograms and therefore ranges from 0 (no overlap) to 1 (identical distributions), and the “Hellinger coefficient” (Vegelius et al., 1986) or “fidelity” (Cha, 2007), which uses the geometric mean of two corresponding histogram bins rather than the minimum, but also ranges from 0 (for no overlap) to 1 (identical distributions). While there are minor differences in the results provided by these two measures, both agree in ranking the slightly leaky feedforward inhibition model as providing the best account of the RT distribution shape (roughly 85% overlap) and the LCA model with fixed variance as the one providing the worst account (roughly 75% overlap). However, the differences are certainly not major. Thus, to summarize the results of comparing the behavioral data with the predictions of different decision mechanisms, I would be hesitant to reject any of the considered models on the basis of the behavioral data alone.
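
Both similarity measures are simple to compute from two histograms with matching bins. The following Python sketch follows the verbal definitions given above (sum of bin-wise minima, and sum of bin-wise geometric means, after normalizing each histogram to unit area); the function names and the example histograms are hypothetical.

```python
import math


def normalize(hist):
    """Scale a histogram so that its bins sum to 1."""
    total = sum(hist)
    return [h / total for h in hist]


def proportional_similarity(p, q):
    """Shared area of two histograms ("intersection"): sum of bin-wise minima."""
    p, q = normalize(p), normalize(q)
    return sum(min(a, b) for a, b in zip(p, q))


def hellinger_coefficient(p, q):
    """Sum of bin-wise geometric means ("fidelity")."""
    p, q = normalize(p), normalize(q)
    return sum(math.sqrt(a * b) for a, b in zip(p, q))


if __name__ == "__main__":
    # Made-up RT histograms (counts per bin) for data and model.
    data = [2, 6, 2]
    model = [4, 4, 2]
    print(proportional_similarity(data, model))
    print(hellinger_coefficient(data, model))
```

Both functions return 1 for identical distributions and 0 for distributions without any overlapping bins. Because the geometric mean of two values is never smaller than their minimum, the Hellinger coefficient is always at least as large as the proportional similarity for the same pair of histograms.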

Neurophysiological predictions based on the internal dynamics of the decision models

Since it is apparently extremely difficult to differentiate between alternative decision mechanisms on the basis of behavioral data alone, the question arises whether other types of measurements could be more informative. To address this question, we have analyzed the internal dynamics of the competing mechanisms. We have looked at how the activity of individual integrators is expected to evolve over time, how the summed activity of all integrators is expected to evolve over time, and how the pair of activities of two of the integrators is expected to evolve over time (state space analysis). The results, summarized in Table 2, indicate that by taking all of these measures into account it should be much easier to tell the difference between various decision mechanisms. Such an analysis requires recordings of the activity of individual integrators with high temporal resolution, which currently can only be obtained using invasive recording techniques. Previous non-human primate work suggests that such data can likely be obtained from behaving animals (Roitman and Shadlen, 2002; Churchland et al., 2008), and we are currently in the process of recording from monkeys that are performing the same task as our human subjects (Bollimunta and Ditterich, 2009). In a previous analysis of neural data recorded from monkeys performing perceptual decisions between two alternatives I considered different variants of feedforward inhibition mechanisms, but no feedback inhibition mechanisms (Ditterich, 2006). The observation that neural data can be helpful in distinguishing models that cannot be distinguished on the basis of behavioral data has recently also been made by Purcell et al. (2010) when analyzing monkeys’ visual search behavior and associated neural activity in the frontal eye fields.