Abstract

The purpose of this review is to consolidate existing evidence from published systematic reviews on health information system (HIS) evaluation studies to inform HIS practice and research. Fifty reviews published during 1994–2008 were selected for meta-level synthesis. These reviews covered five areas: medication management, preventive care, health conditions, data quality, and care process/outcome. Reconciliation of duplicates yielded a non-overlapping corpus of 1276 HIS studies. On the basis of a subset of 287 controlled HIS studies, there is some evidence for improved quality of care, but in varying degrees across topic areas. For instance, 31/43 (72%) controlled studies of preventive care reminders reported positive results, mostly through improved guideline adherence for measures such as immunization and health screening. Key factors that influence HIS success included having in-house systems, developers as users, integrated decision support and benchmark practices, and addressing such contextual issues as provider knowledge and perception, incentives, and legislation/policy.

Keywords: Systematic reviews, meta-synthesis, health information systems, field evaluation studies

Introduction

The use of information technology to improve patient care continues to be a laudable goal in the health sector. Some argue we are near the tipping point where one can expect a steady rise in the number of health information systems (HISs) implemented and in their intensity of use in different settings, especially by healthcare providers at the point of contact.1 A number of European nations are already considered leaders in the use of electronic medical records in primary care, where physicians have been using them in their day-to-day practice for over a decade.2 As for the current state of HIS knowledge, a 2005 review by Ammenwerth and de Keizer3 identified 1035 HIS field evaluation studies reported during 1982–2002. Over 100 systematic reviews of HIS evaluation studies have also been published to date. Despite the impressive number of HIS studies and reviews available, the cumulative evidence on the effects of HIS on the quality of care continues to be mixed or even contradictory. For example, Han et al4 reported an unexpected rise in mortality after implementing a computerized physician order entry (CPOE) system in a tertiary care children's hospital, yet Del Beccaro et al5 found no association between increased mortality and their CPOE implementation in a pediatric intensive care unit. Even in a computerized hospital, Nebeker et al6 found that high adverse drug event (ADE) rates persisted. As Ash et al7 have demonstrated, CPOE effects can be unpredictable because of the complex interplay among the HIS, users, workflows, and settings involved. A higher-level synthesis is therefore needed to reconcile and make sense of these HIS evaluation studies, especially the systematic review findings already published.

This review addresses this gap by conducting a meta-level synthesis to reconcile the HIS evidence base that exists at present. Our overall aim is to consolidate published systematic reviews on the effects of HIS on the quality of care, to better inform HIS practice and research. In particular, this meta-level synthesis offers three contributions to practitioners and researchers involved with HIS implementation and evaluation. Firstly, it provides a comprehensive guide to the work performed to date, allowing one to build on existing evidence and avoid repetition. Secondly, by reconciling and reporting the systematic review findings in a consistent manner, we translate these reviews in ways that are relevant and meaningful to HIS practitioners. Lastly, the consolidated evidence provides a rational basis for our recommendations to improve HIS adoption and for identifying areas that require further research.

In this paper, we first describe the review method used. Then we report the review findings, emphasizing the meta-synthesis to make sense of the published systematic reviews found. Lastly, we discuss the knowledge and insights gained, and offer recommendations to guide HIS practice and research.

Review method

Research questions

This review is intended to address the current need for a higher-level synthesis of existing systematic reviews on HIS evaluation studies to make sense of their findings. To do so, we focused on reconciling the published evidence and comparing the evaluation metrics and quality criteria of the multiple studies. Our specific research questions were: (1) What is the cumulative effect of HIS based on existing systematic reviews of HIS evaluation studies? (2) How was the quality of the HIS studies in these reviews determined? (3) What evaluation metrics were used in the HIS studies reviewed? (4) What recommendations can be made from this meta-synthesis to improve future HIS adoption efforts? (5) What are the research implications? Through this review, we aimed to synthesize the disparate HIS review literature published to date in ways that are rigorous, meaningful, and useful to HIS practitioners and researchers. At the same time, by examining the quality of the HIS studies reviewed and the evaluation metrics used, we aim to improve the rigor with which future HIS evaluation studies and reviews are planned, conducted, and critiqued.

Review identification and selection

An extensive search of systematic review articles on HIS field evaluation studies was conducted by two researchers using Medline and Cochrane Database of Systematic Reviews covering 1966–2008. The search strategy combined terms in two broad themes of information systems and reviews: the former included information technology, computer system, and such MeSH headings as electronic patient record, decision support, and reminder system; the latter included systematic review, literature review, and review. The search was repeated by a medical librarian to ensure all known reviews had been identified. The reference sections of each article retrieved were scanned for additional reviews to be included. A hand search of key health informatics journals was carried out by the lead researcher, and known personal collections of review articles were included.

The inclusion criteria focused on systematic reviews published in English on HIS used by healthcare providers in different settings. HIS was broadly defined on the basis of the categories of Ammenwerth and de Keizer3 to cover different types of systems and tools for information processing, decision support, and management reporting, but excluded telemedicine/telehealth applications, digital devices, systems used by patients, and those for patient/provider education. These areas were excluded because separate reviews of them were planned for subsequent publication. All citation screening and article selection were performed independently by two researchers and a second librarian. Discrepancies were resolved by consensus between the two researchers and subsequently confirmed by the second librarian.

Meta-synthesis of the reviews

The meta-level synthesis involved reconciliation of key aspects of the systematic review articles through consensus by two researchers to make sense of the cumulative evidence. The meta-synthesis involved six steps: (1) the characteristics of each review were summarized by topic areas, care settings, HIS features, evaluation metrics, and key findings; (2) the assessment criteria used in the reviews to appraise the quality of HIS studies were compared; (3) the evaluation metrics used and the effects reported were categorized according to an existing HIS evaluation framework; (4) duplicate HIS studies from the reviews were reconciled to arrive at a non-overlapping corpus; (5) the aggregate effects of a subset of non-overlapping controlled HIS studies from selected topic areas were tabulated by HIS features and metrics already used as organizing schemes in the reviews; (6) factors identified in the reviews that influenced HIS success were consolidated and reported.

Specifically, the types of HIS features and metrics, and their relationship to effects on quality of care, were summarized using the methods and outputs found in the existing HIS reviews. Five predefined topic areas were used: medication management, preventive care, health conditions, data quality, and care process/outcome. These topics were adapted from the organizing schemes used in the reviews by Balas et al,8 Cramer et al,9 and Garg et al,10 which covered multiple healthcare domains. The existing HIS evaluation framework used was the Canada Health Infoway Benefits Evaluation (BE) Framework, already adopted in Canada.11 This is similar to the approach used by van der Meijden et al12 in categorizing a set of evaluation attributes from 33 clinical information systems according to the Information System (IS) Success model of DeLone and McLean,13 on which the Infoway BE Framework was based.

To identify the subset of controlled HIS studies and their effects, two researchers independently retrieved the full articles for all original HIS studies within the corpus and extracted data on designs, metrics, and results. To aggregate HIS effects, we used the ‘vote-counting’ method applied in four reviews, tallying the number of positive, neutral, and negative studies on the basis of significant differences between groups.8 10 14 15 For studies with multiple measures, we adopted Garg's method, under which a study was counted as positive only if ≥50% of its results were significant.10 To visualize the aggregate effects, we applied Dorr's method of plotting the frequency of positive, neutral, and negative studies in stacked bar graphs.14 The two researchers worked independently on the aggregate analysis and afterwards reconciled their outputs through consensus.
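The vote-counting rule described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' actual procedure: each study is represented as a list of hypothetical `Measure` records, Garg's ≥50% threshold is applied to significant results favoring the HIS, and the mirror-image rule for labeling a study negative is our own assumption, since the text does not state how negative studies were defined.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Measure:
    """One evaluation measure reported by a controlled study (hypothetical record)."""
    significant: bool  # a significant between-group difference was reported
    favors_his: bool   # direction of that difference (ignored if not significant)


def classify_study(measures: list[Measure]) -> str:
    """Label a study positive/neutral/negative by vote counting.

    Positive follows Garg's rule: at least half of the measures show a
    significant difference favoring the HIS. The symmetric negative rule
    is an assumption made for illustration only.
    """
    if not measures:
        raise ValueError("a study needs at least one measure")
    n = len(measures)
    n_pos = sum(m.significant and m.favors_his for m in measures)
    n_neg = sum(m.significant and not m.favors_his for m in measures)
    if 2 * n_pos >= n:  # >= 50% significant in favor of the HIS
        return "positive"
    if 2 * n_neg >= n:  # assumed mirror-image rule for negative studies
        return "negative"
    return "neutral"


def vote_count(studies: list[list[Measure]]) -> Counter:
    """Tally studies per label; these counts feed a stacked bar graph."""
    return Counter(classify_study(s) for s in studies)


if __name__ == "__main__":
    sig_for = Measure(significant=True, favors_his=True)
    sig_against = Measure(significant=True, favors_his=False)
    not_sig = Measure(significant=False, favors_his=False)
    studies = [
        [sig_for, not_sig],           # 1 of 2 significant, favoring HIS -> positive
        [sig_for, not_sig, not_sig],  # 1 of 3 -> neutral
        [sig_against, sig_against],   # 2 of 2 against the HIS -> negative
    ]
    print(vote_count(studies))
```

Counting a study rather than each measure is what makes vote counting coarse: a study with one strong effect and one null effect scores the same as one with two strong effects.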

Review findings

Synopsis of HIS reviews

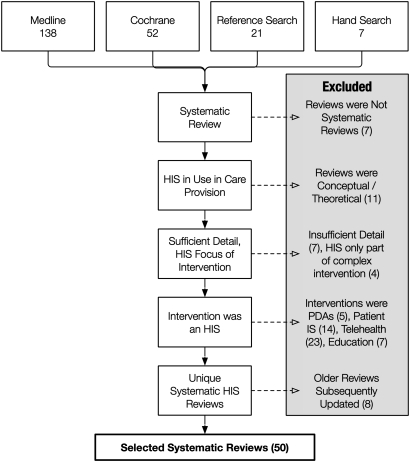

Our initial library database and hand searches returned over 1200 citation titles/abstracts. By applying and refining the inclusion/exclusion criteria, we eventually identified 136 articles for further screening. Of these 136 articles, 58 were considered relevant and reviewed in detail. Of the 78 rejected articles, 23 were telehealth/telemedicine-related, 14 were patient-oriented systems, 11 were conceptual papers, seven had insufficient detail, seven involved other types of technologies, seven were not systematic reviews, five were on personal digital assistant devices, and four had HIS as only one of the interventions examined. Twenty-nine (50%) of the 58 selected review articles were published since 2005. Most had lead authors from the USA (22 (38%)) and UK (16 (28%)). The remaining reviews were from Canada (six (10%)), France (five (9%)), the Netherlands (four (7%)), Australia (three (5%)), Austria (one (2%)), and Belgium (one (2%)). Further examination of the 58 reviews showed that eight were updates or summaries of earlier publications. Hence, our final selection consisted of 50 review articles,8–10 14–60 which included the eight updated/summary reviews instead of the original versions.61–68 The review selection process is summarized in figure 1.

Figure 1.

Review selection method. IS, information system; HIS, health information system; PDA, personal digital assistant.

A synopsis of the 50 reviews by topic, author, care setting, study design, evaluation metric, and key findings is shown in supplementary online table 1, available at www.jamia.org. The HIS features in these reviews varied widely, ranging from the types of information systems and technologies used (eg, administrative registers19) and the functional capabilities involved (eg, reminders27) to the intent of these systems (eg, diabetes management32). A variety of care settings were reported, including academic/medical centers, hospitals, clinics, general practices, laboratories, and patient homes. Most of the studies were randomized controlled trials, quasi-experimental studies, or observational studies, although some were qualitative or descriptive in nature.18 30 56 59

In terms of evaluation metrics and study findings, most reviews included tables showing the statistical measures and effects as reported in the original field studies. These measures and effects mostly concerned significant between-group differences in guideline compliance/adherence, utilization rates, physiologic values, and surrogate/clinical outcomes. Examples include cancer screening rates,38 clinic visit frequencies,14 hemoglobin A1c levels,32 lengths of stay,54 adverse events,40 and death rates.55 Four reviews on data quality reported predictive values and sensitivity/specificity rates.19 35 39 56 Most of the reviews were narrative, with no pooling of the individual study results. Six reviews summarized their individual studies to provide an aggregate assessment of whether the HIS had led to improvement in provider performance and patient outcome.8 14 15 29 30 32 For instance, Garg et al10 assigned a yes/no value to each HIS study depending on whether ≥50% of its evaluation metrics had significant differences. Only nine (18%) reviews included meta-analysis of aggregate effects.9 21 24 27 28 32 42 51 55 The metrics used in these nine meta-analyses were odds/risk ratios and standardized mean differences with CIs shown as forest plots; eight included summary statistics to describe the aggregate effects, seven addressed heterogeneity (four with fixed-effect models,9 21 27 55 two with random-effects models28 32 and one with a mixed model51), and three included funnel plots to assess publication bias.9 42 51
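For readers less familiar with the fixed-effect versus random-effects distinction mentioned above, the following Python sketch pools log odds ratios by inverse-variance weighting and then re-weights them using the DerSimonian-Laird estimate of between-study variance. It is a textbook illustration only: we do not know which estimator each of the nine reviews used, and the function name and inputs are hypothetical, not data from any review.

```python
import math


def pool_log_odds_ratios(log_ors: list[float], ses: list[float]) -> dict[str, float]:
    """Fixed-effect and DerSimonian-Laird random-effects pooling (illustrative).

    log_ors: per-study log odds ratios; ses: their standard errors.
    """
    if len(log_ors) != len(ses) or len(log_ors) < 2:
        raise ValueError("need matching inputs for at least two studies")
    w = [1.0 / se ** 2 for se in ses]  # inverse-variance weights
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, log_ors)) / sum_w

    # Cochran's Q measures dispersion around the fixed-effect estimate.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, log_ors))
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - (len(log_ors) - 1)) / c)  # between-study variance

    # Random-effects weights add tau2 to each study's own variance.
    w_re = [1.0 / (se ** 2 + tau2) for se in ses]
    random_effect = sum(wi * yi for wi, yi in zip(w_re, log_ors)) / sum(w_re)
    return {
        "fixed": fixed,
        "se_fixed": math.sqrt(1.0 / sum_w),
        "q": q,
        "tau2": tau2,
        "random": random_effect,
        "se_random": math.sqrt(1.0 / sum(w_re)),
    }


if __name__ == "__main__":
    res = pool_log_odds_ratios([0.2, 0.6, 0.4], [0.1, 0.2, 0.15])
    print("pooled OR (random effects):", math.exp(res["random"]))
```

When the studies agree (Q no larger than its degrees of freedom), tau2 is zero and both models give the same estimate; as heterogeneity grows, the random-effects weights become more equal and the pooled standard error widens.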

Assessment of methodological quality

Of the 50 reviews included in the synthesis, 31 (62%) reported assessing the methodological quality of the HIS studies as part of the review. Of these 31 reviews, 20 reported the individual quality rating of each HIS study in the article or on a website. For quality assessment, 16 variants of 14 existing quality scales and checklists were reported, and eight further instruments were created ad hoc by review authors. Thirteen of these 24 instruments had items with numeric ratings that added up to an overall score, while the remaining 11 were checklists, mostly with yes/no items. Of the 14 existing instruments, the most common was the five-item scale from Johnston et al,62 which was used in nine reviews.17 10 15 41 43 48 61 63 65

Further examination of the 24 instruments revealed three broad approaches. The first, based on the evidence-based medicine and Cochrane Review paradigm, assesses the quality of an HIS study design for potential selection, performance, attrition, and detection bias.69 The second extends the assessment to include the reporting of such aspects as inclusion/exclusion criteria, power calculation, and main/secondary effect variables. The third focuses on HIS data/feature quality, comparing specific HIS features against reference standards. An example of the first approach is the Johnston five-item scale, which awards 0–2 points on each item (for a maximum total of 10): method of allocation to study groups, unit of allocation, baseline group differences, objectivity of outcome with blinding, and follow-up for analysis.62 An example of the second is the 20-item scale by Balas et al,32 which covers the study site, sampling and size, randomization, intervention, blinding of patients/providers/measurements, main/secondary effects, ratio/timing of withdrawals, and analysis of primary/secondary variables. An example of the third is the four-item checklist of Jaeschke et al70 for data accuracy, based on sample representativeness, independent/blind comparison against a reference standard not affected by test results, and reproducible method/results.
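The additive structure of a scored instrument such as the Johnston scale can be sketched as follows. The item keys are hypothetical names we chose for the five criteria listed above; the rules for awarding 0, 1, or 2 points on each item belong to the original instrument and are not reproduced here, so this function only validates and sums ratings a reviewer has already assigned.

```python
# Hypothetical keys for the five Johnston et al items described in the text.
JOHNSTON_ITEMS = (
    "allocation_method",     # method of allocation to study groups
    "allocation_unit",       # unit of allocation
    "baseline_differences",  # baseline group differences
    "outcome_objectivity",   # objectivity of outcome, with blinding
    "follow_up",             # follow-up for analysis
)


def johnston_score(ratings: dict[str, int]) -> int:
    """Validate five 0-2 item ratings and sum them into a 0-10 total."""
    missing = set(JOHNSTON_ITEMS) - set(ratings)
    if missing:
        raise ValueError(f"missing items: {sorted(missing)}")
    for item in JOHNSTON_ITEMS:
        if ratings[item] not in (0, 1, 2):
            raise ValueError(f"{item} must be rated 0, 1 or 2")
    return sum(ratings[item] for item in JOHNSTON_ITEMS)


if __name__ == "__main__":
    example = dict(zip(JOHNSTON_ITEMS, [2, 1, 0, 2, 1]))
    print("total score:", johnston_score(example))
```

A checklist-style instrument, by contrast, would keep the per-item yes/no answers rather than collapsing them into a single number.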

Types of evaluation metrics used

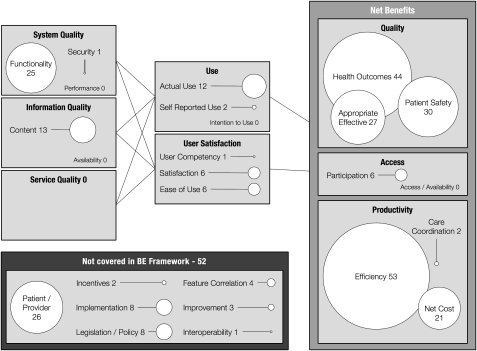

To make sense of the HIS evaluation metrics from the 50 reviews, we applied the Infoway BE Framework11 as an organizing scheme by which we could categorize the measures in meaningful ways. The BE Framework explains how information, system, and service quality can affect the use of an HIS and user satisfaction, which in turn can influence the net benefits that are realized over time. In this framework, net benefits are measured under the dimensions of healthcare quality, provider productivity, and access to care. Measures that did not fit into the existing BE dimensions were grouped under new categories that we created on the basis of the types of measures and effects involved. A summary of the evaluation metrics from the 50 reviews under the BE Framework dimensions of system, information, and service quality; HIS usage and satisfaction; and net benefits of care quality, productivity, and access is shown in table 1. The additional categories of evaluation metrics identified in our meta-synthesis are shown in table 2.

Table 1.

Mapping factors from HIS studies to the benefits evaluation framework

| HIS quality | HIS use | Net benefits |


AE, adverse event; DS, decision support; HIS, health information system; LOS, length of stay.

Table 2.

Additional measures not found in the benefits evaluation framework

| Category | HIS evaluation metrics | Review reference sources |
|---|---|---|
| Patient/provider | Patient knowledge, attitude, perception, decision confidence, compliance | 8 9 32 36 43 44 53 57 |
| | Patient/provider overall satisfaction | 20 25 32 36 43 53 58 |
| | Patient/provider knowledge acquisition, relationship | 9 25 43 57 |
| | Provider attitude, perceptions, autonomy, experience and performance | 10 25 43 57 |
| | Workflow | 14 20 30 |
| Incentives | Reimbursement mix, degree of capitation | 22 40 |
| Implementation | Barriers, training, organizational support, time-to-evaluation, lessons, success factors, project management, leadership, costs | 14 20 22 25 40 43 45 49 |
| Legislation/policy | Privacy, security, legislations, mandates, confidentiality | 14 20 25 40 43 58 |
| Correlation | Correlation of HIS feature/use with change in process, outcome, success | 14 15 49 54 |
| Change/improvement | Data quality improvement, reduced loss/paper and transcription errors, DS improvement | 35 57 59 |
| Interoperability | Information exchange and interoperability, standards | 20 |

DS, decision support.

In table 1, under the HIS quality dimensions, most of the evaluation metrics reported were on system function and information content. Examples of ‘functionality’ metrics include evaluation of CPOE with integrated, stand-alone, or no decision support features24 29; commercial HIS compared with home-grown systems22 24; and the accuracy of decision support triggers such as medication alerts.9 23 Examples of information ‘content’ metrics related to the accuracy, completeness, and comprehension of the electronic patient data collected.19 20 Under the HIS use dimensions, most of the measures were on actual HIS use, provider satisfaction, and usability.14 20 25 Under the net benefits dimensions, most of the measures concerned ‘care quality’ and ‘provider productivity.’ For care quality, the most common measures in the ‘patient safety’ category were medical errors and reportable and drug dosing-related events.20 22 24 In the ‘appropriateness and effectiveness’ category, the most common measures were adherence/compliance to guidelines and protocols.8 27 In the ‘health outcomes’ category, the most common measures included mortality/morbidity, length of stay, and physiological and psychological measures.8 60 For provider productivity, the most common measures in the ‘efficiency’ category were resource utilization, provider time spent, time-to-care, and service turnaround time.8 27 32 In the ‘net cost’ category, different types of healthcare costs, especially hospital and drug charges, were among the common measures.8 32 45

Table 2 shows the measures from the reviews that did not fit into the dimensions/categories of the BE Framework. The most common were related to ‘patients/providers’, such as their knowledge, attitude, perception, compliance, decision confidence, overall satisfaction, and relationships.9 36 43 Another group of measures was related to ‘implementation’, including barriers, training, organizational support, project management, leadership, and cost.20 25 32 40 Others were related to ‘legislation/policy’, such as mandates and confidentiality,20 58 and to the correlation between HIS features and the extent of changes and intended effects.14 15

Finally, figure 2 shows the frequency distribution of HIS studies across the evaluation dimensions/categories examined in the reviews. Efficiency, health outcomes, and patient safety are the three categories with the most HIS studies reported. Conversely, there are few or no studies in such categories as care coordination, user competency, information availability, and service quality.

Figure 2.

Distribution of health information system studies by evaluation dimensions/categories.

Non-overlapping review corpus

The 50 reviews in our meta-synthesis covered 2122 HIS studies. However, many of these studies were duplicates, appearing in more than one review. For instance, the 1999 CPOE study by Bates et al71 was appraised in seven different reviews.22 24 30 40 46 49 60 The 50 reviews covered the topics of medication management, preventive care, health conditions, and data quality, plus an assortment of care process/outcome topics. Multiple reviews were published in each of these five areas, and the reviews within each area had overlapping HIS studies. For example, 13 reviews covered 275 HIS studies on medication management, but only 206 of these studies were unique; the remaining 69 were duplicates. Some studies were reviewed differently, not only methodologically but also in the indicators examined. Four of the reviews under care process/outcome each contained 100 or more HIS studies in multiple domains.10 15 20 22 Yet many of these studies were also included in the reviews under the four other topic areas. For instance, the review by Garg et al10 on clinical decision support systems (CDSS) had 100 HIS studies covering the domains of diagnosis, prevention, disease management, drug dosing, and prescribing, but only eight (8%) were unique studies72–79 that had not already appeared in other reviews.

As part of our meta-synthesis, we reconciled the 50 reviews to eliminate duplicate HIS studies and arrive at a non-overlapping corpus. When an HIS study appeared in multiple reviews, we included it only once, assigning it where possible to the most recent review under a specific topic. After this reconciliation, we arrived at 1276 non-overlapping HIS studies. Next, we took the 30 reviews under the four topic areas of medication management, preventive care, health conditions, and data quality to examine the HIS and effects reported. The 20 reviews under care process/outcome were not included, as they were too diverse for meaningful categorization and comparison. Upon closer examination, we found that over half of the 709 non-overlapping HIS studies in these 30 reviews reported only descriptive results, lacked sufficient detail or control groups, or involved patient-oriented or paper-based systems or special devices, which made it infeasible to tabulate their effects. For example, we eliminated 24 of the 67 HIS studies in the review of Eslami et al30 because they had no controls or insufficient detail for comparison. After this step, the 709 non-overlapping HIS studies were reduced to 287 controlled HIS studies across the 30 reviews in the four topic areas.
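The reconciliation step can be sketched as a simple ownership assignment in Python. This is a minimal sketch under assumptions: the `Review` record and its fields are hypothetical, each study is assumed to already carry one identifier shared across reviews, the "under a specific topic where possible" preference is not modeled, and ties between reviews from the same year are broken by review identifier, which is an arbitrary choice of ours.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Review:
    """A published review and the primary HIS studies it covers (hypothetical record)."""
    review_id: str
    year: int
    topic: str
    study_ids: tuple[str, ...]


def reconcile(reviews: list[Review]) -> dict[str, Review]:
    """Assign each study to the most recent review that includes it."""
    owner: dict[str, Review] = {}
    # Process oldest first so that a more recent review overwrites earlier owners.
    # Ties within a year are broken by review_id (an arbitrary assumption).
    for review in sorted(reviews, key=lambda r: (r.year, r.review_id)):
        for study_id in review.study_ids:
            owner[study_id] = review
    return owner


def duplicate_count(reviews: list[Review]) -> int:
    """Appearances beyond each study's first, e.g. 275 - 206 = 69 in the text."""
    appearances = sum(len(r.study_ids) for r in reviews)
    unique = len({s for r in reviews for s in r.study_ids})
    return appearances - unique


if __name__ == "__main__":
    a = Review("A", 2005, "medication", ("s1", "s2"))
    b = Review("B", 2008, "medication", ("s2", "s3"))
    c = Review("C", 2006, "preventive", ("s1",))
    corpus = reconcile([a, b, c])
    print(len(corpus), "unique studies;", duplicate_count([a, b, c]), "duplicates")
```

The size of the returned mapping is the non-overlapping corpus size; grouping its values by topic gives the per-topic study counts used in the later tabulations.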

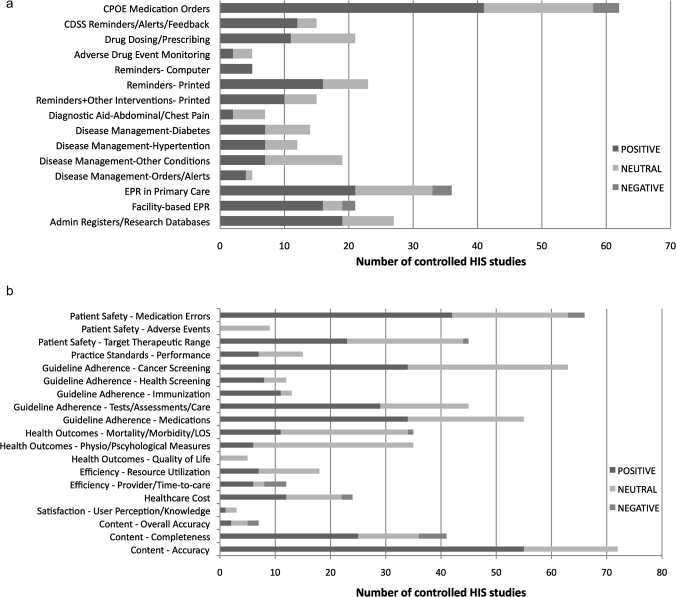

Associating HIS features, metrics with effects

The cumulative effects by HIS feature from the 30 reviews for the four topic areas are shown in table 3 and summarized in figure 3A. In table 3, the overall ratio of positive controlled HIS studies is 180/287 (62.7%). The most effective HIS features were computer-based reminder systems in preventive care (5/5, 100%), CDSS reminders/alerts/feedback in medication management (12/15, 80%), and disease management orders/alerts in health conditions (4/5, 80%), although these percentages rest on small numbers of studies. The HIS features that were moderately effective included CPOE medication orders (66.1%), reminders in printed form (69.6%), and printed reminders combined with other interventions (66.7%). Facility-based electronic patient record (EPR) systems and administrative registers/research databases had better data quality than primary care EPR systems (76.2% and 70.4% vs 58.3% positive). Note that 98/287 (34.1%) of these controlled HIS studies reported no significant effects; the proportion of neutral findings was highest in health conditions, where 30/57 (52.6%) studies were neutral.

Table 3.

Frequency of positive, neutral, and negative controlled health information system (HIS) studies by reported HIS features

| HIS features | Positive (%) | Neutral (%) | Negative (%) | Total |
|---|---|---|---|---|
| Medication management | | | | |
| CPOE medication orders | 41 (66.1) | 17 (27.4) | 4 (6.5) | 62 |
| CDSS reminders/alerts/feedback | 12 (80.0) | 3 (20.0) | 0 (0.0) | 15 |
| Drug dosing/prescribing | 11 (52.4) | 10 (47.6) | 0 (0.0) | 21 |
| Adverse drug event monitoring | 2 (40.0) | 3 (60.0) | 0 (0.0) | 5 |
| Subtotal | 66 (64.1) | 33 (32.0) | 4 (3.9) | 103 |
| Preventive care | | | | |
| Reminders—computer | 5 (100.0) | 0 (0.0) | 0 (0.0) | 5 |
| Reminders—printed | 16 (69.6) | 7 (30.4) | 0 (0.0) | 23 |
| Reminders+other interventions—printed | 10 (66.7) | 5 (33.3) | 0 (0.0) | 15 |
| Subtotal | 31 (72.1) | 12 (27.9) | 0 (0.0) | 43 |
| Health conditions | | | | |
| Diagnostic aid—abdominal/chest pain | 2 (28.6) | 5 (71.4) | 0 (0.0) | 7 |
| Disease management—diabetes | 7 (50.0) | 7 (50.0) | 0 (0.0) | 14 |
| Disease management—hypertension | 7 (58.3) | 5 (41.7) | 0 (0.0) | 12 |
| Disease management—other conditions | 7 (36.8) | 12 (63.2) | 0 (0.0) | 19 |
| Disease management—orders/alerts | 4 (80.0) | 1 (20.0) | 0 (0.0) | 5 |
| Subtotal | 27 (47.4) | 30 (52.6) | 0 (0.0) | 57 |
| Data quality | | | | |
| EPR in primary care | 21 (58.3) | 12 (33.3) | 3 (8.3) | 36 |
| Facility-based EPR | 16 (76.2) | 3 (14.3) | 2 (9.5) | 21 |
| Admin registers/research databases | 19 (70.4) | 8 (29.6) | 0 (0.0) | 27 |
| Subtotal | 56 (66.7) | 23 (27.4) | 5 (6.0) | 84 |
| Total | 180 (62.7) | 98 (34.1) | 9 (3.1) | 287 |

Values are number (%).

CDSS, clinical decision support systems; CPOE, computerized physician order entry; EPR, electronic patient record.
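As a consistency check on the vote-count arithmetic, the counts in table 3 can be re-tabulated with a short Python script. The counts are transcribed from the table (row labels are shortened); the script only sums rows into topic subtotals and recomputes the rounded row percentages, and it does not weight studies by size or quality.

```python
# (positive, neutral, negative) counts transcribed from table 3.
TABLE_3 = {
    "Medication management": {
        "CPOE medication orders": (41, 17, 4),
        "CDSS reminders/alerts/feedback": (12, 3, 0),
        "Drug dosing/prescribing": (11, 10, 0),
        "Adverse drug event monitoring": (2, 3, 0),
    },
    "Preventive care": {
        "Reminders (computer)": (5, 0, 0),
        "Reminders (printed)": (16, 7, 0),
        "Reminders + other interventions (printed)": (10, 5, 0),
    },
    "Health conditions": {
        "Diagnostic aid (abdominal/chest pain)": (2, 5, 0),
        "Disease management (diabetes)": (7, 7, 0),
        "Disease management (hypertension)": (7, 5, 0),
        "Disease management (other conditions)": (7, 12, 0),
        "Disease management (orders/alerts)": (4, 1, 0),
    },
    "Data quality": {
        "EPR in primary care": (21, 12, 3),
        "Facility-based EPR": (16, 3, 2),
        "Admin registers/research databases": (19, 8, 0),
    },
}


def add(rows):
    """Element-wise sum of (positive, neutral, negative) tuples."""
    return tuple(sum(col) for col in zip(*rows))


def as_percent(counts):
    """Row percentages rounded to one decimal place, as reported in the table."""
    total = sum(counts)
    return tuple(round(100 * n / total, 1) for n in counts)


subtotals = {topic: add(rows.values()) for topic, rows in TABLE_3.items()}
grand_total = add(subtotals.values())

if __name__ == "__main__":
    for topic, counts in subtotals.items():
        print(f"{topic}: {counts} -> {as_percent(counts)}")
    print(f"Total: {grand_total} -> {as_percent(grand_total)}")
```

The same two helpers apply unchanged to the metric-level counts in table 4.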

Figure 3.

(A) Frequency of positive, neutral and negative controlled health information system (HIS) studies by reported HIS features. (B) Frequency of positive, neutral, and negative controlled HIS studies by reported HIS metrics. CDSS, clinical decision support systems; CPOE, computerized physician order entry; EPR, electronic patient record; LOS, length of stay.

Next, the cumulative effects by evaluation measure from the 30 reviews for the four topic areas are shown in table 4 and summarized in figure 3B. In total, 575 evaluation measures were reported in the 287 controlled HIS studies. Table 4 shows that the overall ratio of HIS metrics with positive effects is 313/575 (54.4%). Positive effects were concentrated in the care quality dimension: patient safety for medication errors (63.6%) and guideline adherence for immunization (84.6%), health screening (66.7%), tests/assessments/care (64.4%), and medications (61.8%). Under information quality, 76.4% of HIS metrics had positive effects in content accuracy, and 61.0% in completeness. Note that 244/575 (42.4%) of HIS metrics showed no significant effects, with the highest proportions of neutral results in health outcomes, adverse event detection, and resource utilization.

Table 4.

Frequency of positive, neutral and negative controlled health information system (HIS) studies by reported HIS metrics

| HIS metrics | Positive (%) | Neutral (%) | Negative (%) | Total |
|---|---|---|---|---|
| Care quality | | | | |
| Patient safety—medication errors | 42 (63.6) | 21 (31.8) | 3 (4.5) | 66 |
| Patient safety—adverse events | 0 (0.0) | 9 (100.0) | 0 (0.0) | 9 |
| Patient safety—target therapeutic ranges | 23 (51.1) | 21 (46.7) | 1 (2.2) | 45 |
| Practice standards—provider performance | 7 (46.7) | 8 (53.3) | 0 (0.0) | 15 |
| Guideline adherence—cancer screening | 34 (54.0) | 29 (46.0) | 0 (0.0) | 63 |
| Guideline adherence—health screening | 8 (66.7) | 4 (33.3) | 0 (0.0) | 12 |
| Guideline adherence—immunization | 11 (84.6) | 2 (15.4) | 0 (0.0) | 13 |
| Guideline adherence—tests/assessments/care | 29 (64.4) | 16 (35.6) | 0 (0.0) | 45 |
| Guideline adherence—medications | 34 (61.8) | 21 (38.2) | 0 (0.0) | 55 |
| Health outcomes—mortality/morbidity/LOS | 11 (31.4) | 23 (65.7) | 1 (2.9) | 35 |
| Health outcomes—physio/psychological measures | 6 (17.1) | 29 (82.9) | 0 (0.0) | 35 |
| Health outcomes—quality of life | 0 (0.0) | 5 (100.0) | 0 (0.0) | 5 |
| Subtotal | 205 (51.5) | 188 (47.2) | 5 (1.3) | 398 |
| Provider productivity | | | | |
| Efficiency—resource utilization | 7 (38.9) | 11 (61.1) | 0 (0.0) | 18 |
| Efficiency—provider time/time-to-care | 6 (50.0) | 2 (16.7) | 4 (33.3) | 12 |
| Healthcare cost | 12 (50.0) | 10 (41.7) | 2 (8.3) | 24 |
| Subtotal | 25 (46.3) | 23 (42.6) | 6 (11.1) | 54 |
| User satisfaction | | | | |
| User perception—experiences, knowledge | 1 (33.3) | 2 (66.7) | 0 (0.0) | 3 |
| Information quality | | | | |
| Content—accuracy | 55 (76.4) | 17 (23.6) | 0 (0.0) | 72 |
| Content—completeness | 25 (61.0) | 11 (26.8) | 5 (12.2) | 41 |
| Content—overall quality | 2 (28.6) | 3 (42.9) | 2 (28.6) | 7 |
| Subtotal | 82 (68.3) | 31 (25.8) | 7 (5.8) | 120 |
| Total | 313 (54.4) | 244 (42.4) | 18 (3.1) | 575 |

LOS, length of stay.

Summary of key findings

The ‘take-home message’ from this review is that there is some evidence for improved quality of care, but in varying degrees across topic areas. For instance, HIS with CPOE and CDSS were effective in reducing medication errors, but not those for drug dosing in maintaining target therapeutic ranges or for ADE monitoring, because of low signal-to-noise ratios. Reminders were effective mostly through improved adherence to preventive care guidelines. Electronic patient data were generally accurate and complete. Areas where HIS did not lead to significant improvement included resource utilization, healthcare cost, and health outcomes. However, many of these studies were not designed to assess health outcomes, or lacked the power or duration to do so properly. For provider time efficiency, four of the 12 measures showed a negative effect, with the HIS requiring more time and effort to complete tasks. Caution is needed when interpreting these findings, because organizational contexts, and the ways the HIS were designed, implemented, used, and perceived, varied widely. In some cases, the HIS was only part of a complex set of interventions that included changes in clinical workflow, provider behavior, and scope of practice.

Discussion

Cumulative evidence on HIS studies

This review extends the HIS evidence base in three significant ways. Firstly, our synopsis of the 50 HIS reviews provides a critical assessment of the current state of knowledge on the effects of HIS in medication management, health conditions, preventive care, data quality, and care process/outcome. Our concise summary of the selected reviews in supplementary online table 1 can guide HIS practitioners in planning and conducting HIS evaluation studies, by drawing on approaches used by others and comparing their results with what is already known in such areas as electronic prescribing,24 drug dosing,28 preventive care reminders,26 and EPR quality.56

Secondly, grouping the evaluation metrics from the 50 HIS reviews according to the Infoway BE Framework (which is based on DeLone and McLean's IS Success Model13) gives HIS implementers a coherent scheme for making sense of the different factors that influence HIS success. Through this review, we also found additional factors not covered by the BE Framework that warrant its further refinement (refer to table 2). These factors include having in-house systems, developers as users, integrated decision support, and benchmark practices. Important contextual factors include patient/provider knowledge, perception, and attitude; implementation; improvement; incentives; legislation/policy; and interoperability.

Thirdly, and most importantly, our meta-synthesis produced a non-overlapping corpus of 1276 HIS studies from the 50 reviews and consolidated the cumulative HIS effects in four healthcare domains using a subset of 287 controlled studies. This is a significant milestone; to our knowledge, such a consolidation has not been attempted previously. To illustrate, many of the 50 reviews were found to be subsumed by the more recent reviews of Garg et al,10 Niès et al,15 Chaudhry et al,22 and Car et al,20 which cover 100, 106, 257, and 284 HIS studies in multiple domains, respectively. Yet even with these four comprehensive reviews, it was difficult to integrate their findings meaningfully because of substantial overlap among the HIS studies they included. Their findings were also reported in different forms, making comparison even more challenging. In contrast, our organizing scheme for associating HIS features, metrics, and effects using a non-overlapping corpus, as shown in figure 3A,B, provides a concise and quantifiable way of consolidating review findings that is relevant and meaningful to HIS practitioners.
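Building a non-overlapping corpus amounts to taking the union of the studies included in each review after matching duplicates. The reconciliation procedure itself is not described in this section, so the Python sketch below is only an illustration under an assumed matching rule: a hypothetical key built from the first author's surname, the publication year, and the first five title words. It also records which reviews include each study, which is one way to quantify the kind of overlap between reviews described above. All review names, authors, and titles in it are invented placeholders.

```python
import re
from collections import defaultdict
from typing import Dict, List, NamedTuple, Set


class Study(NamedTuple):
    """Minimal citation record for a study included in a review (hypothetical fields)."""
    first_author: str  # assumed format "Surname AB": surname followed by initials
    year: int
    title: str


def study_key(s: Study) -> str:
    """Hypothetical matching key: surname + year + first five title words.

    Drops the trailing initials token from the author string and normalizes
    case and punctuation so trivially different citations match.
    """
    surname = s.first_author.rsplit(" ", 1)[0].lower()
    words = re.findall(r"[a-z0-9]+", s.title.lower())[:5]
    return f"{surname}|{s.year}|{' '.join(words)}"


def build_corpus(reviews: Dict[str, List[Study]]) -> Dict[str, Set[str]]:
    """Map each unique study key to the set of review IDs that include it."""
    corpus: Dict[str, Set[str]] = defaultdict(set)
    for review_id, studies in reviews.items():
        for s in studies:
            corpus[study_key(s)].add(review_id)
    return dict(corpus)


if __name__ == "__main__":
    # Hypothetical reviews and studies, for illustration only.
    reviews = {
        "review_A": [Study("Alpha A", 2001, "A trial of reminders"),
                     Study("Beta B", 2002, "Order entry study")],
        "review_B": [Study("Alpha A", 2001, "A Trial of Reminders!"),
                     Study("Gamma C", 2003, "Data quality audit")],
    }
    corpus = build_corpus(reviews)
    mentions = sum(len(v) for v in reviews.values())
    overlapping = sum(1 for r in corpus.values() if len(r) > 1)
    print(f"{mentions} study mentions, {len(corpus)} unique studies, "
          f"{overlapping} included in more than one review")
    # prints: 4 study mentions, 3 unique studies, 1 included in more than one review
```

A key like this can miss duplicates whose titles are cited differently, or merge distinct studies that happen to share a key; where available, identifiers such as DOIs or PubMed IDs would be more reliable matching fields.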

Recommendations to improve HIS adoption

We believe the cumulative evidence from this meta-synthesis provides the context needed to guide future HIS adoption efforts. For example, our consolidated findings suggest there is evidence of improved quality with preventive care reminders and with CPOE/CDSS for medication management. As such, one may focus on replicating successful HIS adoption efforts from benchmark institutions, such as those described in the Dexheimer et al26 and Ammenwerth et al24 reviews for reminders and e-prescribing, respectively, by incorporating similar HIS features and practices into local settings. Conversely, in drug dosing, ADE monitoring, and disease management, where the evidence from our synthesis is variable, attention may shift to redesigning HIS features/workflows and addressing the contextual barriers that have hindered adoption, as described in the van der Sijs et al,59 Bates et al,45 and Dorr et al14 reviews. The distribution of HIS studies by evaluation dimension in our meta-synthesis (refer to figure 2) shows that the areas requiring ongoing research attention are HIS technical performance, information availability, service quality, user readiness (intention to use HIS), user competency, care access/availability, and care coordination. In particular, the shift toward team-based care, as shown in the review by van der Kam et al,58 will require careful HIS implementation to facilitate effective communication and information sharing across the care continuum, which is not well addressed at present.80 Given the importance of context in HIS adoption, as suggested in the Chaudhry et al22 and Car et al20 reviews, practitioners and researchers should refer to the specific HIS studies in the corpus that resemble their own organizational settings and practices for comparison and guidance.

Drawing on this cumulative evidence, we make three recommendations to improve HIS adoption. Firstly, to emulate successful HIS benchmark practices, one must pay attention to the specific HIS features and key factors that are critical to ‘making the system workable.’ To do so, frontline healthcare providers must be engaged on an ongoing basis to ensure the HIS can be integrated into day-to-day work practice and improve their overall performance. The HIS must also be sufficiently adaptable over time as providers gain experience and insight into how best to use more advanced features such as CDSS and reminders. Secondly, there should be a planned and coordinated approach to ‘addressing the contextual issues.’ The metrics identified as extensions to the Infoway BE Framework, covering patients/providers, incentives, change management, implementation, legislation/policy, interoperability, and correlation of HIS features/effects, all represent issues that must be addressed as needed. Thirdly, one has to demonstrate return on value by ‘measuring the clinical impact.’ Evaluation should be an integral part of all HIS adoption efforts in healthcare organizations. Depending on the stage of HIS adoption, appropriate evaluation designs and metrics should be used to examine the context, quality, use, and effects of the HIS involved. For example, organizations in the process of implementing an HIS should conduct formative evaluation studies to ensure HIS–practice fit and sustained use through ongoing feedback and adaptation of both the system and its context. When an HIS is already in routine use, summative evaluation with controlled studies and performance/outcome-oriented metrics should be used to determine the impact of its use. Qualitative methods should also be included to examine subjective effects, such as provider/patient perceptions, and any unintended consequences that may have emerged.

Implications for HIS research

Given the amount of evidence already in existence, it is important to build on this knowledge without duplicating effort. Researchers interested in conducting reviews on the effects of specific HIS could use our review corpus to leverage what has already been reported and avoid repetition. Those wishing to conduct HIS evaluation studies could adopt our organizing schemes for categorizing HIS features, metrics, and effects to improve consistency and comparability across studies. The variable findings across individual studies evaluating equivalent HIS features suggest that further research is needed to understand how these systems should be designed. Even HIS features backed by strong evidence, such as CDSS in medication management, do not guarantee success and may indeed cause harm.81 Research into the characteristics of success, using such methods as participatory design,82 usability engineering,83 and project risk assessment,84 will be critical to planning and guiding practitioners toward successful implementations. Further research into the nature of system design, as suggested in the Kawamoto et al41 review (eg, usability, user experience, and contextualized process analysis), could also help to promote safer and more effective HIS design.

This meta-synthesis has shown that different methodological quality assessment instruments were applied across the reviews, and that there was considerable variability across reviews when the same studies were assessed. These instruments need to be streamlined to provide a consistent approach to appraising the quality of HIS studies. For example, the five-item quality scale of Johnston et al62 could be adopted as the common instrument, as it is already used in 10 reviews. The analysis and reporting of HIS evaluation findings in the reviews also require work. The current narrative approach to summarizing evaluation findings lacks a concise synthesis for HIS practitioners, yet more sophisticated techniques such as meta-analysis are not easy to comprehend. Further work is needed on how review findings can be organized in meaningful ways to inform HIS practice.

Review limitations

There are limitations to this meta-synthesis. Firstly, only English-language review articles in scientific journals were included; we may have missed reviews in other languages and those in the gray literature. Secondly, we excluded reviews of telemedicine/telehealth, patient systems, educational interventions, and mobile devices; their inclusion may have led to different interpretations. Thirdly, our organizing schemes and vote-counting methods for correlating HIS features, metrics, and effects were simple and may not have reflected the intricacies of specific HIS and the evaluation findings reported. Lastly, our meta-synthesis covered a wide range of complex issues and could be viewed as overly ambitious and insufficient for addressing them substantively.

Conclusions

This meta-synthesis shows there is some evidence for improved quality of care from HIS adoption. However, the strength of this evidence varies by topic, HIS feature, setting, and evaluation metric. While some interventions, such as reminders for guideline adherence in preventive care, were effective, others, notably in disease management and provider productivity, showed no significant improvement. Factors that influence HIS success include having in-house systems, developers as users, integrated decision support and benchmark practices, and addressing such contextual issues as provider knowledge and perception, incentives, and legislation/policy. Drawing on this evidence to establish benchmark practices, especially in non-academic settings, is an important step toward advancing HIS knowledge.

Supplementary Material

Acknowledgments

We acknowledge Dr Kathryn Hornby's help as the medical librarian on the literature searches.

Footnotes

Funding: Support for this review was provided by the Canadian Institutes of Health Research, Canada Health Infoway and the College of Pharmacists of British Columbia.

Competing interests: None.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

1. Berner ES, Detmer DE, Simborg D. Will the wave finally break? A brief view of the adoption of electronic medical records in the United States. J Am Med Inform Assoc 2005;12:3–7

2. Schoen C, Osborn R, Huynh PH, et al. On the front lines of care: Primary care physician office systems, experiences and views in seven countries. Health Aff 2006;25:w555–71

3. Ammenwerth E, de Keizer N. An inventory of evaluation studies of information technology in health care. Methods Inf Med 2005;44:44–56

4. Han YY, Carcillo JA, Venkataraman ST, et al. Unexpected increased mortality after implementation of a commercially sold computerized physician order entry system. Pediatrics 2005;116:1506–12

5. Del Beccaro MA, Jeffries HE, Eisenberg MA, et al. Computerized provider order entry implementation: no association with increased mortality rates in an intensive care unit. Pediatrics 2006;118:290–5

6. Nebeker JR, Hoffman JM, Weir CR, et al. High rates of adverse drug events in a highly computerized hospital. Arch Intern Med 2005;165:1111–16

7. Ash JS, Sittig DF, Dykstra RH, et al. Categorizing the unintended sociotechnical consequences of computerized provider order entry. Int J Med Inf 2007;76S:S21–7

8. Balas EA, Austin SM, Mitchell JA, et al. The clinical value of computerized information services. A review of 98 randomized clinical trials. Arch Fam Med 1996;5:271–8

9. Cramer K, Hartling L, Wiebe N, et al. Computer-based delivery of health evidence: a systematic review of randomised controlled trials and systematic reviews of the effectiveness on the process of care and patient outcomes. Alberta Heritage Foundation (Final Report), Jan 2003

10. Garg AX, Adhikari NK, McDonald H, et al. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA 2005;293:1223–38

11. Lau F, Hagens S, Muttitt S. A proposed benefits evaluation framework for health information systems in Canada. Healthcare Quarterly 2007;10:112–18

12. Van der Meijden MJ, Tange HJ, Hasman TA. Determinants of success of inpatient clinical information systems: A literature review. J Am Med Inform Assoc 2003;10:235–43

13. DeLone WH, McLean ER. The DeLone and McLean model of information system success: a ten year update. J Manag Info Systems 2003;19:9–30

14. Dorr D, Bonner LM, Cohen AN, et al. Informatics systems to promote improved care for chronic illness: a literature review. J Am Med Inform Assoc 2007;14:156–63

15. Niès J, Colombet I, Degoulet P, et al. Determinants of success for computerized clinical decision support systems integrated into CPOE systems: a systematic review. Proc AMIA Symp 2006:594–8

16. Bennett JW, Glasziou PP. Computerised reminders and feedback in medication management: a systematic review of randomised controlled trials. Med J Aust 2003;178:217–22

17. Bryan C, Austin Boren S. The use and effectiveness of electronic clinical decision support tools in the ambulatory/primary care setting: a systematic review of the literature. Inform Prim Care 2008;16:79–91

18. Buntinx F, Winkens R, Grol R, et al. Influencing diagnostic and preventive performance in ambulatory care by feedback and reminders: a review. Fam Pract 1993;10:219–28

19. Byrne N, Regan C, Howard L. Administrative registers in psychiatric research: a systematic review of validity studies. Acta Psychiatr Scand 2005;112:409–14

20. Car J, Black A, Anandan A, et al. The Impact of eHealth on the Quality & Safety of Healthcare: A Systemic Overview & Synthesis of the Literature. Report for the NHS Connecting for Health Evaluation Programme. 2008

21. Chatellier G, Colombet I, Degoulet P. An overview of the effect of computer-assisted management of anticoagulant therapy on the quality of anticoagulation. Int J Med Inf 1998;49:311–20

22. Chaudhry B, Wang J, Wu S, et al. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med 2006;144:742–52

23. Colombet I, Chatellier G, Jaulent MC, et al. Decision aids for triage of patients with chest pain: a systematic review of field evaluation studies. Proc AMIA Symp 1999:231–5

24. Ammenwerth E, Schnell-Inderst P, Machan C, et al. The effect of electronic prescribing on medication errors and adverse drug events: a systematic review. J Am Med Inform Assoc 2008;15:585–600

25. Delpierre C, Cuzin L, Fillaux J, et al. A systematic review of computer-based patient record systems and quality of care: more randomized clinical trials or a broader approach? Int J Qual Health Care 2004;16:407–16

26. Dexheimer JW, Talbot TR, Sanders DL, et al. Prompting clinicians about preventive care measures: a systematic review of randomized controlled trials. J Am Med Inform Assoc 2008;15:311–20

27. Austin SM, Balas EA, Mitchell JA, et al. Effect of physician reminders on preventive care: meta-analysis of randomized clinical trials. Proc Annu Symp Comput Appl Med Care 1994:121–4

28. Durieux P, Trinquart L, Colombet I, et al. Computerized advice on drug dosage to improve prescribing practice. Cochrane Database Syst Rev 2008;(3):CD002894

29. Eslami S, Abu-Hanna A, De Keizer N. Evaluation of outpatient computerized physician medication order entry systems: a systematic review. J Am Med Inform Assoc 2007;14:400–6

30. Eslami S, De Keizer N, Abu-Hanna A. The impact of computerized physician medication order entry in hospitalized patients: a systematic review. Int J Med Inf 2008;77:365–76

31. Fitzmaurice DA, Hobbs FD, Delaney BC, et al. Review of computerized decision support systems for oral anticoagulation management. Br J Haematol 1998;102:907–9

32. Balas EA, Krishna S, Kretschmer RA, et al. Computerized knowledge management in diabetes care. Med Care 2004;42:610–21

33. Georgiou A, Williamson M, Westbrook JI, et al. The impact of computerised physician order entry systems on pathology services: a systematic review. Int J Med Inf 2007;76:514–29

34. Handler SM, Altman RL, Perera S, et al. A Systematic Review of the Performance Characteristics of Clinical Event Monitor Signals Used to Detect Adverse Drug Events in the Hospital Setting. J Am Med Inform Assoc 2007;14:451–8

35. Hogan WR, Wagner MM. Accuracy of data in computer-based patient records. J Am Med Inform Assoc 1997;4:342–55

36. Jackson CL, Bolen S, Brancati FL, et al. A systematic review of interactive computer-assisted technology in diabetes care. J Gen Intern Med 2006;21:105–10

37. Jerant AF, Hill DB. Does the use of electronic medical records improve surrogate patient outcomes in outpatient settings? J Fam Pract 2000;49:349–57

38. Jimbo M, Nease DE Jr, Ruffin MT 4th, Rana GK. Information technology and cancer prevention. CA Cancer J Clin 2006;56:26–36

39. Jordan K, Porcheret M, Croft P. Quality of morbidity coding in general practice computerized medical records: a systematic review. Fam Pract 2004;21:396–412

40. Kaushal R, Shojania KG, Bates DW. Effects of computerized physician order entry and clinical decision support systems on medication safety: a systematic review. Arch Intern Med 2003;163:1409–16

41. Kawamoto K, Houlihan CA, Balas EA, et al. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ 2005;330:765–72

42. Liu JLY, Wyatt JC, Deeks JJ, et al. Systematic reviews of clinical decision tools for acute abdominal pain. Health Technol Assess (NHS R&D HTA) 2006;10:1–167

43. Mitchell E, Sullivan F. A descriptive feast but an evaluative famine: systematic review of published articles on primary care computing during 1980–97. BMJ 2001;322:279–82

44. Montgomery AA, Fahey T. A systematic review of the use of computers in the management of hypertension. J Epidemiol Community Health 1998;52:520–5

45. Bates DW, Evans RS, Murff H, et al. Detecting adverse events using information technology. J Am Med Inform Assoc 2003;10:115–28

46. Oren E, Shaffer ER, Guglielmo BJ. Impact of emerging technologies on medication errors and adverse drug events. Am J Health-Syst Pharm 2003;60:1447–58

47. Poissant L, Pereira J, Tamblyn R, et al. The impact of electronic health records on time efficiency of physicians and nurses: a systematic review. J Am Med Inform Assoc 2005;12:505–16

48. Randell R, Mitchell N, Dowding D, et al. Effects of computerized decision support systems on nursing performance and patient outcomes: a systematic review. J Health Serv Res Policy 2007;12:242–9

49. Rothschild J. Computerized physician order entry in the critical care and general inpatient setting: a narrative review. J Crit Care 2004;19:271–8

50. Sanders DL, Aronsky D. Biomedical applications for asthma care: a systematic review. J Am Med Inform Assoc 2006;13:418–27

51. Shea S, DuMouchel W, Bahamonde L. A meta-analysis of 16 randomized controlled trials to evaluate computer-based clinical reminder systems for preventive care in the ambulatory setting. J Am Med Inform Assoc 1996;3:399–409

52. Shebl NA, Franklin BD, Barber N. Clinical decision support systems and antibiotic use. Pharm World Sci 2007;29:342–9

53. Shiffman RN, Liaw Y, Brandt CA, et al. Computer-based guideline implementation systems: a systematic review of functionality and effectiveness. J Am Med Inform Assoc 1999;6:104–14

54. Sintchenko V, Magrabi F, Tipper S. Are we measuring the right end-points? Variables that affect the impact of computerised decision support on patient outcomes: A systematic review. Med Inform Internet Med 2007;32:225–40

55. Tan K, Dear PRF, Newell SJ. Clinical decision support systems for neonatal care. Cochrane Database Syst Rev 2008;(2):CD004211

56. Thiru K, Hassey A, Sullivan F. Systematic review of scope and quality of electronic patient record data in primary care. BMJ 2003;326:1070–4

57. Urquhart C, Currell R, Grant MJ, et al. Nursing record systems: effects on nursing practice and healthcare outcomes. Cochrane Database Syst Rev 2008;(1):CD002099

58. Van der Kam WJ, Moorman PW, Koppejan-Mulder MJ. Effects of electronic communication in general practice. Int J Med Inf 2000;60:59–70

59. Van der Sijs H, Aarts J, Vulto A, et al. Overriding of drug safety alerts in computerized physician order entry. J Am Med Inform Assoc 2006;13:138–47

60. Wolfstadt JL, Gurwitz JH, Field TS, et al. The effect of computerized physician order entry with clinical decision support on the rates of adverse drug events: a systematic review. J Gen Intern Med 2008;23:451–8

61. Hunt DL, Haynes RB, Hanna SE, et al. Effects of computer-based clinical decision support systems on physician performance and patient outcomes. JAMA 1998;280:1339–46

62. Johnston ME, Langton KB, Haynes RB, et al. Effects of computer-based clinical decision support systems on clinician performance and patient outcome. A critical appraisal of research. Ann Intern Med 1994;120:135–42

63. Kawamoto K, Lobach DF. Clinical decision support provided within physician order entry systems: A systematic review of features effective for changing clinician behavior. AMIA Annu Symp Proc 2003:361–5

64. Shekelle PG, Morton SC, Keeler EB. Costs and benefits of health information technology. Evid Rep Technol Assess (Full Rep) 2006:1–71

65. Sullivan F, Mitchell E. Has general practitioner computing made a difference to patient care? A systematic review of published reports. BMJ 1995;311:848–52

66. Tan K, Dear PRF, Newell SJ. Clinical decision support systems for neonatal care. Cochrane Database Syst Rev 2005;(2):CD004211

67. Walton RT, Dovey S, Harvey E, et al. Computer support for determining drug dose: systematic review and meta-analysis. BMJ 1999;318:984–90

68. Walton RT, Harvey E, Dovey S, et al. Computerized advice on drug dosage to improve prescribing practice. Cochrane Database Syst Rev 2001;(1):CD002894

69. Higgins JPT, Green S, eds. Cochrane Handbook for Systematic Reviews of Interventions Version 5.0.2 [updated September 2009]. The Cochrane Collaboration, 2009. Available from www.cochrane-handbook.org (accessed 20 August 2010).

70. Jaeschke R, Guyatt G, Sackett DL. Users' guides to the medical literature, III. How to use an article about a diagnostic test. A. Are the results of the study valid? Evidence-based Medicine Working Group. JAMA 1994;271:389–91

71. Bates D, Teich J, Lee J, et al. The impact of computerized physician order entry on medication error prevention. J Am Med Inform Assoc 1999;6:313–21

72. Abbrecht PH, O'Leary TJ, Behrendt DM. Evaluation of a computer-assisted method for individualized anticoagulation: retrospective and prospective studies with a pharmacodynamic model. Clin Pharmacol Ther 1982;32:129–36

73. Hales JW, Gardner RM, Jacobson JT. Factors impacting the success of computerized preadmission screening. Proc Annu Symp Comput Appl Med Care 2005:728–32

74. Horn W, Popow C, Miksch S, et al. Development and evaluation of VIE-PNN, a knowledge-based system for calculating the parenteral nutrition of newborn infants. Artif Intell Med 2002;24:217–28

75. Selker HP, Beshansky JR, Griffith JL, et al. Use of the acute cardiac ischemia time-insensitive predictive instrument (ACI-TIPI) to assist with triage of patients with chest pain or other symptoms suggestive of acute cardiac ischemia: a multicentre, controlled clinical trial. Ann Intern Med 1998;129:845–55

76. Tang PC, LaRose MP, Newcomb C, et al. Measuring the effects of reminders for outpatient influenza immunizations at the point of clinical opportunity. J Am Med Inform Assoc 1999;6:115–21

77. Thomas JC, Moore A, Qualls PE. The effect on cost of medical care for patients treated with an automated clinical audit system. J Med Syst 1983;7:307–13

78. Wexler JR, Swender PT, Tunnessen WW Jr, et al. Impact of a system of computer-assisted diagnosis. Am J Dis Child 1975;129:203–5

79. Wyatt JR. Lessons learnt from the field trial of ACORN, an expert system to advise on chest pain. In: Barber B, Cao D, Quin D, Wagner G, eds. Proceedings of the 6th World Conference on Medical Informatics. Singapore: North-Holland, 1989:111–15

80. Tang PC. Key Capabilities of an Electronic Health Record System: Letter Report. Committee on Data Standards for Patient Safety; http://www.nap.edu/catalog/10781.html (accessed 10 Nov 2009).

81. Koppel R, Metlay JP, Cohen A, et al. Role of computerized physician order entry systems in facilitating medication errors. JAMA 2005;293:1197–203

82. Vimarlund V, Timpka T. Design participation as insurance: risk management and end-user participation in the development of information system in healthcare organizations. Methods Inf Med 2002;41:76–80

83. Kushniruk AW, Patel VL. Cognitive and usability engineering methods for the evaluation of clinical information systems. J Biomed Inform 2004;37:56–76

84. Paré G, Sicotte C, Jaana M, et al. Prioritizing the risk factors influencing the success of clinical information system projects: A Delphi study in Canada. Methods Inf Med 2008;47:251–9
