Abstract

Objective

To develop and validate the Readiness for Implementation Model (RIM). This model predicts a healthcare organization's potential for success in implementing an interactive health communication system (IHCS). The model consists of seven weighted factors, with each factor containing five to seven elements.

Design

Two decision-analytic approaches, self-explicated and conjoint analysis, were used to measure the weights of the RIM with a sample of 410 experts. The weighted RIM was then validated in a prospective study of 25 IHCS implementation cases.

Measurements

An orthogonal main-effects design was used to develop 700 conjoint-analysis profiles, which varied on seven factors. Each of the 410 experts rated the importance and desirability of the factors and their levels, as well as a set of 10 different profiles. For the prospective 25-case validation, three time-repeated measures of the RIM scores were collected for comparison with the implementation outcomes.

Results

Two of the seven factors, ‘organizational motivation’ and ‘meeting user needs,’ were found to be most important in predicting implementation readiness. No statistically significant difference was found in the predictive validity of the two approaches (self-explicated and conjoint analysis). The RIM was a better predictor for the 1-year implementation outcome than the half-year outcome.

Limitations

The expert sample, the order of the survey tasks, the additive model, and basing the RIM cut-off score on experience are possible limitations of the study.

Conclusion

The RIM needs to be empirically evaluated in institutions adopting IHCS and sustaining the system in the long term.

Introduction

More healthcare organizations are adopting interactive health communication systems (IHCS), making it important to understand the factors that predict a successful implementation. This paper describes two empirical studies used to formulate and validate such a predictive model, the Readiness for Implementation Model (RIM).

Background

Interactive health communication systems (IHCS)

The major conceptualization of interactive health communication systems (IHCS) in the USA has been undertaken by the Science Panel on Interactive Communication and Health.1 The panel defines IHCS as “the operational software program or modules that interface with the end user, including health information and support web sites and clinical decision-support and risk assessment software.” ‘End user’ refers to patients and their families, and their use distinguishes an IHCS from other types of health information systems, such as those for clinicians and administrators. An IHCS supplies information, enables informed decision making, promotes healthful behaviors, encourages peer communication and emotional support, and helps manage the demand for health services.1 As IHCS become more available, they provide healthcare organizations with a valuable method to encourage patients to take a more active role in their healthcare.2–5 Several studies have reported that patients have widely accepted IHCS and benefited from a better quality of life, greater participation in healthcare, and reduced communication barriers and cost of care, regardless of race, education, or age.6–15

Implementation challenges with IHCS

Health information systems hold great promise for improving healthcare, but can also produce unwanted consequences.16–20 A failed implementation can be costly and raise cynicism about such innovations.21–24 Most research about implementing technology relates to administrative, financial, or clinical data, such as electronic medical records,25–27 computerized physician order entry,28–31 and expert clinical decision-support systems.32 Few studies have reported on implementing IHCS,33 34 although introducing this tool poses unique challenges compared to introducing other types of technology.2 For example, when an IHCS is adopted in a cancer clinic, the end-user (the patient and their family) is not an employee of the organization but a customer. This means that the change requires different communication and operational adjustments compared to those required of new technology used only by staff members.35–37 In addition, clinicians are especially influential in promoting the use of an IHCS to their patients and families, although the system changes aspects of the doctor–patient relationship, such as communication between them. Thus, adopting IHCS involves barriers and facilitators not examined previously in the literature on implementing health information technology. To our knowledge, no previous research has been designed to predict and guide IHCS implementation.

Theoretical framework

A theoretical approach to adopting IHCS can organize and guide activities in ways that increase the likelihood of a successful implementation. Three theories have guided our work on a RIM for IHCS. Diffusion of innovations is the “process by which an innovation is communicated through certain channels over time among the members of a social system.” Adopting an IHCS is an innovation for patients and the healthcare system. This theory organizes insights into adopting the innovation, such as stages of adoption, attributes of innovations and innovators, and social-structural constraints.38–42 Because we view implementing an IHCS as an organizational act and issue, organizational change theory relates to adopting IHCS as well. This theory suggests that readiness for change can ease adoption, which requires modifications in the behavior of organizational members and often involves realignments of departmental and personnel responsibilities.43–45 Finally, an organization's policies and practices need to support putting an IHCS into practice after the adoption decision has been made. Implementation theory describes the phases of the process, from a formal introduction to the institutionalization of the change. It also addresses the extent to which an innovation fits within the organization's infrastructure and climate.46 47

The Readiness for Implementation Model (RIM)

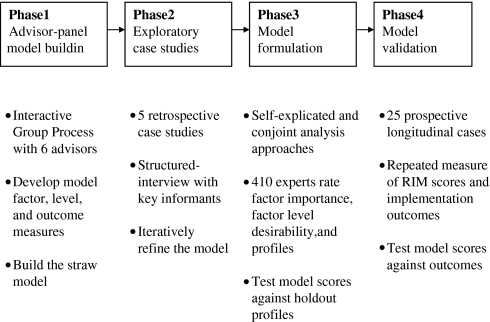

Guided by the three theories, the RIM was conceptualized, developed, and validated in four phases (figure 1). Phases 1 and 2 are briefly described below (see online appendix A available at www.jamia.org for an in-depth description).48 Phases 3 and 4 are the main subject of this paper.

Figure 1.

The RIM development process. The headings describe the phases of the process and beneath are descriptions of the key components in each phase. Phases 3 and 4 are the focus of this paper.

Phase 1: Advisor-panel model building

A panel of six advisors developed a straw model of elements likely to relate to implementation success, along with two or three descriptions, called ‘element levels,’ of how strongly each element influences implementation. The element levels represent a continuum from strong positive influence to minor influence to strong negative influence.

Phase 2: Exploratory case studies

We refined the straw model by interviewing key informants at five sites where IHCS had been adopted, asking about implementation barriers and facilitators. When the interviews at one site were finished, we modified the model and used the revision in the interviews at the next site.49 We concluded with a model that has seven higher-layer factors and 42 elements without weights (table 1).

Table 1.

The seven RIM factors with their definitions and elements

| Model factor | Definition | Elements |
| --- | --- | --- |
| Organizational environment | State of the institution | |
| Organizational motivation | Extent to which the innovation fits with institutional goals, resources, and support | |
| Meeting user needs | Quality of the innovation and the availability of help | |
| Promotion | Presence and influence of institutional champions and communication channels | |
| Implementation | Robustness of implementation strategies | |
| Fit in department | Extent to which the innovation fits with departmental processes | |
| Awareness and support | Ongoing internal marketing and enthusiasm for the innovation | |

Model formulation (phase 3)

In phase 3, 410 experts quantified the weights of RIM factors in two approaches, self-explicated (SE) and conjoint analysis (CA). Both approaches are popular among marketers for measuring customer preferences, and both have been used increasingly to answer questions about healthcare preferences and resource allocation.50–61 We used the two approaches to produce decision-making information which will guide organizational strategies for implementing IHCS.62–64 The validity, reliability, and predictive power of both approaches are well established.65–67

The SE approach asks respondents to rate (1) how desirable each factor is on a scale of 0–100, and (2) how important each factor is. To determine importance, respondents typically allocate 100 points across the factors. Overall preferences are obtained by multiplying each factor's importance number by the desirability rating of its level.68 The SE approach is substantially easier to use than the CA approach for both respondents and investigators.69
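The SE calculation described above can be sketched in a few lines. This is a minimal illustration, not the scoring procedure used in the study: the function name, the three-factor example, and all numbers are hypothetical, and summing the products and dividing by 100 (to keep the score on a 0–100 scale) are assumptions consistent with an additive model.

```python
def self_explicated_score(importance, desirability):
    """Weighted additive SE score on a 0-100 scale.

    importance:   factor -> points allocated by the respondent (must total 100)
    desirability: factor -> 0-100 desirability rating of the factor's level
    Summation and division by 100 are assumptions of this sketch.
    """
    if set(importance) != set(desirability):
        raise ValueError("importance and desirability must cover the same factors")
    total = sum(importance.values())
    if total != 100:
        raise ValueError(f"importance points must total 100, got {total}")
    return sum(importance[f] * desirability[f] for f in importance) / 100


# Hypothetical respondent using only three factors for brevity.
importance = {"organizational motivation": 40, "meeting user needs": 35, "promotion": 25}
desirability = {"organizational motivation": 80, "meeting user needs": 60, "promotion": 20}
score = self_explicated_score(importance, desirability)
print(score)
```

A factor with many importance points but a low desirability rating pulls the score down more than a lightly weighted factor in the same condition, which is the behavior the weighting is meant to capture.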

Conjoint analysis was developed in mathematical psychology and has a strong theoretical basis.58 59 70 71 Respondents react to a set of hypothetical profiles. Each profile realistically describes a product, service, or situation; respondents give ratings of or make choices based on the profiles. The goal of CA is to determine which combination of factors most influences respondents' decisions. This approach yields more realistic information than the SE approach,50 but it requires creating and evaluating many hypothetical profiles, which can be overwhelming with a large number of factors.

Phase 3 study methods

Self-explicated and conjoint analysis survey development procedures (four steps)

Identifying factors

The factors and elements developed in phases 1 and 2 were used to construct the SE and CA surveys. For the SE approach, experts rated the importance of the factors by allocating 100 points across the seven factors.

Assigning factor performance level

In phase 1, the advisory panel wrote two or three descriptions of the potential of each element to influence implementation. In phase 3, the seven factors were described in four levels, from the least desired condition to the most, by assigning to each level a different combination of element descriptions. In the least desired condition, all the elements in the factor have no influence or negative influence on implementation. In the most desired condition, all the elements have a positive influence on implementation. The other two factor levels (medium high and medium low) have elements of medium intensity between the most and the least desired conditions. The most desired level for the factor ‘organizational environment’ is the one in which the six elements are all positive: a past history of successful innovation, innovative leaders, and so on. For the SE approach, experts would rate the factor levels on a 0–100 desirability scale.

Developing hypothetical profiles for the conjoint analysis

This step involved designing profiles that described a hypothetical organization's implementation efforts by using different combinations of the factor levels. Respondents would rate the profiles by weighing the factors jointly. The number of profiles that can be constructed from a set of seven factors, each with four levels, is 4⁷, or 16 384. We reduced this to a more manageable 700 profiles using a fractional factorial design to generate an orthogonal array. We also produced a set of 120 profiles, which we called ‘holdout profiles,’ to use in assessing the validity of the estimated weights from both the SE and CA approaches (see online appendix B available at www.jamia.org for a sample profile). Five experts rated each of the 820 profiles (a within-profile design), and each expert was given a different set of 10 profiles (a within-subject design). This required a total of 410 experts and 4100 profile scores (5×820=4100 profile scores and 4100/10=410 experts).
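The design arithmetic in this step can be checked directly. The sketch below only reproduces the counts stated in the text; the variable names are ours.

```python
# Size of the full factorial: seven factors, four levels each.
levels_per_factor = 4
n_factors = 7
full_factorial = levels_per_factor ** n_factors

# Profiles actually used, as stated in the text.
estimation_profiles = 700
holdout_profiles = 120
ratings_per_profile = 5    # five experts rate each profile
profiles_per_expert = 10   # each expert rates ten profiles

total_profiles = estimation_profiles + holdout_profiles
total_ratings = total_profiles * ratings_per_profile
experts_needed, leftover = divmod(total_ratings, profiles_per_expert)

print(full_factorial, total_profiles, total_ratings, experts_needed, leftover)
```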

Deciding profile rating preference

To make the model useful for predicting sustainability, we asked each expert how likely the hypothetical organization was to continue using the technology after the initial implementation. They responded by giving a ‘% chance,’ such as a 70% chance. These responses were used to derive the factor weights for the CA (700 profiles) and to cross-validate both the SE and CA models (120 profiles).

Expert sample eligibility

Members of the American Medical Informatics Association and the Society of Behavioral Medicine were invited by mail to participate in the project because of their potential expertise in implementing IHCS. We sought individuals in these categories: (1) corporate executive involved in approving and/or securing resources for an IHCS; (2) department manager with overall responsibility for implementing an IHCS; (3) champion of an IHCS who has pushed to get it implemented; (4) front-line staff person who had some responsibility for implementing an IHCS; and (5) academic or consultant who has studied or offered advice on implementations.

Expert data analysis

Self-explicated approach analysis

Using Pearson's correlation, we first carried out a consistency check to confirm that the order of factor-level desirability ratings would correspond to the order of their theoretical readiness intensity levels.56 We computed the relative importance of each factor in percentage terms in order to compare SE results with CA results.

Conjoint approach analysis

Regression techniques are commonly used to analyze CA responses.56 72 We used stepwise regression for parameter fitting, assuming a linear function of the readiness factors. This procedure has often been employed in healthcare research using the CA approach.73–76 Expert ratings for the 700 profiles were used as the dependent variable. Stepwise linear regression with dummy coding was performed to generate factor weights (p for entry <0.05). The β weight of the least desired level for each factor was set at zero; the remaining levels were estimated in contrast to zero. We computed the relative importance of each factor in percentage terms by taking the range of weights for any factor (highest minus lowest), dividing it by the sum of all the weights, and multiplying by 100.75
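The relative-importance step can be illustrated as follows. This is a sketch under stated assumptions rather than the study's code: the β weights are invented, the factor names are placeholders, and the denominator is taken to be the sum of the per-factor ranges, which is the reading under which the percentages total 100.

```python
def relative_importance(part_worths):
    """Percent importance of each factor from its level weights.

    part_worths: factor -> list of beta weights, one per level, with the
    least desired level fixed at 0 (dummy-coding reference).
    Assumption: the denominator is the sum of the ranges across factors.
    """
    ranges = {f: max(w) - min(w) for f, w in part_worths.items()}
    total = sum(ranges.values())
    if total == 0:
        raise ValueError("all factors have zero range; importance is undefined")
    return {f: 100 * r / total for f, r in ranges.items()}


# Hypothetical weights for three four-level factors.
part_worths = {
    "factor A": [0.0, 4.0, 8.0, 12.0],
    "factor B": [0.0, 2.0, 5.0, 6.0],
    "factor C": [0.0, 1.0, 1.5, 2.0],
}
importance_pct = relative_importance(part_worths)
print(importance_pct)
```

Because only the range enters the calculation, a factor whose best and worst levels produce very different ratings is treated as important regardless of how the intermediate levels are spaced.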

Holdout profiles for cross-validation analyses

We used the 120 holdout profiles to carry out a preliminary evaluation of both approaches. Spearman's ρ correlations were conducted to test the predictive validity of calculated model scores from both approaches.
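For readers unfamiliar with the statistic, a rank correlation between model scores and observed ratings can be computed as in the sketch below. It is a plain-Python illustration with made-up data for five profiles; it does not reproduce the study's analysis, and the function names are ours. Ties receive average ranks, and the coefficient is the Pearson correlation of the two rank vectors.

```python
def average_ranks(values):
    """1-based ranks, with tied values sharing the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def spearman_rho(x, y):
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long sequences with at least 2 items")
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical model scores and observed '% chance' ratings for five profiles.
predicted = [62.0, 48.5, 75.0, 30.0, 55.0]
observed = [70, 50, 80, 35, 40]
rho = spearman_rho(predicted, observed)
print(round(rho, 3))
```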

Phase 3 study results

Expert responses

Each wave of invitations to experts had an average participation rate of 33%. Of the 314 individuals who returned opt-out cards, 67% reported that they did not fit into the expert categories and the rest did not have time to participate. We mailed 33 waves of invitations, reached 1490 individuals, and sent 2079 survey packets (some individuals were sent the same packet twice) to obtain responses from 410 experts. Experts whose surveys had missing values were contacted so that the data set would be complete.

Characteristics of experts

Most participants reported that they were implementation team members (73%) and champions (61%). Participants could give multiple responses. For example, one person could be both a champion and a member of the implementation team. As to their roles within organizations, participants' top three responses were clinician (50%), academic researcher (50%), and department manager (25%). Again, participants could give multiple responses.

Factor importance weights from the SE and CA approaches

The internal consistency check showed that the SE factor level ratings were consistent with their theoretical readiness intensity (r>0.90, p<0.001). In the CA approach, all factors were retained in the regression model (p<0.05). Factor importance weights separately derived by the SE and CA approaches are shown in table 2. Both approaches identified ‘organizational motivation’ and ‘meeting user needs’ as most important in predicting successful implementation. These factors were twice as important as some other factors. ‘Awareness and support’ was identified as a relatively more important factor by the CA. ‘Promotion’ was deemed relatively less influential.

Table 2.

Relative importance of the RIM factors as percent of total weights

| Factor: mean importance (%) | Self-explicated | Conjoint analysis |
| --- | --- | --- |
| Organizational environment | 14 | 11 |
| Organizational motivation | 16 | 22 |
| Meeting user needs | 21 | 18 |
| Promotion | 10 | 7 |
| Implementation | 15 | 10 |
| Fit in department | 11 | 14 |
| Awareness and support | 13 | 19 |
| Internal validity of 120 holdout profiles: Spearman's r correlation between predictive scores and observed ratings | 0.81 (p<0.001) | 0.85 (p<0.001) |

Holdout profile cross-validation results

For both approaches, internal cross-validation showed that the observed ratings for the 120 holdout profiles and the calculated predictive model scores were highly correlated (r≥0.81, p<0.001; table 2). The CA model was constructed as a least-square fit to the 700 profile ratings, while the SE model was developed independently without profile information. We would expect, therefore, that the CA would perform better than the SE approach in this comparison using the same set of 120 profiles.

Model validation (phase 4)

To see how well the RIM reflects actual implementations, in phase 4 we conducted a 1-year longitudinal study at 25 sites that were implementing IHCS. We used the study to validate the weights developed from the SE and CA approaches and to compare the effectiveness of the two analytic techniques.

Phase 4 study methods

Recruitment and data collection

Criteria for organizations to be included in the study were: (1) the IHCS would allow patients to be more actively involved in their healthcare; (2) organizations were providing the system to their patients; and (3) organizations were just starting to implement the system. At each site, we recruited representatives of a cross-section of roles (eg, administrators who pushed the project, implementation team members, front-line staff). To observe changes in implementation readiness over time, respondents were surveyed at three time points (0, 6, and 12 months). At each point, we asked participants to rate how their organization was functioning on the 42 RIM elements. Time 0 was the point when a formal decision to adopt an IHCS was made but actual implementation had not yet started.

Implementation outcome questions

We also asked respondents questions about the implementation at 6 and 12 months. We had learned through our interviews in phase 2 that organizations defined success in an implementation not simply by the number of patients using the IHCS, but by the attitude toward the technology and other factors. As a result, our six outcome questions asked, for example, whether respondents were glad the IHCS was available and if it was used in other parts of the organization. We also conducted a factor analysis to determine how the questions related to one another.

Site-level consistency analysis

Some of the sites recruited for this phase 4 study were different departments or locations in the same organization. Nonetheless, we treated each site as an individual case. We made this decision because sites within a single organization often differ greatly in their environment, thus producing different needs and circumstances. They may also differ widely in support from management, climate for implementation, and other factors. Sometimes different sites within one organization were adopting different IHCS. To estimate internal consistency within each site, we calculated the percentage of agreement across respondents.
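The text does not spell out how the percentage of agreement was computed, so the sketch below shows only one plausible operationalization, labeled as an assumption: for each element, take the share of respondents who chose the most common response, then average those shares across elements. The function name and the data are hypothetical.

```python
from collections import Counter


def site_agreement(responses):
    """Mean modal agreement (in %) across elements for one site.

    responses: list of per-respondent lists, each holding the chosen
    response level for every element, in the same element order.
    Assumption: agreement on an element = share of respondents who
    picked that element's most common response.
    """
    if not responses:
        raise ValueError("at least one respondent is required")
    n_respondents = len(responses)
    n_elements = len(responses[0])
    if any(len(r) != n_elements for r in responses):
        raise ValueError("every respondent must rate the same elements")
    shares = []
    for e in range(n_elements):
        counts = Counter(r[e] for r in responses)
        modal_count = counts.most_common(1)[0][1]
        shares.append(modal_count / n_respondents)
    return 100 * sum(shares) / n_elements


# Hypothetical site: four respondents, three elements (the RIM has 42).
site = [
    [1, 2, 3],
    [1, 2, 2],
    [1, 3, 3],
    [2, 2, 3],
]
agreement = site_agreement(site)
print(agreement)
```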

Validation data analysis

To estimate how well the model captured implementation, we used a Spearman's ρ non-parametric test to compare the RIM prediction and the outcome at 6 and 12 months. The closer the measures, the more accurate we expected the model to be. We were also interested in the long-term predictive power of the model. Hence we had three prediction–outcome combinations: prediction at the start versus outcomes at months 6 and 12; prediction at month 6 versus outcome at month 12; and prediction at the start and month 6 versus outcome at month 12. Regression analysis was used to evaluate the predictive power of the model.
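As an illustration of the regression step for a single predictor, the proportion of outcome variance explained (R²) can be computed as below. This is a sketch with invented site-level values, not the study's data, and it covers only the one-predictor case (for example, the month 0 score predicting the month 12 outcome).

```python
def r_squared(x, y):
    """R-squared of an ordinary least-squares line y = a + b*x."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long sequences with at least 2 items")
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    ss_res = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, y))
    ss_tot = sum((b - my) ** 2 for b in y)
    return 1 - ss_res / ss_tot


# Hypothetical site means: RIM score at month 0 and outcome (0-5) at month 12.
rim_m0 = [50, 60, 70, 80]
outcome_m12 = [2.0, 3.0, 3.0, 4.0]
r2 = r_squared(rim_m0, outcome_m12)
print(round(r2, 3))
```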

To judge an IHCS implementation as successful or not according to the model, we needed a cut-off level. The cut-off of the implementation outcomes, which were assessed on a 0–5 scale, was set at 3.5. For the RIM scores, 70 (out of a maximum of 100) was set as the cut-off. We chose this high figure to minimize the number of falsely identified successful IHCS adoptions. We examined the long-term success rates (12-month outcomes) between sites with low RIM scores and those with high RIM scores.
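The two cut-offs divide sites into four groups, which can be tallied as in the following sketch. The cut-off values come from the text; treating a score exactly at the cut-off as successful, the group labels, and the five example sites are assumptions made for illustration.

```python
RIM_CUTOFF = 70.0      # RIM score, maximum 100 (from the text)
OUTCOME_CUTOFF = 3.5   # composite outcome, 0-5 scale (from the text)


def classify_sites(sites):
    """Tally (rim_score, outcome) pairs into the four prediction groups.

    Assumption: scores equal to a cut-off count as at or above it.
    """
    counts = {
        "correct_success": 0,   # high RIM score, successful outcome
        "under_predicted": 0,   # low RIM score, successful outcome
        "correct_failure": 0,   # low RIM score, unsuccessful outcome
        "false_success": 0,     # high RIM score, unsuccessful outcome
    }
    for rim_score, outcome in sites:
        predicted = rim_score >= RIM_CUTOFF
        observed = outcome >= OUTCOME_CUTOFF
        if observed and predicted:
            counts["correct_success"] += 1
        elif observed:
            counts["under_predicted"] += 1
        elif predicted:
            counts["false_success"] += 1
        else:
            counts["correct_failure"] += 1
    return counts


# Hypothetical sites: (baseline RIM score, month 12 outcome).
sites = [(82.0, 4.2), (65.0, 3.8), (74.0, 3.0), (40.0, 2.1), (91.0, 4.8)]
counts = classify_sites(sites)
print(counts)
```

Raising the RIM cut-off moves sites out of the high-score groups, which reduces falsely predicted successes at the cost of under-predicting more of the sites that do succeed.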

Phase 4 study results

Healthcare organization recruitment results

We contacted more than 50 healthcare organizations about the study and recruited 25 sites from 15 healthcare organizations. The organizations included managed-care organizations, social-service agencies, university medical centers, and inner-city clinics. The organizations were spread across the country.

Implementation outcome questions

Using data from months 6 and 12, an exploratory factor analysis of the six outcome questions revealed a single underlying construct. As a result, we used the average score of the six outcome questions as a composite measure for the rest of the outcome evaluation.

Site-level consistency results

The number of respondents per site ranged from 3 to 16. Across the 25 sites, the average percentage of element-rating agreement ranged from 65% to 80%. This supported aggregating individual responses to the site level for analysis. Aggregated site means were adopted for the rest of the analysis.

Validation data analysis results

The correlation between the RIM predictive scores using both approaches and outcomes for the concurrent assessment ranged from 0.65 to 0.70 (p<0.001), demonstrating the accuracy of both models. As for long-term prediction, the model scores at month 6 were more accurate than at month 0 in predicting outcomes at month 12. The results of using both month 0 and month 6 model scores (adjusting for baseline variability) to predict month 12 outcomes were virtually the same as using month 0 data alone, without any significant R² change. The model is a slightly better predictor of 1-year outcomes than half-year outcomes, perhaps because it takes time for a picture of implementation to emerge. The predictive validity of the SE approach for month 12 was slightly better than for the CA approach, but the difference was not statistically significant (table 3).

Table 3.

Comparison of the RIM predictive score and organization self-reported outcome in 25 implementation cases

| Spearman's r correlation for accuracy analysis | Self-explicated | Conjoint analysis |
| --- | --- | --- |
| M6 RIM predictive score versus M6 organization self-reported outcome | 0.65 (p<0.001) | 0.66 (p<0.001) |
| M12 RIM predictive score versus M12 organization self-reported outcome | 0.68 (p<0.001) | 0.70 (p<0.001) |
| Regression R² for long-term prediction | | |
| M0 RIM predictive score (independent variable) versus M6 organization self-reported outcome (dependent variable) | 0.51 | 0.50 |
| M0 RIM predictive score (independent variable) versus M12 organization self-reported outcome (dependent variable) | 0.51 | 0.50 |
| M6 RIM predictive score (independent variable) versus M12 organization self-reported outcome (dependent variable) | 0.55 | 0.54 |
| M0+M6 RIM predictive scores (independent variables) versus M12 organization self-reported outcome (dependent variable) | 0.57 | 0.56 |

M indicates month.

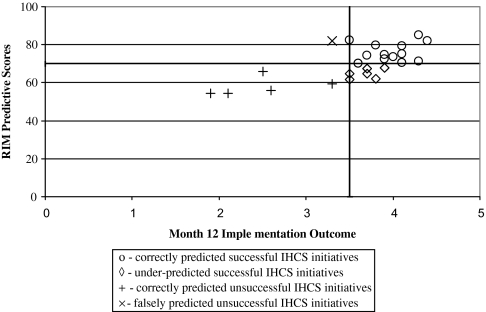

Because we found no significant difference between the two approaches, we compared only the SE baseline scores to the outcomes at month 12. The model correctly predicted 68% of the successful IHCS implementations and under-estimated 32% of the successful ones (figure 2). Further, it correctly identified 83% of the unsuccessful IHCS initiatives and falsely identified 17% of the unsuccessful IHCS initiatives as potentially positive.

Figure 2.

RIM predictive scores compared with perceived implementation success. The bolded lines indicate the cut-off points. ○, Correctly predicted successful IHCS initiatives; ◊, under-predicted successful IHCS initiatives; +, correctly predicted unsuccessful IHCS initiatives; ×, falsely predicted unsuccessful IHCS initiatives.

Discussion

Study findings validated the RIM. It appears that the conceptual development, mathematical foundation, and data collection that built the RIM produced the desired results.

Comparison of the two decision-analytic modeling techniques

Although CA has a theoretical advantage over SE in predictive validity,58 59 our analysis did not find that the two approaches produce different results. Sattler and Haensel-Borner conducted a comprehensive analysis of empirical studies of these approaches and failed to confirm the superiority of CA.50 The majority of the comparisons they studied found either non-significant differences between the methods or higher predictive validity for the SE approach.

Characteristics of the RIM

Several unique characteristics of the RIM make it a useful tool. First, it is quick and easy to use; respondents can complete the survey in about 15 min. Second, the response options for each question (element) in the RIM are exclusive descriptions rather than Likert-type scales. Exclusive descriptions force respondents to choose an answer that best describes their organization's readiness for each of the 42 model elements. We believe that this process also fosters in respondents a better understanding of their organization's readiness to change. Answering with a number from 1 to 10 on a Likert-type scale would be less likely to stimulate this understanding and could easily result in a ceiling effect. Third, our RIM allows the seven factors to be weighted differently. Stablein et al examined the readiness of 17 hospitals to implement computerized physician order entry and concluded after additional research that some of the readiness indicators should be more heavily weighted than others.29 With the pre-calculated score conversion table, the global RIM scores are very convenient to compute with factor weights incorporated (see online appendix C available at www.jamia.org for the RIM survey and score conversion table).

Application of the RIM

The RIM clearly identifies factors critical to implementing an IHCS. The model provides a way to determine whether the likelihood of success warrants the effort required. An organization can also use the RIM to assess its own strengths and barriers to adoption and prepare for implementation. In addition, the model can be used to monitor progress over time to keep the effort on track and measure the effect of actions taken between evaluations. The factor weights can be used to help allocate limited resources to produce the greatest chance of success.

We suggest that at least five to seven individuals, including members of the implementation team and the project champion, complete the RIM survey to assess how their organization functions on each element. The RIM assessment should take place while the organization is deciding whether to adopt an IHCS, during the implementation planning, 6 months after implementation begins, and annually thereafter.

Study limitations

While the model validation is encouraging, results should be considered in light of study limitations. First, 50% of the 410 external experts used in phase 3 identified themselves as working in academic settings, which may bias the findings. All experts were drawn from American Medical Informatics Association and Society of Behavioral Medicine members, possibly explaining the high percentage of academic respondents and suggesting potential bias in sample selection. Second, the order of the SE and CA tasks may have affected the results. Each expert first completed the SE task and then did the profile ratings for the CA task. Participants might respond to the profiles more cautiously to create answers consistent with their ratings in the SE task. This behavior might also contribute to the small difference found in the results of the two approaches. Completing two decisional tasks in one survey also increased the cognitive burden on participants and may have affected their ratings. Future studies should focus on the effect of the task order and minimize the cognitive burden on participants. Third, in theory, decisional factors should be mutually independent in CA experiments. The outcome in one factor should not influence the outcome in another. In our study, some interaction between factors might be present. For instance, ‘internal awareness and support’ might be enhanced when ‘promotion’ of the IHCS is pushed. We acknowledge that an additive model such as ours prevents estimation of how factors interact and may therefore be considered inaccurate. But using an additive model works well in practice. Using multilevel analysis would make data analysis and interpretation much more complicated while hardly improving the fit of the model.77 Fourth, we selected 70 as the RIM score cut-off (used to minimize falsely identified successful implementations) based on our experience in developing multi-attribute predictive models of the efforts required in successful implementations. A ROC curve analysis is needed in future research.78

Future direction

First, the model would benefit from research with a larger sample for a longer time. Because of resource limits, we were able to follow 25 sites for just 1 year. Observing implementation over at least 2 years would be much better (our advisory panel said 2 years are needed to show sustainability). The sensitivity of the RIM to change over time also needs validation in a longer study. Second, although the RIM was assessed in 25 sites prospectively for its predictive validity, future studies are needed to examine the effectiveness of the RIM in guiding implementation and improving outcomes. Such studies will use baseline and process assessments by the RIM to develop tailored implementation strategies.

Conclusion

Installing an IHCS from adoption through institutional acceptance requires careful attention to implementation. The RIM has great potential for helping institutional planners measure an organization's readiness for IHCS adoption and implementation.

Acknowledgments

We thank Bobbie Johnson for her editorial assistance.

Footnotes

Funding: This research was supported by the Agency for Healthcare Research and Quality (AHRQ R01 HS10246).

Competing interests: None.

Ethics approval: This study was conducted with the approval of the University of Wisconsin, Madison, Wisconsin.

Provenance and peer review: Not commissioned; not externally peer reviewed.
