Abstract

We investigated the training effects and transfer effects associated with two approaches to cognitive activities (so-called “brain training”) that might mitigate age-related cognitive decline. A sample of 78 adults between the ages of 50 and 71 completed 20 one-hour training sessions with the Wii Big Brain Academy software over the course of one month, and in a second month, completed 20 one-hour reading sessions with articles on four different current topics (order of assignment was counterbalanced for the participants). An extensive battery of cognitive and perceptual speed ability measures was administered before and after each month of cognitive training activities, along with a battery of domain-knowledge tests. Results indicated substantial improvements on the Wii tasks, somewhat less improvement on the domain-knowledge tests, and practice-related improvements on 6 of the 10 ability tests. However, there was no significant transfer-of-training from either the Wii practice or the reading tasks to measures of cognitive and perceptual speed abilities. Implications of these findings are discussed in terms of adult intellectual development and maintenance.

Introduction

Whether intelligence increases, is maintained, or declines with age in adulthood has been a topic of speculation, theory, and empirical research since the early part of the last century (e.g., Hsiao, 1927). When it comes to changes within individuals, longitudinal data, such as those described by Schaie (1996, 2005), provide a consistent view. On average, as people reach middle-age and beyond, there are significant declines in abilities associated with speeded information processing (such as measures of fluid intelligence [Gf] -- see, e.g., Horn, 1989; Salthouse, 1996). However, with increasing age, there are more modest declines, or at least the declines come later, for domain knowledge and verbal abilities (e.g., measures of crystallized intelligence [Gc], broadly considered -- for a review, see Ackerman, 2008).

There has been a recent surge in interest and controversy around whether particular activities may help older adults prevent loss of cognitive function with increasing age. This issue has been addressed both in the scholarly literature (e.g., see Günther, Schäfer, Holzner, & Kemmler, 2003; Hultsch, Hertzog, Small, & Dixon, 1999; Mahncke, et al., 2006; Salthouse, 2006, 2007; Schooler, 2007; also see the Special Section on Cognitive Plasticity in the Aging Mind in Psychology and Aging, Mayr [Ed.], 2008), public policy literature (Hertzog, Kramer, Wilson, & Lindenberger, 2009), and recently has received substantial attention in the popular press (e.g., see Begley, 2007; Beulluck, 2006; Scott, 2007). From a scientific perspective, the fundamental issue is a confirmation or refutation of the “use it or lose it” concept. That is, the question is whether there are activities that may attenuate or stop age-related cognitive decline. To date, however, there are many claims made, but relatively few randomized controlled studies, and no studies where extensive ability measurements are conducted to assess transfer of training. In addition, there has been no direct contrast of the effects of cognitive engagement for domain knowledge with the effects of cognitive engagement for fluid intellectual abilities. The study reported in this paper attempts to provide further evidence on the use it or lose it concept, using a within-subjects design, practice on both cognitive processing tasks and reading for domain knowledge, and a broad assessment of cognitive and perceptual speed abilities.

Direct Training

At the outset of the discussion, it is important to note that, generally speaking, whether older adults can learn new tasks is not controversial. For example, in an early study of cognitive training, Schaie and Willis (1986) provided adults between 64 and 95 years of age with 5-hour training programs for inductive reasoning or spatial orientation. They found direct effects on performance of these two abilities on later tests that included retesting after an additional 14 years. These results are a striking demonstration that brief training on measures of intellectual abilities can have lasting effects. Although it is clear that older adults can learn new skills, a meta-analysis of training studies indicated that older adults tend to acquire new skills more slowly and less effectively than younger adults (Kubeck et al., 1996). Rather, the most controversial aspect of the use it or lose it concept is whether training adults on cognitively-intensive tasks results in marked transfer-of-training to other tasks that involve cognitive/intellectual content. Specifically, the central question is: Does training have any positive influences beyond performance on the training tasks themselves?

Transfer of Training

The topic of transfer-of-training has been one that has vexed experimental and educational psychologists for much of the last century. In a series of early studies, Thorndike and Woodworth (1901) came to the conclusion that practice on mental tasks resulted in relatively little impact on the performance and learning of other tasks, unless the tasks shared “identical elements,” meaning that the tasks had substantial degrees of underlying common determinants of performance. Research and applications in the mid-20th century resulted in general perspectives on transfer that provided a more operationally-based approach, such as Osgood’s transfer surface approach (Osgood, 1953). More recent approaches (e.g., Singley & Anderson, 1989) have attempted to model transfer of training within larger cognitive systems (e.g., Anderson’s ACT model). Although it can be said that transfer of training is better understood today than it was in 1901, problems remain in predicting when transfer occurs, and how much transfer will be obtained from a specific training program. There is still no definitive treatment that allows for determination of whether a particular transfer task represents “near transfer” or “far transfer.”

Notwithstanding these limitations, there are two central points that can be made from the body of research and theory on transfer. First, although it is possible to assess transfer with a variety of different designs (e.g., see Gagné, Foster, & Crowley, 1948), one cannot validly assess transfer without the use of some kind of control or comparison group, because practice effects on a transfer task itself will be confounded with the effects of transfer. Second, it is more difficult to demonstrate transfer across tasks that appear to be relatively different on the surface, in comparison to tasks that have a high degree of deep and surface similarity. Thus, the a priori hypothesis in most training for broad transfer situations should be an expectation of a null transfer effect. In a recent review of the literature on training and transfer in adult samples, this overarching perspective was well documented by Green and Bavelier (2008, see also the discussion by Mayr, 2008).
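The confound described above can be made concrete with a small sketch. The function name `net_transfer` and all scores below are hypothetical illustrations, not data from any study; the sketch only shows why a raw pretest-to-posttest gain in a trained group cannot be read as transfer until the control group's retest gain is subtracted.

```python
from statistics import mean

def net_transfer(train_pre, train_post, control_pre, control_post):
    """Gain of the trained group beyond the control group's retest gain."""
    train_gain = mean(train_post) - mean(train_pre)
    control_gain = mean(control_post) - mean(control_pre)
    return train_gain - control_gain

# Hypothetical transfer-test scores (illustrative only).
train_pre, train_post = [20, 22, 24], [25, 27, 29]
control_pre, control_post = [21, 22, 23], [26, 27, 28]

# The trained group improves, but so does the untrained control group.
raw_gain = mean(train_post) - mean(train_pre)
net = net_transfer(train_pre, train_post, control_pre, control_post)
print("raw gain in trained group:", raw_gain)
print("gain beyond control group:", net)
```

In this made-up case the trained group's apparent improvement is entirely matched by the control group, so the estimate of transfer is zero even though the raw gain is positive.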

Transfer of Training in Adults

In the most stark contrast of views on the question of the benefits of cognitive training in adults, Salthouse (2006, 2007) reviewed the existing experimental literature and concluded that although direct effects of practice or training may be found, the evidence for transfer beyond the specific tests or tasks was dubious at best. In response, Schooler (2007) argued that some studies show evidence of transfer beyond the tasks that received practice or training (e.g., studies by Sacynski, Margrett, & Willis, 2004; Dunlosky, Kubat-Silman, & Hertzog, 2003; and Edwards et al., 2002, 2005). Although none of these experiments demonstrated substantial transfer from the learning of specific tasks to more general changes in broad intellectual abilities, these studies share two methodological limitations. First, the samples for these studies included adults who were over the age of 65, and as old as 95. This age group may represent a band of age levels where acquiring new skills may be more limited, in comparison to adults who are in late middle age (see, e.g., the discussion by Hertzog, et al., 2009). Second, the assessments of far transfer to traditional abilities (e.g., Gf and Gc abilities -- see Horn, 1989; or perceptual speed [PS] abilities -- see Ackerman & Cianciolo, 2000) are based on an extremely limited sampling of abilities. The determination of ability factors by multiple measures is a hallmark of differential psychology research (e.g., see Carroll, 1993; Humphreys, 1962), yet is often overlooked in basic experimental investigations. In order to provide adequate assessments (e.g., reliable and valid) of these abilities, it is generally advised that at least three good reference or ‘marker’ tests be administered (e.g., see Mulaik, 1972).

With this as background, it is important to point out that there have been several recent investigations that have indicated the presence of transfer effects in adults. Perhaps the most promising results have been found in the enhancement of visual skills (for reviews, see Achtman, Green, & Bavelier, 2008; Green, Li, & Bavelier, 2009) and processing speed (see Dye, Green, & Bavelier, 2009 for a review). Results from these studies support the notion that playing action video games may yield positive transfer to visuospatial attention skills (Green & Bavelier, 2003, 2006), even after a relatively modest amount of training (e.g., 10–30 hours). While these studies have primarily assessed visual skills, this line of research illustrates the potential of video game training to improve existing capabilities.

Transfer of Training in Adults - Cognitive Abilities

An extensive review of the literature on transfer of training for cognitive abilities in adults is beyond the scope of this paper (though see Green & Bavelier, 2008 for an extensive treatment of this topic). Instead, we provide a brief review of experiment types and salient results that have broad intellectual functioning as the target of training and transfer effects.

Studies of training and transfer that fail to use control groups or provide other means of accounting for practice effects on the target (transfer) tasks may provide suggestive evidence of transfer, but it is impossible to tease out transfer effects from the effects of practice on the target tasks, regardless of the training intervention. For example, Karbach and Kray (2009) provided task-switching training over 8 sessions of practice to groups of children, young adults, and old adults. They found improved performance on near transfer (another task switching task) and far transfer tasks (i.e., a dissimilar executive task, verbal and spatial working memory, and measures of fluid intelligence), among all age groups. However, actual ‘transfer’ cannot be assumed for these measures, given the lack of either a control group or a comparison to existing data on test-retest practice effects on either the near transfer or far transfer tasks.

In contrast, studies with control groups allow for a comparison between those participants who receive the training and those who receive no training on the target transfer tasks of cognitive abilities and skills. If there is a transfer effect, the gains in performance on the transfer task by the group receiving the intervention should exceed practice-related gains by the control group. That is, from the abilities literature (e.g., Hayslip, 1989; Yang, Reed, Russo, & Wilkinson, 2009), the a priori expectation for such studies is that both the control and training/transfer groups will show increases in performance. Such a result would be attributed to the participants learning the general strategies for test/task performance (or learning specific stimuli, when alternate form retest designs are not used). For example, Basak, Boot, Voss, and Kramer (2008) investigated the training and transfer effects of a “real-time strategy game” in a sample of adults with an average age of 70. An experimental group of 19 participants completed 23.5 hr of training on the Rise of Nations: Gold Edition game, and their data were compared to a “no-training no-contact” control group of 20 participants. Basak et al. (2008) found that the experimental group showed improved scores on several information processing tasks, and on a sample of Raven’s Advanced Progressive Matrices items, where performance was scored as the “proportion of items correctly completed compared with the items attempted” (p. 768). In contrast, the no-training no-contact control group generally failed to show any improvement in performance on these various tasks. There was a significant Group X Time interaction (F(2,68) = 4.12, p = .02; f = .35), indicating positive transfer of training. 
However, the lack of practice-related effects in the no-training no-contact control group data is puzzling, given that many of the tasks under investigation would be expected to show practice effects due to repeated exposure to the demands of these tasks.
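As a rough consistency check on the statistics quoted above, the effect size can be recomputed from the F ratio and its degrees of freedom. This is a sketch under one assumption: that the reported f is Cohen's f derived from partial eta squared, which the quoted passage does not state explicitly. The function name `cohens_f_from_F` is introduced here for illustration.

```python
import math

def cohens_f_from_F(F, df_effect, df_error):
    """Cohen's f via partial eta squared (assumed convention)."""
    # partial eta^2 = SS_effect / (SS_effect + SS_error), expressed through F
    eta_p2 = (F * df_effect) / (F * df_effect + df_error)
    return math.sqrt(eta_p2 / (1.0 - eta_p2))

# Values quoted in the text: F(2, 68) = 4.12
f = cohens_f_from_F(4.12, 2, 68)
print(round(f, 2))  # 0.35
```

Under that assumption the recomputed value agrees with the reported f = .35.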

Similarly, Mahncke et al. (2006) trained a group of 62 participants (mean age 69.3) on unspecified tasks of “speed of processing, spatial syllable match memory, forward word recognition span, working memory, and narrative memory” (p. 12524) over 8–10 weeks. They compared the experimental group mean performance to that of an “active control” group and a “no-contact control” group on a transfer task of “global auditory memory.” At the conclusion of the study, the group that received the “memory enhancement” training performed as well as the active control group, and slightly (though not significantly) worse than the no-contact control group on the transfer task. The authors argued that the memory enhancement training improved performance on the transfer task, but it is hard to reconcile this conclusion with the lack of differences between groups in post-training scores.

In contrast, Tranter and Koutstaal (2008) examined transfer to fluid intellectual capabilities, from a variety of take-home activities. Participants who were assigned mentally stimulating activities over the course of a 10–12 week period showed significant gains on fluid intelligence measures, relative to control participants. While this research demonstrates the potential benefits of mental stimulation on cognitive functioning in older adulthood, it fails to explicate the degree to which specific activities are differentially beneficial. In another set of studies, participants receiving theater training were found to have positive transfer effects, in comparison to a control group (Noice & Noice, 2009; Noice, Noice, & Staines, 2004). Older adults given theater training for approximately one month demonstrated positive transfer on measures of memory, problem solving, and verbal fluency, in comparison to control participants, even as the control group also increased in performance from pretest to posttest (Noice & Noice, 2009; Noice, Noice, & Staines, 2004).

However, other studies have indicated that although control and training intervention participants show increases in cognitive ability test performance (again, due primarily to test-specific practice effects), the gains in the training/transfer group do not exceed those of the control group. For example, Dahlin, Nyberg, Bäckman, and Neely (2008) trained both young and older adults on several tasks requiring updating, an executive control function involving working memory. Participants received 15 sessions of updating training over the course of a 5-week period, with performance on the updating tasks and a set of transfer tasks assessed prior to training, immediately after training, and 18 months after training. In this study, there was no evidence of differential transfer to measures of mental speed, working memory, episodic memory, or verbal fluency and reasoning (Dahlin et al., 2008).

Commercial Software for Cognitive Training in Adults

In the past decade there has been an explosion of products in the marketplace aimed at ‘brain training’ or cognitive exercise. Many of these products have two salient characteristics: First, they are directed at a population of middle-age or older adults as tools intended to engage their mental capabilities, with an implicit or explicit ‘use it or lose it’ theory. Second, they are highly engaging, from a motivational perspective, and provide many of the stimulus characteristics that have been identified as provoking interest and encouraging effort (e.g., Dickey, 2005; Malone, 1981), such as “focused goals, challenging tasks, affirmation of performance,” and so on (Dickey, 2005, p. 70). With the theoretical question of use it or lose it as the foundation, and the popular interest in products that have been advertised as valuable for increasing or maintaining cognitive abilities into middle and late adulthood, empirical research aimed at evaluating training and transfer has started to follow.

The Current Experiment

Although the recent investigations provide general clues about the direct training and transfer effects of cognitive training in adults, several important questions remain unresolved. First, do these cognitive training procedures affect the training and transfer performance of adults who are younger than the 70+ population, for example adults between 50 and 70, when marked cognitive declines are often first experienced (at least subjectively)? Second, how do cognitive exercises associated with nonverbal abilities, such as math, spatial, and perceptual speed, contrast with cognitive exercises that emphasize crystallized abilities, such as reading for domain knowledge? To date, there have been no experimental studies that have examined cognitive training in this domain. Third, what is the difference between practice effects on standard ability measures and practice effects associated with specific cognitive training?

For the current experiment, we attempted to address three questions. The first question is whether cognitive-training methods might result in transfer among middle-aged adults. The second question is how the effects of cognitive exercise in practicing nonverbal tasks (e.g., math, reasoning, memory) that are closely related to Gf and perceptual speed contrast with the effects of cognitive exercise on tasks that are more closely related to Gc (e.g., reading and acquisition of domain knowledge). The third question is whether transfer occurs to broadly assessed Gf, Gc, and PS abilities, where each of the abilities is multiply determined. These general questions have been identified by Hertzog et al. (2009) as some of the critical issues that need to be addressed with direct experimentation, in order to evaluate the role that enhancement programs might play in the maintenance and/or remediation of cognitive decline associated with aging in middle age and beyond.

The experiment described here was designed to test two different facets of the broad question of whether engaging in structured cognition-demanding activities in adulthood results in an increment in cognitive abilities. First, we examine whether practice on tasks that are similar in content (e.g., verbal, numerical, figural, and perceptual speed) to the ability measures themselves has an impact on Gf, Gc, and/or PS abilities. Second, we investigate the training and transfer effects of structured reading assignments for acquisition of domain knowledge. For a variety of reasons, cognitive-ability types of practice have been the centerpiece of several recent investigations. The most salient reason is the current interest in the centrality of working memory, executive function, and speeded processing in theories of adult cognitive decline (e.g., Salthouse, 1996). Another prominent reason is the marketplace proliferation of commercial software and hardware platforms that have been aimed at middle-aged and older adults, as tools for preventing or remediating cognitive decline (e.g., see Mahncke et al., 2006). In contrast, there have been few empirical studies that have examined whether domain-specific knowledge acquisition results in transfer to cognitive and intellectual abilities, even though middle-aged adults frequently read for pleasure and/or cognitive stimulation.

Design Overview

For one month, participants were provided with a Wii and the Wii Big Brain Academy (2007) software. Participants were instructed to practice the full array of 15 individual tasks for one hour each day, 5 days/week for four weeks, for a total of 20 hours of Wii practice. For another month, the participants were provided with a set of readings in 4 different topic domains (going green, new medical drug developments, food, and technology). The participants were instructed to spend one-hour/day, 5 days/week doing the readings, with topics switched each week, for a total of 20 hours of readings. Cognitive ability, perceptual speed ability, and domain knowledge tests were administered three times during the study: (1) prior to any Wii task practice/reading, (2) after the first assignment (one month after the initial tests), and (3) after the second assignment (two months after the initial tests). The design was a within-participants procedure, where each participant completed both the Wii practice and the reading assignments. In order to determine whether the Wii practice or the reading assignments resulted in transfer to the ability measures, the order of Wii practice and reading assignments was counterbalanced. That is, half of the participants completed the Wii practice in the first month, and the reading assignments in the second month, while the other half of the participants completed the Reading assignments in the first month, and the Wii practice in the second month of the study.
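The counterbalanced two-month schedule can be sketched as follows. The text states only that half of the participants received each order; the random shuffle, the seed, the participant IDs, and the function name `assign_orders` are assumptions made for illustration.

```python
import random

def assign_orders(participant_ids, seed=0):
    """Split participants evenly between the two assignment orders."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    ids = list(participant_ids)
    rng.shuffle(ids)
    half = len(ids) // 2
    schedule = {}
    for pid in ids[:half]:
        schedule[pid] = ("Wii", "Reading")   # month 1, month 2
    for pid in ids[half:]:
        schedule[pid] = ("Reading", "Wii")
    return schedule

# 78 participants, as reported for the sample; IDs 1..78 are hypothetical.
schedule = assign_orders(range(1, 79))
wii_first = [p for p, order in schedule.items() if order[0] == "Wii"]
reading_first = [p for p, order in schedule.items() if order[0] == "Reading"]
print(len(wii_first), len(reading_first))
```

With this layout, ability-test gains between the first and second sessions can be compared across the two orders: each order serves as the comparison group for the other's first-month activity.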

Hypotheses

The initial hypotheses pertain to performance on the practice tasks themselves. We expected that over the course of a month of purposeful practice, middle-aged adults would show gains in performance on the Wii tasks directly, and would show gains on domain-knowledge tests for the four areas represented in the assigned readings. Thus:

H1: Performance on the Wii tasks will show improvement over the 20 practice sessions.

H2: Performance on the domain knowledge tests will show improvement after the reading assignments are completed.

Moreover, we expected that performance on the Wii tasks before and after practice would be substantially correlated with three ability domains - crystallized intellectual abilities (Gc), fluid intellectual abilities (Gf), and perceptual speed (PS) abilities. (In a small study with young adults on a few of the Wii Big Brain Academy tasks, Quiroga, Herranz, et al. (2009) reported positive and significant correlations between Wii performance and ability.) We also expected that domain knowledge test performance before and after the reading assignments would be more highly correlated with Gc abilities than with Gf and PS abilities. Thus:

H3. Wii performance will be positively correlated with Gc, Gf, and PS abilities.

H4. Domain knowledge will be positively correlated with Gc ability.

Based on the literature on practice effects for measures of PS abilities and intellectual abilities for young adults (e.g., see Ackerman & Cianciolo, 2000; Hauknect, Halpert et al., 2007), and research with older adults (e.g., Hayslip, 1989; Yang et al., 2009), we expected a range of practice-related improvements on the ability tests. Because specific learning of test strategies occurs frequently in repeated measurement of PS ability tests, somewhat less frequently in fluid intelligence (Gf) tests, and very infrequently in Gc tests, we predicted the following:

H5. Repeated assessments of ability tests will result in large practice effects for tests of PS ability, modest practice effects for tests of Gf ability, and no practice effects for tests of Gc ability.

Good reasons for transfer of the kinds of skills developed with Wii practice or domain knowledge have been stated in the literature (e.g., Halpern, 1998; Singley & Anderson, 1989), but there has also been abundant evidence of the lack of transfer in the literature over the last 100 years of transfer-of-training research (e.g., Adams, 1987; Green & Bavelier, 2008; Salomon & Perkins, 1989). As a consequence, we did not make a priori predictions about whether or not transfer of training/knowledge would occur. If transfer did occur, we expected that the most likely source of transfer for the Wii skills would be for PS abilities, given that the common source of demands on the Wii tasks was for rapid eye-hand coordination and reaction time. Similarly, the most likely source of transfer for the reading assignments would be for Gc abilities. In fact, no transfer was expected for the reading assignments to Gf abilities, given that the reading assignments had no surface-level overlap with those abilities. Thus:

H6-null. Performance on the ability tests will not be affected by the Wii practice experience, or

H6-alternative. There will be positive transfer-of-training from the Wii practice to the ability tests, with the largest effects found on the perceptual speed ability measures.

H7-null. Performance on the ability tests will not be affected by the reading assignment experience, or

H7-alternative. There will be positive transfer-of-training from the reading assignment to the ability tests, but only for the Gc ability tests.

Method

Participants

Participants were recruited via local newspaper advertisements and flyers posted around Atlanta, GA. Participants were required to be native English speakers, have normal or corrected-to-normal vision, and to have completed at least one college-level course. A total of 78 adults participated in all phases of the study (42 men, 36 women). Age range was 50 to 71, with a mean of 60.7 years of age. The highest level of educational attainment of these participants was as follows: PhD/EdD/PsyD (5), MA/MS/MBA/JD/MD (31), BA/BS (29), AA or equivalent (5), and some college, no degree (8). None of the participants had prior experience with the Wii software. Fifty-eight of 78 participants reported either never or seldom using computer or game platform systems, and only 4 participants reported being very active with such games. Participants were given a choice of receiving a Nintendo Wii video game system (with one game) or $200 as compensation for their participation in the study.

Homework Assignments

There were two types of homework assignments: (1) a reading assignment and (2) a Nintendo Wii practice assignment.

Reading Assignment

For the reading assignment, participants were provided with four packets of newspaper and magazine articles on the topics of Medical Drugs, Food, Going Green, and Technology. Articles were obtained from a variety of popular newspapers and magazines which feature articles of a scientific nature (e.g., Scientific American, Discover, Time, Newsweek). Table 1 provides examples of the subject matter of the articles included within the four broad topics. Participants were instructed to spend five hours per week reading through the provided articles. They were also told to read articles from a different broad topic on each of the four weeks following their most recent laboratory session. Participants were randomly assigned to focus on the four broad topics in one of two orders. A diary was provided in which participants indicated the dates and times during which they worked on the assignment and which articles they had read on each day. Participants were told that they could take notes about the articles, but could not use their notes during the laboratory test sessions.

Table 1.

Reading homework assignments.

| Topic | Number of Articles | Sample Topics |
|---|---|---|
| Medical Drug Developments (“Drugs”) | 46 | Cancer treatment, anti-depressants, diabetes, influenza, osteoporosis, cholesterol |
| Food | 37 | Food safety, genetically modified food, nutrition and diet, organic food |
| Going Green | 38 | Alternative energy sources, global warming, public policy, pollution |
| Technology | 46 | Communication, computers, e-books, global positioning systems, nanotechnology, robotics, smart homes |

Nintendo Wii Practice Assignment

For the Wii practice assignment, participants were given a Nintendo Wii video game system and a copy of the game Big Brain Academy to use in their homes. The Wii consists of a system unit, a wireless controller, and an infrared receiver. It connects directly to a television. The wireless controller (called the Wii Remote) uses accelerometers to detect the orientation and motion of the user, to move a cursor or another indicator on the screen. The Wii Remote also has a set of buttons for selecting options shown on the screen while playing a game. The Big Brain Academy game consists of a set of 15 mini-games which are designed to provide training and practice on a variety of mental tasks. Table 2 briefly describes the expected abilities that underlie successful performance on the mini-games, based on a task analysis and reference to existing taxonomies of ability constructs (e.g., Ackerman & Cianciolo, 2000; Carroll, 1993; Eliot & Smith, 1983). For practice, participants could choose to either do a 10-trial practice sequence on one of the mini-games or complete a test in which they solved problems of varying difficulty from each of the 15 mini-games consecutively. A performance score was provided following each practice session, while the test mode provided an overall performance score as well as a letter grade. Participants were instructed to work with Big Brain Academy five days a week for the period of four weeks following their most recent laboratory session. On each day, they were told to complete at least one practice session for each of the 15 mini-games on the “Medium” difficulty setting (a level that pretesting indicated would be challenging throughout the practice sessions, and not result in ceiling effects) and record their scores. Additionally, participants were to take one complete test each day and record their overall score and letter grade for that day. The time to complete the Wii practice assignment on each day was approximately one hour.
Participants were instructed to complete the Wii practice at approximately the same time each day in a quiet, undisturbed environment. Participants were provided with a diary to keep track of their scores on the practice and test portions of Big Brain Academy, as well as the dates and times during which they completed the assignment.

Table 2.

Nintendo Big Brain Academy task components and underlying abilities.

| Task Component Title | Underlying Dominant Ability |
|---|---|
| 1. Whack Match | Perceptual Speed - Scanning |
| 2. Fast Focus | Closure Speed |
| 3. Species Spotlight | Numerical Estimation |
| 4. Covered Cages | Visual Working Memory |
| 5. Face Case | Visual Working Memory |
| 6. Reverse Retention | Backwards Memory Span |
| 7. Match Blast | Spatial Integration |
| 8. Speed Sorting | Categorical Matching/Verbal & Visual |
| 9. Block Spot | Spatial Visualization |
| 10. Balloon Burst | Perceptual Speed - Scanning |
| 11. Mallet Mash | Numerical Computation |
| 12. Color Count | Working Memory |
| 13. Art Parts | Visual Matching |
| 14. Train Turn | Spatial Orientation |
| 15. Odd One Out | Visual Inspection (Dynamic) |

Measures

Wii Performance Measures

Scores on each of the Wii component tasks were reported to participants in terms of ‘grams’ of brain weight, with larger values indicating higher levels of performance. These scores, in turn, represented a weighted average of the number of correct responses and the speed with which the correct responses were made (no credit was given for incorrect answers). In general, speed of responding represented a multiplier that increased as response times decreased.

Knowledge Tests

Sets of domain knowledge tests were created, based on the four topics listed in Table 1. In each test of 90 multiple-choice items, 60 items were based on specific information presented in the reading homework article packets and 30 items were based on general information in the domain area obtained from various general source materials. Four tests made up the total domain knowledge battery. Three alternate forms were created for each topic domain (alternate form reliabilities of r = .91 to .93 were obtained), for administration at the beginning of the study, after one month, and after two months. Participants completed the tests in a counterbalanced order (using a Latin square design), and the order of the domain tests was also alternated across participants sitting next to one another within sessions.
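The Latin square counterbalancing of test order can be illustrated with a short sketch. The paper does not say which Latin square was used, so the cyclic construction below is an assumption, and the function name `cyclic_latin_square` is hypothetical. The topic labels are the four reading domains of the study.

```python
def cyclic_latin_square(items):
    """Return n orderings; row i is `items` rotated left by i positions.

    Each item appears exactly once in every row and in every serial position.
    """
    n = len(items)
    return [[items[(row + col) % n] for col in range(n)] for row in range(n)]


topics = ["Drugs", "Food", "Going Green", "Technology"]
orders = cyclic_latin_square(topics)

# Participants would be assigned to one of these four test orders in rotation.
for group, order in enumerate(orders, start=1):
    print(f"Order {group}: {', '.join(order)}")
```

A cyclic square balances serial position only. It does not balance which topic immediately precedes which, so a different square would be needed if carry-over between adjacent tests were a concern.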

Ability Tests

A set of ability tests was administered to provide estimates of Gc, Gf and a broad PS ability. Six tests from the CogAT battery (Lohman & Hagen, 2003) were used to measure Gc and Gf abilities. The Gc tests were taken from the verbal battery (Sentence Completion, Verbal Classification, and Verbal Analogies). The tests for Gf were taken from the Nonverbal battery (Figure Analogies and Figure Analysis), and from the Quantitative Battery (Number Series). Three alternate forms for each test were created from items taken from Forms 4, 5 and 6, and Levels G and H of the CogAT. The set of tests for the PS ability included measures of Name Comparison, Factors of 7, Naming Symbols, and Digit/Symbol Substitution (see Ackerman & Cianciolo, 2000). These four tests represent a broad sampling of Perceptual Speed ability sub-categories (namely, Scanning, Pattern Recognition, and Memory). Three parallel alternate forms of each of these tests were created from existing test forms, and were administered to the participants in counterbalanced orders.

Procedure

Participants attended three laboratory sessions, each separated by a period of exactly four weeks. In each of the three laboratory sessions, participants completed a set of ability tests (where test types were distributed across the first two hours of the session), followed by a set of four domain knowledge tests, where each topic test was given a 25 minute time limit. Test instructions and timings were presented over a public address system with prerecorded content. At the end of the first and second laboratory sessions, participants received instructions for completing a set of homework assignments during the four-week period prior to their next laboratory session. Each participant completed two different four-week homework assignments (Reading and Wii Practice). Order of these assignments was counterbalanced across participants. Laboratory sessions were four hours in length, with 5-minute breaks after approximately each hour of testing.

Results

The results are presented in six sections. First, we provide an analysis of the Wii performance data, including underlying factor structure and an evaluation of performance on the Wii tasks over practice. Next, we provide an analysis of domain knowledge test performance over the three testing sessions. In the third section, we provide an analysis of the reference ability test measures, and describe the creation of ability composites. The fourth and fifth sections are devoted to evaluating the correlations between the ability measures and performance on the Wii tasks and performance on the domain knowledge tests. In the final section, we provide an evaluation of transfer-of-training from the Wii and reading assignments to the reference ability measures.

Wii Performance

Performance on the 15 different Wii tasks was recorded for each day of practice over the course of four weeks (5 days/week). An initial factor analysis was conducted to determine the factor structure of the individual tests, so that the most appropriate level of analysis could be determined, while preserving parsimony. That is, although there were 15 different tasks that were ostensibly distributed across multiple domains (e.g., Working Memory, Spatial Orientation, Closure Speed, Perceptual Speed -- see Table 2), it was appropriate to determine whether there were separable content or process factors, or whether the tasks were all tapping the same underlying ability. A MINRES factor analysis, with squared multiple correlations as communality estimates, was conducted, along with a Humphreys-Montanelli parallel analysis (Carroll, 1989; Montanelli & Humphreys, 1976), which allowed for comparison between the eigenvalues derived from the real data and from random data. The parallel analysis indicated that only one underlying factor could be justified for the Wii tasks. This result indicates that the most appropriate index for scoring the Wii performance would be a single composite score (e.g., see Cohen, 1990; Thorndike, 1986). Thus, for each daily practice session, a composite score was derived.
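The logic of a parallel analysis on a reduced correlation matrix (squared multiple correlations on the diagonal) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' procedure: the data are simulated, the function names are hypothetical, the number of random datasets (200) is arbitrary, and factors are retained simply while the real eigenvalue exceeds the mean eigenvalue from random data.

```python
import numpy as np


def reduced_eigenvalues(data):
    """Eigenvalues of the correlation matrix with SMCs on the diagonal."""
    r = np.corrcoef(data, rowvar=False)
    # Squared multiple correlation of each variable with all the others.
    smc = 1.0 - 1.0 / np.diag(np.linalg.inv(r))
    reduced = r.copy()
    np.fill_diagonal(reduced, smc)
    return np.sort(np.linalg.eigvalsh(reduced))[::-1]


def parallel_analysis(data, n_sims=200, seed=0):
    """Count leading eigenvalues that exceed their random-data counterparts."""
    rng = np.random.default_rng(seed)
    n_obs, n_vars = data.shape
    real = reduced_eigenvalues(data)
    random_mean = np.mean(
        [reduced_eigenvalues(rng.standard_normal((n_obs, n_vars)))
         for _ in range(n_sims)],
        axis=0,
    )
    n_factors = 0
    for real_ev, random_ev in zip(real, random_mean):
        if real_ev <= random_ev:
            break
        n_factors += 1
    return n_factors, real, random_mean


# Simulated (not real) scores: 78 people x 15 tasks sharing one common factor.
rng = np.random.default_rng(1)
g = rng.standard_normal((78, 1))
scores = 0.7 * g + np.sqrt(1 - 0.7**2) * rng.standard_normal((78, 15))

n_factors, real_ev, random_ev = parallel_analysis(scores)
print("Factors retained:", n_factors)
```

When only the first real eigenvalue exceeds its random-data counterpart, a single composite score is the natural summary, which is the conclusion drawn in the text.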

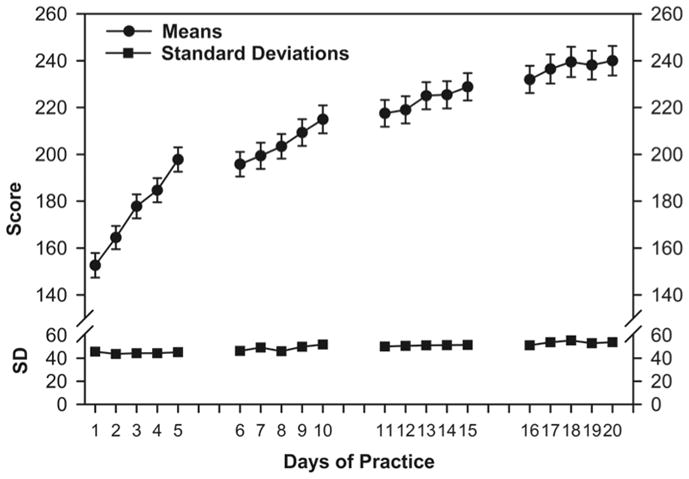

To evaluate Hypothesis #1 (practice will result in improvement in Wii performance scores), the Wii data were examined for practice effects and any potential effects of order of practice (either Wii practice first or Reading first). A 2 (order) × 20 (practice session) between-within repeated-measures ANOVA was conducted. The results indicated, as expected, no significant effect of practice order (F(1, 66) = .70, ns), and no significant interaction between practice and order (F(19, 1254) = 1.36, ns, f = .14). A significant effect of practice was obtained (F(19, 1254) = 114.16, p < .01, f = 1.32), and is illustrated in Figure 1. Using the rule-of-thumb for effect sizes suggested by Cohen (1988), with effects larger than d = .80 considered to be “large” effects, the performance gain from initial to final practice session clearly had a large effect size (d = 1.70). Consistent with the power law of practice (e.g., Newell & Rosenbloom, 1981), the practice function for the Wii tasks is best described as showing substantial initial gains in performance, followed by a negatively accelerating function as increments in performance become smaller with each additional practice session. A quadratic function applied to the pattern of means over days of practice fitted the data extremely well (R² = .98, F(3,17) = 452.30, p < .01). These results are consistent with Hypothesis #1.

Figure 1.

Mean task component scores and standard deviations on the Wii Big Brain Academy, over the course of 20 one-hour practice sessions. Five days of practice per week, over four weeks. Error bars are +/− one standard error of the means.

Individual differences in performance (i.e., the rank-ordering of individuals in performance) on the Wii tasks across the 20 daily practice sessions were consistent. Intercorrelations across test sessions followed a simplex-like structure that is ubiquitous in multi-occasion practice data (e.g., see Ackerman, 1987). Day-to-day correlations were high (ranging from r = .85 for Day 1/2 to r = .96 for Day 13/14), and the correlation between the most distant sessions, Day 1/20, was r = .63. In addition, because the magnitude of individual differences in performance did not significantly increase over time (Day 1 sd = 45.7, Day 20 sd = 53.9; rDS = .21, ns; see Snedecor & Cochran, 1967), there was no indication of a Matthew effect (that is, an increasing spread of performance with the higher performing individuals showing greater increases with practice than the lower performing individuals -- see Stanovich, 1986).
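The comparison of the Day 1 and Day 20 standard deviations can be sketched from the reported summary statistics. The code below assumes that rDS is the correlation between difference scores and sum scores, the usual test for two variances measured on the same people. It also assumes n = 68, which is inferred from the F(1,66) degrees of freedom reported for the Wii data rather than stated in the text. The function name is hypothetical.

```python
import math


def correlated_variance_test(sd_1, sd_2, r_12, n):
    """Test equality of two variances measured on the same n people.

    r_ds is the correlation between D = x2 - x1 and S = x1 + x2; it is zero
    when the two variances are equal. t has n - 2 degrees of freedom.
    """
    var_1, var_2 = sd_1**2, sd_2**2
    r_ds = (var_2 - var_1) / math.sqrt(
        (var_1 + var_2) ** 2 - 4 * r_12**2 * var_1 * var_2
    )
    t = r_ds * math.sqrt((n - 2) / (1 - r_ds**2))
    return r_ds, t


# Reported values: sd on Day 1, sd on Day 20, and the Day 1/20 correlation.
r_ds, t = correlated_variance_test(sd_1=45.7, sd_2=53.9, r_12=0.63, n=68)
print(round(r_ds, 2), round(t, 2))
```

With these inputs the correlation comes out at about .21, in line with the reported value, and the associated t falls short of the two-tailed .05 critical value for 66 degrees of freedom (roughly 2.0).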

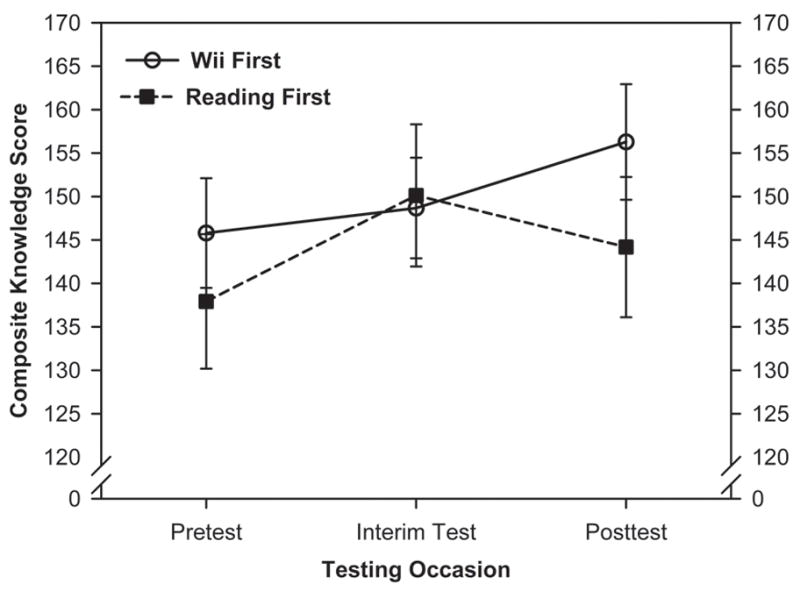

Reading -- Domain Knowledge

The assessment of knowledge associated with the readings consisted of three parallel forms of each content domain (Drugs, Food, Going Green, and Technology). A composite domain knowledge score was computed for each testing occasion (Pretest, Interim Test and Posttest). Hypothesis #2 was that performance on the domain knowledge tests would improve (compared to the pretest baseline), once the participants had completed the 4 weeks of 5 hours/week of reading. Because of practice effects (and the fact that we did not instruct the participants to avoid discussing or learning about the topics during the period when they were completing the Wii tasks), we expected an overall time (practice) effect. However, because only half the participants (Reading-first) had completed the readings at the time of the Interim test, we expected that there would be an interaction effect between the order factor and the time of testing. To evaluate these data, a 2 (order) × 3 (time) between-within ANOVA was conducted on the composite domain knowledge test scores. Means for the composite knowledge test scores are shown in Figure 2. A significant Time effect was found (F(2, 152) = 9.85, p < .01, f = .36), indicating that performance was higher on the Interim test and Posttest than on the Pretest. Also, a significant Order X Time effect was found (F(2, 152) = 7.46, p < .01, f = .31), and no significant overall Order effect was found (F(1, 76) = .33, ns, f = .07). As can be seen in the figure, the Reading-first group showed a marked increase in performance on the Interim test, but the Wii-first group continued to show improvement after their reading assignment, while the Reading-first group showed some relative deterioration of performance a month after they completed their reading assignments. 
The interaction effect suggests that whatever positive effects on domain knowledge were obtained from 20 hours of topical reading may be somewhat time-limited, at least in the context of a month without additional reading assignments. In general, then, Hypothesis #2 received support, though the positive effects of the reading assignments on the domain knowledge tests were somewhat more transient than was anticipated.

Figure 2.

Mean total domain knowledge scores at pretest, interim test (after one month) and final posttest (after two months), by task order condition (Wii-first participants completed the reading assignment between the Interim test and the Posttest; Reading-first participants completed the reading assignments between the Pretest and the Interim Test). Error bars are +/− one standard error of the means.

A check on the locus of the domain-knowledge learning was conducted by analyzing the test items that were covered directly in the reading materials (60 of 90 in each test) separately from those that were not covered directly in the reading materials (the remaining 30 items in each test). For the reading-based materials, there was a significant effect of Time (F(2, 152) = 10.10, p < .01, f = .36), but no significant effect of test Order (F(1, 76) = .19, ns), and a marginal effect of Order X Time (F(2, 152) = 2.87, p = .06, f = .19). For the general domain knowledge questions, there was no significant effect of either Order (F(1, 76) = .68, ns) or Time (F(2, 152) = 1.02, ns), but there was a significant Order X Time interaction (F(2, 152) = 7.51, p < .01, f = .31). Together, these results indicate that at the end of the study, the group with the most recent reading assignment (the Wii-first group) performed better than the Reading-first group on both the general domain items and the items covered in the readings. The Reading-first group had increases in both general domain items and items covered in the readings at the interim test, but showed declines in both sets of items at the final testing session, though the mean levels of performance on both sets were slightly higher than at pretest.

Reference Ability Measure Analyses

Three tests each of Gc and Gf, and four tests of PS ability, were administered three times, corresponding to the Pretest, Interim, and Posttest testing sessions. A factor analysis of the Gc and Gf tests was conducted to confirm the a priori selection of the tests as markers. The results of the MINRES factor analysis of the Gc and Gf tests, with an oblique rotation, based on a direct artificial personal probability factor rotation procedure (DAPPFR; Tucker & Finkbeiner, 1981) are shown in Table 3, along with individual test means at pretest, standard deviations, reliabilities, and intercorrelations. The factor analysis results indicate that the Gf and Gc tests loaded on the expected factors, though it should be noted that, as is typical for this age group, Gf and Gc factors were substantially correlated (r = .66). For some later analyses, we computed unit-weighted z-score composites for the Gc, Gf, and PS abilities, respectively. The correlation between the PS ability composite and the Gc composite was r = .46, and the correlation between the PS ability composite and the Gf composite was r = .49. The correlation between the Gf composite and Gc composite (r = .57) was similar to that derived in the factor solution.
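A unit-weighted z-score composite can be sketched as follows. This is an illustration only: the raw scores are hypothetical, the function name is an assumption, and summing (rather than averaging) the z-scores is one of two equivalent conventions that differ only by a constant scale factor.

```python
import numpy as np


def unit_weighted_composite(test_scores):
    """Standardize each test (column) and sum the z-scores with equal weights."""
    scores = np.asarray(test_scores, dtype=float)
    z = (scores - scores.mean(axis=0)) / scores.std(axis=0, ddof=1)
    return z.sum(axis=1)


# Hypothetical raw scores for five people on three tests of one ability.
raw = np.array([
    [20, 24, 19],
    [18, 20, 17],
    [23, 28, 22],
    [21, 25, 20],
    [19, 22, 21],
])
gc_composite = unit_weighted_composite(raw)
print(np.round(gc_composite, 2))
```

Standardizing first keeps a test with a large raw-score variance from dominating the composite, which matters here because the tests differ widely in their raw standard deviations.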

Table 3.

Ability Tests: DAPPFR Factor loadings, Test-Retest Reliability, Means, standard deviations, and Intercorrelations.

| Test | Gc (factor loading) | Gf (factor loading) | rxx | M | sd | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Cognitive Tests | | | | | | | | | | | | | | | |
| 1. Sentence Completion | **.48** | .05 | .51 | 20.32 | 2.89 | | | | | | | | | | |
| 2. Verbal Analogies | **.64** | −.01 | .77 | 24.14 | 4.21 | .58 | | | | | | | | | |
| 3. Verbal Classification | **.57** | .04 | .50 | 19.90 | 3.55 | .66 | .64 | | | | | | | | |
| 4. Figure Analogies | −.15 | **.81** | .47 | 12.67 | 4.97 | .34 | .33 | .49 | | | | | | | |
| 5. Number Series | .19 | **.40** | .81 | 13.82 | 5.62 | .39 | .59 | .36 | .50 | | | | | | |
| 6. Figure Analysis | .11 | **.49** | .75 | 7.65 | 3.90 | .41 | .40 | .46 | .74 | .48 | | | | | |
| Perceptual Speed Tests | | | | | | | | | | | | | | | |
| 7. Name Comparison | a | a | .82 | 23.07 | 6.24 | .32 | .37 | .34 | .48 | .46 | .31 | | | | |
| 8. Factors of 7 | a | a | .82 | 32.37 | 13.10 | .22 | .37 | .27 | .25 | .53 | .22 | .44 | | | |
| 9. Naming Symbols | a | a | .83 | 71.06 | 18.49 | .28 | .39 | .33 | .27 | .35 | .15 | .62 | .46 | | |
| 10. Digit/Symbol Substitution | a | a | .71 | 56.53 | 13.06 | .27 | .38 | .28 | .24 | .35 | .28 | .59 | .42 | .61 | |

Notes. N=78. df = 76. Correlations greater than r = .22 are significant at α = .05, correlations greater than r = .29 are significant at α = .01; DAPPFR = Direct Artificial Personal Probability Factor Rotation; Salient factor loadings shown in boldface. Correlation between Gc and Gf factors r = .66.

rxx = Test-retest alternate form reliability based on first and second administrations (with counterbalanced forms).

a. Because of content overlap, the PS tests were not included with the cognitive tests in the factor analysis.

Ability Predictors of Wii Performance

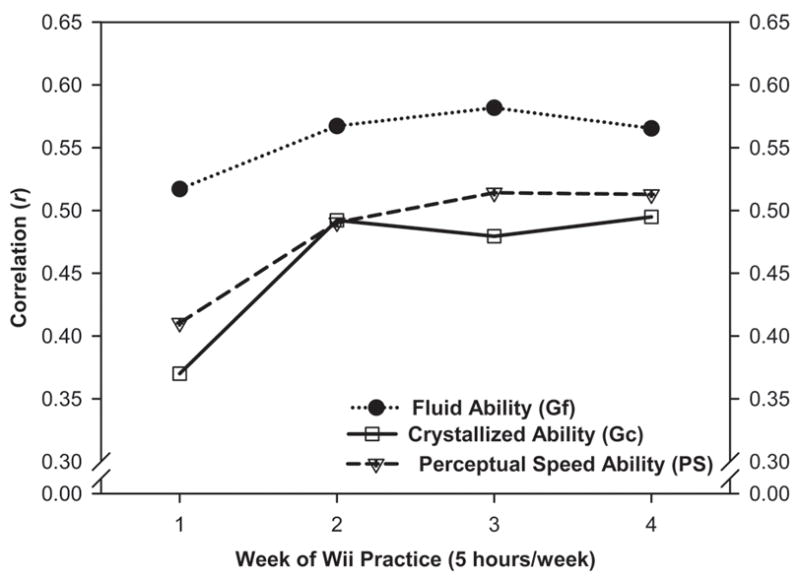

One of the most likely limitations for observing transfer-of-training effects would occur if performance on the transfer tasks were unrelated to performance on the training tasks. To evaluate this question (Hypothesis #3: Wii performance will be positively correlated with Gc, Gf, and PS abilities), we computed correlations between the ability composites, based on the initial session assessments, with performance on the Wii task -- aggregated at the week-of-practice level. Correlations among the ability composites, Wii task performance, and domain knowledge pretest and posttest composites are provided in Table 4. It should be noted that in this sample, participant age was not significantly correlated with any of the ability or performance measures. The correlations between the ability composites and Wii task performance are shown in Figure 3. Performance on the Wii tasks was most highly associated with the Gf composite. However, as can be seen from the figure, the correlations between each of the three ability composites and overall Wii performance were substantial. The correlations also tended to increase from the first to the fourth week of Wii practice, although only the Gc ability correlations showed a significant increase (t(75) = −1.73, p < .05, q = .15). Taking account of the common variance among ability composites with a multiple correlation analysis, the three abilities accounted for 29.9% (R = .547) of the variance in initial Wii performance, and 41.4% (R = .643) of the variance in final week Wii performance. These results suggest that Wii performance was significantly and substantially associated with the standard ability measures, and thus provide support for some common determinants among the Wii tasks and the ability measures. The results provide support for Hypothesis #3.
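The multiple correlation can be approximated directly from the correlations in Table 4. The sketch below uses the rounded tabled values for the Week 1 and Week 4 Wii averages, so it should only come close to the percentages reported in the text, which were presumably computed from unrounded data. The function name is hypothetical.

```python
import numpy as np

# Intercorrelations among the Gc, Gf, and PS composites (Table 4, rounded).
predictor_r = np.array([
    [1.00, 0.57, 0.46],
    [0.57, 1.00, 0.48],
    [0.46, 0.48, 1.00],
])


def multiple_r_squared(predictor_corr, criterion_corr):
    """R^2 = r' * inverse(Rxx) * r for standardized predictors."""
    beta = np.linalg.solve(predictor_corr, criterion_corr)
    return float(criterion_corr @ beta)


# Correlations of Gc, Gf, and PS with Wii performance in Week 1 and Week 4.
week1 = multiple_r_squared(predictor_r, np.array([0.37, 0.51, 0.41]))
week4 = multiple_r_squared(predictor_r, np.array([0.49, 0.56, 0.51]))
print(round(week1, 3), round(week4, 3))
```

Because the three composites are intercorrelated, the variance they explain jointly is well below the sum of their squared zero-order correlations.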

Table 4.

Correlations among ability composites, domain knowledge tests and weekly average Wii performance, and Age.

| | Measure | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| 1. | Gc | | | | | | | | | |
| 2. | Gf | .57** | | | | | | | | |
| 3. | PS | .46** | .48** | | | | | | | |
| 4. | Domain Knowledge Pretest | .59** | .47** | .40** | | | | | | |
| 5. | Domain Knowledge Posttest | .58** | .38** | .43** | .92** | | | | | |
| 6. | Wii - Week 1 | .37** | .51** | .41** | .31** | .30** | | | | |
| 7. | Wii - Week 2 | .49** | .57** | .49** | .36** | .36** | .84** | | | |
| 8. | Wii - Week 3 | .48** | .58** | .51** | .32** | .34** | .79** | .94** | | |
| 9. | Wii - Week 4 | .49** | .56** | .51** | .35** | .35** | .74** | .91** | .97** | |
| 10. | Age | .02 | .01 | −.22 | .02 | .03 | −.02 | −.18 | −.21 | −.19 |

Notes. df = 76. * p < .05; ** p < .01.

Figure 3.

Correlations between three ability composites (Gc, Gf, and PS) and Wii task performance, where task performance was averaged across Wii tasks and across each week of practice. Correlations larger than r = .24 are significant, with α=.05. Gc = Crystallized ability, Gf = Fluid ability, and PS = Perceptual Speed ability.

Ability Predictors of Reading/Domain Knowledge Performance

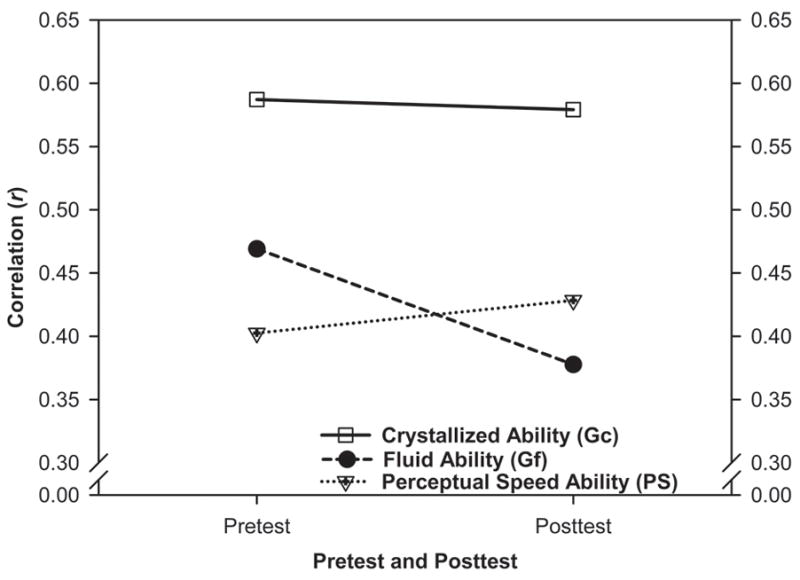

To evaluate Hypothesis #4 (domain knowledge test performance will be positively correlated with Gc ability), ability correlates with domain knowledge test performance were examined to determine if traditional ability measures also predicted performance before and after the 20 hours of assigned readings across one month. Because there was a reduction of performance for the Reading-first condition a month after the initial reading assignments, the test results for post-reading assignment were combined from the second testing occasion for the Reading-first condition, and the third testing occasion for the Wii-first condition. With this procedure, test results immediately after the reading section of the study represented the post-reading test performance. The correlations are shown in Figure 4. As expected, the Gc ability composite had the most substantial correlations with initial test (i.e., before the reading assignments were done) domain-knowledge composite performance (r = .59, p < .01), followed by the Gf ability composite (r = .47), and finally the PS composite (r = .40), though only the difference between the correlations with Gc and PS was significant (t(75) = 1.99, p < .05). We conclude that Hypothesis #4 was supported in these data.
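The comparison of two correlations that share a variable (here, Gc and PS each correlated with the domain-knowledge pretest) can be sketched with Hotelling's t for dependent correlations. The paper does not name the test it used, so this choice is an assumption. The inputs are the rounded correlations reported in the text (.59, .40, and the Gc/PS composite correlation of .46) with N = 78, and the function name is hypothetical.

```python
import math


def hotelling_t(r_xy, r_zy, r_xz, n):
    """Hotelling's t for the difference between dependent correlations.

    r_xy and r_zy share the criterion y; r_xz is the correlation between the
    two predictors. Returns the t statistic and its degrees of freedom (n - 3).
    """
    # Determinant of the 3 x 3 correlation matrix among x, y, and z.
    det = 1 - r_xy**2 - r_zy**2 - r_xz**2 + 2 * r_xy * r_zy * r_xz
    t = (r_xy - r_zy) * math.sqrt((n - 3) * (1 + r_xz) / (2 * det))
    return t, n - 3


t, df = hotelling_t(r_xy=0.59, r_zy=0.40, r_xz=0.46, n=78)
print(round(t, 2), df)
```

With these rounded inputs the statistic is approximately 1.99 on 75 degrees of freedom, which agrees with the value reported in the text.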

Figure 4.

Correlations between three ability composites (Gc, Gf, & PS) and composite domain knowledge test performance at initial session (before the reading assignment) -- Pretest; and in the session immediately following the reading assignment -- Posttest. Correlations larger than r = .24 are significant, with α=.05. Gc = Crystallized ability, Gf = Fluid ability, and PS = Perceptual Speed ability.

Transfer of Training Effects for Wii Practice and Reading Assignments

For the initial analyses to evaluate test practice effects (Hypothesis #5, practice effects will be large for PS tests, modest for Gf tests, and no practice effects for the Gc tests) and transfer of training from the homework assignments to the ability tests (Hypothesis 6 and Hypothesis 7, for the Wii and Reading tasks, respectively), we wanted to allow for any evidence to be found that there might have been specific transfer-of-training from the Wii and Reading tasks. Therefore, we report the results for individual tests. Because this allows for the possibility of experiment-wide Type I errors, we will interpret significant effects cautiously. This analysis plan has the advantage of reducing the probability of Type II errors, which would represent a failure to find a transfer effect, when one is present. A 2 (order) × 3 (time) between-within ANOVA was computed for each of the 10 ability tests. The results of these analyses are shown in Table 5 (ANOVA results) and Table 6 (means and standard deviations). No significant Order main effects were expected, as participants were randomly assigned to the order conditions, and whether they received practice on the Wii or Reading first was not expected to affect overall performance on the ability tests. However, one out of the 10 order effects did show a significant difference, namely that of the Figure Analysis test. The differences between the two order conditions were present at all three testing occasions, and significant even prior to the study/practice period (t(76) = −2.49, p < .05, d = .28). This pattern of results suggests that the effect is attributable to higher spatial visualization ability of some of the participants in the Wii-first condition, and not something that could be attributed to the experiment procedures.

Table 5.

ANOVA for ability measures.

| Ability Test | F: Order (condition) | F: Time (practice) | F: Order by Time Interaction |
|---|---|---|---|
| Crystallized Ability Tests | | | |
| Sentence Completion | 1.02 (.12)a | 1.01 (.12)a | 2.30 (.18) |
| Verbal Analogies | 1.67 (.15) | 1.11 (.12)a | .02 (.02)a |
| Verbal Classification | 2.61 (.19) | .59 (.09)a | 1.87 (.16) |
| Fluid Ability Tests | | | |
| Figural Analogies | 1.62 (.15) | 1.96 (.16) | 1.59 (.15) |
| Number Series | 2.11 (.17) | 5.47** (.27) | .87 (.11)a |
| Figure Analysis (paper folding) | 7.30** (.31) | 9.30** (.35) | .37 (.07)a |
| Perceptual Speed Ability Tests | | | |
| Name Comparison | 2.62 (.19) | 24.44** (.57) | .23 (.06)a |
| Factors of 7 | 1.97 (.16) | 48.45** (.80) | 1.30 (.13)a |
| Naming Symbols | .64 (.09)a | 77.30** (1.00) | 1.31 (.13)a |
| Digit/Symbol Substitution | 2.08 (.17) | 9.28** (.35) | .34 (.07)a |
| df | 1,76 | 2,152 | 2,152 |

N = 78

** p < .01

Note: Effect size (f) in parentheses.

a. The expected value of F is 1.0 when the null hypothesis is true (and therefore, there is no effect of the factor in question). Cohen’s f statistic is not meaningful or interpretable as the F ratio tends toward or below 1.00 (see Voelkle, Wittmann, & Ackerman, 2007). These values are reported only for reference information.
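The effect sizes in Table 5 can be related to the F ratios with a short sketch. The paper does not state how f was computed. The conversion below, f = sqrt(F × df_effect / df_error), which is equivalent to f² = η²p / (1 − η²p), is an assumption that reproduces several tabled values to rounding. The function name is hypothetical.

```python
import math


def cohens_f_from_F(f_ratio, df_effect, df_error):
    """Convert an F ratio to Cohen's f via partial eta squared.

    partial eta^2 = F * df_effect / (F * df_effect + df_error), and
    f^2 = eta^2 / (1 - eta^2), which simplifies to F * df_effect / df_error.
    """
    return math.sqrt(f_ratio * df_effect / df_error)


# Time (practice) effects from Table 5, each with df = 2, 152.
time_effects = [
    ("Name Comparison", 24.44),
    ("Factors of 7", 48.45),
    ("Digit/Symbol Substitution", 9.28),
]
for label, f_ratio in time_effects:
    print(label, round(cohens_f_from_F(f_ratio, 2, 152), 2))
```

The conversion also makes the table footnote concrete: as F approaches 1.0, f shrinks toward sqrt(df_effect / df_error), a value that reflects the design rather than any real effect.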

Table 6.

Means and standard deviations for ability measures by test session and assignment order.

| Ability Test | Pretest: Read-first | Pretest: Wii-first | Interim Test: Read-first | Interim Test: Wii-first | Posttest: Read-first | Posttest: Wii-first |
|---|---|---|---|---|---|---|
| Crystallized Ability Tests | | | | | | |
| Sentence Completion | 19.74 (3.29) | 20.90 (2.31) | 20.82 (2.75) | 20.62 (2.46) | 20.41 (3.03) | 21.00 (2.64) |
| Verbal Analogies | 23.62 (4.96) | 24.67 (3.30) | 23.44 (4.94) | 24.62 (4.08) | 23.92 (4.08) | 25.08 (3.65) |
| Verbal Classification | 19.33 (4.12) | 20.46 (2.81) | 19.59 (3.91) | 19.77 (2.71) | 19.26 (3.52) | 20.97 (3.30) |
| Fluid Ability Tests | | | | | | |
| Figural Analogies | 11.56 (4.65) | 13.77 (5.10) | 13.49 (4.56) | 13.69 (4.35) | 13.38 (4.91) | 14.08 (4.36) |
| Number Series | 12.67 (6.24) | 14.97 (4.72) | 14.41 (5.72) | 15.59 (5.40) | 14.41 (5.87) | 15.90 (5.01) |
| Figure Analysis (paper folding) | 6.59 (3.93) | 8.72 (3.61) | 7.41 (4.06) | 9.44 (3.28) | 8.28 (3.43) | 9.87 (2.89) |
| Perceptual Speed Ability Tests | | | | | | |
| Name Comparison | 21.73 (6.53) | 24.41 (5.71) | 24.19 (6.98) | 26.44 (6.71) | 25.55 (7.42) | 27.55 (7.34) |
| Factors of 7 | 30.59 (10.34) | 34.15 (15.31) | 36.73 (13.14) | 42.91 (16.53) | 41.36 (13.32) | 44.19 (16.20) |
| Naming Symbols | 68.47 (19.20) | 73.65 (17.60) | 79.74 (18.88) | 80.83 (22.57) | 85.71 (24.74) | 90.28 (22.94) |
| Digit/Symbol Substitution | 54.38 (13.08) | 58.68 (12.84) | 58.72 (13.99) | 61.64 (12.79) | 59.17 (16.21) | 64.03 (13.52) |

Notes: Standard deviations shown in parentheses.

The Time and Order X Time interaction effects address the ‘use it or lose it’ hypotheses. A significant effect of Time represents the effect of practice (or non-experimental influences, such as participants who sought out other opportunities to improve these general abilities), whereas significant effects of Order X Time interactions would indicate that either the Reading or the Wii practice conditions resulted in significant transfer-of-training to one or more ability tests. As shown in Table 5, there were significant Time (practice) effects for all of the Perceptual Speed tests, and two of the three Gf Tests (Number Series and Figure Analysis), but not for any of the Gc tests (which matches the predictions made in Hypothesis #5). In contrast, there were no significant Order X Time effects, which indicates that neither the Wii practice nor the Reading assignments resulted in specific transfer-of-training to the ability tests (which corresponds to the null hypotheses for #6 and #7). Only the Sentence Completion Test interaction effect approached statistical significance (p = .10). Affirming the null hypothesis requires demonstrating that the design, given its sample size and the test-retest correlations on the ability tests, had sufficient statistical power to detect an effect if one indeed existed. For the test of these between-within interaction effects, the power to detect a “medium-sized” effect (f = .25; Cohen, 1988), based on an average test-retest correlation from the experiment, was .999, though the power to detect a “small” effect (f = .10) was .74 (G*Power; Faul et al., 2007). That is, if the transfer of training effect were of a medium-sized magnitude, it would have been detected 99.9% of the time with the current design, and if the effect had been of small magnitude, it would have been detected 74% of the time. 
With this as background, we can conclude with 99% confidence that there was no medium-sized effect of transfer, and that there was only a 26% probability that an effect of “small” magnitude was present in the data.

The reading tasks were not expected to have a significant impact on the ability measures, but if they did, we would have expected the most likely source of transfer would be in Gc abilities, where vocabulary and reading comprehension are most frequently identified (e.g., see Carroll, 1993). The lack of any significant main effects of Time or Order X Time effects indicates that whatever direct effects were found for domain knowledge, these did not transfer to any of the standard ability tests. Together, these results suggest that neither the Wii practice nor the reading activities resulted in transfer to a broad battery of cognitive/intellectual or PS abilities.

Discussion

We found that practice can result in improved performance on the Wii ‘brain’ exercise tasks, in a group of middle-aged adults. Participants showed significant and substantial gains in performance on the Big Brain Academy tasks over 20 hours of practice across four weeks. On the question of whether domain-specific reading assignments can lead to improved domain knowledge, the answer is “yes.” But this finding must be qualified by the decline in domain-knowledge test performance that resulted from a four-week delay between the reading assignment and final testing. That is, at least some of the domain knowledge acquired by reading was only temporary for these participants. Some speculation might be in order about why the reading activities did not result in larger performance increments on the domain knowledge tests (e.g., in comparison to studies of domain knowledge acquisition in this age group -- see Ackerman & Beier, 2006; Beier & Ackerman, 2005). We believe it is likely that this group of participants were more active, from an intellectual point of view, than a random sample of age cohorts. At the end of the study, we asked the participants an open-ended question about what activities they engaged in to “keep yourself mentally fit.” All but two of the participants reported myriad activities ranging from intellectual to cultural to physical exercise. Three-quarters of the participants (59) reported some regular reading activities, including newspapers, magazines, novels and so on. Together with the data reported earlier on the educational achievement of these participants, these self-reports appear to indicate that most of the participants were already highly engaged in acquiring and maintaining knowledge across many of the domains that we investigated in this study. 
Thus, the additional 20 hours of reading required for this study, in comparison to the participants’ outside intellectual activities, may have had a comparatively smaller overall effect on their domain knowledge. Whether larger effects or longer-lasting effects would be found with a less educated or less intellectually active sample remains an open question.

For both the Wii tasks and the domain knowledge tests, individual differences in performance were well accounted for by measures of standardized Gc, Gf, and PS abilities, both at initial testing and after the Wii-practice sessions and reading assignments. That is, individual differences in abilities matter, to a considerable degree, in predicting performance on tasks that involve intellectual content, in a sample of middle-aged adults.

Examination of reference ability test performance at the three testing occasions indicated that neither Wii task practice nor performing the reading assignments had any significant effect on subsequent performance on the Gc, Gf, or PS tests. Significant increases in test performance were found for all four of the PS tests and two of the Gf tests. However, these increases were found regardless of whether the participants were engaged in the Wii or the Reading tasks, and thus indicate that the gains found are most clearly attributable to practice on the tests themselves -- results that are consistent with the literature on test practice effects (e.g., see Ackerman & Cianciolo, 2000; Ackerman & Beier, 2007).

As we discussed earlier, the Wii tasks were designed in a way that they were expected to be engaging to middle-aged and older adults. However, one might well ask whether our participants were likely to continue practicing the Wii tasks, even after the end of the study. At the end of the study, we asked the participants to respond to the following statement: “After the study is over, I probably will not ever again use the Wii Big Brain Academy software.” Forty-nine of the 78 participants indicated Moderately or Strongly Agree to the statement, whereas only 7 indicated Moderately or Strongly Disagree to the statement. These self-reports indicated that most of the participants were not particularly excited about doing these tasks more than they had to, in the context of the study. However, it should be noted that the participants did enjoy doing the Wii task practice more than doing the readings (Wii Mean = 4.73, sd = 1.28; Readings Mean = 3.47, sd = 3.47), a highly significant difference (t(77) = 5.71, p < .01, d = .95).

Conclusions

To date, the most supportive results for transfer in adult samples have been found for low-structure interventions (e.g., Noice & Noice, 2009), long-term interventions (e.g., see Schooler, 2007, for a review), or lower-level training/transfer for specific task strategies and processes, such as visual skills and processing speed (e.g., Achtman, et al., 2008; Dye et al., 2009; Green, et al., 2009). As others have noted (e.g., Green & Bavelier, 2008; Mayr, 2008), the empirical evidence reported for broad transfer from specific cognitive training interventions to tests of broad cognitive abilities is relatively thin. Of the reports that purport to show positive transfer in older adults, many are limited by: (a) a failure to include a control group, (b) a failure to take account of practice-only effects on the transfer tasks, (c) control groups that show no practice-based increases in performance that should be expected on most standardized ability tests, and/or (d) identical forms of the standardized tests that allow for item-specific learning.

Our findings add to the literature on age-related training and transfer in two ways. First, we found that among a sample of middle-aged adults, both the cognitive exercise assignment and the reading assignment resulted in the expected task-specific improvement over the course of training. Second, we showed that although the reference ability tests were substantially correlated with performance on the Wii Big Brain Academy tasks and domain knowledge measures, there was no appreciable transfer from the Wii or reading tasks to the ability tests following practice. With respect to the use it or lose it hypothesis, the pattern of results obtained in the current study provides mixed support. We conclude that the gains made through practice are not so broad as to result in improvement on measures of the underlying reference cognitive abilities, but rather are task-specific.

The findings obtained with respect to transfer effects are not as positive as the developers of ‘brain exercise’ software programs might prefer. As such, some might dispute the generalizability of these findings. For example, it could be argued that the cognitive processes tapped by the Wii Big Brain Academy tasks are insufficiently related to the kinds of cognitive activities assessed by the broad set of reference measures of intellectual and perceptual speed abilities. However, this argument is not very compelling, because there was a substantial degree of common variance among the ability composites and Wii task performance. Alternatively, a more plausible argument might be made that the intellectual abilities we assessed are not relevant to the everyday functioning of the middle-aged adults in our sample. That is, learning brain exercise tasks might show transfer effects on everyday functioning, but not on ability measures. Although we suggest that such an argument would also apply to any standardized intellectual ability assessments administered to adults (ranging from Raven’s Progressive Matrices to the Wechsler Adult Intelligence Scale), this remains an empirical question for future research.

Our findings illustrate the complexity of the use it or lose it issue, and show that middle-aged adults can acquire skills and knowledge, as evidenced by the significant practice effects observed on both cognitive engagement activities (Wii practice and reading for domain knowledge) and by the significant practice effects observed for six of the ten reference ability tests. The lack of support for transfer effects, however, suggests that learning for middle-aged adults may be narrower than for young adults and children. A particularly noteworthy theory, supported by a small number of studies, was initially articulated by Ferguson (1956) and refined by Sullivan (1964; see also Skanes, Sullivan, Rowe, & Shannon, 1974). It proposed that children with higher ability levels tend to acquire knowledge and skills that transfer more widely than those acquired by children with lower ability levels. Taking this proposition and applying it to adult intelligence, where there are significant declines in fluid intelligence compared to the intelligence of young adults, it may be that one of the characteristics of adult intelligence is that newly acquired knowledge and skills do not lead to wide transfer. At this point, although the practice-related improvements on the specific tasks in this study are consistent with this view, this proposal is speculative. Repeating this experiment with young adults or adolescents and evaluating any transfer-of-training from the Wii practice or reading assignments might yield data that would address this issue.

The results of this experiment can be interpreted as falling closer to the position taken by Salthouse (2006, 2007) than to the position articulated by Schooler (2007). That is, over the course of 20 hours of cognitive exercises and 20 hours of domain knowledge reading, we found negligible transfer effects that could be associated with ‘brain exercise,’ and no support for medium or large transfer effects, beyond relatively narrow skills from either cognitive assignment. Of course, it is always possible that such transfer effects might be obtained over much longer periods, such as years, but the degree of effort that might be required on the part of the individual learner would have to be far beyond the level exerted in the current study and require additional interventions to sustain motivation.

Those who have been skeptical of “brain training” and “brain exercise” as short-term fixes for mitigating or precluding decline in cognitive abilities over the life course may find these results encouraging. It may very well be that in order to maintain cognitive abilities, it takes as much effort over a long period of time as it does to originally acquire the abilities. This perspective is consistent with the positive results of cognitive engagement on adult intellect described by Schooler and his colleagues (e.g., Kohn & Schooler, 1978; Schooler, 2001; Schooler, Mulatu, & Oates, 1999), who found positive long-term effects among persons who were engaged in jobs that involved a high degree of cognitive complexity.

Ultimately, the goal of this study was to address the use it or lose it hypothesis, as it applies to purposeful practice on “brain exercise” tasks or reading tasks. The results indicate that adults can indeed improve their performance on specific tasks by practice and by engaging in directed reading. The results also indicate that the gains made from these activities are narrow, in that they do not yield substantial increments to any of a wide array of standardized ability measures. Although there were clear limitations to this investigation, the results indicate that the benefits of “using it” in short-term time periods may be limited to the specific tasks in which the individual is engaged, rather than extending to overall intellectual abilities or other, nonpracticed tasks.

Acknowledgments

This research was supported by the National Institutes of Health/National Institute on Aging Grant AG16648.

References

- Achtman R, Green C, Bavelier D. Video games as a tool to train visual skills. Restorative Neurology and Neuroscience. 2008;26:435–446.

- Ackerman PL. Individual differences in skill learning: An integration of psychometric and information processing perspectives. Psychological Bulletin. 1987;102:3–27.

- Ackerman PL. Domain-specific knowledge as the “dark matter” of adult intelligence: gf/gc, personality and interest correlates. Journal of Gerontology: Psychological Sciences. 2000;55B(2):P69–P84. doi: 10.1093/geronb/55.2.p69.

- Ackerman PL. Knowledge and cognitive aging. In: Craik F, Salthouse T, editors. The Handbook of Aging and Cognition: Third Edition. New York: Psychology Press; 2008. pp. 443–489.

- Ackerman PL, Beier ME. Determinants of domain knowledge and independent study learning in an adult sample. Journal of Educational Psychology. 2006;98:366–381.

- Ackerman PL, Beier ME. Further explorations of perceptual speed abilities, in the context of assessment methods, cognitive abilities and individual differences during skill acquisition. Journal of Experimental Psychology: Applied. 2007;13:249–272. doi: 10.1037/1076-898X.13.4.249.

- Ackerman PL, Cianciolo AT. Cognitive, perceptual speed, and psychomotor determinants of individual differences during skill acquisition. Journal of Experimental Psychology: Applied. 2000;6:259–290. doi: 10.1037//1076-898x.6.4.259.

- Adams JA. Historical review and appraisal of research on the learning, retention, and transfer of human motor skills. Psychological Bulletin. 1987;101:41–74.

- Basak C, Boot WR, Voss MW, Kramer AF. Can training in a real-time strategy video game attenuate cognitive decline in older adults? Psychology and Aging. 2008;23:765–777. doi: 10.1037/a0013494.

- Begley S. The upside of aging. The Wall Street Journal. 2007 February 17.

- Beier ME, Ackerman PL. Age, ability and the role of prior knowledge on the acquisition of new domain knowledge. Psychology and Aging. 2005;20:341–355. doi: 10.1037/0882-7974.20.2.341.

- Belluck P. As minds age, what’s next? Brain calisthenics. The New York Times. 2006 December 27.

- Carroll JB. Exploratory factor analysis programs for the IBM PC. Chapel Hill, NC: Author; 1989.

- Carroll JB. Human cognitive abilities: A survey of factor-analytic studies. New York: Cambridge University Press; 1993.

- Cohen J. Statistical power analysis for the behavioral sciences. Hillsdale, NJ: Erlbaum; 1988.

- Cohen J. Things I have learned (so far). American Psychologist. 1990;45:1304–1312.

- Dahlin E, Nyberg L, Bäckman L, Neely A. Plasticity of executive functioning in young and older adults: Immediate training gains, transfer, and long-term maintenance. Psychology and Aging. 2008;23(4):720–730. doi: 10.1037/a0014296.

- Dickey MD. Engaging by design: How engagement strategies in popular computer and video games can inform instructional design. Educational Technology Research and Development. 2005;53(2):67–83.

- Dunlosky J, Kubat-Silman AK, Hertzog C. Training monitoring skills improves older adults’ self-paced associative learning. Psychology and Aging. 2003;18:340–345. doi: 10.1037/0882-7974.18.2.340.

- Dye M, Green C, Bavelier D. Increasing speed of processing with action video games. Current Directions in Psychological Science. 2009;18(6):321–326. doi: 10.1111/j.1467-8721.2009.01660.x.

- Edwards JD, Wadley VG, Myers RS, Roenker DL, Cissell GM, Ball KK. Transfer of a speed of processing intervention to near and far cognitive functions. Gerontology. 2002;48:329–340. doi: 10.1159/000065259.

- Edwards JD, Wadley VG, Vance DE, Wood K, Roenker DL, Ball KK. The impact of speed of processing training on cognitive and everyday performance. Aging & Mental Health. 2005;9:262–271. doi: 10.1080/13607860412331336788.

- Eliot J, Smith IM. An international directory of spatial tests. Berks, UK: NFER-NELSON; 1983.

- Faul F, Erdfelder E, Lang AG, Buchner A. G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behavior Research Methods. 2007;39:175–191. doi: 10.3758/bf03193146.

- Ferguson GA. On transfer and the abilities of man. Canadian Journal of Psychology. 1956;10:121–131. doi: 10.1037/h0083676.

- Gagné RM, Foster H, Crowley ME. The measurement of transfer of training. Psychological Bulletin. 1948;45:97–130. doi: 10.1037/h0061154.

- Green C, Bavelier D. Action video game modifies visual selective attention. Nature. 2003;423:534–537. doi: 10.1038/nature01647.

- Green C, Bavelier D. Effect of action video games on the spatial distribution of visuospatial attention. Journal of Experimental Psychology: Human Perception and Performance. 2006;32(6):1465–1478. doi: 10.1037/0096-1523.32.6.1465.

- Green CS, Bavelier D. Exercising your brain: A review of human brain plasticity and training-induced learning. Psychology and Aging. 2008;23:692–701. doi: 10.1037/a0014345.

- Green C, Li R, Bavelier D. Perceptual learning during action video game playing. Topics in Cognitive Science. 2009:1–15. doi: 10.1111/j.1756-8765.2009.01054.x.

- Günther VK, Schäfer P, Holzner BJ, Kemmler GW. Long-term improvements in cognitive performance through computer-assisted cognitive training: A pilot study in a residential home for older people. Aging & Mental Health. 2003;7:200–206. doi: 10.1080/1360786031000101175.

- Halpern DF. Teaching critical thinking for transfer across domains. American Psychologist. 1998;53:449–455. doi: 10.1037//0003-066x.53.4.449.

- Hayslip B Jr. Alternative mechanisms for improvements in fluid ability performance among older adults. Psychology and Aging. 1989;4:122–124. doi: 10.1037//0882-7974.4.1.122.

- Hertzog C, Kramer AF, Wilson RS, Lindenberger U. Enrichment effects on adult cognitive development: Can the functional capacity of older adults be preserved and enhanced? Psychological Science in the Public Interest. 2009;9:1–65. doi: 10.1111/j.1539-6053.2009.01034.x.

- Horn JL. Cognitive diversity: A framework of learning. In: Ackerman PL, Sternberg RJ, Glaser R, editors. Learning and individual differences: Advances in theory and research. New York: W. H. Freeman; 1989. pp. 61–116.

- Hsiao H-h. The performance of the Army Alpha as a function of age. Unpublished Master’s Thesis. New York: Columbia University; 1927.

- Hultsch DF, Hertzog C, Small BJ, Dixon RA. Use it or lose it: Engaged lifestyle as a buffer of cognitive decline in aging? Psychology and Aging. 1999;14:245–263. doi: 10.1037//0882-7974.14.2.245.

- Humphreys LG. The organization of human abilities. American Psychologist. 1962;17:475–483.

- Karbach J, Kray J. How useful is executive control training? Age differences in near and far transfer of task-switching training. Developmental Science. 2009;12(6):978–990. doi: 10.1111/j.1467-7687.2009.00846.x.

- Kohn ML, Schooler C. The reciprocal effects of the substantive complexity of work and intellectual flexibility: A longitudinal assessment. American Journal of Sociology. 1978;84:24–52.

- Kubeck JE, Delp ND, Haslett TK, McDaniel MA. Does job-related training performance decline with age? Psychology and Aging. 1996;11(1):92–107. doi: 10.1037//0882-7974.11.1.92.

- Lohman DF, Hagen EP. CogAT Form 6 Research Handbook. Itasca, IL: Riverside Publishing; 2003.