Abstract

Objective

Informatics applications have the potential to improve participation in clinical trials, but their design must be based on user-centered research. This research used a fully counterbalanced experimental design to investigate the effect of changes made to the original version of a website, http://BreastCancerTrials.org/, and confirm that the revised version addressed and reinforced patients' needs and expectations.

Design

Participants included women who had received a breast cancer diagnosis within the last 5 years (N=77). They were randomized into two groups: one group used and reviewed the original version first followed by the redesigned version, and the other group used and reviewed them in reverse order.

Measurements

The study used both quantitative and qualitative measures. During use, participants' click paths and general reactions were observed. After use, participants answered survey items and open-ended questions to indicate their reactions, which version they preferred, and which version better met their needs and expectations.

Results

Overall, the revised version of the site was preferred and perceived to be clearer, easier to navigate, more trustworthy and credible, and more private and safe. However, users who viewed the original version last had similar attitudes toward both versions.

Conclusion

By applying research findings to the redesign of a website for clinical trial searching, it was possible to re-engineer the interface to better support patients' decisions to participate in clinical trials. The mechanisms of action in this case appeared to revolve around creating an environment that supported a sense of personal control and decisional autonomy.

Keywords: Clinical trials, breast neoplasms, user-computer interface, user-centered design, human–computer interaction, quantitative research

Introduction

Clinical trials are essential to addressing the National Cancer Institute's (NCI's) mission to eliminate death and suffering due to cancer,1 yet clinical trial research often suffers from threats to accrual2 and a disparate reach to eligible populations.3 4 Several efforts are underway to improve accrual to clinical trials through the use of information technology, especially through the world wide web.5–7 While some internet-based tools are being developed to help clinicians find trials for their patients,8 many websites are available for patients to use themselves to locate clinical trials.7 What is unclear is how well tools for patients help them understand clinical trials and make decisions about participation.

Although patients have many barriers to participating in clinical trials,9 the primary barriers have to do with lack of awareness of clinical trials in general and specific trials open to them.10 Those who know about clinical trials have generally positive attitudes about clinical trial research and are willing to consider enrolling.10 Patients decide to participate to receive better or promising treatments, to benefit others, and because they trust their physicians.11 Patients are more likely to agree to participate when they understand and accept the randomization process and are satisfied with their doctors' communication.12

Although clinical trial enrollment is often facilitated through healthcare providers, a recent unpublished study conducted by NCI with a national sample found that people considering such trials were likely to use the internet to find out about them. Not only is this recruitment strategy passive, but people who seek information on clinical trials this way experience an online information environment that is extensive but not ideal.7 Several websites with tools to search for trials recruiting patients exist,7 but actually using the tools can be difficult because of complex user interfaces and dense medical and technical terminology.7 13 This situation poses even more difficulty for patients with lower health literacy.13

When applied to clinical trials, basic search engines are likely to yield many trials,14 but few for which the person would qualify or would want to enroll.7 Some clinical trial search tools match clinical trials to a person's health status and preferences, such as those available in some personal health records, and have the potential to improve recruitment and limit frustration.6 Instead of getting a full list of available trials, the person would only be presented with trials consistent with his or her trial preferences and medical history. This strategy, however, often requires that a person first register and then enter more technical medical information than they have or are prepared to share.15 Therefore, the clinical trial search process, which is already complex,9 can become even more complicated and onerous. Researchers may, as a result, drive away the very people they are trying to attract to their studies.

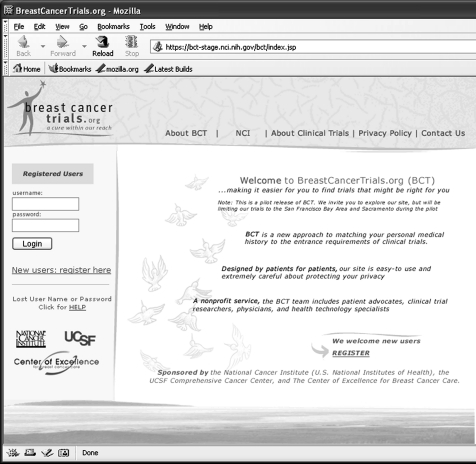

The research presented in this article focused on a web-based clinical trial search tool, http://BreastCancerTrials.org/ (BCT). This application was a pilot project sponsored by NCI and developed by two breast cancer survivors working with the University of California, San Francisco (UCSF) Comprehensive Cancer Center and the Center of Excellence for Breast Cancer Care. Unlike most clinical trial search tools,7 BCT limits the list of clinical trials to those that match the patient's actual medical history record as a way to improve the chance of a productive search. Our previous research revealed that BCT's mandatory registration process early in the site frustrated and annoyed users to the point of driving them away.15 A key problem was the lack of availability of clear information about the benefits and functionality of the matching system before patients registered. Users also needed more information to trust the website and have confidence that it was secure, as well as assistance when inputting complex personal medical information in the site's extensive forms.15 The BCT pilot site launched in 2006 did not incorporate the majority of the findings from this user research.

According to research-based web design and usability guidelines, changes made in response to user input must be evaluated to confirm that the resulting systems are acceptable and function as desired by the end users (Guideline 18:3).16 17 Follow-up research was therefore necessary on the revised prototype site to assess the impact of revisions on acceptability and performance. A second ‘revised’ prototype was built off-record (figure 2) with the suggested changes to provide a means to assess the impact of integrating user input on acceptability of the site, including: concise information on the homepage about clinical trials, the matching system, and the benefits of the system; links on the homepage to an area to browse clinical trials in the system and to a tour of the system; prominent logos for NCI and UCSF and other relevant graphics; and providing only medical history questions relevant to the user. To address rigor, iterative research was needed at this point involving a larger study using quantitative methods and random assignment.17

Figure 2.

Revised breastcancertrials.org homepage.

Figure 1.

Original breast cancer trials home page (http://BreastCancerTrials.org).

This article seeks to clarify the impact of changes made to the original pilot BCT website by testing the original version against a revised version that incorporated the additional user research. The purpose of this study was to confirm the findings of the user-centered research on BCT15 that there was a need to address the following: (1) frustration and annoyance with mandatory registration; (2) lack of clear information about the benefits and functionality of the matching system; and (3) lack of accessible information to inspire trust in the website and confidence that it was secure. Specifically, by addressing these concerns, did the design changes affect the users' preferences, satisfaction and attitudes toward the original and revised eHealth clinical trial search tools?

Methods

Sample

Using a multi-tiered approach, recruitment procedures included collaboration with local clinical partners for recruiting patients from their current inventory and use of a professional recruiting firm. Study participants were women (N=77) who had received a clinical diagnosis of breast cancer and were either currently receiving treatment or had concluded treatment within the last 5 years. Women with prior clinical trial participation or work experience in a medical or information technology profession were excluded.

Research design

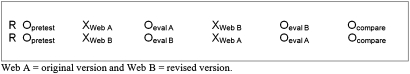

The study used a 2×2 fully counterbalanced design whereby users were presented with the original and revised versions to test for the presence of an order effect. After confirming eligibility, participants were randomized to one of the two arms of the experiment: arm one reviewed the original version of the website first followed by the revised version, and arm two reviewed the versions in the reverse order (figure 3). Participants were blinded to which version was the original website and which was revised; only the terms website A and B were used. On the participant survey, website A consistently referred to the first website that the participant viewed regardless of whether it was the original or revised version.

Figure 3.

Research design.
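The two-arm counterbalanced assignment and the blinded A/B labeling described above can be illustrated with a minimal sketch. The article does not report how randomization was carried out, so the shuffle-and-split approach, the fixed seed, and the names `assign_arms` and `blinded_labels` are assumptions made only for illustration.

```python
import random


def assign_arms(participant_ids, seed=0):
    """Randomly split participants into two viewing orders of near-equal size.

    Assumption: simple shuffle-and-split; the study's actual procedure is not reported.
    """
    rng = random.Random(seed)  # fixed seed only so the sketch is reproducible
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    orders = {}
    for pid in shuffled[:half]:
        orders[pid] = ("original", "revised")  # arm one
    for pid in shuffled[half:]:
        orders[pid] = ("revised", "original")  # arm two
    return orders


def blinded_labels(order):
    """Website A is always the first version viewed, website B the second."""
    return {"A": order[0], "B": order[1]}


# 77 hypothetical participant IDs
assignment = assign_arms(range(1, 78))
```

Because website A always denotes the first version viewed, the label by itself reveals nothing about whether a participant is looking at the original or the revised site.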

Data collection process

Data collection occurred at two different locations: (1) RTI's Washington DC office; (2) Duke University Hospital. At the start of the interview, participants received a hard copy questionnaire containing detailed instructions on how to access each version and fill out the questionnaire. Trained interviewers observed participants while they followed the self-guided questionnaire which instructed them to review each website version individually online and rate each on the questionnaire. Participants were given up to 30 min to review each version before answering the subsequent evaluation questions. Then participants were asked questions comparing the two versions. All participant responses were recorded on the hard copy version of the questionnaire. Lastly, to overcome any potential issues related to illegible handwriting, the interviewer and the participant discussed their responses to the few open-ended questionnaire items.

The interview instrument was a self-administered paper-and-pencil questionnaire. The testing materials underwent two rounds of cognitive pretesting to ensure comprehension and ease of use.18 The instrument included primarily closed-ended questions and was broken down into three major sections to enable ‘pre–post’ comparisons. Each section is described below.

Pretest questions in section one assessed the status of participants' breast cancer diagnosis, their information-seeking practices related to cancer, and their attitudes toward clinical trials.

Section two assessed feedback on the web tool, including knowledge about the purpose of each version, in an open-ended question format. These were followed by eight Likert survey questions about the participant's attitudes to the version on a 10-point scale (eg, ‘I feel pressured by this site to join a trial,’ ‘Overall, this site prepared me for what to expect before registering’), ranging from ‘strongly agree’ (value=1) to ‘strongly disagree’ (value=10). Thirteen other attitude questions were then asked using 7-point semantic differential items (eg, trustworthy versus dishonest, easy versus hard). The values assigned take into consideration the social desirability bias and double-negative questions. Participants were also asked to provide their overall reactions to the versions in response to three 10-point Likert items (‘Not at all likely’ =1; ‘Very likely’ =10) about their likelihood of participating in a clinical trial as a result of using the site, using the site to find clinical trials, and recommending the site to others. At the end of this section, participants were asked to report what they liked most and least about the version of the site in response to two open-ended questions.

Section three of the questionnaire contained questions comparing the two versions. Specifically, participants were asked to state their preference (original versus revised versions) with respect to information clarity, ease of navigation, perceived usefulness, and overall preference and the strength of their preference (ie, ‘How much more would you recommend the web you chose over the other?’) using a 10-point Likert scale (1= ‘a little’ and 10= ‘a lot’). Verbal probes were used as appropriate to obtain more data regarding the interface, personal health record, and the registration process. The last page of the questionnaire contained seven questions related to demographic variables including age, race/ethnicity, education level, social support methods used, health insurance, and annual household income.

The study protocol and research design were reviewed and approved by accredited institutional review boards (IRB) at RTI International, Rex Hospital, and Duke University Medical Center. The protocol was granted clearance under NCI's OMB generic clearance package for pretesting and formative research (#0925-0046-01, expiry date 1/31/2010).

Prior to the self-administered questionnaire, interviewers obtained written consent from the participants via a form explaining the purpose of the study, the procedure used for data collection, and the risks (none) and benefits (the value of learning about the web tool). Once the participant had provided consent, the interviewer gave a brief verbal introduction to the study and outlined the data collection process described above (eg, use of the self-guided questionnaire to review the website versions). Participants were then provided with laptops to begin the review of one of the two versions. In an effort to start participants from a similar frame of mind, each was asked to use the following pilot-tested scenario when viewing the versions: ‘You are coping with a diagnosis of breast cancer. Someone recommended you consider a clinical trial. You've come across this website.’ Once they opened a version of the site, participants were told ‘We want you to look at/move through the website as you would in your normal/usual setting where you use the internet. Assume we are not here.’

As participants completed steps 2 and 3, the interviewer completed an observation form for a subset of the participants (35 of 77) while they browsed each version of the site. Interviewers recorded data such as where participants went first on each version, where they had difficulty, how much time they spent on each, and whether they accessed the link to the NCI home page. The interviewers also documented additional qualitative data such as participants' non-verbal communication or repetitive verbal comments.

After the interview was complete, participants were asked to provide their contact information for the delivery of their monetary incentive check (US$60–100, based on geographic location and distance traveled to the research site). Checks were mailed within 2 weeks of the participant's interview, and their contact information was destroyed at the close of the study.

Data analysis

The data analysis consisted of four parts. First, descriptive statistics, such as frequencies and percentages, were obtained for the demographic variables and breast cancer characteristics of all participants (table 1). Second, the overall perceptions of the original and revised versions were examined using goodness-of-fit χ2 tests for categorical variables (table 2) and analysis of variance for continuous variables with Likert scales (table 3). All quantitative analyses were conducted using SAS V.9.1.3. The significance level was set at p<0.05.
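As an illustration of the goodness-of-fit χ2 test for a two-category preference, the following minimal sketch tests observed counts against an equal split between versions. The 50/50 null hypothesis and the function name are assumptions; the study's own analyses were run in SAS. The counts used are the overall preference counts reported in table 2 (26 original, 48 revised).

```python
import math


def chi2_goodness_of_fit_two_categories(count_a, count_b):
    """Chi-square goodness-of-fit test against an equal (50/50) split, df = 1."""
    total = count_a + count_b
    expected = total / 2
    statistic = ((count_a - expected) ** 2 + (count_b - expected) ** 2) / expected
    # For 1 degree of freedom, the chi-square survival function reduces to erfc.
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Overall preference counts from table 2: 26 original, 48 revised
stat, p = chi2_goodness_of_fit_two_categories(26, 48)
print(f"chi2 = {stat:.2f}, p = {p:.4f}")
```

Under this assumption the statistic comes out at about 6.54, which agrees with the overall preference value in table 2; the same calculation on the ease-of-navigation counts (23 vs 50) gives about 9.99.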

Table 1.

Characteristics of research participants (N=77)

| Demographic characteristic (N=77) | N | % |
| --- | --- | --- |
| Age | | |
| <40 | 10 | 13 |
| 40–49 | 26 | 34 |
| 50–59 | 19 | 25 |
| 60–69 | 15 | 20 |
| 70+ | 3 | 4 |
| Refused | 4 | 5 |
| Race/ethnicity | | |
| Non-Hispanic black | 11 | 12 |
| Non-Hispanic white | 58 | 75 |
| Hispanic | 3 | 4 |
| Other | 1 | 1 |
| Missing | 3 | 4 |
| Education | | |
| College or more | 41 | 53 |
| Some college | 18 | 23 |
| High school or less | 12 | 16 |
| Missing | 6 | 8 |
| Household income | | |
| ≤US$75 000 | 31 | 40 |
| >US$75 000 | 34 | 44 |
| Missing | 12 | 16 |
| Healthcare coverage | | |
| Yes | 71 | 92 |
| No | 3 | 4 |
| Missing | 3 | 4 |
| Breast cancer characteristics | N | % |
| Number of years since breast cancer diagnosis | | |
| <1 year | 4 | 5 |
| 1 year | 16 | 21 |
| 2 years | 13 | 17 |
| 3 years | 12 | 16 |
| 4 years | 9 | 12 |
| 5 years | 3 | 4 |
| Missing | 20 | 26 |
| Stage at diagnosis | | |
| Stage 0 (ductal carcinoma in situ) | 12 | 16 |
| Stage 1 | 26 | 34 |
| Stage 2 | 22 | 29 |
| Stage 3 | 11 | 14 |
| Stage 4 | 3 | 4 |
| Multiple response | 2 | 3 |
| Missing | 1 | 1 |
| Stage of cancer treatment | | |
| Completed | 41 | 53 |
| Ongoing | 36 | 47 |

Table 2.

Perceptions of original and revised versions, overall (N=74) and in relation to order of site version presentation (N=36)

| Version comparison | N | Overall: original, N (%) | Overall: revised, N (%) | Overall test, χ2 | Original → Revised: original, N (%) | Original → Revised: revised, N (%) | Revised → Original: original, N (%) | Revised → Original: revised, N (%) | Order test†, χ2 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Overall preference | 74 | 26 (35) | 48 (65) | 6.54** | 8 (22) | 29 (78) | 18 (49) | 19 (51) | 5.85* |
| Would recommend | 74 | 29 (39) | 45 (61) | 3.46 | 10 (27) | 27 (73) | 19 (51) | 18 (49) | 4.53* |
| Clarity‡ | 73 | 29 (39) | 44 (59) | 3.08 | 11 (31) | 25 (70) | 18 (49) | 19 (51) | 9.23* |
| Ease of navigation‡ | 73 | 23 (31) | 50 (68) | 9.99** | 6 (17) | 30 (83) | 17 (46) | 19 (53) | 7.68 |
| Usefulness‡ | 72 | 26 (35) | 46 (62) | 5.56* | 9 (25) | 27 (75) | 17 (47) | 19 (53) | 4.86 |

*p≤0.05; **p≤0.01.

†The χ2 tests refer to differences across time and across groups.

‡‘Much’ and ‘somewhat’ categories were collapsed, so respondents were grouped by preference for one version or the other.

Table 3.

Attitudes toward original and revised versions

| Attitude* | Total N | Original version, mean (SD) | Revised version, mean (SD) | Mean difference (p) |
| --- | --- | --- | --- | --- |
| I felt pressured by site to register on it | 74 | 6.5 (3.42) | 7.0 (3.13) | 0.54 (0.216) |
| I felt pressured by site to join a clinical trial | 74 | 7.8 (2.96) | 8.3 (2.49) | 0.51 (0.171) |
| Purpose of this site was clear right away | 74 | 3.6 (2.64) | 3.0 (2.20) | −0.59 (0.064) |
| Obvious on homepage that this site is for people with breast cancer | 74 | 2.7 (2.47) | 2.7 (2.76) | 0.07 (0.834) |
| I wouldn't lose much by trying this site | 74 | 3.0 (2.64) | 2.9 (2.84) | −0.04 (0.899) |
| Gave me opportunity to find out about clinical trials near me without registering | 74 | 6.5 (3.45) | 4.2 (3.34) | −2.28 (<0.001) |
| This site offered enough background information on the matching process | 74 | 5.1 (2.75) | 4.0 (2.42) | −1.09 (0.011) |
| This site prepared me for what to expect before registering | 74 | 4.6 (2.78) | 3.7 (2.54) | −0.91 (0.021) |

*Scale is 1 (strongly agree) to 10 (strongly disagree). Lower numbers are associated with a greater level of agreement with the statement, whereas higher numbers indicate greater levels of disagreement with the statement.

Finally, the interviewer participant-observation data were used to describe the location of the user's first action on both the original and revised versions, and responses to open-ended survey questions were used to categorize and tabulate the specific likes and dislikes of the two versions. One researcher developed a list of categories, from which two other researchers coded the lists of likes and dislikes independently. Some statements contained multiple likes and dislikes, so some were given more than one code. The researchers compared findings and discussed discrepancies until they reached agreement. The difference in the number of responses was tabulated to compare reactions to the two versions (table 4).
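The tabulation step described above, in which coded responses are counted per version and then differenced, can be sketched as follows. The category labels and responses are hypothetical and are not study data; the helper names `tabulate_codes` and `difference_table` are introduced only for illustration.

```python
from collections import Counter


def tabulate_codes(coded_responses):
    """Count how often each category code appears; a response may carry several codes."""
    counts = Counter()
    for codes in coded_responses:
        counts.update(set(codes))  # count each code at most once per response
    return counts


def difference_table(original_counts, revised_counts):
    """Return {code: (original, revised, revised - original)} for every code seen."""
    codes = sorted(set(original_counts) | set(revised_counts))
    return {
        code: (
            original_counts[code],
            revised_counts[code],
            revised_counts[code] - original_counts[code],
        )
        for code in codes
    }


# Hypothetical coded responses, not study data
original = [["informative"], ["ease of use", "informative"], ["credible"]]
revised = [["informative", "tour"], ["ease of use"], ["ease of use", "tour"]]
table = difference_table(tabulate_codes(original), tabulate_codes(revised))
```

A positive difference means the category was mentioned more often for the revised version, and a negative difference means it was mentioned more often for the original version.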

Table 4.

Specific likes and dislikes (N=54)

| Open-ended responses | Original version, frequency (%) | Revised version, frequency (%) | Difference (revised − original) |
| --- | --- | --- | --- |
| Liked most | | | |
| Informative, thorough, information that's easy to follow | 19 (35) | 22 (41) | 3 |
| Ease in using version functions | 14 (26) | 22 (41) | 8 |
| Purpose of version (as intended to match women with trials) | 8 (15) | 10 (19) | 2 |
| Personal health record question and answer formatting, process | 3 (6) | 7 (13) | 4 |
| Credible version, resourceful links | 7 (13) | 3 (6) | −4 |
| Appearance (look and feel) | 4 (7) | 4 (7) | 0 |
| Personalized, specific, relevant to me | 4 (7) | 4 (7) | 0 |
| Layout (organization of version) | 3 (6) | 5 (9) | 2 |
| Convenient, can do on my own | 4 (7) | 2 (4) | −2 |
| Allows tour, can try version out ahead of time without registering | 0 (0) | 6 (11) | 6 |
| Output, results of match | 3 (6) | 3 (6) | 0 |
| Don't like | 5 (9) | 0 (0) | −5 |
| Safe/private | 1 (2) | 1 (2) | 0 |
| Liked least | | | |
| Not enough information/purpose of version unclear | 10 (19) | 5 (9) | −5 |
| Difficult to navigate/use functions | 8 (15) | 6 (11) | −2 |
| Nothing | 3 (6) | 11 (20) | 8 |
| Match results unsatisfying (not applicable, wrong location) | 8 (15) | 5 (9) | −3 |
| PHR question and answer process difficult/hard to follow | 6 (11) | 7 (13) | 1 |
| Want more information before login and registration | 8 (15) | 2 (4) | −6 |
| Over-informative/wordy/too much information | 5 (9) | 3 (6) | −2 |
| Registration process difficult/too long/cumbersome/confusing | 3 (6) | 4 (7) | 1 |
| Appearance of version | 4 (7) | 1 (2) | −3 |
| Credibility of information/sources/lack of safety | 2 (4) | 2 (4) | 0 |
| Layout/unorganized or overwhelming, too busy | 1 (2) | 2 (4) | 1 |

PHR, personal health record.

Results

The following results summarize information about the participants, their reactions to the two website versions they were presented, and an analysis of attitudes toward the two versions tested. Of the 77 participants, 74 answered the question about which version was most preferred after viewing both. Questions related to comparing the two versions are focused on these 74 participants.

Sample characteristics

Table 1 presents the sample's demographic and breast cancer history characteristics. The participants represented a wide range of ages, and the largest proportions were in their 40s (34%) or 50s (25%). Three-fourths of the participants were non-Hispanic white women (75%), half had a college-level education (53%), and the majority (92%) had healthcare coverage.

Study participants also represented a range of breast cancer experiences (table 1). Only women whose diagnosis was in the past 5 years were included in the study, and most were dealing with a diagnosis of 1–3 years in duration and breast cancer at stage 1 or 2. Half (53%) had completed their cancer treatment at the time of participation in the study.

Preferences

When looking at the overall sample, participants preferred the revised version nearly twice as often as the original version (65% vs 35%). Because of the unique research design, however, preferences for the two versions were compared by the order in which they were presented to participants (table 2). A significant order effect was found (p=0.02). Those who viewed the original version first preferred the revised version more than three times as often as the original (78% vs 22%; p=0.02) and indicated they would recommend it over the original version (p=0.03). The participants in this condition were also significantly more likely to find the revised version clearer than the original one (p=0.03).

Those who viewed the revised version first appeared to have similar rates of preference for the two versions, with half preferring the original and half the revised version. Their opinions about clarity, ease of navigation, and usefulness also did not differ for the original and revised versions. Preference also did not differ significantly by age, race/ethnicity, education, or income.

Table 3 presents participants' attitudes about the respective version's registration process, its intended purpose, and other features (lower scores indicate greater agreement). Overall, participants were significantly more likely to feel that the revised version better oriented them about clinical trials (mean difference=−2.28, p<0.001) and the matching process (mean difference=−1.09, p=0.011). They were also significantly more likely to feel better prepared for what to expect before registering (mean difference=−0.91, p=0.021). None of the other attitudes differed significantly across versions, although the mean scores trended in a more favorable direction for the revised version for the attitude related to the purpose being clear (p=0.064).

User likes and dislikes

Participant observation (N=35) and an analysis of open-ended survey responses (N=74) about likes and dislikes allowed further comparison of the original and revised versions and revealed more about the users' preferences and nature of their use of the two versions. When using the original version, participants in both conditions were most likely to select the registration button first (N=14). The next most commonly chosen link for both groups was the ‘About Clinical Trials’ hyperlink (N=11). When using the revised version, however, participants in both conditions were most likely to select to view the ‘Browse Trials’ area of the website first (N=18), an area not available to users of the original version. Another item unique to the revised version, ‘Take a Tour,’ was only selected by three people, two of whom were in the group seeing the original version first. Across groups, the second most common link chosen first on the revised version was the registration button (N=9); five of these participants were in the group that saw the original version first.

Participants provided their opinions about what they liked most and least about each version in response to two open-ended questions (table 4). Although several features that they liked were similarly cited (‘informative,’ ‘purpose,’ ‘appearance,’ and ‘relevance’), participants were more likely to volunteer that the revised version was easy to use and that they liked the format and process of filling in the health history.

Six of the users of the revised version mentioned liking the opportunity to try out the version before registering, but no original version users cited this feature. Instead, one participant reviewing the original version stated in response to what she liked least: ‘I had to register in order to get to the information I wanted. It would be nice to get some basic information to see if a person will want to go there.’ A different participant reviewing the revised version stated that she liked ‘that you could get an idea of trials currently underway before having to enter any detailed personal info.’ Interestingly, five participants answered that they did not like the original version at all when asked what they liked most about it. However, more than twice as many people mentioned credibility as what they liked most about the original version (N=7) as did so for the revised version (N=3).

When asked what they liked least about each version, users were also likely to volunteer that ‘nothing’ was a problem, with 11 (20%) of the users of the revised version unable to come up with any problem or concern, while only three (6%) had no concerns to report for the original version. Many more users stated that they disliked the lack of information about the process and the purpose of the original version than they did for the revised version.

An analysis of the comments submitted found a few statements where participants compared the two versions (table 5). Four participants who saw the original version after the revised one compared the original version favorably when asked what they liked most about it, but three others compared the original version less favorably than the revision. Among participants who saw the revised version second, seven compared it favorably with the original one. The most common themes in positive comments about the original version were the personal quotes and graphic elements (eg, ‘I liked the link to the art for recovery. Pleasant colors’; ‘I liked the message to patients’).

Table 5.

Comments comparing original and revised versions

| Version | Favorable comparison | Unfavorable comparison |

| Original |

|

|

| Revised |

|

N/A |

Discussion

Using advances in communication technology to accelerate accrual to clinical trials is one way in which cancer researchers can reach out to potential research participants. To be effective in the online arena, however, researchers must recognize that cognitive factors as well as technological factors will influence whether the online experience is successful.19 In this study, we used a set of empirical methods guided by experimental cognitive psychology20 and user-centered research21 to evaluate the effectiveness of improvements to an application designed to enable patients to seek clinical trials. The study built on previous research15 on clinical trial recruitment and usability and found that users were open to an internet-based clinical trial matching system when it prepared them for the experience.

In terms of preferences, the results of this study showed that the revised version of the website was overwhelmingly preferred overall and when viewed after the original version. Observations of the women who experienced the original version first revealed that many were visibly distressed at their experiences with this version, and several commented to the observer about their frustrations. On moving to the revised version, these women offered further comments on their relief at finally getting the information they wanted. It appears, therefore, that seeing the original version first provided women the opportunity to clearly identify the improvements they sought in their experiences using the original system. This is consistent with a contrast effect, a type of carryover effect in within-subjects designs in which a more positive condition presented after a more negative one is perceived more positively than usual.

Although the revised version was preferred most, there was a clear order effect. Participants who saw the revised version before the original liked the two versions equally. Here, the findings show that the experience of using the revised version first did not result in significantly better ratings once users experienced the original version. It is possible that the revised version, with its additional background information and features, inadvertently prepared participants to move on and view the original version with fewer frustrations. This effect is consistent with the notion of an assimilation carryover effect in within-subjects design: a poorer stimulus presented after a better stimulus might be perceived as better than it normally would be.22 It speaks to the importance of using a fully counterbalanced methodology to control for exposure effects in comparing design alternatives.

If this study had evaluated only a single group where the original version was presented ahead of the revised version, the numbers would lead to an overestimate of preference for the revised version. Therefore, overall preference likely lies between 51% and 78% in favor of the revised site. Although these data cannot provide a precise point estimate, we avoided the biased finding that would have resulted from a non-counterbalanced design.
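The 51% to 78% range quoted above follows directly from the counts in table 2. A minimal sketch of the arithmetic, using a hypothetical helper name `share`, is:

```python
def share(preferred, total):
    """Percentage of participants in a group who preferred the revised version."""
    return 100 * preferred / total


# Counts preferring the revised version, taken from table 2
original_first = share(29, 37)    # arm that saw the original version first
revised_first = share(19, 37)     # arm that saw the revised version first
pooled = share(29 + 19, 37 + 37)  # both arms combined

print(round(revised_first), round(pooled), round(original_first))  # prints: 51 65 78
```

The pooled figure of 65% sits between the two arm-specific values, which is why the counterbalanced estimate is less extreme than the estimate from the original-first arm alone.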

An analysis of user attitudes about the two versions demonstrated that the clinical trial recruitment system represented in the revised version better met end-users' needs and expectations by preparing them for what to expect and familiarizing them with the nature of the clinical trials that they could access. Consistent with earlier research,15 women found the lack of supporting information before registration on the original version a major barrier to accepting and wanting to use the search tool. Many participants who experienced the original version first reacted so negatively to the registration process that they did not want to continue using it at all in the experiment. The analysis of the users' first actions on each version showed that the original version provided few response options besides registering, whereas the revised version gave them the opportunity to browse trials and take a site tour. When online, patients' impressions of an institution or enterprise will be influenced by the nature and design of websites representing it.23 Providing different ways to view information about clinical trials and about the website before registration (eg, browse and tour features) is consistent with enabling self-determination, which is crucial to helping patients regain autonomy over their personal health.24

These findings suggest that users may have difficulty adequately critiquing interventions and tools with which they are just becoming familiar when these are presented in isolation from other examples. Without adequate time and alternative designs to evaluate, their review is likely to be preliminary and incomplete.

Implications and strengths

This research has implications for the design of other sites that provide a service to registered users. People may be concerned about entering personal information into websites because of issues of privacy and confidentiality, as we found in the initial user-centered research.15 Such sites should include information and resources that reduce uncertainty about the nature and purpose of the service itself before asking people to register. Including design features to boost source credibility with clear sponsorship (ie, NCI and UCSF logos in the revised version) may further reassure participants. Browse and tour functions are recommended to orient potential users to the service. The original developers of the site had little or no expectation that registration would be off-putting, so this research also supports pilot testing of registration procedures before they are introduced, so that potential participants are not inadvertently offended.

Participants' specific likes and dislikes regarding an online system aimed at improving recruitment to clinical trials showed that ‘ease of use’ was one of the most noticeable differences between their experiences with the two versions of the online tool. The importance of ease of use as an evaluative dimension is not surprising given the preponderance of experience in online web environments.16 In the area of commercial websites, a site that is not easy to use and that does not provide users with a perceived sense of value within the first few moments of usage may be abandoned in favor of friendlier sites just a few mouse clicks away.23

This study also has implications for future user-centered research. For example, testing alternative designs against each other is critical when determining users' preferences. Without the ability to compare the two versions against each other (ie, with only one version to comment on), the original version might have appeared to fare well with women because they had no reference on which to base their reactions. When they were given a second version of the site, the comparison highlighted what they wanted in order to make the final site acceptable. Therefore, it is essential that at least two options are provided to users when testing ideas during developmental research. Evaluating order effects is also important in future research of this nature. Studies that do not use a counterbalanced design are susceptible to biased findings. In the case of this study, testing only by presenting the original version first and the revised version second would have led to an exaggerated estimate of how much the revised version was preferred.

In terms of study procedures, a strength of the study protocol was that it was designed to approximate a real-world experience by encouraging users to view the versions as if they had come across them on their own. The data collection process was also deliberately slowed so that participants had time to explore each version before choosing to register and moving through the system. Although the research process could not fully represent what would happen in reality, this strategy was necessary to give participants the time needed to develop an understanding of a complex issue (clinical trials) and system (matching system for clinical trials). Other user-centered testing of intricate eHealth tools, especially those requiring registration, should allow sufficient time for users to develop an understanding of how they operate.17

Limitations

This study had limitations related to the sample and the study procedures. The sample was highly educated and had higher than average incomes. As a result, these women may have been more open to trials, as evidenced by the 7.6 score on this variable. The majority also had health insurance and may have assumed (correctly or incorrectly) that trial participation was a covered benefit, which may have affected their attitudes overall. This sample had few racial or ethnic minority participants, so further research is needed to assess the acceptability of eHealth clinical trial search tools among other populations, such as the traditionally underserved.

Conclusions

This research built on previous user-centered research by applying an empirical, quantitative research process and demonstrated that assessing the adequacy of complex interactive tools and technologies requires (1) offering users alternatives to compare, and (2) presenting alternatives in variable order. While we cannot precisely assess user preferences across the two versions, the study's counterbalanced design avoided bias and an overestimate of preference for one version over another. What the findings suggest, however, is that design changes and added features improved the experience for many of the participants. Women will still need some level of interest in clinical trials and in such a tool to find it on their own and to spend the time using it. At the same time, with changes similar to those in the revised version, more women will be able to satisfy their information needs, feel ready to use sites such as these, and find clinical trials for which they qualify.

The BCT project has gone on to integrate many of the findings from this user-centered research. In 2008, http://BreastCancerTrials.org/ was upgraded and launched as a nationwide, non-profit service under the auspices of Quantum Leap Healthcare Collaborative with the following improvements to the user interface: the ability to use the matching service anonymously, the ability to browse trials without registering, and medical history questions tailored to the needs of different patients. The project has also integrated further improvements, such as (a) simplified formatting of trial summaries so users can quickly review a trial summary along with requirements for study participation before clicking through for more detailed information and (b) presentation of research site information on a list or Google Map. Since 2009, users who choose to register and save their health history are also able to get email alerts when a new trial matches their needs (Trial Alert Service) and to allow research staff to access and preview their health history prior to a first phone interview (SecureCONNECT). In the 18 months between January 2009 and June 2010, BCT received 25 206 visits, and 3563 users started a health history, with 2206 (62%) completing it and matching to trials. The project team is committed to continuous improvement and is currently redesigning the site with improved educational content.

Acknowledgments

The National Cancer Institute's Office of Market Research and Evaluation funded the study design, collection, management, analysis, and interpretation of the data, and preparation, review, and approval of the manuscript. The systems tested were developed collaboratively by: NCI's Center for Biomedical Informatics and Technology, which manages the cancer Biomedical Informatics Grid (caBIG) initiative; NCI's Office of Communications and Education, which manages NCI's clinical trials database PDQ; and the University of California, San Francisco, Comprehensive Cancer Center and the UCSF Center of Excellence for Breast Cancer Care. We also thank: Dr Gretchen Kimmick, oncologist from Duke University Medical Center, Durham, North Carolina; Ms Regina Heroux, Cancer Outreach Manager, Rex Hospital, Raleigh, North Carolina; and our other collaborators at NCI and RTI who helped with this effort (Farrah Darbouze; Brenda Duggan; Mary Jo Deering, PhD; Katherine Treiman, PhD; Tania Fitzgerald; and Claudia Squire, MS).

Footnotes

Funding: National Cancer Institute, NIH, 6116 Executive Blvd, Suite 400, Rockville, MD 20852.

Ethics approval: This study was conducted with the approval of the institutional review boards at RTI International, Rex Hospital, and Duke University Medical Center. The protocol was granted clearance under NCI's OMB generic clearance package for pretesting and formative research (#0925-0046-01, expiry date 1/31/2010).

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. U.S. Department of Health and Human Services National Institutes of Health. National Cancer Institute. The NCI Strategic Plan for Leading the Nation to Eliminate the Suffering and Death Due to Cancer. NIH Publication No. 06-5773, Washington, DC: US Department of Health and Human Services, National Institutes of Health, 2006

- 2. Mathews C, Restivo A, Raker C, et al. Willingness of gynecological cancer patients to participate in clinical trials. Gynecol Oncol 2009;112:161–5

- 3. Fernandez CV, Barr RD. Adolescents and young adults with cancer: An orphaned population. Paediatr Child Health 2006;11:103–6

- 4. Go RS, Frisby KA, Lee JA, et al. Clinical trial accrual among new cancer patients at a community-based cancer center. Cancer 2006;106:426–33

- 5. Wei SJ, Metz JM, Coyle C, et al. Recruitment of patients into an internet-based clinical trials database: the experience of OncoLink and the National Colorectal Cancer Research Alliance 2004;22:4730–6

- 6. Metz JM, Coyle C, Hudson C, et al. An Internet-based cancer clinical trials matching resource. J Med Internet Res 2005;7:e24 http://www.jmir.org/2005/3/e24/

- 7. Atkinson NL, Saperstein SL, Massett HA, et al. Using the Internet to search for cancer clinical trials: A comparative audit of clinical trial search tools. Contemp Clin Trials 2008;29:555–64

- 8. Embi PJ, Jain A, Clark J, et al. Effect of a clinical trial alert system on physician participation in trial recruitment. Arch Intern Med 2005;165:2272–7

- 9. Mills EJ, Seely D, Rachlis B, et al. Barriers to participation in clinical trials of cancer: a meta-analysis and systematic review of patient-reported factors. Lancet Oncol 2006;7:141–8

- 10. Comis RL, Miller JD, Aldigé CR, et al. Public attitudes toward participation in cancer clinical trials. J Clin Oncol 2003;21:830–5

- 11. Jenkins V, Fallowfield L. Reasons for accepting or declining to participate in randomized clinical trials for cancer therapy. Br J Cancer 2000;82:1783–8

- 12. Mancini J, Genève J, Dalenc F, et al. Decision-making and breast cancer clinical trials: How experience challenges attitudes. Contemp Clin Trials 2007;28:684–94

- 13. Shieh C, Mays R, McDaniel A, et al. Health literacy and its association with the use of information sources and with barriers to information seeking in clinic-based pregnant women. Health Care Women Int 2009;30:971–88

- 14. Nielsen J. Mental models for search are getting firmer. Useit.com 2005. http://www.useit.com/alertbox/20050509.html (accessed 11 Sep 2006).

- 15. Atkinson NL, Massett HA, Mylks C, et al. User-centered research on breast cancer patient needs and preferences on an internet-based clinical trials matching system. J Med Internet Res 2007;9:e13 http://www.jmir.org/2007/2/e13/

- 16. U.S. Department of Health and Human Services. Research-based web design & usability guidelines. Washington, DC: US Department of Health and Human Services, 2006

- 17. Kushniruk AW, Patel VL. Cognitive and usability engineering methods for the evaluation of clinical information systems. J Biomed Inform 2004;37:56–76

- 18. Willis GB. Cognitive Interviewing: A Tool for Improving Questionnaire Design. Thousand Oaks, Calif: Sage Publications, 2005

- 19. National Research Council. Computational Technology for Effective Health Care: Immediate Steps and Strategic Directions. Washington: National Academies Press, 2009

- 20. Myers A, Hansen CH. Experimental psychology. 6th edn. Florence, KY: Wadsworth Publishing, 2005

- 21. Barnum CM. Usability testing and research. New York: Longman, 2002

- 22. Sternberg RJ, Roediger HL, III, Halpern DF, eds. Critical thinking in psychology. Cambridge: Cambridge University Press, 2007

- 23. Nielsen J, Tahir M. Homepage Usability: 50 Websites Deconstructed. Indianapolis, IN: New Riders, 2002

- 24. Hesse BW. Enhancing consumer involvement in health care. In: Parker JC, Thornson E, eds. Health communication in the new media landscape. New York, NY: Springer Publishing Company, 2008:119–49