Abstract

Abbreviation use is a preventable cause of medication errors. The objective of this study was to test whether computerized alerts designed to reduce medication abbreviations and embedded within an electronic progress note program could reduce these abbreviations in the non-computer-assisted handwritten notes of physicians. Fifty-nine physicians were randomized to one of three groups: a forced correction alert group; an auto-correction alert group; or a group that received no alerts. Over time, physicians in all groups significantly reduced their use of these abbreviations in their handwritten notes. Physicians exposed to the forced correction alert showed the greatest reductions in use when compared to controls (p=0.02) and the auto-correction alert group (p=0.0005). Knowledge of unapproved abbreviations was measured before and after the intervention and did not improve (p=0.81). This work demonstrates the effects that alert systems can have on physician behavior in a non-computerized environment and in the absence of knowledge.

Introduction

Medication errors are responsible for a large number of adverse drug events in patients each year, and the use of medication abbreviations accounts for a subset of these errors.1–3 For years, professional organizations and regulatory agencies have emphasized the danger of medication abbreviations and have mandated the elimination of the most error-prone abbreviations in medical documentation.4–7 Because the majority of abbreviation errors originate during medication prescribing,8 strategies to reduce abbreviations have largely focused on education to modify physician documentation.9–11 Promulgation of a ‘Do Not Use’ list of abbreviations created by the Institute for Safe Medication Practices, included in the National Patient Safety Goals, and endorsed by the Joint Commission4 has served as the primary educational campaign, but compliance with this practice among hospital staff is poor.12

From 2004 to 2006, 643 151 medication errors were reported to the United States Pharmacopeia MEDMARX program from 628 facilities, and 29 974 (4.7%) of these errors involved abbreviation use.8 Eighty-one per cent of the abbreviation errors occurred during medication prescribing, and 0.3% of errors resulted in patient harm. While a direct association between abbreviations and medication errors has been established, little is known about the best ways to eliminate or reduce abbreviation use.

Medication errors, and in some settings adverse drug events, have been reduced with the adoption of computerized provider order entry (CPOE) and clinical decision support systems (CDSS).13 14 However, despite widespread acceptance of the benefits of health information technology and national agendas to expand their use,15 16 in 2008 only 17% of US hospitals had adopted CPOE.17 As a result, opportunities to introduce medication abbreviations into handwritten documentation remain a source of medication errors and patient harm.

Although a direct link between abbreviations in handwritten notes and medication prescribing errors has not been established, written documentation in the form of handwritten notes and electronic entries with free text is capable of introducing abbreviations that can be misinterpreted and cause errors.18 19 As the integration of electronic medical records expands nationally, it is important to understand how computerized alerts and clinical decision support influence the knowledge and behaviors of healthcare professionals. Given the paucity of research around electronic interventions to decrease unsafe medication abbreviation use, we conducted a randomized-controlled trial to evaluate the effects of computerized alerts designed to reduce unapproved abbreviations on the frequency of use of these abbreviations in an electronic progress note system and in the non-computer-assisted handwritten documentation of physicians.

Methods

Study design overview

This study was conducted between July 2006 and June 2007. All internal medicine interns (N=59) at the Hospital of the University of Pennsylvania enrolled in the study at the beginning of their internship. The University of Pennsylvania Institutional Review Board approved the study and granted a waiver of written informed consent. As a condition of the Institutional Review Board approval, participating interns were told that they were part of an ongoing study to examine the effects of computerized interventions designed to reduce unapproved abbreviations but given minimal information about the study. Specific details of the study were withheld to avoid biasing the results. No sources of external funding supported this investigation.

Overview of information systems and medical records at the study site

The hospital has a CPOE system for physician orders and diagnostic test results. The inpatient medical record is a hybrid of electronic and handwritten documentation. At the time of this study, all history and physical exams (H & Ps) were handwritten, and daily progress notes were created using a customized electronic progress note template. These templates were created within a data-storage program at the University of Pennsylvania (Medview, Microsoft ASP.NET v1.1). All clinical data including medications was entered into the computer by hand and copied forward for daily editing. Progress notes were printed daily and placed into the paper medical record where attending physicians could review and addend them by hand.

Design of the clinical decision support system

The authors of this study designed the clinical decision-support system. Alerts were integrated into the customized electronic progress note templates. The progress note application was modified with regular expression pattern-matching code on the client and server to recognize abbreviations from the Joint Commission's ‘Do Not Use’ abbreviation list4 anywhere in the text and medication lists of the notes, and to generate an alert based on the participant's study group assignment. The application tracked the number of alerts generated for each note. The ‘Do Not Use’ abbreviation list includes: QD, QOD, MSO4, MgSO4, U, IU, trailing zeros, and naked decimal points (table 1). The abbreviation ‘MS’ (morphine sulfate) was not included in our study because we believed that it would reduce the specificity of the alert system. ‘MS’ within medical record documentation is commonly used to denote terms other than morphine sulfate such as mental status, mitral stenosis, or multiple sclerosis. Since we could not isolate the alert to the medication list, we believed that including it would cause alerts for the non-medication ‘MS’ terms and lead to documentation errors and clinician frustration.
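As a rough illustration of the pattern-matching step described above, the following Python sketch flags the listed notations in free text. The study's application ran inside an ASP.NET program and its actual expressions are not reported, so every pattern, every name (`DO_NOT_USE_PATTERNS`, `find_unapproved`), and the example note text are assumptions made for illustration only.

```python
import re

# Hypothetical patterns, one per notation named in the text.
# 'MS' is deliberately omitted, mirroring the study's decision.
DO_NOT_USE_PATTERNS = {
    "QD": re.compile(r"\bq\.?d\b", re.IGNORECASE),
    "QOD": re.compile(r"\bq\.?o\.?d\b", re.IGNORECASE),
    "MSO4": re.compile(r"\bMSO4\b", re.IGNORECASE),
    "MgSO4": re.compile(r"\bMgSO4\b", re.IGNORECASE),
    # 'IU' not preceded by a letter, e.g. '5000 IU' or '5000IU'.
    "IU": re.compile(r"(?<![A-Za-z])IU\b"),
    # A lone 'U' directly after a dose number, e.g. '10 U' or '10U'.
    "U": re.compile(r"(?<=\d)\s?U\b", re.IGNORECASE),
    # Whole-number doses written as 'X.0' (simplified; '1.50' is not caught).
    "trailing zero": re.compile(r"\b\d+\.0+\b"),
    # A decimal point with no digit in front of it, e.g. '.125'.
    "naked decimal point": re.compile(r"(?<![\d.])\.\d+"),
}


def find_unapproved(text):
    """Return (label, matched_text) pairs in order of appearance."""
    hits = []
    for label, pattern in DO_NOT_USE_PATTERNS.items():
        for match in pattern.finditer(text):
            hits.append((match.start(), label, match.group().strip()))
    hits.sort()
    return [(label, matched) for _, label, matched in hits]


note = "metoprolol 25 mg QD, insulin 10 U qhs, warfarin 5.0 mg, digoxin .125 mg"
for label, matched in find_unapproved(note):
    print(f"{label}: {matched!r}")
```

Because no pattern targets ‘MS’, a phrase such as "MS changes noted" produces no alert in this sketch, which is the specificity trade-off the paragraph describes.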

Table 1.

Official ‘Do Not Use’ List of Abbreviations from the Joint Commission4

| Do not use* | Potential problems | Use instead |
| --- | --- | --- |
| U (unit) | Mistaken for ‘0’ (zero), the number ‘4’ (four) or ‘cc’ | Write ‘unit’ |
| IU (international unit) | Mistaken for IV (intravenous) or the number 10 (ten) | Write ‘International Unit’ |
| Q.D, QD, q.d, qd (daily) | Mistaken for each other. Period after the Q mistaken for ‘I’ and the ‘O’ mistaken for ‘I’ | Write ‘daily’ |
| Q.O.D., QOD, q.o.d., qod (every other day) | Mistaken for each other. Period after the Q mistaken for ‘I’ and the ‘O’ mistaken for ‘I’ | Write ‘every other day’ |
| Trailing zero (X.0 mg) | Decimal point is missed | Write X mg |
| Lack of leading zero (.X mg) | Decimal point is missed | Write 0.X mg |
| MS | Can mean morphine sulfate or magnesium sulfate; confused for one another | Write ‘morphine sulfate’ |
| MSO4 and MgSO4 | Can mean morphine sulfate or magnesium sulfate; confused for one another | Write ‘magnesium sulfate’ |

*Applies to all orders and all medication-related documentation that is handwritten (including free-text computer entry) or on preprinted forms.

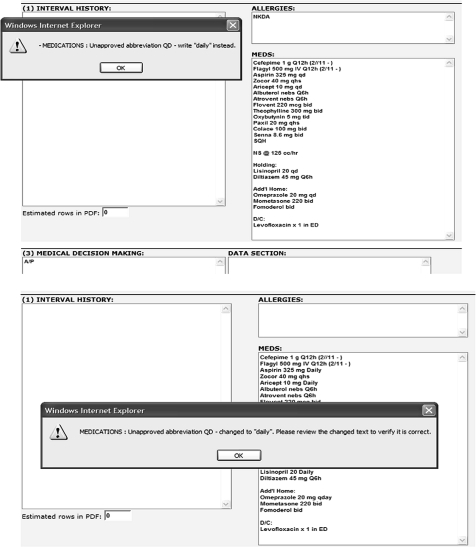

Randomization

Fifty-nine interns were randomized to one of three study arms using a computer-generated random numbers table. Group 1 received a forced or ‘hard-stop’ alert that appeared when interns attempted to enter unapproved abbreviations into the electronic progress notes. This alert identified the unapproved abbreviation(s), informed interns of the correct non-abbreviated notation, and forced them to correct the abbreviation before allowing them to save or print their note (figure 1). Group 2 also received an alert when an unapproved abbreviation was entered, but instead of forcing the interns to make a correction, an autocorrection feature displayed the correction and automatically replaced the abbreviation with the acceptable non-abbreviated notation (figure 1). Group 3 was a control group and received no alerts. The alert intervention was introduced 3 months after the study began to allow for observation of baseline medical record documentation practices (figure 2).
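The three arms differ only in what happens when a note containing an unapproved abbreviation is saved. The sketch below is one hedged way to express that logic in Python; the group labels, the `save_note` function, and the two-entry `CORRECTIONS` list are hypothetical and cover only QD and QOD for brevity. It is not the study's implementation.

```python
import re

# Hypothetical replacement rules for two abbreviations (illustrative only).
CORRECTIONS = [
    (re.compile(r"\bq\.?o\.?d\b", re.IGNORECASE), "every other day"),
    (re.compile(r"\bq\.?d\b", re.IGNORECASE), "daily"),
]

GROUPS = {"hard_stop", "auto_correct", "control"}  # assumed labels


def save_note(text, group):
    """Simulate one save attempt.

    Returns (saved, text, alerts): whether the note was saved, the text
    after any automatic replacement, and the number of alerts counted.
    """
    if group not in GROUPS:
        raise ValueError(f"unknown group: {group}")

    found = [m.group() for pattern, _ in CORRECTIONS for m in pattern.finditer(text)]
    if group == "control" or not found:
        # Control interns never see an alert; clean notes save normally.
        return True, text, 0

    if group == "hard_stop":
        # Forced correction: refuse to save until the author edits the note.
        return False, text, len(found)

    # Auto-correction: replace each abbreviation and let the save proceed.
    for pattern, replacement in CORRECTIONS:
        text = pattern.sub(replacement, text)
    return True, text, len(found)


print(save_note("aspirin 81 mg QD", "hard_stop"))
print(save_note("aspirin 81 mg QD", "auto_correct"))
print(save_note("aspirin 81 mg QD", "control"))
```

In the hard-stop branch the author has to retype the notation, whereas the auto-correction branch completes the save without any manual edit.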

Figure 1.

Examples of computerized alert screens used in the intervention. (Top) Example of alert with forced functionality (‘hard stop’). (Bottom) Example of alert with an auto-correction feature.

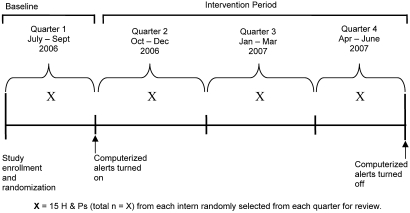

Figure 2.

Study design overview.

Participants did not receive any training sessions about the computerized enhancements and were not informed of their study-group assignment. All groups were exposed to the hospital's standard education for unapproved abbreviations that consisted of reminders to avoid unapproved abbreviations on printed medical note templates.

Primary outcomes

Retrospective reviews of the medication lists within interns' non-computer-assisted handwritten H & Ps were performed at study conclusion. The medication lists were reviewed to identify the presence or absence of the seven previously defined unapproved abbreviations, and an audit tool was developed to measure the frequency of these abbreviations. In order to estimate the opportunity for an abbreviation error, we had to define the frequency of an absence of the abbreviation. This absence was defined as the frequency with which a correct notation (non-abbreviation) was used. The total opportunity for error was the sum of all present and absent abbreviations. The percentage of unapproved medication abbreviations was defined as the number of abbreviation errors divided by the opportunity for error. Four study investigators (SG, JM, AL, SA) independently reviewed 100 H & Ps to assess reliability of the audit tool. One study investigator (SG) reviewed the remaining H & Ps after reliability statistics were obtained. All reviewers were blinded to the participants' study-group assignment.
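The outcome definition above reduces to a simple ratio, sketched here in Python. The function name `unapproved_rate` is an assumption; the example counts are the control group's Quarter 1 values from table 3 (191 unapproved abbreviations among 317 opportunities, which implies 126 correct notations).

```python
def unapproved_rate(n_unapproved, n_correct):
    """Proportion of opportunities in which an unapproved abbreviation was used.

    Opportunity for error = unapproved abbreviations present
                          + correct (non-abbreviated) notations.
    """
    opportunities = n_unapproved + n_correct
    if opportunities == 0:
        raise ValueError("no opportunities for error in this sample")
    return n_unapproved / opportunities


# Control group, Quarter 1 (table 3): 191 of 317 opportunities.
rate = unapproved_rate(n_unapproved=191, n_correct=317 - 191)
print(round(rate, 2))  # 0.6
```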

A maximum of 15 H & Ps were randomly selected for each participant during each of four study time periods to determine the rate of unapproved abbreviations used over time (figure 2). The numbers of available H & Ps per quarter varied because interns were on vacation, on outpatient rotations, or on rotations at affiliated hospitals. If an intern did not have 15 H & Ps available during a study period, the total number of available H & Ps for that time period was used in the analysis. Interns spent an average of 7 months on inpatient rotations at the hospital where the study was performed.

Secondary outcomes

Secondary outcomes included the frequency of computerized alerts over time and intern knowledge of unapproved abbreviations before and after the study intervention. Knowledge was measured by a test created by the investigators in which interns were asked to identify error-prone abbreviations (unapproved) versus acceptable abbreviations (approved) out of a list of 30 total abbreviations in random order. Additional test items surveyed interns about prior exposure to medication safety education, experiences during medical school (pre-test), and their attitudes about the alerts (post-test).

Statistical analysis

Baseline characteristics among the three groups were compared using the Fisher exact test for categorical variables and Kruskal–Wallis test for continuous variables. Comparisons of the percentages of unapproved medication abbreviations at follow-up periods were done by fitting a pooled logistic regression model which included group indicator, indicator of follow-up time, and their interaction terms (group×follow-up time) as predictors. In this model, each H & P was considered a separate record. Compared to a method in which the percentages for each subject were calculated first and then compared across groups, this method may result in a better precision of estimates by putting less weight on subjects who had fewer H & Ps. Robust variance estimation with a first-order autoregressive (AR (1)) working correlation structure was used to account for repeated measurements within each subject. Both estimated percentages for each group at each follow-up period and the p values for comparisons of the estimated percentages between groups and their change within each group were reported. κ Statistics were used to assess the degree of congruency among four raters of the medication list audit tool. Pre- and post-knowledge differences between the groups were assessed using the Wilcoxon signed-rank test and overall with the Kruskal–Wallis test. All analyses were carried out in SAS version 9.1.
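The weighting argument in this paragraph can be seen with a toy comparison. The Python sketch below is not the pooled logistic regression itself; it only contrasts a pooled proportion with an unweighted mean of per-subject proportions. The two subjects and their counts are hypothetical, chosen so that one subject contributes far fewer records than the other.

```python
def pooled_rate(subjects):
    """Pool all records: total errors divided by total opportunities."""
    total_errors = sum(errors for errors, _ in subjects)
    total_opportunities = sum(opps for _, opps in subjects)
    return total_errors / total_opportunities


def mean_of_subject_rates(subjects):
    """Compute each subject's rate first, then average with equal weight."""
    rates = [errors / opps for errors, opps in subjects]
    return sum(rates) / len(rates)


# Hypothetical (errors, opportunities) per subject; not study data.
subjects = [
    (6, 30),  # many records, rate 0.20
    (4, 4),   # very few records, rate 1.00
]

print(round(pooled_rate(subjects), 3))            # 0.294
print(round(mean_of_subject_rates(subjects), 3))  # 0.6
```

The subject with only four opportunities pulls the equal-weight average up to 0.6, while the pooled estimate stays near the rate of the subject who supplied most of the records.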

Results

One hundred per cent (n=59) of interns randomized completed the study and had primary data available for review. Interns had previously attended 23 different medical schools, and their characteristics are listed in table 2. There was no difference among the three groups in their ability to correctly identify unapproved medication abbreviations at baseline (p=0.20).

Table 2.

Characteristics and baseline knowledge of unapproved medication abbreviations among study participants (interns)

| | Control group (N=19) | Hard stop alert group (N=20) | Auto-correction alert group (N=20) | Total (N=59) | p Value |
| --- | --- | --- | --- | --- | --- |
| Men, n (%) | 9 (47%) | 7 (35%) | 9 (45%) | 25 (42%) | 0.80 |
| Received education in medical school about medication errors related to abbreviations, n (%) | 11 (58%) | 15 (75%) | 9 (45%) | 35 (59%) | 0.15 |
| Involved in the care of a patient who experienced a medication error, n (%) | 10 (53%) | 13 (65%) | 13 (65%) | 36 (61%) | 0.67 |
| Baseline knowledge of error-prone abbreviations, mean (IQR)* | 8.0 (3.46 to 11) | 8.7 (1.87 to 10) | 9.2 (2.78 to 11) | 8.6 (2.77 to 11) | 0.20 |

*Number of unapproved abbreviations identified correctly out of a list of 11.

IQR, interquartile range.

The median number of H & Ps per study period was 12 (range 0–39). Of the 236 study periods available (59 interns×4 study periods each), there were 13 interns (four control, four hard stop, and five auto-correct) who had one study period with zero H & Ps to review. Based on these numbers, a total of 2371 H & Ps were evaluated with a mean of 42 H & Ps per intern (median=41, range 20–59).

Overall there were 4191 total opportunities to use a ‘Do Not Use’ abbreviation. Unapproved abbreviations were used 1832 times or 44% of the time. The median number of abbreviation errors per H & P was 2.5 (range 0–17). The frequency of errors for each abbreviation type was as follows: QD 1672 (91.4%); U 92 (5%); QOD 39 (2.1%); naked decimal point: 20 (1.1%); trailing zero: 5 (0.3%); MgSO4 1 (0.06%); MSO4 1 (0.06%); IU 0 (not written in any H & Ps). Many H & Ps contained more than one abbreviation error. The inter-rater agreement for the medication list audit tool was excellent (κ=1).

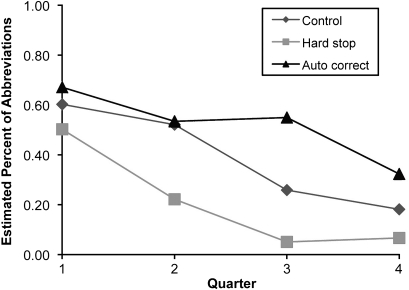

Primary outcome

The percentages of unapproved medication abbreviations in each quarter are shown in table 3. At baseline (Quarter 1), there were no significant differences in the frequency of unapproved abbreviations in the non-computer-assisted handwritten notes among the three study groups (p=0.54) (table 3). Interns in each group significantly reduced their use of unapproved abbreviations in non-computer-assisted handwritten notes over time (control: p=0.004; hard stop: p<0.0001; auto-correction: p=0.04) (figure 3). When compared with controls, interns in the hard-stop alert group had a lower rate of unapproved abbreviations in their non-computer-assisted handwritten notes during the alert intervention (p=0.02), whereas interns in the auto-correction group did not (p=0.21). Interns in the hard-stop alert group had a significantly lower rate of unapproved abbreviations in their non-computer-assisted handwritten notes when compared with interns in the auto-correction group (p=0.0005).

Table 3.

Summary of the percentage of unapproved medication abbreviations in handwritten notes among interns exposed to no alerts, hard stop alerts, or auto-correction alerts in an electronic note writing program

| Follow-up time | Control: opportunities for error (n) | Control: unapproved abbreviations (n) | Control: unapproved (proportion) | Hard stop: opportunities for error (n) | Hard stop: unapproved abbreviations (n) | Hard stop: unapproved (proportion) | Auto-correction: opportunities for error (n) | Auto-correction: unapproved abbreviations (n) | Auto-correction: unapproved (proportion) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Quarter 1 (baseline) | 317 | 191 | 0.60 | 426 | 214 | 0.50 | 231 | 155 | 0.67 |
| Quarter 2 | 386 | 188 | 0.49 | 299 | 79 | 0.26 | 182 | 99 | 0.54 |
| Quarter 3 | 281 | 63 | 0.22 | 373 | 31 | 0.08 | 252 | 150 | 0.60 |
| Quarter 4 | 366 | 49 | 0.13 | 324 | 36 | 0.11 | 271 | 94 | 0.35 |

Figure 3.

Comparisons of estimated percentages of unapproved abbreviations in handwritten notes across different groups. Error rate for Quarter 1 (baseline) was estimated using the raw data; error rates for Quarters 2–4 were estimated using a pooled logistic regression model which includes group indicator, indicator of follow-up time, and their interaction terms (group×follow-up time), and specifies an autoregressive working correlation matrix. p Value for comparisons of error rates across the three groups at baseline is 0.54. p Values for trend test within each group are 0.004, <0.0001, and 0.04 for control, hard stop, and auto-correction group respectively. p Value for comparisons of the error rate between hard stop and control groups is 0.02, between auto-correction and control groups is 0.21, and between auto-correction and hard stop groups is 0.0005.

Secondary outcome

The number of alerts that fired decreased over time (p<0.01) in both alert intervention groups. There was a trend toward fewer alerts firing in the ‘hard-stop’ group compared with the auto-correction group (p=0.06).

Forty-seven interns (80%) completed the knowledge test at study conclusion. Knowledge of unapproved abbreviations did not improve after the alert intervention. At baseline, interns correctly identified 8.6 (range 5–11; 73% correct) unapproved abbreviations compared with 9.0 (range 5–11; 82% correct) after the intervention. This was true for the entire sample (p=0.81), within individual groups (hard-stop, p=0.67; auto-correction, p=0.09; control, p=0.31), and between groups (p=0.39). Intern attitudes about the alerts were assessed in the post-test. Among interns who received alerts, nine (26%) believed that the alerts interfered with their ability to efficiently complete their documentation, 14 (41%) did not, and 11 (32%) were neutral. No attitude differences were detected between the two alert groups.

Power analysis

Repeated measurements in this study allowed for an increase in statistical power to detect treatment differences among study conditions. The correlation between two successive measurements was 0.62. In randomized trials with repeated measurements, an important determinant of the minimum detectable difference is the design effect, which is defined as 1+ICC(k−1), where ICC is the intraclass correlation coefficient, that is, the correlation between two successive measurements, and k is the number of repeated measurements. The three repeated measurements in this study yielded a design effect of 2.24. The effective sample size for each group is 20×3/2.24=27. Using an estimated SD of the percentage of unapproved abbreviations of 0.5 (table 3), the study has 80% power to detect a difference in percentages of abbreviations of 0.39 between groups with a type I error of 0.05.
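The arithmetic in this paragraph can be retraced with a short Python sketch. The design effect and effective sample size follow directly from the stated formula. The minimum detectable difference below uses a standard two-sample normal approximation, which is an assumption: the text does not say which formula the authors used, and this approximation gives about 0.38 rather than the reported 0.39.

```python
from math import sqrt
from statistics import NormalDist

# Values stated in the text.
icc = 0.62          # correlation between two successive measurements
k = 3               # repeated measurements per intern
n_per_group = 20    # interns per group
sd = 0.5            # estimated SD of the outcome
alpha = 0.05        # two-sided type I error
power = 0.80

# Design effect: 1 + ICC(k - 1).
design_effect = 1 + icc * (k - 1)

# Effective sample size per group: (interns x measurements) / design effect.
n_effective = n_per_group * k / design_effect

# Assumed formula: two-group comparison of means, normal approximation.
z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
z_beta = NormalDist().inv_cdf(power)
min_detectable = (z_alpha + z_beta) * sd * sqrt(2 / n_effective)

print(f"design effect: {design_effect:.2f}")
print(f"effective n per group: {n_effective:.1f}")
print(f"minimum detectable difference (approx.): {min_detectable:.2f}")
```

The small gap between this approximation and the published 0.39 may reflect rounding or a t-based calculation; the source does not specify.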

Discussion

This randomized-controlled trial compared two types of computerized alerts designed to reduce the use of unapproved medication abbreviations by physicians. We demonstrated that alerts embedded within an electronic progress note program reduced the use of abbreviations within the electronic program (as measured by frequency of alerts fired) and within the non-computer-assisted handwritten H & Ps authored by physicians over the same time period. Alerts with a forced correction feature decreased the use of abbreviations to a much greater extent than alerts with an auto-correction feature. Moreover, an unanticipated but particularly interesting finding in our study was that reductions in abbreviation use were observed in a control group who were unexposed to alerts, but who were exposed to the overall study environment.

Eliminating error-prone medication abbreviations has been extremely challenging for hospitals, and there are very few effective interventions in the literature for this vexing problem. An educational intervention designed to reduce prescribing errors in the handwritten medication orders of residents reduced overall prescribing errors among surgery but not medicine residents.11 Enforcement strategies at the level of medical staff leadership proved more effective than education alone in a single study10; however, enforcing physician accountability for documentation skills is difficult. Given the strong and repeated association between abbreviation use and medication errors,3 8 it will be necessary and important for healthcare leaders to use multiple strategies to improve this unsafe and therefore unacceptable practice. Health information technology is just one of those strategies. As demonstrated in this study, a clinical decision-support system designed to reduce abbreviations may be an effective addition to administrative oversight and routine education.

Of the 2371 H & Ps reviewed, there were 4191 opportunities to use an unapproved abbreviation, which equates to approximately two opportunities per H & P. On the surface, this average seems low considering the high numbers of patients in our hospital treated with multiple medications. However, when considering the frequency of abbreviation errors in H & Ps (range 0–17; median=2.5), one can see that significant abbreviation use with the opportunity for medication errors exists. Significant reductions in abbreviations were demonstrated in the non-computer-assisted handwritten notes over time and across all three study groups, further reducing the abbreviation errors in the H & Ps.

The ability of health information technology to intercept unsafe practices and prevent serious medication errors has been described.20–22 Improvements in medication safety with the use of CDSS occur through both direct and indirect effects. Direct effects alter medication prescribing or management at the time practitioners interact with the system. Indirect or ‘spillover’ effects result from the carry-over into practice of knowledge or behaviors learned during exposure to the system.23 Few studies of CDSSs have been designed to measure indirect effects. Glassman et al reported that exposure to automated drug alerts had little effect on the recognition of selected drug–drug or drug–condition interactions as measured by a cross-sectional survey.23 Studies of drug-utilization reviews describe indirect effects of interventions on future clinician behavior.24 25 For example, a time-series study that involved mailing letters to physicians about drug interactions and monitoring their subsequent prescribing patterns found no effect on future prescribing behavior as a result of the intervention.24 In contrast, our study found large indirect effects by demonstrating significant reductions in the frequency of medication abbreviations in physicians' non-computer-assisted handwritten notes when they were prompted to correct the abbreviations in the electronic notes over the same time period.

The rapid expansion of alert systems in medical informatics calls for more research comparing the effects of different alert systems on the same outcome. Previous studies have described the over-riding of drug safety alerts26 and demonstrated that the nature of alerts influences clinician behavior.22 27 28 Thus, the inability to detect significant reductions in abbreviation use in the non-computer-assisted handwritten notes in the auto-correction alert group compared with the forced correction alert group is not surprising. One possible reason is that interns who received auto-correction alerts may have disregarded the educational message or simply acknowledged the alert without reading the information, given human factors such as time pressure, competing priorities, and alert fatigue. In contrast, interns exposed to the forced alert were unable to complete their electronic notes without making manual corrections. It is known that mere repetition facilitates long-term memory,29 30 and it may be that by forcing physicians to correct abbreviations, their knowledge of these abbreviations was solidified and translated into improvements in written practice. In summary, our study found direct evidence that passive alerts do little to influence clinician behavior. Additional studies will be important to substantiate these findings and advance the field of health informatics.

Reductions in abbreviation use in the control group were not anticipated by the investigators, but there are several possible explanations for the observation. Experimental diffusion, which occurs when a treatment effect applied to one group unintentionally spills over and contaminates another group,31 may explain our findings. Interns in the control group were working in a study environment designed to modify physician behavior. Even though they were not directly exposed to alerts, their behavior may have been influenced by the improving documentation patterns of the interns exposed to the intervention who worked with them. Diffusion of effects threatens the internal validity of research, but it is difficult to control for in quality improvement research. While it is possible that the hospital's educational strategies to reduce unapproved abbreviations contributed to the documentation improvements, this seems unlikely given the historical failure of routine education related to abbreviation avoidance.10–12

Despite the improvements in documentation practices, we failed to find any significant improvements in physician knowledge of unapproved abbreviations. This apparent ‘disconnect’ in knowledge versus practice is intriguing and has been demonstrated previously by Glassman et al.23 There are several possible explanations for this finding. Our sample size may have been too small to detect a meaningful difference. The participants had varying degrees of exposure to the abbreviation alerts and thus may have been unable to remember the abbreviations when they were presented to them in the post-test. The alerts may not have been perceived as important or relevant to the interns, especially since they were alerted when writing notes rather than when ordering medications. Nonetheless, it is hard to ignore the substantial reductions in abbreviation use in the non-computer-assisted handwritten notes as a result of the intervention, and this could be interpreted as a surrogate for knowledge acquisition.

The Joint Commission has strictly prohibited the use of seven common and unsafe medication abbreviations,4 and The Institute for Safe Medication Practices has promulgated a list of over 50 abbreviations that have been associated with harm in their error-reporting systems and should never be used.5 However, given the fact that any medication abbreviation creates an opportunity for misinterpretation and error potential, some organizations have attempted to limit all medication abbreviations by creating policies with ‘approved’ (rather than unapproved) abbreviation lists in which all medication abbreviations are prohibited. Since clinicians are in the habit of using medication abbreviations frequently, it is unlikely that one intervention alone will eliminate this practice, and it will be necessary to consider electronic interventions such as this to curb their use in free text entries in prescription writing and medical records.

Our study has several limitations. Because our hospital has an integrated CPOE system, we were unable to assess whether our intervention would have affected handwritten medication prescribing errors related to abbreviations. Handwritten abbreviations in prescriptions present a larger risk to patients than handwritten abbreviations in medical records. However, it is possible that documentation skills learned by physicians in an electronic environment and practiced in handwritten notes will carry over into their future handwritten or electronic free text prescriptions, and recommendations for prescriptions are often made in medical record documentation, so the potential for abbreviation errors exists even outside of the prescription-writing environment. Feasibility issues prevented retrospective reviews of the handwritten medication prescriptions of study participants.

We did not study the documentation practices of the participants after the alerts were turned off. Consequently, we cannot be certain of the long-term sustainability of our intervention and whether the documentation improvements would have improved further, plateaued, or waned had the alerts been turned off or continued. Additionally, given that exposure to the alerts was not continuous over time based upon the sequence of intern rotations and that these alerts varied in frequency among all interns, we may have reduced our ability to detect important differences among the groups and within certain participants. We did not evaluate documentation practices in the year(s) prior to our intervention and thus cannot completely exclude the possibility that a trend towards reduction in unapproved abbreviations occurred from a natural history effect encountered with introducing electronic platforms for documentation.

Finally, our study has several features that may limit its generalizability. We studied only interns at a single academic medical center with a hybrid information system comprising both paper and electronic documentation. Since many organizations currently practice in hybrid systems, and many physicians practice in multiple information systems over the course of their career, we believe that the information related to the secular trends in physician non-computer-assisted handwritten notes as a result of exposure to computerized alerts is relevant. Compared with interns, residents, attending physicians, non-physician providers, or practitioners in community hospitals may have responded differently to the intervention; however, there are elements of practitioner performance that are not unique to interns or academic medical centers, and some generalizations can be made from this study. The undergraduate and graduate medical training years are an ideal time to introduce information technology designed to improve medication safety, since trainees have not yet been influenced by unsafe medication documentation practices in the hospital and may be more open to changes in practice.

In summary, our study contributes important information to the health information technology literature by describing the effect that CDSS can have on physician behavior in the absence of knowledge and demonstrating that an informatics intervention can create large behavioral changes in a control group unexposed to the actual intervention but exposed to the study environment in which the intervention was performed. The methods used in this study to examine the indirect effects of health information systems to modify physician behavior outside of the electronic environment are unique and may have relevance for other health information technology interventions. We have established a methodology within a randomized-controlled trial to evaluate the effects of alerts embedded within a clinical decision-support system on physician knowledge and practice. Estimates for the percentage of unapproved abbreviation use were calculated based on the number of opportunities for error and offer additional endpoints to measure practice changes with technology-based interventions. These estimates can be used to determine sample sizes for adequate statistical power to evaluate the effects of interventions to reduce medication errors and test information systems in patient safety research. We found that alerts for unapproved medication abbreviations within electronic medical record systems are effective in changing physician documentation and thus promoting medication safety. Given that many healthcare organizations do not have fully integrated health information technology systems, researchers and patient safety leaders will continue to be challenged with ways to promote safe medication practices through electronic tools and education.

Acknowledgments

The authors are grateful to L Fleisher and S Williams for their comments on earlier versions of this manuscript. We also thank S Kratowicz for his technical support in building the computerized alerts.

Footnotes

Competing interests: None.

Ethics approval: Ethics approval was provided by the University of Pennsylvania IRB.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. Kohn LT, Corrigan JM, Donaldson MS. To Err is Human: Building a Safer Health System. Washington, DC: (US) Committee on Quality of Health Care in America, Institute of Medicine, National Academy Press, 2000

- 2. Lesar TS, Briceland L, Stein DS. Factors related to errors in medication prescribing. JAMA 1997;277:312–17

- 3. Barker KN, Flynn EA, Pepper GA, et al. Medication errors observed in 36 healthcare facilities. Arch Intern Med 2002;162:1897–903

- 4. The Joint Commission Accreditation Program: Hospital National Patient Safety Goals. NPSG 02.02.01, page 5. http://www.jointcommission.org/NR/rdonlyres/31666E86-E7F4-423E-9BE8-F05BD1CB0AA8/0/HAP_NPSG.pdf (accessed 8 Jun 2009).

- 5. ISMP and FDA Campaign to eliminate use of error-prone abbreviations. http://www.ismp.org/tools/abbreviations (accessed 8 Jun 2009).

- 6. Landers SJ. Goodbye to U: safety campaign nixes abbreviations. Am Med News 17 July 2006;48(27). http://www.ama-assn.org/amednews/2006/07/17/hll20717.htm (accessed 8 Jun 2009).

- 7. von Eschenbach AC. Eliminating error-prone notations in medical communications. Expert Opin Drug Saf 2007;6:233–4

- 8. Brunetti L, Santell JP, Hicks RW. The impact of abbreviations on patient safety. Jt Comm J Qual Pt Saf 2007;33:576–83

- 9. Abushaiqa ME, Zaran FK, Bach DS, et al. Educational interventions to reduce use of unsafe abbreviations. Am J Health Syst Pharm 2007;64:1170–3

- 10. Traynor K. Enforcement outdoes education at eliminating unsafe abbreviations. Am J Health Syst Pharm 2004;61:1314, 1317, 1322.

- 11. Garbutt J, Milligan PE, McNaughton C, et al. Reducing medication prescribing errors in a teaching hospital. Jt Comm J Qual Patient Saf 2008;34:528–36

- 12. Anonymous. Compliance data for the Joint Commissions' 2004 and 2005 national patient safety goals. Jt Comm Perspect 2005;25:7–8

- 13. Bates DW, Gawande AA. Improving safety with information technology. N Engl J Med 2003;348:2526–34

- 14. Chaudhry B, Wang J, Wu S, et al. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med 2006;144:742–52

- 15. National Committee on Vital and Health Statistics. Information for Health: A Strategy for Building the National Health Information Infrastructure. Washington, DC: US Department of Health and Human Services, 15 November 2001

- 16. Thompson T, Brailer D. The Decade of Health Information Technology: Delivering Consumer-Centric and Information-Rich Health Care: Framework for Strategic Action. Washington: U.S. Department of Health and Human Services, 21 July 2004

- 17. Jha AK, DesRoches CM, Campbell EG, et al. Use of electronic health records in U.S. hospitals. N Engl J Med 2009;360:1628–38

- 18. Manzar S, Nair AK, Govind Pai M, et al. Use of abbreviations in daily progress notes. Arch Dis Child Fetal Neonatal Ed 2004;89:374.

- 19. Sheppard JE, Weidner LC, Zakai S, et al. Ambiguous abbreviations: an audit of abbreviations in paediatric note keeping. Arch Dis Child 2008;93:204–6

- 20. Bates DW, Leape LL, Cullen DJ, et al. Effect of computerized physician order entry and a team intervention on prevention of serious medication errors. JAMA 1998;280:1311–16

- 21. Potts AL, Barr FE, Gregory DF, et al. Computerized physician order entry and medication errors in a pediatric critical care unit. Pediatrics 2004;113:59–63

- 22. Garg AX, Adhikari NKJ, McDonald H, et al. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes. JAMA 2005;293:1223–38

- 23. Glassman PA, Belperio P, Simon B, et al. Exposure to automated drug alerts over time: effects on clinicians' knowledge and perceptions. Med Care 2006;44:250–6

- 24. Hennessy S, Bilker WB, Zhou L, et al. Retrospective drug utilization review, prescribing errors, and clinical outcomes. JAMA 2003;290:1494–9

- 25. Lipton HL, Bird JA. Drug utilization and review in ambulatory settings: state of the science and directions for outcomes research. Med Care 1993;31:1069–82

- 26. Van Der Sijs H, Aarts J, Vulto A, et al. Overriding of drug safety alerts in computerized physician order entry. J Am Med Inform Assoc 2006;13:138–47

- 27. Spina JR, Glassman PA, Belperio P, et al. Primary Care Investigative Group of the VA Los Angeles Healthcare System. Clinical relevance of automated drug alerts from the perspective of medical providers. Am J Med Qual 2005;20:7–14

- 28. Glassman PA, Simon B, Belperio P, et al. Improving recognition of drug interactions. Benefits and barriers to using automated drug alert. Med Care 2002;40:1161–71

- 29. Naka N, Naoi H. The effect of repeated writing on memory. Mem Cognit 1995;23:201–12

- 30. Dark VJ, Loftus GR. The role of rehearsal in long-term memory performance. J Verbal Learn and Verbal Behav 1976;15:479–90

- 31. Rubinson L, Neutens JJ. Research Techniques for the Health Sciences. 3rd edn. San Francisco, CA: Pearson Education Inc, 2002