Abstract

Federal legislation (Health Information Technology for Economic and Clinical Health (HITECH) Act) has provided funds to support an unprecedented increase in health information technology (HIT) adoption for healthcare provider organizations and professionals throughout the U.S. While recognizing the promise that widespread HIT adoption and meaningful use can bring to efforts to improve the quality, safety, and efficiency of healthcare, the American Medical Informatics Association devoted its 2009 Annual Health Policy Meeting to consideration of unanticipated consequences that could result from the increased implementation of HIT. Conference participants focused on possible unintended and unanticipated, as well as undesirable, consequences of HIT implementation. They employed an input–output model to guide discussion on the occurrence of these consequences in four domains: technical, human/cognitive, organizational, and fiscal/policy and regulation. The authors outline the conference's recommendations: (1) an enhanced research agenda to guide study into the causes, manifestations, and mitigation of unintended consequences resulting from HIT implementations; (2) creation of a framework to promote sharing of HIT implementation experiences and the development of best practices that minimize unintended consequences; and (3) recognition of the key role of the Federal Government in providing leadership and oversight in analyzing the effects of HIT-related implementations and policies.

Introduction

As part of efforts to improve healthcare delivery and reduce healthcare costs, the U.S. has invested considerable resources in health information technology (HIT) and electronic health records (EHRs). The unprecedented availability of funding provided by the American Recovery and Reinvestment Act (ARRA)/Health Information Technology for Economic and Clinical Health (HITECH) legislation1 is a key factor in spurring a flurry of HIT implementations. The federal Office of the National Coordinator (ONC) for HIT highlights the enormous potential of HIT to yield improvements in patient care, prevention of medical errors, an increase in the efficiency of care provision and a reduction in unnecessary costs, expansion of access to affordable care, and improvements in population health.2 While expectations are high, experience shows that unplanned and unexpected consequences have resulted from major policy and technological changes. Studies have demonstrated that HIT is not immune from this phenomenon.3–9 Thus, it is important to consider the potential unintended consequences that may be engendered by the accelerated adoption of technology.

The American Medical Informatics Association (AMIA) dedicated its 2009 Annual Health Policy Meeting to an examination of the unintended consequences of HIT and related policies. The conference convened stakeholders representing a variety of backgrounds, disciplines, and work environments.

Combining a summary of conference discussions with findings from research, this article presents an overview of the factors that can lead to negative unintended consequences as a result of HIT implementations and related policies, and outlines approaches to identifying and avoiding (or mitigating) unwanted outcomes. The article concludes with recommendations aimed at maximizing HIT's benefits while minimizing negative consequences.

Previous study of unintended consequences

The idea that endeavors can have outcomes other than those originally planned is not novel. Robert K Merton is credited with popularizing the concept of unanticipated consequences. In his 1936 paper, Merton listed possible causes of unanticipated consequences: ignorance, error, overriding of long-term interest by immediate interest, basic values that require or prohibit action, and self-defeating prophecy.10

Discussions about unintended consequences resulting from public policy and technological innovation are found in the healthcare domain as well as in other fields. In 2000, the Robert Wood Johnson (RWJ) Health Policy Fellowships Program of the Institute of Medicine conducted a workshop on the subject of unintended consequences of health programs and policies.11 Examples of unplanned outcomes of health-related policies include the linking of a cap on Medicare drug benefits with lower drug consumption and unfavorable clinical outcomes,12 the impact of federal regulations and hospital policies on indebtedness of patients,13 and unintended consequences of tobacco policies affecting women and girls of low socioeconomic status.14

The introduction of new technology in non-healthcare organizations has yielded examples of unintended consequences.15–17 Many recognized problems arise as a result of new demands made on users of these technologies and the organizations that implement them. In January 2010, the ONC acknowledged this concern by issuing a request for proposals that focused on an analysis of and recommendations regarding unanticipated consequences of HIT.18

Researchers have identified and categorized unintended consequences that can accompany the use of patient care information systems,3 with specific attention to computerized provider order entry (CPOE) systems,4–6 19 20 clinical decision support systems,7 8 and bar code medication administration technology.21 22 (Studies of unintended consequences related to CPOE are disproportionately represented in the above list of references. While ample anecdotal examples exist for unintended consequences in other HIT-related areas, to date there have been few formal studies of these. Indeed, CPOE has been particularly fertile ground for study because errors in medications and procedures are among the easiest to connect directly to patient harm.)

Studies have highlighted conflicts between the cognitive demands of HIT systems and limits of the human information processing system,23–25 drawn attention to EHR usage patterns and usability issues,26 27 and summarized requirements necessary for safe use of EHRs.28 They have also discussed the challenges associated with the introduction of HIT applications into organizational structures.9 29–32 Researchers are now beginning to focus on the challenges that accompany government incentives promoting widespread implementation of HIT.33

Definitional issues

Clarification of terminology is provided by Ash et al: “The terms ‘unintended consequences’ and ‘unanticipated consequences’ are not synonymous. The ‘unintended’ implies lack of purposeful action or causation, while the ‘unanticipated’ means an inability to forecast what eventually occurred. Either kind of consequence can be adverse or beneficial. Unanticipated beneficial consequences are actually happy surprises. Unanticipated, unintended adverse consequences capture news headlines and are often what people imagine when they hear the term ‘unintended consequences.’”34

By their nature, unintended consequences are difficult to classify according to a single taxonomy,5 because they are frequently a side effect of an unknown or hidden social-technical system. Nevertheless, unintended consequences have been characterized by a number of attributes as outlined below; the first three of these attributes were noted by Rogers in his Diffusion of Innovations theory.35

Desirable or undesirable: is the outcome positive, negative, or mixed? Or good in some ways and bad in other ways? For example, structured EHR data entry allows for organization of data into discrete categories so that these data can be efficiently processed. However, this can result in loss of elements of the clinical narrative that serve as a record of potentially important points in the patient's history.25

Anticipated or unanticipated: can the consequences be predicted or anticipated, and if so, by whom? This is a scale ranging from events that are easily anticipated, through those that are anticipatable by an average practitioner with effort, to those that are only anticipatable by experts, and culminating in events that are completely unanticipatable by anyone (a total surprise). This ‘anticipatability scale’ applies not only to the presence or absence of an event, but also to the magnitude of an event. An example of an anticipated unintended consequence is the weight gain/loss associated with many medications. Even so, a given individual may have a weight change so large that the magnitude was unanticipated, even though some weight change was expected. In another example, investigators found, unexpectedly, that introduction of EHRs had a profound and enduring impact on the organization of information by doctors.25

Direct versus indirect: does the input cause the consequence directly or is there a chain of events leading to it? It is worth noting that unanticipated unintended consequences are frequently the result of an indirect causal chain. For example, in the study referenced above, EHR use led to use of discrete categories of information which led to information reorganization, which resulted in a change in the direction of reasoning based on the reorganization, and use of decision support.25

Latent versus obvious: is the consequence easily visible or does it become obvious only in another context or environment or at a later time?
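The four attributes above can be read as fields of a simple record. The following is a minimal sketch in Python, not a taxonomy proposed by the conference: the class names, the four-step ordering of the anticipation scale, and the attribute values assigned to the example are all illustrative assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class Desirability(Enum):
    DESIRABLE = "desirable"
    UNDESIRABLE = "undesirable"
    MIXED = "mixed"


class Anticipation(Enum):
    # Ordered from easiest to hardest to foresee, following the scale in the text.
    EASILY_ANTICIPATED = 1
    ANTICIPATABLE_WITH_EFFORT = 2
    ANTICIPATABLE_BY_EXPERTS = 3
    UNANTICIPATABLE = 4


@dataclass(frozen=True)
class Consequence:
    description: str
    desirability: Desirability
    anticipation: Anticipation
    direct: bool  # False when the outcome is reached through a chain of events
    latent: bool  # True when the outcome is visible only later or in another context

    def is_undesirable_surprise(self) -> bool:
        """True for outcomes that are both undesirable and a total surprise."""
        return (
            self.desirability is Desirability.UNDESIRABLE
            and self.anticipation is Anticipation.UNANTICIPATABLE
        )


# Hypothetical classification of the structured data entry example;
# the anticipation, direct, and latent values are assumptions for illustration.
narrative_loss = Consequence(
    description="Structured data entry drops parts of the clinical narrative",
    desirability=Desirability.MIXED,
    anticipation=Anticipation.ANTICIPATABLE_BY_EXPERTS,
    direct=True,
    latent=True,
)
```

Recording each attribute separately, rather than assigning a single category, reflects the point that one outcome can be good in some ways and bad in others.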

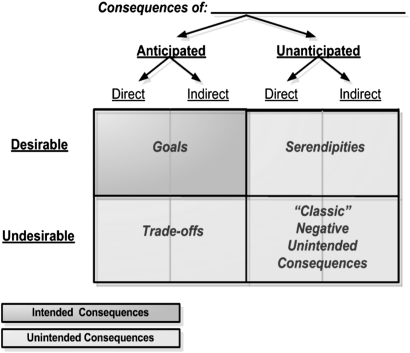

Figure 1 depicts the potential relationships across and among types of consequences. This depiction draws upon earlier work, particularly that of Ash et al.5 20 34 If a consequence is undesirable but anticipated, it can be addressed and managed as a tradeoff. Before accepting a tradeoff, its effect is estimated as closely as possible, but it is possible to underestimate the impact of the tradeoff. For example, hospitals implement drug interaction alerts with the intention of interrupting a physician's workflow, having made an assessment that this tradeoff might annoy the physician but stop an error. However, an overabundance of alerts can generate an unintended and also unanticipated consequence—ignoring all alerts or refusing to use the system.

Figure 1. Relationships across and among consequences.

The most troubling unintended consequences are those that are undesirable as well as unanticipated, with no mitigation plan in place.

The AMIA 2009 health policy conference

As noted above, federal legislation is stimulating numerous HIT implementation efforts around the country. While the potential benefit of these efforts is broad, experience has shown that major technological changes can bring about negative unintended consequences that can jeopardize the success of implementations. Consequently, AMIA dedicated its 2009 Annual Health Policy Meeting to an exploration of unintended consequences of HIT and related policies.

The goals of AMIA's 2009 health policy conference were as follows:

Explore and outline approaches to recognizing, anticipating, and addressing unintended consequences of HIT and HIT-related policies and legislation.

Identify areas for further study and research in the above areas.

The recommendations of the meeting (described at the end of the paper) were formulated during facilitated breakout discussion sessions attended by conference participants; these sessions are described in detail below.

Two conference speakers, representing other safety-conscious industries, shared their experience relating to HIT and unintended consequences, providing critical knowledge that informed the breakout discussions. Dr Nancy Leveson, an expert in aviation software safety, presented highlights of the experience of the aviation industry in addressing safety issues. Dr Leveson focused on factors contributing to previous IT disasters, noting that a high percentage of famous aviation disasters involved software that had been adapted from another product or use, rather than developed de novo. She discussed mistakes commonly made in introducing technology: attempting to do too much too fast, building technology-centered automation that is unusable or prone to error and necessitates unacceptable changes in workflow, and failing to build in safety at the start of design. Rodeina Davis, CIO of the Blood Center of Wisconsin, discussed the history and experience of blood banking software under FDA regulation. Keynote speakers, Dr David Blumenthal, ONC Director, and Aneesh Chopra, Federal Chief Technology Officer of the U.S., addressed national issues in the HIT arena.

AMIA initiated the meeting's discussions with the following questions:

What do we know about unintended consequences that are related to HIT design, implementation, and use? To what extent can we anticipate, describe, categorize, and prevent (or mitigate) unintended consequences and what approaches have been effective? To what extent are unintended consequences undesirable? Desirable (conferring benefits or efficiencies)? What do we still need to learn?

Who is responsible for anticipating unintended consequences?

What unintended consequences may arise from policies engendered by current/pending legislation and regulation related to HIT?

What lessons can we learn from other industries and how can we leverage them in addressing unintended consequences related to HIT?

The meeting's Steering Committee developed a working definition of unintended consequences: “Unintended consequences are outcomes of actions that are not originally intended in a particular situation (eg, HIT implementation).” In particular, the focus of the meeting was on those undesirable outcomes that are rarely, if ever, foreseen. For the sake of brevity, they are referred to subsequently in this paper simply as unintended consequences.

Breakout sessions focused on four intersecting domains

The breakout sessions were planned by the Steering Committee to stimulate in-depth consideration of HIT-related topics through an open exchange of knowledge and experience by meeting participants. Participants chose among breakout sessions focused on four domains: technology, human factors and cognition, organization, and fiscal/policy and regulation.

While unintended consequences from health IT are frequently considered in terms of a single domain (eg, technology), when addressing unintended consequences of HIT implementations and policy, there are intersections across the domains. For example, a recent qualitative meta-analysis of HIT implementations found that organizational efficiency is not automatically increased just by implementing a technology solution; actions that were needed to promote success include management involvement, integration of the system in clinical workflow, establishment of compatibility between software and hardware, and user involvement, education and training.36 These actions straddle the domains.

Decisions made about the technical design of an HIT system (eg, organization and display of information) can affect the ease or difficulty of its use due to limitations of human cognitive abilities. Failure to consider the complexities and dynamism of clinical workflow when designing and implementing HIT solutions can impact their effectiveness and may lead to unintentional errors that can impact patient safety. Unintended consequences can arise in the organization domain as a result of regulation/legislation that mandates meaningful use of HIT by a certain deadline, whereby some practitioners are not yet included in the definition of meaningful users, and thus are not eligible for payment incentives.

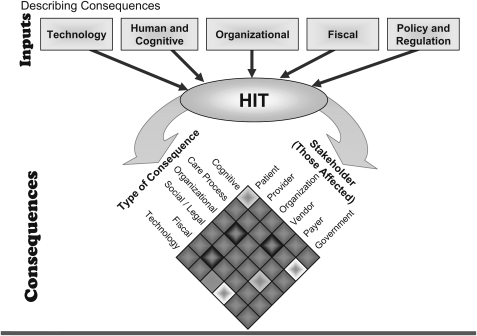

Therefore, AMIA introduced an input–output model to characterize unintended consequences, with several factors serving as inputs:

Technology: hardware and software systems that are implemented and the constraints they impose.

Human factors and cognition: the thought processes, habits of behavior, and mental capabilities that humans bring to the use of HIT tools and processes.

Organization: the embedding of technology in the complex environment of healthcare organizations.

Fiscal/policy and regulation: the legislative and regulatory environment governing the design, implementation, and use of HIT.

There are multi-dimensional implications of HIT-related unintended consequences arising in these domains. Figure 2 outlines relationships among the types of consequences and how they affect stakeholders. (The model shows Fiscal, and Policy and Regulation separately, but breakout discussions about these topics were combined because of their overlap.)

Figure 2. Input–output model of unintended consequences.

Technology domain

The conversion from a paper-based system to an electronic system results in inevitable challenges. Some problems are caused by attributes or characteristics of specific HIT systems, while others are the result of the general change process or may be related to the existing level of computerization in an organization. New types of errors may be generated when performing a task using a computer rather than paper, and communication via electronic means differs from face-to-face communication among clinicians and between clinicians and patients.

The transition from paper to an electronic system can result in errors due to problems with technical design, confusion about system features by users, and workflow mismatches.20 Examples include:

Users of CPOE will often select an item from a pick list that is close to their desired choice but not technically correct or less precise than intended. The list of choices may be limited by tool design (eg, no facility for an ‘other’ type choice) or the terminology used to specify list choices.

The quality of clinical documentation may be affected by a feature of EHRs that allows multiple parties to access and edit the same records. A positive consequence is that multiple providers can double check and refine summaries of clinical problems or medications; providers may correct entries, reconcile with patient lists, or add their own insights. However, different providers may disagree on the quantity and specificity of documentation to include on shared lists. When conflicts such as these are not managed, shared lists may develop duplicate, or even contradictory, entries that lead to confusion as different providers attempt to document in a manner that matches their needs and cognitive workflow.

Problems may arise when clinicians have false expectations regarding data accuracy and processing or have an unquestioning trust of automated systems without a thorough understanding of their limitations; when clinicians who have worked exclusively in automated environments find themselves in work settings without these technologies; and when there are insufficient backup systems and processes in place if applications go down.37 Another way that computerization affects workflow is termed ‘alert dependence.’ Electronic systems which track and audit physician decisions through clinical decision support or other mechanisms may lead to an overdependence on safety checks. This can occur when the alerting system interacts with the provider only when a problem has been detected. Moreover, providers who have grown accustomed to a particular alert at one practice site may fail to realize that a second site is not running that particular rule. On the other hand, the phenomenon of ‘alert fatigue’ may prompt CPOE users to override a large percentage of alerts, potentially compromising the safety effects that are the goal of integrating decision support into the application.38
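One way an organization might quantify alert fatigue is to track the fraction of alerts that users override, per alert rule. The sketch below assumes a hypothetical log of `(rule_id, overridden)` pairs; the log format, the function name, and the rule identifiers are illustrative and are not drawn from any particular CPOE product.

```python
from collections import Counter


def override_rates(events):
    """Return {rule_id: fraction of displayed alerts that were overridden}.

    `events` is an iterable of (rule_id, overridden) pairs, one per alert shown.
    """
    shown = Counter()
    overridden = Counter()
    for rule_id, was_overridden in events:
        shown[rule_id] += 1
        if was_overridden:
            overridden[rule_id] += 1
    return {rule: overridden[rule] / shown[rule] for rule in shown}


# Hypothetical alert log for two rules.
alert_log = [
    ("drug_interaction", True),
    ("drug_interaction", True),
    ("drug_interaction", False),
    ("allergy", False),
]
rates = override_rates(alert_log)
```

A rule with a persistently high override rate is a candidate for review, although the rate alone does not show whether the overrides were clinically appropriate.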

Furthermore, EHR systems newly installed in an organization must work in synchronization with the computer systems already in place; harmonization between these systems may be overridden by subsequent implementations and updates.39 Systems that are improperly integrated, requiring that data be entered into multiple systems, may result in data fragmentation. For example, when a CPOE system is not integrated with a pharmacy system, every order has to be printed and then manually transcribed into the pharmacy system. Further, the maintenance of multiple networks in an organization requires that data be updated in all relevant systems; otherwise, records can become outdated, incomplete, or inconsistent.40

Experts recommend that organizations use rigorous approaches to ensure the quality of data in HIT systems. These include manual checking of results, development of measurable data quality benchmarks and procedures for identifying deviations from benchmarks, and training of users to correctly enter data in electronic forms.37
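As an illustration of a measurable data quality benchmark and a procedure for identifying deviations from it, the following sketch checks field completeness against a minimum threshold. The field names, thresholds, and record layout are hypothetical assumptions; completeness is only one of many possible quality measures.

```python
def completeness(records, field):
    """Fraction of records in which `field` is present and non-empty."""
    if not records:
        return 0.0
    filled = sum(1 for record in records if record.get(field) not in (None, ""))
    return filled / len(records)


def benchmark_deviations(records, benchmarks):
    """Return {field: observed completeness} for fields below their benchmark.

    `benchmarks` maps a field name to the minimum acceptable completeness.
    """
    deviations = {}
    for field, minimum in benchmarks.items():
        observed = completeness(records, field)
        if observed < minimum:
            deviations[field] = observed
    return deviations


# Hypothetical records and thresholds, for illustration only.
sample_records = [
    {"allergies": "none", "weight_kg": 70},
    {"allergies": "", "weight_kg": 82},
    {"allergies": "penicillin", "weight_kg": None},
    {"allergies": "latex", "weight_kg": 64},
]
sample_benchmarks = {"allergies": 0.9, "weight_kg": 0.7}
flagged = benchmark_deviations(sample_records, sample_benchmarks)
```

In this example only the `allergies` field falls below its assumed benchmark; a real program would pair such automated checks with the manual review and user training described above.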

Human factors and cognition domain

Knowledge of the principles of human–computer interaction and an appreciation of the importance of human and cognitive factors are critical to HIT design and implementation. The study of human factors related to HIT systems focuses on the systematic application of knowledge about human sensory, perceptual, mental, psychomotor, and other characteristics to the design of these systems. Human and cognitive factors place emphasis on the mental (memory, knowledge, strategies) and social properties characteristic of humans.23 Horsky et al noted, “Cognition is considered to be a process of coordinating, mediating, and redistributing knowledge representations that are internal (ie, in the mind) and external (eg, visual displays, written instructions, etc). Environmental, social, cultural, organizational and regulatory factors contribute to the complexity of these systems that stretch over human beings and the technology they work with. Computing technology and artifacts are integral parts of this cognitive process and should be designed to correspond to human characteristics of reasoning, memory, attention, and constraints (human-centered design).”41

Electronic health records have enabled the collection of large quantities of data and text regarding patient encounters, hospitalizations, procedures, and test results. Increasingly voluminous repositories of electronic records that have been converted from paper can lead to unintended consequences such as difficulty in finding data that are relevant to a specific clinical need. Coupled with certain aspects of system design, these vast quantities of data, when not adequately organized, can result in increased cognitive load, a term originating in cognitive science that refers to the load on working memory during information processing.

In addition to cognitive load, clinicians also face usability limitations with many current HIT systems. For example, order sets in CPOE systems are intended to relieve much of the tedious burden of selecting one order at a time and enable physicians to devote more cognitive resources to treatment and management planning. A study of strategy selection in order entry found that entering orders is a complex process that can be made more difficult or eased by interface and design support.42 Dense information displays (eg, many levels of nested drop-down lists) can make the selection process cumbersome.24 The very changes that ease the problems of usability can create additional problems as an unintended consequence if changes are not adequately monitored.

Other concerns have arisen from the inherent properties of HIT systems. Patel et al report that exposure to EHRs' tightly structured format has been shown to be associated with changes in physicians’ information gathering (more efficient) and reasoning strategies (hypothesis driven) compared to their use of paper-based records (slow and data driven). The system's support for guiding and narrowing the search, which changed the directionality of reasoning from data-driven to hypothesis-based, resulted in errors that were anticipated. Along with these intended changes, there were also unintended changes: loss of the narrative thread of the patient history and the additional cognitive effort needed to complete a patient history based on discrete information, leading to different types of errors.25

Alert and reminder systems are frequently characterized by linear, rigid rules, an approach that is a poor fit for the inherent complexity of medical decision making and is inconsistent with the way in which people tend to make decisions as shown by the classical decision making literature on heuristics and biases.43 This formalization of rules to manage decisions that were previously managed informally entails loss of flexibility, leading to loss of resilience, with the danger of generating medical error as an unintended consequence. Such examples indicate that a deeper understanding of the cognitive properties of a system prior to its implementation is needed to help planners anticipate and pre-empt many unintended consequences.

Organization domain

Peter Drucker described the modern hospital as “altogether the most complex human organization ever devised.”44 As increasingly complex HIT systems are implemented in complex environments, they will affect larger, more heterogeneous groups of people and organizations in a variety of settings. Major implementation challenges for an organization tend to be behavioral, sociological, cultural, and financial rather than strictly technical. An HIT implementation can lead to physical, mental, and emotional exhaustion of an organization and its workforce, thus rendering the organization reluctant (or unable) to move forward with further implementation efforts. The critical importance of managing the power and organizational conflicts inherent in information system development is being increasingly understood.17 To create an effective foundation for organizational transformation, there is a need for strong support by both management and future users during HIT implementations.32

Discussing factors contributing to HIT implementation challenges, Lorenzi et al focus on the organization's capacity for change and its recognition of the importance of context: “The implementation of an IT system… requires a detailed plan that is driven by both capacity for change and context of change. Capacity represents the ability of the organization to invest in high-quality training, extensive support at go-live, and managers who can respond flexibly to changes in the environment so that patient safety is maintained as the highest priority. Context is the environment to which the implementation plan must adapt. A rollout schedule, for example, must take into account the many interdependencies that exist among clinical units as well as organizational changes that are occurring during the implementation.” Examples of aspects of implementation that are embedded in organizational structures, supports, and processes and may become sources of frustration include workflow changes, difficulty getting technical help at the time when it is needed, perceived (or actual) disassociation of IT staff from operational needs, and conflicting organizational priorities.31

A systematic literature review outlined lessons learned from HIT implementations in seven countries and found that strong project leadership using appropriate project management techniques, the establishment of standards, and staff training are needed to avoid risks that could compromise success. The review described ways in which HIT technical features interact with the social features of the healthcare work environment, and how this juxtaposition may contribute to complications of HIT deployment.45 Harrison et al described unintended consequences resulting from the interaction between HIT and the healthcare organization's socio-technical system (workflows, culture, social interactions, and technologies), and offered the ISTA (interactive socio-technical analysis) model to help study these consequences and their causes.9

The Institute of Medicine report, To Err is Human, noted that for optimum use, technology must be a ‘member’ of the work team.46 Considering an organization's workflow and procedures and the roles of its clinical teams during system planning, design, and implementation is critical. Workflow problems after CPOE implementation were rated high on a list of concerns of representatives from 176 U.S. hospitals responding to a recent survey.34 Indeed, HIT implementations are opportunities to review existing workflow processes to make sure that all are effective and up to date, and identify those that are unique to the institution; information gained from these reviews can guide modifications that need to be made to off-the-shelf HIT products. Implementation of HIT may blur the distinctions among traditional role lines, such as those of clinicians and information technology providers and administrators. When developing HIT solutions, it is necessary to balance system standardization with flexibility: standardization allows for a consistent approach to clinical care, information exchange, and related processes, while flexibility allows for customization to patient individuality, distinct clinical workflows, and HIT users' preferences.

Ongoing evaluation and monitoring of HIT systems is important for measuring implementation success and pinpointing unintended consequences that occur during system usage. Sittig et al recommended measures to assess system availability, use, benefits, and potential hazards.19 Campbell et al addressed the pervasive problem of system downtime, which can throw an organization's HIT-dependent operations into chaos. They advised healthcare organizations to prepare and test contingency plans so that operations can continue during system downtimes; these plans should include requirements for paper backup systems, procedures for operating without electronic resources, training for employees, and periodic drills to ensure that these procedures function as planned and that all staff are thoroughly familiar with them.37

Fiscal/policy and regulatory domain

The widespread, accelerated diffusion of technology resulting from recent legislation may engender unintended consequences manifested in various ways throughout those organizations under pressure to accommodate the changes. Legislative and regulatory changes are moving health IT from a voluntary initiative to a highly regulated activity. Examples include revised privacy and security obligations for practices covered by the Health Insurance Portability and Accountability Act of 1996 (HIPAA), modifications to definitions of covered entities, and the inclusion of new ramifications for HIPAA violations.47

While ARRA holds out the promise of incentives to acquire and implement EHR systems, these efforts may introduce some unintended consequences related to its specific requirements and the fast pace of the implementations across large numbers of providers. Examples include the lack of empirical data to support the phased-in implementation of certain indicators of meaningful use, and concerns about the effect of current and future feature-oriented certification criteria on the ability of EHR vendors to innovate. Also, it is unclear to what extent the requirements for meaningful use could become barriers to HIT adoption by physicians and hospitals.48 The ONC Request for Proposal mentioned above acknowledges the need to study unintended consequences that may arise from the ARRA-driven, rapid market growth (by and large unregulated and potentially not evidence-based) of HIT vendors and software.18

The group did not reach consensus on whether formal regulation of EHR software would, on the whole, be beneficial or harmful. Plenary speaker, Rodeina Davis, CIO of the Blood Center of Wisconsin, discussed the experiences of the blood bank community with regulated software. She reported that the consensus of that community was that although regulation was necessary when it originally occurred, the advancements in software development methodologies have made formal regulation less advantageous.

Although meeting participants agreed that current EHR software, like all software, contains errors, there was debate about the impact of the rigidity and loss of nimbleness that formal software regulation entails. One anecdotal example given was that regulated software, such as that in some radiology systems or blood banking, is tied to a specific operating system version. As a result, vendors are prohibited from rapidly patching systems when the underlying operating system (eg, Windows) is compromised by a new virus or exploit. Given the evolving meaningful use requirements, and the mandate for interconnection, this inability to respond to novel threats concerned some participants. A loss of nimbleness can also impact the pace of innovation. While breakthrough innovation is possible in a highly regulated environment, conventional wisdom is that regulation slows innovation. All of the participants agreed that the current generation of EHR software was less than optimal, and that significant innovation would be necessary before EHRs could achieve the promise of radical transformation of the healthcare process. In the time available, participants could not reach closure on an optimal tradeoff between regulation and nimbleness.

Recommendations

Oversimplifying the challenges inherent in HIT design, development, and implementation can lead to potentially avoidable unintended consequences. There is an increasing body of knowledge indicating that errors can result from HIT systems' failure to support the inherent complexity and dynamism of clinical workflow. It is also critical to understand the cognitive factors at the individual and social levels that come into play when human beings interact with technology and ensure that these factors are taken into account early in the design and implementation of systems.

We present several recommendations aimed at helping stakeholders anticipate and avoid (or mitigate) the unintended consequences of HIT implementations and related policies. Three overarching themes of these recommendations are: the need for additional research into the causes, manifestations, and mitigation of unintended consequences of HIT implementation; the importance of coordination of efforts and sharing of results by the various stakeholders working in the field of HIT design and implementation; and the major role that the Federal Government plays in providing oversight and leadership. While several of the recommendations for additional research are multi-year projects, suggestions in the subsection focusing on Federal Government oversight and leadership specifically address studying the impact of HIT deployment as a result of ARRA/HITECH. These activities may require a shorter timeframe than other research activities proposed below in order to take advantage of the window of opportunity to study the impact of meaningful use and factors relating to the fast pace of upcoming HIT deployments generated by the federal funds.

Research agenda

Create a taxonomy to improve understanding of and develop consensus around terminology related to unintended consequences of HIT implementations

While acknowledging the inherent difficulty in unambiguously classifying unintended consequences, a taxonomy (including cognitive concepts) which documents a broad (albeit not exhaustive) array of these consequences will assist implementers and users of HIT as well as policymakers to view the range of potential unexpected, negative effects related to HIT implementations. It would be a useful tool to help build consensus around definitions of terms that are related (and potentially misused or misunderstood); for example, consequences that are anticipated versus unanticipated, direct versus indirect, desirable versus undesirable, etc. This, in turn, will aid in the development of workable approaches for identifying and mitigating unwanted consequences.

Conduct research to improve the ability to identify, anticipate, and avoid/mitigate unintended consequences

The implementation of complex HIT systems in complex healthcare organizations remains too much an art and too little a science. Research is needed to support ongoing identification of unintended consequences of HIT design and implementation efforts and the situations in which they are most likely to occur. These examinations would enable a better understanding of the risks inherent in applying technological solutions in clinical care settings, how these risks can be more effectively anticipated, and the actions that could be taken to reduce the chances of failure due to the risks. A first step would be to develop a list of common risk factors, drawn from the experience of HIT implementers in a wide variety of settings. Research may also be able to aid in the development of a predictive model that could help determine the extent to which unintended consequences are contributing factors to HIT success/failure.

Conduct cognitive-based research and development studies to relate HIT system design to management of unintended consequences

Although multiple studies have documented that the use of HIT changes care provider cognition, current knowledge of how to anticipate and manage these consequences in the naturally chaotic and complex health system is limited. Understanding the unintended consequences that can arise as a result of human interaction with complex technology can help guide strategies to reduce some of these consequences, and facilitate improved management of those that cannot be reduced, leading to enhanced performance and patient safety, and increased user acceptance. Current HIT systems have been found to often violate usability heuristics; thus, research is needed on the human–computer interface to address reported and anecdotal usability issues related to system design problems. For example, research is needed on the best ways to display, synthesize, and process the increasingly large amounts of health data that can be stored in HIT systems (eg, EHRs) so that the cognitive abilities of the humans using these systems are not overtaxed and the best possible practical use can be made of the data without generating additional problems.

Determine and disseminate best practices for HIT design

Efforts are needed to synthesize the results of existing and future studies on unintended consequences in order to capture, compile, and disseminate best practices and guidelines for designing HIT systems; these should include usability guidelines, as well as proven technical and organizational safeguards. Comparing the usability of different HIT solutions would help identify those system features and factors that contribute the most to the success of HIT, thus providing data needed for best practices.

Determine and disseminate optimal organizational strategies for HIT system implementation

Implementation of an HIT solution in organizations with complex clinical workflows brings with it many challenges and threats, including the risk of unintended consequences. A critically important component of an organization's preparation for an HIT implementation is a thorough review of its workflow processes, procedures, and role assignments; yet the complexity of the healthcare workflow makes it resistant to many conventional workflow modeling and automation approaches. Organizational strategies are needed to help prepare managers and users to anticipate, prevent, mitigate, or overcome negative consequences if they occur in the course of the implementation. For example, before embarking on a major implementation, an organizational readiness assessment and risk reduction plan should be carried out to determine whether the organization is fully prepared and cognizant of the possible consequences. A readiness assessment instrument/toolkit should be developed that addresses concerns in the multiple domains affected by the implementation.

Coordination and knowledge sharing

Develop a framework for sharing of experiences

The themes of ‘reinventing the wheel’ and ‘rediscovering best practices’ were raised with regard to learning about and applying knowledge of HIT-related unintended consequences. One model, described by Pronovost et al, proposes that the healthcare field coordinate national efforts to move patient safety forward by learning from the experience of the aviation industry. The Commercial Aviation Safety Team, a public/private partnership of safety officials and technical experts created after a 1995 plane crash to reduce fatal accidents, has been responsible for decreasing the average rate of fatal aviation accidents.49 Sittig et al have suggested the formation of a central clearinghouse to facilitate the creation and dissemination of national EHR safety benchmarks.50 While there was general agreement on the value of sharing HIT experiences, with Dr Blumenthal calling for the creation of a “learning community” by HIT practitioners during his plenary address, concern was also expressed about potential liability and litigation. The Aviation Safety Reporting System (http://asrs.arc.nasa.gov/) was mentioned repeatedly as a potential model system. That system has three critical features that need to be considered for any system designed to address patient safety issues in HIT: third party reporting, confidentiality, and limited liability protection. The open exchange of ideas is critical to the development and maintenance of optimal systems. Methods for identifying flaws in our current systems that take a punitive or regulatory approach will stifle this open exchange and will, ultimately, lead to self-protective behavior and inferior systems.

Federal Government oversight and leadership

Acknowledge the role and limitations of HIT

The Federal Government should take a leadership role in assuring that HIT is viewed as a strategic enabler of health system strengthening, but not the entire solution. Federal efforts should avoid fostering either a ‘technology for technology's sake’ attitude or a belief that technology will somehow ‘fix’ all of the healthcare system's ills. Rather, the Federal Government should encourage system designers and implementers to focus on the use of HIT to contribute to the ultimate goals of improvements in efficiency and outcomes. This approach should include an evidence-based assessment of HIT itself for both potential benefits and potential harms (eg, unintended consequences).

Undertake comparative effectiveness studies of HIT systems and implementations

Resources should be allocated to develop and implement the critical evaluative efforts noted above for systems purchased with ARRA-designated funds. For example, the Federal Government could fund the development and dissemination of validated approaches to measure implementation impact and help identify needed changes.

Identify and analyze effects of HIT-related policies

Analysis of intended and unintended consequences of ARRA/HITECH-related policies should be an integral component of Department of Health and Human Services (DHHS)/ONC efforts to promote HIT deployment in the U.S. It remains to be seen whether new Medicare and Medicaid incentives for meaningful use of HIT will ultimately result in quality improvements in health systems as well as patient outcomes. Thus, funding is needed to support research aimed at understanding the benefits and risks of these policies. This research will need to include rigorous monitoring and evaluation mechanisms to determine whether HIT meaningful use program goals are achieved, and also whether unintended consequences result at the population, patient, or provider level (eg, administrative burden on physicians and hospitals). The earlier that information is available about policy outcomes the better, with respect to reducing unintended consequences.11

Create a framework and designate official groups to help ensure the safety and effectiveness of HIT systems

The Federal Government, building on existing models and working with organizations active in the patient safety and quality of care areas, should lead in the development of processes, systems, and entities to ensure the safe and effective use of HIT. For example, experts argue that it will be necessary to develop a comprehensive monitoring and evaluation framework and the infrastructure to oversee EHR use and implementation. This framework could include features such as a system to report adverse events or potential safety hazards; a national investigative board, created to look into them and make findings public; a self-assessment tool for EHR users and implementing organizations; and enhanced EHR certification and onsite accreditation of EHRs via periodic inspections.50 Additionally, the interconnectivity of HIT that is envisioned and promoted by current legislation and regulation carries with it the need for HIT to be able to respond rapidly to evolving cyber-threats.

Promote additional information dissemination

Enhanced communication among multiple stakeholders in different sectors and disciplines will strengthen the collective ability to identify and address unintended consequences of HIT. Federal leadership is required to create incentives so that organizations will be more willing and able to share information about technical and organizational safeguards that address unintended consequences. Further, mechanisms are needed to facilitate sharing of the findings of HIT system implementers so that data captured by individual organizations can have broader impact.

Conclusions

Research on the challenges posed by implementing HIT in various care settings has already yielded much valuable data and many recommendations for identifying and ameliorating some of the unintended consequences related to these implementations. However, this knowledge has not sufficiently permeated the practice of HIT nationally; there has not been a concerted effort to collect, refine, and systematically disseminate these research findings to HIT implementers and users. Of further concern is the fact that this research has been conducted during a period of relatively slow adoption of HIT; with the current accelerated uptake of HIT, there may be new unintended consequences engendered by the rapidity of this technological and organizational change. The need for additional research into the unintended consequences of health IT implementations and the widespread dissemination of these findings is even more pressing now as, spurred by federal incentives, the nation moves into uncharted territory.

Weiner et al have called attention to and coined a term for what they describe as the ultimate of unintended consequences associated with HIT: ‘e-iatrogenesis,’ defined as “patient harm caused at least in part by the application of health information technology.” They urge the healthcare industry to acknowledge that HIT represents “a new 21st century vector for medical care-system induced harm—something we must all work to understand, measure, and mitigate.”51

Healthcare is a complex socio-technical system, and it is the nature of such systems that failures and other undesirable outcomes may be unavoidable.52 Understanding the limits and failures of decisions as we interact with HIT is important if we are to build robust systems and manage the risks of unintended consequences. Detection and correction of potential errors is an integral part of cognitive work in the complex healthcare workplace. Ongoing research, usability, and evaluation efforts should also focus on developing approaches and strategies to enhance the ability to recover from or manage any unintended adverse consequences. While harmful unintended consequences, like many errors deriving from complex systems, can never be completely eliminated, the consensus of the meeting was that they can and should be reduced from their present levels.

Response and action by the AMIA Board of Directors (BOD)

By convening the 2009 conference and disseminating this paper, AMIA has further delineated critical issues related to unintended consequences of HIT and related policy. The AMIA BOD reviewed the article and endorsed its findings, conclusions, and recommendations. The BOD will continue to encourage other organizations to work collaboratively to continue this important public discourse. In addition, AMIA will forward the article and its recommendations to DHHS organizations for their review and consideration.

Acknowledgments

AMIA would like to acknowledge the input of the many participants and presenters from the 2009 AMIA Health Policy Meeting on which this article is based. Nancy Lorenzi and Justin Starren co-chaired the Steering Committee for the meeting. Joan Ash, David Bates, Meryl Bloomrosen, Trevor Cohen, Don Detmer, Richard Dykstra, Vojtech Huser, Julie McGowan, Ebele Okwumabua, Vimla Patel, Doug Peddicord, Josh Peterson, David Pieczkiewicz, Trent Rosenbloom, Ted Shortliffe, Freda Temple, and Adam Wright served as members of the Steering Committee. They were actively involved in and provided valuable input to all aspects of the planning processes. The authors also wish to express their thanks to Freda Temple for her careful review and editing of all versions of this manuscript.

Footnotes

Funding: The following organizations generously supported the 2009 meeting upon which this article is based: Cattails Software, GSK, IMO, Lilly, MedAssurant, Netezza, Security Health Plan, and Westat.

Competing interests: None.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. Information related to the American Recovery and Reinvestment Act of 2009. Office of the National Coordinator for Health Information Technology. http://healthit.hhs.gov/portal/server.pt?open=512&objID=1233&parentname=CommunityPage&parentid=8&mode=2&in_hi_userid=10741&cached=true (accessed 28 Oct 2010).

- 2. Why health IT? Office of the National Coordinator for Health Information Technology. http://healthit.hhs.gov/portal/server.pt/community/healthit_hhs_gov__home/1204 (accessed 28 Oct 2010).

- 3. Ash JS, Berg M, Coiera E. Some unintended consequences of information technology in health care: the nature of patient care information system-related errors. J Am Med Inform Assoc 2004;11:104–12.

- 4. Ash JS, Sittig DF, Dykstra R, et al. The unintended consequences of computerized provider order entry: findings from a mixed methods exploration. Int J Med Inform 2009;78(Suppl 1):S69–76.

- 5. Ash JS, Sittig DF, Dykstra RH, et al. Categorizing the unintended sociotechnical consequences of computerized provider order entry. Int J Med Inform 2007;76(Suppl 1):S21–7.

- 6. Koppel R, Metlay JP, Cohen A, et al. Role of computerized physician order entry systems in facilitating medication errors. JAMA 2005;293:1197–203.

- 7. Ash JS, Sittig DF, Campbell EM, et al. Some unintended consequences of clinical decision support systems. AMIA Annu Symp Proc 2007:26–30.

- 8. Campion TR Jr, Waitman LR, May AK, et al. Social, organizational, and contextual characteristics of clinical decision support systems for intensive insulin therapy: a literature review and case study. Int J Med Inform 2010;79:31–43.

- 9. Harrison MI, Koppel R, Bar-Lev S. Unintended consequences of information technologies in health care–an interactive sociotechnical analysis. J Am Med Inform Assoc 2007;14:542–9.

- 10. Merton R. The unanticipated consequences of purposive social action. Am Sociol Rev 1936;1:894–904.

- 11. The Robert Wood Johnson Health Policy Fellowship Program. Unintended Consequences of Health Policy Programs and Policies: Workshop Summary. Washington, D.C.: National Academies Press, 2001. http://www.nap.edu/catalog.php?record_id=10192 (accessed 28 Oct 2010).

- 12. Hsu J, Price M, Huang J, et al. Unintended consequences of caps on Medicare drug benefits. N Engl J Med 2006;354:2349–59.

- 13. Pryor C, Seifert R, Gurewich D, et al. Commonwealth Fund. Unintended consequences: how federal regulations and hospital policies can leave patients in debt. 2003. http://www.accessproject.org/adobe/unintended_consequences.pdf (accessed 28 Oct 2010).

- 14. Healton CG, Vallone D, Cartwright J. Unintended consequences of tobacco policies. Implications for public health practice. Am J Prev Med 2009;37(2 Suppl):S181–2.

- 15. Sveiby K, Gripenberg P, Segercrantz B, et al. Unintended and undesirable consequences of innovation. XX ISPIM Conference, The Future of Innovation. Vienna: 2009. http://www.sveiby.com/articles/UnintendedconsequencesISPIMfinal.pdf (accessed 28 Oct 2010).

- 16. Davenport TH. Putting the enterprise into the enterprise system. Harv Bus Rev 1998;76:121–31.

- 17. Warne L. Conflict and politics and information systems failure: a challenge for information systems professionals and researchers. In: Socio-Technical and Human Cognition Elements of Information Systems. Hershey, PA: Idea Group Publishing, 2003:104–34.

- 18. Competitive RFTOP 0S25788: Anticipating Unintended Consequences of Health Information Technology and Health Information Exchange. Office of National Coordinator for Health Information Technology; https://www.fbo.gov/index?s=opportunity&mode=form&id=22633354ce0c9b300b832ff16c2658a7&tab=core&_cview=0 (accessed 28 Oct 2010).

- 19. Sittig DF, Campbell E, Guappone K, et al. Recommendations for monitoring and evaluation of in-patient computer-based provider order entry systems: results of a Delphi survey. AMIA Annu Symp Proc 2007:671–5.

- 20. Campbell EM, Sittig DF, Ash JS, et al. Types of unintended consequences related to computerized provider order entry. J Am Med Inform Assoc 2006;13:547–56.

- 21. Patterson ES, Cook RI, Render ML. Improving patient safety by identifying side effects from introducing bar coding in medication administration. J Am Med Inform Assoc 2002;9:540–53.

- 22. Koppel R, Wetterneck T, Telles JL, et al. Workarounds to barcode medication administration systems: their occurrences, causes, and threats to patient safety. J Am Med Inform Assoc 2008;15:408–23.

- 23. Patel VL, Arocha JF, Kaufman DR. A primer on aspects of cognition for medical informatics. J Am Med Inform Assoc 2001;8:324–43.

- 24. Horsky J, Kuperman GJ, Patel VL. Comprehensive analysis of a medication dosing error related to CPOE. J Am Med Inform Assoc 2005;12:377–82.

- 25. Patel VL, Kushniruk AW, Yang S, et al. Impact of a computer-based patient record system on data collection, knowledge organization, and reasoning. J Am Med Inform Assoc 2000;7:569–85.

- 26. Armijo D, McDonnell C, Werner K. Electronic Health Record Usability. Interface Design Considerations. James Bell Associates. The Altarum Institute, 2009. AHRQ Publication No. 09(10)-0091-2-EF.

- 27. Zheng K, Padman R, Johnson MP, et al. An interface-driven analysis of user interactions with an electronic health records system. J Am Med Inform Assoc 2009;16:228–37.

- 28. Sittig DF, Singh H. Eight rights of safe electronic health record use. JAMA 2009;302:1111–13.

- 29. Kushniruk A, Borycki E, Kuwata S, et al. Predicting changes in workflow resulting from healthcare information systems: ensuring the safety of healthcare. Healthc Q 2006;9 Spec No:114–18.

- 30. Borycki EM, Kushniruk AW, Keay L, et al. A framework for diagnosing and identifying where technology-induced errors come from. Stud Health Technol Inform 2009;148:181–7.

- 31. Lorenzi NM, Novak LL, Weiss JB, et al. Crossing the implementation chasm: a proposal for bold action. J Am Med Inform Assoc 2008;15:290–6.

- 32. Berg M. Implementing information systems in health care organizations: myths and challenges. Int J Med Inform 2001;64:143–56.

- 33. Kadry B, Sanderson IC, Macario A. Challenges that limit meaningful use of health information technology. Curr Opin Anaesthesiol. Published Online First: 2010;23:184–92.

- 34. Ash JS, Sittig DF, Poon EG, et al. The extent and importance of unintended consequences related to computerized provider order entry. J Am Med Inform Assoc 2007;14:415–23.

- 35. Rogers E. Diffusion of Innovations. 5th edn. New York: Simon and Schuster, 2003.

- 36. Rahimi B, Vimarlund V, Timpka T. Health information system implementation: a qualitative meta-analysis. J Med Syst 2009;33:359–68.

- 37. Campbell EM, Sittig DF, Guappone KP, et al. Overdependence on technology: an unintended adverse consequence of computerized provider order entry. AMIA Annu Symp Proc 2007. October 11: 94–8.

- 38. van der Sijs H, Aarts J, Vulto A, et al. Overriding of drug safety alerts in computerized physician order entry. J Am Med Inform Assoc 2006;13:138–47.

- 39. Koppel R. EMR entry error: not so benign. AHRQ WebM&M, Case & Commentary. 2009. http://www.webmm.ahrq.gov/case.aspx?caseID=199 (accessed 28 Oct 2010).

- 40. Joint Commission. Sentinel event alert. Safely implementing health information and converging technologies. Issue 42, 2008 Dec 11. http://www.jointcommission.org/SentinelEvents/SentinelEventAlert/sea_42.htm (accessed 28 Oct 2010).

- 41. Horsky J, Zhang J, Patel VL. To err is not entirely human: complex technology and user cognition. J Biomed Inform 2005;38:264–6.

- 42. Horsky J, Kaufman DR, Patel VL. When you come to a fork in the road, take it; strategy selection in order entry. AMIA Annu Symp Proc 2005:350–4.

- 43. Chapman GB, Elstein AS. Cognitive processes and biases in medical decision making. In: Chapman GB, Sonnenberg ES, eds. Decision-Making in Health Care: Theory, Psychology and Applications. Cambridge: Cambridge University Press, 2000:183–210.

- 44. Drucker P. They're not employees, they're people. Harv Bus Rev 2002: 5 http://www.peowebhr.com/Newsreleases/Harvard%20Business%20Review.pdf (accessed 28 Oct 2010).

- 45. Ludwick DA, Doucette J. Adopting electronic medical records in primary care: lessons learned from health information systems implementation experience in seven countries. Int J Med Inform 2009;78:22–31.

- 46. Kohn LT, Corrigan JM, Donaldson MS, eds. Committee on Quality of Health Care in America. Institute of Medicine. To err is human: building a safer health system. Washington, D.C.: National Academies Press, 2000.

- 47. U.S. Department of Health & Human Services. Health Information Privacy. HITECH breach notification interim final rule. http://www.hhs.gov/ocr/privacy/hipaa/understanding/coveredentities/breachnotificationifr.html (accessed 28 Oct 2010).

- 48. Robert Wood Johnson Foundation, Massachusetts General Hospital. Health information technology in the United States: on the cusp of change. Executive Summary. 2009. http://www.rwjf.org/healthreform/product.jsp?id=50308 (accessed 28 Oct 2010).

- 49. Pronovost PJ, Goeschel CA, Olsen KL, et al. Reducing health care hazards: lessons from the Commercial Aviation Safety Team. Health Aff (Millwood) 2009;28:w479–89. http://content.healthaffairs.org/cgi/content/abstract/hlthaff.28.3.w479 (accessed 28 Oct 2010).

- 50. Sittig DF, Classen DC. Safe electronic health record use requires a comprehensive monitoring and evaluation framework. JAMA 2010;303:450–1.

- 51. Weiner JP, Kfuri T, Chan K, et al. “e-Iatrogenesis”: the most critical unintended consequence of CPOE and other HIT. J Am Med Inform Assoc 2007;14:387–8.

- 52. Perrow C. Normal Accidents: Living with High Risk Technologies. Princeton, NJ: Princeton University Press, 1999.
