Abstract

Objective

Pharmacy clinical decision-support (CDS) software that contains drug–drug interaction (DDI) information may augment pharmacists' ability to detect clinically significant interactions. However, studies indicate these systems may miss some important interactions. The purpose of this study was to assess the performance of pharmacy CDS programs to detect clinically important DDIs.

Design

Researchers made on-site visits to 64 participating Arizona pharmacies between December 2008 and November 2009 to analyze the ability of pharmacy information systems and associated CDS to detect DDIs. Software evaluation was conducted to determine whether DDI alerts arose from prescription orders entered into the pharmacy computer systems for a standardized fictitious patient. The fictitious patient's orders consisted of 18 different medications that included 19 drug pairs of interest: 13 clinically significant DDIs and six non-interacting drug pairs.

Measurements

The sensitivity, specificity, positive predictive value, negative predictive value, and percentage of correct responses were measured for each of the pharmacy CDS systems.

Results

Only 18 (28%) of the 64 pharmacies correctly identified all eligible interactions and non-interactions. The median percentage of correct DDI responses was 89% (range 47–100%) for participating pharmacies. The median sensitivity to detect well-established interactions was 0.85 (range 0.23–1.0); median specificity was 1.0 (range 0.83–1.0); median positive predictive value was 1.0 (range 0.88–1.0); and median negative predictive value was 0.75 (range 0.38–1.0).

Conclusions

These study results indicate that many pharmacy clinical decision-support systems perform less than optimally with respect to identifying well-known, clinically relevant interactions. Comprehensive system improvements regarding the manner in which pharmacy information systems identify potential DDIs are warranted.

Introduction

According to the 2007 Institute of Medicine (IOM) report entitled, Preventing Medication Errors: Quality Chasm Series, approximately 1.5 million preventable adverse drug events (ADEs) occur annually in the USA.1 Many ADEs are unavoidable; however, recognition of potentially interacting drug pairs, and subsequent appropriate action, is essential to protecting the public's health and safety. Despite this, studies show that prescribers' ability to recognize well-documented drug interactions is limited.2 3 Prescribers commonly rely on pharmacists as a key source of drug-interaction information.3 4 However, research indicates that pharmacists' ability to identify important drug interactions is also lacking.5

Drug-interaction alerting is one of several types of computerized medication-related clinical decision-support (CDS) used by pharmacists to improve patient safety. Although DDI screening software may augment pharmacists' ability to detect clinically significant interactions, these systems are far from fail-safe, often alerting pharmacists to clinically insignificant drug interactions or failing to alert them to clinically important ones.6 7 Too many intrusive alerts are mentally draining and time-consuming, and lead providers to ignore both relevant and irrelevant warnings (‘alert fatigue’).2 8 9 Although high alert over-ride rates and alert fatigue are frequently discussed in the context of computerized prescriber order entry (CPOE), pharmacists have long experienced such challenges with medication-related CDS.10–15 For example, one study reported that most pharmacists over-rode the majority of DDI alerts (mean 73.8%).15

Few reports focus on the use of medication-related CDS by pharmacists. The purpose of this study was to evaluate the performance of DDI software programs currently in use in pharmacy practice.

Methods

This evaluation was conducted at participating community pharmacies throughout Arizona between December 2008 and November 2009. Pharmacies were eligible to participate if they were a University of Arizona College of Pharmacy rotation site.

Sixty-four pharmacies agreed to participate. Pharmacies were categorized as community, inpatient hospital or other (ie, non-hospital institutional, long-term care). Initial recruitment of retail ‘chain pharmacies,’ defined by the National Association of Chain Drug Stores as pharmacies belonging to a company that operates at least four retail pharmacies,16 was limited to two urban sites and one rural site per chain to prevent oversampling of this type of pharmacy. Recognizing that many of Arizona's retail pharmacies are part of national or regional chain operations and that all pharmacies under the same ownership are likely to use the same software systems, subsequent recruitment of retail chain pharmacies within the same chain ensued only when the results of the initial three sites differed; recruitment attempts continued until the DDI results from three pharmacies were identical. This study was deemed exempt from Human Subject Protection review by the University of Arizona's Institutional Review Board.

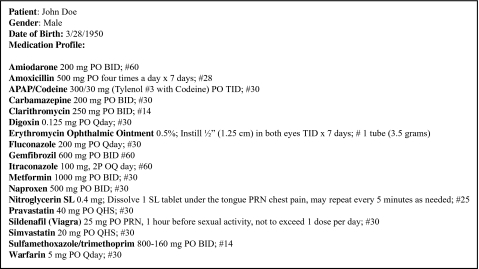

Researchers made on-site visits to participating pharmacies to analyze the ability of their information systems and associated CDS software to detect DDIs. To maintain consistency in data collection, detailed information about the computer-generated alerts was recorded on data-collection forms and augmented by screen prints for all participating pharmacies. Specifically, software evaluation was conducted by identifying DDI alerts that arose from prescription orders entered into the respective software systems for a standardized fictitious patient (figure 1). The fictitious orders consisted of 18 different medications that included 19 drug pairs of interest: 13 clinically significant DDIs and six non-interacting drug pairs.

Figure 1.

Fictitious patient profile.

The interacting medications, mainly oral cardiovascular medications, were chosen by consensus among the researchers based on their widespread use, clinical importance, propensity to cause adverse events, and level of documentation. A list of clinically important DDIs developed by Malone et al served as a starting-point for item selection,17 with a focus on relatively frequently coprescribed interacting drug pairs.18 Following a review of the primary literature and DDI-specific resources (Drug Interaction Facts,19 Drug Interactions Analysis and Management,20 The Top 100 Drug Interactions: A Guide to Patient Management,21 and Stockley's Drug Interactions22), an evidence-based summary was developed for each of the 13 interacting drug pairs. The four DDI compendia were selected on the basis that they are commonly used by clinicians, have been used in previous research, and were readily available to the authors.

The absence of an interaction was also verified for the remaining six drug pairs using the primary and tertiary literature. Two of the six non-interacting drug pairs contained ophthalmic erythromycin ointment. This medication was chosen to determine whether pharmacy software systems could discern interactions based on route of administration. For example, absorption of ophthalmic erythromycin typically does not result in an interaction with a systemic medication. Pravastatin was included in an attempt to distinguish whether pharmacy software classified the interaction based on a class effect (some statins interact with warfarin, whereas pravastatin does not) or interaction potential of the drug itself.

The presence or absence of an alert, regardless of its severity level, was the basis for determining whether a response was classified as correct. Sensitivity refers to the software program's ability to accurately recognize interacting drug pairs that are clinically significant, and was calculated by dividing the number of true positives by the number of clinically significant interacting drug pairs. Specificity refers to the software program's ability to disregard any pairs of medications not defined as clinically significant. This value was calculated by dividing the number of true negatives by the number of non-interacting drug pairs. Positive predictive value (PPV) is a measure of the usefulness of the alert: the probability that a DDI alert represents a true DDI. This was calculated by dividing the number of true positives by the sum of the number of true positives plus the number of false positives. Negative predictive value (NPV) is the probability that the absence of a DDI alert represents a true absence of a DDI. This was calculated by dividing the number of true negatives by the sum of the number of true negatives plus the number of false negatives. The number of correct responses, sensitivity, specificity, PPV, and NPV were calculated for individual pharmacies. In addition, the median sensitivity, specificity, PPV, NPV, and median percentage of correct responses were calculated for all participating pharmacies combined.
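The four measures defined above can be illustrated with a short sketch. The Python below is not the authors' analysis code (the study used Excel and Stata); the `PairResult` record, the `performance` function, and the example pharmacy are hypothetical names and values introduced only to show how the alert counts map to each measure.

```python
from dataclasses import dataclass


@dataclass
class PairResult:
    """One drug pair entered for the fictitious patient (hypothetical record)."""
    interacting: bool  # reference standard: clinically significant DDI?
    alerted: bool      # did the software show any DDI alert, at any severity?


def ratio(numerator, denominator):
    """Return numerator / denominator, or None when the denominator is zero."""
    return numerator / denominator if denominator else None


def performance(results):
    """Compute the five study measures from a list of PairResult records."""
    tp = sum(r.interacting and r.alerted for r in results)          # true positives
    fn = sum(r.interacting and not r.alerted for r in results)      # missed DDIs
    tn = sum(not r.interacting and not r.alerted for r in results)  # true negatives
    fp = sum(not r.interacting and r.alerted for r in results)      # false alerts
    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),
        "npv": ratio(tn, tn + fn),
        "fraction_correct": ratio(tp + tn, len(results)),
    }


# Made-up pharmacy: alerts on 11 of 13 interacting pairs and on 1 of 6
# non-interacting pairs (illustrative values, not study data).
example = (
    [PairResult(True, True)] * 11
    + [PairResult(True, False)] * 2
    + [PairResult(False, False)] * 5
    + [PairResult(False, True)] * 1
)
metrics = performance(example)
```

For this made-up pharmacy, sensitivity is 11/13 (about 0.85) and NPV is 5/7 (about 0.71). Because only six of the 19 pairs are non-interacting, each missed interaction lowers NPV quickly.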

In addition to whether an alert was generated for a specific DDI, the following information was gathered at each pharmacy: date of visit; location (urban or rural); software vendor; software version; date of most recent software update; and whether the pharmacist on duty believed any categories/levels of interactions were ‘suppressed’ or ‘turned off.’

A post-hoc analysis using a Kruskal–Wallis test was undertaken to determine if there were any statistically significant differences between community, inpatient, and ‘other’ pharmacies with respect to DDI sensitivity and specificity. Probability values less than 0.05 were considered statistically significant.
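As a rough illustration of this comparison, the sketch below computes the Kruskal–Wallis H statistic with the standard tie correction in plain Python. It is not the Stata procedure used in the study; the function names and the sensitivity values are hypothetical. The p-value shortcut relies on the chi-square approximation and applies only when three groups are compared (2 degrees of freedom), where the upper-tail probability equals exp(−H/2).

```python
import math
from collections import Counter


def kruskal_wallis_h(*groups):
    """Return (H, degrees_of_freedom) for two or more groups of numbers."""
    pooled = sorted(v for g in groups for v in g)
    n_total = len(pooled)

    # Assign each distinct value the mean of the ranks it occupies (ties share a rank).
    rank_of = {}
    i = 0
    while i < n_total:
        j = i
        while j < n_total and pooled[j] == pooled[i]:
            j += 1
        rank_of[pooled[i]] = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        i = j

    rank_term = sum(sum(rank_of[v] for v in g) ** 2 / len(g) for g in groups)
    h = 12 / (n_total * (n_total + 1)) * rank_term - 3 * (n_total + 1)

    # Tie correction: divide H by 1 - sum(t^3 - t) / (N^3 - N).
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    correction = 1 - ties / (n_total ** 3 - n_total)
    if correction == 0:  # every observation identical: no evidence of a difference
        return 0.0, len(groups) - 1
    return h / correction, len(groups) - 1


def p_value_three_groups(h):
    """Chi-square upper tail for 2 degrees of freedom (three groups only)."""
    return math.exp(-h / 2)


# Hypothetical per-pharmacy sensitivities for three pharmacy types (not study data).
community = [0.92, 1.00, 0.85, 0.77]
inpatient = [0.69, 0.38, 0.62]
other = [0.54, 0.23, 0.46]
h_stat, df = kruskal_wallis_h(community, inpatient, other)
significant = p_value_three_groups(h_stat) < 0.05
```

With small groups such as these, the chi-square approximation is coarse, so a statistics package would normally be preferred for the actual analysis.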

Descriptive statistics were summarized using Microsoft Excel 2007. Statistical comparisons were made using Stata software, version 10.0.

Results

Sixty-four pharmacies throughout Arizona participated in the study. Of those participating, 40 (63%) were community pharmacies, 14 (22%) were inpatient hospital pharmacies, and 10 (16%) pharmacies were classified as ‘other.’ Of the 40 community pharmacies, approximately two-thirds (n=27) were classified as retail ‘chain pharmacies.’ Those classified as ‘other’ included two infusion pharmacies, two pharmacies located in penal institutions, one long-term care pharmacy, two pharmacies associated with rehabilitative-care facilities, and three Indian Health Service facilities.

For all the participating pharmacies, the median percentage of correct DDI responses was 89% (range 47%–100%). Table 1 shows the number of correct responses by drug combination and pharmacy type.

Table 1.

Correct drug–drug interaction alert responses by combination and pharmacy type*

| Drug combinations | Correct responses, all pharmacies (n=64), N (%) | Correct responses, community pharmacies (n=40), N (%) | Correct responses, inpatient hospital pharmacies (n=14), N (%) | Correct responses, ‘other’ pharmacies (n=10), N (%) |
| --- | --- | --- | --- | --- |
| Clinically significant drug–drug interactions | | | | |
| Carbamazepine+clarithromycin | 57/64 (89) | 37/40 (93) | 13/14 (93) | 7/10 (70) |
| Digoxin+amiodarone | 55/64 (86) | 32/40 (80) | 13/14 (93) | 10/10 (100) |
| Digoxin+clarithromycin | 56/64 (88) | 36/40 (90) | 11/14 (79) | 9/10 (90) |
| Digoxin+itraconazole | 27/60 (45) | 20/39 (51) | 4/14 (29) | 3/7 (43) |
| Nitroglycerine+sildenafil | 51/63 (81) | 32/40 (80) | 12/14 (86) | 7/9 (78) |
| Simvastatin+amiodarone | 48/64 (75) | 32/40 (80) | 7/14 (50) | 9/10 (90) |
| Simvastatin+gemfibrozil | 54/64 (84) | 35/40 (88) | 12/14 (86) | 7/10 (70) |
| Simvastatin+itraconazole | 54/60 (90) | 36/39 (92) | 12/14 (86) | 6/7 (86) |
| Warfarin+amiodarone | 55/63 (87) | 34/40 (85) | 11/13 (85) | 10/10 (100) |
| Warfarin+fluconazole | 53/64 (83) | 34/40 (85) | 12/14 (86) | 7/10 (70) |
| Warfarin+gemfibrozil | 51/64 (80) | 35/40 (88) | 10/14 (71) | 6/10 (60) |
| Warfarin+naproxen | 45/64 (70) | 32/40 (80) | 4/14 (29) | 9/10 (90) |
| Warfarin+sulfamethoxazole/trimethoprim | 48/64 (75) | 27/40 (68) | 12/14 (86) | 9/10 (90) |
| Non-interacting pairs | | | | |
| Acetaminophen/codeine+amoxicillin | 64/64 (100) | 40/40 (100) | 14/14 (100) | 10/10 (100) |
| Carbamazepine+erythromycin ophthalmic | 57/64 (89) | 34/40 (85) | 13/14 (93) | 10/10 (100) |
| Metformin+erythromycin ophthalmic | 64/64 (100) | 40/40 (100) | 14/14 (100) | 10/10 (100) |
| Digoxin+sildenafil | 63/63 (100) | 40/40 (100) | 14/14 (100) | 9/9 (100) |
| Warfarin+digoxin | 64/64 (100) | 40/40 (100) | 14/14 (100) | 10/10 (100) |
| Warfarin+pravastatin | 62/62 (100) | 40/40 (100) | 14/14 (100) | 8/8 (100) |

*Four of the surveyed pharmacies (6%) were unable to enter at least one of the medications in the fictitious profile; either the medication was not on the formulary, or it was unavailable in their drug database. Consequently, the results for these pharmacies were calculated based on the drug pairs that were successfully entered into their respective systems.

Four of the surveyed pharmacies (6%) were unable to enter at least one of the medications in the fictitious profile; either the medication was not on the formulary, or it was unavailable in their drug database. Consequently, the results for these pharmacies were calculated based on the drug pairs that were successfully entered into their respective systems. Exclusion of a particular medication within a pharmacy software system negatively affected detection of two to four drug pairs, depending on the pharmacy.
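A minimal sketch of how the eligible drug pairs might be derived for a pharmacy that could not enter a medication. The pair list follows Table 1; the function name `eligible_pairs` and the data layout are assumptions made for illustration, not the authors' procedure.

```python
# The 19 drug pairs from Table 1: (drug_a, drug_b, clinically_significant_ddi)
PAIRS = [
    ("carbamazepine", "clarithromycin", True),
    ("digoxin", "amiodarone", True),
    ("digoxin", "clarithromycin", True),
    ("digoxin", "itraconazole", True),
    ("nitroglycerine", "sildenafil", True),
    ("simvastatin", "amiodarone", True),
    ("simvastatin", "gemfibrozil", True),
    ("simvastatin", "itraconazole", True),
    ("warfarin", "amiodarone", True),
    ("warfarin", "fluconazole", True),
    ("warfarin", "gemfibrozil", True),
    ("warfarin", "naproxen", True),
    ("warfarin", "sulfamethoxazole/trimethoprim", True),
    ("acetaminophen/codeine", "amoxicillin", False),
    ("carbamazepine", "erythromycin ophthalmic", False),
    ("metformin", "erythromycin ophthalmic", False),
    ("digoxin", "sildenafil", False),
    ("warfarin", "digoxin", False),
    ("warfarin", "pravastatin", False),
]


def eligible_pairs(unavailable):
    """Keep only the pairs whose two drugs could both be entered."""
    missing = set(unavailable)
    return [p for p in PAIRS if p[0] not in missing and p[1] not in missing]


# Example: a pharmacy whose drug database lacks itraconazole.
remaining = eligible_pairs({"itraconazole"})
n_interacting = sum(1 for _, _, is_ddi in remaining if is_ddi)
```

In this example two pairs drop out, leaving 17 eligible pairs (11 of them interacting), so sensitivity for that pharmacy would be computed out of 11 rather than 13.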

Eighteen of 64 pharmacies (28%) correctly classified all eligible interacting and non-interacting drug pairs. The digoxin and itraconazole drug pair was incorrectly identified more often than any other interacting pair. Only 45% (27 of 60) of participating pharmacies correctly classified this well-documented drug pair as a clinically significant drug interaction.23–30 Five of the six non-interacting drug pairs were correctly classified by all of the pharmacies. Carbamazepine and erythromycin ophthalmic was the only non-interacting pair that was misclassified: seven pharmacies (11%) generated an alert for this pair, of which six were community-based and one was an inpatient pharmacy.

Participating pharmacies' DDI software programs had a median sensitivity of 0.85 (range 0.23–1.0). These same programs had a median specificity of 1.0 (range 0.83–1.0), a median PPV of 1.0 (range 0.88–1.0), and a median NPV of 0.75 (range 0.38–1.0). Table 2 provides a summary of the performance of drug-interaction computer software by pharmacy type. The post-hoc analysis failed to detect any statistically significant differences in sensitivity or specificity between the community, inpatient, and ‘other’ pharmacies. The study may have been underpowered to detect such differences, since this was not a main focus of the research.

Table 2.

Performance of drug-interaction software by pharmacy type

| | Sensitivity | Specificity | Positive predictive value | Negative predictive value |
| --- | --- | --- | --- | --- |
| Community pharmacies (n=40)* | | | | |
| Median | 0.92 | 1.00 | 1.00 | 0.86 |
| Maximum | 1.00 | 1.00 | 1.00 | 1.00 |
| Minimum | 0.31 | 0.83 | 0.88 | 0.40 |
| Inpatient hospital pharmacies (n=14) | | | | |
| Median | 0.77 | 1.00 | 1.00 | 0.67 |
| Maximum | 1.00 | 1.00 | 1.00 | 1.00 |
| Minimum | 0.38 | 0.83 | 0.93 | 0.43 |
| ‘Other’ pharmacies (n=10)† | | | | |
| Median | 0.85 | 1.00 | 1.00 | 0.75 |
| Maximum | 1.00 | 1.00 | 1.00 | 1.00 |
| Minimum | 0.23 | 1.00 | 1.00 | 0.38 |

*Approximately two-thirds (n=27) of community pharmacies were classified as retail ‘chain pharmacies.’

†‘Other’ pharmacies included: two infusion pharmacies, two prison pharmacies, a long-term care pharmacy, two rehabilitative-care pharmacies, and three Indian Health Service facilities.

Of the pharmacies surveyed, a total of 24 different software vendors were represented, including both commercial and proprietary systems; Health Business Systems, PDX, and QS/1 were the three most commonly utilized software vendors. Performance of the DDI software systems varied both within and between vendors. Of the software vendors utilized by five or more pharmacies, none of the results were consistent across all sites. Two of the three most common vendors had perfect specificity across all sites (1.00), while the third vendor's specificity varied from 0.83 to 1.00. To exemplify the degree of variation observed within a single software vendor, the sensitivity of the software of one of the most common vendors in this study ranged from 0.54 to 1.00.

Of the pharmacists on duty who were able to respond to more detailed questioning about their software systems, fewer than half (40%) confirmed the suppression of some DDI alerts based on severity. The majority of pharmacists on duty at the time of data collection were knowledgeable about the frequency with which their software was updated; monthly was most common (44%).

Discussion

Research has demonstrated that without the use of a computer, DDI knowledge is poor among health professionals, including both physicians and pharmacists.2 3 5 Drug-interaction screening, a CDS tool, has been a mainstay of pharmacy software packages for many years and is now available in many computerized prescriber order-entry systems. These provider- and pharmacist-based DDI CDS systems share many similarities, including their source of drug information: the knowledge bases are created, maintained, and sold by a small number of firms (eg, First DataBank, Wolters Kluwer Health, Cerner, Thomson Reuters Healthcare). However, each firm typically relies on its own classification system for interactions.

Recently, much attention has focused on features that constitute a useful CDS tool, in part, due to the Office of the National Coordinator for Health Information Technology's task of advancing the adoption of health technology.31 The general consensus, from a recent CDS workshop, is that information presented by CDS must be pertinent and beneficial, and prevent overburdening the user with irrelevant alerts.31 For instance, drug-interaction screening was specifically mentioned as an area in which the sensitivity and specificity of software settings may have a significant impact on the quality and number of alerts presented. In the current study, the researchers observed a high level of variability in the performance of pharmacy software systems and, more importantly, the failure of some systems to detect well-documented DDIs.

This CDS software evaluation study provides insight into the poor performance of pharmacy systems in alerting pharmacists to clinically significant drug interactions. Additionally, the current results may address broader public safety concerns associated with the manner in which potential DDIs are detected within CDS systems.

Metzger et al evaluated the performance of electronic alerts arising from computerized prescriber order entry in hospitals, further exemplifying potential safety issues associated with the use of CDS systems.32 They also utilized fictitious patient profiles to examine the ability of software systems to detect various medication safety issues. However, Metzger et al examined a more comprehensive set of medication alerts, including but not limited to drug-allergy alerts, drug-diagnosis alerts, and therapeutic duplications. Despite differences in settings and types of alerts examined, both studies demonstrated significant variability in CDS system performance between and within vendors as well as the failure of some systems to detect clinically significant medication safety issues.

Suboptimal performance of the pharmacy DDI software systems in this study was confirmed, in part, by the failure of these systems to detect approximately one in seven clinically significant DDIs. The most poorly performing software system had a sensitivity of 0.23, meaning that approximately 77% of the DDIs evaluated would go undetected. Community pharmacies failed to detect approximately one in 12 clinically significant DDIs, while hospital pharmacy systems failed to detect approximately one in four DDIs. In addition, systems in other settings incorrectly categorized approximately one in seven of the DDIs evaluated. Based on the current study, it is evident that additional efforts are needed to improve the ability of pharmacy software systems to detect clinically significant DDIs.
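The ‘one in N’ figures are consistent with the median sensitivities in Table 2, assuming the miss rate is taken as one minus the median sensitivity (this reading is an inference from the reported values, not a calculation stated by the authors):

```latex
\begin{align*}
\text{All pharmacies:}\quad & 1 - 0.85 = 0.15, \quad 1/0.15 \approx 6.7 \quad (\text{about one in seven})\\
\text{Community:}\quad & 1 - 0.92 = 0.08, \quad 1/0.08 = 12.5 \quad (\text{about one in 12})\\
\text{Inpatient hospital:}\quad & 1 - 0.77 = 0.23, \quad 1/0.23 \approx 4.3 \quad (\text{about one in four})\\
\text{Other:}\quad & 1 - 0.85 = 0.15, \quad 1/0.15 \approx 6.7 \quad (\text{about one in seven})
\end{align*}
```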

Prior research on the accuracy and reliability of pharmacy software programs suggests that poor performance is due, in part, to the inability of these systems to warn pharmacists of potentially clinically significant DDIs.6 7 Cavuto et al determined the likelihood that pharmacists would fill prescriptions for two medications whose concurrent use was contraindicated (terfenadine and ketoconazole).33 Of the 48 pharmacies with computerized DDI screening, approximately one-third of pharmacies filled the two prescriptions. Hazlet et al evaluated nine community-pharmacy software programs and found that one-third of DDIs went undetected.7 Another study conducted by this research group in Tucson, Arizona evaluated pharmacy information systems in eight community pharmacies and five hospital pharmacies.6 For community pharmacies, the median sensitivity and specificity were 0.88 and 0.91, respectively; hospital pharmacies had a median sensitivity and specificity of 0.38 and 0.95, respectively.

The current study confirms continued variability in system performance across and within pharmacy organizations. This variability in CDS software system performance may be due, in part, to software customization by its users, at the pharmacist, pharmacy, or corporate level. Specifically, clinicians may ‘customize’ the software by suppressing certain categories or tiers of drug-interaction warnings in an attempt to minimize alert fatigue, a phenomenon caused by excessive warnings including irrelevant, non-significant, or repetitious alerts.11 34 35 Alert fatigue may compromise patient safety, especially if the CDS program presents excessive warnings (ie, low signal-to-noise ratios), thus causing clinician desensitization to warnings and even over-riding clinically significant warnings.9 The literature contains many studies documenting widespread dissatisfaction with alerts perceived as inappropriate, inconsequential, disruptive, or redundant and high rates (up to 89%) of ‘over-ridden’ DDI alerts.2 4 8 14 15 36–39 Despite well-documented research on issues with CDS program alerts, including the current study results, clinicians continue to face challenges when using this type of software.

The drug knowledge database may also be a source of variability in software performance; the database is integrated into the software and serves as a basis of its drug information. Currently, no universal standard exists for classifying the severity of drug interactions.40 Furthermore, many drug combinations have not been thoroughly studied; case reports, in vitro studies, and retrospective reviews are common in the drug-interaction literature.41 Consequently, research has demonstrated substantial variation among the major drug compendia regarding inclusion of drug interactions and assignment of severity levels to known interactions.40 42 In a comparison study of four drug-interaction compendia, only 2% of the major DDIs were included in all four compendia.40 The DDI knowledge bases tend to be highly inclusive with respect to drug-interaction alerts, focusing on the scope of drug-interaction alert coverage rather than the clinical significance and estimated rate of occurrence.8 43–45 The tendency for DDI knowledge bases to be more inclusive may be due, in part, to perceived potential for legal liability.

Compared with other previous relevant research, the methodology employed in the current study imparted several advantages. With the unit of analysis at the individual pharmacy level, no central corporate locations were included in the analysis. This design feature enabled researchers to examine more closely the variability within pharmacy chains. The relatively large number and variety of participating sites improved the generalizability of the results. Furthermore, researchers recorded all data on site, thereby mitigating opportunities for participants to misrepresent results.

There are limitations to this study that need to be considered when interpreting the results. The fictitious patient profile reflected a limited set of available medications; results are likely to vary based on the set of interactions evaluated. In addition, using a single patient profile may have caused many pharmacy software systems to generate additional DDI alerts (eg, amiodarone and clarithromycin) as well as alerts for therapeutic duplication (eg, pravastatin and simvastatin). However, these alerts for non-targeted drug combinations were not documented or analyzed. Some variability in the systems' performance may be due, in part, to software updates for clinical evidence of interaction potential that may have occurred during the data-collection period (almost 1 year). For example, pharmacies whose site visits occurred earlier in the data collection period may have been using an older software version than those pharmacies visited in the latter part of the study. Updated software may account for some of the differences in the number of DDIs detected by the various software systems over the study period and, in particular, between the early and later site visits.

The generalizability of the results may be limited because a non-random process was used to recruit pharmacies. All participating pharmacies were affiliated with the university and located within the state of Arizona. Computer software systems installed in other pharmacies may not be comparable; however, many of the pharmacies evaluated were national retail chain pharmacies. In addition, future studies should include objective verification of the pharmacy software vendor, ascertainment of knowledge base vendor used by the pharmacy software, and the date of the last update.

Conclusion

We found that comprehensive system improvements are essential to improve the manner in which pharmacy information systems identify potential DDIs. Moreover, issues surrounding the reliability of pharmacy systems are likely to extend to other systems that utilize drug-interaction screening algorithms. Additional research is warranted to improve pharmacists' ability to detect DDIs, to prevent potential adverse events, and to protect patient health and safety.

Acknowledgments

The authors thank the participating pharmacists and pharmacies.

Footnotes

Funding: This study was funded by the Agency for Healthcare Research and Quality (# 5 U18 HS017001-02).

Ethics approval: This study was conducted with the approval of the University of Arizona.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. Committee on Identifying and Preventing Medication Errors. Preventing Medication Errors: Quality Chasm Series. Washington, DC: National Academies Press, 2007

- 2. Glassman PA, Simon B, Belperio P, et al. Improving recognition of drug interactions: benefits and barriers to using automated drug alerts. Med Care 2002;40:1161–71

- 3. Ko Y, Malone DC, Skrepnek GH, et al. Prescribers' knowledge of and sources of information for potential drug–drug interactions: a postal survey of US prescribers. Drug Saf 2008;31:525–36

- 4. Ko Y, Abarca J, Malone DC, et al. Practitioners' views on computerized drug–drug interaction alerts in the VA system. J Am Med Inform Assoc 2007;14:56–64

- 5. Weideman RA, Bernstein IH, McKinney WP. Pharmacist recognition of potential drug interactions. Am J Health Syst Pharm 1999;56:1524–9

- 6. Abarca J, Colon LR, Wang VS, et al. Evaluation of the performance of drug–drug interaction screening software in community and hospital pharmacies. J Manag Care Pharm 2006;12:383–9

- 7. Hazlet TK, Lee TA, Hansten PD, et al. Performance of community pharmacy drug interaction software. J Am Pharm Assoc (Wash) 2001;41:200–4

- 8. Shah NR, Seger AC, Seger DL, et al. Improving acceptance of computerized prescribing alerts in ambulatory care. J Am Med Inform Assoc 2006;13:5–11

- 9. van der Sijs H, Aarts J, Vulto A, et al. Overriding of drug safety alerts in computerized physician order entry. J Am Med Inform Assoc 2006;13:138–47

- 10. Abarca J, Malone DC, Skrepnek GH, et al. Community pharmacy managers' perception of computerized drug–drug interaction alerts. J Am Pharm Assoc (2003) 2006;46:148–53

- 11. Cash JJ. Alert fatigue. Am J Health Syst Pharm 2009;66:2098–101

- 12. Chaffee BW. Future of clinical decision support in computerized prescriber order entry. Am J Health Syst Pharm 2010;67:932–5

- 13. Chrischilles EA, Fulda TR, Byrns PJ, et al. The role of pharmacy computer systems in preventing medication errors. J Am Pharm Assoc (Wash) 2002;42:439–48

- 14. Chui MA, Rupp MT. Evaluation of online prospective DUR programs in community pharmacy practice. J Manag Care Pharm 2000;6:27–32

- 15. Murphy JE, Forrey RA, Desiraju U. Community pharmacists' responses to drug–drug interaction alerts. Am J Health Syst Pharm 2004;61:1484–7

- 16. National Association of Chain Drug Stores, Inc. 2009. http://www.nacds.org/wmspage.cfm?parm1=6421

- 17. Malone DC, Abarca J, Hansten PD, et al. Identification of serious drug–drug interactions: results of the partnership to prevent drug–drug interactions. J Am Pharm Assoc (2003) 2004;44:142–51

- 18. Malone DC, Hutchins DS, Haupert H, et al. Assessment of potential drug–drug interactions with a prescription claims database. Am J Health Syst Pharm 2005;62:1983–91

- 19. Drug Interaction Facts. St Louis, MO: Wolters Kluwer Health, 2008

- 20. Hansten PD, Horn JR. Drug Interactions Analysis and Management. St Louis, MO: Wolters Kluwer Health, 2008

- 21. Hansten PD, Horn JR. The Top 100 Drug Interactions. A Guide to Patient Management. 2008 edn Freeland, WA: H&H Publications, LLP, 2008

- 22. Baxter K, ed. Stockley's Drug Interactions 8 [CD-ROM]. London: Pharmaceutical Press, 2008

- 23. Cone LA, Himelman RB, Hirschberg JN, et al. Itraconazole-related amaurosis and vomiting due to digoxin toxicity. West J Med 1996;165:322.

- 24. Jalava KM, Partanen J, Neuvonen PJ. Itraconazole decreases renal clearance of digoxin. Ther Drug Monit 1997;19:609–13

- 25. Partanen J, Jalava KM, Neuvonen PJ. Itraconazole increases serum digoxin concentration. Pharmacol Toxicol 1996;79:274–6

- 26. Kauffman CA, Bagnasco FA. Digoxin toxicity associated with itraconazole therapy. Clin Infect Dis 1992;15:886–7

- 27. Lopez F, Hancock EW. Nausea and malaise during treatment of coccidioidomycosis. Hosp Pract (Minneap) 1997;32:21–2

- 28. McClean KL, Sheehan GJ. Interaction between itraconazole and digoxin. Clin Infect Dis 1994;18:259–60

- 29. Rex J. Itraconazole–digoxin interaction. Ann Intern Med 1992;116:525.

- 30. Sachs MK, Blanchard LM, Green PJ. Interaction of itraconazole and digoxin. Clin Infect Dis 1993;16:400–3

- 31. Clinical Decision Support Workshop Meeting Summary: August 25–26, 2009. Washington, DC: Office of the National Coordinator for Health Information Technology, Department of Health & Human Services, 2010. http://healthit.hhs.gov/portal/server.pt/gateway/PTARGS_0_11113_898639_0_0_18/ONC%20CDS%20Workshop%20Meeting%20Summary_f.pdf

- 32. Metzger J, Welebob E, Bates DW, et al. Mixed results in the safety performance of computerized physician order entry. Health Aff (Millwood) 2010;29:655–9

- 33. Cavuto NJ, Woosley RL, Sale M. Pharmacies and prevention of potentially fatal drug interactions. JAMA 1996;275:1086–7

- 34. Horn JR, Hansten PD. Reducing drug interaction alerts: not so easy. Pharmacy Times June 2007:34

- 35. Institute for Safe Medication Practices. Heed this Warning! Don't Miss Important Computer Alerts. ISMP Med Saf Alert. 2007; 12(3);1–2 2010. http://www.ismp.org/newsletters/acutecare/archives/NL_20070208.pdf

- 36. Payne TH, Nichol WP, Hoey P, et al. Characteristics and override rates of order checks in a practitioner order entry system. Proc AMIA Symp 2002:602–6

- 37. Weingart SN, Toth M, Sands DZ, et al. Physicians' decisions to override computerized drug alerts in primary care. Arch Intern Med 2003;163:2625–31

- 38. Isaac T, Weissman JS, Davis RB, et al. Overrides of medication alerts in ambulatory care. Arch Intern Med 2009;169:305–11

- 39. Magnus D, Rodgers S, Avery AJ. GPs' views on computerized drug interaction alerts: questionnaire survey. J Clin Pharm Ther 2002;27:377–82

- 40. Abarca J, Malone DC, Armstrong EP, et al. Concordance of severity ratings provided in four drug interaction compendia. J Am Pharm Assoc (2003) 2004;44:136–41

- 41. Pham PA. Drug–drug interaction programs in clinical practice. Clin Pharmacol Ther 2008;83:396–8

- 42. Fulda TR, Baluck R, Vander zanden J, et al. Disagreement among drug compendia on inclusion and ratings of drug–drug interactions. Curr Ther Res Clin Exp 2000;61:540–8

- 43. Kuperman GJ, Reichley RM, Bailey TC. Using commercial knowledge bases for clinical decision support: opportunities, hurdles, and recommendations. J Am Med Inform Assoc 2006;13:369–71

- 44. Reichley RM, Seaton TL, Resetar E, et al. Implementing a commercial rule base as a medication order safety net. J Am Med Inform Assoc 2005;12:383–9

- 45. Miller RA, Gardner RM, Johnson KB, et al. Clinical decision support and electronic prescribing systems: a time for responsible thought and action. J Am Med Inform Assoc 2005;12:403–9