Abstract

Animals assess the values of rewards to learn and choose the best possible outcomes. We studied how single neurons in the primate amygdala coded reward magnitude, an important variable determining the value of rewards. A single, Pavlovian-conditioned visual stimulus predicted fruit juice to be delivered with one of three equiprobable volumes (P = 1/3). A population of amygdala neurons showed increased activity after reward delivery, and almost one half of these responses covaried with reward magnitude in a monotonically increasing or decreasing fashion. A subset of the reward responding neurons were tested with two different probability distributions of reward magnitude; the reward responses in almost one half of them adapted to the predicted distribution and thus showed reference-dependent coding. These data suggest parametric reward value coding in the amygdala as a characteristic component of its function in reinforcement learning and economic decision making.

INTRODUCTION

Reward is central to processes underlying reinforcement learning, approach behavior, and decision making. Behavioral reactions vary depending on the amount of received outcome. Blaise Pascal defined expected value in 1654 as the sum of the probability-weighted possible values (anticipated mean) of a probability distribution. He famously noted that humans tend to maximize this variable when making decisions about future outcomes. Subsequent concepts used monotonic transformations of outcome value, such as utility and prospect, but confirmed the essential nature of reward valuation (Bernoulli 1738; Kahneman and Tversky 1984; Von Neumann and Morgenstern 1944). Animals rationally and consistently prefer larger compared with smaller rewards (Boysen et al. 2001; Collier 1982; Watanabe et al. 2001), suggesting that reward magnitude is an important component of reward value. Therefore studying the coding of reward magnitude may help to advance our understanding of the neurophysiological mechanisms underlying the valuation of reward for choices between differently rewarded options.

The amygdala is known to be involved in the processing of aversive events and in mediating fear conditioning (Medina et al. 2002). However, it is also a key brain structure for reward. Amygdala lesions disrupt behavioral reward processes and associated brain activations in humans (Bechara et al. 1999; Hampton et al. 2007; Johnsrude et al. 2000) and impair many aspects of reward related behavior in animals (Baxter et al. 2000; Everitt et al. 1991; Gaffan et al. 1993; Parkinson et al. 2001). Primate amygdala neurons respond to reward predicting stimuli irrespective of visual stimulus properties, code reward prediction errors, track state value, and process reward prediction based on contingency rather than stimulus-reward pairing (monkey: Belova et al. 2007; Bermudez and Schultz 2010; Nishijo et al. 1988; Paton et al. 2006; Sanghera et al. 1979; Sugase-Miyamoto and Richmond 2005; rat: Carelli et al. 2003; Schoenbaum et al. 1999; Tye and Janak 2007). Post-training inactivation of the amygdala in rats attenuates behavioral responses to reductions of reward, suggesting an involvement in assessing or consolidating changes in reward magnitude (Salinas et al. 1993). Amygdala neurons in monkeys distinguish between small and large rewards (Belova et al. 2008), and amygdala neurons in rats performing in a radial arm maze show differential activity between arms providing small or large rewards (Pratt and Mizumori 1998). Together, these data show a role of the amygdala in the processing of reward information, but we know rather little about the specific reward variable being encoded.

This study was based on the rationale that tests for neuronal coding require variations of the crucial variables of the studied phenomenon. A temporal response to the appearance of reward as such carries rather little information about the particular reward variable being encoded. Reward neurons in several brain structures encode reward variables such as value and risk separately and distinguish between different forms of value (Fiorillo et al. 2003; Lau and Glimcher 2008; Padoa-Schioppa and Assad 2006; Samejima et al. 2005). The neuronal coding of a basic reward variable such as value can be tested by varying the magnitude (volume) of attractive liquids. The most straightforward form of neuronal coding consists in a monotonic, linear relationship between the variable and the response, which can be adequately modeled with linear regressions. However, outcomes of behavior are often appreciated in relation to external references, such as other available reward values (Kahneman and Tversky 1984), and may therefore show additional, nonmonotonic changes when references change. This experiment assessed the role of the primate amygdala in the processing of reward value by testing monotonic relationships to reward magnitude in a simple Pavlovian task and, in addition, probing the adaptation to external references.

METHODS

Animals

Two adult male Macaca mulatta monkeys weighing 4.4 and 6.7 kg served for the experiment. All procedures conformed to National Institutes of Health Guidelines and were approved by the Home Office of the United Kingdom. These animals served also to study reward contingency (Bermudez and Schultz 2010), but all neurons reported here were tested only with reward magnitude.

Behavioral task

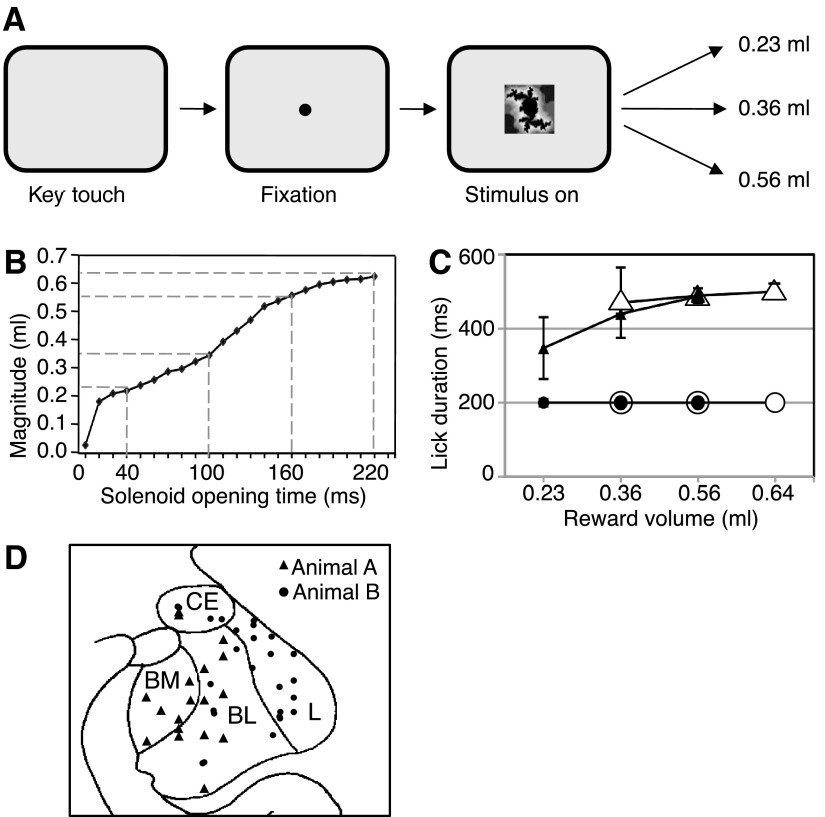

Each trial started when the animal contacted a touch-sensitive key. A 1.3° ocular fixation spot appeared at the center of a computer monitor, and the animal fixated the spot with its eyes. At 1,150 ms plus a mean of 500 ms (truncated exponential distribution) after fixation spot onset, a central 7° visual stimulus appeared with the fixation spot superimposed. An infrared optical system tracked eye position with 5-ms resolution (Iscan). After a fixed interval of 1.5, 1.8, or 2.0 s after stimulus onset, a small volume of raspberry juice reward was delivered via an electromagnetic solenoid valve; the interval was kept constant for several weeks or months. The stimulus predicted three equiprobable (P = 1/3), pseudorandomly varying liquid volumes. For the main experiment, these volumes were 0.23, 0.36, and 0.56 ml (reward probability distribution A predicted by stimulus A; Fig. 1A). To deliver liquid volumes accurately, we regularly calibrated the solenoid valve by adjusting its opening durations, which for all volumes used ranged from 10 to 220 ms (Fig. 1B). The stimulus and fixation spot extinguished at the same time as the solenoid valve closed. The intertrial interval, from reward offset to next stimulus onset, was fixed at 4.0 s. All correctly performed trials were rewarded. Key release or lack or break of ocular fixation during the fixation spot or stimulus was considered as error and led to trial abortion, no reward, and trial repetition.

Fig. 1.

Task, licking, and recording sites. A: sequence of task events in the main experiment. The single stimulus predicted low, intermediate, or high magnitude of liquid reward with equal probability (P = 1/3) drawn semirandomly from distribution A. B: calibration of liquid reward magnitude as a function of solenoid valve opening time. The dashed lines indicate 4 typical values used for distributions A (main experiment) and B (additional for adaptation test). C: median lick durations during stimulus (●) and after reward onset (▴) for main distribution A (195 trials from 12 trial blocks). ○ and ▵, licking with the additional distribution B (adaptation test). The 95% CIs were (from left to right): stimulus A, 0.3, 0.1, and 0.1 (too small for error bars); stimulus B, 0.25, 0.25, and 0.1; rewards A, 83.3 (error bar), 0.4, and 0.5; rewards B, 95.4, 20.1, and 19.0. Trials were grouped according to the 2 distributions and the actual reward delivered (abscissa). D: histological reconstruction of recording sites in the amygdala for magnitude coding neurons in animal A, with approximate positions for animal B superimposed (total n = 56 neurons). CE, central nucleus; L, lateral nucleus; BL, basolateral nucleus; BM, basomedial nucleus.

Adaptation to predicted reward distributions was tested in separate trial blocks from the main experiment. We used a second probability distribution of reward magnitudes (B) indicated by a different stimulus B in pseudorandom alternation with distribution A. Distribution B consisted of 0.36, 0.56, and 0.64 ml (each P = 1/3).
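As a worked illustration, the expected values of the two distributions follow from the probability-weighted sum of their magnitudes. The sketch below is an illustrative aid rather than part of the original analysis; the function name `expected_value` is hypothetical.

```python
def expected_value(magnitudes_ml, probabilities):
    """Probability-weighted sum of reward magnitudes (ml)."""
    return sum(m * p for m, p in zip(magnitudes_ml, probabilities))

# Three equiprobable magnitudes per distribution (P = 1/3 each).
probs = [1.0 / 3.0] * 3
dist_a = [0.23, 0.36, 0.56]  # ml, predicted by stimulus A
dist_b = [0.36, 0.56, 0.64]  # ml, predicted by stimulus B

ev_a = expected_value(dist_a, probs)
ev_b = expected_value(dist_b, probs)
print(f"EV(A) = {ev_a:.3f} ml, EV(B) = {ev_b:.3f} ml")
```

Under these values, distribution B has the higher expected value (about 0.52 ml vs. about 0.38 ml for distribution A), so the two shared magnitudes (0.36 and 0.56 ml) occupy different ranks within each distribution.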

Neuronal recordings

A head holder and recording chamber were fixed to the skull under general anesthesia and aseptic conditions. Single moveable tungsten microelectrodes served to record the activity of single neurons during task performance. We estimated the position of the amygdala from bone marks on frontal and lateral radiographs taken with an electrode guide cannula inserted at known coordinates relative to the stereotaxically implanted chamber (Aggleton and Passingham 1981). Electrode positions were reconstructed in one animal from small electrolytic lesions (15–20 μA × 20–60 s) on 50-μm-thick, cresyl violet–stained histological brain sections. For reasons of ongoing experimentation, we reconstructed recording positions in the second animal approximately from radiographic images. We collapsed recording sites from both monkeys spanning 3 mm in the anterior–posterior dimension onto the same coronal outline.

Data acquisition and analysis

Animals performed at least eight correct trials of each type during neuronal recordings (mean n = 15 correct trials). Tongue interruptions of an infrared light beam at the liquid spout served to monitor lick movements (0.5-ms resolution, STM Sensor Technology). We measured the total duration of tongue interruptions during the stimulus before reward delivery (1.5, 1.8, or 2.0 s) to assess anticipatory licking and during 1,000 ms immediately after reward onset to assess consummatory licking. We evaluated lick durations in individual trials in each trial block with the nonparametric, one-tailed Wilcoxon test (P < 0.05; normalized durations) and ANOVA (P < 0.05; 1-way and 2-way ANOVAs).

The analysis of neuronal activity advanced in two consecutive steps. First, we identified responses in individual neurons by testing for significant activity differences between a test and a control period in the same trials of any type using the paired, nonparametric, two-tailed Wilcoxon test (P < 0.05). For reward responses, the test period was a fixed 400-ms window immediately after reward onset, and the control period was a 400-ms window immediately preceding reward. This control period was beyond any stimulus responses observed in this study. However, a few neurons showed slightly elevated activity preceding the reward; in these cases, we used a 400-ms control period immediately preceding the stimulus. For stimulus responses, we tested activity in a 400-ms window after stimulus onset against control activity during 400 ms immediately preceding the stimulus. We eliminated all neurons whose activity during the control periods varied significantly between trial types (P < 0.05; 1-way ANOVA; 3 neurons).
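The window comparison described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the original analysis code: spike times are assumed to be in milliseconds and aligned to reward onset (time 0), and the function names and example spike times are hypothetical.

```python
def rate_in_window(spike_times_ms, start_ms, end_ms):
    """Firing rate (impulses/s) in the half-open window [start_ms, end_ms)."""
    n_spikes = sum(1 for t in spike_times_ms if start_ms <= t < end_ms)
    return n_spikes * 1000.0 / (end_ms - start_ms)

def control_and_test_rates(trials, window_ms=400.0):
    """Return one (control, test) rate pair per trial.

    trials: list of spike-time lists (ms), aligned so reward onset = 0.
    Control = window immediately preceding reward; test = window after it.
    """
    pairs = []
    for spikes in trials:
        control = rate_in_window(spikes, -window_ms, 0.0)
        test = rate_in_window(spikes, 0.0, window_ms)
        pairs.append((control, test))
    return pairs

# Hypothetical spike times for two trials (not recorded data).
example_trials = [
    [-350.0, -120.0, 40.0, 90.0, 150.0, 310.0],
    [-200.0, 25.0, 60.0, 220.0],
]
pairs = control_and_test_rates(example_trials)
print(pairs)
```

The resulting per-trial pairs are the kind of input a paired signed-rank test would take (for example `scipy.stats.wilcoxon`, if SciPy is available).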

The second step of neuronal data analysis assessed neuronal coding only in those neurons that showed a significant reward response defined by the Wilcoxon test. A single linear regression served to assess monotonic relationships to the three magnitudes during the same 400-ms postreward period as the Wilcoxon test, identifying significant slope deviations from 0 with Student's t-test.
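A regression of this kind can be sketched with ordinary least squares. The code below is an illustrative sketch, not the original analysis: the function name `slope_t_statistic` and the example firing rates are hypothetical, and the per-trial layout of the data is an assumption.

```python
import math

def slope_t_statistic(x, y):
    """Ordinary least-squares fit of y on x.

    Returns (slope, intercept, r_squared, t, df), where t is the
    statistic for testing the slope against 0 with df = n - 2.
    """
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    syy = sum((yi - mean_y) ** 2 for yi in y)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    df = n - 2
    se_slope = math.sqrt(ss_res / df / sxx)  # standard error of the slope
    r_squared = 1.0 - ss_res / syy
    return slope, intercept, r_squared, slope / se_slope, df

# Hypothetical per-trial data: reward magnitude (ml) and postreward rate (imp/s).
magnitudes = [0.23, 0.23, 0.36, 0.36, 0.56, 0.56]
rates = [4.0, 6.0, 9.0, 11.0, 17.0, 19.0]
slope, intercept, r2, t_stat, df = slope_t_statistic(magnitudes, rates)
print(f"slope = {slope:.2f}, R^2 = {r2:.3f}, t({df}) = {t_stat:.2f}")
```

The P value would then be read from Student's t distribution with n − 2 degrees of freedom (for example with `scipy.stats.t.sf`, if SciPy is available); that step is omitted here to keep the sketch free of external dependencies.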

In addition to assessing monotonic coding by the regression, we used a one-way ANOVA on all significant, Wilcoxon-defined reward responses to show neuronal response variations across the three magnitudes irrespective of monotonicity (P < 0.05). Alternative testing with the nonparametric (1-way) Kruskal-Wallis test confirmed the ANOVA results and will not be further reported.

We tested reference-dependent adaptation to the two distributions in responses to reward delivery that were both significant in the Wilcoxon test and showed monotonic coding in the subsequent linear regression. We assessed the responses to the two reward magnitudes that were identical in both distributions (0.36 and 0.56 ml) and compared the responses between the two distributions (P < 0.05; 2-tailed Mann-Whitney test). The few observed stimulus responses were compared between the two distributions using also a two-tailed Mann-Whitney test (P < 0.05).

To analyze population responses, we normalized neuronal activity in several steps. First, we expressed the strength of reward responses as percentage of control activity. We used this normalization as initial step for the analysis shown in Fig. 2, E and F, and as basis for Fig. 4, B–E. In the second step, we used the response strengths determined in step 1 to normalize the population data for the regressions on reward responses shown in Fig. 2, E and F. We divided the response strength for a given reward magnitude by the response strength for the highest or lowest of the three magnitudes (reference response), depending on positive or negative response slope, respectively. Then we set the reference response to 1, expressed each response as a fraction of the respective reference response, and regressed the data separately for positive and negative responses slopes (Fig. 2, E and F, respectively).
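The two normalization steps can be sketched as below. This is one possible reading of the procedure, with hypothetical function names and made-up firing rates; in particular, "percentage of control activity" is taken here to mean 100 × test/control, which is an assumption.

```python
def percent_of_control(test_rate, control_rate):
    """Step 1: reward response expressed as a percentage of control activity."""
    return 100.0 * test_rate / control_rate

def normalize_to_reference(strength_by_magnitude, positive_slope):
    """Step 2: divide each response strength by the reference response.

    The reference is the response to the highest magnitude for positive
    slopes and to the lowest magnitude for negative slopes, so the
    reference response itself becomes 1.
    """
    magnitudes = sorted(strength_by_magnitude)
    ref_magnitude = magnitudes[-1] if positive_slope else magnitudes[0]
    reference = strength_by_magnitude[ref_magnitude]
    return {m: strength_by_magnitude[m] / reference for m in magnitudes}

# Hypothetical mean rates (imp/s) for one positively coding neuron.
control_rate = 4.0
test_rates = {0.23: 6.0, 0.36: 9.0, 0.56: 12.0}

strengths = {m: percent_of_control(r, control_rate) for m, r in test_rates.items()}
normalized = normalize_to_reference(strengths, positive_slope=True)
print(strengths)
print(normalized)
```

With these made-up rates, the response to 0.56 ml serves as the reference (value 1), and the responses to the smaller magnitudes are expressed as fractions of it.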

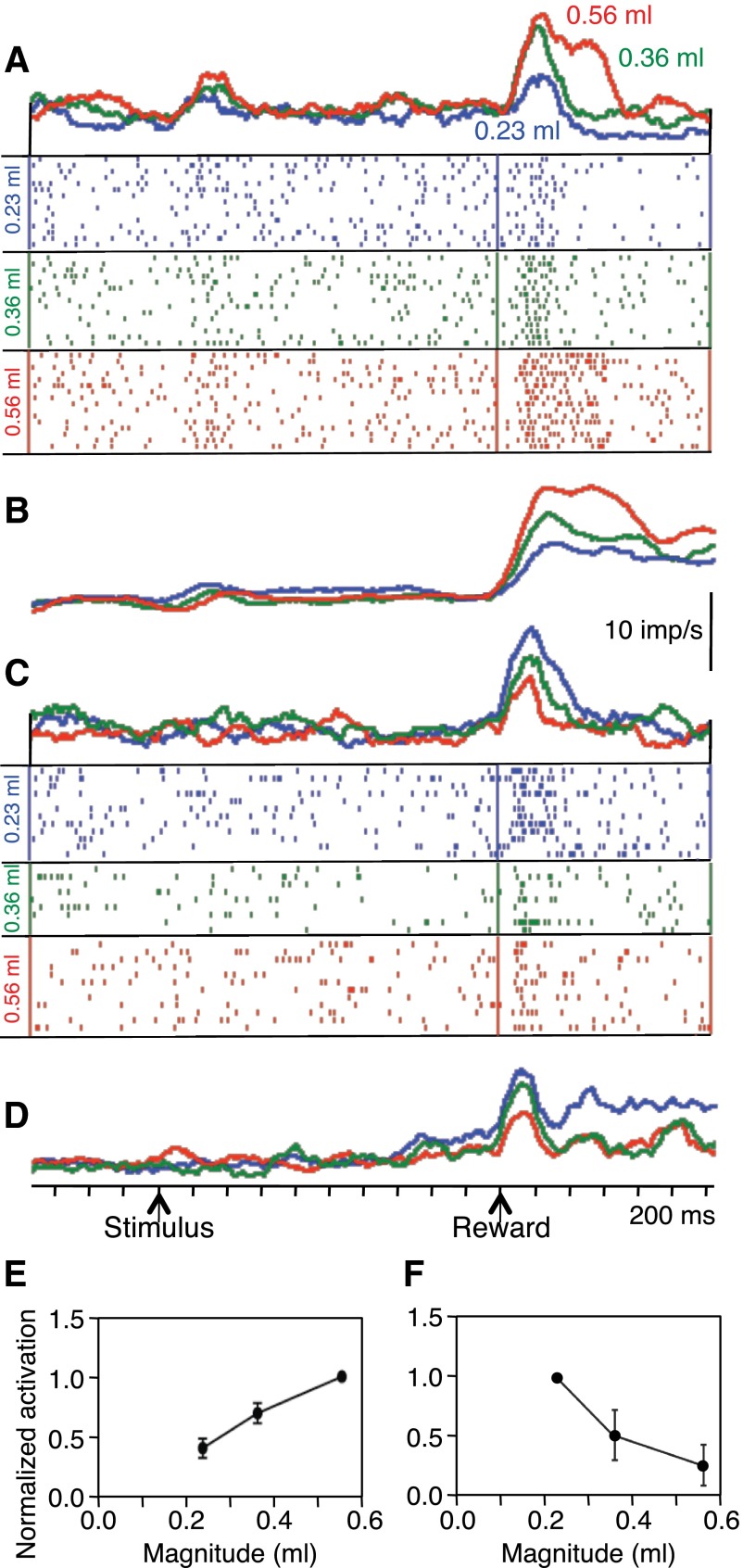

Fig. 2.

Monotonic relationships of neuronal reward responses to variations in liquid magnitude. A: rasters and density functions of monotonically increasing responses from single amygdala neuron with reward magnitude. B: population density functions of averaged activity from all 46 positive monotonically coding neurons in both animals. C: monotonic decrease of neuronal reward responses with increasing liquid magnitude in single amygdala neuron (different neuron than shown in A). D: population density functions of averaged activity from all 10 inverse monotonically varying neurons. In A–D, red = 0.56 ml, green = 0.36 ml, blue = 0.23 ml, bin width = 10 ms, flat smoothing on 15 bins running, imp/s = impulses per second. E: increase in median normalized response strength (fractional change during 400-ms postreward period vs. 400-ms control period) as function of reward magnitude (±95% CIs; 46 neurons). Activity in each neuron was normalized to response to 0.56-ml reward, which itself consisted of a median increase of 251%. F: decrease in median normalized response strength as function of reward magnitude (10 neurons). Activity in each neuron was normalized to response to 0.23 ml reward, whose median increase was 284%.

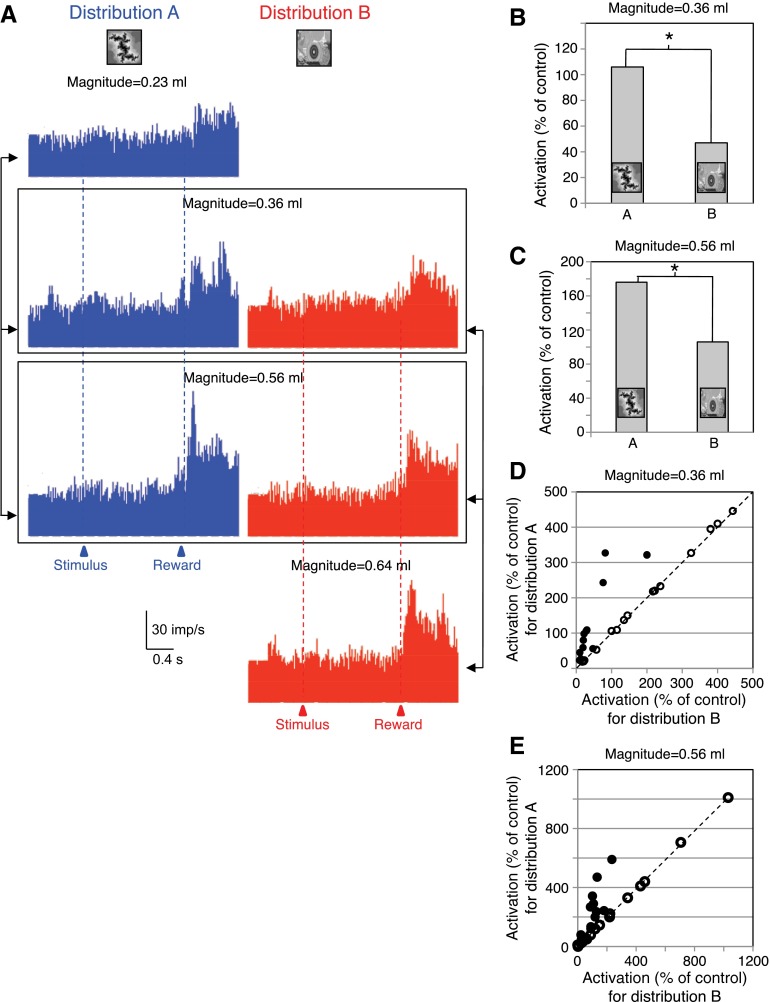

Fig. 4.

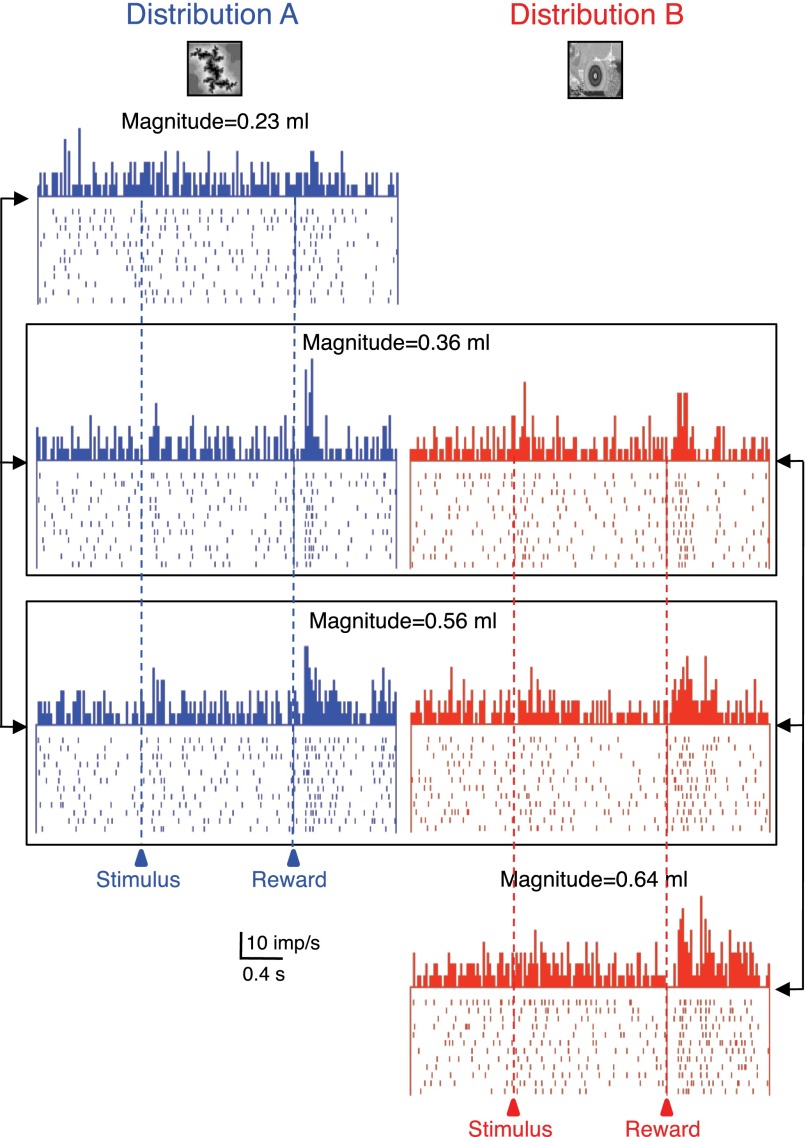

Population display and analysis of adaptive reward magnitude coding in amygdala neurons. A: responses of the 14 adaptive amygdala neurons to delivery of 3 reward magnitudes as elements of 2 different distributions (A: blue, left, B: red, right). Bin width = 10 ms. B and C: median population responses to identical reward magnitudes in the adaptive neurons (B: 0.36 ml, n = 14 neurons; C: 0.56 ml, n = 13) delivered as elements of 2 different reward distributions (A vs. B). *P < 0.05; Mann-Whitney test. D and E: median responses of each neuron to reward magnitude = 0.36 ml (D) and magnitude = 0.56 ml (E) as elements of distribution A plotted against the same magnitudes as elements of distribution B. ●, significant response differences of the adaptive neurons between the 2 distributions in D (n = 14) and E (n = 13) (P < 0.05; Mann-Whitney test). ○, nonadaptive neurons (insignificantly different responses between distributions). Diagonal lines indicate identical responses with both distributions. Total n = 30 neurons in both D and E.

Control for mouth movements

We discarded five neurons whose activity showed close temporal relationships to licking. These responses were closely related to the typical rhythmic tongue extensions and retractions, as observed with mouth movement–related activity in the striatum (Apicella et al. 1991).

RESULTS

Design

The experiment used two awake and fully trained male rhesus monkeys. While the animal fixated a small central spot, we presented a single, Pavlovian-conditioned visual stimulus centrally on a computer monitor; thus the stimulus occurred always at the same retinal position. The stimulus predicted a probability distribution of three different, equiprobable reward magnitudes (each at P = 1/3). Unbeknown to the animal, one of the three rewards was pseudorandomly selected in each trial and delivered 2 s after stimulus onset (Fig. 1A). To assess neuronal coding, we regressed the neurophysiological responses of single amygdala neurons on the magnitude of the delivered reward. In a supplementary experiment on a subset of responding neurons, we tested reference-dependent response adaptation with two different reward distributions; we used two visual stimuli predicting two probability distributions, each with three reward magnitudes but different expected values (means).

Behavior

Each animal was trained with distributions A and B for 34 days with 200 daily trials, totaling 6,800 trials, before neuronal recordings began. Both animals performed >95% of all trial types correctly throughout neuronal recordings.

Delivery of all rewards, including the smallest magnitude of 0.23 ml, elicited more licking after the reward compared with the prereward period (P < 0.05; Wilcoxon test), indicating that all magnitudes induced consummatory behavior and thus constituted positive reward value for the animals. Licking durations during 1,000 ms immediately after onset of reward delivery varied significantly between the three reward magnitudes with distributions A and B (Fig. 1C, ▴ and ▵, respectively). This result was seen with a two-way ANOVA [3 magnitudes: P < 0.05; F(2,686) = 4.06; distribution A vs. B: P > 0.05; F(1,686) = 1.06; interaction: P > 0.05; F(2,686) = 1.09] and with two separate one-way ANOVAs on reward magnitude [distribution A: P < 0.05; F(2,342) = 4.19; distribution B: P < 0.05; F(2,342) = 4.47]. There was no difference in licking between the two distributions in a two-way ANOVA on the two common reward magnitudes on individual days and in the overall average across days [0.36 and 0.56 ml: distribution A vs. B: P > 0.05; F(1,460) = 0.013; 2 magnitudes: P < 0.05; F(1,460) = 19.03; interaction: P < 0.05; F(1,460) = 4.424]. Taken together, these data suggest good behavioral discrimination between reward magnitudes but do not indicate behavioral adaptation to the two reward magnitude distributions.

Each of the two stimuli A and B predicted three equiprobable, pseudorandomly alternating reward magnitudes (P = 1/3). Durations of anticipatory licking during each stimulus failed to differ significantly between trials in which different reward magnitudes were delivered at stimulus end, with both distributions A and B (Fig. 1C, ● and ○, respectively). This result was seen with a two-way ANOVA [3 magnitudes: P > 0.05; F(2,686) = 1.01; distribution A vs. B: P > 0.05; F(1,686) = 1.00; interaction: P > 0.05; F(2,686) = 1.00] and with two separate one-way ANOVAs on reward magnitude [distribution A: P > 0.05; F(2,342) = 0.96; distribution B: P > 0.05; F(2,342) = 1.65]. There was no difference in anticipatory licking between the two distributions in a two-way ANOVA on the two common reward magnitudes on individual days and in the overall average [0.36 and 0.56 ml: distribution A vs. B: P > 0.05; F (1, 460) = 0.10; 2 magnitudes: P > 0.05; F(1,460) = 0.11; interaction: P > 0.05; F(1,460) = 0.10]. These data confirm that the two stimuli A and B failed to predict individual reward magnitudes, compatible with the task design, but also suggest that the animals' licking failed to differ between the two distributions.

Neuronal reward magnitude coding

We recorded from 317 neurons in the central nucleus (n = 48), basolateral and basomedial nuclei (n = 119), and lateral nucleus (n = 150) of the amygdala in the two monkeys during Pavlovian reward prediction. Of these, 222 neurons (70%) showed significant increases of activity after reward delivery (P < 0.05; Wilcoxon test). These were different neurons from those tested for reward contingency, which were unresponsive to reward delivery itself (Bermudez and Schultz 2010).

To study reward value coding, we tested the Wilcoxon-significant reward responses in 124 of the 222 neurons with three reward magnitudes (the remaining 98 neurons were subjected to other tests not reported here). Single linear regressions showed monotonic, statistically significant variations of reward responses (P < 0.05, t-test against 0 slope; R² > 0.72) across the three reward magnitudes in 56 of the 124 neurons (45%; 25% of the 222 reward responding neurons; 18% of the 317 recorded neurons). The one-way ANOVA across the three magnitudes showed significant variations with magnitude (P < 0.05) in 52 of the 56 monotonically varying responses identified by the regression. The ANOVA showed an additional 19 neurons with nonmonotonically varying reward responses across magnitudes (mostly regular V or inverted V function). The higher number of significant responses in the ANOVA compared with the regression would be compatible with the absent requirement for monotonicity in the ANOVA.

The 56 amygdala neurons with monotonically varying reward responses identified by the regression were considered to be coding reward magnitude and were the subjects of this study. They were located in the central nucleus (8 of 48 neurons, 17%), the basolateral and basomedial nuclei (20 of 119 neurons, 17%), and the lateral nucleus (28 of 150 neurons, 19%) of the amygdala (Fig. 1D).
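The reported proportions can be recomputed directly from the neuron counts given above. The short check below is an illustrative aid using only numbers stated in the text; the helper name `pct` is hypothetical.

```python
def pct(k, n):
    """Percentage of k out of n, rounded to the nearest integer."""
    return round(100.0 * k / n)

recorded = {"central": 48, "basolateral/basomedial": 119, "lateral": 150}
coding = {"central": 8, "basolateral/basomedial": 20, "lateral": 28}

total_recorded = sum(recorded.values())  # all recorded neurons
total_coding = sum(coding.values())      # monotonically coding neurons

overall = {
    "reward responsive of recorded": pct(222, total_recorded),
    "coding of tested": pct(total_coding, 124),
    "coding of reward responsive": pct(total_coding, 222),
    "coding of recorded": pct(total_coding, total_recorded),
}
by_nucleus = {name: pct(coding[name], recorded[name]) for name in recorded}
print(overall)
print(by_nucleus)
```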

Most monotonically magnitude coding amygdala neurons showed a positive relationship (46 of 56 neurons, 82%). This is evident from the reward responses of single neurons (Fig. 2A) and the averaged population responses (Fig. 2B). The remaining 10 monotonically magnitude coding neurons showed an inverse, negative relation to reward magnitude (Fig. 2, C and D). Figure 2, E and F, shows the results from the regressions on reward magnitudes in the two populations of positively and inverse coding amygdala neurons.

Adaptation to reward distributions

Reward magnitude coding may occur relative to an absolute, physical scale or adapt to the available alternatives within a given probability distribution that serves as a reference. To test this possibility in a supplementary experiment, we used two different, pseudorandomly alternating probability distributions of reward magnitudes, each predicted by a single, specific visual stimulus. Reward magnitudes were 0.23, 0.36, and 0.56 ml for distribution A, as described above, and 0.36, 0.56, and 0.64 ml for distribution B. The 0.36 ml reward constituted the intermediate magnitude within distribution A but the low magnitude within distribution B, and the 0.56 ml constituted the high magnitude within distribution A but the intermediate magnitude within distribution B. We compared the responses to delivery of the two rewards (0.36 and 0.56 ml) between the two distributions in 30 of the 56 neurons with monotonic magnitude relationships (24 neurons with positive slope, 6 neurons with negative slope).

Fourteen of the 24 positively magnitude coding amygdala neurons (58%) showed adaptive reward magnitude coding but none of the 6 negatively coding neurons. The adaptive neurons responded significantly more to one or both of the 0.36 and 0.56 ml reward magnitudes when these rewards were the intermediate and high elements of distribution A compared with being the low and intermediate elements of distribution B (P < 0.05, Mann-Whitney test; Fig. 3, compare left vs. right columns). Of the 14 adaptive neurons, 13 showed adaptive coding with both the 0.36 and 0.56 ml reward magnitudes. The response strengths, measured as percentage increases in reward response compared with prereward baseline, correlated significantly between the two distributions in the 13 neurons adapting at both reward magnitudes (0.36 ml: P = 0.0001; ρ = 0.8941; 0.56 ml: P = 0.003; ρ = 0.7521; Spearman rank correlation). Thus a subset of monotonically reward magnitude coding amygdalar neurons did not seem to encode reward according to its physical magnitude but relative to the other magnitudes within each distribution.

Fig. 3.

Adaptive coding of reward magnitude in 1 amygdala neuron. The 3 reward magnitudes were delivered as elements of 2 different distributions (A: blue, left, B: red, right). Responses to the identical magnitudes varied depending on the distributions from which these magnitudes were drawn (0.36 and 0.56 ml, framed). Bin width = 10 ms.

Figure 4A shows the population responses in the 14 adaptive neurons. The two boxes indicate the two identical reward magnitudes shared between the two distributions (compare left vs. right). Quantitatively, adaptive coding resulted in almost a doubling of responses with both tested reward magnitudes (Fig. 4, B and C). Plotting the responses to the same 0.36 and 0.56 ml rewards for the two distributions in Fig. 4, D and E, confirmed the neuronal response adaptation. The adaptive neurons showed higher responses with distribution A compared with B (●), whereas the absolute magnitude coding, nonadaptive neurons showed similar responses in the two distributions (○ on diagonal line). The adaptive response changes between the two distributions in the 13 neurons adapting with both magnitudes failed to correlate significantly between the two reward volumes 0.36 and 0.56 ml (P = 0.1173; ρ = 0.456; Spearman rank correlation).

The 14 adaptive neurons were located in the central, basolateral-basomedial, and lateral amygdalar nuclei (4 of 6, 2 of 8, and 8 of 16 tested neurons, respectively). The occurrences of adaptive and nonadaptive neurons varied insignificantly between the three amygdalar nuclei (P > 0.2; χ² test). However, the small numbers of neurons in these nuclei precluded further functional distinctions.
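The comparison across nuclei can be illustrated with a Pearson χ² test of independence on the 2 × 3 table of adaptive and nonadaptive counts. The sketch below is an assumption about the form of the test (no continuity correction), not a record of how it was originally run; the function name is hypothetical, and the closed-form P value used here holds only for 2 degrees of freedom.

```python
import math

def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for a 2D table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (observed - expected) ** 2 / expected
    df = (len(row_totals) - 1) * (len(col_totals) - 1)
    return chi2, df

# Columns: central, basolateral-basomedial, lateral (tested: 6, 8, 16).
counts = [
    [4, 2, 8],  # adaptive neurons
    [2, 6, 8],  # nonadaptive neurons (tested minus adaptive)
]
chi2, df = chi_square_independence(counts)

# For df = 2 only, the chi-square survival function is exp(-x / 2).
p_value = math.exp(-chi2 / 2.0)
print(f"chi2({df}) = {chi2:.2f}, P = {p_value:.2f}")
```

Under these assumptions the statistic is about 2.54 with P of about 0.28, which agrees with the reported P > 0.2. Several expected cell counts are below 5, so the χ² approximation is rough for a table of this size.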

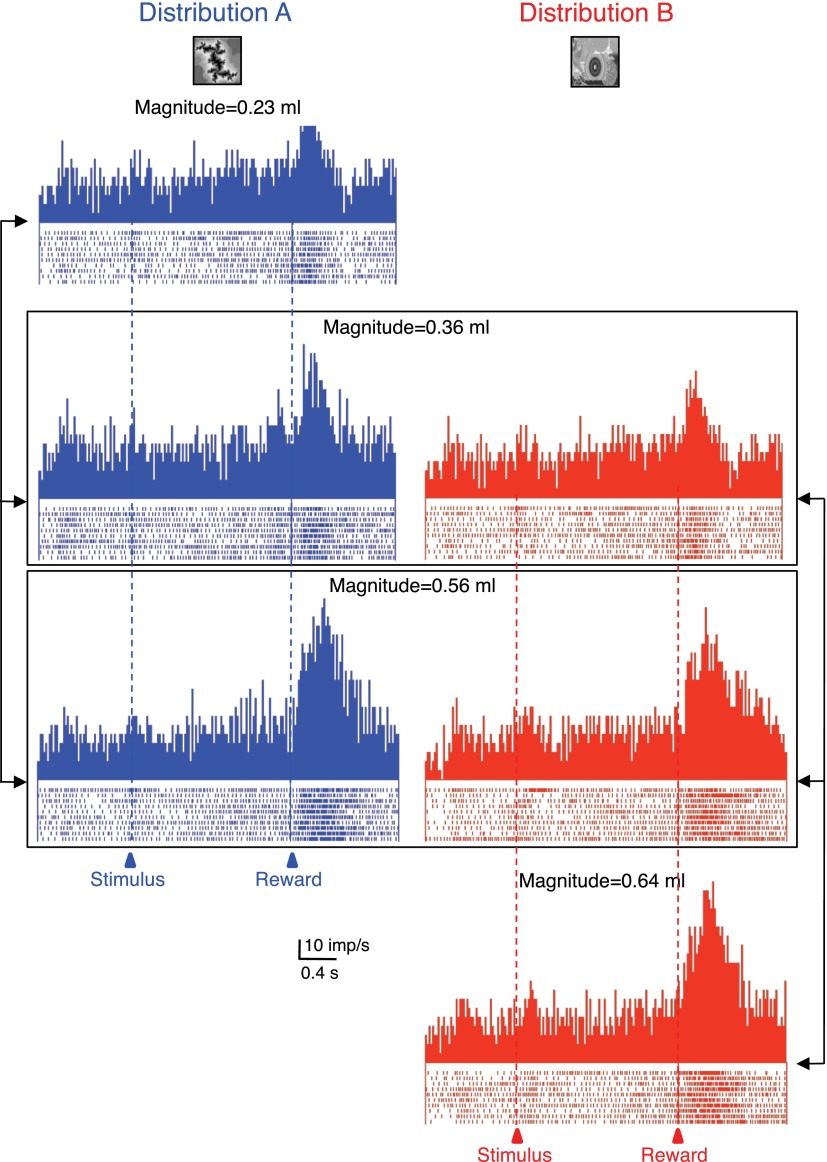

In contrast to the adaptive neurons, the 16 nonadaptive neurons showed monotonic response increases or decreases across all four tested reward magnitudes, irrespective of the probability distribution from which these magnitudes were drawn (Fig. 5).

Fig. 5.

Absolute, nonadaptive coding of reward magnitude in 1 amygdala neuron. Three different reward magnitudes were delivered as elements of 2 different distributions (A: blue, left, B: red, right). Reward responses increased monotonically across all 4 magnitudes, irrespective of the distribution from which the magnitudes were drawn. Bin width = 10 ms.

Stimulus responses

A subset of the reward magnitude coding neurons showed also significant responses to the reward predicting stimulus for distribution A (10 of the 46 positively coding neurons; 1 of the 10 inverse coding neurons; P < 0.05, Wilcoxon test). Of the 30 magnitude coding neurons that were tested with both reward distributions A and B, only 6 (20%) showed stimulus responses (of which 4 showed adaptive responses to reward delivery). The stimulus responses in these six neurons failed to discriminate significantly between the two stimuli A and B (P > 0.05; Mann-Whitney test).

DISCUSSION

These data showed that reward responses of amygdala neurons coded the magnitude of liquid reward in a positive or negative monotonic fashion in a simple Pavlovian task. In addition, some responses adapted to the predicted distribution of reward magnitudes rather than coding reward magnitude in an absolute fashion across the full range of possible rewards. These data suggest that amygdala neurons code reward magnitude as one of the main variables determining the value of rewards for reinforcement learning, approach behavior, and decision making.

An earlier study tested amygdala neurons in rats encountering two different magnitudes of chocolate milk reward during performance of a radial arm memory task (Pratt and Mizumori 1998). Some of these neurons showed different responses depending on the number of reward drops. A subsequent study reported that amygdala neurons discriminated between two reward magnitudes in a simpler Pavlovian task (Belova et al. 2008). Our data showing graded responses to three reward magnitudes in a Pavlovian task are compatible with these results and demonstrate monotonic coding of reward magnitude.

Previous studies reported activating or depressant responses of amygdala neurons signaling unsigned (rectified) or, occasionally, signed reward prediction errors (Belova et al. 2007; Roesch et al. 2010; Tye et al. 2010). In these experiments, each stimulus predicted a mean reward magnitude close to the expected value of the respective distribution. Thus delivery of the extreme magnitudes in each distribution would have induced positive and negative prediction errors, respectively. Neuronal coding of signed prediction errors would consist of bidirectional, activating, and depressant responses in the same neuron, whereas unsigned coding would produce regular or inverted V-shaped response functions. Whereas the former pattern was not shown by any analysis, a few neuronal responses identified by the ANOVA test showed V-shaped profiles. Thus the monotonic coding of reward magnitude in our study is unlikely to be explained by prediction error coding.

The percentage of amygdala responses varying with reward magnitude (45%) was somewhat lower than the percentages found in other studies: 54% in rat amygdala (Pratt and Mizumori 1998); 55% in monkey striatum (Cromwell and Schultz 2003); 21–66% in monkey orbitofrontal, ventrolateral, and dorsolateral prefrontal cortex (Kennerley and Wallis 2009; Padoa-Schioppa and Assad 2006; Wallis and Miller 2003); and 69% in rat orbitofrontal cortex (Van Duuren et al. 2007). There are several possible reasons for these differences. First, most studies varied reward magnitude with reward predicting stimuli and with reward delivery, whereas we restricted experimentally the magnitude variations to reward delivery. Second, most studies tested only two magnitudes, which are easier to distinguish statistically than three magnitudes. Finally, many studies identified variations by ANOVAs that would also show nonmonotonic changes, whereas we used linear regressions to restrict the statistics to monotonic changes. However, with an ANOVA, we found covariations with magnitude in 57% of neurons (n = 71), which was closer to that found in the other studies. Thus given the current experimental restrictions, the proportion of magnitude sensitive amygdala neurons appeared to be in a similar range as in other major reward structures.

There might be alternative explanations for the monotonic reward magnitude coding. The reward responses failed to show the typical alternating phasic activations correlated with extension and retraction of the tongue (Apicella et al. 1991). Thus they were unlikely to reflect licking movements. Although the neuron of Fig. 2A showed only a small response to low reward, the inverse magnitude coding neurons showed substantial responses to the same small liquid quantity (Fig. 2C). Thus low neuronal responsiveness to the smallest reward magnitude was unlikely to be caused by failed or undetectable delivery of the small quantity. Taken together, the monotonic relationships to reward magnitude were unlikely to be attributable to unspecific factors such as mouth movements or failed reward detection.

Our limited tests with different probability distributions may suggest that the reward responses of some amygdala neurons adapted to the available reward magnitudes within each distribution. Although the animals' licking responses to the predictive stimulus failed to distinguish between the two distributions, all adaptive neurons showed monotonic coding of the magnitude of the received reward within each distribution and should have accurately signaled the absolute magnitude had they been nonadaptive. However, the adaptive neurons failed to code magnitude in an absolute manner and rather coded it in reference to the current probability distribution.

Comparable adaptive response shifts between distributions with different expected values have been reported for orbitofrontal, striatal, and dopamine neurons (Cromwell et al. 2005; Hosokawa et al. 2007; Padoa-Schioppa 2009; Tobler et al. 2005; Tremblay and Schultz 1999). The relative, rather than absolute, valuation of items by individual reward neurons may underlie the well-known negative contrast effect, in which a downshift of reward induces weaker behavioral reactions compared with the same low reward occurring without preceding higher values (for review, see Black 1968). Economic prospect theory conceptualizes such shifts in valuation as reference-dependent outcome processing in an attempt to understand seemingly irrational decisions (Kahneman and Tversky 1984). Thus the contrast effect and reference-dependent outcome valuation may reflect a common fundamental phenomenon in behavioral choices that is possibly based on adaptive coding. This form of neuronal coding may constitute a mechanism that regulates the impact of reward information on neuronal responses. The mechanism may help the brain, with its limited processing capacity, to deal efficiently with the almost unlimited number of rewards in the environment.

The current basic tests of reward magnitude addressed the known involvement of the amygdala in reward processing in general but were not designed to assess the specific reward functions of the different amygdalar nuclei. For example, the central nucleus is prominently involved in the formation of Pavlovian stimulus–reward associations, whereas the basolateral complex is more involved in higher-order conditioning (for review, see Baxter and Murray 2002). The basic valuation of reward is common to all of these processes; thus the present lack of differential distributions of simple reward magnitude coding neurons among the amygdalar nuclei is not surprising. Once such basic reward mechanisms have been established, future work could address more specific reward aspects in the amygdalar nuclei.

Besides the amygdala, other anatomically connected components of the brain's reward system also process reward value in a quantitative fashion, including dopamine neurons (Tobler et al. 2005), striatum (Cromwell and Schultz 2003), and orbital and dorsolateral prefrontal cortex (Leon and Shadlen 1999; Padoa-Schioppa and Assad 2006; Wallis and Miller 2003). Indeed, learning and expression of stimulus–reward associations in amygdala neurons have been directly related to connections with orbitofrontal cortex (Saddoris et al. 2005; Schoenbaum et al. 2000). Taken together, these data suggest that amygdala neurons are part of a set of interconnected brain structures that process basic aspects of rewards.

GRANTS

This work was supported by the Wellcome Trust, the Behavioural and Clinical Neuroscience Institute Cambridge, and the Human Frontiers Science Program.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the authors.

ACKNOWLEDGMENTS

We thank M. Arroyo for expert histology.

REFERENCES

- Aggleton and Passingham, 1981. Aggleton JP, Passingham RE. Stereotaxic surgery under X-ray guidance in the rhesus monkey, with special reference to the amygdala. Exp Brain Res 44: 271–276, 1981

- Apicella et al., 1991. Apicella P, Ljungberg T, Scarnati E, Schultz W. Responses to reward in monkey dorsal and ventral striatum. Exp Brain Res 85: 491–500, 1991

- Baxter and Murray, 2002. Baxter MG, Murray EA. The amygdala and reward. Nat Rev Neurosci 3: 563–573, 2002

- Baxter et al., 2000. Baxter MG, Parker A, Lindner CCC, Izquierdo AD, Murray EA. Control of response selection by reinforcer value requires interaction of amygdala and orbital prefrontal cortex. J Neurosci 20: 4311–4319, 2000

- Bechara et al., 1999. Bechara A, Damasio H, Damasio AR, Lee GP. Different contributions of the human amygdala and ventromedial prefrontal cortex to decision-making. J Neurosci 19: 5473–5481, 1999

- Belova et al., 2007. Belova MA, Paton JJ, Morrison SE, Salzman CD. Expectation modulates neural responses to pleasant and aversive stimuli in primate amygdala. Neuron 55: 970–984, 2007

- Belova et al., 2008. Belova MA, Paton JJ, Salzman CD. Moment-to-moment tracking of state value in the amygdala. J Neurosci 28: 10023–10030, 2008

- Bermudez and Schultz, 2010. Bermudez MA, Schultz W. Responses of amygdala neurons to positive reward predicting stimuli depend on background reward (contingency) rather than stimulus-reward pairing (contiguity). J Neurophysiol 103: 1158–1170, 2010

- Bernoulli, 1954. Bernoulli D. Specimen theoriae novae de mensura sortis. Commentarii Academiae Scientiarum Imperialis Petropolitanae (Papers Imp. Acad. Sci. St. Petersburg) 5, 175–192, 1738. Translated as: Exposition of a new theory on the measurement of risk. Econometrica 22, 23–36, 1954

- Black, 1968. Black RW. Shifts in magnitude of reward and contrast effects in instrumental and selective learning: a reinterpretation. Psychol Rev 75: 114–126, 1968

- Boysen et al., 2001. Boysen ST, Berntson GG, Mukobi KL. Size matters: impact of item size and quantity on array choice by chimpanzees (Pan troglodytes). J Comp Psychol 115: 106–110, 2001

- Carelli et al., 2003. Carelli RM, Williams JG, Hollander JA. Basolateral amygdala neurons encode cocaine self-administration and cocaine-associated cues. J Neurosci 23: 8204–8211, 2003

- Collier, 1982. Collier GH. Determinants of choice. Nebr Symp Motiv 29: 69–127, 1982

- Cromwell et al., 2005. Cromwell HC, Hassani OK, Schultz W. Relative reward processing in primate striatum. Exp Brain Res 162: 520–525, 2005

- Cromwell and Schultz, 2003. Cromwell HC, Schultz W. Effects of expectations for different reward magnitudes on neuronal activity in primate striatum. J Neurophysiol 89: 2823–2838, 2003

- Everitt et al., 1991. Everitt BJ, Morris KA, O'Brien A, Robbins TW. The basolateral amygdala-ventral striatal system and conditioned place preference: further evidence of limbic-striatal interactions underlying reward-related processes. Neuroscience 42: 1–18, 1991

- Fiorillo et al., 2003. Fiorillo CD, Tobler PN, Schultz W. Discrete coding of reward probability and uncertainty by dopamine neurons. Science 299: 1898–1902, 2003

- Gaffan et al., 1993. Gaffan D, Murray EA, Fabre-Thorpe M. Interaction of the amygdala with the frontal lobe in reward memory. Eur J Neurosci 5: 968–975, 1993

- Hampton et al., 2007. Hampton AN, Adolphs R, Tyszka JM, O'Doherty JP. Contributions of the amygdala to reward expectancy and choice signals in human prefrontal cortex. Neuron 55: 545–555, 2007

- Hosokawa et al., 2007. Hosokawa T, Kato K, Inoue M, Mikami A. Neurons in the macaque orbitofrontal cortex code relative preference of both rewarding and aversive outcomes. Neurosci Res 57: 434–445, 2007

- Johnsrude et al., 2000. Johnsrude IS, Owen AM, White NM, Zhao WV, Bohbot V. Impaired preference conditioning after anterior temporal lobe resection in humans. J Neurosci 20: 2649–2656, 2000

- Kahneman and Tversky, 1984. Kahneman D, Tversky A. Choices, values, and frames. Am Psychol 39: 341–350, 1984

- Kennerley and Wallis, 2009. Kennerley SW, Wallis JD. Reward-dependent modulation of working memory in lateral prefrontal cortex. J Neurosci 29: 3259–3270, 2009

- Lau and Glimcher, 2008. Lau B, Glimcher PW. Value representations in the primate striatum during matching behavior. Neuron 58: 451–463, 2008

- Leon and Shadlen, 1999. Leon MI, Shadlen MN. Effect of expected reward magnitude on the responses of neurons in the dorsolateral prefrontal cortex of the macaque. Neuron 24: 415–425, 1999

- Medina et al., 2002. Medina JF, Repa JC, Mauk MD, LeDoux JE. Parallels between cerebellum- and amygdala-dependent conditioning. Nat Rev Neurosci 3: 122–131, 2002

- Nishijo et al., 1988. Nishijo H, Ono T, Nishino H. Single neuron responses in amygdala of alert monkey during complex sensory stimulation with affective significance. J Neurosci 8: 3570–3583, 1988

- Padoa-Schioppa, 2009. Padoa-Schioppa C. Range-adapting representation of economic value in the orbitofrontal cortex. J Neurosci 29: 14004–14014, 2009

- Padoa-Schioppa and Assad, 2006. Padoa-Schioppa C, Assad JA. Neurons in the orbitofrontal cortex encode economic value. Nature 441: 223–226, 2006

- Parkinson et al., 2001. Parkinson JA, Crofts HS, McGuigan M, Tomic DL, Everitt BJ, Roberts AC. The role of primate amygdala in conditioned reinforcement. J Neurosci 21: 7770–7780, 2001

- Paton et al., 2006. Paton JJ, Belova MA, Morrison SE, Salzman CD. The primate amygdala represents the positive and negative value of visual stimuli during learning. Nature 439: 865–870, 2006

- Pratt and Mizumori, 1998. Pratt WE, Mizumori SJY. Characteristics of basolateral amygdala neuronal firing on a spatial memory task involving differential reward. Behav Neurosci 112: 554–570, 1998

- Roesch et al., 2010. Roesch MR, Calu DJ, Esber GR, Schoenbaum G. Neural correlates of variations in event processing during learning in basolateral amygdala. J Neurosci 30: 2464–2471, 2010

- Saddoris et al., 2005. Saddoris MP, Gallagher M, Schoenbaum G. Rapid associative encoding in basolateral amygdala depends on connections with orbitofrontal cortex. Neuron 46: 321–331, 2005

- Salinas et al., 1993. Salinas JA, Packard MG, McGaugh JL. Amygdala modulates memory for changes in reward magnitude: reversible post-training inactivation with lidocaine attenuates the response to a reduction in reward. Behav Brain Res 59: 153–159, 1993

- Samejima et al., 2005. Samejima K, Ueda Y, Doya K, Kimura M. Representation of action-specific reward values in the striatum. Science 310: 1337–1340, 2005

- Sanghera et al., 1979. Sanghera MK, Rolls ET, Roper-Hall A. Visual responses of neurons in the dorsolateral amygdala of the alert monkey. Exp Neurol 63: 610–626, 1979

- Schoenbaum et al., 1999. Schoenbaum G, Chiba AA, Gallagher M. Neural encoding in orbitofrontal cortex and basolateral amygdala during olfactory discrimination learning. J Neurosci 19: 1876–1884, 1999

- Schoenbaum et al., 2000. Schoenbaum G, Chiba AA, Gallagher M. Changes in functional connectivity in orbitofrontal cortex and basolateral amygdala during learning and reversal training. J Neurosci 20: 5179–5189, 2000

- Sugase-Miyamoto and Richmond, 2005. Sugase-Miyamoto Y, Richmond BJ. Neuronal signals in the monkey basolateral amygdala during reward schedules. J Neurosci 25: 11071–11083, 2005

- Tobler et al., 2005. Tobler PN, Fiorillo CD, Schultz W. Adaptive coding of reward value by dopamine neurons. Science 307: 1642–1645, 2005

- Tremblay and Schultz, 1999. Tremblay L, Schultz W. Relative reward preference in primate orbitofrontal cortex. Nature 398: 704–708, 1999

- Tye et al., 2010. Tye KM, Cone JJ, Schairer WW, Janak PH. Amygdala neural encoding in the absence of reward during extinction. J Neurosci 30: 116–125, 2010

- Tye and Janak, 2007. Tye KM, Janak PH. Amygdala neurons differentially encode motivation and reinforcement. J Neurosci 27: 3937–3945, 2007

- Van Duuren et al., 2007. Van Duuren E, Escamez FA, Joosten RN, Visser R, Mulder AB, Pennartz CM. Neural coding of reward magnitude in orbitofrontal cortex of the rat during a five-odor olfactory discrimination task. Learn Mem 14: 446–456, 2007

- Von Neumann and Morgenstern, 1944. Von Neumann J, Morgenstern O. The Theory of Games and Economic Behavior. Princeton, NJ: Princeton University Press, 1944

- Wallis and Miller, 2003. Wallis JD, Miller EK. Neuronal activity in primate dorsolateral and orbital prefrontal cortex during performance of a reward preference task. Eur J Neurosci 18: 2069–2081, 2003

- Watanabe et al., 2001. Watanabe M, Cromwell HC, Tremblay L, Hollerman JR, Schultz W. Behavioral reactions reflecting differential expectations of outcomes in monkeys. Exp Brain Res 140: 511–518, 2001