Abstract

Here we report early cross-sensory activations and audiovisual interactions at the visual and auditory cortices using magnetoencephalography (MEG) to obtain accurate timing information. Data from an identical fMRI experiment were employed to support MEG source localization results. Simple auditory and visual stimuli (300-ms noise bursts and checkerboards) were presented to seven healthy humans. MEG source analysis suggested generators in the auditory and visual sensory cortices for both within-modality and cross-sensory activations. fMRI cross-sensory activations were strong in the visual but almost absent in the auditory cortex; this discrepancy with MEG possibly reflects influence of acoustical scanner noise in fMRI. In the primary auditory cortices (Heschl’s gyrus) onset of activity to auditory stimuli was observed at 23 ms in both hemispheres, and to visual stimuli at 82 ms in the left and at 75 ms in the right hemisphere. In the primary visual cortex (Calcarine fissure) the activations to visual stimuli started at 43 ms and to auditory stimuli at 53 ms. Cross-sensory activations thus started later than sensory-specific activations, by 55 ms in the auditory cortex and by 10 ms in the visual cortex, suggesting that the origins of the cross-sensory activations may be in the primary sensory cortices of the opposite modality, with conduction delays (from one sensory cortex to another) of 30–35 ms. Audiovisual interactions started at 85 ms in the left auditory, 80 ms in the right auditory, and 74 ms in the visual cortex, i.e., 3–21 ms after inputs from both modalities converged.

Introduction

Prevailing ideas on multisensory integration suggest that high-order heteromodal association cortical areas receive input from different sensory cortices and then integrate the signals (Mesulam, 1998). While there is much experimental evidence to support this notion (Cusick, 1997), recent research suggests that this view is incomplete. Specifically, low-order sensory areas may show cross-sensory (i.e., cross-modal) activations and multisensory interactions starting already at about 40–50 ms post stimulus. The evidence includes intracranial electrophysiological recordings in nonhuman primates (Schroeder et al., 2001; Schroeder & Foxe, 2002) and human EEG/MEG studies (Giard & Peronnet, 1999; Foxe et al., 2000; Molholm et al., 2002; Teder-Sälejärvi et al., 2002; Molholm et al., 2004; Murray et al., 2005; Talsma et al., 2007). Supporting evidence comes from fMRI studies that show such activations in or very close to primary sensory areas (Pekkola et al., 2005; Martuzzi et al., 2006). Taken together, these findings suggest that low-order sensory areas may contribute to multisensory integration starting from very early processing stages (Schroeder et al., 2003; Foxe & Schroeder, 2005; Macaluso & Driver, 2005; Molholm & Foxe, 2005; Schroeder & Foxe, 2005; Ghazanfar & Schroeder, 2006; Macaluso, 2006; Musacchia & Schroeder, 2009).

Supporting these findings, anatomical connectivity studies in nonhuman primates have revealed direct cortico-cortical pathways from primary auditory (A1) to primary visual (V1) cortex (Rockland & Van Hoesen, 1994; Falchier et al., 2002; Rockland & Ojima, 2003; Clavagnier et al., 2004; Budinger et al., 2006). Direct connections from V1 to A1 are not known, but the visual area V2 is directly connected with A1 (Budinger et al., 2006).

Another, slightly longer pathway between A1 and V1 runs through the heteromodal association cortical area STP/STS (Schroeder & Foxe, 2002; Cappe & Barone, 2005). This area also sends feedback to both V1 (Benevento et al., 1977) and A1 (for a review, see Smiley & Falchier, 2009). Further, STS is connected to the non-primary supratemporal caudomedial auditory area, which has connections with the primary auditory cortex (de la Mothe et al., 2006a).

Multisensory integration additionally takes place in several subcortical structures, of which the superior colliculus (SC) has been studied the most (Stein & Meredith, 1993). SC receives direct sensory input from central sensory pathways and then projects to multiple cortical areas (Stein & Meredith, 1993); it also receives cortical feedback (Stein et al., 2002; Jiang & Stein, 2003). Connections between SC and STP/STS have been shown in primates (Bruce et al., 1986; Gross, 1991). However, there are no known direct connections from SC to A1 or V1 (although SC receives direct input from V1; see Collins et al., 2005). Anatomical connectivity mappings in nonhuman primates have revealed yet other possible subcortical and cortical locations from which A1 and V1 might receive multisensory inputs (de la Mothe et al., 2006b; Hackett et al., 2007; Smiley et al., 2007; Cappe et al., 2009; Musacchia & Schroeder, 2009; Smiley & Falchier, 2009).

Here we examine the timing and possible pathways underlying early cross-sensory activations and audiovisual interactions by recording both MEG and fMRI responses in humans.

Materials and Methods

Subjects, Stimuli, and Tasks

Subjects were studied after giving their written informed consent; the study protocol was approved by the Massachusetts General Hospital institutional review board and followed the guidelines of the Declaration of Helsinki. We presented 300-ms auditory (A), visual (V), and audiovisual (AV, simultaneous auditory and visual) stimuli to 8 healthy right-handed human subjects (6 females, age 22–30) in a rapid event-related fMRI design with pseudorandom stimulus order and interstimulus interval (ISI). A/V/AV stimuli were equiprobable. The auditory stimuli were white noise bursts (15 ms rise and decay) and the visual stimuli static checkerboard patterns (visual angle 3.5° × 3.5° and contrast 100%, foveal presentation). The task was to respond to rare (10%) target auditory (tone pips), visual (checkerboard with a diamond pattern in the middle), or audiovisual (combination) stimuli with the right index finger as quickly as possible while reaction time (RT) was measured. All subjects were recorded with three stimulus sequences that had different mean ISIs (1.5/3.1/6.1 s); within each sequence the ISI was jittered at 1.15-s resolution (equal to the TR of the fMRI acquisition) to improve fMRI analysis power (Dale, 1999; Burock & Dale, 2000). All subjects were recorded with identical stimuli and tasks in both MEG and fMRI. The visual stimuli were projected with a video projector on a translucent screen. In MEG, the auditory stimuli were presented with MEG-compatible headphones. During fMRI the auditory stimuli were presented through MRI-compatible headphones (MR Confon GmbH, Magdeburg, Germany). Auditory stimulus intensity was adjusted to be as high as the subject could comfortably listen to (in MEG about 65 dB SPL; in fMRI clearly above the scanner acoustical noise). The stimuli were presented with a PC running Presentation 9.20 (Neurobehavioral Systems Inc, Albany, CA, USA). During fMRI the stimuli were synchronized with triggers from the fMRI scanner. The timing of the stimuli with respect to the trigger signals was confirmed with a digital oscilloscope.

Structural MRI recordings, brain segmentation, and spatial intersubject alignment/morphing

Structural T1-weighted MRIs of the subjects were acquired with a 1.5T Siemens Avanto scanner (Siemens Medical Solutions, Erlangen, Germany) and a head coil using a standard MPRAGE sequence. Anatomical images were segmented with the FreeSurfer software (http://www.surfer.nmr.mgh.harvard.edu) (Fischl et al., 2002; Fischl et al., 2004). The individual brains were spatially co-registered by morphing them into the FreeSurfer average brain via a spherical surface (Fischl et al., 1999).

fMRI recordings and analysis

Brain activity was measured using a 3.0T Siemens Trio scanner (Siemens Medical Solutions) with a Siemens head coil and a blood oxygenation level dependent (BOLD) echo planar imaging (EPI) sequence (flip angle 90°, TR = 1.15 s, TE = 30 ms, 25 horizontal 4-mm slices with 0.4 mm gap, 3.1 × 3.1 mm in-plane resolution, fat saturation off). The rapid event-related functional data were analyzed with FreeSurfer (http://www.surfer.nmr.mgh.harvard.edu). During preprocessing, each individual’s data were motion corrected (Cox & Jesmanowicz, 1999), spatially smoothed with a Gaussian kernel of full-width at half maximum (FWHM) 5 mm, and normalized by scaling the whole brain intensity to a fixed value of 1000. The first three images of each run were discarded, as were rare images showing abrupt changes in intensity. Any remaining head motion was used as an external regressor. A finite impulse response (FIR) model (Burock & Dale, 2000) was applied to estimate the activations as a function of time separately for each trial type (Auditory/Visual/Audiovisual/Auditory Target/Visual Target/Audiovisual Target) with a time window of 2.3 s pre-stimulus to 16.1 s post-stimulus. The FIR method estimates the hemodynamic response time courses without assuming any form for the response. The functional volumes were spatially aligned with the structural MRI of individual subjects. During group analysis, the individual results were morphed through a spherical surface into the FreeSurfer average brain (Fischl et al., 1999) and spatially smoothed at 10 mm FWHM.
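As an illustration of what "without assuming any form for the response" means, the following minimal sketch builds an FIR design matrix with one column per time lag, so that each lag receives its own free amplitude. Only the TR (1.15 s) and the window (2.3 s pre-stimulus to 16.1 s post-stimulus, i.e., 2 and 14 TRs) come from the text; the function name, scan count, and onsets are hypothetical, and this is not the FreeSurfer implementation.

```python
TR_S = 1.15  # repetition time taken from the acquisition parameters


def fir_design_matrix(n_scans, onset_scans, pre_bins=2, post_bins=14):
    """Build an FIR design matrix for one trial type.

    Rows are scans; columns are lags from -pre_bins to +post_bins (in TRs).
    The defaults correspond to 2.3 s pre- and 16.1 s post-stimulus at TR 1.15 s.
    """
    lags = list(range(-pre_bins, post_bins + 1))
    matrix = [[0] * len(lags) for _ in range(n_scans)]
    for onset in onset_scans:
        for col, lag in enumerate(lags):
            scan = onset + lag
            if 0 <= scan < n_scans:
                # This scan samples the response at the given lag after onset.
                matrix[scan][col] = 1
    return lags, matrix


# Hypothetical run: 40 scans, stimuli of one type at scans 5 and 20.
lags, X = fir_design_matrix(40, [5, 20])
print(len(lags), "lag columns spanning", lags[0] * TR_S, "to", lags[-1] * TR_S, "s")
```

Solving the resulting linear model by least squares (not shown) would give one amplitude per lag; where responses to successive stimuli overlap, a scan simply has more than one non-zero column.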

MEG recordings

Whole-head 306-channel MEG (VectorView, Elekta-Neuromag, Finland) was recorded in a magnetically shielded room (Cohen et al., 2002; Hämäläinen & Hari, 2002). The instrument employs three sensors (one magnetometer and two planar gradiometers) at each of the 102 measurement locations. We also recorded simultaneous horizontal and vertical electro-oculogram (EOG). All signals were band-pass filtered to 0.03–200 Hz prior to sampling at 600 Hz.

Spatial registration of MEG data with MRI

Prior to the MEG recordings, the locations of four small head position indicator coils attached to the scalp and several additional scalp surface points were recorded with respect to the fiducial landmarks (nasion and two preauricular points) using a 3-D digitizer (Fastrak Polhemus, VT). For MRI/MEG coordinate system alignment, the fiducial points were then identified from the structural MRIs. This initial approximation was then refined with an iterative closest point search algorithm applied to the scalp surface locations.

MEG analysis of evoked responses

Responses were averaged offline separately for each trial type (Auditory/Visual/Audiovisual/Auditory Target/Visual Target/Audiovisual Target) time locked to the stimulus onsets with a time window of 250 ms pre-stimulus to 1150 ms post-stimulus, with a total of 375 individual epochs per category for all non-target conditions (100 epochs for the long, 125 for the intermediate, and 150 for the short ISI run). Epochs exceeding 150 μV or 3000 fT/cm at any EOG or MEG channel, respectively, were automatically discarded from the averages. For analysis of the MEG response waveforms, the averaged signals were digitally low-pass filtered at 40 Hz and amplitudes were measured with respect to a 200-ms pre-stimulus baseline. Non-target Auditory (A), Visual (V), and Audiovisual (AV) evoked responses were analyzed for timing information, as were audiovisual interactions estimated from the calculated response [AV − (A+V)]. For sensor analysis, we estimated the onset latencies from the gradient amplitudes from the two planar gradiometers x and y at each sensor location. Onset latencies were picked at the first time point that exceeded 3 SDs above the noise level estimated from the 200-ms pre-stimulus baseline. We additionally required that the onset must not occur earlier than 15 ms and that the response must stay above the noise level for at least 20 ms. Data from one subject were too noisy for accurate onset latency determination and were therefore discarded. Onset latencies from the three runs with different ISIs were practically identical; thus, the responses were averaged across ISI conditions, so that each subject’s averaged response consisted of about 300 responses to individual stimuli (for detailed numbers of epochs see Supporting Information). Interaction responses had stronger noise than their constituent (A/V/AV) responses (in sensor space, theoretically by a factor of √3, because three responses with independent noise are combined), requiring stronger low-pass filtering (20 Hz with 3 dB roll-off). Further, for the same reason the onsets picked from individual subjects’ interaction responses were less reliable. We therefore used bootstrapping to estimate the means and variances of interaction onsets across subjects (for details see Supporting Information, Appendix S1).
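The onset criterion described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function name and arguments are hypothetical, the threshold is assumed to be the baseline mean plus 3 SDs, and "staying above the noise level" is assumed to mean staying above that same threshold for the full 20 ms.

```python
from statistics import mean, stdev


def onset_latency(times_ms, values, n_sd=3.0, earliest_ms=15.0,
                  min_duration_ms=20.0, baseline_start_ms=-200.0):
    """Return the first time point (ms) meeting the onset criterion, else None."""
    # Noise level estimated from the pre-stimulus baseline samples.
    baseline = [v for t, v in zip(times_ms, values) if baseline_start_ms <= t < 0.0]
    threshold = mean(baseline) + n_sd * stdev(baseline)
    for i, (t, v) in enumerate(zip(times_ms, values)):
        if t < earliest_ms or v <= threshold:
            continue
        # The response must remain above threshold for the minimum duration.
        window = [w for u, w in zip(times_ms[i:], values[i:])
                  if u <= t + min_duration_ms]
        window_is_complete = times_ms[-1] >= t + min_duration_ms
        if window_is_complete and all(w > threshold for w in window):
            return t
    return None
```

With this rule, a brief deflection before 15 ms or a supra-threshold blip shorter than 20 ms is skipped, and the first sustained crossing is reported.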

MEG source analysis and source-specific time course extraction

Minimum-norm estimates (MNEs) (Hämäläinen & Ilmoniemi, 1984; Hämäläinen & Ilmoniemi, 1994) were computed from combined anatomical MRI and MEG data (Dale & Sereno, 1993; Liu et al., 1998; Dale et al., 2000). The anatomically constrained MNE solutions are implemented in our software package available at http://www.nmr.mgh.harvard.edu/martinos/userInfo/data/sofMNE.php. For inverse computations, the cortical surface was decimated to 5,000–10,000 vertices per hemisphere. A gain matrix $A$ describing the ensemble of MEG sensor measurements with one current dipole on every vertex point was calculated using a realistic single-compartment boundary element model (Hämäläinen & Sarvas, 1989) based on the structural MRI data. The noise covariance matrix $C$ was estimated from the pre-stimulus baselines of individual trials. These two matrices, along with the source covariance matrix $R$, were used to calculate the inverse operator $W = RA^{T}(ARA^{T} + C)^{-1}$. The MEG data at each time point were then multiplied by $W$ to yield the estimated source activity in the cortical surface: $s(t) = Wx(t)$. Finally, dSPM values (noise normalized MNE) were calculated to reduce the point-spread function, and to allow displaying the activations using the F-statistic. Using the MNE software, the individual dSPM results were morphed through a spherical surface into the FreeSurfer average brain (Fischl et al., 1999). Grand average dSPM estimates were calculated from the grand average MNE and the grand average noise covariance matrix. dSPM time courses were extracted from pre-determined (Desikan et al., 2006) anatomical locations of A1 and V1, after which their onset latencies were measured as described above for sensor signals.
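For reference, the inverse computation described above can be written out in display form. The first two lines restate the expressions given in the text. The third line is an assumption: it is the usual dSPM noise normalization for a fixed-orientation source (following Dale et al., 2000), with $w_i$ denoting the $i$-th row of $W$, and is not spelled out in this section.

```latex
\begin{aligned}
W &= R A^{T}\left(A R A^{T} + C\right)^{-1}, \\
s(t) &= W\,x(t), \\
z_i(t) &= \frac{w_i\,x(t)}{\sqrt{w_i\,C\,w_i^{T}}}.
\end{aligned}
```

When three orientation components are estimated per vertex, the corresponding ratio of summed squares follows an F-distribution under the null hypothesis, which is presumably the F-statistic used for display here.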

Results

Behavioral results

Hit rates and reaction times (RTs) to target stimuli were measured during MEG and fMRI. Hit rates were excellent (96%) across all experimental conditions. During MEG, the RTs were faster for audiovisual (median 421 ms, mean ± SD 430 ± 60 ms) and auditory (median 462 ms, mean ± SD 464 ± 72 ms) than for visual (median 520 ms, mean ± SD 526 ± 54 ms) stimuli with outliers excluded according to the Median Absolute Deviation statistics criterion. In fMRI the difference was slightly smaller (for audiovisual median 539 ms, mean 546 ± 86 ms, for auditory median 584 ms, mean 596 ± 112 ms, and for visual stimuli median 628 ms, mean 634 ± 74 ms). The longer RTs in fMRI may be due to slower response pads and the MR environment. Since the auditory, visual, and audiovisual stimuli were in random order within stimulus sequences and stimulus timing was pseudorandom, it is unlikely that attention related differences could have influenced the onset latencies. RT cumulative distributions showed behavioral evidence of multisensory integration (Raij T, unpublished observations).

MEG onset latencies: sensor data

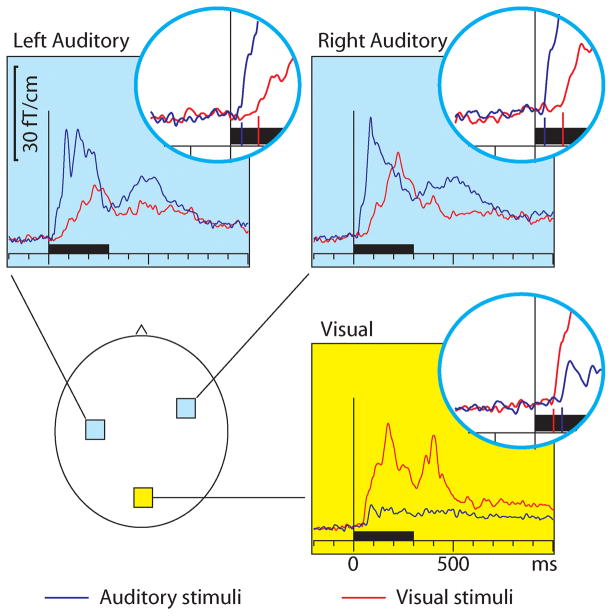

Figure 1 shows that, as expected, the auditory stimuli activated the auditory cortices bilaterally and the visual stimuli the midline occipital visual cortex. However, cross-sensory effects were also observed: visual stimuli strongly activated temporal cortices and auditory stimuli (more weakly) the midline occipital cortex. Table 1 lists the corresponding onset latencies (the time when the grand average response first exceeded a noise level of 3 SD estimated from the pre-stimulus baseline). The sensory-specific activations started 19–22 ms earlier over the auditory than over the visual cortex. The cross-sensory activations started after the sensory-specific responses, by 21 ms over the visual cortex and by 46 ms over the auditory cortex. The left and right auditory cortices showed similar timings and were thus averaged for individual level analysis. Table 2 lists the across-subjects onset latencies. The individual subjects’ responses were clearly noisier and thus corresponded relatively poorly to the grand average results; hence, no statistical comparisons were done for the sensor data (see dSPM data below for statistical tests).

Figure 1.

MEG sensor responses over the auditory (light blue background) and visual (yellow background) cortices for auditory (blue traces) and visual (red traces) stimuli; the approximate sensor locations are shown in the lower left panel. The circular insets show the beginning of the response enlarged twofold, with vertical lines where the onsets were found; the corresponding numerical values are reported in Table 1. The responses show the magnetic field gradient amplitudes as a function of time. From each subject, the sensor location showing the maximal ~100 ms sensory-specific activation was selected, and the signals from these sensors were averaged across subjects. Sensors over both auditory and visual cortices show cross-sensory activations, but these are stronger over the auditory than the visual cortex. The sensory-specific activations occur earlier than the cross-sensory activations, especially over the auditory cortices. Time scales −200 to +1000 ms post stimulus, stimulus duration 300 ms (black bar).

Table 1.

Grand average results in sensor space. The values were picked from the grand average sensor signals shown in Figure 1; for across-subjects values see Table 2.

| Sensor latencies (ms), grand average values | Auditory cortex, left | Auditory cortex, right | Visual cortex |
|---|---|---|---|
| Auditory stimuli | 28 | 25 | 68 |
| Visual stimuli | 72 | 72 | 47 |
| AV stimuli | 25 | 27 | 40 |

Table 2.

Across-subjects results in sensor space.

| Sensor latencies (ms), across-subjects values | Auditory cortex (L&R), mean ± SD | Visual cortex, mean ± SD |
|---|---|---|
| Auditory stimuli | 30 ± 4 | 83 ± 40 |
| Visual stimuli | 84 ± 28 | 61 ± 12 |
| AV stimuli | 42 ± 9 | 50 ± 11 |

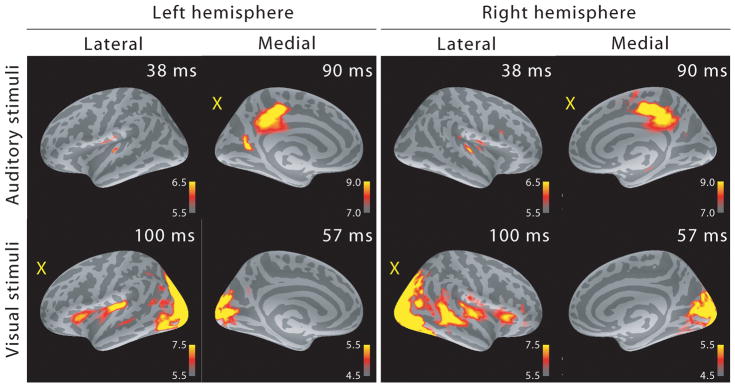

MEG dSPM source analysis

Due to the relatively large distance between the sensors and the sources, each MEG sensor records activity from a rather large cortical area, and MEG source analysis can better estimate the actual source locations. Figure 2 shows the MEG localization results at selected time points after the onset of activity. As expected, auditory stimuli activated the supratemporal auditory cortex and visual stimuli the primary visual cortex in the calcarine fissure. Cross-sensory activations were also clear: visual stimuli strongly activated large areas of temporal cortex including the supratemporal auditory cortex, and auditory stimuli (albeit more weakly) some parts of the calcarine fissure especially in the left hemisphere (right hemisphere cross-sensory activity in calcarine cortex was below selected visualization threshold). Additional cross-sensory activations were observed outside primary sensory areas.

Figure 2.

MEG source analysis snapshots (dSPM F-statistics) picked at early activation latencies. Both sensory-specific and cross-sensory (marked with a yellow “X”) activations are seen (the right hemisphere calcarine cortex cross-sensory activity is not visible at this threshold). While some of the cross-sensory activations are located inside the sensory areas (as delineated by Desikan et al., 2006), these seem to occupy slightly different locations than the sensory-specific activations. However, the spatial resolution of MEG is somewhat limited; hence, exact comparisons are discouraged. Visual checkerboard stimuli activated additional areas outside the sensory cortices, for example the superior temporal sulci (STS), especially in the right hemisphere, and Broca’s areas bilaterally.

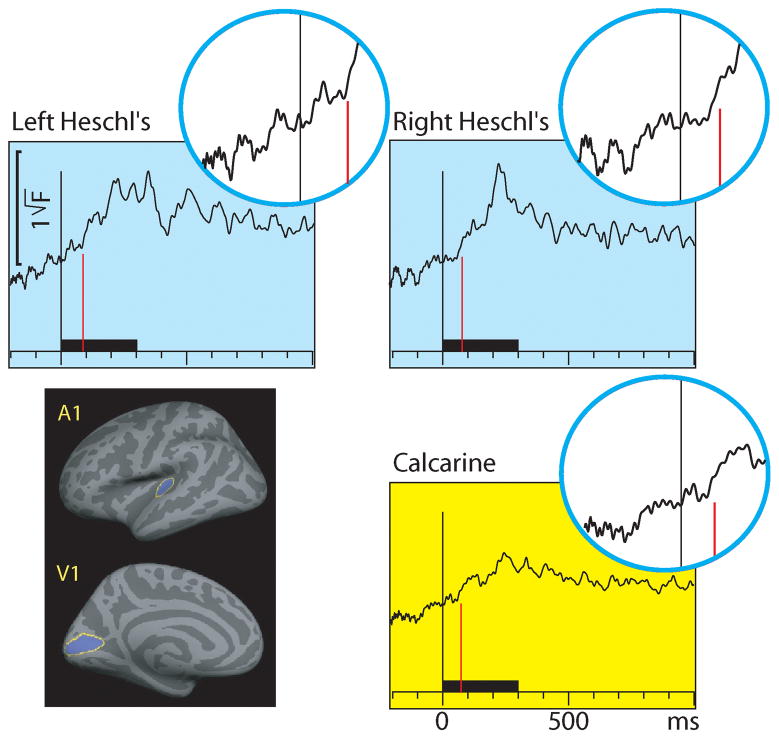

MEG dSPM source-specific onset latencies

Figure 3 shows the source-specific grand average dSPM time courses from Heschl’s gyri (auditory cortices) and calcarine fissure (visual cortex) for auditory and visual stimuli. The areas were localized based on an anatomical parcellation of the FreeSurfer analysis package (Desikan et al., 2006). The left and right calcarine fissure activations, due to their close anatomical proximity and similar timings and current orientations for foveal stimuli, were averaged. As expected, the dSPM source-specific time courses were similar to those for the sensor data in Fig. 1. However, in the presence of multiple source areas, time courses extracted from specific cortical locations can more accurately reflect activity of the selected area than sensors that collect activity from a rather large area, due to volume conduction. Table 3 lists the onset latencies measured from the grand average dSPM responses. The sensory-specific activations started 20 ms earlier in A1 than in V1. The cross-sensory activations started after the sensory-specific responses, by 10 ms in V1, and by 59 ms in the left and 52 ms in the right A1. The conduction delays (the time it takes for the activity evoked by a stimulus to spread from one sensory cortex to another) were 30 ms for auditory and 35 ms for visual stimuli. As expected, the onsets of responses to AV stimuli (not shown in Figure 3) closely followed the onsets to the unimodal stimulus that first reached the sensory cortex. Figure 4 shows the dSPM time courses calculated from the audiovisual interaction responses [AV − (A+V)]. These were much weaker than the constituent A/V/AV responses and had a poorer signal-to-noise ratio (SNR). The interactions started 3–21 ms after inputs from the two sensory modalities converged on the sensory cortex.

Figure 3.

MEG source-specific (dSPM) time courses for Heschl’s gyri (auditory cortex; light blue background) and calcarine fissure (visual cortex; yellow background) to auditory and visual stimuli; responses to AV stimuli are not shown. The source areas, shown for the left hemisphere in the lower left panel, were based on an anatomical parcellation (Desikan et al., 2006); left and right calcarine sources were averaged. The circular insets show the beginning of each response enlarged twofold, with vertical lines where the onsets for auditory (blue traces) and visual (red traces) stimuli were found; the corresponding numerical values are reported in Table 3. Both sensory-specific and cross-sensory activations are observed. The sensory-specific activations occur earlier than the cross-sensory activations, especially in the auditory cortices. Time scales –200 to +1000 ms post stimulus, stimulus duration 300 ms (black bar).

Table 3.

Grand average results in source space. The values were picked from the dSPM grand average time courses shown in Figures 3–4; for across-subjects values see Table 4.

| Source latencies (ms), grand average values | Heschl’s gyrus, left | Heschl’s gyrus, right | Calcarine cortex |
|---|---|---|---|
| Auditory stimuli | 23 | 23 | 53 |
| Visual stimuli | 82 | 75 | 43 |
| AV stimuli | 23 | 27 | 47 |
| AV interaction | 85 | 80 | 74 |
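The delays quoted in the text can be recomputed from the grand average onsets in Table 3. The short sketch below does this arithmetic; the dictionary layout and function name are hypothetical. Note that the 35 ms conduction delay for visual stimuli matches the mean of the two hemispheres (39 and 32 ms), which is an inference, since the text does not state how that figure was derived.

```python
# Grand average dSPM onset latencies (ms), copied from Table 3.
ONSETS_MS = {
    "auditory": {"A1_left": 23, "A1_right": 23, "V1": 53},
    "visual": {"A1_left": 82, "A1_right": 75, "V1": 43},
    "interaction": {"A1_left": 85, "A1_right": 80, "V1": 74},
}


def derived_delays(onsets):
    """Recompute the delays discussed in the text from the onset table."""
    aud, vis, inter = onsets["auditory"], onsets["visual"], onsets["interaction"]
    return {
        # Cross-sensory onset minus sensory-specific onset within each area.
        "cross_minus_specific": {
            "A1_left": vis["A1_left"] - aud["A1_left"],
            "A1_right": vis["A1_right"] - aud["A1_right"],
            "V1": aud["V1"] - vis["V1"],
        },
        # Onset in the other modality's cortex minus onset in the stimulus's own cortex.
        "conduction": {
            "auditory_A1_to_V1": aud["V1"] - (aud["A1_left"] + aud["A1_right"]) / 2,
            "visual_V1_to_A1_left": vis["A1_left"] - vis["V1"],
            "visual_V1_to_A1_right": vis["A1_right"] - vis["V1"],
        },
        # Interaction onset minus the later of the two unimodal onsets (convergence).
        "interaction_after_convergence": {
            area: inter[area] - max(aud[area], vis[area]) for area in inter
        },
    }


for name, values in derived_delays(ONSETS_MS).items():
    print(name, values)
```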

Figure 4.

MEG source-specific (dSPM) audiovisual interaction [AV-(A+V)] time courses from Heschl’s gyri (auditory cortex; light blue background) and calcarine fissure (visual cortex; yellow background). The circular insets show the beginning of each response enlarged twofold, with vertical lines where the onsets were found; the corresponding numerical values are reported in Table 3. Interactions are observed in both the auditory and visual cortices, starting 3–21 ms after the inputs from both sensory modalities converge in the sensory cortex. Time scales –200 to +1000 ms post stimulus, stimulus duration 300 ms (black bar).

The individual subjects’ dSPM time courses had an improved SNR and corresponded much better to the latencies picked from the grand average responses than the sensor data did (Fig. 1, Tables 1–2); hence, we considered the dSPM time courses the more accurate metric. Again, since the onset latencies did not clearly differ across hemispheres, for individual level analysis the responses were averaged across the left and right hemispheres. Table 4 lists the across-subjects onset latencies (mean ± SD and median across the latencies measured from the individual subjects’ responses). The sensory-specific auditory evoked responses in Heschl’s gyrus started 21 ms earlier than the visual evoked responses in the calcarine cortex (Wilcoxon signed rank test (n=7), P=0.0156). Cross-sensory activations in Heschl’s gyrus occurred 49 ms later than sensory-specific activations, which was statistically significant (P=0.0156). Cross-sensory activations in calcarine cortex occurred 22 ms later than sensory-specific activations, but this difference did not quite reach statistical significance (P=0.0781). The difference between cross-sensory conduction delays (from one sensory cortex to another) for auditory and visual stimuli was non-significant (P=0.578). These non-parametric test results were highly consistent with confirmatory analyses conducted with parametric methods (paired t-tests).
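With only seven subjects, the exact Wilcoxon signed rank test can take only a limited set of P values, and P=0.0156 is the smallest two-sided value attainable (all seven differences having the same sign). The sketch below enumerates the exact null distribution to show this. It assumes an exact test with no tied or zero differences; the function name is hypothetical, and the rank sums used (0, 3, and 10) are inferred by matching the reported P values, not taken from the source.

```python
from itertools import combinations


def exact_two_sided_p(smaller_rank_sum, n):
    """Exact two-sided signed rank P value for n untied, non-zero differences."""
    ranks = range(1, n + 1)
    # Count the sign assignments whose rank sum for one sign does not exceed
    # the observed (smaller) rank sum; each assignment has probability 2**-n.
    count = sum(
        1
        for size in range(n + 1)
        for subset in combinations(ranks, size)
        if sum(subset) <= smaller_rank_sum
    )
    return min(1.0, 2 * count / 2 ** n)


for rank_sum in (0, 3, 10):
    print(rank_sum, exact_two_sided_p(rank_sum, 7))
```

Under these assumptions the three rank sums give 2/128, 10/128, and 74/128, which round to the reported 0.0156, 0.0781, and 0.578.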

Table 4.

Across-subjects results in source space.

| Source latencies (ms), across-subjects values | Heschl’s gyrus (L&R), mean ± SD (median) | Calcarine cortex, mean ± SD (median) |
|---|---|---|
| Auditory stimuli | 27 ± 8 (28) | 70 ± 26 (73) |
| Visual stimuli | 76 ± 10 (77) | 48 ± 8 (45) |
| AV stimuli | 29 ± 11 (27) | 50 ± 6 (52) |
| AV interaction | 77 ± 22 (84) * | 68 ± 28 (75) * |

\* Interaction responses were low-pass filtered at 20 Hz and their mean/SD/median estimated by bootstrapping to mitigate SNR problems caused by the [AV − (A+V)] operation. To see onset latencies for all stimulus categories using bootstrapping, see Supporting Information Table S1.

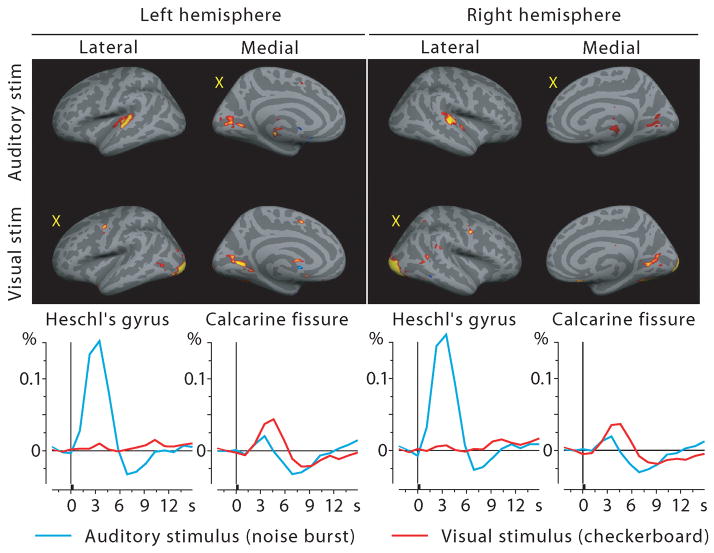

fMRI activations

MEG source analysis has some uncertainty due to the electromagnetic inverse problem. We therefore attempted to confirm the MEG source analysis with fMRI using the same subjects and stimuli. Figure 5 shows the fMRI results averaged across subjects; the BOLD time courses are shown below the activation maps. The calcarine cortex was activated by both visual and auditory stimuli. The auditory cortex showed activity for auditory stimuli but, in contrast to the MEG results, showed only a tiny positive deflection for visual stimuli at the typical BOLD signal peak latency (Fig. 5 time courses). On closer inspection, some voxels in medial parts of Heschl’s gyri were activated by visual stimuli (p<0.01 in the left and p<0.1 in the right hemisphere, grand average fMRI signal, fixed effects analysis) while the majority were not, diluting these effects in the spatial average across the entire region-of-interest.

Figure 5.

fMRI activations to auditory and visual stimuli projected on the inflated cortex at the 4th time frame after stimulus onset (top) and the corresponding BOLD % signal change time courses from Heschl’s gyrus and calcarine fissure (bottom). Sensory-specific activations are very clear; cross-sensory responses are strong in the calcarine fissure but almost absent in Heschl’s gyri (see Discussion). Yellow “X” marks in the brain images indicate cross-sensory conditions. Responses to AV stimuli and audiovisual interactions are not shown.

Discussion

Here we report early cross-sensory activations and audiovisual interactions in both A1 and V1 in humans. The current study is to our knowledge the first to utilize both MEG and fMRI in the same subjects for this purpose, which has the advantage of offering spatiotemporally accurate estimates; individually, the methods offer compromises between spatial and temporal accuracy. The delay from sensory-specific to cross-sensory activity was 55 ms in the auditory and 10 ms in the visual cortex, which is clearly asymmetrical. This timing pattern reflects the fact that sensory-specific activations start earlier in the auditory (23 ms) than in the visual (43 ms) cortex, and is thus consistent with the idea that the origin of the cross-sensory activations is in the sensory cortex of the opposite stimulus modality, with about 30–35 ms conduction delay between the two areas. Audiovisual interactions were observed after both sensory-specific and cross-sensory inputs converged on the sensory cortex.

Since MEG detects synchronous activity of thousands of neurons, the relationship between anatomical distance and conduction delay is not necessarily straightforward. Therefore, with the ~30 ms delay, the cross-sensory activations could utilize direct cortico-cortical connections between the auditory and visual cortices, connect through a subcortical relay, or travel through an association cortical area such as STP/STS (e.g., Raij et al., 2000). In the last option one would additionally expect activity in STS before observing cross-sensory activity in A1/V1. The analysis is complicated by the fact that, based on intracranial data from primates (Schroeder & Foxe, 2002; Schroeder et al., 2003) and EEG recordings in humans (Foxe & Simpson, 2002), visual stimuli would be expected to activate STS starting only about 8 ms after V1 onset, therefore largely overlapping cross-sensory activations in the auditory cortex. In our data, STS was strongly activated in the right hemisphere at the same time as the cross-sensory auditory cortex activation occurred, consistent with the possibility of the signal traveling through STS, but in the left hemisphere no clear STS activation was observed. Hence, STS seems unlikely to play a key role. An additional factor to take into account is that the conduction delay had a small asymmetric trend: 30 ms for auditory stimuli, with a known monosynaptic connection A1→V1, and 35 ms for visual stimuli, with a known, somewhat longer pathway V1→V2→A1. Hence, it appears plausible that the earliest cross-sensory activations may utilize the A1→V1 and V1→V2→A1 pathways. Future studies utilizing dynamic causality modeling (Lin et al., 2009; Schoffelen & Gross, 2009) might provide additional insight.

As described in Introduction, another possibility is that subcortical pathways may send direct cross-sensory inputs to sensory cortices. If the subcortical structures have a similar delay between auditory and visual processing as A1 and V1, then latency data alone cannot distinguish between cortico-cortical and subcortico-cortical cross-sensory influences. However, currently no such audiovisual pathways are known. Clearly, correct interpretation of functional connectivity analyses greatly benefits from accurate anatomical connectivity information.

The current results could mistakenly be interpreted to suggest that the earliest audiovisual interactions can occur only after the cross-sensory inputs arrive at the sensory cortex. This would put a lower limit of 53 ms in the visual cortex and 75 ms in the auditory cortex for audiovisual interactions to start, which is in fact what was observed in the present MEG data. Yet, there is strong EEG evidence of audiovisual interactions in humans occurring earlier, starting at about 40 ms, being maximal over posterior areas (Giard & Peronnet, 1999; Molholm et al., 2002; Teder-Sälejärvi et al., 2002; Molholm et al., 2004). We suggest three possible reasons why these early interactions were not observed in the present MEG study. First, EEG may receive a somewhat stronger contribution from subcortical generators than MEG (Goldenholz et al., 2009), which is consistent with the idea that the early interactions in EEG may be generated in subcortical structures participating in multisensory processes. Second, the subcortical parts of afferent pathways leading to sensory cortex could be modulated by subcortical multisensory influences, which would allow audiovisual interactions to occur from the very beginning of the cortically generated “sensory-specific” responses. However, this scenario would predict that the early interactions should be equally visible for EEG and MEG. Third, due to the sensitivity of MEG to mainly tangentially oriented currents, we could have missed some earlier components if they were radial. However, this is unlikely given that it has been estimated that only about 10% of the cortical surface (thin strips at crests of gyri) is radial enough to generate currents undetectable with MEG (Hillebrand & Barnes, 2002), and further, source orientation differences would be expected to influence all activations and interactions equally because in the present study source areas were kept constant. Therefore, the most likely explanation is that the early interactions are generated in subcortical structures. EEG/MEG source localization accuracy for deep generators is poor, so these methods are not well suited for more accurate localization of the subcortical structures.

The finding that fMRI could detect strong cross-sensory activations in the calcarine fissure, whereas in Heschl’s gyri these were almost absent, was unexpected. In the present data some voxels in Heschl’s gyri were significantly (albeit weakly) activated by visual stimuli at the typical BOLD signal peak latency (see Fig. 5) while the majority were not, leaving the reliability of this observation inconclusive. Previous fMRI studies have shown that at least some classes of visual stimuli (such as lip movements) may robustly activate A1 (Pekkola et al., 2005). Even simple stimuli such as those employed in the current study have been reported to result in cross-sensory activations (Martuzzi et al., 2007). One possible explanation is that the acoustical EPI scanner noise dampened evoked responses in the auditory cortex due to neuronal adaptation. It is also possible that, again due to the acoustical scanner noise, the BOLD signal may saturate before the neurons do (Bandettini et al., 1998). A possible reason why our study may have been affected by this more than the studies mentioned above is that the acoustic noise depends on the EPI parameters; our faster scanning could have increased the noise. This interpretation is supported by the fact that MEG, where the scanner is completely quiet, showed clear cross-sensory responses in the supratemporal auditory cortex.

It is unclear what the functional roles of the early cross-sensory activations might be. Behaviorally, for complex processing such as audiovisual speech, asynchronies as large as 250 ms can go unnoticed (Miller & D’Esposito, 2005). Moreover, in realistic stimulus environments the auditory input lags the visual input by an amount that depends on the distance from the source (about 9 ms for every 3 m), which influences the relative timings of the auditory and visual inputs. Possibly the early cross-sensory influences play a role in processing of a lower order than audiovisual speech, where synchrony requirements may be tighter. There is also evidence that these activations may be task dependent (Wang et al., 2008). Plausibly, early cross-sensory activations could serve to facilitate later processing stages and reaction times by enhancing top-down processing and speeding up the exchange of signals between brain areas (Bar et al., 2006; Raij et al., 2008; Sperdin et al., 2009).
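The distance dependence of the auditory lag follows directly from the speed of sound. The following is a minimal sketch, assuming sound travels at roughly 343 m/s in air at room temperature and ignoring the travel time of light; the function name and the constant are illustrative and are not taken from the study.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumption: dry air at about 20 degrees C


def auditory_lag_ms(distance_m: float) -> float:
    """Delay of sound arrival relative to light, in milliseconds.

    The travel time of light is ignored because it is negligible
    at everyday viewing distances.
    """
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    return 1000.0 * distance_m / SPEED_OF_SOUND_M_PER_S


# Print the lag for a few example source distances.
for d in (1.0, 3.0, 10.0):
    print(f"{d:4.1f} m -> {auditory_lag_ms(d):5.1f} ms")
```

Under these assumptions a source 3 m away yields a lag close to the 9 ms quoted in the text.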

As a technical finding, a very high SNR was necessary in order to detect onset latencies accurately. The present results were achieved by using a low-noise MEG instrument, a high-quality shielded room, and a large number of stimuli. The averaged responses in the current study consisted of about 300 individual responses per subject, which was not quite sufficient for sensor space analysis at the individual subject level, but quite sufficient for grand average analysis (about 2100 individual responses, or twice as many when additionally averaging across hemispheres). However, compared with the sensor data, extracting time courses from the auditory and visual sensory cortices by dSPM source analysis greatly improved SNR at the individual level (more robust onsets and less interindividual variability), hence giving more accurate results that also agreed well with the grand average values. Moreover, in both sensor and source space, we present two different across-subjects analyses: onset latencies picked (i) from grand average (N=7) responses (Tables 1 and 3) and (ii) from individual subjects’ responses (Tables 2 and 4). The latter were useful for testing the statistical significance of latency differences across areas. However, the grand average response consists of the largest number of epochs and therefore has by far the best SNR, consequently showing slightly earlier onsets than those picked from the individual subjects’ responses (e.g., compare Tables 3 and 4). Yet, grand average responses could also be biased to show early onsets if some of the subjects show earlier onsets than the others. In our data this bias appears to be quite small, as most latencies are similar across Tables 3 and 4. Still, differentiating between the boost given by improved SNR and the possible bias caused by subjects with faster onsets is difficult. The two analyses complement each other and offer slightly different interpretations. The grand average analysis is well suited for finding the earliest onset latencies across the subject pool. The means across values from individual subjects, in turn, show slightly longer onset latencies but are better protected from individual bias. An additional possibility would be to use bootstrapping to synthesize multiple grand averages and, after picking onsets from each, study their means and variances. The results, shown in Supporting Table S1, may offer a compromise between the two analyses. With the present data all three analyses give quite similar results and lead to the same conclusions; to improve comparability with earlier studies we here focus on reporting the results obtained with the most widely used methods.
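The bootstrapping idea can be illustrated with a short sketch. It resamples subjects with replacement, forms a grand average from each resample, picks an onset from it, and summarizes the onsets. The simple threshold-crossing onset criterion, the synthetic ramp-shaped responses, and all function and variable names are assumptions made for illustration; they are not the onset-picking procedure or the data of the present study.

```python
import random
import statistics


def grand_average(responses):
    """Average equal-length time courses sample by sample."""
    n = len(responses)
    return [sum(samples) / n for samples in zip(*responses)]


def pick_onset(time_course, times_ms, threshold):
    """Return the first time at which the response exceeds threshold (hypothetical criterion)."""
    for t, value in zip(times_ms, time_course):
        if value > threshold:
            return t
    return None


def bootstrap_onsets(subject_responses, times_ms, threshold, n_boot=500, seed=0):
    """Resample subjects with replacement; return mean and SD of grand-average onsets."""
    rng = random.Random(seed)
    n_subjects = len(subject_responses)
    onsets = []
    for _ in range(n_boot):
        resample = [rng.choice(subject_responses) for _ in range(n_subjects)]
        onset = pick_onset(grand_average(resample), times_ms, threshold)
        if onset is not None:
            onsets.append(onset)
    return statistics.mean(onsets), statistics.stdev(onsets)


# Synthetic data: seven noise-free ramps with different true onsets (ms).
times_ms = list(range(150))
subject_onsets_ms = [40, 42, 44, 46, 48, 50, 52]
subject_responses = [
    [max(0.0, float(t - onset)) for t in times_ms] for onset in subject_onsets_ms
]

mean_onset, sd_onset = bootstrap_onsets(subject_responses, times_ms, threshold=1.0)
print(f"bootstrap onset: {mean_onset:.1f} ms (SD {sd_onset:.1f} ms)")
```

In this toy setting the spread of the bootstrap onsets reflects how strongly the grand-average onset depends on which subjects happen to be included, which is the individual bias discussed above.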

The current results are not directly comparable with studies where stimuli or tasks in one modality precede the other. For example, in audiovisual speech, the visual input (lip movements) typically starts 100–300 ms before the auditory stimulus onset, and therefore may modulate the incoming auditory signals at multiple levels, including in secondary auditory cortex (Besle et al., 2008) and even in central auditory pathways (Musacchia et al., 2006). Similarly, auditory evoked responses can be modulated by visuomotor processes such as gaze direction already in the inferior colliculus (Groh et al., 2001). As yet another example, attention may modulate responses and interactions through top-down mechanisms in primary sensory cortices as soon as they appear (Talsma et al., 2007; Poghosyan & Ioannides, 2008; Karns & Knight, 2009). The flash-sound illusion would also appear to belong in this category (Shams et al., 2002; Shams et al., 2005; Watkins et al., 2006; Mishra et al., 2007).

In the current study visual stimuli were presented foveally. However, anatomical studies have shown that areas in the calcarine fissure representing peripheral vision may be more strongly connected with the auditory cortex than areas representing the fovea (Falchier et al., 2002; Wang et al., 2008). It is therefore plausible that cross-sensory latencies could be shorter for peripherally than for foveally presented visual stimuli, although some previous studies have found the opposite effect (Talsma & Woldorff, 2005; Talsma et al., 2007).

The late negative BOLD undershoots for auditory stimuli in the calcarine cortex (Fig. 5) are consistent with an earlier block design fMRI study reporting cross-sensory negative BOLD activations (Laurienti et al., 2002); however, due to its design, that study could not investigate the time courses of the BOLD responses. Our BOLD time course analysis shows that the cross-sensory responses in the visual cortex consist of a small initial positive component followed by a clearly stronger negative (de-activation) component. Temporal summation of such events in a block design would be expected to result in a net negative BOLD effect.

These findings are consistent with previously shown sensory-specific and cross-sensory activations (see Introduction). For example, the A1 onsets for our auditory stimuli at 23 ms are only ~8 ms slower than the earliest responses to clicks recorded from the human auditory cortex intracranially (Celesia, 1976) or by MEG (Parkkonen et al., 2009), and the V1 onset at 43 ms is simultaneous with the earliest reported responses from V1 (for reviews, see Foxe & Schroeder, 2005; Musacchia & Schroeder, 2009). The observed cross-sensory onset latencies are, to our knowledge, the fastest reported in humans. This was made possible by the good SNR in our data and the extraction of source-specific amplitudes. Audiovisual interactions were observed only after the uni- and cross-sensory inputs converged on the sensory cortex, but once this happened the interactions appeared almost instantaneously (3–21 ms after convergence). The findings contribute to the understanding of cross-sensory activations and interactions in sensory cortices by establishing lower limits to the latency at which they can be expected to occur. The results have implications regarding the possible pathways that cross-sensory activations utilize, and suggest that audiovisual interactions occurring before cross-sensory signals arrive (for simultaneous stimuli, 53 ms in visual cortex and 75 ms in auditory cortex) are most likely of subcortical origin; interactions after these latencies could be either cortically or subcortically generated.
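The 3–21 ms figure can be reproduced from the onset latencies reported in the Abstract. The sketch below assumes that "convergence" in an area means the later of the sensory-specific and cross-sensory onsets there; the dictionary layout and names are illustrative only.

```python
# Onset latencies in ms, as quoted in the Abstract (grand-average values).
sensory_specific = {"left auditory": 23, "right auditory": 23, "visual": 43}
cross_sensory = {"left auditory": 82, "right auditory": 75, "visual": 53}
interaction = {"left auditory": 85, "right auditory": 80, "visual": 74}


def convergence_ms(area: str) -> int:
    """Time at which inputs from both modalities have reached the area (assumed definition)."""
    return max(sensory_specific[area], cross_sensory[area])


# Lag between convergence of the two inputs and the onset of the interaction.
interaction_lag = {
    area: interaction[area] - convergence_ms(area) for area in interaction
}

for area, lag in interaction_lag.items():
    print(f"{area}: convergence at {convergence_ms(area)} ms, interaction {lag} ms later")
```

The resulting lags span the 3–21 ms range stated in the text, with the shortest lag in the left auditory cortex and the longest in the visual cortex.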

Supplementary Material

Acknowledgments

We would like to thank Valerie Carr, Deirdre Foxe, Mark Halko, Hsiao-Wen Huang, Yu-Hua Huang, Adrian KC Lee, Natsuko Mori, Mark Vangel, and Dan Wakeman for invaluable help. This work was supported by grants from the National Institutes of Health R01 NS048279, R01 HD040712, R01 NS037462, P41 RR14075, R21EB007298, National Center for Research Resources, Harvard Catalyst Pilot Grant/The Harvard Clinical and Translational Science Center (NIH UL1 RR 025758-02 and financial contributions from participating organizations), Sigrid Juselius Foundation, Academy of Finland, Finnish Cultural Foundation, National Science Council, Taiwan (NSC 98-2320-B-002-004-MY3, NSC 97-2320-B-002-058-MY3), and National Health Research Institute, Taiwan (NHRI-EX97-9715EC).

Abbreviations

- A1: primary auditory cortex

- AV: audiovisual

- BOLD: blood oxygen level dependent

- dSPM: dynamic statistical parametric mapping

- EEG: electroencephalography

- EPI: echo planar imaging

- FIR: finite impulse response

- fMRI: functional magnetic resonance imaging

- FWHM: full-width at half maximum

- MEG: magnetoencephalography

- MNE: minimum-norm estimate

- MRI: magnetic resonance imaging

- RT: reaction time

- SC: superior colliculus

- SD: standard deviation

- SNR: signal-to-noise ratio

- STP/STS: superior temporal polysensory area/superior temporal sulcus

- V1: primary visual cortex

Footnotes

Some of these data have been presented in abstract form at the 8th annual meeting of the International Multisensory Research Forum (IMRF), Sydney, Australia, July 4–8, 2007.

References

- Bandettini P, Jesmanowicz A, Van Kylen J, Birn R, Hyde J. Functional MRI of brain activation induced by scanner acoustic noise. Magn Reson Med. 1998;39:410–416. doi: 10.1002/mrm.1910390311.

- Bar M, Kassam K, Ghuman A, Boshyan J, Schmid A, Dale A, Hämäläinen M, Marinkovic K, Schacter D, Rosen B, Halgren E. Top-down facilitation of visual recognition. Proc Natl Acad Sci U S A. 2006;103:449–454. doi: 10.1073/pnas.0507062103.

- Benevento L, Fallon J, Davis B, Rezak M. Auditory-visual interaction in single cells in the cortex of the superior temporal sulcus and orbital cortex of the macaque monkey. Exp Neurol. 1977;57:849–872. doi: 10.1016/0014-4886(77)90112-1.

- Besle J, Fischer C, Bidet-Caulet A, Lecaignard F, Bertrand O, Giard M. Visual activation and audiovisual interactions in the auditory cortex during speech perception: intracranial recordings in humans. J Neurosci. 2008;28:14301–14310. doi: 10.1523/JNEUROSCI.2875-08.2008.

- Bruce C, Desimone R, Gross C. Both striate cortex and superior colliculus contribute to visual properties of neurons in superior temporal polysensory area of macaque monkey. J Neurophysiol. 1986;55:1057–1075. doi: 10.1152/jn.1986.55.5.1057.

- Budinger E, Heil P, Hess A, Scheich H. Multisensory processing via early cortical stages: connections of the primary auditory cortical field with other sensory systems. Neuroscience. 2006;143:1065–1083. doi: 10.1016/j.neuroscience.2006.08.035.

- Burock M, Dale A. Estimation and detection of event-related fMRI signals with temporally correlated noise: a statistically efficient and unbiased approach. Hum Brain Mapp. 2000;11:249–260. doi: 10.1002/1097-0193(200012)11:4<249::AID-HBM20>3.0.CO;2-5.

- Cappe C, Barone P. Heteromodal connections supporting multisensory integration at low levels of cortical processing in the monkey. Eur J Neurosci. 2005;22:2886–2902. doi: 10.1111/j.1460-9568.2005.04462.x.

- Cappe C, Morel A, Barone P, Rouiller E. The thalamocortical projection systems in primate: an anatomical support for multisensory and sensorimotor interplay. Cereb Cortex. 2009;19:2025–2037. doi: 10.1093/cercor/bhn228.

- Celesia G. Organization of auditory cortical areas in man. Brain. 1976;99:403–414. doi: 10.1093/brain/99.3.403.

- Clavagnier S, Falchier A, Kennedy H. Long-distance feedback projections to area V1: implications for multisensory integration, spatial awareness, and visual consciousness. Cogn Affect Behav Neurosci. 2004;4:117–126. doi: 10.3758/cabn.4.2.117.

- Cohen D, Schlapfer U, Ahlfors S, Hämäläinen M, Halgren E. New six-layer magnetically-shielded room for MEG. In: Nowak H, Haueisen J, Giessler F, Huonker R, editors. 13th International Conference on Biomagnetism. VDE Verlag; Jena, Germany: 2002. pp. 919–921.

- Collins C, Lyon D, Kaas J. Distribution across cortical areas of neurons projecting to the superior colliculus in new world monkeys. Anat Rec A Discov Mol Cell Evol Biol. 2005;285:619–627. doi: 10.1002/ar.a.20207.

- Cox R, Jesmanowicz A. Real-time 3D image registration for functional MRI. Magn Reson Med. 1999;42:1014–1018. doi: 10.1002/(sici)1522-2594(199912)42:6<1014::aid-mrm4>3.0.co;2-f.

- Cusick C. The superior temporal polysensory region in monkeys. In: Rockland K, Kaas J, Peters A, editors. Cerebral Cortex Volume 12: Extrastriate Cortex in Primates. Plenum Press; New York: 1997. pp. 435–468.

- Dale A. Optimal experimental design for event-related fMRI. Hum Brain Mapp. 1999;8:109–114. doi: 10.1002/(SICI)1097-0193(1999)8:2/3<109::AID-HBM7>3.0.CO;2-W.

- Dale A, Liu A, Fischl B, Buckner R, Belliveau J, Lewine J, Halgren E. Dynamic statistical parametric mapping: Combining fMRI and MEG for high-resolution imaging of cortical activity. Neuron. 2000;26:55–67. doi: 10.1016/s0896-6273(00)81138-1.

- Dale A, Sereno M. Improved localization of cortical activity by combining EEG and MEG with MRI cortical surface reconstruction: A linear approach. J Cogn Neurosci. 1993;5:162–176. doi: 10.1162/jocn.1993.5.2.162.

- de la Mothe L, Blumell S, Kajikawa Y, Hackett T. Cortical connections of the auditory cortex in marmoset monkeys: Core and medial belt regions. J Comp Neurol. 2006a;496:27–71. doi: 10.1002/cne.20923.

- de la Mothe L, Blumell S, Kajikawa Y, Hackett T. Thalamic connections of the auditory cortex in marmoset monkeys: Core and medial belt regions. J Comp Neurol. 2006b;496:72–96. doi: 10.1002/cne.20924.

- Desikan R, Segonne F, Fischl B, Quinn B, Dickerson B, Blacker D, Buckner R, Dale A, Maguire R, Hyman B, Albert M, Killiany R. An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. Neuroimage. 2006;31:968–980. doi: 10.1016/j.neuroimage.2006.01.021.

- Falchier A, Clavagnier S, Barone P, Kennedy H. Anatomical evidence of multimodal integration in primate striate cortex. J Neurosci. 2002;22:5749–5759. doi: 10.1523/JNEUROSCI.22-13-05749.2002.

- Fischl B, Salat D, Busa E, Albert M, Dieterich M, Haselgrove C, van der Kouwe A, Killiany R, Kennedy D, Klaveness S, Montillo A, Makris N, Rosen B, Dale A. Whole brain segmentation: automated labeling of neuroanatomical structures in the human brain. Neuron. 2002;33:341–355. doi: 10.1016/s0896-6273(02)00569-x.

- Fischl B, Sereno M, Tootell R, Dale A. High-resolution inter-subject averaging and a coordinate system for the cortical surface. Hum Brain Mapp. 1999;8:272–284. doi: 10.1002/(SICI)1097-0193(1999)8:4<272::AID-HBM10>3.0.CO;2-4.

- Fischl B, van der Kouwe A, Destrieux C, Halgren E, Segonne F, Salat D, Busa E, Seidman L, Goldstein J, Kennedy D, Caviness V, Makris N, Rosen B, Dale A. Automatically parcellating the human cerebral cortex. Cereb Cortex. 2004;14:11–22. doi: 10.1093/cercor/bhg087.

- Foxe J, Morocz I, Murray M, Higgins B, Javitt D, Schroeder C. Multisensory auditory-somatosensory interactions in early cortical processing revealed by high-density electrical mapping. Brain Res Cogn Brain Res. 2000;10:77–83. doi: 10.1016/s0926-6410(00)00024-0.

- Foxe J, Schroeder C. The case for feedforward multisensory convergence during early cortical processing. Neuroreport. 2005;16:419–423. doi: 10.1097/00001756-200504040-00001.

- Foxe J, Simpson G. Flow of activation from V1 to frontal cortex in humans. A framework for defining "early" visual processing. Exp Brain Res. 2002;142:139–150. doi: 10.1007/s00221-001-0906-7.

- Ghazanfar A, Schroeder C. Is neocortex essentially multisensory? Trends Cogn Sci. 2006;10:278–285. doi: 10.1016/j.tics.2006.04.008.

- Giard M, Peronnet F. Auditory-visual integration during multimodal object recognition in humans: a behavioral and electrophysiological study. J Cogn Neurosci. 1999;11:473–490. doi: 10.1162/089892999563544.

- Goldenholz D, Ahlfors S, Hämäläinen M, Sharon D, Ishitobi M, Vaina L, Stufflebeam S. Mapping the signal-to-noise-ratios of cortical sources in magnetoencephalography and electroencephalography. Hum Brain Mapp. 2009;30:1077–1086. doi: 10.1002/hbm.20571.

- Groh J, Trause A, Underhill A, Clark K, Inati S. Eye position influences auditory responses in primate inferior colliculus. Neuron. 2001;29:509–518. doi: 10.1016/s0896-6273(01)00222-7.

- Gross C. Contribution of striate cortex and the superior colliculus to visual function in area MT, the superior temporal polysensory area and the inferior temporal cortex. Neuropsychologia. 1991;29:497–515. doi: 10.1016/0028-3932(91)90007-u.

- Hackett T, De La Mothe L, Ulbert I, Karmos G, Smiley J, Schroeder C. Multisensory convergence in auditory cortex, II. Thalamocortical connections of the caudal superior temporal plane. J Comp Neurol. 2007;502:924–952. doi: 10.1002/cne.21326.

- Hämäläinen M, Hari R. Magnetoencephalographic characterization of dynamic brain activation. Basic principles and methods of data collection and source analysis. In: Toga AW, Mazziotta JC, editors. Brain Mapping: The Methods. Academic Press; New York: 2002. pp. 227–253.

- Hämäläinen M, Ilmoniemi R. Interpreting measured magnetic fields of the brain: estimates of current distributions. Helsinki University of Technology; Helsinki, Finland: 1984.

- Hämäläinen M, Ilmoniemi R. Interpreting magnetic fields of the brain: minimum norm estimates. Med Biol Eng Comput. 1994;32:35–42. doi: 10.1007/BF02512476.

- Hämäläinen M, Sarvas J. Realistic conductivity geometry model of the human head for interpretation of neuromagnetic data. IEEE Trans Biomed Eng. 1989;36:165–171. doi: 10.1109/10.16463.

- Hillebrand A, Barnes G. A quantitative assessment of the sensitivity of whole-head MEG to activity in the adult human cortex. Neuroimage. 2002;16:638–650. doi: 10.1006/nimg.2002.1102.

- Jiang W, Stein B. Cortex controls multisensory depression in superior colliculus. J Neurophysiol. 2003;90:2132–2135. doi: 10.1152/jn.00369.2003.

- Karns C, Knight R. Intermodal auditory, visual, and tactile attention modulates early stages of neural processing. J Cogn Neurosci. 2009;21:669–683. doi: 10.1162/jocn.2009.21037.

- Laurienti P, Burdette J, Wallace M, Yen Y, Field A, Stein B. Deactivation of sensory-specific cortex by cross-modal stimuli. J Cogn Neurosci. 2002;14:420–429. doi: 10.1162/089892902317361930.

- Lin F, Hara K, Solo V, Vangel M, Belliveau J, Stufflebeam S, Hämäläinen M. Dynamic Granger-Geweke causality modeling with application to interictal spike propagation. Hum Brain Mapp. 2009;30:1877–1886. doi: 10.1002/hbm.20772.

- Liu A, Belliveau J, Dale A. Spatiotemporal imaging of human brain activity using functional MRI constrained magnetoencephalography data: Monte Carlo simulations. Proc Natl Acad Sci U S A. 1998;95:8945–8950. doi: 10.1073/pnas.95.15.8945.

- Macaluso E. Multisensory processing in sensory-specific cortical areas. Neuroscientist. 2006;12:327–338. doi: 10.1177/1073858406287908.

- Macaluso E, Driver J. Multisensory spatial interactions: a window onto functional integration in the human brain. Trends Neurosci. 2005;28:264–271. doi: 10.1016/j.tins.2005.03.008.

- Martuzzi R, Murray M, Maeder P, Fornari E, Thiran J, Clarke S, Michel C, Meuli R. Visuo-motor pathways in humans revealed by event-related fMRI. Exp Brain Res. 2006;170:472–487. doi: 10.1007/s00221-005-0232-6.

- Martuzzi R, Murray M, Michel C, Thiran JP, Maeder P, Clarke S, Meuli R. Multisensory interactions within human primary cortices revealed by BOLD dynamics. Cereb Cortex. 2007;17:1672–1679. doi: 10.1093/cercor/bhl077.

- Mesulam MM. From sensation to cognition. Brain. 1998;121:1013–1052. doi: 10.1093/brain/121.6.1013.

- Miller L, D’Esposito M. Perceptual fusion and stimulus coincidence in the cross-modal integration of speech. J Neurosci. 2005;25:5884–5893. doi: 10.1523/JNEUROSCI.0896-05.2005.

- Mishra J, Martinez A, Sejnowski T, Hillyard S. Early cross-modal interactions in auditory and visual cortex underlie a sound-induced visual illusion. J Neurosci. 2007;27:4120–4131. doi: 10.1523/JNEUROSCI.4912-06.2007.

- Molholm S, Foxe J. Look 'hear', primary auditory cortex is active during lip-reading. Neuroreport. 2005;16:123–124. doi: 10.1097/00001756-200502080-00009.

- Molholm S, Ritter W, Javitt D, Foxe J. Multisensory visual-auditory object recognition in humans: a high-density electrical mapping study. Cereb Cortex. 2004;14:452–465. doi: 10.1093/cercor/bhh007.

- Molholm S, Ritter W, Murray M, Javitt D, Schroeder C, Foxe J. Multisensory auditory-visual interactions during early sensory processing in humans: a high-density electrical mapping study. Brain Res Cogn Brain Res. 2002;14:115–128. doi: 10.1016/s0926-6410(02)00066-6.

- Murray M, Molholm S, Michel C, Heslenfeld D, Ritter W, Javitt D, Schroeder C, Foxe J. Grabbing your ear: rapid auditory-somatosensory multisensory interactions in low-level sensory cortices are not constrained by stimulus alignment. Cereb Cortex. 2005;15:963–974. doi: 10.1093/cercor/bhh197.

- Musacchia G, Sams M, Nicol T, Kraus N. Seeing speech affects acoustic information processing in the human brainstem. Exp Brain Res. 2006;168:1–10. doi: 10.1007/s00221-005-0071-5.

- Musacchia G, Schroeder C. Neuronal mechanisms, response dynamics and perceptual functions of multisensory interactions in auditory cortex. Hear Res. 2009;258:72–79. doi: 10.1016/j.heares.2009.06.018.

- Parkkonen L, Fujiki N, Mäkelä J. Sources of auditory brainstem responses revisited: contribution by magnetoencephalography. Hum Brain Mapp. 2009;30:1772–1782. doi: 10.1002/hbm.20788.

- Pekkola J, Ojanen V, Autti T, Jaaskelainen I, Mottonen R, Tarkiainen A, Sams M. Primary auditory cortex activation by visual speech: an fMRI study at 3 T. Neuroreport. 2005;16:125–128. doi: 10.1097/00001756-200502080-00010.

- Poghosyan V, Ioannides A. Attention modulates earliest responses in the primary auditory and visual cortices. Neuron. 2008;58:802–813. doi: 10.1016/j.neuron.2008.04.013.

- Raij T, Karhu J, Kičić D, Lioumis P, Julkunen P, Lin F, Ahveninen J, Ilmoniemi R, Mäkelä J, Hämäläinen M, Rosen B, Belliveau J. Parallel input makes the brain run faster. Neuroimage. 2008;40:1792–1797. doi: 10.1016/j.neuroimage.2008.01.055.

- Raij T, Uutela K, Hari R. Audiovisual integration of letters in the human brain. Neuron. 2000;28:617–625. doi: 10.1016/s0896-6273(00)00138-0.

- Rockland K, Ojima H. Multisensory convergence in calcarine visual areas in macaque monkey. Int J Psychophysiol. 2003;50:19–26. doi: 10.1016/s0167-8760(03)00121-1.

- Rockland K, Van Hoesen G. Direct temporal-occipital feedback connections to striate cortex (V1) in the macaque monkey. Cereb Cortex. 1994;4:300–313. doi: 10.1093/cercor/4.3.300.

- Schoffelen J, Gross J. Source connectivity analysis with MEG and EEG. Hum Brain Mapp. 2009;30:1857–1865. doi: 10.1002/hbm.20745.

- Schroeder C, Foxe J. The timing and laminar profile of converging inputs to multisensory areas of the macaque neocortex. Brain Res Cogn Brain Res. 2002;14:187–198. doi: 10.1016/s0926-6410(02)00073-3.

- Schroeder C, Foxe J. Multisensory contributions to low-level, 'unisensory' processing. Curr Opin Neurobiol. 2005;15:454–458. doi: 10.1016/j.conb.2005.06.008.

- Schroeder C, Smiley J, Fu K, McGinnis T, O'Connell M, Hackett T. Anatomical mechanisms and functional implications of multisensory convergence in early cortical processing. Int J Psychophysiol. 2003;50:5–17. doi: 10.1016/s0167-8760(03)00120-x.

- Schroeder CE, Lindsley RW, Specht C, Marcovici A, Smiley JF, Javitt DC. Somatosensory input to auditory association cortex in the macaque monkey. J Neurophysiol. 2001;85:1322–1327. doi: 10.1152/jn.2001.85.3.1322.

- Shams L, Iwaki S, Chawla A, Bhattacharya J. Early modulation of visual cortex by sound: an MEG study. Neurosci Lett. 2005;378:76–81. doi: 10.1016/j.neulet.2004.12.035.

- Shams L, Kamitani Y, Shimojo S. Visual illusion induced by sound. Brain Res Cogn Brain Res. 2002;14:147–152. doi: 10.1016/s0926-6410(02)00069-1.

- Smiley J, Falchier A. Multisensory connections of monkey auditory cerebral cortex. Hear Res. 2009;258:37–46. doi: 10.1016/j.heares.2009.06.019.

- Smiley J, Hackett T, Ulbert I, Karmos G, Lakatos P, Javitt D, Schroeder C. Multisensory convergence in auditory cortex, I. Cortical connections of the caudal superior temporal plane in macaque monkeys. J Comp Neurol. 2007;502:894–923. doi: 10.1002/cne.21325.

- Sperdin H, Cappe C, Foxe J, Murray M. Early, low-level auditory-somatosensory multisensory interactions impact reaction time speed. Front Integr Neurosci. 2009;3:2. doi: 10.3389/neuro.07.002.2009.

- Stein B, Meredith M. The Merging of the Senses. MIT Press; Cambridge MA: 1993.

- Stein B, Wallace M, Stanford T, Jiang W. Cortex governs multisensory integration in the midbrain. Neuroscientist. 2002;8:306–314. doi: 10.1177/107385840200800406.

- Talsma D, Doty T, Woldorff M. Selective attention and audiovisual integration: is attending to both modalities a prerequisite for early integration? Cereb Cortex. 2007;17:679–690. doi: 10.1093/cercor/bhk016.

- Talsma D, Woldorff M. Selective attention and multisensory integration: multiple phases of effects on the evoked brain activity. J Cogn Neurosci. 2005;17:1098–1114. doi: 10.1162/0898929054475172.

- Teder-Sälejärvi W, McDonald J, Di Russo F, Hillyard S. An analysis of audio-visual crossmodal integration by means of event-related potential (ERP) recordings. Brain Res Cogn Brain Res. 2002;14:106–114. doi: 10.1016/s0926-6410(02)00065-4.

- Wang Y, Celebrini S, Trotter Y, Barone P. Visuo-auditory interactions in the primary visual cortex of the behaving monkey: electrophysiological evidence. BMC Neurosci. 2008;9:79. doi: 10.1186/1471-2202-9-79.

- Watkins S, Shams L, Tanaka S, Haynes J, Rees G. Sound alters activity in human V1 in association with illusory visual perception. Neuroimage. 2006;31:1247–1256. doi: 10.1016/j.neuroimage.2006.01.016.
