Abstract

Controversy surrounds the proposal that specific human cortical regions in the ventral occipitotemporal cortex, commonly called the fusiform face area (FFA) and occipital face area (OFA), are specialized for face processing. Here, we present findings from an fMRI study of identity discrimination of faces and objects that demonstrates the FFA and OFA are equally responsive to processing stimuli at the level of individuals (i.e., individuation), be they human faces or non-face objects. The FFA and OFA were defined via a passive viewing task as regions that produced greater activation to faces relative to non-face stimuli within the middle fusiform gyrus and inferior occipital gyrus. In the individuation task, participants judged whether sequentially presented images of faces, diverse objects, or wristwatches depicted the identical or a different exemplar. All three stimulus types produced equivalent BOLD activation within the FFA and OFA; that is, there was no face-specific or face-preferential processing. Critically, individuation processing did not eliminate an object superiority effect relative to faces within a region more closely linked to object processing in the lateral occipital complex (LOC), suggesting that individuation processes are reasonably specific to the FFA and OFA. Taken together, these findings challenge the prevailing view that the FFA and OFA are face-specific processing regions, demonstrating instead that they function to individuate – i.e., identify specific individuals – within a category. These findings have significant implications for understanding the function of brain regions widely believed to play an important role in social cognition.

Keywords: fusiform face area, face processing, functional MRI, visual processing, occipital face area

Introduction

As a key element in social cognition, visual face processing receives extensive study within cognitive neuroscience. This research has led to considerable debate about the function of two particular cortical regions located in the ventral occipitotemporal lobe (VOT), the so-called fusiform face area (FFA; Kanwisher et al., 1997) and the occipital face area (OFA; Clark et al., 1996; Gauthier et al., 2000; Haxby et al., 2000), although most of the debate has focused on the role of the FFA (Kanwisher and Yovel, 2006). There are two primary threads in this debate. One asks whether brain activity to stimulus input reflects a distributed representation of features (Haxby et al., 2001; Hanson et al., 2004; O’Toole et al., 2005) or a modular, category specific representation (Kanwisher et al., 1997; Yovel and Kanwisher, 2004; Kanwisher and Yovel, 2006). A second asks whether brain areas respond to specific stimulus inputs (Kanwisher et al., 1997; Haxby et al., 2001; Hanson et al., 2004; Yovel and Kanwisher, 2004; O’Toole et al., 2005; Kanwisher and Yovel, 2006) or to processing demands and operations (Tarr and Gauthier, 2000). Here, we present findings relevant to this second line of debate demonstrating that the FFA and OFA respond to a specific type of visual processing operation and thus are not just face-specific processing regions.

Neuromodular accounts posit that biologically dedicated regions of cortex respond selectively to specific categories of input such as faces, objects, or spatial locations (Kanwisher et al., 1997; Yovel and Kanwisher, 2004; Kanwisher and Yovel, 2006). Functional magnetic resonance imaging (fMRI) studies provide support for this hypothesis with numerous demonstrations that faces produce greater brain activations compared to other categories of visual stimuli in both the FFA and OFA, particularly when observers view the stimuli passively or covertly (Puce et al., 1995; Kanwisher et al., 1997; Aguirre et al., 1998; Downing et al., 2001). In contrast, studies of patients with severe deficits specific to facial recognition, known as prosopagnosia, challenge the proposal that face selectivity in the FFA is necessary for overt face recognition. Specifically, patients with prosopagnosia with lesions that spare the middle fusiform gyrus can produce normal FFA regions in terms of intensity of blood oxygenation level-dependent (BOLD) signal and extent of activation (Hadjikhani and Gelder, 2002; Rossion et al., 2003; Avidan et al., 2005; Steeves et al., 2006, 2009).

Alternative hypotheses challenge the neuromodular view of VOT organization (Gauthier et al., 2000). The process accounts propose that it is the demands of visual processing, rather than stimulus properties, that drive activity within the FFA and OFA. Studies focusing on the level of categorical recognition have offered some support for this hypothesis. One specific processing account suggests that FFA activation is associated with subordinate-level processing in general and processing at the individual, identity level in particular (Gauthier et al., 2000; Tarr and Gauthier, 2000). Individuation or identity processing, where category membership is limited to a single exemplar, is considered the ultimate example of subordinate-level processing (Gauthier et al., 2000).

Behavioral studies have in fact shown that adults tend to use the individual level as the entry point to face processing (Tanaka, 2001). In other words, for adults, processing a face at the individual level is the default mode and seems to be at least as efficient as, if not more efficient than, processing that face at the basic level. By contrast, the entry point for nearly all other object classes (e.g., birds, chairs) is at the basic level. Explicit tests of this individuation hypothesis by a small number of studies have yielded inconclusive results. For example, Mason and Macrae (2004) found greater activation for individuating faces compared to face categorization in right-hemisphere brain areas. George et al. (1999) observed that individuating faces produced greater fusiform gyrus activation than detecting faces within a contrast-polarity reversal task, consistent with the individuation hypothesis. Neither of these studies, however, included a condition that required participants to process non-face stimuli at the individual level. Such results are thus inconclusive with regard to whether an activation advantage for individuation over detection is face-specific or a more general phenomenon.

Gauthier et al. trained participants to be experts in identifying highly homogeneous, novel stimuli (Greebles) and showed greater activation in the FFA relative to non-experts when individuating those stimuli (Gauthier and Tarr, 2002). This finding provides the strongest evidence to date that enhanced FFA activation may not be face-specific. However, some researchers have argued that these results do not necessarily pose a true challenge to neuromodular stimulus-specific accounts because the Greeble stimuli contain features that make them very face-like (Kanwisher and Yovel, 2006). In addition, proponents of the neuromodular theory also have pointed out that although individuation of non-face stimuli induces enhanced FFA activation (Gauthier et al., 2000), the magnitude of this activation does not reach the level observed for faces (Kanwisher et al., 1997, 1998; McCarthy et al., 1997; Grill-Spector et al., 2004; Rhodes et al., 2004). Moreover, Kanwisher et al. found that while non-face objects did produce FFA activation during an individuation task, the greatest activation was still produced by faces (Grill-Spector et al., 2004; Yovel and Kanwisher, 2004; Kanwisher and Yovel, 2006).

Rhodes et al. (2004) addressed the individuation hypothesis by contrasting human face processing to the processing of individual Lepidoptera (i.e., moths and butterflies). They found that faces produced significantly greater activation in the FFA than Lepidoptera. However, behavioral performance for the Lepidoptera was substantially lower than that for faces in both Lepidoptera non-experts (66.5% versus 90.5%, respectively) and experts (71.6% for Lepidoptera), raising the possibility that the activation differences arose from differences in task difficulty rather than the nature of the stimuli per se. Finally, it is difficult to evaluate the individuation hypothesis from the results of the existing studies because individuation was co-varied with the effects of expertise (Gauthier et al., 1997, 2000) or manipulations of configural and featural processing (Yovel and Kanwisher, 2004). Nevertheless, these studies converge on the fact that while reliable FFA activation can be obtained from non-face stimuli, this activity is invariably quantitatively lower than activation for faces. This fact sustains the proposal that the FFA is specialized for face processing (Kanwisher and Yovel, 2006).

The present study was designed to directly test the specific effects of individuation on FFA activation during face and non-face object processing with the inclusion of controls that we consider crucial for testing the hypothesis. First, following the established convention in the field, we used a passive viewing localizer task to identify putative face- and object-selective regions in the VOT. Second, we asked participants to perform an individuation task in which they judged whether a pair of sequentially presented stimuli depicted the same stimulus or different ones. On different trials the pictures were of two different stimuli from the same category. On same trials the two pictures showed slightly different views of the same face, wristwatch, or other common object. This manipulation ensured that participants were specifically engaged in individuation, and not merely picture matching – a concern that most previous studies failed to address. Also, unlike most prior studies, all stimuli were matched in terms of spectral power, contrast, and brightness to control for possible undue influences of non-critical physical characteristics on face processing. More importantly, because faces are highly homogeneous, we used sets of similarly homogeneous common objects. One set comprised wristwatches that somewhat resembled faces (e.g., circular contours, consistent features contained within the outer contour, hour- and minute-arm configuration, or digital number arrangements). Another set was a collection of diverse objects (e.g., chairs, cell phones). Although diverse objects were used, on any given trial participants saw a pair of objects from the same homogeneous object category (e.g., a cell phone versus the same cell phone from a different view or a different cell phone) and were asked to determine whether they were of the same identity.

We hypothesized that if the FFA and OFA are indeed face-specific, then faces should lead to greater activation compared to objects in both of these areas during this individuation task. However, if the areas are not face-specific but rather are engaged specifically by individuation processing, then FFA and OFA activation to faces and non-face objects should be indistinguishable.

Materials and Methods

Participants

We tested 17 healthy young adults. Data from two participants were eliminated from the final analyses because one participant had uncorrectable fMRI artifact in one of the individuation tasks, and one participant did not produce a reliable FFA and a lateral occipital complex (LOC) in the localizer task. The final test sample was thus comprised of 15 participants (six females) with a mean age of 24.8 years (SD = 5.7). All participants were right-handed and had normal or corrected-to-normal vision, with no history of psychiatric or neurological disease, significant head trauma, substance abuse, or other known condition that may negatively impact brain function. The Human Research Protections Program of the University of California, San Diego approved this study. Participants provided informed consent prior to the study and were paid for participation.

Stimuli

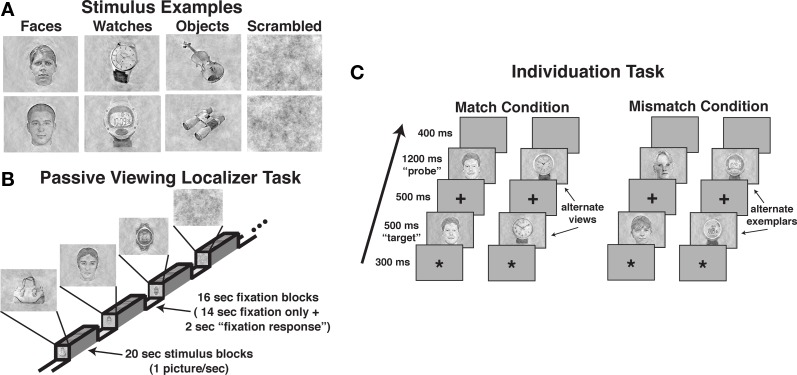

The tasks used images of male and female faces, diverse objects, digital and analog wristwatches, and scrambled stimuli. Intact stimuli were obtained from digital pictures or digitized from photographs, and scrambled stimuli were created by randomly arranging 97% of the pixels within face, watch, and object images. All images were balanced for spectral power, contrast, and brightness to equate lower-level perceptual information using a customized Matlab program (see Figure 1A). Non-identical pictures of faces, watches, and objects were used in the matching “same” trials of the individuation task (i.e., “alternate views”). These “same” pairs presented the same stimulus with modest angle and lighting changes, and in the case of faces, slight variations in neutral facial expressions (see Figure 1C). Face stimuli (half male and half female) for the individuation task were obtained from the face database of the Psychological Image Collection at Stirling (PICS1). Watch stimuli (half analog and half digital) were obtained from various sources on the Internet with all watches set to the same time (i.e., 10:09). Diverse object stimuli were comprised of stimuli selected from 40 different basic-level categories (e.g., baskets, apples, balls, whistles, vases, cameras, umbrellas, jackets, books, lamps, etc.), and were obtained from various sources on the Internet (i.e., retail store sites such as amazon.com) or photographed in the lab. Alternate view stimuli for the individuation task were all obtained from objects photographed in the lab.
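The scrambling step described above can be illustrated with a minimal sketch. It assumes the image is a flattened list of grayscale values and reads "randomly arranging 97% of the pixels" as permuting the values at a randomly chosen 97% of positions. The function name `scramble_pixels` and the `seed` argument are hypothetical, and the authors' customized Matlab program may have worked differently.

```python
import random

def scramble_pixels(pixels, fraction=0.97, seed=None):
    """Return a copy of `pixels` with `fraction` of the positions randomly rearranged.

    Assumption: `pixels` is a flat list of intensity values; the remaining
    positions keep their original values.
    """
    rng = random.Random(seed)
    n = len(pixels)
    k = round(fraction * n)
    chosen = rng.sample(range(n), k)        # positions whose values get rearranged
    values = [pixels[i] for i in chosen]
    rng.shuffle(values)                     # permute values among the chosen positions
    out = list(pixels)
    for pos, val in zip(chosen, values):
        out[pos] = val
    return out

# Hypothetical 10 x 10 "image" flattened to 100 values.
image = list(range(100))
scrambled = scramble_pixels(image, seed=0)
```

Because the values are only rearranged, the scrambled image keeps the same set of intensities (and therefore the same mean brightness) as the intact image.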

Figure 1.

Examples of stimuli and the task design used in the three tasks. (A) Examples of perceptually balanced stimuli used in the three tasks. Note that all watches were set to the same time. (B) The passive viewing localizer task used a blocked design format. (C) The design of match and mismatch trials in the individuation task. Alternate views of the same exemplar were used in the match trials (i.e., perceptually non-identical stimuli), whereas different exemplars from the same class (i.e., sex for faces, type for watches) were used in the mismatch trials.

General task design and procedure

The experiment consisted of two tasks conducted during fMRI BOLD acquisition. Participants always received the passive viewing localizer task followed by the individuation task.

Passive viewing localizer task

A blocked fMRI design was used to present images of male and female faces (intermixed), diverse objects, analog and digital watches (intermixed), and scrambled stimuli. Each participant was presented two task runs using unique stimuli in each run (see Figure 1B). Each run included eight 20-s stimulus blocks (two for each stimulus category), interleaved with 16-s fixation epochs (crosshair stimulus only), with an additional 8-s fixation block at the beginning of each run. Within each stimulus block, 20 unique images were presented. Stimuli were presented for 300 ms followed by a 700-ms fixation interval. Participants were instructed to simply view the pictures during presentation. To ensure adequate attention during the task, participants were instructed to press a button each time the fixation cross presented during the fixation period changed from a standard typeface black cross to a red bold typeface cross. This change occurred within each fixation period 2 s before the beginning of the stimulus presentation. Potential effects of this fixation period response were removed during the regression analysis. Each localizer run lasted 4:56, during which 148 fMRI volumes were obtained (TR = 2000 ms).
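The run length and volume count follow directly from the block timings. The sketch below reproduces that arithmetic, assuming that each of the eight stimulus blocks is paired with one 16-s fixation epoch. The constant and function names are illustrative, not taken from the study's software.

```python
# Timing constants taken from the task description (all in milliseconds).
TR_MS = 2000
INITIAL_FIXATION_MS = 8_000
BLOCK_MS = 20_000
FIXATION_EPOCH_MS = 16_000
N_BLOCKS = 8
IMAGES_PER_BLOCK = 20
IMAGE_MS, GAP_MS = 300, 700

def localizer_run_ms():
    # Assumption: every stimulus block is paired with one 16-s fixation epoch.
    return INITIAL_FIXATION_MS + N_BLOCKS * (BLOCK_MS + FIXATION_EPOCH_MS)

def as_min_sec(ms):
    minutes, seconds = divmod(ms // 1000, 60)
    return f"{minutes}:{seconds:02d}"

# 20 images x (300 ms image + 700 ms fixation) fills one 20-s block.
assert IMAGES_PER_BLOCK * (IMAGE_MS + GAP_MS) == BLOCK_MS

run_ms = localizer_run_ms()
print(as_min_sec(run_ms), run_ms // TR_MS)  # 4:56 148
```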

Individuation task

The individuation task required participants to judge whether pairs of images of faces, watches, objects, and scrambled stimuli were of the same identity (see Figure 1C). The task was administered in three experimental runs, one each for faces, watches, and objects. Scrambled stimuli were presented in each of the three task runs. A single task run consisted of 120 trials, including 48 intact-stimulus trials (24 match and 24 mismatch), 32 scrambled stimulus trials, and 40 fixation-only “null” trials. Individual trials were 2900 ms in duration, including the presentation of an asterisk for 300 ms to signal the start of a trial followed by a 200 ms blank screen, the presentation of the first stimulus (i.e., “target” stimulus) for 500 ms, a 300 ms fixation cross, the presentation of the second stimulus (i.e., “probe” stimulus) for 1200 ms, followed by a 400 ms blank screen. Participants were instructed to press one of two buttons to indicate whether the probe stimulus was identical to the target stimulus shown in an alternate view (i.e., match) or was a different stimulus (i.e., mismatch). Half of the trials were match trials, and half were mismatch trials. In each run, 12 unique stimuli together with 12 alternate views of the stimuli were used (24 total pictures). In match trials, the original and alternate view of a stimulus were each presented as the target and as the probe stimulus in two of the trials. We used alternate views of the same stimuli to prevent participants from using picture-matching strategies rather than individuation.

In the mismatch trials, the original and alternate view stimuli were paired with similar but different stimuli. For example, in the face runs, female faces were paired with different female faces and male faces were paired with different male faces; in the watch runs, digital watches were paired with different digital watches and analog watches were paired with different analog watches; in the diverse object runs, cell phones were paired with different cell phones and chairs were paired with different chairs. The stimuli were paired with unique stimuli across trials (i.e., no pairs of mismatch stimuli were repeated). In addition, eight unique scrambled stimuli were used in each run to create 16 match and 16 mismatch trials. Each run lasted 5:48, during which 120 fMRI volumes were obtained (TR = 2900 ms). The order of face, watch, and object test runs was counterbalanced across participants.
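As a consistency check on the individuation design, the trial duration, trial count, and run length can be recomputed from the reported event timings. The dictionary keys below are labels chosen for this sketch. The numbers come from the text.

```python
# Event durations within one trial, in milliseconds (from the task description).
TRIAL_EVENTS_MS = {
    "asterisk": 300,
    "blank_before_target": 200,
    "target": 500,
    "fixation_cross": 300,
    "probe": 1200,
    "blank_after_probe": 400,
}

# Trials per run; the key names are illustrative labels, not the authors' terms.
TRIAL_COUNTS = {"intact": 48, "scrambled": 32, "null": 40}

trial_ms = sum(TRIAL_EVENTS_MS.values())   # one trial spans one TR
n_trials = sum(TRIAL_COUNTS.values())      # one fMRI volume per trial
run_ms = trial_ms * n_trials

minutes, seconds = divmod(run_ms // 1000, 60)
print(trial_ms, n_trials, f"{minutes}:{seconds:02d}")  # 2900 120 5:48
```

Because the trial duration equals the TR, each trial corresponds to exactly one acquired volume, which is why 120 trials yield 120 volumes.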

Image acquisition

Imaging data were obtained at the University of California, San Diego Center for Functional Magnetic Resonance Imaging using a short-bore 3.0-tesla General Electric Signa EXCITE MR scanner (Waukesha, WI, USA) equipped with a parallel-imaging capable GE eight-channel head coil. FMRI data were acquired using a single-shot gradient-recalled echo-planar imaging sequence with BOLD contrast (31 slices; 4-mm slab; TR = 2000 or 2900 ms; TE = 36 ms; flip angle = 90°; FOV = 240 mm; matrix = 64 × 64; in-plane resolution = 3.75 × 3.75 mm). Two 2D FLASH sequences were collected to estimate magnetic field maps and were used in post-processing to correct for geometric distortions. A high-resolution Fast SPGR scan was acquired for anatomical localization (sagittal acquisition; TR = 8.0 ms; TE = 3.1 ms; TI = 450 ms; NEX = 1; flip angle = 12°; FOV = 250 mm; acquisition matrix = 256 × 192; 172 slices; slice thickness = 1 mm; resolution = 0.98 × 0.98 × 1 mm).

Data analysis

FMRI preprocessing and analyses were performed using the Analysis of Functional NeuroImages (AFNI2; Cox and Hyde, 1997) and FSL3 (Smith et al., 2004) packages. The fMRI BOLD image sequences were corrected for geometric distortion prior to the analyses with a customized script based on the FSL FUGUE program. Motion correction, slice time correction, and three-dimensional registration were done with an automated alignment program that co-registered each volume in the time series to the middle volume of the task run (Cox and Jesmanowicz, 1999). The images in each run were registered into standardized MNI/Talairach space, resampled to 27 mm³ voxels (3 × 3 × 3 mm), and smoothed spatially to a fixed level of FWHM = 8 mm throughout the brain (Friedman et al., 2006).

fMRI analyses of individual participants

The fMRI data from individual participants were analyzed using a deconvolution approach (AFNI 3dDeconvolve). In the blocked design passive localizer task, the hemodynamic response function (HRF) for each stimulus condition was modeled from a gamma variate function convolved with the stimulus time series (Cohen, 1997). For the event-related individuation tasks, the HRF for each stimulus was estimated from a series of seven spline basis functions (i.e., “tent functions”) that modeled the post-trial onset window from 0 to 17.4 s (i.e., the fMRI volume acquisitions that included the stimulus presentation trial and the five subsequent post-stimulus volumes). Each task was analyzed using multiple regression that included the stimulus HRF parameters together with six parameters to account for motion artifacts (three rotation and three displacement variables), and polynomial factors of no interest (i.e., linear (all tasks), quadratic (all tasks), and cubic (individuation task)). The resulting regression weights for the stimuli were converted into percent signal values based on the voxel-wise global mean activation estimated from the regression analysis.
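To make the tent-function idea concrete, the sketch below builds seven piecewise-linear basis functions with knots spaced one TR (2.9 s) apart over 0 to 17.4 s and reconstructs a response as their weighted sum. This is a simplified illustration, not AFNI's implementation. The function names and example weights are hypothetical.

```python
def tent(t, center, width):
    """Piecewise-linear 'tent': 1 at `center`, falling linearly to 0 at center +/- width."""
    return max(0.0, 1.0 - abs(t - center) / width)

def tent_basis(t, start=0.0, stop=17.4, n=7):
    """Evaluate n equally spaced tent functions at time t (seconds after trial onset)."""
    width = (stop - start) / (n - 1)        # knot spacing; 2.9 s for the defaults
    return [tent(t, start + k * width, width) for k in range(n)]

def hrf_from_weights(t, weights, start=0.0, stop=17.4):
    """Estimated response at time t: weighted sum of tents (linear interpolation between knots)."""
    basis = tent_basis(t, start, stop, n=len(weights))
    return sum(w * b for w, b in zip(weights, basis))

# Hypothetical regression weights (percent signal change) at the seven knots.
example_weights = [0.0, 0.2, 0.6, 0.5, 0.3, 0.1, 0.0]
midpoint_value = hrf_from_weights(4.35, example_weights)  # halfway between knots 1 and 2
```

One appeal of this approach is that the regression weights are themselves the estimated response amplitudes at each knot, so no fixed response shape is imposed.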

Definition of FFA, OFA, and LOC

The FFA was defined for each participant as the area within the lateral fusiform gyrus where face stimuli produced reliably greater activation than diverse objects and scrambled stimuli in the passive localizer task, a method that has proven in prior studies (Rotshtein et al., 2005, 2007a,b; Yue et al., 2006) to produce FFA ROIs consistent with other methods used to define face superior processing in the lateral fusiform region (for review, see Berman et al., 2010). Similarly, the OFA was defined as the area within the inferior occipital gyrus (BA 19) where faces produced reliably greater activation than diverse objects and scrambled stimuli. To correct against Type I errors, a cluster-threshold correction was applied that used a voxel-wise threshold of P ≤ 0.001 with a minimum cluster volume of 225 μl resulting in an effective alpha level = 0.01 (Forman et al., 1995). The LOC region of interest was defined as the areas within the inferior and middle occipital gyri where diverse object stimuli produced reliably greater activation than scrambled stimuli in the passive localizer task (Malach et al., 1995; Moore and Engel, 2001; Kourtzi et al., 2003; Haushofer et al., 2008a,b). A cluster-threshold correction was applied that used a voxel-wise threshold of P ≤ 0.0001 with a minimum cluster volume of 225 μl resulting in an effective alpha = 0.001. The locations of the FFA, OFA, and LOC regions from each participant were confirmed against their respective high-resolution anatomical scan to ensure that voxels were restricted to the FFA, OFA, and LOC, respectively.

Analysis of individuation task ROI activity

Mean activation to each stimulus type was calculated for each participant within the FFA, OFA, and LOC ROIs for each of the six acquisition volumes estimated for the HRF. The data from each of the five ROIs were submitted to separate repeated measures analyses of variance (ANOVA) with stimulus (fixed effect), HRF time sample (fixed effect), and subject (random effect) as factors. Significant ANOVA effects were investigated using Bonferroni-corrected, paired-sample t tests (i.e., post hoc analyses). Bonferroni-corrected correlation coefficients were used for the comparison of ROI activation and behavioral performance. The effective alpha for the post hoc analyses and correlational analyses following correction was 0.050. Results from male and female faces were combined because each produced comparable results, and likewise for analog and digital watches.
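For readers unfamiliar with the Bonferroni procedure, a minimal sketch follows. The size of each comparison family is not stated in the text, so the three P values below are hypothetical, and `bonferroni` is an illustrative helper rather than the analysis code used in the study.

```python
def bonferroni(p_values, alpha=0.05):
    """Bonferroni-adjust a family of P values.

    Each P value is multiplied by the number of comparisons (capped at 1.0)
    and compared against the family-wise alpha.
    """
    m = len(p_values)
    adjusted = [min(1.0, p * m) for p in p_values]
    return [(p_adj, p_adj <= alpha) for p_adj in adjusted]

# Hypothetical family of three pairwise contrasts.
for p_adj, significant in bonferroni([0.01, 0.02, 0.5]):
    print(round(p_adj, 3), significant)
```

Equivalently, one can leave the P values unadjusted and compare each against alpha divided by the number of comparisons.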

Results

Passive viewing localizer task

The behavioral task demands during the localizer were minimal and required only that the participants respond to a change in the fixation stimulus that occurred outside the presentation of the stimuli of interest. This procedure documented vigilance during the task while allowing for examination of brain activation to the stimuli of interest under passive viewing conditions. All participants correctly responded to 100% of these stimuli. We removed the brain activation effects of the fixation task manipulation via statistical regression.

The localizer task was administered to define face-selective regions within the VOT, namely, the FFA and OFA, and the object-selective regions within the LOC. Of the 16 participants who produced artifact-free fMRI data, 15 participants produced a reliable right-hemisphere FFA (rFFA), 14 produced a reliable left-hemisphere FFA (lFFA); 12 participants produced a reliable right OFA (rOFA), but only nine participants produced a reliable left OFA (lOFA); 15 participants produced a reliable right LOC (rLOC), and 14 produced a reliable left LOC (lLOC). As noted previously, one participant failed to produce an FFA or LOC and was eliminated from the sample. Due to the low number of participants producing a lOFA, this region was eliminated from the analysis. A composite depiction of the FFA, OFA, and LOC ROIs across participants is shown in Figures 2A–C, respectively, with descriptive statistics regarding the location and extent of the ROIs shown in Table 1. The location and extent of activation in the ROIs are in accordance with many previous descriptions of the FFA and LOC. Activation differences between hemispheres of homologous regions were not of interest, and due to different numbers of participants providing data in homologous regions, we analyzed the results from each of the five regions separately.

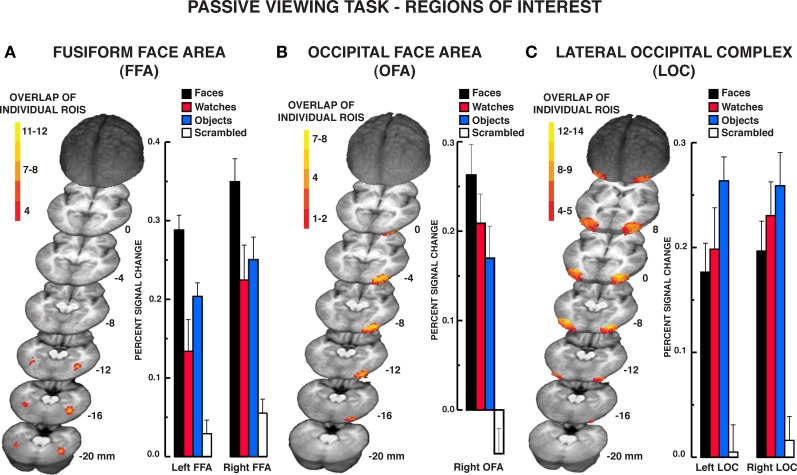

Figure 2.

Results from the passive viewing localizer task. Color-coded depiction of the location of FFA, OFA, and LOC regions of interest, respectively, from individual participants. The structural MRI underlay is the mean MRI from the 15 participants. (A) The FFA was defined as regions within the lateral fusiform gyrus producing significantly greater BOLD activation for faces relative to diverse object and scrambled stimuli. The location of the maximum overlap in the rFFA in standard Talairach coordinates (x, y, z; positive values = left, anterior, and superior, respectively) occurred at −40, −50, −16; 12 of 15 participants included this location within their rFFA. The location of the maximum overlap in the lFFA occurred at 38, −44, −16; six of 14 participants included this location within their lFFA. (B) The overlap map of individual right-hemisphere OFA ROIs. The left-hemisphere OFA is not shown because only nine participants produced a reliable left OFA. The location of the maximum overlap in the right OFA occurred at −28, −86, −10. Seven of the 12 participants with a reliable right OFA included this location within their right OFA. (C) The LOC was defined as regions within the middle and inferior occipital gyri producing significantly greater activation to diverse objects relative to scrambled stimuli. The location of the maximum overlap in the rLOC occurred at −30, −81, −4; 14 of 15 participants included this location within their rLOC. The location of the maximum overlap in the lLOC occurred at 35, −70, −7; 13 of 14 participants included this location within their lLOC. The bar graphs display the mean signal in the respective regions of interest. Error bars = SEM.

Table 1.

Characterization of FFA, OFA, and LOC regions of interest.

| Region | n | Center of cluster x | Center of cluster y | Center of cluster z | Max. intensity voxel x | Max. intensity voxel y | Max. intensity voxel z | Volume (μl) | Max. intensity difference (%) |
|---|---|---|---|---|---|---|---|---|---|
| Right FFA | 15 | −39 | −49 | −16 | −39 | −50 | −17 | 1179.0 | 0.76 |
| SD | | 2.3 | 5.0 | 2.4 | 4.1 | 7.5 | 3.4 | 825.8 | 0.33 |
| Left FFA | 14 | 37 | −47 | −15 | 37 | −46 | −17 | 754.1 | 0.59 |
| SD | | 3.5 | 6.9 | 2.6 | 4.2 | 8.4 | 3.6 | 488.4 | 0.22 |
| Right OFA | 12 | −34 | −81 | −9 | −37 | −80 | −10 | 1515.2 | 0.83 |
| SD | | 3.8 | 4.0 | 1.8 | 6.3 | 6.2 | 1.8 | 656.8 | 0.41 |
| Right LOC | 15 | −36 | −79 | −1 | −40 | −80 | −3 | 3947.1 | 0.65 |
| SD | | 2.4 | 1.9 | 3.3 | | | | 656.8 | 0.48 |
| Left LOC | 14 | 37 | −78 | −3 | 38 | −81 | −5 | 3997.9 | 0.67 |
| SD | | 3.5 | 3.9 | 3.5 | 5.3 | 8.0 | 7.9 | 2901.1 | 0.28 |

Notes: Regions of interest were derived from the passive viewing localizer task. n, number of participants producing a statistically reliable region of interest. Talairach coordinates follow the convention of the Talairach and Tournoux atlas (Talairach and Tournoux, 1988). Positive coordinate values indicate left (x), anterior (y), and superior (z). Coordinates are provided for the estimated centroid of the ROI cluster and the location of the voxel showing the greatest intensity difference within the cluster (e.g., “hot spot”). Volume: mean volume of the region of interest. Maximum intensity difference: percent signal difference of faces versus diverse objects and scrambled stimuli (FFA and OFA) or diverse objects versus scrambled stimuli (LOC) at the voxel with the greatest activation difference within the ROI. SD: standard deviation.

The mean percent BOLD signal activation across the ROI for the four stimulus classes is shown in Figure 2. As faces, objects, and scrambled stimuli were used to define the FFA and OFA, direct contrasts between these stimulus types were not warranted (Kriegeskorte et al., 2009). However, watch stimuli were withheld from use in characterizing these ROIs. The contrast of watch stimuli to faces and objects across the ROIs provides two important pieces of information. First, it establishes the reliability of face selectivity in the FFA and OFA ROIs, and it provides a reliability measure of object selectivity within the LOC ROI. Second, because the watch stimuli comprise a homogeneous class of objects relative to the diverse object stimuli, the watch contrasts allow for the evaluation of homogeneity effects under conditions of spontaneous processing during the passive viewing task. Bonferroni-corrected, paired t tests were used for the contrast of watches to the other stimuli. Within the FFA, BOLD activation to watches was significantly less than to faces bilaterally, rFFA: t14 = 3.80, P = 0.002; lFFA: t13 = 3.59, P = 0.003. However, the BOLD activation for watches and diverse objects did not differ in the FFA bilaterally, rFFA: t14 = 1.01, P = 0.329; lFFA: t13 = 1.74, P = 0.106. Within the rOFA, BOLD activation between watches and objects did not differ significantly, t11 = 1.36, P = 0.202, but there was only a trend for faces to produce greater BOLD activation than watches, t11 = 2.51, P = 0.029. This suggests that the rOFA may be sensitive to the homogeneity of stimuli during spontaneous processing.

The LOC ROIs defined object-preferential processing in the inferior/middle occipital gyrus area using the diverse object and scrambled stimuli. This allowed for the comparison of BOLD activation between objects, watches, and faces using Bonferroni-corrected, paired t tests: objects versus faces, objects versus watches, and faces versus watches. Diverse objects produced significantly greater BOLD activation than faces within the LOC bilaterally, lLOC: t13 = 3.32, P = 0.006; rLOC: t14 = 3.08, P = 0.008. BOLD activation to watches did not differ significantly from that observed for objects bilaterally, lLOC: t13 = 2.03, P = 0.063; rLOC: t14 = 0.94, P = 0.363, and watches did not differ significantly from faces bilaterally, lLOC: t13 = 0.78, P = 0.451; rLOC: t14 = 1.35, P = 0.198. Thus, when defined in the traditional manner, the LOC produced a reliable object-superior processing effect relative to face stimuli. On the other hand, this effect did not extend to another class of object stimuli, watches, which were more homogeneous than the diverse objects used in the localizer.
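Each reported contrast pairs a t statistic with its degrees of freedom (number of participants minus one for a paired test) and a two-tailed P value. The sketch below shows how such a P value can be recovered from t and df using only the standard library, by numerically integrating the Student t density with Simpson's rule. It is an illustrative check, not the software used for the reported analyses, and the function names are hypothetical.

```python
import math

def t_pdf(x, df):
    """Probability density of Student's t distribution with `df` degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)

def two_tailed_p(t, df, steps=2000):
    """Two-tailed P value for a t statistic via Simpson's rule (steps must be even)."""
    t = abs(t)
    if t == 0:
        return 1.0
    h = t / steps
    total = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_area = 2 * (total * h / 3)      # area between -t and +t (density is symmetric)
    return 1.0 - central_area

# Example call: a paired contrast with 14 participants has df = 13.
p_example = two_tailed_p(2.03, 13)
```

A statistics package would normally be used for this in practice. The point here is only that t, df, and P are mutually constrained, so any one of them can be checked against the other two.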

Individuation task

Participants in the individuation task judged whether sequentially presented pairs of faces, watches, objects, or scrambled stimuli were of the same identity. The stimulus pairs, with the exception of scrambled stimuli, were alternate views of the same exemplar or different exemplars from the same object class (e.g., male faces, digital watches, cell phones). A d’ statistic was calculated to describe behavioral performance accuracy in discriminating same and different stimulus pairs as this measure considers both sensitivity to item similarity and response bias. Mean behavioral performance indicated that participants were highly accurate in their judgments across all stimulus types (d’ mean ± SEM for faces = 4.58 ± 0.17, watches = 3.71 ± 0.23, objects = 4.44 ± 0.13, scrambled [collapsed across three runs] = 3.95 ± 0.14). A repeated measures one-way ANOVA indicated a significant main effect of stimulus, F3,42 = 6.07, P = 0.002. Post hoc analyses indicated that performance was lower for watches relative to objects, t14 = 3.32, P = 0.005. However, watch and object accuracies were not different from accuracy for faces, Ps > 0.05. These relatively small differences in otherwise highly accurate behavioral performance did not appreciably impact the essential fMRI BOLD findings. There was no statistically significant correlation between behavioral performance (d’) and activation within any of the five regions of interest in the individuation task (peak HRF response), Pearson correlation coefficient Ps > 0.050.
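The d’ statistic is the difference between the z-transformed hit rate and false-alarm rate. The sketch below assumes the common convention that a hit is a "same" response on a match trial and a false alarm is a "same" response on a mismatch trial. The text does not state this convention or how rates of exactly 0 or 1 were handled, so the function simply rejects such rates. The example rates are hypothetical.

```python
from statistics import NormalDist

def d_prime(hit_rate, false_alarm_rate):
    """Sensitivity index d' = z(hit rate) - z(false-alarm rate).

    Rates of exactly 0 or 1 have no finite z score; how the study handled
    them is not stated, so this sketch raises an error instead of guessing.
    """
    for rate in (hit_rate, false_alarm_rate):
        if not 0.0 < rate < 1.0:
            raise ValueError("rates must lie strictly between 0 and 1")
    z = NormalDist().inv_cdf                # inverse of the standard normal CDF
    return z(hit_rate) - z(false_alarm_rate)

# Hypothetical participant: 90% hits, 10% false alarms.
example = d_prime(0.9, 0.1)
```

A value of 0 indicates chance-level discrimination, and larger values indicate that same and different pairs were more reliably told apart.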

The design of the individuation task tested face, diverse object, and watch stimuli in three sequential test blocks, counter-balanced between subjects, rather than intermixed within test blocks. The major drawback to the use of an intermixed design is that participants would not be able to anticipate which type of stimuli they were going to view and they would likely have to both categorize the stimuli and individuate them. Thus, BOLD activation associated with each stimulus trial might be attributed to both categorical and individuation processing, not individuation exclusively. In this stimulus-blocked, event-related design, participants knew the type of stimuli that they were going to view. Their task was purely to individuate them. Moreover, there is considerable precedent in the face-processing literature for investigating different stimuli across separate experimental runs within the same scanning session (Downing et al., 2001, 2006; Grill-Spector et al., 2004). Nevertheless, acquiring data from different stimuli in different runs raises the potential that fMRI BOLD signal differences may arise due to baseline fluctuations or context effects. To evaluate this possibility, our design included similar scrambled stimuli tested in each of the three runs. A repeated measures one-way ANOVA of the peak BOLD signal response in the estimated HRF to scrambled stimuli in each task run within each of the five ROIs revealed no significant task run main effects, rFFA: F2,28 = 2.33, P = 0.116; lFFA: F2,26 = 1.46, P = 0.251; rOFA: F2,22 = 1.55, P = 0.235; rLOC: F2,28 = 2.51, P = 0.099; lLOC: F2,26 = 1.77, P = 0.191. This similarity in responses to scrambled stimuli suggests that baseline or context effects arising from separate stimulus task runs did not influence the findings described below. Based on the similarity in BOLD activation for scrambled stimuli across tasks, the following analyses use the mean scrambled stimulus HRF from the three test runs.

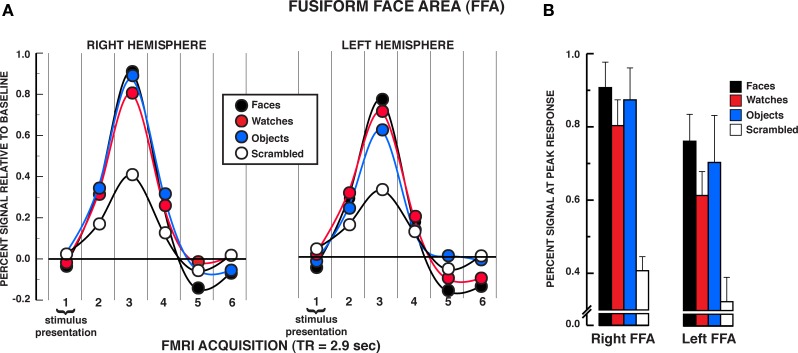

The results from the individuation task offer compelling evidence that the FFA responds significantly to individuation processing factors rather than face-specific stimulus factors exclusively. The mean BOLD activations across the five regions of interest over the estimated HRF are shown in Figures 3–5 (see Supplementary material for whole brain analysis of the individuation task). In the FFA bilaterally, a significant stimulus × time interaction was found in the two-way repeated measures ANOVA, rFFA: F15,210 = 8.33, P < 0.001; lFFA: F15,195 = 4.88, P < 0.001 (see Figure 3A). Focusing on the peak response in the HRF shown in Figure 3B (acquisition volume 3 in Figure 3A), post hoc analyses revealed that faces, diverse objects, and watches produced similar activation bilaterally, rFFA: Ps > 0.104, lFFA: Ps > 0.050. All three stimuli produced significantly greater activation than scrambled stimuli at the peak response, rFFA: Ps < 0.001; lFFA: Ps < 0.003. In addition, no differences were found in the activation levels for faces, diverse objects, and watches at any of the other HRF time points, rFFA and lFFA: Ps > 0.050. In the rFFA, the stimulus main effect was reliable, F3,42 = 7.24, P = 0.001, due to faces, watches, and diverse objects producing overall greater activation than scrambled stimuli. The stimulus main effect was not significant in the lFFA, F3,39 = 1.08, P = 0.369. The time main effect was reliable in both the rFFA and lFFA, rFFA: F5,70 = 72.32, P < 0.001; lFFA: F5,65 = 36.88, P < 0.001. In summary, there was no difference in activation between faces, diverse objects, and watches within the FFA. All three of these categories produced reliably greater activation than the scrambled stimulus control.
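The degrees of freedom attached to the F statistics above follow from the fully within-subject design. The sketch below assumes the standard partitioning for a two-way repeated measures ANOVA with no sphericity correction (an assumption, since any correction used is not stated here) and reproduces the reported values from four stimulus types and six HRF time points. The function name is hypothetical, and the sample sizes are inferred from the reported degrees of freedom rather than stated in this section.

```python
def within_subject_dfs(n_subjects, n_stimuli=4, n_timepoints=6):
    """Return (effect df, error df) for each term of a stimulus x time
    repeated measures ANOVA in which every subject sees every cell."""
    a, b, n = n_stimuli, n_timepoints, n_subjects
    return {
        "stimulus": (a - 1, (a - 1) * (n - 1)),
        "time": (b - 1, (b - 1) * (n - 1)),
        "stimulus_x_time": ((a - 1) * (b - 1), (a - 1) * (b - 1) * (n - 1)),
    }


if __name__ == "__main__":
    # 15 subjects match the rFFA values; 14 subjects match the lFFA values.
    for n in (15, 14):
        print(n, within_subject_dfs(n))
```

With 15 subjects the sketch gives F3,42, F5,70, and F15,210, as reported for the rFFA; with 14 subjects it gives F3,39, F5,65, and F15,195, as reported for the lFFA. This is consistent with one participant lacking a definable lFFA, although that reading is an inference.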

Figure 3.

FMRI BOLD results from the individuation task in the FFA regions of interest. (A) Estimated hemodynamic response function (HRF) for the faces, watches, diverse objects, and scrambled stimuli (mean from three individuation task runs) in the FFA. BOLD signal is expressed as percent signal difference from the null trial fixation baseline. The six acquisition volumes from the initiation of stimulus presentation were modeled. Each volume was acquired over 2.9 s (TR), so the first volume was acquired from 0 to 2.9 s, the second volume from 2.9 to 5.8 s, etc., and the entire HRF covered the 0–17.4 s window from the beginning of the trial. With slice-time resampling to the middle of the acquisition period, the peak HRF occurred in the third acquisition for each stimulus type in each ROI, which corresponds to a peak response at approximately 7.25 s post-trial onset. The HRF is shown with a spline interpolation for display purposes. (B) Mean peak response (third HRF acquisition volume) for the four stimuli in the FFA regions of interest. No statistically significant differences were found between faces, watches, and diverse objects in either the rFFA or the lFFA. Error bars = SEM.
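The timing described in this caption can be checked with a short calculation. The sketch below uses only the TR and the number of modeled volumes given in the caption; the function names are hypothetical, and the midpoint convention simply mirrors the stated slice-time resampling to the middle of the acquisition period.

```python
TR_S = 2.9      # repetition time in seconds, from the caption
N_VOLUMES = 6   # acquisition volumes modeled per trial, from the caption


def volume_window(k, tr=TR_S):
    """Return (start, end) in seconds of the k-th acquisition volume (1-indexed)."""
    return ((k - 1) * tr, k * tr)


def volume_midpoint(k, tr=TR_S):
    """Return the midpoint in seconds of the k-th acquisition volume."""
    start, end = volume_window(k, tr)
    return (start + end) / 2.0


if __name__ == "__main__":
    for k in range(1, N_VOLUMES + 1):
        start, end = volume_window(k)
        print(f"volume {k}: {start:.2f}-{end:.2f} s, midpoint {volume_midpoint(k):.2f} s")
```

The third volume spans 5.8 to 8.7 s, so its midpoint falls at 7.25 s, and the sixth volume ends at 17.4 s, matching the window described above.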

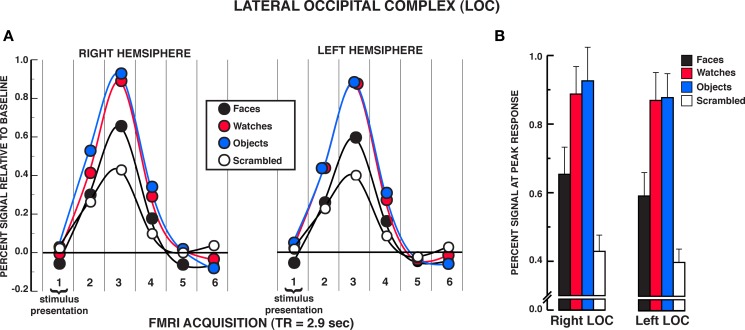

Figure 5.

FMRI BOLD results from the individuation task in the LOC regions of interest. (A) Estimated hemodynamic response function (HRF) for the faces, watches, diverse objects, and scrambled stimuli (mean from three individuation task runs) in the LOC. See Figure 3 for detailed description of HRF function. (B) Mean peak response (third HRF acquisition volume) for the four stimuli in the LOC regions of interest. Activation to diverse objects and watches was significantly greater than activation to faces in both the rLOC and lLOC. Error bars = SEM.

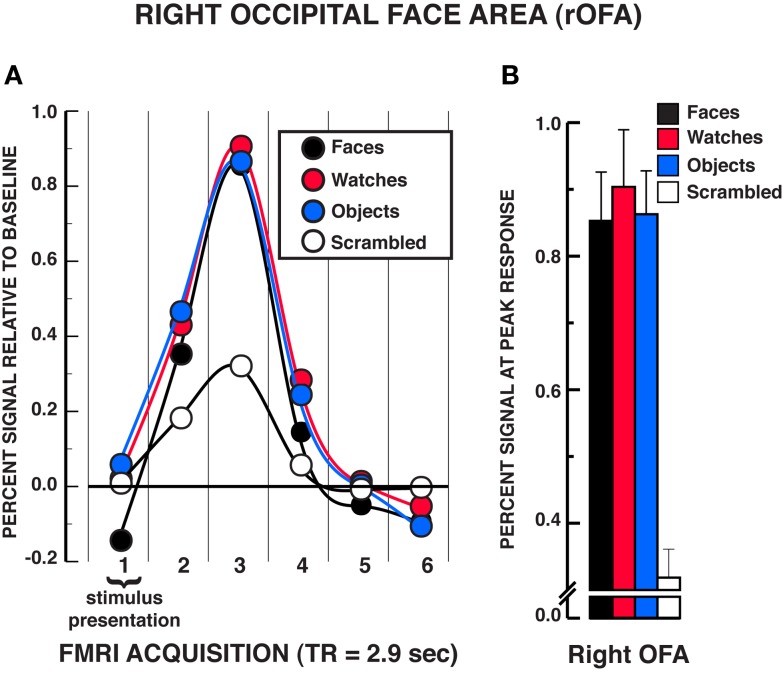

The findings in the rOFA substantially replicated those in the rFFA (see Figure 4). A significant stimulus × time interaction was found, rOFA: F15,165 = 11.01, P < 0.001. Focusing on the peak response in the HRF shown in Figure 4B, post hoc analyses revealed that faces, diverse objects, and watches produced similar activation in the rOFA, Ps > 0.438. All three stimuli produced significantly greater activation than scrambled stimuli at the peak response, Ps < 0.001. In addition, no differences were found in the activation levels for faces, diverse objects, and watches at any of the other HRF time points, Ps > 0.050, with the exception that watches produced modestly greater activation than faces at the fourth acquisition, t11 = 2.75, P = 0.019. The stimulus main effect was reliable, F3,33 = 7.65, P = 0.001, due to faces, watches, and diverse objects producing overall greater activation than scrambled stimuli. The time main effect was reliable, F5,55 = 40.88, P < 0.001. In summary, there was virtually no difference in activation between faces, diverse objects, and watches within the rOFA. All three of these categories produced reliably greater activation than the scrambled stimulus control.

Figure 4.

FMRI BOLD results from the individuation task in the right OFA region of interest. (A) Estimated hemodynamic response function (HRF) for the faces, watches, diverse objects, and scrambled stimuli (mean from three individuation task runs) in the rOFA. See Figure 3 for detailed description of HRF function. (B) Mean peak response (third HRF acquisition volume) for the four stimuli in the rOFA region of interest. The results are virtually identical to those observed in the rFFA in that no statistically significant differences were found between faces, watches, and diverse objects in the rOFA. Error bars = SEM.

Unlike the equivalent activation for face and non-face stimuli found within the FFA and OFA under individuation instructions, the findings from the LOC suggested reliable differences between face and object processing (see Figure 5A). A significant stimulus × time interaction was observed in the two-way repeated measures ANOVA in both the rLOC and lLOC, rLOC: F15,210 = 10.06, P < 0.001; lLOC: F15,195 = 8.78, P < 0.001. Within the peak response of the HRF shown in Figure 5B, post hoc analyses revealed that diverse objects and watches produced similar activation, rLOC: t14 = 0.59, P = 0.567; lLOC: t13 = 0.10, P = 0.919, and both produced significantly greater activation than faces, rLOC: Ps < 0.005; lLOC: Ps < 0.002. All three stimuli produced greater activation within the LOC than scrambled stimuli, rLOC: Ps < 0.005; lLOC: Ps < 0.007. No significant differences were found between objects and watches at any of the other HRF time points, rLOC and lLOC: Ps > 0.050. In the rLOC, diverse objects produced significantly greater activation than faces at the second and fourth time points in the HRF as well as at the peak response, HRF time 2: t14 = 3.22, P = 0.006; HRF time 4: t14 = 3.35, P = 0.005. In the lLOC, diverse objects and watches produced greater activation than faces at the second HRF time point, faces versus objects: t13 = 3.64, P = 0.003; faces versus watches: t13 = 4.16, P = 0.001. The stimulus main effect was reliable in both the rLOC and lLOC, rLOC: F3,42 = 12.52, P = 0.001; lLOC: F3,39 = 13.35, P < 0.001. In both LOC ROIs, watches and diverse objects produced greater overall activation than faces and scrambled stimuli, Ps < 0.050, but did not differ from one another, Ps > 0.050. In addition, overall activation to faces did not differ from that of scrambled stimuli in either LOC ROI, Ps > 0.050. The time main effect was reliable in both the rLOC and lLOC, rLOC: F5,70 = 36.97, P < 0.001; lLOC: F5,65 = 13.35, P < 0.001. In summary, in both LOC ROIs, faces produced significantly less activation than diverse objects and watches in the individuation task.

To summarize, the FFA and OFA results suggest that there is no difference in FFA and OFA activation to faces and non-face objects under individuation instructions. This occurred in regions defined by a standard passive-viewing localizer task as producing reliably greater activation to faces than to objects and watches. In contrast, the LOC, a region commonly associated with object processing, produced significantly greater activation for diverse objects and watches than for faces. The processing differences between stimuli in the LOC suggest that individuation processing per se is not the dominant factor driving BOLD activation in this region.

Discussion

The present study was designed to determine whether the putative face-selective brain regions within the ventral occipitotemporal cortex (VOT) are indeed selectively responsive to faces. We find that this is not the case. More specifically, we find that although these areas appear to be face-specific when the contrast is between the passive viewing of faces versus other non-face object stimuli, this apparent specificity vanishes when the task requires object individuation. In other words, under task conditions calling for processing at the level of individual objects (i.e., are these the exact same or different object?), these putative face-selective brain regions within the VOT (FFA and OFA) are equally activated for carefully selected non-face stimuli as for faces.

Two findings from this study are paramount to understanding the functional role of the VOT in visual face and object processing. First, we showed that under task conditions that require explicit individuation of visual stimuli, both face and non-face objects produced equivalent levels of BOLD activation within the functionally defined FFA and OFA regions of interest that produced a face-superiority effect in a traditional passive viewing localizer task. Second, this individuation task did not eliminate the object-superior processing effect (preferential processing for objects relative to faces) within the LOC, especially the left-hemisphere LOC. This pattern of results indicates that individuation processing does not indiscriminately raise face and non-face object processing to equal levels across all VOT extrastriate regions; it does so only in the putative face areas – FFA and OFA.

Taken together, these findings have significant implications for current models of the role of the FFA specifically in face processing, and more generally in visual object recognition. Most notably, our findings do not support the hypothesis that the FFA and OFA are specialized for face processing (Yovel and Kanwisher, 2004; Kanwisher and Yovel, 2006). Indeed, we found that at least under individuation task conditions, non-face objects such as watches and diverse objects generate the same level of FFA and OFA activation as faces. To our knowledge, this is the first report of face-equivalent activation of the FFA for non-face objects, despite many such attempts.

Naturally, the key question is why our task induces these levels of activation to non-face objects when previous studies have not. Proponents of face-specific processing accounts have dismissed individuation as a key function of the FFA based primarily on the failed attempts to produce face-level activation for non-face objects within the FFA in presumed individuation processing tasks (cf. Kanwisher and Yovel, 2006). One of the early studies establishing the role of the FFA in face processing, for example, demonstrated face-superior processing in a passive viewing task similar to that presented in this study and in a task that used a sparse one-back matching task (Kanwisher et al., 1997). In fact, the sparse one-back matching task is one of the most commonly used face-processing tasks today; in this task participants are instructed to identify infrequent repetitions of stimuli within a stimulus block. The original finding is taken as evidence against an individuation account of the FFA because face stimuli produced greater activation within the FFA (across five subjects) in both the passive viewing and sparse one-back tasks, thereby suggesting that individual item matching did not meaningfully alter FFA responsiveness. This argument is based on the assumption that the sparse one-back matching task requires item-by-item individual discrimination decisions, even though behavioral responses occur only to the infrequent repetitions. We question this assumption.

The typical sparse one-back matching task, including Kanwisher and colleagues’ original instantiation of it, does not adequately assess individuation processing because the match decisions always refer to the exact same stimulus whereas the mismatching decisions always refer to physically different stimuli. In these paradigms, then, match and mismatch decisions can be made purely on the basis of simple visual perceptual differences and do not by necessity entail substantive individuation discriminations. In contrast, our individuation task required item-by-item matching decisions on alternative views of the identical items; mismatching decisions were based on visually similar items in the same category (e.g., male versus another male face, analog watch versus another analog watch). We maintain that using alternative views of the same object for matching decisions and highly homogeneous though different stimuli for mismatching decisions is crucial for minimizing simple perceptual matching and truly evaluating individuation processing without confounding factors.

Results from the Yovel and Kanwisher (2004) study evaluating the role of the FFA in configural and featural processing might, at first blush, appear to challenge a role for individuation in the FFA. They presented participants with pairs of faces or houses and asked them to judge whether the probe stimulus was identical to the target stimulus. Stimuli were either identical or varied in the spacing of the features (i.e., configural differences) or in the features themselves (i.e., featural differences). Their key finding was that faces produced significantly greater FFA activation than did houses regardless of the type of stimulus change, which they interpreted as evidence for the face-specificity of the FFA. However, the task manipulation they used to ensure task compliance may have altered the task demands so as to direct participant judgments toward categorical level judgments. Specifically, a cue at the beginning of each stimulus block instructed participants as to whether the stimuli would differ in their features or in the configuration of features. This cue essentially altered the task from one requiring individuation to one in which participants decided whether or not a change took place in features or in feature spacing. This, in essence, converted the task into a form of categorization or subordinate processing task. While subordinate processing tasks can modulate FFA activity, past studies of subordinate processing have failed to eliminate face-superior processing in the FFA (see Kanwisher and Yovel, 2006). The results of Rhodes and colleagues contrasting brain activity for judgments about faces versus Lepidoptera also might seem to contradict the FFA individuation hypothesis (Rhodes et al., 2004). In addition to the problematic difference in behavioral performance between the conditions, as already noted, the decision task in this study also precluded a direct test of the individuation hypothesis.
Specifically, they had participants study individual exemplars of faces and Lepidoptera over several days, and then perform old/new judgments. Studies of declarative memory using tasks such as this have shown clearly that familiarity may provide the sole basis for any given recognition judgment, rather than explicit recollection of definitive features of items or context (Yonelinas, 2002). The difference in recognition levels for Lepidoptera versus faces in Rhodes et al. raises the valid concern that participants made judgments for the Lepidoptera with lower confidence. It is reasonable to suggest that familiarity-based judgments do not engage individuation processing that requires explicit recollection of specific features, configurations, or holistic information. As discussed above, the entry point for nearly all non-face object classes, which would include Lepidoptera, is at the basic level (Tanaka, 2001). Thus, the failure to modulate FFA activation for non-face objects in a task using difficult to discriminate objects with which one has minimal recollection of specific details is not strong evidence against the role of the FFA in individuation processing.

Grill-Spector et al. (2004) investigated the role of the FFA in face and non-face object detection and identification, concluding that the FFA had no major role in within-category identification of non-face objects, even in people with specialized expertise with those particular objects (e.g., car experts). They asked participants to identify a specific target picture appearing in a stream of non-target pictures drawn from the same category (e.g., other faces), stimuli from a different category (e.g., birds), or textured (nonsense) pictures. Identification was defined as a correct judgment of the target stimulus, whereas detection was defined either as judging the target as a category member without indicating it was the target or by correctly identifying a non-target stimulus as a member of a particular category (e.g., judging a car as a car). They found that faces produced greater activation of the FFA in both detection and identification. In contrast, detection of non-face objects was not correlated with FFA activation. Again, an analysis of Grill-Spector et al.’s methods leads us to conclude that they were not assessing the process of individuating non-face objects. To assess face processing, participants were instructed to identify a particular face, such as Harrison Ford, in a series of other famous faces; this is a direct test of individuation processing. Their assessment of non-face objects, by contrast, required only subordinate, categorical level processing. Specifically, their participants were instructed to identify pigeons from among a series of other types of birds. Their participants were not asked to identify any individual or particular pigeon in a series of pigeons. As a consequence, this experiment compared individuation processing in faces to subordinate processing at the categorical, not the individual, level in non-face objects.
In sum, to our knowledge, ours is the first neuroimaging study to directly compare individuation of faces and non-face objects without the confounds described above, and to find that the levels of face and non-face object activation in the FFA and OFA are the same.

Our results offer new insights into accounts of FFA and OFA functions that have emphasized various processing factors. For example, the homogeneity of stimuli to be discriminated is proposed to be one factor that modulates FFA and OFA activation (Gauthier et al., 2000; Tarr and Gauthier, 2000). Stimulus homogeneity alone, however, cannot account for the present findings. All of the stimuli in our individuation task were indeed highly homogeneous. That is, we paired faces to faces, watches to watches, and similar basic-level objects to each other (e.g., cell phone to cell phone). Moreover, the results from the passive viewing task suggest that homogeneity of stimuli per se did not significantly modulate spontaneous object processing in the FFA. Watches represented a more homogeneous category of stimuli relative to diverse objects, but watches and diverse objects produced equivalent activation in the FFA during passive viewing, both of which were significantly lower than the activation to faces. We note, however, that watches produced only a marginal difference in activation within the OFA compared to faces during the localizer task. This suggests that the OFA may be slightly more sensitive to homogeneity factors than the FFA. Perhaps more notably, visual processing expertise also cannot explain the present findings. Our individuation task results suggest that substantial expertise is not a necessary precondition for FFA activation modulation by non-face objects, as observers (presumably non-experts with either watches or our other diverse objects) produced equivalent activations to faces and non-face object stimuli when assessed under strict instructions to individuate them.

Whereas visual processing expertise does not seem to play a necessary role in engendering a heightened level of activation in the FFA, our data suggest another role for expertise. Specifically, expertise with a particular stimulus class seems to influence the entry level or default processing strategy adopted by an individual. The vast expertise for faces acquired by typical adults establishes individuation as the entry level of processing regardless of external task demands (Tanaka, 2001). Expertise with faces appears to lead to the automatic (default) engagement of individuation processing, thereby activating the FFA regardless of the externally specified task demands. In the current study, the default engagement of individuation during face processing produced apparent face-preferential FFA and OFA activation during the passive localizer task. In contrast, given a lower level of visual processing expertise with a particular stimulus class, such as watches or diverse objects, differing task demands modulate the level of processing, and the associated FFA and OFA activation. Because individuation is not the entry level of processing for watches and diverse objects, only the explicit instructions to individuate items in these categories produced robust FFA and OFA activation. We observed reduced FFA and OFA activation for watches and other common objects when the task was unspecified as in the localizer task.

Data accumulating from a number of different fronts are beginning to inform the functional architecture of individuation processing for faces. An important source of such data is functional imaging studies of patients with the severe facial recognition deficits of prosopagnosia. Several reports based on a limited number of well-characterized patients with acquired prosopagnosia question a crucial role for the FFA in individuation processing as we suggest based on the present findings, and instead suggest a larger role in individuation processing for the OFA. For example, the severe facial recognition deficits of two often-studied acquired prosopagnosia patients, P.S. and D.F., are linked to lesions that include the right inferior occipital gyrus region that encompasses the territory of the OFA, but the right middle fusiform gyrus region that includes the FFA is spared in both patients (Rossion et al., 2003; Schiltz et al., 2006; Steeves et al., 2006, 2009; Dricot et al., 2008a,b). A normal FFA has been observed in both patients across multiple localizer tasks. Perhaps the most striking finding is that both patients fail to show the typical face repetition suppression effect when identical face stimuli are repeated across multiple presentations (Schiltz et al., 2006; Dricot et al., 2008a,b; Steeves et al., 2009). Moreover, it has been observed that regions adjacent to the FFA that are not typically observed as showing face-specific processing can produce the face repetition suppression effect (Dricot et al., 2008a,b). Overall, findings from acquired prosopagnosia suggest that individual identity processing, particularly for faces, is not the sole domain of the middle fusiform gyrus region and emphasize an important role for the OFA, a finding consistent with the present study.
However, a recent study indicates that the OFA may not be the exclusive locus for face individuation processing because it may not be the locus for the face repetition suppression effect. Gilaie-Dotan et al. (2010) applied transcranial magnetic stimulation (TMS) to the OFA region during the period between the first and second repetition of famous faces, thus disrupting potential stimulus repetition effects in the OFA during this period, and nonetheless found a repetition suppression effect. This is not inconsistent with studies of congenital prosopagnosia that have found relatively normal fMRI activation to faces and objects within the FFA and OFA regions despite severe facial recognition deficits, and decreased activation within the face-sensitive areas in anterior brain areas, the so-called “extended” face-processing network (Avidan et al., 2005; Avidan and Behrmann, 2009). One region within this extended network garnering attention for a specific role in individuation of faces beyond that of simple detection is located on the extreme inferior aspects of the temporal lobe including the inferior temporal gyrus (BA 20) (Nestor et al., 2008) and/or middle temporal gyrus (BA 38) (Kriegeskorte et al., 2007). We did not find face-specific individuation processing activation in this region (see Supplementary material). Due to its location in a region well known to be susceptible to MR dropout effects, this region may be difficult to ascertain in group studies (Kriegeskorte et al., 2007). In addition, those other studies used face individuation tasks that significantly differed in methodology from that used here; Kriegeskorte et al. (2007) used an anomaly-detection task with infrequent deviations in sequential image presentation, while Nestor et al. (2008) used a challenging face individuation task based on face fragment stimuli.
We did, however, find regions that produced face-greater-than-object activation during individuation that are considered part of the extended face-processing network, including posterior cingulate gyrus and middle temporal gyrus (see Supplementary material). In contrast, a larger number of regions were identified that produced object-greater-than-face activation during individuation. This may be due to the simple fact that individuation processing is more difficult for non-face objects than for faces because individuation is not the default or typical entry point of processing for non-face objects (Tanaka, 2001). Taken together, these findings suggest that the FFA and OFA are not the sole regions participating in individuation processing; other brain areas certainly participate in the individuation of faces and non-face objects. These brain areas may be part of a network that is enlisted as individuals process any visual stimuli at the subordinate levels, with individuation engaging the network to the greatest extent.

In summary, we find that fMRI activations in the FFA and the OFA show no face specificity when faces and non-face objects are both processed at an individual (as opposed to categorical) level. Rather than being specific to faces, these brain areas play a critical role in individuation – the process of distinguishing one individual exemplar (face or otherwise) from another. It has taken this long to come to this realization because our natural tendency when we look at faces (relative to other objects) is to individuate.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Supplementary Material

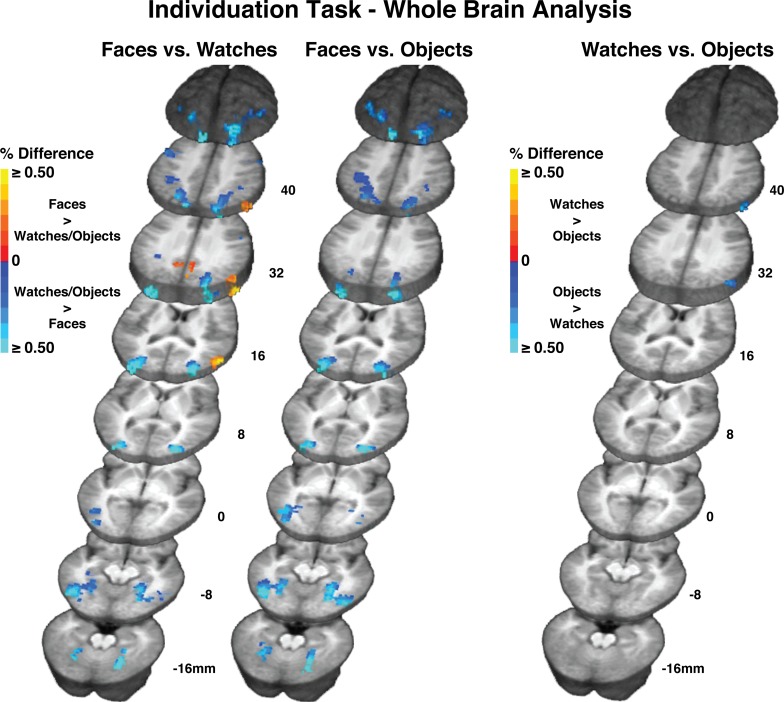

The main study investigated BOLD activation in three ROIs, the FFA, OFA, and LOC, determined from the passive viewing localizer task. Here, we present the results from the whole brain analysis of the individuation task.

Data Analysis

Analysis of individuation task whole brain activity

The data from the peak response of the HRF for faces, watches, and objects from each of the 15 participants were analyzed using a repeated measures analysis of variance (ANOVA) with stimulus (fixed effect) and subject (random effect) as factors. We restricted analysis to voxels that produced a significant main effect of stimulus (P ≤ 0.010 uncorrected). To correct the statistics for multiple comparisons, a voxel-cluster-threshold correction was used with parameters based on a Monte Carlo simulation (Forman et al., 1995). The cluster-threshold correction required a voxelwise threshold of P ≤ 0.005 within a volume of at least 351 μl (13 contiguous voxels) to yield an effective alpha ≤ 0.050.
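The cluster-extent figures above imply a per-voxel volume, which the following sketch works out. It assumes cubic (isotropic) voxels, which is not stated in this section, and the helper names are hypothetical. The sketch only applies the stated extent threshold to a list of cluster sizes; it does not reproduce the Monte Carlo simulation that produced the threshold.

```python
MIN_CLUSTER_VOXELS = 13       # contiguous voxels required, from the text
MIN_CLUSTER_VOLUME_UL = 351   # minimum cluster volume in microliters, from the text


def voxel_volume_ul(cluster_volume_ul, n_voxels):
    """Volume of one voxel in microliters (1 microliter = 1 cubic millimeter)."""
    return cluster_volume_ul / n_voxels


def isotropic_edge_mm(voxel_volume):
    """Edge length in mm of a cubic voxel with the given volume (assumed cubic)."""
    return voxel_volume ** (1.0 / 3.0)


def surviving_clusters(cluster_sizes, min_voxels=MIN_CLUSTER_VOXELS):
    """Keep only clusters that meet the minimum extent, given sizes in voxels."""
    return [size for size in cluster_sizes if size >= min_voxels]


if __name__ == "__main__":
    per_voxel = voxel_volume_ul(MIN_CLUSTER_VOLUME_UL, MIN_CLUSTER_VOXELS)
    print(per_voxel, round(isotropic_edge_mm(per_voxel), 3))
    print(surviving_clusters([4, 13, 40, 12]))  # hypothetical cluster sizes
```

Dividing 351 μl by 13 voxels gives 27 μl per voxel, which under the cubic-voxel assumption corresponds to a 3 mm edge.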

Results

Individuation task

The central ROI-based analysis revealed that faces, watches, and diverse objects produced virtually identical activation within the FFA in the individuation task. Here, we describe differences in activation observed during the individuation task throughout the rest of the brain for the contrast between the faces, watches, and diverse object stimuli. These results are shown in Figure S1. Table S1 describes the regional activation observed in the individuation task. Faces produced greater activation than watches during the individuation task within a limited number of posterior regions located primarily in the right hemisphere. However, faces did not produce any regions of greater activity relative to objects. We observed many more regions active for watches and objects relative to faces in the individuation task. These were distributed primarily as bilateral activation of posterior temporal, parietal, and occipital areas, but also included left hemisphere superior dorsolateral prefrontal cortical areas. Note the substantial watch and object greater than face activation located bilaterally in fusiform and occipital regions surrounding the FFA (see Figure 2 in main text for FFA). These regions are commonly activated in object processing studies (cf. Haxby et al., 2001; Downing et al., 2006; Reddy and Kanwisher, 2006; Spiridon et al., 2006). The only noteworthy difference seen between objects and watches relative to faces was that watches produced greater activation than faces bilaterally in dorsolateral frontal cortex, whereas no differences were observed in frontal areas between objects and faces. In the direct contrast between watches and objects, the only significant difference observed was greater activation for objects than for watches in the right angular gyrus/inferior parietal lobule region.

Figure S1.

Whole brain activation results for faces and watches in the individuation task. Color bars indicate percent signal change differences. Red to yellow colors indicate regions where faces produced greater activation than watches. Blue colors indicate regions where watches produced greater activation than faces. All activation differences corrected to alpha ≤ 0.05. Activation is shown on averaged structural anatomical scans from all 15 participants.

Table S1.

Brain activation differences between face, watch, and object stimuli from the individuation task.

Values are reported for the maximum intensity voxel in each region; x, y, and z are Talairach coordinates.

| Region | BA | Hemi | x | y | z | % | t |
|---|---|---|---|---|---|---|---|
| FACES > WATCHES | | | | | | | |
| Middle temporal gyrus/Middle occipital gyrus | 39/19 | R | −55 | −66 | 18 | 0.53 | 4.94 |
| Precuneus | 31 | R | −2 | −68 | 29 | 0.29 | 4.46 |
| Posterior cingulate gyrus | 31/23 | R | −4 | −53 | 23 | 0.27 | 3.41 |
| Inferior parietal lobule/Angular gyrus | 39 | R | −49 | −67 | 38 | 0.28 | 5.00 |
| Precuneus | 31 | L | 6 | −53 | 32 | 0.21 | 3.96 |
| FACES > OBJECTS | | | | | | | |
| No regions found | | | | | | | |
| WATCHES > FACES | | | | | | | |
| Fusiform gyrus | 19 | R | −25 | −52 | −13 | 0.53 | 5.97 |
| Middle occipital gyrus | 19 | R | −31 | −77 | 12 | 0.61 | 6.05 |
| Parahippocampal gyrus | 36 | R | −28 | −38 | −10 | 0.40 | 6.01 |
| Inferior parietal lobule | 40 | R | −46 | −47 | 52 | 0.24 | 3.71 |
| Middle frontal gyrus | 9 | R | −37 | 5 | 36 | 0.26 | 4.25 |
| Superior occipital gyrus | 19 | R | −25 | −77 | 34 | 0.34 | 6.01 |
| Superior parietal lobule | 7 | R | −26 | −62 | 44 | 0.22 | 5.33 |
| Precuneus/Superior occipital gyrus | 19 | R | −22 | −80 | 42 | 0.38 | 5.47 |
| Fusiform gyrus | 37 | L | 26 | −46 | −12 | 0.37 | 5.58 |
| Parahippocampal gyrus | 36 | L | 26 | −38 | −10 | 0.27 | 4.24 |
| Middle occipital gyrus | 19 | L | 34 | −86 | 12 | 0.68 | 6.45 |
| Middle occipital gyrus/Inferior temporal gyrus | 37 | L | 44 | −58 | −6 | 0.51 | 10.24 |
| Middle frontal gyrus | 10 | L | 32 | 50 | 20 | 0.23 | 4.30 |
| Middle frontal gyrus | 6 | L | 46 | 2 | 38 | 0.29 | 6.09 |
| Inferior temporal gyrus | 19/37 | L | 44 | 58 | −6 | 0.51 | 10.24 |
| Inferior parietal lobule | 40 | L | 34 | −44 | 38 | 0.19 | 4.74 |
| Inferior parietal lobule | 40 | L | 40 | −52 | 54 | 0.33 | 3.46 |
| Superior parietal lobule | 7 | L | 22 | −68 | 44 | 0.37 | 4.04 |
| Precuneus | 7 | L | 10 | −76 | 42 | 0.30 | 4.39 |
| OBJECTS > FACES | | | | | | | |
| Fusiform gyrus | 19 | R | −28 | −52 | −12 | 0.60 | 6.88 |
| Middle occipital gyrus | 19 | R | −32 | −80 | 12 | 0.58 | 5.49 |
| Parahippocampal gyrus | 36 | R | −26 | −38 | −12 | 0.39 | 6.38 |
| Middle occipital gyrus | 37 | R | −50 | −64 | −10 | 0.49 | 3.66 |
| Middle temporal gyrus | 37 | R | −52 | −58 | −10 | 0.45 | 3.71 |
| Middle occipital gyrus | 19 | R | −32 | −80 | 14 | 0.46 | 5.95 |
| Inferior parietal lobule | 40 | R | −40 | −50 | 54 | 0.24 | 4.66 |
| Precuneus/Superior occipital gyrus | 19 | R | −28 | −70 | 36 | 0.28 | 4.56 |
| Superior parietal lobule | 7 | R | −28 | −68 | 48 | 0.26 | 4.90 |
| Fusiform gyrus | 19 | L | 26 | −58 | −12 | 0.44 | 5.64 |
| Fusiform gyrus | 37 | L | −44 | −64 | −10 | 0.46 | 4.84 |
| Parahippocampal gyrus | 36 | L | 26 | −44 | −12 | 0.50 | 6.01 |
| Middle occipital gyrus | 18 | L | 28 | −82 | 8 | 0.47 | 5.22 |
| Middle occipital gyrus/Inferior temporal gyrus | 37 | L | 44 | −62 | −4 | 0.52 | 4.69 |
| Middle occipital gyrus | 19 | L | 34 | −82 | 12 | 0.40 | 5.31 |
| Superior occipital gyrus/Cuneus | 19 | L | 32 | −86 | 24 | 0.41 | 4.85 |
| Precuneus | 7 | L | 26 | −70 | 38 | 0.28 | 4.50 |
| Superior parietal lobule | 7 | L | 26 | −58 | 44 | 0.16 | 6.02 |
| Superior parietal lobule | 7 | L | 26 | −64 | 54 | 0.26 | 5.78 |
| Inferior parietal lobule | 40 | L | 34 | −50 | 44 | 0.12 | 5.77 |
| Inferior parietal lobule | 40 | L | 38 | −52 | 54 | 0.24 | 6.32 |
| WATCHES > OBJECTS | | | | | | | |
| No regions observed | | | | | | | |
| OBJECTS > WATCHES | | | | | | | |
| Angular gyrus/Inferior parietal lobule | 39/40 | R | −50 | −68 | 36 | 0.31 | 3.91 |

Notes: Talairach coordinates follow the convention of the Talairach and Tournoux atlas (Talairach and Tournoux, 1988). Positive coordinate values indicate left (x), anterior (y), and superior (z). BA, Brodmann's area; Hemi, hemisphere; %, percent signal difference of contrast; t, t value of contrast.
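Because the sign convention stated in the notes (positive x = left) is the reverse of what many readers expect, a small sketch of how it maps onto the Hemi column may help. The function names `parse_coord` and `hemisphere_from_x` are hypothetical and are not part of the analysis software used in the study; the two example peaks are copied from the FACES > WATCHES rows of Table S1.

```python
def parse_coord(text: str) -> float:
    """Parse a coordinate as printed in the table.

    The table prints negatives with the Unicode minus sign (U+2212),
    which float() rejects, so it is replaced with an ASCII hyphen first.
    """
    return float(text.strip().replace("\u2212", "-"))


def hemisphere_from_x(x: float) -> str:
    """Hemisphere label under the table's stated convention: positive x = left."""
    if x > 0:
        return "L"
    if x < 0:
        return "R"
    return "midline"


# Two peaks copied from the FACES > WATCHES block of Table S1.
peaks = [
    ("Middle temporal gyrus/Middle occipital gyrus", "\u221255"),
    ("Precuneus", "6"),
]

for region, x_text in peaks:
    x = parse_coord(x_text)
    print(f"{region}: x = {x:g} -> {hemisphere_from_x(x)}")
```

Under this convention the first peak falls in the right hemisphere and the second in the left, matching the Hemi column for those rows.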

Summary of Whole Brain Activation

In the main text, we argue that individuation may be a default processing procedure for faces in typically developed adults and that the equivalence of FFA activation during individuation processing for faces and non-face objects is consistent with this suggestion. The whole brain results generally suggest that this proposition extends beyond processing in the FFA. Specifically, relatively few brain regions produced greater activation to faces than to watches during the individuation task. Moreover, most of the regions that did produce greater activation to faces than to watches form what is sometimes termed an extended face-processing network, including the posterior cingulate gyrus, middle temporal gyrus, and middle occipital gyrus (Clark et al., 1996; Haxby et al., 2000; Ishai et al., 2005; Avidan and Behrmann, 2009). Therefore, the results suggest that individuation processing may be a component of the extended face system, and that the extended system may show greater face specificity during individuation processing than the FFA.

Abbreviations

fMRI, functional magnetic resonance imaging; FFA, fusiform face area; OFA, occipital face area; VOT, ventral occipitotemporal cortex; LOC, lateral occipital complex; ROI, region of interest.

Acknowledgments

We thank Janet Shin for assistance in the conduct of this study, Deborah Weiss and Sarah Noonan for assistance with preliminary studies, Flora Suh for assistance with manuscript preparation, Dirk Beer for the development of the balanced stimuli, and Maha Adamo and Marta Kutas for comments on the manuscript. The National Institute of Child Health and Human Development Grants R01-HD041581, R01-HD046526, and R01-HD060595, Natural Sciences and Engineering Research Council of Canada Grant, and National Science Foundation of China Grants 60910006 and 31028010 supported this work.

References

- Aguirre G. K., Zarahn E., D'Esposito M. (1998). An area within human ventral cortex sensitive to “building” stimuli: evidence and implications. Neuron 21, 373–383 10.1016/S0896-6273(00)80546-2

- Avidan G., Behrmann M. (2009). Functional MRI reveals compromised neural integrity of the face processing network in congenital prosopagnosia. Curr. Biol. 19, 1146–1150 10.1016/j.cub.2009.04.060

- Avidan G., Hasson U., Malach R., Behrmann M. (2005). Detailed exploration of face-related processing in congenital prosopagnosia: 2. Functional neuroimaging findings. J. Cogn. Neurosci. 17, 1150–1167 10.1162/0898929054475145

- Berman M. G., Park J., Gonzalez R., Polk T. A., Gehrke A., Knaffla S., Jonides J. (2010). Evaluating functional localizers: the case of the FFA. Neuroimage 50, 56–71 10.1016/j.neuroimage.2009.12.024

- Clark V. P., Keil K., Maisog J. M., Courtney S., Ungerleider L. G., Haxby J. V. (1996). Functional magnetic resonance imaging of human visual cortex during face matching: a comparison with positron emission tomography. Neuroimage 4, 1–15 10.1006/nimg.1996.0025

- Cohen M. S. (1997). Parametric analysis of fMRI data using linear systems methods. Neuroimage 6, 93–103 10.1006/nimg.1997.0278

- Cox R. W., Hyde J. S. (1997). Software tools for analysis and visualization of fMRI data. NMR Biomed. 10, 171–178

- Cox R. W., Jesmanowicz A. (1999). Real-time 3D image registration for functional MRI. Magn. Reson. Med. 42, 1014–1018

- Downing P. E., Chan A. W., Peelen M. V., Dodds C. M., Kanwisher N. (2006). Domain specificity in visual cortex. Cereb. Cortex 16, 1453–1461 10.1093/cercor/bhj086

- Downing P. E., Jiang Y., Shuman M., Kanwisher N. (2001). A cortical area selective for visual processing of the human body. Science 293, 2470–2473 10.1126/science.1063414

- Dricot L., Sorger B., Schiltz C., Goebel R., Rossion B. (2008a). Evidence for individual face discrimination in non-face selective areas of the visual cortex in acquired prosopagnosia. Behav. Neurol. 19, 75–79

- Dricot L., Sorger B., Schiltz C., Goebel R., Rossion B. (2008b). The roles of “face” and “non-face” areas during individual face perception: evidence by fMRI adaptation in a brain-damaged prosopagnosic patient. Neuroimage 40, 318–332 10.1016/j.neuroimage.2007.11.012

- Forman S. D., Cohen J. D., Fitzgerald M., Eddy W. F., Mintun M. A., Noll D. C. (1995). Improved assessment of significant activation in functional magnetic resonance imaging (fMRI): use of a cluster-size threshold. Magn. Reson. Med. 33, 636–647 10.1002/mrm.1910330508

- Friedman L., Glover G. H., Krenz D., Magnotta V. (2006). Reducing inter-scanner variability of activation in a multicenter fMRI study: role of smoothness equalization. Neuroimage 32, 1656–1668 10.1016/j.neuroimage.2006.03.062

- Gauthier I., Anderson A. W., Tarr M. J., Skudlarski P., Gore J. C. (1997). Levels of categorization in visual recognition studied using functional magnetic resonance imaging. Curr. Biol. 7, 645–651 10.1016/S0960-9822(06)00291-0

- Gauthier I., Skudlarski P., Gore J. C., Anderson A. W. (2000). Expertise for cars and birds recruits brain areas involved in face recognition. Nat. Neurosci. 3, 191–197 10.1038/72140

- Gauthier I., Tarr M. J. (2002). Unraveling mechanisms for expert object recognition: bridging brain activity and behavior. J. Exp. Psychol. Hum. Percept. Perform. 28, 431–446 10.1037/0096-1523.28.2.431

- Gauthier I., Tarr M. J., Moylan J., Anderson A. W., Skudlarski P., Gore J. C. (2000). Does visual subordinate-level categorisation engage the functionally defined fusiform face area? Cogn. Neuropsychol. Special Issue: Cogn. Neurosci. Face Process. 17, 143–163

- Gauthier I., Tarr M. J., Moylan J., Skudlarski P., Gore J. C., Anderson A. W. (2000). The fusiform “face area” is part of a network that processes faces at the individual level. J. Cogn. Neurosci. 12, 495–504 10.1162/089892900562165

- George N., Dolan R. J., Fink G. R., Baylis G. C., Russell C., Driver J. (1999). Contrast polarity and face recognition in the human fusiform gyrus. Nat. Neurosci. 2, 574–580 10.1038/9230

- Gilaie-Dotan S., Silvanto J., Schwarzkopf D. S., Rees G. (2010). Investigating representations of facial identity in human ventral visual cortex with transcranial magnetic stimulation. Front. Hum. Neurosci. 4:50.

- Grill-Spector K., Knouf N., Kanwisher N. (2004). The fusiform face area subserves face perception, not generic within-category identification. Nat. Neurosci. 7, 555–562 10.1038/nn1224

- Hadjikhani N., Gelder B. D. (2002). Neural basis of prosopagnosia: an fMRI study. Hum. Brain Mapp. 16, 176–182 10.1002/hbm.10043

- Hanson S. J., Matsuka T., Haxby J. V. (2004). Combinatorial codes in ventral temporal lobe for object recognition: Haxby (2001) revisited: is there a “face” area? Neuroimage 23, 156–166 10.1016/j.neuroimage.2004.05.020

- Haushofer J., Baker C. I., Livingstone M. S., Kanwisher N. (2008a). Privileged coding of convex shapes in human object-selective cortex. J. Neurophysiol. 100, 753–762 10.1152/jn.90310.2008

- Haushofer J., Livingstone M. S., Kanwisher N. (2008b). Multivariate patterns in object-selective cortex dissociate perceptual and physical shape similarity. PLoS Biol. 6:7, e187.

- Haxby J. V., Gobbini M. I., Furey M. L., Ishai A., Schouten J. L., Pietrini P. (2001). Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science 293, 2425–2430 10.1126/science.1063736

- Haxby J. V., Hoffman E. A., Gobbini M. I. (2000). The distributed human neural system for face perception. Trends Cogn. Sci. 4, 223–233 10.1016/S1364-6613(00)01482-0

- Kanwisher N., McDermott J., Chun M. M. (1997). The fusiform face area: a module in human extrastriate cortex specialized for face perception. J. Neurosci. 17, 4302–4311

- Kanwisher N., Tong F., Nakayama K. (1998). The effect of face inversion on the human fusiform face area. Cognition 68, B1–B11 10.1016/S0010-0277(98)00035-3

- Kanwisher N., Yovel G. (2006). The fusiform face area: a cortical region specialized for the perception of faces. Philos. Trans. R. Soc. Lond. B, Biol. Sci. 361, 2109–2128 10.1098/rstb.2006.1934

- Kourtzi Z., Erb M., Grodd W., Bulthoff H. H. (2003). Representation of the perceived 3-D object shape in the human lateral occipital complex. Cereb. Cortex 13, 911–920 10.1093/cercor/13.9.911

- Kriegeskorte N., Formisano E., Sorger B., Goebel R. (2007). Individual faces elicit distinct response patterns in human anterior temporal cortex. Proc. Natl. Acad. Sci. U.S.A. 104, 20600–20605 10.1073/pnas.0705654104