Abstract

Objectives:

Tabletop inanimate trainers have proven to be a safe, inexpensive, and convenient platform for developing laparoscopic skills. Historically, programs that use these trainers have relied on time and subjective evaluation of errors as the only measures of performance. Virtual reality simulators offer more extensive data collection capability, but they are expensive and lack realism. This study reviews a new electronic proctor (EP) and its performance within the Rosser Top Gun Laparoscopic Skills and Suturing Program. This “hybrid” training device seeks to capture the strengths of both platforms by providing an affordable, reliable, realistic training arena with metrics to objectively evaluate performance.

Methods:

An electronic proctor was designed for use with drills from the Top Gun Program. The tabletop trainers were outfitted with an automated, electromechanically monitored task arena. Subjects performed 10 repetitions of each of 3 drills: “Cup Drop,” “Triangle Transfer,” and “Intracorporeal Suturing.” The device evaluates instrument targeting accuracy, economy of motion, and adherence to the rules of the exercises in real time. A buzzer and a flashing light alert the student to inaccuracies and breaches of the defined skill transference parameters.

Results:

Between July 2001 and June 2003, 117 subjects participated in courses. Seventy-three who met the data evaluation criteria were assessed and compared with 744 surgeons who had previously taken the course. The total time to complete each task was significantly longer with the EP in place. For the Cup Drop drill, the EP group had a mean total time of 1661 seconds (per-trial average, 166.10) with 54.49 errors (average, 5.45) vs. 1252 seconds (average, 125.2) without the EP (P<0.001, t=6.735, df=814). For the Triangle Transfer drill, the mean total time was 556 seconds (average, 55.63) with 167.57 errors (average, 16.75) with the EP vs. 454 seconds (average, 45.4) without it (P<0.001, t=4.447, df=814). For the suturing task, the mean total time was 1777 seconds (average, 177.73) with 90.46 errors (average, 9.04) with the EP vs. 1682 seconds (average, 168.2) without it (P=0.040, t=1.150, df=814). Compared with surgeons who had taken the Top Gun course before the EP was introduced, the study participants collectively ranked in the 18.3rd percentile for the Cup Drop drill, the 22.6th percentile for the Triangle Transfer drill, and the 36.7th percentile for the Intracorporeal Suturing exercise. When the errors recorded by the EP were added as time penalties, participants collectively ranked in the 9.9th, 0.1st, and 17.7th percentiles, respectively. No equipment failures occurred, and the course agenda did not have to be modified to accommodate the new platform.

Conclusions:

The EP used during the Top Gun course was introduced without modification of the core curriculum and experienced no device failures. This hybrid trainer is a cost-effective inanimate simulator that brings quality performance monitoring to traditional inanimate trainers. The EP appears to have influenced student performance by alerting students to their errors, thereby increasing their awareness of and focus on precision and accuracy. This suggests that the EP could have internal guidance capabilities; however, future validation studies are needed.

Keywords: Laparoscopy, Training, Simulator

INTRODUCTION

The apprenticeship model has been the traditional approach to the acquisition of surgical skills. In open surgical procedures, a 3-dimensional field provides good visibility, and large incisions allow convenient manipulation of tissue and the use of conventional instruments. Laparoscopic procedures introduce obstacles not present in the open environment. The length of the instruments reduces tactile feedback and diminishes instrument stability. Furthermore, a “fulcrum effect” exists: because the instruments pass through the abdominal wall, the instrument tips move in the direction opposite to the surgeon's hands, making videoscopic procedures counterintuitive.1 These factors contribute to a very steep learning curve, as many clinical examples demonstrate.

More than 10 years after the introduction of laparoscopic cholecystectomy, the incidence of bile duct injuries has not decreased to that of the open technique.2,3 Recently, the prevalence of physician and hospital medical errors has generated well-founded patient safety concerns. The Institute of Medicine estimated that between 44 000 and 98 000 people die in hospitals each year as a result of medical or surgical errors, making this the eighth leading cause of death in the United States.4 Many of these errors are the result of surgical misadventures in both open and laparoscopic cases. Finding a solution to this problem is a matter of the highest priority. However, the accrual of surgeon experience alone will not provide the complete answer. To make matters worse, the learning curves for emerging procedures are steeper. Schauer et al5 reported that the complication rates and operating times of laparoscopic Roux-en-Y gastric bypass approached levels equivalent to those of open gastric bypass only after experience with 100 cases. A more aggressive commitment to training, together with the continued development, refinement, and deployment of training appliances and curricula, will be the cornerstone of change. Over the last 3 decades, many training devices have been developed in an attempt to remove the bulk of the training burden from the operating room. All of these platforms aim to minimize the morbidity and mortality associated with the learning curve of laparoscopic techniques by maximizing surgeon preparedness before new procedures are undertaken.

Historically, minimally invasive surgical training has been conducted with animate and inanimate training tools. Animate models have played a significant but decreasing role in recent years for several reasons, including cost, lack of clinical correlation, and social objections. Inanimate training tools fall into 1 of 2 categories: tabletop videoscopic trainers or virtual reality simulators. Videoscopic inanimate trainers have been the mainstay of skills acquisition. They have proven safe and inexpensive, and they offer a convenient opportunity for practice. These trainers are typically equipped with the surgical instruments and videoscopic displays native to the operating room, promoting familiarity with how the appliances function. In doing so, they create a learning environment in which surgeons can gain experience and refine their laparoscopic skills without placing patients at risk.

Inanimate trainers, despite their many realistic properties, are not without shortcomings. Many use the time taken to complete a task as the only objective measurement and fail to account for accuracy. This is common to training systems developed by Rosser,6 SAGES,5 and Scott.7 Objective assessment of simulation performance is key to laparoscopic skills acquisition; without valid performance metrics, simulation training loses much of its credibility and value.8 Also, input from an experienced instructor is required to achieve maximum skill transference, which demands a high teacher-to-student ratio. The cost and availability of capable staff present a barrier to the widespread deployment of many current inanimate training programs. Additionally, the feedback given by instructors is subjective, which hinders the establishment of standardized comparisons.

The second group of trainers consists of virtual reality simulators. Like their inanimate counterparts, virtual reality simulators such as the Minimally Invasive Surgical Trainer–Virtual Reality (MIST-VR; Mentice Medical Simulation, Gothenburg, Sweden) and the LapSim (Surgical Science, Göteborg, Sweden) allow students to enhance their laparoscopic surgical skills in a controlled environment. Validation studies have shown that basic skills obtained with the assistance of virtual reality simulators transfer to executing procedures in the operating room.9 Furthermore, research suggests that these basic skills translate well into the performance of advanced clinical tasks, specifically intricate instrument manipulation and complex surgical tasks such as intracorporeal suturing.9,10

Unfortunately, despite the great promise and many strides being made with virtual reality simulators, the technology is still in its infancy. Although the designers of MIST-VR and LapSim have attempted to create suitable laparoscopic environments, haptic feedback mechanisms are rudimentary or entirely absent. An overall lack of realism stems from the fact that the presented clinical landscape lacks proper textures and shadowing, including the reproduction of glare. In addition, virtual reality systems analyze efficiency based on optimal instrument movement along a computer-generated pathway that may or may not be based on clinically validated algorithms. Such a pathway does not account for acceptable variations that may still lead to a successful outcome.11 Given the expense, reliability, and customer service issues of current virtual reality simulators, the sluggish deployment of these training platforms should come as no surprise.

The limitations of current inanimate and virtual reality trainers have set the stage for hybrid trainer systems. In 2001, Hasson et al8 reported on the use of the LTS2000 (RealSim Systems, www.realsimsystems.com), which has since been combined with a computer-based monitoring system for data storage and renamed the LTS2000-ISM60 Laparoscopy Training Simulator (RealSim Systems, www.realsimsystems.com), thus entering the hybrid arena.8 To date, this system has not been validated. Used within the validated Top Gun Laparoscopic Skills and Suturing Program, the EP in this study combines the error recognition of a virtual reality simulator with the realistic immersion of an inanimate trainer. It addresses the less-than-ideal, time-based performance parameters used by tabletop inanimate systems. This “smart skill tool” is a compromise between the 2 current systems. In addition, the EP features unique auditory and visual prompts when errors are committed. This real-time error feedback alerts the student to suboptimal or inefficient performance, giving the student an opportunity to make immediate corrections. The defined task arena promotes the development of economy of motion, which can improve precision and accuracy. Finally, haptic feedback is preserved because the realism of the inanimate trainer is maintained. This study profiles the EP's applicability and performance in a validated laparoscopic skills and suturing training program.

METHODS

Program Summary

All participants in this study attended the Rosser Top Gun Laparoscopic Skills and Suturing Program, previously presented by Rosser et al.6 This program consists of a pretutorial suturing trial and a written pretest to establish starting skill level and cognitive parameters, followed by 10 trials of preparatory drills, a suturing algorithm lecture, a posttutorial written test, and finally 10 intracorporeal suturing trials. Top Gun's main purpose is to rapidly establish a skill set for intracorporeal suturing.

The EP Top Gun curriculum offers 2 new features. First, EP scoring incorporates errors, rewarding accuracy and economy of motion: each error adds a 5-second penalty to the time required to complete the exercise. Second, the rope pass drill is still completed but has been excluded from the analysis in this study because it does not yet feature the EP.
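The penalty rule amounts to a simple linear score: penalized time = measured time + 5 s × errors. A minimal Python sketch follows; the function and constant names are illustrative only and are not part of the EP, which records time by stopwatch and errors on a mechanical counter. Because the rule is linear, applying it to the EP group's mean totals reported in the Results yields the group's mean penalized totals.

```python
# Sketch of the EP penalty rule: each recorded error adds 5 seconds to the
# measured completion time. Names here are illustrative, not from the EP itself.
ERROR_PENALTY_S = 5.0  # seconds per error, as stated in the text


def penalized_score(total_time_s: float, errors: float) -> float:
    """Return completion time plus a fixed time penalty per recorded error."""
    if total_time_s < 0 or errors < 0:
        raise ValueError("time and error count must be non-negative")
    return total_time_s + ERROR_PENALTY_S * errors


# EP-group mean totals over 10 trials (seconds, errors), from the Results.
ep_means = {
    "Cup Drop": (1661, 54.49),
    "Triangle Transfer": (556, 167.57),
    "Suturing": (1777, 90.46),
}

for drill, (time_s, errs) in ep_means.items():
    print(f"{drill}: {penalized_score(time_s, errs):.2f} s")
```

For the Cup Drop drill, for example, 54.49 errors add 272.45 seconds to the 1661-second mean total.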

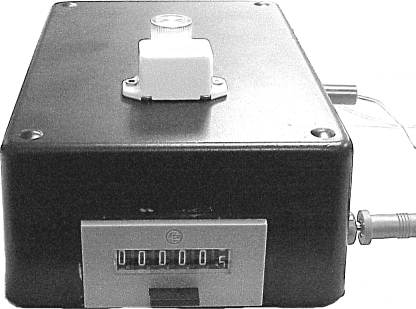

The EP tabulates errors by means of an electromechanical counter that is activated whenever the boundaries of the training arena are violated (Figure 1). As the participant performs the dexterity drills and suturing exercises, each error is announced immediately by a battery-powered buzzer and a red light.

Figure 1.

Electromechanical counter device of electronic proctor.

The EP is currently operational for 3 drills: the “Cup Drop,” the “Triangle Transfer,” and the “Interrupted Intracorporeal Suture” (Figure 2). All exercises, including the Interrupted Intracorporeal Suture, are performed in a standardized laparoscopic trainer (Minimal Access Therapy Technique [MATT] Trainer; Limbs & Things Ltd, Bristol, UK). The defined task boundaries are electrically conductive and are wired to the EP. Each time an errant instrument movement touches these boundaries, the circuit is completed, an error is registered, a buzzer sounds, and a red light flashes. Time is measured manually with a stopwatch, and the cumulative total of errors is recorded by a mechanical counter incorporated within the EP. The instructors record the number of errors and the time on score sheets.

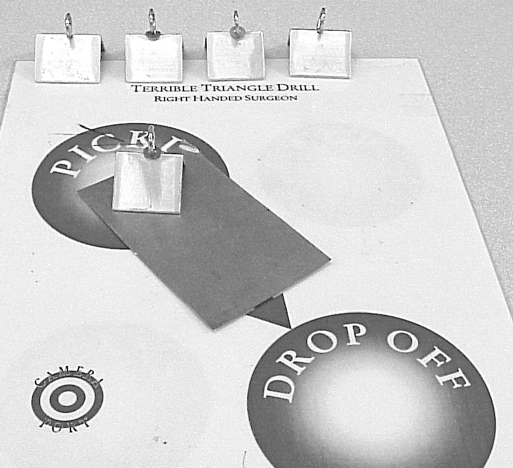

Figure 2.

Template of Triangle Transfer drill with electronic proctor device.
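The contact-to-error logic described for the EP can be modeled in software as counting the moments at which the boundary circuit closes. The sketch below is a hypothetical analog of the EP's mechanical counter, assuming the circuit state is sampled as a sequence of booleans and that one continuous contact counts as a single error; the text does not say how the hardware handles sustained contact or contact bounce.

```python
from typing import Iterable


def count_contact_errors(samples: Iterable[bool]) -> int:
    """Count errors as open-to-closed transitions of the boundary circuit.

    Each sample is True while the instrument touches a conductive boundary
    (circuit closed) and False otherwise. A continuous contact is counted
    once, on the sample where the circuit first closes (an assumption).
    """
    errors = 0
    previously_closed = False
    for closed in samples:
        if closed and not previously_closed:
            errors += 1  # new contact: the EP would also sound the buzzer and flash the light
        previously_closed = closed
    return errors


# Two separate touches (one lasting three samples) register as two errors.
trace = [False, True, True, True, False, False, True, False]
print(count_contact_errors(trace))  # 2
```

Counting transitions rather than closed samples keeps the count independent of how long each contact lasts, which matches the text's description of one error per boundary touch.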

Cup Drop Drill

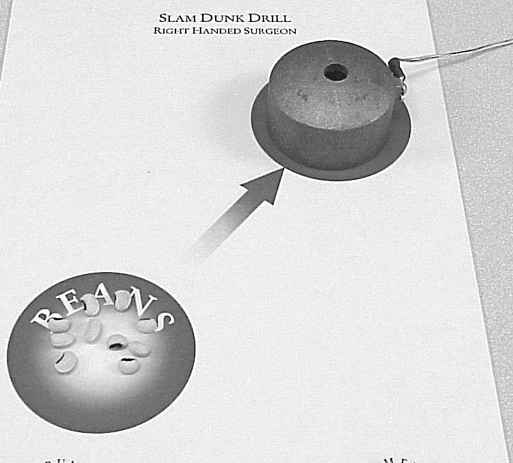

The “Cup Drop” exercise helps develop nondominant hand dexterity, 2-D depth perception, eye-hand coordination, and fine motor control. In this exercise, black-eyed peas are grasped from a designated circle on the template and transferred to a dome-shaped copper canister, where each pea is dropped through a circular aperture 1 cm in diameter (Figure 3). The parameters for acceptable economy of movement in this drill are defined by the width of the base and the height of the copper canister, and the participant must maintain precise control of the instrument within this 98.96-cm³ space. No errors are recorded as long as the participant works within the defined arena; errors are counted only when the electroconductive surfaces come into contact. The instrument for this drill is an endoscopic grasper (EndoGrasp; Ethicon Endo-Surgery, Cincinnati, OH), which is used to grasp each pea individually, position it over the canister, and drop it through the aperture from a minimal height without contacting the canister. The grasper and copper canister are electrically wired to the EP. When the grasper contacts the copper canister, the circuit is completed, the bulb illuminates, the buzzer sounds, and an error is recorded. The stopwatch is started just as the participant is ready to grasp the first pea. The drill is completed by successfully transferring 10 peas into the canister, and the stopwatch is stopped when the tenth pea enters the canister.

Figure 3.

Template of Cup Drop drill with electronic proctor device.
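The Cup Drop timing and completion rules can likewise be expressed as a scoring function over a time-ordered event log. In the study, time was taken by stopwatch and errors by the EP's counter, so the event names ("start", "contact", "pea_in") and the log format below are hypothetical, chosen only to illustrate the rules.

```python
from dataclasses import dataclass
from typing import List, Tuple

PEAS_PER_TRIAL = 10  # the drill ends when the tenth pea enters the canister


@dataclass
class TrialResult:
    time_s: float
    errors: int


def score_cup_drop_trial(events: List[Tuple[float, str]]) -> TrialResult:
    """Score one Cup Drop trial from a time-ordered (timestamp, kind) log.

    Hypothetical event kinds: "start" (stopwatch started), "contact"
    (grasper touched the canister, i.e. one EP error), and "pea_in"
    (a pea passed through the aperture).
    """
    start = None
    errors = 0
    peas = 0
    for t, kind in events:
        if kind == "start":
            start = t
        elif start is None:
            continue  # assumption: events before the stopwatch starts are ignored
        elif kind == "contact":
            errors += 1
        elif kind == "pea_in":
            peas += 1
            if peas == PEAS_PER_TRIAL:
                return TrialResult(time_s=t - start, errors=errors)
    raise ValueError("trial incomplete: fewer than 10 peas transferred")


log = [(0.0, "start"), (8.2, "pea_in"), (9.0, "contact")]
log += [(10.0 + i, "pea_in") for i in range(9)]
print(score_cup_drop_trial(log))  # TrialResult(time_s=18.0, errors=1)
```

Ten such trial results, summed, give the per-participant totals used in the analysis.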

Triangle Transfer

The “Triangle Transfer” drill helps develop nondominant hand dexterity, targeting, 2-D depth perception compensation, and fine motor control. With the nondominant hand, the participant uses a needle holder holding a curved needle in a flat configuration to transfer 5 triangular metallic objects from a “pick up” area to a “drop off” area (Figure 2). Each metal triangle is crowned by a 1-cm-diameter metallic loop on its apex, positioned on end so that the participant cannot see its aperture; the position of the aperture must be anticipated through 2-D depth perception compensation. After the needle is passed through the loop, the triangle is elevated and moved to the drop off area. The needle is then abducted and rotated free from the loop. The acceptable space for economy of motion is the 39.2-cm³ volume that begins above the base of the triangle and extends to the top of the loop. Participants have to repeat the drill 5 times, and the time and number of errors are recorded. The stopwatch is started when the participant is ready to come down with the needle to lift the first triangle and is stopped when all the triangles have been placed in the drop zone. Each time the needle comes into contact with the triangle or the template, the circuit is completed, and a buzzer and a light switch on to indicate that an error has occurred.

Interrupted Intracorporeal Suturing

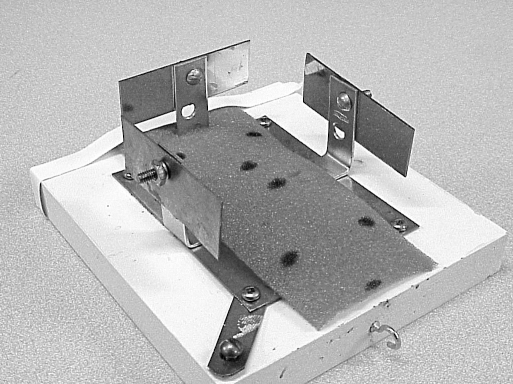

The third task is “Interrupted Intracorporeal Suturing,” which uses a detailed suturing algorithm previously described by Rosser et al.12 The suturing arena is a 300-cm³ space within which all required movements should take place (Figure 4). Uncontrolled movements that breach the boundaries of acceptable economy of movement trigger the recording of errors. The box has openings in the corners at the 11 o’clock and 1 o’clock positions that reinforce the maneuvers that produce a square knot. It also promotes safety by stressing forward deflection of the needle. This device, along with the suturing instruments (Rosser Signature Series Needle Holder; Stryker, Kalamazoo, MI, USA), is electrically wired to the EP. The time and number of errors are recorded. Time is measured from when the participant grabs the suture at the grasping point outside the drill arena until he or she completes the entire suturing algorithm.

Figure 4.

Electronic proctor suturing device.

RESULTS

Thirteen courses were conducted using the standardized EP devices between January 2001 and May 2003. A total of 117 participants used the EP platform in some capacity. If an individual took the course more than once, only the initial 10 trials were used in this study. Two courses held in 2001, with 29 participants, lacked the EP devices for the Cup Drop and Triangle Transfer drills; these participants were not included in the percentile rankings. In addition, 15 individuals left the course early and were therefore unable to complete all 10 trials; they were likewise excluded from the percentile comparison.

For those who completed 10 trials of all 3 specified drills using the EP devices (n=73), percentile rankings were calculated. First, a percentile ranking was established from the total time taken to complete 10 repetitions of each exercise and compared with a percentile ranking database created during earlier Top Gun courses, which did not feature error tabulation.6,12 Then, with each error adding a 5-second penalty, the percentile ranking was recalculated and compared with the ranking based on time alone (Table 1).

Table 1.

Three Drill Percentile Comparison

| Ranking basis | Cup Drop | Triangle Transfer | Suturing |
|---|---|---|---|
| Percentile (Time) | 18.3% | 22.6% | 36.7% |
| Percentile (Time + Errors) | 9.9% | 0.1% | 17.7% |
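The paper does not state the exact percentile formula, so the following is a sketch under one common convention, which is an assumption: a participant's percentile rank is the percentage of reference (pre-EP) totals that are slower than the participant's total, so shorter times earn higher ranks, and ties are not counted as outperformed. Under any such convention, adding the 5-second error penalty can only raise a participant's score and therefore can only lower the rank, which is the direction seen in Table 1.

```python
from bisect import bisect_right
from typing import Sequence


def percentile_rank(score: float, reference: Sequence[float]) -> float:
    """Percentage of reference scores that are slower (larger) than `score`.

    Lower scores (shorter times) are better, so a higher rank means better
    performance. Ties with reference scores are not counted as outperformed.
    """
    if not reference:
        raise ValueError("reference database is empty")
    ordered = sorted(reference)
    not_slower = bisect_right(ordered, score)  # number of reference scores <= score
    return 100.0 * (len(ordered) - not_slower) / len(ordered)


reference = [100, 120, 140, 160, 180]  # hypothetical pre-EP totals (seconds)
print(percentile_rank(150, reference))          # 40.0
print(percentile_rank(150 + 5 * 6, reference))  # 0.0 after a 6-error penalty
```

For a database of several hundred participants, sorting once and reusing the sorted list would avoid re-sorting on every call.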

The performance of individuals using the EP system was compared with that of participants who had taken the Top Gun course before the EP was introduced. This pre-EP database includes the scores of 744 participants.

Looking purely at time, we analyzed the sum of the 10 trials for each participant on all 3 drills tested. Assuming equal variances, we compared the mean totals of those who used the EP (n=73) with those of participants who performed the same drills without the EP (n=744). For all 3 drills, the EP group took significantly longer (Table 2).

Table 2.

Total Time Comparison of Electronic Proctor (EP) and Nonelectronic Proctor (Non-EP)

| Drill | Group | N | Mean Total Time (seconds) | P Value | t | Degrees of Freedom |
|---|---|---|---|---|---|---|
| Cup Drop | EP | 73 | 1661 | <0.001 | 6.725 | 814 |
| | Non-EP | 744 | 1252 | | | |
| Triangle Transfer | EP | 73 | 556 | <0.001 | 4.447 | 814 |
| | Non-EP | 744 | 454 | | | |
| Suturing | EP | 73 | 1777 | 0.040 | 1.150 | 814 |
| | Non-EP | 744 | 1682 | | | |
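The equal-variance comparison summarized in Table 2 is Student's two-sample t test. A dependency-free sketch of the statistic is shown below, using made-up data rather than the study's; the function name is hypothetical. Readers using SciPy can obtain the statistic together with a two-sided P value from `scipy.stats.ttest_ind(a, b, equal_var=True)`.

```python
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def pooled_t_test(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int]:
    """Student's two-sample t statistic assuming equal variances.

    Returns (t, df) with df = n_a + n_b - 2. Converting t to a P value
    requires the t distribution CDF, e.g. from a statistics library.
    """
    n_a, n_b = len(a), len(b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two observations")
    df = n_a + n_b - 2
    # Pooled variance: sample variances weighted by their degrees of freedom.
    pooled_var = ((n_a - 1) * variance(a) + (n_b - 1) * variance(b)) / df
    se = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(a) - mean(b)) / se, df


# Hypothetical per-trial times (seconds) for two small groups.
with_ep = [170, 160, 180, 165, 175]
without_ep = [120, 130, 125, 135, 115]
t, df = pooled_t_test(with_ep, without_ep)
print(f"t = {t:.2f}, df = {df}")  # t = 9.00, df = 8
```

A positive t here means the first group (with the EP) took longer on average, which is the direction reported for all 3 drills.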

In each of the 3 drills evaluated, consideration of errors lowered the overall percentile ranking. For the Cup Drop drill, the ranking decreased from the 18.3rd to the 9.9th percentile as a result of a mean total of 54.49 errors. For the Triangle Transfer drill, the group ranking decreased severely, from the 22.6th to the 0.1st percentile, as a result of a mean total of 167.57 errors. Lastly, the ranking for the Intracorporeal Suturing drill dropped from the 36.7th to the 17.7th percentile as a result of a mean total of 90.46 errors.

A long-term goal of this study is to establish a database similar to that created under Top Gun, whereby all those who participate in the EP course can be registered and ranked by their performance among each of these aforementioned skill sets.

CONCLUSION

The emphasis on preparatory training and evaluation outside the operating room has become a long-overdue focal point. Such preemptive preparation has a long and distinguished track record in the aerospace industry.8 Similar profiles must be established in the medical profession if we are to protect our patients maximally and generate good outcomes. In spite of compelling technological advances and appliance development, operative skill and the ability to suture remain the mainstays of surgeon confidence, patient protection, and, ultimately, procedure proliferation. We must guard against complacency and stop the erosion of this ideal. Mechanical suturing devices, such as the EndoStitch (US Surgical; Norwalk, CT) and the Suture Assist (Ethicon Endo-Surgery; Cincinnati, OH), can effectively decrease the skill requirement for intracorporeal suturing; however, conventional suturing skills should be readily available in the event of mechanical failure or lack of clinical applicability. Concerns about complacency in skill development are well founded: residents at UC Davis Medical Center reported that they prefer to use the EndoStitch for intracorporeal knot tying.1 The cost of these advanced mechanical tools creates an economic concern as well, and these issues will become increasingly important in the future. Above all, these technological advancements should accompany, not replace, conventional laparoscopic skill and suturing capability.

If we are to execute an effective “practice before you play” training philosophy, the shortcomings of our traditional tabletop training platforms must be addressed. These include the lack of economy-of-motion evaluation and error calculation to augment time as a parameter of performance. Computer-based virtual reality platforms offer a tremendous step forward but are still in their infancy; cost-effectiveness, reliability, and realism remain issues to be addressed. Hamilton et al13 showed that the operative performance of residents trained on virtual reality simulators did not differ significantly from that of residents trained on a video inanimate trainer. However, 83% of residents considered the inanimate trainers more effective training tools than the virtual reality trainers because they were more realistic, provided better depth perception, and gave better tactile feedback. Nevertheless, it is reasonable to anticipate that virtual reality simulators may represent the future of surgical training and evaluation.7

The EP represents a hybrid training platform that attempts to address the shortcomings of the 2 current training systems. The EP performed effectively in the validated “Rosser Top Gun Laparoscopic Skills and Suturing Program.” No significant technical failures occurred, and no modification of the core curriculum was needed to accommodate the appliance. As expected, adding error penalties lowered performance rankings relative to the early Top Gun database, which did not consider errors. An interesting finding is that, even without considering errors, the EP group needed significantly more time to complete each drill. This suggests that the electronic proctor could have an internal guidance capability. Future studies should evaluate whether real-time error announcement increases the time to complete tasks, decreases the number of participant errors, or both. Another open question is whether the EP's feedback is as effective as an onsite instructor in promoting skill transfer. If it is, this could be a major step toward solving the proctor recruitment challenges that hinder many training efforts.

References:

1. Pearson AM, Gallagher AG, Rosser JC, Satava RM. Evaluation of structured and quantitative training methods for teaching intracorporeal knot tying. Surg Endosc. 2002;16(1):130–137.
2. Krahenbuhl L, Sclabas G, Wente MN, Schafer M, Schumpf R, Buchler MW. Incidence, risk factors, and prevention of biliary tract injuries during laparoscopic cholecystectomy in Switzerland. World J Surg. 2001;25(10):1325–1330.
3. Strasberg SM, Hertl M, Soper NJ. An analysis of the problem of biliary injury during laparoscopic cholecystectomy. J Am Coll Surg. 1995;180:101–125.
4. Medical Errors: The Scope of the Problem. Fact sheet, Publication No. AHRQ 00-P037. Rockville, MD: Agency for Healthcare Research and Quality. Available at: http://www.ahrq.gov/qual/errback.htm. Accessed November 1999.
5. Rosser JC Jr, Murayama M, Gabriel N. Minimally invasive surgical training solutions for the twenty-first century. Surg Clin North Am. 2000;80(5):1607–1624.
6. Rosser JC Jr, Rosser LE, Savalgi RS. Objective evaluation in laparoscopic surgical skill program for residents and senior surgeons. Arch Surg. 1998;133(6):657–661.
7. Scott D, Bergen P, Rege R. Laparoscopic training on bench models: better and more cost effective than operating room experience? J Am Coll Surg. 2000;191(3):272–283.
8. Hasson HM. New paradigms in surgical education: web based learning and simulation. Laparoscopy Today. 2005;3(2):9–11.
9. Hyltander A, Liljegren E, Rhodin PH, Lonroth H. The transfer of basic skills learned in a laparoscopic simulator to the operating room. Surg Endosc. 2002;16(9):1324–1328.
10. Seymour NE, Gallagher AG, Roman SA, et al. Virtual reality training improves operating room performance. Ann Surg. 2002;236(4):458–464.
11. Grantcharov TP, Rosenberg J, Pahle E, Funch-Jensen PM. Virtual reality computer simulation: an objective method for evaluation of laparoscopic surgical skills. Surg Endosc. 2001;15:242–244.
12. Rosser JC, Rosser LE, Raghu SS. Skill acquisition and assessment for laparoscopic surgery. Arch Surg. 1997;132(2):200–204.
13. Hamilton EC, Scott DJ, Fleming JB, Rege RV, Laycock R, Bergen PC, Tesfay ST, Jones DB. Comparison of video trainer and virtual reality training systems on acquisition of laparoscopic skills. Surg Endosc. 2002;16(3):406–411.