Abstract

Objective:

In our effort to establish criterion-based skills training for surgeons, we assessed the performance of 17 experienced laparoscopic surgeons on basic technical surgical skills, recorded electronically in 26 modules selected from 5 commercially available, computer-based simulators.

Methods:

Performance data were collected from selected surgeons who were randomly assigned to simulator stations and practiced repeatedly on 5 different simulators during sessions held over one and one-half days. We measured surgeon proficiency, defined as efficient, error-free performance, and developed a proficiency score formula for each module. Demographic and opinion data were also collected.

Results:

Surgeons' performance demonstrated a sharp learning curve, with the greatest improvement seen in early practice attempts. Median scores and performance levels at the 10th, 25th, 75th, and 90th percentiles are provided for each module. Construct validity was examined for 2 modules by comparing experienced surgeons' performance with that of a convenience sample of less-experienced surgeons.

Conclusion:

A simple mathematical method for scoring performance is applicable to these simulators. Proficiency levels for training courses can now be specified objectively by residency directors and by professional organizations for different levels of training or for post-training assessment of technical performance. However, users of these data should be cautious because of the small sample size in this study and the need for further study of the reliability and validity of surgical simulators as assessment tools.

Keywords: Surgical simulation, Proficiency scores, Laparoscopic surgery, Experienced surgeons

INTRODUCTION

The 1999 Institute of Medicine report To Err Is Human1 riveted the medical establishment's attention on errors made during patient care. A significant portion of these errors occurred during the care of surgical patients, and the report made recommendations for mitigating them. Also in 1999, the Accreditation Council for Graduate Medical Education (ACGME) endorsed 6 competencies required for resident medical education.2–4 Two of these, Patient Care and Practice-Based Learning, concern several components of surgical management, one of which is technical competence in conducting surgical procedures. By 2002, training programs were required to implement the ACGME recommendations to achieve program certification. Simultaneously and independently, surgical simulation has become established as a valid technique for training novice surgeons in basic surgical skills, and simulator studies have shown that novices perform worse than experienced surgeons.5–7

Performance can be measured electronically on many surgical simulators, thereby affording objective assessments of technical competency that were not possible with prior methods of training and assessment.6,8–11 Because no standards have been developed, each commercially available simulator reports its own measures of performance and errors, and these differ between systems. The metrics include distances traveled by instrument tips (mm) in pursuit of a prescribed target, an economy measure (%) that relates the distance traveled to the direct distance, smoothness of movement (a rate), the percentage of targets touched and transferred, and the number (#) of minor or major errors, among others (Appendices 1–4). This diverse set of outputs provides immediate feedback to users, but only a few (such as time taken) can also be used to determine normative performance across the various commercially available simulators. This research project grew out of the need to document these metrics, to establish performance data for guiding the use of simulators in surgical training, and to develop a criterion-based training capability useful to residency program directors, vendors, and professional surgical organizations that seek to adopt surgical simulation as a learning and assessment technology.

METHODS

The Surgical Simulation Committee of the Society of Laparoendoscopic Surgeons (SLS) (Drs Satava, McDougall, Hasson, Heinrichs, Youngblood, Wetter) authorized SUMMIT to conduct this study before the 15th Annual Meeting in San Diego, California, in September 2005. Committee members and vendors met at SUMMIT on July 25 to review the modules of each simulator and to select the 26 modules to be performed (Table 1). On the basis of professional reputation for surgical excellence and volume of surgical cases, laparoscopic surgeons were recruited by committee members not conducting the trials: 7 in General Surgery, 6 in Obstetrics and Gynecology, and 3 in Urology (one surgeon's specialty was unknown). The 17 surgeon-participants included members of the following professional organizations: the American Association of Gynecologic Laparoscopists, American College of Surgeons, American Urological Association, Society of American Gastrointestinal and Endoscopic Surgeons, and Society of Laparoendoscopic Surgeons. The participants were paid to join this one-and-one-half-day study group and provide their performance of surgical skills in an IRB-approved study. The number and type of systems available from vendors were Lap Mentor (2, Simbionix, Cleveland, OH), LapSim (4, Surgical-Science AB, Göteborg, Sweden), LTS2000 ISM60 (4, RealSim, Albuquerque, NM), ProMIS (2, Haptica, Boston, MA), and SurgicalSIM (3, METI, Sarasota, FL).

Table 1.

Modules/Tasks Selected for Each Simulator

| Simulator / Module | Skills for Completing the Tasks |
|---|---|
| Lap Mentor | |
| Camera navigation - 0° | Navigate to target, fix on target, activate hand signal of completion |
| Camera navigation - 30° | Same as for 0° endoscope |
| Eye-hand coordination | Navigate instruments to targets, touch target to signal completion |
| Clip applying | Navigate instrument to target, apply clip(s) |
| Grasping and clipping | Select instruments, navigate to target, grasp tube, retract & clip |
| Two-handed maneuvers | Select instruments, navigate, retract, grasp, transfer, & place |
| Cutting - dissecting | Select instruments, navigate, grasp, retract, expose, excise |
| Hook electrodes | Navigate, identify & hook (band), expose, desiccate (foot pedal) |
| Translocation of objects | Navigate, elevate, rotate, orient, transfer, place |
| LapSim | |
| Camera navigation | Navigate camera to target, fix on target, hold |
| Eye-hand coordination | Navigate instruments to target, touch target |
| Grasping | Navigate, grasp, extract, transfer, insert, place |
| Grasping & cutting | Navigate, grasp, retract, incise, place |
| Lifting & grasping | Navigate, expose, grasp, transfer, place |
| Suturing | Navigate, grasp, penetrate target, rotate, grasp, tie square knot |
| LTS2000 ISM60 | |
| Peg manipulation | Navigate, grasp, transfer, place, release |
| Ring manipulation | Navigate, grasp, rotate, traverse, guide, stretch, place, release |
| Ductal cannulation | Navigate, grasp, push to cannulate, grasp, extract |
| Lasso loop formation & cinching | Navigate, grasp suture, loop instrument around, navigate to suture end, grasp and pull; repeat to make lasso, place onto peg, and pull |
| Intracorporeal suturing | Navigate, grasp, penetrate target, rotate, grasp, tie knot, test |
| Tissue “disc” dissection | Navigate, grasp, incise, rotate, elevate, release |
| ProMIS | |
| Object positioning: grasp & transfer | Navigate, grasp, transfer |
| Sharp dissection: cut out circle | Navigate, grasp, position, incise, rotate, excise repeatedly |
| Knot tying: surgeon's knot | Navigate, grasp suture, loop instrument around, navigate to suture end, grasp and pull; repeat twice |
| Surgical SIM | |
| Retract-dissect | Navigate, grasp, navigate, desiccate, repeat |
| Traverse tube | Navigate, grasp, navigate, grasp, and other steps |
| Place arrow | Navigate, grasp, navigate, grasp, place, hold, repeat |
| Dissect gallbladder | Navigate, grasp, retract, navigate, desiccate, excise |

Data were collected anonymously, and participants completed 2 questionnaires: one providing demographic information and the other a rating scale filled out immediately after they completed their last performance on each simulator. Participants were assigned randomly to the systems. Each system was first demonstrated by trained personnel, who then answered the participants' questions before logging them into the system. After the demonstration, surgeons completed the first module at least once and repeated it if time was available before they were signaled to move to another system; performance data were collected on all trials. After each trial, assistants logged participants out and saved their results. On Day 1, 35 minutes were allocated to each system; later sessions allocated 30 minutes per system. To accumulate the maximum number of performances, a flexible schedule allowed participants to complete a module before moving to their next assigned system. The mean number of trials per surgeon was 3.5, and the maximum was 10. A preliminary report of this study has been presented.12

These procedures are very similar to those developed and used on 2 previous occasions to collect data from a “convenience sample” of attendees at the 2004 annual meetings of the SLS and the AAGL in New York City and San Francisco, respectively.13 These trials, used in this report as a reference sample of less-experienced surgeons, were limited to the Peg Manipulation module of the LTS2000 and the Lifting and Grasping module of the LapSim. They were not timed and were not designed to be repetitive, although some surgeons performed the modules more than twice.

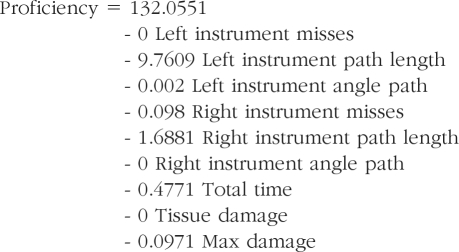

We developed a proficiency score formula for each module of the form $b_0 + b_1X_1 + b_2X_2 + \cdots + b_kX_k$, where $b_0, b_1, b_2, \ldots, b_k$ are constants (called coefficients) and $X_1, X_2, \ldots, X_k$ are the measures (variables) recorded in the module. As an example, one possible proficiency score formula is Proficiency score = 120 − (2 × Time) − (4 × Errors). The number 120 is arbitrary and can be adjusted upward or downward to shift all of the scores by the same amount. Achieving the theoretical maximum score of 120 would require making zero errors and using zero time during a performance, and zero time is obviously impossible. The coefficient of each variable indicates the amount by which the proficiency score changes for each unit increase in that measure. In the example formula above, each additional error decreases the proficiency score by 4 points.
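Because the scoring rule is an ordinary linear combination, it can be sketched in a few lines of Python. The function name `proficiency_score` and the keys `time` and `errors` are illustrative placeholders, not identifiers from any simulator, and the coefficients are those of the hypothetical 120 − 2 × Time − 4 × Errors example rather than a fitted module formula.

```python
def proficiency_score(intercept, coefficients, measures):
    """Linear proficiency score b0 + b1*X1 + ... + bk*Xk.

    `coefficients` and `measures` are dicts keyed by variable name;
    negative coefficients penalize time, errors, path length, etc.
    """
    return intercept + sum(coefficients[name] * measures[name] for name in coefficients)


# The illustrative formula from the text: 120 - (2 x Time) - (4 x Errors).
example_coefficients = {"time": -2.0, "errors": -4.0}

score = proficiency_score(120.0, example_coefficients, {"time": 10.0, "errors": 2.0})
one_more_error = proficiency_score(120.0, example_coefficients, {"time": 10.0, "errors": 3.0})
print(score)                   # 92.0
print(score - one_more_error)  # 4.0
```

Because each term is additive, the difference between the two calls isolates the 4-point penalty per additional error.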

The analysis assumes that the proficiency levels of our participants (the experts) are at least 50 on a 0–100 scale, that proficiency increases with practice, and that the best performances are near 100. We compared other formulas that assumed a longer time to plateau in proficiency scores, but the data reported below represent the “best fit” to the formulas.

RESULTS

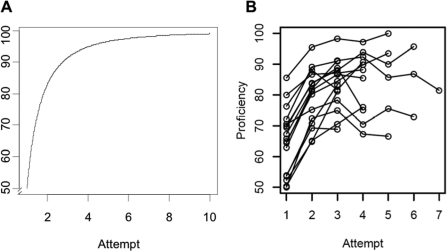

The dataset for this benchmark study comprises 204 measured variables across the 26 selected modules, each of which was performed 0 to 10 times by each of the 17 surgeons. As expected, and as illustrated in Figure 1, the earliest practice attempts show a sharp learning curve, followed by smaller improvements in proficiency score. Table 2 provides the data that guided our decision to use attempt #4 for presenting benchmark data: of the 204 variables across all modules, 183 (90%) showed their largest change between attempts by attempt #4.

Figure 1.

Graph of proficiency scores: (A) ideal practice curve; (B) lifting and grasping module of LapSim.

Table 2.

| The Change Between Attempts | Was Largest for This Many Variables (out of 204) |
|---|---|
| #1–#2 | 100 |
| #2–#3 | 73 |
| #3–#4 | 10 |
| #4–#5 | 3 |
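The attempt-#4 cutoff can be reproduced from Table 2. The sketch below assumes that “change” means the absolute difference between a variable's values on consecutive attempts (the text does not say whether direction was considered). The helper `interval_of_largest_change` and its sample data are hypothetical; only the counts come from Table 2.

```python
def interval_of_largest_change(values):
    """Return the 1-based attempt pair (i, i + 1) with the largest absolute change.

    Ties go to the earliest interval.
    """
    changes = [abs(b - a) for a, b in zip(values, values[1:])]
    i = max(range(len(changes)), key=changes.__getitem__)
    return (i + 1, i + 2)


# Hypothetical values of one measured variable over 5 attempts.
print(interval_of_largest_change([60.0, 75.0, 82.0, 84.0, 85.0]))  # (1, 2)

# Counts from Table 2: variables whose largest change fell in each interval.
TABLE_2 = {(1, 2): 100, (2, 3): 73, (3, 4): 10, (4, 5): 3}
TOTAL_VARIABLES = 204

by_attempt_4 = sum(n for (_, end), n in TABLE_2.items() if end <= 4)
print(by_attempt_4, round(100 * by_attempt_4 / TOTAL_VARIABLES))  # 183 90
```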

We present Attempt #2 data for the Lap Mentor tasks because fewer data were available for these tasks.

Because on average about 2.1 more surgeons completed Attempt #3 than Attempt #4, the accompanying website presents data for both Attempts #3 and #4.

Performance levels at the 10th, 25th, 50th (median), 75th, and 90th percentiles characterize the behavioral (performance) domain of experienced surgeons on each module. Table 3 gives these values for the LapSim Lifting and Grasping module; see Appendices 1–5 for the remaining data. To provide the most uniform dataset and proficiency scores, data points more than 2 SD from the mean were excluded to reduce the influence of outliers.
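A minimal sketch of these two summary steps follows. It assumes that the 2-SD exclusion is applied separately to each variable using the sample SD and that percentiles are computed by linear interpolation between ranked values; the study states neither choice. Table 3 appears to report performance percentiles, so for measures where lower is better (time, path length, damage) the 10th-percentile column holds the largest raw values. The `lower_is_better` flag mirrors the percentile to imitate that convention, which is an inference from the table rather than a documented rule. All function names and the sample times are hypothetical.

```python
import statistics


def trim_outliers(values, k=2.0):
    """Keep only points within k sample SDs of the mean (k = 2 in this study)."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample SD; needs at least 2 values
    return [v for v in values if abs(v - mean) <= k * sd]


def percentile(values, p, lower_is_better=False):
    """Linear-interpolation percentile of `values` (p on a 0-100 scale).

    If lower raw values mean better performance (time, errors, path length),
    the p-th performance percentile is read from the (100 - p)-th raw quantile.
    """
    if lower_is_better:
        p = 100 - p
    data = sorted(values)
    pos = (len(data) - 1) * p / 100  # fractional rank into the sorted list
    lo = int(pos)
    hi = min(lo + 1, len(data) - 1)
    return data[lo] + (data[hi] - data[lo]) * (pos - lo)


# Hypothetical completion times (seconds) for one module.
times = [44.0, 47.5, 52.0, 55.5, 61.0, 70.0, 73.5, 140.0]
kept = trim_outliers(times)
row = [percentile(kept, p, lower_is_better=True) for p in (10, 25, 50, 75, 90)]
print(kept)
print(row)
```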

Table 3.

LapSim: Variables Measured and Criterion Percentile, Lifting and Grasping

| Variable | 10 | 25 | 50 | 75 | 90 |
|---|---|---|---|---|---|
| Left instrument misses | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Left instrument path length | 1.593 | 1.562 | 1.442 | 1.332 | 1.183 |
| Left instrument angle path | 432.997 | 358.058 | 333.868 | 315.473 | 306.557 |
| Right instrument misses | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Right instrument path length | 1.81 | 1.626 | 1.497 | 1.443 | 1.17 |
| Right instrument angle path | 483.922 | 381.742 | 320.654 | 303.44 | 293.167 |
| Total time | 74.787 | 71.879 | 52.455 | 46.602 | 43.455 |
| Tissue damage | 5 | 3.5 | 2 | 1 | 0 |
| Maximum damage | 45.042 | 33.066 | 17.529 | 5.035 | 3.323 |
| Proficiency score | 70.509 | 75.581 | 88.227 | 91.579 | 93.929 |

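Each proficiency score has behind it a formula that combines the measures taken by the simulator into a single score. For LapSim Lifting and Grasping (Appendix 1, Module 5), that formula is:

Proficiency = 132.0551 − 9.7609 Left instrument path length − 0.002 Left instrument angle path − 0.098 Right instrument misses − 1.6881 Right instrument path length − 0.4771 Total time − 0.0971 Maximum damage

For example, a surgeon with a median-level performance on each of the variables (the 50th percentile column in Table 3) would have a proficiency score of approximately 88.06. This is close to, but not the same as, the 50th-percentile proficiency score of 88.227 in Table 3, because the median of the surgeons' scores need not equal the score computed from the median of each variable.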
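To make the worked example concrete, the sketch below applies the Appendix 1, Module 5 coefficients to the 50th-percentile column of Table 3. One assumption matters: the coefficient 1.6881 for right instrument path length is printed without a sign in the appendix and is treated here as negative, like every other coefficient in the appendices. The dictionary keys are shorthand labels chosen for this example.

```python
# Coefficients from Appendix 1, Module 5 (LapSim Lifting and Grasping).
# ASSUMPTION: 1.6881 is printed without a sign in the appendix; it is
# treated here as negative, like every other coefficient.
INTERCEPT = 132.0551
COEFFICIENTS = {
    "left_path_length": -9.7609,
    "left_angle_path": -0.002,
    "right_misses": -0.098,
    "right_path_length": -1.6881,
    "total_time": -0.4771,
    "max_damage": -0.0971,
}

# 50th-percentile column of Table 3 for the same variables.
MEDIANS = {
    "left_path_length": 1.442,
    "left_angle_path": 333.868,
    "right_misses": 0.0,
    "right_path_length": 1.497,
    "total_time": 52.455,
    "max_damage": 17.529,
}


def score(measures):
    """Intercept plus the sum of coefficient * measure over all variables."""
    return INTERCEPT + sum(c * measures[name] for name, c in COEFFICIENTS.items())


print(round(score(MEDIANS), 2))  # 88.06
```

The printed value, about 88.06, can be compared with the median proficiency score of 88.227 reported in Table 3.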

Means and SDs were also computed for completeness. However, we prefer percentiles to means and standard deviations because percentiles are less influenced by extreme scores and do not assume normality (see Discussion); means and SDs are therefore reported in the appendices.
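The mean ± SD columns in the appendices are the mean shifted by −1.5, −1, 0, +1, and +1.5 SDs. The sketch below rebuilds one such row. The SD is back-calculated from the published +1 SD entry, so it is an inference rather than a reported value, and `sd_row` is a hypothetical helper.

```python
def sd_row(mean, sd, multiples=(-1.5, -1.0, 0.0, 1.0, 1.5)):
    """Values at mean + k * SD for each multiple k, as in the appendix tables."""
    return [mean + k * sd for k in multiples]


# Appendix 2, Peg Manipulation, Time: the mean is 93.455 and the +1 SD entry
# is 136.292, so the SD is back-calculated as their difference.
mean_time = 93.455
sd_time = 136.292 - mean_time
print([round(v, 2) for v in sd_row(mean_time, sd_time)])
```

Applied to bounded counts such as Tissue damage, the same arithmetic produces impossible negative values (for example, −0.716 in Appendix 1, Module 2), a concrete illustration of why percentiles are preferred here.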

Some participants were unable to complete all 3 half-days because of competing activities and unexpected responsibilities. In addition, one vendor's systems were delayed in US Customs, and 2 vendors provided fewer than the 4 systems ideally needed for this number of participants. As a result, fewer data were collected for those systems, particularly the Lap Mentor.

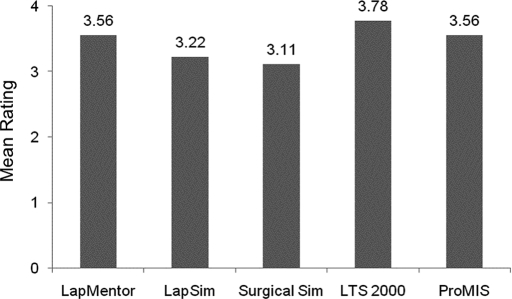

Opinions of Surgeon Users

On the third half-day of the study, the surgeons rated the overall effectiveness of the 5 simulators as training tools (compared with training not given on a computer) on a 4-point scale. Their mean ratings ranged from 3.1 to 3.8, that is, from very good to excellent (Figure 2). Nevertheless, the mean effectiveness ratings for each

Figure 2.

Mean ratings of the effectiveness of the 5 simulators (1 is poor and 4 is excellent).

Reliability

One simple measure of reliability is the correlation between proficiency scores on successive attempts after the learning curve has flattened. We computed the correlations between proficiency scores on attempts 3 and 4 for all systems except the Lap Mentor, for which we had only 2 attempts. The average correlation was 0.65, with a wide range (0.14 to 0.96).
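A sketch of this test-retest check follows. It assumes the Pearson product-moment correlation (the text says only “correlation”) and a plain arithmetic average of the per-module coefficients; averaging through Fisher's z transformation would be a common alternative. The input format, a mapping from module name to attempt-3 and attempt-4 score lists aligned by surgeon, and the function names are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between paired scores from the same surgeons."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 pairs")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def reliability_summary(modules):
    """Average, minimum, and maximum attempt-3 vs attempt-4 correlation.

    `modules` maps a module name to (attempt_3_scores, attempt_4_scores),
    with the two lists aligned by surgeon.
    """
    rs = [pearson_r(a3, a4) for a3, a4 in modules.values()]
    return sum(rs) / len(rs), min(rs), max(rs)


# Hypothetical scores for two modules.
example = {
    "module A": ([80.0, 85.0, 90.0], [82.0, 86.0, 93.0]),
    "module B": ([70.0, 75.0, 88.0], [72.0, 81.0, 84.0]),
}
print(reliability_summary(example))
```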

Validity

One simple test of the validity of our proficiency score formulas is whether they distinguish between the experts in our sample and the “convenience sample” taken at the 2004 SLS and AAGL meetings. Unfortunately, only 2 tasks overlapped between the 2 samples, and the sample sizes were fairly small. Nevertheless, the results suggest some validity for the formulas tested. For the Peg Manipulation task of the LTS2000 simulator, our expert sample had a mean score of 85.49, while the convenience sample had a mean score of 81.43; this difference was not significant (P=0.25). For the Lifting and Grasping task of the LapSim simulator, our expert sample had a mean score of 79.36, while the convenience sample had a mean score of 68.04; this difference was statistically significant (P<0.01). These results are merely suggestive for several reasons: only 2 tasks were available for comparison, and the expert sample was itself used to create the proficiency score formulas. Further work is needed to establish that our proficiency score formulas are valid; for example, a validity study might compare proficiency scores with independent ratings of surgeon performance by experts in the field.
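The text does not name the significance test behind these P values. Purely as an illustration, the sketch below computes Welch's unequal-variance t statistic and its Welch-Satterthwaite degrees of freedom for two independent groups; the helper `welch_t` and the sample scores are hypothetical. Converting the statistic to a P value requires the t distribution, for example via `scipy.stats.ttest_ind(a, b, equal_var=False)`, which is not reproduced here.

```python
import math
import statistics


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    va = statistics.variance(a) / len(a)  # squared standard error of mean(a)
    vb = statistics.variance(b) / len(b)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


experts = [88.0, 91.5, 79.0, 85.0, 90.5]      # hypothetical scores
convenience = [70.0, 66.5, 74.0, 61.0, 69.0]  # hypothetical scores
t, df = welch_t(experts, convenience)
print(round(t, 2), round(df, 1))
```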

DISCUSSION AND CONCLUSION

This study provides the surgical community with the first set of performance data for criterion-based training on 5 surgical simulators, based on the performance of 17 experienced laparoscopic surgeons. Three objectives were met: (1) acquiring standardized data simultaneously from as large a group of experienced surgeons as was practical, (2) providing vendors with data to guide their development of courses for general use, and (3) providing surgical program directors and professional organizations with data for setting standards for criterion-based training and assessment. Using these criteria, training program administrators will tentatively be able to calibrate their training programs and requirements with any of these systems. We say tentatively for three reasons: only experience with the proficiency scores will show which levels of performance are reasonable in practice, none of the simulators was developed as an assessment instrument per se, and future studies should map the link between performance on the simulator tasks and performance in surgery. Although we believe that these data are too few to attempt to certify the technical skills of surgeons with the present systems, they provide a strong resource for guiding residents' self-learning goals and for setting residency achievement benchmarks. They may also inform medical students making career decisions about the level of technical skill required in laparoscopic surgery.

The data provide a criterion against which trainee performance can be evaluated. Two representations of the criterion data were provided: percentiles and means ± SD. We recommend percentiles for criterion setting because this representation is directly interpretable (for example, a trainee's performance may be equivalent to the 25th percentile performance of experienced surgeons), is less influenced than means by extreme scores, and, unlike means with SD, does not depend on an assumption of normality for its interpretation.

A proficiency score at or near the median is consistent with the performance of the middle individual among a group of experienced surgeons who performed the exercise/module; a score at or near the 25th percentile indicates a performance better than that of 25% of the experienced group, and a score at or near the 75th percentile indicates a performance better than that of 75% of the experienced group.
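This interpretation amounts to locating a trainee's score among the experienced-surgeon percentile cut points. The sketch below does so with the proficiency-score row of Table 3 (LapSim Lifting and Grasping). The helper `percentile_band` is hypothetical, and reporting the highest percentile reached is one reasonable convention, not a rule from the study.

```python
import bisect

# Proficiency-score row of Table 3 (LapSim Lifting and Grasping).
PERCENTILES = (10, 25, 50, 75, 90)
CUTOFFS = (70.509, 75.581, 88.227, 91.579, 93.929)


def percentile_band(score, cutoffs=CUTOFFS, percentiles=PERCENTILES):
    """Highest experienced-surgeon percentile whose cut point the score reaches.

    Returns None when the score is below the lowest listed cut point.
    Cut points must be in ascending order.
    """
    i = bisect.bisect_right(cutoffs, score)  # number of cut points <= score
    return percentiles[i - 1] if i > 0 else None


for trainee_score in (65.0, 76.0, 90.0, 95.0):
    print(trainee_score, percentile_band(trainee_score))
```

For example, a trainee scoring 90 reaches the 50th-percentile cut point (88.227) but not the 75th (91.579), while a score below 70.509 falls under the lowest listed percentile.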

As we gain further experience with criterion-referenced data, our objective will become competence-based training that fulfills the ACGME objectives. Academic surgeons, professional societies, and certifying boards must soon adopt training objectives and curricula that move away from the calendar as a training endpoint.14 The United Kingdom has already taken a step in that direction.15,16

The language of metrics used within the surgical community deserves comment. All of the skills required for performing these tasks build on and reflect the inherent abilities of each user, including eye-hand coordination, visual-spatial perception, focus, neuromuscular stability, and similar abilities.17,18 The skills required for the tasks listed in Table 1 improve with practice and are shared by most of the simulators. Beyond tasks, procedures are the product of choreographing multiple tasks that, combined, constitute a surgical manipulation or procedure.19,20 Some systems describe tasks by the names of skills, which confuses users. For example, grasping and transfer or grasping and lifting are individual skills, not tasks, but the LapSim labels the combination of these 2 skills as a task. As simulators evolve, additional graphics and functions are being introduced, moving toward “part-procedure” trainers, and nomenclature, too, has not been standardized across systems.21 Table 1 delineates the skills that make up each task to clarify the nomenclature.

Similarly, the errors recorded vary among modules. In the Peg Manipulation module of the LTS2000, dropping a peg is recorded as one error. Errors could also reflect touching the target with the shaft of a grasper, or striking the edge of a bounding box with the target in transfer, the instrument tip, or the instrument shaft. The LapSim Lifting and Grasping module records errors of several types, such as touching the cover lying over a target object (a surgical needle) with the shaft of a handle or touching the background (producing a red-out), and it records the depth of pressure-distortion of the background. It does not record the number of attempts the user makes to lift the lid, nor the number of times the lid is dropped inadvertently; these are additional features by which stability of performance could be assessed on that module. The Simulation Committee will respectfully address each vendor with suggestions for improving the measures recorded, requesting that such changes be introduced as an incentive for obtaining endorsement from professional surgical societies.

Appendix 1. LapSim Modules (Medium Difficulty) Attempt #4

Module 1: Camera Navigation, 0°

Proficiency = 112.4693 − 3.9135 Path length − 0.3464 Total time − 0.0982 Drift

Variables Measured and Criterion Percentile Values

| Variable | 10 | 25 | 50 | 75 | 90 |
|---|---|---|---|---|---|
| Path length | 2.155 | 1.768 | 1.502 | 1.373 | 1.268 |
| Angular path | 915.586 | 596.561 | 472.468 | 370.479 | 279.943 |
| Total time | 70.359 | 58.448 | 38.893 | 31.447 | 28.312 |
| Drift | 7.417 | 6.516 | 5.372 | 3.829 | 3.302 |
| Tissue damage | 0 | 0 | 0 | 0 | 0 |
| Maximum damage | 0 | 0 | 0 | 0 | 0 |
| Proficiency score | 78.546 | 84.654 | 93.052 | 95.782 | 96.787 |

Means ± Standard Deviations for Each Variable

| Variable | −1.5 SD | −1 SD | Mean | +1 SD | +1.5 SD |
|---|---|---|---|---|---|
| Path length | 1.061 | 1.246 | 1.618 | 1.989 | 2.175 |
| Angular path | 137.723 | 270.31 | 535.483 | 800.656 | 933.242 |
| Total time | 20.839 | 29.405 | 46.537 | 63.668 | 72.234 |
| Drift | 2.467 | 3.386 | 5.224 | 7.062 | 7.981 |
| Tissue damage | 0 | 0 | 0 | 0 | 0 |
| Maximum damage | 0 | 0 | 0 | 0 | 0 |
| Proficiency score | 78.082 | 81.792 | 89.213 | 96.634 | 100.344 |

Module 2: Instrument Navigation

Proficiency = 136.4479 − 36.7202 Left instrument path length − 21.4565 Right instrument path length − 0.012 Right instrument angular path − 0.6106 Right instrument time − 0.2756 Tissue damage − 0.1563 Maximum damage

Variables Measured and Criterion Percentile Values

| Variable | 10 | 25 | 50 | 75 | 90 |
|---|---|---|---|---|---|
| Left instrument path length | 0.06 | 0.65 | 0.72 | 0.77 | 0.81 |
| Left instrument angle path | 168.37 | 180.38 | 204.47 | 228.95 | 245.88 |
| Left instrument time | 9.20 | 10.13 | 11.11 | 12.76 | 14.86 |
| Right instrument path length | 0.58 | 0.62 | 0.70 | 0.74 | 0.81 |
| Right instrument angle path | 131.35 | 142.44 | 155.53 | 180.19 | 194.22 |
| Right instrument time | 9.74 | 11.39 | 14.11 | 15.53 | 17.32 |
| Tissue damage | 0.00 | 0.00 | 1.00 | 1.00 | 4.00 |
| Maximum damage | 0.00 | 0.00 | 0.75 | 1.37 | 5.33 |
| Proficiency score | 77.49 | 78.89 | 84.37 | 88.50 | 93.37 |

Means ± Standard Deviations for Each Variable

| Variable | −1.5 SD | −1 SD | Mean | +1 SD | +1.5 SD |
|---|---|---|---|---|---|
| Left instrument path length | 0.545 | 0.6 | 0.709 | 0.819 | 0.874 |
| Left instrument angle path | 132.508 | 151.354 | 189.045 | 226.736 | 245.582 |
| Left instrument time | 7.639 | 8.783 | 11.071 | 13.36 | 14.504 |
| Right instrument path length | 0.491 | 0.551 | 0.669 | 0.787 | 0.846 |
| Right instrument angle path | 102.534 | 120.756 | 157.202 | 193.648 | 211.87 |
| Right instrument time | 8.284 | 9.736 | 12.64 | 15.545 | 16.997 |
| Tissue damage | −0.716 | 0.038 | 1.545 | 3.053 | 3.807 |
| Maximum damage | −1.015 | −0.182 | 1.484 | 3.15 | 3.983 |
| Proficiency score | 75.113 | 78.898 | 86.466 | 94.035 | 97.819 |

Module 3: Grasping

Proficiency = 111.5076 − 2.9354 Left instrument path length − 0.0013 Left instrument angular path − 0.0632 Left instrument misses − 1.2948 Right instrument path length − 0.2603 Right instrument time − 0.1122 Right instrument misses − 0.1343 Maximum damage

Variables Measured and Criterion Percentile Values

| Variable | 10 | 25 | 50 | 75 | 90 |
|---|---|---|---|---|---|
| Left instrument path length | 2.965 | 2.764 | 2.424 | 1.778 | 1.608 |
| Left instrument angular path | 701.949 | 626.146 | 499.675 | 399.267 | 379.985 |
| Left instrument time | 61.518 | 59.456 | 50.205 | 34.913 | 31.722 |
| Left instrument misses | 0 | 0 | 0 | 0 | 0 |
| Right instrument path length | 2.765 | 2.429 | 2.173 | 1.891 | 1.613 |
| Right instrument angular path | 496.771 | 424.834 | 331.345 | 305.61 | 295.78 |
| Right instrument time | 57.939 | 54.327 | 39.816 | 35.297 | 31.411 |
| Right instrument misses | 0 | 0 | 0 | 0 | 0 |
| Tissue damage | 5 | 5 | 4 | 2 | 1 |
| Maximum damage | 8.207 | 5.748 | 4.453 | 3.055 | 1.43 |
| Proficiency score | 80.914 | 84.84 | 88.821 | 92.713 | 95.749 |

Means ± Standard Deviations for Each Variable

| Variable | −1.5 SD | −1 SD | Mean | +1 SD | +1.5 SD |
|---|---|---|---|---|---|
| Left instrument path length | 1.37 | 1.689 | 2.327 | 2.965 | 3.284 |
| Left instrument angular path | 315.689 | 382.294 | 515.504 | 648.713 | 715.318 |
| Left instrument time | 28.369 | 34.912 | 47.997 | 61.083 | 67.626 |
| Left instrument misses | 0 | 0 | 0 | 0 | 0 |
| Right instrument path length | 1.509 | 1.727 | 2.165 | 2.603 | 2.821 |
| Right instrument angular path | 238.458 | 281.731 | 368.278 | 454.825 | 498.099 |
| Right instrument time | 24.833 | 31.098 | 43.63 | 56.161 | 62.426 |
| Right instrument misses | 0 | 0 | 0 | 0 | 0 |
| Tissue damage | −1.05 | 0.633 | 4 | 7.367 | 9.05 |
| Maximum damage | 0.244 | 1.771 | 4.824 | 7.877 | 9.404 |
| Proficiency score | 79.908 | 82.833 | 88.683 | 94.533 | 97.458 |

Module 4: Cutting

Proficiency = 120.2763 − 0.0461 Cutter angular path − 0.4382 Total time − 0.0685 Maximum stretch damage − 0.1884 Rip failure

Variables Measured and Criterion Percentile Values

| Variable | 10 | 25 | 50 | 75 | 90 |
|---|---|---|---|---|---|
| Cutter path length | 0.685 | 0.594 | 0.487 | 0.417 | 0.324 |
| Cutter angular path | 162.363 | 146.659 | 122.407 | 96.565 | 78.135 |
| Total time | 95.647 | 71.46 | 48.401 | 44.088 | 43.322 |
| Maximum stretch damage | 97.481 | 64.448 | 37.526 | 24.817 | 2.407 |
| Tissue damage | 2 | 1 | 1 | 0 | 0 |
| Maximum damage | 4.688 | 3.73 | 1.534 | 0 | 0 |
| Rip failure | 0 | 0 | 0 | 0 | 0 |
| Drop failure | 0 | 0 | 0 | 0 | 0 |
| Proficiency score | 68.967 | 83.389 | 89.569 | 93.428 | 94.8 |

Means ± Standard Deviations for Each Variable

| Variable | −1.5 SD | −1 SD | Mean | +1 SD | +1.5 SD |
|---|---|---|---|---|---|
| Cutter path length | 0.268 | 0.35 | 0.515 | 0.679 | 0.761 |
| Cutter angle path | 59.773 | 81.837 | 125.965 | 170.092 | 192.156 |
| Total time | 25.18 | 36.957 | 60.511 | 84.065 | 95.842 |
| Maximum stretch damage | −4.899 | 11.828 | 45.282 | 78.735 | 95.462 |
| Tissue damage | −0.922 | −0.312 | 0.909 | 2.13 | 2.741 |
| Maximum damage | −4.058 | −1.746 | 2.876 | 7.499 | 9.81 |
| Rip failure | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| Drop failure | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| Proficiency score | 70.489 | 75.78 | 86.36 | 96.94 | 102.23 |

Module 5: Lifting and Grasping

Proficiency = 132.0551 − 9.7609 Left instrument path length − 0.002 Left instrument angle path − 0.098 Right instrument misses − 1.6881 Right instrument path length − 0.4771 Total time − 0.0971 Max damage

Variables Measured and Criterion Percentile Values

| Variable | 10 | 25 | 50 | 75 | 90 |
|---|---|---|---|---|---|
| Left instrument misses | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Left instrument path length | 1.593 | 1.562 | 1.442 | 1.332 | 1.183 |
| Left instrument angle path | 432.997 | 358.058 | 333.868 | 315.473 | 306.557 |
| Right instrument misses | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Right instrument path length | 1.81 | 1.626 | 1.497 | 1.443 | 1.17 |
| Right instrument angle path | 483.922 | 381.742 | 320.654 | 303.44 | 293.167 |
| Total time | 74.787 | 71.879 | 52.455 | 46.602 | 43.455 |
| Tissue damage | 5 | 3.5 | 2 | 1 | 0 |
| Maximum damage | 45.042 | 33.066 | 17.529 | 5.035 | 3.323 |
| Proficiency score | 70.509 | 75.581 | 88.227 | 91.579 | 93.929 |

Means ± Standard Deviations for Each Variable

| Variable | −1.5 SD | −1 SD | Mean | +1 SD | +1.5 SD |
|---|---|---|---|---|---|
| Left instrument misses | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Left instrument path length | 1.128 | 1.231 | 1.435 | 1.639 | 1.741 |
| Left instrument angle path | 274.425 | 299.511 | 349.682 | 399.854 | 424.939 |
| Right instrument misses | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Right instrument path length | 1.152 | 1.275 | 1.523 | 1.77 | 1.894 |
| Right instrument angle path | 241.43 | 279.339 | 355.157 | 430.975 | 468.884 |
| Total time | 36.137 | 43.184 | 57.277 | 71.37 | 78.416 |
| Tissue damage | −0.734 | 0.268 | 2.273 | 4.277 | 5.28 |
| Maximum damage | −5.776 | 3.008 | 20.576 | 38.143 | 46.927 |
| Proficiency score | 68.917 | 74.033 | 84.263 | 94.494 | 99.61 |

Appendix 2: LTS2000 ISM60* Attempt #4

Module 1: Peg Manipulation

Proficiency = 104.319 − 0.1309 Time − 2.5093 Errors

Variables Measured and Criterion Percentile Values

| Variable | 10 | 25 | 50 | 75 | 90 |
|---|---|---|---|---|---|
| Time | 143.0 | 135.5 | 83.0 | 54.0 | 47.0 |
| Errors | 1.2 | 1 | 0 | 0 | 0 |
| Proficiency score | 77.552 | 85.734 | 93.456 | 95.462 | 96.728 |

Means ± Standard Deviations for Each Variable

| Variable | −1.5 SD | −1 SD | Mean | +1 SD | +1.5 SD |
|---|---|---|---|---|---|
| Time | 29.198 | 50.617 | 93.455 | 136.292 | 157.711 |
| Errors | −0.849 | −0.366 | 0.6 | 1.566 | 2.049 |
| Proficiency score | 77.901 | 81.793 | 89.579 | 97.364 | 101.257 |

Module 2: Ring Manipulation (Dominant Hand)

Proficiency = 103.0973 − 0.4425 Time


Means ± Standard Deviations for Each Variable

| Variable | −1.5 SD | −1 SD | Mean | +1 SD | +1.5 SD |
|---|---|---|---|---|---|
| Time | 5.883 | 9.407 | 16.455 | 23.502 | 27.026 |
| Errors | −0.131 | 0.413 | 1.5 | 2.587 | 3.131 |
| Proficiency score | 91.139 | 92.698 | 95.817 | 98.935 | 100.494 |

Module 3: Ring Manipulation (Non-dominant Hand)

Proficiency = 100.4142 − 0.1381 Time − 11.282 Errors

Variables Measured and Criterion Percentile Values

| Variable | 10 | 25 | 50 | 75 | 90 |
|---|---|---|---|---|---|
| Time | 26 | 23 | 12 | 10.5 | 8 |
| Errors | 3 | 3 | 1 | 1 | 1 |
| Proficiency score | 62.978 | 63.6 | 86.233 | 87.648 | 88 |

Means ± Standard Deviations for Each Variable

| Variable | −1.5 SD | −1 SD | Mean | +1 SD | +1.5 SD |
|---|---|---|---|---|---|
| Time | 3.989 | 8.023 | 16.091 | 24.159 | 28.193 |
| Errors | 0.057 | 0.594 | 1.667 | 2.74 | 3.276 |
| Proficiency score | 57.515 | 64.408 | 78.194 | 91.98 | 98.873 |

Module 4: Knot Integrity

Proficiency = 106.8519 − 0.1852 Time

| Variables Measured and Criterion Percentile Values | |||||

|---|---|---|---|---|---|

| Variable | 10 | 25 | 50 | 75 | 90 |

| Time | 133 | 132.25 | 107 | 74.75 | 58.7 |

| Proficiency score | 82.222 | 82.361 | 87.037 | 93.009 | 95.981 |

| Means ± Standard Deviations for Each Variable | |||||

| Variable | −1.5 | −1 | 0 | +1 | +1.5 |

| Time | 52.229 | 68.708 | 101.667 | 134.625 | 151.104 |

| Proficiency score | 78.87 | 81.921 | 88.025 | 94.128 | 97.18 |
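A single-variable linear formula can also be run backward. The Conclusion states that proficiency levels can be specified for training courses. A target score chosen for Knot Integrity could therefore be expressed as a maximum completion time. The sketch below inverts the formula. The target of 90 is a hypothetical criterion, and times are in whatever unit the simulator records.

```python
# Module 4 (Knot Integrity): R-Proficiency = 106.8519 - 0.1852 Time
INTERCEPT = 106.8519
TIME_COEF = -0.1852


def proficiency(time):
    return INTERCEPT + TIME_COEF * time


def time_for_score(target_score):
    """Invert the linear formula: the time at which the score equals target_score.

    Because the coefficient is negative, any faster (smaller) time scores higher.
    """
    return (target_score - INTERCEPT) / TIME_COEF


# Sanity check against the percentile table: the median score should map
# back to roughly the median time (107).
median_time = time_for_score(87.037)

# Hypothetical course criterion: a score of at least 90.
time_limit = time_for_score(90.0)
print(f"time needed for a score of 90: <= {time_limit:.1f}")
```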

Module 5: Circle Cutting

Proficiency = 116.7375 − 0.172 Time − 1.1435 Errors

| Variables Measured and Criterion Percentile Values | |||||

|---|---|---|---|---|---|

| Variable | 10 | 25 | 50 | 75 | 90 |

| Time | 220.3 | 189.25 | 166.5 | 148.5 | 98.1 |

| Errors | 7.9 | 5.5 | 2 | 1 | 0.1 |

| Proficiency score | 78.375 | 81.567 | 87.614 | 90.316 | 91.591 |

| Means ± Standard Deviations for Each Variable | |||||

| Variable | −1.5 | −1 | 0 | +1 | +1.5 |

| Time | 94.317 | 118.85 | 167.917 | 216.983 | 241.517 |

| Errors | −1.323 | 0.201 | 3.25 | 6.299 | 7.823 |

| Proficiency score | 77.167 | 80.046 | 85.803 | 91.561 | 94.44 |

*As of 1/1/07, this second-generation model, superseded by the LTS 3e model, has been licensed by METI (personal communication, Dr. Hasson).

Appendix 3: Surgical Sim Attempt #4

Module 1: Gallbladder Dissection

Proficiency = 109.0262 − 0.0398 Total time − 0.0238 Tip trajectory

| Variables Measured and Criterion Percentile Values | |||||

|---|---|---|---|---|---|

| Variable | 10 | 25 | 50 | 75 | 90 |

| Total time | 335.3 | 296 | 213 | 188.5 | 176.5 |

| Tip-trajectory | 475.579 | 370.53 | 319.762 | 237.19 | 204.669 |

| Burning-in-air time | 13.242 | 7.733 | 3.5 | 1.804 | 1.276 |

| Tissue overstretched | 5 | 4.25 | 2.5 | 1 | 1 |

| Dissection-outside-target | 24.8 | 14 | 8 | 3 | 3 |

| Proficiency score | 85.024 | 88.044 | 92.922 | 96.066 | 97.782 |

| Means ± Standard Deviations for Each Variable | |||||

| Variable | −1.5 | −1 | 0 | +1 | +1.5 |

| Total time | 122.734 | 163.962 | 246.417 | 328.872 | 370.099 |

| Tip-trajectory | 104.294 | 184.53 | 345.003 | 505.476 | 585.712 |

| Burning-in-air time | −2.143 | 0.372 | 5.402 | 10.432 | 12.947 |

| Tissue overstretched | −4.531 | −1.715 | 3.917 | 9.549 | 12.365 |

| Dissection-outside-target | −2.752 | 1.652 | 10.462 | 19.271 | 23.675 |

| Proficiency score | 80.47 | 83.977 | 90.99 | 98.003 | 101.51 |
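Only Total time and Tip trajectory carry coefficients in the Gallbladder Dissection formula. The other measured variables therefore do not affect the score. The formula is linear, so evaluating it at the variable means should approximately reproduce the mean Proficiency score. The sketch below does this with means from the 0 column. The dictionary keys are illustrative names, and the small remaining gap is plausibly rounding in the published coefficients and means.

```python
# Module 1 (Gallbladder Dissection):
# Proficiency = 109.0262 - 0.0398 Total time - 0.0238 Tip trajectory
INTERCEPT = 109.0262
COEFFS = {"total_time": -0.0398, "tip_trajectory": -0.0238}


def proficiency(measures):
    """Linear score; measures without a coefficient are simply ignored."""
    return INTERCEPT + sum(coef * measures[name] for name, coef in COEFFS.items())


# Variable means from the 0 column of the means table.
means = {
    "total_time": 246.417,
    "tip_trajectory": 345.003,
    "burning_in_air_time": 5.402,         # measured, but not in the formula
    "tissue_overstretched": 3.917,        # measured, but not in the formula
    "dissection_outside_target": 10.462,  # measured, but not in the formula
}

score_at_means = proficiency(means)
print(f"score at the variable means: {score_at_means:.2f}")  # table mean: 90.99
```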

Module 2: Place Arrow

Proficiency = 113.4184 − 1.3418 Total time − 1.1734 Dropped arrow − 1.7601 Closed entry right tool

| Variables Measured and Criterion Percentile Values | |||||

|---|---|---|---|---|---|

| Variable | 10 | 25 | 50 | 75 | 90 |

| Total time | 22.2 | 19 | 16 | 13 | 12 |

| Tip-trajectory | 55.789 | 39.438 | 37.375 | 34.313 | 31.128 |

| Dropped arrow | 0.4 | 0.35 | 0.2 | 0 | 0 |

| Lost arrow | 0.2 | 0.05 | 0 | 0 | 0 |

| Closed-entry-left-tool | 0.2 | 0 | 0 | 0 | 0 |

| Closed-entry-right-tool | 0.2 | 0.05 | 0 | 0 | 0 |

| Proficiency score | 83.442 | 87.689 | 91.597 | 95.974 | 96.982 |

| Means ± Standard Deviations for Each Variable | |||||

| Variable | −1.5 | −1 | 0 | +1 | +1.5 |

| Total time | 9.906 | 12.091 | 16.462 | 20.832 | 23.018 |

| Tip-trajectory | 26.013 | 30.687 | 40.035 | 49.383 | 54.057 |

| Dropped arrow | −0.088 | −0.001 | 0.171 | 0.344 | 0.431 |

| Lost arrow | −0.086 | −0.04 | 0.05 | 0.14 | 0.186 |

| Closed-entry-left-tool | −0.128 | −0.065 | 0.062 | 0.188 | 0.251 |

| Closed-entry-right-tool | −0.129 | −0.064 | 0.067 | 0.197 | 0.262 |

| Proficiency score | 81.965 | 84.929 | 90.851 | 96.775 | 99.737 |

Module 3: Retract and Dissect

Proficiency = 105.6126 − 0.244 Total time − 6.8972 Dissected outside target left − 5.3848 Dissected outside target right − 1.3444 Lost aligned pod left − 10.7167 Lost aligned pod right

| Variables Measured and Criterion Percentile Values | |||||

|---|---|---|---|---|---|

| Variable | 10 | 25 | 50 | 75 | 90 |

| Total time | 40.8 | 37.5 | 32 | 28 | 23.5 |

| Tip-trajectory | 126.543 | 79.923 | 75.77 | 70.803 | 61.879 |

| Burning-in-air time-left | 0.624 | 0.437 | 0.242 | 0 | 0 |

| Burning-in-air time-right | 0.483 | 0.354 | 0.143 | 0.021 | 0 |

| Tissue overstretched-left | 0.25 | 0.25 | 0 | 0 | 0 |

| Tissue overstretched-right | 0.975 | 0.562 | 0.25 | 0 | 0 |

| Dissected outside target-left | 0.5 | 0.312 | 0.125 | 0 | 0 |

| Dissected outside target-right | 0.7 | 0.5 | 0.25 | 0 | 0 |

| Dissected pod-not-aligned-left | 0.45 | 0.25 | 0 | 0 | 0 |

| Dissected pod-not-aligned-right | 0.5 | 0.25 | 0.25 | 0 | 0 |

| Lost-aligned pod-left | 0.25 | 0.25 | 0 | 0 | 0 |

| Lost-aligned pod-right | 0.225 | 0 | 0 | 0 | 0 |

| Proficiency score | 84.162 | 89.144 | 93.725 | 96.079 | 97.41 |

| Means ± Standard Deviations for Each Variable | |||||

| Variable | −1.5 | −1 | 0 | +1 | +1.5 |

| Total time | 22.077 | 25.44 | 32.167 | 38.893 | 42.256 |

| Tip-trajectory | 47.198 | 59.383 | 83.754 | 108.125 | 120.311 |

| Burning-in-air time-left | −0.159 | −0.012 | 0.282 | 0.575 | 0.72 |

| Burning-in-air time-right | −0.09 | 0.008 | 0.206 | 0.404 | 0.503 |

| Tissue overstretched-left | −0.089 | −0.025 | 0.104 | 0.233 | 0.297 |

| Tissue overstretched-right | −0.286 | −0.052 | 0.417 | 0.885 | 1.12 |

| Dissected outside target-left | −0.304 | −0.119 | 0.25 | 0.619 | 0.804 |

| Dissected outside target-right | −0.183 | −0.039 | 0.25 | 0.539 | 0.683 |

| Dissected pod-not-aligned-left | −0.134 | −0.038 | 0.154 | 0.346 | 0.442 |

| Dissected pod-not-aligned-right | −0.12 | −0.016 | 0.192 | 0.4 | 0.504 |

| Lost-aligned pod-left | −0.101 | −0.04 | 0.083 | 0.206 | 0.268 |

| Lost-aligned pod-right | −0.104 | −0.056 | 0.042 | 0.139 | 0.188 |

| Proficiency score | 83.783 | 86.527 | 92.014 | 97.501 | 100.245 |

Module 4: Transverse Tube

Proficiency = 116.6667 − 1.2821 Total time

| Variables Measured and Criterion Percentile Values | |||||

|---|---|---|---|---|---|

| Variable | 10 | 25 | 50 | 75 | 90 |

| Total time | 29.6 | 28 | 22 | 18 | 17 |

| Tip-trajectory | 93.841 | 78.917 | 75.802 | 62.419 | 60.805 |

| Dropped tube | 1 | 0.4 | 0.2 | 0 | 0 |

| Wrong segment | 0.56 | 0.4 | 0.2 | 0.2 | 0.0 |

| Proficiency score | 78.718 | 80.769 | 88.462 | 93.59 | 94.872 |

| Means ± Standard Deviations for Each Variable | |||||

| Variable | −1.5 | −1 | 0 | +1 | +1.5 |

| Total time | 14.22 | 17.198 | 23.154 | 29.11 | 32.088 |

| Tip-trajectory | 54.989 | 61.679 | 75.059 | 88.438 | 95.128 |

| Dropped tube | −0.245 | −0.035 | 0.385 | 0.805 | 1.015 |

| Wrong segment | −0.011 | 0.085 | 0.277 | 0.469 | 0.565 |

| Proficiency score | 75.528 | 79.346 | 86.982 | 94.618 | 98.436 |
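The percentile table lists Total time from slowest (10 column) to fastest (90 column), and the Transverse Tube score falls as time rises. Applying the formula column by column to the time criteria should therefore reproduce the Proficiency score row. The sketch below confirms this to within rounding. The variable names are illustrative.

```python
# Module 4 (Transverse Tube): Proficiency = 116.6667 - 1.2821 Total time
INTERCEPT = 116.6667
TIME_COEF = -1.2821

# Total-time criterion values from the percentile table (slowest at the 10th).
percentile_times = {10: 29.6, 25: 28, 50: 22, 75: 18, 90: 17}


def proficiency(total_time):
    return INTERCEPT + TIME_COEF * total_time


# Because the score decreases steadily with time, each time criterion maps
# onto the score criterion in the same column.
percentile_scores = {p: proficiency(t) for p, t in percentile_times.items()}
for p, s in percentile_scores.items():
    print(f"{p}th percentile: {s:.3f}")
```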

Appendix 4: ProMIS Attempt #4

Module 1: Dissection

Proficiency = 111.4094 − 0.0649 Left instrument path − 0.0097 Right instrument path − 0.0286 Left instrument smoothness − 0.0106 Right instrument smoothness

| Variables Measured and Criterion Percentile Values | |||||

|---|---|---|---|---|---|

| Variable | 10 | 25 | 50 | 75 | 90 |

| Total time | 118.101 | 99.993 | 69.165 | 59.37 | 52.875 |

| Left instrument path | 107.904 | 96.62 | 85.22 | 72.22 | 68.876 |

| Right instrument path | 318.034 | 281.74 | 223.38 | 195.33 | 128.32 |

| Left instrument smoothness | 497.9 | 406 | 259.5 | 236.25 | 207.5 |

| Right instrument smoothness | 381.6 | 316 | 282 | 225 | 170.2 |

| Proficiency score | 84.751 | 90.112 | 93.47 | 94.981 | 98.031 |

| Means ± Standard Deviations for Each Variable | |||||

| Variable | −1.5 | −1 | 0 | +1 | +1.5 |

| Total time | 33.043 | 48.79 | 80.284 | 111.778 | 127.525 |

| Left instrument path | 55.91 | 66.055 | 86.347 | 106.638 | 116.784 |

| Right instrument path | 117.488 | 156.129 | 233.41 | 310.691 | 349.332 |

| Left instrument smoothness | 133.918 | 194.745 | 316.4 | 438.055 | 498.882 |

| Right instrument smoothness | 134.885 | 184.22 | 282.889 | 381.558 | 430.893 |

| Proficiency score | 83.519 | 86.389 | 92.13 | 97.871 | 100.741 |

Module 2: Instrument Handling

Proficiency = 127.6061 − 0.7341 Total time − 0.09 Left instrument path − 0.0171 Left instrument smoothness − 0.0149 Right instrument smoothness

| Variables Measured and Criterion Percentile Values | |||||

|---|---|---|---|---|---|

| Variable | 10 | 25 | 50 | 75 | 90 |

| Total time | 39.457 | 36.848 | 32.855 | 29.105 | 25.323 |

| Left instrument path | 121.697 | 117.532 | 113.115 | 102.043 | 93.457 |

| Right instrument path | 120.204 | 114.25 | 109.845 | 99.373 | 95.195 |

| Left instrument smoothness | 110.7 | 105.75 | 94.5 | 82.5 | 71.8 |

| Right instrument smoothness | 120.2 | 117 | 112 | 97 | 81.4 |

| Proficiency score | 84.392 | 86.033 | 90.194 | 93.06 | 98.247 |

| Means ± Standard Deviations for Each Variable | |||||

| Variable | −1.5 | −1 | 0 | +1 | +1.5 |

| Total time | 24.296 | 27.04 | 32.526 | 38.012 | 40.756 |

| Left instrument path | 88.268 | 95.651 | 110.417 | 125.183 | 132.566 |

| Right instrument path | 92.082 | 97.361 | 107.921 | 118.481 | 123.76 |

| Left instrument smoothness | 66.525 | 75.383 | 93.1 | 110.817 | 119.675 |

| Right instrument smoothness | 79.733 | 88.155 | 105 | 121.845 | 130.267 |

| Proficiency score | 82.336 | 85.075 | 90.554 | 96.032 | 98.771 |

Module 3: Suturing & Knot Tying

R-Proficiency = 100.1275 − 0.005 Left instrument path − 0.013 Right instrument smoothness

| Variables Measured and Criterion Percentile Values | |||||

|---|---|---|---|---|---|

| Variable | 10 | 25 | 50 | 75 | 90 |

| Total time | 295.784 | 266.577 | 114.63 | 94.248 | 74.59 |

| Left instrument path | 694.584 | 560.12 | 348.67 | 249.77 | 202.574 |

| Right instrument path | 854.166 | 672.87 | 409.62 | 246.39 | 241.238 |

| Left instrument smoothness | 891 | 817 | 343 | 301 | 225.8 |

| Right instrument smoothness | 1154.1 | 938.5 | 423 | 354.5 | 246.8 |

| Proficiency score | 81.612 | 84.144 | 92.947 | 94.031 | 96.019 |

| Means ± Standard Deviations for Each Variable | |||||

| Variable | −1.5 | −1 | 0 | +1 | +1.5 |

| Total time | 5.068 | 62.387 | 177.024 | 291.661 | 348.98 |

| Left instrument path | 79.253 | 189.984 | 411.446 | 632.907 | 743.638 |

| Right instrument path | 72.381 | 210.16 | 485.719 | 761.278 | 899.057 |

| Left instrument smoothness | 23.086 | 178.835 | 490.333 | 801.832 | 957.581 |

| Right instrument smoothness | −4.813 | 212.625 | 647.5 | 1082.375 | 1299.813 |

| Proficiency score | 78.65 | 82.206 | 89.318 | 96.43 | 99.985 |

Appendix 5: LapMentor Modules Attempt #2

Module 1: Camera Navigation (0°)

Proficiency = 43.1963 − 0.0457 Total time − 0.2223 Time the horizontal view is maintained while using the 0° camera + 0.7437 Maintaining the horizontal view while using the 0° camera

| Variables Measured and Criterion Percentile Values | |||||

|---|---|---|---|---|---|

| Variable | 10 | 25 | 50 | 75 | 90 |

| Total time | 85.5 | 83.25 | 78.5 | 61.5 | 58.5 |

| Total no of camera shots | 12.6 | 12 | 11 | 10 | 10 |

| Time horizontal view maintained | 78.9 | 69.75 | 63 | 53.75 | 51.2 |

| Total path length of camera cm | 269 | 264.5 | 225.7 | 212.4 | 200.0 |

| No correct hits | 10 | 10 | 10 | 10 | 10 |

| Accuracy rate target hits | 79.73 | 83.3 | 90.9 | 100 | 100 |

| Maintain horizontal view of 0° camera | 75.36 | 79.35 | 83.55 | 94.23 | 95.32 |

| Ave speed of camera cm/sec | 8.88 | 9.225 | 10.3 | 10.5 | 10.59 |

| Proficiency score | 82.92 | 84.35 | 86.45 | 93.38 | 97.96 |

| Means ± Standard Deviations for Each Variable | |||||

| Variable | −1.5 | −1 | 0 | +1 | +1.5 |

| Total time | 54.35 | 60.81 | 73.75 | 86.69 | 93.16 |

| Total no of camera shots | 9.167 | 9.861 | 11.25 | 12.64 | 13.33 |

| Time horizontal view maintained | 45.02 | 51.10 | 63.25 | 75.40 | 81.48 |

| Total path length of camera cm | 187.4 | 203.5 | 235.7 | 267.9 | 284.0 |

| No correct hits | 10 | 10 | 10 | 10 | 10 |

| Accuracy rate target hits | 74.58 | 79.71 | 90.00 | 100.2 | 105.4 |

| Maintain horizontal view of 0° camera | 71.00 | 75.66 | 84.98 | 94.29 | 98.95 |

| Ave speed of camera cm/sec | 8.68 | 9.09 | 9.91 | 10.73 | 11.15 |

| Proficiency score | 78.70 | 82.12 | 88.97 | 95.81 | 99.23 |

Module 2: Camera Navigation (30°)

Proficiency = 131.2485 − 0.1744 Total time − 11.6569 Total no of camera shots − 0.0118 Total path length of camera in cm + 9.8071 No of correct hits

| Variables Measured and Criterion Percentile Values | |||||

|---|---|---|---|---|---|

| Variable | 10 | 25 | 50 | 75 | 90 |

| Total time | 84.2 | 77 | 71 | 66 | 61.2 |

| Total no of camera shots | 11 | 11 | 10 | 10 | 10 |

| Total path length of camera cm | 358.1 | 322.5 | 287.6 | 275.7 | 228.1 |

| No correct hits | 10 | 10 | 10 | 10 | 10 |

| Accuracy rate target hits | 90.9 | 90.9 | 100.0 | 100 | 100 |

| Ave speed of camera cm/sec | 8.01 | 8.1 | 8.4 | 9.35 | 9.9 |

| Proficiency score | 85.13 | 91.11 | 96.1 | 96.75 | 98.28 |

| Means ± Standard Deviations for Each Variable | |||||

| Variable | −1.5 | −1 | 0 | +1 | +1.5 |

| Total time | 56.47 | 61.89 | 72.71 | 83.54 | 88.96 |

| Total no of camera shots | 9.60 | 9.86 | 10.38 | 10.89 | 11.15 |

| Total path length of camera cm | 189.3 | 221.7 | 287.1 | 352.6 | 385.3 |

| No correct hits | 10 | 10 | 10 | 10 | 10 |

| Accuracy rate target hits | 89.52 | 91.88 | 96.59 | 101.3 | 103.7 |

| Ave speed of camera cm/sec | 7.43 | 7.87 | 8.75 | 9.63 | 10.07 |

| Proficiency score | 83.69 | 86.91 | 93.35 | 99.79 | 103.0 |

Module 3: Eye-hand Coordination

R-Proficiency = 183.0005 − 2.0767 Total number of touched balls − 1.5668 Number of movements of left instrument − 0.5533 Total path length of right instrument in cm

| Variables Measured and Criterion Percentile Values | |||||

|---|---|---|---|---|---|

| Variable | 10 | 25 | 50 | 75 | 90 |

| Total time | 47.8 | 46.5 | 39 | 33 | 28.8 |

| Total no of touched balls | 10 | 10 | 10 | 10 | 10 |

| No moves of right instrument | 20.5 | 19.75 | 18 | 16.3 | 16 |

| No moves of left instrument | 19.4 | 18.5 | 18 | 17 | 15.4 |

| Total path length right instrument cm | 112.2 | 108.1 | 88.4 | 80.8 | 75.1 |

| Total path length left instrument cm | 102.7 | 101.6 | 84.8 | 78.2 | 73.9 |

| Relevant path right instrument cm | 72.04 | 68.05 | 57.5 | 41.1 | 36.1 |

| Relevant path left instrument cm | 52.12 | 51.1 | 44.1 | 41.5 | 37.7 |

| No correct hits | 10 | 10 | 10 | 10 | 10 |

| Accuracy rate touched targets | 100 | 100 | 100 | 100 | 100 |

| Ideal path length right instrument cm | 26.44 | 30.1 | 34.1 | 37.8 | 39.62 |

| Ideal path length left instrument cm | 27.12 | 30.45 | 32.7 | 34.2 | 35.92 |

| Economy of moves right instrument | 52.26 | 55.5 | 64.8 | 73.3 | 76.06 |

| Economy of moves left instrument | 63.7 | 65.05 | 70.7 | 7.50 | 80.54 |

| Ave speed right instrument moves cm/sec | 2.58 | 2.85 | 3.2 | 3.3 | 3.34 |

| Ave speed left instrument moves cm/sec | 1.66 | 2.7 | 3.1 | 3.45 | 3.54 |

| Proficiency score | 70.74 | 72.68 | 85.12 | 91.58 | 96.80 |

| Means ± Standard Deviations for Each Variable | |||||

| Variable | −1.5 | −1 | 0 | +1 | +1.5 |

| Total time | 26.20 | 30.51 | 39.14 | 47.77 | 52.09 |

| Total no of touched balls | 10 | 10 | 10 | 10 | 10 |

| No moves right instrument | 14.96 | 16.03 | 18.17 | 20.30 | 21.37 |

| No moves left instrument | 14.09 | 15.20 | 17.43 | 19.65 | 20.77 |

| Total path length right instrument cm | 14.96 | 16.03 | 18.17 | 20.30 | 21.37 |

| Total path length left instrument cm | 14.09 | 15.20 | 17.43 | 19.65 | 20.8 |

| Relevant path right instrument cm | 28.02 | 36.86 | 54.56 | 72.25 | 81.10 |

| Relevant path left instrument cm | 34.53 | 38.05 | 45.09 | 52.13 | 55.65 |

| No correct hits | 10 | 10 | 10 | 10 | 10 |

| Accuracy rate touched targets | 100 | 100 | 100 | 100 | 100 |

| Ideal path length right instrument cm | 23.80 | 27.02 | 33.44 | 39.87 | 43.08 |

| Ideal path length left instrument cm | 25.85 | 27.91 | 32.01 | 36.12 | 38.18 |

| Economy of moves right instrument | 47.79 | 53.25 | 64.16 | 75.07 | 80.52 |

| Economy of moves left instrument | 58.73 | 63.07 | 71.74 | 80.42 | 84.76 |

| Ave speed right instrument moves cm/sec | 2.489 | 2.674 | 3.043 | 3.412 | 3.596 |

| Ave speed left instrument moves cm/sec | 2.453 | 2.664 | 3.086 | 3.508 | 3.719 |

| Proficiency score | 65.02 | 71.06 | 83.14 | 95.22 | 101.3 |

Module 4: Clip Applying

Proficiency = 143.5707 − 0.4326 Total time − 0.0838 Number of movements of right instrument − 0.2969 Number of movements of left instrument − 0.1786 Relevant path length of right instrument in cm

| Variables Measured and Criterion Percentile Values | |||||

|---|---|---|---|---|---|

| Variable | 10 | 25 | 50 | 75 | 90 |

| Total time | 67 | 64.5 | 60 | 55.5 | 52.5 |

| No of lost clips | 5.8 | 4.5 | 2 | 1 | 0.6 |

| Total no of clipping attempts | 14.8 | 13.5 | 11 | 10 | 9.6 |

| No of movements of right instrument | 64.8 | 48 | 38 | 31.5 | 28 |

| No of movements of left instrument | 36.4 | 35 | 28 | 18.5 | 10.4 |

| Total path length of right instrument cm | 198.1 | 175.5 | 132.3 | 117.5 | 95.34 |

| Total path length of left instrument cm | 122.0 | 114.3 | 104.1 | 57.7 | 10.62 |

| Relevant path length right instrument cm | 175.2 | 137.1 | 117.7 | 95.9 | 65.06 |

| Relevant path length left instrument cm | 98.64 | 93.3 | 81.7 | 71.8 | 51.94 |

| Accuracy rate applied clips | 61.1 | 66.75 | 81.8 | 90 | 94 |

| Ideal path length of right instrument cm | 26.92 | 36.55 | 68 | 98 | 102.7 |

| Ideal path length of left instrument cm | 16.72 | 30.7 | 37.9 | 39.9 | 47.82 |

| Economy of movement right instrument | 38.42 | 41.05 | 46.4 | 65.15 | 74.9 |

| Economy of movement left instrument | 23.5 | 30.1 | 42.8 | 52.8 | 60.12 |

| Ave speed right instrument moves cm/sec | 2.7 | 2.75 | 3.1 | 3.45 | 3.8 |

| Ave speed left instrument moves cm/sec | 2.65 | 2.7 | 2.95 | 3.2 | 3.25 |

| Proficiency score | 66.56 | 79.48 | 83.19 | 92.91 | 96.74 |

| Means ± Standard Deviations for Each Variable | |||||

| Variable | −1.5 | −1 | 0 | +1 | +1.5 |

| Total time | 49.56 | 53.25 | 59.83 | 66.42 | 69.71 |

| No of lost clips | −0.96 | 0.312 | 2.857 | 5.402 | 6.674 |

| Total no of clipping attempts | 8.04 | 9.312 | 11.86 | 14.40 | 15.67 |

| No of movements of right instrument | 16.78 | 25.48 | 42.86 | 60.24 | 68.93 |

| No of movements of left instrument | 5.678 | 12.07 | 24.86 | 37.64 | 44.04 |

| Total path length of right instrument cm | 75.14 | 98.7 | 145.9 | 193.0 | 216.6 |

| Total path length of left instrument cm | 5.576 | 31.23 | 82.54 | 133.9 | 159.5 |

| Relevant path length right instrument cm | 40.83 | 67.32 | 120.3 | 173.3 | 199.8 |

| Relevant path length left instrument cm | 40.68 | 52.97 | 77.54 | 102.1 | 114.4 |

| Accuracy rate applied clips | 54.94 | 62.89 | 78.8 | 94.71 | 102.7 |

| Ideal path length of right instrument cm | 11.85 | 30.07 | 66.33 | 102.7 | 120.8 |

| Ideal path length of left instrument cm | 8.554 | 16.97 | 33.8 | 50.63 | 59.05 |

| Economy of movement right instrument | 28.13 | 36.51 | 53.27 | 70.04 | 78.42 |

| Economy of movement left instrument | 14.79 | 23.85 | 41.96 | 60.07 | 69.13 |

| Ave speed right instrument moves cm/sec | 2.424 | 2.664 | 3.143 | 3.622 | 3.861 |

| Ave speed left instrument moves cm/sec | 2.478 | 2.635 | 2.95 | 3.265 | 3.422 |

| Proficiency score | 57.99 | 66.18 | 82.57 | 98.95 | 107.1 |

Module 5: Grasping and Clipping

Proficiency = 148.6876 − 0.0002 Total time − 0.0007 No of lost clips − 0.0002 No of movements of right instrument − 0.1511 Total path length of clipper in cm − 0.1518 Total path length of grasper in cm − 0.0006 Relevant path length clipper in cm

| Variables Measured and Criterion Percentile Values | |||||

|---|---|---|---|---|---|

| Variable | 10 | 25 | 50 | 75 | 90 |

| Total time | 125.4 | 109.5 | 101 | 83 | 70.6 |

| No of lost clips | 2 | 2 | 1 | 1 | 0.6 |

| Total no of clipping attempts | 11 | 11 | 10 | 10 | 9.6 |

| No of movements of right instrument | 66 | 58.5 | 53 | 43 | 35.6 |

| No of movements of left instrument | 82.2 | 74 | 64 | 51 | 45.4 |

| Total path length of right instrument cm | 222.2 | 207.3 | 185.6 | 174.7 | 170.4 |

| Total path length of left instrument cm | 267.1 | 260.9 | 232.2 | 211.8 | 174.7 |

| Total path length of clipper cm | 244 | 219.1 | 206 | 169.5 | 157.1 |

| Total path length of grasper cm | 261.4 | 249.5 | 232.2 | 189.8 | 181.6 |

| Relevant path length right instrument cm | 215.9 | 202.3 | 177.3 | 165.8 | 161.7 |

| Relevant path length left instrument cm | 258.9 | 252.5 | 221.1 | 200.7 | 166.2 |

| Relevant path length clipper cm | 234.9 | 212.8 | 200.1 | 161.6 | 148.7 |

| Relevant path length grasper cm | 255.1 | 241.1 | 215.5 | 181.6 | 172.4 |

| Accuracy rate applied clips | 81.8 | 81.8 | 90 | 90 | 94 |

| Ideal path length of clipper cm | 92.96 | 99.75 | 108.5 | 124.2 | 132.2 |

| Ideal path length of grasper cm | 105.6 | 106.7 | 111.4 | 113.5 | 115.5 |

| Economy of movement right instrument | 50.92 | 56.6 | 60.4 | 62.5 | 69.8 |

| Economy of movement left instrument | 40.62 | 44.25 | 54.1 | 56.65 | 63.38 |

| Economy of movement clipper | 46.92 | 54.5 | 60.2 | 67.15 | 75.38 |

| Economy of movement grasper | 44.6 | 46.9 | 54.1 | 58.85 | 61.52 |

| Ave speed of right instrument movement cm/sec | 2.46 | 2.65 | 2.8 | 3.1 | 3.28 |

| Ave speed of left instrument movement cm/sec | 2.9 | 3.025 | 3.15 | 3.425 | 3.6 |

| Proficiency score | 74.53 | 77.34 | 85.67 | 90.62 | 95.67 |

| Means ± Standard Deviations for Each Variable | |||||

| Variable | −1.5 | −1 | 0 | +1 | +1.5 |

| Total time | 61.47 | 74.01 | 99.14 | 124.3 | 136.8 |

| No of lost clips | 0.152 | 0.53 | 1.286 | 2.042 | 2.42 |

| Total no of clipping attempts | 9.152 | 9.53 | 10.29 | 11.04 | 11.42 |

| No of movements of right instrument | 31.36 | 38.34 | 52.29 | 66.24 | 73.21 |

| No of movements of left instrument | 37.80 | 46.20 | 63 | 79.80 | 88.20 |

| Total path length of right instrument cm | 153.2 | 166.7 | 193.8 | 221.0 | 234.5 |

| Total path length of left instrument cm | 160.9 | 183.1 | 227.5 | 272.0 | 294.2 |

| Total path length of clipper cm | 137.6 | 158.1 | 199.2 | 240.3 | 260.8 |

| Total path length of grasper cm | 166.5 | 185.1 | 222.2 | 259.3 | 277.8 |

| Relevant path length right instrument cm | 145.1 | 158.8 | 186.1 | 213.5 | 227.2 |

| Relevant path length left instrument cm | 150.7 | 173.2 | 218.3 | 263.3 | 285.8 |

| Relevant path length clipper cm | 130.2 | 150.6 | 191.6 | 232.6 | 253.0 |

| Relevant path length grasper cm | 155.9 | 174.9 | 212.8 | 250.7 | 269.7 |

| Accuracy rate applied clips | 77.83 | 81.19 | 87.91 | 94.7 | 98.0 |

| Ideal path length of clipper cm | 81.94 | 91.92 | 111.9 | 131.9 | 141.8 |

| Ideal path length of grasper cm | 103.5 | 105.8 | 110.4 | 115.0 | 117.3 |

| Economy of movement right instrument | 45.94 | 50.92 | 60.87 | 70.82 | 75.8 |

| Economy of movement left instrument | 35.04 | 40.80 | 52.31 | 63.83 | 69.59 |

| Economy of movement clipper | 39.78 | 46.57 | 60.14 | 73.72 | 80.50 |

| Economy of movement grasper | 41.35 | 45.25 | 53.04 | 60.84 | 64.74 |

| Ave speed of right instrument movement cm/sec | 2.333 | 2.512 | 2.871 | 3.231 | 3.41 |

| Ave speed of left instrument movement cm/sec | 2.72 | 2.886 | 3.217 | 3.548 | 3.713 |

| Proficiency score | 69.76 | 74.75 | 84.73 | 94.71 | 99.70 |
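Several Grasping and Clipping coefficients are tiny (0.0002 to 0.0007). Breaking the score into per-term deductions at the variable means shows which measures actually drive it. In the sketch below, the two total path-length terms (clipper and grasper) account for nearly all of the deduction from the intercept. The key names are illustrative, and the means come from the 0 column above.

```python
# Module 5 (Grasping and Clipping), exponent-notation coefficients written
# as decimals (2e-04 = 0.0002, and so on).
INTERCEPT = 148.6876
COEFFS = {
    "total_time": -0.0002,
    "lost_clips": -0.0007,
    "moves_right": -0.0002,
    "path_clipper_cm": -0.1511,
    "path_grasper_cm": -0.1518,
    "relevant_path_clipper_cm": -0.0006,
}
# Variable means from the 0 column of the means table.
MEANS = {
    "total_time": 99.14,
    "lost_clips": 1.286,
    "moves_right": 52.29,
    "path_clipper_cm": 199.2,
    "path_grasper_cm": 222.2,
    "relevant_path_clipper_cm": 191.6,
}

contributions = {name: COEFFS[name] * MEANS[name] for name in COEFFS}
score = INTERCEPT + sum(contributions.values())

# List the largest deductions first.
for name, c in sorted(contributions.items(), key=lambda kv: kv[1]):
    print(f"{name:28s} {c:9.3f}")
print(f"{'score at the means':28s} {score:9.3f}")

# Share of the total deduction coming from the two path-length terms.
path_share = (
    contributions["path_clipper_cm"] + contributions["path_grasper_cm"]
) / sum(contributions.values())
```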

Module 7: Cutting - Dissecting

Proficiency = 112.6316 − 0.13 Number of movements of right instrument − 0.071 Total path length of left instrument in cm

| Variables Measured and Criterion Percentile Values | |||||

|---|---|---|---|---|---|

| Variable | 10 | 25 | 50 | 75 | 90 |

| Total time | 175.2 | 136 | 90 | 74.5 | 65.4 |

| Total no of cutting maneuvers | 37.4 | 37 | 34 | 29.5 | 24.4 |

| Total no of retraction operations | 5.4 | 4.5 | 4 | 1.5 | 1 |

| No of movements of right instrument | 151.6 | 125 | 99 | 81.5 | 64.6 |

| No of movements of left instrument | 53 | 40.5 | 34 | 26.5 | 22 |

| Total path length of right instrument cm | 386.9 | 297.6 | 251.3 | 184.5 | 161.8 |

| Total path length of left instrument cm | 130.2 | 95.4 | 83.3 | 71.1 | 52.66 |

| No cutting maneuvers with no injury | 24.4 | 29.5 | 34 | 37 | 37.4 |

| No of retraction operations with no overstretch | 1 | 1 | 1 | 3 | 3.8 |

| Safe retraction overstretch | 40 | 50 | 75 | 100 | 100 |

| Ave speed of right instrument movement cm/sec | 2.4 | 2.6 | 3 | 3.55 | 4 |

| Ave speed of left instrument movement cm/sec | 1.88 | 2.15 | 2.6 | 2.75 | 2.8 |

| Proficiency score | 84.46 | 89.74 | 93.72 | 97.64 | 99.04 |

| Means ± Standard Deviations for Each Variable | |||||

| Variable | −1.5 | −1 | 0 | +1 | +1.5 |

| Total time | 34.13 | 58.66 | 107.7 | 156.8 | 181.3 |

| Total no of cutting maneuvers | 23.23 | 26.30 | 32.43 | 38.56 | 41.63 |

| Total no of retraction operations | 0.322 | 1.31 | 3.286 | 5.262 | 6.25 |

| No of movements of right instrument | 50.16 | 68.20 | 104.3 | 140.4 | 158.4 |

| No of movements of left instrument | 13.20 | 21.08 | 36.86 | 52.63 | 60.52 |

| Total path length of right instrument cm | 93.11 | 148.0 | 257.9 | 367.8 | 422.8 |

| Total path length of left instrument cm | 28.68 | 48.90 | 89.36 | 129.8 | 150.0 |

| No cutting maneuver performed with no injury | 23.23 | 26.30 | 32.43 | 38.56 | 41.63 |

| No of retraction operations with no overstretch | −0.22 | 0.569 | 2.143 | 3.716 | 4.503 |

| Safe retraction overstretch | 25.87 | 41.05 | 71.43 | 101.8 | 117.0 |

| Ave speed of right instrument movement cm/sec | 2.004 | 2.381 | 3.133 | 3.886 | 4.262 |

| Ave speed of left instrument movement cm/sec | 1.77 | 1.984 | 2.414 | 2.844 | 3.059 |

| Proficiency score | 82.44 | 85.87 | 92.73 | 99.59 | 103.0 |

Module 6: Two-handed Maneuvers

Proficiency = 103.6793 − 0.2457 No of movements of right instrument − 0.0311 Total path length of left instrument in cm − 0.0357 Relevant path length right instrument cm − 0.0377 Relevant path length left instrument cm + 1.9945 No of exposed green balls that are collected

| Variables Measured and Criterion Percentile Values | |||||

|---|---|---|---|---|---|

| Variable | 10 | 25 | 50 | 75 | 90 |

| Total time | 178 | 112 | 84 | 73.5 | 50.2 |

| No of lost balls that miss the basket | 1 | 0.75 | 0 | 0 | 0 |

| No of movements of right instrument | 119.2 | 92 | 49 | 42 | 26.6 |

| No of movements of left instrument | 122 | 82 | 53 | 45 | 24.4 |

| Total path length of right instrument cm | 455.8 | 331.2 | 224.2 | 169.9 | 95.84 |

| Total path length of left instrument cm | 398.8 | 288.7 | 228.7 | 151.7 | 85.62 |

| Relevant path length right instrument cm | 253.1 | 207.2 | 148.7 | 80.05 | 61.75 |

| Relevant path length left instrument cm | 267.5 | 200.2 | 135.1 | 128.4 | 79.32 |

| No of exposed green balls that are collected | 7.5 | 8.25 | 9 | 9 | 9 |

| Ideal path length of right instrument cm | 33.2 | 47.2 | 59.75 | 85.43 | 91.85 |

| Ideal path length of left instrument cm | 24.56 | 29 | 30.9 | 57.8 | 67.16 |

| Economy of movement right instrument | 31.35 | 32.33 | 37.75 | 49.48 | 62.7 |

| Economy of movement left instrument | 14.98 | 22.6 | 36.7 | 42.8 | 44.96 |

| Ave speed of right instrument movement cm/sec | 3.38 | 3.65 | 3.9 | 3.9 | 3.9 |

| Ave speed of left instrument movement cm/sec | 2.82 | 2.95 | 3.2 | 3.55 | 3.86 |

| Proficiency score | 57.97 | 69.91 | 95.31 | 95.72 | 97.73 |

| Means ± Standard Deviations for Each Variable | |||||

| Variable | −1.5 | −1 | 0 | +1 | +1.5 |

| Total time | −5.11 | 30.64 | 102.1 | 173.6 | 209.4 |

| No of lost balls that miss the basket | −0.44 | −0.18 | 0.333 | 0.85 | 1.108 |

| No of movements of right instrument | 2.212 | 23.00 | 64.57 | 106.1 | 126.9 |

| No of movements of left instrument | −5.54 | 18.36 | 66.14 | 113.9 | 137.8 |

| Total path length of right instrument cm | 9.343 | 91.30 | 255.2 | 419.1 | 501.1 |

| Total path length of left instrument cm | 4.044 | 79.75 | 231.2 | 382.6 | 458.3 |

| Relevant path length right instrument cm | 21.55 | 65.87 | 154.5 | 243.1 | 287.4 |

| Relevant path length left instrument cm | 16.05 | 65.54 | 164.5 | 264.0 | 313.0 |

| No of exposed green balls that are collected | 7.245 | 7.663 | 8.5 | 9.337 | 9.755 |

| Ideal path length of right instrument cm | 19.50 | 33.53 | 61.6 | 89.67 | 103.7 |

| Ideal path length of left instrument cm | 9.485 | 20.50 | 42.54 | 64.58 | 75.60 |

| Economy of movement right instrument | 19.18 | 27.43 | 43.93 | 60.44 | 68.69 |

| Economy of movement left instrument | 8.902 | 16.50 | 31.68 | 46.87 | 54.46 |

| Ave speed of right instrument movement cm/sec | 3.316 | 3.453 | 3.729 | 4.004 | 4.141 |

| Ave speed of left instrument movement cm/sec | 2.554 | 2.798 | 3.286 | 3.774 | 4.018 |

| Proficiency score | 49.95 | 60.63 | 82.00 | 103.4 | 114.1 |

Module 8: Scarification - Hook Electrodes

Proficiency = 144.9011 − 0.116 Total time − 0.2142 Total cautery time − 1.2048 No of nonhighlighted bands that were cut − 0.1173 No of movements of right instrument − 0.09 Total path length of left instrument in cm

| Variables Measured and Criterion Percentile Values | |||||

|---|---|---|---|---|---|

| Variable | 10 | 25 | 50 | 75 | 90 |

| Total time | 176.5 | 164 | 154.5 | 149.5 | 145 |

| Time cautery applied with no contact to bands | 10.6 | 7.5 | 5 | 3 | 2.2 |

| Total cautery time | 52.6 | 48.5 | 45 | 42.5 | 41.6 |

| Time cautery applied on nonhighlighted bands | 6.8 | 6 | 6 | 4.5 | 2.8 |

| No of nonhighlighted bands that were cut | 0 | 0 | 0 | 0 | 0 |

| No of movements of right instrument | 106.5 | 102.8 | 87 | 75.75 | 64.5 |

| No of movements of left instrument | 75.5 | 72.75 | 70.5 | 66.75 | 58.5 |

| Total path length of right instrument cm | 346.3 | 275.1 | 202.8 | 197.8 | 175.1 |

| Total path length of left instrument cm | 201.4 | 194.8 | 186.7 | 152.7 | 123.7 |

| Efficiency of cautery | 77.7 | 83.15 | 89.9 | 93.05 | 94.1 |

| No of highlighted bands that were cut | 21 | 21 | 21 | 21 | 21 |

| Accuracy rate highlighted bands | 100 | 100 | 100 | 100 | 100 |

| Ave speed right instrument movement cm/sec | 1.92 | 2.05 | 2.2 | 2.3 | 2.38 |

| Ave speed left instrument movement cm/sec | 2.02 | 2.15 | 2.2 | 2.5 | 2.54 |

| Proficiency score | 84.49 | 89.10 | 93.62 | 93.81 | 94.05 |

| Means ± Standard Deviations for Each Variable | |||||

| Variable | −1.5 | −1 | 0 | +1 | +1.5 |

| Total time | 135.0 | 142.9 | 158.7 | 174.4 | 182.3 |

| Time cautery applied with no contact to bands | −0.45 | 1.603 | 5.714 | 9.826 | 11.88 |

| Total cautery time | 38.47 | 41.03 | 46.14 | 51.26 | 53.81 |

| Time cautery applied on nonhighlighted bands | 1.853 | 2.95 | 5.143 | 7.336 | 8.432 |

| No of nonhighlighted bands that were cut | 0 | 0 | 0 | 0 | 0 |

| No of movements of right instrument | 55.85 | 65.9 | 86.0 | 106.1 | 116.2 |

| No of movements of left instrument | 54.18 | 58.84 | 68.17 | 77.49 | 82.16 |

| Total path length of right instrument cm | 114.9 | 156.5 | 239.6 | 322.7 | 364.2 |

| Total path length of left instrument cm | 112.0 | 131.5 | 170.6 | 209.7 | 229.2 |

| Efficiency of cautery | 76.19 | 79.98 | 87.56 | 95.14 | 98.93 |

| No of highlighted bands that were cut | 21 | 21 | 21 | 21 | 21 |

| Accuracy rate highlighted bands | 100 | 100 | 100 | 100 | 100 |

| Ave speed of right instrument movement cm/sec | 1.828 | 1.943 | 2.171 | 2.4 | 2.515 |

| Ave speed of left instrument movement cm/sec | 1.904 | 2.031 | 2.286 | 2.54 | 2.667 |

| Proficiency score | 82.88 | 85.49 | 90.72 | 95.95 | 98.56 |

Module 9: Translocation of Objects

Proficiency = 100.8715 − 0.1731 No of dropped objects − 0.0386 No of movements of right instrument − 0.0067 No of movements of left instrument − 0.0039 Total path length of left instrument cm + 0.8116 No of properly placed objects + 0.2401 No of translocations

| Variables Measured and Criterion Percentile Values | |||||

|---|---|---|---|---|---|

| Variable | 10 | 25 | 50 | 75 | 90 |

| Total time | 460 | 392.8 | 346.5 | 243.3 | 168 |

| Average no of translocations per object | 10.6 | 8.9 | 6.6 | 4.45 | 3.4 |

| No of dropped objects | 38 | 31.25 | 17 | 11.75 | 10 |

| No of movements of right instrument | 797 | 633 | 438 | 328.5 | 245 |

| No of movements of left instrument | 708 | 482 | 375 | 313 | 240 |

| Total path length of right instrument cm | 2254 | 1817 | 1073 | 935.9 | 753.4 |

| Total path length of left instrument cm | 1625 | 1131 | 996.4 | 826.6 | 659.5 |

| No of properly placed objects | 5 | 5 | 5 | 5 | 5 |

| No of translocations | 17 | 22.25 | 33 | 44.5 | 53 |

| Efficiency of translocations | 45.9 | 54.55 | 73.85 | 95.7 | 100 |

| Ave speed of right instrument movement cm/sec | 2.5 | 2.575 | 2.85 | 3.125 | 3.2 |

| Ave speed of left instrument movement cm/sec | 2.3 | 2.4 | 2.5 | 2.6 | 2.75 |

| Proficiency score | 69.95 | 78.85 | 86.68 | 90.56 | 92.91 |
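In the percentile table, higher percentiles correspond to better performance (for example, total time falls from 460 at the 10th percentile to 168 at the 90th, while the proficiency score rises). One possible use of the proficiency-score row is as a set of cut-points when a program sets a proficiency level for a course. The Python sketch below, assuming a trainee's Module 9 score has already been computed, reports which band of the experienced surgeons' distribution the score falls into and checks it against a chosen percentile. The function names and the default 50th-percentile pass mark are illustrative assumptions, not part of the study; with only 17 surgeons in the reference group, the cut-points themselves are imprecise.

```python
from bisect import bisect_right

# Module 9 proficiency-score cut-points from the experienced surgeons' percentile table.
PERCENTILES = (10, 25, 50, 75, 90)
CUTPOINTS = (69.95, 78.85, 86.68, 90.56, 92.91)


def percentile_band(score: float) -> str:
    """Describe where a Module 9 score falls relative to the reference percentiles."""
    i = bisect_right(CUTPOINTS, score)  # number of cut-points at or below the score
    if i == 0:
        return f"below the {PERCENTILES[0]}th percentile"
    if i == len(CUTPOINTS):
        return f"at or above the {PERCENTILES[-1]}th percentile"
    return f"between the {PERCENTILES[i - 1]}th and {PERCENTILES[i]}th percentiles"


def meets_criterion(score: float, percentile: int = 50) -> bool:
    """Hypothetical pass rule: score reaches the cut-point for the chosen percentile.

    Raises ValueError if `percentile` is not one of the tabulated percentiles.
    """
    return score >= CUTPOINTS[PERCENTILES.index(percentile)]


print(percentile_band(83.0))      # between the 25th and 50th percentiles
print(meets_criterion(83.0, 25))  # True
print(meets_criterion(83.0))      # False
```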

Means ± Standard Deviations for Each Variable

| Variable | Mean − 1.5 SD | Mean − 1 SD | Mean | Mean + 1 SD | Mean + 1.5 SD |
|---|---|---|---|---|---|
| Total time | 106.4 | 179.2 | 324.8 | 470.5 | 543.3 |
| Average no of translocations per object | 1.805 | 3.492 | 6.867 | 10.24 | 11.93 |
| No of dropped objects | 1.684 | 8.345 | 21.67 | 34.99 | 41.65 |
| No of movements of right instrument | 93.34 | 226.7 | 493.3 | 760.0 | 893.3 |
| No of movements of left instrument | 55.28 | 183.9 | 441 | 698.2 | 826.7 |
| Total path length of right instrument (cm) | 259.2 | 626.1 | 1360 | 2094 | 2461 |
| Total path length of left instrument (cm) | 301.1 | 565.2 | 1093 | 1622 | 1886 |
| No of properly placed objects | 5 | 5 | 5 | 5 | 5 |
| No of translocations | 9.025 | 17.46 | 34.33 | 51.21 | 59.64 |
| Efficiency of translocations | 35.66 | 48.18 | 73.25 | 98.32 | 110.9 |
| Ave speed of right instrument movement (cm/sec) | 2.378 | 2.535 | 2.85 | 3.165 | 3.322 |
| Ave speed of left instrument movement (cm/sec) | 2.156 | 2.277 | 2.517 | 2.757 | 2.877 |
| Proficiency score | 65.28 | 71.24 | 83.18 | 95.12 | 101.1 |
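Each column of the table of means and standard deviations is the variable's mean shifted by a fixed multiple of its standard deviation. The Python sketch below rebuilds the proficiency-score row. The standard deviation is not reported directly, so it is back-calculated here, as an assumption, as half the distance between the −1 SD and +1 SD columns: (95.12 − 71.24) / 2 = 11.94. The function name is hypothetical.

```python
# Multiples of the standard deviation used as the table's columns.
SD_MULTIPLES = (-1.5, -1.0, 0.0, 1.0, 1.5)


def sd_row(mean: float, sd: float) -> list[float]:
    """Return mean + k * sd for each column multiple k, rounded to 2 decimals."""
    return [round(mean + k * sd, 2) for k in SD_MULTIPLES]


# Proficiency score: mean from the table, SD back-calculated from the +/-1 SD columns.
sd_estimate = (95.12 - 71.24) / 2
print(sd_row(83.18, sd_estimate))  # [65.27, 71.24, 83.18, 95.12, 101.09]
```

Last-digit differences from the table (65.27 vs 65.28, 101.09 vs 101.1) reflect rounding of the published mean and standard deviation. Because the columns are computed arithmetically rather than observed, the +1.5 SD column can exceed the attainable maximum, as with the 110.9 efficiency of translocations and the 101.1 proficiency score.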

Contributor Information

Wm. LeRoy Heinrichs, Department of Obstetrics–Gynecology, Stanford University, Stanford, California, USA; Stanford University Medical Media and Information Technologies (SUMMIT), Stanford, California, USA.

Brian Lukoff, School of Education, Stanford University, Stanford, California, USA.

Patricia Youngblood, Stanford University Medical Media and Information Technologies (SUMMIT), Stanford, California, USA.

Parvati Dev, Stanford University Medical Media and Information Technologies (SUMMIT), Stanford, California, USA.

Richard Shavelson, School of Education, Stanford University, Stanford, California, USA.

Harrith M. Hasson, RealSim Systems, Albuquerque, New Mexico, USA.

Richard M. Satava, Department of Surgery, University of Washington, Seattle, Washington, USA.

Elspeth M. McDougall, Department of Urology, University of California–Irvine, Irvine, California, USA.

Paul Alan Wetter, Society of Laparoendoscopic Surgeons (SLS), Miami, Florida, USA.

References:

- 1. Corrigan J, Kohn L, Donaldson M. To Err Is Human: Building a Safer Health System. Washington, DC: Institute of Medicine; 1999.

- 2. ACGME Outcome Project. Available at: http://www.acgme.org/outcome/project/proHome.asp Accessed December 6, 2006.

- 3. Kavic MS. Competency and the six core competencies [editorial]. JSLS. 2002;6(2):95–97.

- 4. Sachdeva AK. Invited commentary: Educational interventions to address the core competencies in surgery. Surgery. 2004;135(1):43–47.

- 5. Sachdeva AK. Acquisition and maintenance of surgical competence. Semin Vasc Surg. 2002;15(3):182–190.

- 6. Fried GM, Feldman LS, Vassiliou MC, et al. Proving the value of simulation in laparoscopic surgery. Ann Surg. 2004;240(3):518–528.

- 7. Gallagher AG, Ritter EM, Champion H, et al. Virtual reality simulation for the operating room: proficiency-based training as a paradigm shift in surgical skills training. Ann Surg. 2005;241(2):364–372.

- 8. Martin JA, Regehr G, Reznick R, et al. Objective structured assessment of technical skill (OSATS) for surgical residents. Br J Surg. 1997;84:273–278.

- 9. Reznick R, Regehr G, MacRae H, Martin J, McCulloch W. Testing technical skill via an innovative “bench station” examination. Am J Surg. 1997;173(3):226–230.

- 10. Francis NK, Hanna GB, Cuschieri A. The performance of master surgeons on the Advanced Dundee Endoscopic Psychomotor Tester (ADEPT): contrast validity study. Arch Surg. 2002;137(7):841–844.

- 11. Sharp JF, Cozens N, Robinson I. Assessment of surgical competence in parotid surgery using a CUSUM assessment tool. Clin Otolaryngol. 2003;28(3):248–251.

- 12. Heinrichs WL, Lukoff B, Youngblood P, Shavelson R, Dev P. Criterion-based technical training for surgeons. Paper presented at: 15th SLS Annual Meeting and Endo Expo 2006; September 6–9, 2006; Boston, MA.

- 13. Heinrichs WL, Lukoff B, Youngblood P, Shavelson R, Dev P. Proficiency as the objective metric of technical surgical competency on the LTS2000 and the hysteroscopy trainers. Paper presented at: 35th Annual Meeting of the American Association of Gynecological Laparoscopy; November 2005; Chicago, IL.

- 14. Kavic MS. A new use for an old paradigm [editorial]. JSLS. 2006;10(3):281–282.

- 15. Report of the Intercollegiate Surgical Curriculum Project of the Royal College of Surgeons of England. 2005–07. Available at: http://www.rcseng.ac.uk/curriculum Accessed December 10, 2006.

- 16. Surgical training cut in one-half beginning in 2007. October 2004 announcement. Available at: http://news.bbc.co.uk/1/hi/health/3960883.stm Accessed December 10, 2006.

- 17. Satava RM, Cuschieri A, Hamdorf J. Metrics for Objective Assessment of Surgical Skills Workshop. Surg Endosc. 2003;17(2):220–226.

- 18. Satava RM, Gallagher AG, Pellegrini CA. Surgical competence and surgical proficiency: definitions, taxonomy, and metrics. J Am Coll Surg. 2003;196(6):933–937.

- 19. Heinrichs WL, Srivastava S, Montgomery K, Dev P. The fundamental manipulations of surgery: a structured vocabulary for designing surgical curricula and simulators. J Am Assoc Gynecol Laparosc. 2004;11(4):450–456.

- 20. Heinrichs WL. Simulators. In: Wetter PA, Kavic MS, Levinson CJ, Kelley WE Jr, McDougall EM, Nezhat C, eds. Prevention and Management of Laparoendoscopic Surgical Complications. 2nd ed. Miami, FL: Society of Laparoendoscopic Surgeons; 2005:131–139.

- 21. Heinrichs WL. Laparoscopy simulators for training basic surgical skills, tasks, and procedures. In: Nezhat C, Nezhat F, Nezhat C, eds. Operative Gynecologic Laparoscopy With Hysteroscopy. Cambridge, MA: Cambridge Publishers; in press.