Abstract

Background:

The aim of this study was to compare the effectiveness of virtual reality and computer-enhanced videoscopic training devices for training novice surgeons in complex laparoscopic skills.

Methods:

Third-year medical students received instruction on laparoscopic intracorporeal suturing and knot tying and then underwent a pretraining assessment of the task using a live porcine model. Students were then randomized to objectives-based training on either the virtual reality (n=8) or computer-enhanced (n=8) training devices for 4 weeks, after which the assessment was repeated.

Results:

Posttraining performance had improved compared with pretraining performance in both task completion rate (94% versus 18%; P<0.001) and task completion time [181±58 versus 292±24 seconds (mean±SD)]. Performance of the 2 groups was comparable before and after training. Of the subjects, 88% thought that haptic cues were important in simulators. Both groups agreed that their respective training systems were effective teaching tools, but computer-enhanced device trainees were more likely to rate their training as representative of reality (P<0.01).

Conclusions:

Training on virtual reality and computer-enhanced devices had equivalent effects on skills improvement in novices. Despite the perception that haptic feedback is important in laparoscopic simulation training, its absence in the virtual reality device did not impede acquisition of skill.

Keywords: Surgical education, Laparoscopic skills, Simulation training, Virtual reality

INTRODUCTION

The advent of simulation training of minimally invasive surgical skills has created significant opportunities for ongoing development of innovative training methods. Several recent investigations have shown that the use of computer-driven simulation training devices results in transfer of skills into the operating room environment,1–4 and mandatory application of simulation methods has been forwarded as a means of improving surgical results and patient safety.5 A growing number of laparoscopic simulation training platforms and generally limited institutional resources have created difficulties for educators faced with the prospect of introducing these training methods into their programs.6 Ideally, the decision to procure a specific device ought to be based on the anticipated effectiveness in the specific application for which it will be used.

A wide variety of laparoscopic simulators is now available. They can be broadly classified into videoscopic and computer-driven laparoscopic simulation platforms, and the latter are further divided into virtual reality (VR) and computer-enhanced videoscopic (CE) trainers. These trainers primarily differ in their user interface and ability to provide reliable performance measurements. Videoscopic trainers allow manipulation of actual physical objects and require manual data collection. In contrast, VR trainers utilize a virtual environment and provide computer-automated performance metrics. CE trainers attempt to bridge the gap between videoscopic and VR systems: their user interface is similar to the former, but they provide computer-generated performance metrics as VR trainers do.7 Despite these fundamental differences, their intended purpose is the same: to provide assessment and training in specific skills based on sophisticated performance measurement capabilities that would not be available without the use of desktop computing. Effective performance measurement is the basis for establishment of performance objectives and for proficiency-based training, which is emerging as the educational model of choice in skills training.8

In the present study, we examined the training effectiveness of examples of the 2 classifications of computer-driven laparoscopic skills trainers using proficiency-based training models, with the specific aims of (1) demonstrating that novice surgical trainees can acquire complex laparoscopic skills using fundamentally different simulation systems and (2) demonstrating that the use of performance objectives established by a homogeneous group of more advanced trainees will result in similar levels of skills improvement with the 2 systems.

METHODS

Study participants were 16 Tufts University School of Medicine third-year medical students on their General Surgery and Obstetrics and Gynecology clerkships at Baystate Medical Center. The study was exempted from full review by our Institutional Review Board, and informed consent was not required for enrollment. The general study design called for students to undergo a pretraining assessment in laparoscopic intracorporeal suturing and knot tying. Participants were then randomized to train to perform this task using either a VR (n=8) or CE (n=8) simulator. At the end of the 4-week clerkship, a posttraining assessment identical to the pretraining assessment was conducted, and students had to complete an end of study survey characterizing qualitative aspects of their training experience.

Pre- and Posttraining Assessments

The pre- and posttraining assessments consisted of performance of a laparoscopic suturing and intracorporeal knot tying task in a live anesthetized porcine model (25kg to 30kg, Yorkshire pig sedated with intramuscular ketamine 100mg/kg and xylazine 10mg/kg and maintained under general anesthesia using endotracheal isoflurane) under a specific protocol approved by the Institutional Animal Care and Use Committee. Immediately before both assessments, all participants received standardized didactic instruction explaining task performance as described in the SAGES Fundamentals of Laparoscopic Surgery (FLS) course, and viewed the FLS video demonstration of a suturing and knot tying sequence. This was followed by a brief quiz to assess their understanding of the task and associated errors. In the operating room, each student was given 5 minutes (min) to perform the task, which was video-recorded for subsequent analysis. The specific task consisted of approximation of 2 loops of small intestine using standard instrumentation and laparoscopic port placement. This was accomplished with 2–0 silk suture and SH needle (Ethicon) with an initial surgeon's knot and then 2 subsequent square throws. The animal was euthanized after the assessments were completed. Although general instructions were provided, no mentoring or feedback was given during student performance of any task.

Simulation Training

VR simulation training was conducted using MIST-Suture software (SimSurgery, AS, Oslo, Norway). The “Interrupted Suture” task was run on a MIST-VR simulator (Mentice AB, Göteborg, Sweden) with an Immersion Virtual Laparoscopic Interface (Immersion Medical, Gaithersburg, MD) (Figure 1). Performance metrics consisted of a composite score for time and errors.

Figure 1.

MIST-VR simulator (Mentice AB, Göteborg, Sweden) with Immersion Virtual Laparoscopic Interface (Immersion Medical, Gaithersburg, MD) (A). This device was set up to run MIST-Suture software (SimSurgery, AS, Oslo, Norway) on the “Interrupted Suture” task (B).

CE training was accomplished using a ProMIS simulator (Haptica Ltd., Dublin, Ireland) (Figure 2), and a custom model of 2 adjacent 1-inch Penrose drains that permitted the intracorporeal suturing and knot tying task to be performed with the same technique and instrumentation used for the operating room assessments. This simulator consists of a torso model containing optical motion sensors to detect instrument movement characteristics. Performance metrics consisted of time, instrument path length, and smoothness of motion.

Figure 2.

The ProMIS computer-enhanced simulator (Haptica Ltd., Dublin, Ireland) (A). This device was set up for users to approximate 2 segments of Penrose drain with an interrupted suture (B).

To facilitate distributed learning of the task, students were scheduled for 8 one-hour mentored training sessions over the 4-week rotation, but were permitted to have additional training under the same conditions. VR and CE training was mentored by either the full-time skills lab training technician or a surgeon researcher, both of whom were experts in performing the task. The training objectives for each system were based on the performance scores of 2 fourth- and 2 fifth-year general surgery residents. For the purposes of this study, proficiency was defined as achievement of performance scores within one standard deviation (SD) of the predefined objectives on 3 consecutive task iterations.
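The proficiency criterion described above can be sketched in a few lines of Python. This is an illustration only, under several assumptions that are not stated in the study: the metric is one where lower is better (such as task time), "within one SD of the objective" is read as a score no worse than the expert mean plus one SD, and all scores shown are hypothetical. The function names `proficiency_objective` and `first_proficient_iteration` are made up for this sketch and are not part of either simulator's software.

```python
from statistics import mean, stdev


def proficiency_objective(expert_scores):
    """Return (mean, SD) of expert scores, used as the training objective."""
    return mean(expert_scores), stdev(expert_scores)


def first_proficient_iteration(scores, target, sd, run_length=3):
    """Return the 1-based iteration at which the trainee first completes
    `run_length` consecutive iterations within one SD of the target,
    or None if that never happens.

    Assumption: lower scores are better, so "within one SD" is taken
    to mean score <= target + sd.
    """
    streak = 0
    for iteration, score in enumerate(scores, start=1):
        if score <= target + sd:
            streak += 1
            if streak == run_length:
                return iteration
        else:
            streak = 0  # a miss resets the run of consecutive successes
    return None


# Hypothetical expert scores (e.g., seconds) from 4 residents.
target, sd = proficiency_objective([100, 110, 90, 100])

# Hypothetical trainee scores over successive iterations.
trainee = [200, 150, 105, 120, 104, 100, 95]
print(first_proficient_iteration(trainee, target, sd))
```

Resetting the streak on any miss is what makes the criterion "consecutive": a single good iteration followed by a poor one does not count toward proficiency.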

End-of-Study Survey

After the posttraining assessment, students completed a survey soliciting demographic information and prior laparoscopic experience (description of specific activities during cases). Qualitative impressions of the importance of simulation training, the importance of haptic cues in simulators, and the educational value of the specific training system used, were surveyed with responses given on a 3-point scale of “very effective,” “effective,” or “not effective.”

Video Analysis

The pre- and posttraining assessment videos were reviewed by 2 independent surgeon raters, blinded to student identity and training status, using a performance assessment tool previously validated at our institution.9 For the purposes of this analysis, the task was divided into 2 phases. In the “Suturing Phase,” the needle was brought to a functional position, driven through the 2 loops of bowel, and then secured after the suture was pulled through the tissue to the appropriate length to permit knot tying. The “Knot-tying Phase” was defined as the performance of a surgeon's knot and then 2 successive square simple throws to complete a square knot. Video rating consisted of quantifying discrete events during each phase that pertained to efficiency, expert-defined correct behaviors, and specific errors to produce a summative performance score.

Statistical Analysis

Data are expressed as means with 95% confidence intervals (CI). Comparisons between groups were conducted by the Mann-Whitney U test, and comparisons within groups before and after training by the Wilcoxon matched pairs test. Comparisons of achievement of proficiency, task completion rates, and questionnaire data were by Fisher's exact test. The Mann-Whitney U test was performed using Epi Info software (Version 3.3.2, Centers for Disease Control, Atlanta, GA), and the Wilcoxon matched pairs test and Fisher's exact test were performed using GraphPad Instat software (San Diego, CA). Statistical significance was taken at P<0.05.
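For readers unfamiliar with the between-group comparison, the following is a minimal sketch of a Mann-Whitney U test computed as an exact permutation test using only the Python standard library. It is not the Epi Info procedure used in the study, which may rely on a different (for example, approximate) calculation; the function names and the sample data are hypothetical.

```python
from itertools import combinations


def midranks(values):
    """Assign 1-based ranks, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = average
        i = j + 1
    return ranks


def mann_whitney_exact(x, y):
    """Return (U for group x, exact two-sided P value).

    The P value is the fraction of all possible group assignments whose
    U statistic is at least as far from its expected value as the observed U.
    """
    n1, n2 = len(x), len(y)
    ranks = midranks(list(x) + list(y))
    offset = n1 * (n1 + 1) / 2
    u_observed = sum(ranks[:n1]) - offset
    center = n1 * n2 / 2
    observed_deviation = abs(u_observed - center)
    total = extreme = 0
    for idx in combinations(range(n1 + n2), n1):
        u = sum(ranks[i] for i in idx) - offset
        total += 1
        if abs(u - center) >= observed_deviation - 1e-9:
            extreme += 1
    return u_observed, extreme / total


# Hypothetical data: two small, completely separated groups.
u, p = mann_whitney_exact([1, 2, 3], [4, 5, 6])
print(u, p)
```

With 8 subjects per group, as in this study, the enumeration covers 12,870 group assignments, which remains fast enough for an exact calculation.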

RESULTS

The average age of the participants was 26±1 years, and the sex distribution was 63% (n=10) male and 37% (n=6) female. The participants had minimal prior laparoscopic experience, ranging from no experience to holding the camera.

Training Sessions

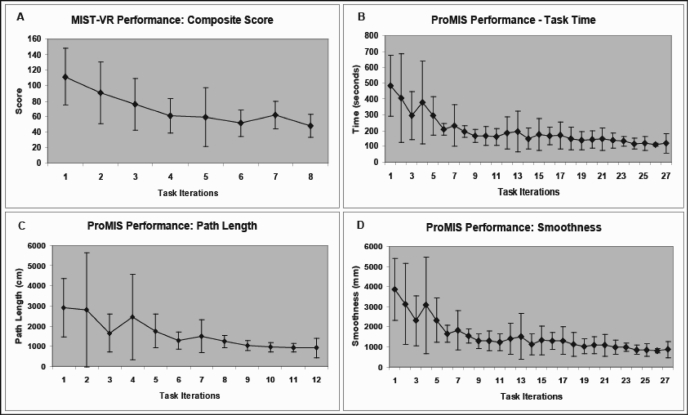

Performance curves for the VR and the CE-trained groups had a classic appearance of early, rapid improvement, followed by a more gradual pattern of incremental improvement (Figure 3). There were no significant differences in the proportion of students who reached proficiency [VR 75% (n=6); CE 88% (n=7)] or in percentage compliance with scheduled training sessions (VR 73%; CE 67%) (Table 1). Total recorded task time was comparable between groups [VR 115 min (95% CI, 61 to 169); CE 111 min (95% CI, 85 to 136); P>0.05]. However, the total number of iterations completed by the VR-trained students was significantly lower than that of CE-trained students [VR 17 (95% CI, 8 to 26); CE 38 (95% CI, 30 to 45); P<0.05], because the time taken to complete one iteration on the VR trainer was longer than that on the CE trainer (VR 9±2 min; CE 3±1 min). Time taken to reach the predefined proficiency level was significantly shorter in the VR group than in the CE group [VR 43 min (95% CI, 28 to 59); CE 75 min (95% CI, 45 to 104); P<0.05].

Figure 3.

Performance curves for trainees on both virtual reality and computer-enhanced devices had a classic appearance of early, rapid improvement, followed by a more gradual pattern of incremental improvement. Virtual reality device: MIST-VR (A); Computer-enhanced device: ProMIS (B, C, D).

Table 1.

Virtual Reality (VR) versus Computer Enhanced Videoscopic (CE) Training Group: Training Session Characteristics*

| | VR Training Group | CE Training Group | P Value |
|---|---|---|---|
| Reached Proficiency (%) | 75 (n = 6) | 88 (n = 7) | 0.5000 |
| Attendance (%) | 73 [56-90] | 67 [57-77] | 0.3337 |
| Total Task Time (min) | 115 [61-169] | 111 [85-136] | 0.5286 |
| Total Iterations | 17 [8-26] | 38 [30-45] | 0.0063 |
| Time to Proficiency (min) | 43 [28-59] | 75 [45-104] | 0.0455 |

*Data expressed as mean [95% CI].

Pre- versus Posttraining Assessment Performance

The interrater reliability for video analysis of pre- and posttraining performance was 0.88. The overall task completion rate was significantly higher posttraining for both the VR-trained and CE-trained groups (P<0.01) (Table 2). The time to task completion decreased on the posttraining assessment overall (P<0.01) and within both the VR (P<0.05) and CE (P<0.01) groups. It must be noted that time to task completion was not a true value for students who did not complete the task, because recorded task time was capped at the 300-second limit. This cap had a larger effect on the pretraining assessment, where 13 of 16 students did not complete the task. Despite this limitation, the decrease in mean time after training was highly significant. Suturing phase time and video analysis score were also compared because all students completed this phase on both pre- and posttraining assessments. A significant improvement was demonstrated for both measures in the VR-trained group but not in the CE-trained group. Comparison of pre- and posttraining total video analysis scores was not feasible due to the very low task completion rate on pretraining assessment (3 of 16 participants). No significant differences were noted between groups on the pretraining assessment with the exception of the suturing phase score, which was higher in the CE group. The 2 groups did not differ in their posttraining assessment time or total video analysis score.
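The effect of the 300-second cap on mean task time can be illustrated with a short sketch. The times below are hypothetical and chosen only to mirror the pretraining situation of 13 noncompleters among 16 students; the function name `summarize_capped_times` is an assumption made for this example.

```python
def summarize_capped_times(times, cap=300.0):
    """Return (completion rate, mean of capped times).

    `times` holds completion times in seconds, with None for a student
    who did not finish within the cap. Noncompleters are recorded at the
    cap, so the resulting mean is a lower bound on the true mean time.
    """
    capped = [cap if t is None or t > cap else t for t in times]
    completed = sum(1 for t in times if t is not None and t <= cap)
    return completed / len(times), sum(capped) / len(capped)


# Hypothetical pretraining data: 13 noncompleters and 3 completers.
pretraining = [None] * 13 + [250.0, 270.0, 280.0]
rate, mean_time = summarize_capped_times(pretraining)
print(rate, mean_time)
```

Because every noncompleter contributes exactly the cap value, the pretraining mean is pinned close to 300 seconds and understates the true time needed, which is why the completion rate is reported alongside the capped time.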

Table 2.

Virtual Reality (VR) versus Computer Enhanced Videoscopic (CE) Training Group Performance

| | VR Training Group | CE Training Group | P Value* |
|---|---|---|---|
| Pre-Training | | | |
| Task Completion Rate (%) | 13 (n = 1) | 25 (n = 2) | 0.5351 |
| Task Completion Time (seconds) | 291 [271-311]† | 293 [279-306]† | 0.6440 |
| Total Task Score | NA | NA | - |
| Suturing Phase Time (seconds) | 150 [97-203] | 102 [84-121] | 0.1415 |
| Suturing Phase Score | 18 [14-22] | 13 [10-15] | 0.0401 |
| Post-Training | | | |
| Task Completion Rate (%) | 88 (n = 7)‡ | 100 (n = 8)‡ | 0.3173 |
| Task Completion Time (seconds) | 206 [165-248]§ | 156 [124-188]§ | 0.2076 |
| Total Task Score | 14 [13-15] | 17 [15-19] | 0.0711 |
| Suturing Phase Time (seconds) | 63 [47-78]§ | 70 [49-91] | 0.8335 |
| Suturing Phase Score | 9 [8-10]§ | 12 [10-15] | 0.0508 |

*P value, VR vs CE training groups, Mann-Whitney U test.

†Upper confidence interval is an artificial construct as task completion time is capped at 300 s.

‡P < 0.05 versus Pre-Training, Fisher's exact test.

§P < 0.05 versus Pre-Training, Wilcoxon test for matched pairs.

Data expressed as mean [95% CI].

End-of-Study Survey

Survey responses indicated that students had minimal exposure to laparoscopic surgery, ranging from no experience to watching cases and holding the camera. Students generally felt that haptic feedback was important during training on simulators, and that the use of the 2 platforms was effective in increasing their skill levels, without any significant differences in the frequency of “effective” and “very effective” responses between the 2 groups (Table 3). However, all students in the CE group felt that their system simulated reality effectively, compared with only 38% in the VR group, a difference that was statistically significant.

Table 3.

Virtual Reality (VR) versus Computer Enhanced Videoscopic (CE) Training Group: Survey of End-of-Study Participant Perceptions

| | VR Training Group | CE Training Group | P Value |
|---|---|---|---|
| Importance of haptic feedback in simulators | 75% | 100% | 0.4667 |
| Effective Teaching Tool | 75% | 100% | 0.4667 |
| Improved Psychomotor Skills | 88% | 100% | 1.0000 |
| Improved Post-training Performance | 75% | 100% | 0.4667 |
| Improved Post-training Comfort with Task | 88% | 100% | 1.0000 |
| Simulates Reality | 38% | 100% | 0.0256 |

Data expressed as percentage of users who rated their simulator “Effective” or “Very Effective.”
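As a worked illustration of the survey comparisons, the sketch below computes a two-sided Fisher's exact test with the Python standard library. It is an independent re-computation, not the GraphPad Instat procedure used in the study. The counts are inferred from the reported percentages on the assumption that all 8 students in each group answered each item (for example, 38% of 8 is taken as 3 students), and the function name `fisher_exact_two_sided` is chosen for this example.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of all tables with the same
    margins that are no more likely than the observed table.
    """
    row1 = a + b
    col1 = a + c
    n = a + b + c + d
    denominator = comb(n, row1)

    def prob(x):
        # Probability that the top-left cell equals x given fixed margins.
        return comb(col1, x) * comb(n - col1, row1 - x) / denominator

    p_observed = prob(a)
    low = max(0, row1 - (n - col1))
    high = min(row1, col1)
    return sum(prob(x) for x in range(low, high + 1)
               if prob(x) <= p_observed * (1 + 1e-9))


# "Simulates Reality": VR 3 of 8 agreed, CE 8 of 8 agreed (inferred counts).
print(round(fisher_exact_two_sided(3, 5, 8, 0), 4))  # 0.0256

# "Effective Teaching Tool": VR 6 of 8, CE 8 of 8 (inferred counts).
print(round(fisher_exact_two_sided(6, 2, 8, 0), 4))  # 0.4667
```

Under these assumptions, both results agree with the corresponding P values in Table 3.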

DISCUSSION

Based on results from previous studies,1–4 we assumed that laparoscopic skills in novices would improve with objectives-based training and did not include an untrained control arm in the study design. This reflects our belief that properly implemented training on simulator systems with demonstrated face and construct validity will result in skills transfer to an OR setting and that examination of performance relative to totally untrained individuals does not have to be pursued in every circumstance. The repeated measures model utilizing each subject as his or her own control was selected instead, permitting us to address the study aim with an appropriate number of subjects. Medical students with minimal prior laparoscopic experience achieved a training benefit within the framework of a 4-week clerkship. Survey results indicate that over the course of their rotations, activities during laparoscopic teaching cases contributed minimally to the observed improvement in skills.

Although there were no significant differences in either the magnitude of skills improvement achieved with training on the VR system versus the CE system, or in the absolute levels of measured skills at the end of training, the study may not have been sufficiently statistically powered to detect small differences in the magnitude of skills transfer. Despite this, the skills transfer effects of simulation training on operative performance can be described as comparable. Although pretraining skills were otherwise homogeneous in the 2 groups, a slightly higher pretraining suturing phase score was observed in the CE-trained group. This is likely due to a sampling phenomenon with a fairly small experimental group size. The proficiency targets proved to be achievable for the majority of students, and the fact that 3 students did not achieve these objectives did not hinder demonstration of skills transfer. Because training was conducted on platforms that used different performance metrics (time and error composite scores on the VR trainer, and time, path length, and smoothness on the CE trainer), it is difficult to compare some of the training results. Students in the VR training group took less time to reach the designated proficiency targets compared with the CE training group. However, we cannot conclude that the VR system facilitates faster learning because we did not stop training on either system when the proficiency targets were reached, and some students did additional task iterations after achieving proficiency levels. In addition, as stated above, 3 of the students did not achieve proficiency levels. Performance objectives were based on historical performance of PGY 4 and 5 residents, with objectives for VR established 1 year before CE objectives. It is possible that uneven skill levels between disparate groups of residents may have confounded the simulator performance data on which the objectives were based, and also contributed to the differing times to achieve proficiency targets.

The inability to make comparisons of total video analysis scores pre- and posttraining due to the low task completion rate on the pretraining assessment was a limitation in our study. Although we have given the results of the suturing phase score, this value is limited as it represents only a portion of the task that is arguably less difficult than knot tying. The low task completion rate was probably because the task is a fairly difficult one for novices and task performance time was limited to 5 minutes. Time was capped based on the expectation that all students would be able to complete the task in the posttraining assessment after sufficient training. We felt that it was important not to allow the initial assessment to constitute a training opportunity by allowing essentially unlimited time to complete the task. Though a truncated task completion time may seem problematic, we successfully demonstrated a significant improvement in task completion rate and task completion time during the posttraining assessment.

Several prior studies have compared the effectiveness of videoscopic and VR trainers. These have made recommendations that both systems are effective in improving skills,10 that there may be training value to concurrent use of both system types,11 or that VR training has an advantage.12,13 The application of performance objectives in our study allowed us, to a great extent, to ensure that desired performance benchmarks on the 2 system types were comparable. Under these circumstances, although a minority of participants (comparable proportions on the 2 systems) did not achieve these objectives, we have demonstrated that comparable levels of performance improvement can be achieved with trainers that are fundamentally different in the experience provided to users.

Although performance results were similar, we have found in our experience that VR trainers offer some practical advantages over videoscopic and CE trainers. These pertain to automated performance metrics that can be easily retrieved and examined, but more importantly, are obtained under very standardized conditions. During self-directed practice, even with the performance measures available with a sophisticated system such as ProMIS (CE trainer), it is impossible to comment on what actually occurred during training unless video recordings are examined. Because VR tasks are, for the most part, rules-based, performance measures reflect achievement of steps specifically defined in the simulator software. Although this facilitates standardization, software-dependent tasks can be less free-form compared with videoscopic and CE trainers, and such constraints can be viewed as a disadvantage.

The VR and CE training devices are roughly equivalent in price ($35,000 to $50,000), and the number of facilitator hours for training on the respective systems was also approximately the same (despite the small difference in “time to reach proficiency” between the systems). Hence, there does not appear to be an advantage that would steer a program director to one or the other of these systems. However, it is important to note that VR trainers may prove to be more cost-effective than videoscopic trainers (computer-enhanced or not) because of features that might influence the quality of the training experience during self-directed practice. These include automation in the course of uniform task setup, consistent qualitative performance metrics, and mentoring cues in more advanced systems. These features allow more effective self-directed practice in VR, whereas achieving a similar effect on a videoscopic (or CE) trainer might necessitate the use of a facilitator, with all associated costs. Our study was not designed to analyze the cost-benefit ratio of individual systems, but we believe it is an important question that ought to be addressed in our future work.

The absence of haptic feedback features on the VR system we used permitted some information to be gleaned on the value of these characteristics in this type of training. The presence of “haptic cues,” defined as “sense of touch” or tactile characteristics associated with interactions between physical objects, may have contributed to the higher perceived level of realism associated with the CE trainer. The end-of-study survey results for the 2 systems were comparable, except that CE trainees were more likely to feel that their system simulated reality effectively compared with VR trainees. Because subjects performed pre- and posttests with real laparoscopic instruments in live porcine models, they were able to compare their simulation training experience with “reality,” despite the fact that laparoscopic surgical exposure was limited. Our results are supported by other studies comparing videoscopic trainers and nonhaptic VR trainers.12,14,15 We hypothesize that this is due to both realism and familiarity issues that do not necessarily result in a degraded training experience. Although the ability to appreciate tactile features of objects with which a surgeon interacts may be perceived as an essential component of learning, there is no compelling evidence to show that it is necessary for the types of skills acquisition we have studied. Despite a clear perception among the participants, irrespective of the training platform used, that haptic features are important in a simulator, the performance results of our study do not substantiate this belief. Because VR trainers at an approximate price point less than $80,000 do not feature haptic user interfaces, this finding is an important one, irrespective of any preconceived beliefs. Considerable development efforts are required to achieve believable force feedback interactions, and newer generation high-fidelity VR simulators that offer this feature are quite expensive.6 It may be that this higher level of fidelity will be shown to be important for full procedural simulations, but for basic manipulative skills training, the haptic component of fidelity appears to be dispensable. The newest full haptic VR trainers may offer force feedback interactions of sufficiently high quality to permit a comparison of training effectiveness with nonhaptic VR systems to be made using identical software platforms. This would remove the variable of fundamentally different operating environments from the comparison.

CONCLUSION

Based on this study's data, we conclude that novice surgical trainees can acquire complex laparoscopic skills using fundamentally different simulation systems provided that training is objectives based and ample opportunities are given to achieve these objectives. However, it is not possible to recommend one simulator type over another. Given the devices that are currently available, it is our belief that expected performance outcomes are more tightly linked to the quality of training and to the clinical assessment methodology than to the specific features of the simulator. Although the assumption that haptic feedback is important for simulator fidelity may be supportable, it appears that use of a VR system with a nonhaptic user interface permits training results very similar to those achieved with a CE system that allows interaction with real physical objects. Based on our use of these 2 systems, we feel that either can be used in a formative training program with the expectation of a good training effect. The results of future use in routine training activities should provide additional opportunities to confirm the achievement of training goals with virtual reality and hybrid, computer-enhanced training platforms.

Footnotes

Financial support provided by the Department of Surgery, Baystate Medical Center, Springfield, MA, USA.

Contributor Information

Prathima Kanumuri, Baystate Medical Center, Department of Surgery, Tufts University School of Medicine, Springfield, Massachusetts, USA.

Sabha Ganai, Baystate Medical Center, Department of Surgery, Tufts University School of Medicine, Springfield, Massachusetts, USA.

Eyad M. Wohaibi, Baystate Medical Center, Department of Surgery, Tufts University School of Medicine, Springfield, Massachusetts, USA.

Ronald W. Bush, Baystate Medical Center, Department of Surgery, Tufts University School of Medicine, Springfield, Massachusetts, USA.

Daniel R. Grow, Baystate Medical Center, Department of Obstetrics and Gynecology, Tufts University School of Medicine, Springfield, Massachusetts, USA.

Neal E. Seymour, Baystate Medical Center, Department of Surgery, Tufts University School of Medicine, Springfield, Massachusetts, USA.

References:

1. Seymour NE, Gallagher AG, Roman SA, et al. Virtual reality training improves operating room performance: results of a randomized, double-blinded study. Ann Surg. 2002;236:458–463.

2. Grantcharov TP, Kristiansen VB, Bendix J, et al. Randomized clinical trial of virtual reality simulation for laparoscopic skills training. Br J Surg. 2004;91:146–150.

3. Hyltander A, Liljegren E, Rhodin PH, Lonroth H. The transfer of basic skills learned in a laparoscopic simulator to the operating room. Surg Endosc. 2002;16:1324–1328.

4. Ganai S, Donroe JA, St Louis MR, et al. Virtual-reality training improves angled telescope skills in novice laparoscopists. Am J Surg. 2007;193:260–265.

5. Schijven MP, Jakimowicz JJ, Broeders IA, Tseng LN. The Eindhoven laparoscopic cholecystectomy training course–improving operating room performance using virtual reality training: results from the first E.A.E.S. accredited virtual reality training curriculum. Surg Endosc. 2005;19:1220–1226.

6. Schijven M, Jakimowicz J. Virtual reality surgical laparoscopic simulators. Surg Endosc. 2003;17:1943–1950.

7. Stylopoulos N, Cotin S, Maithel SK, et al. Computer-enhanced laparoscopic training system (CELTS): bridging the gap. Surg Endosc. 2004;18:782–789.

8. Gallagher AG, Ritter EM, Champion H, et al. Virtual reality simulation for the operating room: proficiency-based training as a paradigm shift in surgical skills training. Ann Surg. 2005;241:364–372.

9. Thompson RE, Earle DB, Kuhn JN, et al. Use of a new performance assessment tool for a complex laparoscopic task. Surg Endosc. 2006;20(suppl 1):S342.

10. Munz Y, Kumar BD, Moorthy K, et al. Laparoscopic virtual reality and box trainers: is one superior to the other? Surg Endosc. 2004;18:485–494.

11. Madan AK, Frantzides CT. Prospective randomized controlled trial of laparoscopic trainers for basic laparoscopic skills acquisition. Surg Endosc. 2007;21:209–213.

12. Hamilton EC, Scott DJ, Fleming JB, et al. Comparison of video trainer and virtual reality training systems on acquisition of laparoscopic skills. Surg Endosc. 2002;16:406–411.

13. Youngblood PL, Srivastava S, Curet M, et al. Comparison of training on two laparoscopic simulators and assessment of skills transfer to surgical performance. J Am Coll Surg. 2005;200:546–551.

14. Madan AK, Frantzides CT, Tebbit C, Quiros RM. Participants' opinions of laparoscopic training devices after a basic laparoscopic training course. Am J Surg. 2005;189:758–761.

15. Botden SM, Buzink SN, Schijven MP, Jakimowicz JJ. Augmented versus virtual reality laparoscopic simulation: what is the difference?: a comparison of the ProMIS Augmented Reality Laparoscopic Simulator versus LapSim Virtual Reality Laparoscopic Simulator. World J Surg. 2007;31:764–772.