Abstract

The useful imaging range in spectral domain optical coherence tomography (SD-OCT) is often limited by the depth dependent sensitivity fall-off. Processing SD-OCT data with the non-uniform fast Fourier transform (NFFT) can improve the sensitivity fall-off at maximum depth by greater than 5dB concurrently with a 30 fold decrease in processing time compared to the fast Fourier transform with cubic spline interpolation method. NFFT can also improve local signal to noise ratio (SNR) and reduce image artifacts introduced in post-processing. Combined with parallel processing, NFFT is shown to have the ability to process up to 90k A-lines per second. High-speed SD-OCT imaging is demonstrated at camera-limited 100 frames per second on an ex-vivo squid eye.

OCIS codes: (170.4500) Optical coherence tomography, (070.2025) Discrete optical signal processing, (110.3010) Image reconstruction techniques

1. Introduction

Spectral domain optical coherence tomography (SD-OCT) is an imaging modality that provides cross-sectional images with micrometer resolution. An SD-OCT system employs a broadband light source together with a spectrometer for detection. A major drawback of this implementation, however, has been the axial depth dependent sensitivity fall-off, in which sensitivity rapidly decreases at deeper locations of the sample. The sensitivity fall-off is due to the finite spectral resolution of the spectrometer as well as the software reconstruction method.

In SD-OCT, image reconstruction is primarily based on the discrete Fourier transform (DFT) of the interference fringes measured in the spectral domain, where the data is transformed from wavenumber k domain to axial depth z domain. DFT can be computed using the fast Fourier transform (FFT) algorithm if the data is uniformly sampled. However, diffraction gratings in SD-OCT systems separate spectral components almost linearly in wavelength λ. The data becomes unevenly sampled in k domain due to the inverse relationship, , and needs to be resampled to achieve uniform spacing in k in order to use FFT. The accuracy of the resampling method is important to the image reconstruction. Traditional resampling methods include linear and cubic spline interpolations. Although relatively fast, linear interpolation introduces a large amount of interpolation error. Alternatively, cubic spline interpolation can be used to reduce this error, but this method requires a long processing time. The performance of these traditional interpolation algorithms degrades as the signal frequency approaches Nyquist sampling rate. This causes the sensitivity to decrease for signals originating at greater depths which correspond to a higher oscillation frequency in the interference fringes.

Numerous techniques have been developed to reduce the interpolation error with additional hardware or elaborate processing algorithms. Hu and Rollins [1] introduced a linear-in-wavenumber spectrometer to eliminate interpolation, however, with the added complexity of a custom-made prism. Zhang et al. [2] have developed a relatively slow (2.1ms per A-line) time-domain interpolation method to improve the sensitivity fall-off by 2dB. Recently, Wang et al. [3] developed an SD-OCT system employing non-uniform discrete Fourier transforms (NDFT) which directly computes DFT with matrix multiplication on the unevenly sampled data. By eliminating the interpolation process, Wang et al. showed that they can improve the sensitivity fall-off. But the processing speed of NDFT is very slow (4.7ms per A-line) because of its implementation with a direct matrix multiplication. The slow processing speed of NDFT imposes a barrier on real-time display and restricts its use to non-real time applications.

In this paper, an SD-OCT system using non-uniform fast Fourier transform (NFFT) is presented to overcome the speed limit of NDFT. It is shown that NFFT can significantly improve the processing speed of NDFT while maintaining the same advantage of NDFT on reduced sensitivity fall-off. Compared with traditional linear and cubic interpolation methods, NFFT improves not only the depth dependent sensitivity fall-off but also the processing time. Using NFFT and parallel computing techniques, our system can process a single A-line in 11.1μs and achieve over 100 frames per second with less than 12.5 dB sensitivity fall-off over the full imaging range of 1.73 mm.

2. SD-OCT processing principles

Built upon spectral interferometry, SD-OCT uses optical interference of its reference and sample beams. The A-line depth profile of the sample is reconstructed by first measuring the spectral interference signal expressed by [4,5]:

| (1) |

In this expression, s(k) is the spectral intensity distribution of the light source. Rr is the reflectivity of the reference arm mirror. Ri and Rj are the reflectivity in the ith and jth layers of the sample; zi is the optical path length difference of the ith layer compared to the reference arm and similarly zij is the path length difference between the ith and jth sample layers. The third term in Eq. (1) encapsulates the axial depth information in the sample which appears as interferences of light waves. The axial reflectivity profile of the sample can be retrieved by performing a discrete Fourier transform from k to axial depth z domain, resulting in the following equation:

| (2) |

Here Γ(z), the Fourier transform of the source spectrum, represents the envelope of its coherence function. The first and second terms in the bracket of Eq. (2) are non interferometric, and contribute to a DC term at z = 0. The third term contains the axial depth information related to the reference path as mentioned above. The final term corresponds to autocorrelation noise between layers within the samples which is usually small and located near z = 0 [4].

2.1 Traditional software reconstruction methods

As described above, the axial reflectivity profile is obtained by an inverse Fourier transform from k domain to z domain. In order to separate the spectral contents of the signal, most SD-OCT systems use a grating based spectrometer, which disperses the light evenly with respect to λ. The inverse relationship between wavenumber and wavelength precludes the use of FFT, unless the data is resampled using interpolation prior to applying the algorithm. This means that the intensity value measured by the spectrometer at evenly spaced λ value needs to be resampled into points at evenly spaced k value. A simple method for resampling, linear interpolation, is used in high-speed SD-OCT systems [6]. The interpolants of linear interpolation are calculated from two nearest data points using a first order linear equation. This method is advantageous in settings where speed is important, but post-FFT results show that the sensitivity fall-off is inferior to more accurate methods such as cubic spline interpolation. Cubic spline interpolation uses a cubic polynomial to interpolate points in intervals between two known data points [7,8]. Although this method shows a better sensitivity roll-off, it is more complex and requires longer processing time. A recent paper by Wang et al. [3] shows that NDFT performs even better in SD-OCT image reconstruction than FFT used with cubic spline interpolation. The NDFT computes DFT directly at unequally spaced nodes in k using a Vendermode matrix [3] with a direct matrix multiplication of complexity O(M2), where M is the number of samples. Although NDFT is one of the more successful algorithms in alleviating the sensitivity fall-off problem [3], it is not however useful for the real-time clinical application of OCT because of its slow processing speed.

2.2 Non-uniform fast Fourier transform (NFFT)

NFFT is a fast algorithm that approximates NDFT. NFFT can significantly improve the processing speed while matching the sensitivity performance of NDFT. NFFT has been used previously in medical image reconstructions such as magnetic resonance imaging [9], computed tomography [10] and ultrasound imaging [11].

The NFFT algorithm was presented by Dutt and Rokhlin [12] in 1991. Similar to the application of FFT to perform a DFT, NFFT is an accelerated algorithm for computing the NDFT by reducing the computational complexity to O(MlogM) [12]. There are three types of NFFT which are distinguished by its inputs and outputs. Type I NFFT transforms data from a non-uniform grid to a uniform grid, type II NFFT goes from uniform sampling to non-uniform sampling and type III NFFT starts on a non-uniform grid that results in another non-uniform grid [13]. This paper will focus on the used of type I NFFT, specifically transforming data non-uniformly sampled in k-domain to axial reflectivity information in the uniform z-domain.

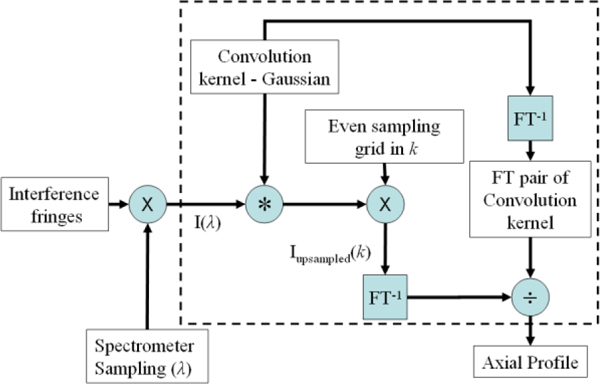

A flow chart of the NFFT processing is shown in Fig. 1 . Basically NFFT computes the NDFT by a convolution based interpolation followed by an upsampled FFT [14]. First, the non-uniformly sampled data is interpolated and upsampled by convolving with an interpolation kernel. It is then Fourier transformed by an evaluation of a standard FFT. The result of the FFT is then subjected to a deconvolution with the Fourier transform pair of the interpolation kernel, producing an approximation of the NDFT. The speed and accuracy of the NFFT algorithm can be adjusted by modifying the upsampling rate and the interpolation kernel. The choice of interpolation kernel can be optimized for different spacings [15]. In this paper, we have used the fast Gaussian gridding method presented by Greengard and Lee [13] which was based on the work of Dutt and Rokhlin [12]. This version of the NFFT uses a Gaussian interpolation kernel and has enhanced speed performance due to the fast Gaussian gridding technique, which is 5-10 times faster than traditional gridding methods [15].NDFT can be used to compute the inverse Fourier transform which is given by the equation,

Fig. 1.

Flow chart of the NFFT processing algorithm.

| (3) |

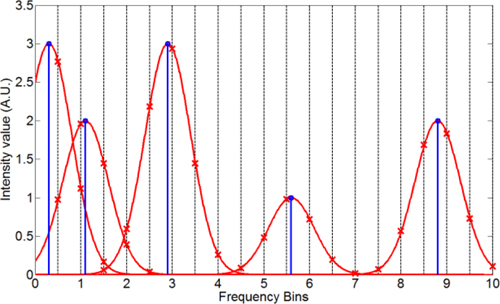

Here zm is the axial depth location, ΔK is the wavenumber range, m is the index for samples in the axial depth z domain, I(kn) is the interference signal sampled at non-uniform k spacing and M is the number of sample points. Equation (3) cannot be computed using existing FFT algorithm because kn are not evenly spaced. NFFT, however, will resample the signal to an evenly spaced grid via a convolution based interpolation as illustrated in Fig. 2 . The signal can be interpolated using an user defined interpolation kernel Gτ(k) [15]. The interpolated signal is then resampled on a uniform grid. In the following calculation, an Gaussian interpolation kernel is selected which is defined as

| (4) |

where

Fig. 2.

Illustration of the resampling of data with Gaussian interpolation kernel into equally spaced grid. The circles are the original unevenly sampled data and the vertical dashed lines are the new uniform grid. A Gaussian function is convolved with each original data point, spreading its power over a few adjacent grid points as shown in the crosses. The new evenly sampled data value is the summation of the values of the crosses on each grid line.

| (5) |

Here M is the number of sample points, R is the upsampling ratio Mr/M where Mr is the length of the upsampled signal, and Msp is the kernel width which denotes the number of grid points on each side of the original data point to which the Gaussian kernel is accounted for in calculation. An infinite length Gaussian would produce the most accurate results, but the value of Msp is often set to a small finite value in consideration of computational efficiency. The use of finite Msp value introduces a truncation error [16] because the tail of the Gaussian is not used. Another type of error introduced in NFFT is aliasing. By resampling the interpolated signal in the k domain onto a uniform grid, aliasing would occur in the z domain [17]. Increasing the upsampling ratio R would decrease the amount of aliasing and hence increase the accuracy of NFFT. The truncation and aliasing errors account for the small deviation between the results of NFFT and NDFT. Readers should refer to [12,15,16] for a detailed derivation of the computational errors and the method of choosing τ. To balance the processing time and the accuracy, we used Msp of three and R of two. Theoretically this combination of Msp and R would result in an error of less than 1.9 × 10−3 when compared to NDFT [15].

The convolution of Gτ(k) with I(k) gives the intermediate function Iτ(k) that can be defined as,

| (6) |

In order to compute the Fourier transform, Iτ(k) is resampled in an evenly spaced grid with Mr samples. In the discrete from,

| (7) |

The discrete Fourier transform of Eq. (7) can then be computed using standard FFT algorithm on the oversampled grid with Mr points.

| (8) |

Once aτ(zm) has been calculated, a(zm) can be calculated by a deconvolution in k space by Gτ(k) or alternatively with a simple division by the Fourier transform of Gτ(k) in z space. The Fourier transform of Gτ(k) can be expressed as,

| (9) |

This would result in

| (10) |

The resulting vector will have Mr points, which is larger than the original M points input because of upsampling. The points a(zm), where m>M, represents deeper locations in the sample in which the interference fringes were not captured by the spectrometer. Recall that the imaging depth a(zm) is determined by the original sampling rate at M points. The extra points in the z domain contain artifacts, primarily introduced through aliasing in interpolation and resampling of the data. No additional physical information from the sample is contained and thus the extra points can be discarded. Hence, the vector of useful data will contain only M points as expected.

The improvement in NFFT over linear and cubic interpolation methods is mainly due to the deconvolution post-FFT. Linear interpolation and cubic spline interpolation could be thought of as a convolution with their respective kernel. However, a deconvolution is not applied after the Fourier transform. Therefore the Fourier transformed data represents both the acquired spectrum and the interpolation kernel. In addition, high frequency oscillations of the interference fringes near the Nyquist frequency vary too rapidly for traditional interpolation methods to perform well. At Nyquist sampling rate, the interference fringes contain no more than two points per period, which cannot be accurately interpolated by linear or cubic interpolation [18,19]. The convolution with a Gaussian function spreads the data over more Fourier transform bins allowing for a more accurate calculation of the Fourier transform.

The input and output of NFFT is quite similar to FFT, both take vectors of complex numbers in one domain and produce its counterpart in another domain. The only difference being that the input of NFFT is not required to be equally spaced. The interpolation step is inherited in the NFFT algorithm. This is certainly an attractive trait of NFFT in which the sensitivity fall-off can be improved with only software changes in the system.

3. System and experiments

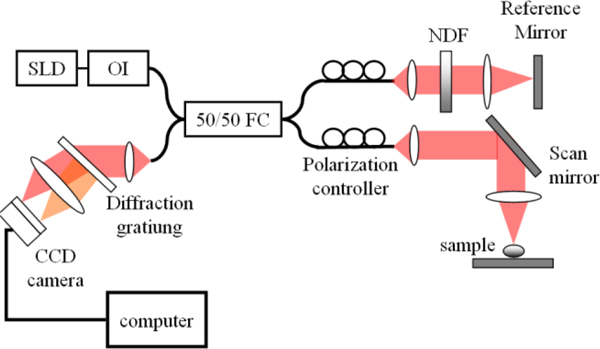

A schematic of the SD-OCT system is shown in Fig. 3 . The source is a superluminescent diode (Superlum) with a center wavelength of 845 nm, a full width at half maximum (FWHM) bandwidth of 45 nm and an output power of 5 mW. The light is delivered to the sample and reference arms through an optical isolator (AC Photonics) and a 50/50 fiber coupler (Thorlabs). The reference arm consists of a collimation lens, a neutral density filter (NDF), a dispersion compensation lens, and a reference mirror. In the sample arm, the light is collimated by a lens and scanned by a galvanometer mirror (Cambridge Technology). The light is delivered to the sample via an achromatic focusing lens with a focal length of 30 mm, resulting in a FWHM spotsize of ~11 μm.

Fig. 3.

Schematic of the SD-OCT system. SLD, superluminescent diode; OI, optical isolator, FC, fiber coupler, NDF: neutral density filter.

The returning beams from the two arms are collected and combined in the fiber coupler, where 50% intensity is delivered to the custom-built spectrometer unit. The spectrometer consists of an achromatic collimation lens, a transmission grating (Wasatch Photonics) with 1200 lines/mm and a set of air-spaced lenses with an effective focal length of 100 mm. The linescan CCD camera (Atmel) has 1024 pixels with 14 × 14 μm2 pixel size. The data from the camera is transferred to a computer via a CameraLink frame grabber (National instrument) for further processing. The spectrometer was designed to realize a source limited axial resolution of 7 μm and minimized sensitivity fall-off. The theoretical spectral resolution is 0.101 nm and the total imaging depth is 1.73 mm.

3.1 Experiment for sensitivity fall-off and artifact reduction

For SD-OCT, the signal sensitivity falls off with increased depth from zero path length within an A-line scan. To measure the improvement of sensitivity fall-off using different processing algorithms, 1000 A-lines were acquired from a mirror reflector in the sample arm at 17 positions along the imaging depth. The camera exposure time is 20 μs for each A-line. The interference fringes were processed using linear interpolation with FFT, cubic spline interpolation with FFT, NDFT and NFFT. The applications of deconvolution to the traditional interpolation methods were also investigated. Linear interpolation can be viewed as a convolution with a triangular function and cubic spline interpolation can be approximated to a convolution with cubic kernel [20]. The width of the triangle and cubic kernel, however, is not constant due to the non-linear spacing in k space. To compute the deconvolution coefficient, the shapes of the respective functions were first averaged before computing the inverse Fourier transform. For the NFFT, averaging is not needed as the Gaussian shape and width is constant.

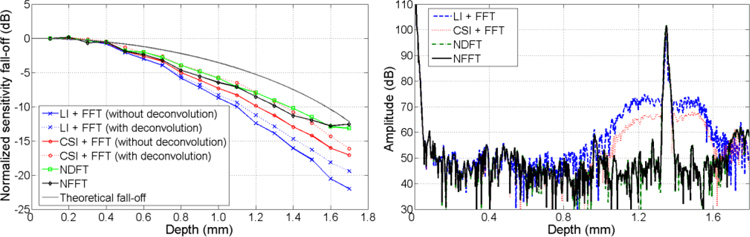

The depth dependent sensitivity fall-off of each method are plotted in Fig. 4 along with the theoretical sensitivity fall-off [5] calculated from the spectral resolution of the spectrometer. It can be seen that at deeper axial depth, the sensitivity fall-off due to the interpolation method is significant. Possible reasons for the difference between the theoretical sensitivity fall-off with the NDFT and NFFT method are misalignment of the camera and inaccuracy in calibration. The NDFT and NFFT achieve the best fall-off of 12.5 dB over the full depth range, while cubic spline interpolation suffers an 18.1 dB decrease in sensitivity. Therefore, NFFT improves the sensitivity fall-off by 5.6 dB. The linear interpolation has a sensitivity fall-off greater than 22 dB, nearly 10 dB worst than the NFFT counterpart. The improvement of NFFT gradually starts from the shallow depths and increases significantly at deeper depths. The application of deconvolution to the traditional interpolation methods shows an improvement in sensitivity fall-off. However, the inaccuracy of deconvolution coefficients based on an averaged convolution shape can affect the performance of the deconvolution. This is a possible reason for their deviation from NDFT method. It should be noted that deconvolution for the traditional linear and cubic spline interpolation methods is not generally applied due to its additional computation time for averaging the convolution shape and the inaccuracy of the deconvolution based on this averaging.

Fig. 4.

Left: Sensitivity fall-off based on different reconstruction methods. LI, Linear interpolation; CSI, cubic spline interpolation; NDFT, non-unifrom discrete Fourier transform; NFFT, non-uniform fast Fourier transform. Right: Typical axial reflectivity profile with a single partial reflector showing shoulder artifacts of linear and cubic spline interpolation. The data for NDFT and NFFT overlaps each other, showing the accuracy of the approximation.

The effect of deconvolution on the signal to noise ratio (SNR) is dependent on the signal location when considering the mean noise floor. The deconvolution coefficients gradually increase from shallower to deeper depths of an A-line, causing an amplification of the signal positioned deeper in the sample. Although simultaneously the noise also gets amplified, its overall effect on the mean noise floor is minimal because the amplification of background noise is averaged over the full range. The SNR for signals at the deeper depths will improve slightly, whereas the SNR at shallower depths will decrease slightly. Nonetheless the overall effect of deconvolution on SNR is minimal.

In addition to the benefit of improved sensitivity fall-off, the NFFT algorithm can remove artifacts by removing shoulders or side-lobes and would improve the SNR when considering the noise near the signal. These side-lobes were not eliminated after the deconvolution with the traditional interpolation methods. The shoulder artifacts are due to the interpolation error as the frequency of the interference fringes of measured OCT signal approaches Nyquist rate, where local interpolation algorithm fails to resample the data with the correct value [18]. Depicted in Fig. 4, a single reflector at 1.3 mm depth produced a single peak in the A-line profile. However, when using linear or cubic spline interpolation for processing, a broad shoulder can be seen in the profile that has also been reported by others [5,19,21]. This shoulder can degrade the image quality when multiple reflections occur closely such as in biological samples. A typical method to reduce this shoulder is to perform zero-padding technique [2,5,19] on the interference spectrum. However, zero-padding is inherently slow due to its extra computational steps and large arrays of points [2]. The NDFT and NFFT methods as shown in Fig. 4 do not produce this shoulder even at deep imaging depth, eliminating the need to perform zero-padding.

3.2 Computation Speed

NDFT improves the sensitivity fall-off but its processing speed is slow and cannot perform real-time imaging. The NFFT can significantly improve the image processing speed while maintaining the same sensitivity fall-off as NDFT. To demonstrate the speed advantage using NFFT compared to tradition methods, the processing time and frame rate of the different methods for 100 B-mode frames of 512 A-lines are averaged and presented in Table 1 . The data presented is measured on a Dell Vostro 420 with an Intel Q9400 Core 2 Quad (2.66 GHz) and 3 GB of memory. The acquisition and processing program is written in VC++ and is compiled using Intel C++ compiler. The processing algorithm converts raw data to an image which includes the Fourier transform of data with FFTW [22] with interpolation methods previously mentioned, numerical dispersion compensation [23], logarithmic scale calculation, contrast and brightness adjustment as well as on screen display. The algorithm was accelerated by processing with all four cores available in the machine. Once the frame grabber and data acquisition board is setup and started, it runs without CPU intervention during a single frame. During this time all four processors are used to process the data. This multi-processing scheme was realized using OpenMP [24]. The processing time evaluation was performed with and without software numerical dispersion compensation; the latter was hardware compensated with a lens in the reference arm.

Table 1. Computation time for one A-line (μs) and display frame rate (fps) of a 512 × 512 pixels image based on different processing methods.

| Processing method | Hardware dispersion compensation | Numerical dispersion compensation | |||

|---|---|---|---|---|---|

| Processing time (μs) | Frame rate (fps) | Processing time (μs) | Frame rate (fps) | ||

| Linear interpolation + FFT | 6.64 | 296 | 10.9 | 179 | |

| Cubic spline interpolation + FFT | 330 | 5.9 | 429 | 4.5 | |

| NDFT | 1470 | 1.3 | 1635 | 1.2 | |

| NFFT | 11.1 | 175 | 18.6 | 95 | |

It can be seen that the processing time of NFFT is on the same order of magnitude as linear interpolation and is approximately 30 times and 130 times faster than cubic spline interpolation and NDFT respectively. The NFFT processing speed of 5.7 ms per 512 A-lines corresponds to using 11.1 us to process a single A-line. This results in a theoretical reconstruction speed at over 90k A-line/s. Even with the numerical dispersion compensation, NFFT can process over 48k A-line/s, which translates to a display rate of 95 frames per second (fps).

The increase in processing speed can be attributed to the reduction of calculation over the full reconstruction of an SD-OCT image that consists of multiple A-lines. For linear and cubic spline interpolations, the interpolation polynomial must be recalculated for each A-line. In NFFT, the Gaussian interpolation kernel is the same for every A-line, and therefore part of the calculation can be performed prior to the acquisition of data.

Alternatively, hardware-based parallel processing has been developed to reconstruct SD-OCT images in real time. Researchers have used field programmable grid arrays [25] and digital signal processor [26] to realize imaging speed of 14k and 4k A-lines/s respectively. A recently developed parallel processing based SD-OCT system using linear interpolation can generate images at 80k A-lines/s [6]. Our image processing based on NFFT can achieve a comparable speed as systems using linear interpolation with FFT. Furthermore, NFFT can improve the sensitivity fall-off compared to the common linear interpolation method.

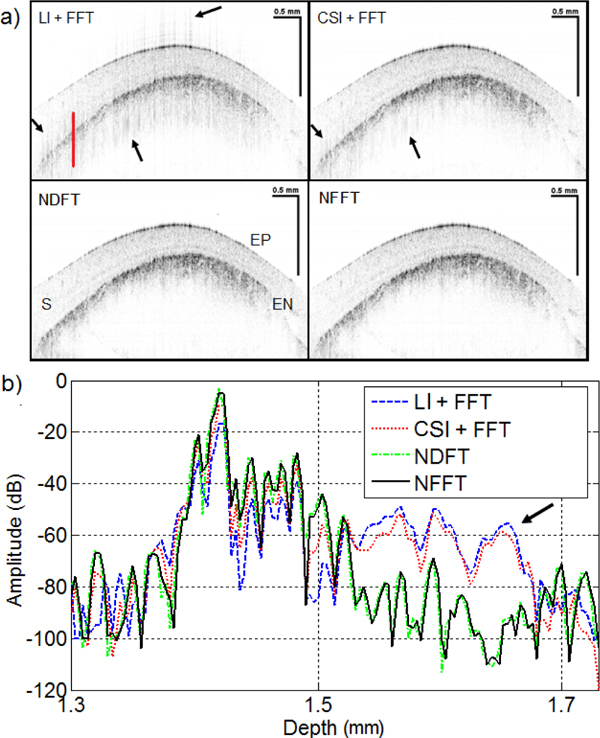

3.3 Demonstration of high-speed Imaging on an ex-vivo squid eye

Finally the performance of the system was demonstrated on ex-vivo imaging of a squid eye, shown in Fig. 5 . The image was taken with an integration time of 20 μs, which limited the frame rate to ~100 fps. Line artifacts and blurring can be observed at the anterior and posterior edges of the cornea when processed with linear and cubic spline interpolations. Both of these artifacts are absent in the NDFT and NFFT produced images. The cause of these artifacts is attributed to the broad shoulder effect, shown previously in Fig. 4. The NFFT is also shown to have higher signal intensities at common peaks in the images. The peak of the NFFT signal is 5dB and 7dB higher than that obtained with the cubic spline interpolation and linear interpolation methods respectively, which is a result of the improved sensitivity fall-off.

Fig. 5.

(a) Corneal images obtained from different processing techniques. The arrows indicate the location of the image artifacts. EP, epithelium; S, stroma; EN, endothelium. (b) Representative part of an A-line located at the solid line in the corneal image. NFFT produced peaks with higher intensity as a result of the improved sensitivity fall-off. LI, Linear interpolation; CSI, cubic spline interpolation; NDFT, non-unifrom discrete Fourier transform; NFFT, non-uniform fast Fourier transform.

4. Conclusion

Although the depth dependent sensitivity fall-off restricts the useful imaging range of an SD-OCT system, it can be minimized through careful design considerations. We have shown that processing SD-OCT data with NFFT can improve the sensitivity fall-off at maximum depth by greater than 5 dB concurrently with a 30 fold decrease in processing time compared to the cubic spline interpolation method. The NFFT algorithm can also remove shoulder artifacts, eliminating the need for time consuming zero-padding techniques. The improvement by using NFFT is demonstrated by camera-limited real-time imaging of ex-vivo squid cornea at over 100 frames per second. The system speed can be further improved by using workstation and server processors with more processing cores. In addition, the NFFT processing method does not increase system cost and complexity with added hardware and is an attractive software upgrade for existing SD-OCT systems. Furthermore, it can be used in conjunction with traditional numerical dispersion compensation techniques.

Acknowledgment

This project is support by the Natural Sciences and Engineering Research Council of Canada and the Canada Foundation for Innovation.

References and links

- 1.Hu Z., Rollins A. M., “Fourier domain optical coherence tomography with a linear-in-wavenumber spectrometer,” Opt. Lett. 32(24), 3525–3527 (2007). [DOI] [PubMed] [Google Scholar]

- 2.Zhang Y., Li X., Wei L., Wang K., Ding Z., Shi G., “Time-domain interpolation for Fourier-domain optical coherence tomography,” Opt. Lett. 34(12), 1849–1851 (2009). [DOI] [PubMed] [Google Scholar]

- 3.Wang K., Ding Z., Wu T., Wang C., Meng J., Chen M., Xu L., “Development of a non-uniform discrete Fourier transform based high speed spectral domain optical coherence tomography system,” Opt. Express 17(14), 12121–12131 (2009). [DOI] [PubMed] [Google Scholar]

- 4.Hausler G., Lindner M. W., “Coherence radar and spectral radar – new tools for dermatological diagnosis,” J. Biomed. Opt. 3(1), 21–31 (1998). [DOI] [PubMed] [Google Scholar]

- 5.Nassif N., Cense B., Park B., Pierce M., Yun S., Bouma B., Tearney G., Chen T., de Boer J., “In vivo high-resolution video-rate spectral-domain optical coherence tomography of the human retina and optic nerve,” Opt. Express 12(3), 367–376 (2004). [DOI] [PubMed] [Google Scholar]

- 6.Liu G., Zhang J., Yu L., Xie T., Chen Z., “Real-time polarization-sensitive optical coherence tomography data processing with parallel computing,” Appl. Opt. 48(32), 6365–6370 (2009). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Maeland E., “On the comparison of interpolation methods,” IEEE Trans. Med. Imaging 7(3), 213–217 (1988). [DOI] [PubMed] [Google Scholar]

- 8.Hou H., Andrews H. C., “Cubic splines for image interpolation and digital filtering,” IEEE Trans. Acoust. Speech Signal Process. 26(6), 508–516 (1978). [Google Scholar]

- 9.Sarty G. E., Bennett R., Cox R. W., “Direct reconstruction of non-Cartesian k-space data using a nonuniform fast Fourier transform,” Magn. Reson. Med. 45(5), 908–915 (2001). [DOI] [PubMed] [Google Scholar]

- 10.De Francesco S., da Silva A. M. F., “Efficient NUFFT-based direct Fourier algorithm for fan beam CT reconstruction,” Proc. SPIE 5370, 666–677 (2004). [Google Scholar]

- 11.Bronstein M. M., Bronstein A. M., Zibulevsky M., Azhari H., “Reconstruction in diffraction ultrasound tomography using nonuniform FFT,” IEEE Trans. Med. Imaging 21(11), 1395–1401 (2002). [DOI] [PubMed] [Google Scholar]

- 12.Dutt A., Rokhlin V., “Fast Fourier transforms for nonequispaced data,” SIAM J. Sci. Comput. 14(6), 1368–1393 (1993). [Google Scholar]

- 13.Lee J., Greengard L., “The type 3 nonuniform FFT and its application,” J. Comput. Phys. 206(iss. 1), 1–5 (2005). [Google Scholar]

- 14.Fessler J. A., Sutton B. P., “Nonuniform fast Fourier transforms using min-max interpolation,” IEEE Trans. Signal Process. 51(2), 560–574 (2003). [Google Scholar]

- 15.Greengard L., Lee J., “Accelerating the Nonuniform Fast Fourier Transform,” SIAM Rev. 46(3), 443–454 (2004). [Google Scholar]

- 16.D. Potts, G. Steidl, and M. Tasche, “Fast Fourier transforms for nonequispaced data: a tutorial,” in Modern Sampling Theory: Mathematics and Applications, J.J.Benedetto and P.Ferreira, eds. (Springer, 2001), Chap. 12, pp. 249–274. [Google Scholar]

- 17.Duijndam A. J. W., Schonewille M. A., “Nonuniform fast Fourier transform,” Geophys. 64, 539–551 (1999). [Google Scholar]

- 18.Rolain Y., Schoukens J., Vandersteen G., ““Signal Reconstruction for Non-Equidistant Finite Length Sample Sets: A “KIS” Approach,” IEEE Trans. Instrum. Meas. 47(5), 1046–1052 (1998). [Google Scholar]

- 19.Dorrer C., Belabas N., Likforman J. P., Joffre M., “Spectral resolution and sampling issues in Fourier-transform spectral interferometry,” J. Opt. Soc. Am. B 17, 1795–1802 (2000). [Google Scholar]

- 20.P. Thevenaz, T. Blu, and M. Unser, Handbook of Medical Imaging (Academic Press, 2000), Chap. 25. [Google Scholar]

- 21.Choma M., Sarunic M. V., Yang C., Izatt J., “Sensitivity advantage of swept source and Fourier domain optical coherence tomography,” Opt. Express 11(18), 2183–2189 (2003). [DOI] [PubMed] [Google Scholar]

- 22.M. Frigo, and S. G. Johnson, “FFTW: an adaptive software architecture for the FFT,” in Proceedings of IEEE International Conference on Acoustics, Speech and Signal Processing. (Institute of Electrical and Electronics Engineers, New York, 1988), pp. 1381–1384. [Google Scholar]

- 23.Cense B., Nassif N., Chen T., Pierce M., Yun S. H., Park B., Bouma B., Tearney G., de Boer J., “Ultrahigh-resolution high-speed retinal imaging using spectral-domain optical coherence tomography,” Opt. Express 12(11), 2435–2447 (2004). [DOI] [PubMed] [Google Scholar]

- 24.OpenMP Architecture Review Board, “The OpenMP API specification for parallel programming,” http://www.openmp.org/

- 25.Ustun T. E., Iftimia N. V., Ferguson R. D., Hammer D. X., “Real-time processing for Fourier domain optical coherence tomography using a field programmable gate array,” Rev. Sci. Instrum. 79(11), 114301 (2008). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Schaefer A. W., Reynolds J. J., Marks D. L., Boppart S. A., “Real-time digital signal processing-based optical coherence tomography and Doppler optical coherence tomography,” IEEE Trans. Biomed. Eng. 51(1), 186–190 (2004). [DOI] [PubMed] [Google Scholar]