Abstract

The prefrontal cortex (PFC) has been implicated in higher-order cognitive control of behavior. Sometimes such control is exercised by suppressing an unwanted response in order to avoid conflict. Conflict occurs when two simultaneously active processes lead to different behavioral outcomes, as in the anti-saccade, go/no-go, and Stroop tasks. We examined whether different types of stimuli in a modified emotional Stroop task would produce interference effects similar to those of the original Stroop-color/word task, and whether the required suppression mechanism(s) would recruit similar regions of the medial PFC (mPFC). By using emotional words and emotional faces in this Stroop experiment, we examined two well-learned automatic behaviors: word reading and recognition of face expressions. In our emotional Stroop paradigm, words were processed faster than face expressions, and incongruent trials yielded longer reaction times and more errors than congruent trials. This novel Stroop effect activated anterior and inferior regions of the mPFC, namely the anterior cingulate cortex and the inferior frontal gyrus, as well as the superior frontal gyrus. Our results suggest that prepotent behaviors such as reading and recognition of face expressions are stimulus-dependent and perhaps hierarchical, and hence recruit distinct regions of the mPFC. Moreover, the faster processing of words compared with face expressions suggests stronger stimulus–response associations for an over-learned behavior than for an instinctive one; this difference could alternatively be explained by the distinction between awareness and selective attention.

Keywords: emotion, face expression, anti-saccade, medial prefrontal cortex, fMRI, inferior frontal gyrus, inhibition

Introduction

Surrounding environments, together with the situations we encounter every day, require us to apply our cognition, plans, and goals to structure our actions through the top-down organization of attention (Allport, 1993; Desimone and Duncan, 1995; Milner and Goodale, 2006; Fuster, 2009). In determining what is important during any given goal-directed activity, we need to suppress erroneous actions that may arise from the ambiguity of our surroundings (Schall et al., 2002). When competing demands run contrary to habit and more than one behavioral option is available, more complex decision-making strategies and coordination of actions are required (Miller and Cohen, 2001; Behrens et al., 2007; Cisek et al., 2009). Previous work has implicated the prefrontal cortex (PFC) in higher cognitive functions, including long-term planning, response suppression, and response selection (Miller, 2000; Duncan, 2001; Miller and Cohen, 2001). This role is exerted when learned and expected stimulus associations that guide behavior are violated and the prepared response must be inhibited to redirect attention (Nobre et al., 1999). A key function of the PFC is evident in situations where we must draw on internal representations of goals and apply the most appropriate rules to achieve them (White and Wise, 1999; Asaad et al., 2000; Wallis et al., 2001; Everling and DeSouza, 2005). A classic laboratory task that creates such situations is the Stroop (1935) task.

In the Stroop task, participants must name the ink color in which a color word is written (Stroop, 1935; MacLeod, 1991). When the ink and the word refer to different colors, a conflict arises between what must be said and what is automatically read. The participant must resolve the conflict between two competing processes: word reading and color naming. The urge to read a word reflects a strong stimulus–response (SR) association; inhibiting such strong SRs is therefore difficult and error-prone. This interference arises from the effortless nature of reading compared with the less habitual behavior of color naming (MacLeod, 1991). When task-irrelevant information such as the color word wins priority in processing by capturing attention, the PFC must exert top-down control to resolve this biased processing (Milham et al., 2003). The medial frontal region has been implicated in the control of voluntary actions, especially in tasks requiring a choice between competing responses (Schlag-Rey et al., 1997; Carter et al., 1999; Ito et al., 2003; Sumner et al., 2007). The anterior cingulate cortex (ACC) in particular is involved in a wide range of behavioral adjustments and cognitive control processes, notably detecting conflict caused by competing responses, and more broadly in processing cognitively demanding tasks (Carter et al., 1998; Kerns et al., 2004; Fellows and Farah, 2005). Imaging studies have confirmed increased activation within the PFC, particularly the ACC, which has been attributed to the cognitive interference that results from simultaneously processing two stimulus features with conflicting SR associations (Pardo et al., 1990; Bench et al., 1993; Vendrell et al., 1995; Bush et al., 1998; Barch et al., 2001; Zysset et al., 2001; Melcher and Gruber, 2009).
Greater conflict-related ACC activity on high-adjustment (i.e., incongruent) trials (MacDonald et al., 2000; Kerns et al., 2004) supports a role for this region in conflict monitoring and in engaging cognitive control, as proposed by the conflict-monitoring hypothesis (Carter et al., 1998; Botvinick et al., 2004). A host of imaging studies have also reinforced the role of the orbitofrontal and inferior regions of the PFC in emotion regulation (Lévesque et al., 2004; Ohira et al., 2006). These two areas have been particularly implicated in the suppression and reappraisal of negative emotional stimuli (Ochsner et al., 2004; Phan et al., 2005). The inferior frontal cortex has also been implicated in response conflict and inhibition, as well as in selective attention (Kemmotsu et al., 2005). Studies of the go/no-go task (Menon et al., 2001; Rubia et al., 2001), the Eriksen flanker task (Bunge, 2002), and the Stroop task (Bush et al., 1998; Matthews et al., 2004; Kemmotsu et al., 2005) have found a role for the inferior frontal cortex in the no-go and incongruent conditions of these tasks. Activation of the inferior frontal region on no-go and incongruent trials suggests that this area is involved in restraining a more habitual response in favor of a more effortful one.

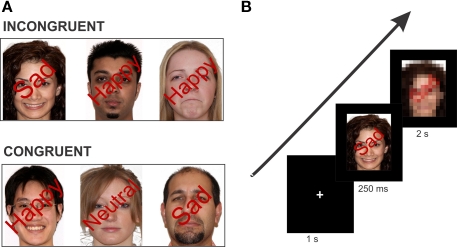

Based on our previous results with the anti-saccade and Stroop tasks (DeSouza et al., 2003; Ovaysikia et al., 2008), we set out to further investigate the role of the medial PFC (mPFC) in an extended face-processing network (Haxby et al., 1996; Ó Scalaidhe et al., 1997; Marinkovic et al., 2000). Using affective faces in an interference task (emotional Stroop) similar to the original Stroop-color/word task introduces response conflict together with the emotion conveyed by faces. This emotional Stroop task involves picture–word interference (Rosinski et al., 1975; Beall and Herbert, 2008), in which descriptive words are superimposed on pictures of individual faces. Each word names an expression (happy, neutral, sad) that is either congruent or incongruent with the emotion depicted by the face (Figure 2A). By pairing word reading with recognition of face expressions, we can test whether socially relevant stimuli (i.e., faces) alter the automatic processing of reading shown by the classic Stroop effect. We hypothesized that conflict would occur because participants' reading would interfere with their attempts to identify face expressions correctly, and that this conflict would therefore recruit frontal regions similar to those of the classic Stroop task (Pardo et al., 1990; Bench et al., 1993; Vendrell et al., 1995; Bush et al., 1998; Zysset et al., 2001; Botvinick et al., 2004; Melcher and Gruber, 2009).

Figure 2.

Example stimulus of each condition type and task paradigm. (A) Stimuli were isoluminant, with superimposed words positioned at a 45° angle over the face image. In the incongruent condition, the face expression and the superimposed emotional word conflict. In the congruent condition, the face expression and the superimposed word describe the same emotion. Note: the stimuli shown here are not exact depictions of those used in the experiment. (B) Schematic overview of one trial. The example is an incongruent trial (a face with a “Happy” expression superimposed with the word “Sad”). Each trial consisted of a fixation cross (1 s), the face stimulus (250 ms), and a response image (2 s) during which the participant made a button response.

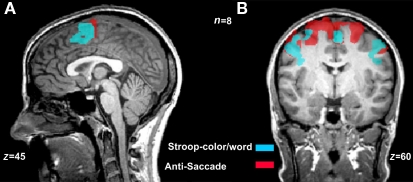

We aimed to shift participants' attention to either the word or the face by switching the instruction (report the written word or report the face expression) across blocks or individual trials, in order to examine which conflict is modulated by the instruction, both behaviorally and across the mPFC network. In a previous experiment (Ovaysikia et al., 2008), we examined the overlapping neural systems recruited during the classic Stroop-color/word task and the anti-saccade task (Hallett, 1978; DeSouza et al., 2003; Figure 1). At first glance, these two tasks seem to involve very different neural mechanisms, since one appears to be primarily cognitive (the Stroop) whereas the other seems to require less cognition and more visual attention (the anti-saccade). We were interested in what they share: the inhibition of a highly automatic process. Our novel emotional Stroop paradigm allows us to examine this suppression network and its pattern of activity in the mPFC further.

Figure 1.

Overlapping activations during the Stroop-color/word and anti-saccade tasks. Color maps are overlaid activations from incongruent and congruent blocks of the Stroop task (blue map) and from anti-saccade and pro-saccade trials of the anti-saccade task (red map) [n = 8, p(Bonferroni) < 0.0001]. (A) Frontal regions activated during the classic Stroop-color/word task included the more anterior areas of the pre-supplementary motor area (preSMA) and the supplementary eye fields (SEF). (B) The network active during the anti-saccade task was similar to that of the Stroop-color/word task, with the addition of the frontal eye fields (FEF).

Materials and Methods

Participants

Eighteen right-handed participants (nine females) aged 21–40 years (mean age 24.1 ± 4.4 years) took part in the study; ten of them participated in the fMRI experiment. No participant reported a history of neurological disorder, and all had normal or corrected-to-normal visual acuity. All were native English speakers or had become fluent in English by the age of 12 years. All procedures were approved by the York University Human Participants Review Subcommittee and the Queen's University Ethics Review Board. All participants gave informed consent before the study and were debriefed.

Stimulus presentation

The experiment was presented using Presentation 12.1 (Neurobehavioral Systems Inc., CA, USA). Words were superimposed at a 45° angle over the face images using Eye Batch 2.1 (Atalasoft Inc., Easthampton, MA, USA), and all images were processed to equalize luminance (isoluminance). In the scanner, images were projected onto a screen with a digital projector (NEC LT265) at a resolution of 1024 × 768. In both the MRI and behavioral studies, all visual stimuli were presented on a black background; fixation crosses subtended 2.4° of visual angle, and faces subtended 23.4° horizontally and 26.4° vertically.
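Visual angle relates stimulus size s to viewing distance d by θ = 2·arctan(s / 2d). The viewing distance in the scanner is not reported, so the sketch below uses a hypothetical 60 cm purely to illustrate how the stated angles translate into on-screen sizes; the helper names are ours and are not part of Presentation.

```python
import math

def visual_angle_deg(size_cm, distance_cm):
    """Full visual angle (degrees) subtended by an object of a given size at a given distance."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))

def size_for_angle_cm(angle_deg, distance_cm):
    """Inverse: physical size needed to subtend a given visual angle at a given distance."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)

# Hypothetical viewing distance: the text does not report the screen distance.
VIEWING_DISTANCE_CM = 60.0

for label, angle in [("fixation cross", 2.4), ("face width", 23.4), ("face height", 26.4)]:
    size = size_for_angle_cm(angle, VIEWING_DISTANCE_CM)
    print(f"{label}: {angle} deg -> {size:.1f} cm at {VIEWING_DISTANCE_CM:.0f} cm")
```

With a real viewing distance substituted, the same two functions convert in either direction between physical size and visual angle.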

Scanning procedure

All imaging was conducted at the Queen's University MRI Facility in Kingston, Ontario, using a 3-Tesla Siemens Magnetom Trio with Tim (Erlangen, Germany) whole-body MRI scanner equipped with a 12-channel head coil. Slices were oriented in the transverse plane. The blood oxygenation level-dependent (BOLD) signal was acquired with T2*-weighted segmented echo-planar imaging (EPI) (32 slices, 64 × 64 matrix, 211 mm × 211 mm field of view, 3.3 mm × 3.3 mm in-plane pixel size, 3.3 mm slice thickness, 0 mm gap, echo time 30 ms, repetition time 1970 ms, flip angle 78°, volume acquisition time 2.0 s, EPI voxel size 3.3 mm × 3.3 mm × 3.3 mm). Each scan comprised 188 volumes, and on average 11 functional scans were collected per participant. Functional data were superimposed on the T1-saturated images.
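As a quick consistency check on the acquisition parameters, the in-plane field of view equals matrix size times pixel size, and the number of volumes per scan follows from run length divided by TR. The sketch below only restates numbers from the text (the 370-s run length comes from the Task paradigm section); rounding to the nearest whole volume is our assumption.

```python
# Acquisition parameters as reported in the Scanning procedure.
MATRIX = 64              # in-plane matrix (64 x 64)
PIXEL_MM = 3.3           # in-plane pixel size (mm)
TR_S = 1.97              # repetition time (s)
SCAN_DURATION_S = 370.0  # functional run length stated in the Task paradigm section

fov_mm = MATRIX * PIXEL_MM                # in-plane field of view, about 211.2 mm
volumes = round(SCAN_DURATION_S / TR_S)   # 370 / 1.97 is about 187.8, i.e. 188 volumes

print(f"FOV = {fov_mm:.1f} mm, volumes per scan = {volumes}")
```

The field of view therefore comes out in millimetres (about 211 mm), and 188 volumes at a 1.97-s TR match the 370-s run.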

Task paradigm

A pseudo event-related design was used to identify the regions activated by suppression of an automatic behavior created by combining emotional faces with word processing. Although we acknowledge other simultaneous processes, such as recognition of familiar faces, intention to press the button, and error monitoring, our analyses focused mainly on the suppression effect, using the incongruent vs. congruent contrast. Before entering the MRI scanner, each participant completed a practice session of 15 trials that used cartoon faces with different combinations of face–word expressions. The purpose of the practice was for participants to learn the task and the button responses expected of them in the scanner. They were instructed which buttons to press to report a happy, neutral, or sad expression/word. Once inside the scanner, participants were reminded of, and briefly tested on, the emotion each button represented.

The stimuli consisted of familiar faces (i.e., members of the lab) and novel faces (i.e., friends of the first author who were unknown to the lab members), male and female, with happy, neutral, and sad expressions (Figure 2A). The pictures were taken with a Sony DSLRA330L camera. There were 216 different face–word combinations drawn from 12 individual identities. Face blocks were presented in a pseudo-random order, alternating among four conditions: congruent familiar, incongruent familiar, congruent unfamiliar, and incongruent unfamiliar. Each face block lasted 10 fMRI volumes, and each expression was shown twice in a row with a different face identity.
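One way to produce a pseudo-random block order of this kind is rejection sampling: shuffle equal numbers of blocks per condition and redraw until a constraint is met. The text does not state the exact constraint, so the no-immediate-repeat rule, the 12-blocks-per-scan count (taken from the Task paradigm timing), and the condition labels below are assumptions for illustration, not the procedure actually used.

```python
import random
from collections import Counter

# Hypothetical labels for the four block conditions described in the text.
CONDITIONS = ["congruent_familiar", "incongruent_familiar",
              "congruent_unfamiliar", "incongruent_unfamiliar"]

def pseudo_random_block_order(n_blocks=12, seed=0):
    """Shuffle an equal number of blocks per condition, rejecting any order that
    places the same condition in two consecutive blocks (an assumed constraint)."""
    if n_blocks % len(CONDITIONS) != 0:
        raise ValueError("n_blocks must be a multiple of the number of conditions")
    rng = random.Random(seed)  # seeded so an order can be reproduced per scan
    order = CONDITIONS * (n_blocks // len(CONDITIONS))
    while True:
        rng.shuffle(order)
        if all(a != b for a, b in zip(order, order[1:])):
            return list(order)

order = pseudo_random_block_order(12, seed=1)
print(order)
print(Counter(order))  # each condition appears 12 / 4 = 3 times
```

Rejection sampling keeps the condition counts balanced exactly while still randomizing the sequence; with four conditions, a valid order is typically found within a few shuffles.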

Each functional scan consisted of twelve 20-s face blocks, each containing six face stimuli, interleaved with thirteen 10-s fixation blocks, for a total of 370 s (about 6.2 min). Inside the scanner, participants lay supine in a darkened room and held a four-button joystick (Current Designs, Inc., Philadelphia, PA, USA) in the right hand, using three of its buttons to respond. Each scan began with a written instruction reminding participants to report either the “FACE EXPRESSION (happy, neutral, sad)” or the “WRITTEN WORD (happy, neutral, sad)” by pressing the corresponding button as quickly as possible. The instruction was displayed for 1 s, followed by a fixation cross that participants fixated for 1 s (Figure 2B). The fixation cross was followed by the face stimulus (250 ms) and then by the response image (2 s), which gave participants time to press the appropriate button. The next fixation cross appeared at the end of the response image. Each participant repeated the experimental scan four to six times for each instruction (i.e., face expression or written word), for a total of 9–12 scans.
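The block and trial durations can be checked against each other. This sketch only reuses the durations stated above; it leaves out the 1-s instruction screen because the text does not say how it fits into the 370-s total, and it makes no claim about how the remaining time in each block was filled.

```python
# Timing values taken from the Task paradigm section (seconds).
N_FACE_BLOCKS, FACE_BLOCK_S = 12, 20.0
N_FIX_BLOCKS, FIX_BLOCK_S = 13, 10.0
TRIALS_PER_BLOCK = 6
FIXATION_S, FACE_S, RESPONSE_S = 1.0, 0.25, 2.0
VOLUME_S = 2.0  # volume acquisition time from the Scanning procedure

run_s = N_FACE_BLOCKS * FACE_BLOCK_S + N_FIX_BLOCKS * FIX_BLOCK_S  # 240 + 130 = 370 s
trial_s = FIXATION_S + FACE_S + RESPONSE_S                         # 3.25 s per trial
trials_s = TRIALS_PER_BLOCK * trial_s                              # 19.5 s of trials per block
slack_s = FACE_BLOCK_S - trials_s                                  # 0.5 s unaccounted for
volumes_per_block = FACE_BLOCK_S / VOLUME_S                        # 10 volumes per face block

print(f"run = {run_s:.0f} s ({run_s / 60:.1f} min), trial = {trial_s} s, "
      f"six trials = {trials_s} s, slack = {slack_s} s, volumes/block = {volumes_per_block:.0f}")
```

Six 3.25-s trials fit within each 20-s (10-volume) face block, which is consistent with the block duration given in the Materials and Methods.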

Our aim in the fMRI portion of this experiment was to measure the differential BOLD signals as induced by: (1) the instruction effect, and (2) the incongruence vs. congruence effect.

Behavioral analysis

Statistics are reported both for the fMRI participants alone (n = 10; Figures 3C,D and 4C,D) and for all participants, combining the fMRI and behavioral groups (n = 18; Figures 3A,B and 4A,B).

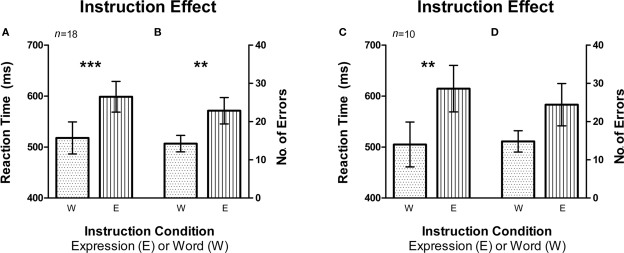

Figure 3.

Instruction effect, showing the automaticity of word reading compared with expression recognition. Error bars show the standard error of the mean (SEM). (A) Average reaction time (RT) was longer in the expression instruction condition than in the word instruction condition (n = 18, fMRI and behavioral subjects). (B) On average, more errors were made in the expression instruction condition than in the word instruction condition (n = 18, fMRI and behavioral subjects). (C,D) The same pattern of RT and number of errors was observed for the fMRI subjects alone (n = 10). ** p < 0.005, *** p < 0.0005.

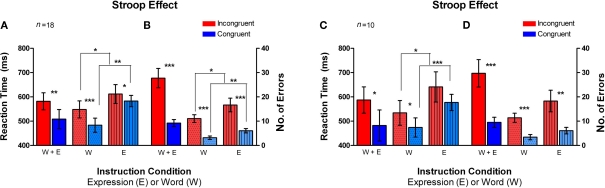

Figure 4.

Classic Stroop effect in the emotional Stroop task. Incongruent trials (red bars) had overall longer average RTs and more errors than congruent trials (blue bars). Error bars show the standard error of the mean (SEM). (A) Average reaction time (RT), plotted on the left axis, for the different instruction conditions (n = 18, fMRI and behavioral subjects). (B) Number of errors for the same conditions, plotted on the right axis (n = 18, fMRI and behavioral subjects). (C,D) The same pattern of RT and error differences was observed for the fMRI subjects alone (n = 10). * p < 0.05, ** p < 0.005, *** p < 0.0005.

Reaction time (RT) was defined as the latency of the participant's button press (happy, neutral, or sad), measured from stimulus onset to the response. RTs were pooled within the incongruent and congruent conditions and within each instruction set (expression and word). Errors were averaged within the incongruent and congruent conditions, separately for the face instruction and word instruction sets. Statistical comparisons used paired Student's t-tests.
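For reference, the paired t statistic is the mean of the within-participant differences divided by its standard error, with n − 1 degrees of freedom. The sketch below computes it with the Python standard library on made-up numbers; it is not the analysis script used in the study, and a p-value would additionally require the t distribution (for example, `scipy.stats.ttest_rel` returns both the statistic and a p-value).

```python
import math
import statistics

def paired_t(a, b):
    """Paired (dependent-samples) Student's t statistic and degrees of freedom."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)  # stdev uses the n - 1 denominator
    return statistics.mean(diffs) / se, n - 1

# Made-up per-participant mean RTs (ms), for illustration only.
incongruent = [812.0, 790.0, 845.0, 760.0, 901.0]
congruent = [780.0, 775.0, 810.0, 755.0, 860.0]
t, df = paired_t(incongruent, congruent)
print(f"t({df}) = {t:.3f}")
```

Pairing removes between-participant differences in overall speed, which is why the test is applied to per-participant condition means rather than to pooled trials.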

fMRI data analysis

The BrainVoyager QX software package (version 1.10.4, Brain Innovation, Maastricht, The Netherlands) was used for data analysis. Functional data were superimposed on anatomical brain images, aligned to the anterior commissure–posterior commissure (AC–PC) plane, and transformed into Talairach stereotaxic space (Talairach and Tournoux, 1988). For each participant, the EPI images were realigned to the first image (T1-saturated volume) to correct for head movement during the scan. Motion correction used trilinear interpolation (Ciulla and Deek, 2002).
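Motion correction estimates rigid-body parameters for each volume and then resamples the volume, and the resampling step needs an interpolation rule. The function below is a generic trilinear interpolation sketch (nested lists indexed as `volume[z][y][x]`, an assumed layout); it is not BrainVoyager's implementation and omits the parameter-estimation step entirely.

```python
import math

def trilinear(volume, x, y, z):
    """Trilinearly interpolate volume[z][y][x] at a fractional voxel position.
    Assumes 0 <= coordinate <= size - 1 along each axis."""
    x0, y0, z0 = int(math.floor(x)), int(math.floor(y)), int(math.floor(z))
    # Clamp the upper neighbour so positions on the last voxel stay in bounds.
    x1 = min(x0 + 1, len(volume[0][0]) - 1)
    y1 = min(y0 + 1, len(volume[0]) - 1)
    z1 = min(z0 + 1, len(volume) - 1)
    dx, dy, dz = x - x0, y - y0, z - z0

    def lerp(a, b, t):
        return a + (b - a) * t

    # Interpolate along x on four cube edges, then along y, then along z.
    c00 = lerp(volume[z0][y0][x0], volume[z0][y0][x1], dx)
    c10 = lerp(volume[z0][y1][x0], volume[z0][y1][x1], dx)
    c01 = lerp(volume[z1][y0][x0], volume[z1][y0][x1], dx)
    c11 = lerp(volume[z1][y1][x0], volume[z1][y1][x1], dx)
    c0 = lerp(c00, c10, dy)
    c1 = lerp(c01, c11, dy)
    return lerp(c0, c1, dz)

# A 3 x 3 x 3 test volume whose intensity is a linear function of position;
# trilinear interpolation reproduces such functions exactly.
vol = [[[x + 2 * y + 3 * z for x in range(3)] for y in range(3)] for z in range(3)]
print(trilinear(vol, 0.5, 1.25, 1.5))  # prints 7.5
```

Trilinear interpolation is fast and never overshoots the neighbouring voxel values, at the cost of some spatial smoothing compared with higher-order schemes such as sinc interpolation.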

Regions of interest (ROIs) were defined functionally using the general linear model (GLM) as implemented in BrainVoyager QX, with separate predictors for Fixation (fix), Incongruent Happy (InconHAP), Incongruent Neutral (InconNEUT), Incongruent Sad (InconSAD), Congruent Happy (ConHAP), Congruent Neutral (ConNEUT), and Congruent Sad (ConSAD). There were two sets of these seven predictors, one for each instruction condition (i.e., expression instruction and word instruction). The predictors were convolved with the hemodynamic response function (two-gamma HRF: onset, 0; time to response peak, 4 s; response dispersion, 1; response-to-undershoot ratio, 6; time to undershoot peak, 15 s; undershoot dispersion, 1), and the resulting group model across all participants (n = 10) was used to obtain a separate functional map for each instruction condition at a Bonferroni-corrected threshold (p ≤ 0.05). These functional maps were overlaid on each participant's data to extract BOLD signal changes for the regions defined by the ROIs. ROIs were labeled using anatomical landmarks, by comparing their Talairach coordinates with those in the Talairach applet (Lancaster, 2000) and with those identified in previous studies (Picard and Strick, 2001; DeSouza et al., 2003; Matsuda et al., 2004; Kemmotsu et al., 2005). Talairach coordinates reported in the fMRI results are those of the peak-activation voxel in each region. Event durations were modeled using the average behavioral RT (n = 10) for incongruent and congruent trials in the corresponding blocks, so that each modeled event covered the stimulus presentation (250 ms) plus the time taken to process the response (average RT).
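To make the HRF settings concrete, the sketch below builds a two-gamma HRF from the listed parameters and convolves it with a boxcar whose duration is 250 ms plus a mean RT, as described above. We assume a common parametrization in which each gamma density has scale equal to its dispersion and shape equal to peak/dispersion + 1, which places its mode at the stated peak time; BrainVoyager's internal formula may differ in detail. The onsets, the 0.9-s mean RT, and the helper names are hypothetical.

```python
import math

def gamma_pdf(t, shape, scale):
    """Gamma probability density function; zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t / scale) / (math.gamma(shape) * scale ** shape)

def two_gamma_hrf(t, peak=4.0, disp=1.0, ratio=6.0, under_peak=15.0, under_disp=1.0):
    """Two-gamma HRF with the parameters listed in the text.
    Assumed parametrization: scale = dispersion, shape = peak / dispersion + 1."""
    response = gamma_pdf(t, peak / disp + 1.0, disp)
    undershoot = gamma_pdf(t, under_peak / under_disp + 1.0, under_disp)
    return response - undershoot / ratio

def convolved_predictor(onsets_s, duration_s, n_vols, tr_s, dt=0.1, hrf_len_s=30.0):
    """Boxcar events convolved with the HRF on a fine time grid, sampled once per volume."""
    n_fine = int(round(n_vols * tr_s / dt))
    box = [0.0] * n_fine
    for onset in onsets_s:
        start = int(round(onset / dt))
        stop = min(n_fine, int(round((onset + duration_s) / dt)))
        for i in range(start, stop):
            box[i] = 1.0
    kernel = [two_gamma_hrf(k * dt) * dt for k in range(int(round(hrf_len_s / dt)))]
    conv = [sum(box[i - k] * kernel[k] for k in range(min(i + 1, len(kernel))))
            for i in range(n_fine)]
    return [conv[min(n_fine - 1, int(round(v * tr_s / dt)))] for v in range(n_vols)]

# Hypothetical example: event duration = 250 ms stimulus + 0.9 s mean RT (made-up RT).
MEAN_RT_S = 0.9
onsets = [10.0, 13.25, 16.5]  # hypothetical trial onsets (s) within one face block
pred = convolved_predictor(onsets, 0.25 + MEAN_RT_S, n_vols=30, tr_s=1.97)
print([round(p, 3) for p in pred])
```

Lengthening the boxcar by the mean RT mainly raises and broadens the predicted response, which is why incongruent and congruent events were given different durations.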

BOLD signal data analysis

BOLD percent signal change was computed for each participant by averaging the volumes for the three expressions (happy, neutral, sad) within each incongruent and congruent condition block, separately for the expression instruction and word instruction conditions. The average of all fixation data points served as the baseline. Analysis of variance (ANOVA) was used to compare the six expression conditions within each of these ROIs; this mixed-design ANOVA (see Results) was performed in the Statistical Package for the Social Sciences (SPSS) v15.0 (SPSS Inc., IL, USA).
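Percent signal change relative to a fixation baseline is 100 × (condition mean − baseline mean) / baseline mean. The minimal sketch below uses hypothetical ROI values in arbitrary scanner units; the function name is ours.

```python
import statistics

def percent_signal_change(condition_vols, fixation_vols):
    """Mean signal in a condition relative to the mean of all fixation volumes, in percent."""
    baseline = statistics.mean(fixation_vols)
    return 100.0 * (statistics.mean(condition_vols) - baseline) / baseline

# Hypothetical ROI values (arbitrary scanner units), for illustration only.
fixation = [1000.0, 1002.0, 998.0, 1000.0]
incongruent = [1012.0, 1010.0, 1014.0]  # e.g. happy/neutral/sad volumes pooled
print(f"{percent_signal_change(incongruent, fixation):.2f}%")  # prints 1.20%
```

Normalizing by the fixation mean makes ROIs with different raw intensities comparable on a common percentage scale.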

Results

Behavior

To examine whether instruction affected RT and number of errors, we sorted the data by instruction set (Word and Expression; Figure 3). Sets that required participants to report the face expression had a significantly longer mean RT than sets that required them to report the written word (i.e., Word instruction sets) [t(17) = 4.684, p < 0.0002, Figure 3A; t(9) = 4.654, p < 0.005, Figure 3C]. Likewise, the Expression instruction set elicited significantly more errors than the Word instruction set [t(17) = 3.443, p < 0.005, Figure 3B]. Within the fMRI group, the difference in errors between the Expression and Word instructions only approached significance [t(9) = 2.222, p = 0.053, Figure 3D].

Averaged across the two instruction sets (W + E), incongruent trials yielded a significantly longer average RT than congruent trials [t(17) = 2.824, p < 0.01; t(9) = 2.094, p < 0.05, solid colors in Figures 4A,C]. In the Word instruction set (W), incongruent trials had a significantly longer mean RT than congruent trials [t(17) = 4.514, p < 0.0002; t(9) = 2.758, p < 0.01, dotted bars in Figures 4A,C]. In the Expression instruction set (E), the difference in mean RT between incongruent and congruent trials approached significance [t(17) = 1.451, p = 0.08; t(9) = 1.689, p = 0.06, striped bars in Figures 4A,C]. Significantly more errors were also made on incongruent than on congruent trials, in both the Word instruction set [t(17) = 6.198, p < 0.0001; t(9) = 6.197, p < 0.0001, dotted bars in Figures 4B,D] and the Expression instruction set [t(17) = 4.496, p < 0.0002; t(9) = 3.387, p < 0.005, striped bars in Figures 4B,D]. Thus, the average total number of errors across both instruction sets was significantly higher on incongruent trials [t(17) = 6.198, p < 0.0001; t(9) = 5.201, p < 0.0003, solid colors in Figures 4B,D].

We also analyzed the effect of familiarity of the two sets of face stimuli on RT and number of errors, by recategorizing the conditions according to whether they contained familiar or unfamiliar faces. Ignoring congruency, we averaged RTs in the familiar and unfamiliar conditions across both instruction sets (i.e., Expression and Word). Neither RT nor number of errors differed significantly between familiar and unfamiliar faces in any comparison, so we collapsed across familiarity for all remaining analyses.

fMRI

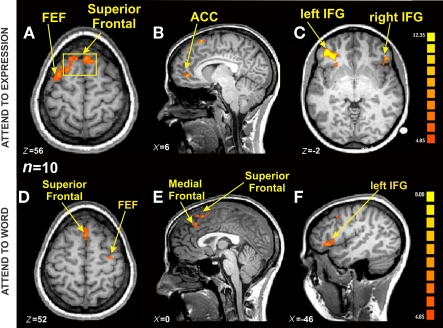

We identified frontal regions that were more active on incongruent than on congruent trials (contrast: incongruent–congruent) across all participants (n = 10; Table 1). All three expressions (i.e., happy, neutral, sad) were collapsed within the incongruent condition and compared with all three expressions in the congruent condition. Given the congruency effect observed behaviorally, this contrast allows us to characterize the activation pattern associated with suppressing the automatic behavior, as modulated by attention to the instructions. We therefore examined activation separately for the two instruction sets: attend to the “Expression” (i.e., report the face expression) or attend to the “Word” (i.e., report the written word). As shown in Figure 5A, during the Expression instruction there was bilateral activation of the superior frontal gyrus (x = −8, y = 22, z = 54). Figure 5B shows a right-lateralized activation of the most anterior part of the cingulate cortex (ACC; x = 12, y = 44, z = 2). The strongest activation was in the left inferior frontal gyrus (Figure 5C; IFG; x = −38, y = 33, z = 1), with a relatively smaller right IFG activation (x = 43, y = 28, z = −3). We also observed activation of the frontal eye fields (FEF) in the hemisphere opposite to that seen in the Word instruction (left: x = −30, y = 2, z = 57).

Table 1.

Regions of significant activation resulting from Incongruent blocks compared with Congruent blocks in the Expression and Word instruction conditions (n = 10).

| Region | Peak voxel x | Peak voxel y | Peak voxel z | No. of active voxels | t-score | p-value |
|---|---|---|---|---|---|---|
| WORD INSTRUCTION | | | | | | |
| L IFG | −47 | 28 | 6 | 620 | 6.32 | <0.001 |
| R IFG | 43 | 25 | −3 | 373 | 7.02 | <0.001 |
| Medial frontal | −5 | 36 | 42 | 175 | 6.74 | <0.001 |
| Precentral gyrus | 34 | −11 | 49 | 212 | 6.89 | <0.001 |
| R inferior temporal | 52 | −33 | 3 | 133 | 5.66 | <0.001 |
| L superior temporal | −44 | 16 | −21 | 125 | 5.76 | <0.001 |
| Cuneus | 16 | −80 | 33 | 127 | 7.12 | <0.001 |
| FACE INSTRUCTION | | | | | | |
| L IFG | −35 | 37 | −3 | 1094 | 13.63 | <0.001 |
| R IFG | 46 | 28 | −3 | 123 | 7.51 | <0.001 |
| Medial frontal | −29 | 4 | 54 | 494 | 10.84 | <0.001 |
| Anterior cingulate | 19 | 46 | 0 | 296 | 10.18 | <0.001 |
| Superior frontal | 4 | 22 | 54 | 1800 | 10.17 | <0.001 |

Figure 5.

fMRI BOLD activation for Incongruent blocks compared with Congruent blocks in the Expression and Word instruction conditions [n = 10, p(Bonferroni) < 0.05]. (A) Axial view showing the same superior frontal activation as in (B) (yellow square) and a lateralized FEF activation in the left hemisphere (TAL coordinates: x = 5, y = 22, z = 56). (B) Sagittal view centered on the ACC in the right hemisphere (TAL coordinates: x = 6, y = 45, z = 1). (C) Axial view showing bilateral IFG activation (TAL coordinates: x = −39, y = 39, z = −2). (D) Axial view showing superior frontal activation and a lateralized FEF activation in the right hemisphere (TAL coordinates: x = 0, y = −20, z = 52). (E) Sagittal view centered on the superior and medial frontal regions (TAL coordinates: x = 0, y = −9, z = 22). (F) Sagittal view centered on the left IFG (TAL coordinates: x = −46, y = 36, z = 4).

Overall, a similar activation pattern was observed during the attend-to-Word instruction. The superior frontal cortex was activated bilaterally (Figure 5D; x = 0, y = 23, z = 50), as was the IFG (Figure 5F; right: x = 52, y = 31, z = 5; left: x = −49, y = 33, z = 9). The medial frontal region inferior to the superior frontal activation in Figure 5E showed significant activation in the left hemisphere (x = −3, y = 32, z = 40). FEF activation was now observed in the right hemisphere (x = 31, y = 9, z = 51). Other regions showing significant BOLD activation during the Word instruction set included the left superior temporal gyrus (x = −1, y = 23, z = 50), bilateral medial temporal gyrus (left: x = −54, y = −18, z = −3; right: x = 44, y = 16, z = 19), and the right cuneus (x = 13, y = 78, z = 33). ACC activation was absent during the Word instruction set, presumably because very few errors were made in the Word instruction condition (see the “W” bars for number of errors, Figure 4D). Unfortunately, our participants were highly accurate (on average 11 errors on incongruent trials and 3 errors on congruent trials per participant), so there were too few error trials to map error-related activity. In future studies we plan to make the task more difficult in order to examine this putative error network.

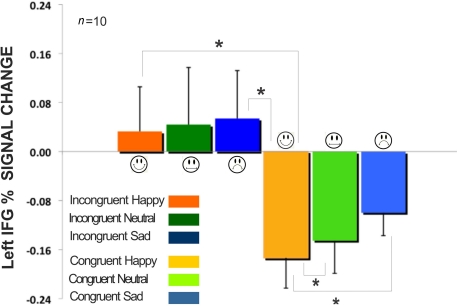

We examined signal intensity in the medial frontal, superior frontal, and inferior frontal regions. The standardized BOLD signal was computed across all participants (n = 10) and compared between incongruent expressions (happy, neutral, sad) and congruent expressions (happy, neutral, sad), for both the expression instruction and word instruction conditions. All BOLD signal results were submitted to a 6 × 2 × 3 mixed-design ANOVA with expression (within-subjects factor, six levels: incongruent happy, incongruent neutral, incongruent sad, congruent happy, congruent neutral, and congruent sad), instruction (within-subjects factor, two levels: word instruction and expression instruction), and brain region (between-subjects factor, three levels: right inferior frontal, left inferior frontal, and medial frontal). There was a significant three-way interaction of expression, instruction, and brain region [sphericity assumed: F(10,135) = 2.96, p < 0.005, partial η² = 0.18], but no main effect of expression and no main effect of instruction. When the face expression was incongruent with the superimposed emotional word, reporting the written word produced a higher BOLD signal in the left IFG (Figure 6). The larger signal for incongruent compared with congruent expressions was significant, with congruent happy showing the largest difference from the other conditions [incongruent happy vs. congruent happy: t(5) = 2.64, p < 0.05; incongruent sad vs. congruent happy: t(5) = 2.76, p < 0.05; congruent neutral vs. congruent happy: t(5) = 2.62, p < 0.05; congruent sad vs. congruent happy: t(5) = 4.03, p < 0.05].
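The reported degrees of freedom and effect size can be reproduced arithmetically if each of the three ROIs contributes data from the 10 participants as a separate group, which is how a between-subjects “brain region” factor is set up here (our reading of the design). Partial η² then follows from F and its degrees of freedom as F·df1 / (F·df1 + df2). The helpers below are a hypothetical consistency check, not SPSS output.

```python
def mixed_anova_interaction_df(levels_within, levels_between, n_per_group):
    """Effect and error df for the highest-order interaction in a mixed design with
    one between-subjects factor and any number of within-subjects factors
    (sphericity assumed)."""
    within_product = 1
    for k in levels_within:
        within_product *= k - 1
    effect_df = (levels_between - 1) * within_product
    error_df = levels_between * (n_per_group - 1) * within_product
    return effect_df, error_df

def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return f * df_effect / (f * df_effect + df_error)

# Expression (6 levels) x instruction (2 levels) within; 3 ROIs between, 10 per group.
df1, df2 = mixed_anova_interaction_df([6, 2], levels_between=3, n_per_group=10)
print(df1, df2, round(partial_eta_squared(2.96, df1, df2), 2))  # prints 10 135 0.18
```

The match with F(10,135) and partial η² = 0.18 confirms that the reported values are internally consistent under this grouping.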

Figure 6.

Comparison of incongruent and congruent expressions in the Word instruction condition for the left inferior frontal gyrus ROI shown in Figure 5F. All fixation volumes served as the baseline. Error bars show the standard error of the mean (SEM). Incongruent expressions (Happy, Neutral, Sad) showed significantly larger BOLD signal change than congruent expressions (* p < 0.05).

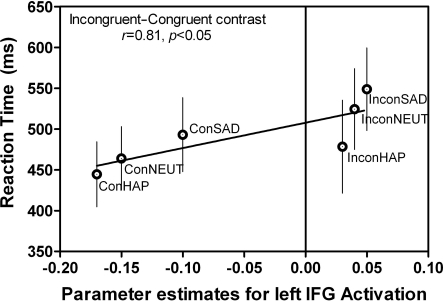

A regression analysis tested whether RT in the incongruent and congruent conditions predicted BOLD signal activity within each ROI, using a linear model (Figure 7). RT accounted for 66% of the variance in left IFG activity (r ≈ 0.81) when participants reported the words Happy, Neutral, and Sad in the incongruent and congruent conditions [F(1,4) = 7.78, p < 0.05, r² = 0.66]. Longer RTs predicted larger left IFG activation, with the incongruent sad condition yielding the greatest RT/signal intensity ratio of all expression conditions.
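In a simple linear regression over n points, F(1, n − 2) and r² determine each other: F = r²(n − 2) / (1 − r²). With six condition means (three incongruent and three congruent expressions), n − 2 = 4, and the sketch below shows that F = 7.78 corresponds to r² ≈ 0.66 and r ≈ 0.81. The function names are ours.

```python
import math

def f_from_r2(r2, n):
    """F(1, n - 2) for a simple linear regression fitted to n points."""
    return r2 * (n - 2) / (1.0 - r2)

def r2_from_f(f, n):
    """Inverse of f_from_r2: proportion of variance explained."""
    return f / (f + (n - 2))

N_CONDITIONS = 6  # 3 incongruent + 3 congruent expressions -> F(1, 4)
r2 = r2_from_f(7.78, N_CONDITIONS)
print(f"r^2 = {r2:.3f}, r = {math.sqrt(r2):.3f}")  # prints r^2 = 0.660, r = 0.813
```

This relation is a useful check when reading regression results: the proportion of variance explained is r², not r.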

Figure 7.

Correlation between behavior and BOLD signal in the left IFG. The left IFG ROI (Figure 5F: −46, 36, 4), defined by the incongruent–congruent contrast during the “Attend to Word” instruction, showed a positive correlation between RT and BOLD signal intensity. This suggests that additional resources in this region were recruited when incongruent expressions were being reported. Each point shows RT and BOLD signal averaged across all subjects (n = 10) for one condition. Error bars show the standard error of the mean (SEM).

Discussion

When comparing recognition of face expressions, which is assumed to be an innate ability (Meltzoff and Moore, 1983; Valenza et al., 1996; Farah et al., 2000; Sugita, 2009), with reading, which is a learned behavior, one would expect the innate ability to be processed more automatically. However, contrary to popular assumptions (Öhman, 2002; Dolan and Vuilleumier, 2003), our results demonstrated the opposite: words were processed more automatically than faces. We infer the automaticity of word reading, which in our task integrated affective processing through emotional words, from the shorter response latencies and smaller number of errors in the word instruction condition (Figures 3 and 4). This finding contrasts with a study by Beall and Herbert (2008) that used affective faces and words in a similar modified version of the Stroop task. Beall and Herbert (2008) found that words produced larger interference effects than face expressions, suggesting that affective faces were processed more automatically. The discrepancy between the two studies could be due to differences in stimulus presentation time and saliency, and in the instructions given to participants. In the Beall and Herbert (2008) study, the face stimuli were presented for 1 s compared to 250 ms in our study; the longer presentation time would allow the face expressions to be processed more completely and hence reported faster. Their superimposed words were printed in a very small, dark gray font directly on the nose of the face stimuli, making the expressions easier to identify than in our stimuli, where the words were placed diagonally in an attempt to cover as much of the face as possible. The color of their words was also less salient than the red we used; a more salient color could have made reporting the word an easier task, leading to the faster RTs under our word instruction. Additionally, participants in the Beall and Herbert (2008) study were asked to report whether the emotion was positive or negative, whereas ours simply read the written word or reported the particular face expression. The longer RTs under the word instruction in their study could therefore also reflect the extra step of classifying each word or expression as positive or negative.

Our brain selectively processes not only the most salient stimuli in the environment but also those most relevant to behavior. Whether a behavior is effortless because of over-learning, such as word reading, or instinctive because of its importance for survival, such as recognition of emotions, our brain needs to prioritize these behaviors as efficiently as possible. One way the brain achieves such efficient processing is through hierarchical organization of the processing sequence (Felleman and van Essen, 1991; Badre and D'Esposito, 2009). If all the information competing for neural processing is placed on a continuum of rankings, behaviors with greater importance (i.e., stronger stimulus–response (SR) associations) are processed faster. Alternatively, word reading may be computed faster because more neural resources have been dedicated to its processing over the years, making our brain more efficient, and hence faster, at such coding. One could also argue that equal neural resources have been dedicated to expression processing and that a key difference may be one's awareness of doing so (Lamme, 2003). We are much more aware of what we are doing when reading words, whereas interpretation of expressions is often done “unconsciously,” without awareness. The greater complexity of a face compared to a word could also contribute to the longer behavioral latencies observed during the processing of face expressions in our data.

The mPFC (Figures 5B,E) was more active during trials that required suppressing a more automatic action in favor of a controlled, less automatic one, as we also observed previously for the anti-saccade and Stroop-color/word tasks (Ovaysikia et al., 2008). Our present results show that different regions of the mPFC are activated in each of the two instruction conditions (attend to the word or attend to the expression). Although both the expression instruction and the word instruction conditions entailed inhibitory responses, the distinct pattern of activation within the PFC suggests a suppression mechanism specific to the type of inhibition at hand. Recognition of face expressions may be more difficult to suppress because faces are socially relevant to humans. In our task, the familiarity of some of the faces may also have distracted participants, since the self-referential significance attached to a familiar face would have captured their attention. The greater difficulty of expression recognition is evident behaviorally in the longer RTs and larger number of errors in this condition compared to the word instruction condition (Figure 3). During the expression instruction condition, a region within the ACC was activated (Figure 5B), which may also reflect the greater complexity of reporting face expressions in the presence of incongruent emotional words. Overall, we propose that the more conflicting the suppression, the more anterior the mPFC activation.

The bilateral activation of the IFG observed under both the attend-to-word and attend-to-expression instructions is consistent with previous studies showing inferior frontal activity in response inhibition tasks (Konishi et al., 1998; Menon et al., 2001; Kemmotsu et al., 2005). This activation resulted from the contrast of incongruent versus congruent trials, as has also been demonstrated in previous Stroop tasks (Matthews et al., 2004). Recognition of others' face expressions is assumed to be an adaptive behavior, with substantial social importance attached to understanding these expressions, and the involvement of the IFG in recognizing and interpreting others' actions has been established (Chong et al., 2008). Our present study showed that the left IFG is sensitive to face expressions, as we observed significant BOLD signal differences between all three expressions of happy, neutral, and sad (Figure 6). Hence, not only does a suppression mechanism account for the higher signal intensity on incongruent trials, but the particular face expressions presented within those trials also affect this region's activation. Emotion-specific activation of the left IFG has previously been demonstrated using disgusted, fearful, and angry face expressions (Sprengelmeyer et al., 1998), and our results extend this to happy, neutral, and sad expressions. Linguistic processing of emotional information has also been shown to activate the right IFG (Rota et al., 2009); in our task this processing is evidently carried out automatically under both instruction conditions, but BOLD intensities in this region did not differ significantly between happy, neutral, and sad expressions. It is possible that by attending to the expressions of the presented faces, participants experienced those affective states by making inferences from the emotional faces. Such experience is especially likely because half of the faces viewed by participants in this study were of familiar individuals with whom they were personally acquainted. The involvement of the IFG in emotional empathy is evident from the deficits in this ability seen in patients with lesions in this region (Shamay-Tsoory et al., 2009).

An interesting pattern of FEF activation was observed, lateralized to the right hemisphere during the attend-to-word instruction and to the left hemisphere during the attend-to-expression instruction. The FEF has well-established connections with ventral stream areas, including area V4, that are involved in attentional processing (Schall et al., 1995; Moore and Fallah, 2001; Gregoriou et al., 2009). During the word instruction, participants were required to ignore the face in order to read the word successfully. It may be that the attentional suppression of affective faces recruits the right FEF, given that the right hemisphere is known to be dominant in the processing of face expressions (Natale et al., 1983; Hauser, 1993; Borod et al., 1998; Killgore and Yurgelun-Todd, 2007). The right prefrontal cortex is also implicated when conflict arises at the response level, whereas the left is more involved in attentional control at non-response levels (Milham et al., 2001). In contrast, during the expression instruction, participants needed to suppress their urge to attend to the superimposed word in order to report the face expression. Since reading is dominated by the left hemisphere (Perret, 1974) in right-handed individuals such as our 10 participants, we propose that FEF recruitment is lateralized to the left only during this subset of the task because it requires the suppression of word reading. Our results cannot be explained by the hemispheric dominance view of attentional control (Milham et al., 2003), since our task design does not allow us to disambiguate between conflict at response and non-response levels; we assume that the conflict occurs just prior to the response level in both instruction sets.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This research was funded by the Faculty of Health at York University, the Natural Sciences and Engineering Research Council (NSERC), and the Research at York (RAY) Program. We thank Sharon David for data acquisition; Dr. Kari L. Hoffman for helpful comments and suggestions; Jonathan Lissoos and Simina Luca for image processing; and Cecilia Jobst, Cecilia Meza, and Laura Pynn for analysis assistance.

References

- Allport A. (1993). “Attention and control: have we been asking the wrong questions? A critical review of twenty-five years,” in Attention and Performance, Vol. XIV, eds Meyer D. E., Kornblum S. (London: MIT Press), 183–218.

- Asaad W. F., Rainer G., Miller E. K. (2000). Task-specific neural activity in the primate prefrontal cortex. J. Neurophysiol. 84, 451–459.

- Badre D., D'Esposito M. (2009). Is the rostro-caudal axis of the frontal lobe hierarchical? Nat. Rev. Neurosci. 10, 659–669.

- Barch D. M., Braver T. S., Akbudak E., Conturo T., Ollinger J., Snyder A. (2001). Anterior cingulate cortex and response conflict: effects of response modality and processing domain. Cereb. Cortex 11, 837–848. doi: 10.1093/cercor/11.9.837

- Beall P. M., Herbert A. M. (2008). The face wins: stronger automatic processing of affect in facial expressions than words in a modified Stroop task. Cogn. Emot. 22, 1613–1642. doi: 10.1080/02699930801940370

- Behrens T. E. J., Woolrich M. W., Walton M. E., Rushworth M. F. S. (2007). Learning the value of information in an uncertain world. Nat. Neurosci. 10, 1214–1221.

- Bench C., Frith C., Grasby P., Friston K., Paulesu E., Frackowiak R., Dolan R. (1993). Investigations of the functional anatomy of attention using the Stroop test. Neuropsychologia 31, 907–922. doi: 10.1016/0028-3932(93)90147-R

- Borod J. C., Obler L. K., Erhan H. M., Grunwald I. S., Cicero B. A., Welkowitz J., Santschi C., Agosti R. M., Whalen J. R. (1998). Right hemisphere emotional perception: evidence across multiple channels. Neuropsychology 12, 446–458. doi: 10.1037/0894-4105.12.3.446

- Botvinick M. M., Cohen J. D., Carter C. S. (2004). Conflict monitoring and anterior cingulate cortex: an update. Trends Cogn. Sci. 8, 539–546.

- Bunge S. A., Dudukovic N. M., Thomason M. E., Vaidya C. J., Gabrieli J. D. E. (2002). Immature frontal lobe contributions to cognitive control in children: evidence from fMRI. Neuron 33, 301–311. doi: 10.1016/S0896-6273(01)00583-9

- Bush G., Whalen P. J., Rosen B. R., Jenike M. A., McInerney S. C., Rauch S. L. (1998). The counting Stroop: an interference task specialized for functional neuroimaging; validation study with functional MRI. Hum. Brain Mapp. 6, 270–282.

- Carter C. S., Botvinick M. M., Cohen J. D. (1999). The contribution of the anterior cingulate cortex to executive processes in cognition. Rev. Neurosci. 10, 49–57.

- Carter C. S., Braver T. S., Barch D. M., Botvinick M. M., Noll D., Cohen J. D. (1998). Anterior cingulate cortex, error detection, and the online monitoring of performance. Science 280, 747–749. doi: 10.1126/science.280.5364.747

- Chong T. T. J., Williams M. A., Cunnington R., Mattingley J. B. (2008). Selective attention modulates inferior frontal gyrus activity during action observation. Neuroimage 40, 298–307. doi: 10.1016/j.neuroimage.2007.11.030

- Cisek P., Puskas G. A., El Murr S. (2009). Decisions in changing conditions: the urgency-gating model. J. Neurosci. 29, 11560–11571. doi: 10.1523/JNEUROSCI.1844-09.2009

- Ciulla C., Deek F. P. (2002). Performance assessment of an algorithm for the alignment of fMRI time series. Brain Topogr. 14, 313–332. doi: 10.1023/A:1015756812054

- Desimone R., Duncan J. (1995). Neural mechanisms of selective visual attention. Annu. Rev. Neurosci. 18, 193–222.

- DeSouza J. F. X., Menon R. S., Everling S. (2003). Preparatory set associated with pro-saccades and anti-saccades in humans investigated with event-related fMRI. J. Neurophysiol. 89, 1016–1023. doi: 10.1152/jn.00562.2002

- Dolan R. J., Vuilleumier P. (2003). Amygdala automaticity in emotional processing. Ann. N. Y. Acad. Sci. 985, 348–355.

- Duncan J. (2001). An adaptive coding model of neural function in prefrontal cortex. Nat. Rev. Neurosci. 2, 820–829.

- Everling S., DeSouza J. F. X. (2005). Rule-dependent activity for prosaccades and antisaccades in the primate prefrontal cortex. J. Cogn. Neurosci. 17, 1483–1496.

- Farah M. J., Rabinowitz C., Quinn G. E., Liu G. T. (2000). Early commitment of neural substrates for face recognition. Cogn. Neuropsychol. 17, 117–123.

- Felleman D. J., van Essen D. C. (1991). Distributed hierarchical processing in the primate cerebral cortex. Cereb. Cortex 1, 1–47.

- Fellows L. K., Farah M. J. (2005). Is anterior cingulate cortex necessary for cognitive control? Brain 128, 788–796. doi: 10.1093/brain/awh405

- Fuster J. M. (2009). Cortex and memory: emergence of a new paradigm. J. Cogn. Neurosci. 21, 2047–2072.

- Gregoriou G. G., Gotts S. J., Zhou H., Desimone R. (2009). High-frequency, long-range coupling between prefrontal and visual cortex during attention. Science 324, 1207–1210. doi: 10.1126/science.1171402

- Hallett P. E. (1978). Primary and secondary saccades to goals defined by instructions. Vision Res. 18, 1279–1296. doi: 10.1016/0042-6989(78)90218-3

- Hauser M. D. (1993). Right hemisphere dominance for the production of facial expression in monkeys. Science 261, 475–477. doi: 10.1126/science.8332914

- Haxby J. V., Ungerleider L. G., Horwitz B., Maisog J. M., Rapoport S. I., Grady C. L. (1996). Face encoding and recognition in the human brain. Proc. Natl. Acad. Sci. U.S.A. 93, 922–927.

- Ito S., Stuphorn V., Brown J. W., Schall J. D. (2003). Performance monitoring by the anterior cingulate cortex during saccade countermanding. Science 302, 120–122. doi: 10.1126/science.1087847

- Kemmotsu N., Villalobos M. E., Gaffrey M. S., Courchesne E., Muller R. (2005). Activity and functional connectivity of inferior frontal cortex associated with response conflict. Cogn. Brain Res. 24, 335–342.

- Kerns J. G., Cohen J. D., MacDonald A. W., Cho R. Y., Stenger V. A., Carter C. S. (2004). Anterior cingulate conflict monitoring and adjustments in control. Science 303, 1023–1026. doi: 10.1126/science.1089910

- Killgore W. D. S., Yurgelun-Todd D. A. (2007). The right-hemisphere and valence hypotheses: could they both be right (and sometimes left)? Soc. Cogn. Affect. Neurosci. 2, 240–250.

- Konishi S., Nakajima K., Uchida I., Sekihara K., Miyashita Y. (1998). No-go dominant brain activity in human inferior prefrontal cortex revealed by functional magnetic resonance imaging. Eur. J. Neurosci. 10, 1209–1213.

- Lamme V. A. (2003). Why visual attention and awareness are different. Trends Cogn. Sci. 7, 12–18.

- Lancaster J. L. (2000). Automated Talairach atlas labels for functional brain mapping. Hum. Brain Mapp. 10, 120–131.

- Lévesque J., Joanette Y., Mensour B., Beaudoin G., Leroux J. M., Bourgouin P., Beauregard M. (2004). Neural basis of emotional self-regulation in childhood. Neuroscience 129, 361–369. doi: 10.1016/j.neuroscience.2004.07.032

- MacDonald A. W., Cohen J. D., Stenger V. A., Carter C. S. (2000). Dissociating the role of the dorsolateral prefrontal and anterior cingulate cortex in cognitive control. Science 288, 1835–1838. doi: 10.1126/science.288.5472.1835

- MacLeod C. M. (1991). Half a century of research on the Stroop effect: an integrative review. Psychol. Bull. 109, 163–203.

- Marinkovic K., Trebon P., Chauvel P., Halgren E. (2000). Localised face processing by the human prefrontal cortex: face-selective intracerebral potentials and post-lesion deficits. Cogn. Neuropsychol. 17, 187–199.

- Matsuda T., Matsuura M., Ohkubo T., Ohkubo H., Matsushima E., Inoue K., Taira M., Kojima T. (2004). Functional MRI mapping of brain activation during visually guided saccades and antisaccades: cortical and subcortical networks. Psychiatry Res. 131, 147–155. doi: 10.1016/j.pscychresns.2003.12.007

- Matthews S. C., Paulus M. P., Simmons A. N., Nelesen R. A., Dimsdale J. E. (2004). Functional subdivisions within anterior cingulate cortex and their relationship to autonomic nervous system function. Neuroimage 22, 1151–1156. doi: 10.1016/j.neuroimage.2004.03.005

- Melcher T., Gruber O. (2009). Decomposing interference during Stroop performance into different conflict factors: an event-related fMRI study. Cortex 45, 189–200. doi: 10.1016/j.cortex.2007.06.004

- Meltzoff A. N., Moore M. K. (1983). Newborn infants imitate adult facial gestures. Child Dev. 54, 702–709. doi: 10.2307/1130058

- Menon V., Adleman N. E., White C. D., Glover G. H., Reiss A. L. (2001). Error-related brain activation during a go/nogo response inhibition task. Hum. Brain Mapp. 12, 131–143.

- Milham M. P., Banich M. T., Barad V. (2003). Competition for priority in processing increases prefrontal cortex's involvement in top-down control: an event-related fMRI study of the Stroop task. Cogn. Brain Res. 17, 212–222.

- Milham M. P., Banich M. T., Webb A., Barad V., Cohen N. J., Wszalek T. (2001). The relative involvement of anterior cingulate and prefrontal cortex in attentional control depends on nature of conflict. Cogn. Brain Res. 12, 467–473.

- Miller E. K. (2000). The prefrontal cortex and cognitive control. Nat. Rev. Neurosci. 1, 59–65.

- Miller E. K., Cohen J. D. (2001). An integrative theory of prefrontal cortex function. Annu. Rev. Neurosci. 24, 167–202.

- Milner A. D., Goodale M. A. (2006). The Visual Brain in Action, 2nd Edn. Oxford: Oxford University Press.

- Moore T., Fallah M. (2001). Control of eye movements and spatial attention. Proc. Natl. Acad. Sci. U.S.A. 98, 1273–1276.

- Natale M., Gur R. E., Gur R. C. (1983). Hemispheric asymmetries in processing emotional expressions. Neuropsychologia 21, 555–565. doi: 10.1016/0028-3932(83)90011-8

- Nobre A. C., Coull J. T., Frith C. D., Mesulam M. M. (1999). Orbitofrontal cortex is activated during breaches of expectation in tasks of visual attention. Nat. Neurosci. 2, 11–12.

- Ó Scalaidhe S. P., Wilson F. A. W., Goldman-Rakic P. S. (1997). Areal segregation of face-processing neurons in prefrontal cortex. Science 278, 1135–1138. doi: 10.1126/science.278.5340.1135

- Ochsner K. N., Ray R. D., Cooper J. C., Robertson E. R., Chopra S., Gabrieli J. D. E. (2004). For better or for worse: neural systems supporting the cognitive down- and up-regulation of negative emotion. Neuroimage 23, 483–499. doi: 10.1016/j.neuroimage.2004.06.030

- Ohira H., Nomura M., Ichikawa N., Isowa T., Iidaka T., Sato A. (2006). Association of neural and physiological responses during voluntary emotion suppression. Neuroimage 29, 721–733. doi: 10.1016/j.neuroimage.2005.08.047

- Öhman A. (2002). Automaticity and the amygdala: nonconscious responses to emotional faces. Curr. Dir. Psychol. Sci. 11, 62–69.

- Ovaysikia S., Danckert J., DeSouza J. F. X. (2008). “A common medial frontal cortical network for the Stroop and anti-saccade tasks but not for the subcortical area in the putamen.” Poster presented at the Cognitive Neuroscience Society meeting, San Francisco, CA.

- Pardo J. V., Pardo P. J., Janer K. W., Raichle M. E. (1990). The anterior cingulate cortex mediates processing selection in the Stroop attentional conflict paradigm. Proc. Natl. Acad. Sci. U.S.A. 87, 256–259.

- Perret E. (1974). The left frontal lobe of man and the suppression of habitual responses in verbal categorical behaviour. Neuropsychologia 12, 323–330. doi: 10.1016/0028-3932(74)90047-5

- Phan K. L., Fitzgerald D. A., Nathan P. J., Moore G. J., Uhde T. W., Tancer M. E. (2005). Neural substrates for voluntary suppression of negative affect: a functional magnetic resonance imaging study. Biol. Psychiatry 57, 210–219.

- Picard N., Strick P. L. (2001). Imaging the premotor areas. Curr. Opin. Neurobiol. 11, 663–672.

- Rosinski R. R., Golinkoff R. M., Kukish K. S. (1975). Automatic semantic processing in a picture word interference task. Child Dev. 46, 247–253. doi: 10.2307/1128859

- Rota G., Sitaram R., Veit R., Erb M., Weiskopf N., Dogil G., Birbaumer N. (2009). Self-regulation of regional cortical activity using real-time fMRI: the right inferior frontal gyrus and linguistic processing. Hum. Brain Mapp. 30, 1605–1614. doi: 10.1002/hbm.20621

- Rubia K., Russell T., Overmeyer S., Brammer M. J., Bullmore E. T., Sharma T. (2001). Mapping motor inhibition: conjunctive brain activations across different versions of go/no-go and stop tasks. Neuroimage 13, 250–261. doi: 10.1006/nimg.2000.0685

- Schall J. D., Hanes D. P., Thompson K. G., King D. J. (1995). Saccade target selection in frontal eye field of macaque. I. Visual and premovement activation. J. Neurosci. 15, 6905–6918.

- Schall J. D., Stuphorn V., Brown J. W. (2002). Monitoring and control of action by the frontal lobes. Neuron 36, 309–322. doi: 10.1016/S0896-6273(02)00964-9

- Schlag-Rey M., Amador N., Sanchez H., Schlag J. (1997). Antisaccade performance predicted by neuronal activity in the supplementary eye field. Nature 390, 398–401. doi: 10.1038/37114

- Shamay-Tsoory S. G., Aharon-Peretz J., Perry D. (2009). Two systems for empathy: a double dissociation between emotional and cognitive empathy in inferior frontal gyrus versus ventromedial prefrontal lesions. Brain 132, 617–627. doi: 10.1093/brain/awn279

- Sprengelmeyer R., Rausch M., Eysel U. T., Przuntek H. (1998). Neural structures associated with recognition of facial expressions of basic emotions. Proc. Biol. Sci. 265, 1927–1931.

- Stroop J. R. (1935). Studies of interference in serial verbal reactions. J. Exp. Psychol. 18, 643–662.

- Sugita Y. (2009). Innate face processing. Curr. Opin. Neurobiol. 19, 39–44.

- Sumner P., Nachev P., Morris P., Peters A. M., Jackson S. R., Kennard C. (2007). Human medial frontal cortex mediates unconscious inhibition of voluntary action. Neuron 54, 697–711. doi: 10.1016/j.neuron.2007.05.016

- Talairach J., Tournoux P. (1988). Co-planar Stereotaxic Atlas of the Human Brain: 3-Dimensional Proportional System: An Approach to Cerebral Imaging. New York: G. Thieme/Thieme Medical Publishers.

- Valenza E., Simion F., Cassia V. M., Umiltà C. (1996). Face preference at birth. J. Exp. Psychol. Hum. Percept. Perform. 22, 892–903.

- Vendrell P., Junque C., Pujol J., Jurado M., Molet J., Grafman J. (1995). The role of prefrontal regions in the Stroop task. Neuropsychologia 33, 341–352. doi: 10.1016/0028-3932(94)00116-7

- Wallis J. D., Anderson K. C., Miller E. K. (2001). Single neurons in prefrontal cortex encode abstract rules. Nature 411, 953–956. doi: 10.1038/35082081

- White I. M., Wise S. P. (1999). Rule-dependent neuronal activity in the prefrontal cortex. Exp. Brain Res. 126, 315–335. doi: 10.1007/s002210050740

- Zysset S., Müller K., Lohmann G., von Cramon D. Y. (2001). Colour-word matching Stroop task: separating interference and response conflict. Neuroimage 13, 29–36. doi: 10.1006/nimg.2000.0665